Supporting statement Part B revised 12-7-2006


Study of Teacher Preparation in Early Reading Instruction

OMB: 1850-0817

U.S. Department of Education

Institute of Education Sciences

National Center for Education Evaluation


Office of Management and Budget

Clearance Package Supporting Statement

and Data Collection Instrument: Part B

Revised December 7, 2006

TABLE OF CONTENTS

B. Collections of Information Employing Statistical Methods

B1. Describe the Potential Respondent Universe

B2. Describe the Procedure for Collection of Information

B3. Methods to Maximize Response Rates

B4. Test of Procedures or Methods to be Undertaken

B5. Individuals and Organizations Involved in this Project

B. Collections of Information Employing Statistical Methods

B1. Describe the Potential Respondent Universe

Because both institutions and students will be units of analysis in the study, the study aims to report estimates with adequate precision at each level. In addition, because many analyses will be conducted for subgroups (e.g., institution type, program type, and student characteristics), the goal is to report estimates for cells with as few as a couple of hundred cases while keeping the margin of error no greater than about 10 percentage points (at the 95% confidence level). The sample size also allows for a possible design effect of 2, due to clustering among students from the same program. The study will sample 100 institutions, and at each institution 30 pre-service teachers will be selected at random from education programs that prepare elementary school teachers. The total student sample is therefore 3,000.
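The precision target can be checked with a short calculation. The sketch below assumes the standard margin-of-error formula for a proportion, t·sqrt(pq/n), with the nominal sample size divided by the design effect of 2 mentioned above. The function name `margin_of_error` is illustrative only and is not part of the study's procedures.

```python
import math

def margin_of_error(n, deff=2.0, p=0.5, t=1.96):
    """Half-width of a 95% confidence interval for a proportion.

    The nominal sample size n is divided by the design effect (deff) to give
    an effective sample size; p = 0.5 yields the largest, most conservative margin.
    """
    n_effective = n / deff
    return t * math.sqrt(p * (1 - p) / n_effective)

institutions, students_per_institution = 100, 30
total_students = institutions * students_per_institution  # 3,000 students

# A subgroup cell of about 200 students, assuming a design effect of 2
cell_margin = margin_of_error(200)
print(f"Total student sample: {total_students}")
print(f"Margin of error for a 200-case cell: {cell_margin:.3f}")  # about 0.098
```

Under these assumptions, a 200-case cell has a margin of error of about 9.8 percentage points, which is consistent with the 10-point target. Cells much smaller than this would exceed it.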

First Stage Sampling: Geographic Sample

Every state in the continental U.S. has at least one institution that graduates at least 50 elementary education teachers a year. Using institutions as the first stage of sampling would therefore be operationally inefficient, because the sample would likely include institutions scattered across all 48 states. To reduce the cost and time of administration, we will use geographic clustering as the first stage of sampling. Twenty-five of the 48 states will be selected with probability proportional to size (PPS), using a stratified systematic random sampling procedure. The measure of size is the number of elementary education graduates across all institutions of higher education in the state.

The frame will be sorted according to the following characteristics, in this order, using a serpentine order (high to low, then low to high):

NAEP region

Measure of size
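A serpentine sort reverses the sort direction from one group to the next at each level, so that neighboring units in the ordered frame have similar values on every sort variable. The following is a minimal sketch that assumes each frame record is a dictionary. The field names `naep_region` and `mos` and the sample values are hypothetical.

```python
from itertools import groupby

def serpentine_sort(records, keys):
    """Hierarchical serpentine sort of `records` by `keys`, in the order given.

    At each level, the consecutive blocks formed by the previous level alternate
    direction: the first block is sorted high to low, the next low to high, and so on.
    """
    blocks = [list(records)]
    for key in keys:
        next_blocks = []
        for i, block in enumerate(blocks):
            # Even-numbered blocks high to low, odd-numbered blocks low to high
            ordered = sorted(block, key=lambda r: r[key], reverse=(i % 2 == 0))
            # Split into groups sharing this key's value, for sorting at the next level
            for _, group in groupby(ordered, key=lambda r: r[key]):
                next_blocks.append(list(group))
        blocks = next_blocks
    return [r for block in blocks for r in block]

# Hypothetical state frame: NAEP region and measure of size (graduates)
states = [
    {"name": "A", "naep_region": 1, "mos": 10},
    {"name": "B", "naep_region": 1, "mos": 30},
    {"name": "C", "naep_region": 2, "mos": 20},
    {"name": "D", "naep_region": 2, "mos": 5},
    {"name": "E", "naep_region": 3, "mos": 7},
    {"name": "F", "naep_region": 3, "mos": 40},
]
frame = serpentine_sort(states, ["naep_region", "mos"])
print([(s["naep_region"], s["mos"]) for s in frame])
# [(3, 40), (3, 7), (2, 5), (2, 20), (1, 30), (1, 10)]
```

The measure of size snakes down and up across region boundaries (7 next to 5, 20 next to 30). The same function could order the institution frame by state, school type, minority enrollment, and measure of size.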

Second Stage Sampling: The Institution Sample

The sampling design for the second stage is a stratified systematic random sample, with sampling probabilities proportionate to size (PPS). The measure of size is the number of elementary education graduates at an institution.

Institution Selection

The frame will be sorted according to the following institution characteristics, in this order, using a serpentine order (high to low, then low to high):

State

School type (public vs. private)

Minority enrollment (high vs. low, defined by median)

Measure of size

This sorting should ensure a good spread of key characteristics across the institutions selected. The sample will be selected systematically from the ordered frame, using a sampling interval equal to the total (cumulative) measure of size divided by the number of institutions to be sampled.
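Systematic PPS selection from an ordered frame can be sketched as follows. Each institution occupies a stretch of the cumulative measure of size equal to its own size, and a selection point falls every sampling interval after a random start. This is an illustrative sketch only. The function name, the `mos` field, and the treatment of very large units are assumptions, because the text does not say how institutions whose size exceeds the interval are handled.

```python
import random
from bisect import bisect_right
from itertools import accumulate

def pps_systematic(frame, n, mos_key="mos", seed=None):
    """Select n units with probability proportional to size from an ordered frame."""
    cumulative = list(accumulate(unit[mos_key] for unit in frame))
    total = cumulative[-1]
    interval = total / n                                # sampling interval
    start = random.Random(seed).random() * interval     # random start in [0, interval)
    selected = []
    for j in range(n):
        point = start + j * interval
        # Unit whose cumulative range [previous total, own total) contains the point;
        # min() guards against floating-point rounding at the very end of the frame
        index = min(bisect_right(cumulative, point), len(frame) - 1)
        selected.append(frame[index])
    # Assumption: a unit larger than the interval may be hit more than once
    return selected, interval

# Hypothetical frame, already sorted (e.g., serpentine order), with sizes as graduates
frame = [{"id": i, "mos": size} for i, size in enumerate([10, 20, 30, 40, 50, 50])]
sample, k = pps_systematic(frame, n=3, seed=2006)
print(k, [u["id"] for u in sample])
```

The same routine could, in principle, be applied to the first-stage state frame, using the state-level count of elementary education graduates as the measure of size.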

Third Stage Sampling: The Student Sample

The third and final stage of selection will consist of a sample of 3,000 degree-seeking elementary education students in their last semester of instruction before graduation. The sampling design will be a stratified systematic random sample. Students' last and first names will be used as an implicit stratifying variable, because there is no reason to expect a correlation between a person's name and ability. At each institution, eligible students will therefore be sorted alphabetically by last name and then first name. After a random start, 30 students will be selected systematically at each institution.
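The within-institution selection can be sketched as an equal-probability systematic sample with a fractional interval. This sketch assumes a roster of dictionaries with hypothetical `last` and `first` fields. It also assumes that all eligible students are taken when an institution has 30 or fewer, a case the text does not address.

```python
import random

def select_students(students, n=30, seed=None):
    """Systematic sample of n students from a roster sorted by last, then first name."""
    ordered = sorted(students, key=lambda s: (s["last"], s["first"]))
    total = len(ordered)
    if total <= n:
        return ordered  # assumption: take every eligible student when there are n or fewer
    interval = total / n                               # fractional interval is allowed
    start = random.Random(seed).random() * interval    # random start in [0, interval)
    # Interval >= 1 here, so the truncated positions are distinct and increasing
    picks = [min(int(start + j * interval), total - 1) for j in range(n)]
    return [ordered[i] for i in picks]

# Hypothetical roster of 95 eligible students at one institution
roster = [{"last": f"Last{i:03d}", "first": "Pat"} for i in range(95)]
chosen = select_students(roster, seed=7)
print(len(chosen), chosen[0]["last"], chosen[-1]["last"])
```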

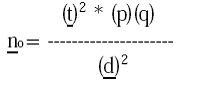

The formula used for the student sample size is from W. Cochran’s (1977) textbook on sampling (Sampling Techniques, Wiley). The formula simplifies to:

Here, t = 1.96 is the critical value for an alpha level of .025 in each tail (the 95% confidence level). The term (p)(q) = .25 is the estimate of variance: a proportion of .5 multiplied by (1 − .5) gives the largest possible variance and therefore the largest possible sample size. Finally, d = 0.03 is the acceptable margin of error (3 percentage points) for the proportion being estimated.

With these values, n = 1,067. However, because the study sample is clustered within institutions, assuming a design effect of 2 raises the required student sample to 2,134. The proposed sample of 3,000 students will allow the study to reach the target of 2,134 with a student-level response rate of about 71%. The study will strive for a higher response rate, but must allow for the difficulty of recruiting busy students in their last semester before graduation.
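The arithmetic above can be reproduced in a few lines. This sketch simply evaluates Cochran's formula with the stated values, applies the design effect of 2, and divides by the planned sample of 3,000. The function and variable names are illustrative.

```python
def cochran_n(t=1.96, p=0.5, d=0.03):
    """Cochran's sample size for estimating a proportion: t^2 * p * q / d^2."""
    q = 1 - p
    return t ** 2 * p * q / d ** 2

n_srs = round(cochran_n())          # 1,067 under simple random sampling
design_effect = 2
n_target = n_srs * design_effect    # 2,134 after allowing for clustering
planned_sample = 3000
required_response_rate = n_target / planned_sample

print(n_srs, n_target, f"{required_response_rate:.1%}")  # 1067 2134 71.1%
```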

B2. Describe the Procedure for Collection of Information

Information to answer the first research question will be gathered through the pre-service teacher survey. Information to answer the second research question will be gathered using the pre-service teacher assessment described previously. The student sample will be limited to individuals in their final year of study. Research team staff will proctor assessment sessions scheduled at each institution.

B3. Methods to Maximize Response Rates and Dealing with Issues of Non-Response

The response rate goal is 92% for institutions and 85% for students (an overall response rate of about 78%). Optimal Solutions Group (Optimal) and the American Institutes for Research (AIR) have a strong record of achieving high response rates when recruiting for large-scale, multi-site studies. For this study, we have recruited and trained staff members who have the qualities needed to work in sensitive environments such as colleges and universities, and teacher education programs in particular. Before contacting an institution, recruiters will use its website to research and become familiar with its teacher education program. Each recruiter will be required to understand the study fully and to be able to answer any questions that might be posed. We expect that personal meetings with School of Education deans to discuss and explain the merits of the study will yield higher response rates than communicating only by mail or telephone.

We will also sort institutions within explicit strata; the frame will be sorted according to the following institution characteristics, in this order, using a serpentine order:

State

School type (public vs. private)

Minority enrollment (high vs. low, defined by median)

Measure of size

This sorting will help to ensure a good spread of key characteristics across all institutions selected in the sample. The sample will be systematically selected from the ordered frame. Within each explicit stratum a sampling interval will be calculated by dividing the cumulative measure of size by the sample size.

Each sampled institution will be assigned two replacement institutions from the sampling frame. A sampled institution cannot be designated as a replacement institution, and a replacement institution cannot substitute for more than one sampled institution. For each sampled institution, the next two institutions immediately following it in the sampling frame will be designated as its replacements. Because the frame is ordered by the implicit stratification variables and by size, a sampled institution’s replacements should have characteristics similar to its own.

When a sampled institution is the last institution listed in an explicit stratum, then the two institutions immediately above it will be designated as its replacement institutions. If a sampled institution is the next-to-last institution listed in an explicit stratum, then the institutions immediately above and below it will be designated as its replacement institutions.
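The positional replacement rule can be sketched as a small function over 0-based positions in one explicit stratum's sorted frame. The order of the two replacements within each list is an assumption. The text also does not say how to resolve a conflict, for example when the next institution in the frame is itself sampled, so this hypothetical helper simply raises an error in that case.

```python
def assign_replacements(stratum_size, sampled):
    """Designate two replacements for each sampled position in one explicit stratum.

    Positions are 0-based indexes into the stratum's sorted frame.
    """
    sampled_set = set(sampled)
    used = set()
    replacements = {}
    for i in sorted(sampled):
        if i == stratum_size - 1:        # last in stratum: the two immediately above
            candidates = [i - 1, i - 2]
        elif i == stratum_size - 2:      # next-to-last: immediately above and below
            candidates = [i - 1, i + 1]
        else:                            # otherwise: the next two in the frame
            candidates = [i + 1, i + 2]
        for c in candidates:
            # Enforce the stated constraints; conflict resolution is not specified
            if c < 0 or c in sampled_set or c in used:
                raise ValueError(f"replacement {c} for position {i} violates the rules")
            used.add(c)
        replacements[i] = candidates
    return replacements

print(assign_replacements(10, [0, 4, 9]))  # {0: [1, 2], 4: [5, 6], 9: [8, 7]}
```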

NCES statistical standards on response rates with substitutions state:

“In multiple stage sample designs, where substitution occurs only at the first stage, the first stage response rate must be computed ignoring the substitutions. Response rates for other sampling stages are then computed as though no substitution occurred (i.e. in subsequent stages, cases from the substituted units are included in the computations). If multiple stage sample designs use substitution at more than one stage, then the substitutions must be ignored in the computation of response rate at each stage where substitution is used.”

Thus, while replacement institutions will not count toward the institution-level response rate, students from replacement institutions will count toward the student-level response rate.
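The substitution rule can be illustrated with a small calculation on hypothetical data. Replacement institutions are excluded from the institution-level rate, but their students are included in the student-level rate. The overall rate is taken as the product of the stage rates, which is how the 92% and 85% goals combine to about 78%. The record fields are assumptions made for this sketch.

```python
def response_rates(institutions):
    """Stage-level and overall response rates, following the NCES substitution rule."""
    # Institution level: originally sampled institutions only; replacements ignored
    originals = [u for u in institutions if not u["replacement"]]
    institution_rate = sum(u["participated"] for u in originals) / len(originals)
    # Student level: students at every participating institution, replacements included
    participating = [u for u in institutions if u["participated"]]
    sampled = sum(u["students_sampled"] for u in participating)
    responded = sum(u["students_responded"] for u in participating)
    student_rate = responded / sampled
    return institution_rate, student_rate, institution_rate * student_rate

# Hypothetical: four sampled institutions, one refuses and is replaced
institutions = [
    {"replacement": False, "participated": True, "students_sampled": 30, "students_responded": 25},
    {"replacement": False, "participated": True, "students_sampled": 30, "students_responded": 27},
    {"replacement": False, "participated": True, "students_sampled": 30, "students_responded": 24},
    {"replacement": False, "participated": False, "students_sampled": 0, "students_responded": 0},
    {"replacement": True, "participated": True, "students_sampled": 30, "students_responded": 26},
]
inst_rate, stu_rate, overall = response_rates(institutions)
print(f"{inst_rate:.2%} {stu_rate:.2%} {overall:.2%}")  # 75.00% 85.00% 63.75%
```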

B4. Test of Procedures or Methods to be Undertaken

Survey Instrument Pilot

A key element in the survey design process was trying out item formats and wording with pre-service teachers. Education students from universities in and around Washington, DC, participated in the two phases of the survey instrument pilot: focus groups and cognitive laboratories. Each phase had a specific purpose. In Phase 1, focus groups were conducted to inform terminology and response formats. In Phase 2, cognitive laboratory sessions were conducted to ensure that items were interpreted as intended and that response formats covered the full range of students’ experiences. The data collected in the pilot testing informed the final instrument (refer to Appendix A).

Assessment Instrument Pilot

The instrument for the teacher assessment was piloted in a previous study with 500 teachers across the country (OMB 1850-0803). In late spring 2006, the assessment was tested further with a group of local students from the DC area to ensure that the instrument was appropriate for pre-service teachers.

B5. Individuals and Organizations Involved in this Project

The information for this study is being collected by Optimal Solutions Group and its subcontractor, American Institutes for Research, on behalf of the U.S. Department of Education. Contact information for key personnel is provided below.

Input to the design was received from the following individuals, as well as the study’s Technical Work Group (listed in section A8).

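| Company | Role | Contact Name | Telephone Number |
| --- | --- | --- | --- |
| Optimal Solutions Group | Co-Project Director | Dr. Mark Turner | 443-451-7061 |
| Optimal Solutions Group | Task Leader | Dr. Joanne Meier | 301-306-1170 |
| Optimal Solutions Group | Task Leader | Dr. Adam Bickford | 573-823-7144 |
| Optimal Solutions Group | Project Manager | Dr. Eric Asongwed | 301-306-1170 |
| American Institutes for Research | Principal Investigator | Dr. Terry Salinger | 202-403-5037 |
| American Institutes for Research | Co-Project Director | Dr. David Baker | 202-403-5036 |
| American Institutes for Research | Task Leader for Sampling, Design, and Analysis | Dr. Stéphane Baldi | 202-403-5615 |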

APPENDIX A

Data Collection Instruments

| Field | Value |
| --- | --- |
| File Type | application/msword |
| Author | tracy.rimdzius |
| Last Modified By | DoED |
| File Modified | 2006-12-07 |
| File Created | 2006-12-07 |
