OMB Package - HUDQC

Quality Control for Rental Assistance Subsidy Determinations

OMB: 2528-0203

OMB Clearance Package

Quality Control for Rental Assistance Subsidy Determinations

Submitted by:

Office of Policy Development and Research

Department of Housing and Urban Development

Washington, DC 20410

Prepared by:

ORC Macro

11785 Beltsville Drive

Calverton, MD 20705-3119

July 26, 2006

Table of Contents

1. Circumstances that make the collection of information necessary

4. Efforts to identify duplication

5. Minimization of burden on small entities

6. Consequences of not collecting the information

8b. Consultation with persons outside the agency

9. Payments or gifts to respondents

11. Justification of questions

14. Annualized costs to Federal Government

15. Program changes or adjustments

16. Plans for tabulation and publication

17. Non-display of expiration date

18. Exception to certification statement

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

1. Respondent universe and sampling methods

2. Procedures for collection of information

3. Maximization of response rates

4. Tests of procedures or methods

5. Individuals consulted on statistical aspects of design

Appendix A Federal Register Notice

Appendix B Project-Specific Information Cover Letters

Project-Specific Information Forms

Appendix C Data Collection Instrument

Household Interview

Appendix D Project Staff Questionnaire Cover Letter

Project Staff Questionnaire

Appendix E Analysis Plan

A. JUSTIFICATION

1. Circumstances that make the collection of information necessary

Explain the circumstances that make the collection of information necessary. Identify legal or administrative requirements that necessitate the collection of information.

Introduction

The U.S. Department of Housing and Urban Development (HUD) has been conducting comparable Assisted Housing Quality Control (QC) error measurement studies since 2000. The findings from the most recent study indicate that subsidies for at least 34 percent of households receiving assistance through the Public Housing and Section 8 programs contain some type of error, resulting in $375 million in net annual erroneous payments. This is a great improvement over the findings from the 2000 study, according to which at least 56 percent of the subsidies for households receiving housing assistance contained some type of error, resulting in more than $1 billion in net annual erroneous payments. HUD continues to need information that will help sharpen its management efforts to correct the most serious of such errors and meet the requirements of the Improper Payments Information Act of 2002.

This series of three quality control studies will provide HUD with updated estimates on the type, severity, and cost of errors in the income (re)certification and rent calculation process. It will also analyze changes in the type and severity of such errors since the previous studies. This information, along with other HUD efforts, will provide HUD with mechanisms to improve determinations of assisted-housing tenants’ income, rent, and subsidy amounts. HUD will use the findings from this study to focus efforts on correcting the most serious errors and determine the effectiveness of corrective measures.

Authorization to Collect Information

The collection of survey data by HUD is authorized under the Housing and Urban Development Act of 1970 (12 U.S.C. 1701z-1):

Sec. 501. The Secretary of Housing and Urban Development is authorized and directed to undertake such programs of research, studies, testing, and demonstration relating to the mission and programs of the Department as he determines to be necessary and appropriate.

Sec. 512(g). The Secretary is authorized to request and receive such information or data as he deems appropriate from private individuals and organizations, and from public agencies. Any such information or data shall be used only for the purposes for which it is supplied, and no publication shall be made by the Secretary whereby the information or data furnished by any particular person or establishment can be identified, except with the consent of such person or establishment.

Sec. 210 of the Housing and Community Development Amendments of 1979 states—

The Secretary shall establish procedures which are appropriate and necessary to assure that income data provided to public housing agencies and owners by families applying for or receiving assistance under this section is complete and accurate. In establishing such procedures, the Secretary shall randomly, regularly, and periodically select a sample of families to authorize the Secretary to obtain information from these families for the purposes of income verification, or to allow those families to provide such information themselves. Such information may include, but is not limited to, data concerning unemployment compensation and Federal income taxation and data relating to benefits made available under the Social Security Act, the Food Stamp Act of 1977, or title 38, United States Code. Any such information received pursuant to this subsection shall remain confidential and shall be used only for the purpose of verifying incomes in order to determine eligibility of families for benefits (and the amount of such benefits, if any) under this section.

Title I, Section 1, Sec. 8(k) of the Housing Act of 1937 as amended [Public Law 93-383, 88 Stat. 633] (42 U.S.C. 1437) states—

The Secretary shall establish procedures which are appropriate and necessary to assure that income data provided to public housing agencies and owners by families applying for or receiving assistance under this section is complete and accurate. In establishing such procedures, the Secretary shall randomly, regularly, and periodically select a sample of families to authorize the Secretary to obtain information on these families for the purposes of income verification, or to allow those families to provide such information themselves. Such information may include, but is not limited to, data concerning unemployment compensation and Federal income taxation and data relating to benefits made under the Social Security Act, the Food Stamp Act of 1977, or title 38, United States Code. Any such information received pursuant to this subsection shall remain confidential and shall be used only for the purpose of verifying incomes in order to determine eligibility of families for benefits (and the amount of such benefits, if any) under this section.

To further institutionalize Federal agency efforts to eliminate improper payments, the President signed the Improper Payments Information Act (IPIA) of 2002 (Public Law 107-300) into law on November 26, 2002. The central purpose of the IPIA is to enhance the accuracy and integrity of Federal payments. To achieve this objective, the IPIA provides an initial framework for Federal agencies to identify the causes of, and solutions to, reducing improper payments. In turn, guidance issued by the Office of Management and Budget (OMB) in May of 2003 (Memorandum 03-13) requires agencies to: (i) review every Federal program, activity, and dollar to assess risk of significant improper payments; (ii) develop a statistically valid estimate to measure the extent of improper payments in risk susceptible Federal programs; (iii) initiate process and internal control improvements to enhance the accuracy and integrity of payments; and (iv) report and assess progress on an annual basis.

2. Use of information

Indicate how, by whom, and for what purposes the information is to be used; indicate actual use the agency has made of the information received from current collection.

HUD will use the information to identify the amount and source of error and to develop corrective measures that can be taken to reduce the amount of error in eligibility determinations and rent calculations. HUD and its agents need information concerning the type and severity of errors that are occurring in order to construct effective remedies. If those data are not collected, no assessment of the amount and type of errors can be made, nor can corrective actions be developed and implemented. Without such corrective action, it is believed that a portion of HUD subsidies will be misused. For example, the FY 2004 study (the most recent study with published findings) found various net payment errors, detailed below.

All summary error estimates represent the summation of net case-level errors. That is, a case is determined to have a net overpayment error, no error, or a net underpayment error. Major findings were—

Rent Underpayments of Approximately $681 Million Annually (down from $896 million in FY 2003). For tenants who paid less monthly rent than they should pay (18%), the average monthly underpayment was $72. For purposes of generalization, total underpayment errors were spread across all households (including those with no error and overpayment error) to produce a program-wide average monthly underpayment error of $13 ($156 annually). Multiplying the $156 by the approximately 4.4 million units represented by the study sample results in an overall annual underpayment dollar error of approximately $681 million per year.

Rent Overpayments of Approximately $306 Million Annually (down from $519 million in FY 2003). For tenants who paid more monthly rent than they should pay (16%), the average monthly overpayment was $37. When this error was spread across all households, it produced an average monthly overpayment of $6 ($70 annually). Multiplying the $70 by the approximately 4.4 million assisted housing units represented by the study sample results in an overall annual overpayment dollar error of approximately $306 million per year.

Aggregate Net Rent Error of $375 Million Annually. When combined, the average gross rent error per case is $19 ($13 + $6). Over- and underpayment errors partly offset each other. The net overall average monthly rent error is $7 ($13 – $6). HUD subsidies for Public Housing and Section 8 programs equal the allowed expense level or payment standard minus the tenant rent, which means that rent errors have a dollar-for-dollar correspondence with subsidy payment errors, except in the Public Housing program in years in which it is not fully funded (in which case errors have slightly less than a dollar-for-dollar effect). The study found that the net subsidy cost of the under- and overpayments was approximately $375 million per year ($681 million – $306 million).
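The annualization arithmetic behind these findings can be sketched in a few lines of Python. This is an illustrative check only, not the study's estimation procedure; the function name is hypothetical, and the inputs are the rounded whole-dollar monthly averages quoted above. Because those averages are rounded, the results approximate rather than reproduce the published totals of $681 million and $306 million.

```python
def annual_program_error(avg_monthly_error, units):
    """Annualize a per-household monthly error and scale it to all units."""
    return avg_monthly_error * 12 * units

# Approximate number of assisted units represented by the study sample.
UNITS = 4_400_000

# Rounded program-wide monthly averages quoted in the findings (assumed inputs).
under = annual_program_error(13, UNITS)  # rent underpayments
over = annual_program_error(6, UNITS)    # rent overpayments

print(f"Rent underpayments: ${under / 1e6:,.1f} million per year")
print(f"Rent overpayments:  ${over / 1e6:,.1f} million per year")
print(f"Net rent error:     ${(under - over) / 1e6:,.1f} million per year")
```

The gap between these figures and the published ones is consistent with the published totals having been computed from unrounded averages.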

Subsidy over- and underpayment dollars are summarized in Exhibit A2.1.

Exhibit A2.1. Subsidy Dollar Error

| Type of Dollar Error | Subsidy Overpayment | Subsidy Underpayment |
| --- | --- | --- |
| Average Monthly Per Tenant Error for Households with Errors | $72 (18% of cases) | $37 (16% of cases) |
| Average Monthly Per Tenant Error Across All Households | $13 | $6 |
| Total Annual Program Errors | $681 million | $306 million |
| Total Annual Errors, 95% Confidence Interval | $574–$789 million | $247–$366 million |

Exhibit A2.2 provides estimates of program administrator error by program type.

Exhibit A2.2. Estimates of Error in Program Administrator Income and Rent Determinations (in $1,000s)

| Administration Type | Subsidy Overpayments | Subsidy Underpayments | Net Erroneous Payments | Gross Erroneous Payments |
| --- | --- | --- | --- | --- |
| Public Housing | $173,172 | $68,904 | $104,268 | $242,076 |
| PHA-Administered Section 8 | $366,492 | $154,728 | $211,764 | $521,220 |
| Total PHA Administered | $539,664 | $223,632 | $316,032 | $763,296 |
| Owner-Administered | $141,708 | $82,740 | $58,968 | $224,448 |
| Total | $681,372 | $306,372 | $375,000 | $987,744 |
| | (+/-$107,203) | (+/-$59,293) | (+/-$113,149) | (+/-$131,201) |
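As a rough consistency check on Exhibit A2.2, the net and gross columns can be recomputed from the overpayment and underpayment columns. The sketch below is illustrative only; the dictionary layout and function name are assumptions, and the values are copied from the exhibit (in $1,000s).

```python
# (subsidy overpayments, subsidy underpayments) in $1,000s, from Exhibit A2.2.
rows = {
    "Public Housing": (173_172, 68_904),
    "PHA-Administered Section 8": (366_492, 154_728),
    "Owner-Administered": (141_708, 82_740),
}

def net_and_gross(over, under):
    """Net error is over minus under; gross error is over plus under."""
    return over - under, over + under

for name, (over, under) in rows.items():
    net, gross = net_and_gross(over, under)
    print(f"{name}: net ${net:,}, gross ${gross:,}")

# Program-wide totals across the three administration types.
total_over = sum(over for over, _ in rows.values())
total_under = sum(under for _, under in rows.values())
total_net, total_gross = net_and_gross(total_over, total_under)
print(f"Total: net ${total_net:,}, gross ${total_gross:,}")
```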

3. Information technology

Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or other forms of information technology, (e.g., permitting electronic submission of responses, and the basis for the decision for adopting this means of collection). Also describe any consideration of using information technology to reduce burden.

Automation of tenant data collection. We will continue to use the computer-assisted data collection technology developed for the 2000 study (data was collected for actions taken in 1999 and early 2000) and enhanced for the FY 2003, FY 2004, and FY 2005 studies to gather nearly all of the data from project files and tenants. Our field staff will use laptops with modules designed specifically for selecting the tenant sample, abstracting tenant file data (including the 50058/59), and interviewing the tenant. In addition, automated tracking and data monitoring systems will ensure that the data and supporting paper documents are collected and accurate. This approach offers the following advantages:

More objective data collection. QC field staff will apply a consistent set of procedures, questions, and probes. Branching and skip patterns applied by the system will prevent field staff from mistakenly skipping sections, omitting questions, or asking the wrong questions during the tenant interview.

Onsite editing of abstraction and interview data. The computer-assisted data collection process will apply logic, consistency checks, and computational checks on all information provided.

Monitoring of field staff’s productivity and accuracy. Field data, uploaded daily, will be monitored by ORC Macro’s field supervisors for accuracy to assure HUD of high-quality data at a reasonable cost.

4. Efforts to identify duplication

Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use for the purposes described in Item 2 above.

There is no duplication of the data to be collected in this study.

5. Minimization of burden on small entities

If the collection of information impacts small businesses or other small entities, describe any methods used to minimize burden.

Procedures for data collection at the primary participating entities (i.e., PHAs and owners) have been designed to minimize burden as much as possible. Because the data must be collected in a consistent manner, no special procedures are possible for small entities. However, fewer staff members will likely need to be interviewed at smaller entities, where one staff member often has responsibility for several management areas (and can therefore answer questions about them), whereas at larger agencies several staff members may have to be interviewed to obtain all the required information.

The only other small entities that might be involved in this study are small businesses that employ a member of the sample and therefore receive a verification request. A verification request requires only a small amount of information, so the burden on any one employer will be small. We do not anticipate that any small employer will have more than one sample member in its employ.

6. Consequences of not collecting the information

Describe the consequences to Federal program or policy activities if the collection is not conducted or is conducted less frequently as well as any technical or legal obstacles to reducing burden.

If this data collection did not occur, HUD would be in violation of the Improper Payments Information Act (IPIA) of 2002 (Public Law 107-300). The central purpose of the IPIA is to enhance the accuracy and integrity of Federal payments. To achieve this objective, the IPIA provides an initial framework for Federal agencies to identify the causes of, and solutions to, reducing improper payments. In turn, guidance issued by the OMB in May of 2003 (Memorandum 03-13) requires agencies to: (i) review every Federal program, activity, and dollar to assess risk of significant improper payments; (ii) develop a statistically valid estimate to measure the extent of improper payments in risk susceptible Federal programs; (iii) initiate process and internal control improvements to enhance the accuracy and integrity of payments; and (iv) report and assess progress on an annual basis.

7. Special circumstances

Explain any special circumstances that would cause an information collection to be conducted more often than quarterly or require respondents to prepare written responses to a collection of information in fewer than 30 days after receipt of it; submit more than an original and two copies of any document; retain records, other than health, medical, government contract, grant-in-aid, or tax records for more than three years; in connection with a statistical survey that is not designed to produce valid and reliable results that can be generalized to the universe of study and require the use of a statistical data classification that has not been reviewed and approved by OMB.

This data collection effort does not entail any of these special circumstances.

8a. Federal Register notice

If applicable, provide a copy and identify the date and page number of publication in the Federal Register of the sponsor’s notice, required by 5 CFR 1320.8(d), soliciting comments on the information collection prior to submission to OMB. Summarize public comments received in response to that notice and describe actions taken by the sponsor in response to these comments. Specifically address comments received on cost and hour burden.

In accordance with the Paperwork Reduction Act of 1995, HUD published a notice in the Federal Register announcing the agency’s intention to request an OMB review of data collection activities for the Quality Control for Rental Assistance Subsidy Determinations. The notice was published on May 5, 2006, in Volume 71, Number 87, pages 26,553–26,554 and provided a 60-day period for public comments. A copy of this notice appears in Appendix A. No public comments were received regarding this notice.

8b. Consultation with persons outside the agency

Describe efforts to consult with persons outside the agency to obtain their views on the availability of data, frequency of collection, clarity of instructions and recordkeeping, disclosure or reporting format, and on the data elements to be recorded, disclosed or reported. Explain any circumstances which preclude consultation every three years with representatives of those from whom information is to be obtained.

ORC Macro worked with the consultants listed below, all of whom are fully conversant with the HUD programs included in the study and the policies and procedures of those programs.

Judy Lemeshewsky

Independent Consultant

Former lead Occupancy expert at HUD headquarters for the Office of Housing.

Phone Number: 703-670-5033

Virginia Viles

Independent Consultant

Current owner of private company, Housing and Computer Resources and Development.

Former Housing Specialist and Rehabilitation Specialist for the Housing Authority of the City of Houston

Phone Number: 972-641-7737

9. Payments or gifts to respondents

Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees.

No payment or gift is provided to respondents.

10. Confidentiality

Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

All ORC Macro and data collection staff are required to sign a data confidentiality pledge associated specifically with this study. A copy of this pledge follows.

Confidentiality Pledge

I, Field Interviewer Name, in my role as an employee of Hiring Subcontractor Name, working as a Field Interviewer for the Quality Control for Rental Assistance Subsidy Determinations study, HUD/ORC Macro contract GS-23F-9777H (Task Order #: C-CHI-00829,CHI-T0001), understand and agree to comply with the following:

Confidentiality of Data

All information I obtain, from either formal interviews or in casual observation or conversation, will be treated as confidential and not discussed with any parties not authorized to have access to such data, including (but not limited to) project/PHA staff, other households I may contact, and HUD staff.

Support for Goals of Study/Objectivity

I support the goals of this study and will collect, to the best of my ability, complete and accurate data, and will report the data objectively and without regard to how it might affect the results of this study. I will be objective in all dealings with study participants. I will voice no opinions I may have about assisted housing, assisted housing tenants, and how assisted housing programs are administered, and I will not discuss them with any study participants (including PHA/project staff and households).

Treatment of Hardcopy Documents

All information I obtain from hardcopy documents will be treated as confidential and not discussed with or shown to any parties not authorized to have access to such information, including (but not limited to) project/PHA staff, other households I may contact, and HUD staff.

My signature below signifies my agreement with the above stipulations.

Field Interviewer Signature:

Date:

Assisted-housing tenants are provided with a letter to introduce the study to them and request their participation in an in-person interview. In both the letter and memo provided below, the box at the bottom of the form contains the following text regarding confidentiality.

Office of Management and Budget (OMB) clearance for this study has been obtained, and the OMB clearance number is 2528-0203. All information collected is subject to confidentiality requirements, but may be shared with the staff responsible for your rent determinations.

11. Justification of questions

Provide additional justification for any questions of a sensitive nature, such as sexual behavior and attitudes, religious beliefs, and other matters that are commonly considered private; include specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

Almost all questions in the tenant questionnaire concern household income and expenses and certain characteristics of household members, all of which could be considered to be sensitive areas. Because the purpose of the study is to measure error in rent and eligibility determinations, and such determinations are based on household income, expenses, and certain characteristics (e.g., tenant disability or elderly status, number of dependents), those questions are absolutely necessary to conduct the rent calculations.

Before the in-person tenant interview, the field interviewer will read aloud the following consent form.

12. Hour burden

Estimate of the hour burden of the collection of information.

Four phases of data collection activities with two separate groups of respondents are associated with this study. Phases I and IV entail collecting information from the PHA/owners who represent the project staff that is responsible for administering the assisted-housing programs. Five hundred and fifty PHA/owners will be sampled. In earlier executions of this study, phases I and IV were combined into one phase. With experience we concluded that the data collection is simplified by splitting the one phase into two (i.e., two instruments: a Project-Specific information form and a project staff questionnaire, administered at two separate times).

Phase II of the data collection has no respondent burden. This phase entails a tenant file abstraction task that the data collector executes on site at the PHA/owner site. The PHA/owner is not asked to conduct any recordkeeping or provide any information that is outside the realm of what would normally be accomplished in administering the assisted housing programs under study.

The second respondent group is that of adult tenants who are members of the sampled households. In phase III, 2,400 households will be interviewed.

Phase I Data Collection

Project-Specific Information Form

The project-specific information form is completed by the PHA/owner at the outset of the study. This form, which has four versions based on program type, covers the following topics: project location, project contact information, location of tenant files, and requests for specific values needed to calculate tenant rent (e.g., welfare rent, passbook rates, gross rent). The version that is used for the voucher program also contains sections on rent comparability and utility allowances. This form is mailed on paper to sampled projects for their completion and return. The estimated burden is 550 respondents x 15 minutes = total burden of 8,250 minutes or 138 hours. Copies of the Project-Specific Information Forms and accompanying cover letters are located in Appendix B.

Phase II Data Collection

Tenant File Abstraction

Phase II of the data collection has no respondent burden as described above.

Phase III Data Collection

Household Interview

This interview will be conducted with one household member from each of the 2,400 sampled households to obtain data concerning income, expenses, and household composition to be used in identifying error. The length of the interview will vary depending on the household’s circumstances (e.g., an interview with an elderly household with income only from Social Security and no medical expenses would be considerably shorter than an interview with a family in which several members are employed and that has substantial assets or other unusual circumstances). The estimated range is from 40 to 60 minutes, or an average of 50 minutes. It is not possible to reduce this time since all reasonable income sources must be checked for each household. The estimated burden is 2,400 respondents x 50 minutes = total burden of 120,000 minutes or 2,000 hours. A copy of a paper representation of the computer-assisted personal interview is located in Appendix C.

Phase IV Data Collection

Project Staff Questionnaire

The Project Staff Questionnaire is administered on paper (and possibly via a web interface in future studies) to PHA/owner staff from the middle to the end of the data collection cycle. This document collects information on the number and types of staff administering the assisted housing programs under study, staff training and communication of changes in HUD policy, quality control procedures, conduct of tenant interviews, computer automation, and verification procedures. The estimated burden is 550 respondents x 25 minutes = total burden of 13,750 minutes or 229 hours. A copy of the Project Staff Questionnaire is located in Appendix D.

Table A12.1 summarizes the anticipated burden of each of the data collection components.

Table A12.1. Respondent Burden Estimate by Data Collection Phase

| Phase | Respondent Group | Data Collection Instrument | Estimated Number of Survey Respondents | Minutes per Respondent | Respondent Burden Hours |
| --- | --- | --- | --- | --- | --- |
| I | PHA/owner | Project-Specific Information Form | 550 | 15 | 138 |
| II | | Tenant File Abstraction | No burden | | |
| III | Tenant | Household Interview | 2,400 | 50 | 2,000 |
| IV | PHA/owner | Project Staff Questionnaire | 550 | 25 | 229 |
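The burden figures in Table A12.1 follow from multiplying respondents by minutes per respondent and converting to hours. The following Python sketch reproduces that arithmetic; the function name and the round-half-up convention are assumptions made for illustration, chosen because they match the whole-hour figures reported above.

```python
import math

def burden_hours(respondents, minutes_per_respondent):
    """Total respondent burden in hours, rounded half up to a whole hour."""
    total_minutes = respondents * minutes_per_respondent
    return math.floor(total_minutes / 60 + 0.5)

# (respondents, minutes per respondent) for each phase with respondent burden.
phases = {
    "I: Project-Specific Information Form": (550, 15),
    "III: Household Interview": (2_400, 50),
    "IV: Project Staff Questionnaire": (550, 25),
}

for name, (n, minutes) in phases.items():
    print(f"Phase {name}: {n * minutes:,} minutes = {burden_hours(n, minutes):,} hours")
```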

13. Cost burden

Provide an estimate of the total annual cost burden to respondents or recordkeepers resulting from the collection of information. (Do not include the cost of any hour burden shown in Items 12 and 14).

The cost to respondents will be the time required to respond to the survey.

14. Annualized costs to Federal Government

Provide estimates of annual cost to the Federal Government. Also, provide a description of the method used to estimate cost, which should include quantification of hours, operation expenses (such as equipment, overhead, printing, and support staff), and any other expense that would not have been incurred without this collection of information. Agencies also may aggregate cost estimates from Items 12, 13, and 14 in a single table.

OMB Clearance is being sought for three iterations of this data collection effort. Each data collection (i.e., FY study) lasts for approximately a 20-month period with 95 percent of the cost falling within a one year period. The values provided in Table A14.1 are the total costs to execute an FY study and include: 1) updating of the instruments, correspondence and administrative forms, 2) development of the sampling plan and project and tenant sample selection, 3) review and documentation of existing HUD policy and the study operationalization of that policy, 4) development of the management and analysis plans, 5) systems programming of the data collection software and tracking systems, 6) study pretest, 7) field interviewer training, 8) data collection, 9) data cleaning and processing, 10) data tabulation and analyses, 11) report writing, and 12) overall project management. These costs were estimated by calculating the number of person-hours required to execute the study tasks and adding the associated other direct costs.

Table A14.1. Cost by Study

| Study | Study Timeframe | Cost |
| --- | --- | --- |
| FY 2006 | May 2006–December 2007 | $4,073,072 |
| FY 2007 | May 2007–December 2008 | $4,191,878 |
| FY 2008 | May 2008–December 2009 | $4,344,064 |
| Total | | $12,609,014 |

15. Program changes or adjustments

Explain the reason for any changes reported in Items 13 or 14 above.

There are no changes to items 13 and 14.

16. Plans for tabulation and publication

For collections of information whose results will be published, outline plans for tabulation and publication. Address any complex analytical techniques that will be used. Provide the time schedule for the entire project, including beginning and ending dates of the collection of information, completion of report, publication dates, and other actions.

The primary purpose of the study is to determine the type, severity, and cost of errors associated with income certification and rent calculations. This study will produce national estimates of error in each program and be published in a final report. Fourteen study objectives have been outlined, each having corresponding tabulations and analyses. The analysis plan and table shells for each of the 14 study objectives are located in Appendix E.

The schedule for data collection and reporting is shown in Table A16.1.

Table A16.1. Data Collection and Reporting Schedule

| Activity | Time schedule |
| --- | --- |
| Project Sample Selection | August 2006 |
| Phase I: Project-Specific Information Mailing | Nov 2006 |
| Phase II: Tenant File Abstraction | Feb–June 2007 |
| Phase III: Tenant Household Interview | Feb–June 2007 |
| Phase IV: Project Staff Questionnaire | April–May 2007 |
| Data Cleaning and Analysis | July–Sept 2007 |
| Final Report | Oct 2007 |

17. Non-display of expiration date

If seeking approval to omit the expiration date for OMB approval of the information collection, explain the reasons that display would be inappropriate.

Approval to omit the expiration date is not being sought.

18. Exception to certification statement

Explain each exception to the certification statement identified in Item 19, “Certification for Paperwork Reduction Act Submissions,” of OMB 83-I.

There are no exceptions.

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

1. Respondent universe and sampling methods

Provide a numerical estimate of the potential respondent universe and describe any sampling or other respondent selection method to be used. Data on the number of entities (e.g., households or persons) in the universe and the corresponding sample are to be provided in tabular format for the universe as a whole and for each stratum. Indicate expected response rates. If this has been conducted previously include actual response rates achieved.

The sample allocations have not yet been completed. However, because the methodology and the population are very similar to those used last year, the previous sample figures are provided here. The sample sizes, number of primary sampling units (PSUs), and total number of respondents will be identical. The stratification approach will be the same, but because of the use of implicit stratification and population changes, the exact number of units per stratum will vary.

The universe includes all assisted housing projects and tenants located in the continental United States, Alaska, Hawaii, and Puerto Rico. The following programs will be included in the sample:

Public and Indian Housing (PIH)-administered Public Housing (i.e., Public Housing)

PIH-administered Section 8 projects

Moderate Rehabilitation

Vouchers

Office of Housing-administered projects (i.e., owner-administered)

Section 8 New Construction/Substantial Rehabilitation

Section 8 Loan Management

Section 8 Property Disposition

Section 202 Project Rental Assistance Contracts (PRAC)

Section 202/162 Project Assistance Contracts (PAC)

Section 811 PRAC

The sample will be designed to obtain a 95 percent likelihood that estimated aggregate national rent errors for all programs are within 2 percentage points of the true population rent calculation error, assuming an error of 10 percent of the total rents (based on OMB criteria). In previous studies, we determined that a tenant sample size of 2,400 will yield an acceptable precision for estimates of the total average error.

In addition to the overall estimates, error rates will be estimated for each of the three major program types (Public Housing, PIH-administered Section 8, and Owner-Administered programs). Assuming each constitutes a third of the sample, we will require a 95 percent confidence interval within 5 percent of their population values. Assuming a design effect of 2.0, we multiplied that by 400, a number slightly larger than the number required for the desired precision in case of a random sample, and obtained a tenant sample size of 800 per program, for a total sample size of 2,400. The design effect is the ratio of the variance of the estimate to the variance of the estimate for a random sample of the same size. Past experience has shown a design effect of 2 to be a reasonable assumption for this design.
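
The arithmetic behind the 800-per-program figure can be sketched as follows. This is an illustration only, not the study's production calculation; it assumes the usual normal-approximation formula for a proportion and a worst-case proportion of 0.5, neither of which is stated explicitly in this package. The function name is hypothetical.

```python
import math


def srs_sample_size(margin, proportion=0.5, z=1.96):
    """Simple-random-sample size needed to estimate a proportion within +/- margin.

    Assumption: normal approximation with a worst-case proportion of 0.5.
    """
    return z ** 2 * proportion * (1 - proportion) / margin ** 2


n_srs = srs_sample_size(0.05)      # just under 400 for +/- 5 points at 95 percent
base = 400                          # the slightly larger round figure used in the text
design_effect = 2.0                 # design effect assumed in the text
per_program = int(base * design_effect)
total = per_program * 3             # three major program types

print(math.ceil(n_srs), per_program, total)
```

Under these assumptions the simple-random-sample requirement falls a little below 400, which is consistent with the text's description of 400 as "slightly larger than the number required."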

The FY 2004 study found an average QC rent of $189.50, an average error of $44.75, and a standard error of $2.72. This yields a 95 percent confidence interval of about plus or minus $5.30 (1.96 times the standard error), which is 2.8 percent of the QC rent. HUD considered this an acceptable confidence interval, and hence the basic elements of the design and the sample sizes are being preserved.
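
As a quick check of the FY 2004 precision figures, the following sketch reproduces the interval from the reported standard error. It assumes the conventional 1.96 multiplier for a 95 percent interval; the variable names are illustrative.

```python
z = 1.96                 # conventional multiplier for a 95 percent interval (assumption)
avg_qc_rent = 189.50     # average QC rent reported for FY 2004
std_error = 2.72         # standard error of the average error, FY 2004

half_width = z * std_error                 # half-width of the interval, in dollars
share_of_rent = half_width / avg_qc_rent   # half-width as a share of the QC rent

print(round(half_width, 1), round(100 * share_of_rent, 1))
```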

As will be described later, the sample will be a three-stage sample with 60 PSUs consisting of counties or groups of counties, ten projects within each PSU, and four tenants per project. HUD regions will be used as implicit strata in PSU selection, and the three program types will sub-stratify the PSUs. Table B1.1 illustrates the classification of states, the District of Columbia, and Puerto Rico to HUD regions.

Table B1.1. Allocation of States to HUD Regions

| HUD Region | States |
|---|---|
| 1 | CT, MA, ME, NH, RI, VT |
| 2 | NJ, NY |
| 3 | Washington DC, DE, MD, PA, VA, WV |
| 4 | AL, FL, GA, KY, MS, NC, Puerto Rico, SC, TN |
| 5 | IL, IN, MI, MN, OH, WI |
| 6 | AR, LA, NM, OK, TX |
| 7 | IA, KS, MO, NE |
| 8 | CO, MT, ND, SD, UT, WY |
| 9 | AZ, CA, HI, NV |
| 10 | AK, ID, OR, WA |

Table B1.2 presents the population and expected sample size by region from the FY 2005 study.

Table B1.2. Number of Projects and Units in Sampling Frame by HUD Region

| HUD Region | Projects: PIH-Admin Sec 8 | Projects: Public Housing | Projects: Owner Administered Sec 8 | Projects: Total | Units: PIH-Admin Sec 8 | Units: Public Housing | Units: Owner Administered Sec 8 | Units: Total | Expected PSU Sample | Actual PSU Sample |
|---|---|---|---|---|---|---|---|---|---|---|
| US | 13,484 | 12,007 | 17,445 | 42,936 | 2,194,240 | 1,086,499 | 1,270,214 | 4,550,953 | 60 | 60 |
| 1 | 1,001 | 662 | 1,481 | 3,144 | 148,417 | 63,930 | 110,843 | 323,190 | 4.27 | 5 |
| 2 | 1,696 | 988 | 1,463 | 4,147 | 298,237 | 236,205 | 151,071 | 685,513 | 9.45 | 10 |
| 3 | 1,117 | 1,039 | 1,743 | 3,899 | 180,830 | 98,048 | 146,597 | 425,475 | 5.76 | 6 |
| 4 | 2,484 | 3,614 | 3,235 | 9,333 | 390,774 | 298,751 | 230,227 | 919,752 | 12.69 | 12 |
| 5 | 1,989 | 1,986 | 3,817 | 7,792 | 313,429 | 158,498 | 286,845 | 758,772 | 10.29 | 10 |
| 6 | 1,702 | 1,747 | 1,525 | 4,974 | 269,186 | 108,752 | 100,387 | 478,325 | 6.04 | 6 |
| 7 | 699 | 659 | 1,070 | 2,428 | 98,984 | 38,722 | 58,259 | 195,965 | 2.53 | 2 |
| 8 | 487 | 332 | 746 | 1,565 | 67,569 | 16,893 | 35,960 | 120,422 | 1.49 | 2 |
| 9 | 1,868 | 750 | 1,588 | 4,206 | 354,427 | 55,481 | 116,523 | 526,431 | 6.09 | 6 |
| 10 | 441 | 230 | 777 | 1,448 | 72,387 | 11,219 | 33,502 | 117,108 | 1.39 | 1 |

Response Rates

Two types of non-response may affect this data collection: non-response by PHAs/owners and non-response by tenants.

PHAs/owners

Project-Specific Information

Participation by selected PHAs/owners is mandatory because their contracts with HUD require their participation in studies of this type. In the FY 2005 study, all PHAs/owners completed the Project-Specific Information Form, resulting in a 100 percent response rate. We anticipate a similar response rate for the upcoming studies.

In an effort to ensure PHA/owner participation, the initial mailing is conducted using an overnight delivery service to catch their attention. PHAs/owners are given a date by which the information is needed, and if that date passes, follow-up telephone calls are made to obtain the needed information. If further follow-up is required, a list of the non-responsive PHAs/owners is provided to HUD, which contacts them as well.

Project Staff Questionnaire

Participation by selected PHAs/owners is mandatory because their contracts with HUD require their participation in studies of this type. For the FY 2005 study, 510 of the 544 PHAs/owners have completed the Project Staff Questionnaire to date, resulting in a 94 percent response rate.

In an effort to ensure PHA/owner participation, the initial mailing is conducted using an overnight delivery service to catch their attention. PHAs/owners are given a date by which the information is needed, and if that date passes, follow-up telephone calls are made to obtain the needed information. If further follow-up is required, a list of the non-responsive PHAs/owners is provided to HUD, which contacts them as well. HUD is currently contacting the 6 percent of PHAs/owners who have not responded. Study time limits and budget constraints do not allow us to further pursue these PHAs/owners if they do not respond to HUD’s request for information.

Tenants

Participation by selected tenants is mandatory; refusal to participate could result in termination of their assistance. In the FY 2005 study, 75 tenants were non-responsive out of 2,412 total tenants, resulting in a 94 percent tenant response rate.

The most common reason for tenant non-response is that the tenant moved out of the sampled unit between the file abstraction and household interview phases of the study. Sixteen tenants were seriously ill, while 13 clearly refused participation in the study. An additional 13 tenants were away for extended periods and could not be contacted for an interview during the four-month data collection window. Field interviewers will make at least four in-person contacts with the tenant to conduct interviews with individuals who try to evade the interview. For the FY 2006 study, a similar tenant non-response rate is anticipated. Study time limits and budget constraints do not allow us to further pursue tenants who evade, refuse, or are away during the data collection period.

2. Procedures for collection of information

Describe the procedures for the collection of information, including: Statistical methodology for stratification and sample selection; the estimation procedure; the degree of accuracy needed for the purpose in the proposed justification; any unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Basic Cluster Design

Two levels of clustering will be used in this study:

Projects clustered within PSUs, which are generally groups of counties

Tenants clustered within projects

The optimum number of tenants per project is based on a cost ratio of two additional tenants for each additional project, PSU intraclass correlation ($\rho$), project cost ($C$), and tenant cost ($c$):

$$n_{\text{opt}} = \left[\frac{C(1-\rho)}{c\,\rho}\right]^{1/2}$$

References for this formula can be found in Hansen, Hurwitz and Madow, Vol. I, 1953, formula 16.2. We estimate that adding a project would result in a cost comparable to adding two tenants. In the FY 2003 study, we applied this formula and determined that a sample size of 2.74 tenants per project would be optimal. We chose four tenants per project in order to preserve an acceptable measure of intra-project variance and to take advantage of the fact that errors appear to be concentrated in projects. In the FY 2005 study we used the same design.
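
The optimum described above, the square root of C(1 - rho) / (c * rho) where rho is the intraclass correlation, can be sketched in a few lines. The package does not report the correlation value used in FY 2003, so the sketch backs out the value implied by the reported optimum of 2.74 and the two-to-one cost ratio; that implied value is an inference for illustration, not a figure from the study. Function names are hypothetical.

```python
import math


def optimal_tenants_per_project(project_cost, tenant_cost, rho):
    """Optimum cluster size: sqrt(C * (1 - rho) / (c * rho))."""
    return math.sqrt(project_cost * (1 - rho) / (tenant_cost * rho))


def implied_rho(project_cost, tenant_cost, n_opt):
    """Invert the formula: rho = R / (R + n_opt**2), where R = C / c."""
    ratio = project_cost / tenant_cost
    return ratio / (ratio + n_opt ** 2)


# Cost ratio of two tenants per project, as stated in the text.
rho = implied_rho(project_cost=2, tenant_cost=1, n_opt=2.74)
print(round(rho, 2))
print(round(optimal_tenants_per_project(2, 1, rho), 2))
```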

The optimal number of projects and tenants per cluster is a function of logistics. The same two-to-one ratio that was applied to calculate the optimal number of tenants per project can be used to define cost units. A cost unit is the cost of including a tenant in the survey. Cost units are a function of the data collector’s time and other factors. Ten projects and four tenants per project in a PSU produce sixty cost units (2*10 + 1*10*4 = 60). A design with six projects and eight tenants per project would also have sixty cost units (2*6 + 1*6*8 = 60). Experience has shown that more than sixty cost units result in an impractical amount of work for one data collector to handle. We believe that sixty cost units provide the best balance between logistical requirements and design effect. Given these issues, we decided to sample four tenants per project, ten projects per cluster, and sixty clusters, for a total of 2,400 tenants.
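
The cost-unit arithmetic can be expressed as a small helper, shown here as an illustrative sketch. The function name and default arguments are hypothetical; the two-to-one project-to-tenant cost ratio comes from the text.

```python
def cost_units(projects, tenants_per_project, project_cost=2, tenant_cost=1):
    """Cost units for one PSU: a fixed cost per project plus a cost per tenant."""
    return project_cost * projects + tenant_cost * projects * tenants_per_project


print(cost_units(10, 4))   # ten projects, four tenants each
print(cost_units(6, 8))    # six projects, eight tenants each
```

Both designs cost the same per PSU, so the choice between them rests on the design effect rather than on data collector workload.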

Definition, Allocation and Sampling of Clusters

A sample of 60 PSUs will be designed, with ten projects per PSU and four tenants per project (allowing PSUs and projects to be selected more than once if sufficiently large). Size measures for PSUs and projects will be inflated to add to the same amount. While sampling variance could result in differences in the number of units to be sampled from each of the three programs, this precludes forcing the number of tenants from each of the programs to be the same. The design calls for equal allocation of the three HUD programs: Public Housing, PIH-administered Section 8, and owner-administered projects.

Source files used for sample selection

Owner-Administered Projects. HUD provided one file of information on owner-administered projects, with a record for each property, including the address. SUPP, RAP, service coordinator, and expired contracts will be excluded from the frame. Contracts covering fewer than 14 units will also be excluded.

Voucher and Moderate Rehabilitation Projects. HUD provided two files with voucher tenant counts for each PHA. One file has information on voucher and moderate rehabilitation tenants, including geographic information. The other file contains one record per PHA, and has information on the number of the PHA’s voucher units. The total number of tenants by FIPS can be obtained from the first file. Out-of-state tenants (tenants with transport vouchers who used them in another state) will be counted in the proportion, but the county of their residence will be dropped from possible selection afterwards. Counties where the estimated number of tenants is under 14 will also be dropped.

Public Housing Projects. Two Public Housing files were provided by HUD, and included geographic information for all but a few projects. We will use the county of the PHA or the county from a previous year file to classify these Public Housing projects into counties. Projects with fewer than 14 tenants and projects involved in the Moving to Work program will be dropped. Louisiana parishes affected by Hurricane Katrina (i.e., Jefferson, Orleans, Plaquemines, St. Bernard, St. Charles, St. John the Baptist, St. Tammany, Calcasieu, Cameron) will also be excluded from the frame.

Once the above files are processed, it will be possible to estimate the number of tenants in each program in each county. In the past we have used the total PHA voucher counts from one file and the geographic distribution from the other. This will be done this year contingent on resolution of some apparent discrepancies in the files.

Sample cluster size

The clustering procedure will use counties as the initial cluster. Clusters will be restricted to those with a minimum number of tenants and projects (in the FY 2005 study the numbers were 30 projects and 1,000 tenants, and at least two PHA/county combinations). For these purposes, vouchers will be counted as one project for the first 300 tenants, and as an additional project for every 200 tenants above that (e.g., 500 tenants would count as two voucher projects, but 501 would count as three). When a county does not meet the criterion, we will identify the nearest county in the same state and merge the two. A total of 531 PSUs were created for the FY 2005 study. The clustering program has been highly effective in previous years’ efforts, except that from time to time the resulting PSUs have been unnecessarily large. This has been resolved in the past by a manual revision of PSUs after selection. We will use the new files to create PSUs anew, and will examine the resulting PSUs to determine whether it is desirable to modify the resulting parameters.
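
The voucher counting rule can be illustrated with a short sketch. It reads "an additional project for every 200 tenants above that" as rounding any partial block of 200 upward, which is the reading implied by the example (500 tenants count as two projects, 501 as three). The treatment of a PHA with no voucher tenants is an assumption, and the function name is hypothetical.

```python
import math


def voucher_project_count(tenants):
    """Number of voucher 'projects' credited to a PHA/county for clustering purposes."""
    if tenants <= 0:
        return 0                      # assumption: no tenants, no project
    if tenants <= 300:
        return 1                      # the first 300 tenants count as one project
    # Each further block of up to 200 tenants adds one project.
    return 1 + math.ceil((tenants - 300) / 200)


print(voucher_project_count(500), voucher_project_count(501))
```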

We will select PSUs with probabilities proportional to size (PPS), a standard approach followed in most national surveys. However, the study calls for an equal number of tenants to be selected from each of the three major classes of programs. In order to accomplish this, we will select PSUs with a size measure calculated as the average of the proportions of tenants from each of the three programs found in the PSU. The number of tenants in each program within a PSU will be divided by the number nationwide. The three values will be averaged to create a measure of size that sums to one.

The size measure will then be multiplied by 60—the number of PSUs to be selected—to obtain the expectation of selection for each PSU. If this expectation is less than one it will be interpreted as the probability of selection of the PSU. If it is greater than one, the PSU will be selected with certainty. The integer part of the expectation will indicate the minimum number of times the PSU can be selected and the fractional part will indicate the probability that the PSU will be selected one additional time.
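
The size measure and the resulting expectation can be sketched as follows. The example applies the calculation to HUD Region 1 as a whole, using the unit counts from Table B1.2, because PSU-level counts are not given in this package; since the measure is additive across PSUs, the regional result should correspond to the "Expected PSU Sample" column. Function and dictionary key names are hypothetical.

```python
def size_measure(area_tenants, national_tenants):
    """Average of the area's share of national tenants across the three programs."""
    shares = [area_tenants[p] / national_tenants[p] for p in national_tenants]
    return sum(shares) / len(shares)


def selection_expectation(measure, n_psus=60):
    """Expected number of selections, split into certain hits and a residual probability."""
    expectation = measure * n_psus
    certain_hits = int(expectation)            # minimum number of times selected
    extra_hit_probability = expectation - certain_hits
    return expectation, certain_hits, extra_hit_probability


# Unit counts from Table B1.2 (FY 2005 frame).
national = {"pih_section8": 2_194_240, "public_housing": 1_086_499, "owner_administered": 1_270_214}
region1 = {"pih_section8": 148_417, "public_housing": 63_930, "owner_administered": 110_843}

expectation, certain_hits, extra = selection_expectation(size_measure(region1, national))
print(round(expectation, 2), certain_hits, round(extra, 2))
```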

Sample cluster selection

States will be sorted in a random order within regions, and PSUs will be randomly sorted within states. As the frame is prepared for the selection of PSUs, PSUs will be arranged in order and each assigned an expectation value. A random number will be generated as a starting point to select the PSUs. A cumulative distribution of the expectations will be calculated by adding the expectation of a PSU to the cumulative expectation of the previous one (starting with the random number). Thus the real numbers between 0 and 60 will be divided into segments where each PSU is represented by the segment between the cumulative expectation of the previous PSU (or 0 for the first PSU) and its cumulative expectation. A random number x between 0 and 1 will be selected, and the integers from 0 to 59 will be added to the random number. The numbers x, 1+x, 2+x ... 59+x will define the selected PSUs and a PSU will be selected as many times as one of these numbers falls into its corresponding segment.
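
A minimal sketch of this systematic selection follows. It assumes the frame has already been sorted and that the expectations sum to the number of selections; the function name, the `start` argument (used here to make the example reproducible), and the small example frame are illustrative and not taken from the study.

```python
import random
from bisect import bisect_right
from itertools import accumulate


def systematic_pps(expectations, start=None, rng=random):
    """Return how many times each unit is selected under systematic PPS sampling.

    Unit i owns the segment between the cumulative expectation of the previous
    unit and its own; the selection points are x, 1 + x, 2 + x, ...
    """
    cumulative = list(accumulate(expectations))
    n_hits = round(cumulative[-1])              # e.g. 60 for the PSU sample
    x = rng.random() if start is None else start
    counts = [0] * len(expectations)
    for k in range(n_hits):
        index = bisect_right(cumulative, k + x)
        index = min(index, len(expectations) - 1)   # guard against float round-off
        counts[index] += 1
    return counts


# Hypothetical frame of four units whose expectations sum to 3.
frame = [0.5, 1.6, 0.4, 0.5]
print(systematic_pps(frame, start=0.3))
print(systematic_pps(frame, start=0.7))
```

In the example, the unit with an expectation of 1.6 is selected either once or twice depending on the random start, which mirrors the integer-part and fractional-part interpretation of the expectation.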

This is essentially the Goodman-Kish approach (1950), but using sampling with minimal replacement (Chromy, 1979); see footnotes 2 and 3. This procedure results in sample sizes roughly proportional to the number of tenants in each region, but counting tenants in smaller programs more than those in larger programs. Rather than allocating a fixed number of clusters to each region, this method implicitly stratifies the sample and essentially allows a fractional allocation. In other words, if the expectation for a region is 4.6 PSUs, the region has a 40 percent chance of getting 4 PSUs and a 60 percent chance of getting 5.

In addition, once the PSUs are selected, the larger PSUs will be divided and one of the parts will be selected with PPS. The decision to divide or not will be implemented subjectively, using a map to determine data collection burden. Once a division is made, one of the parts will be selected with PPS using the same combined size measure used in selecting the PSUs.

The FY 2005 study selected 59 distinct PSUs. Three PSUs had expectations greater than 1.0, but only one was selected more than once.

Figure B1.1 displays a map of the geographic location of the PSUs selected for the FY 2005 study.

Figure B1.1 Location of PSUs for FY 2005 Study

Allocation and Sampling of Projects

Unlike the second-stage sampling of previous years, in FY 2005 the selection of projects was done as a second phase. This means that the PSUs were pooled and the projects selected from the combined set of counties. This was done so that the expected number of projects would be approximately ten per county, while the actual number was allowed to vary. It permitted sampling the three HUD programs independently, ensuring both equal initial weights and the ability to replace a project with minimal disruption.

In the FY 2004 study allocations were made for every combination of PSUs and program types. These were created so every PSU would have ten projects for every time it was selected and every program type would have 200 projects sampled. The allocations were calculated so that the probability of selection of a tenant would be the same as those of other tenants in the same program type.

In the FY 2005 study, samples were drawn independently for each of the three program types (converting what had been a two-stage sample into a two-phase sample). This approach improved the evenness of probabilities of selection, but resulted in different numbers of projects sampled in each PSU. Variation in the number of projects in PSUs created logistic difficulties in distributing the work among data collectors. In an effort to identify an approach that continued to improve the evenness of probabilities of selection, and always resulted in 10 projects in each PSU, we conducted simulations and developed a new method of assigning allocations to program type/PSU combinations for the FY 2006 study.

Essentially this method first draws PSUs, and then uses simulations to obtain multiple stratifications of the PSUs which lead to exactly ten in each PSU and 200 for each program type. These are obtained using the same method as used in the last study and using the number of units falling in each program type in each PSU as the allocation for the PSU/program combination. Then a second stage probability sample is drawn using allocations obtained from one of the simulations. Since it is possible that an allocation obtained in the simulations would be zero in a particular cell, we use the average of the probabilities of selection for each allocation before the random selection of one. That way every unit has a known probability of selection.

Thus, a sample of projects will be selected from each sampling cell (program type/PSU combination) with probabilities proportional to the number of tenants. As in previous years, our methodology will allow PHA administered Section 8 projects to be selected more than once, but Public Housing and Owner-Administered projects will be selected only once. The same PPS systematic approach used to select PSUs will be used to select projects.

Selection of Tenants

The initial tenant sample will be only approximately self-weighting because the measures of size used in selecting PSUs will not always correspond to the sum of the measures of size of projects within the PSU. In addition, the number of occupied units found in a project may not correspond to the number of units listed in the frame. To compensate for this issue, we will make individual decisions by project once the project is sampled and its real size determined.

Consider the initial theory behind the sample. Let f be the fixed sampling rate desired for all tenants in the nation. Let p be the overall probability that a project with N tenants is selected. The number of tenants that would need to be sampled from the project to equalize weights (n') is given by: n' = fN/p. (We note that n' may be greater or less than n, the desired fixed sample size.) As a practical matter, project sample size will not be permitted to vary in accordance with this formula, as this would create highly disparate interviewer workloads. It will, however, be allowed to vary if more than a two-to-one ratio between projected and actual weight is discovered. Though this rule was in effect in previous years, its application has not been required in recent years.
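
The weight-equalizing sample size and the two-to-one rule can be sketched as below. The ratio check is one interpretation of "more than a two to one ratio between projected and actual weight": with a fixed take of four tenants, the ratio of actual to projected weight equals the weight-equalizing sample size divided by four. The sampling rate, project sizes, and function names in the example are hypothetical.

```python
def tenants_needed(sampling_rate, project_tenants, project_probability):
    """Tenants to sample from a project so that all tenant weights equal 1 / f."""
    return sampling_rate * project_tenants / project_probability


def needs_adjustment(needed, fixed_sample_size=4, max_ratio=2.0):
    """True when the fixed take would leave weights off by more than max_ratio (assumed rule)."""
    ratio = max(needed / fixed_sample_size, fixed_sample_size / needed)
    return ratio > max_ratio


# Hypothetical project: the frame listed 200 tenants, selected with probability 0.05.
as_listed = tenants_needed(0.001, 200, 0.05)    # about 4, so the fixed take of four fits
as_found = tenants_needed(0.001, 500, 0.05)     # the project actually holds 500 tenants
print(needs_adjustment(as_listed), needs_adjustment(as_found))
```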

Because the selection of tenants will be completed at the PHA/project site, the sampling procedures need to accommodate a variety of possible situations related to the availability of tenant lists. Some lists are computer generated and include optimum information; other lists are manually prepared by project staff and include minimal information. Interviewer procedures will provide instruction on how to select the sample and ORC Macro headquarters staff will be available to provide sampling assistance to the field interviewers by telephone.

Weighting

The probability of selection of a tenant will be the product of the following:

The probability of selection of the PSU.

The probability of selection of the sub-PSU when the PSU was divided.

The probability of selection of the project from the set of projects in the PSU. This is the probability described in the appendix, but capped by 1.0 for tenant-based Section 8 projects.

The probability of selection of the tenant from the set of in-scope tenants in the project. This is the total number of tenants sampled from the project divided by the estimated number of tenants in scope. The estimate is obtained by multiplying the total number of tenants by the proportion of tenants selected who are in scope. As an example, suppose a project lists 120 tenants and six tenants are reviewed to find four who are in scope and available; of the other two, one is out of town and one is no longer subsidized, though his name is still on the list. Five of the six reviewed tenants are in scope, so the estimate would be 120 × (5/6) = 100 tenants.
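
The in-scope estimate in the last item can be sketched as follows, using the figures from the example (a project listing 120 tenants, six reviewed, five in scope). The text does not say whether the out-of-town tenant counts among the "tenants sampled" in the numerator, so the within-project probability below takes that count as an explicit argument; using five in the example is an assumption. Function names are hypothetical.

```python
def estimated_in_scope(listed_tenants, reviewed, in_scope_reviewed):
    """Listed tenants scaled by the share of reviewed tenants found to be in scope."""
    return listed_tenants * in_scope_reviewed / reviewed


def within_project_probability(sampled, listed_tenants, reviewed, in_scope_reviewed):
    """Tenants sampled divided by the estimated number of in-scope tenants."""
    return sampled / estimated_in_scope(listed_tenants, reviewed, in_scope_reviewed)


print(estimated_in_scope(120, 6, 5))
# Assumption: all five in-scope tenants (including the one out of town) count as sampled.
print(within_project_probability(5, 120, 6, 5))
```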

The four probabilities will be multiplied together, and the reciprocal of the product will form the preliminary weight. The weights will then be adjusted to sum to estimates of the national total of tenants in each program. The final step will be trimming the weights. Extreme weights will be reduced and the weights will be re-adjusted so that they sum to the same national totals.
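
The weighting steps can be sketched as below, taking the preliminary weight as the reciprocal of the product of the four selection probabilities (the usual design-weight convention, and an interpretation of the text). The package does not specify the trimming rule, so the cap used here, a multiple of the mean weight applied in a single pass, is purely an assumption for illustration; all names and figures in the example are hypothetical.

```python
def preliminary_weight(p_psu, p_sub_psu, p_project, p_tenant):
    """Design weight: reciprocal of the overall probability of selection."""
    return 1.0 / (p_psu * p_sub_psu * p_project * p_tenant)


def scale_to_total(weights, target_total):
    """Rescale weights so that they sum to the program's national tenant total."""
    factor = target_total / sum(weights)
    return [w * factor for w in weights]


def trim_and_rescale(weights, target_total, cap_ratio=3.0):
    """Cap extreme weights, then rescale so the sum matches the national total again.

    Assumption: the cap is cap_ratio times the mean weight, applied once.
    """
    scaled = scale_to_total(weights, target_total)
    cap = cap_ratio * target_total / len(weights)
    trimmed = [min(w, cap) for w in scaled]
    return scale_to_total(trimmed, target_total)


# Hypothetical preliminary weights for four tenants in one program.
raw = [100.0, 120.0, 90.0, 2000.0]
final = trim_and_rescale(raw, target_total=1000.0)
print([round(w, 1) for w in final])
```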

3. Maximization of response rates

Describe methods used to maximize the response rate and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield “reliable” data that can be generalized to the universe studied.

Two types of non-response may affect this data collection: non-response by PHAs/owners and non-response by tenants.

PHAs/owners

Participation by selected PHAs/owners is mandatory because their contracts with HUD require their participation in studies of this type. In an effort to ensure PHA/owner participation, the initial mailing is conducted using an overnight delivery service to catch their attention. PHAs/owners are given a date by which the information is needed, and if that date passes, follow-up telephone calls are made to obtain the needed information. If further follow-up is required, a list of the non-responsive PHAs/owners is provided to HUD, which contacts them as well. Appendix B contains study letters that are provided to PHAs/owners at the outset of the study (i.e., Phase I) and again in Phase IV.

Tenants

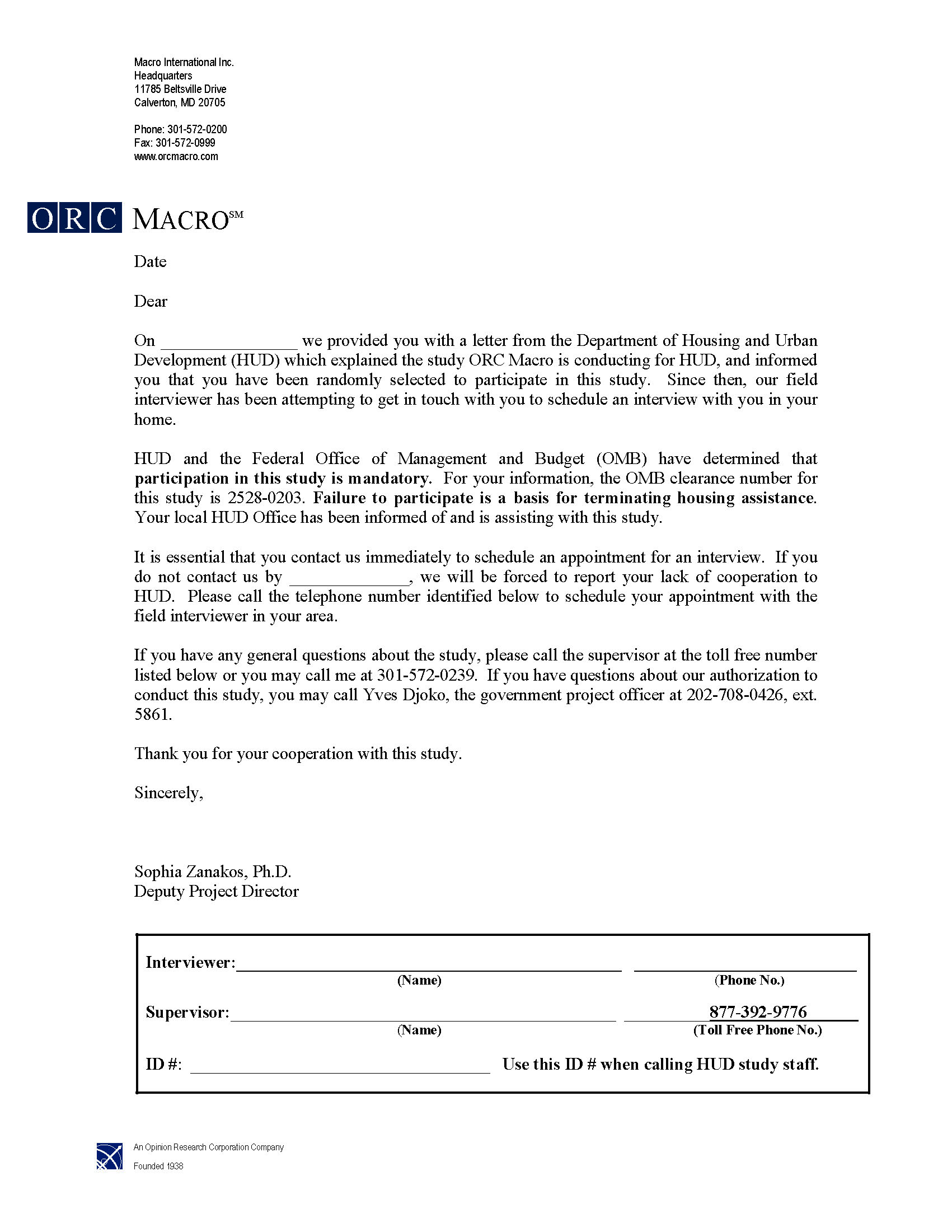

Participation by selected tenants is mandatory; refusal to participate could result in termination of their assistance. Field interviewers will make at least four in-person contacts with the tenant to conduct interviews with individuals who try to evade the interview. Page 9 of this document contains a letter that is provided to tenants regarding this study. In addition, the following letter is occasionally used to encourage tenant participation.

4. Tests of procedures or methods

Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions of 10 or more individuals.

Previous iterations of this data collection serve as the pretests for this data collection effort. As mentioned previously, similar studies have been conducted in 2000 (data was collected for actions taken in 1999 and early 2000) and enhanced for the FY 2003, FY 2004, and FY 2005 studies. Before each data collection cycle, all changes or enhancements to the study are tested in an in-house procedure that evaluates the administrative and computer systems-related aspects of the study. Prepared case examples (those used in training our field interviewers) are abstracted and entered into our data collection system. Additionally, mock household interview data is entered into our data collection system and all associated administrative paperwork is created and processed. Finally, tracking reports are produced to determine that our reporting system is in place and accurate.

5. Individuals consulted on statistical aspects of design

Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

ORC Macro Staff—Design and Data Collection

Mary K. Sistik, Project Director, (301) 572-0488

Dr. Sophia Zanakos, Deputy Project Director, (301) 572-0239

Dr. Pedro Saavedra, Senior Sampling Statistician, (301) 572-0273

1 The actual average monthly value is $5.84. $5.84 * 12 = $70.

2 Chromy, J. R. (1979). “Sequential Sample Selection Methods.” Proceedings of the Survey Research Methods Section, American Statistical Association, 401–406.

3 Goodman, R., and Kish, L. (1950). “Controlled Selection—A Technique in Probability Sampling.” Journal of the American Statistical Association, 45, 350–372.


| File Type | application/msword |

| Author | JoAnn.M.Kuchak |

| Last Modified By | HUD |

| File Modified | 2007-01-11 |

| File Created | 2007-01-08 |
