Per the terms of clearance for 1220-0141, attached are summaries of BLS Cog Labs conducted in Fiscal Year 2006

Cognitive and Psychological Research

OMB: 1220-0141

BUREAU OF LABOR STATISTICS

ANNUAL REPORT

of

COGNITIVE AND PSYCHOLOGICAL RESEARCH

CONDUCTED IN FY2006

Prepared by

SCOTT S. FRICKER

BEHAVIORAL SCIENCE RESEARCH LABORATORY

OFFICE OF SURVEY METHODS RESEARCH

BUREAU OF LABOR STATISTICS

October 31, 2006

Summary Report of Research Conducted Under the

Umbrella Clearance to Conduct Cognitive and Psychological Research

OVERVIEW

The Bureau of Labor Statistics' Behavioral Science Research Laboratory conducts psychological research focusing on the design and execution of the data collection process in order to improve the quality of data collected by the Bureau. The following report summarizes the results of research completed in fiscal year 2006, in accordance with Terms of Clearance provided in the Notice of Action for OMB Approval 1220-0141.

Title: Experimental Study of Navigation Elements on a Web-based Form

Project Number: 2601

Primary Investigator: William Mockovak, Office of Survey Methods Research

Burden Hours Approved: 70

Burden Hours Used: 35

Data collection for this study is approximately half complete. Work on this study had to be postponed this past summer due to higher-priority projects, which occupied our lab.

Recently, the Internet Data Collection Facility (IDCF) staff at BLS announced that, due to the introduction of a new survey form for the Survey of Occupational Injuries & Illnesses and a revised gatekeeper function, they would be unable to maintain the control version of the instrument being tested (the control instrument was maintained on a separate server).

Once IDCF testing and implementation are complete, they may agree to once again support the study, but no restart date has been negotiated at this time.

Title: CE Quarterly 2007 Changes Pre-testing

Project Number: 2602

Burden Hours Requested: 180

Burden Hours Used: 101

Objective: The purpose of this study was to ensure that proposed changes to the 2007 CE instrument are clear and understandable to respondents, and are interpreted as intended.

Method:

Sampling: Participants were recruited from a variety of sources, including the OSMR participant database and outside recruiting and market research firms, to ensure that participants had experience with the topics being tested. Screening questions aided recruitment of participants with the desired target knowledge. Efforts were made to select participants with varying levels of education, income, and occupation, based on self-reported information provided during the initial recruitment process. Experience with the topic being studied was considered as part of the scheduling process, and this information was used to assign topics to participants.

In addition to recruiting in more traditional ways, a list of potential participants was purchased from a marketing company. This list consisted of known timeshare owners and renters, and vacation home owners. The company provided the names and contact information for these people, and they were contacted via email or telephone to explain the purpose of the study and request cooperation. If they were willing to participate, they were interviewed over the telephone using the same script as used in the lab. Participants completing a telephone interview were offered monetary compensation for their time due to logistic complications.

To gain a better understanding of how the proposed changes are understood by a variety of participants, testing was conducted outside of the Washington DC metro area. Three cities were selected on the basis of their demographic distributions, city size and location in the country: Appleton, WI; Richland, WA; and Albuquerque, NM. A private market research firm was used to recruit and screen participants on the same criteria used for participants in Washington DC. Interviews were conducted in area hotels, as arranged by the market research firm. All face-to-face participants were paid $40 for their participation in this study.

Procedure: Cognitive interviewing was used to gain insight into the topics addressed in the changes. Cognitive interviews provide an in-depth understanding of the respondent’s thought processes and reactions to the questions. These interviews were done one-on-one in the Office of Survey Methods Research (OSMR) laboratory and were audiotaped, with an observer taking notes from a separate room using the video system. Interviews were conducted by staff from OSMR or the CE Branch of Research and Program Development (BRPD) who are experienced in conducting this type of research.

The study followed an iterative design, with question wording being modified as problems and improvements were identified. After wording was drafted, the revised question was tested using cognitive interviews. The last step in the study was to cognitively test the proposed final wording of each question to ensure that the intended meaning was understood by participants, and that the questions could be answered by the general public.

Results:

Thirty-five participants were interviewed in the cognitive lab, twelve participants were recruited from the list purchased from the market research firm and participated over the telephone, and fifty-four participants were interviewed in the regional locations. All of the proposed changes to the 2007 CEQ were finalized, with most having been modified from their original proposed wording. A list of finalized changes is available upon request.

Title: CE Individual Diary Feasibility Study

Project Number: 2603

Burden Hours Requested: 390

Burden Hours Used: 453 (using respondent reported average time to complete a diary)

Objectives: To assess the feasibility of using individual diaries designed for others within the consumer unit, which would supplement the main diary kept by the key respondent for major household expenses.

Method: Using 2000 Census data, a sample of addresses (n=549) with two or more occupants was drawn and distributed among 24 field representatives (FRs) around the country. At each address, the FR asked how many people ‘usually live there’ to determine eligibility; only consumer units (CUs) with 2 or more members over the age of 12 were eligible to participate in the test. After determining that the CU was eligible, the FR attempted to place one main diary, the same form as currently used in CE production, with the main diary keeper (MDK) and one individual diary with each CU member aged 12 and over. A $20 debit card was given to all eligible CUs. All individual diary keepers (IDKs) were trained on how to complete the diary, either directly by the FR or by the MDK, who was trained by the FR. When possible, standard CE diary procedures were followed: the CU kept the diary for two one-week intervals, and the FR picked up the diaries at the end of each week. To attain a complete case, the FR had to pick up the main diary and at least 50% of the individual diaries. FRs were instructed to return to the CU for additional diaries until enough were collected to constitute a complete case.
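The eligibility and complete-case rules described above can be sketched in a few lines of Python. This is only an illustration, not the procedure's actual implementation: the `ConsumerUnit` record and its field names are hypothetical, the age threshold is read as "aged 12 and over", and the 50% rule is assumed to be measured against the number of individual diaries placed with the CU.

```python
from dataclasses import dataclass


@dataclass
class ConsumerUnit:
    """Hypothetical record for one consumer unit (CU) in the test."""
    members_age_12_plus: int           # CU members aged 12 and over (assumed reading)
    main_diary_picked_up: bool         # whether the FR collected the main diary
    individual_diaries_placed: int     # individual diaries left with CU members
    individual_diaries_picked_up: int  # individual diaries the FR collected


def is_eligible(cu: ConsumerUnit) -> bool:
    # Only CUs with 2 or more members in the target age range were eligible.
    return cu.members_age_12_plus >= 2


def is_complete_case(cu: ConsumerUnit) -> bool:
    # A complete case needs the main diary plus at least 50% of the
    # individual diaries (assumption: 50% of the diaries that were placed).
    if not cu.main_diary_picked_up:
        return False
    if cu.individual_diaries_placed == 0:
        return False  # assumption: no individual diaries means no complete case
    return 2 * cu.individual_diaries_picked_up >= cu.individual_diaries_placed


if __name__ == "__main__":
    cu = ConsumerUnit(members_age_12_plus=3,
                      main_diary_picked_up=True,
                      individual_diaries_placed=2,
                      individual_diaries_picked_up=1)
    print(is_eligible(cu), is_complete_case(cu))
```

Under these assumptions, a CU with two placed individual diaries becomes a complete case once the main diary and one individual diary are picked up, which is why FRs returned for additional diaries only until that threshold was met.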

Multiple sources of data contributed to the results of this test. All respondents were asked a series of assessment questions at the end of the second week, and also received a self-administered evaluation form. Staff from Census headquarters called a sample of respondents and debriefed them about their experiences. Debriefing sessions were also held with FRs and CE supervisors, and the FRs also completed a questionnaire asking multiple questions about the test. Finally, the data collected in the diaries and travel time information recorded by FRs were keyed and compared to production data.

Results

Response rate

The response rate for the test cases was 61%. The production CED response rate for May 2005 (data used for comparison in this test) was 77%.

A total of 355 interviews were collected (177 CUs participated in week 1 and 178 in week 2).

Respondents

The Main Diary Keepers (MDKs) in the study were overwhelmingly female (74.4% vs. 25.4%), whereas individual diary keepers were mostly male (64.8% vs. 33.2%).

The average CU size was similar between the test (2.8) and production (3.1), after screening production data to include only CUs with two or more members.

Measures of Data Quality

The percent distribution of purchases in each of the diary sections (FDB, MLS, CLO and OTH) varied little between the IDFT data and production.

Checkboxes are used in the diaries to describe attributes of expenditures, such as meal type for MLS or age/sex for CLO. These were missing more often in individual diaries than in the MDK’s diary for MLS and CLO, but slightly less often for FDB.

When data from the MDK’s diary and individual diaries were combined, the pattern across sections still held. The individual diary approach yielded more missing checkboxes than production for MLS and CLO, but did slightly better for FDB.

Expenditure Data

When the mean number of reports was considered, the individual diary approach (MDK and individual diaries combined) exceeded production on all measures, with increases ranging from 5.2 reports in OTH to 6.8 reports in FDB.

An increase was again seen when comparing the mean expenditures to production data (CU Size >2).

Individual diaries collected over $100 per week. Main diaries alone collected $78 more in week 1 and $177 more in week 2 than their production counterparts.

Per CU, the increase was also significant: compared to production data, the data collected in the test were, on average, $281 higher in week 1 and $540 higher in week 2.

Respondent Assessment

Respondents were asked a series of assessment questions when their second diary was picked up.

Most MDKs (87.7%) reported that everyone in the CU ‘had a good idea of what they were supposed to do.’ This was consistent with the 83% of individual diary keepers (IDK) who said the task did not confuse them.

It took MDKs between ten minutes and two hours each week to complete the diary; it took IDKs between five minutes and two hours. The average time was 41 minutes for a main diary and 23 minutes for an individual diary.

81.1% of IDKs said that their CU had no problems completing the diaries.

Most respondents reported that 16 was an appropriate age for participation in the CE diary, with answers ranging from 10 to 20 years old.

Follow-up phone interviews were completed with 48 CUs. In about 30% of the cases, respondents reported that the MDK entered the expenses of other CU members into the individual diaries.

Over 200 respondents completed evaluation forms. They rated the difficulty of completing the diary (“How much work was it to complete the diary?” where 0 was “no work at all” and 10 was an “extreme amount of work”). MDKs gave a mean rating of 3.9; individual diary keepers gave a mean rating of 3.7.

FR Reactions

Some FRs believed that a benefit of individual diaries may be that they emphasize to the MDK the importance of getting expenses from everyone. FRs felt that having individual diaries emphasized the importance of within-CU communication and, as a result, reporting improved in the main diary.

Previous concerns about obtaining parental permission, maintaining privacy, and duplicate reporting among household members were not a problem.

Most FRs reported that it was either easier (13%) or about the same (65%) to convince an MDK to complete a diary as in production. However, about half of the FRs reported that individual diaries were a lot more work to place and to pick up.

The FRs felt that the use of individual diaries generally did not lead to more refusals.

In a secret vote, two-thirds of FRs voted to stop research on individual diaries. In later discussions, FRs voiced support for individual diaries, as long as the small diaries were not required for all households and did not impact response rates.

Participating in the test put an extra burden on the FRs, which may have caused ‘burnout’.

Title: Post-Test Evaluations of MWR Web Application

Project Number: 2604

Burden Hours Requested: 10.0

Burden Hours Used: Approximately 2

Purpose

The Quarterly Census of Employment and Wages (QCEW) program at the Bureau of Labor Statistics (BLS) collects information about employment and wages associated with the payment of Unemployment Insurance (UI) taxes. Each quarter, businesses send a Quarterly Contributions Report (QCR) to their State UI offices. The QCR shows the business's employment for each month of the quarter, the total amount of wages paid that quarter, and a calculation of the UI tax the business owes for the quarter. Employers submit a tax payment with their QCRs.

The Multiple Worksite Report (MWR) supplements the QCR for employers with more than one work location (worksite) in a state. These employers receive the MWR form showing all known worksites. The MWR form directs the employers to provide information on monthly employment and quarterly wages for each worksite. Employers are also asked to update the list of worksites with new locations and to identify any locations that have closed or been sold. States compile the detailed information from the MWRs (along with the data from the QCRs), and submit that data to the Bureau of Labor Statistics. The MWR is mandatory in about half of the States.

BLS has been working with the States to develop a web version of the MWR form. Rather than completing the form on paper, respondents will have the option of submitting their information over the Internet. The web version of the MWR is part of the Internet Data Collection Facility or IDCF. The aim of the Internet Data Collection Facility is to provide an easy, secure way for people to report their data.

Recently, a subset of employers in four States was given the opportunity to report their quarterly data using the new MWR web application. In order to evaluate how well the new web application worked for these respondents, the Office of Survey Methods Research (OSMR) proposes to conduct telephone interviews with respondents in order to receive feedback on the web application and reporting process. The feedback will be used to evaluate the MWR web application, in order to identify ways in which the system may be improved prior to deployment to additional States.

Telephone interviews will be conducted with three types of respondents: 1) Cooperative respondents (i.e., respondents who successfully completed their report using the web application), 2) Incomplete respondents (i.e., respondents who attempted to use the web but did not submit a complete report via the web), and 3) Paper-form respondents (i.e., respondents who chose to report using the paper form despite separate invitations that were sent in two different quarters asking respondents to try the web application).

The goals of the evaluation interviews will be to obtain the respondent’s perspective on how well the system worked, how the web application compares to the paper form, and how the application or process may be improved.

Methodology

OSMR staff conducted telephone interviews with 10 respondents who completed the MWR report via the IDCF system. Respondents were randomly selected from a list of employers who have registered in the IDCF system and submitted their data online.

Interviews with respondents who chose not to complete their report via the IDCF system, despite two quarters of solicitation, are currently underway. These respondents will be randomly selected from a list of employers who were invited to report over the web, but instead submitted their data through the paper form. Ten interviews are anticipated to be conducted by December 2006.

Originally, we had proposed to interview respondents who registered with the IDCF system but failed to submit the data over the web. However, after two quarters of testing, there were not enough of these respondents to warrant investigation.

Participants and Burden Hours

Participants were recruited from the list of MWR web and paper respondents in the four test States (Alabama, Missouri, Virginia and Washington). Ten interviews were conducted. Each interview took an average of 15 minutes. The number of burden hours used was approximately 2 hours.

Results to Date

Interviews with the cooperative respondents (i.e., successfully used the MWR web application to submit their data) revealed that the respondents generally found the system easy to use. They preferred to submit the data via the web because they already use this mode for completing other data requests or surveys. They also reported that they liked how the system is able to check their inputs, through edit functions.

Some found the registration process to be onerous and reported that they had made several attempts before they were able to log on to the system. This is similar to what we observed during usability testing.

Over 80% of the respondents who had reported via the web during the first quarter of the test also chose to use this mode again during the second quarter.

Title: Cognitive Testing for New Questions for the Housing Survey

Project Number: 2605

Burden Hours Requested: 15

Burden Hours Used: 7

Introduction

Efficiently identifying a sample of rental housing units in the BLS CPI Housing survey has important cost implications, as well as direct impacts on respondent burden. Therefore, the purpose of this study was to test alternative mailing materials for a new mail survey screening method for the Housing Survey and to improve the introductions used in the three mailings for the survey.

This study asked participants to complete a mail survey that will be used to screen out owner occupied housing (rental units are the target sample). The mail survey will become the first step in screening the new sample (approximately 40,000 urban postal addresses). After this initial screening, the sample will be handed over to the BLS Office of Field Operations for further screening and initiation by telephone and personal visit. This initial screening should eliminate a large number of owner occupied units, which comprise about 68.5% of housing units according to the CPS April 2005, and thereby greatly reduce the cost of screening. Respondent burden will also be reduced, since in-person or telephone contacts tend to take longer due to the social interaction necessary to complete an interview.
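As a rough illustration of the scale of the screening described above, the short Python sketch below applies the 68.5% owner-occupancy figure to the approximately 40,000-address sample. It is a back-of-the-envelope upper bound rather than a projection: it assumes the sample matches the national owner-occupancy rate and that every owner-occupied address responds to the mail survey and is identified correctly. The function name is hypothetical.

```python
def expected_owner_occupied(sample_size: int, owner_share: float) -> int:
    """Addresses expected to be owner occupied, rounded to a whole address."""
    return round(sample_size * owner_share)


if __name__ == "__main__":
    sample_size = 40_000   # approximate number of urban postal addresses
    owner_share = 0.685    # owner-occupied share cited from the CPS, April 2005

    owners = expected_owner_occupied(sample_size, owner_share)
    remaining = sample_size - owners  # addresses left for field screening

    print(f"Owner occupied (screened out at most): {owners}")
    print(f"Remaining for telephone or personal-visit screening: {remaining}")
```

In practice the number screened out by mail would be lower, since it depends on the mail response rate, which this sketch does not model.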

Methodology

Twenty participants were selected from a database of volunteers maintained by the Office of Survey Methods Research. Participants completed the test version of the Housing screening survey (see Attachment A1) as they would have if they had received it in the mail (i.e., based on their living situation). This survey obtains the bare minimum information required: whether the address is owner occupied. If an address is identified as owner occupied, it will be screened out of the sample.

After completing the survey form, participants were debriefed using a set of structured questions. Following this, they completed an alternate version of the survey questions (see Attachment A2) and were asked to provide feedback on the alternate wording. The alternate wording includes other screening questions used in the field interviews to screen the sample. Since only owners will be removed from the sample, the mail survey data could be compared to the results of the field interviews to determine if sometime in the future more of the sample can be screened via the mail survey. The alternate wording also uses a simpler introduction. Following this, participants were then asked to read the introduction to the two follow-up, nonresponse letters and to provide feedback.

Twenty cognitive interviews were conducted during two rounds of testing. Round 1 focused on the forms shown in Attachment A; Round 2 focused on the forms shown in Attachment B. Round 1 used an iterative approach and began with pre-testing done with four participants, after which minor revisions were made to the forms. This was followed by testing with 10 additional participants and, again, modifications were made to the forms based on this testing. These data are combined as “round one” testing.

In Round 2, six additional interviews were conducted to test the final version of the questions, and then additional changes were made to the forms.

Results from Round 1

A pretest was completed with four participants, and a few changes were made to the survey instruments and to the protocol. Ten additional interviews were then conducted to conclude the first round of testing. Table 1 presents results from Round 1 (the pretest and the first 10 interviews).

Introduction

It was difficult to determine which introduction worked better, Version A1 or Version A2. Seven participants preferred Version A1 and five preferred Version A2. One participant did not like the survey at all and another participant could not make up his/her mind. Participants tended to view Version A1 as easier to understand, while Version A2 was seen as more thorough. Two participants commented that they wondered why their answers mattered if their participation was voluntary, while others said that they liked the idea that the information was important and that it said participation was voluntary.

Some participants doubted that younger people would fill out and return the survey, including a younger participant who said she would not fill it out until she received the third letter. She said if we bothered to send it the third time then it must be important. Several participants expressed that a few specific features would persuade people to respond: using the word “help,” stating that the government is trying to reduce survey costs, and making the CPI personally relevant by mentioning children.

Question

While some participants were surprised by Version A1 (8 of 11) asking only one question, overall they preferred it over asking two questions, as used in Version A2. One participant did not like the survey at all and two could not decide. Several participants mentioned that they preferred different combinations of the versions (such as question A1 paired with introduction A2). Participants did read all of the options and sometimes found some of the categories in Version A2 too specific (they wondered why we wanted the information) or became confused and tried to answer both questions. This latter error was likely due in part to the laboratory setting, which might have encouraged some participants to overthink the question. However, when asked, they all said they would be able to decide if they were a renter or an owner.

Follow-Up Survey Introductions

It was difficult to determine how well the follow-up letters would work because most participants said they would respond to the first mailing. The second letter is intended to add something more to pique the interest of slow responders. Participants tended to want to add things from both letters to make up one “good” letter.

There was a tendency to be critical of the final follow-up letter, but it was not clear if that would be the case for participants who would not respond to the first two mailings. The intent of the last mailing is to “strike a different chord” for reluctant respondents. Participants tended to feel it was stated too strongly or felt a little like a reprimand. However, in prior experience with two Internal Revenue Service studies, a similar letter yielded an increase of about eight to ten percent in the response rate.

Revision Decision

The introductions were revised based on these comments and sentences from both were used to create a single first letter for subsequent testing. The decision was made to go with one question. It was modified based on the results and used for subsequent testing.

The two follow-up nonresponse letters were modified based on the comments and ideas expressed during the interviews (toned down the wording in the second follow-up, added a few points about the importance of the study to the first follow-up).

The Second Round of Testing Housing Survey

Six additional interviews were conducted to test the finalized forms. The interviewer only asked for the reactions to the survey introduction and the question. This round of testing used only one version of the three survey introductions and used a single question. Attachment B includes the three letters and the question tested.

Introduction

At least four of the six participants believed that both the introduction and the question on the first letter were clear. Specifically, the introduction is “accurate” and “complete,” and participants liked the part about reducing costs. However, some participants noted that the second paragraph would probably not be read. It was too long; reading it took longer than completing the survey and, thus, may deter responses to the survey.

Question

The question was thought to be clear, but three participants felt that it might be confusing because of their situations. One suggested making the answer options plural to include families because she felt like she should put renter, but add “and mother.” The other two were college students who thought that living with parents, moving out, or subletting would make them hesitate as to what to put as the answer.

Follow-up Letters

Like the results from the first set of cognitive interviews, the second set of interviews showed that, in general, participants liked the second letter (or first follow-up) and disliked the third letter (or second follow-up). Four of the participants noted that they would return the survey after the second letter. No one said they would return it after the third letter. This is similar to the first set of interviews, in which most participants said they would return it after the first or second letter rather than the third letter. One participant stated that if someone decided not to complete it after the first letter, he or she wouldn’t do it after a second or third letter; this was mentioned in the first set of interviews as well.

Regarding the second letter specifically, most participants believed it was clear. One participant believed that this introduction was clearer than the first (though she did not mention specifically why, just that she liked it better overall). However, another participant believed that it did not have enough information, unlike the first letter. This participant suggested that the purpose be restated by reminding people how the survey helps people, such as children who receive school lunches. She felt this should also be done with the third letter.

In fact, there were many negative opinions of the third letter, most regarding the bolded sentence in the paragraph. This resulted in a paragraph that was described as “pushy,” though one participant said she didn’t feel that it was too harsh, but that others might. A suggestion to prevent this was maybe italicizing the sentence, which would stress the importance of the sentence without seeming too harsh. This criticism of the bold statements is unlike in the first set of interviews in which participants noted that they liked it because it drew their eyes to certain parts. Also, participants felt that the second sentence in the paragraph was unnecessary or deterring as people know that answers are different. One participant suggested that this introduction stress the importance of the representative sample so that it acknowledges it’s not just after that individual. One participant also suggested adding a sentence like “Your response is important, we know you’re busy and we’d appreciate your response. We’ll wait to hear from you” and that the information would be kept confidential “as promised.” Many participants in the previous set of interviews also noted that they liked it when they felt their information was important.

General Comments

One participant felt that low-income respondents would have less knowledge of and interest in filling out the survey, so she suggested including more information about the CPI. This can be achieved by including a fact sheet on the back or a listing of resources that people may explore. Another participant felt that there would be higher response rates if the letter was sent through email. Another participant stated that some people just mistrust the government and would not fill it out, and that there was nothing that could be done on the letters to change that. Many participants in the first set of interviews also expressed the view that some people just wouldn’t fill out this survey. However, at least two participants said that they don’t see why someone wouldn’t fill it out, because it is very short, clear, and unobtrusive.

Revision Decisions

Based on these findings, the letters and the question were finalized as shown in Attachment C. For the first letter, minor edits were made and an additional sentence referring to the contractor was added to the last paragraph. The second paragraph was changed to bullets (since people thought it might not be read by everyone). No changes were made to the second letter, except that italics were added to the plea sentence. The third letter was rewritten, since it was not acceptable to several participants. This was done with some hesitation, given that the majority of the participants would be considered cooperative respondents rather than the target of the letter, the reluctant respondent. The question remained the same, except that the plural was added to the owner and renter options.

Formatting mail surveys is rather difficult with the tools available to the OSMR. The contractor is welcome to change the design of the final layout for the survey, with BLS having final say. Also, the contractor should add a reference to an 800 number if they have one available. It is not clear who will sign the letter, nor if a signature is really necessary. The first study worked fine with no signature and produced a 50 percent response rate with only two mailings (and a less than optimal design for the questions). Finally, the new Housing OMB Clearance Number should be added once the clearance is approved.

Attachment A: Housing Survey Protocol

Housing Survey Protocol

Date____________ Time __________ Version for Mail Survey: A First B First

Name ________________________________________________________

Have you ever been a renter? Yes No

Have you ever purchased something on the internet? Yes No

Okay, here is the mail survey I mentioned. Just fill it out like you would if you had received it in the mail.

Let’s begin with the question(s). What were some of the thoughts you had as you answered the question(s)?

What did you think about when you read the introduction? What thoughts came to mind?

Was there anything you found confusing?

Was there anything that might make you not want to complete the survey?

If the person does not respond the first time, we will send the survey two more times. The questions would be the same, but the introductions would change. Would you read the first paragraph and share any thoughts you might have about it with me?

Please read the next one and we will discuss it too. What are your thoughts?

Do you think you would return the survey if you received it? At which point, the first, second, or third time?

Attachment A1: First Letter and Question

Bureau of Labor Statistics

U.S. Department of Labor

Residential Address Here

The U.S. Bureau of Labor Statistics would appreciate your help. We are getting ready to conduct the Consumer Price Index Housing Survey in your city. The Housing Survey is used to measure changes in housing costs, which account for approximately 40 percent of consumers' expenditures.

We have selected this address as a part of our sample to represent other households in your area. Before we begin our field data collection efforts, we would like to determine which households are owned and which are rented so we may further refine our sample for the Housing Survey. We are asking for a moment of your time to answer this simple question. Your answers are voluntary and will be kept confidential.

Please complete the question below and return the survey in the postage paid return envelope.

Who currently lives at this address?

c Owner(s)

c Renter(s)

c Vacant, no one currently lives at this address

c Other type of residence, please explain _________________________________________________.

c Does not apply, this is not a residence

Thank you for your help!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Attachment A2: Alternate Wording for Letter and Questions

Bureau of Labor Statistics

U.S. Department of Labor

Residential Address Here

The U.S. Bureau of Labor Statistics would appreciate your help. We are getting ready to conduct the Consumer Price Index Housing Survey in your local area. The Housing Survey is used to estimate changes in housing costs. Before we begin, we would like to determine which households are owned and which are rented to help us refine the list of addresses we will use for the study. All answers are voluntary and will be kept confidential.

Please complete the questions below and return the survey in the postage paid return envelope.

Who currently lives at this address?

c Owner(s)

c Renter(s)

c Vacant, no one currently lives at this address

c Something else, please answer the next question.

What is located at this address?

Choose one answer that best fits the situation.

c Part-time vacation or summer home

c Assisted living or some type of residential care facility

c Shelter, mission, group home

c Business or other non-residential structures such as churches or schools

c Vacant lot or under construction

c Demolished, condemned, or unfit for habitation

c Don’t Know

c Other: ________________________________________________

Thank you for your help!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Attachment A3: Housing Follow-up Surveys

First Follow-up (all else the same)

A few weeks ago we sent you this short questionnaire and thus far we have not heard from you. Could you please take a moment and complete the questions below? It is important that your area be represented in the study or it may result in an inaccurate estimate of how many people own or rent their homes. As we mentioned, all answers are voluntary, will be kept confidential, and will be used only for statistical purposes. Your help would really be appreciated.

Second Follow-up (all else the same)

Sometimes people who do not respond to surveys are different from those who do respond. This can impact the quality of the information we collect. While this is a short survey, the information is important. Would you please reconsider and respond to our survey? We would really appreciate your help.

Attachment B1: Second Round Housing First Letter and Question

US Bureau of Labor Statistics Letterhead

Smith Residence

222 Donner Street

Victory Pass, Oregon

The Bureau of Labor Statistics would appreciate your help. We are getting ready to conduct the Housing Survey in your city. The question below allows us to refine the list of addresses for the study. By answering this question, you will help us reduce the overall cost of the survey (compared to calling you or coming by your home). Although your participation is voluntary, this information is very important and it will be kept confidential.

The Housing Survey is used to estimate changes in housing costs. This information and other data we collect are used to create the Consumer Price Index (CPI). The CPI is the most widely used measure of inflation and impacts most Americans (such as cost of living adjustments for about 48 million Social Security beneficiaries and about 27 million children who eat lunch at school).

Please complete the question and return the survey in the postage paid return envelope.

Who currently lives at this address? Choose the answer that best fits your situation.

c Owner

c Renter

c Vacant (such as a house, condo, apartment for sale or rent)

c Assisted living or some type of residential care facility

c No one lives here; it is a business or other non-residential structure

c Something else: ________________________________________________

Thank you for your help!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Attachment B2: Second Round Housing Second Letter and Third Letter

Second Letter

You should have recently received a letter telling you about an important survey the [insert contractor’s name] is conducting for the Bureau of Labor Statistics (Consumer Price Index Housing Survey). If you have already completed the survey, please accept our sincere thanks; there is nothing else you need to do. If you have not responded yet, we’d like to urge you to take a few minutes to do so now. As we mentioned, all answers will be kept confidential and will be used only for statistical purposes. Your participation is voluntary, but we would really appreciate your help.

Third Letter

A few weeks ago we sent you this short questionnaire, but we have not received a reply as yet. By collecting this information by mail, it will help reduce the overall cost of the Consumer Price Index Housing Survey (mail is less expensive than calling or coming by your home). Could you please take a moment and complete the question below? As we mentioned, all answers will be kept confidential and will be used only for statistical purposes. Your participation is voluntary, but the information is really important.

Attachment C1: Final Version of the First Letter and Question

Housing Survey First Letter

The Bureau of Labor Statistics would appreciate your help. We are getting ready to conduct the Housing Survey in your city. By answering this question, you will help us reduce the overall cost of the survey (compared to calling you or coming to your home). Although your participation is voluntary, this information is very important and it will be kept confidential.

The Housing Survey, along with other data, is used to create the Consumer Price Index (CPI). The CPI is the most widely used measure of inflation and, among many uses, is used to adjust the:

Cost of living payments for about 48 million Social Security beneficiaries.

Cost of lunches for about 27 million school children.

Federal income tax structure to prevent inflation-induced increases in taxes.

Payments in collective bargaining agreements.

To learn more, please visit our website: http://www.bls.gov/cpi/

The [insert contractor name] is conducting this part of the Housing Survey for the Bureau of Labor Statistics. Please complete the question and return the survey in the postage paid return envelope.

Who currently lives at this address? Choose the answer that best fits your situation.

c Owner(s)

c Renter(s)

c Vacant (such as a house, condo, apartment for sale or rent)

c Assisted living or some type of residential care facility

c No one lives here; it is a business or other non-residential structure

c Something else (Please describe):

________________________________________________

Thank you for your help!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Attachment C2: Final Version of the Second Letter and Question

You should have recently received a letter telling you about an important housing survey the [insert contractor’s name] is conducting for the Bureau of Labor Statistics. If you have already completed the survey, please accept our sincere thanks; there is nothing else you need to do. If you have not responded yet, we’d like to urge you to take a few minutes to do so now. By collecting this information by mail, it will help reduce the overall cost of the Consumer Price Index Housing Survey (mail is less expensive than calling or coming to your home). As we mentioned, all answers will be kept confidential and will be used only for statistical purposes. Your participation is voluntary, but we would really appreciate your help.

To learn more, please visit our website: http://www.bls.gov/cpi/

Please complete the question and return the survey in the postage paid return envelope.

Who currently lives at this address? Choose the answer that best fits your situation.

c Owner(s)

c Renter(s)

c Vacant (such as a house, condo, apartment for sale or rent)

c Assisted living or some type of residential care facility

c No one lives here; it is a business or other non-residential structure

c Something else (Please describe): ______________________________________________

Thank you for your help!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Attachment C3: Final Version of the Third Letter and Question

Housing Survey Third Letter

A few weeks ago we sent you this short questionnaire that is part of a survey to help the U.S. Bureau of Labor Statistics measure changes in the cost of housing in your neighborhood. We have not received a reply as yet. Please help us by taking a moment to complete the question below. As promised, all answers will be kept confidential and will be used only for statistical purposes. Your participation is voluntary, but the information is very important.

Would you please complete the question below and return it to us?

Who currently lives at this address? Choose the answer that best fits your situation.

c Owner(s)

c Renter(s)

c Vacant (such as a house, condo, apartment for sale or rent)

c Assisted living or some type of residential care facility

c No one lives here; it is a business or other non-residential structure

c Something else (Please describe):

________________________________________________

If you recently mailed the survey, please accept our sincere thanks!

Your participation is voluntary.

The Bureau of Labor Statistics, its employees, agents, and partner statistical agencies, will use the information you provide for statistical purposes only and will hold the information in confidence to the full extent permitted by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act of 2002 (Title 5 of Public Law 107-347) and other applicable Federal laws, your responses will not be disclosed in identifiable form without your informed consent.

Paperwork Reduction Act Notice

The time needed to complete this survey was estimated to be less than 5 minutes. If you have comments concerning the accuracy of this time estimate or suggestions for making the survey simpler, you can write to: Office of Prices and Living Conditions, 2 Massachusetts Ave., NE, Rm. 3655, Washington, DC 20212.

Title: Testing of the BLS Public Website

Project Number: 2606

Primary Investigator: Christine Rho, Office of Survey Methods Research

Burden Hours Approved: 100.0

Burden Hours Used: 30.0

Round 1—Usability Test of Existing BLS.gov Site

Introduction

The BLS Website (http://www.bls.gov/) served an average of 1.5 million users each month in 2005. In its relatively short existence, this website has become the primary way for the overwhelming majority of our customers to obtain BLS data and for BLS to communicate with those customers.

The current design has been in place since 2001. Although it has served both the agency and the public well for the past five years, one reality of the Internet is that nothing can remain static for long. User demands, desires, and expectations constantly grow; BLS continues to post more and more information to the site and to expand the site’s uses; and technology continues to improve. Therefore, BLS has decided to redesign its website to better serve the needs of its customers and the mission of the agency. As part of the process of redesign, an initial usability test was conducted with the current website (http://www.bls.gov/) to obtain a baseline level of performance and satisfaction.

During this test, 10 participants each attempted to complete 12 scenarios. Participants also were asked to provide other non-scenario information that is included in this report.

Participants

This usability test included 10 participants. These participants were recruited from three sources: 1) the participant pool maintained by OSMR, 2) BLS staff and interns, and 3) current users of the BLS website. Participants were selected to match as much as possible the type of users of the BLS website. The pre-test question responses of these participants are summarized in the following tables:

Table 1. Demographics

| Gender | n | Age | n | Education | n |
|---|---|---|---|---|---|
| Female | 3 | 18-39 | 6 | High school or less | 1 |
| Male | 7 | 40-59 | 3 | Some college | 2 |
| | | 60-75 | 1 | College degree | 5 |
| | | | | Advanced Degree | 2 |

Table 2a. Experience

| Computer Experience | n | Computer Use | n | Web Experience | n |
|---|---|---|---|---|---|
| Less than 1 year | 0 | Less than 1 hour a week | 0 | Less than 1 year | 0 |
| 1 to 4 years | 0 | 1-10 hours a week | 0 | 1 to 4 years | 1 |
| 5 to 9 years | 2 | 11-20 hours a week | 2 | 5 to 9 years | 3 |
| 10 years or more | 8 | 21 or more hours a week | 8 | 10 years or more | 6 |

Table 2b. Experience

| Web Use | n |
|---|---|
| Less than 1 hour a week | 0 |
| 1-10 hours a week | 3 |
| 11-20 hours a week | 4 |
| 21 or more hours a week | 3 |

Table 2c. Experience

| BLS Website Use | n |
|---|---|
| At least once a week | 2 |
| At least once a month | 2 |
| Once every 3-4 months | 4 |
| Once every 6 months | 0 |
| Once a year | 2 |

Scenarios

Participants were given a set of scenarios to complete on the BLS website. The scenarios were developed by OSMR and the BLS Website Redesign team to test key components of the interface and access to commonly sought content. Each participant attempted to complete 12 scenarios. The scenarios used in this usability test are shown below. The scenarios were presented to participants in random order. Each scenario has been assigned a name to facilitate reading this report.

1 (PCI): You are interested in the Productivity and Costs indexes that BLS produces. You had heard that there were recent corrections made to the publication start date of some of the industries. Please check which industries are affected by this.

2 (NCS): You are opening a small pet supply store in Falls Church, VA and you would like to figure out how much to pay a cashier. Please find the average hourly pay for a cashier in private industry in northern Virginia.

3 (CBA): Does BLS produce the prevailing wage data? Where can you find information about the prevailing wage data under the Davis-Bacon Act?

4 (CPI): You want to know about the 2006 scheduled news releases for the Consumer Price Index (CPI). Please find the page that contains this information.

5 (CE): You're interested in statistics on household expenditures by region that BLS produces. Find which region of the country has the highest annual expenditure in household utilities.

6 (CPS): What was the national annual average unemployment rate for 1992?

7 (OOH): You are considering going to nursing school. What is the expected job growth for registered nurses?

8 (LAUS): Please find the unemployment rate (seasonally adjusted) in the District of Columbia for January 1996.

9 (QCEW): BLS publishes a quarterly count of employment and wages reported by employers covering 98 percent of U.S. jobs, available at the county, MSA, state and national levels by industry. Please find the page that contains this information.

10 (PPI): You heard that the prices received by producers of gasoline rose again in May. By what percentage did the producer price for gasoline increase in May?

11 (ECI): You're interested in finding out how rapidly labor costs are rising for employers. What was the percentage change in these costs (seasonally adjusted) for the first quarter of 2006 (that is, December 2005 to March 2006)?

12 (CES): You read that the manufacturing industry in Michigan has declined dramatically in recent years. How many manufacturing jobs did Michigan have in June 2000?
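The report states that the scenarios were presented in random order but does not describe how the ordering was generated. The following is a minimal sketch of one way to produce a reproducible, independent ordering for each participant. The function name `scenario_order` and the seed value are illustrative assumptions, not part of the UTE application.

```python
import random

# Scenario short names as listed in the report.
SCENARIOS = ["PCI", "NCS", "CBA", "CPI", "CE", "CPS",
             "OOH", "LAUS", "QCEW", "PPI", "ECI", "CES"]


def scenario_order(participant_id, base_seed=2606):
    """Return a reproducible random ordering of the scenarios for one participant.

    base_seed is a hypothetical value chosen for illustration only.
    """
    # A separate generator per participant keeps the orderings independent
    # of each other and repeatable across runs.
    rng = random.Random(base_seed * 1000 + participant_id)
    order = list(SCENARIOS)
    rng.shuffle(order)
    return order


# One ordering per participant (10 participants took part in this test).
orders = {pid: scenario_order(pid) for pid in range(1, 11)}
for pid, order in orders.items():
    print(pid, " ".join(order))
```

Seeding per participant makes it possible to regenerate the exact presentation order later, for example when checking for order effects.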

Test Objectives

The test was run as a scenario-based usability test using the UTE application. The scenarios were designed to address each of the major BLS programs: Employment and Unemployment (e.g., CES, CPS, LAUS, and QCEW), Prices and Living Conditions (e.g., CE, CPI, and PPI), Compensation and Working Conditions (e.g., CBA, ECI, and NCS), Productivity and Technology (e.g., PCI), and Employment Projections (e.g., OOH). The scenarios required the participants to find information or data that were specific to the above programs. Furthermore, the scenarios were also designed to address the following questions (corresponding scenario names appear in parentheses):

1. Are users able to find and access historical data? Do users understand and use the dinosaur icon? (CPS, LAUS)

2. Are users able to find “Economy at a Glance” data? (CES)

3. Do users notice and use the “Latest Numbers” box on program homepages? (PPI, ECI)

4. Do users notice the information in the “People are asking” box? (CPS, CBA)

5. Are users able to find data from “Tables Created by BLS”? (CE)

6. Do users notice the information found under the Special Notices section of the program homepages? (PCI)

7. Are users able to find occupational information in the Occupational Outlook Handbook? (OOH)

8. Will users find the scheduled news releases for a specific index? (CPI)

9. Do users notice and use the search engine? (CBA)

10. Will users find the FAQ link on a Program homepage? (CBA)

Successful Completion

Of the 120 tasks that could have been completed successfully (10 participants × 12 scenarios), participants completed 63, or 52%. They completed another 14 (12%) but selected the wrong answer, and selected 'skip scenario' on the remaining 43 (36%).
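The completion figures above can be re-derived from the raw counts. The short sketch below uses only the counts stated in this report; the variable and function names are illustrative.

```python
# Task outcomes reported for the 120 attempted tasks.
outcomes = {"correct": 63, "wrong_answer": 14, "skipped": 43}

total = sum(outcomes.values())  # 10 participants x 12 scenarios


def share(count, total):
    """Return count as a percentage of total."""
    return 100 * count / total


for name, count in outcomes.items():
    print(f"{name}: {count}/{total} = {share(count, total):.1f}%")
```

The exact share of correct completions is 52.5%, which the report states as 52%.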

Time to Complete

The average time to complete the 63 successful tasks was 200 seconds, or 3 minutes and 20 seconds. The average time spent on tasks that were answered incorrectly, or where participants gave up, was 249 seconds, or 4 minutes and 9 seconds. Some of the skipped or wrong scenarios would have taken even longer, but the tester stopped them after participants were unable to find an answer in a reasonable amount of time.

Number of Viewed Pages

The average number of pages visited when completing the successful tasks was 9.5. The average number of pages visited when attempting to complete the tasks that were wrong or not completed was 12.2. Again, the number of pages most likely would have increased if some of the participants had not been stopped by the tester.

Discussion of Results: Test Objectives

1. Are users able to find and access historical data? Do users understand and use the dinosaur icon? (CPS, LAUS)

Yes and no. Most users were able to find and access historical data. As shown in Figure 2, users were successful in retrieving historical data 80% of the time (16 out of 20). However, of the 16 successful completions, the dinosaur icon was used only three times to access the historical data. The dinosaur icon was meant to be a quick and easy shortcut to historical data, but most participants either did not see the icon or failed to recognize it as a link to historical data. Although the successful completion rate for these scenarios was high, participants struggled to find the pertinent data. This difficulty is reflected in the long completion times (an average of 3-4 minutes) and the large number of pages viewed (an average of 12 pages).

2. Are users able to find “Economy at a Glance” data? (CES)

No. The “Economy at a Glance” sections are the quickest path to finding the type of information sought in this scenario. However, only one participant successfully found and used the “Economy at a Glance” section to complete this scenario. Three out of the four participants who successfully completed this task did so by creating customized tables.

3. Do users notice and use the “Latest Numbers” box on program homepages? (PPI, ECI)

Qualified yes. Only 40% of the attempts at the PPI and ECI scenarios, which addressed this issue, were completed successfully. However, for those who were successful, the “Latest Numbers” box was instrumental in completing the task: seven of the 8 successful completions were the result of participants noticing the “Latest Numbers” box.

4. Do users notice the information in the “People are asking” box? (CPS, CBA)

No. The CPS scenario required users to find the unemployment rate for 1992. In the “People are asking” box, the first question was “Where can I find the unemployment rate for previous years?” Only one user found this question and used it to complete the task.

The response to the CBA scenario could also be found in the “People are asking” box of the Collective Bargaining Program homepage. However, since no one was able to complete this task successfully (none navigated to this page), there was not an opportunity to use the “People are asking” box.

5. Are users able to find data from “Tables Created by BLS”? (CE)

No. Of the 6 correct completions, only two users found the information through the “Tables Created by BLS”. Two other users attempted to use the “Tables Created by BLS” by clicking on the link, but failed to locate the relevant table among the several choices on the page. The majority of users completed this task by creating their own tables.

6. Do users notice the information found under the Special Notices section of the program homepages? (PCI)

Yes. Seventy percent of users successfully found the information contained in the Special Notices section without much difficulty. This scenario had one of the lowest numbers of pages viewed (average of 5 pages) and one of the shortest times to completion (average of 122 seconds).

7. Are users able to find occupational information in the Occupational Outlook Handbook? (OOH)

Yes. Most users were able to find the occupational information in the Occupational Outlook Handbook (70% successful completion). Of the seven successful completions, four users found the link to the Occupational Outlook Handbook on the homepage (after some searching) and two users found the information through the Career Information for Kids page (which they accessed through a link on the A-Z index). One other user was able to find relevant information from another source (other than the Occupational Outlook Handbook) and used that information to choose the correct answer.

8. Will users find the scheduled news releases for a specific index? (CPI)

Yes. In the CPI scenario, users were asked to find the list of scheduled news releases for the current year. Most users (80%) found this information without much difficulty. This is also reflected in the relatively short completion time (average of 71 seconds) and the small number of viewed pages (average of 6 pages).

9. Do users notice and use the search engine? (CBA)

Yes. Seven of the 10 users found and used the search engine to attempt to complete the CBA scenario. This scenario was a difficult one, and none of the users were able to navigate to the Collective Bargaining page, which contained the information about whether BLS produces prevailing wage data. This may be because there are no logical links for prevailing wages or the Davis-Bacon Act on the homepage (and users did not know to click on the Collective Bargaining link). Therefore, the users had to rely on either the search engine or the A-Z index to browse for this topic.

10. Will users find the FAQ link on a Program homepage? (CBA)

Not enough data. The correct response to the CBA scenario was contained in the FAQ section of the Collective Bargaining Agreement program page. However, since none of the users navigated to this page, there were no available observations about whether they would have found the Frequently Asked Questions link.

Satisfaction Scores

The overall System Usability Scale (SUS) satisfaction score was 44. SUS scores can range from 0 to 100, with average scores usually around 65-69. Scores below 60 are considered 'low' satisfaction, and scores above 70 are considered 'above average' satisfaction.
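The report does not describe how the SUS score was computed. The sketch below assumes the standard SUS scoring procedure: ten items rated from 1 to 5, odd-numbered items contributing (rating - 1), even-numbered items contributing (5 - rating), and the sum multiplied by 2.5. It also assumes the overall score is the mean of the individual participants' scores. The response data shown are hypothetical and are not the ratings collected in this test.

```python
from statistics import mean


def sus_score(responses):
    """Score one participant's SUS questionnaire (10 ratings, each 1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5            # rescale the 0-40 sum to 0-100


# Hypothetical ratings for three participants (illustration only).
hypothetical_responses = [
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    [2, 4, 2, 4, 3, 3, 2, 4, 2, 3],
    [4, 2, 4, 3, 3, 2, 4, 2, 3, 3],
]
scores = [sus_score(r) for r in hypothetical_responses]
print("individual scores:", scores)
print("overall score:", mean(scores))
```

Under this scoring, a participant who answers every item with the neutral midpoint (3) receives a score of 50.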

Round 1—Card Sort Exercise of BLS.gov Terms

Introduction

The BLS Website Redesign Team has been organized to design, test, and implement a new navigation structure and look-and-feel for the BLS Website that better serves our customers by making BLS information easier to find and easier to use. This redesign will concentrate on the organization of materials on the BLS Website, the navigation between pages, and the common structural elements of individual web pages.

To address the goal of better organization of information on the website, a card sorting task was conducted with BLS data users.

Test Objectives

The objective of the card sorting task was to gather information on how users group related concepts together. The concepts included menu items from the BLS homepage. This information will be used to reorganize and reduce the vast number of links currently on the BLS homepage.

Method

Participants

Thirty-four participants were recruited from four sources: 1) the participant pool maintained by OSMR, 2) BLS contractors and interns, 3) current users of the BLS website, and 4) Census Bureau staff. Sixteen of the participants were considered BLS Data Users (i.e., they visit the BLS website regularly for research or job-related functions). The remaining 18 participants were considered Casual Data Users (i.e., they occasionally visit the BLS website for specific reasons, such as a job-related search or general interest). Participants were classified as Data Users or Casual Data Users through post-test interviews in which they described their occupation and how they used BLS data. The demographic characteristics of the participants by user type are shown in Table 1.

Table 1. Demographic Characteristics of Participants by User Type

| Group | Gender | n | Age | n | Education | n |
|---|---|---|---|---|---|---|
| Data User Group | Female | 8 | 18-39 | 6 | High school or less | 0 |
| | Male | 8 | 40-59 | 8 | Some college | 0 |
| | | | 60-75 | 2 | College degree | 8 |
| | | | | | Advanced Degree | 8 |
| Casual Data User Group | Female | 15 | 18-39 | 8 | High school or less | 1 |
| | Male | 3 | 40-59 | 9 | Some college | 7 |
| | | | 60-75 | 1 | College degree | 6 |
| | | | | | Advanced Degree | 4 |

As shown in Table 1, the two groups were similar in age distribution. However, the Data User Group had more participants with higher levels of education than the Casual Data User Group. All of the Data User Group participants had at least a college degree. In contrast, only 56% of the Casual Data User Group had a college degree or higher.
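The education comparison can be checked directly against the counts in Table 1. The sketch below uses only those counts; the dictionary layout and the function name are illustrative.

```python
# Education counts by user group, taken from Table 1.
education = {
    "Data User Group": {
        "High school or less": 0, "Some college": 0,
        "College degree": 8, "Advanced Degree": 8,
    },
    "Casual Data User Group": {
        "High school or less": 1, "Some college": 7,
        "College degree": 6, "Advanced Degree": 4,
    },
}


def college_or_higher_share(counts):
    """Return the percentage of a group with a college or advanced degree."""
    group_size = sum(counts.values())
    degree_holders = counts["College degree"] + counts["Advanced Degree"]
    return 100 * degree_holders / group_size


for group, counts in education.items():
    print(f"{group}: {college_or_higher_share(counts):.0f}% college degree or higher")
```

For the Casual Data User Group this gives 10 of 18 participants, which rounds to the 56% stated above.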

The participants were experienced computer and web users (only two participants had less than 5 years of experience using the web). In general, the two groups had similar years of experience using the computer. However, the Data User Group tended to have more experience using the web. All of the Data User participants had at least 5 years of experience using the web, and over 68% of them had 10 or more years of experience, compared with only 39% of the Casual Data User Group.

As expected, the Data User Group frequented the BLS Website more often than the Casual Data User Group. For example, nine of the 16 Data Users (or 56%) visited the BLS Website at least once a month. In contrast, of the 18 Casual Data Users, only four (or 22%) visited the BLS Website at least once a month.

Procedure

The card sorting task was conducted using two different apparatus 1) index cards with site content printed on them or 2) computer-aided application. The majority of the participants carried out the card sorting task on the computer, using a card-sorting application called CardZort, created by Jorge A. Toro and commercially available.

At the start of each session, participants were informed about the purpose of the exercise, i.e., to sort the terms into related categories of their own choosing. No definition of the terms was provided. They were instructed that each card can only be used once (that is they cannot place it in more than one category).

Participants were given the informed consent forms and a brief background questionnaire (see Appendix B). Each session was conducted with one participant at a time (with the exception of the Census Bureau participants, who were all run at the same time). Cards were shuffled and presented in a random order for each participant.

Each session lasted an average of 40 minutes, including the debriefing at the end of the exercise. Participants received a payment of $40 at the completion of the task, except for the Census Bureau participants, who did not receive any payment.

Results

The first step of the analysis of the card sort results was to examine the number of categories that users created to group the 74 cards. Table 4 presents some simple descriptive statistics that characterize these data for the entire sample and separately for the Data User Group and the Casual Data User Group.

Table 4. Number of Categories Created by User Type¹

| | Descriptive Statistics | | |
| --- | --- | --- | --- |
| | Mean | Median | Stand. Dev. |
| Total (n = 30) | 12.8 | 12 | 3.3 |
| Data Users (n = 13) | 13.1 | 12 | 3.1 |
| Casual Data Users (n = 17) | 12.5 | 12 | 3.5 |

From the first row of Table 4 we can see that when aggregating across the entire sample, there were 13 categories created by participants on average, with a median of 12 categories. As expected, however, user experience with BLS data and/or the BLS public website had a slight positive effect on the number of categories generated: participants in the Data User Group created on average about one more category than participants in the Casual Data User Group.
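Summary statistics of this kind can be computed as in the following minimal sketch. The per-participant category counts are hypothetical placeholders, not the study data, and the report does not state whether the sample or population standard deviation was used; the sample form is assumed here.

```python
from statistics import mean, median, stdev

def describe(counts):
    """Return (mean, median, sample standard deviation), rounded to one decimal."""
    return (round(mean(counts), 1), median(counts), round(stdev(counts), 1))

# Hypothetical number of categories created by each participant
# (illustrative values only, not the study data).
category_counts = {
    "Data Users": [10, 12, 12, 14, 17],
    "Casual Data Users": [9, 11, 12, 12, 16],
}

for group, counts in category_counts.items():
    print(group, describe(counts))
```

Running the same function on the pooled counts would give the "Total" row.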

Consistent with this finding, when we restricted our analyses to the Census Bureau participants alone (see Table 5), we found that they tended to be more differentiated in their card sort than the overall Casual Data User Group (13.6 categories for Census staff on average vs. 11.6 for the overall Casual Data User group). This result was especially pronounced for Census Bureau employees who were users of BLS data (mean = 14.7). With the exception of results presented in Table 5, the analyses presented throughout the remainder of this paper are based on the full sample—we did not separate out data from Census Bureau participants. The key comparison remained between the Data User Group and the Casual Data User Group, with classification based on participants’ self-reported usage.

Table 5. Number of Categories Created by User Type, Census Bureau Only

| | Descriptive Statistics | | |
| --- | --- | --- | --- |
| | Mean | Median | Stand. Dev. |
| Total | 13.6 | 12 | 4.2 |
| Data Users | 14.7 | 12 | 4.6 |
| Casual Data Users | 12.8 | 12 | 5.1 |

Data from the individual participants was reviewed to determine if any should be excluded from the analyses. Based on an examination of outliers, one Data User and two Casual Data Users were removed because they appeared to be unrepresentative cases. Table 6 shows the simple descriptive statistics for the sample after removing these outliers. The results were similar to those found in Table 4 for the full sample, with a slight reduction in the number of categories generated in all three groups. The overall mean (and median) number of categories formed was 12, and the Data User Group generated on average one more category than the Casual Data User Group (see Table 6, below).

Table 6. Number of Categories Created by User Type (excluding outliers)²

| | Descriptive Statistics | | |
| --- | --- | --- | --- |
| | Mean | Median | Stand. Dev. |
| Total | 12.0 | 12 | 2.4 |
| Data Users | 12.5 | 12 | 2.4 |
| Casual Data Users | 11.6 | 12 | 2.4 |

Cluster Analyses

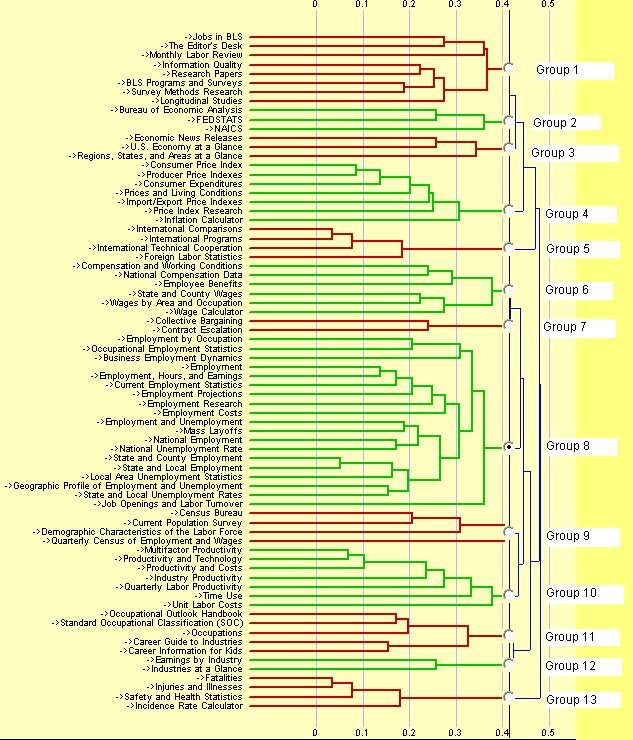

The next step in the data examination was to perform hierarchical cluster analysis on the processed card-sorting data. The standard output of cluster analysis is a hierarchical tree structure called a dendrogram. The dendrogram provides a graphical representation of the way card sort participants organized the materials. Figure 1 shows the dendrogram resulting from the cluster analysis of participants’ sorting of BLS.gov items. The figure was generated from the CardCluster application using data from the entire test sample; thirteen distinct groups of items are identified, corresponding to the mean number of groups generated by the overall sample.

There are several things to notice about the figure. First, the horizontal axis provides a measure of dissimilarity between items within a group. For example, the items International Comparisons and International Programs in Group 5 have a dissimilarity score near zero, which means that they were placed in the same category by virtually all of the participants and, as a result, were placed in the same group by the cluster analysis at an early stage of the procedure. By contrast, the items International Comparisons and Foreign Labor Statistics have a dissimilarity score around 0.2, indicating that these items were sometimes placed in different categories by participants.
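One common way to derive such a score is sketched below, on the assumption that the dissimilarity between two items is 1 minus the share of participants who placed them in the same category. The report does not state the exact measure used by the CardCluster application, and the five card sorts shown are hypothetical.

```python
from itertools import combinations

def dissimilarity(sorts):
    """Map each item pair to 1 - (share of participants who grouped the pair together).

    `sorts` holds one entry per participant; each entry is a list of groups,
    and each group is a set of item names.
    """
    items = sorted({item for sort in sorts for group in sort for item in group})
    together = {pair: 0 for pair in combinations(items, 2)}
    for sort in sorts:
        for group in sort:
            for pair in combinations(sorted(group), 2):
                together[pair] += 1
    return {pair: 1 - count / len(sorts) for pair, count in together.items()}

IC, IP, FLS = ("International Comparisons", "International Programs",
               "Foreign Labor Statistics")

# Hypothetical sorts: four participants keep all three items together,
# one participant puts Foreign Labor Statistics in a separate category.
sorts = [[{IC, IP, FLS}]] * 4 + [[{IC, IP}, {FLS}]]

d = dissimilarity(sorts)
print(round(d[(IC, IP)], 2))   # 0.0
print(round(d[(FLS, IC)], 2))  # 0.2
```

A hierarchical clustering routine would then merge the pairs with the smallest scores first, which is why items sorted together by nearly everyone join at the far left of the dendrogram.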

Another way of conceptualizing dissimilarity is to examine the compactness of a cluster. This represents the minimum distance at which a cluster was formed. The relative compactness of the items in Group 5, for instance, can be estimated by looking at the point at which all of its branches merge together, and the relative distance of that point from the left-hand side of the dendrogram (0.18). The distinctiveness of a sub-cluster within any given cluster is a measure of the distance from the point at which it comes into existence to the point at which it is aggregated into a larger cluster. So, for example, the sub-cluster containing the items International Comparisons, International Programs, and International Technical Cooperation has a distinctiveness score of approximately 0.1, since these items join together at around 0.08, whereas the item Foreign Labor Statistics joins to form the larger cluster at around 0.18. Finally, the overall weight of the cluster simply refers to the number of items in the group divided by the total number of items. Using Group 5 as our example, then, this group has a weight of 4/74, or 0.054.
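The arithmetic behind these measures can be checked with a short sketch. The function names are illustrative, and the merge distances are the approximate values read from Figure 1 as described above, not exact output from the clustering software.

```python
def distinctiveness(formed_at, absorbed_at):
    """Distance between where a sub-cluster forms and where it joins a larger cluster."""
    return absorbed_at - formed_at

def weight(items_in_cluster, items_total):
    """Share of all sorted items that fall in the cluster."""
    return items_in_cluster / items_total

# Approximate distances for Group 5, as read from the dendrogram.
sub_cluster_formed_at = 0.08   # the three "International ..." items join
group_formed_at = 0.18         # Foreign Labor Statistics joins; also the compactness

print(round(distinctiveness(sub_cluster_formed_at, group_formed_at), 2))  # 0.1
print(round(weight(4, 74), 3))                                            # 0.054
```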

As illustrated in Figure 1, some groups of items were consistently categorized in a similar manner by participants, as reflected in the relatively compact and distinct clusters (Groups 5 and 13, and to a lesser extent Group 4). Some clusters proved more diffuse, however (e.g., Groups 1, 8, and 9). Based on the diagram we can conclude that this latter finding reflects participants’ misunderstanding or uncertainty about some of these items in the cluster (e.g., Quarterly Census of Employment and Wages, NAICS), above and beyond participants’ idiosyncratic categorization schemes.

Figure 1. Dendrogram for all users (13 categories)

The next step in the analyses was to examine the effects of experience with BLS data and/or the BLS website on participants’ organizational decisions. We examined the data after selecting the median number of categories (12) as the number of clusters for both groups. We present the results by cluster, using the cluster labels most frequently suggested by the respective data user groups (for copies of the dendrograms for each user group, please see the full report, “BLS Website Card Sort Study,” by Fricker and Rho, 9/25/06).

Price Indexes/Prices & Living Conditions

Group 1 for Data User Group, Group 10 for Casual Data User Group

There was significant overlap between data user groups in the items categorized together under this label. The Data User Group had a nicely nested, hierarchical structure for this group, but the item Contract Escalation appears to have made this cluster less compact than it otherwise would have been. The Casual Data User Group did not include this item at all in this cluster, and in addition appears to have a fairly distinct sub-cluster for the items Inflation Calculator and Prices and Living Conditions. Both of the user groups included the item Import/Export Price Indexes in this cluster, rather than under their clusters related to International Statistics/International Topics.

Productivity & Costs

Group 2 for Data User Group, Group 5 for Casual Data User Group

There was less agreement between user groups for this cluster. Again, the Data User Group showed nice nesting among the 6 items, and a fairly compact cluster. The Casual Data User Group, by contrast, included 8 items in this category, which appears to be separable into two distinct sub-groups. The items Quarterly Census of Employment and Wages, Unit Labor Costs, and Time Use are quite distant from other items in this category for the Casual Data Users. The items Quarterly Census of Employment and Wages and Unit Labor Costs also appear to be problematic for the Data Users, who placed them together in their own category (see Group 6, Figure 2).

BLS & Other Agencies

Group 3 for Data User Group, Groups 8 & 9 for Casual Data User Group

This “group” actually appears to be best represented as two discrete categories. Data Users did group 12 items under a single label, but had two noticeable sub-categories: one corresponding to information about BLS research, and another representing other statistical agencies. Casual Data Users grouped 6 items in a category they most frequently labeled “Other Statistical Agencies,” and 7 items in another category most frequently labeled “About BLS.” Interestingly, both Data Users and Casual Data Users placed the Current Population Survey in the “Other Statistical Agencies” cluster. In addition, the Casual Data Users grouped the item Demographic Characteristics of the Labor Force in this cluster, and placed NAICS with this cluster as well, which is unsurprising since many said during debriefing that they had been unfamiliar with the term.³

Economic Overview/At a Glance

Group 4 for Data User Group, Group 11 for Casual Data User Group

This cluster included only three items for Data Users and two for Casual Data Users (only the Data Users included Economic News Releases). The inclusion of the Regions, States and Areas at a Glance item in this cluster appears to have reduced compactness, and this is likely due to the fact that a number of participants alternatively placed this item in the categories that included other geographic-related items (e.g., State and County Wages).

Wages/Compensation and Wages

Group 5 for Data User Group, Groups 1, 6 & 7 for Casual Data User Group

Data Users grouped 8 items in this cluster, with two discernible sub-clusters: one defined by items with the term “wage” explicitly stated, and another by items associated with compensation and other wage-related topics. Casual Data Users had a single cluster of items explicitly related to “wages” (Group 7). They also apparently misunderstood the term “compensation” to mean recompense for work-related injury and included these items in a cluster most frequently labeled “Safety & Health” (Group 6, Figure 3). In addition, Casual Data Users appear not to have understood the meaning or relevance of the items Collective Bargaining, Contract Escalation, and Job Openings and Labor Turnover, and grouped these items with Jobs in BLS (Group 1) under the cluster most frequently labeled “Personnel” or “Employment.”

Employment & Unemployment

Group 7 for Data User Group, Group 3 for Casual Data User Group

This cluster was by far the largest for both data user groups, which primarily reflects the sizeable number of items in the card sort that explicitly referenced “employment” or “unemployment.” Data Users grouped 21 items in this category, and Casual Data Users grouped 18 together. The Data User Group has two noticeable sub-categories—one related to employment and unemployment generally, and another to occupational employment and occupations. The Casual Data User Group produced a more diffuse cluster, but tended to group employment items separately from unemployment items. The item Business Employment Dynamics appears to have been inconsistently categorized in both user groups. In addition, the item Occupational Employment Statistics seems to have been problematic for the Casual Data Users.

Industry Data

Group 8 for Data User Group, Group 4 for Casual Data User Group

Both groups of participants included 3 items in this cluster: the Data User Group included Industries at a Glance, Earnings by Industry, and (appropriately) NAICS, whereas the Casual Data User Group included Industry Productivity, Industries at a Glance, and Earnings by Industry.

Job/Career Outlook/Career Information

Group 9 for Data User Group, Group 2 for Casual Data User Group