OMB Submission Post OMB Conf Call Final 04-14-2009_B


Demonstration of Speed Management Programs

OMB: 2127-0656

TABLE OF CONTENTS

B. COLLECTION OF INFORMATION EMPLOYING STATISTICAL METHODS

1. Describe the potential respondent universe and any sampling or other respondent selection method to be used.

2. Describe the procedures for the collection of information.

3. Describe methods to maximize response rates and to deal with issues of non-response.

4. Describe any tests of procedures or methods to be undertaken.

5. Provide the name and telephone number of individuals consulted on statistical aspects of the design.

B. Collections of Information Employing Statistical Methods

The proposed study will employ statistical methods to analyze the information collected from respondents. The following sections describe the procedures for respondent sampling and data tabulation. However, before describing these procedures, we reiterate the hypothesis in this study.

The hypothesis in this study focuses on the attitudes of respondents in the test site of Tucson, Arizona and a control site. The hypothesis is that the Public Information and Education campaign associated with the automated and traditional speed enforcement demonstration will affect the general public's attitudes and levels of acceptance of Automated Speed Enforcement. That is, when automated speed enforcement is deployed as a concomitant countermeasure to traditional speed enforcement, there will be a significant increase in the number of people who approve of automated speed enforcement. The phone survey will measure the attitudes of people both “before” and “after” the Tucson demonstration project and Public Information and Education Campaign.

In addition to measuring the attitudes of people both “before” and “after” the demonstration project and Public Information and Education Campaign, there will be a comparison of a test site and control site. We will compare the respondent attitudinal differences for the test site (percentage approving “after” the Tucson demonstration minus the percentage approving “before” the demonstration) to the control site (percentage approving “after” the Tucson demonstration minus percentage approving “before” the demonstration). For the test site we expect a large increase in approval of automated speed enforcement. For the control site we expect little or no percentage increase in approval.

When comparing the test site to the control site differences observed, we expect the phone survey results will show a significant increase in the percentage of people approving of Automated Speed Enforcement in the test site, but this increase will not be observed in the control site. We expect a comparison of test and control group differences to yield statistically significant results. Having summarized the hypothesis, we now describe the respondent universe.

B.1. Describe the potential respondent universe and any sampling or other respondent selection method to be used.

The universe for this survey includes all licensed drivers (18 years and older) residing in the Tucson and control city area(s). This is the adult population that is age-eligible to drive motor vehicles and, given that the focus of this survey is on the reactions of all drivers in response to the countermeasures introduced in the program, it is important that the sampling frame encompass the full adult driving population.

Pima County, Arizona had 662,055 licensed drivers as of 4/1/08. A total of 800 telephone interviews are planned in Tucson and 800 in the control community (location(s) to be determined). As specified in Section A.12., the contractor will administer the survey once before and once after the countermeasure program is introduced in both the demonstration and control sites. Each administration of the survey will reach 400 randomly selected respondents at each site. Identical methods will be used to draw the sample for both administrations.

For each administration of the survey, 400 respondents will be drawn from a probability sample of households in the Tucson and control site areas selected through a random digit dialing (RDD) sampling process.

The target response rate for this survey is at least 50%, because the subject matter is not sensitive, the interview lasts only 15 minutes, and the purpose of the survey and its government sponsorship will be made known to the respondent. The following techniques will be used to increase the response rate:

Developing answers for the questions and objections that may arise during the interview.

Leaving a message on answering machines with a toll-free number.

Employing multi-lingual interviewers to reduce language barriers.

Eliminating non-residential numbers from sample.

Calling back respondents who initially refused or broke off the interview.

Callback procedures will also improve the response rate. The contractor will review refusals and initiate another call using a refusal script. Callbacks will be scheduled and prioritized according to the following criteria: first priority, scheduled callbacks to a qualified household member; second priority, scheduled callbacks to “qualify” a household (including contacts with Spanish-language-barrier households); third priority, callbacks to make initial contact with a household (including answering machine, busy, and ring-no-answer outcomes); and fourth priority, callbacks that are the seventh or later attempt to schedule an interview.
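The four-tier callback ordering can be illustrated with a priority queue. This is a minimal Python sketch, not the contractor's CATI logic: the case-type labels (`scheduled_qualified`, `scheduled_qualify`, `initial_contact`) and the tie-breaking rule within a tier are hypothetical assumptions.

```python
import heapq
from typing import List, Tuple

# Tiers follow the four priorities listed in the text (1 = dialed first).
# The case-type labels are hypothetical names, not CATI system codes.
TIER_BY_KIND = {
    "scheduled_qualified": 1,  # scheduled callback to a qualified household member
    "scheduled_qualify": 2,    # scheduled callback to qualify the household
    "initial_contact": 3,      # answering machine, busy, ring no answer
}
LATE_ATTEMPT = 7               # seventh or later attempt drops to the fourth tier


def callback_tier(kind: str, attempt_number: int) -> int:
    """Priority tier for the next call on a case (lower is more urgent)."""
    if attempt_number >= LATE_ATTEMPT:
        return 4
    return TIER_BY_KIND[kind]


def order_callbacks(cases: List[Tuple[str, str, int]]) -> List[str]:
    """Return case ids in dialing order.

    cases: (case_id, kind, attempt_number) tuples. Within a tier, cases with
    fewer attempts come first, then ties break on case id (an assumption).
    """
    heap = [(callback_tier(kind, n), n, case_id) for case_id, kind, n in cases]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


queue = [
    ("A", "initial_contact", 2),
    ("B", "scheduled_qualified", 3),
    ("C", "scheduled_qualify", 1),
    ("D", "scheduled_qualified", 8),
]
print(order_callbacks(queue))  # ['B', 'C', 'A', 'D']
```

Case D is a scheduled callback to a qualified member, but because it is on its eighth attempt it falls to the fourth tier, as the criteria above specify.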

Scheduled callbacks can be dialed at any time during calling hours, and as often as the callback household requests, up to five times. Callback attempts beyond five are at the interviewer’s discretion, based on his or her perception of the likelihood of completing the interview. That perception will rest in part on how strongly the potential respondent or another household member encourages the interviewer to call back to complete the interview. The interviewer will then confer with a supervisor, and a final determination will be made as to whether the interviewer will continue calling.

Callbacks to Spanish-language-barrier households will be conducted by Spanish-speaking interviewers. Non-Spanish-speaking interviewers who reach a Spanish-speaking household will schedule a callback that will be routed to a Spanish-speaking interviewer.

Callbacks for initial contact with potential respondents will be distributed across the calling time periods and across weekdays and weekends, so that a callback is initiated during each time period each day. Two (Saturday and Sunday) to three (Monday through Friday) callbacks per number will be initiated per day, provided the number retains a callback status. There will be up to ten callback attempts without reaching a person; this protocol is designed for ring-no-answer and answering machine outcomes. When an interviewer reaches an answering machine on the seventh call attempt, the interviewer will leave a message giving the appropriate toll-free (800) number.

Callbacks to numbers with a busy signal will be scheduled every 30 minutes until the household is reached, disposition is modified, maximum callbacks are achieved or the study is completed.
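The no-contact rules above (ten attempts, a daily cap of three on weekdays and two on weekends, a message on the seventh attempt, busy retries every 30 minutes, calling between 9 a.m. and 9 p.m.) can be sketched as a small scheduling function. This is an illustrative Python sketch under stated assumptions: the disposition codes, the three-hour gap between same-day attempts, and applying the ten-attempt maximum to busy numbers are hypothetical choices, not part of the protocol.

```python
from datetime import datetime, timedelta
from typing import Optional, Tuple

MAX_ATTEMPTS = 10                        # up to ten attempts without reaching a person
DAILY_CAP = {"weekday": 3, "weekend": 2}  # callbacks per number per day
MESSAGE_ATTEMPT = 7                      # leave the toll-free number on the 7th call
BUSY_RETRY = timedelta(minutes=30)
SPACING = timedelta(hours=3)             # hypothetical gap between same-day attempts
OPEN_HOUR, CLOSE_HOUR = 9, 21            # 9 a.m. to 9 p.m. local time


def _within_calling_hours(t: datetime) -> datetime:
    """Move t to the next 9 a.m. if it falls outside calling hours."""
    if t.hour < OPEN_HOUR:
        return t.replace(hour=OPEN_HOUR, minute=0, second=0, microsecond=0)
    if t.hour >= CLOSE_HOUR:
        nxt = t + timedelta(days=1)
        return nxt.replace(hour=OPEN_HOUR, minute=0, second=0, microsecond=0)
    return t


def plan_next_call(disposition: str, attempt_number: int, calls_today: int,
                   now: datetime) -> Tuple[bool, Optional[datetime]]:
    """Decide the follow-up after an attempt that did not reach a person.

    disposition: "busy", "no_answer" or "answering_machine" (hypothetical codes).
    attempt_number: 1-based number of the attempt just made.
    calls_today: attempts made on this number today, including this one.
    Returns (leave_message, next_call_time); next_call_time is None once the
    number is finalized as a permanent no answer.
    """
    leave_message = (disposition == "answering_machine"
                     and attempt_number == MESSAGE_ATTEMPT)
    if attempt_number >= MAX_ATTEMPTS:
        return leave_message, None
    if disposition == "busy":
        # Busy numbers are retried every 30 minutes, with no daily cap.
        return leave_message, _within_calling_hours(now + BUSY_RETRY)
    cap = DAILY_CAP["weekend" if now.weekday() >= 5 else "weekday"]
    if calls_today < cap:
        return leave_message, _within_calling_hours(now + SPACING)
    # Daily cap reached: first calling slot on the next day.
    next_day = (now + timedelta(days=1)).replace(
        hour=OPEN_HOUR, minute=0, second=0, microsecond=0)
    return leave_message, next_day


tuesday = datetime(2009, 4, 14, 10, 0)
print(plan_next_call("answering_machine", 7, 1, tuesday))
```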

B.2. Describe the procedures for the collection of information.

The most important elements of the study design of the Speed Management Demonstration Project are:

1) The survey population is defined as the total non-institutionalized licensed driver population, age 18 and older, residing in households with telephones in Tucson, AZ and the control site area(s).

2) The survey will be conducted by telephone, using computer-assisted telephone interviewing from the contractor’s telephone center located in Philadelphia, Pennsylvania.

3) The survey will be administered twice: once before and once after the countermeasure program is introduced.

4) Identical methods will be used to generate probability samples for the surveyed locations. The before and after samples will be drawn independently for each location.

5) A probability sample of telephone households in Tucson, AZ and the control site(s) will be drawn using a List-Assisted Random Digit Dialing scheme.

6) Within a selected household one eligible licensed driver (18 or older) will be selected randomly using the “next birthday” scheme.

7) In total, interviews will be completed with 1,600 people ages 18 and older. The questionnaire focuses on awareness of and support for the Speed Management intervention and Automated Speed Enforcement.

8) The survey will include a Spanish-language version of the questionnaire, administered by bilingual interviewers to minimize language barriers.

9) Interviewers experienced in conducting phone surveys will be selected and trained.

10) Calls will be made during morning, afternoon and evening hours, from 9 a.m. to 9 p.m. local time, Monday through Sunday. The time of calling an individual respondent will be varied to increase response rates among hard-to-reach potential respondents.

B.2.1 Sampling Frame

The purpose of this study is to determine the effect of the countermeasure program on the adult licensed driver population of Tucson, AZ. The control site will likewise cover its adult licensed driver population. The frame consists of households that contain one or more licensed drivers. Since this is a telephone survey, the universe of households will be represented by the frame of household telephone numbers in these cities. Non-telephone households, which represent a small percentage of all households, will not be represented in the universe.

B.2.2 Random Digit Dialing Procedures

Random digit dialing (RDD) will be used to select telephone numbers within selected exchanges for household contact. Telephone numbers in the United States are 10 digits long: the first three digits are the area code, the next three are the exchange, and the last four are the number within the exchange. The first two digits of the four-digit number define a cluster, or “100-bank,” containing 100 numbers (distinguished by the last two digits). Selection will be made from those 100-banks that contain residential listings. Within these 100-banks, a two-digit number is randomly generated. Therefore, every telephone number within the eligible 100-banks has an equal probability of selection, regardless of whether it is listed or unlisted.
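The list-assisted selection described above can be sketched in Python as uniform sampling over every number in the eligible 100-banks. This is an illustration only: the bank prefixes are hypothetical, and in practice the list of banks with residential listings would come from a sample vendor's database, which is not specified here.

```python
import random
from typing import Iterable, List, Optional


def list_assisted_rdd(banks: Iterable[str], n: int,
                      seed: Optional[int] = None) -> List[str]:
    """Draw n distinct 10-digit numbers from 100-banks with residential listings.

    banks: 8-digit prefixes (area code + exchange + first two digits of the
    line number). Each prefix defines a 100-bank whose last two digits run
    00-99. Sampling uniformly over all numbers in the eligible banks gives
    every number, listed or unlisted, the same selection probability.
    """
    frame = sorted(set(banks))
    if any(len(b) != 8 or not b.isdigit() for b in frame):
        raise ValueError("each bank must be an 8-digit prefix")
    rng = random.Random(seed)
    # Index k maps to bank k // 100 and two-digit suffix k % 100.
    picks = rng.sample(range(len(frame) * 100), n)
    return [frame[k // 100] + f"{k % 100:02d}" for k in picks]


# Hypothetical banks; real banks would come from a listed-household database.
eligible = ["52055501", "52055502", "52055503"]
print(list_assisted_rdd(eligible, 5, seed=2009))
```

Sampling an index over the whole frame, rather than first picking a bank and then a suffix, keeps the draw without replacement while preserving equal selection probabilities.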

B.2.3 Selection of Respondent within Households

The sample construction described above yields a population-based, random-digit-dialing sample of telephone numbers. A random selection procedure will be used to select one designated respondent in each sampled household. The “next birthday” method will be used for within-household selection among multiple eligible respondents. Salmon and Nichols (1983)¹ proposed the birthday selection method as a less obtrusive alternative to the traditional grid selection of Kish et al. In theory, birthday selection methods represent true random selection (Lavrakas, 1987)². Empirical studies indicate that the birthday method produces shorter interviews with higher response rates than grid selection (Tarnai, Rosa and Scott, 1987)³.

Upon contacting the household, interviewers will briefly state the purpose of their call (including noting the anonymity of the interviewee), and then request to speak to the person in the household within the eligible age range who will have the next birthday. The CATI system will be programmed to accept the person with the next birthday. If the person who answered the phone is the selected respondent, then the interviewer will proceed with the interview. If the selected respondent is someone else who then comes to the phone, then the interviewer will again introduce the survey (with anonymity statement) and proceed with the interview. If the selected respondent is not available, then the interviewer will arrange a callback.
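The rule the CATI system applies can be illustrated with a short Python function that, given each eligible adult's birth month and day, returns the person whose birthday comes next. This is a hypothetical sketch: in the interview the respondent simply identifies that person, and the handling of a birthday falling today, of February 29, and of ties are assumptions not stated in the text.

```python
from datetime import date
from typing import List, Tuple


def _occurrence(year: int, month: int, day: int) -> date:
    """Birthday in a given year; Feb 29 maps to Mar 1 in non-leap years (assumption)."""
    try:
        return date(year, month, day)
    except ValueError:
        return date(year, 3, 1)


def days_until_birthday(month: int, day: int, today: date) -> int:
    """Days from today to the next birthday; 0 if the birthday is today (assumption)."""
    nxt = _occurrence(today.year, month, day)
    if nxt < today:
        nxt = _occurrence(today.year + 1, month, day)
    return (nxt - today).days


def select_next_birthday(members: List[Tuple[str, int, int]], today: date) -> str:
    """members: (label, birth_month, birth_day) for eligible licensed drivers 18+.

    Ties go to the first member listed (an assumption; the text does not say).
    """
    return min(members, key=lambda m: days_until_birthday(m[1], m[2], today))[0]


household = [("Adult 1", 12, 1), ("Adult 2", 4, 20), ("Adult 3", 1, 5)]
print(select_next_birthday(household, date(2009, 4, 14)))  # Adult 2
```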

B.2.4 Precision of Sample Estimates

The objective of the sampling procedures described above is to produce a representative probability sample of the target population. A random sample shares the properties and characteristics of the total population from which it is drawn, subject to a certain level of sampling error. This means that with a properly drawn sample, one can make statements about the properties and characteristics of the total population within specified limits of certainty (confidence limits) and sampling variability. The confidence interval for sample estimates of population proportions, using simple random sampling without replacement, is calculated by the following formula:

$$ p \pm z \cdot se(x), \qquad se(x) = \sqrt{\frac{p\,q}{n-1}} $$

Where:

se(x) = the standard error of the sample estimate for a proportion

p = the proportion of the sample displaying a certain characteristic or attribute

q = (1 - p)

n = the size of the sample

z = the standardized normal variable for a specified confidence level (1.96 for the 95% confidence level)

Using this formula, the maximum expected sampling error at the 95% confidence level (i.e., in 95 out of 100 repeated samples) for a sample of 400 is ±5 percentage points. Note that the maximum sampling error is based on the conservative assumption that p = q = 0.5.
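The ±5 point figure can be checked directly from the formula in this section. A minimal Python sketch, assuming simple random sampling and the (n - 1) denominator used in B.2.4:

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the confidence interval: z * sqrt(p * q / (n - 1))."""
    q = 1 - p
    return z * math.sqrt(p * q / (n - 1))


print(round(margin_of_error(0.5, 400), 4))  # 0.0491
```

With p = 0.5 and n = 400 the half-width is about 0.049, or roughly 5 percentage points. Any other value of p gives a smaller p·q and therefore a narrower interval, which is why p = q = 0.5 is the conservative choice.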

This is an a priori estimate of precision. The standard error may be larger because of factors such as response rates and applied sampling weights. To test whether significant differences exist between proportions from different samples, such as between the before and after samples, the following procedure and formula apply:

First: We state the hypothesis to be tested. The hypothesis being tested is called the null hypothesis and is denoted H0. Often, the null hypothesis states a specific value for a population parameter. The null hypothesis in this study is: for the phone surveys there will not be a significant difference between the test group time one and time two attitudinal difference and the control group time one and time two attitudinal difference toward automated speed enforcement.

Second: We choose the level of significance at which the test will be performed. This is called the size or level of the test. It is the probability of rejecting the null hypothesis when it is true. The level of the test determines the values of the test statistic (such as t) that would cause us to reject the hypothesis. The significance level will be .05 for this study.

Third: We then, and only then, collect the data and reject the hypothesis or not depending on the observed value of the test statistic.

For example, if H0: μx = μy (which can be rewritten H0: μx - μy = 0), the test statistic is:

$$ z = \frac{\bar{x} - \bar{y}}{\sqrt{\dfrac{p_1 q_1}{n_1 - 1} + \dfrac{p_2 q_2}{n_2 - 1}}} $$

If |z| > 1.96, reject H0: μx = μy at the .05 level of significance,

where x̄ is equivalent to p1, ȳ is equivalent to p2, q1 = 1 - p1, q2 = 1 - p2, and n1 and n2 are the sizes of the two samples.
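A minimal Python sketch of the two-sample test for proportions, assuming independent samples and the per-sample standard error p·q/(n - 1) from the precision formula in B.2.4. The approval shares in the example are hypothetical, not survey results.

```python
import math


def two_proportion_z(p1: float, n1: int, p2: float, n2: int) -> float:
    """z for H0: p1 = p2 with independent samples; se uses p*q/(n-1) per sample."""
    se = math.sqrt(p1 * (1 - p1) / (n1 - 1) + p2 * (1 - p2) / (n2 - 1))
    return (p1 - p2) / se


def reject_h0(z: float, critical: float = 1.96) -> bool:
    """Two-tailed test at the .05 level."""
    return abs(z) > critical


# Hypothetical after/before approval shares in one site, 400 interviews each.
z = two_proportion_z(0.50, 400, 0.40, 400)
print(round(z, 2), reject_h0(z))  # 2.85 True
```

With 400 interviews per wave, a 10 point change from 40% to 50% exceeds the 1.96 critical value, while a 5 point change (40% to 45%) gives z of about 1.43 and would not.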

We conducted an analysis of the possible differences that might arise when testing the null hypothesis (that there will not be a significant difference between the test group time one and time two attitudinal difference and the control group time one and time two attitudinal difference toward automated speed enforcement) for the phone surveys. The responses to Question 10 (Q10) (How do you feel about photo radar enforcement cameras recording drivers exceeding the speed limit?) are the basis for testing the null hypothesis. There are four possible answers to Q10, which have been dichotomized for purposes of this analysis.

The answers “Strongly Approve” and “Approve” are designated as P, and “Disapprove” and “Strongly Disapprove” are designated as Q, where P + Q = 1.

Since there are before and after surveys in Tucson and in a control city, we wish to determine whether there is a significant difference between the results of the before and after surveys for each location. Next, we examine the before and after survey results for the dichotomized responses to Question 10. We have designated ∆1 as the difference in results for Tucson and ∆0 as the difference for the control city.
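The dichotomization of Q10 and the two before/after differences can be sketched in Python as follows. The response lists are tiny hypothetical examples, and dropping answers outside the four Q10 categories (for example, "Don't know") before computing P is an assumption; the text names only the four substantive answers.

```python
from typing import Iterable

APPROVE = {"Strongly Approve", "Approve"}           # counted toward P
DISAPPROVE = {"Disapprove", "Strongly Disapprove"}  # counted toward Q


def share_approving(responses: Iterable[str]) -> float:
    """P for one survey wave. Answers outside the four Q10 categories
    are dropped before computing P (an assumption)."""
    valid = [r for r in responses if r in APPROVE or r in DISAPPROVE]
    return sum(r in APPROVE for r in valid) / len(valid)


def delta(before: Iterable[str], after: Iterable[str]) -> float:
    """Change in P from the before wave to the after wave."""
    return share_approving(after) - share_approving(before)


# Hypothetical, tiny response sets for illustration only.
tucson_before = ["Approve"] * 4 + ["Disapprove"] * 6
tucson_after = ["Strongly Approve"] * 3 + ["Approve"] * 4 + ["Strongly Disapprove"] * 3
control_before = ["Approve"] * 4 + ["Disapprove"] * 6
control_after = (["Approve"] * 5 + ["Disapprove"] * 4
                 + ["Don't know"] + ["Strongly Disapprove"])

delta1 = delta(tucson_before, tucson_after)    # test site
delta0 = delta(control_before, control_after)  # control site
print(round(delta1, 2), round(delta0, 2))  # 0.3 0.1
```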

Test of Significance

First, we determine whether differences between selected combinations of ∆1 (test site of Tucson) and ∆0 (control site) would be significant at the 5% significance level in a two-tailed test. The following table shows selected combinations of values for which the difference is significant.

EXAMPLES OF DIFFERENCES THAT ARE SIGNIFICANT

| ∆1 (Tucson) | ∆0 (control) |
|---|---|
| 0.3 | 0.1 |
| 0.3 | 0.2 |
| 0.3 | 0.4 |
| 0.4 | 0.1 |
| 0.4 | 0.2 |
| 0.4 | 0.3 |
| 0.5 | 0.1 |
| 0.5 | 0.2 |
| 0.5 | 0.3 |
| 0.5 | 0.4 |

Here we see that in the first case, where there is a 30 percentage point (0.3) change in attitudes in the test site and a 10 percentage point (0.1) change in the control site, the difference is significant. That is, if the test site shows an approval (or disapproval) change of 30 percentage points and the control site shows a change of 10 percentage points, the results will be statistically significant. The same holds for the other combinations listed above. Virtually all differences of 10 percentage points between the ∆ values are significant at the .05 level for a two-tailed test. If the difference between ∆1 and ∆0 is less than 5 percentage points, the difference will likely not be significant.
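The combinations listed above can be checked with a z statistic for ∆1 - ∆0. This Python sketch treats the four survey waves as independent samples of 400 and uses the p·q/(n - 1) variance from B.2.4. Because the variance depends on the proportions themselves and not only on the ∆ values, it assumes a hypothetical "before" approval share of 0.40 in both sites; other baselines give somewhat different z values.

```python
import math


def did_z(test_before: float, test_after: float,
          ctrl_before: float, ctrl_after: float, n: int = 400) -> float:
    """z for H0: Delta1 = Delta0, treating the four waves as independent
    samples of n each, with variance p*q/(n-1) per proportion."""
    def var(p: float) -> float:
        return p * (1 - p) / (n - 1)

    delta1 = test_after - test_before
    delta0 = ctrl_after - ctrl_before
    se = math.sqrt(var(test_before) + var(test_after)
                   + var(ctrl_before) + var(ctrl_after))
    return (delta1 - delta0) / se


TABLE = [(0.3, 0.1), (0.3, 0.2), (0.3, 0.4), (0.4, 0.1), (0.4, 0.2),
         (0.4, 0.3), (0.5, 0.1), (0.5, 0.2), (0.5, 0.3), (0.5, 0.4)]
BASELINE = 0.4  # hypothetical "before" approval share in both sites

for d1, d0 in TABLE:
    z = did_z(BASELINE, BASELINE + d1, BASELINE, BASELINE + d0)
    print(f"Δ1={d1:.1f}  Δ0={d0:.1f}  z={z:5.2f}  significant={abs(z) > 1.96}")
```

Under these assumptions every listed pair exceeds 1.96 in absolute value, while a 5 point gap (∆1 = 0.30, ∆0 = 0.25) yields z of about 1.04.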

Power Analysis

Power is defined as 1 minus the probability of a Type II error, where a Type II error is failing to reject the null hypothesis when it is false.

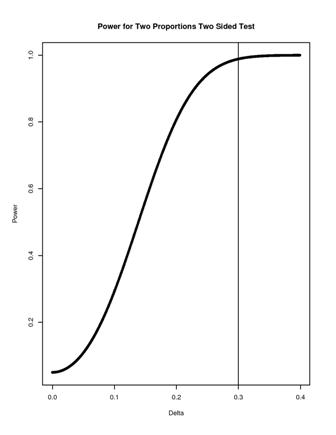

We conducted a power analysis for possible values of ∆1 and ∆0. The following two graphs display the results for a two-sided (.05 level) and a one-sided test of significance.

The first graph indicates that if ∆1 is .3 and ∆0 is .2 the power value is about .8 and if ∆1 is .3 and ∆0 is .1, the power value decreases to about .3.

Virtually the same results are obtained for a one-tailed power analysis. For instance, if ∆1 is .3 and ∆0 is .2, the power value is .8.
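A power value for a given pair of ∆ values can be approximated with the normal distribution. This Python sketch is illustrative and does not reproduce the graphs: it assumes a hypothetical "before" approval share used in both sites, four independent samples of size n, and the p·q/(n - 1) variance from B.2.4, so the numbers it prints depend on those assumptions.

```python
import math
from statistics import NormalDist


def did_power(d1: float, d0: float, baseline: float = 0.4, n: int = 400,
              alpha: float = 0.05, two_sided: bool = True) -> float:
    """Approximate power to detect Delta1 != Delta0 (normal approximation).

    baseline is a hypothetical "before" approval share used in both sites;
    each of the four waves is an independent sample of size n.
    """
    std = NormalDist()
    shares = [baseline, baseline + d1, baseline, baseline + d0]
    se = math.sqrt(sum(p * (1 - p) / (n - 1) for p in shares))
    shift = abs(d1 - d0) / se  # expected z under the alternative
    if two_sided:
        crit = std.inv_cdf(1 - alpha / 2)
        return std.cdf(shift - crit) + std.cdf(-shift - crit)
    crit = std.inv_cdf(1 - alpha)
    return std.cdf(shift - crit)


for d0 in (0.1, 0.2):
    print(d0, round(did_power(0.3, d0), 2),
          round(did_power(0.3, d0, two_sided=False), 2))
```

In this approximation, power rises as the gap between ∆1 and ∆0 widens or as n grows, and a one-sided test has somewhat more power than a two-sided test at the same alpha.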

B.2.5 Data Collection

Data collection for this survey will be conducted from the phone banks of an organization experienced in conducting large-scale national surveys. The contractor will administer the survey using computer-assisted telephone interviewing (CATI), and will have sufficient numbers of CATI stations to conduct the survey.

All interviewers on the project will have been previously trained in effective interviewing techniques as a condition of their employment. The contractor will develop an interview manual for this survey, and conduct a training session specific to this study with all interviewers prior to their conducting any interviews.

Interviewing will be conducted on a schedule designed to facilitate successful contact with targeted households (concentrating on weekends and weekday evenings). Interviewers will make 10 call attempts to ring-no-answer numbers before the number is classified as a permanent no answer. These call attempts shall be made at different times, on different days, over a number of weeks, according to a standard call attempt strategy. However, the 10-call-attempt protocol shall apply only to telephone numbers where nobody picks up the telephone. If someone picks up the phone but terminates the contact before within-household selection of a subject can be made, the Contractor shall apply an alternative protocol of additional contact attempts designed to maximize participation without annoying the prospective household participant. This alternative protocol will further diversify the calling days (weekdays and weekends) and times (morning, afternoon, and evening) to reach the respondent.

The Contractor shall implement a plan for gaining participation from persons in households where telephone contact is made with an answering machine. The Contractor shall leave a message on the machine encouraging survey participation and providing information that the household member can use to verify the legitimacy of the survey and contact the Contractor. The Contractor shall set up a toll-free number that the prospective survey participant can call. The Contractor may also mention these sources of verification to persons contacted directly on the phone if doing so would help secure their participation.

When the household is reached, the interviewer will use a systematic procedure to randomly select one respondent from the household. If the respondent is reached but an interview, at that time, is inconvenient or inappropriate, the interviewer will set up an appointment with the respondent. If contact is made with the household, but not the designated respondent, the interviewer will probe for an appropriate callback time to set up an appointment. If contact is made with the eligible respondent, but the respondent refuses to participate, then the interviewer will record information for use in refusal conversion to be conducted at a later time (see section B.3.).

If the case is designated as Spanish language, it will be turned over to a Spanish-speaking interviewer. If the interviewer encounters a language barrier, the interviewer will thank the person and terminate the call. All interviewers on the study will be supervised in a manner designed to maintain high quality control. One component of this supervision will be periodic monitoring of interviewers while they are working. Through computer and phone technology, supervisors can silently monitor an interviewer’s work without the awareness of either the interviewer or the respondent. A second component is that supervisors will check interviewers’ completed work for accuracy and completeness.

B.2.6 Sample Weighting

The Contractor will carry out a multi-stage sequential process of weighting the survey data. Since this is an RDD telephone survey, the base weight is the inverse of the probability of selecting a telephone number, that is, the number of telephone numbers in the frame divided by the number of telephone numbers sampled. For instance, nationwide there are approximately three hundred million telephone numbers, and for a nationwide sample of 1,000 households the weight would be 300,000,000/1,000, or 300,000. Further weighting adjustments are applied, including at least the following essential adjustments:

Nonresidential telephones

Screener nonresponse

Multiple telephone households

Person selected within household

The previous steps correct the achieved sample for known biases in sample selection. Sample surveys in which participation is voluntary are also subject to self-selection bias; in telephone surveys, such biases involve characteristics such as age, sex, race, household size, region, and urbanicity. A final weighting step will adjust the (already weighted) sample to the distribution of the population, using the Census Population Projections for the characteristics of particular concern.
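The weighting sequence can be sketched in Python: a base weight equal to the inverse of the selection probability, a division by the number of telephone lines, a multiplication by the number of eligible adults, and a final post-stratification to population totals. This is a simplified illustration. The nonresidential and screener-nonresponse adjustments are collapsed into one hypothetical factor (`screener_adj`), the field names are hypothetical, and cell-based post-stratification is used for illustration because the text does not specify the adjustment method (raking is a common alternative).

```python
from collections import defaultdict
from typing import Dict, List


def base_weight(frame_size: int, sample_size: int) -> float:
    """Inverse of the selection probability of a telephone number."""
    return frame_size / sample_size


def design_weights(respondents: List[dict], frame_size: int, sample_size: int,
                   screener_adj: float = 1.0) -> List[float]:
    """Base weight x screener factor / phone lines x eligible adults.

    Field names ('phone_lines', 'eligible_adults') and the single
    screener_adj factor are hypothetical simplifications.
    """
    w0 = base_weight(frame_size, sample_size) * screener_adj
    return [w0 / r["phone_lines"] * r["eligible_adults"] for r in respondents]


def post_stratify(weights: List[float], cells: List[str],
                  totals: Dict[str, float]) -> List[float]:
    """Scale weights so each cell's weighted count matches its population total."""
    cell_sum: Dict[str, float] = defaultdict(float)
    for w, c in zip(weights, cells):
        cell_sum[c] += w
    return [w * totals[c] / cell_sum[c] for w, c in zip(weights, cells)]


print(base_weight(300_000_000, 1_000))  # 300000.0

# Hypothetical respondents and population totals.
resp = [{"phone_lines": 1, "eligible_adults": 2},
        {"phone_lines": 2, "eligible_adults": 1},
        {"phone_lines": 1, "eligible_adults": 1}]
w = design_weights(resp, 1_000_000, 500)
final = post_stratify(w, ["18-34", "35+", "35+"],
                      {"18-34": 300_000, "35+": 500_000})
print(w, [round(x) for x in final])
```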

B.2.7 Variance Estimation

The statistics p and q are calculated from a probability sample of the entire population and are considered point estimates. Statistical theory enables us to make statements about the likelihood that the true population value is contained within a range. This range is commonly referred to as a confidence interval. Statistical formulae can be used to determine the lower and upper limit of the confidence interval. How close the upper and lower limits are, that is, the difference between the two values, determines the precision.

Generally, two factors affect precision: sample size and data variability (the range of different values in a population). As the sample increases in size, the lower and upper limit values move closer to the value of p; that is, the estimate becomes more precise. For example, in a survey of car owners about repairs, the larger the sample of owners selected, the more precise the estimates become. The upper and lower limits define the precision interval for a value of p at a given level of certainty, which is 95 percent for all calculations in this document. Precision calculations were made before the survey for planning purposes and will be made again after the survey is completed; the post-survey calculations of precision will be more exact than the a priori estimates.

The estimates from a sample survey can be affected by non-sampling errors. Non-sampling errors can be generated by a variety of sources in the data collection and data processing phases of the survey. All possible steps will be taken to avoid the introduction of non-sampling error during the course of the survey.

A sampling error is usually measured in terms of the standard error of a particular statistic (e.g., proportion, mean), which is the square root of the variance. The standard error can be used to calculate confidence intervals about the sample estimate within which the true value for the population can be assumed to fall with a certain probability. The probability for the confidence interval can be set at different levels depending on the needs of the analysis.

The standard statistical formulas for calculating sampling error are based on the assumption of a simple random sample. The report produced from this project is intended for an audience that has limited interest in confidence intervals and, hence, the report tables do not include variance estimates. However, the methods report will provide estimates of the confidence intervals for the key variables from the survey.
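Because the simple-random-sample formulas ignore the unequal weights described in B.2.6, one rough way to gauge how much weighting widens a confidence interval is Kish's approximate design effect for unequal weighting. This Python sketch uses that approximation as a swapped-in illustration; the document does not state which variance estimation method will be used. The approximation captures only the effect of unequal weights, not clustering or stratification, and the weights shown are hypothetical.

```python
import math
from typing import Sequence


def kish_deff(weights: Sequence[float]) -> float:
    """Kish's approximate design effect from unequal weighting: n * sum(w^2) / (sum w)^2."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2


def effective_n(weights: Sequence[float]) -> float:
    """Size of an equally weighted sample with the same precision."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


def weighted_margin(p: float, weights: Sequence[float], z: float = 1.96) -> float:
    """SRS margin z*sqrt(p*q/(n-1)) inflated by the square root of the design effect."""
    n = len(weights)
    return z * math.sqrt(p * (1 - p) / (n - 1)) * math.sqrt(kish_deff(weights))


w = [1.0, 1.0, 2.0, 2.0]
print(round(kish_deff(w), 3), effective_n(w))  # 1.111 3.6
```

With equal weights the design effect is 1 and the margin reduces to the simple-random-sample value; the more the weights vary, the larger the design effect and the wider the interval.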

B.2.8 Statistical Analysis

A detailed plan will be developed to analyze the survey results. The plan will define: (1) the purpose of the survey, (2) the objectives of the research (the main areas of investigation), (3) the data or variables to be investigated, (4) the analytic approaches and methods to be used to achieve the research objectives, and (5) the preliminary tabulations to be prepared from the completed data file after the data are processed. The kinds of analytical activities are:

1. Descriptive

This straightforward approach involves working out statistical distributions, constructing tables and graphs, and calculating simple measures such as means, medians, totals and percentages.

Statistical descriptions are tabulations, produced after the data are processed, that summarize the features of the data file. They are often reported in series, with one variable or research question at a time cross-classified with others, producing a descriptive summary of the potential associations among the study variables.

2. Inferential

Statistical inference is the linking of the results derived from data collected from or about a sample to the population from which the sample was drawn. Inference is the principal approach for analyzing the survey data and inferences can be made because the data are collected from a probability sample. Estimates will be made of the population characteristics from the sample as well as sampling errors.

3. Analytic Interpretation

Attempts will be made to explain the relationships and differences between variables using various statistical analyses and inference testing techniques. There are a number of statistical tools available for conducting these analyses including tests of significance, regression analyses, discriminant analyses, and others.

Constructing the analysis plan is a multi-step process. The project office should develop this with the assistance of Agency statisticians, computer programmers, specialists in the subject area of the research, and systems analysts, as appropriate.

B.3. Describe methods to maximize response rates and to deal with issues of non-response.

In order to attain the highest possible response rate, an interviewing strategy with the following major components will be followed. The initial contact script has been carefully developed and refined to be persuasive and appealing to the respondents. Only thoroughly trained and experienced interviewers, highly motivated and carefully monitored, will conduct the interviewing. During a two-day training program, interviewers and supervisors will be trained on every aspect of the survey. Emphasis will be placed on how to overcome initial reluctance, disinterest or hostility during the contact phase of the interview. The interviewing and supervisory team will include Spanish-speaking personnel to ensure that Spanish language is not a barrier to survey participation. The Contractor will make ten attempts to reach no-answer telephone numbers, and an interviewer will leave an approved message on answering machines according to study protocol.

The CATI program will record all refusals and interview terminations in a permanent file, including the nature, reason, time, circumstances and the interviewer. This information will be reviewed on an ongoing basis to identify any problems with the contact script, interviewing procedures, questionnaire items, etc., and the refusal rate by interviewer will be closely monitored. Using these analyses, a “Conversion Script” will be developed. This script will provide interviewers with responses to the more common reasons given by persons for not wanting to participate in the survey. The responses are designed to allay concerns or problems expressed by the telephone contacts.

The contractor will implement a soft refusal conversion plan in which each person selected for the sample who refuses to participate will be re-contacted by the contractor approximately one to two weeks after the refusal. The contractor will use the conversion script in an attempt to convince the individual to reconsider and participate in the survey. Only the most skilled interviewers will conduct the refusal conversions. Exceptions to refusal conversion will be allowed on an individual basis if for some reason the refusal conversion effort is deemed inappropriate.

Field outcome data in the sample reporting file, derived from both the sample control and CATI files, will be maintained and reviewed regularly so that patterns and problems in both response rates and production rates can be detected and analyzed. Meetings will be held with the interviewing and field supervisory staff and the study management staff to discuss problems with contact and interviewing procedures and to share successful methods of persuasion and conversion.

Similar surveys previously conducted by the contractor have achieved response rates over 50%. The 2006-2007 U.S. Department of Housing and Urban Development (HUD) Fair Market Rent Random Digit Dialing survey obtained an AAPOR Response Rate 3 of 52.8% for Los Angeles and 58.9% for Hawaii County, with cooperation rates over 97%. In 2007-2008, HUD Fair Market Rent surveys obtained response rates of 78% in Duchesne and Uintah Counties, Utah; 50.2% in Midland County, Texas; 55.5% in Mesa County, Colorado; 62.7% in Ector County, Texas; 66.8% in Sweetwater and Uinta (Wyoming) and Moffat (Colorado) Counties; and 74.6% in Natrona County, Wyoming.

Non-Response Analysis

Both types of non-response, unit non-response and item non-response, will be evaluated. A decision will be made as to what constitutes a usable return, and a recognized formula will be used to calculate the overall response rate. The characteristics of the completed and non-completed cases from the random digit dial sample will be compared to determine whether there is any evidence of non-response bias in the completed sample.
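Since the contractor's previous results are reported as AAPOR Response Rate 3, one recognized formula is that rate. Below is a Python sketch of AAPOR RR3 and Cooperation Rate 1 as given in the AAPOR Standard Definitions. The disposition counts and the eligibility estimate e are hypothetical, and the document does not state which AAPOR rate will be reported for this survey.

```python
def aapor_rr3(I: int, P: int, R: int, NC: int, O: int,
              UH: int, UO: int, e: float) -> float:
    """AAPOR Response Rate 3.

    I complete, P partial, R refusal/break-off, NC non-contact, O other,
    UH/UO unknown eligibility, e = estimated share of unknowns that are eligible.
    """
    return I / ((I + P) + (R + NC + O) + e * (UH + UO))


def aapor_coop1(I: int, P: int, R: int, O: int) -> float:
    """AAPOR Cooperation Rate 1: completes over all eligible units contacted."""
    return I / ((I + P) + R + O)


# Hypothetical disposition counts for one site and wave.
rr3 = aapor_rr3(I=400, P=20, R=200, NC=100, O=30, UH=300, UO=50, e=0.4)
print(round(rr3, 3))  # 0.449
```

The estimate of e matters in RDD studies because many unresolved numbers are never confirmed as households; a lower e raises RR3.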

Item non-response occurs when a response to a single question is missing and its impact on survey statistics can be as severe as unit non-response. Only certain statistics may be affected by item non-response and these will probably be the most sensitive questions on the questionnaire. It may be possible to use imputation methods to alleviate the impact of significant item non-response.

As the sample size is small (two administrations of 800 interviews each) and the survey will employ a simple pre- and post-administration analysis, it is anticipated that any bias in the sample will be present to an equal degree in both administrations. Nevertheless, NHTSA is concerned with any biases in the sample population and will look for any systematic trends that may indicate a refusal bias or any differences in the sample population. A comparison of the characteristics of the completed and non-completed cases from the random digit dialing sample will be conducted to determine whether there is any evidence of significant non-response bias in the completed sample. Sample characteristics will be collected during the course of the telephone survey. Any biases found in the sample population will be noted in the final report.

B.4. Describe any tests of procedures or methods to be undertaken.

The contractor will pre-test the survey by calling approximately 20 residents in total from Tucson and the control site(s) prior to fielding. Any problems noted will be addressed, and the survey instrument will be adjusted as appropriate.

B.5. Provide the name and telephone number of individuals consulted on statistical aspects of the design.

The following individuals have reviewed technical and statistical aspects of procedures that will be used to conduct the 2008 Survey:

Alan Block, MA

Office of Behavioral Safety Research

DOT/National Highway Traffic Safety Administration

1200 New Jersey Ave. SE

Washington, DC 20590

(202) 366-6401

John Siegler, PhD

Office of Behavioral Safety Research

DOT/National Highway Traffic Safety Administration

1200 New Jersey Ave. SE

Washington, DC 20590

(202) 366-3976

Mel Kollander, Consultant

Econometrics and Statistical Analysis

4521 Saucon Valley Ct.

Alexandria, VA 22312

1-703-642-8079

1 Salmon, C. and Nichols, J. The Next-Birthday Method of Respondent Selection. Public Opinion Quarterly, 1983, Vol. 47, pp. 270-276.

2 Lavrakas, P. Telephone Survey Methods: Sampling, Selection and Supervision. Beverly Hills: Sage Publications, 1987.

3 Tarnai, J., Rosa, E. and Scott, L. An Empirical Comparison of the Kish and the Most Recent Birthday Method for Selecting a Random Household Respondent in Telephone Surveys. Presented at the Annual Meeting of the American Association for Public Opinion Research. Hershey, PA, 1987.

| File Type | application/msword |

| File Title | TABLE OF CONTENTS |

| Author | ABlock |

| Last Modified By | Walter.Culbreath |

| File Modified | 2009-04-15 |

| File Created | 2009-04-15 |
