non substantive change Mathematica summary

Mathematica memo - survey, TAA evaluation, 1205-0460.doc

Plan for Evaluation of the Trade Adjustment Assistance Program


OMB: 1205-0460

MEMORANDUM

FROM: Peter Schochet; Jillian Berk; Pat Nemeth

DATE: 11/20/2008

SUBJECT: Short-Term Results of the New Survey Procedures for the TAA Evaluation

The National Evaluation of the Trade Adjustment Assistance (TAA) Program is a $10.4 million impact study being conducted by Social Policy Research Associates, with Mathematica Policy Research (MPR) as the subcontractor responsible for survey data collection and the statistical aspects of the design. The evaluation, which began in 2004, is now scheduled to end in March 2010 because of delays associated with the initial Office of Management and Budget (OMB) clearance process¹ and subsequent problems recruiting a number of states to participate in the evaluation.

The evaluation includes site visits, administrative records data collection, and baseline and follow-up surveys. The study sample was selected using data from Unemployment Insurance (UI) claims records and TAA-eligible worker lists that were provided by 26 states.² The treatment group for the study includes UI claimants who were on the TAA-eligible worker lists, and the comparison group includes UI claimants who were statistically matched to the treatment group using propensity score matching methods. Matching was conducted using available baseline data from the UI claims records.

Data collection for the baseline survey began in early March 2008. Initially, the baseline survey for the TAA evaluation offered incentive payments of approximately $25 to encourage survey completion. In an August 2008 memo to OMB, DOL reported a lower-than-expected response rate and differences in response rates between the treatment and comparison groups. For sample members in the seven states where the survey had been conducted the longest, the overall response rate was about 46 percent, with values of 60 percent for treatment group members who received Trade Readjustment Allowance (TRA) benefits, 48 percent for other treatment group members, and 40 percent for comparison group members.

In September 2008, OMB approved a revised strategy to increase response rates to the baseline survey. This plan included changes in operational procedures and an incentive experiment where sample members could receive increased payments for survey completion. MPR implemented these plans on September 20, 2008.

This memo provides results on the extent to which the revised strategy increased baseline survey response rates during the eight-week period between September 20, 2008 and November 20, 2008. It also discusses options for future baseline interviewing, which is scheduled to continue until February 2009.

The memo is in four sections. First, for context, we briefly discuss the results of the original incentive experiment. Second, we summarize key features of the revised survey strategy. Third, we discuss the analysis results. Finally, we present options and recommendations for future baseline interviewing.

Before presenting the findings, it is useful to define four key sample groups:

Group A – TAA Participants, who are treatment group members who received TRA payments.

Group B – TAA Participant Comparison Group, who are matches to Group A.

Group C – TAA Non-Participants, who are treatment group members who did not receive (or had not yet received) TRA payments.

Group D – TAA Non-Participant Comparison Group, who are matches to Group C.

Most tables reported at the end of the memo present response rates separately for each group, as well as for all four groups combined.

1. THE ORIGINAL SURVEY INCENTIVE EXPERIMENT

Between March 6, 2008 and September 19, 2008, an experiment was conducted to test the impact of variations in the timing of the incentive payment on baseline interview response rates. About 60 percent of the individuals were randomly assigned to receive $25 for interview completion, and two groups of individuals were randomly assigned to receive small pre-payments with their initial contact letter. Twenty percent of the sample received a $2 pre-payment and was eligible for a $25 interview completion post-payment, and the other 20 percent received a $5 pre-payment and was eligible for a $20 interview completion post-payment.
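The assignment scheme above can be illustrated with a short Python sketch of fixed-proportion randomization. This is a minimal sketch assuming a shuffle-and-slice procedure with a fixed seed; the helper `assign_incentive_arms`, the arm labels, and the seed are hypothetical, and the memo does not describe how MPR actually carried out the random assignment.

```python
import random

# Hypothetical labels and design shares for the original (March-September 2008) arms.
ARMS = [
    ("$25 post-payment only", 0.60),
    ("$2 pre-payment + $25 post-payment", 0.20),
    ("$5 pre-payment + $20 post-payment", 0.20),
]


def assign_incentive_arms(case_ids, arms=ARMS, seed=2008):
    """Randomly assign case IDs to arms in fixed proportions.

    Shuffles a copy of the IDs with a seeded generator, then slices the
    shuffled list into consecutive blocks sized by each arm's share.
    Any rounding remainder goes to the last arm.
    """
    ids = list(case_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    assignment = {}
    start = 0
    for i, (arm, share) in enumerate(arms):
        # The final arm absorbs rounding so every case is assigned exactly once.
        end = len(ids) if i == len(arms) - 1 else start + round(share * len(ids))
        for case_id in ids[start:end]:
            assignment[case_id] = arm
        start = end
    return assignment


if __name__ == "__main__":
    from collections import Counter

    print(Counter(assign_incentive_arms(range(1000)).values()))
```

With 1,000 hypothetical case IDs, this yields exactly 600, 200, and 200 cases in the three arms. Shuffle-and-slice hits the target proportions up to rounding, whereas drawing an arm independently for each case would only match them on average.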

The prepaid incentives had a small effect on interview completion rates (Table 1). The overall response rate was about 43 to 44 percent for the two prepayment groups, compared to 39 percent for the post-payment-only group. The overall difference in response rates by incentive type is statistically significant, but response rates were low regardless of incentive structure. A broadly similar pattern holds within Groups A to D, although the differences across incentive groups are statistically significant only for Groups A and D.
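As a rough check on the figures above, the arm-level rates in Table 1 can be combined using the design shares (60, 20, and 20 percent). This sketch assumes the realized arm sizes match the design shares exactly, which the memo does not report, so the results are approximations rather than the memo's own calculations.

```python
# Response rates (percent) for all four sample groups combined, from Table 1.
RATES = {
    "post_only": 39.3,  # $25 post-payment only
    "pre_2": 44.4,      # $2 pre-payment, $25 post-payment
    "pre_5": 42.9,      # $5 pre-payment, $20 post-payment
}
# Design shares of the sample assigned to each arm (assumed to equal realized shares).
SHARES = {"post_only": 0.60, "pre_2": 0.20, "pre_5": 0.20}


def weighted_rate(rates, weights):
    """Weighted mean of arm-level response rates (weights need not sum to 1)."""
    total = sum(weights.values())
    return sum(rates[k] * weights[k] for k in weights) / total


# Both prepayment arms are 20 percent of the sample, so they get equal weight.
prepay = weighted_rate(RATES, {"pre_2": SHARES["pre_2"], "pre_5": SHARES["pre_5"]})
overall = weighted_rate(RATES, SHARES)
gap = prepay - RATES["post_only"]

print(f"prepayment arms combined: {prepay:.2f}%")
print(f"all arms (design weights): {overall:.2f}%")
print(f"prepayment minus post-only: {gap:.2f} points")
```

Under that assumption the two prepayment arms average about 43.7 percent, roughly 4 points above the post-payment-only arm, and the design-weighted overall rate (about 41.0 percent) matches the "All Groups" figure in Table 1.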

2. KEY FEATURES OF THE REVISED SURVEY DESIGN

The revised strategy to increase the baseline interview completion rate had two main components: (1) changes in operational procedures and (2) changes in incentive payments. These changes are discussed in detail in the August 2008 memo to OMB, but are summarized here.

For discussion purposes, it is convenient to distinguish between two types of cases when the new incentive experiment started on September 20, 2008: (1) existing cases, who had already been contacted under the old regime, and (2) new cases, who were released for interviewing after September 20, 2008. All existing cases who had not yet completed an interview were re-contacted under the new regime, except those recorded as a “final refusal” or as having a “final barrier.”

a. Existing Cases

The changes in procedures for existing cases were designed to increase contact and respondents’ willingness to participate. MPR began sending all correspondence to existing cases on U.S. Department of Labor (DOL) letterhead, over a DOL official’s signature and with a DOL contact number, rather than on MPR letterhead with an MPR manager’s signature. The expectation was that this more “official” correspondence would receive greater attention from respondents and lend the request more legitimacy than a letter from MPR. MPR also used priority mail to send refusal conversion and locating letters and, about two weeks later, sent a follow-up postcard with the incentive amount prominently displayed to alert potential respondents and their families.

The new procedures were accompanied by a change in the incentive payment structure. Since the most effective incentive amount is unknown, DOL received permission to conduct an experiment to test different amounts of the incentive with different sample groups in the evaluation. The initial period of surveying indicated that the Group A sample was most willing to complete the survey, so the new incentive experiment included more modest changes for this group. For Group A, 50 percent continued to be eligible for a $25 interview completion payment, and the other 50 percent became eligible for a $50 payment. MPR tested more generous incentives for Groups B, C, and D—20 percent continued to be offered $25, 40 percent were offered $50, and the final 40 percent were offered $75.
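The design shares described above can be compared with the realized allocation of existing cases in Table 2. The sketch below is a simple illustration, not a check reported in the memo: it computes each group's realized shares and the largest deviation from the design.

```python
# Design shares for the new incentive experiment, as described in the memo.
DESIGN = {
    "A": {25: 0.50, 50: 0.50},
    "B": {25: 0.20, 50: 0.40, 75: 0.40},
    "C": {25: 0.20, 50: 0.40, 75: 0.40},
    "D": {25: 0.20, 50: 0.40, 75: 0.40},
}

# Existing cases eligible for the new regime, by incentive amount (Table 2).
TABLE2_COUNTS = {
    "A": {25: 337, 50: 358},
    "B": {25: 395, 50: 663, 75: 620},
    "C": {25: 94, 50: 166, 75: 172},
    "D": {25: 199, 50: 368, 75: 363},
}


def realized_shares(counts):
    """Convert per-amount counts for one group into shares of that group."""
    total = sum(counts.values())
    return {amount: n / total for amount, n in counts.items()}


def max_deviation(design, counts):
    """Largest absolute gap between a design share and its realized share."""
    worst = 0.0
    for group, plan in design.items():
        observed = realized_shares(counts[group])
        for amount, share in plan.items():
            worst = max(worst, abs(observed[amount] - share))
    return worst


for group in DESIGN:
    shares = realized_shares(TABLE2_COUNTS[group])
    print(group, {amt: round(s, 3) for amt, s in shares.items()})
print("largest deviation from design:", round(max_deviation(DESIGN, TABLE2_COUNTS), 3))
```

The realized shares stay within about 3.5 percentage points of the design; the largest gap is Group B's $25 arm (23.5 percent versus 20 percent). The memo does not explain the deviations.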

The number of existing cases eligible for the new incentive experiment varies by sample group (Table 2). Because Group A had a higher completion rate in the initial survey period than other sample members, Group A had the smallest percentage of existing cases who were eligible for the new procedures.
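The claim that Group A had the smallest share of eligible existing cases can be checked by dividing the Table 2 totals by the Table 1 totals, both measured as of September 19, 2008. This is an illustrative calculation, not one reported in the memo.

```python
# Total existing cases (Table 1) and cases eligible for the new regime (Table 2).
TOTAL_CASES = {"A": 1610, "B": 2865, "C": 820, "D": 1537}
ELIGIBLE_CASES = {"A": 695, "B": 1678, "C": 432, "D": 930}


def eligible_share(eligible, total):
    """Percentage of each group's existing cases still eligible for the new regime."""
    return {g: 100.0 * eligible[g] / total[g] for g in total}


shares = eligible_share(ELIGIBLE_CASES, TOTAL_CASES)
for group, pct in sorted(shares.items(), key=lambda kv: kv[1]):
    print(f"Group {group}: {pct:.1f}% eligible")
```

This gives roughly 43 percent for Group A, compared with about 53 percent for Group C, 59 percent for Group B, and 60 percent for Group D.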

b. New Cases

The revised survey design was also structured to facilitate the completion of interviews for new cases. New cases in Groups A to D were eligible for the higher incentive payments under the same structure as described above. For these cases, MPR used a new advance letter written on DOL letterhead with a DOL official’s signature, but sent these letters by regular mail. Postcards were not sent to these individuals. The analysis sample of new cases includes 2,112 workers from six states (490 in Group A, 887 in Group B, 249 in Group C, and 486 in Group D).
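A quick tally of the new-case counts by group shows how the all-group total relates to the Groups B-D column used in Tables 7 and 8. This is a simple illustrative check.

```python
# New cases by sample group, as listed in the text (Tables 7 and 8).
NEW_CASES = {"A": 490, "B": 887, "C": 249, "D": 486}

all_groups = sum(NEW_CASES.values())
groups_b_to_d = all_groups - NEW_CASES["A"]  # the "Groups B-D Combined" column

print("all four groups:", all_groups)
print("Groups B-D combined:", groups_b_to_d)
```

The four groups sum to 2,112 (the total in Table 9), while Groups B to D alone sum to 1,622 (the combined column in Tables 7 and 8).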

c. Other Procedural Changes

In addition to the letterhead, envelope, and mail mode changes, MPR undertook other procedural changes to increase response rates. Locating procedures were reviewed to make optimal use of Social Security numbers to locate sample members, computer-assisted telephone interviewing (CATI) productivity records were reviewed, and staffing was increased during the most productive hours for outgoing and incoming calls. In addition, more interviewers and locators were selected and trained, and the scripts used by operators answering the incoming toll-free number, as well as the voicemail message on that number, were revised. Finally, all interviewers, supervisors, and monitors attended a debriefing that covered best practices for making contact with sample members and successful strategies for obtaining completed interviews.

3. RESULTS OF THE NEW PROCEDURES ON RESPONSE RATES

This section provides results on the effects of the revised survey design on baseline interview response rates during the eight-week period between September 20, 2008 (when the new procedures were implemented) and November 20, 2008. The results are presented first for the existing cases and then for the new cases.

a. Existing Cases

Response rates for existing cases increased under the new regime. During the eight-week follow-up period, the overall response rate for existing cases increased from 41 percent to 55 percent (Table 3). Response rates increased for all sample groups, but the increases were larger for Groups B, C, and D (about 15 to 16 percentage points) than for Group A (about 9 percentage points). While the response rate gap between treatment and comparison individuals is still statistically significant, the size of the gap has decreased. For example, the initial gap between Groups A and B was almost 17 percentage points, but by November 20, 2008, the gap had fallen to under 10 percentage points.
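The percentage-point changes and treatment-comparison gaps discussed above follow directly from Table 3. The sketch below reproduces them; the gaps are simple differences in rates and do not reproduce the t-tests behind the significance markers in the table.

```python
# Response rates (percent) for existing cases, from Table 3.
BEFORE = {"A": 53.7, "B": 37.0, "C": 42.7, "D": 34.4, "All": 41.0}  # as of Sept 19, 2008
AFTER = {"A": 62.4, "B": 52.8, "C": 57.2, "D": 50.0, "All": 54.9}   # as of Nov 20, 2008


def point_change(before, after):
    """Percentage-point change in each group's response rate."""
    return {g: round(after[g] - before[g], 1) for g in before}


def gap(rates, treatment, comparison):
    """Treatment-minus-comparison gap in percentage points."""
    return round(rates[treatment] - rates[comparison], 1)


changes = point_change(BEFORE, AFTER)
print("change by group:", changes)
for t, c in [("A", "B"), ("C", "D")]:
    print(f"{t} vs {c} gap: {gap(BEFORE, t, c)} -> {gap(AFTER, t, c)} points")
```

The same arithmetic shows that the gap between Groups C and D narrowed only slightly, from 8.3 to 7.2 percentage points.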

Importantly, there were significant increases in response rates in states that had already been in the field for some time (Table 4 and Appendix Table A.1). Response rate growth had been very slow in many of these states for several months, but response rates increased sharply after September 20, 2008. As an illustration, Figures 1 to 3 display the growth trajectory in response rates for Tennessee, Washington, and Texas. Thus, the sudden increase in state response rates after the new procedures were implemented is likely to be attributable to the new regime. While most of the new completions occurred within the first five weeks of the new regime, the completion rate is still on an upward trajectory (Figure 4).
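To focus on states that had already been in the field for some time, the state-level figures in Table 4 can be filtered by release date before computing each state's change. In this sketch the 12-week threshold is an arbitrary, illustrative choice, and the before-and-after changes are descriptive rather than causal estimates.

```python
from datetime import date

# State, interview release date, rate as of Sept 19, rate as of Nov 20 (Table 4).
TABLE4 = [
    ("Tennessee", date(2008, 3, 6), 48.3, 56.8),
    ("Washington", date(2008, 3, 6), 43.6, 51.5),
    ("Minnesota", date(2008, 3, 17), 60.4, 66.3),
    ("New Jersey", date(2008, 4, 4), 36.5, 42.8),
    ("Indiana", date(2008, 4, 24), 46.7, 57.4),
    ("Wisconsin", date(2008, 4, 24), 55.0, 65.7),
    ("Arkansas", date(2008, 5, 29), 43.5, 56.5),
    ("New Hampshire", date(2008, 6, 9), 34.7, 45.1),
    ("Texas", date(2008, 6, 9), 26.3, 41.5),
    ("Kentucky", date(2008, 6, 9), 41.6, 54.7),
    ("Rhode Island", date(2008, 6, 16), 40.2, 52.7),
    ("Illinois", date(2008, 6, 27), 36.1, 49.6),
    ("Maryland", date(2008, 6, 27), 38.8, 54.1),
    ("North Carolina", date(2008, 6, 27), 41.4, 57.1),
    ("Ohio", date(2008, 8, 5), 40.9, 58.0),
    ("Michigan", date(2008, 9, 5), 29.9, 60.7),
]
NEW_REGIME_START = date(2008, 9, 20)


def changes_for_mature_states(rows, min_weeks):
    """Point changes for states in the field at least `min_weeks` before the new regime.

    Returns (state, change, weeks_in_field) tuples, largest change first.
    """
    out = []
    for state, released, before, after in rows:
        weeks_in_field = (NEW_REGIME_START - released).days / 7
        if weeks_in_field >= min_weeks:
            out.append((state, round(after - before, 1), round(weeks_in_field, 1)))
    return sorted(out, key=lambda row: row[1], reverse=True)


for state, change, weeks in changes_for_mature_states(TABLE4, min_weeks=12):
    print(f"{state:15s} +{change:4.1f} points after {weeks:4.1f} weeks in the field")
```

With a 12-week threshold, Ohio and Michigan drop out, and all 14 remaining states show gains, led by North Carolina (15.7 points), Maryland (15.3), and Texas (15.2).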

The response rate increases could be due to both procedural and incentive payment changes. To isolate the effects of the procedural changes, we examined the completion rate of existing cases who, under the new regime, were randomly assigned to the $25 incentive group (Table 5). For these cases, the only regime changes were procedural, not financial.³ The completion rate for these cases was 12 percent for Group A, 18 percent for Group B, 23 percent for Group C, and 16 percent for Group D (Table 5). These results suggest that the procedural changes had some effect on improving response rates.

The procedural changes may have been more effective for Groups B to D than Group A for several reasons. First, the official letterhead sent by priority mail may have been more important for those in Groups B to D who may have had little or no awareness of the TAA program. Second, the original response rates were higher for Group A than for the other groups, suggesting that existing cases in Group A may have been more likely than their counterparts to be hard-core nonresponders.

The larger incentive payments also played a role in increasing response rates for the existing cases (Table 5). For Group A, the response rate was significantly higher for the $50 incentive group (22 percent) than for the $25 incentive group (12 percent). Similarly, for Groups B and D, the response rate was significantly higher for the $50 and $75 incentive groups (about 24 to 26 percent) than for the $25 incentive group (about 16 to 18 percent). Importantly, however, we find no significant differences between the $50 and $75 incentives for these cases. The response rate for Group C did not vary by the incentive amount, a finding for which we have no explanation. Finally, we find similar patterns of results across gender and age categories (Table 6).
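The memo reports F-tests across incentive groups. As a simpler illustration, the Group A contrast between the $50 and $25 arms can be checked with a pooled two-proportion z-test, which is a swapped-in technique rather than the memo's method. The group sizes are an assumption: the Table 2 counts (358 and 337) stand in for the unreported Table 5 denominators, which are smaller because Michigan and Ohio are excluded.

```python
import math


def two_proportion_z(p1, n1, p2, n2):
    """Pooled two-sample z-test for a difference in proportions.

    Proportions are fractions, not percents. Returns (z, two_sided_p).
    """
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Standard normal CDF via the error function.
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - phi)


# Group A completion rates from Table 5 ($50 vs $25). ASSUMPTION: Table 2 counts
# are used as denominators; the true Table 5 denominators are smaller.
z, p = two_proportion_z(0.223, 358, 0.120, 337)
print(f"z = {z:.2f}, two-sided p = {p:.4f}")
```

Under these assumptions z is about 3.6. Scaling both denominators down in proportion to the Table 5 total for Group A (571 rather than 695 cases) would lower z to about 3.25, so the conclusion that the difference is significant does not hinge on the exact sizes.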

b. New Cases

The analysis of new cases (those released for interviewing after September 20, 2008) is based on data from six states (Virginia, Colorado, South Carolina, Missouri, Georgia, and New York). The follow-up period for this analysis averages only about six weeks, so it is still too early to predict final response rates for these cases. Cases from Florida are excluded from the analysis because they were released for interviewing only within the last week.

We assessed the overall effects of the regime changes on new cases by comparing the response rates of the new cases to those of earlier cases during the first six weeks after they were released for interviewing. This analysis provides suggestive evidence, but may not be conclusive because there may be systematic differences between the two sets of states that are related to response rates (and because of small numbers of new cases).
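The six-week comparison requires measuring each cohort over the same horizon, counting a completion only if it occurred within six weeks of the case's release. The memo does not describe the exact computation; this sketch assumes a 42-day window measured from release date to completion date, and the helper `rate_within_window` and the records are hypothetical.

```python
from datetime import date, timedelta


def rate_within_window(cases, window_days=42):
    """Percent of cases completing within `window_days` of their release date.

    `cases` is a list of (release_date, completion_date_or_None). A completion
    after the window counts as a nonresponse, so cohorts with different amounts
    of field time are compared over the same horizon.
    """
    if not cases:
        raise ValueError("no cases")
    cutoff = timedelta(days=window_days)
    completed = sum(
        1
        for released, completed_on in cases
        if completed_on is not None and completed_on - released <= cutoff
    )
    return 100.0 * completed / len(cases)


# Hypothetical records for an old-regime and a new-regime cohort.
old_cohort = [
    (date(2008, 3, 6), date(2008, 3, 20)),
    (date(2008, 3, 6), date(2008, 5, 5)),   # completed after 60 days
    (date(2008, 3, 6), None),
    (date(2008, 3, 6), date(2008, 4, 10)),
]
new_cohort = [
    (date(2008, 10, 1), date(2008, 10, 9)),
    (date(2008, 10, 1), date(2008, 11, 5)),
    (date(2008, 10, 1), None),
]
print(rate_within_window(old_cohort), rate_within_window(new_cohort))
```

In the example, the old-cohort case completed 60 days after release counts as a nonresponse at six weeks, so the old cohort's six-week rate is 50 percent even though three of its four cases eventually completed.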

Based on this analysis, it appears that the new regime increased response rates for new cases (Table 7). The overall response rate is 60 percent for the new Group A cases, compared to 44 percent for older cases six weeks after they were released for interviews. Furthermore, response rate differences between the new and older cases are even larger for Groups B to D. Under the old regime, their interview completion rate after six weeks was 29 percent, compared to 51 percent under the new regime. Thus, response rate differences between the treatment and comparison groups appear to be considerably smaller under the new regime.
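Because each treatment group was matched to a specific comparison group (A with B, and C with D), the narrowing of the treatment-comparison difference in Table 7 can be expressed pair by pair. This is an illustrative calculation using the Table 7 rates; it involves no significance testing.

```python
# Six-week response rates (percent) by sample group, from Table 7.
NEW_REGIME = {"A": 59.6, "B": 51.2, "C": 53.8, "D": 48.8}
OLD_REGIME = {"A": 43.6, "B": 29.7, "C": 33.9, "D": 26.5}

# Each treatment group paired with its matched comparison group.
PAIRS = [("A", "B"), ("C", "D")]


def pair_gaps(rates):
    """Treatment-minus-comparison gap for each matched pair, in percentage points."""
    return {f"{t}-{c}": round(rates[t] - rates[c], 1) for t, c in PAIRS}


print("old regime:", pair_gaps(OLD_REGIME))
print("new regime:", pair_gaps(NEW_REGIME))
```

The Group A versus Group B gap falls from 13.9 to 8.4 points, and the Group C versus Group D gap falls from 7.4 to 5.0 points.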

There is evidence that response rates for new cases are positively correlated with the size of their incentive payment for the comparison groups (Groups B and D), but not for the treatment groups (Table 8). For example, the response rate for Group A did not vary by incentive amount, but the response rate in Group D was about 15 percentage points higher for those in the $50 and $75 incentive groups than for those in the $25 incentive group (51 percent versus 36 percent).

For new cases in Groups B to D, response rates for the $75 incentive groups were higher than for the $50 incentive groups, but, in general, the differences are not large (Table 8). The difference is about 8 percentage points for Group B (and is statistically significant), but only about 4 and 2 percentage points for Groups C and D, respectively (and is not statistically significant). For Groups B-D combined, the difference is statistically significant at the 10 percent level, a result driven primarily by Group B.
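The incremental gains from raising the incentive can be read off Table 8 as differences between adjacent columns. This sketch computes the point differences only; statistical significance must still be taken from the tests reported in the memo.

```python
# New-case response rates (percent) by incentive amount, from Table 8.
TABLE8 = {
    "B": {25: 40.6, 50: 49.9, 75: 57.8},
    "C": {25: 51.1, 50: 52.6, 75: 56.2},
    "D": {25: 36.3, 50: 50.8, 75: 52.5},
    "B-D": {25: 40.9, 50: 50.5, 75: 55.9},
}


def step_up(rates, low, high):
    """Percentage-point gain from moving each group from the `low` to the `high` incentive."""
    return {group: round(r[high] - r[low], 1) for group, r in rates.items()}


print("$50 -> $75:", step_up(TABLE8, 50, 75))
print("$25 -> $50:", step_up(TABLE8, 25, 50))
```

Moving from $50 to $75 adds 7.9 points for Group B, 3.6 for Group C, 1.7 for Group D, and 5.4 for Groups B to D combined.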

Similar findings emerge by gender and age (Table 9). Small sample sizes may explain the instability of some results.

4. OPTIONS FOR FUTURE BASELINE INTERVIEWING

The TAA survey presents some unique challenges. Survey staff are typically starting with a single name, address, and telephone number from the UI claims records that are more than two years old. This contact information is out-of-date for many sample members. An additional challenge is that for individuals in Groups B to D, the survey request did not prove to be highly compelling, partly because they did not have any attachment to the TAA program. After the initial six months of baseline interviewing, the completion rate was lower than expected and there was a difference in response rates across the treatment and comparison samples. These results motivated the earlier request to OMB to modify the incentive payments.

There is evidence that the new regime increased response rates for both existing and new cases during the eight-week follow-up period. Under the new regime, the overall response rate for existing cases increased from 41 to 55 percent, and the response rate increased significantly in states where response rate growth had been very slow for some time. In addition, response rates were higher for new cases than comparable older cases. Importantly, under the new regime, the difference between response rates across the treatment and comparison groups fell for both the existing and new cases.

The response rate increases appear to be due both to procedural changes and higher incentive payments. The findings from the incentive payment experiment, however, differ somewhat for the existing and new cases. For Group A, the $50 incentive group had significantly higher response rates than the $25 incentive group for existing cases, but not for new cases. For Groups B-D, the $25 group had the lowest response rates for both new and existing cases. For these cases, however, there were no differences between the $50 and $75 incentive for existing cases, although there were some differences for new cases.

With these results in mind, options for future baseline interviewing are as follows:

The procedural changes discussed above should be continued. This would include, for example, sending all correspondence on DOL letterhead.

A postcard should be sent to hard-to-locate new cases in all sample groups. The postcard will be sent to nonrespondents about one month after they are released for interviewing.

Incentive Payment Option 1: Offer a $50 incentive to remaining nonrespondents and new cases in all sample groups, where individuals currently eligible for the $25 incentive will be notified by regular first-class mail that the incentive amount has increased. The justification for this approach is that (1) response rates were higher for the $50/$75 than $25 incentive groups for existing cases in all sample groups, and for new cases in Groups B-D; and (2) there were uneven differences in response rates between the $50 and $75 groups for those in Groups B-D.

Incentive Payment Option 2: Offer a $75 incentive to remaining nonrespondents and new cases in Groups B-D and a $50 incentive for those in Group A, where individuals who are currently eligible for the $25 or $50 incentive will be notified by regular first-class mail that the incentive amount has increased. The justification for this approach rather than Option 1 is that for Groups B-D, response rates for new cases in the $75 groups were about 2 to 8 percentage points higher than for those in the $50 incentive groups, although the differences are statistically significant only for Group B.

Incentive Payment Option 3: Same as Option 1 or 2 for Groups B-D, and offer a $25 incentive payment to new cases in Group A. The justification for this approach is that for new cases in Group A, there were no differences in response rates between the $25 and $50 incentive (although there were large differences for existing cases).
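The three incentive options can be summarized as a lookup from option, sample group, and case status to a completion payment. This is a hypothetical helper for illustration only. Where the memo leaves a choice open, the sketch makes assumptions that are flagged in the docstring: Option 3 is paired with Option 1's $50 for Groups B-D, existing Group A nonrespondents keep $50 under Option 3, and no case is offered less than it has already been promised.

```python
VALID_GROUPS = {"A", "B", "C", "D"}


def proposed_incentive(option, group, is_new_case, current_offer=0):
    """Completion payment in dollars under the memo's three incentive options.

    ASSUMPTIONS (hypothetical; the memo leaves these points open):
    - Option 3 is paired with Option 1 ($50) for Groups B-D.
    - Existing Group A nonrespondents keep $50 under Option 3.
    - No case is offered less than its current offer (`current_offer`).
    """
    if group not in VALID_GROUPS:
        raise ValueError(f"unknown sample group: {group}")
    if option == 1:
        amount = 50
    elif option == 2:
        amount = 50 if group == "A" else 75
    elif option == 3:
        if group == "A":
            amount = 25 if is_new_case else 50
        else:
            amount = 50
    else:
        raise ValueError(f"unknown option: {option}")
    return max(amount, current_offer)


for opt in (1, 2, 3):
    print(opt, {g: proposed_incentive(opt, g, is_new_case=True) for g in "ABCD"})
```

Pairing Option 3 with Option 2 instead would simply return $75 for new cases in Groups B-D.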

After consulting with OMB on the most appropriate approach and reaching a final decision, the contractor will (1) notify existing nonrespondents of any pertinent incentive increases, (2) release for interviewing the cases from the final three states to be included in the evaluation, and (3) release additional new cases across all states to achieve the target of 8,000 completed baseline interviews.

TABLE 1

BASELINE INTERVIEW RESPONSE RATES UNDER THE FIRST INCENTIVE EXPERIMENT,

BY SAMPLE GROUP AND INCENTIVE GROUP

| Incentive Group | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| $25 Post-Payment | 50.6* | 35.6 | 43.0 | 32.2* | 39.3* |
| $2 Pre-Payment, $25 Post-Payment | 61.4 | 38.7 | 44.7 | 36.5 | 44.4 |
| $5 Pre-Payment, $20 Post-Payment | 55.1 | 39.6 | 40.1 | 38.5 | 42.9 |
| All Groups | 53.7 | 37.0 | 42.7 | 34.4 | 41.0 |
| Total Cases | 1,610 | 2,865 | 820 | 1,537 | 6,832 |

Notes: Sample members were randomly assigned to one of three incentive groups: 1) a $25 post-payment only group (60 percent of cases), 2) a $2 pre-payment and $25 post-payment group (20 percent of cases), and 3) a $5 pre-payment and $20 post-payment group (20 percent of cases). The response rates are as of September 19, 2008.

*F-test of differences in response rates across incentive groups is significantly different from zero at the .05 level, two-tailed test.

TABLE 2

THE SAMPLE SIZE OF EXISTING CASES WHO WERE ELIGIBLE FOR THE NEW REGIME,

BY INCENTIVE AMOUNT AND SAMPLE GROUP

| Incentive Group | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| $25 Payment | 337 | 395 | 94 | 199 | 1,025 |
| $50 Payment | 358 | 663 | 166 | 368 | 1,555 |
| $75 Payment | NA | 620 | 172 | 363 | 1,155 |
| Total Cases | 695 | 1,678 | 432 | 930 | 3,735 |

Note: Sample sizes measured as of September 19, 2008.

NA = Not applicable

TABLE 3

BASELINE INTERVIEW RESPONSE RATES FOR EXISTING CASES BEFORE AND AFTER

THE ONSET OF THE NEW REGIME, BY SAMPLE GROUP

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 53.7 | 37.0* | 42.7 | 34.4* | 41.0 |
| After | 62.4 | 52.8* | 57.2 | 50.0* | 54.9 |
| Total Cases | 1,610 | 2,865 | 820 | 1,537 | 6,832 |

Note: The response rates before the regime change are as of September 19, 2008, and the response rates after the regime change are as of November 20, 2008.

*The t-test of Group A (Group C) versus Group B (Group D) response rate differences is significantly different from zero at the .05 level, two-tailed test.

TABLE 4

OVERALL BASELINE INTERVIEW RESPONSE RATES FOR EXISTING CASES

BEFORE AND AFTER THE ONSET OF THE NEW REGIME, BY STATE

| State | Interview Release Date | Response Rate Before New Regime | Current Response Rate (November 20, 2008) | Total Number of Cases |
|---|---|---|---|---|
| Tennessee | 3/06/2008 | 48.3 | 56.8 | 487 |
| Washington | 3/06/2008 | 43.6 | 51.5 | 344 |
| Minnesota | 3/17/2008 | 60.4 | 66.3 | 288 |
| New Jersey | 4/04/2008 | 36.5 | 42.8 | 362 |
| Indiana | 4/24/2008 | 46.7 | 57.4 | 411 |
| Wisconsin | 4/24/2008 | 55.0 | 65.7 | 338 |
| Arkansas | 5/29/2008 | 43.5 | 56.5 | 377 |
| New Hampshire | 6/09/2008 | 34.7 | 45.1 | 277 |
| Texas | 6/09/2008 | 26.3 | 41.5 | 354 |
| Kentucky | 6/09/2008 | 41.6 | 54.7 | 322 |
| Rhode Island | 6/16/2008 | 40.2 | 52.7 | 366 |
| Illinois | 6/27/2008 | 36.1 | 49.6 | 452 |
| Maryland | 6/27/2008 | 38.8 | 54.1 | 327 |
| North Carolina | 6/27/2008 | 41.4 | 57.1 | 1,055 |
| Ohio | 8/05/2008 | 40.9 | 58.0 | 474 |
| Michigan | 9/05/2008 | 29.9 | 60.7 | 598 |
| Overall Response Rate / Sample Size | | 41.0 | 54.9 | 6,832 |

Note: The response rates before the regime change are as of September 19, 2008, and the response rates after the regime change are as of November 20, 2008.

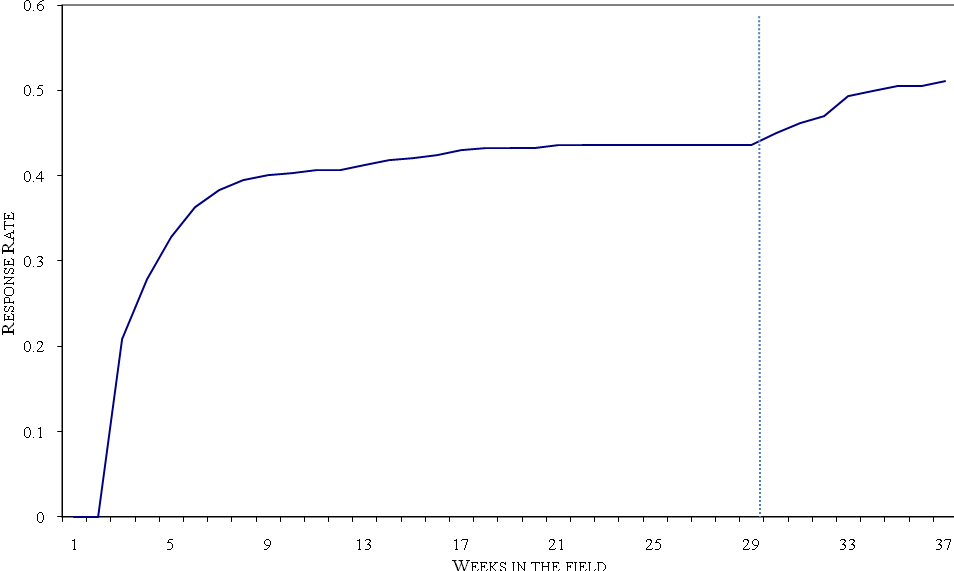

Figure 1

BASELINE INTERVIEW RESPONSE RATES IN TENNESSEE,

BY WEEKS IN THE FIELD

Note: The vertical line indicates the time when the new regime was implemented.

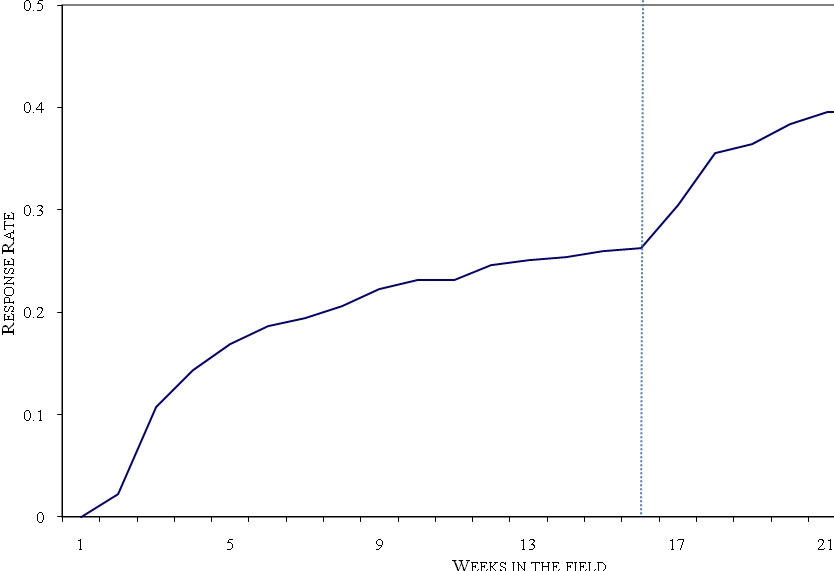

Figure 2

BASELINE INTERVIEW RESPONSE RATES IN WASHINGTON,

BY WEEKS IN THE FIELD

Note: The vertical line indicates the time when the new regime was implemented.

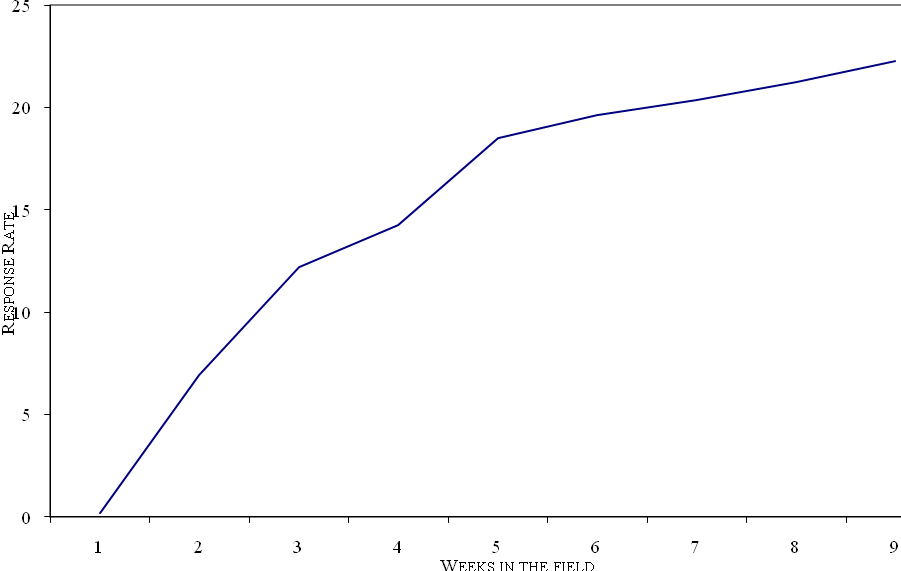

Figure 3

BASELINE INTERVIEW RESPONSE RATES IN TEXAS,

BY WEEKS IN THE FIELD

Note: The vertical line indicates the time when the new regime was implemented.

Figure 4

RESPONSE RATES OF ALL EXISTING CASES UNDER THE NEW REGIME,

BY WEEKS SINCE THE ONSET OF THE NEW REGIME

Note: The figure excludes sample cases from Michigan and Ohio because these states were in the field for fewer than 6 weeks prior to the onset of the new regime.

TABLE 5

PERCENTAGE OF EXISTING CASES COMPLETING INTERVIEWS AFTER THE START OF

THE NEW REGIME, BY INCENTIVE AND SAMPLE GROUP

| Incentive Group | Group A | Group B | Group C | Group D | Groups B-D Combined |
|---|---|---|---|---|---|
| $25 Payment | 12.0* | 17.7* | 23.4 | 16.1 | 18.0* |
| $50 Payment | 22.3 | 25.4 | 26.5 | 25.4 | 25.6 |
| $75 Payment | NA | 26.3 | 25.3 | 23.5 | 25.2 |
| Overall Rate | 17.3 | 24.0 | 25.4 | 22.6 | 23.7 |
| Total Cases | 571 | 1,357 | 359 | 778 | 2,494 |

Notes: The table excludes sample cases from Michigan and Ohio because these states were in the field for fewer than 6 weeks prior to the onset of the new regime. The response rates of the existing cases eligible for the new regime are as of November 20, 2008.

* F-test of differences in response rates across incentive groups is significantly different from zero at the .05 level, two-tailed test.

NA = Not applicable

TABLE 6

PERCENTAGE OF EXISTING CASES COMPLETING INTERVIEWS AFTER THE START OF

THE NEW REGIME, BY GENDER AND AGE

| Subgroup | $25 Payment | $50 Payment | $75 Payment | Overall Rate |
|---|---|---|---|---|
| Full Sample | 16.0 | 24.8 | 25.2 | 22.5 |
| Female | 17.0 | 24.2 | 30.9 | 24.2 |
| Male | 15.2 | 25.3 | 21.1 | 21.2 |
| Under 40 | 15.9 | 24.6 | 22.8 | 21.7 |
| 40–49 | 14.0 | 25.9 | 24.7 | 22.4 |
| 50+ | 17.7 | 24.1 | 27.8 | 23.4 |
| Total Cases | 836 | 1,274 | 955 | 3,065 |

Notes: The table excludes sample cases from Michigan and Ohio because these states were in the field for fewer than 6 weeks prior to the onset of the new regime. The response rates of the existing cases eligible for the new regime are as of November 20, 2008.

TABLE 7

RESPONSE RATE AFTER SIX WEEKS IN THE FIELD,

BY INCENTIVE REGIME AND SAMPLE GROUP

| Incentive Regime | Group A | Group B | Group C | Group D | Groups B-D Combined |
|---|---|---|---|---|---|
| New Regime | 59.6 | 51.2 | 53.8 | 48.8 | 50.9 |
| Old Regime After 6 Weeks | 43.6 | 29.7 | 33.9 | 26.5 | 29.4 |
| Sample Size: New Cases | 490 | 887 | 249 | 486 | 1,622 |
| Sample Size: Old Cases | 1,370 | 2,395 | 699 | 1,295 | 4,389 |

Notes: The response rates under the new regime exclude cases from Florida because this state was in the field for fewer than 2 weeks prior to November 20, 2008. The response rates under the old regime exclude Ohio and Michigan because these two states were in the field for fewer than 6 weeks under that regime. Response rates for the new cases are as of November 20, 2008.

NA = Not applicable

TABLE 8

BASELINE INTERVIEW RESPONSE RATES FOR NEW CASES RELEASED AFTER THE

ONSET OF THE NEW REGIME, BY INCENTIVE AND SAMPLE GROUP

| Incentive Group | Group A | Group B | Group C | Group D | Groups B-D Combined |
|---|---|---|---|---|---|
| $25 Payment | 59.6 | 40.6* | 51.1 | 36.3* | 40.9* |
| $50 Payment | 59.6 | 49.9 | 52.6 | 50.8 | 50.5 |
| $75 Payment | NA | 57.8 | 56.2 | 52.5 | 55.9 |
| Overall Rate | 59.6 | 51.2 | 53.8 | 48.8 | 50.9 |
| Total Cases | 490 | 887 | 249 | 486 | 1,622 |

Notes: The table excludes new cases from Florida because this state was in the field for fewer than 2 weeks prior to November 20, 2008. Response rates for the new cases are as of November 20, 2008.

* F-test of differences in response rates across incentive groups is significantly different from zero at the .05 level, two-tailed test.

NA = Not applicable

TABLE 9

BASELINE INTERVIEW RESPONSE RATES FOR NEW CASES RELEASED AFTER THE

ONSET OF THE NEW REGIME, BY GENDER AND AGE

| Subgroup | $25 Payment | $50 Payment | $75 Payment | Overall Rate |
|---|---|---|---|---|
| Full Sample | 49.1 | 53.0 | 55.9 | 52.9 |
| Female | 53.1 | 56.3 | 60.1 | 56.6 |
| Male | 45.2 | 49.2 | 51.8 | 48.9 |
| Under 40 | 41.5 | 45.9 | 51.5 | 46.7 |
| 40–49 | 44.9 | 56.0 | 53.3 | 52.6 |
| 50+ | 54.4 | 54.5 | 60.1 | 56.1 |
| Total Cases | 548 | 913 | 651 | 2,112 |

Note: The table excludes new cases from Florida because this state was in the field for fewer than 2 weeks prior to November 20, 2008. Response rates for the new cases are as of November 20, 2008.

NA = Not applicable

APPENDIX TABLE A.1

BASELINE INTERVIEW RESPONSE RATES FOR EXISTING CASES BEFORE AND AFTER

THE ONSET OF THE NEW REGIME, BY STATE AND SAMPLE GROUP

ARKANSAS

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 58.0 | 40.6 | 40.0 | 35.7 | 43.5 |
| After | 70.5 | 53.8 | 51.1 | 50.0 | 56.5 |
| Total Cases | 88 | 160 | 45 | 84 | 377 |

ILLINOIS

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 46.5 | 38.0 | 36.5 | 22.1 | 36.1 |
| After | 52.5 | 52.3 | 55.8 | 38.5 | 49.6 |
| Total Cases | 101 | 195 | 52 | 104 | 452 |

INDIANA

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 55.7 | 47.9 | 49.0 | 34.7 | 46.7 |
| After | 62.9 | 57.9 | 63.3 | 50.0 | 57.4 |
| Total Cases | 97 | 167 | 49 | 98 | 411 |

KENTUCKY

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 52.9 | 35.5 | 40.0 | 39.5 | 41.6 |
| After | 56.5 | 51.2 | 57.5 | 56.6 | 54.7 |
| Total Cases | 85 | 121 | 40 | 76 | 322 |

MARYLAND

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 49.4 | 36.7 | 37.2 | 32.9 | 38.8 |
| After | 60.0 | 51.9 | 53.5 | 51.4 | 54.1 |
| Total Cases | 85 | 129 | 43 | 70 | 327 |

MICHIGAN

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 38.8 | 22.7 | 34.3 | 33.0 | 29.9 |
| After | 64.2 | 56.8 | 64.2 | 63.2 | 60.7 |
| Total Cases | 134 | 264 | 67 | 133 | 598 |

MINNESOTA

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 70.6 | 52.4 | 71.4 | 56.2 | 60.4 |
| After | 73.5 | 61.0 | 76.2 | 61.6 | 66.3 |
| Total Cases | 68 | 105 | 42 | 73 | 288 |

NEW HAMPSHIRE

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 46.8 | 31.2 | 24.4 | 31.3 | 34.7 |
| After | 53.2 | 43.1 | 39.0 | 41.7 | 45.1 |
| Total Cases | 79 | 109 | 41 | 48 | 277 |

NEW JERSEY

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 51.8 | 31.6 | 41.9 | 27.2 | 36.5 |
| After | 54.2 | 37.4 | 51.2 | 37.0 | 42.8 |
| Total Cases | 83 | 155 | 43 | 81 | 362 |

NORTH CAROLINA

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 56.8 | 67.1 | 50.4 | 30.1 | 41.4 |
| After | 64.4 | 56.7 | 61.3 | 48.3 | 57.1 |
| Total Cases | 236 | 464 | 119 | 236 | 1,055 |

OHIO

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 58.5 | 35.9 | 37.0 | 35.2 | 40.9 |
| After | 67.0 | 54.9 | 51.9 | 58.3 | 58.0 |
| Total Cases | 106 | 206 | 54 | 108 | 474 |

RHODE ISLAND

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 50.6 | 34.0 | 42.9 | 40.2 | 40.2 |
| After | 65.1 | 46.5 | 61.9 | 47.6 | 52.7 |
| Total Cases | 83 | 159 | 42 | 82 | 366 |

TENNESSEE

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 66.1 | 47.4 | 43.7 | 34.6 | 48.3 |
| After | 68.8 | 56.8 | 58.2 | 44.6 | 56.9 |
| Total Cases | 109 | 213 | 55 | 110 | 487 |

TEXAS

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 29.9 | 21.6 | 32.6 | 27.1 | 26.3 |
| After | 39.1 | 40.3 | 48.8 | 42.4 | 41.5 |
| Total Cases | 87 | 139 | 43 | 85 | 354 |

WASHINGTON

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 63.0 | 36.7 | 41.5 | 37.3 | 43.6 |
| After | 67.9 | 46.9 | 48.8 | 44.0 | 51.5 |
| Total Cases | 81 | 147 | 41 | 75 | 344 |

WISCONSIN

| Regime Change | Group A | Group B | Group C | Group D | All |
|---|---|---|---|---|---|
| Before | 65.9 | 52.3 | 52.3 | 48.7 | 55.0 |
| After | 73.9 | 64.4 | 61.4 | 60.8 | 65.7 |
| Total Cases | 88 | 132 | 44 | 74 | 338 |

1 OMB provided approval of the information collection request in November 2006. The approval expires November 30, 2009 and an extension will be sought well prior to the expiration.

2 Currently, data are available for 24 states, but data from two states are expected to be available shortly.

3 For this analysis we excluded existing cases from Michigan and Ohio because these states had been in the field for less than 3 months when the changes occurred.

| File Type | application/msword |

| Author | PSchochet |

| Last Modified By | naradzay.bonnie |

| File Modified | 2008-11-28 |

| File Created | 2008-11-28 |
