Att_IETE Supporting Statement_PART A.wbk

International Experiences with Technology in Education

OMB: 1875-0257

I. Introduction

The Policy and Program Studies Service (PPSS), Office of Planning, Evaluation and Policy Development, U.S. Department of Education (the Department), requests clearance for data collection activities using a survey and phone interviews for an evaluation of International Experiences with Technology in Education. This evaluation has two specific purposes: (1) to report on the current state of policies, initiatives, and investments in educational technology from a set of 25 countries, of which about 5 countries will be selected for more in-depth, qualitative examination and (2) to develop a framework, process, and recommendations for the ongoing systematic collection and reporting of data to inform the educational technology policies and investments of the U.S. and other countries. In addition, this project will identify barriers and gaps in global knowledge, which may in turn suggest fruitful areas for future research and opportunities for further international collaborations. This data collection effort will be informed by findings from a systematic and comprehensive review of international data resources that are available online and are aligned with policy priorities developed in consultation with representatives from the Department. Resources for the online research include reports and instruments from multinational data collection efforts led by the Organisation for Economic Co-operation and Development (OECD) and the International Association for the Evaluation of Educational Achievement (IEA). In this submission, we request clearance for the study design, sampling strategy, and data collection activities.

Distinctive aspects of this evaluation of International Experiences with Technology in Education (IETE) are the following:

Conceptual framework based on relevant research experience and deep expertise in international ICT policy and programs, evaluation of education technology programs, online and survey research, and the preparation of accessible reports for broad audiences.

Systematic approach to the identification of information sources for the online research task to gather baseline data on the target countries; this includes the development and use of a screening instrument, digital libraries, and a coding protocol to identify, review, and code relevant information from online sources.

Synthesis of findings from the online research describing the specific information on indicators, decision-making processes, major initiatives as well as the extent of the availability of national indicators of education technology investments, access, penetration, and effectiveness along with the frequency at which the data is collected.

Identification of appropriate contacts within ministries of education, leveraging existing relationships of the project team, the Department, and Technical Working Group (TWG) members who participate in international networks of researchers and government officials and/or serve as corporate representatives actively engaged in ICT initiatives.

Surveys, which include supplemental telephone interviews, to be conducted with representatives at the selected ministries of education as described above. Researchers will verify the baseline data collected and probe more deeply into the indicators and processes used to make decisions regarding major educational technology initiatives, and the structures established to support their implementation and monitoring. In addition, the survey will be used to collect data on the countries’ ongoing and planned major technology initiatives and to ask country representatives for descriptions of challenges faced associated with these initiatives.

Reader-friendly descriptive summaries of survey results in the form of a set of matrices displaying data for all countries by data type, including indicators, education technology budgets, and major initiatives (pending the Department’s decision regarding dissemination efforts). This will likely include data on formal decision-making processes for educational technology initiatives, organizational structures for supporting and monitoring implementation, current and planned major initiatives (e.g., infrastructure, connectivity, bandwidth, online instruction, teacher professional development), and implementation challenges experienced.

This document supports the information provided in IC Data Form Parts 1 and 2. The remainder of this section provides background for the study, including research questions, a conceptual framework for study activities, preliminary findings from the Online Research task, and our approach to data collection. In the Supporting Statement for Paperwork Reduction Act Submission that follows, we provide a justification for the study and additional details regarding use of data collected.

A. Purpose of the Project and Research Questions

There is growing worldwide consensus that technologies will play an important role in improving the quality of global education in areas that range from data-based decision-making to teaching and learning in ways that prepare students for the 21st century economy (Kozma, in press). For the last two decades, countries around the world have been making substantial investments in information and communication technologies (ICT) within their educational systems. As far back as 1999, the Organization for Economic Cooperation and Development (OECD) estimated that member nations had invested about US$16 billion in ICTs for education (OECD, 1999). A decade ago, a comparison of ICT investments in the U.S. and the U.K. found that “investment in ICT in schools was greater in the U.S. than in England before about 1998, but that the level of investment in ICT in England overtook that in the USA toward the end of the 1990s” (Twining, 2002, p. 17). Singapore initiated a 5-year “Master Plan for IT in Education” in 1997 (Mui, Kan, & Chun, 2004). This US$1.2 billion project (in a country with 4.3 million people) aimed to create an ICT-enriched school environment for every child by installing computers and high-bandwidth Internet in schools and classrooms and training teachers on the use of computers. Subsequently, their second master plan launched in 2002 adopted a more systemic approach integrating ICT, curriculum, assessment, instruction, professional development, and school culture.

There is wide variety among international ICT programs and investments, which provides a rich source of experience and strategies that can inform investments within the United States. Through the International Experiences with Technology in Education project, the U.S. Department of Education (ED) will develop a compendium of indicators that will best enable policymakers to learn from the growing worldwide experience base of ICT investments and implementations. The purpose of this project is twofold: (1) to report on the current state of policies, practices, initiatives, and investments in educational technology from a diverse set of 25 countries and (2) to develop a framework and process for ongoing systematic collection and reporting of the information to inform U.S. and other governments’ educational technology policies and investments. In addition, this project will identify barriers and gaps in global knowledge, which may in turn suggest fruitful areas for future research and opportunities for further international collaborations.

The proposed study will address the following primary research questions:

What international ICT indicators are currently being collected on an ongoing basis? What are the limitations of these data?

What national policies and systems are being used to guide effective ICT investments?

What set of ICT indicators will be most informative for U.S. policy and feasible to collect on an ongoing basis?

What partnerships and data collection methodologies will be required to make possible an annual compendium?

B. Conceptual Framework

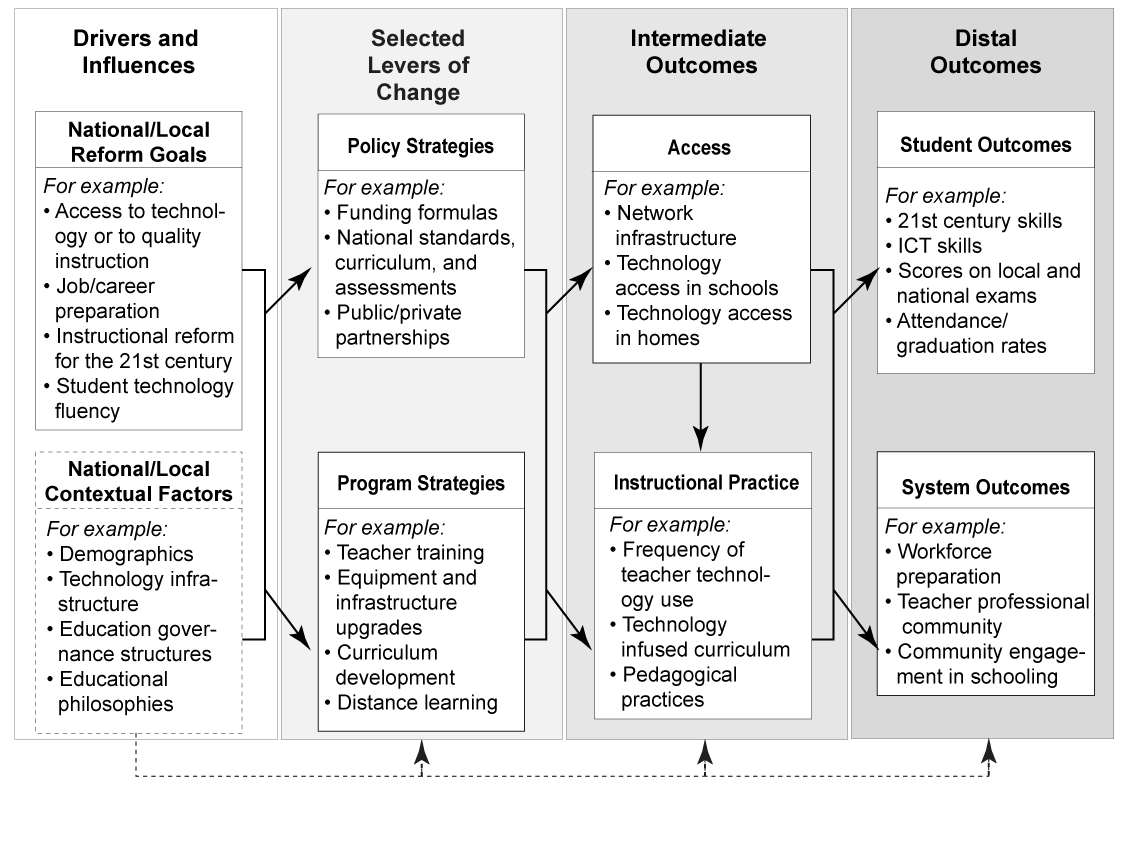

The contractor recognizes that a significant challenge associated with the collection of international indicators is the lack of standardization of measures across collections and the availability of indicators providing evidence of impacts of educational technologies on teaching and learning. Some of the important dimensions of variability across countries are depicted in the conceptual framework in Exhibit 1 below, which has been reviewed by TWG members and the Department. The conceptual framework presented in Exhibit 1 depicts one of the important challenges of this effort: local and national educational technology programs are driven by a country’s specific goals. For example, in developing countries or rural areas, deployment of educational technology often necessarily begins with a focus on improving technology access and infrastructure. By contrast, high-speed Internet access is nearly universal in Finland, so that country’s ICT programs are driven more by pedagogical goals (Kankaanranta & Linnakyla, 2004). This is an example too of the contextual factors that shape program design and implementation: aside from existing technology infrastructure, important contextual factors include community demographics, educational governance structures, and the predominant education philosophy.

Consistent with their goals and context, each country will choose a set of levers of change through which to enact ICT implementation in schools. These may range from policy strategies such as those that promote access or reform national education standards to program strategies such as a focus on teacher training or efforts to expand distance education. The constellation of levers selected by each country provides an important context for understanding the outcomes that are achieved.

Desired outcomes are also driven by the stage of reform that each country’s programs represent. For example, access to working technology in schools and classrooms often precedes outcomes related to changes in instructional practice, and progress in both areas is required before outcomes for student learning or for the educational system as a whole can be expected to emerge. This conceptual framework will inform the set of indicators and other necessary supporting information to include in the compendium and guide the design of data collection processes and instruments.

Exhibit 1. Conceptual Framework for Country-Specific Educational ICT Reforms

C. Selection of ICTEd Indicators

The project identified a set of online resources with information on ICTEd indicators to review, attending specifically to the availability of ICTEd information that would be relevant to current project goals and feasible to collect from a broad set of countries. As a result, our review of online sources focused primarily on reports from existing multinational collections, including such efforts as SITES, PISA, PIRLS, TIMSS, and a survey of EU countries by Eurydice. Our review covered both the survey instruments used in these collections and the associated dissemination reports. In addition, the project examined a set of country-specific reports from the U.S., U.K., Korea, and Australia to supplement the information on existing ICTEd indicators from the multinational data collections. The reviews of 11 reports associated with 8 major multinational data collections (see Exhibit 2) resulted in the identification of over 200 unique indicators for possible consideration in an annual compendium. Additional references providing ICTEd data have also been identified and will be reviewed pending further instruction from ED.

Exhibit 2. List of reports and the corresponding major data collections reviewed

| Title of Report | Major Data Collection referenced | Year/s of Collection |
|---|---|---|
| Are Students Ready For Technology-Rich World? | PISA | 2003 |
| Benchmarking Access And Use Of ICT In European Schools 2006 | European commission eLearning Policy | 2006, 2001 |
| Completing The Foundation For Lifelong Learning: An OECD Survey Of Upper Secondary Schools. | ISUSS | 2001 |
| Education Policy Analysis 2004 | PISA | 2000, 2003 |
| ICT Sub-Theme Final Paper: Using ICT For Teaching And Learning (2004) | DC-APEC | 2003 |
| ICT@Europe.edu: Information And Communication Technology In European Education | Eurydice Report: Information and Communication | 2001 |
| Key Data On Information And Communication Technology In Schools In Europe. 2004 Edition | Eurydice | 2002/03 |
| | PIRLS | 2001 |
| Pedagogy And ICT Use In Schools Around The World: Findings From The IEA SITES 2006 Study | SITES | 2006 |
| PIRLS 2006 International Report | PIRLS | 2003 |
| TIMSS 2003 International Mathematics Report | TIMSS | 2003 |
| TIMSS 2003 International Science Report | TIMSS | 2003 |

The preliminary set of criteria for selecting ICTEd indicators appears below.

Policy relevant within the US and globally.

High probability of response from participating countries including ministries of education.

High comparability of indicator data collected across participating countries.

Evidence of positive association between indicator and meaningful change in administrative efficiency, classroom instruction, teacher skills, student skills, and student learning.

The contractor notes the lack of indicators related to use of technology for increasing teacher capacity and the absence of indicators from major data collections related to the use of data to support continuous improvement. Furthermore, the indicators related to outcomes are based on teacher and student reports of their own competence, attitudes towards using ICTs, or the benefits of using ICT in instruction or on student achievement. During our review of instruments and reports associated with the major international collections, we did not identify any indicators that provide an independent and objective assessment of impacts on teachers and students. The contractor anticipates that survey respondents might be able to address this gap and point the contractor to available resources.

Data for selected indicators will then be used to inform the development of the survey, a key purpose of which is to confirm, and where necessary update, the data from the online research task.

II. Supporting Statement for Paperwork Reduction Act

A. Justification

1. Circumstances Making Collection of Information Necessary

Title II, Part D of the ESEA (P.L. 107-110) has the explicit purpose “to enhance the ongoing professional development of teachers, principals, and administrators by providing constant access to training and updated research in teaching and learning through electronic means” as well as “to support the development and utilization of electronic networks and other innovative methods, such as distance learning, of delivering specialized or rigorous academic courses and curricula for students in areas that would not otherwise have access to such courses and curricula, particularly in geographically isolated areas.” As technology continues to evolve both in the U.S. and abroad, it is important for the Department to have a clear understanding of how it is changing the way that teaching and learning take place globally. This collection will allow the U.S. to gain valuable information about where other countries stand in terms of U.S. educational technology priorities, thus enhancing the Department’s ability to measure international competition in this area.

2. Use of Information

The data collection activities to be conducted by this study will provide three types of products for the Department and, pending approval by the Department, other ministries of education and international agencies with an interest in monitoring educational technologies: (1) reader-friendly research syntheses and a final evaluation report; (2) instruments, e.g., a paper survey and telephone interview protocol, to be used for the annual international compendium; and (3) recommendations on structures and processes that will inform the annual collection and/or other large-scale, multinational data collection efforts.

The final report will synthesize the results of the online search and the survey. Country profiles will be produced based on both sources of data. Tables will be created to show the availability of the different education technology indicators across the countries along with major initiatives currently being implemented or planned. The report will include a chapter describing the methodologies used to collect, code, and analyze the data. A separate chapter of the report will outline a process for the regular, systematic collection of data on common indicators. The description will include recommendations for the structures and guidelines needed to standardize the information collected across countries and enable cross-country comparisons. This section will include a discussion of indicator types that will be desirable to collect to inform policy decisions (e.g., impact of educational technology investments on student learning) but are not currently available across a majority of the countries. We anticipate being able to identify the various factors contributing to the non-collection of the data, e.g., the cost of the collection, measurement issues, or, in the case of impacts on teaching and learning, methodological issues associated with isolating impacts of educational technology investments on teachers and students from impacts of other educational reforms.

3. Use of Information Technology

The contractor will use a variety of advanced information technologies to maximize the efficiency and completeness of the information gathered for this evaluation and to minimize the burden that the evaluation could potentially place on respondents. For example, members of the project team will collect the quantitative and descriptive data available from the instruments and associated reports of eight major multinational data collections by accessing Websites and online databases. This practice will significantly reduce the amount of information that will need to be gathered through interviews.

Surveys will be provided in print-ready formats to give respondents the option to complete the information electronically or by handwriting their responses. Surveys will be distributed and collected via e-mail, although respondents will be given the option to request a printed copy. Upon receipt of the completed survey, the project team will customize the interview protocol in order to probe on details specific to the country. As it is inefficient to travel internationally for a single interview, these will be conducted via telephone. Telephone interviews will be audio-recorded to serve as a back-up to the real-time data capture. This will minimize the need for follow-up communication in the event that some notes are unclear.

By default, all communication including interview scheduling will be conducted via email. Alternative modes of communication, e.g., phone or fax, will be made only if email proves to be inefficient or if specifically requested. An e-mail address will be available to permit respondents to contact the contractor with questions or requests for assistance. The e-mail address will be printed on all the data collection instruments, along with the name and phone number of a member of the data collection team.

4. Efforts to Identify Duplication

The survey will be informed by the systematic review of data available online, which were collected as part of the Online Research Task. These data will serve as each country’s baseline data to be verified in the survey. The project team will access multiple sources related to the major data collections identified previously as well as other sources recommended by TWG members who themselves have coordinated multinational collection efforts. Furthermore, we are focusing on countries that have demonstrated the capability to track ICTEd indicator data, as evidenced by their participation in multinational data collections. Countries that do not meet this criterion will be considered for future efforts.

We are also working to minimize burden by requesting respondents to inform us of updates to the information we have collected and identify the sources of the new information, e.g., websites maintained by government or external organizations, rather than have them provide the missing information themselves. Further, respondents will be encouraged to provide local resources including those that may be in non-English languages. The project team has contracted with a MATO-approved small business (Comprehensive Language Center, Inc.) to provide translation and interpretation services.

Instrumentation will be coordinated across Department studies to prevent unnecessary duplication (e.g. no repeating questions for which sufficient data are already available). These will also be presented to TWG members for review in case they are able to identify alternative sources of data.

5. Methods to Minimize Burden on Small Entities

No small entities will be involved in the study. Nevertheless, the contractor will make every effort to gather available information on the Web and through electronic means in order to reduce the burden on all respondents.

6. Consequences If Information Is Not Collected or Is Collected Less Frequently

This study provides the Department the opportunity to leverage experiences gained elsewhere under various international, national, regional, and local contexts. If information from this study is not collected, policymakers and educators will not have access to data that could inform the conditions and practices in which policies, programs, and investments for ICT in education are most likely to be effective. This is a one-time survey that, if collected less frequently, could hamper the Department’s ability to measure international competition in this area.

7. Special Circumstances

None of the special circumstances listed apply to this data collection.

8. Federal Register Comments and Persons Consulted Outside the Agency

A 60-day notice to solicit public comments was published in the Federal Register on July 15, 2009. In addition, throughout the course of this study, the contractor will draw on the experience and expertise of a technical working group (TWG) that provides a diverse range of experience and perspectives, including representatives from leading international organizations that periodically conduct multinational data collections, corporations with an interest in educational technologies, as well as researchers with expertise in relevant methodological and content areas. The members of this group as well as their affiliations and contact information are listed in Exhibit 4. The first meeting of the technical working group was held on February 19, 2009; the second meeting was held in May 2009, and a third is planned for the first quarter of 2010.

Exhibit 4. Technical Working Group Membership

| Member | Affiliation | Contact Phone Number | Location |
|---|---|---|---|
| John Ainley | Deputy CEO, Australian Center for Educational Research (ACER) | +61 3 9277 555 | Victoria, Australia |
| Ronald Anderson | Professor Emeritus, University of Minnesota | +1 952 473 5910 | Minneapolis, MN |
| Charles Fadel | Global Lead for Education, Cisco Systems | +1 978 + 1 202936 1701 | Boxborough, MA |
| Don Knezek | CEO, International Society for Technology in Education (ISTE) | | |
| Keith Krueger | CEO, Consortium for School Networking (CoSN) | | |
| Christian Monseur | Professor, University of Liege | | |
| Francesc Pedro | Senior Political Analyst, Organisation for Economic Co-operation and Development (OECD) | | |
| Tjeerd Plomp | Emeritus Professor, University of Twente in Enschede, The Netherlands | | |

9. Respondent Payments or Gifts

No payments or gifts will be provided to respondents.

10. Assurances of Confidentiality

Given the nature of the information being collected, most of which is publicly available within local or national contexts and focuses on policies and programs, the contractor was exempted from requirements for work with human subjects. Nevertheless, information collected will be kept secure and official reports will be released only with approval from the Department.

Responses to this data collection will be used only for research purposes. Given that respondents are government officials who will be providing publicly available information, advice from the TWG is that no assurances of confidentiality will be given and names of respondents will be disclosed.

11. Questions of a Sensitive Nature

No questions of a sensitive nature will be included in the survey instrument or follow-up telephone interview protocol.

12. Estimate of Hour Burden

As described above, several types of data collection are intended including document analysis, surveys and interviews. In this section, we focus only on those parts of the data collection that add to respondent burden. The estimates in Exhibit 3 reflect the burden for the notification of study participants as well as the case study data collection activities.

Members of the project team have already collected the quantitative and descriptive data available from the instruments and associated reports of eight major multinational data collections by accessing Websites and online databases. This information will be used to pre-populate the survey thereby allowing respondents to simply verify the information, rather than collect it themselves. Surveys will be provided in print-ready formats to give respondents the option to complete the information electronically or by handwriting their responses. Furthermore, surveys will be translated into the local languages of respondents.

A total of 25 countries have been selected for inclusion in the survey. For each country, at least one representative from their ministry of education will be invited to participate in the study, e.g., to complete the survey and participate in a one-hour telephone interview. As such, we anticipate only a total of 25 survey and interview participants. When requested and provided reasonable justification, e.g., respondent unavailability during the administration period, a representative other than the survey respondent may complete the telephone interview. The contractor has made provisions for real-time telephone interpretation services in order to accommodate respondents who may have limited facility in English, though we recognize that other similarly qualified representatives could substitute in the telephone interview.

Exhibit 3. Estimated Burden for Notification and Data Collection Activities

| Activity | Total No. | Hr. per participant | Total number of hours | Cost Per Hour | Estimated burden ($) |
|---|---|---|---|---|---|
| Notification and Confirmation of Participation | 25 | 0.5 | 12.5 | $48 | 600 |
| Response to survey/verification of information | 25 | 1 | 25 | $48 | 1200 |
| Scheduling of interview | 25 | 0.5 | 12.5 | $48 | 600 |
| Interview | 25 | 2 | 37.5 | $48 | 1200 |
| Total | 25 | 6.25 | 87.5 | $48 | 3600 |
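As a minimal sketch of the arithmetic behind a single burden row, the figures appear to follow total hours = participants × hours per participant, and estimated burden = total hours × hourly cost. The function name `burden_row` and its parameter names are illustrative assumptions, not part of the submission; the example uses the notification and survey rows.

```python
def burden_row(participants: int, hours_each: float, hourly_cost: float) -> tuple[float, float]:
    """Return (total hours, estimated burden in dollars) for one activity.

    Assumes burden = participants * hours per participant * cost per hour,
    which is an interpretation of the exhibit, not a stated formula.
    """
    total_hours = participants * hours_each
    return total_hours, total_hours * hourly_cost


# Notification and confirmation of participation: 25 participants, 0.5 hr each, $48/hr
notification = burden_row(25, 0.5, 48)

# Response to survey / verification of information: 25 participants, 1 hr each, $48/hr
survey = burden_row(25, 1.0, 48)

print(notification)  # (12.5, 600.0)
print(survey)        # (25.0, 1200.0)
```

The same helper could be applied to any other row by substituting that row's participant count and hours per participant.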

13. Estimate of Cost Burden to Respondents

There are no additional respondent costs associated with this data collection other than the burden estimate provided under Exhibit 3 above.

14. Estimate of Annual Costs to the Federal Government

The annual costs to the federal government for this study, as specified under contract, are:

Project Year 1 (2008-2009) $30,376

Project Year 2 (2009-2010) $172,320

Total $202,696

15. Change in Annual Reporting Burden

This request is for a new information collection.

16. Plans for Tabulation and Publication of Results

The IETE project is on a tight schedule because of time needed to gain consensus among TWG and other stakeholders. Thus, the project will stick closely to the following schedule.

The ministry of education (MOE) survey will be piloted in September 2009 and revised based on feedback received. The final version of the survey will be sent to MOEs in December 2009. Respondents will have 2 weeks to complete and submit the survey, after which team members will conduct follow-up phone interviews to clarify and expand upon survey items.

In the first quarter of 2010, the data collection team will conduct the synthesis of survey and interview data. These data will provide a more in-depth look at how investments in ICTEd are implemented, as well as the conditions and practices associated with emerging innovative policies, programs, and funding mechanisms. Dr. Lawrence Gallagher will lead the quantitative descriptive analyses and presentation of survey results. Open-ended responses will be coded by qualitative analysts. In addition to creating summary tables of indicators and country profiles, where the sample size is sufficient to support the statistical analyses, a limited set of cross-tabs or regressions will be run to examine the relationships between country-specific factors (e.g., population size, region, GDP) and ICT-related practices, initiative types, and expenditures per student.

Country profiles will be produced based on both sources of data and will follow formats approved by ED. Tables will be created to show the availability of the different education technology indicators across the 25 countries along with major initiatives currently being implemented or planned. The report will include a chapter describing the methodologies used to collect, code, and analyze the data. A separate chapter of the report will outline a process for the regular, systematic collection of data on common indicators. The description will include recommendations for the structures and guidelines needed to standardize the information collected across countries and enable cross-country comparisons. This section will include a discussion of indicator types that will be desirable to collect to inform policy decisions (e.g., impact of educational technology investments on student learning) but are not currently available across a majority of the countries because of the cost of the collection, measurement issues, or, in the case of impacts on teaching and learning, methodological issues associated with isolating impacts of educational technology investments on teachers and students from impacts of other educational reforms.

Pending approval and additional funding from the Department, the final report, along with descriptive tables and country profiles, will be made accessible to the public in print and via the Web. The contractor has extensive experience producing research reports, results, and findings that are accessible to a wide audience and that are available through web pages created for the purpose of disseminating project information and materials.

17. OMB Expiration Date

All data collection instruments will include the OMB expiration date.

18. Exceptions to Certification Statement

No exceptions are requested.
