PISA 2012 Full Scale Part A

Program for International Student Assessments (PISA) 2012 Main Study

OMB: 1850-0755

OECD

PROGRAM FOR INTERNATIONAL STUDENT ASSESSMENT

(PISA 2012)

Main study

REQUEST FOR OMB CLEARANCE

OMB# 1850-0755 v.12

SUPPORTING STATEMENT PART A

Submitted by:

National Center for Education Statistics

U.S. Department of Education

Institute of Education Sciences

Washington, DC

Submitted: June 2011

TABLE OF CONTENTS

A.1 Importance of Information

A.2 Purposes and Uses of Data

A.3 Improved Information Technology (Reduction of Burden)

A.4 Efforts to Identify Duplication

A.5 Minimizing Burden for Small Institutions

A.6 Frequency of Data Collection

A.8 Consultations Outside NCES

A.9 Payments or Gifts to Respondents

A.10 Assurance of Confidentiality

A.13 Total Annual Cost Burden

A.14 Annualized Cost to Federal Government

A.15 Program Changes or Adjustments

A.16 Plans for Tabulation and Publication

A.17 Display OMB Expiration Date

A.18 Exceptions to Certification Statement

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

B.1 Respondent Universe

B.2 Statistical Methodology

B.3 Maximizing Response Rates

B.4 Purpose of PISA 2012 and Data Uses

B.5 Individuals Consulted on Study Design

APPENDIX A: RECRUITMENT MATERIALS

APPENDIX B: PARENTAL CONSENT MATERIALS

APPENDIX C: FIELD TEST QUESTIONNAIRES

APPENDIX D: STUDENT LOCATOR QUESTIONS

Please see the accompanying PISA 2012 Full Scale Summary of Changes Memo for a description of changes from the last approved PISA 2012 Field Test package. Please note that OMB does NOT need to review the enclosed field test questionnaires provided in Appendix C because these are the field test versions that were approved in March 2011.

The Program for International Student Assessment (PISA) is an international assessment of 15-year-olds that focuses on assessing students’ mathematics, science, and reading literacy. PISA was first administered in 2000 and is conducted every three years. The fifth cycle of PISA, PISA 2012, is being administered at a time when interest is increasing, both worldwide and in the United States, in how well schools are preparing students to meet the challenges of the future, and how the students perform compared with their peers in other countries of the world. Participation in PISA by countries and jurisdictions1 has significantly increased since the initial survey in 2000: 43 countries/jurisdictions in 2000, 41 in 2003, 57 in 2006, 65 in 2009, and 67 (expected) in 2012. The United States has participated in all of the previous cycles, and will participate in 2012 in order to track trends and to compare the performance of U.S. students with that of students in other countries.

PISA 2012 is coordinated by the Organization for Economic Cooperation and Development (OECD). In the United States, PISA 2012 is conducted by the National Center for Education Statistics (NCES) of the Institute of Education Sciences, U.S. Department of Education. PISA is a collaboration among the participating countries, the OECD, and a consortium of various international contractors, referred to as the PISA International Consortium, led by the Australian Council for Educational Research (ACER).

In each administration of PISA, one of the subject areas (mathematics, science, or reading literacy) is the major domain and has the broadest content coverage, while the other two subjects are the minor domains. Other areas may also be assessed, such as general problem solving. PISA emphasizes functional skills that students have acquired as they near the end of mandatory schooling (aged 15 years). Moreover, PISA assesses students’ knowledge and skills gained both in and out of school environments. The focus on the “yield” of education in and out of school makes it different from other international assessments such as the Trends in International Mathematics and Science Study (TIMSS) and the Progress in International Reading Literacy Study (PIRLS), which are closely tied to school curriculum frameworks and assess younger and grade-based populations.

PISA 2012 will focus on mathematics literacy as the major domain. Reading and science literacy will also be assessed as minor domains. All three will be assessed through a paper-and-pencil assessment and there also will be computer-based assessments in mathematics and reading. In addition, there will be a general problem-solving assessment (computer-based only) and an assessment of financial literacy (paper-and-pencil only). PISA 2012 represents the second cycle with the major domain in mathematics literacy (PISA 2003 was the first). This is also the second cycle that includes an assessment of general problem-solving (2003 was the first), although the previous administration was a paper-and-pencil assessment. This will be the first assessment of financial literacy by PISA.

The paper-based mathematics, science, and reading literacy assessments and the computer-based problem-solving assessment are core components of PISA 2012 and all countries are required to participate. The computer-based mathematics and reading assessments and the financial literacy assessment are international options. PISA 2012 is the first time that the United States will participate in PISA computer-based assessments. There was a limited science computer-based assessment in 2006 and a computer-based reading assessment in 2009, but the United States did not participate in either. However, PISA is transitioning to being primarily computer-based and it is therefore vital that the United States gain experience in 2012 with PISA computer-based assessments. At the same time, however, not all countries will administer the computer-based assessments in 2012 (to date, 20 OECD countries and 29 countries altogether intend to participate) and the primary reporting of PISA 2012 mathematics, reading and science results will be based on the paper-and-pencil assessments. Based on the field test, in which the core and international options were successfully administered, the United States will administer all of these components in the main study. This will enable the United States to prepare for future cycles of PISA in which computer-based assessments will be a significant component and at the same time enable the United States to be included in the comparisons based on all PISA countries in 2012. At this time, funding for the financial literacy component has not been secured; Department of Education officials are actively seeking funding so the United States can participate.

In addition to the cognitive assessments described above, PISA 2012 will include questionnaires administered to assessed students and school principals.

The U.S. PISA main study will be conducted from September through November 2012. It will involve a nationally representative sample of 5,600 students in the target population in approximately 168 schools. In addition, one state (Florida) will participate in PISA, using its own funding, in order to receive state-level results, and another state may do so as well. In Florida (and the other state that may join), 50 schools will participate in addition to the schools from the state that are already in the national sample.

Each student will take a 2-hour paper-and-pencil assessment that will include some combination of mathematics, reading, and science items, or mathematics and financial literacy items, and will also complete a 30-minute student questionnaire; 18 students per school will return for a second session to take a 40-minute computer-based assessment of reading, mathematics, and general problem-solving. The school principal of each sampled school will complete a 30-minute questionnaire. Students taking financial literacy will complete a short set of financial literacy background questions.

NCES is planning a future methodological study to validate PISA by relating student performance on PISA 2012 to other outcomes. To support the validation study, the PISA 2012 students will be asked to supply contact information so NCES can contact them in the future. Schools will also be asked if they are able to supply publicly available directory information for the assessed students. The follow-up study, including any follow-up contact with students, will be carried out under a separate OMB clearance request (to be submitted at a later date). In this current request we are seeking approval only to gather student contact information.

In this clearance package, NCES requests OMB’s approval for:

recruiting for the 2012 main study;

conducting the PISA 2012 data collection; and

collecting student contact information for a future follow-up study.

Recruiting for the main study will begin in the fall of 2011 (12 months in advance of the data collection) and main study data collection will be in the fall of 2012. Recruitment materials, including letters to state and district officials and school principals, text for a PISA brochure, a “timeline” of within-school activities, and “Frequently Asked Questions” to be provided to recipients of the recruitment letters are included in Appendix A. Appendix A also includes a “Frequently Asked Questions” for students and a summary list of tasks for the School Coordinator (staff person in the school that liaises with PISA staff); these materials will be shared with participating schools. Parental consent letters and related materials are included in Appendix B.

It is important to note that because PISA is a collaborative international study, the U.S. administration of PISA operates under some constraints, particularly around the schedule and the availability of instruments, which are negotiated internationally. For example, at the time that this package is submitted, the final international versions of the main study student and school questionnaires are not available from the international contractor. NCES has included in Appendix C of this document the PISA 2012 field test student and school questionnaires, which were approved by OMB on March 16, 2011 and administered in the United States in the field test. The PISA 2012 final student and school questionnaires are expected to be subsets of the field test versions; field test data will be used to determine the best versions of items measuring the same constructs and to eliminate items that do not function as expected. The international versions of the questionnaires will be provided to countries in December 2011, after which they will need to be prepared for use in the United States. This is the public’s opportunity to provide comments on the field test versions of the student and school questionnaires so that such comments may be taken into consideration when the final versions are determined in the fall of 2011.

In submitting this package, NCES is seeking permission to submit the final questionnaires as a 30-day public notice in March 2012, once the international versions of the questionnaires have been adapted for use in the United States. The 30-day notice will also include any additional changes to the main study plans described in the current request.

A.1 Importance of Information

As part of a continuing cycle of international education studies, the United States, through the National Center for Education Statistics (NCES), is currently and in the coming years participating in several international assessments and surveys. The Program for International Student Assessment (PISA), sponsored by the Organization for Economic Cooperation and Development (OECD), is one of these studies.

In light of the growing concerns related to international economic competitiveness, the changing face of our workplace, and the expanding international marketplace in which we trade, knowing how our students and adults compare with their peers around the world has become more prominent than ever before. Nationwide, interest has grown in understanding, beyond simple comparisons, what other nations are doing to further the educational achievement of their populations.

Data at critical points during the education career of our students will help inform policymakers in their efforts to guide and restructure the American education system. These critical points may occur during primary, secondary, or tertiary education, as well as extending into adult education and training programs. Consequently, generating comparative data about students in school, at the end of schooling, and about adults in the workplace and in the community has become an important focus for NCES.

PISA 2012 is part of the larger international program that NCES has actively participated in through collaboration with and representation at the OECD, the Asia-Pacific Economic Cooperation (APEC), and the International Association for the Evaluation of Educational Achievement (IEA). Collaboration with Statistics Canada, Eurostat, and ministries of education throughout the world helps to round out the portfolio of data NCES compiles.

Through this active participation, NCES has sought to strengthen the quality, consistency, and timeliness of international data. To continue this effort, the United States must follow through with well-organized and executed data gathering activities within our national boundaries. These efforts will allow NCES to build a data network that can provide the information necessary for informed decision-making on the part of national, state, and local policymakers.

PISA measures students' knowledge, skills, and competencies primarily in three subject areas: reading, mathematics, and science literacy. The overall strategy is to collect in-depth information on student capabilities in one of these three domains every 3 years so that detailed information on each becomes available every 9 years. During each 3-year survey cycle, the major focus is on one content domain, with a minor focus on the other two content domains. The major focus for the data collection in 2012 is on mathematics literacy, with a minor focus on science and reading. The 2012 data collection will be the second time the focus has been on mathematics literacy, thus allowing the first in-depth comparison of mathematics performance over time. The target population for this project will be a nationally representative sample of 15-year-old students. PISA 2012 also includes computer-based assessments in mathematics, reading, and general problem-solving. In addition to enabling PISA to measure parts of the domain(s) that cannot be measured through traditional paper-and-pencil assessments, the inclusion of computer-based assessments in 2012 is part of PISA’s transition to being entirely computer-based in the future.

Over the last few decades, the world has become accustomed to hearing about Gross Domestic Products, Consumer Price Indices, unemployment rates, and other similar terms in news reports comparing national economies. The use of these economic indicators allows for discussion and debate of complex economic activities with well-respected measures of that activity. Education policymakers and the general public have a similar need to discuss what is going on in the field of education with indicators that are based on valid and reliable data and other information. Outcome data from PISA allow U.S. policymakers to gauge U.S. performance in relation to other countries, as well as monitor progress over time in comparison to these countries. The results of the PISA assessments, published every 3 years along with related indicators, will allow national policymakers to compare the performance of their education systems with those of other countries. Further, the results will provide a basis for better assessment and monitoring of the effectiveness of education systems at the national level. Without these kinds of data, U.S. policymakers will be limited in their ability to gain insight into the educational performance and practices of other nations as they compare to the United States, and the United States will lose the investment made in previous cycles in measuring trends.

The Australian Council for Educational Research (ACER), under contract with the OECD, is responsible for the international implementation of this project. Westat, the data collection contractor for the United States, will work directly with ACER and the PISA U.S. National Project Managers from NCES.

A.2 Purposes and Uses of Data

Governments and the general public want solid evidence of education outcomes. In the late 1990s, the OECD launched an extensive program for producing policy-oriented and internationally comparable indicators of student achievement on a regular basis and in a timely manner. PISA is at the heart of this program. How well are schools preparing students to meet the challenges of the future? Parents, students, the public, and those who run education systems need to know whether children are acquiring the necessary skills and knowledge, whether they are prepared to become tomorrow's workers, to continue learning throughout life, to analyze, to reason, and to communicate ideas effectively.

The results of OECD’s PISA, published every 3 years along with related indicators, allow national policymakers to compare the performance of their education systems with those of other countries. Further, the results provide a basis for better assessment and monitoring of the effectiveness of education systems at national levels.

Through PISA, OECD produces three types of indicators:

Basic indicators that provide a baseline profile of the knowledge, skills, and competencies of students;

Contextual indicators that show how such skills relate to important demographic, social, economic, and education variables; and

Trend indicators that emerge from the ongoing, cyclical nature of the data collection.

PISA 2012 Components

The primary focus for the assessment and questionnaires for PISA 2012 will be on mathematics literacy. The PISA mathematics framework defines mathematics literacy as:

“an individual’s capacity to formulate, employ, and interpret mathematics in a variety of contexts. It includes reasoning mathematically and using mathematical concepts, procedures, facts, and tools to describe, explain, and predict phenomena. It assists individuals to recognise the role that mathematics plays in the world and to make the well-founded judgments and decisions needed by constructive, engaged and reflective citizens.”

As in all administrations of PISA, reading and science literacy also will be assessed, although they will be “minor domains” in 2012. In addition, PISA 2012 includes computer-based assessments and a new financial literacy assessment. Questionnaires will be administered to students and school principals. As summarized in Table A-1, some components of PISA 2012 are “core”—countries are required to participate—while other components are “international options.” The United States will administer all components in the main study.

Table A-1. Assessment components of PISA 2012: Core and international options

Assessment Mode | Core | International Options

Paper-and-pencil | Mathematics Literacy; Science Literacy; Reading Literacy | Financial Literacy

Computer-based | General Problem Solving | Mathematics; Reading

The PISA 2012 instruments are described below.

Assessment instruments

Paper-and-pencil assessment. For the paper-based assessment, the main study will focus on mathematics, but will also include science and reading items as well as financial literacy. Altogether, there will be 15 test booklets. Thirteen test booklets will each have four 30-minute blocks of mathematics, reading and/or science items. There will also be 2 booklets that include financial literacy and mathematics items, as well as 5 minutes of background questionnaire items focused on financial literacy. Each student takes one booklet during a 2-hour session. The use of multiple booklets in a matrix design allows PISA to have broad subject matter coverage while keeping the burden on individual students to a minimum.

Computer-based assessments. A computer-based assessment will be administered in a separate 40-minute session to a subsample of students who take the paper-and-pencil assessment. There will be 11 forms of the computer-based assessment, each with two 20-minute blocks. A form could include problem-solving only, reading only, mathematics only, or a combination.
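The session lengths stated above follow directly from the block structure. As a minimal sketch of that arithmetic (the constant and function names are illustrative and not part of the PISA design documents; the two financial literacy booklets are left out because their block composition is not specified here):

```python
# Block counts and durations as stated in the text above.
# All names are illustrative assumptions, not PISA terminology.
PAPER_BLOCKS_PER_BOOKLET = 4      # thirteen booklets each have four blocks
PAPER_BLOCK_MINUTES = 30
COMPUTER_BLOCKS_PER_FORM = 2      # eleven forms each have two blocks
COMPUTER_BLOCK_MINUTES = 20


def session_minutes(blocks: int, minutes_per_block: int) -> int:
    """Total testing time for one booklet or form."""
    return blocks * minutes_per_block


paper_session = session_minutes(PAPER_BLOCKS_PER_BOOKLET, PAPER_BLOCK_MINUTES)
computer_session = session_minutes(COMPUTER_BLOCKS_PER_FORM, COMPUTER_BLOCK_MINUTES)

print(f"Paper-and-pencil session: {paper_session} minutes")
print(f"Computer-based session: {computer_session} minutes")
```

The results agree with the 2-hour paper-and-pencil session and the 40-minute computer-based session described above.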

Questionnaires

School questionnaire. A representative from each participating school will be asked to provide information on basic demographics of the school population and more in-depth information on one or more specific issues (generally related to the content of the assessment in the major domain, mathematics). Basic information to be collected includes data on school location; measures of socio-economic context of the school, including location, school resources, facilities, and community resources; school size; staffing patterns; instructional practices; policies; and school organization. The in-depth information is designed to address a very limited selection of issues that are of particular interest and that focus primarily on the major content domain, mathematics. It is anticipated that the school questionnaire will take approximately 30 minutes to complete.

Student questionnaires. Participating students will be asked to provide basic demographic data and information pertaining to the major assessment domain, mathematics. Basic information to be collected includes demographics (e.g., age, gender, language, race and ethnicity); socio-economic background of the student (e.g., parental education, economic background); student's education career; and educational resources and their use at home and at school. Domain-specific information will include instructional experiences and time spent in school, as perceived by the students, and student attitudes. It is anticipated that the student questionnaire will take approximately 30 minutes to complete. In the main study there will be three forms of the student questionnaire with common and different items. While each student will complete a single questionnaire, multiple forms of the questionnaire will enable PISA to collect data on a broader set of variables.

As noted, the PISA 2012 main study questionnaires have not yet been finalized, as the field test has just finished, but the main study student and school questionnaires are expected to be subsets of the field test versions. The selection of items to carry forward to the main study will be based on analyses of the field test data. The field test versions administered in the United States are provided in Appendix C (Appendix C also includes the financial literacy background items that are presented to students in the financial literacy test booklets).

Collecting Contact Information. NCES is planning a methodological study to validate PISA by relating student performance on PISA 2012 to other outcomes. To support the validation study we will collect contact information for students who participate in PISA 2012. The validation study, including any follow-up contact with students, will be carried out under a separate OMB clearance request (to be submitted at a later date). Appendix D contains items to be asked of students regarding their home address and phone numbers and the name, home address and phone numbers of a relative or close friend (the items mirror those used in past and current NCES longitudinal studies). To ensure we have as complete a database of student contact information as possible, we will also request from schools publicly available directory information for the students who participate in PISA 2012.

A.3 Improved Information Technology (Reduction of Burden)

The PISA 2012 design and procedures are prescribed internationally. Data collection involves paper-and-pencil responses for the core mathematics, reading, and science assessment and the optional financial literacy assessment. In the computer-based mathematics, reading, and problem-solving assessments, to be administered in the United States for the first time in 2012, responses will be captured electronically. In the United States, the computer-based assessments will be implemented using laptops carried into schools by the data collection staff.

A.4 Efforts to Identify Duplication

A number of international comparative studies already exist to measure achievement in mathematics, science, and reading, including the Trends in International Mathematics and Science Study (TIMSS) and the Progress in International Reading Literacy Study (PIRLS). The Program for the International Assessment of Adult Competencies (PIAAC), to be administered for the first time in 2011, will measure the reading literacy, numeracy, and problem-solving skills of adults. In addition, the United States has been conducting its own national surveys of student achievement for more than 30 years through the National Assessment of Educational Progress (NAEP) program. PISA differs from these studies in several important ways:

Content. PISA is designed to measure “literacy” broadly, while other studies, such as TIMSS and NAEP, have a strong link to curriculum frameworks and seek to measure students’ mastery of specific knowledge, skills, and concepts. The content of PISA is drawn from broad content areas, such as understanding, using, and reflecting on written information for reading, in contrast to more specific curriculum-based content such as decoding and literal comprehension. Moreover, PISA differs from other assessments in the tasks that students are asked to do. PISA focuses on assessing students’ knowledge and skills in reading, mathematics, and science literacy in the context of everyday situations. That is, PISA emphasizes the application of knowledge to everyday situations by asking students to perform tasks that involve interpretation of real-world materials as much as possible. A study based on expert panels’ reviews of mathematics and science items from PISA, TIMSS, and NAEP reports that PISA items require multi-step reasoning more often than either TIMSS or NAEP.2 The study also shows that PISA mathematics and science literacy items often involve the interpretation of charts and graphs or other “real world” material. These tasks reflect the underlying assumption of PISA: as 15-year-olds begin to make the transition to adult life, they need to know not only how to read, or know particular mathematical formulas or scientific concepts, but also how to apply this knowledge and these skills in the many different situations they will encounter in their lives. The computer-based assessments to be included in 2012 add further “real world” tasks, given the predominance of technology in the lives of young adults. Finally, TIMSS and NAEP do not include measures of financial literacy.

Age-based sample. The goal of PISA is to represent outcomes of learning rather than outcomes of schooling. By placing the emphasis on age, PISA intends to show what 15-year-olds have learned both in and outside of school, and over the years rather than in a particular grade. In contrast, NAEP, TIMSS, and PIRLS are all grade-based samples: NAEP (main) assesses students in grades 4, 8, and 12; TIMSS assesses students in grades 4 and 8; and PIRLS assesses students in grade 4. PISA thus seeks to show the overall yield of an education system and the cumulative effects of all learning experience. Focusing on age 15 provides an opportunity to measure broad learning outcomes while all students are still required to be in school across the many participating nations. Finally, because years of education vary among countries, choosing an age-based sample makes comparisons across countries somewhat easier than a grade-based sample.

Information collected. The kind of information PISA collects also reflects a policy purpose slightly different from the other assessments. PISA collects only background information related to general school context and student demographics. This differs from other international studies such as TIMSS, which collects background information related to how teachers in different countries approach the task of teaching and how the approved curriculum is implemented in the classroom. The results of PISA will certainly inform education policy and spur further investigation into differences within and between countries, but PISA is not intended to provide direct information about improving instructional practice in the classroom. The purpose of PISA is to generate useful indicators to benchmark performance and inform policy.

Alternate sources for these data do not exist. This study represents the U.S. participation in an international study involving 67 countries and jurisdictions in PISA 2012. The United States must collect the same information at the same time as the other nations for purposes of making international comparisons. No other study in the United States will be using the instruments developed by the international sponsoring organization, and thus no alternative sources of comparable data are available.

In order to participate in the international study, the United States must agree to administer the same core instruments that will be administered in the other countries. Because the items measuring academic achievement have been developed with intensive international coordination, any changes to the PISA 2012 instruments would also require international coordination.

A.5 Minimizing Burden for Small Entities

No small entities are part of this sample. The school sample for PISA will contain small-, medium-, and large-size schools from a wide range of school types, including private schools, and burden will be minimized wherever possible for all institutions participating in the data collection. Student burden will be reduced through the use of multiple forms of the student background questionnaire. The use of multiple forms will enable PISA to gather a broad set of information without additional administration time. In addition, contractor staff will assume as much of the organization and test administration as possible within each school. Contractor staff will undertake all test administration and these staff will also assist with parental notification, sampling, and other tasks as much as possible within each school.

A.6 Frequency of Data Collection

This request to OMB is for the PISA 2012 main study. PISA is conducted on a 3-year cycle as prescribed by the international sponsoring organization, and adherence to this schedule is necessary to establish consistency in survey operations among the many participating countries.

A.7 Special Circumstances

No special circumstances exist in the data collection plan for PISA 2012 that would necessitate unique or unusual manners of data collection. None of the special circumstances identified in the Instructions for Supporting Statement applies to the PISA 2012 study.

A.8 Consultations Outside NCES

Consultations outside NCES have been extensive and will continue throughout the life of the project. The nature of the study requires this, because international studies typically are developed as a cooperative enterprise involving all participating countries. PISA 2012 is being developed and operated, under the auspices of the OECD, by a consortium of organizations. Key persons from these organizations who are involved in the design, development and operation of PISA 2012 are listed below.

Organization for Economic Cooperation and Development

Andreas Schleicher

Indicators and Analysis Division

2, rue André Pascal

75775 Paris Cedex 16

FRANCE

Tel: +33 (1) 4524 9366

Fax: +33 (1) 4524 9098

Australian Council for Educational Research

Ray Adams, Project Director

ACER

19 Prospect Hill Road

CAMBERWELL VIC 3124

AUSTRALIA

Tel: +613 92775555

Fax: +613 92775500

Westat

Keith Rust, Director of Sampling

1600 Research Boulevard

Rockville, Maryland 20850-3129

USA

Tel: 301 251 8278

Fax: 301 294 2034

A.9 Payments or Gifts to Respondents

Currently, the minimum response rate targets required by OECD are 85 percent of original schools and 80 percent of students, while the NCES minimum response rate target is 85 percent at the student level. These high response rates are difficult to achieve in school-based studies. The United States failed to reach the school response rate targets in all previous PISA administrations (2000, 2003, 2006, and 2009) and had to adjust incentives upward in the middle of the recruitment and data collection period in order to meet minimum response rate requirements. Countries may have an original school response rate as low as 65 percent provided they can show through a non-response bias analysis that there is no bias in the sample, but the United States has had great difficulty achieving even this minimum: in 2009 our original school response rate of 68 percent was the lowest of all PISA countries. With the addition of a second session in PISA 2012 to enable administration of the computer-based assessments, and a larger sample size to accommodate the financial literacy assessment, we expect to face even greater resistance from schools in the main study.

Gaining sufficient student cooperation is also challenging. While we met the NCES requirement in PISA 2006 by 6%, unweighted results from PISA 2009 suggest we just met the 85% response rate required by NCES with a final student response rate of 87%, and there were 33 schools below the 80% required by OECD. Moreover, asking students to return for a second session has not been tested in a full-scale administration of PISA and will likely interfere with the remainder of each student’s school day. Thus, we anticipate even greater difficulty getting students to return for a second session. In PISA, schools with less than 50% of students responding are considered “nonparticipating” so NCES is concerned not only with the overall student response rate but also the response rate within each school. Failing to meet international requirements for response rates puts the United States at great risk of not having its PISA results included in the international reports and database and, in effect, a loss of millions of dollars invested by the United States in PISA, a loss in the time invested by the schools and students that do participate, and the loss of the comparative data the United States is seeking through the project.

Because of these concerns, NCES used a multi-pronged approach to address the challenge of gaining school and student cooperation and to learn as much as possible during the field test about how to achieve acceptable participation rates. Focus group sessions were held with school principals and students (under a separate clearance request) to understand their reactions to PISA and to understand those elements of the study that were attractive to them and would influence participation. The results of the focus groups led to a reworking of advance materials describing the study and adding some additional pieces to the package given to school principals and other administrators. In addition, materials were developed for use with students prior to the assessment that provided information on PISA’s goals, why participation is important and what students should expect when taking the assessment. These materials were effective with schools and students in the field test and similar materials will be used in the main study. These materials are shown in Appendix A.

The field test also incorporated an incentive experiment to gain some understanding of the interaction between different levels of monetary incentives and response rates. In half of the schools, the school and school coordinator received the same incentive amounts as used in PISA 2009 (Incentive 1) and in the other half they received larger incentive amounts (Incentive 2) as shown in Table A-2. Students received the same incentive amount in both groups (described below).

Table A-2. Summary of incentives used in field trial incentive experiment

| Recipient | Incentive 1 (2009 amounts)* | Incentive 2 |
| Schools | $200* | $800 |
| School coordinators | $100* | $200 |

Field test sample size requirements were designed to provide a sufficient number of responses to new items in order to evaluate item quality and also to field test, in the United States, the computer-based reading literacy assessment, which was used internationally in 2009 but not in the United States. To meet these requirements, schools sampled for the field test were pre-assigned to one of two data collection modes. Half of the schools were assigned to participate in the paper-based and computer-based assessments (hereafter referred to as PB-CBA schools) and the other half were assigned to participate in the computer-based assessments only (hereafter referred to as CBA-only schools).

The general finding from the experiment was that incentive level had no effect on school response. The participation rate for those schools in the control group receiving 2009 incentive amounts was similar to the rate for schools receiving the higher amounts. We were particularly interested in the PB-CBA schools because this will be the data collection mode used in the main study. In PB-CBA schools, 22 schools (73.3 percent) from the control group cooperated and 19 schools (63.3 percent) from the treatment group cooperated. There was no significant difference between these two groups.

Table A.3. School response rates by data collection mode and incentive experiment group

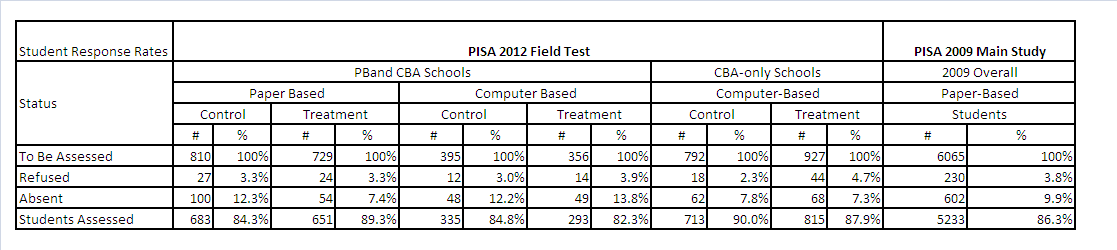
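The "no significant difference" finding for PB-CBA schools can be illustrated with a pooled two-proportion z-test on the cooperation counts reported above. This is a minimal sketch, not the test actually used in the field test analysis, which is not specified here. The group size of 30 schools per arm is an assumption inferred from the reported counts and percentages (22/30 = 73.3 percent, 19/30 = 63.3 percent), and the function name is illustrative.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Counts from the text; n = 30 per group is inferred, not stated.
z, p_value = two_proportion_z_test(22, 30, 19, 30)
print(f"z = {z:.2f}, two-sided p = {p_value:.2f}")
```

Under these assumptions the statistic is well inside the range expected by chance (z below 1), which is consistent with the conclusion that the higher school incentive did not measurably change school cooperation.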

However, we did find a difference between the control and treatment groups with respect to the student response rates. Among schools administering both paper-based and computer-based assessments, the student response rate within the control group was lower in the paper-based session (84.3 percent) than the response rate in the paper-based session in the treatment group (89.3 percent). This difference is significant at the .01 level. Table A.4 presents these data.

Table A.4. Student response rate by data collection mode and incentive experiment group

Incentive for Schools

For the main study we propose to use the 2009 amount of $200 for participating schools, given that we did not find any real difference between the cooperation rates of schools receiving $200 or $800. However, we do anticipate difficulty in reaching our required school response rates, as we have in the past. Though the field test had more schools participate than required and the disposition of the schools was positive toward PISA, the recruitment of these schools did not follow the model that will be used in the main study because we were not required to build a response rate for original schools. In the field test, we had to obtain participation from an adequate number of schools to get the required number of student responses for the test items. Therefore, we pooled our schools (both originally sampled schools and their replacement schools), approached the original schools first, but moved to the replacement quickly with very little, if any, conversion effort. This will not be the case in the main study where we must pursue the original schools until we obtain a satisfactory rate of participation. The historical experience for the United States is that obtaining a sufficient response rate of 65 percent of originally sampled, eligible schools is difficult and has required additional efforts. Moreover, in the field test we did not go to schools in states where we have traditionally had difficulty gaining school cooperation.

Given these anticipated difficulties in securing school participation, we propose a second-tier incentive that would allow us to offer the higher amount used in the incentive experiment: $800 to participating schools. This second tier would not be initiated until near the end of the 2011-12 academic year, in May, after we have approached all original schools and had an opportunity to try different conversion efforts, such as addressing the specific concerns of refusing schools and making personal visits to schools to discuss the study face to face. If, at that time, we have not reached a participation rate of 68 percent of original schools, we would implement the higher incentive rate.

Incentives for School Coordinators

The school coordinators are an integral part of the success of PISA in schools. While we try to minimize their burden, they are our link to the school and students and are the central means we have to inform students and ensure that selected students attend the PISA session. The lower response rates observed in the control group paper-based sessions are of concern because they suggest that the school coordinators were not able or willing to work to ensure the students attended the session. For the main study, we propose providing school coordinators with $200. While we cannot conclude that the lower response rates were due to a lack of effort by the school coordinator, we think offering the higher incentive will be received positively and will remove that uncertainty. There are many reasons why student response can be low and why some school coordinators are more successful than others in getting their students to the sessions. Date changes, other school activities, parents, and sickness are elements that are out of the control of the school coordinator. We want to limit, as much as we can, the possibility of school coordinators feeling burdened or unrewarded for their time and effort.

Incentives for Students

We propose the same student incentives that were used in the field test. Students participating in the paper-based session will receive $25. Those students who are selected for, and participate in, the computer-based session will receive an additional $15.

Students participating in the assessment during non-school hours (after school or on a Saturday) will be offered $35 for the paper-based assessment, and, for the subsample selected for the computer-based component, an additional $15 for participating in the computer-based assessment. Holding an assessment during non-school hours is an accommodation offered when it is not possible to find a suitable time within school hours and is rarely used (only implemented in 11 schools in 2009). The increased incentive over and above the “normal” incentive compensates students for travel time and other activities (work, sports) that a student may miss to participate in the assessment out of hours. Incentives for students will only be provided with the explicit permission of the school principal.
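The student incentive schedule described above can be summarized as a small lookup. This is an illustrative sketch only: the dollar amounts are those stated in the text, while the constant and function names are hypothetical.

```python
# Dollar amounts as stated in the text; names are illustrative.
PAPER_IN_SCHOOL = 25      # paper-based session during school hours
PAPER_OUT_OF_SCHOOL = 35  # paper-based session after school or on a Saturday
COMPUTER_BONUS = 15       # additional amount for the computer-based session


def student_incentive(out_of_school_hours: bool, computer_session: bool) -> int:
    """Total incentive in dollars for one participating student."""
    base = PAPER_OUT_OF_SCHOOL if out_of_school_hours else PAPER_IN_SCHOOL
    return base + (COMPUTER_BONUS if computer_session else 0)


for out_of_hours in (False, True):
    for cba in (False, True):
        print(out_of_hours, cba, student_incentive(out_of_hours, cba))
```

A student assessed during school hours therefore receives $25 or $40, and a student assessed outside school hours receives $35 or $50, depending on selection for the computer-based session.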

A.10 Assurance of Confidentiality

The PISA 2012 plan for ensuring the confidentiality of the project and participants conforms with the following federal regulations and policies: the Privacy Act of 1974 (5 U.S.C. 552a), Privacy Act Regulations (34 CFR Part 5b), the Education Sciences Reform Act of 2002 (ESRA 2002; 20 U.S. Code, Section 9573), the Computer Security Act of 1987, NCES Restricted-Use Data Procedures Manual, and the NCES Statistical Standards. Procedures for handling confidential aspects of the study that will be used in PISA 2012 will change from those used in past administrations of PISA due to the collection of student contact information. Expertise in data security and confidentiality was a significant criterion in the selection of the PISA 2012 contractor.

The plan for maintaining confidentiality includes signed confidentiality agreements and notarized nondisclosure affidavits obtained from all personnel who will have access to individual identifiers (shown in Exhibit A.1). Also included in the plan is personnel training regarding the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses; controlled and protected access to computer files under the control of a single database manager; built-in safeguards concerning status monitoring and receipt control systems; and a secured and operator-manned in-house computing facility. To assure the confidentiality of student contact information collected to support the future validation study, student contact information will be collected, transmitted, and stored separately from responses to the test booklets and questionnaires. Contact information and responses to the 2012 instruments will be linked only by ID codes. Student contact information will never be made publicly available.

Letters and other materials will be sent to parents and school administrators describing the voluntary nature of PISA 2012. The materials sent will describe the study and convey the extent to which respondents and their responses will be kept confidential (copies of letters to be used, brochure text, and “Frequently Asked Questions” are included in Appendix A and Appendix B). The following statement will appear on the front cover of the questionnaires (the phrase “gather the data needed, and complete and review the information collection” will not be included on the student questionnaire):

U.S. participation in this study is sponsored by the National Center for Education Statistics (NCES), U.S. Department of Education. All information you provide may only be used for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law [Education Sciences Reform Act of 2002 (ESRA 2002), 20 U.S. Code, Section 9573].

According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless such collection displays a valid OMB control number. The valid OMB control number for this voluntary information collection is 1850-0755. The time required to complete this information collection is estimated to average 30 minutes per response, including the time to review instructions, search existing data resources, gather the data needed, and complete and review the information collection. If you have any comments concerning the accuracy of the time estimate(s) or suggestions for improving the form, please write to: U.S. Department of Education, Washington, D.C. 20202-4537. If you have comments or concerns regarding the status of your individual submission of this form, write directly to: Program for International Student Assessment (PISA), National Center for Education Statistics, U.S. Department of Education, 400 Maryland Avenue, SW, Washington, D.C. 20202.

Data files, accompanying software, and documentation for PISA 2012 will be delivered to NCES at the end of the project. No school names or addresses, or student names or addresses will be included on these files or documentation.

NCES understands the legal and ethical need to protect the privacy of the PISA respondents and has extensive experience in developing data files for release that meet the government’s requirements to protect individually identifiable data from disclosure. The contractor will conduct a thorough disclosure analysis of the PISA 2012 data when preparing the data files for use by researchers. This analysis will ensure that NCES has fully complied with the confidentiality provisions contained in PL 100-297. To protect the privacy of respondents as required by PL 100-297, schools with high disclosure risk will be identified, and a variety of masking strategies will be used to ensure that individuals may not be identified from the data files. These masking strategies include swapping data and omitting key identification variables (i.e., school name and address) from both the public- and restricted-use files (though the restricted-use file will include an NCES school ID that can be linked to other NCES databases to identify a school); omitting key identification variables such as state or ZIP Code from the public-use file; and collapsing categories or developing categories for continuous variables to retain information for analytic purposes while preserving confidentiality in public-use files.

Exhibit A.1. Affidavit of Nondisclosure

Affidavit of Nondisclosure

______________________________________________________________________

(Job Title)

______________________________________________________________________

(Date Assigned to Work with NCES Data)

______________________________________________________________________

(Organization, State or Local Agency Name)

______________________________________________________________________

(Organization or Agency Address)

____________________________________________________________

(NCES Individually Identifiable Data)

I, __________________________________ , do solemnly swear (or affirm) that I will not –

make any disclosure or publication whereby a sample unit or survey respondent (including students and schools) could be identified or the data furnished by or related to any particular person or school under these sections could be identified;

or use or reveal any individually identifiable information furnished, acquired, retrieved or assembled by me or others, under the provisions of the Education Sciences Reform Act of 2002 (20 U.S.C. § 9573 ) for any purpose other than statistical purposes specified in the NCES survey, project or contract.

___________________________________

(Signature)

[The penalty for unlawful disclosure is a fine of not more than $250,000 (under 18 U.S.C. 3571) or imprisonment for not more than five years (under 18 U.S.C. 3559), or both. The word "swear" should be stricken out when a person elects to affirm the affidavit rather than to swear to it.]

A.11 Sensitive Questions

Federal regulations governing the administration of questions that might be viewed by some as “sensitive” because of their requirement for personal or private information, require (a) clear documentation of the need for such information as it relates to the primary purpose of the study, (b) provisions to respondents that clearly inform them of the voluntary nature of participation in the study, and (c) assurances of confidential treatment of responses.

PISA 2012 does not include questions usually considered to be of a highly sensitive nature, such as items concerning religion, substance abuse, or sexual activity.

Several items in the background questionnaires may be considered sensitive by some of the respondents, even though they do not fall into any of the Protection of Pupil Rights Amendment (PPRA) domains. These items relate to the socioeconomic context of the school, parents’ education and occupation, family possessions, and students’ belongings. Research indicates that the constructs these items represent are strongly correlated with academic achievement, and they have been used in the four previous cycles of PISA (2000, 2003, 2006, and 2009). Therefore, the items are essential for the anticipated analyses and to retain consistency in planned comparisons with the international data.

A.12 Estimates of Burden

The burden to respondents for the PISA 2012 main study is calculated for the estimated time required of students and school staff (school administrators and school coordinators) to complete recruitment, pre-assessment, and assessment activities (see Table A.5). Table A.5 presents burden for the national sample of 168 schools (240 schools will be sampled and we expect 65% of eligible schools to participate), for up to two states that may participate in PISA using their own funding (Florida has committed to do so and another state has indicated keen interest), and for the combined national and state samples. Assessment activities include the time involved to complete student and school administrator questionnaires, as well as the time for assessment directions. The time required for students to respond to the assessment (cognitive items) portion of the study, and associated directions, is shown in gray font and is not included in the totals. Recruitment and pre-assessment activities include the time involved to decide to participate, complete class and student listing forms, distribute parent consent materials, distribute the school questionnaire, and arrange assessment space.

For the national sample, the average response burden for 6,800 students is based on a 30-minute questionnaire and, for a subsample of 1,344 of these students, 5 minutes of financial literacy background items. At an estimated $7.25 per hour (the 2009 Federal minimum wage) cost to students, the dollar cost of the main study for students is estimated at $25,375.

The average response burden of 4,616 hours for schools in the national sample is based on a 30-minute school questionnaire for 168 school administrators; 90 minutes for 240 school administrators during the recruitment process (all sampled schools); and an average of 4 hours for 168 school coordinators to coordinate logistics with the data collection contractor, supply a list of eligible students, and encourage students to attend the session.

Table A.5. Burden estimates for PISA 2012 main study

NOTES: Total student burden does not include time for cognitive assessment and its associated instructions.
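The arithmetic behind the national-sample burden figures can be checked with a short calculation that uses only the respondent counts, durations, and wage rate stated in the paragraphs above. This is a sketch under stated assumptions: the per-row breakdown of Table A.5 is not reproduced here, so the grouping into components and all variable names are illustrative.

```python
MIN_WAGE = 7.25  # dollars per hour, the 2009 Federal minimum wage cited in the text


def burden_hours(respondents: int, minutes: float) -> float:
    """Total burden in hours for a group of respondents."""
    return respondents * minutes / 60


# Student components, from the stated counts and durations.
student_questionnaire = burden_hours(6800, 30)
financial_literacy_items = burden_hours(1344, 5)
student_hours = student_questionnaire + financial_literacy_items
student_cost = student_hours * MIN_WAGE

# School staff components, from the stated counts and durations.
school_questionnaire = burden_hours(168, 30)
recruitment = burden_hours(240, 90)
coordination = burden_hours(168, 4 * 60)
staff_hours = school_questionnaire + recruitment + coordination

# Student hours implied by the stated $25,375 cost at the stated wage.
implied_student_hours = 25375 / MIN_WAGE

print(student_hours, student_cost)
print(staff_hours, implied_student_hours, staff_hours + implied_student_hours)
```

Computed this way, the stated student inputs give 3,512 hours (about $25,462), slightly above the 3,500 hours implied by the $25,375 estimate, so the published figure may rest on rounded or slightly different counts. The three school staff components sum to 1,116 hours, and 1,116 plus 3,500 equals 4,616, which suggests that the 4,616-hour figure may be the combined student and school staff total for the national sample rather than the school staff burden alone.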

A.13 Total Annual Cost Burden

Other than the burden associated with completing the PISA questionnaires and assessments (estimated above in Section A.12), the main study imposes no additional cost to respondents.

A.14 Annualized Cost to Federal Government

The total cost to the Federal Government for conducting the PISA 2012 main study as described in the current request is estimated to be $1,157,544 per year for a 3-year period for a total of $3,472,634 for the main study. This is based on the national data collection contract, valued at $6,270,425 over four years, from August 2010 to August 2014. These figures include all direct and indirect costs of the project, and are based on the United States administering the core assessments (paper and pencil mathematics, science, and reading and computer-based problem solving) and optional computer-based mathematics and reading assessments. In addition to these costs, financial literacy (not included in the total cost of the $6,270,425 contract because it is a contract option) is estimated to cost $383,158 for the main study. Thus, the total cost to the government, should all core and optional components be included in the field trial and main study, is $6,653,583. These values differ from what was submitted in the original clearance request in May 2011 because those estimates inadvertently did not include the cost for scoring, which is not reflected in the total contract values but rather is calculated on a per-student basis as an IDIQ.
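The cost totals above can be verified with a few lines of arithmetic. This is a minimal sketch using only the dollar figures stated in the paragraph; the variable names are illustrative.

```python
CONTRACT_TOTAL = 6_270_425             # national data collection contract, Aug 2010 to Aug 2014
FINANCIAL_LITERACY_OPTION = 383_158    # contract option for the main study
MAIN_STUDY_TOTAL = 3_472_634           # stated main study total
MAIN_STUDY_YEARS = 3

# Total cost if all core and optional components are included.
total_with_option = CONTRACT_TOTAL + FINANCIAL_LITERACY_OPTION

# Annualized main study cost over the 3-year period.
annualized_main_study = MAIN_STUDY_TOTAL / MAIN_STUDY_YEARS

print(f"${total_with_option:,}")
print(f"${annualized_main_study:,.2f} per year")
```

The sum reproduces the stated $6,653,583. Dividing the main study total by three gives roughly $1,157,544.67 per year, which matches the stated annual figure of $1,157,544 to within a dollar.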

Funding for the financial literacy component of the main study has not yet been secured. Department of Education staff are seeking funding but if funding is not secured prior to instrument production and student sampling, this component will not be included in the main study.

A.15 Program Changes or Adjustments

The request for OMB approval reflects an increase in burden, because the last approval was for the PISA 2012 field test, while this request is for PISA 2012 full scale data collection.

Also, with regard to content, there are some changes to PISA 2012 from the previous rounds of data collection. The main change is that the assessment will focus on mathematics literacy during this cycle. The result is that the bulk of the items will be mathematics items and that science and reading will be the secondary components. The inclusion of the computer-based mathematics, reading, and problem-solving, and paper-and-pencil based financial literacy assessments also represents significant changes. Additionally, there are minor changes in wording to some of the questionnaire items, and questions that focused on student attitudes toward science or reading now focus on attitudes toward mathematics. Another change to the student questionnaire is the use of multiple forms in the main study. While each student will still take a single 30-minute questionnaire, there will be three forms of the questionnaire with common and different content to allow PISA to collect more background information while keeping the burden on individuals at the same level as in past administrations of PISA.

A.16 Plans for Tabulation and Publication

For the main study in 2012, an analysis of the U.S. and international data will be undertaken to provide for an understanding of the U.S. national results in relation to the international results. Based on proposed analyses of the international data set by ACER, and the need for NCES to report results from the perspective of an American constituency, a plan is being prepared for the statistical analysis of the U.S. national data set as compared to the international data set. Analysis of data will include examinations of the reading, mathematics, and science literacy of U.S. students in relation to their international counterparts; and the relationships between reading, mathematics, and science literacy and student and school background variables.

All reports and publications will be coordinated with the release of information from the international organizing body. Planned publications and reports for the PISA 2012 main study include the following:

General Audience Report. This report will present information on the status of reading, mathematics, and science education among students in the United States in comparison to their international peers, written for a non-specialist, general American audience. This report will present the results of analyses in a clear and non-technical way, conveying how U.S. students compare to their international peers, and what factors, if any, may be associated with the U.S. results.

Survey Operations/Technical Report. This report will document the procedures used in the main study (e.g., sampling, recruitment, data collection, scoring, weighting, and imputation) and describe any problems encountered and the contractor’s response to them. Its primary purpose is to record the steps taken by the United States in undertaking and completing the study. The report will include an analysis of non-response bias, which will assess the presence and extent of bias due to nonresponse. As required by NCES standards, selected characteristics of responding students and schools will be compared with those of nonresponding students and schools, along dimensions for which data are available for the nonresponding units, to show whether and how the two groups differ.

Electronic versions of each publication are made available on the NCES website. Schedules for tabulation and publication of PISA 2012 results in the United States are dependent upon receiving data files from the international sponsoring organization. With this in mind, the expected data collection dates and a tentative reporting schedule are as follows:

| July 2011 | Deliver raw field test data to international sponsoring organization |
| August–September 2011 | Receive Field Trial Report from international sponsors |
| September 2011–September 2012 | Prepare for the main study phase/recruit schools |
| June/July 2012 | Summer conference for sampled schools |
| September 2012–November 2012 | Collect main study data |
| March–April 2013 | Receive final data files from international sponsors |
| August–December 2013 | Produce General Audience Report, Survey Operations/Technical Report for the United States |

A.17 Display OMB Expiration Date

The OMB expiration date will be displayed on all data collection materials.

A.18 Exceptions to Certification Statement

No exceptions are requested to the "Certification for Paperwork Reduction Act Submissions" of OMB Form 83-I.

1 Some PISA participants are subnational jurisdictions (e.g., Hong Kong, China).

2 Neidorf, T.S., Binkley, M., Gattis, K., and Nohara, D. (2006). Comparing Mathematics Content in the National Assessment of Educational Progress (NAEP), Trends in International Mathematics and Science Study (TIMSS), and Program for International Student Assessment (PISA) 2003 Assessments (NCES 2006-029). U.S. Department of Education. Washington, DC: National Center for Education Statistics.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | PREFACE |

| Author | Janice Bratcher |

| File Modified | 0000-00-00 |

| File Created | 2021-02-01 |
