NSECE Supporting Statement B_FINAL

National Survey of Early Care and Education (NSECE)

OMB: 0970-0391

Collections of Information Employing Statistical Methods

1. Respondent Universe and Respondent Selection Method

An important feature of the NSECE survey design is support for analyses of the association between the utilization of early care and education/school-age (ECE/SA) services by families and the availability of such services offered by providers. Analysis of this association is critical to the development of a better understanding of how government policy can support parental employment and promote child development. The local nature of ECE/SA usage underscores the importance of collecting and analyzing data in matched geographic areas. In order to strengthen the tie between the utilization and provision of ECE/SA services, we have devised a “provider cluster” sampling approach. The approach allows providers to be selected from a small geographic area surrounding the locations of sampled households.

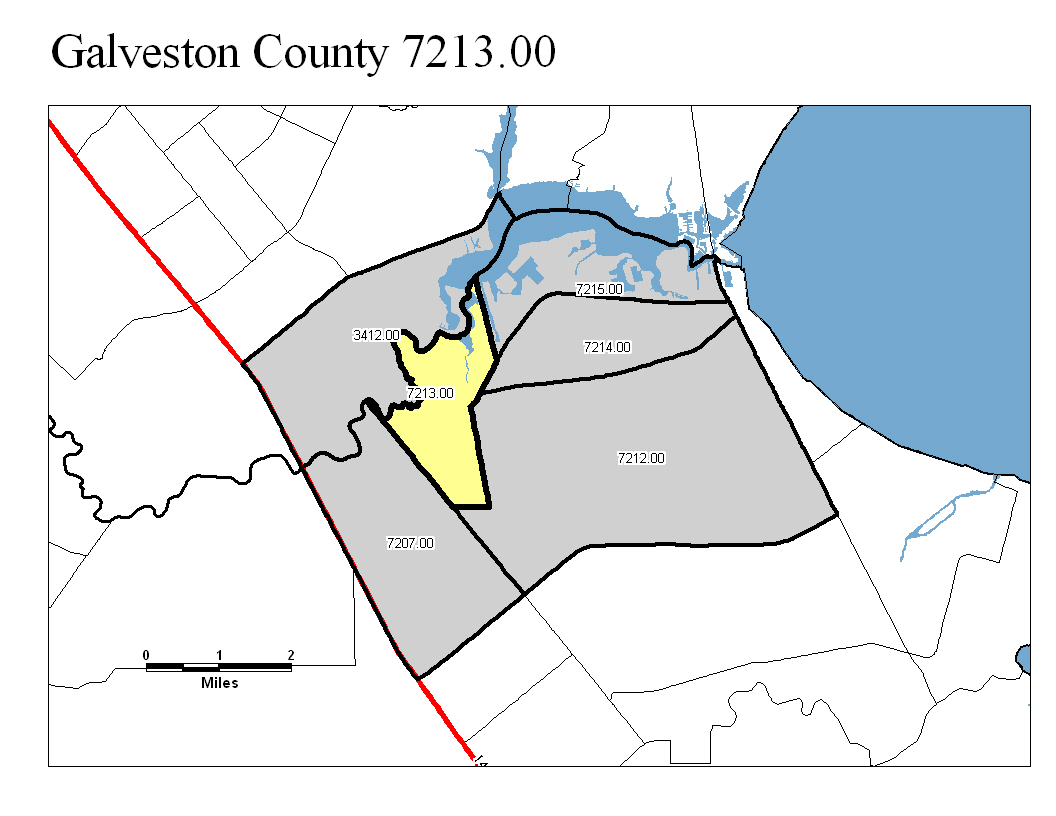

Figure 1

Shown in Figure 1 above is an example of a provider cluster in Galveston, Texas. The central yellow area represents the cluster’s core of households, while the gray shaded areas depict the remainder of the cluster. Households in the yellow core will be sampled for inclusion in the Household Survey and the Home-based Provider Survey. Formal providers, including center-based programs and regulated family day care, will be sampled from throughout the gray and yellow portions of the cluster, approximating the locations from which the central households might seek ECE/SA services. The co-location of households and providers will greatly enhance the value of the data about each. Parents’ search behaviors can be understood in the context of the choices actually available to them, while providers’ decisions about capacity and staffing can be seen as responses to their real audiences. Details on this sampling approach are provided below.

There are two types of respondents for this study: individuals living in households and ECE/SA providers. For the surveys of individuals in households, the eligible population of households is those households with children under 13 in the 50 states and the District of Columbia (DC). The eligible population of providers is those individuals and facilities who provide ECE/SA services in the 50 states and DC.

Design of the Household Survey

First Stage. At the first stage of sampling, we propose a stratified probability sample of primary sampling units (PSUs) representative of all geographic areas in the 50 states and DC. We define the PSU as a county or a group of contiguous counties. Counties are generally large enough to represent a bundle of child-care markets. Smaller counties will be linked to geographically adjacent counties until a minimum PSU size is met. We will stratify PSUs by state because, historically, policies for child care and early childhood education are set at the state level and vary widely across states. Stratification by state is likely to reduce the sampling variance of NSECE estimators because of the relative homogeneity of child care policies within states. PSUs will be allocated to each state in proportion to the number of households with age-eligible children, with a minimum of two PSUs per state to support variance estimation. A probability-proportional-to-size (pps) method will be used to select PSUs within each stratum (state). Under pps sampling, the probability of selecting a PSU is proportional to its measure of size (MOS). The MOS for pps sampling is the number of households with at least one child under age 13, obtained from the most recent 5-year data from the American Community Survey, covering the period 2005-2009. Some of the largest PSUs may be selected with probability equal to 1.0; such PSUs are typically called certainty or self-representing PSUs. The District of Columbia will be treated as a “self-representing” PSU or a stratum in its own right. Census tracts will serve as the PSUs in DC and all other designated self-representing PSUs.
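The pps selection described above can be illustrated with a minimal Python sketch. It assumes a systematic pps scheme with a random start, which is one common way to implement pps sampling; the statement does not say which pps variant will be used. The function name `pps_systematic` and the example PSU names and sizes are hypothetical.

```python
import random

def pps_systematic(units, n, seed=0):
    """Systematic pps selection of n units within one stratum (e.g., a state).

    units: list of (unit_id, mos) pairs, where mos is the measure of size.
    Returns (certainty_ids, sampled_ids).
    """
    rng = random.Random(seed)
    certainty, remaining = [], list(units)

    # Units at least as large as the sampling interval are taken with
    # certainty; repeat because removing them shrinks the interval.
    while len(certainty) < n and remaining:
        interval = sum(mos for _, mos in remaining) / (n - len(certainty))
        big = [u for u in remaining if u[1] >= interval]
        if not big:
            break
        certainty += big
        remaining = [u for u in remaining if u[1] < interval]

    sampled = []
    k = n - len(certainty)
    if k > 0 and remaining:
        interval = sum(mos for _, mos in remaining) / k
        point = rng.random() * interval  # random start in [0, interval)
        cumulative = 0.0
        for unit_id, mos in remaining:
            cumulative += mos
            # Each remaining unit is smaller than the interval, so it can
            # be hit by at most one selection point.
            if len(sampled) < k and point < cumulative:
                sampled.append(unit_id)
                point += interval
    return [u for u, _ in certainty], sampled

# Hypothetical stratum: one large PSU and five small ones, two PSUs to select.
psus = [("PSU A", 500), ("PSU B", 100), ("PSU C", 100),
        ("PSU D", 100), ("PSU E", 100), ("PSU F", 100)]
certainty_psus, sampled_psus = pps_systematic(psus, n=2)
```

In this hypothetical stratum, PSU A is as large as the sampling interval and so becomes a certainty PSU, while one of the five smaller PSUs is drawn with probability proportional to its MOS.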

Second Stage. Within the selected PSUs, we will select a sample of census tracts, or second stage units (SSUs). As in constructing PSUs, smaller tracts will be linked to adjacent tracts to maintain a minimum size. Overall, we will select about 741 SSUs with about 3 SSUs per non-certainty PSU and more per certainty PSU on a proportional basis. The measure of size is the number of households with at least one child under age 13 in each SSU, and will be determined from 5-year data from the American Community Survey for the period 2005-2009, since only the 5-year data contain information at census tract level.

Third Stage. We will use a delivery sequence file (DSF) maintained by the United States Postal Service (USPS) as the sampling frame for housing units (HUs) at the third stage of sampling. The DSF is a list of residential addresses that the mail carriers update continually. Direct mail marketers (e.g., InfoUSA, ADVO) license the list from the USPS and, in turn, NORC maintains a nationwide license for the ADVO (now Valassis) version of the DSF. NORC has conducted extensive evaluation of the quality of the DSF as a sampling frame for HUs (e.g., see O’Muircheartaigh, English, and Eckman, 2007). We have found that the list is of very good quality for non-rural areas. We will use a half-open interval procedure to include any missed HUs.

For the NSECE, we will compare the number of DSF addresses in a selected SSU to the corresponding census count. We may use a sample-and-go approach in lieu of the DSF sampling frame for rural SSUs in which the DSF count is unacceptably smaller than the census count. An interviewer will be sent into a selected SSU with a map and explicit instructions to start at a specified point and to traverse the SSU in a specific direction. The interviewer will be instructed to count housing units in order and to sample and screen every k-th unit, where k represents the sampling interval specified by the sampling statistician. If the sampled household is screened to be eligible, the interviewer will attempt to conduct the main interview with the household. This sample-and-go approach offers cost savings over the traditional custom listing approach since interviewers are required to go into the field fewer times. See Wolter and Porras (2002) for a successful application of the sample-and-go approach on American Indian/Alaska Native (AIAN) areas.

An analytic focus of the NSECE is on the low-income population, and thus the household sample oversamples low-income families. Screening on household income is not a worthwhile option because it would damage response rates and thus undermine the representativeness of the sample. Instead, we will achieve an oversample of low-income households and age-eligible children living in such households by oversampling addresses in high-density low-income tracts. Without oversampling, approximately 7,170 of 15,586 completed household interviews would be with households whose income falls below 250 percent of the federal poverty guideline (FPG).1 By oversampling addresses in tracts with high densities of households whose income falls below 250 percent of FPG, however, we would expect to complete approximately 8,728 household interviews with households whose income is below 250 percent of FPG.

Overall, we will select 89,900 HUs within the selected tracts to obtain 15,586 completed household interviews. We will use equal probability systematic sampling to select HUs within a tract. Our goal will be to make the national sample as close to self-weighting (equal probabilities of selection) as possible within each of the high- and low-density strata.
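As an illustration of equal probability systematic sampling within a tract, the following Python sketch selects every k-th address from an ordered frame after a random start. The function name and the use of a fractional interval are assumptions made for the example; the statement does not specify these details.

```python
import random

def systematic_sample(frame, n, seed=0):
    """Equal-probability systematic sample of n items from an ordered frame."""
    if not 0 < n <= len(frame):
        raise ValueError("n must be between 1 and the frame size")
    rng = random.Random(seed)
    k = len(frame) / n          # sampling interval (may be fractional)
    start = rng.random() * k    # random start in [0, k)
    picks = (int(start + i * k) for i in range(n))
    # The clamp only guards against floating-point edge cases.
    return [frame[min(p, len(frame) - 1)] for p in picks]

# Hypothetical tract with 1,000 listed addresses, 50 to be selected.
tract_addresses = [f"address-{i}" for i in range(1000)]
selected = systematic_sample(tract_addresses, n=50)
```

Because the start is random and the interval is fixed, every address in the tract has the same selection probability n/N, which is what keeps the sample close to self-weighting within a stratum.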

Table 1 documents our plan for sampling and collecting data from HUs across three modes of data collection to achieve our goals of 15,586 completed household interviews. Ultimately, we expect to screen 79,818 households (46,998 by mail and 32,820 by personal visit); identify 20,114 eligible households; and complete 15,586 household interviews (2,000 households by telephone and 13,586 by personal visit).

Table 1. Sampling Plan for Household Survey to Generate 15,586 Completed Households for Multi-Mode Design: Assumed Rates and Numbers

| Mode | Phone: Rate | Phone: Number | Mail: Rate | Mail: Number | Field: Rate | Field: Number |
|---|---|---|---|---|---|---|
| Required Number of Addresses | | 89,900 | | | | |
| To Phone Center | | | | | | |
| Drop Points (To Field for Resolution) | | | | | 5.00% | 4,495 |
| Matched Phone Numbers 1) | 46.00% | 41,354 | | | | |
| Matched to TC | 0.00% | 0 | | | | |
| | | | 95.00% | 85,405 | | |
| To Field for Resolution/Scr/Int | | | | | | 42,902 |
| To Field for Screening/Int | | | | | | 0 |
| To Field for Interview | | | | | | 9,844 |
| Resolved Residential Households | | 0 | | | | |
| Non-residential/Business at Confirmed Addresses | | 0 | | | | |
| Unresolved (To Field for Resolution) 2) | | 0 | | | | |
| Completed Screening Interviews | | 0 | | | | |
| Incomplete (To Field for Screening) | | 0 | | | | |
| Eligible Households - Census | | 0 | | | | |
| Coverage for Eligible Households | | 0 | | | | |
| Completed Household Interviews | 40.00% | 2,000 | | | | |
| Incomplete (To Field for Interview) | 60.00% | 3,000 | | | | |
| Number of Eligible Children | 1.72 | 3,440 | | | | |
| SAQs for Screening | | | | | | |
| Returned/Undeliverable (To Field for Resolution) | | | 10.80% | 9,224 | | |
| Unresolved (To Field for Resolution) | | | 34.17% | 29,183 | | |
| Completed Screening Interviews | | | 55.03% | 46,998 | | |
| Eligible Households - Census | | | 28.00% | 13,160 | | |
| Coverage for Eligible Households (To Field for Interview) | | | 90.00% | 11,844 | | |
| To Field | | | | | | |
| Resolved Residential Households | | | | | 85.00% | 36,466 |
| Known Ineligible (e.g., Vacant HUs) | | | | | 10.00% | 4,290 |
| Unresolved 3) | | | | | 5.00% | 2,145 |
| Completed Screening Interviews | | | | | 90.00% | 32,820 |
| Incomplete Screeners | | | | | 10.00% | 3,647 |
| Eligible Households - Census | | | | | 28.00% | 9,190 |
| Coverage for Eligible Households | | | | | 90.00% | 8,271 |
| Completed Household Interviews | | | | | 75.00% | 13,586 |
| Number of Eligible Children | | | | | 1.72 | 23,367 |
| Total | | | | | | |
| Required Number of Addresses | | 89,900 | | | | |
| Resolved Residential Households | 92.84% | 83,465 | | | | |
| Completed Screening Interviews | 95.63% | 79,818 | | | | |
| Eligible Households | 25.20% | 20,114 | | | | |
| Completed Household Interviews | 77.49% | 15,586 | | | | |
| Number of Eligible Children | 1.72 | 26,807 | | | | |

Note: 1) Does not include matched multiple units; 2) Includes unconfirmed/wrong addresses; 3) Including P.O. Boxes that did not return a mail screener.
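The stage-to-stage rates in the Total panel of Table 1 can be recomputed from the counts alone. The short Python sketch below does this as a consistency check; the dictionary keys and the `stage_rates` helper are hypothetical names chosen for the example, while the counts are taken from Table 1 and the accompanying text.

```python
# Counts from the "Total" panel of Table 1, in funnel order.
TOTALS = {
    "addresses": 89_900,
    "resolved_residential": 83_465,
    "screened": 79_818,
    "eligible": 20_114,
    "completed": 15_586,
}
# Mode breakdowns quoted in the text preceding Table 1.
SCREENED_BY_MODE = {"mail": 46_998, "field": 32_820}
COMPLETED_BY_MODE = {"phone": 2_000, "field": 13_586}

def stage_rates(totals):
    """Return each stage's count as a fraction of the preceding stage."""
    stages = list(totals.items())
    return {
        f"{name} / {prev_name}": count / prev_count
        for (prev_name, prev_count), (name, count) in zip(stages, stages[1:])
    }

rates = stage_rates(TOTALS)
overall_yield = TOTALS["completed"] / TOTALS["addresses"]

for label, value in rates.items():
    print(f"{label}: {value:.2%}")
print(f"completed / addresses: {overall_yield:.2%}")
```

The recomputed rates agree with the 92.84, 95.63, 25.20, and 77.49 percent figures shown in the table, and the mode breakdowns sum to the screening and interview totals. Overall, roughly 17 percent of sampled addresses are expected to yield a completed household interview.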

We propose use of multiple modes of interview (mail, telephone, and personal visit) for the hybrid sample of HUs. We will reverse match the 89,900 selected addresses to telephone numbers and conduct interviews by telephone to the maximum extent possible. Certain addresses (drop points) will never be suitable for telephone or mail screening and we will forward them directly to personal-visit screening. We will begin data collection by mailing a screening questionnaire to all addresses with the exception of the drop points. Selected addresses that have screened eligible (i.e., at least one child under 13 years of age) and have a high-quality telephone match will be worked by telephone interviewers initially. Other cases will be designated for follow-up with a personal visit to obtain the main interview for households. In recent years, many households have “cut the cord” and become cell-phone-only (CPO). In the operations with the household sample, all phoneless and CPO households will, by definition, be designated as unmatched to a landline telephone number, and will be transferred to personal-visit interviewing operations.

Design of the Home-Based Provider Survey

Two types of respondents will complete the Home-Based Provider Questionnaire. Licensed, license-exempt, and other home-based providers who appear on state licensing lists or other lists of registered providers will be sampled for the Home-Based Provider Survey. The selection of ‘listable’ providers is described below.

Additionally, informal home-based providers, including those known as Family, Friend, Neighbor or Nanny providers, will be drawn from the sample of 89,900 household addresses. The Household Screener will determine the presence of one or more informal care providers within the household. If one such provider is identified, we will administer the Home-Based Provider questionnaire to the provider. In the event that more than one is identified, we will randomly select one provider and administer the survey to him/her.

Design of the Survey of Formal Providers (Center-Based and Home-Based from Administrative Lists)

Provider Sampling Frame. The sampling of ‘listable’ providers begins with the construction of a provider sampling frame. Administrative lists will be used to build a sample frame for providers appearing on licensing and regulatory lists.

| Stratum | Method of Frame Construction |
|---|---|
| Community-based Child Care Centers | Administrative lists such as state-based licensing, license-exempt, registration and other lists. |
| Family Child Care | Administrative lists such as state-based licensing, license-exempt, registration and other lists. Includes home-based Early Head Start. |
| Head Start/Pre-K | List from Office of Head Start; State-level public pre-kindergarten lists. |
| School-Age Care | Screening of K-6 schools (as listed in the Quality Education Data file) for presence of school-based school-age programs. Use of available lists from Statewide Afterschool Networks, United Way, CDBGs. Telephone contact with city parks, libraries, YMCAs, Boys and Girls Clubs. |

Based upon developmental and design work completed in advance of the NSECE, we believe that the sampling frame for providers should be virtually 100 percent complete in terms of the programs and providers in the early care and education and regulated and registered home-based strata, with somewhat lower but still nearly comprehensive coverage of school-age programs that meet the eligibility criteria for the provider survey (see below).

NORC will classify each provider location on the final sampling frame into one of the strata detailed above. Because different provider programs at the same location may be engaged in different types of ECE/SA work, each provider location will be assigned to one and only one sampling stratum among those for which it qualifies. Matching techniques based upon name, address, and other characteristics will be used to deduplicate the sampling frame within and across the strata within a provider cluster. For example, many programs will appear both on a list of Head Start providers and on a state list of licensed ECE/SA facilities; such a program should appear on the frame just once. We will use advanced matching systems based upon the Fellegi-Sunter matching algorithm, supplemented by human review.
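To make the deduplication step concrete, the following Python sketch scores a pair of frame records with Fellegi-Sunter agreement weights and routes borderline pairs to human review. It is a simplified illustration only: the comparison fields, the m- and u-probabilities, the thresholds, and the exact-match comparison rule are all assumed values for the example, not parameters of the NORC matching system.

```python
import math

# Hypothetical m- and u-probabilities per comparison field:
#   m = P(field agrees | the two records are the same provider)
#   u = P(field agrees | the two records are different providers)
FIELDS = {
    "name": (0.95, 0.01),
    "address": (0.90, 0.02),
    "phone": (0.85, 0.001),
}

def normalize(value):
    """Crude standardization so trivial differences do not block agreement."""
    return " ".join(str(value).lower().split()) if value else None

def match_weight(rec_a, rec_b, fields=FIELDS):
    """Sum of log2 likelihood-ratio weights over the comparison fields."""
    total = 0.0
    for field, (m, u) in fields.items():
        a, b = normalize(rec_a.get(field)), normalize(rec_b.get(field))
        if a is None or b is None:
            continue  # missing values contribute no evidence either way
        if a == b:
            total += math.log2(m / u)              # agreement weight
        else:
            total += math.log2((1 - m) / (1 - u))  # disagreement weight
    return total

def classify(weight, lower=0.0, upper=10.0):
    """Three-way decision; the thresholds here are illustrative assumptions."""
    if weight >= upper:
        return "match"
    if weight <= lower:
        return "non-match"
    return "clerical review"

# Hypothetical records from a Head Start list and a state licensing list.
head_start = {"name": "Sunny Days Center", "address": "12 Main St", "phone": "555-0100"}
licensing = {"name": "sunny days center", "address": "12 Main St", "phone": "555-0199"}
decision = classify(match_weight(head_start, licensing))
```

Here the name and address agree but the phone numbers differ, so the total weight falls between the two thresholds and the pair is sent to clerical review rather than being merged automatically.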

Selection of Providers from Provider Sampling Frame. The provider clusters will be used as SSUs for the survey of formal providers. We will sample providers from the frame in a specified radius around areas selected for the Household Screener. A provider program (also called a program) is a single entity that provides ECE/SA services to children at a single location. To be eligible as a provider program, the entity must meet four criteria, as follows:

1) Offer nonparental care, including early education, or supervision of children under age 13;

2) Operate on a regular schedule of at least three days per week and two hours per day;

3) Offer ECE services above and beyond ad-hoc drop-in care (excluding entities such as shopping malls and YMCA open gym programs); and

4) Offer care during the school year (excluding entities that offer only summer or holiday care).

An entity that fails one or more of these criteria is not considered to be a provider program for the purposes of the NSECE Center-Based Provider Survey.
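The four eligibility criteria can be expressed as a simple screening rule. The Python sketch below is illustrative only; the `Entity` record and its field names are hypothetical and do not correspond to actual screener items.

```python
from dataclasses import dataclass

@dataclass
class Entity:
    """Hypothetical screener record for one candidate provider program."""
    serves_children_under_13: bool
    days_per_week: int
    hours_per_day: float
    drop_in_only: bool
    operates_during_school_year: bool

def is_provider_program(entity):
    """True only if the entity meets all four eligibility criteria."""
    return (
        entity.serves_children_under_13          # nonparental care, under age 13
        and entity.days_per_week >= 3            # regular schedule: days
        and entity.hours_per_day >= 2            # regular schedule: hours
        and not entity.drop_in_only              # more than ad-hoc drop-in care
        and entity.operates_during_school_year   # not summer/holiday only
    )

after_school_club = Entity(True, 5, 3.0, False, True)
summer_camp = Entity(True, 5, 8.0, False, False)
eligible = [is_provider_program(e) for e in (after_school_club, summer_camp)]
```

In this example the after-school club qualifies, while the summer camp fails the school-year criterion and is therefore not a provider program for survey purposes.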

NORC will classify all providers on the sampling frame into one of four provider-type categories as follows:

1) Community-based child care centers: child care centers, faith-based entities, and other ECE entities listed by state, local, or tribal governments;

2) Family child care homes;

3) Head Start and pre-K entities; and

4) After-school entities.

Recognizing that different provider programs at the same provider location may be engaged in different types of ECE/SA work, the provider location will be classified into one and only one of these categories (or strata) based on the first category, in the order of 3-1-2-4, in which it is qualified. Required numbers of programs will be randomly selected from each stratum.
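The 3-1-2-4 priority rule can be sketched in a few lines of Python. The category numbers follow the list above; the function name and the input representation (a set of category numbers for which a location qualifies) are assumptions for the example.

```python
CATEGORIES = {
    1: "Community-based child care centers",
    2: "Family child care homes",
    3: "Head Start and pre-K entities",
    4: "After-school entities",
}
PRIORITY = (3, 1, 2, 4)  # order in which qualifying categories are checked

def assign_stratum(qualifying):
    """Return the first category, in priority order, for which a location qualifies."""
    for category in PRIORITY:
        if category in qualifying:
            return category
    raise ValueError("location does not qualify for any category")

# A location that qualifies as both a community-based center (1) and Head Start (3).
stratum = assign_stratum({1, 3})
stratum_name = CATEGORIES[stratum]
```

A location that qualifies as both a community-based center and a Head Start entity is therefore placed in the Head Start/pre-K stratum, since category 3 is checked first.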

Table 2 shows assumed rates and sample sizes in each stratum. We anticipate larger sample sizes for the first two strata, community-based child care centers and family child care programs, which constitute the vast majority of ECE/SA programs found on administrative lists. The larger sample sizes will support some within-stratum analyses of these programs. The other two strata, Head Start/Pre-K and after-school care, include far fewer programs. Their relative scarcity makes it difficult to increase their sample size within the provider cluster design, but their smaller proposed sample sizes will still be adequate for comparisons across strata. The fourth stratum includes school-based and community-based after-school programs, and is the stratum most affected by the four eligibility criteria we have imposed for all center-based providers in the Survey of Formal Providers. Overall, we will select 29,583 programs to obtain 18,780 completed interviews.

Table 2. Target Sample Sizes for the Surveys of Formal Providers and Classroom Staff (Workforce)

| Stratum | Released Programs | Not Obsolete or Unlocatable: Rate | Not Obsolete or Unlocatable: Number | Eligible: Rate | Eligible: Number | Completed Provider Interviews: Rate | Completed Provider Interviews: Number | Completed Classroom Staff Interviews: Rate | Completed Classroom Staff Interviews: Number |
|---|---|---|---|---|---|---|---|---|---|
| Community-based Child Care Centers | 8,965 | 95.00% | 8,517 | 100.00% | 8,517 | 73.50% | 6,260 | 75.00% | 4,695 |
| Family Child Care | 9,382 | 85.00% | 7,975 | 100.00% | 7,975 | 78.50% | 6,260 | 0 | 0 |
| Head Start/Pre-K | 4,393 | 95.00% | 4,173 | 100.00% | 4,173 | 75.00% | 3,130 | 75.00% | 2,347 |
| After-school Care | 6,843 | | 6,521 | | 4,347 | | 3,130 | 75.00% | 2,348 |
| School-Based | 6,039 | 96.00% | 5,797 | 65.00% | 3,768 | 72.00% | 2,713 | 75.00% | 2,035 |
| Non-School Based | 804 | 90.00% | 724 | 80.00% | 579 | 72.00% | 417 | 75.00% | 313 |
| Total | 29,583 | | 27,186 | | 25,012 | | 18,780 | | 9,390 |
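The provider-interview targets in Table 2 follow from applying the assumed rates in sequence to the number of released programs. The Python sketch below reproduces that arithmetic under the assumption that counts are rounded to whole programs at each stage; the names `STRATA` and `expected_completes` are hypothetical.

```python
# Released programs and assumed (locatable, eligible, completion) rates from Table 2.
# After-school Care is entered as its two components.
STRATA = {
    "Community-based Child Care Centers": (8_965, (0.95, 1.00, 0.735)),
    "Family Child Care": (9_382, (0.85, 1.00, 0.785)),
    "Head Start/Pre-K": (4_393, (0.95, 1.00, 0.75)),
    "After-school, School-Based": (6_039, (0.96, 0.65, 0.72)),
    "After-school, Non-School Based": (804, (0.90, 0.80, 0.72)),
}

def expected_completes(released, rates):
    """Apply each rate in turn, rounding to whole programs at every stage."""
    count = released
    for rate in rates:
        count = round(count * rate)
    return count

completes = {name: expected_completes(n, r) for name, (n, r) in STRATA.items()}
total_released = sum(n for n, _ in STRATA.values())
total_completes = sum(completes.values())
```

Under these assumptions the stratum results sum to the 29,583 released programs and 18,780 completed provider interviews shown in the Total row.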

Design of the Classroom Staff (Workforce) Survey

The Center-Based Provider survey includes questions about a randomly selected classroom or group within the program. The respondent is then asked to enumerate all personnel who are primarily assigned to that classroom. For the Classroom/Group Staff (Workforce) Survey, we will randomly select a classroom/group staff respondent from among those enumerated as belonging to the randomly selected classroom. The respondent will be sampled from the following staff roles: Lead Teacher, Instructor, Teacher (possibly including Director/Teacher), Assistant Teacher/Instructor, Aide. Other roles, for example, specialist personnel, are not eligible for the classroom staff survey even if they are enumerated as personnel associated with the randomly selected classroom. Questions about the teaching/caregiving workforce in home-based care are included in the Home-Based Survey and parallel the constructs that are included in the Classroom Staff (Workforce) Survey to be administered to the sampled staff in center-based programs.
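The staff selection step can be sketched as a random draw restricted to eligible roles. In the Python example below, the role labels are taken from the paragraph above, while the roster structure and function name are hypothetical.

```python
import random

# Roles eligible for the Classroom Staff (Workforce) Survey, as listed above.
# "Director/Teacher" is included because the text says Teacher may include it.
ELIGIBLE_ROLES = {
    "Lead Teacher",
    "Instructor",
    "Teacher",
    "Director/Teacher",
    "Assistant Teacher/Instructor",
    "Aide",
}

def select_staff(roster, rng):
    """Randomly pick one eligible staff member from the enumerated roster."""
    eligible = [person for person in roster if person["role"] in ELIGIBLE_ROLES]
    return rng.choice(eligible) if eligible else None

# Hypothetical roster enumerated for the randomly selected classroom.
roster = [
    {"name": "Staff 1", "role": "Lead Teacher"},
    {"name": "Staff 2", "role": "Aide"},
    {"name": "Staff 3", "role": "Speech Specialist"},  # not eligible
]
sampled = select_staff(roster, random.Random(2011))
```

Each eligible staff member in the selected classroom has the same chance of selection, and specialist personnel are excluded even though they appear on the roster.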

Analysis of Non-response Bias

As part of the final NSECE data preparation and analysis, NORC will conduct a nonresponse bias analysis to further evaluate the impact of nonresponse across the household and provider samples and on responses to the different surveys across modes. The nonresponse bias analysis will examine unit nonresponse across the different surveys by mode, address nonresponse bias overall, and describe the weights constructed for estimation. Finally, the analysis will include an assessment of nonresponse bias in the NSECE program, including specific types of analyses, if any, that might be affected by nonresponse. A full annotated outline for the proposed nonresponse analysis is included in Appendix B.

2. Design and Procedures for the Information Collection

The NSECE data collection effort involves six questionnaires: Household Screener, Household Questionnaire, Home-Based Provider Questionnaire, Center-based Provider Screener, Center-Based Provider Questionnaire, and Classroom Staff (Workforce) Questionnaire. Two underlying principles influence the data collection approach for all questionnaires administered within the NSECE.

First, we use a multi-mode approach that attempts to complete interviews in the least-cost mode possible, resorting to higher-cost modes to address non-response in earlier steps. The multi-mode approach has two primary benefits: it saves costs by exploiting lower-cost modes, and it improves response rates by offering respondents a range of participation options that better accommodate respondent preferences for interviewing timing, mode, contact with interviewers, and other data collection factors.

Second, the nature of the NSECE questionnaires themselves constrains the data collection period somewhat. A central feature of the Household Questionnaire is the prior-week’s child care schedule, which captures non-parental care for all age-eligible children in the week prior to the interview, supplemented with the work, school, and training schedules of all adults in the household caring for the age-eligible children (including parents). So that these data can capture non-parental care practices, it is desirable for the data collection period to avoid extended periods when alternative non-parental care arrangements may be in use, for example, the end-of-December vacations and summer breaks. The constraint is not as severe for the timing of interviews with Center-Based Providers or their classroom staff (workforce) members, but they too are typically inaccessible during major vacation periods, and many programs such as Head Starts and school-based school-age programs may close altogether during the summer. For this reason, we confine the data collection period for the Household Questionnaire to the 17 weeks between winter vacations and the start of summer sessions around the middle of May. In order to reduce the burden on field interviewers during that period, we will offer self-administered web options for the Center-Based Provider and Home-Based Provider Surveys beginning in November 2011. The questionnaires will provide guidance for respondents on how to record their care schedules from the weeks of November 20 – 26 and December 25-31.

In combining these two principles, any given case is slated for only two data collection approaches, with the latter phase involving assignment to a field interviewer. The former phase may involve self-administration (for example, by web or paper-and-pencil) or calling in a centralized telephone effort. The latter, field effort, includes variation in mode as well, encouraging case completion by telephone as well as in-person for the household samples, and prompting for web completion as well as telephone or in-person completion for the formal (center-based and listed home-based) provider samples.

We discuss our procedures for administration of each questionnaire in turn:

Household Screener

The Household Screener will: 1) identify eligible respondents to complete the Household Questionnaire, and 2) identify eligible respondents for the Home-Based Provider Questionnaire. Eligibility criteria for these surveys are as follows:

Household Questionnaire criteria: Households where a child under age 13 usually lives. The adult most knowledgeable about the youngest such child will be sought for interview.

Home-Based Provider Questionnaire criteria: Someone in the household age 18 or older regularly provides care in a home-based setting to a child age 13 or under who is not in the custody of the caregiver, and that person does not appear on administrative lists of regulated and registered home-based providers.

As discussed above, 89,900 households will be sampled for the Household Screener. Almost all selected addresses will be sent a letter along with a brief self-administered paper-and-pencil mail screener in October 2011. This mailing will be followed, one week later, by a postcard reminder. After another two weeks, we will send the first nonresponse follow-up mailing to households that have not yet returned the mail Household Screener. This will include another letter with different text and the mail Household Screener. A second nonresponse mailing will be sent three weeks later, which will again include a letter and the mail Household Screener.

All nonrespondents to the mail screener will be sent to interviewers to be worked by telephone or in-person for Household Screener completion. Completed mail screeners will be data entered, and eligible households will be assigned to interviewers who will attempt to complete the Household and/or Home-based Provider Interviews beginning in January 2012. Eligible households with only one child will be reserved for CATI interviewing along with any other cases for which telephone interviewing would not be overly burdensome. Other cases will be worked in-person by field interviewers beginning in January. The flowchart on the following page illustrates these data collection steps.

Household Surveys Flowchart 1

Household Questionnaire and Home-Based Provider Questionnaire (from Household Screener)

The Household Questionnaire collects information on a variety of topics including household characteristics (such as household composition and income), parents’ employment (employment schedule, employment history, and so on), the utilization of early care and education services (care schedules, care payments and subsidy, attitudes towards various types of care and caregiver, etc.) and search for non-parental care. The respondent is an adult in the household who is knowledgeable about the ECE/SA activities of the youngest child in the household. Based on preliminary timing tests, we project that this interview will take just over 30 minutes. To ensure that we do not exceed our burden request, we have estimated an average of 45 minutes per completed interview. One key feature of the Household Questionnaire is a full week’s schedule of all non-parental care (including early education and elementary school attendance) for all age-eligible children in the household, and all employment, schooling, and training activities of all parents or regular caregivers in the household.

The Household Screener may indicate that a household is eligible for one or both of the surveys. Interviewers will attempt to conduct the interview when eligible respondents (for the Household Questionnaire and/or the Home-Based Provider Questionnaire) are identified. If the household is eligible for both surveys, the Household Questionnaire will be administered first. Once that is complete, the interviewer will request to speak to the household member who is eligible to complete the Home-Based Provider Questionnaire (if that is not the same person as the Household survey respondent). If respondents are unable to complete the interview at that time, they will be asked to complete the interview either by telephone or in-person at a later date.

Home-Based Provider Questionnaire

The Home-Based Provider Questionnaire covers some of the same topics as the center-based provider questionnaire described below. Additional topics include the household composition of the provider, questions trying to understand the proportion of household income coming from home-based care, and an option to collect a full roster of children cared for in the prior week if the count of such children is small.

Respondents to this questionnaire may come from the household sample or the provider sampling frame built from administrative lists. Respondents from the household sample will be fielded in close parallel to the Household Questionnaire (that is, beginning with mail screening, then proceeding to field for telephone or in-person work as needed). One exception applies to home-based providers who are identified through mail screening and who do not reside in a household with another home-based provider or children under age 13. These eligible respondents will be invited to complete a web questionnaire (through a series of three mailings) before they are sent to the field for follow-up.

Home-based providers drawn from the provider sampling frame will be fielded in parallel with the center-based providers as described below (that is, beginning with a web survey invitation, moving to the field for prompting efforts, then ultimately eligible for telephone or in-person completion by a field interviewer).

Center-Based Provider Screener

As mentioned earlier, we propose that a single physical location or “establishment” be the ultimate sampling unit for the Center-based Provider Survey. A given physical location may have one or more ECE/SA programs in operation within its walls. One or two ECE/SA providers operating at the sampled location will be randomly selected for interview. This way, every location, every provider, and every program in the targeted population will have a known probability of selection. For this approach to work, a screener will be administered to center-based providers that will both confirm and check the eligibility of provider programs located at the sampled addresses as shown on the sampling frame. The questionnaire also screens for the presence of additional eligible providers that are not covered by the sampling frame.

In October, selected field interviewers will administer this screener by telephone to “multi-site” providers in our sampling frame in order to confirm eligibility of the provider programs selected for participation. If we uncover additional eligible programs that are not covered in our sampling frame, resampling of the programs at that address will be done to determine which ones will be asked to complete the Center-Based Provider Questionnaire.

Center-Based Provider Questionnaire

The Center-Based Provider Questionnaire is designed to collect a variety of information about the provider, including structural characteristics of care, revenue sources, enrollment, and admissions and marketing. One feature of the questionnaire is a section collecting information about a randomly selected classroom, including characteristics of all instructional staff associated with that classroom.

In early November, sampled locations will be sent the first mailing, containing an advance letter that explains the purpose of the study, the reason for their selection, the Web survey URL, a login ID, and a password. One week later a Thank You/Reminder postcard will be sent, again requesting their participation if they have not yet completed the survey. The third and final mailing will follow two weeks later, informing respondents that a field interviewer will be contacting them in the near future to complete the interview in a different mode. Field interviewers will be assigned nonrespondents to the web survey in late November to prompt these cases to complete the survey and answer any questions they might have. In January field interviewers will offer alternative ways to complete the survey, through in-person interviewing or over the telephone. The flow chart on the following page shows these data collection steps.

Formal Provider Surveys Flowchart 1

Classroom Staff (Workforce) Questionnaire

The Classroom Staff (Workforce) Questionnaire collects information from classroom-assigned instructional staff working in center-based programs. Items cover: personal characteristics (demographic, employment-related, education/training); classroom activities (teacher-led/child-led; active/passive); attitudes and orientation toward caregiving (perception of teacher role, parent-teacher relationships, job satisfaction); and work climate (provider program management, opportunities for collaboration and innovation, support for professional development).

The Classroom Staff (Workforce) Questionnaire respondent will be an individual assigned to a classroom within a center-based provider that has completed the Center-Based Provider Questionnaire. This questionnaire will be available for completion by web, by hard-copy self-administration, or by CATI or CAPI with a field interviewer. Upon completion of a Center-Based Provider Interview, the standard automated handling of the interview will create a case for a randomly selected classroom/group-assigned workforce member for the Classroom Staff (Workforce) Survey. The automated questionnaire program will identify the sampled staff member to the field interviewer immediately upon completion of the interview with the director, so that the field interviewer can alert the director that an additional interview is requested.

If the Center-Based Provider Questionnaire was completed online, the sampled classroom/group staff member will be contacted by mail and asked to complete the web version of the questionnaire. Following the procedures for the Center-Based Provider Questionnaire, members of the classroom staff (workforce) sample will receive up to three mailings (sent to the address of the provider) to solicit participation. Sample members who have not completed the survey after the three rounds of mailing will be contacted by telephone by a field interviewer.

If the Center-Based Provider Interview was completed in person, the field interviewer will attempt to contact the sampled staff member at that time. Sampled staff members who are not immediately available when the director’s questionnaire is administered will be invited to complete the web version of the questionnaire and will receive the same three mailings described above before the case is assigned to a field interviewer for prompting.

3. Maximizing Response Rates

Several issues in the NSECE data collection make it challenging to achieve high response rates. The data collection period is short, and the rate of eligible households is relatively low for the Household Survey and very low for Home-Based Provider Respondents identified through the Household Screener. New phone technologies make reaching potential respondents difficult, and concerns about privacy make them less willing to participate. For center-based providers, concerns include reluctance to disclose competitive information such as staff qualifications and prices charged to parents, as well as burden from various regulatory reporting requirements, site visits and inspections, and other government-supported data collections.

The NSECE project’s approach to maximizing response rates will emphasize reducing the perceived costs of participation to sampled individuals, and increasing the perceived benefits of doing so. The mixed-mode data collection approach offers respondents a range of methods and therefore locations and times for participating in the survey. Clear communication of confidentiality and privacy protections should further reduce the perceived costs of participation.

To increase perceived benefits, the project can draw on the fact that its subject matter is likely to be highly salient to all sampled respondents and providers. Below are some steps that will be taken prior to the start of main data collection to facilitate respondent cooperation.

Compelling contact materials: in coordination with principal investigators for each survey and project staff, ACF will approve contact materials that foster a successful first encounter with the respondent by communicating the importance of the study for each respondent group and anticipating concerns likely to prevent participation.

Strategic interviewer trainings: because the first few seconds of each call or in-person visit are crucial, NORC conducts innovative interviewer trainings designed to produce effective interviewers equipped with skills and information to build rapport with potential respondents and avert refusals.

Incentive plan: during the field test, NORC tested a number of incentive plans to find one that increased participation in the study while providing the best value for the government. The forthcoming findings strongly suggest that incentives are important for securing cooperation and completing the interview.

During the data collection period, additional steps will be taken to maximize response rates. Data collection progress will be monitored using automated cost and production mechanisms that track progress against sample and oversample targets. Where progress falls short, the problem will be diagnosed and a plan developed to reverse the trend.

ACF understands the broad impact that nonresponse can have on a data collection effort, including the potential for lengthening a field period, lowering response rates, and introducing possible bias in the resulting data. The first line of defense against nonresponse is refusal aversion. During interviewer training, much effort is made to prepare interviewers with both general and project-specific techniques to successfully address respondent concerns. However, despite thorough training, some respondents will refuse to participate. Therefore, NSECE project procedures feature additional techniques geared toward completing the necessary number of interviews and achieving high response rates.

Refusal aversion and conversion techniques are introduced and practiced in training sessions so that, by the time they start interviewing, interviewers are well versed in NSECE-related facts and in the household and provider sample frame methodology and can successfully address respondent concerns. Throughout the training process, interviewers are taught that they have a relatively short time in which to gain a respondent’s cooperation and that the process of averting refusals begins as soon as contact is made with a potential respondent. Although the individual answering the telephone or door may not be the person who ultimately completes the household screener or detailed interview, the way he or she is treated can directly affect the willingness of other household members to participate and provide quality data.

4. Testing of Questionnaire Items

The instruments for the NSECE were developed during the Design Phase preceding the project’s implementation. The twenty-eight-month Design Phase included a substantial review of the literature, an extensive compendium of measures from ECE/SA-related surveys, multiple rounds of cognitive interviews per respondent type, and a feasibility test in which all questionnaires were administered with eligible samples. The feasibility test involved samples of approximately 1,200 households and 120 providers in two selected sites in two states. In addition, an NSECE field test ran from February through May 2011, in which instruments were fielded using the modes and technologies planned for the main study. The field test samples involved 2,500 household addresses and 630 providers in six selected sites across three states.

The Classroom Staff (Workforce) Survey was introduced into the design in fall 2010, but the items in this questionnaire come primarily from other widely used surveys. We conducted cognitive interviews on this questionnaire in winter 2011 and included the instrument in the NSECE field test.

5. Statistical Consultant

Dr. Kirk Wolter

Executive Vice President,

NORC at the University of Chicago

55 East Monroe Street

Chicago, IL 60603

(312) 759-4000

NORC at the University of Chicago developed the sample design and will also conduct the proposed data collection.

REFERENCES

O’Muircheartaigh, C., E. English, and S. Eckman. (2007). Predicting the Relative Quality of Alternative Sampling Frames. Paper presented at the Joint Statistical Meetings, Salt Lake City, UT.

Wolter, K.M. and J. Porras. (2002). Census 2000 Partnership and Marketing Program Evaluation. Technical report prepared for the U.S. Bureau of the Census.

1 http://aspe.hhs.gov/poverty/09poverty.shtml

| File Type | application/msword |
| Author | connelly-jill |
| Last Modified By | Department of Health and Human Services |
| File Modified | 2011-08-16 |
| File Created | 2011-08-16 |
