OMB Itemized Responses Final 11.05.12

Laura Bush 21st Century Librarian Grant Program Evaluation

OMB: 3137-0086

We understand that you do not intend to select a probability sample, and that any interpretations of the resulting data are confined to the sample selected which may not represent your target population and therefore, are exploratory in nature and are not meant for policy recommendation purposes. Rather, we understand that you will select a purposeful sample. Please modify the language in the Statements to reflect that you do employ a sampling strategy and include additional information on how, within sites, participants will be recruited. There is no description of who will be telephoned for the interview, and how each will be selected.

The study is designed to inform the future directions of the LB21 program. This includes examining identifiers of past effective grants, a better understanding of funding needs (e.g., ideal time frame), and best practices and lessons learned that can be considered for future programmatic emphasis. In addition, the study seeks to ascertain whether and how LIS training under the LB21 grant program has sustained benefits for LIS programs and partner organizations in addressing critical needs of the LIS profession.

To address the study’s intent, the individuals selected to be interviewed are the principal investigators (PIs) or project managers identified in the IMLS project grant database for each LB21 grant project. A member of the evaluation team contacted this person to verify that s/he was indeed the PI or program manager. If this person reported that someone else was the principal investigator or program manager, contact information was obtained for that other person. When the PI was no longer with the institution, the contracted evaluator asked for updated contact information. If this information was not available, the contracted evaluator searched online for contact information for the PI. If the contractor was still unable to establish contact with the PI, a senior member of the contracted evaluator’s team with long standing in the LIS field began contacting the administration at the grantee’s organization to identify who should be contacted for an interview about the project grant. If the contractor team cannot establish telephone communication with the PI, the project will be dropped from the case study analysis (at the time of submission, there are approximately 10 projects for which the contracted evaluator is still attempting to establish telephone contact with the PI). Copies of the protocols used by IMLS to verify contact information for interviews are attached to the resubmission in the package of PRA materials. (This information is summarized in the response to Part B, Item 2.)

Regarding the sampling strategy:

The selected sampling strategy was developed based on the structure of the LB21 grant program. This program has six grant categories that address substantially distinct Library and Information Science (LIS) training and recruitment needs. The categories are as follows, with the total number of project grant awards in the time interval covered by the evaluation listed in parentheses:

Support of master’s students through LIS academic departments (82).

Support of PhD students through LIS academic departments (21).

LIS continuing education through LIS academic departments and other non-profit entities (27).

Support for early career LIS faculty (13).

Support for improving institutional capacity of LIS academic departments (17).

Support for research on LIS professions (10).

The characteristics of the projects funded by the program differ substantially across the program categories mentioned above, which would make it imprudent to treat all project grant awards within the LB21 program as if they were in a unitary group. The evaluation instead is designed to identify differences and similarities within subsets of grant program categories and, where broader thematic comparisons are possible, across the six program categories. One broad theme that will be compared across grant program categories concerns the recruitment of diverse populations for training. The LB21 program guidelines across all programs (except research) asked grantees to address the need for greater diversity in the library profession. Through discussions with IMLS program staff over time and our review of final project reports, it is clear that the extent to which grantees were able to address diversity varied widely within and across the five grant program categories. The lack of diversity in the LIS profession is an issue of broad concern within the sector and one that will need to be dealt with through a variety of mechanisms. This is part of the reason why IMLS is interested in exploring this issue across many different grant program types. As such, the evaluation will focus on learning more about grantees’ outreach and recruitment processes, understanding how the concept of diversity was applied in practice, and identifying what challenges grantees may have faced along the way.

In order to learn more about similarities and differences within and across grant program categories, the research design provides for explicit comparison at two levels: (1) grant program categories; and (2) grant program category sub-sets. (See Part B, Statistical Methods, General Background.)

Exhibit 1. Levels of Analysis

| Level 1 – Grant Program Category | Level 2 – Grant Program Category Subset |
| --- | --- |
| Masters Students | Masters Students Diversity |
| | Masters Students Non-Diversity |
| PhD Students | PhD Students Diversity |
| | PhD Students Non-Diversity |
| Continuing Education | Continuing Education Diversity |
| | Continuing Education Non-Diversity |
| Institutional Capacity | Institutional Capacity Diversity |
| | Institutional Capacity Non-Diversity |
| Early Career Faculty | Early Career Faculty Diversity |
| | Early Career Faculty Non-Diversity |
| Research | |

The language in the Statements has been clarified to show that this evaluation adopts purposeful sampling (see Section B – General Background and Item 1 for respondent universe and selection methods to be used). Due to the variability within and across programs, the design identifies more cases than is typical in comparative analysis in order to capture variations across the six program categories and between the program grant category subsets. To allow for robust comparative analysis, a proportional case size of 75 percent of the grants per grant type was selected. The results of this case selection strategy are identified below (Exhibit 2). IMLS believes that this design will help streamline collection and reduce respondent burden while still providing sufficient cases for comparative analysis that addresses different aspects of implementation. For instance, one of the major research issues for this evaluation concerns diversity. As seen in Exhibit 2, this issue affects project grants in only five of the six grant program categories. Further, the specific ways in which diversity shapes the projects vary across these five grant program categories. As a result, the questions posed for grant projects screened for diversity are not universal across these five grant program categories. Similar patterns emerge with almost all of the major research questions. (See Part B, Section 1.)

Exhibit 2. Cases in Grant Program Categories and Grant Program Category Subsets

| Grant Program Category | Subset | Universe | Cases for Study |
| --- | --- | --- | --- |
| Masters | Diversity | 55 | 42 |
| | Non-Diversity | 27 | 21 |
| PhD | Diversity | 8 | 6 |
| | Non-Diversity | 13 | 10 |
| Continuing Education | Diversity | 7 | 7 |
| | Non-Diversity | 20 | 15 |
| Institutional Capacity | Diversity | 7 | 6 |
| | Non-Diversity | 10 | 8 |
| Early Career Faculty | Diversity | 3 | 3 |
| | Non-Diversity | 10 | 9 |
| Research | | 10 | 8 |
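As a cross-check on Exhibit 2, the counts can be tallied with a short script. The following is an illustrative sketch only, not part of the evaluation's tooling: the dictionary layout and function name are assumptions made for the example, and the numbers are transcribed from the exhibit.

```python
# Universe and selected-case counts transcribed from Exhibit 2.
# Keys are (grant program category, subset); Research has no subsets.
EXHIBIT_2 = {
    ("Masters", "Diversity"): (55, 42),
    ("Masters", "Non-Diversity"): (27, 21),
    ("PhD", "Diversity"): (8, 6),
    ("PhD", "Non-Diversity"): (13, 10),
    ("Continuing Education", "Diversity"): (7, 7),
    ("Continuing Education", "Non-Diversity"): (20, 15),
    ("Institutional Capacity", "Diversity"): (7, 6),
    ("Institutional Capacity", "Non-Diversity"): (10, 8),
    ("Early Career Faculty", "Diversity"): (3, 3),
    ("Early Career Faculty", "Non-Diversity"): (10, 9),
    ("Research", None): (10, 8),
}


def selection_shares(table):
    """Return {key: cases / universe} for every row of the table."""
    return {key: cases / universe for key, (universe, cases) in table.items()}


total_universe = sum(universe for universe, _ in EXHIBIT_2.values())
total_cases = sum(cases for _, cases in EXHIBIT_2.values())
shares = selection_shares(EXHIBIT_2)

for (category, subset), share in shares.items():
    label = category if subset is None else f"{category} / {subset}"
    print(f"{label}: {share:.0%}")
print(f"Overall: {total_cases} of {total_universe} ({total_cases / total_universe:.1%})")
```

Run against the exhibit, the totals come to 170 grants in the universe and 135 cases for study, and every subset's share is at least 75 percent.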

The following approach was employed to identify the grants to use within each grant type in doing the sampling. The first step was to identify the primary research goals. The primary goal of the research is to determine the approaches used by the more successful grant projects (best practices and lessons learned). Two secondary goals are to emphasize larger programs (since they have a greater monetary risk) and more recently employed practices (since these presumably will be more germane to the types of project grants that will be considered for future funding). To address these goals, cases were first removed from consideration using this initial criterion: 1) projects ranked with a value of 3 or below (using a 5-point ranking scale), based on an assessment of the project grants’ quality and richness from an overall analysis of each final project grant report, were excluded. Afterwards, if projects still needed to be removed from any group, they were removed by following these two rules: 2) all else equal, larger grants were favored; and 3) all else equal, more recently awarded grants were favored. (See Part B, Section 1.)
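The selection rules described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the contractor's actual procedure: the `Grant` fields and the `select_cases` function are hypothetical, and the response does not say how the two "all else equal" rules are ordered, so the sketch assumes award size is considered before award year.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    grant_id: str       # hypothetical identifier
    quality_rank: int   # 1-5 rank from the review of the final report
    award_amount: int   # award amount in dollars
    award_year: int


def select_cases(grants, target):
    """Pick up to `target` cases from one grant program category subset."""
    # Criterion 1: drop projects ranked 3 or below on the 5-point scale.
    eligible = [g for g in grants if g.quality_rank > 3]
    # Rules 2 and 3 (ordering is an assumption): larger awards first,
    # then more recently awarded grants.
    eligible.sort(key=lambda g: (g.award_amount, g.award_year), reverse=True)
    return eligible[:target]


# Made-up records, purely for illustration.
example = [
    Grant("A", 5, 500_000, 2008),
    Grant("B", 3, 900_000, 2009),
    Grant("C", 4, 500_000, 2010),
    Grant("D", 4, 750_000, 2006),
]
selected = select_cases(example, target=2)
print([g.grant_id for g in selected])
```

The sketch does not address what happens when fewer eligible projects remain than the target number of cases; the response does not describe that situation.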

The burden worksheet attached as an auxiliary document (rather than revising Supporting Statement A) does not indicate the expected number of participant type in each category. Please provide the number of librarians and professors anticipated to participate so this worksheet may explain the cost estimates more clearly.

IMLS has made changes to the worksheet and adjusted Section A.12 accordingly to distinguish the two groups of professionals who will be participating in the interviews (professors and librarians), broken out across the six program categories. See the revised burden worksheet and Exhibit 3 (below). Each phone interview will take no more than 60 minutes. We anticipate respondents may need up to 30 minutes for scheduling and preparation, depending on the accessibility of program information (e.g., number of graduates), yielding a total burden of up to 90 minutes per respondent.

Exhibit 3.

| Grant Type | # of Professors | # of Librarians | Total |
| --- | --- | --- | --- |
| Masters | 35 | 28 | 63 |
| PhD | 16 | 0 | 16 |
| Research | 9 | 1 | 10 |
| Early Career | 10 | 0 | 10 |
| Institutional Capacity | 11 | 3 | 14 |
| Continuing Education | 7 | 15 | 22 |
| Total | 88 | 47 | 135 |
| Anticipated Hours per Participant Group | 132 hours for professors | 70.5 hours for librarians | 202 hours |
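The burden figures in Exhibit 3 follow from the respondent counts and the 90-minute upper bound per respondent. The sketch below reproduces that arithmetic; the variable and function names are assumptions made for the example, and the counts are transcribed from the exhibit.

```python
# Respondent counts transcribed from Exhibit 3: (professors, librarians).
RESPONDENTS = {
    "Masters": (35, 28),
    "PhD": (16, 0),
    "Research": (9, 1),
    "Early Career": (10, 0),
    "Institutional Capacity": (11, 3),
    "Continuing Education": (7, 15),
}

INTERVIEW_MINUTES = 60  # upper bound for the phone interview
PREP_MINUTES = 30       # upper bound for scheduling and preparation


def burden_hours(n_respondents):
    """Maximum burden in hours for a group of respondents."""
    return n_respondents * (INTERVIEW_MINUTES + PREP_MINUTES) / 60


professors = sum(p for p, _ in RESPONDENTS.values())
librarians = sum(l for _, l in RESPONDENTS.values())

print(f"Professors: {professors} respondents, {burden_hours(professors)} hours")
print(f"Librarians: {librarians} respondents, {burden_hours(librarians)} hours")
print(f"Combined: {burden_hours(professors + librarians)} hours")
```

The group totals match the exhibit (132 hours and 70.5 hours). Their sum works out to 202.5 hours, which the exhibit lists as 202 hours.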

The project timeline seems unrealistic. Even though the dates have passed, just the timing. For example concluding the data collection and analysis on the same day. Can you confirm this timeline is doable on your end?

The previous timeline was extremely ambitious and based on a contract period which has since been extended. In accordance with the extended contract, the project timeline has been updated to allow additional time for several of the tasks. We are confident that we have the staff and resources to meet these deadlines. The updated timeline is summarized in Exhibit 4 and has been modified in Part A, 16.

Exhibit 4.

| Action | Timeline |
| --- | --- |
| Interview data collection | Weeks 1-4 |
| Data analysis: interview coding, triangulation of archival and interview data | Weeks 2-6 |
| Data cleaning and database development | Weeks 2-6 |
| Assemble draft report | Weeks 6-9 |
| Submit draft report to Advisory Committee | Week 10 |
| Incorporate feedback | Week 12 |
| Submit draft report to IMLS | Week 13 |
| Incorporate comments | Week 18 |
| Submit final report to IMLS | Week 20 |

Can you explain more how the data will be taken from the interviews and analyzed?

Can you clarify what type of data will be collected from archival data and past grant submissions?

The sources for data collection and analysis for this evaluation combine archived final project grant reports and interview data from grantees to construct a single base of evidence for each grant. Because these two sources of data are combined as part of the comparative analysis, we are addressing OMB’s questions 4 and 7 as one item. These responses also are incorporated into modifications made in Part B, Section 2. The approach to data collection and analysis is designed to support a qualitative comparative analysis and reduce respondent burden. The intent is to develop rich enough information to enable comparative assessments within each program grant category and across categories on select themes. The approach also enables comparisons of cases across program grant categories where there are thematic similarities, as in the case of diversity and curricular innovation. Additional comparisons will be made based on emerging patterns and where applicable.

Archival Data Collection and Analysis

The archival data includes final project grantee reports and project grant summaries which were posted on IMLS’s website. It does not include any other documents pertaining to past grant submissions. The collection and analysis of these archived final project reports preceded any data collection and analysis of interviews.

The objectives of the analysis are as follows:

Assessment of how well each report answered the research questions of the evaluation

Documentation of the following whenever present: nature of the grant activities; type of program participants and recruiting methods; outcomes of the grant; lessons learned and challenges as perceived by grantees

Identification of the goals of the grant program and whether they were reached

Assessment of whether the report demonstrated that the goals of the grant program were in line with the mission, goals and objectives of IMLS, including whether and how the grant aligned with or achieved the goals of IMLS and the LB21 program.

Contact information for grantees and beneficiaries, when provided.

In conducting the archival research, a contracted evaluator reviewed each final project grant report and identified any narrative that corresponded to a given operational question pertaining to the first through fourth objectives listed above. This information was then coded and ranked in an ordinal variable which characterized the comprehensiveness and richness of the report’s content pertaining to the themes of interest in this study. The coding scheme was developed by the contracted evaluator in consultation with IMLS. Initial and periodic tests of inter-rater reliability will continue to be conducted to ensure consistency throughout the analysis process.

The final project reports also contained descriptive statistics for profiling project grants, such as number of program participants, information on grant year and grant award amount. This information has been separately organized and will be used to offer program summaries and potentially will be used in the comparative analyses within and across groups.

The archived codes and the rankings helped inform the design of the interview instruments and consequently reduced respondent burden while simultaneously increasing the richness of information for comparative analysis. They specifically identified areas where questions need not be included in the interview instruments. They also identified areas where questions were needed for further verification and/or clarification.

Interview Data Collection and Analysis

Prior to the interview, each interviewer will conduct a careful review of the archival data, which will inform the interview and result in a more concise and efficient phone interview. This will include taking the coded data from the archival analysis and transferring it into a case study database that will be used for the analysis. Following the interview, the interviewer will use the transcript to write up a 1-2 page grantee profile as part of the analysis. This profile will include a basic overview of the grant project as well as a section addressing each research question. In addition, interviewers will enter key points into the case profile database: thematic descriptions of grantees’ qualitative responses, provided as summary text from the interviews and addressing each research question.

Each section of narrative in the grantee profile, and key points contained in the case study profile database will be coded by a separate group of investigators that are part of the contracted evaluator’s group to minimize the potential for erroneous coding, as the coding system is complex. This “analysis team” will continue to use and refine the coding schema developed during the archival analysis. Initial and periodic tests of inter-rater reliability will be conducted to ensure consistency throughout the analysis process. This will be done by having the coders code the same portion of text and comparing the codes for consistency. Any points of contention will be discussed and agreed upon.
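The consistency check described above (two coders code the same portion of text and the codes are compared) can be sketched as follows. The response does not name a specific reliability statistic, so the use of simple percent agreement and Cohen's kappa here is an assumption for illustration, as are the function names and the sample codes assigned by the two hypothetical coders.

```python
from collections import Counter


def percent_agreement(codes_a, codes_b):
    """Share of passages to which both coders assigned the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same non-empty set of passages")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)


def cohens_kappa(codes_a, codes_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(codes_a)
    observed = percent_agreement(codes_a, codes_b)
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    # Chance agreement: probability both coders pick the same code independently.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


def disagreements(codes_a, codes_b):
    """List (passage index, coder A code, coder B code) for each mismatch."""
    return [(i, a, b) for i, (a, b) in enumerate(zip(codes_a, codes_b)) if a != b]


# Made-up codings of four passages by two coders.
coder_1 = ["G-CAP", "G-SKL", "G-SKL-DIG", "G-CAP"]
coder_2 = ["G-CAP", "G-SKL", "G-SKL-CON", "G-CAP"]

print(percent_agreement(coder_1, coder_2))
print(round(cohens_kappa(coder_1, coder_2), 3))
print(disagreements(coder_1, coder_2))
```

The list returned by `disagreements` corresponds to the points of contention that the coders would then discuss and resolve.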

Development of the coding schema has been iterative, beginning with the archival data analysis and proceeding to the interview data. The coding schema, as it currently stands, is based on the analysis of project final reports and emphasizes the following issues: project goals, outcomes, methods/strategies employed in the project, factors for success, and future endeavors. If information for each of these issues was present, the archival analysis provided for more detailed coding for each issue. The coding scheme is shown below in Exhibit 5. The coding for this analysis has been iterative and inductive and was updated based on interview responses and ongoing internal conversations with the evaluation team, in collaboration with IMLS.

Exhibit 5. Coding Schema.

| Level of Coding | Subject Area | Code | Explanation |
| --- | --- | --- | --- |
| Level 1 | Goal | G | Goals of the project |
| Level 2 | | G-CAP | Goals of increasing library capacity to better serve communities |
| Level 3 | | G-CAP-POP | Increasing library capacity by serving a particular population |
| Level 4 | | G-CAP-POP-KID | Increasing library capacity by serving children and youth |
| Level 4 | | G-CAP-POP-SEN | Increasing library capacity by serving aging adults and senior citizens |
| Level 2 | | G-SKL | Goals of building skills of librarians and archivists in targeted areas |
| Level 3 | | G-SKL-CON | Building skills of librarians and archivists related to Conservation/Preservation Management Training |
| Level 3 | | G-SKL-DIG | Building skills of librarians and archivists related to Digital Skills Development |
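In the examples shown in Exhibit 5, each code extends its parent code with one hyphen-separated segment, so the coding level equals the number of segments. Assuming that convention holds for the full schema (which is not shown here), a code's level and parent codes can be derived directly from the code string. The function names below are hypothetical.

```python
def code_level(code):
    """Coding level implied by the number of hyphen-separated segments."""
    return len(code.split("-"))


def code_ancestors(code):
    """Parent codes from Level 1 down to the immediate parent."""
    parts = code.split("-")
    return ["-".join(parts[:i]) for i in range(1, len(parts))]


def rolls_up_to(code, ancestor):
    """True if `code` is `ancestor` itself or one of its descendants."""
    return code == ancestor or code.startswith(ancestor + "-")


print(code_level("G-CAP-POP-KID"))
print(code_ancestors("G-CAP-POP-KID"))
print(rolls_up_to("G-SKL-DIG", "G-SKL"))
```

A helper like `rolls_up_to` would let detailed Level 3 and Level 4 codes be aggregated to a broader level when comparisons across grant program categories call for it.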

The coded narrative data from the interviews will next be integrated with the coded data from the archival analysis for a more robust textual content analysis using a pattern matching analysis method. Archival data and interview data will be treated equally during the analysis, with a separate field used to identify the origin of the data so that specific patterns emerging from the blending of archival data with interview data can be addressed. Codes will be compared within and across program grant categories and their subsets. The approach will examine emerging themes and patterns as well as contrasts and outliers, primarily by looking for answers to each of the IMLS-developed research questions for the program grant categories and subsets.
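One way to picture the combined evidence base is as a set of coded passages, each tagged with its origin. The sketch below is an assumption-laden illustration, not the contractor's database design or the pattern matching method itself: the record layout, field names, and sample records are all hypothetical. It simply counts the distinct cases in which each code appears, per grant program category, with or without splitting by data origin.

```python
from collections import defaultdict

# Hypothetical coded passages; "source" is the field recording data origin.
records = [
    {"case": "case-01", "category": "Masters", "source": "archival", "code": "G-SKL-DIG"},
    {"case": "case-01", "category": "Masters", "source": "interview", "code": "G-SKL-DIG"},
    {"case": "case-02", "category": "Masters", "source": "interview", "code": "G-SKL-DIG"},
    {"case": "case-03", "category": "PhD", "source": "archival", "code": "G-CAP-POP-KID"},
]


def cases_per_code(records, by_source=False):
    """Count distinct cases per (category, code), optionally split by source."""
    seen = defaultdict(set)
    for r in records:
        key = (r["category"], r["code"])
        if by_source:
            key += (r["source"],)
        seen[key].add(r["case"])
    return {key: len(cases) for key, cases in seen.items()}


combined = cases_per_code(records)
split = cases_per_code(records, by_source=True)
print(combined)
print(split)
```

Counting distinct cases rather than passages keeps a grant that appears in both the archival and interview data from being counted twice in the combined view.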

There is very little discussion of how the data will be analyzed, reported, and presented beyond “reports” and 135 case studies. Please clarify if one main report will be produced, or several reports for each case study.

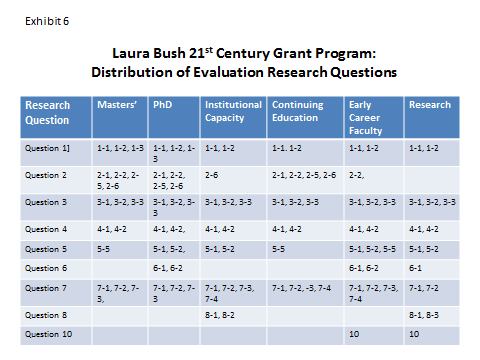

Because the research project is a comparative analysis of grant projects, the findings will be analyzed within each of the six project grant categories and, for certain cross-cutting themes, across project grant categories’ subsets. As was mentioned above, there are slightly different research objectives within each grant type, which warrant separate instrumentation and analysis. Exhibit 6 illustrates this by showing the extent to which certain research questions are unique to a selected project grant category or overlap into other project grant categories (justifying cross-group comparisons).

As discussed in the response to the prior question, the coded data will be analyzed for content using pattern matching. Codes will be compared within and across program grant categories and their subsets. The approach will examine emerging themes and patterns as well as contrasts and outliers, primarily by looking for answers to each of the IMLS-developed research questions for the program grant categories and subsets.

Coding will be done of the interview transcripts and final grant reports, with the text source for each code annotated by a group of investigators from the contracted evaluator. Members of the coding team have been intimately involved with the LB21 program analysis project and are thus familiar with the LB21 program and the analysis objectives. Initial and periodic tests of inter-rater reliability will be conducted to ensure consistency throughout the analysis process. This will be done by having coders code the same portion of text and comparing the codes for consistency. Any points of contention will be discussed and agreed upon.

There will be one main report broken into sections framed around the cross-group comparisons. We anticipate the final report will include the following sections: executive summary; introduction to and brief history of the LB21 program and evaluation study goals; methodology; one chapter for each of the six project grant categories, with sub-sections for further delineation to project grant subsets where diversity has been identified as an explicit objective (the chapters will each include a description for each IMLS grant subcategory as well as the findings from the corresponding grant cases); comparison findings across all grant types for those research questions pertaining to overlapping project grant categories; conclusions (with one section to address each of the six major research questions); recommendations; and appendices.

The fleet of protocols includes many overlapping questions, and each theme elicits similar information from the varied programs. Why not streamline the protocols so there are in essence three: diversity, innovation, research? Such streamlining might make the interviewer’s job easier and facilitate analyzing 135 interviews for producing 135 case studies (A,p.4). That would also rely on knowing and stating the project’s research questions.

Because the nature of the project grants substantially differs across the program categories, it would be imprudent to treat all project grant awards as if they were in a single group with the same programmatic goals and objectives. The evaluation instead is designed to assess the extent of similarities and differences within and, in the case of curriculum innovation and diversity, across five of the six program categories. While diversity and innovation are indeed explicit emphases within five of these six program categories, we wanted to explore these issues separately within each category, as our preliminary review of administrative data suggests that they may operate in substantially different ways depending upon the type of educational program in question. The questions in the interview protocols map to specific research questions, but utilize a semi-structured design and are often tailored in their language to the specific project grant category and/or project grant category emphasis. After numerous internal discussions with the team and IMLS, the decision was made to create 11 distinct interview protocols. The first ten protocols correspond to each of the two subsets in the five grant program categories in which diversity has been identified as an explicit objective. The eleventh interview protocol was developed for the sixth grant program category, which does not have diversity as an explicit objective. The 11 protocols ensure the interviews provide reasonably rich information for understanding each grant category as a unique group and for analysis across similarly structured programs that happen to fall in other grant categories. For example, for three grant program categories – Masters students projects (both diversity and innovation subsets), PhD students’ projects (both diversity and innovation subsets), and continuing education (Innovation subset only) – scholarships were identified as an important research question. As such, specific scholarship modules were added to these protocols for those programs that indicated funds were used for scholarships.

Each section of the protocols, e.g., use of funding, sustainability of programs, should list the estimated time to respond, to help interviewers stay on track and prioritize as the interview becomes pressed for time.

The interview protocols are semi-structured interviews to allow for a more “natural” discussion. Depending upon the information provided by respondents, some sections may take longer than others. However, we have updated the protocols to estimate average response times for each section. An internal analysis of time estimates by question had been conducted previously. These estimates have now been incorporated into all protocols, yielding a maximum of 60 minutes per interview (this includes 5 minutes for the introductory material, up to 50 minutes for the main questions, and 5 minutes for the final question and concluding section). This was done to further mitigate the chances of an interview extending beyond 60 minutes. In addition, interviewers have been trained on strategies for ensuring all research questions are asked within the 60 minute timeframe. The average time required by grantee will vary and we anticipate that many interviews will not take the full 60 minutes. Based on our question-level time estimates, we anticipate that interviews will range between 40 and 60 minutes, depending on the type of grant project being discussed (which dictates the number of questions being asked by the interviewer), the complexity of the grant projects, the level of detail provided by the respondents, and individual differences in conversation flow. (See updated protocols provided as attachments.)

Question #1 (diversity theme) “IF A DEFINITION OF DIVERSITY WAS PROVIDED OR IMPLIED IN ARCHIVAL DATA, ASK” …. “is this how you defined diversity for this grant project?” >> Is this question intended to confirm known information or trap a participant if the answer does not match the answer in the archival data?

This question, and others in the protocol like it, is designed to reduce respondent burden by incorporating known information from the archival research as opposed to asking for information that has already been collected elsewhere. The language used is not to “trap” the respondent, but rather to jog his/her memory and open the dialog for the fullest understanding of the grant.

If respondents provide a conflicting response, the interviewers are trained to work with them to clarify the discrepancy and understand why it is occurring. For example, it could be a difference between a formal definition that was used in reporting and a more informal definition used in practice, or it could be the result of a definition that evolved over time based on the needs of the program. The interviewers have access to the final grant reports, and the interviewees will be made aware of this before and during the interview. In addition, the interviewees are assured that their current or future funding through IMLS is not dependent on their responses. Discrepancies in definitions from formal reporting to actual practice are important information for IMLS to be aware of and may inform their future reporting guidelines. If conflicting information between the archival data and the interview responses cannot be resolved through the interview conversation, both responses will be noted in the final report, along with a discussion of the importance of noting the discrepancy.

A.1. Circumstances Making the Collection of Information Necessary:

10. A purpose of the study is to provide an “informing direction for IMLS” – what does that mean?

The study is designed to inform the future directions of the LB21 program. This includes examining identifiers of past effective grants, a better understanding of funding needs (e.g., ideal time frame), and best practices and lessons learned that can be considered for future programmatic emphasis. In addition, the study seeks to ascertain whether and how LIS training under the LB21 grant program has sustained benefits for LIS programs and partner organizations in addressing critical needs of the LIS profession.

11. We understand that the LB21 grant program evaluation is to describe success stories in order to serve as exemplars for current or future programs. However, as such, the grants in the study will not portray the full spectrum of grants which IMLS funds (A,p.1). Perhaps, rephrase ‘full spectrum’ to recognize that the study is focusing on only 135 cases to interview.

We have rephrased to clarify that we are referring to the “full spectrum of currently funded LB21 grant types,” versus all grantees. All currently funded LB21 grant types are represented in our sample. This modification has been made to Part A, p.1.

A.3. Use of Improved Information Technology and Burden Reduction:

12. The first paragraph states that no information technology will be used for collection. Can you clarify? If there is no information technology in use, why include an extensive discussion of secure data handling.

We have removed the sentence “no information technology will be used for collection” in the Part A form and clarified that information technology will be used to store and secure data. IMLS’s contractors will employ electronic methods in keeping information secure while handling and storing data that has been collected. While the use of technology is not directly linked to the collection of data, it is necessary to ensure proper storage and exchange of the data once it is collected.

A.4. Efforts to Identify Duplication and Use of Similar Information:

13. Will there be an effort to use or align questions from the preliminary study of Master’s and Doctoral level grant programs to this new evaluation?

The preliminary study referenced previously has been removed from this section. This was erroneously reported as a study when it actually was a summary of program outputs written by IMLS program grant administrative staff. This evaluation will be the first full investigation of the LB21 program.

A.6. Consequences of Collecting the Information Less Frequently:

14. Any plans for follow-up questions if necessary?

There is no plan to follow up with grantees beyond the scope of this study. In addition, we will not follow up with respondents beyond the 60-minute phone interview. To avoid the need for follow-up questions, interviewers have been trained to carry out a thorough examination of both the final grant report and the archival data analysis in preparation for the interview. They will then use the information gleaned from these two sources to produce preliminary responses to the six research questions that will be addressed in the grantee profile and the final report. This highlights areas where additional clarification may be needed and often brings to light questions that should be addressed in the interview. Following the interview protocol will also help to assure the information gathered is robust enough to produce meaningful findings. By asking similar questions of all respondents within each grant type and paying careful attention to questions that arise based on the existing data, we will be able to gather useful and meaningful data during the interviews, which will be supported by the archival research data as well. Interviewers will have weekly internal communications to identify places where additional information may need to be added into the protocols. We will also be holding meetings between the interviewers and the evaluation team to identify when we have hit information saturation on some questions, which can then be de-emphasized during interviews with future respondents in order to further lessen the burden on the respondents. These meetings are also to ensure that the interviews are providing the necessary data to produce a meaningful analysis.

A.8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency:

15. Why was the person named “Barbie Keiser” mentioned so specifically?

We apologize for the confusion regarding Ms. Keiser. The section describing her role and qualifications was inadvertently left off the previous submission. Barbie Keiser was mentioned by name because she is an expert in the field and is the principal investigator on the project. Ms. Keiser has thirty years of experience in the library profession (MSLS, Case Western Reserve University), first as a corporate information specialist and academic library director, and later as an information resources management consultant. Ms. Keiser was also the Project Manager for the IMLS study of Education and Training Opportunities Available to Preprofessional and Paraprofessional Library Support Staff from September 2001 to August 2002 (September 3, 2003). Since that time, she has worked on a wide range of national and international library research and consulting projects.

A.12. Estimates of Annualized Burden Hours and Costs:

16. Burden hours should be calculated for each group of person anticipated to be interviewed (i.e., librarians for 100 hours). Cost should also be broken down by group of person to be interviewed. These estimates do not include any opportunity for follow-up interviews.

Each phone interview will take no more than 60 minutes; we expect many interviews to take much less than that. We anticipate respondents may need up to 30 minutes for scheduling and preparation, depending on the accessibility of program information (e.g., number of graduates). As mentioned above, no follow-up interviews will be conducted. The burden statement has been recalculated to include the breakdown by librarians versus professors. See the attached burden estimate worksheet. A breakdown of this burden is also shown in Exhibit 1.
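As an illustration only, the per-group burden arithmetic described above can be sketched as follows. The 60-minute and 30-minute figures are the upper bounds stated in this response; the respondent counts are hypothetical placeholders and are not the figures in the attached burden estimate worksheet or Exhibit 1.

```python
from dataclasses import dataclass

# Upper bounds stated in the response (minutes per respondent).
INTERVIEW_MINUTES = 60
PREP_MINUTES = 30


@dataclass
class Group:
    name: str
    respondents: int  # HYPOTHETICAL count, for illustration only


def burden_hours(group: Group) -> float:
    """Maximum burden in hours for one respondent group."""
    minutes_per_respondent = INTERVIEW_MINUTES + PREP_MINUTES
    return group.respondents * minutes_per_respondent / 60


# Hypothetical group sizes; substitute the worksheet values.
groups = [Group("librarians", 40), Group("professors", 60)]

burden_by_group = {g.name: burden_hours(g) for g in groups}
total_burden = sum(burden_by_group.values())

for name, hours in burden_by_group.items():
    print(f"{name}: {hours:.1f} hours")
print(f"total: {total_burden:.1f} hours")
```

Because no follow-up interviews are planned, each respondent contributes at most 1.5 hours to the total under these assumptions.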

A.16. Plans for Tabulation and Publication and Project Time Schedule:

17. The schedule suggests that data collection will conclude on the same day as data analysis concludes. How can that be?

The previous timeline was based on a contract period that has since been extended to provide the time needed to collect and analyze data. Because we will be contacting all grantees at the outset of the data collection period to request an interview, it is anticipated that data collection will be rapid at first but will slow down as the data collection period progresses. Data analysis will be an ongoing process that occurs concurrently with data collection; however, it will extend an additional week after data collection has ended. Concurrent data collection and analysis will provide sufficient time to analyze the bulk of the grantee interview data during the data collection period, with only a few interviews needing to be analyzed after the data collection period has ended. As described previously, interviewers will create a grantee profile for each grantee studied that will draw from both the interview and the archival data. This profile will be completed within five days following the interview. The analysis team will then code the data entered by the interviewer into the MS Access “Case Study Database” and combine these codes for the analysis in the final report. This process will be ongoing as the interviews are being carried out.

18. The schedule suggests that the draft report will take just 10 days from the conclusion of data collection and data analysis (same day) and the final report will be submitted 7 days later. This seems unrealistic.

As shown in Exhibit 2, the schedule has been extended, and more than two weeks have been allotted for writing the draft report, with an additional three weeks for finalizing it. Because the analysis process will be completed prior to this and writing will occur, at least in part, concurrently with the analysis, five weeks is sufficient time to produce the final report.

19. There is very little discussion of how the data will be analyzed, reported, and presented. The case study data will not be tabulated, so then what data will be presented in aggregate tables? Presumably the archival data about which very little, if any, information is provided.

The data analysis and reporting have been discussed previously in response to Questions 4 and 7. The archival data include information that lends itself to descriptive statistical analysis and helps present profiles of the grants within and across project grant categories, including information on grant year and project grant award level. Such information is critical in helping contextualize the later comparative analyses.

B.2. Describe the Procedures for the Collection of Information:

20. Will a federal employee at IMLS contact the selected cases to explain the study and solicit participation? Or will this be accomplished by the contractor?

IMLS will make the first contact with the interviewees via a mailed letter explaining the research study. This letter will alert grantees that ICF will be contacting them in the near future. Because IMLS is a familiar entity for these individuals, its letter will add legitimacy to the project, and grantees are likely to be responsive to the agency’s request. ICF will then email the interviewees, soliciting participation and requesting that they contact us to schedule their interviews.

21. Will consent forms be distributed and collected – both for the archival record data collection and the interview data collection?

As previously discussed, the archival documents for this evaluation consist exclusively of final project grant reports already collected by IMLS. Consent is not necessary for the use of such documents in this evaluation. Verbal consent will be obtained during the phone interview after respondents are informed of their privacy protections and their right not to participate. Verbal consent is used to decrease the burden on respondents by eliminating the additional contact that a written consent form would require, since the form would need to be read, signed, and returned prior to the interview. We have added verification questions to ensure that respondents understand the consent process. See the section below, taken directly from the introductory section of each of the interview protocols. The contractor will have two individuals on the line to record verbal consent to these verification questions. The consent script is as follows:

Let me briefly review some administrative information and the Privacy Act Notification.

(Statutory Authority)

IMLS is authorized to collect this information under the Museum and Library Services Act of 2010.

(Purpose and Use)

As I mentioned in my initial email contact with you, the purpose of this interview is to better understand the ways in which the projects funded by LB21 grants pursued their goals and to learn more about the outcomes of the grant-funded projects. In particular, we want to learn what project methods, components, and features were used and how effective they were in helping to achieve the goals of the project. We would also like to learn about the lasting effects of the project, including project elements or curricular changes that persisted after the grant ended, changes to policy or practice, and effects on participants. The study we are conducting will help inform the awards made to future grantees and help ensure that the LB21 grant program continues to be effective in supporting and developing the field of Library and Information Science.

(Length of the Study)

The interview will take about one hour to complete. Is now still a good time to talk?

(Voluntary Participation / Privacy Act)

Your participation is strictly voluntary, and you may choose to end the interview at any point. Information gathered during this interview will be reported using a blended case-study format. That is, we will combine the information that you provide with information obtained from interviews with grant recipients that had similar project goals. Although we will avoid using the names of specific institutions and individuals, it may be possible for institutions or individuals to be identified from other project information that is reported. Of course, the purpose of this IMLS evaluation is to improve the grant program moving forward, by gathering information from all grantees included in this study. The goal is not to pinpoint weaknesses of a particular grant. In addition, none of your responses today will affect the review of your current or future funding.

The OMB Control Number for this study is: XXXX-XXXX. The collection expires MONTH ##, 20XX.

Verification Questions:

Do you understand that your participation is voluntary? [Yes/No]

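Do you understand that while we will make every effort to protect the identity of the program and will only report data in the aggregate, there may be combinations of data that will uniquely identify you to other institutions or individuals? [Yes/No]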

Do you have any questions about this? [Yes/No]

Do you consent to continue with the interview? [Yes/No]

[INTERVIEWER AND TRANSCRIBER: Record responses to each question]

22. What multiple modes of communication will be used to schedule interviews? Will any form emails be sent to potential respondents?

Interviews will be scheduled using the following procedure:

IMLS contractor will email interviewees, soliciting participation and requesting that they make contact to schedule their interviews.

If a response from the interviewee is not received within 2 business days, the IMLS contractor will call them directly. If contact is not made, the contractor will leave a voice message for the interviewee (or a message with someone else in the organization) requesting that the interviewee call back to schedule an interview.

Phone calls will be followed within 30-60 minutes by emails providing the same information, as many of the interviewees have indicated they are best reached through email. Emails are to be sent even if the contractor is not able to leave a voice message.

This process of telephone calls followed by emails will be repeated two additional times, with a three-day wait between contact attempts.

Copies of the protocols have been attached as separate documents with this PRA resubmission.
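The scheduling procedure above can be sketched as a simple timeline calculation. This is a minimal illustration under stated assumptions, not part of the attached protocols: the function names are hypothetical, the first call is placed on the second business day after the initial email, the three-day wait is counted in calendar days, attempts that land on a weekend are moved to the following Monday, and holidays are ignored.

```python
from datetime import date, timedelta


def add_business_days(start: date, n: int) -> date:
    """Return the date n business days (Mon-Fri) after start."""
    d = start
    while n > 0:
        d += timedelta(days=1)
        if d.weekday() < 5:  # 0-4 are Monday-Friday
            n -= 1
    return d


def roll_to_weekday(d: date) -> date:
    """ASSUMPTION: move weekend dates forward to the next Monday."""
    while d.weekday() >= 5:
        d += timedelta(days=1)
    return d


def contact_schedule(initial_email: date) -> list[tuple[str, date]]:
    """Initial email, then up to three call-plus-email attempts."""
    schedule = [("initial email", initial_email)]
    # First call if no response within 2 business days.
    attempt = add_business_days(initial_email, 2)
    for i in range(3):  # one call plus two repeats
        schedule.append((f"call and follow-up email #{i + 1}", attempt))
        # Three-day wait between contact attempts (calendar days assumed).
        attempt = roll_to_weekday(attempt + timedelta(days=3))
    return schedule


# Example with an arbitrary Monday start date.
for step, when in contact_schedule(date(2024, 1, 8)):
    print(f"{when.isoformat()}  {step}")
```

Each call entry stands for the telephone attempt and the email sent 30-60 minutes afterward; the process stops as soon as the interviewee responds.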

23. If the interview protocols will be tailored with questions relevant to the particular grant type, will there be no uniform questions across programs? How will that facilitate the production of a useful report? What are the general research questions that should be answered through this study?

We start by answering the last question first. The nine sets of general research questions that should be answered through this study are as follows:

(1a) What is the range of LIS educational and training opportunities that were offered by grantees under the auspices of LB21 program grants?

(1b) How many new educational and training programs were created by the program?

(2a) Among the sampled institutions, how many students received scholarship funds? Were any parts of these scholarship programs sustained with university or private funds?

(2b) How effective were the various enhancements to the classroom activities that were provided by the grants (mentoring, internships, sponsored professional conference attendance, special student projects, etc.)?

(3) How many of the training programs were sustained after the LB21 grant funds were expended? What types of programs were sustained? What funds were used to sustain these programs?

(4a) Did these new scholarship or training programs have a substantial and lasting impact on the curriculum or administrative policies of the host program, school, or institution?

(4b) If so, how were the curricula or administrative policies affected? The evaluation should consider especially LB21 grants for Building Institutional Capacity, which usually have included scholarship funds, though this is not required.

(5a) What impact have these new programs had on the enrollment of master’s students in nationally accredited graduate library programs?

(5b) How have LIS programs leveraged LB21 dollars to increase the number of students enrolled in doctoral programs?

(6a) What substantive areas of the information science field are LB21 supported doctoral program students working in?

(6b) Are these programs that will prepare faculty to teach master’s students who will work in school, public, and academic libraries or prepare them to work as library administrators?

(7a) What is the full range of “diversity” recruitment and training opportunities that were created under the auspices of LB21 program grants?

(7b) What are the varied ways in which grant recipients have defined “diverse populations”?

(7c) Which of these programs were particularly effective in recruiting “diverse populations”?

(7d) What were the important factors for success?

(8) What are the most effective ways to track LB21 program participants over time? What is the state of the art in terms of administrative data collection for tracking LB21 program participation among grantee institutions?

(9) What has been the impact of the research funded through the LB21 program?

As this listing of research questions reveals, the research questions vary in the degree to which they are linked to one of the six program grant categories versus spread out across all program grant categories. Exhibit 6 (referenced earlier in Question 5) illustrates the break-out of research questions by program grant category using the comparative case study design.

Interview protocols have been tailored to each grant type according to its relevance to the research questions, to ensure information richness for comparisons within and across groups, as discussed in greater detail in Question 1. These variations reflect the nature of the evaluation’s comparative design. The protocols are largely uniform, reflecting areas of overlap among all program grant categories, to enable cross-group comparisons. However, the focus of the questions has been tailored to the unique circumstances of each program grant category, such as the types of educational and training opportunities made available through the various LB21 program grant categories. The inclusion of both individual and shared questions does not preclude drawing meaningful conclusions about the program as a whole. While we are limited in the ability to draw completely uniform findings due to the uniqueness of several of the program grant categories, we will be able to draw overarching conclusions, such as best practices and common themes among those grant types that are similar.

Instruments and consent materials:

24. The Paperwork Reduction Act of 1995 (44 USC §3506) and Implementing Regulations (5 CFR 1320.5) require that the authority of collection (who and under what statute), purpose, use, voluntary nature (or if a mandatory collection), and privacy offered (and under what statute; if no statute, private to the extent permitted by law), if any, be conveyed to the participant of a study, in addition to explaining the length of the study. Please ensure that the PRA burden statement, OMB control number and expiration date are also provided on all study materials viewed by the participant. If the discussion guide will not be viewed by the participant, this information can be provided in the consent script. Please send us this script.

Respondents will receive an electronic packet of information prior to the interview that will include a confirmation of the interview date and time, a list of potential discussion topics, and a copy of their final grant report. This information will have the OMB control number, expiration date, and PRA burden statement. In addition, we have amended the consent script at the beginning of all interview protocols to more clearly emphasize this information (see below):

Introduction (5 minutes)

Hello, this is <<NAME>> from <<name of contractor>> calling on behalf of the Institute of Museum and Library Services. Is this <<NAME OF POC WITH WHOM THE INTERVIEW WAS COORDINATED>>?

We are interviewing recipients of grants from the Institute’s Laura Bush 21st Century Librarian grant program (LB21 for short). The interviews are designed to learn more about grant recipients’ experiences with the LB21 grant program. I understand that your department received a Master’s Program Grant in <<YEAR>>. Is this correct?

I’d like to verify that you are the primary point-of-contact for your organization’s LB21 grant project that began in <<INSERT YEAR>>. Are you knowledgeable about the grant project that your organization completed with LB21 funds?

[IF YES: CONTINUE INTERVIEW.]

[IF YES, BUT NOT A GOOD TIME: SCHEDULE A CALL BACK INCLUDING DATE, TIME, AND PHONE NUMBER TO USE.]

[IF NO: OBTAIN CONTACT INFORMATION FOR THE GRANT PROJECT’S MAIN POINT OF CONTACT.]

Let me briefly review some administrative information and the Privacy Act Notification.

(Statutory Authority)

IMLS is authorized to collect this information under the Museum and Library Services Act of 2010.

(Purpose and Use)

As I mentioned in my initial email contact with you, the purpose of this interview is to better understand the ways in which the projects funded by LB21 grants pursued their goals and to learn more about the outcomes of the grant-funded projects. In particular, we want to learn what project methods, components, and features were used and how effective they were in helping to achieve the goals of the project. We would also like to learn about the lasting effects of the project, including project elements or curricular changes that persisted after the grant ended, changes to policy or practice, and effects on participants. The study we are conducting will help inform the awards made to future grantees and help ensure that the LB21 grant program continues to be effective in supporting and developing the field of Library and Information Science.

(Length of the Study)

The interview will take about one hour to complete. Is now still a good time to talk?

(Voluntary Participation / Privacy Act)

Your participation is strictly voluntary, and you may choose to end the interview at any point. Information gathered during this interview will be reported using a blended case-study format. That is, we will combine the information that you provide with information obtained from interviews with grant recipients that had similar project goals. Although we will avoid using the names of specific institutions and individuals, it may be possible for institutions or individuals to be identified from other project information that is reported. Of course, the purpose of this IMLS evaluation is to improve the grant program moving forward, by gathering information from all grantees included in this study. The goal is not to pinpoint weaknesses of your particular grant. In addition, none of your responses today will affect the review of your current or future funding.

The OMB Control Number for this study is: XXXX-XXXX. The collection expires MONTH ##, 20XX.

Verification Questions:

Do you understand that your participation is voluntary? [Yes/No]

Do you understand that while we will make every effort to protect the identity of the program and will only report data in the aggregate, there may be combinations of data that will uniquely identify you to other institutions or individuals? [Yes/No]

Do you have any questions about this? [Yes/No]

Do you consent to continue with the interview? [Yes/No]

[INTERVIEWER AND TRANSCRIBER: Record responses to each question]

*********************************************************************************

PHONE AND EMAIL NOTIFICATION TEMPLATES

*********************************************************************************

Hello, my name is [FIRST NAME] [LAST NAME] and I am calling on behalf of IMLS [IF PROMPTED, the Institute of Museum and Library Services], about the Laura Bush 21 Grant Program [IF PROMPTED, the LB21 program]. May I speak with [INSERT GRANTEE NAME]?

When the grantee takes the line, or the answering individual questions the purpose of the call:

[Reintroduce SELF as necessary] I’m calling because IMLS and its research contractor ICF International are hoping to talk to you [or name GRANTEE] about an ongoing study of the LB21 Grant Program. You should have received a letter from IMLS in the mail recently, explaining the purpose of the evaluation program and letting you know that we would be calling to schedule a phone interview with you. Did you receive this letter?

[IF YES]

I’m pleased to hear that. We are interested in understanding your motivations for seeking the grant funds, how you used the grant funds, and any sustained benefits the grant program has provided. The interview will take no longer than an hour and will be scheduled at your convenience. When during the next few weeks would be a good time for you to complete the interview regarding your experiences with the LB21 program?

[IF NO]

I’m sorry to hear that you did not receive that. Let me take a moment to explain the project. IMLS is conducting an evaluation of the LB21 grant program for the purposes of identifying best practices and lessons learned for the future of the grant program. As part of the evaluation being conducted by their research contractor ICF International, we will be conducting phone interviews with past recipients of the grants to learn more about their experiences with the grant program. We are interested in understanding your motivations for seeking the grant funds, how you used the grant funds, and any sustained benefits the grant program has provided. The interview will take no longer than an hour and will be scheduled at your convenience. When between [three days from now] and [END OF DATA COLLECTION] would be a good time for you to complete the interview regarding your experiences with the LB21 program?

[IF YES]

Great! We will be conducting the interviews between [three days from now] and [END OF DATA COLLECTION]. When is a good time for you?

[MATCH DATE/TIMES PROVIDED TO INTERVIEWER AND RECORDER SCHEDULES TO SCHEDULE THE INTERVIEW]

Also, I just want to take a minute to make sure we have the correct contact info for you for the summer months.

We currently have the following contact information for you from IMLS. [READ CURRENT LIST AND UPDATE AS NECESSARY]

| Field | Current List | Update/Summer Info |
| Title | TITLE | TITLE |
| Last Name | LAST NAME | LAST NAME |
| First Name | FIRST NAME | FIRST NAME |
| Institution | INSTITUTION | INSTITUTION |
| Telephone Number | PHONE | PHONE |
| Email Address | EMAIL | EMAIL |

Thanks so much for your help today. You will be interviewed by [INTERVIEWER NAME], who will send an email to you later today verifying the date and time of the interview. The email will also have a toll-free phone number for you to call in for the interview and a passcode. If you have any questions in the meantime, you may contact me at [PHONE].

Thanks once again.

[END CALL]

[IF NO]

OK. Is there anyone else familiar with the grant project whom we may be able to contact for an interview? We are looking for someone who is very familiar with the grant project from its initiation to its completion and would be able to provide information on the motivations for seeking the grant funds, how the grant funds were used, and any sustained benefits the grant program has provided.

Thank you so much. Can you please spell [his/her] name for me? [RECORD NAME]

What is the best way to reach [new contact name]?

[RECORD CONTACT INFORMATION; PROBE FOR ALL INFORMATION BELOW]

| Title | TITLE |
| Last Name | LAST NAME |
| First Name | FIRST NAME |
| Institution | INSTITUTION |
| Telephone Number | PHONE |
| Email Address | EMAIL |

Thanks so much for your help today. If you would like to verify this study or if you have any questions, you may contact [ENTER NAME] at XXX.XXX.XXXX.

Thanks once again.

[END CALL]

IF SENT TO VOICEMAIL:

Hello [CONTACT NAME], my name is [FIRST NAME] [LAST NAME] and I am calling on behalf of the Institute of Museum and Library Services, about the Laura Bush 21 Grant Program. I’m calling because IMLS and its research contractor ICF International are hoping to talk to you this summer about an ongoing study of the LB21 Grant Program and whether it was helpful. We would like to schedule a phone interview with you for some time between [three days from now] and [END OF DATA COLLECTION]. Please give me a call back to schedule a time for your interview. My number is [PHONE]. If you are not the correct person to contact regarding this data collection effort, please let me know so that I may contact the appropriate person. Thank you.

(Note: Follow up with email below within 30-60 minutes.)

IF ASKED TO LEAVE A MESSAGE WITH SOMEONE ELSE:

My name is [FIRST NAME] [LAST NAME] and I am calling on behalf of the Institute of Museum and Library Services, about the Laura Bush 21 Grant Program. I’m calling because IMLS and its research contractor ICF International are hoping to talk to [GRANTEE] this summer about an ongoing study of the LB21 Grant Program and whether it was helpful. Could you leave him/her a message to call me back to discuss the possibility of scheduling a short phone interview? My name is [NAME] and my number is [PHONE]. Thank you.

(Note: Follow up with email below within 30-60 minutes.)

FOLLOW UP EMAIL:

Dear [INTERVIEWEE],

I am writing on behalf of the Institute of Museum and Library Services (IMLS), about the Laura Bush 21 Grant (LB21) Program. IMLS and its research contractor ICF International are hoping to talk to you this summer about an ongoing study of the LB21 Grant Program. I left a voicemail for you earlier.

You should have received a letter from IMLS in the mail recently, explaining the purpose of the evaluation program and letting you know that we would be contacting you to schedule a phone interview. In case you did not receive that letter, let me take a moment to explain the project. IMLS is conducting an evaluation of the LB21 grant program for the purposes of identifying best practices and lessons learned for the future of the grant program.

As part of the evaluation being conducted by their research contractor ICF International, we will be conducting phone interviews with past recipients of the grants to learn more about their experiences with the grant program. We are interested in understanding your motivations for seeking the grant funds, how you used the grant funds, and any sustained benefits the grant program has provided. The interview will take no longer than an hour and will be scheduled at your convenience.

Please respond to this email, or call me at [PHONE] to schedule a time for your interview. We will be conducting the interviews over the next few weeks.

Thank you,

[NAME]

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Matthew Birnbaum |

| File Modified | 0000-00-00 |

| File Created | 2021-01-30 |
