
Targeted Surveillance and Biometric Studies for Enhanced Evaluation of CTGs

OMB: 0920-0977

Targeted Surveillance and Biometric Studies for Enhanced Evaluation of Community Transformation Grants

New

Supporting Statement

Part B—Collection of Information Employing Statistical Methods

July 23, 2012

Robin Soler, Ph.D.

Contracting Officer Technical Representative (COTR)

Division of Community Health

Centers for Disease Control and Prevention (CDC)

4770 Buford Hwy, N.E. MS K-45

Atlanta GA 30341

Telephone: (770) 488-5103

E-mail: [email protected]

TABLE OF CONTENTS

PART B. COLLECTION OF INFORMATION EMPLOYING STATISTICAL METHODS

B. Collection of Information Employing Statistical Methods

B1. Respondent Universe and Sampling Methods

B2. Procedures for the Collection of Information

B3. Methods to Maximize Response Rate and Deal with Nonresponse

B4. Test of Procedures or Methods to be Undertaken

B5. Individuals Consulted on Statistical Aspects or Analyzing Data

LIST OF ATTACHMENTS

Attachment Number |

|

Attachment 1A Authorizing Legislation: Public Health Service Act

Attachment 1B Authorizing Legislation: ACA Section 4201

Attachment 2 60-day Federal Register Notice

Attachment 3A IRB Approval Letter, Targeted Surveillance

Attachment 3B IRB Approval Letter, Youth and Adult Biometric Studies

Attachment 4A Strategic Directions and Examples of CDC-Recommended Evidence- and Practice-Based Strategies

Attachment 4B CTG Evaluation Plan

Attachment 4C List of CTG Awardees

Attachment 5 Other Data Sources Consulted

Attachment 6A Targeted Surveillance Consent to Participate in Research (Paper)

Attachment 6B Targeted Surveillance Consent to Participate in Research (Phone)

Attachment 6C Youth and Adult Biometric Studies: Youth Assent Form

Attachment 6D Youth and Adult Biometric Studies: Consent to Participate in Research (Adults Only)

Attachment 6E Youth and Adult Biometric Studies: Consent to Participate in Research (Adults with Children)

Attachment 7 Data Collection Flow

Attachment 8A Adult Targeted Surveillance Survey

Attachment 8B Adult Targeted Surveillance Survey – FAQ Guide

Attachment 9A Caregiver Survey

Attachment 9B Youth Survey

Attachment 10 Adult Biometric Measures Recruitment Screener (Phone/Paper)

Attachment 11A Mailed Lead Letter – Targeted Surveillance Sample

Attachment 11B Targeted Surveillance Reminder Postcard – Mail Sample

Attachment 11C Youth Survey Recruitment Screener for Parent/Guardian of Youth Ages 12-17

Attachment 11D Youth Survey Recruitment Screener for Youth Ages 12-17

Attachment 11E Caregiver Survey Recruitment Screener

Attachment 11F Adult Biometric Measures Screener (In Person)

Attachment 11G Adult Targeted Surveillance Survey Recruitment Screener

Attachment 12A Adult Biometric Measures

Attachment 12B Youth Biometric Measures (Ages 12-17)

Attachment 12C Child Biometric Measures (Ages 3-11)

Attachment 13A Accelerometry Instructions for Participants

Attachment 13B Adult Activity Diary

Attachment 13C Youth Activity Diary

Attachment 13D Accelerometry Reminder Scripts

Attachment S6A Targeted Surveillance Consent to Participate in Research (Paper) - Spanish

Attachment S6B Targeted Surveillance Consent to Participate in Research (Phone) - Spanish

Attachment S6C Youth and Adult Biometric Studies: Youth Assent Form - Spanish

Attachment S6D Youth and Adult Biometric Studies: Consent to Participate in Research (Adults Only) - Spanish

Attachment S6E Youth and Adult Biometric Studies: Consent to Participate in Research (Adults with Children) - Spanish

Attachment S8A Adult Targeted Surveillance Survey - Spanish

Attachment S8B Adult Targeted Surveillance Survey – FAQ Guide - Spanish

Attachment S9A Caregiver Survey - Spanish

Attachment S9B Youth Survey - Spanish

Attachment S10 Adult Biometric Measures Recruitment Screener (Phone/Paper) - Spanish

Attachment S11A Mailed Lead Letter – Targeted Surveillance Sample - Spanish

Attachment S11B Targeted Surveillance Reminder Postcard – Mail Sample - Spanish

Attachment S11C Youth Survey Recruitment Screener for Parent/Guardian of Youth Ages 12-17 - Spanish

Attachment S11D Youth Survey Recruitment Screener for Youth Ages 12-17 - Spanish

Attachment S11E Caregiver Survey Recruitment Screener - Spanish

Attachment S11F Adult Biometric Measures Screener (In Person) - Spanish

Attachment S11G Adult Targeted Surveillance Survey Recruitment Screener - Spanish

Attachment S12A Adult Biometric Measures - Spanish

Attachment S12B Youth Biometric Measures (Ages 12-17) - Spanish

Attachment S12C Child Biometric Measures (Ages 3-11) - Spanish

Attachment S13A Accelerometry Instructions for Participants - Spanish

Attachment S13B Adult Activity Diary - Spanish

Attachment S13C Youth Activity Diary - Spanish

Attachment S13D Accelerometry Reminder Scripts - Spanish

B. Collection of Information Employing Statistical Methods

B1. Respondent Universe and Sampling Methods

Respondent Universe

Adult Targeted Surveillance Study

Each CTG Program awardee will implement intervention activities in defined geographic areas. Ten CTG Program awardees will be selected to participate in the ATSS in Year 1 (Group A), and 10 awardees will be selected in Year 2 (Group B) based on factors such as absence of other data sources for evaluation, expected program impact, and density of rural and minority populations. A list of current awardees is provided in Attachment 4C. For each awardee, cross-sectional follow-ups will occur 2 years and 4 years after baseline data are collected (Exhibit B-1-1). One thousand completed interviews will be collected for each awardee at each measurement occasion. The universe of respondents for the ATSS is adults aged 18 and older, who understand English or Spanish (because the ATSS questionnaire will be administered in only those two languages) from geographies targeted for intervention by 20 awardees.

Exhibit B-1-1. Data Collection Schedule

| Group | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 | Year 6 |
|---|---|---|---|---|---|---|
| A (10 awardees) | X | | X | | X | |
| B (10 awardees) | | X | | X | | X |

For each awardee selected to participate in the ATSS, the contractor will use an address-based sampling (ABS) approach to select a stratified simple random sample of households in the communities targeted for interventions. The source of the ABS frame is the Computerized Delivery Sequence File (CDSF), a list of addresses that originates from the United States Postal Service. The CDSF contains nearly all (97%) addresses, P.O. boxes, and rural-route addresses. Although the CDSF also contains business addresses, only the residential portion of the file will be used for sampling purposes. Geographic information systems (GIS) technology will be used to determine longitude and latitude of each household address to allow construction of a sampling frame that will match the intervention geographies of each awardee.

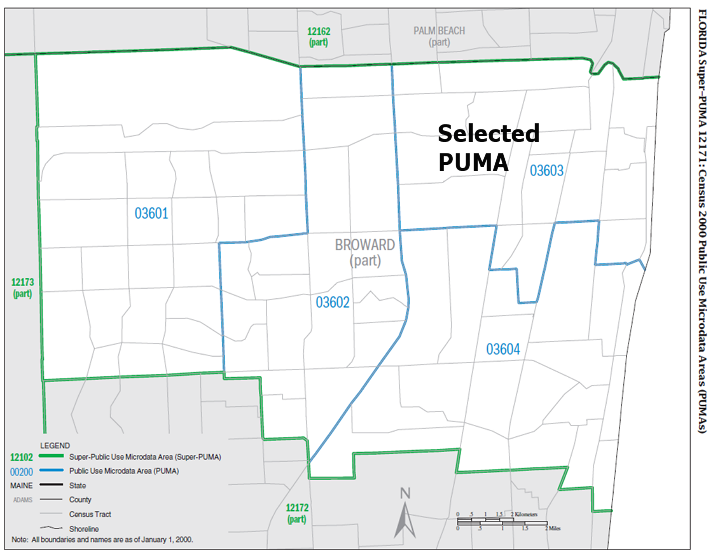

The size of the surveyed areas will vary by awardee. For county-level awardees, the areas may be neighborhoods (e.g., areas within a city bounded by specific streets) or cities; for state-level awardees, they may be whole counties. A state-level awardee such as Iowa, for example, might target interventions in the counties of Adams, Appanoose, Calhoun, Decatur, Fayette, Floyd, Jefferson, and Lee (Exhibit B-1-2). A county-level awardee such as Broward County, FL, might target the city of Fort Lauderdale for interventions. Fort Lauderdale is contained within PUMA 03603 (Exhibit B-1-3), where a PUMA (Public Use Microdata Area) is a U.S. Census Bureau–defined geographic area used for the tabulation of census microdata.

Exhibit B-1-2. Map of Survey Areas for the State of Iowa

Exhibit B-1-3. Map of Survey Areas for Broward County

Residents from rural areas, as well as African American and Hispanic individuals, will be oversampled to allow monitoring of the CTG Program intervention effects on reducing health disparities in populations that historically have exhibited a greater burden of chronic diseases. Additionally, households with children 3 to 17 years of age will be oversampled to allow assessment of children’s exposure to household-level risk factors and to facilitate recruitment into the YABS. The oversampling strategy will ensure that the sample size for each of these subpopulations is large enough (at least 4,000) to provide adequate power to detect changes in means and prevalences over time.

Youth and Adult Biometric Study

The universe of respondents for the YABS is persons aged 3 and older who understand English or Spanish, from geographies targeted for intervention by 8 of the 20 awardees participating in the ATSS (4 from Group A starting in Year 1 and 4 from Group B starting in Year 2; Exhibit B-1-1). The Caregiver Survey or Youth Survey and the Youth or Child Biometric Measures will be completed for 400 youth (ages 3–17), and the Adult Biometric Measures will be completed for 500 adults, for each awardee at each measurement occasion (i.e., Years 1, 3, and 5 for Group A; Years 2, 4, and 6 for Group B). Within each awardee, a subsample of 125 youth (ages 3–17) and 125 adults residing in the same households will be selected from the above YABS samples to provide objective measures of physical activity using accelerometers. The four awardees chosen for the YABS in Group A will be selected based on awardee plans to implement school-based nutrition and physical activity–related interventions.

Sample Selection

Adult Targeted Surveillance Study

The ATSS sampling frame will be purchased from the Marketing Systems Group (MSG). In addition to residential addresses, MSG and other vendors will append geographic and household data from government and commercial databases to the frame to facilitate sampling stratification. Household-level data will include name (match rate 85%+), telephone number (landline, match rate 60%+), and presence of children (yes/no). Although race and ethnicity are available at the household level, they can be missing for up to 25 percent of households. Therefore, for all addresses within a census block group (BG), the proportion of the population that is African American and Hispanic and the rural/urban designation will be appended using data from the 2010 Census.

To achieve the target sample size of 4,000 ATSS respondents in each of the African American, Hispanic, and rural subpopulations (across all 20 awardees selected for implementation of the ATSS), and the target of 8,000 respondents from households with children, we will subdivide the sampling frame for each awardee into strata defined by the cross-classification of the following characteristics:

Rural/urban designation

Proportion of the population that is African American (high or medium/low)

Hispanic surname (yes/no), from a list of the 650 most common Hispanic surnames

Telephone number match (yes/no)

Presence of child (yes/no)

Households from strata that include rural, high or medium African American density, Hispanic name, child presence indicator, and a telephone match will be oversampled (i.e., sampled with a higher probability) such that the target subpopulation sample sizes are achieved. Telephone matches will be oversampled because collecting data by phone yields a higher response rate than collecting data by mail and can maximize the effective sample size for a given cost. The sampling probabilities for each stratum will be monitored throughout the course of data collection and adjusted if necessary to ensure that we achieve our sample size targets.
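The oversampling described above can be illustrated with a minimal sketch. It assumes a simplified frame with one stratum label per address (the actual strata are cross-classifications of several characteristics), and the frame sizes and sampling rates shown are hypothetical values chosen only for illustration, not figures from this study.

```python
import random
from collections import defaultdict

def stratified_sample(frame, rates, seed=0):
    """Draw a simple random sample within each stratum at that stratum's rate.

    frame: list of (address_id, stratum) pairs
    rates: dict mapping stratum -> sampling fraction in (0, 1]
    Returns a list of (address_id, stratum, base_weight) tuples.
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for address_id, stratum in frame:
        by_stratum[stratum].append(address_id)

    sample = []
    for stratum, addresses in by_stratum.items():
        n_h = max(1, round(rates[stratum] * len(addresses)))
        for address_id in rng.sample(addresses, n_h):
            # Base weight is the inverse of the realized selection probability.
            sample.append((address_id, stratum, len(addresses) / n_h))
    return sample

# Hypothetical frame: 1,000 urban and 200 rural addresses.
frame = [(i, "urban") for i in range(1000)] + [(1000 + i, "rural") for i in range(200)]
# Hypothetical rates: rural addresses are sampled at five times the urban rate.
sample = stratified_sample(frame, {"urban": 0.05, "rural": 0.25})
```

Because oversampled strata carry smaller base weights, the weights still sum to the frame size; the price of oversampling is the unequal weighting discussed under Statistical Power.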

Exhibit B-1-4. Recruitment (per awardee) into the Youth and Adult Biometric Study

Youth and Adult Biometric Study

Exhibit B-1-4 depicts the strategy for recruiting YABS participants from the Targeted Surveillance Sample and the School Catchment Area Sample (both derived from the ATSS sampling frame) to obtain an adequate number of households with children between the ages of 3 and 17.

The YABS has two objectives: estimating the effect of all awardee interventions on health behaviors and health outcomes in children and adults, and estimating the independent effect of school-based interventions in youth. Thus, the YABS participants are drawn from two distinct samples of addresses on the ATSS sampling frame (Exhibit B-1-4): (1) addresses for which the presence of a child is indicated and that fall within catchment areas for schools targeted by awardees for school-based interventions (“School Catchment Area Sample”), and (2) all other addresses on the ATSS sampling frame (“Targeted Surveillance Sample”). When an adult who completes the ATSS interview has at least one age-eligible child in the home, both the respondent and one child will be invited to participate in the YABS (verbal consent from a parent/guardian will be obtained if the ATSS respondent is not the selected child’s parent/guardian). If the household has more than one eligible child (ages 3–17), youth in the middle-school-aged stratum (7th or 8th grade) will be selected with a higher probability than those in the younger or older age strata. If more than one child is eligible within an age stratum (e.g., two middle-school-aged youth reside in the household), the child with a birth date closest to the interview date will be chosen. Adult participants who complete the ATSS interview and do not have a child present in the household will also be invited to participate in the YABS.

For each awardee, a sample size of 400 adult-youth (ages 3–17) household pairs is targeted for the YABS. From the Targeted Surveillance Sample, approximately 400 of the 1,000 households per awardee that participate in the ATSS are expected to have age-eligible children, among whom 140 are expected to agree to participate in the YABS. To reach the target sample size of 400 adult-youth pairs, an additional 260 households with age-eligible youth will be recruited from the School Catchment Area Sample. From the Targeted Surveillance Sample, approximately 286 of the 1,000 households per awardee that participate in the ATSS are expected to have no children, among whom 100 are expected to agree to participate in the YABS.

From the Targeted Surveillance Sample, approximately 35 percent of the ATSS respondents are expected to agree to participate in the YABS. In the Survey of the Health of Wisconsin (SHOW), participation rates among respondents who agreed to be screened were 46 percent in 2008–2009 and 56 percent in 2010 [37].
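The per-awardee recruitment figures above follow from the 35 percent expected agreement rate. The short sketch below reproduces that arithmetic; the variable names are illustrative, and the inputs are the values stated in the preceding paragraphs.

```python
WITH_CHILD = 400              # ATSS households expected to have an age-eligible child
WITHOUT_CHILD_POOL = 286      # ATSS households without children, as stated above
YABS_AGREE_RATE = 0.35        # expected share agreeing to participate in the YABS
TARGET_PAIRS = 400            # adult-youth household pairs targeted per awardee

# Adult-youth pairs expected from the Targeted Surveillance Sample.
pairs_from_targeted = round(WITH_CHILD * YABS_AGREE_RATE)
# Remaining pairs to be recruited from the School Catchment Area Sample.
pairs_from_school = TARGET_PAIRS - pairs_from_targeted
# Adults without children expected to agree.
adults_without_children = round(WITHOUT_CHILD_POOL * YABS_AGREE_RATE)

print(pairs_from_targeted, pairs_from_school, adults_without_children)
```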

Statistical Power

Adult Targeted Surveillance Study

Exhibit B-1-5 displays the target sample size for all awardees combined, overall and by race and ethnicity, and rural or urban residence. The difference between the effective sample size and nominal sample size is the cost of unequal weighting that is a consequence of the complex sampling design—known as the unequal weighting effect (UWE). For power calculations, we have assumed UWE in the range of 1–3; however, we expect the average UWE to be approximately 2.
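The relationship between nominal and effective sample size can be sketched as follows. The source does not state how the UWE will be computed from the final weights; the sketch assumes Kish's approximation, in which the UWE equals n times the sum of squared weights divided by the squared sum of weights. The function names are illustrative.

```python
def unequal_weighting_effect(weights):
    """Kish's approximate design effect due to unequal weights (assumed here)."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2

def effective_sample_size(nominal_n, uwe):
    """Effective sample size = nominal sample size / UWE."""
    return nominal_n / uwe

# Equal weights give UWE = 1 (no loss of precision).
print(unequal_weighting_effect([2.0, 2.0, 2.0, 2.0]))
# With the expected average UWE of 2, 20,000 nominal interviews
# correspond to an effective sample size of 10,000.
print(effective_sample_size(20_000, 2))
```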

Exhibit B-1-5. Target Sample Size Across 20 Selected Awardees

| Characteristic | Nominal Sample Size | Effective Sample Size |
|---|---|---|
| Total | 20,000 | 10,000 |
| Race/ethnicity | | |
| Black/African American | 4,000 | 2,000 |
| Hispanic | 4,000 | 2,000 |
| Other | 12,000 | 6,000 |
| Rural | 4,000 | 2,000 |

Assuming an alpha level of 0.05 and UWE = 2, the power calculations show that the target sample size of approximately 1,000 respondents per awardee provides 80 percent power to detect a difference in proportions between two measurement occasions of 5 percentage points when the initial proportion is 0.05, and a difference of less than 10 percentage points in the most conservative case, where the initial proportion is 0.5 [1]. Aggregating data across awardees would provide power to detect smaller differences. For analyses of subpopulations of size 4,000 or larger (i.e., African American, Hispanic, rural), we have 80 percent power to detect a difference in proportions between two measurement occasions of less than 5 percentage points when the initial proportion is 0.5. Even smaller differences could be detected with this sample size for baseline proportions either greater or less than 0.50 (Exhibit B-1-6).

Exhibit B-1-6. Minimum Sample Size Required in an Analytic Cell to Detect a Difference in Proportions (p1 and p2) with 80 Percent Power Assuming Various Unequal Weighting Effects for a Two-Sided Test

| P1 | P2 | UWE = 1 | UWE = 2 | UWE = 3 |
|---|---|---|---|---|
| 0.05 | 0.10 | 435 | 870 | 1,305 |
| 0.05 | 0.15 | 141 | 282 | 423 |
| 0.05 | 0.20 | 71 | 142 | 213 |
| 0.10 | 0.15 | 686 | 1,372 | 2,058 |
| 0.10 | 0.20 | 199 | 398 | 597 |
| 0.10 | 0.25 | 97 | 194 | 291 |
| 0.15 | 0.20 | 906 | 1,812 | 2,718 |
| 0.15 | 0.25 | 250 | 500 | 750 |
| 0.15 | 0.30 | 121 | 242 | 363 |
| 0.20 | 0.25 | 1,094 | 2,188 | 3,282 |
| 0.20 | 0.30 | 294 | 588 | 882 |
| 0.20 | 0.35 | 138 | 276 | 414 |
| 0.25 | 0.30 | 1,251 | 2,502 | 3,753 |
| 0.25 | 0.35 | 329 | 658 | 987 |
| 0.25 | 0.40 | 152 | 304 | 456 |
| 0.30 | 0.35 | 1,377 | 2,754 | 4,131 |
| 0.30 | 0.40 | 356 | 712 | 1,068 |
| 0.30 | 0.45 | 163 | 326 | 489 |
| 0.40 | 0.45 | 1,534 | 3,068 | 4,602 |
| 0.40 | 0.50 | 388 | 776 | 1,164 |
| 0.40 | 0.55 | 173 | 346 | 519 |
| 0.50 | 0.55 | 1,565 | 3,130 | 4,695 |
| 0.50 | 0.60 | 388 | 776 | 1,164 |
| 0.50 | 0.65 | 170 | 340 | 510 |
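The source does not state the formula behind Exhibit B-1-6. As an illustration only, the sketch below assumes the usual normal-approximation sample size for comparing two independent proportions (pooled variance under the null hypothesis, unpooled under the alternative), with the simple-random-sample result rounded up and then multiplied by the UWE. Under those assumptions it returns 435, 870, and 1,305 for P1 = 0.05 and P2 = 0.10, matching the first row of the exhibit. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def min_cell_size(p1, p2, uwe=1, alpha=0.05, power=0.80):
    """Sample size per measurement occasion for a two-sided test of p1 vs. p2.

    Assumed formula (not stated in the source):
    n = [z_a * sqrt(2 * p_bar * q_bar) + z_b * sqrt(p1*q1 + p2*q2)]^2 / (p1 - p2)^2
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)   # critical value, two-sided test
    z_b = NormalDist().inv_cdf(power)           # quantile for the target power
    p_bar = (p1 + p2) / 2
    numerator = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_b * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    n_srs = math.ceil(numerator / (p1 - p2) ** 2)
    # Inflate the simple-random-sample size by the unequal weighting effect.
    return n_srs * uwe

for uwe in (1, 2, 3):
    print(uwe, min_cell_size(0.05, 0.10, uwe=uwe))
```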

Youth and Adult Biometric Study

Sample size requirements for the biometric studies were based on power calculations for detection of changes in measures of obesity and tobacco use/exposure between baseline years (Year 1 for Group A awardees; Year 2 for Group B awardees; Exhibit B-1-1) and follow-up years (3 and 5 for Group A; 4 and 6 for Group B). For example, the mean BMI estimated from 400 youth sampled from areas targeted for the CTG Program interventions for a given awardee in Year 1 will be compared to the mean BMI in the same geographic areas in Year 5. Individual youth will not be followed longitudinally; rather, changes in the mean BMI will be computed for each measurement occasion (i.e., a repeated cross-sectional design).

Statistical power is the probability of correctly rejecting a false null hypothesis (e.g., a null hypothesis of no change in average BMI within an awardee between Year 1 and Year 5). Power depends on four quantities: (1) the type I error rate, or the probability of rejecting a true null hypothesis (e.g., there is no change in average BMI between Year 1 and Year 5 within the awardee in question); (2) sample size (for all power computations we assume a sample size of 400 at baseline and 400 at the follow-up year, within each awardee); (3) the variability of the measurement under investigation (values for variance used to interpret power calculations are extracted from existing literature as described below); and (4) the effect size (i.e., the magnitude of change between baseline and the follow-up year).

For all power calculations, we assume a UWE of 2.0, which results in an effective sample size of one-half of the actual sample size (i.e., with 400 completed home examinations, incorporating the design effect yields 200 observations for power estimates). In the YABS, individual adults or youth are considered the unit of analysis; thus, changes at the level of the individual constitute the measure on which power is based. We estimate that if 400 youths per awardee complete the Youth or Child Biometric Measures form, we will have 80 percent power (two-sided type I error = 0.05) to detect a 2- or 4-year change for quantitative outcomes (e.g., BMI or cotinine level) of 0.28 standard deviation (SD) units or greater, and a change (or difference) of 14 percentage points or more in the prevalence of any binary outcome (e.g., smoking or exposure to tobacco smoke). The target sample sizes of 400 youth participants and 500 adult participants in the YABS per awardee (total sample size of 1,600 youth and 2,000 adults per year) will yield adequate power to observe subtle awardee-level impacts on childhood obesity- and tobacco-related outcomes (Exhibit B-1-7).

Exhibit B-1-7 provides additional sample size and power considerations. For example, if effective sample sizes were somewhat larger (e.g., after application of design effect, sample sizes are 260 at baseline and 260 at 2 and 4 years after initiation of the CTG Program interventions) power would be 81 percent to detect a change of at least 0.25 SD units. We consider analyses after 2 years of application of the CTG Program interventions to capture (possible) short-term changes in biometric measures or changes in behaviors related to biometric measures (e.g., whether households become smoke-free), and after 4 years of implementation when detectable changes in biometric measures are more likely to be observed.
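The detectable differences quoted above can be approximated with a short sketch. It assumes a two-sided, two-sample normal approximation with equal effective sample sizes at baseline and follow-up; the source does not state the exact method used, and the function names are illustrative. Under these assumptions, an effective sample size of 200 per occasion gives a detectable change of about 0.28 SD units, and 260 per occasion gives about 81 percent power for a change of 0.25 SD units.

```python
import math
from statistics import NormalDist

def detectable_effect_sd(n_eff, alpha=0.05, power=0.80):
    """Smallest standardized change detectable with n_eff per measurement occasion."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return z * math.sqrt(2 / n_eff)

def power_for_effect(effect_sd, n_eff, alpha=0.05):
    """Approximate power to detect a standardized change of effect_sd."""
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(effect_sd / math.sqrt(2 / n_eff) - z_a)

# 400 completed examinations with UWE = 2 give an effective n of 200.
print(round(detectable_effect_sd(200), 2))
# A binary outcome has SD = 0.5 at a prevalence of 0.5 (the most conservative case),
# so the detectable change in prevalence is half the standardized effect.
print(round(0.5 * detectable_effect_sd(200), 2))
# Power for a 0.25 SD change with an effective n of 260 per occasion.
print(round(power_for_effect(0.25, 260), 2))
```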

Power for changes in physical activity measures was computed with a different set of parameters than for the main YABS study. We estimate power based on comparisons of physical activity classifications (e.g., hours of sedentary time per day) between adults (or youth) measured prior to CTG Program implementation and adults (or youth) measured after CTG Program implementation. We estimate that if 500 adults complete accelerometry data collection at each of two time points (e.g., Year 1 and Year 3) across all 4 awardees, we will have 80 percent power to detect a change in average time spent sedentary of 0.252 standard deviation (SD) units or greater. This assumes that the effective sample size for adults (or youth) is 250 because of an unequal weighting effect (UWE) of 2.0 (nominally 500 adults and 500 youth for all 4 awardees combined) and that sampling yields equal sample sizes per group (effective n = 250 adults per time point).

Exhibit B-1-7. Power for Detectable Differences, Baseline versus Post-CTG

[Figure: two panels, “Difference = 0.25 SD” and “Difference = 0.28 SD”]

Exhibit B-1-8 provides interpretation of a change of 0.28 SDs in quantitative measurements. For example, from Kwon and colleagues [39], the average difference in abdominal circumference between adolescents with high metabolic syndrome scores and low metabolic syndrome scores was 2.4 cm. Our proposed study is powered to detect differences in this range (0.28 × SD = 2.36 cm). Skelton et al. [40] reported the prevalence of adult metabolic syndrome in a cohort of children and adolescents; the difference in prevalence between overweight and obese children was 18.9 percent, larger than our detectable difference of 14 percent. For salivary cotinine, a 0.28 SD magnitude of change is approximately 0.92 ng/mL (according to data reported by Halterman et al. [41]) or 0.67 ng/mL (according to Butz et al. [42]). These changes are smaller than reported differences between homes with total smoking bans and homes with no smoking restrictions. As reported by Butz et al. [42], the average difference (no smoking restrictions versus total smoking ban) in salivary cotinine for 6- to 10-year-old children is 4.85 ng/mL – 0.63 ng/mL = 4.22 ng/mL; we are powered to detect a difference of 0.67 ng/mL, a smaller but more realistic short-term change. The proposed study is also powered to detect a 14 percent change from baseline to post-CTG (or difference between two groups) in binary outcomes.

Exhibit B-1-8 also provides interpretation of detectable differences for accelerometry data. Healy et al. [35] reported an overall SD of 1.45 h/day of sedentary time and an average difference in sedentary time of about 1 hour between non-Hispanic Whites and Mexican Americans. Our design will permit detection of even smaller differences for group comparisons, if they exist. A difference of 0.252 SD units translates into 0.252 × 1.45 h/day = 0.37 h/day, or 22 minutes per day spent sedentary.
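The translation from SD units into measurement units is a single multiplication. The sketch below reproduces the salivary cotinine and sedentary time figures; it assumes that the reference SDs used were 3.27 ng/mL (Halterman et al., unrestricted homes), 2.4 ng/mL (Butz et al., homes without a ban), and 1.45 h/day (Healy et al., overall), which is an inference from the published values listed in Exhibit B-1-8 rather than something the text states explicitly.

```python
def detectable_change(sd_units, reference_sd):
    """Convert a detectable difference in SD units into measurement units."""
    return sd_units * reference_sd

cotinine_halterman = detectable_change(0.28, 3.27)   # ng/mL
cotinine_butz = detectable_change(0.28, 2.4)         # ng/mL
sedentary_hours = detectable_change(0.252, 1.45)     # h/day
sedentary_minutes = sedentary_hours * 60             # minutes/day

print(round(cotinine_halterman, 2), round(cotinine_butz, 2))
print(round(sedentary_hours, 2), round(sedentary_minutes))
```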

Exhibit B-1-8. Differences Detectable from CTG Interventions Translated from SD Units into Biomedically Interpretable Quantities

| Measurement | Reference | Outcome Values | Detectable Change or Difference |
|---|---|---|---|
| Body Mass Index | Cook et al. [43]; adolescents 12 to 19 years | Prevalence of adolescent metabolic syndrome. BMI 85th–<95th percentile (at risk): 6.8%; BMI ≥ 95th percentile (overweight): 28.7%; Difference = 21.9% | 14% change |
| Waist Circumference (cm) | Kwon et al. [39]; adolescents | According to Metabolic Syndrome Score. Score 4–5: Mean (SD) = 99.0 (7.1); Score 2–3: Mean (SD) = 96.6 (8.4); Difference = 2.4 | 2.36 |
| Body Mass Index | Tirosh et al. [44]; age 17 followed to adulthood (age 30) | CHD as adult (30 years) according to age 17 BMI decile. CHD risk increased 12% for BMI increase = 1 | 14% change |
| Body Mass Index | Skelton et al. [40]; children/adolescents 2 to 19 years | Prevalence of adult metabolic syndrome. BMI 95–97th percentile: 12.6%; BMI ≥ 99th percentile: 31.5%; Difference = 18.9% | 14% change |
| Salivary cotinine (ng/mL) | Halterman et al. [41]; children 3 to 10 years | According to home smoking ban. Total ban: Mean (SD) = 0.63 (0.74); Unrestricted: Mean (SD) = 2.50 (3.27); Difference = 1.87 | 0.92 |
| Salivary cotinine (ng/mL) | Butz et al. [42]; children 6 to 10 years | According to home smoking ban. Yes: Mean (SD) = 0.63 (1.0); No: Mean (SD) = 4.85 (2.4); Difference = 4.22 | 0.67 |
| Sedentary Time (h/day) | Healy et al. [35]; U.S. adults | Overall mean (SD) = 8.44 (1.45) h/day; Mean (SD) non-Hispanic White = 8.56 (1.24); Mean (SD) Mexican American = 7.54 (2.37); Difference = 1.02 h/day | 0.37 h/day (22 minutes) |
| Moderate-to-Vigorous Time (h/day) | Healy et al. [35]; U.S. adults | Overall median = 0.34 h/day; IQR = 0.15, 0.61 h/day (a 4-fold difference); 0.44/0.25 = 1.7-fold difference for male/female | Note: we are powered to detect 0.252 SD units; under a Gaussian assumption, 1 SD ≈ ¼ of the range, so we are powered to detect less than this quantity |

B2. Procedures for the Collection of Information

Adult Targeted Surveillance Study

The Adult Targeted Surveillance Survey will be conducted in English (Attachment 8A) and Spanish (Attachment S8A).

Who Collects the Data: ATSS CATI data will be collected by Greene Resources interviewers, under the supervision of RTI International’s project supervisors, in RTI’s call center located in Raleigh, North Carolina.

Where/What: The Adult Targeted Surveillance Survey will be administered biennially to a representative sample of residents living in geographic areas targeted for interventions by 20 CTG Program awardees (surveys will be conducted for 10 awardees in Years 1, 3, and 5 [Group A], and for another 10 awardees in Years 2, 4, and 6 [Group B]; see Exhibit A-1-1). A total of 10,000 selected adults will complete the survey each year (1,000 per awardee). For four of the awardees each year, the survey will be administered in approximately 371 additional households that (1) also agree to participate in the YABS; (2) have at least one child aged 3 to 17; and (3) reside in geographic areas that fall within catchment areas for schools targeted by the CTG Program awardees for school-based interventions (Exhibit B-1-4; see section B1 for a more complete description of this “School Catchment Area Sample”).

Frequency: The data collection will consist of biennial surveys in the geographic areas targeted for interventions by 20 awardees. Given the size and population of the geographic areas being surveyed, it is very unlikely that the same respondent will be surveyed more than once over the years.

Procedures: The CDSF obtained from Valassis Communications, Inc. will be used to create the address-based ATSS sampling frame. Addresses on the sample frame will be submitted to two vendors (MSG, American List Council) to match landline and cellular phone numbers in preparation for CATI administration of the survey. The match rate is expected to be 60 percent or higher based on the experience of other address-based sampling designs [45]. MSG will also append phone numbers to the frame, as well as an indicator of whether the associated surname is Hispanic. KBM will provide an indicator of the presence of a child in the household (yes/no). Two separate samples will be drawn from this frame and deduplicated: the Targeted Surveillance Sample and the School Catchment Area Sample (Exhibit B-1-4). Addresses in the Targeted Surveillance Sample will be further divided into those for which the vendors were or were not able to identify corresponding phone numbers; these groups are labeled as “Phone Append” and “No Phone Append” in Exhibit B-2-1.

Exhibits B-2-1 and B-2-2 list the number of addresses we expect to need to attempt to contact in order to meet our goals for completing ATSS interviews. These totals are preliminary estimates that will be refined as data collection progresses. We plan to release the sample in replicates and closely monitor the response rates for each category of sampled address before releasing later replicates.

First Introductory Letter With Invitation. All potential respondents from either the Targeted Surveillance Sample or the School Catchment Area Sample will be mailed a lead letter (Attachment 11A) enclosed in a windowed #10 envelope displaying relevant color logos. A Spanish version of the lead letter (Attachment S11A) will also be enclosed for households with a Spanish surname or located in a high-density Hispanic area. The contents of the letter will vary somewhat depending on the category to which the sampled address belongs. For households without a telephone number match, the consent form, paper questionnaire, and return envelopes will be sent with the lead letter. The School Catchment Area Sample lead letter will request participation in both the ATSS and the YABS if the household contains a child aged 3–17. For both the Targeted Surveillance Sample and the School Catchment Area Sample, the envelopes will also contain a $2 bill as an additional incentive for selecting an adult to participate.

Project staff members expect around 92 percent of the first class mailings to be delivered successfully. All cases with packets that are returned as undeliverable (around 8%) will be coded as ineligible insofar as the reasons stated for the returns are consistent with ineligibility.

Telephone Contact. For telephone-matched addresses, interviewers will attempt to contact the household by phone and complete the Adult Targeted Surveillance Survey by CATI. During phone contacts, connection of the phone number with the sampled address will be verified. If the phone number listed for a case does not belong to anyone living at the sampled address, the telephone number will be removed from our records.

Telephone Non-Contact. If the phone number is invalid for the address, or if 8 call attempts fail to result in a contact, a paper questionnaire will be mailed to the address with the $2 pre-incentive, exactly like the first mailing for addresses without phone appends. This mail attempt will be used only for early replicates when sufficient time remains in the data collection period.

Second Mailing. All addresses without valid telephone numbers and all addresses for which telephone contact attempts have been unsuccessful will be sent a reminder postcard (Attachment 11B). A Spanish version of the postcard (Attachment S11B) will also be sent for households with a Spanish surname or located in a high density Hispanic area. The postcard will reiterate the initial invitation to respond via mail or by calling a toll-free number to complete the Adult Targeted Surveillance Survey via CATI. The $2 incentive is not planned for enclosure in this second mailing. The process is illustrated in Exhibit B-2-1.
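The contact sequence described above can be summarized in a short sketch. It is an illustrative reading of the text, not the contractor's case management logic: the function and parameter names are hypothetical, the sketch does not model households that respond partway through, and the ordering of the follow-up mailings is an assumption.

```python
MAX_CALL_ATTEMPTS = 8  # call attempts before a case is treated as a telephone non-contact

def contact_steps(phone_match, phone_valid=True, contacted=False, early_replicate=True):
    """Return the planned sequence of contacts for one sampled address."""
    if not phone_match:
        # No phone append: the questionnaire goes out with the lead letter.
        return ["lead letter + $2 + consent form + paper questionnaire",
                "reminder postcard"]

    steps = ["lead letter + $2",
             f"CATI calls (up to {MAX_CALL_ATTEMPTS} attempts)"]
    if phone_valid and contacted:
        return steps  # household reached by phone; survey attempted by CATI

    # Invalid number or no contact after the maximum number of attempts.
    if early_replicate:
        # The mail follow-up is used only while enough field time remains.
        steps.append("paper questionnaire + $2")
    steps.append("reminder postcard")
    return steps

print(contact_steps(phone_match=True, phone_valid=False))
```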

Exhibit B-2-1. Mode and Order of Contacts for the Targeted Surveillance Sample

Exhibit B-2-2. Mode and Order of Contacts for the School Catchment Area Sample

Youth and Adult Biometric Study

The Youth Survey (Attachments 9B, S9B), the Caregiver Survey (Attachments 9A, S9A), and the instructions for completing the Adult Biometric Measures (Attachments 12A, S12A) and the Youth or Child Biometric Measures (Attachments 12B, 12C, S12B, S12C) will be provided in English and Spanish (note: the “S” prefix refers to Spanish versions).

Who Collects the Data: A10 Clinical Solutions, Inc. field interviewers, with oversight by a project supervisor at each data collection location and by RTI’s YABS project manager.

Where/What: The YABS will be conducted biennially in 8 of the 20 CTG Program awardees selected for the ATSS (Caregiver and Youth Surveys as well as the Adult and Youth or Child Biometric Measures will be conducted in 4 awardees in Years 1, 3, and 5 [Group A], and another 4 awardees in Years 2, 4, and 6 [Group B]; see Exhibit A-1-1). Participants in the YABS will be drawn from a subsample of respondents to the ATSS and eligible households from the School Catchment Area Sample.

Frequency: The data collection will occur biennially in the geographic areas targeted for interventions by 8 awardees. Given the size and population of most geographic areas of coverage, it is very unlikely that the same respondent will participate more than once over the years.

Procedures: The approach for contacting potential participants for the in-home exam will link to the procedures for contacting households in the ATSS as shown in Exhibit B-2-1. The ATSS will obtain 1,000 respondents per awardee. At the completion of the Adult Targeted Surveillance Survey, all adult respondents will be asked whether they are willing to participate in the YABS and whether they reside with at least one child age 3–17 years; if so, the child’s parent or guardian will be asked for the child’s participation. We plan to oversample households with children such that approximately 40 percent of the ATSS respondents will live in a household with at least one child 3–17 years, and that approximately one-third of those households will agree to participate in an in-home examination. An additional sample of adults from households without children will be invited to participate in the YABS, resulting in 100 adults per awardee. These efforts will result in 140 youth (ages 3–17) and 240 adults (140 with children, 100 without children) as shown on the left-hand side of Exhibit B-1-4.

In addition to the sample of respondents recruited for the YABS through the targeted surveillance method, we will obtain additional participation from a subset of this frame “enriched” with households from school catchment areas. We will obtain a subset of telephone numbers from the original targeted surveillance master frame for households in catchment areas near schools—which will contain a higher (than the original frame) proportion of households with youth ages 3–17. From this list of households we will obtain additional telephone surveys and in-home visits. We anticipate that 260 households will result from this effort, sufficient to yield a total of 400 youth (ages 3–17) and 500 adults (Exhibit B-1-4).
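The per-awardee sample-size arithmetic described above can be checked with a short sketch. It assumes, for illustration only, that each of the 260 school catchment households contributes exactly one youth and one adult; that assumption and all variable names are ours and are not stated in the study plan.

```python
# Per-awardee YABS sample-size arithmetic, using the figures stated in the text.
# Assumption (not stated in the source): each school catchment household
# contributes exactly one youth and one adult. All names are hypothetical.

ts_youth = 140                    # youth recruited through the targeted surveillance pathway
ts_adults_with_children = 140     # adults from households with children
ts_adults_without_children = 100  # adults from households without children
catchment_households = 260        # additional households from school catchment areas

ts_adults = ts_adults_with_children + ts_adults_without_children
total_youth = ts_youth + catchment_households
total_adults = ts_adults + catchment_households

print(ts_adults, total_youth, total_adults)
```

Under that assumption the totals agree with the 240 adults, 400 youth, and 500 adults cited in the text.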

B3. Methods to Maximize Response Rate and Deal with Nonresponse

For both the ATSS and YABS, some nonresponse can be expected. Nonresponse may arise from noncontact, refusals, and inability to schedule the in-home examination during the data collection window (for the YABS). Nonresponse is a potentially serious methodological threat to the interpretation of the study findings, particularly if it occurs differentially across the years of data collection or across subpopulations (i.e., nonignorable nonresponse). To reduce the potential for nonresponse bias, several strategies will be used and are presented below.

Methods to Maximize Response

1. Minimizing Noncontacts

Adult Targeted Surveillance Study

Every potential respondent with a telephone match will be sent a lead letter (Attachments 11A, S11A) explaining the survey and letting them know that they will be called and asked to participate. Up to two mailings will be sent to unmatched sample addresses to invite one adult in the household to complete the survey (Exhibit B-2-1, above). The first letter will include $2 as added incentive. Lead letters and follow-up letters have both been shown to increase survey response rates.46,47

Interview staff will make at least eight attempts to contact sample members with a valid, working telephone number. Exceptions to the minimum attempts rule will only be made under appropriate circumstances, such as when a sample member completes an interview after fewer than eight attempts, when a sample member refuses participation, or when a sample member requests an appointment outside of the data collection window.
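The minimum-attempts rule can be expressed as a simple case-management check. The sketch below is illustrative only: the `CaseStatus` fields and the function name are hypothetical, and the three exceptions coded here are the examples given in the text, which may not be exhaustive.

```python
from dataclasses import dataclass

MIN_ATTEMPTS = 8  # minimum number of call attempts stated in the text


@dataclass
class CaseStatus:
    """Hypothetical summary of one sampled telephone case."""
    attempts: int
    completed: bool = False
    refused: bool = False
    appointment_outside_window: bool = False


def may_stop_calling(case: CaseStatus) -> bool:
    """Return True if no further call attempts are required for this case."""
    # Exceptions listed in the text: completed interview, refusal, or an
    # appointment requested outside the data collection window.
    exception_applies = (
        case.completed or case.refused or case.appointment_outside_window
    )
    return exception_applies or case.attempts >= MIN_ATTEMPTS
```

For example, `may_stop_calling(CaseStatus(attempts=3))` is false, so the case stays in the calling queue, whereas a case with a completed interview after three attempts may be closed.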

Youth and Adult Biometric Study

Every potential household to be included in the YABS will already have had an adult participate in the ATSS (CATI or mailings) and agree to the in-home examination. Field Interviewers (FIs) will make at least five attempts to schedule the in-home visit.

2. Avoidance of Refusals

Participation rates will be enhanced through several means: incentives, interviewer training, and administration of the ATSS or the in-home YABS in Spanish or English.

Adult Targeted Surveillance Study

Incentives. The lead letter sent to every potential Targeted Surveillance Sample respondent will state that $20 will be given as a token of appreciation for completing the ATSS. Gift cards for $20 are given as incentives for several CATI studies of similar length. The lead letter sent to potential School Catchment Area Sample respondents will refer to the total of the incentives offered for participation in both the ATSS and the YABS, which totals to $60 for adults and $10 for children. CATI staff will also mention the relevant incentive as they introduce the ATSS to members of both types of ATSS sample. Offering an incentive will help gain cooperation from a larger proportion of the sample and compensate respondents on cell phones for the air time used. Promised incentives have been found to be an effective means of increasing response rates in telephone surveys2 (e.g., Cantor, Wang, and Abi-Habib48) and reducing nonresponse bias by gaining cooperation from those less interested in the topic.49-51

All sampled households will also receive $2 along with their first invitation letter, as an additional incentive to participate (Exhibit B-2-1, above). Small prepaid incentives have been found to produce modest improvements in screener response rates (Cantor et al.52).

Interviewer Training and Contact Procedures (CATI). Response rates vary greatly across interviewers.53 Improving interviewer training has been found effective in increasing response rates, particularly among interviewers with lower response rates.54 For this reason, extensive interviewer training is a key aspect of the success of this data collection effort. The following interviewing procedures will be used to maximize response rates for the ATSS CATI survey:

Interviewers will be briefed on the potential challenges of administering this survey. Well-defined conversion procedures will be established.

If a respondent initially declines to participate, a member of the conversion staff will recontact the respondent to explain the importance of participation. Conversion staff are highly experienced interviewers who have demonstrated success in eliciting cooperation. The main purpose of this contact is to ensure that the potential respondent understands the importance of the survey and to determine whether anything can be done to make the survey process easier (e.g., schedule a more convenient contact time). At no time will staff pressure or coerce a potential respondent to change his or her mind about participation in the survey, and this will be carefully monitored throughout survey administration to ensure that no undue pressure is placed on potential respondents.

Should a respondent interrupt an interview for reasons such as needing to tend to a household matter, the respondent will be given two options: (1) the interviewer will reschedule the interview for completion at a later time; or (2) the respondent will be given a toll-free number, designated specifically for this project, for him or her to call back and complete the interview at his or her convenience.

Conversion staff will be able to provide reluctant respondents with the name and telephone number of the contractor’s project manager who can provide them with additional information regarding the importance of their participation.

The contractor will establish a toll-free number, dedicated to the project, so potential respondents may call to confirm the study’s legitimacy.

Special attention will be given to scheduling callbacks and refusal procedures. The contractor will work closely with CDC to set up these rules and procedures. Examples include the following:

Detailed definition of when a refusal is considered final.

Monitoring of hang-ups, when they occur during the interview, and finalization of the case once the maximum number of hang-ups allowed is reached.

Calling will occur during weekdays from 9am to 9pm, Saturdays from 9am to 6pm, and Sundays from noon to 9pm (respondent’s time).

Calling will occur across all days of the week and times of the day (up to 9pm).
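The calling hours listed above can be encoded as a lookup by day of week. This is a minimal sketch: the function name is hypothetical, the window endpoints are treated as inclusive (an assumption), and the timestamp passed in is assumed to be already expressed in the respondent's local time.

```python
from datetime import datetime, time

# Calling windows in the respondent's local time, as listed in the text.
# Keys follow datetime.weekday(): Monday is 0 and Sunday is 6.
WEEKDAY_WINDOW = (time(9, 0), time(21, 0))
CALL_WINDOWS = {day: WEEKDAY_WINDOW for day in range(5)}
CALL_WINDOWS[5] = (time(9, 0), time(18, 0))   # Saturday
CALL_WINDOWS[6] = (time(12, 0), time(21, 0))  # Sunday


def within_calling_window(local_dt: datetime) -> bool:
    """Return True if local_dt falls inside the allowed calling window.

    Assumes local_dt is already in the respondent's time zone and that
    the window endpoints are inclusive.
    """
    start, end = CALL_WINDOWS[local_dt.weekday()]
    return start <= local_dt.time() <= end
```

A CATI scheduler could call a check of this kind before releasing a case to an interviewer, for example `within_calling_window(datetime(2012, 7, 28, 19, 0))` for a Saturday evening attempt.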

Refusal avoidance training will take place approximately 2–4 weeks after data collection begins. During the early period of fielding the survey, supervisors, monitors, and project staff will observe interviewers to evaluate their effectiveness in dealing with respondent objections and overcoming barriers to participation. They will select a team of refusal avoidance specialists from among the interviewers who demonstrate special talents for obtaining cooperation and avoiding initial refusals. These interviewers will be given additional training in specific techniques tailored to the interview, with an emphasis on gaining cooperation, overcoming objections, addressing concerns of gatekeepers, and encouraging participation. If a respondent does refuse to be interviewed or terminates an interview in progress, interviewers will attempt to determine their reason(s) for refusing to participate, by asking the following question: “Could you please tell me why you do not wish to participate in the study?” The interviewer will then code the response and any other additional relevant information. Particular categories of interest include “Don’t have the time,” “Inconvenient now,” “Not interested,” “Don’t participate in any surveys,” and “Opposed to government intrusiveness into my privacy.”

Languages of Survey Administration. Both the CATI and paper versions of the Adult Targeted Surveillance Survey will be offered in Spanish (Attachment S8A) or English (Attachment 8A). Thus, Spanish-speaking sample members who might otherwise have refused to participate because of their inability to complete the surveys in English may instead complete them in Spanish. Spanish-speaking interviewers will be trained to inform Spanish-speaking sample members about the survey and engage them in the process of participation, thereby reducing refusals in this population.

Youth and Adult Biometric Study

Incentives. An incentive of $40 will be given to adults completing the Adult Biometric Measures, $10 will be given to children aged 12–17 completing the Youth Survey and Youth Biometric Measures, and $10 will be given to caregivers of children age 3–11 who complete the Caregiver Survey (no additional incentive is provided to the 3- to 11-year-old child completing the Child Biometric Measures). Proposed incentives are based on both the age of the participant (child vs. adult) and the level of participation. The incentives are slightly lower than for participants of the longer (5.9 hours) NHANES examination, where an incentive of $70 is given to persons aged 16 and older, and $30 is given to children 2–15 years of age.

An incentive of $20 will be given to adults who complete accelerometry procedures; $10 will be given to children aged 3–17.

Appointment Procedures. ATSS CATI interviewers will recruit and schedule appointments for the YABS data collection at the end of the ATSS call. Field staff will re-schedule appointments if necessary. Respondents who complete the paper ATSS will be encouraged to call a toll-free number to schedule a YABS appointment, if eligible. A toll-free number will be given to the recruited households should they need to reschedule.

FIs will meet the sample members at the sampled address, at the appointed time. If a respondent is unavailable when the FI visits, another appointment will be scheduled. Should a potential respondent refuse participation at the time of the examination, the FI will provide him or her with the name and telephone number of the Data Collection Supervisor who can provide respondents with additional information regarding the importance of their participation.

Field Interviewer Training. Refusal will be mitigated through a wide array of methods, including hiring of high-quality bilingual scheduling staff and FIs, implementation of quality assurance procedures such as close supervision of the FIs by the Data Collection Supervisor, and through comprehensive training. FIs will attend a centralized training on participant scheduling, the interview and examination protocol, and handling and field storage procedures for samples. The goal of training will be to prepare staff to successfully perform field survey tasks in a consistent and standardized fashion as described in a manual of procedures. FIs will be required to show competency in general interviewing techniques (e.g., the appropriate way to ask questions and record answers, contacting and recruiting participants, professional behavior, and standards and ethics) and gaining cooperation and refusal conversion.

Languages of Survey Administration or Conduct of Examination. Every participant (adult or child) will be given the option of completing the Caregiver or Youth Survey and/or following instructions for biometric measurements in Spanish or English. Thus, Spanish-speaking sample members who might otherwise have refused to participate because of their inability to complete the Caregiver or Youth Survey or Adult, Youth or Child Biometric Measures in English may complete them in Spanish instead. Adults who provide accelerometry data will maintain a diary for themselves recording the time of getting up in the morning and going to bed and the time and reason the device was removed for 5 minutes or more for any activity such as swimming or showering; a caregiver will maintain the diary for younger children (< 12 years old) and older children will complete their own diary. These diaries will be provided in either English or Spanish.

Methods for Investigating the Impact of Nonresponse. Simple descriptive statistics, such as counts and frequencies, will be tabulated for respondents and nonrespondents at relevant stages of the sampling process (e.g., from phone contact to completion of ATSS, from completion of ATSS to participation in the biometric study). Nonrespondent statistics will be tabulated overall and by subtype (refusal vs. not contacted). Response rates will be calculated, and respondents and nonrespondents will be compared on sociodemographic characteristics (e.g., age, gender, and race/ethnicity) and other relevant factors. Techniques to minimize the potential bias resulting from nonresponse will be considered. If changes in protocol are warranted, plans will be developed for implementation after IRB and OMB review.

Management of Missing Data and Other Issues

Missing data

For variables with less than 10 percent missing data, an imputation strategy may be applied to estimate the missing data based on the distribution of each individual’s baseline characteristics such as age, gender, race/ethnicity, education, and household income. If the survey data were poorly collected resulting in substantial missing data for some variables (>20%), the characteristics of the missing data will be carefully examined and handled with appropriate strategies.

Of particular concern is the break-off phenomenon, where respondents tire and quit before the end of the questionnaire. Break-offs could lead to higher nonresponse for items in the latter part of the questionnaire. One option would be to transfer some questions from the ATSS to the YABS conducted in the home because break-offs are far less common in person, but the sample sizes for the YABS are substantially smaller and would limit the analysis on the transferred items. The preferred option is to randomize the order of the questionnaire modules to distribute break-offs more evenly, which will be done if the cost is not prohibitive. For the first year of data collection, the schedule does not permit the randomization, but it is an option for future years. Also, timing tests will be conducted, and attempts to shorten the questionnaire, if needed, will be seriously considered to reduce the rate of break-offs.
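If module randomization is adopted in a later year, one way to implement it is a reproducible per-respondent shuffle. The sketch below is illustrative only: the module names, the seed value, and the function name are hypothetical, and in practice some modules (for example, a screener) would likely stay in a fixed position.

```python
import random


def randomized_module_order(modules, respondent_id, seed=2012):
    """Return a shuffled copy of the module list for one respondent.

    Seeding with the respondent ID makes the order reproducible, so the
    same order can be regenerated if an interview is resumed later.
    """
    rng = random.Random(f"{seed}-{respondent_id}")
    order = list(modules)  # copy so the caller's list is not modified
    rng.shuffle(order)
    return order


# Hypothetical module names, for illustration only.
modules = ["tobacco", "nutrition", "physical_activity", "health_status"]
order = randomized_module_order(modules, respondent_id="R0001")
```

Because each respondent receives a different order, items lost to break-offs are spread across modules rather than concentrated in whichever module comes last.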

Procedures will be implemented to minimize missing accelerometer data: reminder calls to participants will be made twice during the week they have been asked to wear the monitor, and the participant will be instructed that receiving the incentive is dependent on providing at least 4 days with at least 10 hours of data following the NHANES accelerometry protocol.36 Imputation techniques will be considered to address intermittently missing data.55 If data are not complete according to the criteria above, participants will be asked to wear the accelerometer for an additional 7 days. Implementation of "re-wear" strategies has been found to increase completeness of the physical activity database (James Sallis, PhD, personal communication).
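The completeness criterion (at least 4 days with at least 10 hours of data) can be sketched as follows. The function names are hypothetical, and the sketch assumes that daily wear hours have already been derived from the raw accelerometer data; how wear time is determined is outside its scope.

```python
MIN_VALID_DAYS = 4      # days of data required, per the text
MIN_HOURS_PER_DAY = 10  # hours required for a day to count as valid


def count_valid_days(daily_wear_hours):
    """Count days meeting the minimum wear-time threshold."""
    return sum(1 for hours in daily_wear_hours if hours >= MIN_HOURS_PER_DAY)


def needs_rewear(daily_wear_hours):
    """Return True if the participant would be asked to wear the device again."""
    return count_valid_days(daily_wear_hours) < MIN_VALID_DAYS
```

For instance, a week with wear times of 12, 9, 8, 10, 11, 0, and 0 hours has only three valid days, so `needs_rewear` returns true and the participant would be asked to wear the accelerometer for an additional 7 days.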

Seasonality

Both the ATSS and the YABS will be executed during the same months of data collection. For Group A, the data collection period is expected to be July–November. For Group B, the data collection period is expected to be January–May. We do not anticipate seasonal effects to be a methodologic issue. Nevertheless, the date of data collection will be recorded for the Caregiver and Youth Surveys as well as the Adult and Youth or Child Biometric Measures to allow adjustments during analysis to account for potential seasonality effects, if necessary.

Description of Sample Weighting

Adult Targeted Surveillance Study

Sampling weights map the sample to the population. There are four steps in creating the sampling weights:

Calculate the initial weights as the inverse of the probability of selection with an adjustment for unknown eligibility

Nonresponse adjustment

Adjust for household size and multiple households

Poststratification

Step 1: Calculate the Initial Weights

The following formula defines an initial weight, which is the inverse of the probability of selection of the address for the jth frame member, adjusted for unknown eligibility status.

![]()

where

![]() = the number of frame members in stratum i,

![]() = the number of responders in stratum i,

![]() = the number of nonresponders in stratum i,

![]() = the number of unknowns in stratum i,

![]() = the number of ineligibles in stratum i,

![]() = the number selected.

(For clarification, in this notation superscripts refer to the step in weighting, not a power.) Ineligible frame members are removed.

Step 2: Nonresponse Adjustment

The sample receives a model-based nonresponse adjustment. Census and American Community Survey (ACS) data by blockgroup are appended to the sample frame. A logistic regression model is fit predicting the probability of response using the census and ACS data as predictors.

A new weight is calculated:

![]() .

where

![]() = nonresponse adjusted probability for the jth frame member,

![]() = the predicted probability of response for the jth respondent from the logistic model.

Because the sum of the nonresponse adjusted weights (![]()) is not exactly equal to the sum of (![]()), we make the following ratio adjustment.

![]() .
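The two parts of Step 2 can be sketched in a few lines. This is an illustration under stated assumptions, not the contractor's implementation: the function and argument names are hypothetical, the predicted response probabilities are taken as given (the logistic model fit is not shown), and the ratio adjustment is assumed to rescale the adjusted weights so that they sum to the total of the Step 1 weights.

```python
def nonresponse_adjust(initial_weights, response_probs, responded):
    """Propensity-based nonresponse adjustment followed by a ratio adjustment.

    initial_weights: Step 1 weights for all eligible sampled cases
    response_probs:  predicted response probabilities from a fitted model
    responded:       booleans, True where the case responded
    Returns adjusted weights (0.0 for nonrespondents).
    """
    # Inflate each respondent's weight by the inverse of the predicted
    # probability of response; nonrespondents receive a weight of zero.
    raw = [
        w / p if r else 0.0
        for w, p, r in zip(initial_weights, response_probs, responded)
    ]
    # Ratio adjustment (assumed form): rescale so the adjusted weights
    # sum to the total of the Step 1 weights.
    factor = sum(initial_weights) / sum(raw)
    return [w * factor for w in raw]


adjusted = nonresponse_adjust(
    initial_weights=[10.0, 10.0, 10.0, 10.0],
    response_probs=[0.5, 0.5, 0.8, 0.8],
    responded=[True, False, True, False],
)
```

In this example the respondent with the lower predicted response probability receives the larger adjusted weight, and the adjusted weights sum to the original total of 40.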

Step 3: Adjust for Household Size and Number of Residences

Each subject has a weight that reflects the inverse of the probability of selection and a nonresponse adjustment. In this step we adjust for household size and number of residences in the eligible geography.

![]()

where

![]() = the number of adults in the household of respondent for the jth listed frame member in stratum i,

![]() = the number of residences in the sampled geography of respondent for the jth listed frame member in stratum i.
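A common form of this adjustment multiplies the weight by the number of adults in the household and divides by the number of residences. The sketch below assumes that form, which is standard practice when one adult is selected with equal probability per household but is not confirmed by the text; the function and argument names are hypothetical.

```python
def household_adjust(weight, n_adults, n_residences):
    """Adjust a Step 2 weight for household size and number of residences.

    Assumed form: one adult is selected per household, so the weight is
    multiplied by the number of adults; a respondent with several
    residences in the sampled geography has more chances of selection,
    so the weight is divided by the number of residences.
    """
    if n_adults < 1 or n_residences < 1:
        raise ValueError("n_adults and n_residences must be at least 1")
    return weight * n_adults / n_residences
```

For example, a respondent with a Step 2 weight of 50 who lives in a two-adult household with a single residence would receive a weight of 100 under this form.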

Step 4: Poststratification

The last step of weighting is poststratification to the latest population estimates. We will poststratify to the following domains:

Geographical region

Age category (18–34, 35–49, 50–64, 65+)

Gender

Race (white, black, other)

Hispanic status
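Poststratification can be illustrated with a simple cell-based version. The sketch assumes that population totals are available for each poststratification cell; the text does not say whether weights are adjusted within fully crossed cells or raked to the margins of each domain, so this is only one possible form. The function name and the example cell labels are hypothetical.

```python
from collections import defaultdict


def poststratify(weights, cells, population_totals):
    """Scale weights so each cell's weighted total matches its population total.

    weights:           Step 3 weights, one per respondent
    cells:             poststratification cell label for each respondent
    population_totals: mapping from cell label to population count
    """
    cell_sums = defaultdict(float)
    for w, c in zip(weights, cells):
        cell_sums[c] += w
    return [w * population_totals[c] / cell_sums[c] for w, c in zip(weights, cells)]


final = poststratify(
    weights=[1.0, 1.0, 2.0],
    cells=["A", "A", "B"],
    population_totals={"A": 100, "B": 50},
)
```

After the adjustment, the weights in each cell sum to that cell's population total (100 for cell A and 50 for cell B in this example).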

Youth and Adult Biometric Study

Sampling weights for each biometric study sample (adults and children) will be created independently. However, because the weighting methodology is identical for the two samples (with the exception of the age categories used in poststratification), we describe the common methodology and highlight only the different age categories used in the poststratification.

The weighting methodology for the biometric studies includes the following four steps:

Calculate the initial weights as the inverse of the probability of selection

Nonresponse adjustment

Adjust for household size and multiple households

Poststratification

The frame for the YABS comes from two nested geographies: the geography used in the targeted surveillance and the school catchment area—a subset of the targeted surveillance geography. Consequently, some frame members have multiple chances of selection which need to be accounted for in the weights. This is accomplished in the first step where the initial weights are calculated.

Step 1: Calculate the Initial Weights

We break the respondents into two subsamples: Subsample A has only one chance to be selected into the sample, while Subsample B has two chances to be selected into the sample.

Subsample A: Respondents from the targeted surveillance survey who do not reside in the school catchment areas (left-hand pathway in Exhibit B-1-4, above); and

Subsample B: Respondents from the targeted surveillance survey who reside in the school catchment areas and the respondents from the school catchment areas (right-hand pathway in Exhibit B-1-4, above).

For Subsample A, the following formula defines an initial weight which is the inverse of the probability of selection of the address for the jth frame member:

![]()

where

![]() = the number of targeted surveillance (TS) frame members in stratum i,

![]() = the number of TS sample members selected.

For Subsample B, the initial weight is the inverse of the following quantity: the probability of selection from the TS frame plus the probability of selection from the school catchment frame, minus the probability of selection from both frames. The following formula defines an initial weight:

![]()

where

![]() = the number of TS frame members in stratum i,

![]() = the number of school catchment frame members in stratum i,

![]() = the number of school catchment sample members selected.
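The initial weights for the two subsamples can be sketched as follows. The function and argument names are hypothetical, each selection probability is taken to be the sample count divided by the frame count within a stratum, and the probability of selection from both frames is assumed to be the product of the two probabilities (that is, the two selections are assumed independent).

```python
def initial_weight_subsample_a(n_frame_ts, n_sample_ts):
    """Inverse of the probability of selection from the TS frame."""
    return n_frame_ts / n_sample_ts


def initial_weight_subsample_b(n_frame_ts, n_sample_ts, n_frame_sc, n_sample_sc):
    """Inverse of the probability of selection from either frame."""
    p_ts = n_sample_ts / n_frame_ts  # selection probability, TS frame
    p_sc = n_sample_sc / n_frame_sc  # selection probability, school catchment frame
    # Probability of selection from at least one frame; the product term
    # assumes the two selections are independent.
    p_either = p_ts + p_sc - p_ts * p_sc
    return 1.0 / p_either


weight_a = initial_weight_subsample_a(1000, 100)
weight_b = initial_weight_subsample_b(1000, 100, 200, 50)
```

Because Subsample B members have two chances of selection, their initial weight is smaller than that of a Subsample A member in the same TS stratum.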

Step 2: Nonresponse Adjustment

The sample receives a model-based nonresponse adjustment. Census and ACS data by blockgroup are appended to the sample frame. A logistic regression model is fit predicting the probability of response using the census and ACS data as predictors.

A new weight is calculated:

![]() .

where

![]() = nonresponse adjusted probability for the jth frame member,

![]() = the predicted probability of response for the jth respondent from the logistic model.

Because the sum of the nonresponse adjusted weights (![]()) is not exactly equal to the sum of (![]()), we make the following ratio adjustment.

![]() .

Step 3: Adjust for Number of Eligible Household Members

Each subject has a weight that reflects the inverse of the probability of selection and a nonresponse adjustment. In this step we adjust for selection within the household.

![]()

where

![]() = the number of eligible household members of respondent for the jth listed frame member in stratum i.

Step 4: Poststratification

The last step of weighting is poststratification to the latest population estimates. We will poststratify to the following domains:

Geographical region

Age category

Gender

Race (white, black, other)

Hispanic status

For the adult biometric study we will use the following age categories (18–34, 35–49, 50–64, 65+). For the youth and child biometric studies we will use the following age categories (3–11, 12–17).

B4. Test of Procedures or Methods to be Undertaken

RTI conducted internal tests of the ATSS and YABS instruments, and made adjustments according to the results. The purpose of the internal testing was to:

Test and revise protocols for participant recruitment and survey administration

Ensure clarity of survey language

Identify timing, skip patterns, and other complex conceptual issues that may not be readily obvious from simple reading of the survey

Given that the majority of the items were drawn from previously fielded surveillance instruments (Attachment 5) that have been shown to be valid and reliable with the appropriate TS age groups, we expected that the majority of the questions would be easily understandable and accurately answered by the target group of respondents. Problems resulting from use of vocabulary and complex sentence structure, and validity problems resulting from misinterpretation of the questions, were minimal. Perceived instances of misunderstandings, incomplete concept coverage, and inconsistent interpretations were therefore rare, and only a few words and answer choices were altered to address these concerns. The pilot testing focused on:

Consistency—We tested to ensure the instrument was applicable for all modes of administration and allowed maximal comparison to data from the source instruments from which the questions were drawn. For example, the paper ATSS needed to be adapted slightly from the CATI format, and questions were aligned between the Youth and Caregiver instruments to allow aggregation of data at the time of analysis. In addition, wording or answer choices were adjusted to permit the best comparisons between the instrument and the source instruments from which they were drawn.

Length—To ensure no excessive burden to respondents and to achieve the approximate time estimates provided in the 60-day Federal Register Notice for this ICR, we deleted questions based on the pilot results.

Question sequencing and overall flow—We pilot tested the full process of the CAPI interview including introduction, respondent selection method, and questionnaire flow. Based on the results, we eliminated redundancy and shifted the ordering of items to ensure a smooth flow of the data collection process to maximize the efficiency of collecting accurate responses. Skip patterns were adjusted to reflect changes in the ordering of items that were made to improve flow and eliminate potential errors.

Salience—Based on our pilot findings, we modified the recall periods to ensure as much consistency as possible, while permitting comparison to the source instruments. We also inserted the exact dates for recall as auto fill (e.g., “During the past 12 months, that is, since January 1, 2011 ...”). We confirmed that allowing respondents to choose their reporting period was helpful (e.g., offering day, week, or month for reporting foods eaten). We also modified the ordering of certain questions to better assist respondents with recall.

Ease of administration and response—Interviewers did not note any difficulty in administering the instruments. As expected, a few respondents did struggle to complete the food frequency items. Instruments were modified to provide consistent recall periods and reference dates, as noted above. Based on the pilot test results, we offered additional examples within questions; for example, we updated the computer time use question to include time spent using an iPad.

Acceptability to respondents—Results from the pilot test suggested that participants were comfortable answering questions and that the range of response options was generally comprehensive. However, additional response choices were added to certain questions as a result of the pilot. Importantly, respondents did not report that questionnaire items were too sensitive to answer.

After review by external experts in each of the content areas specific to the CTG Program evaluation, the Adult Targeted Surveillance Survey (both CATI and mail versions) and the Youth and Caregiver Surveys were revised and programmed for administration via CATI or CAPI, respectively (Exhibit B-4-1). Since the initial OMB submission, survey questions that have been changed or adapted by the Contractor were reviewed by experts identified in Section A.8. Questions have been programmed and tested for accuracy, flow, implementation of skip patterns (as described above), as well as testing for other features and content of the instrument as described above.

Exhibit B-4-1. Survey Instruments and Materials for Pilot Testing

Survey Instrument | Pilot Testing Data Components |

ATSS Telephone Survey (CATI) | |

ATSS Paper (Mail) | |

Youth and Adult Biometric Studies: Caregiver Survey | |

Youth and Adult Biometric Studies: Youth Survey | |

B5. Individuals Consulted on Statistical Aspects or Analyzing Data

Robin Soler, PhD (770-488-5103), Division of Community Health, CDC is the Principal Investigator and Technical Monitor for the study. She has overall responsibility for overseeing the design and administration of the surveys, and she will be responsible for analyzing the survey data.

RTI International is the project contractor responsible for developing the instruments and data collection protocols; providing training to interviewers; and collecting and analyzing data from the ATSS and YABS. Debra Holden, PhD (919-541-6491), serves as RTI’s Project Director. In this role, she is the primary contact with the Technical Monitor and oversees work on all project tasks.

The survey instruments, sampling and data collection procedures, and analysis plan were designed in collaboration with researchers at HHS, CDC, and RTI (Exhibits B-5-1 and B-5-2). The following personnel have been involved in the design of the protocol and data collection instrument (note additional experts will be asked to review instruments before they are pilot tested and finalized but the respondent burden will not change):

Exhibit B-5-1. List of Individuals and Organizations That Were Consulted for the Study

Name | Organization | Contact Information |

Danielle Barradas, PhD | Epidemiologist | Phone: (770) 488-6286 |

Kristine Day, MPH | Public Health Analyst | Phone: (770) 488-5446 |

Joan Dorn, PhD | Branch Chief Division of Nutrition, Physical Activity, and Obesity CDC | Phone: (770) 488-6311 E-mail: [email protected] |

Janet Fulton, PhD | Epidemiologist Division of Nutrition, Physical Activity, and Obesity CDC | Phone: (770) 488-5430 E-mail: [email protected] |

Youlian Liao, MD | Epidemiologist | Phone: (770) 488-5299 |

Rashid Njai, PhD, MPH | Epidemiologist | Phone: (770) 588-5215 |

Paul Z. Siegel, MD, MPH | Medical Epidemiologist | Phone: (770) 488-5296 |

Robin Soler, PhD | Senior Evaluator | Phone: (770) 488-5103 |

Yechiam Ostchega, PhD, RN | Nurse Consultant | Phone: (301) 458-4408 |

Exhibit B-5-2. Leads in Data Collection, Research/Sampling Design, and Data Analysis

Task | Lead | Affiliation | Reviewer | Contact Information |

Data Collection ||||

a. All data collection activities for ATSS | Brenna Muldavin, MS | RTI | Kristina Peterson, PhD, MA | Phone: (919) 541-6389 |

b. All data collection activities for YABS | Jane Hammond, PhD | RTI | Dan Zaccaro, MS; Brenna Muldavin, MS | Phone: (301) 770-8207 |

Study Design ||||

| Burton Levine, MS, MA | RTI | Rachel Harter, PhD; Diane Catellier, DrPH | Phone: (919) 541-1252 |

| Andrea Anater, PhD | RTI | Debra Holden, PhD; Matthew Farrelly, PhD | Phone: (919) 541-6977 |

| Burton Levine, MS, MA | RTI | Diane Catellier, DrPH | Phone: (919) 541-1252 |

| Andrea Anater, PhD | RTI | Debra Holden, PhD | Phone: (919) 541-6977 |

| Burton Levine, MS, MA | RTI | Diane Catellier, DrPH | Phone: (919) 541-1252 |

| Jane Hammond, PhD | RTI | Aten Solutions, Inc. (A10) | Phone: (301) 770-8207 |

Data Analysis ||||

| Diane Catellier, DrPH | RTI | Rachel Harter, PhD | Phone: (919) 541-6447 |

| Jane Hammond, PhD | RTI | Diane Catellier, DrPH; Dan Zaccaro, MS | Phone: (301) 770-8207 |

References

Nieto, F. J., Peppard, P. E., Engelman, C. D., McElroy, J. A., Galvao, L. W., Friedman, E. M., Bersch, A. J., & Malecki, K. C. (2010). The Survey of the Health of Wisconsin (SHOW), a novel infrastructure for population health research: rationale and methods. BMC Public Health, 10, 785.

Rosner, B. (1995). Fundamentals of biostatistics (Fourth Ed., p. 384). Belmont, CA: Duxbury Press.

Kwon, J. H., Jang, H. Y., Rho, J. S., Jung, J. H., Yum, K. S., & Han, J. W. (2011). Association of visceral fat and risk factors for metabolic syndrome in children and adolescents. Yonsei Medical Journal, 52(1), 39–44.

Skelton, J. A., Cook, S. R., Auinger, P., Klein, J. D., & Barlow, S. E. (2009). Prevalence and trends of severe obesity among US children and adolescents. Academic Pediatrics, 9(5), 322–329.

Halterman, J. S., Borrelli, B., Tremblay, P., Conn, K. M., Fagnano, M., Montes, G., & Hernandez, T. (2008). Screening for environmental tobacco smoke exposure among inner-city children with asthma. Pediatrics, 122(6), 1277–1283.

Butz, A. M., Halterman, J. S., Bellin, M., Tsoukleris, M., Donithan, M., Kub, J., Thompson, R. E., Land, C. L., Walker, J., & Bollinger, M. E. (2011). Factors associated with second-hand smoke exposure in young inner-city children with asthma. Journal of Asthma, 48(5), 449–457.

Cook, S., Weitzman, M., Auinger, P., Nguyen, M., & Dietz, W. H. (2003). Prevalence of a metabolic syndrome phenotype in adolescents. Archives of Pediatrics and Adolescent Medicine, 157(8), 821–827.

Tirosh, A., Afek, A., Rudich, A., Percik, R., Gordon, B., Ayalon, N., Derazne, E., Tzur, D., Gershnabel, D., Grossman, E., Karasik, A., Shamiss, A., & Shai, I. (2010). Progression of normotensive adolescents to hypertensive adults: A study of 26 980 teenagers. Hypertension, 56(2), 203–209.

DiSogra, C., Dennis, J. M., & Fahimi, M. (2010). On the quality of ancillary data available for address-based sampling. Presented at the 2010 Joint Statistical Meetings.

Link, M., & Mokdad, A. (2005). Advance letters as a means of improving respondent cooperation in RDD studies: A multi-state experiment. Public Opinion Quarterly, 69(4), 572–587.

De Leeuw, E., Callegaro, M., Hox, J., Korendijk, E., & Lensvelt-Mulders, G. (2007). The influence of advance letters on response in telephone surveys. A meta-analysis. Public Opinion Quarterly, 71(3), 413–443.

Cantor, D., Wang, K., & Abi-Habib, N. (2003). Comparing promised and pre-paid incentives for an extended interview on a random digit dial survey. Proceedings of the Survey Research Methods Section of the ASA, Nashville, TN.

Singer, E., Van Hoewyk, J., & Maher, M. P. (2000). Experiments with incentives in telephone surveys. Public Opinion Quarterly, 64(2), 171–188.

Groves, R. M. (2006). Nonresponse rates and nonresponse bias in household surveys. Public Opinion Quarterly, 70(5), 646–675.

Groves, R. M., Singer, E., & Corning, A. (2000). Leverage-saliency theory of survey participation—description and an illustration. Public Opinion Quarterly, 64, 299–308.

Cantor, D., O’Hare, B. C., & O’Connor, K. S. (2007). The use of monetary incentives to reduce non-response in random digit dial telephone surveys. In J. M. Lepkowski, C. Tucker, J. M. Brick, E. de Leeuw, L. Japec, P. J. Lavrakas, M. W. Link, & R. L. Sangster (Eds.), Advances in telephone survey methodology. John Wiley & Sons.

O’Muircheartaigh, C., & Campanelli, P. (1999). A multilevel exploration of the role of interviewers in survey non-response. Journal of the Royal Statistical Society, 162, 437–446.

Groves, R. M., & McGonagle, K. A. (2001). A theory-guided interviewer training protocol regarding survey participation. Journal of Official Statistics, 17, 249–265.

Catellier, D. J., Hannan, P. J., Murray, D. M., Addy, C. L., Conway, T. L., Yang, S., & Rice, J. C. (2005). Imputation of missing data when measuring physical activity by accelerometry. Medicine and Science in Sports and Exercise, 37(11 Suppl), S555–S562.

1 The test for the difference of two proportions is described in many places, including Rosner (1995) [38].

2 Singer and colleagues [49] have been cited as providing evidence that promised incentives are ineffective at increasing survey response rates. However, only about 200 sample cases were assigned to each condition (with or without incentive) in their experiments, so very large differences would have been required to reach statistical significance. The pattern of results supported the effectiveness of promised incentives: in all four of their experiments, the response rate was higher in the condition with an incentive. Furthermore, the experiments were conducted in 1996, when response rates were close to 70 percent and therefore presumably harder to raise through incentives than the lower current response rates (below 50 percent on that same survey).
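The sample-size argument in footnote 2 can be illustrated with a short calculation. The sketch below uses only the Python standard library and a normal approximation; it is not the analysis code used for this study. The 200 cases per condition and the baseline response rate of about 70 percent come from the footnote. The two-sided significance level of 0.05, the example differences, and the function names are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sided z-test of H0: p1 == p2. Returns (z, p_value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def power_two_proportions(p1, p2, n_per_group, alpha=0.05):
    """Approximate power of the two-sided test with equal group sizes.

    Normal approximation; the negligible probability of rejecting in the
    wrong direction is ignored.
    """
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    se_null = math.sqrt(2 * p_bar * (1 - p_bar) / n_per_group)
    se_alt = math.sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_group)
    return nd.cdf((abs(p1 - p2) - z_crit * se_null) / se_alt)


if __name__ == "__main__":
    # Hypothetical differences from a 70 percent baseline, 200 cases per arm.
    for p_incentive in (0.75, 0.80, 0.82):
        power = power_two_proportions(0.70, p_incentive, 200)
        print(f"70% vs {p_incentive:.0%}: approximate power = {power:.2f}")
```

Under these assumed inputs, the approximation gives roughly 20 percent power to detect a 5-point difference and roughly 80 percent power only once the difference reaches about 12 points, which is consistent with the footnote's point that a consistently higher but modest response-rate gain could fail to reach significance.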

| File Type | application/msword |

| Author | chennessy |

| Last Modified By | Macaluso, Renita (CDC/ONDIEH/NCCDPHP) |

| File Modified | 2012-07-23 |

| File Created | 2012-06-22 |
