Att_1875-NEW 4869 Supporting Statesment A Task 7_revised 12-4


Study of Strategies For Improving the Quality of Local Grantee Program Evaluation

OMB: 1875-0270

Section A. Justification

Introduction

The U.S. Department of Education provides support to states, districts and schools through a number of competitive and formula grant programs. Through these programs, the federal government funds a wide array of activities, from professional development for teachers to turnaround efforts for failing schools. High-quality evaluation plays an essential role in informing policy makers about program performance, outcomes and impact. Performance reporting with high-quality data can indicate whether a funded project is meeting its goals and taking place as planned. Project evaluations can explore how best to implement a particular educational practice, whether positive student outcomes were attained, or whether a particular educational intervention actually caused the outcomes observed.

The Department has invested significant resources and developed a number of support strategies to improve the quality of grantee performance reporting and evaluations. Some of these strategies include:

Including a competitive priority for evaluation in grant applications

Convening group trainings for grantees on such topics as conducting high-quality evaluations and developing strong and measurable objectives and performance measures

Funding one-on-one technical assistance to individual grantees

Requiring the use of outside evaluators and/or submission of evaluation reports

Disseminating evaluation handbooks or other training materials

To date, the Department lacks comprehensive information about the quality or rigor of the performance reporting and evaluation activities its grantees are undertaking and whether the technical assistance provided has been useful in improving the quality of the performance reporting or evaluations. Accordingly, the focus of this study is to examine the influence of Department-funded technical assistance practices on the quality and rigor of grantee evaluations and performance reporting in two Department programs (described below). It will describe the technical assistance provided by the Department to support grantee performance reporting and evaluation; explore how grantees perceive the technical assistance has influenced their activities; assess the quality of performance reporting and evaluations undertaken; and determine how the findings from performance reporting and evaluations were used both by grantees and by the Department.

This study will be based upon a systematic review of existing documentation as well as interviews with selected grantees and with federal staff and federal contractors involved in grant monitoring and in the provision of technical assistance to grantees. The interviews with selected grantees are the subject of this OMB clearance request.

This study will focus on two grant programs within the Department’s Office of Innovation and Improvement: the Charter Schools Program: State Educational Agencies (CSP SEA) program and the Voluntary Public School Choice (VPSC) program. A brief description of each program is provided below.

Voluntary Public School Choice (authorized under the Elementary and Secondary Education Act of 1965, as amended, Title V, Part B, Subpart 3, (20 U.S.C. 7225-7225g)). The goal of the VPSC program is to support the creation and development of a large number of high-quality charter schools that are held accountable for enabling students to reach challenging state performance standards, and are open to all students. The program was first enacted as part of the No Child Left Behind Act of 2001 (Pub. L. No. 107-110, 115 Stat. 1425) to support the emergence and growth of choice initiatives across the country. VPSC’s goal is to assist states and local school districts in creating, expanding, and implementing public school choice programs. The program has awarded two cycles of competitive grants to states, local education agencies, and partnerships that include public, nonprofit and for-profit organizations. In 2007, the most recent award year, the program awarded a total of 14 competitive grants to two states, eight school districts, a charter school, an intermediate school district, and KIPP schools in Texas.

Charter Schools Program: State Educational Agencies (authorized under the Elementary and Secondary Education Act of 1965, Section 5201-5211 (20 U.S.C. 7221a)). Federal support for charter schools began in 1995 with the authorization of the CSP. The CSP SEA program awards competitive grants to state education agencies to plan, design, and implement new charter schools, as well as to disseminate information on successful charter schools. The key goals of the CSP SEA program are to increase the number of charter schools in operation across the nation and to increase the number of students who are achieving proficiency on state assessments of math and reading. The CSP statute also addresses expanding the number of high-quality charter schools and encouraging states to provide support for facilities financing equal to what states provide for traditional public schools. Grants have been awarded to 40 states, including awards to 33 states since 2005. Grants are typically awarded for three years and may be renewed.

This study will review all technical assistance provided to CSP SEA and VPSC grantees on performance reporting and evaluations and how grantees conduct these activities. All CSP SEA and VPSC grantees are required by the Department to conduct performance reporting. Although grantees are not required to conduct any particular type of evaluation, the study will review both impact evaluations and non-impact evaluations conducted by grantees.1 The study approach, with respect to the review of performance reporting, impact evaluations, and non-impact evaluations, is described below.

Performance Reporting

The goal of performance reporting is to measure performance and track outcomes of the project’s stated goals and objectives. The collection of accurate data on program performance is necessary for the reporting required by the Government Performance and Results Act (GPRA), which was passed by Congress in 1993 and updated through the GPRA Modernization Act of 2010. The latter will require even more frequent reporting—quarterly instead of annually.

In addition to requiring grantees to collect annual data in support of GPRA reporting, the CSP SEA and VPSC programs encourage grantees to develop implementation and outcome measures in support of other program goals. Throughout this document, when we refer to grantee performance measures, we are referring to the measures grantees use not only for GPRA reporting, but also for reporting on other activities and outcomes.

The CSP and VPSC programs provide technical assistance to grantees on developing appropriate objectives and performance measures and on obtaining quality data in support of those measures. Because all grantees conduct some kind of performance reporting, this study’s examination of performance reporting encompasses all grantees. It will describe the type of technical assistance provided, categorize the types of performance measures that grantees address, determine whether the measures are responsive to the GPRA indicators defined for each program, review whether the initial set of performance measures changed as a result of the technical assistance received, and examine the quality and appropriateness of data collection for those measures.

Impact Evaluation

While documenting implementation activities and outcomes can be useful to school and district administrators, it does not provide information on the effectiveness of funded interventions. The only evaluation designs that provide credible evidence about the impacts of interventions are rigorous experimental and quasi-experimental designs. Impact evaluations can provide guidance about what interventions should be considered for future funding and replication.

This study will review the quality and rigor of all impact evaluations being conducted of higher-order outcomes, particularly student achievement, using criteria that were adapted from the What Works Clearinghouse review standards for grantees as part of the Department’s Data Quality Initiative. These criteria were revised by Abt Associates as part of its annual review of Mathematics and Science Partnership final-year evaluations. The criteria are listed in Appendix A. The study will also examine the completeness and clarity of evaluation reports submitted as part of an impact evaluation.

Non-Impact Evaluation

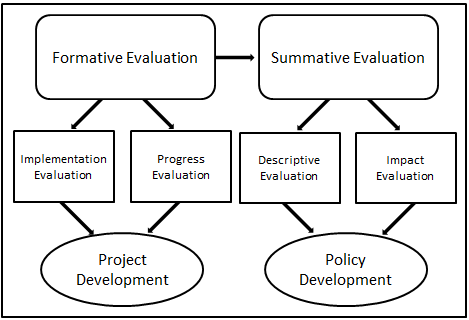

Grantees may choose to conduct non-impact evaluations to examine program outcomes and implementation processes. Non-impact evaluations may include both formative implementation and process evaluations that evaluate a program as it is unfolding, and summative descriptive evaluations that examine changes in final outcomes in a non-causal manner. A full framework of formative and summative evaluations is included in Appendix B.

The main focus of the review of non-impact evaluations will be on those that focus on a change in higher-order outcomes using a one-group pre-post design. For these evaluations, the study will examine the appropriateness of data collection strategies for the design chosen and whether the findings of the study are described appropriately based on the design. The study will also describe other non-impact evaluations that grantees have undertaken, without commenting on their quality.

Data collection, including conducting interviews and reviewing extant documents, is required to complete this study. Part A of this request discusses the justification for these data collection activities, while Part B describes the data collection and analysis procedures.

A.1. Circumstances Requiring the Collection of Data

While the Department has made a concerted effort, through technical assistance and other support strategies, to improve the quality of evaluations and performance reporting, there is little systematic information about the results of these efforts across grantees. Little is known about how grantees use the technical assistance and other support strategies provided to change their performance reporting and evaluation activities, or what activities they find most challenging. Similarly, there is no consistent information across grantees about the types of performance reporting and evaluation activities that are being undertaken and how the findings are used to inform project improvement or federal policymaking. A better understanding of these issues could help the Department ensure that its support to grantees is well targeted.

The study research questions that will be answered are listed below.

RQ1. What technical assistance do Office of Innovation and Improvement grantees receive to help them improve the quality of their evaluations and performance reporting?

RQ1a. Who funds it? Who provides it?

RQ1b. To what extent does this technical assistance occur in group settings, such as program conferences, vs. through on-demand services that grantees can access when needed?

RQ2. How do grantees examine project outcomes and effectiveness?

RQ2a. Do local grantees use performance indicators to measure progress and track performance? What performance indicators do grantees use? How is this performance measurement used for program improvement? Are performance measures aligned with GPRA indicators and other program and project goals? Are appropriate data being collected and analyzed regularly in support of the performance measures?

RQ2b. Do local grantee evaluations examine the effectiveness of their programs in meeting higher-level project goals and objectives? How do they define the goals and objectives? How do they examine and measure effectiveness? To what extent do such evaluations use designs that are appropriate to the evaluation questions? Are appropriate data available to support such analysis?

RQ2c. What are the barriers to conducting and improving grantee performance measurement or evaluations of project effectiveness?

RQ2d. Has the technical assistance provided to grantees helped them improve the quality of their performance reporting and/or local evaluations?

RQ3. How are grantee evaluations and performance reporting used to inform project improvement and federal policymaking?

RQ3a. To what extent are grantee performance reporting and evaluations used to inform federal program monitoring and policymaking?

RQ3b. To what extent do grantees use them to adjust their current program vs. planning future programs?

RQ3c. What are the barriers to using evaluation or performance measurement results for project improvement?

RQ3d. Has the technical assistance provided to grantees helped them improve their use of performance measurement or evaluation results for project improvement?

Research Question 1 and each of its subquestions focus on the technical assistance provided to grantees; they will be answered by a review of the technical assistance materials and presentations provided to grantees and through interviews with grantees, federal program staff, technical assistance providers, and grantee monitors. Research Questions 2a and 2b, which focus on how grantees examine project outcomes and effectiveness, will be answered by reviewing grantee documents that describe the performance reporting and evaluations being conducted by grantees. Research Questions 2c, 3a, 3b, 3c, and 3d, which focus on barriers and on how grantee findings are used, will be answered through interviews with grantees, federal program staff, technical assistance providers, and grantee monitors. Finally, Research Question 2d, which focuses on how the technical assistance helps grantees to improve their performance reporting and evaluations, will be answered by triangulating information collected from document reviews and interviews.
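The assignment of data sources to research questions described in the preceding paragraph can be summarized as a simple lookup. The sketch below is illustrative only and is not part of the study procedures. The dictionary name, the function name, and the three source labels are shorthand invented here, and treating "document reviews" for RQ2d as covering both kinds of review is an assumption.

```python
# Illustrative lookup of data sources by research question.
# Source labels are shorthand invented for this sketch:
#   "ta_materials_review"     = review of technical assistance materials
#   "grantee_document_review" = review of grantee documents
#   "interviews"              = interviews with grantees, federal staff,
#                               TA providers, and grantee monitors
DATA_SOURCES = {
    "RQ1a": {"ta_materials_review", "interviews"},
    "RQ1b": {"ta_materials_review", "interviews"},
    "RQ2a": {"grantee_document_review"},
    "RQ2b": {"grantee_document_review"},
    "RQ2c": {"interviews"},
    # RQ2d is answered by triangulating document reviews and interviews;
    # including both kinds of review here is an assumption.
    "RQ2d": {"ta_materials_review", "grantee_document_review", "interviews"},
    "RQ3a": {"interviews"},
    "RQ3b": {"interviews"},
    "RQ3c": {"interviews"},
    "RQ3d": {"interviews"},
}


def questions_using(source):
    """Return the research questions that draw on the given source."""
    return sorted(rq for rq, sources in DATA_SOURCES.items() if source in sources)


if __name__ == "__main__":
    for label in ("ta_materials_review", "grantee_document_review", "interviews"):
        print(label, "->", ", ".join(questions_using(label)))
```

A lookup of this kind makes it easy to confirm that every research question has at least one data source and to see which questions rely on interviews alone.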

To maximize study efficiency and minimize respondent burden, we will use a purposive sample to select a diverse set of grantees for interviews. Therefore, the findings generated from the grantee interviews will not be generalizable to all CSP and VPSC grantees. However, the findings from all of the interview respondent groups will be triangulated to provide cohesive answers to each of the research questions.

A.2. Purposes and Uses of the Data

This study is intended to inform the Department’s decisions about how to structure future grant competitions; how to support evaluation and performance reporting activities among funded grantees, including technical assistance to improve the quality of evaluations and performance reporting; and how to make the best possible use of grantee evaluation findings.

A.2.1. Document Review

The review of existing documents will serve as the foundation for the study because these documents reflect the information that is actually being provided to the Department. The study will review extant documents describing the technical assistance supports provided to grantees and the activities conducted by grantees. It will examine technical assistance materials provided to grantees, as well as a variety of performance reports, monitoring reports, and evaluation documents for funded grantees, to obtain a complete understanding of their performance reporting and evaluation activities. The study will also collect materials from the grant application process because that is the point at which the CSP and VPSC programs define their expectations regarding evaluation.

In the document review, the study team will assess parameters such as:

Alignment of performance measures with GPRA reporting requirements;

Clarity of objectives and performance measures;

Appropriateness of specific data sources or instruments; and

Completeness of data.

In addition, for grantees conducting an evaluation, the study team may assess the following types of parameters, depending on the type of evaluation:

Appropriateness of design for the research question;

Appropriateness of the timing of data collection in pre-post studies;

Baseline equivalence of comparison group; and

Attrition rate.

The study team will examine the parameters across all grantees. This will identify commonalities—for example, activities that are challenging for many grantees—as well as noteworthy exceptions, such as specific grantees that appear to be doing very well in areas that are difficult for many of their counterparts. These patterns will be useful in and of themselves, as they will provide the Department with a program-wide perspective on grantees’ performance reporting and evaluation activities. They will also be used to inform the selection of respondents for grantee interviews.

A.2.2. Interviews

Semi-structured interviews with a sample of grantees will supplement the information available in the extant documents. In order to more fully understand the factors that shape grantees’ performance reporting and evaluation activities, this study will also conduct interviews with grantee project directors, grantee data managers, and grantee evaluators. The grantee interviews are the subject of this OMB request.

The grantee interviews will examine the grantee experiences and attitudes that underlie what is included in the documentation they provide. Among the topics to be addressed are grantees’ perceptions of technical assistance received, reasons for changes in performance reporting or evaluations undertaken, challenges or barriers in this respect, and ways that grantees use the performance reporting and evaluation data they collect. Because the selection of grantees for interviews will use a purposive sample, the grantee interview findings will not be generalizable to all CSP and VPSC grantees.

In addition to interviews with grantees, interviews with federal program directors and staff, technical assistance contractors, and grant monitoring contractors will help to provide important background and context. The role of each respondent group is described below.

Grantee project directors, data managers, and evaluators are all involved in grantee evaluation activities.

Grantee project directors are the points of contact between the project and the program office. They are responsible for implementing the project as defined in the grant application and for meeting all grant requirements.

Grantee data managers include those individuals responsible for collecting data, cleaning data, and, in some cases, analyzing and reporting on data collected.

Grantee evaluators typically use the project data to assess project implementation and outcomes.

Federal program directors and staff work within the CSP or VPSC program offices conducting the grant competitions, monitoring grantees, and reviewing completed grantee findings in an effort to inform federal policymaking.

Technical assistance contractors are hired by federal program offices to provide technical assistance to grantees regarding their evaluation activities. For the CSP SEA and VPSC grant programs, the technical assistance contractors assisted grantees in developing quality performance measures for their projects.

Grant monitoring contractors, also hired by the federal program offices, are tasked with monitoring the fidelity of each project’s implementation and ensuring grantee compliance with federal regulations.

Appendix C includes a crosswalk of key concepts covered across the interviews, and Appendix E includes topic guides for interviews with federal staff and contractors.

A.3. Use of Information Technology to Reduce Burden

Since the study team will be conducting telephone interviews with a relatively small number of respondents, there is no plan to use information technology for data collection. To safeguard against unnecessary follow-up inquiries with the interviewees, the data collection team will request permission to record all interviews for data accuracy. Additionally, to ensure respondents are prepared for the interview, a list of interview topics will be emailed to respondents in advance of the interviews.

A.4. Efforts to Identify Duplication

The study team has already begun to collect all available grantee documentation from the CSP SEA and VPSC program offices to avoid requesting information from grantees that they have already provided to the Department.

The Department of Education is currently conducting a Program Performance Data Audit under the Data Quality Initiative2 that is examining data collection, analysis, and reporting processes for a sample of grantees. However, the proposed study does not duplicate that effort because the Program Performance Data Audit does not include either of the Department programs included in this study. Furthermore, the proposed study addresses issues that are not covered under the DQI audit project: it examines the assistance strategies that are being used to improve the quality of evaluation and performance reporting, reviews whether grantees are using designs that are appropriate to the evaluation questions and that are internally valid, and identifies the barriers to conducting and improving grantee evaluations of project effectiveness.

A.5. Small Business

All information for this study will be collected from federal government staff and contractors, grantee staff, and evaluators. No small businesses will be involved as respondents. However, some grantees may be schools or small organizations that could be classified as small entities. The study will make every effort to schedule the interviews at times convenient to all respondents.

A.6. Consequences of Not Collecting the Information

This study will examine how various types of technical assistance supports affect the quality of grantee evaluations and performance reporting. Without such a study, the Department would not know the effectiveness of each type of technical assistance, and would not be well informed about how best to allocate technical assistance resources.

A.7. Special Circumstances Justifying Inconsistencies with Guidelines in 5 CFR 1320.6

There are no special circumstances associated with this data collection. The data collection will comply with 5 CFR 1320.6, which authorizes OMB to approve information collections.

A.8. Solicitation of Public Comments and Consultation with People Outside the Agency

A.8.1. Federal Register Announcement

The Department published a notice in the Federal Register on 6/17/12, Vol. 77, page 35665 announcing the agency’s intention to request OMB clearance for this study and soliciting public comments. No public comments were received.

A.8.2. Consultations Outside the Agency

Key study activities will be conducted by the research firm Abt Associates Inc., as a subcontractor to SRI International.

A.9. Payments or Gifts to Respondents

No payment or gift will be provided to respondents.

A.10. Assurance of Confidentiality

Data will be processed in accordance with federal and state privacy statutes. Detailed procedures for making information available to various categories of users are specified in the Education and Training System of Records (63 Fed. Reg. 264, 272, January 5, 1998). The system limits access to personally identifiable information to authorized users. Information may be disclosed to qualified researchers and contractors in order to coordinate programs and to a federal agency, court or party in court, or federal administrative proceeding, if the government is a party.

All study staff involved in collecting, reviewing, or analyzing the data will be knowledgeable about data security procedures. The privacy procedures adopted for this study include the following:

Respondents who serve as grantee project directors or staff members will be advised that they will not be identified by name in the study report.

Study staff will adhere to strict standards of confidentiality. Study staff will maintain the security and integrity of interview data.

Summaries of the interviews will be maintained on a secure server with appropriate levels of password and other types of protection.

No confidentiality assurances are given, although every attempt will be made not to provide information that identifies a subject or district to anyone outside the study team, except as required by law. The study team will aggregate the responses within each program in order to understand what seems most effective in supporting grantees. No individuals or grantee organizations will be identified by name in our reports. The grantee documents, interview notes, and interview recordings will be maintained on a secure server with appropriate levels of password and other types of protection. Respondents will be contacted through their work email addresses and telephone numbers, but no other personally identifiable information will be collected.

A.11. Questions of a Sensitive Nature

The study team does not plan to collect any information that is sensitive in nature. However, respondents may choose not to provide any information that they feel is sensitive. All interviews will be based on semi-structured interview protocols that have been reviewed by the contractor’s institutional review board.

A.12. Estimates of Response Burden

A.12.1. Number of Respondents, Frequency of Response, and Annual Hour Burden

The initial target population for this study includes 33 CSP SEA and 14 VPSC grantees. The document review process will include the entire universe of grantees. The team will use the results of the document review and the interviews with federal staff and contractors to develop a sampling strategy for the grantee interviews.

In consultation with PPSS, the study team will purposively sample approximately 15–20 grantees to interview, based on the challenges and strengths identified for each grantee from the document review as well as on other characteristics that may be of interest, such as whether the grant is from the CSP SEA or VPSC program, whether the grantee attempted to implement a full evaluation, and the grantee's reported level of utilization of technical assistance.

For each grantee, the study team will conduct telephone interviews with the grantee project director and, if applicable, the data manager and evaluator. Each interview is expected to last between 30 and 60 minutes. For the purposes of calculating burden estimates for conducting interviews, we assume that interviews will be conducted with 20 grantees and that each interview will be 60 minutes long. We also assume that interviews will be conducted with three individuals per grantee (a project director, a data manager, and an evaluator), even though in some cases the same individual may be responsible for all three functions. This represents the maximum burden of primary data collection associated with this study. As shown in Exhibit 1, the response burden for conducting interviews is estimated to be a maximum of 60 hours for a total of 60 respondents.

Exhibit 1. Estimated Total Burden Hours of Interviews on Respondents

| Respondents | Time per Response (Hours) | Number of Respondents | Total Time Burden (Hours) |
|---|---|---|---|
| Grantee Project Directors | 1 | 20 | 20 |
| Grantee Data Managers | 1 | 20 | 20 |
| Evaluator | 1 | 20 | 20 |
| Total | | 60 | 60 |

Exhibit reads: Twenty grantee project directors will spend one hour each completing interviews, for a total of 20 hours.

The annual number of respondents, the annual number of responses, and the annual burden hours are each 20.
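The maximum-burden arithmetic behind Exhibit 1 can be reproduced in a few lines. The sketch below is a minimal illustration using the assumptions stated in this section (20 grantees, three respondents per grantee, 60 minutes per interview). The variable and function names are invented for the example.

```python
# Maximum-burden assumptions stated in Section A.12.1.
GRANTEES = 20                # grantees assumed to be interviewed
HOURS_PER_INTERVIEW = 1.0    # each interview assumed to last 60 minutes
ROLES = ["Grantee Project Directors", "Grantee Data Managers", "Evaluators"]


def burden_table(grantees, hours, roles):
    """Build one row per respondent group plus overall totals."""
    rows = [(role, hours, grantees, grantees * hours) for role in roles]
    total_respondents = sum(row[2] for row in rows)
    total_hours = sum(row[3] for row in rows)
    return rows, total_respondents, total_hours


rows, total_respondents, total_hours = burden_table(GRANTEES, HOURS_PER_INTERVIEW, ROLES)

if __name__ == "__main__":
    for role, hours, count, subtotal in rows:
        print(f"{role}: {count} respondents x {hours:g} h = {subtotal:g} h")
    print(f"Total: {total_respondents} respondents, {total_hours:g} hours")
```

Because the interviews are expected to last between 30 and 60 minutes and some individuals may fill more than one role, the actual burden would be at or below these totals.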

A.12.2. Estimates of Annualized Cost to Respondents for the Hour Burdens

As shown in Exhibit 2, the maximum cost to respondents is estimated to be $2,080. Where specific salary information is unavailable, estimated hourly salaries are based on average reported salaries of each respondent group or, in some cases, of individuals in a comparable occupation (see notes in Exhibit 2 for more details).

Exhibit 2. Estimated Annual Burden Cost to Respondents

| Respondent Type | Hourly Salary Estimate | Burden Time per Respondent (Hours) | Estimated Cost to Respondent | Number of Respondents in Category | Estimated Cost Across all Respondents |
|---|---|---|---|---|---|
| Grantee Project Directors | $42 | 1 | $42 | 20 | $840 |
| Grantee Data Managers | $31 | 1 | $31 | 20 | $620 |
| Evaluators | $31 | 1 | $31 | 20 | $620 |
| Total | | | | 60 | $2,080 |

Exhibit reads: Twenty grantee project directors will spend one hour each at an estimated $42 per hour completing interviews, for a total of 20 hours and $840.

Salary Sources:

Grantee project directors: average of five publicly available salaries of CSP and VPSC project directors in different states.

Grantee data managers and evaluators: based on average annual salary for postsecondary education teachers ($64,370) (http://www.bls.gov/oes/current/oes251081.htm).
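The cost figures in Exhibit 2 follow from the hourly salary estimates, the one-hour burden, and the 20 respondents per group. The sketch below reproduces that arithmetic. The conversion of the $64,370 annual salary to an hourly rate assumes a 2,080-hour work year (52 weeks of 40 hours); that divisor is an assumption made here, since the source does not state how the $31 figure was derived. All names are invented for the example.

```python
# Assumption (not stated in the source): a 2,080-hour work year is used to
# convert the annual salary into an hourly rate.
HOURS_PER_WORK_YEAR = 52 * 40

ANNUAL_SALARY_POSTSECONDARY = 64_370   # from the salary sources note
PROJECT_DIRECTOR_HOURLY = 42           # from Exhibit 2
BURDEN_HOURS = 1                       # maximum interview length
RESPONDENTS_PER_GROUP = 20

# Rounded to whole dollars, as in Exhibit 2.
derived_hourly = round(ANNUAL_SALARY_POSTSECONDARY / HOURS_PER_WORK_YEAR)

hourly_rates = {
    "Grantee Project Directors": PROJECT_DIRECTOR_HOURLY,
    "Grantee Data Managers": derived_hourly,
    "Evaluators": derived_hourly,
}

# Cost per group = hourly rate x burden hours x number of respondents.
group_costs = {
    group: rate * BURDEN_HOURS * RESPONDENTS_PER_GROUP
    for group, rate in hourly_rates.items()
}
total_cost = sum(group_costs.values())

if __name__ == "__main__":
    for group, cost in group_costs.items():
        print(f"{group}: ${cost:,}")
    print(f"Total: ${total_cost:,}")
```

Under that assumption the derived hourly rate rounds to the $31 shown in Exhibit 2, and the group costs sum to the stated maximum of $2,080.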

A.13. Estimate of Total Capital and Startup Costs/Operation and Maintenance Costs to Respondents or Record Keepers

There are no additional cost burdens associated with this collection other than those listed at the end of A.12.

A.14. Estimates of Costs to the Federal Government

The annual cost to the federal government for the activities included in this request for approval is $105,956 over three years. This cost estimate includes study design, instrument development, data collection, data transmission and verification, analysis, and reporting. Indirect as well as direct costs are included in this total.

A.15. Changes in Burden

This is a new collection of information.

A.16. Plans for Publication, Analysis, and Schedule

A.16.1. Plans for Publication

The purpose of this research is to examine how the provision of technical assistance is related to the quality of evaluations and performance reporting. The study team will prepare a final report based on the findings of the study. The final report is intended to inform policy discussions related to the provision of technical assistance for evaluations and performance reporting within the Department.

All products of the collection are the property of the Department of Education. As such, the Department will be the exclusive publisher of the study findings.

A.16.2. Analysis

A.16.2.1. Review of Grantee Documentation

As described above, extant documents will provide important information. Grantee documentation will describe grantee activities associated with evaluation and performance reporting. A review of the materials developed by the technical assistance provider will provide an indication of the kinds of technical assistance supports available to grantees. For each grantee, the study team will conduct the following review activities.

At the grantee level, the study team will examine:

Whether grantees provide information for each of the GPRA indicators, and whether what they report is exactly the information needed or only related information.

Changes in evaluation activities and performance measures from application to annual and final reports.

The types of performance measures and evaluations conducted.

For all grantee performance measures, the following review activities will be conducted:

Categorize each measure into one of the following areas: GPRA, student achievement, school quality, student outcome, public relations and communications, scale and scope, capacity building, operations, and financial management.

Determine whether measures are clearly defined.

Examine the quality of data collection in terms of data collection instruments, unit of analysis, and availability of data.

Determine the appropriateness of data collection activities for their intended purpose.

For grantees that conduct non-impact, one-group pre-post evaluations, the following review activities will be conducted:

Specify the type of design and its appropriateness for the research questions.

Assess the appropriateness of the data collection strategy in terms of methods and instruments, unit of measure, timing, and efforts to ensure completeness of data.

Determine whether outcomes are appropriately stated.

For all grantees that attempt to estimate the impacts of the intervention, the review process will assess the completeness and clarity of evaluation reports submitted, whether the grantee used an experimental or quasi-experimental design, and if so, whether the design met four criteria that examine whether these evaluations were conducted successfully and yielded scientifically valid results.

The criteria were developed as part of the Department’s Data Quality Initiative and adapted and revised by Abt Associates as part of its annual review of Mathematics and Science Partnership final-year evaluations. The four areas reviewed are listed below, and the detailed criteria are described in Appendix A.

Data reduction rates (e.g., attrition rates, response rates)

Baseline equivalence of groups

Quality of the measurement instruments

Quality of the data collection methods

A.16.2.2. Analysis of Interview Data

The study team will upload the interview notes into NVivo, a qualitative software package that facilitates data analysis. At the conclusion of the interview period, members of the study team will meet to discuss the themes that emerged and craft a coding scheme that will be applied to the interview data. This coding scheme will be updated based on patterns emerging from the data. The study team will generate and review data outputs from all interviews associated with a given theme to compare responses across grantees and key stakeholders. Analysis of the interview data will identify similarities and differences in the use and apparent effects of technical assistance for evaluations and performance reporting as well as document successes and challenges of grantee evaluations and performance reporting.

Extant documents and interviews will be summarized and triangulated across sources to respond to each of the research questions. A matrix of the data sources that will be used to answer each research question is shown in Exhibit 3.

Exhibit 3. Research Questions by Data Source

| Data Sources | 1. What TA do grantees receive to help them improve the quality of their evaluations and performance reporting? | 2. How do grantees examine project outcomes and effectiveness? | 3. How are grantee evaluations and performance reporting used to inform project improvement and federal policy making? |
| --- | --- | --- | --- |
| Extant Documents | | | |
| Grant requests for applications | | | |
| Grant applications | | | |
| Annual and final performance reports | | | |
| Grantee evaluation reports | | | |
| Grant monitoring reports | | | |
| Guidance to grantees from the technical assistance contractor, including technical assistance materials | | | |
| Interviews | | | |
| Grantee project directors and data managers | | | |
| Grantee evaluators | | | |
| Federal program directors and staff | | | |
| Technical assistance contractors | | | |
| Grant monitoring contractors | | | |

Exhibit reads: Among extant documents, grant requests for applications will provide data to address Research Question 1.

A.16.3. Project Time Schedule

The estimated project timeline is presented in Exhibit 4 below.

Exhibit 4. Estimated Project Timeline

| Fall 2011–spring 2012 | |
| Spring 2012 | |
| Fall 2012 | |
| Summer 2012–fall 2012 | |
| Fall 2012–spring 2013 | |

A.17. Approval to Not Display Expiration Date

The data collection instruments will display the expiration date.

A.18. Exceptions to Item 19 of OMB Form 83-I

No exceptions are sought.

Appendix A: Criteria for Classifying Grantee Impact Evaluations

This appendix includes the criteria for classifying grantee evaluation designs that will be used to determine whether grantees successfully collected and analyzed performance measures to evaluate whether outcomes were caused by their programs. The criteria were developed as part of the Department’s Data Quality Initiative and revised by Abt Associates as part of its annual review of Mathematics and Science Partnership final-year evaluations.

Criteria for Classifying Designs of Grantee Evaluations

Experimental study. The study measures the intervention’s effect by randomly assigning individuals (or other units, such as classrooms or schools) to a group that participated in the intervention, or to a control group that did not, and then compares post-intervention outcomes for the two groups.

Quasi-experimental study. The study measures the intervention’s effect by comparing post-intervention outcomes for treatment participants with outcomes for a comparison group (that was not exposed to the intervention), chosen through methods other than random assignment. For example:

Comparison-group study with equating—a study in which statistical controls and/or matching techniques are used to make the treatment and comparison groups similar in their pre-intervention characteristics.

Regression-discontinuity study—a study in which individuals (or other units, such as classrooms or schools) are assigned to treatment or comparison groups on the basis of a “cutoff” score on a pre-intervention nondichotomous measure.

Criteria for Assessing Whether Experimental and Quasi-Experimental Designs Were Conducted Successfully and Yielded Scientifically Valid Results

Data Reduction Rates (e.g., Attrition Rates, Response Rates) [footnote 3]

Met the criterion. Key post-test outcomes were measured for at least 70 percent of the original sample (treatment and comparison groups combined) and differential attrition (i.e., difference between treatment group attrition and comparison group attrition) between groups was less than 15 percentage points.

Did not meet the criterion. Key post-test outcomes were measured for less than 70 percent of the original sample (treatment and comparison groups combined) and/or differential attrition (i.e., difference between treatment group attrition and comparison group attrition) between groups was 15 percentage points or higher.

Not applicable. This criterion was not applicable to quasi-experimental designs unless it was required for use in establishing baseline equivalence (see the baseline equivalence of groups criterion below).
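The two thresholds in the data reduction rates criterion can be expressed as a short check. The following is a minimal sketch in Python, not part of the review protocol: the function name and the four count parameters are hypothetical, and it covers only the "met" versus "did not meet" determination, not the "not applicable" case. Exact fractions are used so that values falling exactly on a threshold are classified as the text specifies.

```python
from fractions import Fraction


def meets_data_reduction_criterion(
    treat_original: int,
    treat_posttest: int,
    comp_original: int,
    comp_posttest: int,
) -> bool:
    """Return True when both thresholds of the criterion are satisfied.

    All four arguments are hypothetical inputs: the number of participants
    in each group at the start of the study and the number for whom key
    post-test outcomes were measured.
    """
    if treat_original <= 0 or comp_original <= 0:
        raise ValueError("original group sizes must be positive")

    # Share of the original combined sample with post-test outcomes.
    overall_retention = Fraction(
        treat_posttest + comp_posttest, treat_original + comp_original
    )

    # Differential attrition: the gap between the two groups' attrition
    # rates, which equals the gap between their retention rates.
    differential_attrition = abs(
        Fraction(treat_posttest, treat_original)
        - Fraction(comp_posttest, comp_original)
    )

    # At least 70 percent retained overall, and a differential of less
    # than 15 percentage points.
    return (
        overall_retention >= Fraction(70, 100)
        and differential_attrition < Fraction(15, 100)
    )
```

For example, a study that starts with 100 participants per group and measures post-test outcomes for 80 treatment and 75 comparison participants retains 77.5 percent overall with a 5-point differential, so it would meet the criterion under this sketch.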

Baseline Equivalence of Groups

Met the criterion (quasi-experimental studies). There were no significant pre-intervention differences between treatment and comparison group participants in the analytic sample on the outcomes studied, or on variables related to the study’s key outcomes.

The mean difference in the baseline measures was less than or equal to 5 percent of the pooled sample standard deviation; or

The mean difference in the baseline measures was more than 5 percent but less than or equal to 25 percent of the pooled sample standard deviation, and the differences were adjusted for in analyses (e.g., by controlling for the baseline measure).

If the data required for establishing baseline equivalence in the analytic sample were missing (and there was evidence that equivalence was tested), then baseline equivalence could have been established in the baseline sample, provided that the data reduction rates criterion above was met.

Met the criterion (experimental evaluations that did not meet the data reduction rates criterion above). There were no significant pre-intervention differences between treatment and comparison group participants in the analytic sample on the outcomes studied, or on variables related to the study’s key outcomes.

Did not meet the criterion. Baseline equivalence between groups in a quasi-experimental design was not established (i.e., one of the following conditions was met):

Baseline differences between groups exceeded the allowable limits; or

The statistical adjustments required to account for baseline differences were not conducted in analyses; or

Baseline equivalence was not examined or reported in a quasi-experimental evaluation (or an experimental evaluation that did not meet the data reduction rates criterion above).

Not applicable. This criterion was not applicable to experimental designs that met the data reduction rates criterion above.
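The 5 percent and 25 percent thresholds for quasi-experimental studies can be illustrated with a short calculation. This is a minimal sketch in Python under stated assumptions: the function and parameter names are hypothetical, and the pooled standard deviation is computed with the common formula that weights each group's variance by its degrees of freedom, which the criteria above do not specify. It encodes only the numeric thresholds, not the broader judgment about significant pre-intervention differences.

```python
import math


def pooled_sd(sd_treat: float, n_treat: int, sd_comp: float, n_comp: int) -> float:
    """Pooled standard deviation of two groups (weighting by n - 1 is an assumption)."""
    numerator = (n_treat - 1) * sd_treat**2 + (n_comp - 1) * sd_comp**2
    return math.sqrt(numerator / (n_treat + n_comp - 2))


def baseline_equivalence_met(
    mean_treat: float,
    mean_comp: float,
    sd_treat: float,
    n_treat: int,
    sd_comp: float,
    n_comp: int,
    adjusted_in_analysis: bool,
) -> bool:
    """Apply the 5 percent / 25 percent thresholds to one baseline measure."""
    # Baseline mean difference expressed as a share of the pooled SD.
    ratio = abs(mean_treat - mean_comp) / pooled_sd(sd_treat, n_treat, sd_comp, n_comp)

    if ratio <= 0.05:
        # Small enough that no statistical adjustment is required.
        return True
    if ratio <= 0.25:
        # Acceptable only if the analysis controlled for the baseline measure.
        return adjusted_in_analysis
    # Difference exceeds the allowable limit.
    return False
```

With both groups at a standard deviation of 10 and 50 participants each, a 2-point difference in baseline means is 20 percent of the pooled standard deviation, so the criterion would be met only if the analysis adjusted for the baseline measure.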

Quality of the Measurement Instruments

Met the criterion. The study used existing data collection instruments that had already been deemed valid and reliable to measure key outcomes; or data collection instruments developed specifically for the study were sufficiently pre-tested with subjects who were comparable to the study sample.

Did not meet the criterion. The key data collection instruments used in the evaluation lacked evidence of validity and reliability.

Did not address the criterion.

Quality of the Data Collection Methods

Met the criterion. The methods, procedures, and timeframes used to collect the key outcome data from treatment and control groups were the same.

Did not meet the criterion. Instruments/assessments were administered in a different manner and/or at different times to treatment and control group participants.

Appendix B: Evaluation Framework

An evaluation framework that associates evaluation types and appropriate purposes is displayed in Exhibit B.1 below (adapted from Frechtling 2010 and Lauer 2004).

Exhibit B.1: Evaluation Framework

There are two basic types of evaluations that can be used to examine the implementation, progress, outcomes and impacts of grantee projects.

Formative evaluations answer questions about how a project operates and document the procedures and activities undertaken. Such evaluations help to identify problems faced in implementing the project and potential strategies for overcoming these problems. Implementation evaluations help project staff ensure that the project is being implemented appropriately, and progress evaluations provide information on the extent to which specified program objectives are being attained. Both types of evaluations instruct project staff on changes needed to fully develop the project.

Summative evaluations focus on questions of how well a fully developed project reached its stated goals. Descriptive evaluations answer questions about what, how, or why something is happening, and impact evaluations answer questions about whether something causes an effect. Only well-designed and implemented summative evaluations can help to inform policy makers about future funding decisions. The Department of Education’s work to improve the quality of grantee evaluations has meant ensuring that grantees select evaluation activities that match Department and grantee needs, and successfully complete those activities.

Appendix C. Crosswalk of Interview Topics by Respondent Type

| Topic | Grantee | TA Contractor | Grant Monitoring Contractor | Federal Program Staff |
| --- | --- | --- | --- | --- |
| 1. What technical assistance do OII grantees receive to help them improve the quality of their evaluations and performance reporting? | | | | |
| ED’s goals and priorities with respect to provision of TA | | | | |
| Guidance given by ED to TA contractor about provision of TA | | | | |
| Selection of TA contractor (process, reasons) | | | | |
| Description of specific TA provided by federal program offices (topic, format, intensity, etc.) | | | | |
| Description of specific TA provided from sources other than federal program offices to improve performance reporting/evaluation | | | | |
| Grantees’ opinions/feedback about TA received | | | | |
| Other strategies and supports offered to grantees (outside of TA provided by contractor) to improve performance reporting/evaluation | | | | |
| Opinions about types of TA that would be useful to grantees | | | | |
| 2. How do grantees examine project outcomes and effectiveness? | | | | |
| Guidance given to grantees about types of performance reporting/evaluation activities to undertake | | | | |
| Issues related to …. | | | | |
| Grantee conduct of evaluation as part of ED grant | | | | |
| Grantee conduct of evaluation outside of ED grant | | | | |
| Degree to which TA has resulted in changes to (improvements in) grantee performance reporting and evaluation activities | | | | |
| Degree to which other factors (besides TA) account for changes to (improvements in) performance reporting and evaluation activities | | | | |
| 3. How are grantee evaluations and performance reporting used to inform project improvement and federal policymaking? | | | | |
| Usefulness of performance data or evaluation findings to managing grantees’ current programs | | | | |
| Usefulness of performance data or evaluation findings to planning grantees’ future programs | | | | |
| Usefulness of performance data or evaluation findings to federal policymaking | | | | |
| Barriers to greater or more effective grantee use of performance data or evaluation findings | | | | |

Appendix E. Interview Topic Guide for Federal Program Staff and Contractors

I. Goals of TA Provided (TA Contractor, Federal Program Staff)

Goals / priorities of TA efforts provided to grantees

Specific performance reporting/evaluation activities encouraged

Guidance, if any, given to grantees about GPRA reporting

Guidance, if any, given to grantees regarding reporting on effectiveness

Guidance, if any, given to grantees regarding evaluation designs

II. Format of TA Provided (TA Contractor, Grant Monitoring Contractor, Federal Program Staff)

Types of TA offered (e.g., workshops, conference presentations, webinars, handbooks, one-on-one consultations)

For each type of TA offered:

Topic

Process of curriculum development (why this topic, was content ED-prescribed)

Confirm funding

Timing (when offered, how often)

Format, method of delivery

Intensity (length of time)

Attendance (number, percent of eligible)

Participant feedback sought systematically?

Reasons for nonattendance (to extent known)

III. Perceptions of TA Provided (TA Contractor, Grant Monitoring Contractor, Federal Program Staff)

Grantee feedback received (re: helpfulness, perceived strengths of TA, suggestions for improvement)

Grantee questions asked during/after TA sessions

Perceptions of grantees’ most pressing issues re: performance measurement, performance reporting and/or evaluation

Your assessment of what aspects of TA worked well, suggestions for improvement

IV. Grantees’ Issues with respect to Performance Measurement/Evaluation (TA Contractor, Grant Monitoring Contractor, Federal Program Staff)

Perceptions of performance measurement/evaluation issues that are/are not a challenge for grantees

Perceptions of types of TA most needed by grantees, with respect to:

Topic

Frequency

Format

Intensity

Perceptions (to the extent possible) of effects of TA on grantees’ performance measurement/evaluation activities

Perceptions (to the extent possible) of barriers to greater use

Perceptions (to the extent possible) of how grantees use results of performance measurement/evaluation to make changes to the program

Perceptions (to the extent possible) of barriers to greater use

Perceptions (to the extent possible) of how federal program staff use the results of grantees’ performance measurement/evaluations to inform program monitoring and policymaking

Perceptions (to the extent possible) of barriers to greater use

V. Performance Measurement/Evaluation Activities Outside of the CSP/VPSC Grants (Grant Monitoring Contractor, Federal Program Staff)

TA received by grantees outside of their CSP/VPSC grants

Topic

Supported by whom?

How does it relate to CSP SEA/VPSC-funded activities?

Performance measurements/evaluations that grantees undertake outside their CSP/VPSC grant

What

For whom?

How does it relate to CSP SEA/VPSC-funded activities?

VI. Selection of TA Contractor (Federal Program Staff)

How the TA contractor was selected

Why

Guidance given about TA to provide

VII. Other Strategies and Supports offered to Grantees (outside of TA contractor) (Grant Monitoring Contractor, Federal Program Staff)

Goals and priorities with respect to improving quality of grantees’ performance measurement/evaluations (which aspects are more/less important, why)

Are other supports (besides the TA offered by the TA contractor) offered to grantees for performance measurement/evaluation?

If so, for each type:

Topic

Grantee feedback about helpfulness, what worked well, suggestions for improvement

Your assessment of whether supports were helpful, suggestions for improvement

Are other strategies used to improve the quality of grantee performance measurement/evaluations (e.g., competitive priority, selection points given for evaluations, evaluation requirements)?

Description

Perceptions about effectiveness of each strategy

Suggestions for improvement

1 Impact evaluations are summative evaluations that, if implemented properly, could be used to make causal claims about the effects of an intervention. Non-impact evaluations include both formative and summative evaluations, but cannot be used to make causal claims.

2 The Program Performance Data Audit project (OMB Number: 1875-NEW(4647)) is being conducted for the Policy and Program Studies Service under contract ED-04-CO-0049.

3 The data reduction and baseline equivalence criteria were adapted from the What Works Clearinghouse standards (see http://ies.ed.gov/ncee/wwc/pdf/wwc_procedures_v2_standards_handbook.pdf).


| File Type | application/msword |

| Author | Noah Mann |

| Last Modified By | katrina.ingalls |

| File Modified | 2012-12-07 |

| File Created | 2012-12-07 |
