SEP Detailed Evaluation

Attachment A. SEP Detailed Evaluation Plan

State Energy Program Evaluation

OMB: 1910-5170

Detailed Study Plan: Final

National Evaluation of the United States Department of Energy’s State Energy Program

Oak Ridge National Laboratory

Prepared by KEMA Inc and its subcontractors

Fairfax, VA

June 30, 2011

1. Introduction 1

1.1.1 SEP History 3

1.1.2 Program Year 2008 v. ARRA Period 5

1.2 Evaluation Objectives and Approach 8

1.2.1 Objectives 8

1.2.2 Overview of Approach 10

1.2.3 Selected Methodological and Logistical Challenges and Solutions 14

1.3 Structure of the Detailed Study Plan 15

2. Characterization of Programmatic Activities 17

2.1 ARRA-funded Programmatic Activities: PY2009 – 2011 21

2.1.1 Sources of Information 21

2.1.2 Decision Rules for Classifying Programmatic Activities 23

2.2 PY2008 Programmatic Activities 24

2.2.1 Sources of Information 24

2.2.2 Decision Rules for Classifying Programmatic Activities 26

2.3 Sub-categorization of BPACs 27

3. Sampling of Programmatic Activities and Expansion of Sample Results 31

3.1 Overview of Sampling Approach 31

3.1.1 Total Sample Size 31

3.1.2 Sampling Frame 31

3.1.3 Rigor Level 31

3.1.4 PA Sample Allocation 32

3.4 Implementing the PA Sample Design 49

3.4.1 Misclassification and Multiple Classifications 49

3.4.2 Independent State-Specific Evaluations 50

3.5 BPAC-Specific Impact Calculations 51

3.5.1 Portfolio-Level Impact Calculations 52

3.5.2 Error Bounds 52

4. Estimation of Energy Impacts 54

4.1.1 Framework for Specification of Impact Assessment Methods 54

4.1.2 Groupings of Programs for Energy Impact Assessment Planning 55

4.2 Evaluation Plans: Building Retrofit and Equipment Replacement 61

4.2.1 Introduction 61

4.2.2 Energy Impacts Assessment Approach 66

4.3 Renewable Energy Market Development Programs 78

4.3.1 Introduction 78

4.3.2 Energy Impacts Assessment Approach: Renewable Energy Market Development - Projects 80

4.3.3 Energy Impacts Assessment Approach: Renewable Energy Market Development - Manufacturing 84

4.3.4 Energy Impacts Assessment Approach: Renewable Energy Market Development – Clean Energy Policy Support 88

4.4 Information and Training Programs 90

4.4.1 Introduction 90

4.4.2 Energy Impacts Assessment Approach 90

4.5 Codes and Standards Programs 95

4.5.1 Introduction 95

4.5.2 Estimation of Potential Energy Effects 95

5. Attribution Approaches 98

5.1.1 Fundamental Research Questions 99

5.1.2 Available Methods for Assessing Attribution 107

5.1.3 Application of Available Methods to Evaluation PAs in Different Groups 112

5.2.1 Assessment of Market Actor Response 115

5.2.2 Relative effect of multiple programs 117

5.2.3 Influence of SEP on Other Programs 122

5.3.1 Renewable Energy Market Development – Manufacturing 123

5.3.2 Codes and Standards Programs 126

6. Evaluation of Carbon Impacts 131

6.1 Assessment of Carbon Impacts 131

6.2 Presentation of Results 133

7. Evaluation of Employment Impacts 134

7.1 Assessment of Employment Impacts 134

7.1.1 Broad Parameters of Jobs Assessment 134

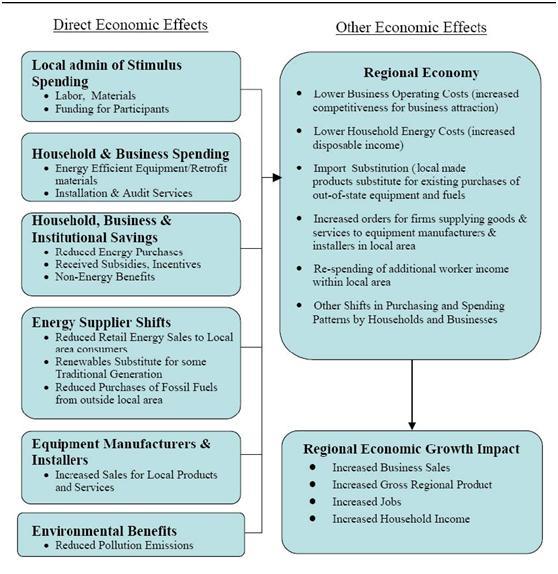

7.1.2 Economic Impact Model for Identifying Job Impacts

7.1.3 Translating SEP Project Direct Effects into Economic Events

7.1.4 Presentation of Job Impacts

8. Benefit-Cost Analysis

8.1 Types of Benefit-Cost Metrics

8.2 Implementing Benefit-Cost Calculations

8.2.1 SEP RAC Test

8.2.2 Net present value of energy savings versus program costs

8.3 Level of Benefit-Cost Assessment

9. Timeline

FIGURES

Figure 1. SEP Funding Allocations by State (PY2008 and ARRA Period)

Figure 2. Key Evaluation Metrics

Figure 3. Distinguishing Attributes by Refined BPAC

Figure 4: BPACs and Subcategories

Figure 5: Percent of PY2008 SEP Budget by BPAC and Subcategory

Figure 6: Percent of ARRA SEP Budget by BPAC and Subcategory

Figure 7: Number of Available PAs for Selected BPAC/Subcategory (PY2008)

Figure 8: Number of Available PAs for Selected BPAC/Subcategory (ARRA)

Figure 9: Allocated Sampling Targets by BPAC/Subcategory and Rigor Level (PY2008)

Figure 10: Allocated Sampling Targets by BPAC/Subcategory and Rigor Level (ARRA)

Figure 12. Representation of Energy Savings from Retrofit

Figure 13. Sample Sizes Required for 90 Percent Confidence Intervals Around Ratio Estimators

Figure 15. Verification for Building Retrofit & Equipment Replacement Group: High Rigor

Figure 16. Ratepayer Program and SEP Budgets for Selected States ($ millions)

Figure 17. Applications of Attribution Assessment Methods to Evaluation of PAs in PA Groupings

Figure 18. Overview of Research on Market Actor Effects

Figure 20. Forecasts of Measure Sales: Baseline and Actual with Program Support

Figure 21. Acceleration of Code Adoption

Figure 22: REMI Economic Forecasting Model – Basic Structure and Linkages

Figure 23. Identifying Economic Impacts in the REMI Framework

This document provides a detailed plan for conducting an evaluation of the State Energy Program (SEP), a national program operated by the U.S. Department of Energy (DOE). DOE’s Office of Weatherization and Intergovernmental Programs (OWIP), which manages SEP, commissioned this evaluation. Its principal objective is to develop independent estimates of the following key program outcomes:

Reduction in energy use and expenditures,

Production of energy from renewable sources,

Reduction in carbon emissions associated with energy production and use, and

Generation of jobs through the funded activities.

Because of the magnitude and time-limited nature of ARRA funding, this evaluation effort follows two different but coordinated paths. The contractor team will examine key program outcomes both for SEP Program Year 2008 (July 2008 to June 2009) and for the ARRA period (2009 to present). Based on early feedback from stakeholders and DOE program staff, the evaluation effort was refocused on PY2008, because that year is more likely to characterize the SEP program after the ARRA period, when funding returns to pre-ARRA levels.

The State Energy Program (SEP) provides grants and technical support to the states and U.S. territories, enabling them to carry out a wide variety of cost-shared energy efficiency and renewable energy activities that meet each state’s unique energy needs while also addressing national goals such as energy security. Congress created SEP in 1996 by consolidating the State Energy Conservation Program (SECP) and the Institutional Conservation Program (ICP), both of which were established in 1975.

To be counted as part of SEP, an activity must be included in the State Plan submitted to SEP and supported, at least in part, by SEP funds. Evaluators commonly refer to a related set of activities (e.g., multiple energy audits) performed in a single year under a common administrative framework as a “program”; in this document, such efforts are referred to as “programmatic activities” (PAs). Typically, the programmatic activities that states design and carry out with SEP support involve a number of actions (e.g., multiple retrofits performed or loans made). Some combine different types of actions to advance the program’s objectives; for example, energy audits may be combined with financial incentives such as loans or grants to promote energy efficiency measures in targeted buildings.

In February 2009, the American Recovery and Reinvestment Act (ARRA) was signed into law, allocating $36.7 billion to the Department of Energy (DOE) to fund a range of energy-related initiatives: energy efficiency, renewable energy, electric grid modernization, carbon capture and storage, transportation efficiency, alternative fuels, environmental management, and other energy-related programs. The primary goals for DOE programs funded by ARRA include rapid job creation, job retention, and reductions in energy use and associated greenhouse gas emissions; expenditure deadlines were set to ensure that funds were spent within several years. SEP received $3.1 billion of these funds, which began to be disbursed in late 2009. The deadline for expenditure of all ARRA funds allocated to SEP is April 2012. This program period thus spans roughly two and one-half years, covering SEP Program Years (PY) 2009 – 2011.1 By way of contrast, SEP funding in PY2008 was $33 million.2

Under the American Recovery and Reinvestment Act (ARRA), the amount of funding available to support the states’ SEP activities increased dramatically, and as a result, the mix of programmatic activities changed from previous patterns. Once the ARRA funding has been expended, the volume and mix of SEP activities are expected to return to levels typical of the pre-ARRA period. For this evaluation, OWIP has elected to assess the outcomes of programmatic activities for one program year (PY2008) prior to the distribution of ARRA funding, as well as for the full set of programmatic activities that received ARRA support. OWIP believes that this approach will make the best use of limited evaluation resources, given that future SEP program years are more likely to resemble pre-ARRA activities than the ARRA-funded activities. The latter are being implemented in Program Years 2009 – 2011. Given the strong differences in volume, scope, and relative priority of policy goals between the pre-ARRA and the ARRA-funded activities, the evaluation team believes it is most appropriate to treat the two efforts as separate programs for purposes of sampling state-level activities and estimating national impacts.

The remaining sections of this Introduction provide an overview of SEP as it operated prior to ARRA, the organization and operation of SEP under ARRA funding, the objectives of this evaluation, and its basic methodological approach. Each of these topics is treated in considerable detail in subsequent chapters of this Detailed Study Plan.

Congress created the Department of Energy's State Energy Program in 1996 by consolidating the State Energy Conservation Program (SECP) and the Institutional Conservation Program (ICP). Both programs went into effect in 1975. SECP provided states with funding for energy efficiency and renewable energy projects. ICP provided hospitals and schools with a technical analysis of their buildings and identified the potential savings from proposed energy conservation measures.

Several pieces of legislation form the framework for the State Energy Program:3

The Energy Policy and Conservation Act of 1975 (P.L. 94-163) established programs to foster energy conservation in federal buildings and major U.S. industries. It also established the State Energy Conservation Program.

The Energy Conservation and Production Act of 1976

The Warner Amendment of 1983 (P.L. 95-105) allocated oil overcharge funds, called Petroleum Violation Escrow (PVE) funds, to state energy programs. In 1986, PVE funding became substantial when the Exxon and Stripper Well settlements added more than $4 billion to the pool.

The State Energy Efficiency Programs Improvement Act of 1990 (P.L. 101-440) encouraged states to undertake activities designed to improve efficiency and stimulate investment in and use of alternative energy technologies.

The Energy Policy Act (EPAct) of 1992 (P.L. 102-486) allowed DOE funding to be used to finance revolving funds for energy efficiency improvements in state and local government buildings. (However, no funding was provided for this activity.) EPAct recognized the crucial role states play in regulating energy industries and promoting new energy technologies. EPAct also expanded the policy development and technology deployment role for the states. Many EPAct regulations extended through 2000, and we are currently waiting for updates through the National Energy Policy.

The American Recovery and Reinvestment Act of 2009 provided $3.1 billion for SEP formula grants with no matching fund requirements.

SEP Goals and Metrics

The State Energy Program (SEP) is a cornerstone of a larger partnership between DOE and the states. SEP program goals therefore reflect the partnership's long-term strategic goals and each energy office's current year objectives.

Goals. The mission of the State Energy Program is to provide leadership to maximize the benefits of energy efficiency and renewable energy through communications and outreach activities, technology deployment, and accessing new partnerships and resources. Working with DOE, state energy offices address long-term national goals to:

Increase the energy efficiency of the U.S. economy,

Reduce energy costs,

Improve the reliability of electricity, fuel, and energy services delivery,

Develop alternative and renewable energy resources,

Promote economic growth with improved environmental quality, and

Reduce reliance on imported oil.

The State Energy Program also helps states prepare for natural disasters and improve the security of the energy infrastructure. Specifically, SEP helps states meet federal requirements to:

Prepare an energy emergency plan, and

Develop an individual state energy plan. Each state shares its plan with DOE, sets short-term objectives, and outlines long-term goals.

The State Energy Program outlines this vision and mission in more detail in its “Strategic Plan for the 21st Century.”4

Metrics. Through the State Energy Program, DOE provides a wide variety of financial and technical assistance to the states. States routinely add their own funds and leverage private-sector investment in energy projects. Some results of the State Energy Program are easily measured; for example, energy and cost savings can be quantified according to the types of projects state energy offices administer. Other benefits, such as the development of an energy emergency plan, are less tangible.

Funding Formulas and Competitive Procedures

SEP provides money to each state and territory according to a formula that accounts for population and energy use. In addition to these “Formula Grants,” SEP “Special Project” funds are made available on a competitive basis to carry out specific types of energy efficiency and renewable energy activities (U.S. DOE 2003c). The resources provided by DOE typically are augmented by money and in-kind assistance from a number of sources, including other federal agencies, state and local governments, and the private sector.

For program year (PY) 2008, the states’ SEP efforts included several mandatory activities, such as establishing lighting efficiency standards for public buildings, promoting car and vanpools and public transportation, and establishing policies for energy-efficient government procurement practices. The states and territories also engaged in a broad range of optional activities, including holding workshops and training sessions on a variety of topics related to energy efficiency and renewable energy, providing energy audits and building retrofit services, offering technical assistance, supporting loan and grant programs, and encouraging the adoption of alternative energy technologies. The scope and variety of activities undertaken by the states and territories in PY2008 were extremely broad, reflecting the diversity of conditions and needs across the country and the efforts of participating states and territories to respond to them.

A total of $33 million in SEP funding was made available to the states and territories during PY2008, as shown in Figure 1. Under ARRA, the amount of funding available to support the states’ SEP activities increased dramatically, and the mix of programmatic activities funded also changed considerably.

Figure 1. SEP Funding Allocations by State (PY2008 and ARRA Period)

| State/Territory | PY2008 SEP Formula Grant Allocation | SEP ARRA Obligations |
|---|---|---|
| Alabama | $517,000 | $55,570,000 |
| Alaska | $250,000 | $28,232,000 |
| American Samoa | $160,000 | $18,550,000 |
| Arizona | $476,000 | $55,447,000 |
| Arkansas | $403,000 | $39,416,000 |
| California | $2,151,000 | $226,093,000 |
| Colorado | $518,000 | $49,222,000 |
| Connecticut | $493,000 | $38,542,000 |
| Delaware | $223,000 | $24,231,000 |
| District of Columbia | $212,000 | $22,022,000 |
| Florida | $1,135,000 | $126,089,000 |
| Georgia | $734,000 | $82,495,000 |
| Guam | $167,000 | $19,098,000 |
| Hawaii | $233,000 | $25,930,000 |
| Idaho | $259,000 | $28,572,000 |
| Illinois | $1,398,000 | $101,321,000 |
| Indiana | $800,000 | $68,621,000 |
| Iowa | $472,000 | $40,546,000 |
| Kansas | $422,000 | $38,284,000 |
| Kentucky | $539,000 | $52,533,000 |
| Louisiana | $620,000 | $71,694,000 |
| Maine | $298,000 | $27,305,000 |
| Maryland | $615,000 | $51,772,000 |
| Massachusetts | $753,000 | $54,911,000 |
| Michigan | $1,177,000 | $82,035,000 |
| Minnesota | $716,000 | $54,172,000 |
| Mississippi | $378,000 | $40,418,000 |
| Missouri | $656,000 | $57,393,000 |
| Montana | $244,000 | $25,855,000 |
| Nebraska | $321,000 | $30,910,000 |
| Nevada | $279,000 | $34,714,000 |
| New Hampshire | $280,000 | $25,827,000 |
| New Jersey | $964,000 | $73,643,000 |
| New Mexico | $297,000 | $31,821,000 |
| New York | $1,941,000 | $123,110,000 |
| North Carolina | $750,000 | $75,989,000 |
| North Dakota | $232,000 | $24,585,000 |
| Northern Marianas | $160,000 | $18,651,000 |
| Ohio | $1,311,000 | $96,083,000 |
| Oklahoma | $463,000 | $46,704,000 |
| Oregon | $427,000 | $42,182,000 |
| Pennsylvania | $1,336,000 | $99,684,000 |
| Puerto Rico | $412,000 | $37,086,000 |
| Rhode Island | $258,000 | $23,960,000 |
| South Carolina | $463,000 | $50,550,000 |
| South Dakota | $226,000 | $23,709,000 |
| Tennessee | $628,000 | $62,482,000 |
| Texas | $1,858,000 | $218,782,000 |
| Utah | $327,000 | $35,362,000 |
| Vermont | $226,000 | $21,999,000 |
| Virgin Islands | $174,000 | $20,678,000 |
| Virginia | $742,000 | $70,001,000 |
| Washington | $585,000 | $60,944,000 |
| West Virginia | $366,000 | $32,746,000 |
| Wisconsin | $740,000 | $55,488,000 |
| Wyoming | $215,000 | $24,941,000 |
| Total | $33,000,000 | |

The overall objective of this evaluation is to develop independent, quantitative estimates of key program outcomes for the largest programmatic activities, which together account for at least 80 percent of funding in each period of study, and to aggregate these estimates to selected groups of Broad Program Activity Categories (BPACs) that share common energy savings mechanisms, as described in Section 4.
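A minimal sketch of the 80 percent coverage rule, assuming it means ranking PAs by funding and taking the largest ones until their combined share of a period's funding reaches the threshold. The function name `select_top_pas`, the PA identifiers, and the dollar amounts are hypothetical and for illustration only.

```python
from typing import List, Tuple

def select_top_pas(pas: List[Tuple[str, float]], coverage: float = 0.80) -> List[str]:
    """Return the largest PAs (by funding) whose combined funding first
    reaches `coverage` of the period's total. `pas` holds (pa_id, funding)."""
    total = sum(funding for _, funding in pas)
    selected: List[str] = []
    cumulative = 0.0
    # Rank PAs from largest to smallest funding and accumulate until covered.
    for pa_id, funding in sorted(pas, key=lambda p: p[1], reverse=True):
        if cumulative >= coverage * total:
            break
        selected.append(pa_id)
        cumulative += funding
    return selected

# Hypothetical PAs and funding amounts (not SEP data).
example = [("PA-1", 500_000), ("PA-2", 300_000), ("PA-3", 120_000),
           ("PA-4", 50_000), ("PA-5", 30_000)]
print(select_top_pas(example))
```

Ranking by funding keeps the evaluated set small while still covering most program dollars; the remaining PAs fall outside the coverage target rather than being estimated individually.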

Figure 2 lists the key metrics to be estimated along with elements of the working definitions DOE has assigned to them for purposes of this evaluation.

Figure 2. Key Evaluation Metrics

| Metric Category | Metric | Elements of the Working Definition |
|---|---|---|
| Energy Savings | Annual energy savings | |
| | Lifetime energy savings | |
| | Electric demand savings | |
| Renewable Energy Capacity and Generation | Capacity | |
| | Annual Renewable Energy Generation | |
| | Lifetime Renewable Energy Generation | |
| Energy Cost Savings | Annual energy cost savings | |
| | Lifetime energy cost savings | |
| Carbon Emissions Reductions | Annual CO2 Emissions Avoided | |
| | Lifetime CO2 Emissions Avoided | |
| Direct and Indirect Job Impacts | Direct Job Impacts (Created or Retained) | |
| | Indirect Job Impacts (Created or Retained) | |

DOE expects that the analysis conducted to quantify the evaluation metrics listed in Figure 2 will help to identify lessons learned that can be applied to improve the outcomes and cost effectiveness of future SEP operations.

The basic steps or stages in the evaluation will be as follows.

Characterize the full set of PY2008 and ARRA-funded programmatic activities in terms of Broad Program Activity Categories (BPACs) and measures of size. In terms of the evaluation, the principal objectives of this step are to:

Develop the sample frame from which the individual PAs to be evaluated will be selected, and on which the expansion of individual PA results to the full program will be based.

Provide input data to support sample design, including the definition of subcategories beyond the Program Year and BPAC groupings, and the allocation of sample resources to the final set of sample subcategories.

Develop the information needed to expand the results from the sampled PAs to estimate total impacts for the BPAC Groups, PY2008 programmatic activities, and ARRA-funded programmatic activities.

Gather information on the level and quality of available program documentation, which will be used to determine the final evaluation approaches for specific BPACs.

Develop the sample of individual PAs for evaluation. The KEMA team will select a sample of at least 82 individual PAs from the more than 450 PAs in operation during PY2008 and the 575 ARRA-funded PAs. See Section 3 for a description of the objectives, methods, and preliminary design of the PA sample selection process. Once a PA has been selected into the sample, the KEMA team will carry out the evaluation in the following steps.

Assess evaluability of the sampled individual PAs. The Evaluation Team will need some specific pieces of information in order to determine whether a PA that has been selected into the sample can be evaluated at the assigned level of rigor. These are as follows.

Match of actual program operations to the BPAC definition. As discussed below, the KEMA team has developed detailed working definitions for each BPAC. If, upon selection and detailed review of its activities, we find that a PA has been misclassified, it will be evaluated according to its actual activities. Its expansion weight (the factor used to project an estimate to the population) will, however, be based on the BPAC from which it was selected.

Progress in implementation. To carry out a high- or medium-high-rigor evaluation, the PA must have completed enough of its targeted actions, such as retrofit projects or renewable energy equipment installations, for a sample to be drawn and tested by December 2011.

Quality and availability of program records. For high- and medium-high-rigor evaluations, it will be necessary to contact program participants. In most cases, we will need to be able to characterize, at the individual level, the services that participants received from the program. If such records are not available at the time of PA selection and cannot, in the evaluator’s judgment, be reconstructed within schedule and budget constraints, the PA will be dropped from the sample and a substitute selected. If a large proportion of the PAs in a BPAC lack sufficient data to support a medium-high-rigor evaluation, it may be necessary to reduce the rigor level for that BPAC.

In the evaluation plans below we identify the criteria we will use to assess evaluability for each BPAC.
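The evaluability criteria above can be expressed as a simple screening routine. This is a sketch under stated assumptions: the numeric thresholds (`MIN_COMPLETED_ACTIONS`, `MAX_SHARE_LACKING_DATA`) are hypothetical, because the plan states these rules only qualitatively, and the class and function names are illustrative. The sketch also encodes the misclassification rule: a PA is evaluated under its actual BPAC but weighted under the BPAC it was sampled from.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; the plan does not assign numeric values to these rules.
MIN_COMPLETED_ACTIONS = 10     # actions needed to draw and test a sample
MAX_SHARE_LACKING_DATA = 0.5   # stand-in for "a large proportion" of PAs
PARTICIPANT_LEVEL_RIGOR = {"high", "medium-high"}

@dataclass
class SampledPA:
    pa_id: str
    sampled_bpac: str          # BPAC stratum the PA was selected from
    actual_bpac: str           # BPAC matching its activities after detailed review
    rigor: str                 # "high", "medium-high", or "medium-low"
    completed_actions: int     # e.g., retrofits or installations completed to date
    records_available: bool
    records_reconstructable: bool

    def has_usable_records(self) -> bool:
        return self.records_available or self.records_reconstructable

def screen(pa: SampledPA) -> str:
    """Classify a sampled PA as 'evaluate', 'substitute', or 'insufficient progress'."""
    if pa.rigor in PARTICIPANT_LEVEL_RIGOR:
        if not pa.has_usable_records():
            return "substitute"  # drop the PA and select a replacement
        if pa.completed_actions < MIN_COMPLETED_ACTIONS:
            return "insufficient progress"
    return "evaluate"

def evaluation_bpac(pa: SampledPA) -> str:
    # A misclassified PA is evaluated according to its actual activities...
    return pa.actual_bpac

def weighting_bpac(pa: SampledPA) -> str:
    # ...but keeps the expansion weight of the BPAC it was selected from.
    return pa.sampled_bpac

def reduce_bpac_rigor(pas: List[SampledPA]) -> bool:
    """True if too many sampled PAs in a BPAC lack participant-level records."""
    if not pas:
        return False
    lacking = sum(1 for p in pas if not p.has_usable_records())
    return lacking / len(pas) > MAX_SHARE_LACKING_DATA
```

Separating the evaluation BPAC from the weighting BPAC keeps the sample design intact even when review reveals a classification error.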

Prepare evaluation plans for the BPACs of selected individual PAs. Once the evaluability of a selected PA has been established, the next step will be to incorporate that PA into the associated BPAC evaluation plan, taking into account the PA’s specific goals and objectives, market environment, activities and service offerings, and, as necessary, the quality of its tracking records. As described in Section 4 below, the individual BPAC evaluation plans will be short, highly structured documents that specify the type and amount of data collection to be carried out, the analytic approaches to be applied, the staff and subcontractors to be used, the labor and direct costs required, and the implementation schedule. These plans are meant to serve primarily as tools for managing overall project resources and for quality control.

Estimate the energy impacts of the selected individual PAs. For each selected individual PA, the KEMA team will carry out an assessment of energy impacts. That is, we will quantify the energy savings, renewable energy capacity and generation, and energy cost savings metrics listed in Figure 2 at the level of rigor specified by DOE. For this evaluation, DOE has identified three levels of rigor for assessment of energy impacts:

High-rigor evaluations require verification of savings through best-practice methods, particularly those recognized in the California Evaluation Protocols, DOE’s Impact Evaluation Framework for Technology Deployment Programs, and the International Performance Measurement and Verification Protocol. These methods include on-site verification and/or performance monitoring of a sample of projects supported by the program, whole-building utility meter billing analysis, surveys of participants and nonparticipants, and combinations of building simulation modeling and other engineering analysis with the first two methods.5 In some cases, these verification methods may be mixed with less intensive approaches, such as file review and telephone contact with program participants, to increase sample size. Sample results are expanded to the population using statistical methods such as ratio estimation or regression analysis.

Medium-high-rigor evaluations require verification of savings with individual participants, using less intensive data collection and analysis methods than those prescribed for high rigor. All input data may be collected through telephone contact with participants, supplemented by review of program documentation. These data are then combined with documented input assumptions and applied to standard engineering formulae to estimate savings for all or a sample of participants.6 On-site data collection, if used at all in medium-high-rigor evaluations, will be applied either in exceptional cases, such as when a single project represents a large portion of the PA’s potential savings, or where needed to support key assumptions used in the engineering-based assessments. Sample sizes will also be smaller in medium-high-rigor assessments.

Medium-low-rigor evaluations will not include any primary data collection from individual program participants to estimate savings. Rather, they will combine information from program records with secondary sources and engineering-based methods to generate energy savings estimates.

See Section 4 for details of high, medium-high, and medium-low rigor energy impact assessment approaches to be applied in regard to specific BPAC groups.
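To illustrate how engineering estimates and ratio estimation fit together, the sketch below computes verified savings for each sampled participant with a simple lighting-retrofit formula (a stand-in for the "standard engineering formulae" of medium-high rigor), then expands the sample to the whole PA with a ratio estimator: verified over reported savings in the sample, applied to total reported savings. The formula, tracking data, and participant counts are hypothetical. The interval uses the usual large-sample variance approximation for a ratio estimator under simple random sampling, with z = 1.645 for roughly 90 percent confidence; the plan's own estimators and precision targets are set out elsewhere in this document.

```python
import math
from typing import List, Tuple

def lighting_kwh(fixtures: int, base_watts: float, efficient_watts: float,
                 annual_hours: float) -> float:
    """Example engineering formula: annual kWh saved by a lighting retrofit."""
    return fixtures * (base_watts - efficient_watts) * annual_hours / 1000.0

def ratio_estimate(sample: List[Tuple[float, float]], population_reported: float,
                   population_size: int,
                   z: float = 1.645) -> Tuple[float, float, float]:
    """Ratio estimate of total verified savings from a simple random sample.

    sample: (reported, verified) savings for each sampled participant (n >= 2).
    population_reported: reported savings summed over all participants.
    population_size: number of participants in the PA.
    Returns (realization rate, estimated total, confidence-interval half-width).
    """
    n = len(sample)
    sum_r = sum(r for r, _ in sample)
    sum_v = sum(v for _, v in sample)
    rate = sum_v / sum_r
    # Variance of residuals around the ratio line v = rate * r.
    s2 = sum((v - rate * r) ** 2 for r, v in sample) / (n - 1)
    fpc = 1.0 - n / population_size  # finite population correction
    # Standard error of the ratio, using the sample mean of reported savings.
    se_rate = math.sqrt(fpc * s2 / n) / (sum_r / n)
    return rate, rate * population_reported, z * se_rate * population_reported

# Hypothetical tracking data (reported kWh) and survey-based engineering inputs.
sample = [
    (10_000, lighting_kwh(50, 100, 60, 4000)),
    (5_000, lighting_kwh(25, 100, 60, 4000)),
    (20_000, lighting_kwh(100, 100, 60, 4500)),
]
rate, total, half_width = ratio_estimate(sample, population_reported=700_000,
                                         population_size=60)
print(f"realization rate {rate:.3f}; total {total:,.0f} kWh +/- {half_width:,.0f}")
```

A ratio estimator is attractive here because verified savings usually track reported savings closely, so the residual variance (and therefore the required sample size) is smaller than for a simple mean-per-unit estimate.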

Assess the attribution of estimated energy impacts to the individual PAs. For each selected individual PA, the KEMA team will carry out an analysis to assess the portion of estimated energy impacts attributable to the SEP programmatic activities under review, as opposed to other influences such as general market developments or the activities of other organizations offering similar programs or services. Because PAs commonly draw on multiple funding sources, impacts must be apportioned between SEP and those other sources. This has the following implications:

Attribution of effects must be assessed separately for each individual programmatic activity studied.

A multi-step attribution approach will be used, drawing on logic models, model validation, cause-and-effect relationships, funding stream analysis, behavior change assessment, and other established techniques to quantify effects.

Any examination of what SEP caused to happen must account for program-induced capacity developed over time.

See Section 5 for a discussion of our general approach to attribution and its application to evaluation of PAs in specific BPAC Groups.
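Funding stream analysis is one of several attribution techniques listed above. The sketch below shows one simple version, assuming savings are apportioned to SEP in proportion to its share of a PA's total funding after applying a program-influence fraction. Both the pro-rata rule and the `program_influence` input are assumptions for illustration, not the plan's specified attribution method, and the funding amounts are hypothetical.

```python
from typing import Dict

def sep_attributed_savings(gross_savings: float, funding: Dict[str, float],
                           program_influence: float, sep_key: str = "SEP") -> float:
    """Apportion a PA's savings to SEP by its share of total PA funding.

    program_influence: hypothetical fraction (0 to 1) of gross savings judged to
    be caused by the combined program effort rather than general market trends.
    """
    if not 0.0 <= program_influence <= 1.0:
        raise ValueError("program_influence must be between 0 and 1")
    total_funding = sum(funding.values())
    if total_funding <= 0:
        raise ValueError("total funding must be positive")
    sep_share = funding.get(sep_key, 0.0) / total_funding
    return gross_savings * program_influence * sep_share

# Hypothetical PA: 1,000,000 kWh gross savings, co-funded by three sources.
funding = {"SEP": 250_000, "utility": 500_000, "state": 250_000}
print(sep_attributed_savings(1_000_000, funding, program_influence=0.8))
```

Pro-rata allocation treats every dollar as equally effective; the cause-and-effect and market actor analyses discussed in Section 5 may justify departing from it for particular PAs.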

Estimate effects of individual PAs on carbon emissions. The contractor team will use estimates of annual and lifetime energy savings attributable to the program as inputs to a model that estimates carbon emissions reductions based on the carbon content of fossil fuels and electricity consumption avoided. See Section 6 for a description of this analysis.
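The carbon calculation described above reduces to multiplying avoided energy use by an emission factor for each fuel and summing. In this sketch the factors in `EXAMPLE_FACTORS_KG_CO2` are placeholders rather than values adopted by the plan, the fuel keys are hypothetical, and lifetime emissions assume constant annual savings and emission factors over the measure life.

```python
from typing import Dict

# Placeholder factors in kg CO2 per unit of energy saved (illustrative only).
EXAMPLE_FACTORS_KG_CO2 = {
    "electricity_kwh": 0.6,      # placeholder grid-average factor
    "natural_gas_mmbtu": 53.06,  # placeholder combustion factor
}

def annual_co2_avoided_tonnes(savings: Dict[str, float],
                              factors: Dict[str, float]) -> float:
    """Metric tons of CO2 avoided per year, given annual energy saved by fuel.

    Every fuel key in `savings` must also appear in `factors`.
    """
    kg = sum(units * factors[fuel] for fuel, units in savings.items())
    return kg / 1000.0

def lifetime_co2_avoided_tonnes(annual_tonnes: float, measure_life_years: int) -> float:
    # Simplest case: constant savings and emission factors over the measure life.
    return annual_tonnes * measure_life_years

annual = annual_co2_avoided_tonnes(
    {"electricity_kwh": 100_000, "natural_gas_mmbtu": 500}, EXAMPLE_FACTORS_KG_CO2)
print(annual, lifetime_co2_avoided_tonnes(annual, 15))
```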

Estimate effects of individual PAs on employment. The energy savings estimates will be combined with other program information, such as matching funds contributed, participant expenditures for labor and materials, and direct program expenditures, as inputs to a regional economic model that estimates employment impacts. See Section 7 for a description of these analyses.

Estimate costs and benefits. Program reporting guidelines7 require that sponsors use only one cost-effectiveness test, designated the SEP Recovery Act Cost (SEP RAC) Test, which is computed as source BTUs saved per $1,000 of program expenditure or investment. The SEP Recovery Act Financial Assistance Funding Opportunity Announcement specified that states should seek to achieve annual energy savings of 10 million source BTUs per $1,000 of program investment. See Section 8 for further detail on benefit-cost analysis.
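A sketch of the SEP RAC Test arithmetic. It assumes site savings are converted to source Btu with source-energy factors supplied by the analyst; the factor values in the example call (3.0 for electricity, 1.0 for fuel) are round placeholders, not official conversion factors, and the function name is illustrative. One kWh contains about 3,412 Btu of site energy.

```python
BTU_PER_KWH = 3412           # approximate site energy content of one kWh
FOA_TARGET = 10_000_000      # source Btu saved per $1,000 (annual savings target)

def sep_rac_ratio(site_kwh_saved: float, site_fuel_mmbtu_saved: float,
                  program_cost_dollars: float, elec_source_factor: float,
                  fuel_source_factor: float) -> float:
    """Annual source Btu saved per $1,000 of program expenditure or investment.

    The source factors (source energy per unit of site energy) are inputs;
    the values used in the example call below are hypothetical placeholders.
    """
    source_btu = (site_kwh_saved * BTU_PER_KWH * elec_source_factor
                  + site_fuel_mmbtu_saved * 1_000_000 * fuel_source_factor)
    return source_btu / (program_cost_dollars / 1000.0)

ratio = sep_rac_ratio(1_000_000, 0, 1_000_000, elec_source_factor=3.0,
                      fuel_source_factor=1.0)
print(f"{ratio:,.0f} source Btu per $1,000; meets target: {ratio >= FOA_TARGET}")
```

Because the test is expressed in source rather than site energy, the choice of electricity source factor can move a PA above or below the 10 million Btu target, so it should be documented alongside each result.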

Once the individual PA evaluations have been completed and reviewed by the senior contractor team for accuracy and completeness, the effort will shift to aggregating sample results to the national level and interpreting findings. KEMA and its subcontractors will expand the sample results to the most heavily funded BPACs, using the relationship between the verified metrics for the sample PAs and their measures of size (funding).
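The national expansion step can be sketched as a funding-based ratio estimator: within each BPAC, verified savings per dollar in the sampled PAs are multiplied by total BPAC funding, and the BPAC totals are summed for the portfolio. The BPAC names and amounts are hypothetical, and the sketch omits the subcategory strata and error bounds described in Section 3.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def expand_by_funding(sample: List[Tuple[str, float, float]],
                      bpac_funding: Dict[str, float]) -> Dict[str, float]:
    """Ratio-expand verified savings from evaluated PAs to BPAC totals.

    sample: (sampled_bpac, pa_funding, verified_savings) for each evaluated PA,
            where sampled_bpac is the stratum the PA was selected from.
    bpac_funding: total funding (measure of size) for all PAs in each BPAC.
    Returns estimated total savings for each BPAC represented in the sample.
    """
    funding: Dict[str, float] = defaultdict(float)
    savings: Dict[str, float] = defaultdict(float)
    for bpac, pa_funding, verified in sample:
        funding[bpac] += pa_funding
        savings[bpac] += verified
    # Savings per dollar in the sample, scaled up to the BPAC's total funding.
    return {b: savings[b] / funding[b] * bpac_funding[b] for b in funding}

# Hypothetical sample (funding in dollars, verified savings in MMBtu).
sample = [
    ("Retrofit", 2_000_000, 50_000),
    ("Retrofit", 1_000_000, 10_000),
    ("Renewables", 500_000, 4_000),
]
bpac_funding = {"Retrofit": 12_000_000, "Renewables": 5_000_000}
estimates = expand_by_funding(sample, bpac_funding)
portfolio_total = sum(estimates.values())
print(estimates, portfolio_total)
```

Each PA contributes to the stratum it was sampled from, consistent with the misclassification rule described earlier; the approach assumes savings scale roughly in proportion to funding within a BPAC.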

The remainder of this Detailed Study Plan contains separate and fairly extensive chapters on each of the key sets of methodological requirements: assessment of energy impacts, program attribution, carbon emission reductions, job creation, and general benefits and costs. Our proposed methods take into account a number of considerations in addition to the challenges posed by the scope of SEP activities and DOE’s evaluation objectives. Principal among these are the following.

Paperwork Reduction Act Requirements. The Paperwork Reduction Act (PRA) requires that all data collection instruments and protocols that will be administered to 10 or more “people of the general public, including federal contractors” be reviewed and approved by the Office of Management and Budget (OMB). The 11-step process includes three periods of public comment totaling 120 days, and OMB has 60 days to make its final approval decision after public comment closes. The process will likely require six to nine months to complete. Once OMB has approved the data collection instruments, they may not be changed except within prescribed bounds that allow them to be administered in a variety of settings. Given these constraints, the KEMA team has designed its overall research effort to minimize the number of data collection forms and protocols requiring OMB review.

Evaluations of ARRA-funded SEP programs sponsored by individual states. As of the submission of this Detailed Study Plan, KEMA is aware of a number of evaluations of ARRA-funded SEP programmatic activities being conducted by state energy offices and other program sponsors.8 The KEMA team will coordinate with these efforts to avoid sampling programmatic activities that are being evaluated by the states. The KEMA team will assess the methods used in these state evaluations to determine whether they meet the required rigor levels and employ DOE-approved methods. Where they do, we will incorporate the results of these studies into the estimate of national impacts, using the sample stratification and weighting system described in Section 3. Any recommendations for importing results from other efforts will be submitted to DOE for review and approval prior to implementation.

The remainder of the Detailed Study Plan is structured into the following sections.

Section 2: Characterization of Programmatic Activities. This section presents the methods and results of the evaluation team’s efforts to classify and characterize SEP programmatic activities at the state level for PY2008 and under ARRA funding. The results of this analysis form the basis of our proposed sampling plan.

Section 3: Sampling Plan and Expansion of Sample Results. This section presents the approach for selecting the programmatic activities to be evaluated, including sample segmentation, allocation of sample to segments defined by BPAC and rigor level, estimation of expected sampling error at the BPAC and program levels, and sample selection procedures. This section also summarizes methods to expand the findings from the sample programmatic activities to the full set of programmatic activities.

Section 4: Estimation of Energy Impacts. This section provides a summary of the methods to be applied in estimating energy savings and renewable energy generation associated with each Broad Program Activity Category.

Section 5: Attribution Assessment. This section presents the basic strategies and methods that will be applied to assess the attribution of observed outcomes to the effects of the sample programmatic activities, and their application to specific kinds of PAs in the BPACs.

Section 6: Evaluation of Carbon Impacts. This section presents the methods that will be applied to quantify national-level carbon reductions for the BPACs evaluated and for the program as a whole.

Section 7: Evaluation of Employment Impacts. This section presents the methods that will be applied to quantify net jobs created for the BPACs evaluated and for the program as a whole.

Section 8: Benefit-Cost Analysis. This section presents the methods that will be used to collect and analyze benefit and cost information at both the individual PA and aggregated levels. It covers the full range of benefit and cost metrics, as well as the relevant benefit-cost test.

Section 9: Project Schedule. This section presents the schedule of project activities and deliverables.

Transforming the PY2008 and ARRA program data into a format that can support evaluation research is a key task in the design and execution of this study plan. The program data will serve as the backbone for all major evaluation study elements, including the following:

An evaluability assessment

Sample development and stratification

Sample expansion of findings to the population

Methodological development for gross and net savings estimation

The KEMA team received the program tracking data from DOE for PY2008 (maintained in the WinSaga database system) and for ARRA (maintained in the PAGE database system) and conducted an extensive review. On balance, neither dataset was well suited to support the kind of analyses required under this evaluation effort. The PAGE database for ARRA is uniformly more complete and internally consistent than the WinSaga database for PY2008. However, neither database was organized to support the evaluation team’s first key task: sorting and classifying the programmatic activities into categories established by past SEP evaluation efforts and required by the Statement of Work. KEMA restructured the data by programmatic activity and produced data management tools to facilitate supplemental data collection for this project.

The Statement of Work for this study provides the following guidance on BPACs, based on past SEP evaluation research and the metric categories provided in the Funding Opportunity Announcement (FOA) for the SEP grants under ARRA.9 The sixteen original BPACs specified in the Statement of Work are as follows:

Retrofits

Renewable energy market development

Loans, grants, and incentives

Workshops, training, and education

Building codes and standards

Industrial retrofit support

Clean energy policy support

Traffic signals and controls

Carpools and vanpools

Technical assistance to building owners

Commercial, industrial, and agricultural audits

Residential energy audits

Government and institutional procurement

Energy efficiency rating and labeling

Tax incentives and credits

New construction and design

As the first key step, KEMA team members worked collaboratively with the principal authors of past SEP evaluation research10 to develop standards and decision rules for the sorting and classification tasks. Because many of the activity descriptions in the SOW are derived from the FOA, contractor staff reviewed the FOA to ensure that the standards used to classify the programmatic activities were consistent with the FOA’s intent. KEMA then established a set of distinguishing attributes for the BPACs, based on the information obtained from SEP researchers and the FOA language, to ensure consistent assignment across the team. In some cases, we found it necessary to decompose BPACs by market segment or program delivery mechanism. Additionally, DOE directed the contractor team to fold PAs relating to the Workshops, Education and Training (WET) BPAC into the remaining BPACs, eliminating WET as a separate BPAC.

We then began assigning programmatic activities to the BPACs, and the process was iterative: as the KEMA team learned more about the actual programmatic activities, the distinguishing attributes of the BPACs were further refined and the classifications were revised.

Figure 3 summarizes the distinguishing attributes of each BPAC as they have been settled through the iterative process.

Figure 3. Distinguishing Attributes by Refined BPAC

BPAC | Distinguishing PA Attributes Relevant to Primary BPAC Designation
Building Retrofits |
Technical Assistance |
Energy Audits: Commercial, Industrial and Agricultural |
Energy Audits: Residential |
Renewable Energy Market Development |
Clean Energy Policy Support |
Transportation |
Traffic Signals |
Building Codes and Standards |
Energy Efficiency Rating and Labeling |
Government, School and Institutional Procurement |
New Construction and Design |
Loans, Grants, and Incentives |
Tax Incentives and Credits |
Allocations To Be Removed from Sample Frame |
Administration |
Energy Emergency Planning |

The BPAC data classification activities for ARRA are described first for two reasons: DOE provided these data first, exported from the PAGE database,11 and these data are more complete and consistent across the states.

This section describes KEMA’s approach to developing the analysis frame for the ARRA period. We first describe the sources of information, then the decision rules, and then basic descriptive statistics on the results of the classification activities. Data quality issues are addressed throughout this section at each step in the process.

DOE delivered the PAGE database, complete through the third quarter of 2010, in the form of five separate Excel spreadsheets. KEMA analyzed the data structure, established the relationships among the spreadsheets, and imported the data into Microsoft Access. KEMA also interviewed key DOE staff about the data contents and reviewed the fields for any value in classifying programmatic activities according to the BPACs. To complete the BPAC sorting and classification task, the data needed to provide the following:

A unique list of Programmatic Activities: The third quarter (Q3) 2010 PAGE data contained 443 Market Titles. Upon review, programmatic activities could be derived from either the Market Title data or the Activity data, which provide a complete, finer-grained breakdown of each parent Market Title.

Funding data associated with each Programmatic Activity: The funding allocation could either be reported at the Market Title level or as the sum of funding for all Activities within a given Market Title.

Descriptive information to assist in the classification process: KEMA determined that the data field most closely aligned with the original 16 BPACs identified in the SOW is called the “Main Metric Area” and was associated with the Market Title records, but not the lower level Activity records.

KEMA performed a join query operation to associate all Activity level data with the associated Market Title data at the parent level.
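The join can be illustrated with a minimal sketch. KEMA performed this step in Microsoft Access; the example below instead uses Python's built-in sqlite3 module, and the table names, column names, and records are hypothetical stand-ins for the PAGE Market Title and Activity spreadsheets. It attaches the parent Market Title's Main Metric Area to each Activity and also shows Market Title funding computed as the sum of its Activities.

```python
import sqlite3

# Hypothetical stand-ins for two of the imported PAGE spreadsheets.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE market_title (
    market_title_id  INTEGER PRIMARY KEY,
    title            TEXT,
    main_metric_area TEXT   -- recorded only at the Market Title level
);
CREATE TABLE activity (
    activity_id     INTEGER PRIMARY KEY,
    market_title_id INTEGER REFERENCES market_title(market_title_id),
    description     TEXT,
    funding         REAL
);
""")
conn.executemany("INSERT INTO market_title VALUES (?, ?, ?)", [
    (1, "Commercial Building Retrofit Program", "Retrofits"),
    (2, "Solar Rebates", "Renewable energy market development"),
])
conn.executemany("INSERT INTO activity VALUES (?, ?, ?, ?)", [
    (10, 1, "Lighting retrofits", 1_500_000.0),
    (11, 1, "HVAC retrofits", 2_000_000.0),
    (12, 2, "PV rebates", 750_000.0),
])

# Join query: each Activity inherits its parent's Main Metric Area.
rows = conn.execute("""
    SELECT a.activity_id, m.title, a.description, m.main_metric_area, a.funding
    FROM activity AS a
    JOIN market_title AS m ON m.market_title_id = a.market_title_id
    ORDER BY a.activity_id
""").fetchall()

# Market Title funding expressed as the sum of funding for its Activities.
totals = dict(conn.execute("""
    SELECT m.title, SUM(a.funding)
    FROM market_title AS m
    JOIN activity AS a ON a.market_title_id = m.market_title_id
    GROUP BY m.title
""").fetchall())

for row in rows:
    print(row)
print(totals)
```

Joining at the Activity level keeps the finer-grained records while carrying along the one descriptive field (Main Metric Area) that exists only on the parent.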

The sorting and classification process that followed had five steps:

Preliminary BPAC data match: KEMA developed a matching algorithm to assign a preliminary BPAC to each Activity based on how closely its Main Metric Area aligned with a given BPAC. In most cases the match was exact. Where the Main Metric Area had no reasonable BPAC match, no preliminary BPAC was assigned.

PAGE Activity Record Review: KEMA regional coordinators reviewed the detailed record data for each activity and either confirmed or reassigned the preliminary BPACs as appropriate.

Internet Research on Programmatic Activities: KEMA regional coordinators organized teams of analysts to perform internet research on programmatic activities and supplement the PAGE data as appropriate. KEMA updated preliminary BPAC assignments based on any new information uncovered.

Interviews with the assigned state DOE Project Officers: To minimize burden on, and build rapport with, the DOE Project Officers (POs), each KEMA regional coordinator was assigned a set of states so that each DOE PO communicated with only one KEMA staff member, the regional coordinator. KEMA developed a brief interview guide in consultation with ORNL/DOE and reviewed all programmatic activities and their BPAC assignments with the DOE POs.

Verification of PA data by State Energy Program staff: Because the sample data were developed from the Q3 2010 PAGE data, the KEMA contractor team verified the status of all PAs with the State Energy Offices, confirming, for example, whether funding amounts had changed, whether the PA had been dropped, and in some cases whether the BPAC assignment had changed.
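The first step, the preliminary BPAC data match, might look like the sketch below. It assumes that a case-insensitive exact match on the BPAC name is tried first and that, failing that, the similarity ratio from Python's difflib module with a hypothetical cutoff of 0.8 stands in for "how closely it aligned"; KEMA's actual matching algorithm is not specified here. Activities with no sufficiently close BPAC receive no preliminary assignment and are left for the record review and interview steps.

```python
import difflib

# The sixteen original BPACs from the Statement of Work.
BPACS = [
    "Retrofits", "Renewable energy market development",
    "Loans, grants, and incentives", "Workshops, training, and education",
    "Building codes and standards", "Industrial retrofit support",
    "Clean energy policy support", "Traffic signals and controls",
    "Carpools and vanpools", "Technical assistance to building owners",
    "Commercial, industrial, and agricultural audits",
    "Residential energy audits", "Government and institutional procurement",
    "Energy efficiency rating and labeling", "Tax incentives and credits",
    "New construction and design",
]
_LOOKUP = {b.lower(): b for b in BPACS}


def preliminary_bpac(main_metric_area, cutoff=0.8):
    """Return a preliminary BPAC for a Main Metric Area, or None.

    Exact (case-insensitive) matches are taken first; otherwise the closest
    BPAC name is used if its difflib similarity ratio is at least `cutoff`
    (an assumed threshold, not one taken from the study).
    """
    key = main_metric_area.strip().lower()
    if key in _LOOKUP:
        return _LOOKUP[key]
    close = difflib.get_close_matches(key, list(_LOOKUP), n=1, cutoff=cutoff)
    return _LOOKUP[close[0]] if close else None


print(preliminary_bpac("Building Codes and Standards"))
print(preliminary_bpac("Residential Energy Audit"))
print(preliminary_bpac("Administration"))
```

Leaving non-matches unassigned, rather than forcing the nearest BPAC, mirrors the rule that no preliminary BPAC was assigned where there was no reasonable match.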

Throughout this iterative process, regular team meetings helped ensure consistency in BPAC assignments across regional coordinators and served to refine and tighten the distinguishing attributes of each BPAC.

In addition to the decision rules presented above, KEMA applied some basic principles in its BPAC assignments, because many, if not most, programmatic activities have elements of multiple BPACs. The basic principles were:

Assign the BPAC that best fits the programmatic activity.

Assign the highest rigor level possible that reasonably fits the programmatic activity.

Assign a secondary or tertiary BPAC if a programmatic activity exhibits strong supporting elements of those BPACs.

Assign “Administration” as a BPAC for funded activities that are primarily administrative in nature and have no programmatic feature that would deliver energy savings.

As a result of the iterative process and basic principles described above, KEMA made some key refinements to the BPAC distinguishing attributes, including the following:

If a programmatic activity included a conditional retrofit component, it was assigned to a retrofit BPAC. For example, if an audit program funded the audit only if the retrofit was performed, the program was assigned to Building Retrofits.

Because the gross savings estimation procedure and sampling unit for LED traffic signal upgrades (an efficiency measure) are so different from those for traffic control systems designed to reduce idling times and emissions, programmatic activities that primarily focused on reducing transportation emissions were assigned to a Transportation BPAC, expanded from the original Carpools and Vanpools BPAC.
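The assignment principles and refinements above can be expressed as a small rule set. This is a hypothetical sketch: the ProgrammaticActivity fields (analyst-judged element strengths, a retrofit condition, administrative and transportation-emissions flags) and the 0.5 threshold for a "strong" supporting element are assumptions for illustration, and rigor-level assignment is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class ProgrammaticActivity:
    """Hypothetical PA record; field names are illustrative only."""
    name: str
    # Analyst-judged strength (0 to 1) of each BPAC's elements within the PA.
    element_strength: dict = field(default_factory=dict)
    requires_retrofit: bool = False          # e.g., audit funded only if retrofit is done
    administrative_only: bool = False        # no feature that would deliver savings
    targets_transport_emissions: bool = False


def assign_bpacs(pa, strong=0.5):
    """Return (primary BPAC, up to two supporting BPACs) for one PA."""
    if pa.administrative_only:
        return "Administration", []
    scores = pa.element_strength
    if pa.requires_retrofit:
        primary = "Building Retrofits"           # refinement: retrofit condition wins
    elif pa.targets_transport_emissions:
        primary = "Transportation"               # refinement: emissions-focused PAs
    elif scores:
        primary = max(scores, key=scores.get)    # principle: best-fitting BPAC
    else:
        return None, []                          # nothing to go on; manual review
    supporting = sorted(
        (b for b, s in scores.items() if b != primary and s >= strong),
        key=scores.get, reverse=True,
    )[:2]                                        # secondary and tertiary at most
    return primary, supporting


audit = ProgrammaticActivity(
    "Home audit with required retrofit",
    {"Energy Audits: Residential": 0.9, "Technical Assistance": 0.3},
    requires_retrofit=True,
)
print(assign_bpacs(audit))
```

In this sketch the audit program lands in Building Retrofits because of its retrofit condition, while Energy Audits: Residential is kept as a supporting BPAC.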

In this section, we describe how KEMA developed the frame for the PY2008 programmatic activities. Following the same organization as for ARRA, we first present the sources of information, then the decision rules, and then basic descriptive statistics on the results of the classification activities. Data quality issues are addressed throughout this section at each step in the process.

Based on the knowledge gained through the PAGE database development process, KEMA supplied DOE with general specifications for the requested PY2008 data. DOE had to complete a fairly extensive manual process to extract and build the requested program data sets from the WinSaga Program Tracking Database. DOE delivered the data sets covering the PY2008 period in six separate Excel spreadsheets. KEMA analyzed the data structure and could establish few relationships among the data sets, but determined that two of them, the Market Title data set and the Metrics data set, contained the required information. KEMA also interviewed key DOE staff about the data contents and reviewed the fields for any value in classifying programmatic activities according to the BPACs. As with the PAGE data, the BPAC sorting and classification task required the following:

A unique list of Programmatic Activities: These could be derived only from the Market Title data, which had no associated data for further disaggregation. The PY2008 WinSaga data contained 578 unique Market Titles covering 55 states/territories. KEMA had no way of verifying that the PY2008 Market Title records are complete, and one state in particular, Maryland, had no programmatic activity data associated with it at all.

Funding data associated with each Programmatic Activity: KEMA reviewed the data with a DOE program manager, who explained several critical details that directly affect the evaluability of the PY2008 program as well as sample planning. Funding data from WinSaga differ from the PAGE data in the following ways:

Funding data reported for a given program year do not sum to the amount allocated to that program year. For PY2008, states were allocated $33 million for the SEP program; however, reported funding exceeded $62 million.

Market Titles frequently have no funding data associated with them. Because states have five years to use SEP funding, unspent funding can roll over to the next program year or be allocated over several years. KEMA observed that more than one-third (35 percent) of the 579 Market Titles had no associated funding data.

States’ planning cycles can differ substantially from the July 2008 to June 2009 SEP Program Year, which could affect how funding by Market Title was recorded in the WinSaga database. For example, states reported on their own fiscal year, calendar year, or program year cycles, which often differed from the federal program year cycle.

Descriptive information to assist in the classification process: KEMA determined that the data field most closely aligned with the original 16 BPACs identified in the SOW is called the “Metric Area,” but this field was not associated with the Market Title records. In the exported WinSaga data, the Market Title and Metric Area data share no linking field; however, KEMA was able to determine that a one-to-many relationship generally existed between Market Titles (unique) and Metric Areas (unique to a Market Title).

To render the data usable, KEMA manually built a data set using the following steps:

Establish the unique set of Market Titles by comparing with the Metric data. The Metric data have 1,642 records covering 46 states/territories with Market Title data, nearly three records for every unique Market Title. The Market Title matching process was only partially successful, requiring KEMA to manually match a large proportion of the records by Market Title. In the end, KEMA found only one Market Title in the Metrics data set that could not be matched to the Market Title data set, and added that record (making 579 records in the Market Title database).

Preliminary BPAC data match: KEMA developed a matching algorithm to assign a preliminary BPAC to each Metric Area in the Metrics data set based on how closely it aligned with a given BPAC. In most cases the match was exact. Where a Metric Area had no reasonable BPAC match, no preliminary BPAC was assigned. In many cases, a given Market Title had multiple matched BPACs.

Narrowing of Metric data: Although the Metrics data are incomplete relative to the unique records in the Market Title data set, the matched records showed a one-to-many relationship between the Market Title data and the Metrics data set. For each Market Title, KEMA manually selected a single Metric record to merge into the Market Title data set, choosing a record whose matched BPAC occurred most frequently for that Market Title.

Merge the Metrics and Market Title data sets: KEMA merged the narrowed Metrics data set into the Market Title dataset, creating a data frame with descriptive information on programmatic activity and the associated metric.
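These four steps can be sketched as follows. The sketch assumes Market Title names match exactly across the two data sets (in practice much of this matching was manual) and takes a matcher that returns a BPAC or None. The narrowing step is automated here as a majority vote over matched BPACs per Market Title, keeping the first Metric record with the winning BPAC; KEMA made this selection by hand. All names and records are hypothetical.

```python
from collections import Counter, defaultdict


def build_frame(market_titles, metrics, match_bpac):
    """Sketch of the PY2008 frame build (hypothetical inputs).

    market_titles: Market Title names from the Market Title data set.
    metrics: (market_title, metric_area) records from the Metrics data set.
    match_bpac: callable mapping a Metric Area to a BPAC name or None.
    Returns {market_title: (selected_metric_area, bpac)}.
    """
    # Step 1: unique Market Titles, adding any that appear only in Metrics.
    titles = list(dict.fromkeys(market_titles))
    for title, _ in metrics:
        if title not in titles:
            titles.append(title)

    # Step 2: preliminary BPAC for each Metric record (many per title).
    votes = defaultdict(Counter)
    first_area = {}
    for title, area in metrics:
        bpac = match_bpac(area)
        if bpac is not None:
            votes[title][bpac] += 1
            first_area.setdefault((title, bpac), area)

    # Steps 3-4: keep the BPAC with the highest incidence per title, then merge.
    frame = {}
    for title in titles:
        if votes[title]:
            bpac = votes[title].most_common(1)[0][0]
            frame[title] = (first_area[(title, bpac)], bpac)
        else:
            frame[title] = (None, None)
    return frame


matcher = {
    "Renewable Energy": "Renewable Energy Market Development",
    "Loans and Grants": "Loans, Grants and Incentives",
    "Building Codes": "Building Codes and Standards",
}.get
metrics = [
    ("Solar Rebates", "Renewable Energy"),
    ("Solar Rebates", "Loans and Grants"),
    ("Solar Rebates", "Renewable Energy"),
    ("Code Training", "Building Codes"),
    ("Orphan Title", "Unmatched Area"),
]
frame = build_frame(["Solar Rebates", "Code Training", "No Metrics"], metrics, matcher)
for title, record in frame.items():
    print(title, record)
```

Market Titles with no matched Metric record stay in the frame with empty descriptive fields, so they remain visible for the manual review that follows.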

KEMA staff reviewed the detailed merged record data for each activity and either confirmed or reassigned the BPACs as appropriate, using the standards and distinguishing attributes established for the ARRA data above. The review went through several iterations and involved several of the KEMA staff responsible for BPAC assignments, to ensure consistency in BPAC assignment between program periods.

As in the ARRA data classification process, the contractor team verified data accuracy through interviews with NASEO Regional Coordinators and the assigned state DOE Project Officers. KEMA first reached out to the NASEO Regional Coordinators, many of whom held senior positions in their own state energy offices during 2008, and to a select group of nine states to complement the states represented by the NASEO Regional Coordinators themselves. KEMA also verified the status of all PAs with the State Energy Offices, confirming, for example, whether funding amounts had changed, whether the PA had been dropped, and in some cases whether the BPAC assignment had changed.

KEMA applied the same principles developed during the ARRA data frame process, described above, to assign BPACs by programmatic activity:

Assign the BPAC that best fits the programmatic activity.

Assign the highest rigor level possible that reasonably fits the programmatic activity.

Assign a secondary or tertiary BPAC if a programmatic activity exhibits strong supporting elements of those BPACs, for further review and disaggregation if additional data were available from the State Energy Office.

Assign “Administration” as a BPAC for funded activities that are primarily administrative in nature and have no programmatic feature that would deliver energy savings.

Assign “Energy Emergency Planning” as a BPAC for energy emergency planning activities, because PY2008 SEP funding included an energy emergency planning requirement that ARRA funding did not.

The PY2008 data did not result in any further redefinition or refinement of the BPACs’ distinguishing attributes.

As required in the RFP, the contractor team subcategorized the BPACs as well. As stated in the Final SEP Evaluation White Paper:

“The results from this effort will be the development of a set of program evaluation groups with a description of the characteristics that make the group suitable for grouping and descriptive information about the characteristics of each program that need to feed the efforts for prioritizing programs within an evaluation group.”12

Upon review of the PA data, the contractor team determined not only that the PAs within each BPAC disaggregate into subcategories, but also that subcategories may overlap across BPACs. For example, the Loans, Grants and Incentives BPAC is at times hard to distinguish from a building retrofit or renewable energy rebate program. Workshops can be conducted across many BPACs, and building retrofit programs can be delivered through technical assistance or audits. As a result, the contractor team found that specifying the BPACs at a finer level (such as the delivery mechanism or the targeted sector) was a useful basis for subcategorization. This is consistent with the SEP Evaluation White Paper, which states that subcategorization efforts should “…make sure these efforts reflect the way the programs are operated and to accurately capture the services provided.”13

The subcategories developed for this evaluation effort are largely derived from the BPACs, but can be assigned independently of the parent BPAC depending on the delivery mechanism or program target. These are presented in Figure 4.

Figure 4: BPACs and Subcategories

BPAC | Subcategory Derivation
Building Codes and Standards | Building Code Development and Support
 | End Use Standards Development and Support
Building Retrofits | Building Retrofits: Nonresidential
 | Building Retrofits: Residential
Clean Energy Policy Support | Policy and Market Studies; Legislative Support
Energy Audits: Commercial, Industrial and Agricultural | Energy Audits: Commercial, Industrial and Agricultural14
Energy Audits: Residential | Energy Audits: Residential
Energy Efficiency Rating and Labeling | Energy Efficiency Rating and Labeling Development and Support
Government, School and Institutional Procurement | Government, School and Institutional Procurement Support
Industrial Process Efficiency | Industrial Retrofit Support
New Construction and Design | New Construction and Design Assistance
Renewable Energy Market Development | Renewable Energy Market Development: Manufacturing
 | Renewable Energy Market Development: Projects
Technical Assistance | Technical Assistance to Building Owners
Traffic Signals | Traffic Signal Retrofits
Transportation | Alternative Fuels, Ride Share and Traffic Optimization
Loans, Grants and Incentives | [Never a subcategory]15
Tax Incentives and Credits | [Never a subcategory]
[Never a BPAC] | Generalized Marketing and Outreach (Participants not traceable)
[Never a BPAC] | Generalized Workshops and Demonstrations (Participants may be traceable)
[Never a BPAC] | Targeted Training and/or Certification (Participants are traceable)

The subcategories were also specified to be consistent with known gross savings estimation methods, so that estimated energy savings for each BPAC can reasonably be expressed as the sum of savings across its subcategories.

For each PA, the contractor team assigned a unique BPAC/subcategory combination in order to define the sample frame and prioritize PAs for evaluation. In some cases, after the PA’s funding level and intent were verified, this required splitting the PA’s record in the PAGE or WinSaga database, consistent with the guidance in the SEP Evaluation White Paper on prioritization and documentation of design detail.

The selection of PAs for sampling requires:

Establishment of the total sample size,

Establishment of the sampling frame, including classification of PAs into groups,

A rule or process for assigning the evaluation rigor level to sampled PAs, and

A process for allocating sampling points to the groups.

The approach can be summarized as follows.

The total number of PAs to be evaluated was set at 82: 24 High-rigor and 58 Medium-High-rigor PAs, with 53 sampled from PY2008 and 29 from the ARRA period. These numbers were determined from an initial assessment of the distribution of funding by activity type and the number of evaluations of each type that the available budget could accommodate.

The sampling frame for each period initially consisted of the largest BPAC-subcategory cells (in terms of program budget) that together account for at least, and not much more than, 80 percent of the non-administrative budget. That is, we defined a minimum cell funding threshold such that the cells at or above it total slightly more than 80 percent of the total program budget. All of these cells are included in the sampling frame. A few additional cells were then included for policy reasons despite falling below the size threshold. The included cells define the population that the study will represent.
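The frame rule can be sketched as follows: rank cells by budget, find the cell size at which cumulative coverage first reaches the target, include every cell at or above that size, then append the policy cells. The cell names and budgets below are hypothetical (in $ millions); budgets are integers so that the 80 percent comparison is exact.

```python
def select_frame(cell_budget, target_pct=80, policy_cells=()):
    """Return (size threshold, frame cells, percent of budget covered).

    cell_budget: {BPAC-subcategory cell: non-administrative budget (integer)}.
    policy_cells: cells added for policy reasons regardless of size.
    """
    total = sum(cell_budget.values())
    covered = threshold = 0
    for budget in sorted(cell_budget.values(), reverse=True):
        covered += budget
        threshold = budget
        if covered * 100 >= target_pct * total:   # integer test, no float error
            break
    # Every cell at or above the threshold is in the frame.
    frame = [cell for cell, b in cell_budget.items() if b >= threshold]
    # Cells added for policy reasons despite falling below the threshold.
    frame += [cell for cell in policy_cells if cell not in frame]
    coverage = 100 * sum(cell_budget[c] for c in frame) / total
    return threshold, frame, coverage


budgets = {"A": 40, "B": 25, "C": 16, "D": 10, "E": 6, "F": 3}
print(select_frame(budgets, policy_cells=["F"]))
```

Here the threshold lands at a cell of size 16, covering 81 percent; adding policy cell F raises coverage to 84 percent, which is why the final frame coverage can exceed the nominal target.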

After reviewing the activities during the classification process, and in light of budget constraints, we determined that High-rigor evaluations would be meaningful only for building retrofit activities. These activities fall into two BPACs: (1) Building Retrofits and (2) Loans, Grants and Incentives. Under each of these BPACs, there are Residential and Nonresidential building retrofit subcategories, and these subcategories are assigned to High-rigor evaluation. All other cells are assigned Medium-High rigor.

Sample allocation to BPAC-subcategory cells occurs in a few steps.

Preliminary allocation. Initially PAs are allocated to cells proportional to budget only. This process tends to leave smaller cells, especially those included despite being below the minimum size threshold, with zero allocation.

Forced allocations. After reviewing the initial allocation, which is strictly proportional to budget, we specify some forced allocations to ensure that the small cells that need to be covered receive some sample.

Proportional allocation. The cells that received forced allocations are set aside. The remainder of the total sample points for each period are allocated to the remaining cells proportional to size (program budget).

Identification of certainty and non-certainty PAs. Allocation proportional to size means that one sample PA is allocated for about every $850,000 of budget for PY2008, and for every $77 million of budget for ARRA.

Any individual PA with a budget above this amount is included in the sample with certainty. PAs selected this way are called “first-pass certainty” PAs. In some cases, an individual PA’s budget would warrant an allocation of two or more sample points; however, each PA is selected only once.

Once the large, first-pass certainty PAs have been identified, the remaining sample points are allocated to the remaining cells, proportional to the remaining size.

We identify a second set of certainty selections within this remainder sample, using the same approach as for the first pass. That is, all PAs with budget greater than the ratio of total remaining budget to remaining sample size are included with certainty. The PAs so selected are called “second-pass certainty” PAs.

Once the first- and second-pass certainty PAs have been identified, the remaining sample points are allocated to the remaining cells, proportional to the remaining size. These allocations are referred to as the “non-certainty” or “remainder” sample.

In principle, proportional-to-size allocation could result in a target sample size greater than the number of PAs in a cell. In such cases it would be necessary to cap the allocation at the number of PAs in the cell and reallocate the excess sample. This over-allocation problem did not arise for this sample, so this step was not necessary. Pulling out the certainty PAs in two passes helps to reduce the potential for the problem.

Assessment of achievability. Once we identified the target numbers of certainty and non-certainty selections for each cell, we assessed whether there are cells whose targets are unlikely to be met based on evaluability.

Each PA has an evaluability score indicating either a high or moderate chance of successfully completing an evaluation at the targeted rigor if we select that PA. (PAs with zero or low chance of successful Medium-High- or High-rigor evaluation account for a small fraction of total activity and are excluded from the frame.) Specifically, we assume that a “likely” evaluable PA has an 80 percent chance of being evaluated at the targeted High or Medium-High rigor level, while a “possibly” evaluable PA has a 50 percent chance. Based on discussions with representatives from DOE, ORNL and the states who participated in the May 25th Network Committee Meeting, we believe these are conservative estimates.

The assumed success rates should be very conservative for certainty PAs, which are high priority for successful completion because of their size. If, after consulting with ORNL, we confirm that we cannot complete the evaluation of one of these PAs, we will substitute a smaller PA. However, substitution will be a last resort.

The remainder sample will be allocated to “likely evaluable” PAs at a higher rate than “possibly evaluable” PAs. This procedure ensures that both levels of evaluability are covered by the sample, but that evaluation resources are devoted more heavily to the PAs that have better chance of being evaluable.

Based on the assumed probabilities of successful evaluation at targeted rigor for likely and possible, we calculate the size of the oversample required to achieve the targeted sample sizes. With the assumed success probabilities, we need a sample of five “likely” PAs to complete four evaluations successfully. We need a sample of two “possible” PAs to complete one evaluation successfully.

If the total number of selections required by this calculation exceeds the number of PAs available in a cell, we flag a potential shortfall. As it turned out, the current sample design does not have an anticipated shortfall in any cell. That is, unless inadequate data availability is more frequent than projected in some cell, we expect to achieve the targeted sample sizes at the targeted rigor levels.

Final targets. After the iterative reallocation in steps 1-4, we reviewed the sample allocations and made slight adjustments to ensure that:

Total samples after rounding still matched the targeted number by time period and by rigor level, and

The iterative re-allocation of the remainder did not result in severe over- or under-allocation to any one cell.
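The allocation sequence (forced allocations set aside, two passes of certainty selection, then a proportional remainder) can be sketched as below. This is a hypothetical implementation: the data layout is assumed, largest-remainder rounding stands in for the manual rounding adjustments described under Final targets, and no cap on cell size is applied because, as noted above, over-allocation did not arise. Budgets are integers and Fraction keeps the budget-per-sample-point ratio exact.

```python
from fractions import Fraction


def allocate(cells, n_total, forced):
    """Two-pass certainty selection plus proportional remainder (a sketch).

    cells:  {cell: {pa_id: integer budget}} for the sampling frame.
    forced: {cell: sample points} for small cells given forced allocations.
    Returns ({pa_id: certainty pass number}, {cell: remainder sample points}).
    """
    n = n_total - sum(forced.values())
    pool = {c: dict(pas) for c, pas in cells.items() if c not in forced}
    certainty = {}
    for pass_no in (1, 2):
        if n <= 0:
            break
        remaining = sum(sum(p.values()) for p in pool.values())
        per_point = Fraction(remaining, n)        # budget per sample point
        picks = [(c, pa) for c, p in pool.items()
                 for pa, b in p.items() if b > per_point]
        for c, pa in picks:                        # each PA is selected only once
            certainty[pa] = pass_no
            del pool[c][pa]
        n -= len(picks)

    # Remainder sample: proportional to remaining budget, largest-remainder rounding.
    n = max(n, 0)
    sizes = {c: sum(p.values()) for c, p in pool.items()}
    total = sum(sizes.values())
    if total == 0:
        return certainty, {c: 0 for c in sizes}
    quotas = {c: Fraction(n * s, total) for c, s in sizes.items()}
    alloc = {c: int(q) for c, q in quotas.items()}   # floor of each quota
    leftover = n - sum(alloc.values())
    for c in sorted(quotas, key=lambda c: quotas[c] - alloc[c], reverse=True)[:leftover]:
        alloc[c] += 1
    return certainty, alloc


cells = {
    "Retrofits: Residential": {"p1": 500, "p2": 60, "p3": 40},
    "Retrofits: Nonresidential": {"p4": 200, "p5": 100, "p6": 100},
    "Codes": {"p7": 5},
}
certainty, remainder = allocate(cells, n_total=6, forced={"Codes": 1})
print(certainty)
print(remainder)
```

In this toy example, p1 is a first-pass certainty selection (budget 500 against 200 per point), p4 becomes a second-pass selection once p1's budget is removed (200 against 125 per point), and the remaining three points are split 1:2 in proportion to the leftover cell budgets.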

The remainder of this section presents the results of each of these steps.
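The oversample arithmetic from the achievability step follows directly from the assumed success rates: the number of PAs to select is the target number of completed evaluations divided by the success probability, rounded up. A minimal sketch is below; the cell names and counts in the usage are hypothetical, integer percents keep the ceiling exact, and flag_shortfalls reflects our reading of the shortfall check as comparing required selections with the PAs available in each cell.

```python
# Assumed success rates from the text, as integer percents.
SUCCESS_PCT = {"likely": 80, "possibly": 50}


def draws_needed(completes, evaluability):
    """PAs to select so that expected completions reach the target (ceiling)."""
    pct = SUCCESS_PCT[evaluability]
    return -(-completes * 100 // pct)   # integer ceiling of completes / (pct / 100)


def flag_shortfalls(targets, available):
    """targets, available: {(cell, evaluability): count}. Returns keys at risk."""
    return [key for key, n in targets.items()
            if draws_needed(n, key[1]) > available.get(key, 0)]


print(draws_needed(4, "likely"), draws_needed(1, "possibly"))   # 5 2
targets = {("Retrofits: Residential", "likely"): 4, ("Codes", "possibly"): 2}
available = {("Retrofits: Residential", "likely"): 6, ("Codes", "possibly"): 3}
print(flag_shortfalls(targets, available))
```

The first line reproduces the figures in the text: five "likely" PAs for four completions and two "possible" PAs for one. In the hypothetical usage, the Codes cell would need four "possibly" evaluable PAs but has only three, so it is flagged.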

Figure 5 indicates the proportion of SEP spending for each BPAC and subcategory for PY2008, and Figure 6 presents similar data for the ARRA period. As noted, our starting point for frame definition is to select the BPAC/subcategory combinations that sum to at least 80 percent of funding. The minimum funding percentage for an included BPAC/subcategory combination is 3 percent for both periods (pink highlighted cells). In addition, for policy reasons, we have included selected BPAC/subcategory combinations (yellow highlighted cells) that fall outside the sampling criteria, to ensure adequate coverage of important BPACs. The additional included cells are the following:

Building Codes and Standards—this BPAC is anticipated to produce savings disproportionate to spending.

Subcategories of Workshops/Demonstrations and Training/Certification that are likely to be evaluable, if the other subcategories of the associated BPAC are included.

Building Retrofit subcategories if not already included based on size.

As shown in Figure 5, the sampling approach represents 80.3 percent of SEP funding for PY2008, and as shown in Figure 6, it represents 86.4 percent for the ARRA period. Figure 7 and Figure 8 display the number of available PAs within each of the selected BPAC/subcategory combinations for PY2008 and the ARRA period, respectively. Pink cells represent BPAC/subcategory combinations that exceed the 3 percent minimum threshold; yellow cells are BPAC/subcategory combinations included for policy reasons. As shown, 173 PAs are included in the sampling frame for PY2008 and 355 in the sampling frame for the ARRA period.

Figure 6: Percent of ARRA SEP Budget by BPAC and Subcategory

BPACs | Alternative Fuels, Ride Share and Traffic Optimization | Building Codes and Standards: Codes | Building Retrofits: Nonresidential | Building Retrofits: Residential | Energy Audits: Commercial, Industrial and Ag | Energy Audits: Residential | Energy Efficiency Rating and Labeling | Generalized Marketing and Outreach | Generalized Workshops and Demonstrations | Government, School and Institutional Procurement | Industrial Retrofit Support | New Construction and Design | Policy and Market Studies; Legislative Support | Renewable Energy Market Development: Manufacturing | Renewable Energy Market Development: Projects | Targeted Training and/or Certification | Technical Assistance to Building Owners | Traffic Signals | All Subcategories | Selected BPACs/Subcategories
Building Codes and Standards | 0.0% | 0.4% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.7% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.1% | 0.0% | 0.0% | 1.3% | 1.3%
Building Retrofits | 0.0% | 0.0% | 22.9% | 2.7% | 0.8% | 0.2% | 0.0% | 0.3% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 1.0% | 0.5% | 0.0% | 28.5% | 24.0%
Clean Energy Policy Support | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 1.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 1.0% |
Energy Audits: Commercial, Industrial and Agricultural | 0.0% | 0.0% | 0.0% | 0.0% | 0.7% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.7% |
Energy Audits: Residential | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% |
Energy Efficiency Rating and Labeling | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.2% |
Industrial Retrofit Support | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.8% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | 0.9% |
Loans, Grants and Incentives (Excl Retrofits and Projects) | 0.8% | 0.0% | 0.0% | 0.0% | 0.3% | 0.0% | 0.1% | 0.0% | 0.2% | 0.0% | 1.6% | 0.0% | 0.0% | 8.4% | 0.0% | 0.4% | 0.2% | 0.0% | 11.9% | 8.9%

Loans, Grants and Incentives (Retrofits and Projects) |

0.0% |

0.0% |

18.6% |

5.1% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

11.6% |

0.0% |

0.0% |

0.0% |

35.3% |

35.3% |

New Construction and Design |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.2% |

0.0% |

0.0% |

0.0% |

0.0% |

0.1% |

0.0% |

0.4% |

|

Renewable Energy Market Development |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

4.7% |

11.7% |

0.6% |

0.2% |

0.0% |

17.2% |

16.9% |

Technical Assistance |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.3% |

0.0% |

0.3% |

|

Traffic Signals |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.2% |

0.2% |

|

Transportation |

2.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

0.0% |

2.1% |

|

All BPACs |

2.8% |

0.5% |

41.5% |

7.8% |

1.8% |

0.4% |

0.1% |

0.6% |

1.0% |

0.0% |

2.4% |

0.2% |

1.0% |

13.1% |

23.2% |

2.2% |

1.3% |

0.2% |

100.0% |

|

Selected BPAC/ Subcategories |

0.0% |

0.4% |

41.5% |

5.1% |

0.0% |

0.0% |

0.0% |

0.0% |

1.0% |

0.0% |

0.0% |

0.0% |

0.0% |

13.1% |

23.2% |

2.1% |

0.0% |

0.0% |

|

86.4% |

Figure 8: Number of Available PAs for Selected BPAC/Subcategory (ARRA)

| BPAC | Subcategory | Target Rigor | SEP Budget | Percent of SEP Budget | Number of PAs |
| --- | --- | --- | --- | --- | --- |
| Building Codes and Standards | Building Codes and Standards: Codes | MH | $11,356,748 | 0% | 15 |
| Building Codes and Standards | Generalized Workshops and Demonstrations | MH | $19,223,610 | 1% | 2 |
| Building Codes and Standards | Targeted Training and/or Certification | MH | $2,489,921 | 0% | 10 |
| Building Retrofits | Building Retrofits: Nonresidential | H | $585,731,006 | 26% | 86 |
| Building Retrofits | Building Retrofits: Residential | H | $69,377,772 | 3% | 16 |
| Building Retrofits | Generalized Workshops and Demonstrations | MH | $667,990 | 0% | 1 |
| Building Retrofits | Targeted Training and/or Certification | MH | $26,537,692 | 1% | 11 |
| Loans, Grants and Incentives (Excl Retrofits and Projects) | Generalized Workshops and Demonstrations | MH | $4,047,962 | 0% | 3 |
| Loans, Grants and Incentives (Excl Retrofits and Projects) | Renewable Energy Market Development: Manufacturing | MH | $216,947,443 | 9% | 9 |
| Loans, Grants and Incentives (Excl Retrofits and Projects) | Targeted Training and/or Certification | MH | $9,558,163 | 0% | 3 |
| Loans, Grants and Incentives (Retrofits and Projects) | Building Retrofits: Nonresidential | H | $479,418,126 | 21% | 45 |
| Loans, Grants and Incentives (Retrofits and Projects) | Building Retrofits: Residential | H | $135,981,963 | 6% | 16 |
| Loans, Grants and Incentives (Retrofits and Projects) | Renewable Energy Market Development: Projects | MH | $295,725,557 | 13% | 57 |
| Renewable Energy Market Development | Generalized Workshops and Demonstrations | MH | $1,108,465 | 0% | 5 |
| Renewable Energy Market Development | Renewable Energy Market Development: Manufacturing | MH | $120,323,694 | 5% | 10 |
| Renewable Energy Market Development | Renewable Energy Market Development: Projects | MH | $299,531,840 | 13% | 58 |
| Renewable Energy Market Development | Targeted Training and/or Certification | MH | $14,852,017 | 1% | 8 |
| Total | | | $2,292,879,968 | 100% | 355 |

Our overall sampling targets were established based on the desired level of effort and available resources. The total number of PAs to be sampled was set at 53 for PY2008 and 29 for the ARRA period. Preliminary rigor level assignments were specified as follows:

PY2008: 24 High-rigor evaluations, 29 Medium-high-rigor evaluations

ARRA: 29 Medium-high-rigor evaluations

However, as noted, we determined that, given the results of the activity classifications and the budget constraints, only a limited number of cells were amenable to High-rigor evaluation. Limiting High-rigor evaluation to PY2008 while retaining the target of 24 would concentrate the PY2008 sample in only a few types of activities. Instead, we plan to distribute the High-rigor evaluations between PY2008 and the ARRA period.

Within these overall guidelines, we followed the steps outlined in Section 3.1.4 above. The resulting allocation produced the sampling targets shown in Figure 9 and Figure 10 for the PY2008 and ARRA periods, respectively.

The figures show the allocation that would result from allocating strictly in proportion to total budget (green highlighted columns) and the allocation that would result from allocating strictly in proportion to the number of PAs in each cell (red highlighted cells). The blue highlighted cells show the total number allocated through the iterative process, combining certainty and non-certainty PAs.
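The two benchmark allocations can be sketched in Python. Rounding each benchmark to the nearest whole PA (halves up) is assumed here; with the Figure 8 inputs below it reproduces the Figure 10 benchmark values for the three Building Codes and Standards cells and the budget-proportional value (7) for Building Retrofits: Nonresidential. The function names and abbreviated cell labels are hypothetical.

```python
import math


def round_half_up(x):
    """Round to the nearest whole PA, halves up (Python's round() rounds halves to even)."""
    return int(math.floor(x + 0.5))


def benchmark_allocations(cells, n, total_budget, total_pas):
    """Return {cell: (sample proportional to budget, sample proportional to # PAs)}.

    cells: dict mapping a cell label to (budget in dollars, number of PAs)
    n: total sample size for the period
    total_budget, total_pas: frame totals over all cells, not just those passed in
    """
    return {
        label: (round_half_up(n * budget / total_budget),
                round_half_up(n * pas / total_pas))
        for label, (budget, pas) in cells.items()
    }


# ARRA frame totals and a few cells from Figure 8 (labels abbreviated); n = 29.
ARRA_TOTAL_BUDGET = 2_292_879_968
ARRA_TOTAL_PAS = 355
ARRA_CELLS = {
    "Codes and Standards / Codes": (11_356_748, 15),
    "Codes and Standards / Workshops and Demonstrations": (19_223_610, 2),
    "Codes and Standards / Training and Certification": (2_489_921, 10),
    "Building Retrofits / Nonresidential": (585_731_006, 86),
}

if __name__ == "__main__":
    results = benchmark_allocations(ARRA_CELLS, 29, ARRA_TOTAL_BUDGET, ARRA_TOTAL_PAS)
    for label, (by_budget, by_pas) in results.items():
        print(f"{label}: {by_budget} by budget, {by_pas} by number of PAs")
```

Passing the frame totals explicitly matters: computing them from a subset of cells would inflate every share.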

The figures show a few cells with allocations of zero. These cells were initially included in the frame but were too small to receive a nonzero allocation under proportional allocation. All of them were included in the frame to ensure some coverage of evaluable Workshops/Demonstrations and Training/Certification (subcategory) activities. We did not force allocations to these cells, because enough other activities in these subcategories were already included.

There are a few cells (highlighted in yellow) where the final proposed allocation differs from the iteratively allocated targets (in blue).

For PY2008, the iterative allocation results in a target of 10 for Clean Energy Policy Support. That target would be 19 percent of the sample, for a cell that accounts for 12 percent of the budget and 23 percent of the PAs. We reduced this allocation to eight and added one each to Building Retrofit/Technical Assistance to Building Owners and to Renewable Energy Market Development/Generalized Workshops and Demonstrations (yellow highlighted cells).
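The percentages in this adjustment follow from simple arithmetic on the PY2008 sample of 53. A small Python check is sketched below; the constant and function names are illustrative, and the 12 and 23 percent budget and PA shares quoted in the text are not recomputed here.

```python
# Worked check of the PY2008 Clean Energy Policy Support adjustment (illustrative names).
PY2008_SAMPLE = 53        # total PY2008 PAs to be sampled
ITERATIVE_TARGET = 10     # target from the iterative allocation
FINAL_TARGET = 8          # target after the reduction
ADDED_ELSEWHERE = [1, 1]  # one PA each to two other cells (yellow cells)


def percent_of_sample(target, total=PY2008_SAMPLE):
    """Share of the PY2008 sample, in percent, represented by a cell target."""
    return 100 * target / total


if __name__ == "__main__":
    print(f"iterative target: {percent_of_sample(ITERATIVE_TARGET):.0f}% of sample")  # 19%
    print(f"final target: {percent_of_sample(FINAL_TARGET):.1f}% of sample")          # 15.1%
    # The two PAs removed are reassigned, so the PY2008 total stays at 53.
    print(ITERATIVE_TARGET - FINAL_TARGET == sum(ADDED_ELSEWHERE))                    # True
```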

For ARRA, the rounding of cell targets resulted in a total of 27 selections instead of the targeted 29. We added one each to Loans, Grants and Incentives/Renewable Energy Market Development: Projects and Renewable Energy Market Development/Renewable Energy Market Development: Projects (yellow highlighted cells).
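Rounding each cell's target independently can leave the total short of the design sample, as happened for the ARRA period. The Python sketch below reproduces that situation with hypothetical fractional targets and then, as in the text, adds one PA each to designated cells. The cell labels, values, and helper names are illustrative assumptions, and the choice of which cells to top up remains an evaluator judgment rather than something the code decides.

```python
import math


def round_half_up(x):
    """Round to the nearest integer, halves up (avoids Python's round-half-to-even)."""
    return int(math.floor(x + 0.5))


def round_and_top_up(fractional_targets, n, top_up_cells):
    """Round each cell target, then add one PA to each listed cell until the total reaches n.

    Returns (integer targets, shortfall introduced by rounding). If the shortfall exceeds
    the number of listed cells, only len(top_up_cells) PAs are added.
    """
    targets = {cell: round_half_up(x) for cell, x in fractional_targets.items()}
    shortfall = n - sum(targets.values())
    for cell in top_up_cells[:max(shortfall, 0)]:
        targets[cell] += 1
    return targets, shortfall


# Hypothetical fractional targets summing to 29; most round down, so rounding alone yields 27.
FRACTIONAL = {"A": 7.4, "B": 6.4, "C": 4.4, "D": 3.4, "E": 3.4, "F": 2.4, "G": 1.6}

if __name__ == "__main__":
    targets, shortfall = round_and_top_up(FRACTIONAL, 29, ["E", "F"])
    print(shortfall, sum(targets.values()), targets)
```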

The figures also show that, in most cases, the proposed targets fall within the range bracketed by allocation proportional to budget and allocation proportional to the number of PAs. Allocations below the budget-proportional level are mostly associated with cells that have large numbers of certainty selections.
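This section does not restate how certainty selections are identified. One common approach in budget-weighted designs, assumed here purely for illustration and not necessarily the procedure in Section 3.1.4, treats the largest remaining PA as a certainty selection whenever its budget is at least the remaining cell budget divided by the remaining sample slots, and repeats until no PA qualifies. The Python sketch uses hypothetical budgets to show how a cell dominated by a few large PAs fills much of its target with certainty selections.

```python
def split_certainty(budgets, n):
    """Split a cell's sample target n into certainty PAs and non-certainty slots.

    Generic iterative rule (assumed, not taken from the study): the largest remaining
    PA is a certainty selection if its budget is at least the remaining cell budget
    divided by the remaining sample slots; remove it and repeat.

    budgets: PA budgets in the cell
    Returns (list of certainty budgets, number of non-certainty slots left).
    """
    remaining = sorted(budgets, reverse=True)
    certainty = []
    slots = n
    while slots > 0 and remaining:
        threshold = sum(remaining) / slots  # budget represented by each open slot
        if remaining[0] < threshold:
            break  # no remaining PA is large enough to be taken with certainty
        certainty.append(remaining.pop(0))
        slots -= 1
    return certainty, slots


if __name__ == "__main__":
    # Hypothetical cell: seven PAs (budgets in $ millions) with a target of four.
    certainty, non_certainty = split_certainty([5, 50, 10, 20, 5, 5, 5], 4)
    print(certainty, non_certainty)  # [50, 20] 2
```

Recomputing the threshold after each removal is what makes the rule iterative: removing a large PA lowers the remaining budget per slot, which can qualify the next-largest PA.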

Finally, the figures indicate that the targets should be achievable given the number of PAs available in each cell and the assumed success rates; that is, the likely shortfall is zero.
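A likely-shortfall check of this kind can be sketched in Python. The study's assumed success rates are not given in this section, so the 0.5 rate below is a placeholder assumption, and rounding expected completions down is a conservative choice made here. The targets and available counts are the three Building Codes and Standards cells in Figure 10.

```python
import math


def likely_shortfall(target, available, success_rate):
    """Expected unmet target in a cell, floored at zero.

    Expected completions are rounded down (a conservative assumption):
    floor(available * success_rate).
    """
    expected_completions = math.floor(available * success_rate)
    return max(0, target - expected_completions)


# (final proposed target, available PAs) for the Building Codes and Standards cells in Figure 10.
CELLS = {
    "Building Codes and Standards: Codes": (2, 15),
    "Generalized Workshops and Demonstrations": (1, 2),
    "Targeted Training and/or Certification": (1, 10),
}
ASSUMED_SUCCESS_RATE = 0.5  # placeholder; not the study's assumed rate

if __name__ == "__main__":
    for cell, (target, available) in CELLS.items():
        print(cell, likely_shortfall(target, available, ASSUMED_SUCCESS_RATE))
```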

Figure 10: Allocated Sampling Targets by BPAC/Subcategory and Rigor Level (ARRA) |

||||||||||||||

BPAC |

Sub-category |

Target Rigor |

Budget |

Population # PAs |

Iteratively Allocated Sample Size |

Likely Shortfall |

Final Proposed Target |

|||||||

Budget |

% Budget |

Sample Proportional to Budget |

Population # PAs |

% Population # PAs |

Sample Proportional to # PAs |

Certainty |

Non-Certainty |

Total |

% Sample Total |

|||||

Building Codes and Standards |

Building Codes and Standards: Codes |

MH |

$11,356,748 |

0% |

0 |

15 |

4% |

1 |

0 |

2 |

2.0 |

7% |

0 |

2 |

Building Codes and Standards |

Generalized Workshops and Demonstrations |

MH |

$19,223,610 |

1% |

0 |

2 |

1% |

0 |

0 |

1 |

1.0 |

3% |

0 |

1 |

Building Codes and Standards |

Targeted Training and/or Certification |

MH |

$2,489,921 |

0% |

0 |

10 |

3% |

1 |

0 |

1 |

1.0 |

3% |

0 |

1 |

Building Retrofits |

Building Retrofits: Nonresidential |

H |

$585,731,006 |

26% |

7 |

86 |

24% |