NEi3 OMB SS Part A 10-22-14


National Evaluation of the Investing in Innovation (i3) Program

OMB: 1850-0913

Supporting Statement for Information Collection Request—Part A


October 22, 2014

Prepared for:

Tracy Rimdzius

Institute of Education Sciences

U.S. Department of Education

555 New Jersey Avenue, NW

Washington, DC 20208

Submitted by:

Abt Associates

55 Wheeler Street

Cambridge, MA 02138

A.1 Circumstances Requiring the Collection of Data

A.2 Purposes and Use of the Data

A.2.1 Goal One: Describe the intervention implemented by each i3 grantee

A.2.2 Goal Two: Present the evidence produced by each i3 evaluation

A.2.3 Goal Three: Assess the strength of the evidence produced by each i3 evaluation

A.2.4 Goal Four: Identify effective and promising interventions

A.2.5 Goal Five: Assess the results of the i3 Program

A.2.6 Alignment of the data collection goals and the data elements

A.3 Use of Information Technology to Reduce Burden

A.4 Efforts to Identify Duplication

A.6 Consequences of Not Collecting the Information

A.7 Special Circumstances Justifying Inconsistencies with Guidelines in 5 CFR 1320.6

A.8 Consultation Outside the Agency

A.8.1 Federal Register announcement

A.8.2 Consultations Outside the Agency

A.9 Payments or Gifts to Respondents

A.10 Assurance of Confidentiality

A.11 Questions of a Sensitive Nature

A.12 Estimates of Response Burden

A.14 Estimates of Costs to the Federal Government

A.16 Plans for Publication, Analysis, and Schedule

A.16.1 Approach to i3 analysis and reporting

A.16.2 Plans for publication and study schedule

A.17 Approval to Not Display Expiration Date

The Institute of Education Sciences (IES), within the U.S. Department of Education (ED), requests that the Office of Management and Budget (OMB) approve, under the Paperwork Reduction Act of 1995, clearance for IES to conduct data collection efforts for the National Evaluation of the Investing in Innovation (i3) Program. The i3 Program is designed to support school districts and nonprofit organizations in expanding, developing, and evaluating evidence-based practices and promising efforts to improve outcomes for the nation’s students, teachers, and schools. Each i3 grantee is required to fund an independent evaluation in order to document the implementation and outcomes of the educational practices. The National Evaluation of i3 (NEi3) has two goals: (1) to provide technical assistance (TA) to support the independent evaluators in conducting evaluations that are well-designed and well-implemented, and (2) to summarize the strength of the evidence and findings from the independent evaluations.

Data collection will be required to address the second goal of summarizing the strength of the evidence and findings of the individual i3 evaluations being conducted by independent evaluators contracted by each i3 grantee. The required data collection will entail each i3 evaluator completing a Primary Survey; some evaluators will also complete an Early or a Follow-Up Survey (using the exact same survey template as the Primary Survey). Part A of this request discusses the justification for these data collection activities. Part B, submitted under separate cover, describes the data collection procedures.

To date, ED has awarded over $1 billion in funding through i3. In September 2010, ED awarded approximately $650 million in grants to the Fiscal Year (FY) 2010 cohort of i3 grantees to support the implementation and evaluation of 49 programs (4 Scale-Up grants, 15 Validation grants, and 30 Development grants) aimed at improving educational outcomes for schools, teachers, and students. The following year, ED awarded approximately $150 million to the FY 2011 cohort of i3 grantees, including 23 programs (1 Scale-Up grant, 5 Validation grants, and 17 Development grants). Then, in December 2012, ED awarded approximately $140 million in grants to the FY 2012 cohort, including 20 programs (8 Validation grants and 12 Development grants). Finally, in December 2013, ED awarded approximately $112 million in grants to the FY 2013 cohort, including 25 programs (7 Validation grants and 18 Development grants). Scale-Up grants are intended to support scaling up programs that have strong evidence of their effectiveness in some settings. Validation grants were awarded to programs to validate their effectiveness, and Development grants were awarded to programs to establish the feasibility of implementing the program and to test its promise for improving outcomes.
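As a quick arithmetic check on the cohort figures above, the following Python sketch tallies the reported grant counts and approximate award amounts. The dollar values are the rounded "approximately" figures from the text, so the computed total is itself approximate; the dictionary layout is purely illustrative.

```python
# Approximate award totals (millions of dollars) and grant counts by type,
# as reported for each i3 cohort in the paragraph above.
cohorts = {
    "FY 2010": {"millions": 650, "Scale-Up": 4, "Validation": 15, "Development": 30},
    "FY 2011": {"millions": 150, "Scale-Up": 1, "Validation": 5, "Development": 17},
    "FY 2012": {"millions": 140, "Scale-Up": 0, "Validation": 8, "Development": 12},
    "FY 2013": {"millions": 112, "Scale-Up": 0, "Validation": 7, "Development": 18},
}

GRANT_TYPES = ("Scale-Up", "Validation", "Development")


def grants_in(cohort):
    """Total number of grants awarded to one cohort."""
    return sum(cohort[t] for t in GRANT_TYPES)


def summarize(cohorts):
    """Return (total grants, total millions of dollars, grants by type)."""
    total_grants = sum(grants_in(c) for c in cohorts.values())
    total_millions = sum(c["millions"] for c in cohorts.values())
    by_type = {t: sum(c[t] for c in cohorts.values()) for t in GRANT_TYPES}
    return total_grants, total_millions, by_type


if __name__ == "__main__":
    for name, c in cohorts.items():
        print(f"{name}: {grants_in(c)} grants, ~${c['millions']} million")
    grants, millions, by_type = summarize(cohorts)
    print(f"All cohorts: {grants} grants, ~${millions} million, {by_type}")
```

Run as a script, it reports 117 grants across the four cohorts (5 Scale-Up, 35 Validation, and 77 Development) and roughly $1,052 million in awards, consistent with the statement that ED has awarded over $1 billion through i3.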

The i3 Program, overseen by ED’s Office of Innovation and Improvement (OII), requires each grantee to fund an independent evaluation in order to document the implementation and outcomes of the educational practices. Although ED always intended for grantees to fund evaluations that estimate the impact of the i3-supported practice (as implemented at the proposed level of scale) on a relevant outcome, this expectation was stated explicitly only beginning with the FY 2013 i3 competition. The Scale-Up and Validation grantees are expected to fund rigorous impact studies using experimental or quasi-experimental designs (QEDs). These evaluations have the potential to yield strong evidence about a range of approaches to improving educational outcomes. Development grantees also fund independent evaluations, but those evaluations are not necessarily expected to be rigorous. IES has contracted with Abt Associates and its partners1 (the i3 Evaluation Team) to conduct the NEi3.

Achieving the first goal of the NEi3 requires one group within the i3 Evaluation Team, called the Technical Assistance (TA) Team, to take an active role in maximizing the strength of the design and implementation of the evaluations, so that the evaluations yield the strongest evidence possible about what works for whom in efforts to improve educational outcomes. Achieving the second goal of the NEi3 requires a separate group within the i3 Evaluation Team, known as the Analysis and Reporting (AR) Team, to (1) assess the evaluation designs and implementation, both to inform ED of the progress of the evaluations2 and to provide important context for the summary of results, and (2) provide clear synopses of what can be learned from this unprecedented investment in educational innovation, in summaries that are cogent and easily understood by a wide range of stakeholders, including educators and school administrators, policymakers, ED, and the public. To ensure that the work of the TA Team does not influence the assessments and analysis of the AR Team, the two groups of staff members do not overlap and are separated by a firewall maintained by the Project Director. The systematic data collection proposed for the NEi3 is necessary to provide the information the AR Team needs to review and summarize the findings from the independent evaluations funded by i3. The data collection will be led by the AR Team, with oversight from the Project Director.

This section of the supporting statement provides an overview of the research design and data collection efforts planned to meet the main research questions and overall objectives of the NEi3. The section begins with an overview of the research design, including the main objectives of the evaluation and key research questions, followed by a description of the data collection activities for which OMB clearance is requested.

As noted earlier, the main objectives of the NEi3 are (1) to provide technical assistance to support the independent evaluators in conducting evaluations that are well-designed and well-implemented, and (2) to summarize the strength of the evidence and findings from the independent evaluations. The data collected for this evaluation will address the second objective. Specifically, the data will be used to support reviews and reports to ED that have five goals:

Describe the intervention implemented by each i3 grantee.

Present the evidence produced by each i3 evaluation, along with assessment of its strength.

Assess the strength of the evidence produced by each i3 evaluation.

Identify effective and promising interventions.

Assess the results of the i3 Program.

These five goals are described in more detail below, along with the research questions that address each goal.

Goal One: Describe the intervention implemented by each i3 grantee

While the programs are described in the applications the grantees submitted for funding, it will be important to augment these descriptions with more detailed information than could fit within the applications’ page limits, and to reflect what actually happened (as opposed to what was planned at the application stage). This information will allow the i3 Evaluation Team to describe key components of each i3-funded intervention, such as the approach taken to training teachers and delivering instruction to students. An accurate description of each project’s intervention is critical to ED’s understanding of the breadth and depth of the approaches funded, and of what these specific projects can potentially teach the field about promising approaches to education reform.

This goal will be fulfilled by answering the following research questions:

Q1. What are the components of the intervention?

Q2. What are the ultimate student and teacher outcomes that the intervention is designed to affect?

Q3. What are the intermediate outcomes through which the intervention is expected to affect student and teacher outcomes?

Goal Two: Present the evidence produced by each i3 evaluation

Beginning in Spring 2016, we will present the available findings from both the implementation and impact studies as they emerge from the i3 evaluations. In particular, we will report findings on implementation fidelity and findings from the impact analyses. The NEi3 will report the information provided by the independent evaluators for each i3 intervention (after verifying and processing those data).

This goal will be fulfilled by answering the following research questions:

Q5. For each i3 grant, how faithfully was the intervention implemented?

Q6. For each i3 grant, what were the effects of the intervention on educational outcomes, or its promise for improving them?

Goal Three: Assess the strength of the evidence produced by each i3 evaluation

Periodic assessments of the i3 independent evaluations are essential to the NEi3. In addition to providing ED with information necessary to report key Government Performance and Results Act (GPRA) measures, these assessments provide important context for interpreting the findings generated by the i3 independent evaluations and summarized in the reports. Specifically, ED has explicitly articulated the expectation that Scale-Up and Validation grantees conduct evaluations that are “well-designed and well-implemented,” as defined by the standards of the What Works Clearinghouse (WWC), because findings generated by such studies provide more convincing evidence of the effectiveness of the programs than evaluations that do not meet this standard. ED expects evaluations of Development grants to provide evidence on the intervention’s promise for improving student outcomes.3

This goal will be fulfilled by answering the following research questions:

Q4. For each i3 grant, how strong is the evidence on the causal link between the intervention and its intended student or teacher outcomes?

Q4a. For Scale-Up and Validation grants, did the evaluation provide evidence on the effects of the intervention?

Q4b. For Development grants, did the evaluation produce evidence on whether the intervention warrants a more rigorous study of the intervention’s effects? For Development grants, if applicable, did the evaluation provide evidence on the outcomes of the intervention?

Goal Four: Identify effective and promising interventions

To help school and district officials identify effective interventions, we will produce lists of interventions with positive effects/outcomes. Since educational interventions are typically designed to improve outcomes in one or more domains, and are targeted for students at particular grade levels, we will list interventions by outcome domain and educational level (e.g., reading achievement for students in elementary school). Each list will include the names of the i3-funded interventions that produced positive effects, based on i3 studies that meet WWC evidence standards (with or without reservations).4
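The listing rule described above (keep interventions with positive effects from studies that meet WWC evidence standards, with or without reservations, then group them by outcome domain and educational level) can be sketched as a filter-and-group step. This is a minimal illustration, not the NEi3's actual procedure: the `Finding` record, its field names, the rating labels, and the example interventions are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical rating labels, modeled on the WWC categories "meets standards
# without reservations" and "meets standards with reservations".
MEETS_STANDARDS = {"meets without reservations", "meets with reservations"}


@dataclass
class Finding:
    """One impact finding from an i3 study (fields are illustrative)."""
    intervention: str      # name of the i3-funded intervention
    domain: str            # outcome domain, e.g. "reading achievement"
    level: str             # educational level, e.g. "elementary school"
    positive_effect: bool  # whether the study found a positive effect
    wwc_rating: str        # hypothetical study rating label


def effective_lists(findings):
    """Map each (domain, level) pair to the sorted names of interventions
    with a positive effect from a study that meets standards."""
    lists = defaultdict(set)
    for f in findings:
        if f.positive_effect and f.wwc_rating in MEETS_STANDARDS:
            lists[(f.domain, f.level)].add(f.intervention)
    return {key: sorted(names) for key, names in lists.items()}


if __name__ == "__main__":
    # Made-up findings for illustration only.
    example = [
        Finding("Program A", "reading achievement", "elementary school", True, "meets without reservations"),
        Finding("Program B", "reading achievement", "elementary school", True, "does not meet"),
        Finding("Program C", "math achievement", "middle school", True, "meets with reservations"),
        Finding("Program D", "math achievement", "middle school", False, "meets without reservations"),
    ]
    for (domain, level), names in sorted(effective_lists(example).items()):
        print(f"{domain} / {level}: {', '.join(names)}")
```

Using a set before sorting means an intervention with several qualifying findings in the same domain and level appears only once on that list.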

This goal will be fulfilled by answering the following research questions:

Q7. Which i3-funded interventions were found to be effective at improving student or teacher outcomes?

Q8. Which i3-funded interventions were found to be promising at improving student or teacher outcomes?

Goal Five: Assess the results of the i3 Program

To inform federal policymakers, we propose to summarize the effectiveness of the i3-funded interventions.

This goal will be fulfilled by answering the following research questions:

Q9. How successful were the i3-funded interventions?

Q9a. What fraction of interventions was found to be effective or promising?

Q9b. What fraction of interventions produced evidence that met i3 evidence standards (with or without reservations) or i3 criteria for providing credible evidence of the intervention’s promise for improving educational outcomes?

Q9c. What fraction of interventions produced credible evidence of implementation fidelity?

Q9d. Did Scale-Up grants succeed in scaling up their interventions as planned?
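Questions Q9a through Q9c reduce to simple proportions over the set of i3-funded interventions once each intervention has been classified. The sketch below assumes that classification has already been done and is stored as hypothetical boolean flags; it does not cover Q9d, which concerns whether Scale-Up grants reached their planned scale rather than a proportion of all interventions.

```python
from dataclasses import dataclass


@dataclass
class InterventionSummary:
    """Hypothetical per-intervention classification flags."""
    name: str
    effective_or_promising: bool      # Q9a: found effective or promising
    met_evidence_criteria: bool       # Q9b: met i3 standards or i3 criteria
    credible_fidelity_evidence: bool  # Q9c: credible evidence of fidelity


def program_fractions(summaries):
    """Return the fraction of interventions meeting each Q9a-Q9c criterion."""
    n = len(summaries)
    if n == 0:
        raise ValueError("no interventions to summarize")
    return {
        "Q9a": sum(s.effective_or_promising for s in summaries) / n,
        "Q9b": sum(s.met_evidence_criteria for s in summaries) / n,
        "Q9c": sum(s.credible_fidelity_evidence for s in summaries) / n,
    }


if __name__ == "__main__":
    # Made-up classifications for four interventions.
    example = [
        InterventionSummary("A", True, True, True),
        InterventionSummary("B", False, True, False),
        InterventionSummary("C", True, False, True),
        InterventionSummary("D", False, False, True),
    ]
    print(program_fractions(example))
```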

As stated above, the NEi3 has nine research questions that address the five major goals of the evaluation. Exhibit 1 presents a crosswalk of the purposes of the data collection, the research questions, and the individual survey items. Appendix A contains the full data collection survey.

Exhibit 1. Crosswalk Aligning Goals, Research Questions, and Survey Items

Goal 1: Describe the intervention implemented by each i3 grantee.

| Research Question | Survey Items (Sections and Question Numbers) |
|---|---|
| What are the components of the intervention? | IMPLEMENTATION: A, B, D, J, P, V, AB, & AG |
| What are the ultimate student or teacher outcomes that the intervention is designed to affect? | IMPACT: F; BACKGROUND: 9 |
| What are the intermediate outcomes through which the intervention is expected to affect student or teacher outcomes? | IMPACT: F; IMPLEMENTATION: A & B; BACKGROUND: 9 |

Goal 2: Present the evidence produced by each i3 evaluation.

| Research Question | Survey Items (Sections and Question Numbers) |
|---|---|
| For each i3 grant, how faithfully was the intervention implemented? | IMPLEMENTATION: C, F-I, L-O, R-U, X-AA, AD-AF, AH-AO |
| For each i3 grant, what were the effects of the interventions on educational outcomes? | IMPACT: CA-CQ |

Goal 3: Assess the strength of the evidence produced by each i3 evaluation.

| Research Question | Survey Items (Sections and Question Numbers) |
|---|---|
| For each i3 grant, how strong is the evidence on the causal link between the intervention and its intended student or teacher outcomes? Was the evaluation well-designed and well-implemented (i.e., for Scale-Up and Validation grants, did the evaluation provide evidence on the effects of the intervention)? Did the evaluation provide evidence on the intervention’s promise for improving student or teacher outcomes (i.e., for Development grants, did the evaluation produce evidence on whether the intervention warrants a more rigorous study of the intervention’s effects)? | BACKGROUND: 17-19; IMPACT: A, B, F-CG; IMPLEMENTATION: E, K, Q, W, & AC |

Goal 4: Identify effective and promising interventions.

| Research Question | Survey Items (Sections and Question Numbers) |
|---|---|
| Which i3-funded interventions were found to be effective at improving student or teacher outcomes? | IMPACT: A, B, F-CG; BACKGROUND: 17-19 |
| Which i3-funded interventions were found to be promising at improving student or teacher outcomes? | IMPACT: A, B, F-CG; BACKGROUND: 17-19 |

Goal 5: Assess the results of the i3 Program.

| Research Question | Survey Items (Sections and Question Numbers) |
|---|---|
| How successful were the i3-funded interventions? What fraction of interventions was found to be effective or promising? What fraction of interventions produced evidence that met i3 evidence standards or i3 criteria? What fraction of interventions produced credible evidence of implementation fidelity? Did Scale-Up grants succeed in scaling-up their interventions as planned? | BACKGROUND: 1-20; IMPACT: A, B, F-CG; IMPLEMENTATION: A-D, F-J, L-P, R-V, X-AB, AD-AO |

Beginning in January 2015, data on the i3 evaluations’ designs, activities, and findings will be collected annually from the evaluator of each i3 project that, at the time of that year’s data collection, has findings the evaluator determines are ready to share with the NEi3 (that is, findings that are final and will not be subject to future changes). The types of information available from evaluators each year will vary across the i3 projects depending on the duration of their funding (which ranges from 3 to 5 years) and the cohort to which they belong (FY 2010, FY 2011, FY 2012, or FY 2013).

Each i3 evaluator will be required to participate in at least one of the five annual data collection periods planned by the NEi3. Three of these data collections are covered by this clearance package; the remaining two will be included in a future OMB clearance package. While evaluators are permitted to submit data during each of the five annual data collection periods, we anticipate that evaluators will submit most of their data during a single period, which we refer to as the evaluator’s Primary Data Collection. We also expect that some evaluators may choose to submit a limited number of early findings before that period or additional findings after it; we refer to these periods as the evaluator’s Early Data Collection and Follow-Up Data Collection, respectively. During each grant’s Primary Data Collection (and Early Data Collection, for grants that choose to submit early findings), we will ask the independent evaluator to complete a survey sharing all findings available to date. In subsequent years, we will ask evaluators to report any changes or updates to their evaluations by completing a Follow-Up Survey, which will use the same survey template as the Primary Survey.

Prior to any data collection, we will pre-populate surveys for evaluators based on extant data they share with us (existing design documents, existing reports, conference presentations, etc.) and documents produced to fulfill requirements of ED’s cooperative agreements with the grantees.

Each i3 grant will undergo the following process on a timeline that is unique to its cohort and its award length (i3 grants were funded for periods of anywhere between three and five years), but will include the same key components.

For each grant, we will:

1) Review documents produced by evaluators to fulfill requirements of ED’s cooperative agreements with the grantees.

2) Collect extant data on the study’s findings during the later years of the grant when findings are available (starting in January 2015).

3) Use documents described in 1) and 2) to pre-populate a Primary Survey.

4) Send the evaluator a link to a customized, pre-populated Primary Survey asking them to a) actively confirm pre-populated information and b) enter missing data.

5) If needed (because the evaluator produces findings after completing a Primary Survey), repeat steps 2), 3), and 4) for data related to the additional findings only.
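The pre-population and survey steps above amount to splitting each survey into items the AR Team could fill from extant documents, which the evaluator must actively confirm, and items left blank, which the evaluator must enter. A minimal sketch, assuming survey items are keyed by hypothetical item IDs and that values abstracted from evaluator documents arrive as a dictionary; the function names are illustrative, not part of the NEi3 survey system.

```python
def prepare_survey(item_ids, extant_values):
    """Split survey items into pre-populated items (for the evaluator to
    confirm) and missing items (for the evaluator to enter).

    item_ids: ordered list of survey item identifiers (hypothetical IDs).
    extant_values: dict of item ID -> value abstracted from documents the
        evaluator has already shared; blank or absent values count as missing.
    """
    prepopulated = {}
    missing = []
    for item in item_ids:
        value = extant_values.get(item)
        if value is None or value == "":
            missing.append(item)
        else:
            prepopulated[item] = value
    return prepopulated, missing


def survey_complete(prepopulated, confirmed, entered, missing):
    """True once every pre-populated item has been actively confirmed and
    every missing item has been entered by the evaluator."""
    return set(prepopulated) <= set(confirmed) and set(missing) <= set(entered)


if __name__ == "__main__":
    # Hypothetical item IDs and extant values for one grant.
    items = ["BACKGROUND-1", "IMPACT-A", "IMPACT-B", "IMPLEMENTATION-A"]
    extant = {"BACKGROUND-1": "Validation", "IMPACT-A": "Randomized controlled trial"}
    pre, missing = prepare_survey(items, extant)
    print("Evaluator confirms:", sorted(pre))
    print("Evaluator enters:", missing)
    print("Complete yet?", survey_complete(pre, confirmed=[], entered=missing, missing=missing))
```

For a later round covering additional findings only, the same split could be applied to just the items tied to those findings.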

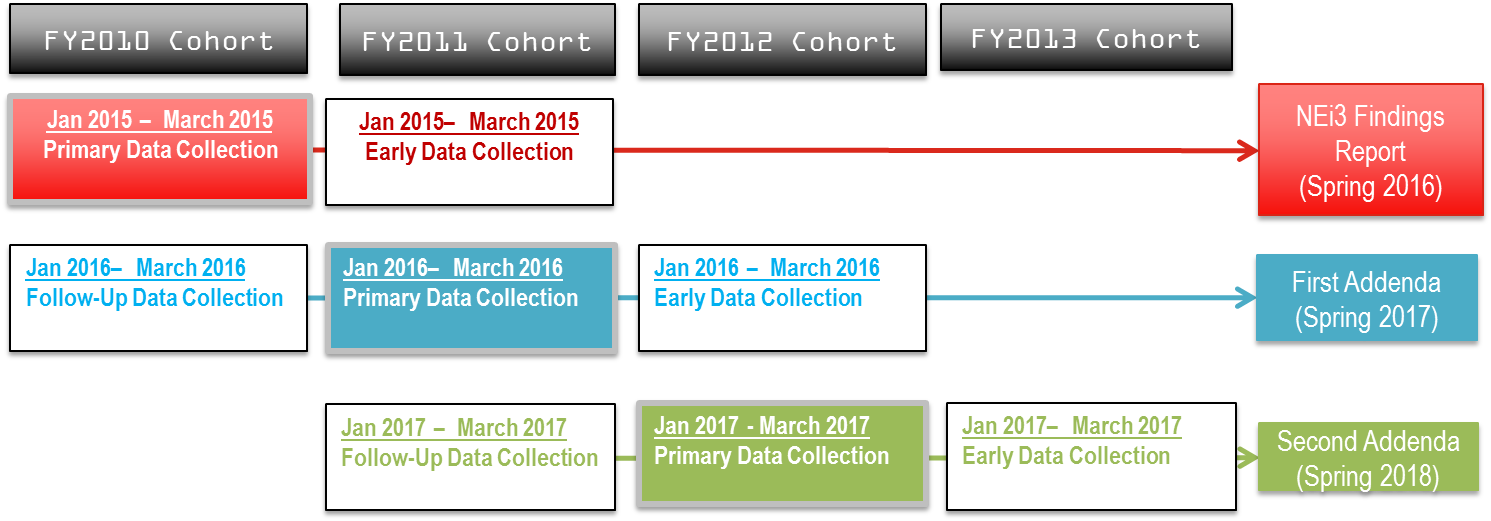

Exhibit 2, panel 1, below provides a summary of our report release dates associated with each of the three data collections described in this clearance package and the types of data to be collected during Early, Primary and Follow-Up Data Collections; panel 2 details when we expect each cohort will participate in Early, Primary, and Follow-Up Data Collections covered in this OMB package.

Exhibit 2: Data Collection and Reporting Plan Overview

Panel 1: Description of Reports and Data Collection

| NEi3 Report | Type of Data Collection | Timing of Data Collection | Type of Data to be Collected |
|---|---|---|---|
| NEi3 Findings Report (Expected Release Date: Spring 2016) | Early and Primary Data Collection | January–March 2015 | Early and Primary Data Collection |
| Report Addenda (Expected Release Dates: Spring 2017, Spring 2018) | Early, Primary and Follow-Up Data Collection | January–March, annually from 2015–2017 | Early and Primary Data Collection. The NEi3 will collect the data listed in the five above bullets from additional i3-funded studies that have not previously participated in Data Collection. Follow-Up Data Collection. The NEi3 will collect the following information from grants that have already participated in a Primary Data Collection and have additional findings to share. |

Panel 2: Timing and Type of Data Collection by Cohort

A.2.6.2 Early and Primary Data Collection—Information on study design to be pre-populated by NEi3

At the time of Primary Data Collection, NEi3 team members will pre-populate the following information into the Primary Survey (see Appendix A) for all i3 independent evaluators. NEi3 team members will use documents produced by evaluators to fulfill requirements of ED’s cooperative agreements with the grantees.

Information on interventions, including:

Descriptions of the interventions.

Roles played by the people and organizations involved with the grant.

People or aspects of educational organizations that the intervention aims to affect or benefit.

Logic models of the inputs, mediators, and expected outputs of interventions. (For Scale-Up grants, this includes a logic model of the mechanisms and pathways of the scale-up process.)

Key elements of intervention implementation.

Scale of the intervention (in terms of the number of students, teachers, schools, and/or districts intended to be served).

Evaluation plans, including:5

Research questions (specified as confirmatory and exploratory).

Research design (i.e., randomized controlled trials, quasi-experimental designs with comparison groups, regression discontinuity designs, or other types of designs).

Treatment and counterfactual conditions (specifically, how the counterfactual differs from the intervention).

Characteristics of the potential intervention versus comparison group contrasts to be analyzed.

Plans for measuring fidelity of intervention implementation.

Outcomes or dependent variables (e.g., name, timing of measurement for the intervention and the comparison groups, reliability and validity properties, whether outcome data were imputed in the case of missing data, and a description of the measurement instrument if not standard).

Independent variables (e.g., name, timing of measurement, and reliability and validity properties).

Analysis methods, including descriptions of how impacts in the full sample and for subgroups will be estimated, how baseline equivalence will be established (in QEDs and RCTs with high attrition), and how clustering of participants and noncompliance with treatment assignment will be accounted for.

Role of the developers or grantee in the conduct of the evaluation (to assess the independence of the evaluators).

Description of the study sample, including:

Sample sizes (overall and per group).

Characteristics (e.g., grades or age levels).

Location.

A.2.6.3 Early and Primary Data Collection – Information on study findings to be pre-populated by NEi3 whenever possible based on existing reports, conference presentations, etc. shared with the NEi3 by i3 independent evaluators

Prior to Primary Data Collection, NEi3 team members will pre-populate surveys with the following information for all i3 independent evaluators based on any reports and other documents i3 independent evaluators share with us.

Evaluation findings from the implementation study, including:

Revised description of the intervention components.

Level of implementation fidelity.

Impacts on intermediate outcomes (e.g., teacher knowledge).

Evaluation findings from impact study for each contrast tested in the full sample or subgroups, plus information that is helpful in interpreting the impact findings, including:

Impact estimates.

Standard errors (to allow the NEi3 study team to conduct tests of statistical significance).

Standard deviation of the outcome measures (to allow the NEi3 study team to construct effect size measures).

Means and standard deviations for baseline variables (to allow the NEi3 study team to test for baseline equivalence).

Attrition rate and analysis sample sizes by group for each contrast.
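The items above let the NEi3 recompute key statistics from evaluators' summary results rather than relying only on reported conclusions. A minimal sketch of those calculations follows, under stated assumptions: the effect size is a simple standardized mean difference (impact divided by the outcome standard deviation), significance is a two-sided z-test using a normal approximation, and baseline differences are classified with WWC's thresholds (at most 0.05 SD satisfies equivalence, more than 0.05 up to 0.25 SD requires statistical adjustment, more than 0.25 SD does not satisfy it). The function names are hypothetical, and WWC procedures add refinements (such as small-sample corrections) that are omitted here.

```python
import math


def pooled_sd(sd_t, n_t, sd_c, n_c):
    """Pooled standard deviation of the treatment and comparison groups."""
    return math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2))


def effect_size(impact, outcome_sd):
    """Impact expressed in standard deviation units of the outcome."""
    return impact / outcome_sd


def two_sided_p(impact, se):
    """Two-sided p-value for H0: impact = 0, using a normal (z) approximation."""
    z = impact / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def baseline_equivalence(mean_t, mean_c, sd_t, n_t, sd_c, n_c):
    """Standardized baseline difference and its WWC category."""
    diff = abs(mean_t - mean_c) / pooled_sd(sd_t, n_t, sd_c, n_c)
    if diff <= 0.05:
        return diff, "satisfied"
    if diff <= 0.25:
        return diff, "requires statistical adjustment"
    return diff, "not satisfied"


def attrition_rates(assigned_t, analyzed_t, assigned_c, analyzed_c):
    """Overall and differential attrition from assigned and analyzed counts."""
    rate_t = 1 - analyzed_t / assigned_t
    rate_c = 1 - analyzed_c / assigned_c
    overall = 1 - (analyzed_t + analyzed_c) / (assigned_t + assigned_c)
    return overall, abs(rate_t - rate_c)


if __name__ == "__main__":
    # Hypothetical summary statistics for one contrast.
    print("effect size:", effect_size(impact=5.0, outcome_sd=20.0))
    print("p-value:", round(two_sided_p(impact=5.0, se=2.0), 4))
    print("baseline:", baseline_equivalence(101.0, 100.0, 10.0, 150, 10.0, 150))
    print("attrition:", attrition_rates(100, 90, 100, 80))
```

With these made-up inputs, an impact of 5 points on an outcome with a standard deviation of 20 corresponds to an effect size of 0.25, and a baseline gap of 1 point (0.1 SD) falls in the range that requires statistical adjustment.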

A.2.6.4 Early and Primary Data Collection – Information to be completed by evaluators

Any of the above data that are not available in existing reports will be collected from evaluators via our Primary Data Collection. Evaluators will only be asked to complete Primary Survey items that the NEi3 was not able to pre-populate based on the prior steps. Evaluators will be asked to complete all missing fields, and to actively verify the accuracy of data pre-populated by the NEi3.

A.2.6.5 Follow-Up Data Collection

During Follow-Up Data Collection, previously collected information will be updated as necessary. As before, we will request existing reports, conference presentations, and similar documents for use in pre-populating the Follow-Up Survey. Specifically, we will request information about any recent findings (not already reported in previous years), along with the associated information required to interpret them. This information will be the same as that requested during the Primary Data Collection, except that it will reflect analyses conducted after the Primary Data Collection. As previously mentioned, the Follow-Up Survey will use the same survey template as the Primary Survey.

The data collections covered in this OMB clearance package will occur from January through March of 2015, January through March of 2016, and January through March of 2017. The type of data collection (Early, Primary, or Follow-Up) varies by cohort and grant (see Exhibit 2, panel 2, for more information).

Exhibit 3: Overview of data collection timelines covered in this OMB Package

| Data Collection | January–March 2015 | January–March 2016 | January–March 2017 |
|---|---|---|---|

The data collection plan for the NEi3 reflects sensitivity to issues of efficiency, accuracy, and respondent burden in several ways. Data collection will take place in a two-stage process in which (1) the AR Team will pre-fill surveys for evaluators as much as possible based on evaluation documents previously received, and (2) independent i3 evaluators will respond to a web-based Early, Primary, or Follow-Up Survey that presents this pre-filled information for their review, along with new items appropriate to the timeline of their evaluation. This two-stage process will minimize the reporting burden on independent evaluators.

In addition, we will reduce burden on evaluators by using web-based data portals. Data will be collected using a combination of Microsoft Word-type survey items and Microsoft Excel-type tables and templates on secure website portals accessible only to individual i3 evaluation teams. The survey is designed so that respondents can complete part of the survey, check their records or research answers to questions about which they are unsure, and then complete the survey at a later time. An evaluation-specific username and password will be required each time the survey is accessed. Only the study team and the individuals issued usernames and passwords will have editing access to the website. The study contractor will track completed surveys in real time.

Potential duplications of effort are of two general types: addressing research questions already answered and duplicating data collection. The NEi3 will not address research questions already answered. As explained in Section A.1, the i3 grants were newly funded in FY 2010, FY 2011, FY 2012, and FY 2013, and this evaluation is the only federally funded information collection with plans to collect data in order to assess the extent to which the i3 independent evaluations are well-designed and well-implemented and to report the results across the evaluations. To avoid duplicating data collection efforts, independent evaluators will be encouraged to send the study design documents, reports, summaries, and information tables they constructed in their own work to the AR Team prior to the survey each year. The AR Team will review these documents and use the information in them to pre-fill survey item responses and sections for the independent evaluator to review, rather than asking evaluators to provide information they have already reported in existing evaluation documents.

The primary entities for this study are independent evaluators employed by large and small businesses and, in some cases, by universities. Every effort is being made to reduce the burden on the evaluators by collecting the study design documents, reports, summaries, and information tables that evaluators constructed in their own work, and by using web-based data collection. The specific plans for reducing burden are described in Section A.3.

The data collection described in this supporting statement is necessary for conducting this evaluation, which is consistent with the goals of the Investing in Innovation program to identify and document best practices that can be shared and taken to scale based on demonstrated success (American Recovery and Reinvestment Act of 2009 (ARRA), Section 14007(a)(3)(C)). The information that will be collected through this effort is also necessary to report on the performance measures of the i3 Program, required by the Government Performance and Results Act:

Long-Term Performance Measures

The percentage of programs, practices, or strategies supported by a [Scale-Up or Validation] grant that implement a completed well-designed, well-implemented and independent evaluation that provides evidence of their effectiveness at improving student or teacher outcomes.

The percentage of programs, practices, or strategies supported by a [Development] grant with a completed evaluation that provides evidence of their promise for improving student or teacher outcomes.

The percentage of programs, practices, or strategies supported by a [Scale-Up or Validation] grant with a completed well-designed, well-implemented and independent evaluation that provides information about the key elements and the approach of the project so as to facilitate replication or testing in other settings.

The percentage of programs, practices, or strategies supported by a [Development] grant with a completed evaluation that provides information about the key elements and the approach of the project so as to facilitate further development, replication or testing in other settings.

There are no special circumstances required for the collection of this information.

In accordance with the Paperwork Reduction Act of 1995 (Pub.L. No. 104-13) and Office of Management and Budget (OMB) regulations at 5 CFR Part 1320 (60 FR 44978, August 29, 1995), IES published a notice in the Federal Register announcing the agency’s intention to request an OMB review of data collection activities for the i3 Evaluation. The notice was published on August 13, 2014 in volume 79, No. 156, page 4744 and provided a 60-day period for public comment. No public comments have been received to date.

In consultation with ED, the Abt study team assembled a Technical Work Group (TWG) of consultants with various types of expertise relevant to this evaluation. The TWG convened in April 2011 and discussed the overall approach to the NEi3, including the provision of TA, data collection, and reporting. The members of this expert panel are listed in Exhibit 4.

Exhibit 4: Expert Panel Members

| Name | Affiliation |
|---|---|
| David Francis, Ph.D. | University of Houston |
| Tom Cook, Ph.D. | Northwestern University |
| Brian Jacob, Ph.D. | University of Michigan |
| Lawrence Hedges, Ph.D. | Northwestern University |
| Carolyn Hill, Ph.D. | Georgetown University |
| Chris Lonigan, Ph.D. | Florida State University |
| Neal Finkelstein, Ph.D. | WestEd |
| Bob Granger, Ph.D. | W.T. Grant Foundation |

Data collection for this study does not involve payments or gifts to respondents.

Abt Associates and its subcontractors follow the confidentiality and data protection requirements of IES (The Education Sciences Reform Act of 2002, Title I, Part E, Section 183), which require that all collection, maintenance, use and wide dissemination of data conform to the requirements of the Privacy Act of 1974 (5 U.S.C. 552a), the Family Educational Rights and Privacy Act of 1974 (20 U.S.C. 1232g), and the Protection of Pupil Rights Amendment (20 U.S.C. 1232h). The study team will not be collecting any individually identifiable information.

All study staff involved in collecting, reviewing, or analyzing study data will be knowledgeable about data security procedures. The privacy procedures adopted for this study for all data collection, data processing, and analysis activities include the following:

The study will not request any personally identifiable information (PII) collected by independent evaluators. All data requested from independent evaluators will be aggregated reports of the methods, measures, plans, and results of their independent evaluations.

Individual i3 grants will be identified in this study, and their characteristics, the results and findings reported by their independent evaluators, and assessments of the quality of the independent evaluations and i3 projects may be reported. However, because the study is not collecting that information, annual reports cannot associate responses with a specific school or individual participating in the i3 independent evaluations.

To ensure data security, all individuals hired by Abt Associates Inc. are required to adhere to strict standards and sign an oath of confidentiality as a condition of employment. Abt’s subcontractors will be held to the same standards.

All data files on multi-user systems will be under the control of a database manager, with access limited to project staff on a “need-to-know” basis only.

Identifiable data will be kept for three years after the project end date and then destroyed.

Written records will be shredded and electronic records will be purged.

The data collection for this study does not include any questions of a sensitive nature.

Burden for the data collection covered by this clearance request is 1,438 hours, for a total cost to respondents of $53,836. On an annual basis, 32 respondents will provide 43 responses, and the burden during each year of data collection will be 480 hours. Exhibit 5 presents time estimates of response burden for the data collection activities requested for approval in this submission. The burden estimates are based on the following assumptions:

The majority (80 percent) of evaluators will submit existing materials (reports, conference presentations, etc.) reporting their findings to the AR team.

The AR team will pre-fill survey item responses for evaluators with as much information as possible based on the documents they receive from evaluators reporting their findings and documents produced by evaluators to fulfill requirements of ED’s cooperative agreements with the grantees.

Evaluators will respond to a web-based Primary Survey (and possibly also an Early or Follow-Up Survey) that includes pre-filled information for their review as well as new survey items.

Estimated hourly costs to independent evaluators are based on an average hourly wage of $37.45 for social scientists and related workers, according to the U.S. Department of Labor, Bureau of Labor Statistics (U.S. Department of Labor 2009).
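The figures in Exhibit 5 follow from simple arithmetic: each row’s hours are its number of responses times the hours per response, costs are hours times the $37.45 wage, and the averages and annual figures divide the totals by the 97 respondents and by the three years covered (1/1/2015 through 12/31/2017). The sketch below recomputes them from the exhibit’s row values; the variable and function names are illustrative only. It computes the total cost from unrounded hours, which reproduces the $53,836 total (adding the four rounded row costs instead gives $53,837). Dividing total hours by three gives about 479.2 hours per year; Exhibit 5 reports 480.

```python
# Illustrative recomputation of the Exhibit 5 burden estimates.
# Row values are copied from Exhibit 5; all names here are hypothetical.
HOURLY_WAGE = 37.45      # BLS average hourly wage cited in the text
YEARS_COVERED = 3        # 1/1/2015 through 12/31/2017
TOTAL_RESPONDENTS = 97   # note (e): some grants respond more than once

# (activity, number of responses, burden hours per response)
ROWS = [
    ("Primary, submits extant data", 74, 8.510),
    ("Primary, no extant data", 18, 21.250),
    ("Early/Follow-Up, submits extant data", 30, 8.510),
    ("Early/Follow-Up, no extant data", 8, 21.250),
]


def summarize(rows, respondents, wage, years):
    """Return unrounded burden figures; round only when reporting."""
    row_hours = {name: n * h for name, n, h in rows}
    responses = sum(n for _, n, _ in rows)
    hours = sum(row_hours.values())
    cost = hours * wage
    return {
        "row_hours": row_hours,
        "responses": responses,
        "hours": hours,
        "cost": cost,
        "responses_per_respondent": responses / respondents,
        "hours_per_respondent": hours / respondents,
        "cost_per_respondent": cost / respondents,
        "annual_responses": responses / years,
        "annual_respondents": respondents / years,
        "annual_hours": hours / years,
    }


summary = summarize(ROWS, TOTAL_RESPONDENTS, HOURLY_WAGE, YEARS_COVERED)
print(f"Responses: {summary['responses']}")                               # Responses: 130
print(f"Burden hours: {summary['hours']:,.0f}")                           # Burden hours: 1,438
print(f"Cost: ${summary['cost']:,.0f}")                                   # Cost: $53,836
print(f"Cost per respondent: ${summary['cost_per_respondent']:.2f}")      # Cost per respondent: $555.01
print(f"Annual hours: {summary['annual_hours']:.1f}")                     # Annual hours: 479.2
```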

Exhibit 5. Estimate of Respondent Burden

| Data Collection Wave and Activity | Total Number of Expected Responses | Burden Hours per Response | Total Burden Hours | Hourly Cost per Response (f) | Total Costs |
|---|---|---|---|---|---|
| Primary Data Collection: Respond to Survey (a) | 92 | - | - | - | - |
| … Respondents who submit extant data on findings (b) | 74 | 8.510 | 629.7 | $37.45 | $23,584 |
| … Respondents who do NOT submit extant data on findings (b) | 18 | 21.250 | 382.5 | $37.45 | $14,325 |
| Early or Follow-Up Data Collection: Respond to Survey (c) | 38 | - | - | - | - |
| … Respondents who submit extant data on findings (d) | 30 | 8.510 | 255.3 | $37.45 | $9,561 |
| … Respondents who do NOT submit extant data on findings (d) | 8 | 21.250 | 170.0 | $37.45 | $6,367 |
| Total Number of Responses | 130 | - | 1,438 | $37.45 | $53,836 |
| Total Number of Respondents (e) | 97 | | | | |
| Average Number of Responses per Respondent | 1.34 | | | | |
| Overall Average Burden Hours per Respondent | 15 | | | | |
| Overall Average Cost per Respondent | $555.01 | | | | |
| Annual Number of Responses | 43 | | | | |
| Annual Number of Respondents | 32 | | | | |
| Annual Burden Hours | 480 | | | | |

Notes:

This OMB package covers the period from 1/1/2015 through 12/31/2017. It therefore includes all data collection activities for the 49 FY2010 grants and 23 FY2011 grants, Early and Primary Data Collection for the 20 FY2012 grants, and Early Data Collection for the 25 FY2013 grants.

Responding to the survey (during Primary, Early, or Follow-Up Data Collection) includes reviewing data pre-populated by the NEi3 from the extant data on findings submitted to it, and confirming any information the NEi3 pre-populated from its review of documents produced by evaluators to fulfill the requirements of ED’s cooperative agreements with the grantees.

(a) We expect 92 grants (all 49 FY2010 grants, all 23 FY2011 grants, and all 20 FY2012 grants) to participate in Primary Data Collection during the period covered by this OMB package.

(b) We expect approximately 80 percent (74) of the 92 Primary Data Collection participants to submit extant data before their Primary Data Collection.

(c) We expect 38 grants [40 percent (20) of the FY2010 grants, 40 percent (9) of the FY2011 grants, 20 percent (4) of the FY2012 grants, and 20 percent (5) of the FY2013 grants] to participate in Early or Follow-Up Data Collection.

(d) We expect approximately 80 percent (30) of the 38 Early or Follow-Up Data Collection participants to submit extant data before their Early or Follow-Up Data Collection.

(e) During the period covered by this OMB package, we expect a total of 97 survey respondents (49 FY2010 grants, 23 FY2011 grants, 20 FY2012 grants, and 5 FY2013 grants). This number differs from the number of responses because some of these grants will participate in both Primary and Early or Follow-Up Data Collection, while the FY2013 grants will participate only in Early Data Collection. We anticipate a 100 percent response rate among all grants expected to participate in data collection during this period.

(f) Average hourly wage for “Social Scientists and Related Workers, All Other” from the Industry-Specific Occupational and Wage Estimates, U.S. Department of Labor (see http://www.bls.gov/oes/current/oes193099.htm#nat), accessed May 24, 2011.
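The response and respondent counts in the notes to Exhibit 5 can be reproduced from the cohort sizes and the stated percentages. A minimal sketch follows, assuming (as the note on total respondents implies) that every FY2010, FY2011, or FY2012 grant in Early or Follow-Up Data Collection is also a Primary Data Collection respondent, so only the FY2013 grants add new respondents. The constant and function names are hypothetical.

```python
# Cohort sizes (number of grants) from the notes to Exhibit 5.
COHORT_SIZES = {"FY2010": 49, "FY2011": 23, "FY2012": 20, "FY2013": 25}
PRIMARY_COHORTS = {"FY2010", "FY2011", "FY2012"}          # note (a)
EARLY_FOLLOWUP_SHARE = {"FY2010": 0.40, "FY2011": 0.40,
                        "FY2012": 0.20, "FY2013": 0.20}    # note (c)
EXTANT_SHARE = 0.80                                        # notes (b) and (d)


def expected_counts():
    """Derive expected responses and respondents; round() gives whole grants."""
    primary = sum(COHORT_SIZES[c] for c in PRIMARY_COHORTS)
    early_by_cohort = {c: round(EARLY_FOLLOWUP_SHARE[c] * n)
                       for c, n in COHORT_SIZES.items()}
    early = sum(early_by_cohort.values())
    # Assumption: only grants outside the Primary cohorts are new respondents.
    new_respondents = sum(n for c, n in early_by_cohort.items()
                          if c not in PRIMARY_COHORTS)
    primary_extant = round(EXTANT_SHARE * primary)
    early_extant = round(EXTANT_SHARE * early)
    return {
        "primary": primary,                              # 92
        "primary_extant": primary_extant,                # 74
        "primary_no_extant": primary - primary_extant,   # 18
        "early_followup": early,                         # 38
        "early_extant": early_extant,                    # 30
        "early_no_extant": early - early_extant,         # 8
        "responses": primary + early,                    # 130
        "respondents": primary + new_respondents,        # 97
    }


print(expected_counts())
```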

There are no annualized capital, start-up, or ongoing operation and maintenance costs involved in collecting this information.

The estimated cost to the Federal Government of the activities across all of the NEi3 contracts (FY2010/FY2011 cohort, contract no. ED-IES-10-C-0064; FY2012 cohort, contract no. ED-IES-13-C-0005; and FY2013 cohort, contract no. ED-IES-14-C-0007) is $22,322,122 for the entire NEi3, including work on all contracts and tasks. These activities began in October 2010 and will continue until February 2020. Thus, the average annual cost to the Federal Government is approximately $2,391,366.

This is a request for a new collection of information.

In this section, we present our approach to analyzing the collected data to address the five goals of the data collection (and the related research questions) introduced in Section A.2: (1) Describe the intervention implemented by each i3 grantee; (2) Present the evidence produced by each i3 evaluation; (3) Assess the strength of the evidence produced by each i3 evaluation; (4) Identify effective and promising interventions; and (5) Assess the results of the i3 Program.

After describing our approach to addressing the five goals, we discuss our plans for reporting the results. The five goals and research questions we plan to address over the course of our evaluation, presented in Section A.2 above, are reiterated in Exhibit 6.

Exhibit 6: NEi3’s Goals and Research Questions

| Goal | Research Question |
|---|---|
| Goal 1: Describe the intervention implemented by each i3 grantee. | Q1. What are the components of the intervention? |
| | Q2. What are the ultimate student or teacher outcomes that the intervention is designed to affect? |
| | Q3. What are the intermediate outcomes through which the intervention is expected to affect student or teacher outcomes? |
| Goal 2: Present the evidence produced by each i3 evaluation. | Q5. For each i3 grant, how faithfully was the intervention implemented? |
| | Q6. For each i3 grant, what were the effects of the interventions on educational outcomes, or their promise for improving those outcomes? |
| Goal 3: Assess the strength of the evidence produced by each i3 evaluation. | Q4. For each i3 grant, how strong is the evidence on the causal link between the intervention and its intended student or teacher outcomes? |
| | Q4a. For Scale-Up and Validation grants, did the evaluation provide evidence on the effects of the intervention? |
| | Q4b. For Development grants, did the evaluation produce evidence on whether the intervention warrants a more rigorous study of its effects? If applicable, did the evaluation provide evidence on the outcomes of the intervention? |
| Goal 4: Identify effective and promising interventions. | Q7. Which i3-funded interventions were found to be effective at improving student or teacher outcomes? |
| | Q8. Which i3-funded interventions were found to be promising for improving student or teacher outcomes? |
| Goal 5: Assess the results of the i3 Program. | Q9. How successful were the i3-funded interventions? |
| | Q9a. What fraction of interventions were found to be effective or promising? |
| | Q9b. What fraction of interventions produced evidence that met i3 criteria? |
| | Q9c. What fraction of interventions produced credible evidence of implementation fidelity? |
| | Q9d. Did Scale-Up grants succeed in scaling up their interventions as planned? |

Our analysis and reporting will synthesize and review findings rather than estimate impacts using statistical techniques. In this section, we describe how we plan to address the research questions posed for the NEi3 by analyzing the data we are requesting permission to collect.

Goal 1: Describe the intervention implemented by each i3 grantee.

Q1. What are the components of the intervention? Components are the activities and inputs that are under the direct control of the individual or organization responsible for implementing the program (e.g., the program developer or grant recipient) and that the developer considers essential to implementing the intervention. Components may include financial resources, professional development for teachers, curricular materials, or technology products. We will review the intervention components reported by evaluators, may rephrase them (preserving their original meaning) for consistency with the components named in other evaluations, and will report them succinctly so that readers can easily browse activities across interventions.

Q2. What are the ultimate student or teacher outcomes that the intervention is designed to affect? We will report the ultimate student or teacher outcome domains evaluated for each grant. We may rephrase outcome domain names, preserving their original meaning, for consistency with the domains named for other interventions. For example, we may use “Mathematics” to describe outcome domains that evaluators label “Math Achievement”, “Mathematics Achievement”, “Mathematical Understanding”, and so on.

Q3. What are the intermediate outcomes through which the intervention is expected to affect student or teacher outcomes? We will report the intermediate outcomes through which the intervention is expected to affect student or teacher outcomes. We may rephrase intermediate outcome names, preserving their original meaning, for consistency with those named for other interventions. For example, we may use “Student Engagement” to describe mediators that evaluators label “Student Participation”, “Student Commitment”, and so on.
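The label harmonization described under Q2 and Q3 amounts to a lookup from evaluator-reported labels to common names. A minimal sketch follows; the crosswalks contain only the example labels named above, and the names DOMAIN_CROSSWALK, MEDIATOR_CROSSWALK, and harmonize are hypothetical. In practice reviewers would decide each mapping so that the original meaning is preserved, which is why unmapped labels pass through unchanged rather than being guessed.

```python
from __future__ import annotations

# Hypothetical crosswalks built from the examples in the text.
# Keys are lower-cased so that matching ignores capitalization.
DOMAIN_CROSSWALK = {
    "math achievement": "Mathematics",
    "mathematics achievement": "Mathematics",
    "mathematical understanding": "Mathematics",
}
MEDIATOR_CROSSWALK = {
    "student participation": "Student Engagement",
    "student commitment": "Student Engagement",
}


def harmonize(label: str, crosswalk: dict[str, str]) -> str:
    """Return the common name for a reported label, or the label unchanged."""
    cleaned = " ".join(label.split())  # collapse stray whitespace
    return crosswalk.get(cleaned.lower(), cleaned)


print(harmonize("Math Achievement", DOMAIN_CROSSWALK))        # Mathematics
print(harmonize("Student  Commitment", MEDIATOR_CROSSWALK))   # Student Engagement
print(harmonize("Reading Comprehension", DOMAIN_CROSSWALK))   # Reading Comprehension
```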

Goal 2: Present the evidence produced by each i3 evaluation.

Q5. For each i3 grant, how faithfully was the intervention implemented? We will ask evaluators to report their criteria for assessing whether each key component of the intervention was implemented with fidelity. We will also ask evaluators to report annual fidelity estimates so that the NEi3 can assess whether the intervention was implemented with fidelity. We will state that an intervention was implemented with fidelity in a given year if the evaluator reports that the fidelity thresholds were met for that year. Because most grants are multi-year efforts, we will determine overall fidelity as follows:

Q6. For each i3 grant, what were the effects of the interventions on educational outcomes, or their promise for improving those outcomes? We will convert all reported impact estimates into effect sizes following WWC guidelines and will report these estimates and their statistical significance.
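For a continuous outcome reported as unadjusted group means and standard deviations, the effect size used in the WWC handbook is Hedges’ g: the treatment-control difference in means divided by the pooled within-group standard deviation, multiplied by the small-sample correction ω = 1 − 3/(4N − 9), where N is the total sample size. A minimal sketch of that single case follows; the function name and the example inputs are hypothetical, and the handbook gives other formulas (for example, for dichotomous outcomes or covariate-adjusted estimates) that are not shown here.

```python
import math


def hedges_g(mean_t: float, mean_c: float, sd_t: float, sd_c: float,
             n_t: int, n_c: int) -> float:
    """Hedges' g for a continuous outcome with unadjusted group means.

    g = omega * (mean_t - mean_c) / pooled_sd, where
    omega = 1 - 3 / (4 * N - 9) and N = n_t + n_c.
    """
    if n_t < 2 or n_c < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    omega = 1 - 3 / (4 * (n_t + n_c) - 9)  # small-sample correction
    return omega * (mean_t - mean_c) / math.sqrt(pooled_var)


# Hypothetical study: 50 students per group, SD of 15 in both groups.
g = hedges_g(mean_t=105.0, mean_c=100.0, sd_t=15.0, sd_c=15.0, n_t=50, n_c=50)
print(round(g, 3))  # 0.331
```

Without the correction, the standardized difference in this example would be 5/15 ≈ 0.333; the correction matters most for small samples.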

Goal 3: Assess the strength of the evidence produced by each i3 evaluation.

Q4. For each i3 grant, how strong is the evidence on the causal link between the intervention and its intended student or teacher outcomes?

Q4a. For Scale-Up and Validation grants, did the evaluation provide evidence on the effects of the intervention? Our WWC-certified reviewers will apply the rules in the most recent WWC handbook to determine whether the evaluation meets standards without reservations, meets standards with reservations, or does not meet standards. For this reason, the data collection includes all elements required to complete a WWC Study Review Guide (SRG).

Q4b. For Development grants, did the evaluation produce evidence on whether the intervention warrants a more rigorous study of its effects? If applicable, did the evaluation provide evidence on the outcomes of the intervention? Our WWC-certified reviewers will apply the rules in the most recent WWC handbook to determine whether the evaluation meets standards without reservations, meets standards with reservations, or does not meet standards. If it does not meet standards, the WWC-certified reviewers will determine that the evaluation provides evidence on the intervention’s promise for improving student or teacher outcomes if:
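For the standards ratings in Q4a and Q4b, a highly simplified sketch of the WWC group-design logic may help readers. A randomized controlled trial (RCT) with low attrition can meet standards without reservations; an RCT with high attrition, or a quasi-experimental design (QED), can at most meet standards with reservations, and only if baseline equivalence is shown. Under the Version 3.0 handbook, a baseline difference of 0.05 standard deviations or less satisfies equivalence, a difference between 0.05 and 0.25 satisfies it only if the analysis adjusts for the baseline measure, and a larger difference does not. The sketch assumes the attrition judgment (which the handbook makes using attrition boundaries) is supplied as a yes/no input, and it omits many other handbook rules (such as confounding factors and outcome requirements) as well as the i3 Promise criteria. The function names, parameters, and design labels are hypothetical.

```python
from enum import Enum
from typing import Optional


class Rating(Enum):
    WITHOUT_RESERVATIONS = "Meets standards without reservations"
    WITH_RESERVATIONS = "Meets standards with reservations"
    DOES_NOT_MEET = "Does not meet standards"


def baseline_equivalent(diff_sd: float, adjusted: bool) -> bool:
    """Baseline difference in SD units: <= 0.05 passes; above 0.05 and up to
    0.25 passes only with statistical adjustment; above 0.25 fails."""
    d = abs(diff_sd)
    if d <= 0.05:
        return True
    if d <= 0.25:
        return adjusted
    return False


def rate_group_design(design: str, low_attrition: bool,
                      baseline_diff_sd: Optional[float],
                      adjusted: bool) -> Rating:
    """Simplified rating for design "RCT" or "QED" (hypothetical labels)."""
    if design not in ("RCT", "QED"):
        raise ValueError(f"unsupported design: {design}")
    if design == "RCT" and low_attrition:
        return Rating.WITHOUT_RESERVATIONS
    # High-attrition RCTs and all QEDs must show baseline equivalence.
    if baseline_diff_sd is not None and baseline_equivalent(baseline_diff_sd, adjusted):
        return Rating.WITH_RESERVATIONS
    return Rating.DOES_NOT_MEET


print(rate_group_design("RCT", True, None, False).value)   # Meets standards without reservations
print(rate_group_design("QED", False, 0.12, True).value)   # Meets standards with reservations
print(rate_group_design("QED", False, 0.30, True).value)   # Does not meet standards
```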

Goal 4: Identify effective and promising interventions.

Q7. Which i3-funded interventions were found to be effective at improving student or teacher outcomes?

Q8. Which i3-funded interventions were found to be promising for improving student or teacher outcomes? Promising interventions will be defined as interventions funded by Development grants that show evidence of positive and statistically significant outcomes from a study rated as Provides Evidence of the Intervention’s Promise for Improving Outcomes. The evidence will indicate a positive outcome if, for any domain, at least one confirmatory impact estimate in the domain is positive and statistically significant after correcting for multiple comparisons, and no estimated outcome for any confirmatory contrast in that domain is negative and statistically significant.
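The positive-outcome rule in Q8 can be expressed as a check within each outcome domain. A minimal sketch follows, assuming that the multiple-comparison correction is the Benjamini-Hochberg procedure (the correction the WWC handbook applies), that the significance level is 0.05, and that the corrected results govern both the positive and the negative check. These choices, and the names Estimate, benjamini_hochberg, and has_positive_outcome, are illustrative assumptions rather than the NEi3’s specification. The sketch covers only the outcome rule, not the study rating.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    domain: str
    effect: float   # signed confirmatory impact estimate (e.g., an effect size)
    p_value: float  # unadjusted p-value


def benjamini_hochberg(p_values, alpha=0.05):
    """Return booleans marking which tests are significant under BH."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0  # largest rank k with p_(k) <= (k / m) * alpha
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            cutoff_rank = rank
    significant = [False] * m
    for rank, i in enumerate(order, start=1):
        significant[i] = rank <= cutoff_rank
    return significant


def domain_is_positive(estimates, alpha=0.05):
    """True if at least one estimate is positive and significant and none is
    negative and significant, after BH correction within the domain."""
    sig = benjamini_hochberg([e.p_value for e in estimates], alpha)
    any_positive = any(s and e.effect > 0 for s, e in zip(sig, estimates))
    any_negative = any(s and e.effect < 0 for s, e in zip(sig, estimates))
    return any_positive and not any_negative


def has_positive_outcome(estimates, alpha=0.05):
    """Apply the rule domain by domain; one qualifying domain suffices."""
    by_domain = {}
    for e in estimates:
        by_domain.setdefault(e.domain, []).append(e)
    return any(domain_is_positive(group, alpha) for group in by_domain.values())


# Hypothetical confirmatory estimates for one study.
reading = [Estimate("Reading", 0.20, 0.01), Estimate("Reading", 0.05, 0.40)]
math_ = [Estimate("Mathematics", 0.15, 0.03), Estimate("Mathematics", -0.10, 0.04)]
print(has_positive_outcome(reading))          # True
print(has_positive_outcome(math_))            # False
print(has_positive_outcome(reading + math_))  # True
```

In the hypothetical Mathematics domain, both estimates survive the correction, so the significant negative estimate rules the domain out even though a significant positive estimate exists.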

Goal 5: Assess the results of the i3 Program.

Q9. How successful were the i3-funded interventions?

Q9a. What fraction of interventions were found to be effective or promising? We will use basic tabulation methods to compute the fraction of interventions found to be effective or promising.

Q9b. What fraction of interventions produced evidence that met WWC evidence standards and i3 criteria? We will use basic tabulation methods to compute the fraction of interventions found to meet criteria without reservations, meet criteria with reservations, provide evidence of the intervention’s promise for improving outcomes, or not meet criteria.

Q9c. What fraction of interventions produced credible evidence of implementation fidelity? We will use basic tabulation methods to compute the fraction of interventions found to produce credible evidence of implementation fidelity.

Q9d. Did Scale-Up grants succeed in scaling up their interventions as planned? We will describe the evaluator’s scale-up goals and compare them with the scale-up efforts that took place, based on the evaluator’s survey responses.
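The “basic tabulation” in Q9a through Q9c is a count of interventions in each category divided by the total number of interventions. A minimal sketch follows; the function name is hypothetical, and the ratings in the usage example are made-up placeholders, not NEi3 results.

```python
from collections import Counter


def category_shares(values):
    """Return the share of items in each category (empty input gives {})."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: n / total for category, n in counts.items()}


# Made-up ratings for four hypothetical interventions (Q9b).
ratings = [
    "Meets standards without reservations",
    "Meets standards with reservations",
    "Does not meet standards",
    "Meets standards without reservations",
]
for category, share in category_shares(ratings).items():
    print(f"{category}: {share:.0%}")

# Yes/no findings (Q9a, Q9c) tabulate the same way.
print(category_shares([True, False, True, True]))  # {True: 0.75, False: 0.25}
```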

Reports of the NEi3 will summarize information from the evaluations as it becomes available, so the content of our reports depends on the progress made by the independent evaluators. In our first report on study findings, expected to be released in Spring 2016, we expect that most FY2010 evaluations and some FY2011 evaluations will report findings from their implementation and impact studies based on data collected during the 2013-14 school year. This release date balances the demand for information on the evaluation findings against the need to give interventions time for full implementation and to give evaluators time to collect and analyze data.

Subsequent reports will focus on updating information on the evaluations to reflect progress made during the year since the prior data collection.

Each of our reports will consist of two key sections:

An individualized Project Profile for each i3 evaluation with findings available that:

Describes the intervention implemented (goal 1).

Presents the evidence (goal 2).

Assesses the strength of evidence produced (goal 3).

A series of cross-site summary tables that:

Identify effective and promising interventions (goal 4).

Assess the results of the i3 Program (goal 5).

The schedule for reports based on data collected between January 2015 and December 2017 is presented in Exhibit 9. The schedule assumes that OMB approval will be received by January 1, 2015.

Exhibit 9: Schedule of Reports

| Report | Expected Release Date |
|---|---|
| First Report on NEi3 findings | Spring 2016 |
| First Addendum to Report on NEi3 findings | Spring 2017 |
| Second Addendum to Report on NEi3 findings | Spring 2018 |

No exemption is requested. The data collection instruments will display the expiration date.

This submission requires no exceptions to the Certification for Paperwork Reduction Act Submissions (5 CFR 1320.9).

References

Bloom, H. (2006). The core analytics of randomized experiments for social research. In Alasuutari, P., Bickman, L., & Brannen, J. (eds.), Handbook of Social Research, pp. 1-33. Sage Publications.

Coalition for Evidence-Based Policy and What Works Clearinghouse. (2005). Key items to get right when conducting a randomized controlled trial in education. U.S. Education Department’s Institute of Education Sciences (Contract #ED-02-CO-0022). Downloaded from http://coalition4evidence.org/wp-content/uploads/2012/05/Guide-Key-items-to-Get-Right-RCT.pdf on March 7, 2014.

Flay, B. (2005). Standards of evidence: criteria for efficacy, effectiveness and dissemination. Prevention Science 6(3), 1-25.

Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. Orlando, FL: Academic Press.

Hedges, L. V., & Pigott, T. D. (2001). The power of statistical tests in meta-analysis. Psychological Methods 4(3), 486-504.

Higgins, J. P. T. & Green, S. (eds.). (2001). Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.2. The Cochrane Collaboration, available from www.cochrane-handbook.org.

Higgins, J. P. T. & Green, S. (eds.). (2009). Cochrane handbook for systematic reviews of interventions version 5.0.2. The Cochrane Collaboration, available from www.cochrane-handbook.org.

Konstantopoulos, S. & Hedges, L. V. (2009). Analyzing effect sizes: fixed-effects models. In H. Cooper, L. V. Hedges, & J. C. Valentine (eds.), The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation.

Kratochwill, T. R., Hitchcock, J., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2010). Single-case designs technical documentation. Retrieved from What Works Clearinghouse website: http://ies.ed.gov/ncee/wwc/pdf/wwc_scd.pdf on December 13, 2010, p. 2.

Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Applied Social Research Methods Series (Vol. 49). Thousand Oaks, CA: SAGE Publications.

Lipsey, M. (2009). Identifying interesting variables and analysis opportunities. In H. Cooper, L. V. Hedges, & J. C. Valentine (Eds.), The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation.

Raudenbush, S. W. (2009). Analyzing effect sizes: Random-effects models. In Cooper, H., Hedges, L. V., & Valentine, J. C. (eds.), The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation.

Schochet, P., Cook, T., Deke, J., Imbens, G., Lockwood, J.R., Porter, J., Smith, J. (2010). Standards for regression discontinuity designs. Retrieved from What Works Clearinghouse website: http://ies.ed.gov/ncee/wwc/pdf/wwc_rd.pdf on December 13, 2010, p. 1.

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston: Houghton Mifflin.

Shadish, W. R. & Haddock, C. K. (2009). Combining estimates of effect size. In Cooper, H., Hedges, L. V., & Valentine, J. C. (eds.), The handbook of research synthesis and meta-analysis. New York: Russell Sage Foundation.

What Works Clearinghouse (2014). What Works Clearinghouse Procedures and Standards Handbook (Version 3.0). Retrieved from http://ies.ed.gov/ncee/wwc/pdf/reference_resources/wwc_procedures_v3_0_draft_standards_handbook.pdf, on May 14, 2014.

1 The partners are Dillon-Goodson Research Associates, Chesapeake Research Associates, ANALYTICA, Westat, Century Analytics, and American Institutes for Research.

2 It is important to note that the assessment of whether the independent evaluations are well-designed and well-implemented forms the basis for some of the Government Performance and Results Act (GPRA) measures for the i3 program.

3 The i3 Promise criteria operationalize OII’s GPRA requirements, which distinguish between Development and Validation/Scale-Up grants in their evidence requirements. These Promise criteria were developed to assess the quality of evaluations of Development grants that do not meet WWC evidence standards (with or without reservations). These criteria are intended to determine the extent to which the interventions funded by Development grants provide evidence of “promise” for improving student outcomes and may be ready for a more rigorous test of their effectiveness.

4 See http://ies.ed.gov/ncee/wwc/pdf/reference_resources/wwc_procedures_v3_0_draft_standards_handbook.pdf for WWC evidence standards.

5 We expect that some projects will use more than one research design. For clarity, this plan refers to a single design per evaluation.

6 The NEi3 will treat an Interrupted Time Series Design or a pre-post design with a comparison group as a Quasi-Experimental Design.
