0990-OWH TIC TTA Evaluation_OMB_Supporting Statement B


Evaluation of the National Training on Trauma-Informed Care (TIC)

OMB: 0990-0440

OMB Supporting Statement B: Statistical Methods


Cross-site Evaluation of the National Training Initiative on Trauma-Informed Care (TIC) for Community-Based Providers from Diverse Service Systems

HHSP23320095624WC

Final

January 16, 2015

Prepared for:

Dr. Adrienne Smith

OWH/OS

200 Independence Ave S.W.

Room 728F

Washington, DC 20201

Submitted by:

Abt Associates

55 Wheeler Street

Cambridge, MA 02138

In Partnership with:

Rutgers’ School of Criminal Justice

1. Respondent Universe and Sampling Methods

2. Procedures for the Collection of Information

B.2.2 Knowledge Assessment from Online Survey Instrument

3. Outcome Evaluation Analysis

B.3.1 Analysis of Key Outcomes and Data Reduction (full sample)

B.3.2 Outcome Analysis (total sample)

B.3.3 Outcome Analysis by Training Session Contrast

B.3.4 Analysis of Potential Contrasts

B.3.5 Outcome Analysis by Derived Contrasts

4. Methods to Maximize Response Rates and Deal with Nonresponse

B.4.2 Training Assessment from Online Survey Instrument

5. Test of Procedures or Methods to be Undertaken

B.5.2 Training Assessment from Online Survey Instrument

6. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

During the evaluative phase of the OWH cross-site evaluation of the National Training Initiative on Trauma-Informed Care (TIC) for Community-Based Providers from Diverse Service Systems, the OWH contractor will conduct two major data collection activities: site visits and training assessments.

Of these activities, only the training assessments employ statistical sampling methods in their design. Specifically, this consists of:

A web survey designed to gather demographic and professional information from all training participants and to collect responses to survey scales devised to measure attitudes and beliefs, knowledge uptake, and skills acquired as a result of the TIC TTA initiative.

All participants who have been trained will constitute the online survey target population. Because the universe of training participants across the phases is relatively small, the contractor intends to survey all 300 participants rather than draw a sample, in order to preserve sample sizes for analyses at smaller units. Moreover, the online survey instrument places a low burden on respondents, which should facilitate response.

Anticipated response rates for the two evaluative-phase data collection efforts involving training participants and organizations are as follows: 80 percent overall for the web survey and 90 percent for the site visits. The project team expects the greatest difficulty in contacting participants from the pilot phase of training: because these trainings have already taken place, staff turnover may lower response rates. Therefore, for the web survey of participants, the team assumes a minimum response rate of 60 percent for those trained in either Phase I or Phase II and 80 percent for participants in Phase III trainings.

Table B-1 contains information about the respondent universe and the estimated sample for each of these data collection activities.

Table B-1: Respondent Sample Characteristics for the OWH Trauma-Informed Care Training Evaluation

| | Total (n) | Training Pilot Phase (n) | Training Phase II (n) | Training Phase III (n) |
|---|---|---|---|---|
| Universe of Training Participants | 300 | 85 | 115 | 100 |
| Estimated Minimum Survey Respondent Sample Size | 200 | 51 | 69 | 80 |
| Training Sites | 16 | 6 | 5 | 5 |
| Sites to be Visited | 10 | 1 | 5 | 4 |
| On-site Interviews | 144 | 4 | 80 | 64 |
| Agency/Organization Observations | 36 | 1 | 20 | 16 |
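The minimum respondent counts in Table B-1 follow from applying the assumed minimum response rates stated above (60 percent for the pilot phase and Phase II, 80 percent for Phase III) to each phase's universe. A minimal Python sketch of that arithmetic follows; the function name is hypothetical, and rates are held as whole percentages so the integer arithmetic is exact.

```python
# Hypothetical helper reproducing the Table B-1 minimum respondent arithmetic.
UNIVERSE = {"Pilot Phase": 85, "Phase II": 115, "Phase III": 100}
MIN_RESPONSE_PCT = {"Pilot Phase": 60, "Phase II": 60, "Phase III": 80}


def minimum_respondents(universe, pct):
    """Return the minimum expected respondents per phase (rounded down)."""
    return {phase: n * pct[phase] // 100 for phase, n in universe.items()}


counts = minimum_respondents(UNIVERSE, MIN_RESPONSE_PCT)
total = sum(counts.values())
overall_rate = total / sum(UNIVERSE.values())
print(counts, total, f"{overall_rate:.1%}")
```

Under these assumptions the overall minimum is 200 of 300 participants (about 67 percent), which sits below the 80 percent overall web survey response rate the team anticipates.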

This section describes procedures for:

Site Visit Protocols: review of perceived impacts of training within the training sites; and

Web Survey Training Assessment: follow-up data collection with all training participants on the incorporation of training values and beliefs, knowledge, and skills into their practice.

The information below is copied directly from the Site Visit Protocol (see Appendix A).

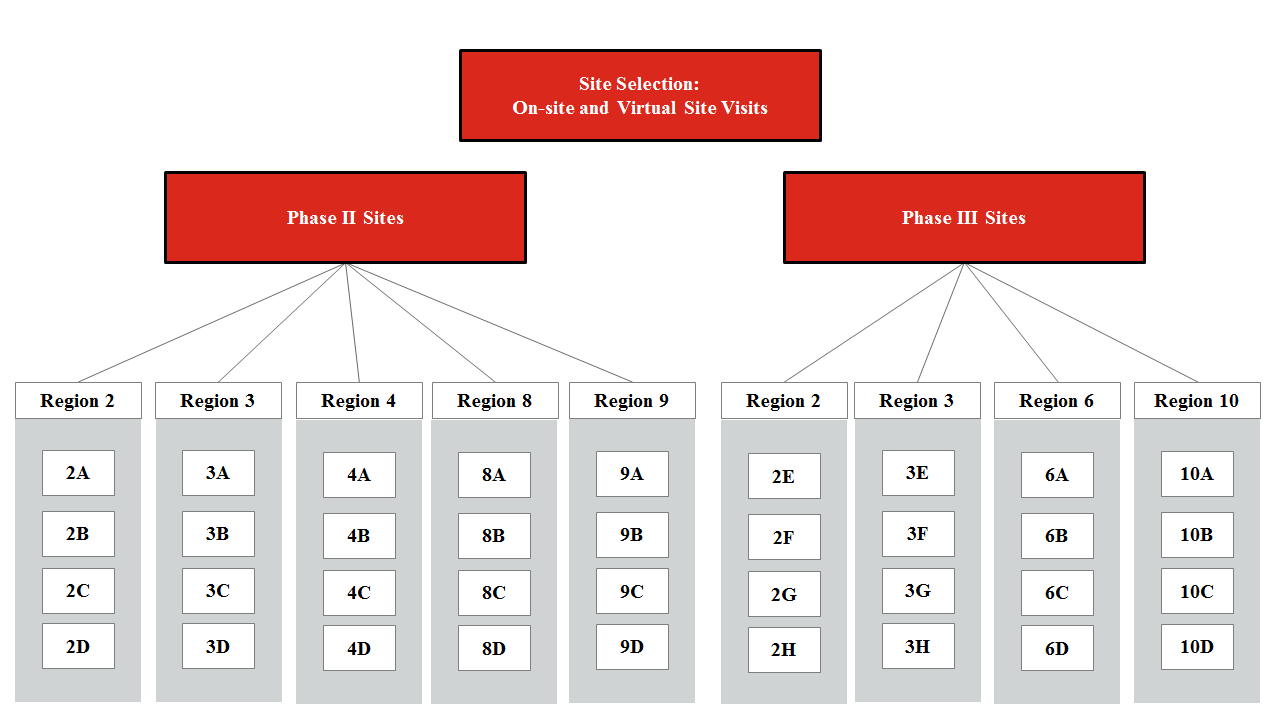

The project team plans to visit a number of agencies/organizations that participated in the National Training Initiative on Trauma-Informed Care for Community-Based Providers from Diverse Service Systems in 10 of the training sites. One site visit to a Phase I pilot site will be conducted during the formative phase of the project. Site visits within the remaining nine training sites will take place during the evaluative phase of the project: the project team will visit agencies/organizations in five of the regions that received TIC training and follow-up TA in FY 2013 (Phase II) and in four of the regions that received training and follow-up TA in FY 2014 (Phase III). The team anticipates visiting four agencies/organizations per region (see Exhibit A).

Selection of agencies/organizations within a region will be based on several factors. Selection criteria will include:

Type of agency/organization

The contractor will work with OWH project staff to select specific sites to participate in the evaluation to ensure that they reflect a diversity of regions, settings, and other site characteristics identified by OWH and key informants as potentially relevant to the training and its objectives.

The contractor will select a range of sites, based on criteria such as agency/organization size, mission, and types of services provided.

The contractor plans to limit site visit duplication regarding types of agencies/organizations within a particular HHS region.

Capacity and willingness of an agency/organization to participate in site visits

As some of the participating agencies/organizations may have limited staff, they may be unable to accommodate the time necessary for site visits.

Geographic diversity

When selecting sites, the contractor will consider geographic diversity, seeking representation of both rural and urban areas.

Travel implications

Many HHS regions cover a large geographic area. Given the limited time available for each site visit, it is more prudent to select agencies/organizations that are a reasonable distance from each other than to travel long distances between them, provided the team can identify representative organizations within these bounds.

The project team may also include agencies/organizations from outside the planned travel radius. The contractor can conduct interviews virtually or by telephone to accommodate participants from HHS regions that are difficult to access physically. For example, in Region 9, if agencies/organizations from Guam or Hawaii are included, the team will conduct a telephonic/virtual visit, as the physical base for the Region 9 site visit will be California. Similarly, in Region 6, if agencies/organizations from Arkansas or Louisiana are included, the team will conduct a telephonic/virtual visit, as the physical base of the Region 6 site visit will be Texas.

While the site visit protocol will vary slightly for such agencies/organizations (e.g., no tour of the physical space, but a review of the Environmental and Accessibility Checklist), interviews and review of documentation can be conducted successfully via telephone or teleconference. The contractor has successfully conducted interviews in this manner in other evaluation projects.

For virtual site visits, informed consent forms will be sent to participants ahead of the discussions; participants will sign, scan, and return them prior to the interviews.

EXHIBIT A: Site Selection Overview

Site Selection: Remaining Teams

In addition to the on-site and virtual site visits of the selected agencies/organizations, the project team wishes to retain the option to conduct telephonic meetings with representatives of trainee organizations that are not selected for on-site or virtual site visits. The purpose of these calls will be to fill gaps in the information needed to better understand Phase I pilot testing or Phase II and Phase III training and technical assistance. Using the interview protocols developed for the on-site and virtual site visits, the team proposes to conduct approximately four conference calls with agency/organization teams drawn from those agencies/organizations not selected for visits in the formative or evaluative phases (see Appendix H). These calls will differ from, and be considerably less time-consuming than, both the on-site and virtual site visits in that there will be no travel time, no tour, and no one-on-one interviews. As such, project staff can complete these calls in tandem with the scheduling of on-site and virtual site visits.

Participant Selection: On-site and Virtual Site Visits

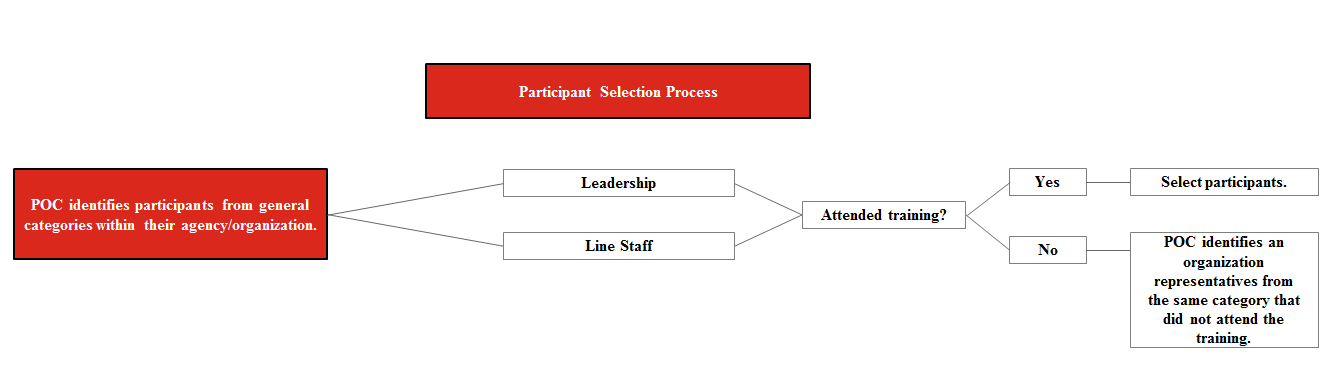

Once the agencies/organizations within each Phase II and Phase III training site have been selected, the project team will select four representatives from within each agency/organization to interview during the site visits. Given the small number of organizations (five to six) that participated in each region’s training and technical assistance, and the small number of participants from each site (also five to six), it is not feasible to randomize the selection of participants. The project team will work with the agency/organization points of contact, to be identified prior to initial contact (see Section 2.3 below), to select interview participants from among those who participated in the training (contact list provided by OWH and Westat). Interviewees should represent various roles in the agency/organization and may include additional individuals who can offer a unique perspective on changes in the organization.

Specifically, the project team will ask the points of contact to identify participants from two general categories within the agencies/organizations:

Leadership

Leadership includes staff in roles such as Director of Agency, CEO, and/or Executive Director.

Leadership representatives may include staff from a peer organization that participated in the training.

Leadership representatives may also include individuals who are referenced by OWH as “peers” and have lived experience of trauma.

Program and Line Staff (to include clinicians, program directors/managers, direct program/service staff, and other frontline staff)

Line Staff should include those people who have an administrative role in the agency, as well as those who have front line program or service delivery responsibilities.

Line staff representatives may include individuals who are referenced by OWH as “peers” and have lived experience of trauma.

While the team would ideally like to speak with agency/organization representatives in the leadership and line staff roles who were also training participants, it recognizes that a number of circumstances may limit its ability to do so, including:

Staff turnover

Given the length of time between the trainings and the site visits, there is a strong likelihood that there will be staff turnover at a number of the agencies/organizations.

Staff unavailable/unwilling

Training participants from the selected agencies/organizations may be unavailable or unwilling to be interviewed.

Homogeneity of training participant roles

While agencies/organizations were asked to select training participants from a variety of roles and responsibilities, some may have been unable to do so.

Given these circumstances, the team expects to interview some agency/organization representatives from the roles defined above who may not have attended the training. As agency/organization representatives, the perspectives of staff in these roles are valuable in gauging changes and impacts that have occurred as a result of the training.

In summary, the project team will request that the points of contact (POCs) first attempt to select interviewees from leadership and line staff who were training participants. If such representatives are unavailable for the reasons above, the team will request that the POCs identify other representatives from within those roles. The team will emphasize that while not all staff who participated in the OWH training and TA events may be interviewed during the site visits, all training participants will receive the online survey portion of the evaluation.

EXHIBIT B: Participant Selection Overview

Given the time requirement for sites and travel considerations for project staff, the project team anticipates contacting each evaluation site approximately two months prior to the site visit, by Region. The team anticipates that site visits will occur between October 2014 and March 2015, with each visit lasting approximately three days. Therefore, initial conversations with the first regions to be visited will begin in early August 2014, pending OMB approval. The plan is to have the date and time of each site visit confirmed at least one month prior to the proposed site visit. As mentioned earlier, some interviews may be conducted in a virtual or telephonic manner given the diverse geographic locations of participants within some HHS regions. As detailed in the following section, of the three days planned for each site visit, the team will reserve one travel day and two days for on-site meetings and review. Each site visit is divided into four half-day segments with the selected agencies/organizations being drawn from those that participated in the OWH TIC training and technical assistance.

Similar to the site visits, the project team anticipates contacting selected teams drawn from the remaining agency/organization teams approximately two months prior to scheduling the conference calls. The conference calls will occur in tandem with the site visits, generally between October 2014 and March 2015, with some flexibility if necessary. These calls are expected to take approximately one hour each.

Pre-Visit Planning and Preparation (Including Preliminary Contact)

The contractor will collaborate with OWH to identify a point of contact (POC) from each agency/organization to be visited. Once the POCs have been identified, OWH will send a preliminary email to the POCs at all selected agencies/organizations (to be drafted by the contractor) introducing the project, the evaluators’ role, and establishing OWH’s goals and objectives for the evaluation (see Appendix A).

Initial contact from the contractor, via email, will follow the OWH email and will focus on introductions and on scheduling a collaborative preliminary conference call with the POCs and leadership representatives (if different from the POC) from all of the selected agencies/organizations within the region (see Appendix A). Agency/organization representatives may elect to have additional staff present on the conference call. The initial email will include a one-page overview of the evaluation project for leadership to share with their staff (see Appendix C), a site visit process map, and a site visit structure checklist to help prepare for the site visit (see Appendix I). In advance of the conference calls, the team will develop a structured agenda with a set of standardized questions and topic areas to be covered during the discussions (see Appendices D, E, and F). Examples of topics to be discussed during the conference call include: general timing of and availability for the site visit; the method by which one-on-one interviews will take place (in person vs. telephonically); the location of interviews; suggested inclusion in on-site interviews of individuals whom OWH references as “peers” (persons with lived experience of trauma); and the optional exit meeting.

Based on the results of the preliminary conference call, follow-up conversations (including logistical confirmation) will be held via email and/or telephone and will focus on establishing the date and time for the site visit and confirming the list of interviewees. Similar to the agencies/organizations selected for on-site and virtual site visits, the project team will identify a point of contact at selected organizations drawn from the remaining teams to send the initial contact email and corresponding information.

Pre-visit Documentation Request

During initial conversations with each of the selected sites, the team will request a copy of their action plans (per the training curriculum), an organization chart, and documentation of any and all policies, procedures, and practices that have changed as a result of participation in the training. Any documentation that sites are unable to provide in advance of the visit will be requested while on-site.

Site Visit Structure

Site visits will take place over three days (including up to one travel day and a minimum of two on-site days) and will include two project staff (one senior-level staff and one junior-level staff). As a rule, the site visits will be divided into four half-day segments with selected agencies/organizations.

Each agency/organization site visit will begin with an initial group meeting which will include all representatives to be interviewed and any additional staff that leadership deems appropriate. During this initial meeting the team will:

Lead introductions;

Provide a brief overview of the evaluation project goals and objectives;

Review logistics for interviews;

Interviewee availability/timing

Space for conducting one-on-one interviews

Gather any documentation or information that was previously requested but not acquired; and

Discuss any changes made to the policies, procedures, practices, and/or physical space of the organization as a result of the training.

Interviews (2-3 hours)

One-on-one interviews with previously identified leadership and line staff will begin following the initial group meeting. Interviews will be conducted in a private space determined prior to the site visit and confirmed during the group meeting. The contractor estimates conducting approximately four interviews per site, allowing 30-45 minutes per interview. If interviews finish early and additional staff members are willing and available to speak with the team, additional interviews will be accommodated.
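The interview and observation counts in Table B-1, and the 2-3 hour interview block, can be checked from the planning figures in this section: four agencies/organizations per region, about four interviews per agency/organization, and 30-45 minutes per interview. The sketch below is illustrative only; the constant and function names are hypothetical, and the pilot-phase figures are taken from Table B-1.

```python
# Planning figures from the text; pilot-phase figures from Table B-1.
REGIONS_VISITED = {"Pilot Phase": 1, "Phase II": 5, "Phase III": 4}
AGENCIES_PER_REGION = {"Pilot Phase": 1, "Phase II": 4, "Phase III": 4}
INTERVIEWS_PER_AGENCY = 4
MINUTES_PER_INTERVIEW = (30, 45)


def site_visit_workload():
    """Return (agency observations, interviews) for each phase."""
    workload = {}
    for phase, regions in REGIONS_VISITED.items():
        agencies = regions * AGENCIES_PER_REGION[phase]
        workload[phase] = (agencies, agencies * INTERVIEWS_PER_AGENCY)
    return workload


def interview_block_minutes(n_interviews=INTERVIEWS_PER_AGENCY):
    """Return the (shortest, longest) total interview time at one agency."""
    low, high = MINUTES_PER_INTERVIEW
    return n_interviews * low, n_interviews * high


workload = site_visit_workload()
evaluative_interviews = workload["Phase II"][1] + workload["Phase III"][1]
print(workload, evaluative_interviews, interview_block_minutes())
```

The Phase II and Phase III figures alone sum to the 144 interviews and 36 observations in the Total column of Table B-1; adding the pilot-phase visit would give 148 and 37. Four interviews at 30-45 minutes each come to 120-180 minutes, matching the 2-3 hour interview block.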

Before initiating any of the interviews, the interviewer will provide interviewees with a copy of the informed consent form to review. If the interviewee agrees to participate, the interviewers will continue with the interview protocol. If the interviewee declines to participate, the team will ask the site visit POC if they wish to make a substitution.

Tour (30 minutes)

Following the interviews, the contractor will request a tour of the physical space of the agency/organization. The tour is intended to help assess any changes to the physical space of the agency/organization that were made in response to the environmental items covered in the training curriculum, based on the knowledge gained during the training and outlined in the agency’s Action Plan. The purpose of the tour is to help evaluate the impact of the training, not to evaluate the success of the agency/organization itself. The contractor will use the Environmental and Accessibility Checklist, an adaptation of Handout 2.2 that training participants received as part of the training curriculum, to capture such changes. For virtual site visits, the project team will review items on the Environmental and Accessibility Checklist with participants but will depend on self-report, as the team will not be present for direct observation.

Exit and Follow-up (Optional)

The contractor will offer agencies/organizations the option of an exit meeting during the introductory conference call with agency/organization POCs and leadership. If the agency/organization requests an exit meeting, the project team will be available for a brief overview of interviewer impressions and general feedback. These meetings should last no longer than 15-20 minutes. If the agency/organization declines the exit meeting, the team will notify the administrative offices of the agency/organization upon departure and will inform the agency/organization that it will receive a copy of any reports that OWH disseminates as a result of the evaluation. Within 10 business days of returning from the site visit, the team will send a letter of thanks addressed to the leadership of each participating agency/organization.

Development of Site Visit Report

Interviewers will take notes during interviews and will later summarize these notes into succinct site visit summaries employing a unified format for use in qualitative analysis. The contractor will also provide OWH a general overview of activities conducted during site visits in the quarterly progress reports. The information collected during and surrounding site visits will be used to identify and assess common barriers as well as facilitators to implementation of training content across the ten Regions. Through the site-visit interviews, the project team will also examine whether, in each site and across sites, training alone or training with technical assistance increased the capacity of sites to provide trauma informed services.

After completing the formative site visit, the project team will develop a preliminary NVivo "codebook" that defines overarching themes and curriculum components we plan to discuss during site visits and team conference calls.

Implementation data will be summarized by site and by groupings in narrative form and, where appropriate, in simple descriptive tables. For example, the team can compare recruitment and completion rates for participants, participant satisfaction, and similar measures across sites. A specific comment may be coded under more than one theme, and each comment will be coded at the most specific applicable theme. As visits are completed and notes are coded, two team members will review the content of all themes to check for inconsistencies, redundancies, and imprecision. All qualitative data will be coded using this theme structure.
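Because a single comment may carry more than one theme code, a descriptive tally by site and theme counts each code separately. A minimal sketch, assuming coded comments can be exported from the qualitative software as (site, themes) records; the site names and theme labels below are hypothetical, and no particular NVivo export format is implied.

```python
from collections import Counter, defaultdict

# Hypothetical coded comments: (site, set of theme codes applied to the comment).
coded_comments = [
    ("Region 2", {"leadership support", "staff turnover"}),
    ("Region 2", {"physical environment"}),
    ("Region 6", {"leadership support"}),
    ("Region 6", {"staff turnover", "policy change"}),
]


def tally_themes(comments):
    """Count theme codes per site; a multi-coded comment adds to each of its themes."""
    by_site = defaultdict(Counter)
    for site, themes in comments:
        by_site[site].update(themes)
    return by_site


tally = tally_themes(coded_comments)
overall = sum(tally.values(), Counter())  # combine the per-site counters
for site in sorted(tally):
    print(site, dict(sorted(tally[site].items())))
print("All sites:", dict(sorted(overall.items())))
```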

For the purpose of the outcome evaluation, information from this phase may also be used to cluster training site characteristics and experiences to create contrasts for the purpose of understanding what works for whom and under what circumstances in regard to values and beliefs, knowledge and skills.

As mentioned previously, the contractor will deploy an online web survey to follow up with all training participants to assess changes in values and beliefs and uptake of knowledge and skills derived from their participation in the training (see Respondent Universe and Sampling Methods section above).

Programming and development of the analytic file for the web survey will be done by the contractor’s Client Technology group, which specializes in online survey creation, testing, and execution. The survey format and content will be pretested with no more than nine internal respondents, with minor adjustments made as needed. The contractor’s survey platform is a secure, user-friendly, cloud-based system that allows the contractor to rapidly build and deploy more than 100 sophisticated surveys every year at low cost. The system supports skip and branching logic and offers bank-grade data encryption over Secure Sockets Layer (SSL). Data are housed on redundant servers in secure data centers, allowing full backups in case of loss. The contractor’s surveys are Section 508 compatible.

Each survey will include a call-in number that respondents can use for questions or difficulties in answering or submitting the survey. This line will be staffed by the contractor’s research analysts, who will be trained on the survey and familiar with the content area. If a respondent is unable or unwilling to complete the survey online, a contractor staff member will offer to administer it as a telephone survey or to mail a paper copy for completion.

A period of 30 days will be scheduled for response to the survey. All participants who have not responded in that time period will be contacted by telephone as a reminder and offered assistance in completing the survey.
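The reminder step above amounts to a simple rule: once the 30-day response window has closed, every participant without a recorded response is due a telephone reminder. A minimal sketch of that rule follows; the participant IDs, launch date, and function name are hypothetical, and the survey platform's own tracking features are not assumed.

```python
from datetime import date, timedelta

RESPONSE_WINDOW = timedelta(days=30)  # 30-day response period from the text


def reminder_list(launch, today, participants, responded):
    """Return participant IDs due for a telephone reminder.

    Participants are due on or after day 30 following launch if no response
    has been recorded for them. All IDs here are hypothetical.
    """
    if today < launch + RESPONSE_WINDOW:
        return []
    return sorted(p for p in participants if p not in responded)


launch_day = date(2015, 3, 2)  # hypothetical launch date
participants = ["P001", "P002", "P003", "P004"]
responded = {"P002", "P004"}
print(reminder_list(launch_day, date(2015, 3, 20), participants, responded))
print(reminder_list(launch_day, date(2015, 4, 1), participants, responded))
```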

As mentioned previously, the project team anticipates a minimum 80 percent response rate to the online survey for all new training sites and a 60 percent response rate for earlier training sites (given the expectation of staff turnover). Respondents will not be identified by name in the online survey but will be identified by the type of agency they represent. A formal consent form approved by the contractor’s IRB and by OMB will precede the start of the survey, whether it is administered online or by another mode (see Appendices D and E). The contractor will provide staff support, available daily across time zones, to assist participants.

Survey data will be transformed into a common analytic file format (e.g., SAS or Stata) for analysis.

The effectiveness of NTI training and technical assistance will be analyzed at both the individual participant and the organization level. The effectiveness of the training (and TA) at the individual level will be measured by assessments of: (1) knowledge uptake, (2) values and beliefs, and (3) skill acquisition. At the organizational and environmental levels, the impact of the training together with the TA will be assessed by changes in agency environments, practice and policies.

The logic of these focused trainings follows that trainees return to their agencies, implement their action plans and continue to transmit what they have learned to others in the agency. While the project team has divided the approach to analysis into individual and organizational levels, the team views these two levels as both cumulative and interrelated in reality; that is, training and TA-derivative change cannot occur at the organizational level without effecting change on the individual trainee level first.

OWH has requested an analysis plan for a cross-site evaluation of the training and TA programming. The contractor understands this to include analyses that compare each of the 10 (Phase II and III) training sites to each other, as well as the analysis of effectiveness due to other variables that may affect success, including trainee demographic and other characteristics, the mode of training delivery and TA access, and agency/regional characteristics. The purpose of the cross-site evaluation is to collect uniform data via a shared protocol, so that differences in outcomes by contrast conditions (e.g., type of training method) can be directly assessed.

There are six stages to the individual outcome analysis:

Assessment of response rates and potential bias;

Analysis of key outcomes and data reduction;

Outcome analysis of the total sample;

Outcome analysis by training session contrast;

Analysis of potential contrasts; and

Outcome analysis by derived contrasts.
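For the first stage, one simple check for potential nonresponse bias is whether response rates differ by training phase. The sketch below computes a Pearson chi-square test on a 2 × 3 (responded × phase) table. The counts plugged in are the Table B-1 minimum assumptions, used purely for illustration, not observed data. With two degrees of freedom the chi-square upper-tail probability is exactly exp(-x/2), so no statistics library is needed; a table with more phases would need a general chi-square distribution (for example, scipy.stats.chi2.sf).

```python
import math


def chi_square_2xk(respondents, universe):
    """Pearson chi-square for a 2 x k table of respondents vs. nonrespondents.

    respondents[i] and universe[i] are counts for phase i.
    Returns (statistic, degrees of freedom).
    """
    overall_rate = sum(respondents) / sum(universe)
    stat = 0.0
    for r, n in zip(respondents, universe):
        nonresp = n - r
        exp_r = n * overall_rate          # expected respondents if rates were equal
        exp_nr = n * (1 - overall_rate)   # expected nonrespondents
        stat += (r - exp_r) ** 2 / exp_r + (nonresp - exp_nr) ** 2 / exp_nr
    return stat, len(universe) - 1


def p_value_df2(stat):
    """Exact upper-tail p-value for a chi-square statistic with 2 df only."""
    return math.exp(-stat / 2)


# Illustrative counts only: Table B-1 minimum respondents and universe by phase.
stat, df = chi_square_2xk([51, 69, 80], [85, 115, 100])
print(round(stat, 3), df, round(p_value_df2(stat), 4))
```

A significant result would indicate that respondents are not spread across phases in proportion to the universe, which the bias assessment would then need to take into account when pooling phases.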

There are multiple measures of each outcome in several domains (e.g., knowledge, attitudes, and behaviors). Further, in some cases outcomes will be measured as change scores (i.e., the change in response between time 1 and time 2). Basic descriptive analyses will be conducted first, followed by analyses designed to evaluate how these measures might be combined in indices, or unweighted or weighted scales. For example, factor/principal components analysis will be used to evaluate the degree to which multiple measures tend to “hang together.” In this case, weighted factor scores may be used to create single measures of each outcome. The final outcome indices/scales will be determined through the process of these analyses.
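To illustrate how principal components analysis shows whether several items "hang together," the sketch below computes the first principal component of the item correlation matrix by power iteration, using only the Python standard library. It is a minimal sketch, assuming complete data, items that vary across respondents, and items scored in the same direction; in practice the analysis would be run in SAS or Stata, and the item data below are hypothetical. Items that load strongly on the first component are candidates for a single weighted score, and the returned component scores (weighted sums of standardized items) are one form such a score could take.

```python
import math
import statistics


def standardize(values):
    """Convert one item's responses to z-scores (sample standard deviation)."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def correlation_matrix(z_items):
    """Pearson correlations between standardized items."""
    n = len(z_items[0])
    return [[sum(a * b for a, b in zip(zi, zj)) / (n - 1) for zj in z_items]
            for zi in z_items]


def first_component(items, iterations=1000):
    """Return (eigenvalue, loadings, scores) for the first principal component.

    items is a list of columns: one list of respondent scores per item.
    """
    z_items = [standardize(col) for col in items]
    corr = correlation_matrix(z_items)
    k = len(corr)
    vector = [1 / math.sqrt(k)] * k
    for _ in range(iterations):  # power iteration toward the leading eigenvector
        product = [sum(row[j] * vector[j] for j in range(k)) for row in corr]
        norm = math.sqrt(sum(x * x for x in product))
        vector = [x / norm for x in product]
    eigenvalue = sum(vector[i] * sum(corr[i][j] * vector[j] for j in range(k))
                     for i in range(k))
    loadings = [w * math.sqrt(eigenvalue) for w in vector]
    scores = [sum(w * z[r] for w, z in zip(vector, z_items))
              for r in range(len(z_items[0]))]
    return eigenvalue, loadings, scores


# Hypothetical 5-point responses from six respondents on three knowledge items.
item_a = [4, 5, 3, 2, 4, 5]
item_b = [4, 4, 3, 2, 5, 5]
item_c = [3, 5, 2, 1, 4, 4]
eigenvalue, loadings, scores = first_component([item_a, item_b, item_c])
print(round(eigenvalue, 2), [round(x, 2) for x in loadings])
```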

The outcome analysis begins with determining whether there are demonstrable effects of the curriculum training (and TA) on all participants (i.e., full sample) on the primary outcomes (i.e., knowledge, attitudes, and behaviors).

The first stage of this analysis is an examination of frequency distributions for all variables, including demographic and other respondent characteristics and outcome variables. The second step is to analyze differences in outcomes by demographic and other respondent characteristics. The purpose of this step is to identify variables that are associated with outcomes. For example, do individuals with prior training in trauma have better results than those without such training? Simple bivariate tests of significance (i.e., chi-square, t-, and F-tests) will be used to identify significant differences. Given a sample size of 300 respondents, there is adequate power to detect small to moderate effects. Once these bivariate tests are concluded, multivariate tests will be conducted. Models composed of all variables found significant at the bivariate level will be estimated to identify the variables that predict outcomes while controlling for all other relevant variables. The choice of technique will depend on the nature of the dependent variables (i.e., outcomes). Possible techniques include Ordinary Least Squares (OLS) regression, negative binomial regression, and Multivariate Analysis of Variance (MANOVA). The contractor will attempt to keep these models parsimonious given the sample size and the limited power associated with relatively small samples.
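Power for a given bivariate comparison can be checked with a standard normal approximation for a two-sided, two-group comparison of means: power ≈ Φ(d·√(n/2) − z(1 − α/2)), where d is the standardized effect size (Cohen's d) and n is the number of respondents per group. The sketch below uses only the Python standard library; the group sizes and effect sizes are placeholders, not figures from this statement, and an exact t-based calculation (for example, SAS PROC POWER) would typically give slightly lower values at small n.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def two_group_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison of means.

    Normal approximation; the negligible opposite rejection tail is ignored.
    """
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    return STANDARD_NORMAL.cdf(d * (n_per_group / 2) ** 0.5 - z_crit)


# Placeholder scenario: 200 respondents split evenly into two groups of 100.
for effect in (0.2, 0.35, 0.5):
    print(effect, round(two_group_power(effect, 100), 2))
```

Reading the results against the effect sizes of interest shows which planned comparisons are well powered and where, as noted above, keeping models parsimonious matters most.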

While individuals are likely to vary on outcomes, one of the most important aspects of this study is to determine whether training sites vary significantly on these same outcomes. This analysis begins with a set of bivariate tests of “training site” by each outcome. Because sites not only may differ on outcomes but also may differ on other key characteristics, multivariate tests will be conducted. Models similar to those described in the previous section will be estimated. In addition to the variables contained in the previous models, the variable, “training sites,” (included as a single variable or as a set of dummy-coded variables) will be included. If this variable is significant, this suggests that other idiosyncratic or site-specific characteristics are affecting the training outcomes.
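Entering "training sites" as a set of dummy-coded variables could look like the sketch below. The region labels are hypothetical, and the choice of reference site is an analyst's decision that this statement does not specify.

```python
def dummy_code(values, reference):
    """Return one 0/1 indicator column per non-reference category.

    The reference category is coded 0 on every indicator. In an OLS model
    containing only an intercept and these indicators, the intercept equals
    the reference category's mean and each coefficient equals that
    category's mean minus the reference mean.
    """
    if reference not in values:
        raise ValueError(f"reference category {reference!r} not observed")
    levels = sorted(set(values) - {reference})
    return {level: [1 if v == level else 0 for v in values] for level in levels}


sites = ["Region 1", "Region 3", "Region 1", "Region 8", "Region 3"]
print(dummy_code(sites, reference="Region 1"))
```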

There are several natural contrasts that may affect outcomes in addition to “training site,” including wave (i.e., pilot, first set, second set), whether technical assistance was received and, if so, the nature and intensity of the TA, and the trainer. The contractor will apply the same analytic strategy described above for training sites.

Further, the organizational assessment and site visits will provide a wealth of detailed information on the agencies and communities from which participants come. For example, possible contrasts may be size of annual budget, number of employees, and type of organization. These data will be analyzed to determine whether there are other natural groupings that can be used as contrasts.

Following the logic of the analysis of the training site effects, analyses using the derived contrasts from the previous step will be conducted. This will help us understand what organizational characteristics may affect training outcomes. For example, respondents who come from small organizations may have better (or worse) outcomes than respondents from large organizations.
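Once the organizational data suggest a natural grouping such as organization size, a first descriptive step is to compare outcomes across the derived groups before moving to the multivariate models described above. The sketch assumes one outcome index per respondent; the 50-employee cutoff, the function names, and the data are hypothetical and do not come from this statement.

```python
import statistics


def contrast_means(records, classify):
    """Group respondents by a derived contrast and return the mean outcome per group.

    records: iterable of (organization attribute, outcome score) pairs.
    classify: function mapping the attribute to a contrast label.
    """
    groups = {}
    for attribute, outcome in records:
        groups.setdefault(classify(attribute), []).append(outcome)
    return {label: statistics.fmean(scores) for label, scores in sorted(groups.items())}


def by_size(employees, cutoff=50):
    """Hypothetical size contrast; the 50-employee cutoff is illustrative only."""
    return "small" if employees < cutoff else "large"


# Hypothetical (number of employees, outcome index) pairs.
records = [(12, 0.4), (30, 0.1), (75, -0.2), (240, 0.3), (18, 0.5)]
means = contrast_means(records, by_size)
print(means)
```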

The evaluation project team has developed protocols and tools with the intention of minimizing barriers to response. The following sections discuss how the project team will address perceived barriers to response in its data collection efforts:

Responsiveness for on-site interviews will be aided by the use of virtual or telephone conferencing capabilities, enabling remote trainees to participate in these meetings without the burden of travel. While the team expects time costs to affect site visit response, visits have been limited to half-day visits (per agency/program) over a two-day period, performed by two site visitors, to minimize this burden. Moreover, the initial contacts to plan the site visit will be with a program director or administrator, who in turn will authorize staff participation in the site visit and approve the interview schedule to minimize program disruption and burden. Short interviews should further minimize burden (see Site Visit Protocol, Appendix A).

In the project team’s experience, a motivated, primarily professional respondent sample combined with a brief, attractive survey format yields high response rates. In addition, offering alternative modes of reply (by telephone or on a mailed paper copy) gives respondents several ways to answer.

The online survey assessment scales, site visit protocols and interview guides, and action plan coding schemes were developed during the formative phase of the project. The contractor created their content and format in collaboration with OWH to best reflect the intended objectives of the training.

A description of pilot measures for each collection effort follows:

To ensure that site visit materials are both appropriate and understandable to interviewees, the site visit protocol will be tested during the formative site visit to a Phase I training site. No more than nine people will participate in these on-site discussions. The team anticipates few, if any, changes or additions to the protocols. If changes are necessary, the contractor will notify all relevant parties (OWH, OMB, and IRB) and submit the revisions for approval. Protocols and focused interview guides have already been developed for and approved by OWH (see Site Visit Protocol, Appendix A).

The assessment scales were developed by senior staff and derived directly from the curriculum. Internal project participants pretested the scales for timing and ease of understanding; two readability tests placed the scales at grade levels 7.5 and 8. Language translations will be provided as needed. A preliminary review of the literature explored items and scales used in prior research in this area and found them unhelpful for this evaluation.

The following table lists the individuals at OWH in charge of approving and reviewing project materials:

NAME | TITLE | PHONE
Adrienne Smith | Project Officer | 202-690-5884 (dir.); 202-690-7172 (fax)
Sandra Bennett-Pagan | Regional Women’s Health Coordinator | 212-264-4628 (dir.); 212-264-1324 (fax)
Ledia Martinez | Regional Women’s Health Liaison | 202-205-1960 (dir.); 202-401-4005 (fax)

The following table lists the contractor staff from Abt and the Rutgers’ School of Criminal Justice who are primarily responsible for the evaluation design, data collection, and analysis.

Abt Evaluation Team:

NAME | ROLE | TITLE | PHONE
Mica Astion | Data Collection & Analysis | Project Manager | 617-520-2568
Danna Mauch | Research Design, Data Collection & Analysis | Co-P.I., Abt | 617-349-2354
Bonita Veysey | Research Design, Data Collection & Analysis | Co-P.I.; Professor & Acting Dean, Rutgers' School of Criminal Justice | 973-353-1929 (work)
Helga Luest | Data Collection & Analysis | Abt, Senior Communications Manager | 301-347-5149
Kevin Neary | Data Collection & Analysis | Abt, Senior Analyst | 617-520-2548
Kamala Smith | Data Collection & Analysis | Abt, Senior Analyst | 617-520-3624
Meaghan Hunt | Data Collection & Analysis | Abt, Associate Analyst | 617-520-2566
Nick Murray | Data Collection | Abt, Web Implementation Specialist | 617-520-2541

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | Abt Single-Sided Body Template |

| Author | Mica Astion |

| File Modified | 0000-00-00 |

| File Created | 2021-01-26 |


Preparation for Site Visits and Calls with Remaining Teams
