1205-0NEW-JIAC 40260 OMB Suppt_Statement_Part A _OCIO Comments and edits 20150227-ETAedits_3-3-2015_CLEAN-FINAL

Job Innovation and Accelerator Challenge Grants Evaluation

OMB: 1205-0518

Part A:

Supporting Statement for Paperwork Reduction Act Submission

The U.S. Department of Labor (DOL), Employment and Training Administration (ETA) contracted with Mathematica Policy Research and the W.E. Upjohn Institute for Employment Research to conduct an evaluation of the Jobs and Innovation Accelerator Challenge (JIAC) grants. In partnership with other federal agencies (Department of Commerce, Small Business Administration, and Department of Energy), the DOL awarded two rounds of grants to 30 self-identified regional industry clusters that have high growth potential. The main objective of the evaluation is to build a better understanding of how multiple federal and regional agencies worked together on these grant initiatives, how the ETA grants are being used, the training and employment-related outcomes that the clusters are able to achieve, lessons learned through implementation, and plans for sustainability.

This package requests clearance for two of the four data collection efforts to be conducted as part of the JIAC Evaluation:1

Site visit interviews. In-person visits to a subset of nine clusters will provide information on implementation of the JIAC initiative. The evaluation team will conduct interviews with cluster management staff, activity leaders, frontline staff, individual program participants, the local workforce investment board, private sector employers (businesses or for-profits and not-for-profits), and local economic development agencies. Protocols for these interviews are included in Attachments A through E.

Survey of grantees and partners. The evaluation team will administer a survey to up to 330 individuals from partner organizations (the cluster manager, the ETA funding stream administrator, and representatives from 9 to 10 partner agencies in each of the 30 clusters). The survey will focus on cluster organization, communication, funding sufficiency, the types and usefulness of federal support, and program management and sustainability. The survey instruments are included in Attachments F, G, H, and I. Before the survey, the evaluation team will solicit information on organizations involved in the clusters’ grant activities from the ETA funding stream administrators in order to construct a sampling frame. The template that the evaluation team will use to collect this information is included in Attachment J.

1. Circumstances Necessitating the Data Collection

As of May 2011 when the first round of JIAC grants was issued, the unemployment rate in the United States was 9 percent.2 Almost 14 million people were looking for jobs. Job growth surfaced to the top of the nation’s economic agenda. The economic downturn also led to greater attention on the role of regional innovation clusters as drivers for improving the economy, creating jobs and employment, and enhancing U.S. competitiveness.

ETA has been an active federal partner in the funding and promotion of regional innovation clusters for the past decade. Specifically, it has sought to address one of the challenges that clusters face as they pursue economic growth: employers in some high-wage industries with the potential for creating jobs report trouble finding American workers with the skills to fill the vacancies. Under the authority of section 414(c) of the American Competitiveness and Workforce Improvement Act of 1998 (ACWIA), as amended (29 U.S.C. § 2916a), ETA invests heavily in making grants to build the skills and qualifications of domestic unemployed workers so that they can fill these positions and reduce the need for foreign workers under the H-1B visa program. In 2011 and 2012, ETA partnered with other federal funding agencies to support the JIAC and Advanced Manufacturing (AM)-JIAC grant competitions. ETA has commissioned this study to evaluate and learn from these investments.

The rest of this section provides additional information on the context and nature of the JIAC evaluation in three subsections. The first further describes the policy context of the JIAC and AM-JIAC grants and summarizes key features of the grants program. The second subsection provides an overview of the clusters and their proposed activities. The third outlines the main purposes and features of the JIAC Evaluation.

a. Policy Context and Key Features of the Grants Program

Regional innovation clusters are an important component of the U.S. government’s strategy for driving economic and job growth. In recognition of the importance of these clusters, the White House created the Taskforce for the Advancement of Regional Innovation Clusters (TARIC) in 2011 to better support cluster efforts. TARIC is a collaborative federal partnership designed to leverage and coordinate existing resources to provide streamlined and flexible assistance to clusters. In a 2011 report, Sperling and Lew present the administration’s rationale for funding such clusters:

Regional innovation clusters are based on a simple but critical idea: if we foster coordination between the private sector and the public sector to build on the unique strengths of different regions—while creating the incentives for them to do so—we will be better equipped to marshal the knowledge and resources that America needs to compete in the global economy.3

TARIC conceived of the JIAC and AM-JIAC grants as part of a strategy for encouraging regional investments that link economic, workforce, and small business development. ETA partnered with other federal agencies to fund 20 clusters through the JIAC grant initiative in 2011 and 10 more clusters through the AM-JIAC grant initiative in 2012. Through these two initiatives, the 30 clusters proposed strategies intended to bolster economic development by accelerating job creation or retention and technical innovation in high-wage, high-skill sectors.

As shown in Table A.1, ETA provided most of the funding for the JIAC grants ($20 million of the total $33 million) and served as a minority funder for the AM-JIAC grants ($5 million of the total $25 million). Because ETA funds were authorized through the ACWIA, activities conducted using ETA grant dollars must be directly related to education, training, and other related services that support high-growth industries and/or occupations for which employers are relying on workers with H-1B visas.

Table A.1. Federal Funding for the JIAC and AM-JIAC Grants

| Federal Funding Agency | Amount of Funding | Typical Length |
|---|---|---|
| JIAC Grants | | |
| U.S. Department of Labor, ETA | $20 million | 4 years |
| U.S. Department of Commerce, Economic Development Administration (EDA) | $10 million | 2 years |
| U.S. Small Business Administration (SBA) | $3 million | 2 years |
| AM-JIAC Grants | | |
| U.S. Department of Labor, ETA | $5 million | 3 years |
| U.S. Department of Commerce, EDA | $10 million | 3 years |
| U.S. Small Business Administration, SBA | $2 million | 3 years |
| U.S. Department of Commerce, National Institute of Standards and Technology, Hollings Manufacturing Extension Partnership (NIST/MEP) | $3 million | 3 years |
| U.S. Department of Energy (DOE) | $5 million | 3 years |

Source: Federal Funding Opportunities for JIAC grants (2011) and AM-JIAC grants (2012).
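The funding totals and ETA's share of each initiative can be checked directly from the amounts in Table A.1. The short sketch below is illustrative only: the dictionary names and the `eta_share` helper are assumptions introduced here, and the figures are simply transcribed from the table (in millions of dollars).

```python
# Funding amounts in millions of dollars, transcribed from Table A.1.
# The variable and function names are hypothetical, chosen for illustration.
JIAC = {"ETA": 20, "EDA": 10, "SBA": 3}
AM_JIAC = {"ETA": 5, "EDA": 10, "SBA": 2, "NIST/MEP": 3, "DOE": 5}


def eta_share(funding):
    """Return (total funding, ETA's fraction of that total)."""
    total = sum(funding.values())
    return total, funding["ETA"] / total


jiac_total, jiac_share = eta_share(JIAC)
am_total, am_share = eta_share(AM_JIAC)

print(f"JIAC: ${jiac_total} million total, ETA share {jiac_share:.0%}")
print(f"AM-JIAC: ${am_total} million total, ETA share {am_share:.0%}")
```

Under these figures, ETA accounts for roughly three-fifths of JIAC funding and one-fifth of AM-JIAC funding, which matches its description as the majority funder of the first initiative and a minority funder of the second.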

The JIAC and AM-JIAC grants were offered as two separate funding opportunities that used a unique structure. For each set of grants, a single federal funding opportunity was issued, but separate grants were awarded by each funding agency. Each cluster had to submit a single application that requested grants from and proposed discrete activities for each federal funding partner. This proposal had to include an integrated work plan indicating the synergy between activities funded by each grant. A single entity within a cluster could apply as the formal grantee for all of the federal funds. Alternatively, multiple agencies within a cluster could work together to submit a single grant document, with different entities serving as the grantee for separate federal funds. For example, a JIAC cluster might have the local workforce investment board serving as the ETA grantee, a local economic development agency serving as the EDA grantee, and the Small Business Development Center at a local university serving as the SBA grantee.

This approach sought to eliminate the silos that typically exist when federal agencies implement their own unique initiatives. By working together, the federal agencies hoped to align their goals of fueling economic growth; breaking down the barriers to communication among federal, regional, and local entities; and generating a larger impact through the combined effort. In addition to the federal funding partners, more than a dozen other federal agencies offered to provide technical assistance and support to the JIAC and AM-JIAC clusters as they implemented their grant activities.

Table A.2 lists the main objectives of the JIAC and AM-JIAC grants, as described in the federal funding opportunities. The two grants share similar objectives, but the AM-JIAC grant objectives focus on the AM sector. Among these objectives, development of a skilled workforce and ensuring diversity in workforce participation align most closely with the ETA mission. ETA provided approximately $1 million per cluster for the JIAC grants for training and related employment activities to develop a skilled workforce for the cluster. For the AM-JIAC grants, ETA provided approximately $400,000 to fund similar activities for developing a skilled advanced manufacturing workforce for the cluster.

Table A.2. Objectives of Federal Funding Opportunities

| JIAC Federal Funding Opportunity | AM-JIAC Federal Funding Opportunity |
|---|---|
| | |

Source: Federal funding opportunities for JIAC and AM-JIAC grants.

b. Overview of the Clusters and Their Proposed Activities

JIAC and AM-JIAC grants were awarded to self-identified clusters that cover diverse industry sectors and geographic regions of the country. Table A.3 provides the name of the cluster, the list of grantee organizations (with funding agency noted in parentheses), the region covered by the grant, the industry of focus, and the total funding level for the cluster. Nine of the 20 JIAC clusters and 2 of the 10 AM-JIAC clusters involve a single entity that serves as the grantee for all of the federal funds. The remaining 11 JIAC clusters and 8 AM-JIAC clusters involve two or more organizations, each receiving one or more of the grants from the federal funding agencies. As expected, the JIAC grantees cover a wider array of industry sectors, ranging from food processing to health information technology to renewable energy. In fact, several of these JIAC grants involve AM sectors, overlapping in content with some of the AM-JIAC grants. Total funding ranges from $1.2 to $2.15 million per cluster for the JIAC grants and from $1.8 to $2.4 million per cluster for the AM-JIAC grants.

Table A.3. Overview of JIAC and AM-JIAC Clusters

| Project Name | Grantee Organizations | Region | Cluster Focus | Funding |
|---|---|---|---|---|
| JIAC Grantees | | | | |
| Advanced Composites Employment Accelerator | Roane State Community College | Knoxville and Oak Ridge, TN, and surrounding | Advanced composites (low-cost carbon fiber technology) | $1,627,185 |
| Atlanta Health Information Technology Cluster | Georgia Tech Research Corporation | Georgia | Health IT | $1,650,000 |
| Center for Innovation and Enterprise Engagement | Wichita State University | South Central KS | Advanced materials | $1,993,420 |
| Clean Energy Jobs Accelerator | Space Florida | East Central FL | Clean energy | $2,148,198 |
| Clean Tech Advance Initiative | City of Portland (EDA); Worksystems, Inc. (ETA); Oregon Microenterprise Network (SBA) | Portland, OR, and Vancouver, WA | Clean tech | $2,150,000 |
| Finger Lakes Food Processing Cluster Initiative | Rochester Institute of Technology, Center for Integrated Manufacturing Studies | Finger Lakes Region, NY | Food processing | $1,547,470 |
| GreenME | Northern Maine Development Commission | Northeastern ME | Renewable energy | $1,928,225 |
| KC Regional Jobs Accelerator | Mid-America Regional Council Community Services Corporation (EDA); Full Employment Council, Inc. (ETA); University of Missouri Curators on behalf of the University of Missouri–Kansas City Innovations Center KCSourceLink (SBA) | Greater Kansas City (MO and KS) | Advanced manufacturing and IT | $1,891,338 |
| Milwaukee Regional Water Accelerator Project | University of Wisconsin–Milwaukee (EDA, SBA); Milwaukee Area Workforce Investment Board (ETA) | Milwaukee, WI, and surrounding | Water | $1,650,000 |
| Minnesota’s Mining Cluster—The Next Generation of Innovation and Diversification to Grow America | University of Minnesota Natural Resources Research Institute (EDA); Minnesota Department of Employment and Economic Development (ETA); University of Minnesota Center for Economic Development (SBA) | Northeastern MN | Energy | $1,948,985 |
| New York Renewable Energy Cluster | The Solar Energy Consortium (EDA); Orange County Community College (ETA); Gateway to Entrepreneurial Tomorrows, Inc. (SBA) | Hudson Valley, NY | Renewable solar energy | $1,950,000 |
| Northeast Ohio Speed-to-Market Accelerator | Northeast Ohio Technology Coalition (EDA); Lorain County Community College (ETA); JumpStart, Inc. (SBA) | Cleveland and Akron, OH, and surrounding | Energy, flexible electronics | $2,062,945 |
| Renewable Energy Generation Training and Demonstration Center | San Diego State University (SDSU) Research Foundation | San Diego, CA, and surrounding | Renewable energy | $1,671,600 |
| Rockford Area Aerospace Cluster Jobs and Innovation Accelerator | Northern Illinois University (EDA, ETA); Rockford Area Strategic Initiatives (SBA) | Rockford, IL, and surrounding | Aerospace | $1,769,987 |
| Southeast Michigan Advanced Energy Storage Systems Initiative | NextEnergy Center (EDA); Macomb/St. Clair Workforce Development Board (ETA); Michigan Minority Supplier Development Council (SBA) | Detroit, MI, and surrounding | Advanced energy storage systems | $2,125,745 |
| Southwestern Pennsylvania Urban Revitalization | Pittsburgh Central Keystone Innovation Zone (EDA); Hill House Association (ETA); University of Pittsburgh (SBA) | Southwestern PA | Energy, health care | $1,959,395 |
| St. Louis Bioscience Jobs and Innovation Accelerator Project | Economic Council of St. Louis (EDA); St. Louis Agency on Training and Employment (ETA); St. Louis Minority Supplier Development Council (SBA) | St. Louis City and County | Bioscience | $1,825,779 |
| The ARK: Acceleration, Resources, Knowledge | Winrock International (EDA, SBA); Northwest Arkansas Community College (ETA) | Northwestern AR and bordering counties in OK and MO | IT | $2,150,000 |
| Upper Missouri Tribal Environmental Risk Mitigation Project | United Tribes Technical College | MT, ND, and SD Reservations | Environmental risk mitigation | $1,716,475 |
| Washington Interactive Media Accelerator | EnterpriseSeattle | Seattle, WA, and surrounding | Interactive media | $1,229,000 |
| AM-JIAC Grantees | | | | |
| AMP! - Advanced Manufacturing and Prototyping Center of East Tennessee | Technology 2020 (EDA, SBA, DOE); Pellissippi State Community College (ETA); University of Tennessee (NIST-MEP) | Eastern TN | Additive manufacturing, lightweight metal processing, roll-to-roll processing, low-temperature material synthesis, complementary external field processing | $2,391,778 |
| Growing the Southern Arizona Aerospace and Defense Region | Arizona Commerce Authority | Southern AZ (Phoenix area) | Aerospace, defense | $1,817,000 |
| Advanced Manufacturing Medical/Biosciences Pipeline for Economic Development | East Bay Economic Development Alliance (EDA); Corporation for Manufacturing Excellence (NIST-MEP); the University of California–Berkeley (DOE); Laney College (ETA); Alameda and Contra Costa SBDCs (SBA) | San Francisco area | Medical and biosciences manufacturing | $2,190,779 |
| Innovation Realization: Building and Supporting an Advanced Contract Manufacturing Cluster in Southeast Michigan | Southeast Michigan Community Alliance (EDA, ETA); Michigan Manufacturing Technology Center (NIST-MEP); National Center for Manufacturing Sciences (DOE); Detroit Regional Chamber Connection Point (SBA) | Southeastern MI | Lightweight automotive materials | $2,191,962 |
| Proposal to Accelerate Innovations in Advanced Manufacturing of Thermal and Environmental Control Systems | Syracuse University (EDA, DOE); NYSTAR (NIST-MEP); The State University of New York’s College of Environmental Science and Forestry (ETA); Onondaga Community College (SBA) | Syracuse, NY | Thermal and environmental control systems | $1,889,890 |
| Rochester Regional Optics, Photonics, and Imaging Accelerator | University of Rochester (EDA, DOE, ETA); NYSTAR (NIST-MEP); High Tech Rochester Inc. (SBA) | Rochester, NY | Optics, photonics, and imaging | $1,889,936 |
| Manufacturing Improvement Program for the Oil and Gas Industry Supply Chain and Marketing Cluster | Oklahoma Manufacturing Alliance (NIST-MEP); New Product Development Center at Oklahoma State University (EDA, ETA, SBA); Oklahoma Department of Commerce, Center for International Trade and Development at Oklahoma State University, and Oklahoma Application Engineer Program (DOE) | Oklahoma | Oil and gas | $1,941,999 |
| Agile Electro-Mechanical Product Accelerator | Innovation Works (EDA, SBA); Catalyst Connection (NIST-MEP); National Center for Defense Manufacturing and Machining (DOE); Westmoreland/Fayette Workforce Investment Board (ETA) | Western PA | Metal manufacturing, electrical equipment | $1,862,150 |
| Greater Philadelphia Advanced Manufacturing Innovation and Skills Accelerator | Delaware Valley Industrial Resource Center | Philadelphia, PA | Additive manufacturing and composites technology | $1,892,000 |
| Innovations in Advanced Materials and Metals | Columbia River Economic Development Council (EDA, DOE); Impact Washington (NIST-MEP); Southwest Washington Workforce Development Council (ETA); Oregon Microenterprise Network (SBA) | Vancouver, WA, and Portland, OR | Metals and advanced materials | $2,192,000 |

Source: ETA JIAC and AM-JIAC grant award documents.

Note: The text in parentheses following the name of each grantee organization indicates the type of federal JIAC or AM-JIAC grant that the organization is receiving. In cases where one organization is listed without parentheses, that single organization is receiving all federal grants for the cluster.

DOE = U.S. Department of Energy; EDA = U.S. Department of Commerce, Economic Development Administration; ETA = U.S. Department of Labor, Employment and Training Administration; NIST-MEP = U.S. Department of Commerce, National Institute of Standards and Technology’s Hollings Manufacturing Extension Partnership; SBA = U.S. Small Business Administration.

c. Overview of the JIAC Evaluation

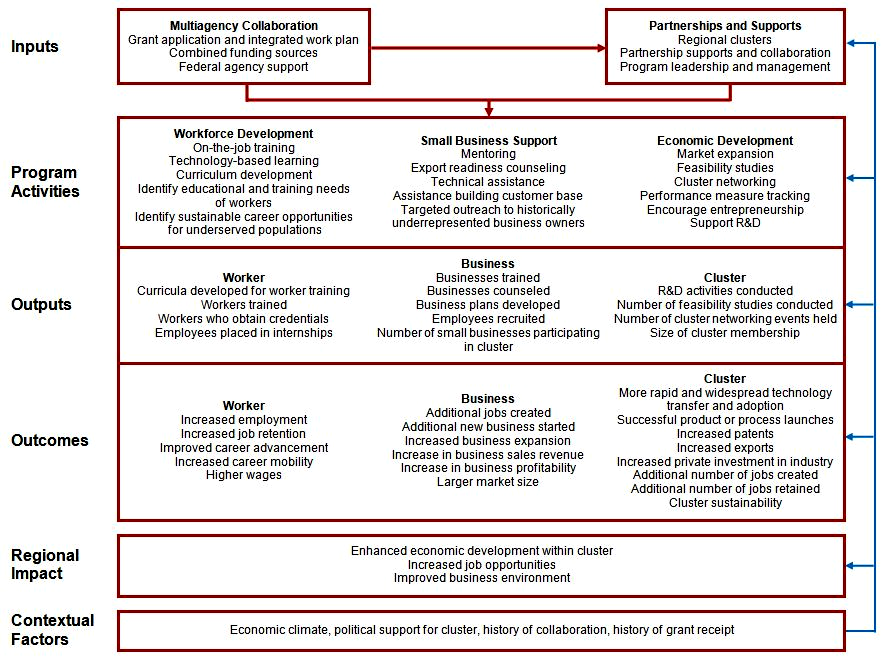

The JIAC evaluation will investigate how multiple federal and regional agencies worked together on these grant initiatives, how ETA grants are being used, the training- and employment-related outcomes that the clusters are able to achieve, lessons learned through implementation, and planning for sustainability. To guide the evaluation, the study team has developed a logic model for the typical cluster. A logic model provides a visual representation of the inputs that influence the development and ongoing operation of the cluster, the activities planned and implemented, the direct outputs of the activities conducted and services provided, the targeted outcomes, and the final intended regional economic development impact. The logic model in Figure A.1 is specific to the JIAC grants, but readily applies to the AM-JIAC grants. The list of inputs, activities, outputs, outcomes, impacts, and contextual factors included in the logic model is indicative and not exhaustive.

Figure A.1. Logic Model for JIAC and AM-JIAC Grants

Using this logic model as a starting point for framing program implementation, the evaluation of the JIAC and AM-JIAC grants will provide an in-depth understanding of how the initiatives unfold within the regions over time. Although many different federal agencies have provided funding or technical assistance to the clusters, this evaluation will focus primarily on the ETA-funded activities and associated outcomes. ETA specified that the evaluation should “focus on the implementation plans, processes, and strategies used to develop and accelerate regional economic development that translates into new jobs and increased wages through these regional partnerships.”4

To address ETA’s goals, the evaluation will focus on answering five key research questions:

What is the role of multiagency collaboration in the planning and implementation of cluster activities?

How and in what ways do regional clusters, programs, and partnerships develop under the grant?

What workforce-related outcomes did the clusters report achieving through this initiative?

How is the initiative managed within each cluster? What practices are being implemented to promote sustainability of grant resources, partnerships, and activities?

What are key lessons learned through implementation? How and under what circumstances might these lessons be replicated?

A range of topics will be covered within the domain of each question.

The role of the multiagency collaboration. Multiagency collaboration at the federal level was intended to be a key input into the operational success of the clusters and a distinguishing feature of the grants initiative. As noted in the logic model, federal agency funding and coordinated provision of technical assistance and support from both funding and nonfunding partners were designed to more effectively help clusters conduct economic development activities. This evaluation will examine several different aspects of multiagency collaboration. The research design investigates the context of the JIAC and AM-JIAC grant initiatives and how the ETA goals are embedded; the nature and structure of how the federal agencies interact; the types of supports and technical assistance that are provided to the clusters by funding and non-funding federal partners, especially as they are directed to or interact with the ETA-funded activities; and how the clusters are monitored by the federal agencies, particularly ETA.

Development of regional clusters, programs, and partnerships. The second domain moves to the cluster level. Clusters are responsible for using grant funding and federal technical assistance to conduct economic, workforce, and small business development activities that can drive improvements in outputs and outcomes. How the partners came together at the regional level is expected to serve as a significant input to program activities. Some clusters might be building upon existing relationships and previous collaborative efforts, whereas others might be forming for the first time. This in turn can affect the cluster’s propensity for collaboration, which can be a determinant of cluster progress and success. The clusters also had to decide on leadership and governance structures. Furthermore, the clusters had to determine what activities to implement and how to fund and monitor them. Because the grants funds came from multiple agencies, the clusters implemented multiple lines of activities. However, the intent of the grant initiatives was to gain synergies by blending the funding, so it will be important to observe how well the activities are integrated.

Outputs and outcomes. Clusters conduct grant activities with the support of federal agencies to generate positive outputs and outcomes at the worker, business, and industry levels, which will ultimately feed into lasting impacts on regional economic development. The logic model maps the types of outputs and outcomes that cluster activities aim to generate at each of these levels. (The evaluation will focus on the subset of outputs and outcomes for ETA activities, because separate EDA and SBA evaluations focus on the relevant metrics for activities funded by those agencies.) ETA-funded activities will primarily result in outputs at the worker level, such as the number of workers trained, the number of workers who receive certifications, and the number of people placed into internships. As noted in the logic model, these outputs are expected to lead to positive outcomes, such as increased employment, increased employment retention, and higher incomes.5

Program management and sustainability. New grant programs support, or initiate, activities around a particular goal, but typically have an underlying purpose to encourage sustainability after the funding infusion. Sustainability depends on the stability of the governance and leadership structures, the flexibility or agility of the cluster to react to changing circumstances and environments, the ability to garner the resources necessary to continue beyond the grant period, and the development of formal sustainability plans.

Program replicability and lessons learned. The last domain of research questions requires analysis across the clusters to assess promising practices and lessons learned. This information will illuminate initiative inputs and activities that seemed to work well in promoting regional economic development for use by ETA as well as current and future cluster administrators in the JIAC and AM-JIAC regions. The evaluation will also assess the factors that may influence replicability of the promising practices.

To address the research questions in each of these domains, the study will include four types of data collection activities: (1) document reviews, (2) interviews of federal officials, (3) site visits, and (4) a survey. Each data collection activity will make specific contributions to the evaluation effort and support the team’s effort to understand how the grants unfolded over time. In particular, the review of grant applications has already informed the study design report and contributed to development of the protocols for the site visits and the survey instrument. Additional document review activities will prepare site visitors with background information for on-site data collection activities and will provide the primary source of data on grant outcomes. Interviews with federal officials highlighted the impetus for the grant effort, provided critical information on the roles of and collaboration among federal agencies, and provided background information to inform site selection. The site visits are designed to yield detailed qualitative information from a subset of clusters about grant implementation and experiences. Finally, the survey will collect quantitative data from all of the clusters about activities, outputs, and outcomes. Table A.4 shows how each data source will contribute to the study’s key research topics discussed earlier. Table A.5 provides the time frame for each data collection activity. In the following section, we will discuss how, by whom, and for what purposes data will be collected during site visits and through the survey.

2. How, by Whom, and for What Purposes the Information Is to Be Used

ETA has made significant investments in the JIAC and AM-JIAC grants; this evaluation will inform how those resources are being used and identify lessons that can be gleaned from cluster efforts supported by the grants. ETA can use this information to assess the success of the multiagency federal collaboration, further build its evidence base about the role of regional clusters in supporting workforce development, and inform the agency’s future decisions about funding efforts by regional clusters.

To support this aim, clearance is requested for two data collection efforts: (1) site visits to a subset of 9 grantees and (2) a survey of all 30 grantee organizations and a subset of partners. The following subsections describe in more detail the two data collection efforts and the information captured by each.

a. Site Visits

The evaluation contractor will conduct site visits to 9 of the 30 JIAC and AM-JIAC grantee clusters. The site visits are designed to yield detailed qualitative information from a subset of clusters about grant implementation and experiences. The evaluation contractor anticipates visiting approximately 7 JIAC and 2 AM-JIAC grantees. The evaluation contractor will spend two to three days in each site conducting semi-structured interviews. These interviews will be conducted with the following types of respondents:

Cluster management staff. The contractor will spend approximately a day interviewing cluster management staff, which will include the cluster manager, the ETA funding stream administrator, and individuals from the cluster oversight board and other non-ETA funding stream administrators. If possible, the contractor will also sit in on a cluster oversight board meeting during this time. These interviews will provide useful insights for ETA on the development of regional clusters; the goals and vision of the cluster’s efforts; steps taken to select, implement, and monitor grant activities; the sufficiency of funding for grant activities; the types of federal support provided; perceptions about project outcomes; cluster management efforts and sustainability; challenges faced throughout implementation; and lessons learned.

Table A.4. Study Topics Covered by Each Data Source

| Topics and Subtopics | Document Review: Grant | Document Review: Progress Reports | Federal Staff Interviews: Design | Federal Staff Interviews: Site Selection Stage | Site Visit Interviews: Cluster Administrators | Site Visit Interviews: Activity Leaders | Site Visit Interviews: Frontline Staff | Site Visit Interviews: Participants | Site Visit Interviews: LWIB/Employer Groups | Survey: Cluster Manager and ETA Grantee Manager | Survey: Grantee Partners |
|---|---|---|---|---|---|---|---|---|---|---|---|
| I. Role of Multiagency Collaboration | | | | | | | | | | | |
| A. History and goals of grants | X | | X | X | | | | | | | |
| B. Federal partner roles, organizational structures, and governance | | | X | X | | | | | | | |
| C. Federal support for grantees | | | X | X | X | X | | | | X | |
| D. Grant monitoring | X | X | X | X | X | | | | | X | |
| II. Development of Regional Clusters, Programs, and Partnerships | | | | | | | | | | | |
| A. Overview of grants and clusters | X | X | | | | | | | | | |
| B. History of collaboration and federal grant receipt | X | | | | X | X | X | | X | X | X |
| C. Grant funding | X | X | | | X | X | X | | | X | X |
| D. Grantee and partner engagement, decision making, communication | X | | | | X | X | X | | X | X | X |
| E. Types of grant activities | X | X | | | X | X | X | X | X | X | X |
| F. Experience with grant | | | | | X | X | X | | X | X | |
| III. Project Outcomes | | | | | | | | | | | |
| A. Metrics used for project output and outcome measurement | X | X | | X | X | X | X | | | | |
| B. Output and outcome monitoring | | | | | X | X | X | | | | |
| C. Assessment of quality and usefulness of monitoring data | | | | X | X | X | | | | X | |
| D. Beneficiaries of grant activities | X | X | | | X | X | X | X | X | X | X |
| E. Rate of outcome achievement | X | X | | | X | X | X | | | | |
| IV. Program Management and Sustainability | | | | | | | | | | | |
| A. Cluster features | X | | | X | X | X | | | X | X | |
| B. Cluster agility | | | | X | X | X | | | X | X | |
| C. Cluster dependence on outside TA | | | | X | X | X | X | | | X | |
| D. Matching funds/leveraged funds | X | | | X | X | X | | | | X | |
| E. Plans for sustainability | | | | | X | X | X | | | X | |
| V. Program Replicability and Lessons Learned | | | | | | | | | | | |
| A. Best practices | | | | X | X | X | | | | | |
| B. Replicability of or uniqueness of best practices | | | | X | X | X | X | | X | | |
| C. Lessons learned | | | | X | X | X | X | | X | | |

ETA = U.S. Department of Labor, Employment and Training Administration; LWIB = Local Workforce Investment Board

Table A.5. Time Frame for Data Collection Activities

| Data Collection Activity | Anticipated Start Date | Anticipated End Date |
| Document Review | September 2013* | January 2016 |
| Federal Staff Interviews | | |
| Planning and design | October 2013* | November 2013* |
| Site selection and implementation | July 2014* | September 2014* |
| Survey | March 2015 | June 2015 |
| Site Visits | April 2015 | July 2015 |

* Actual dates.

Partner organization staff and participants. The contractor will visit two partner organizations for the ETA grant at each site. At each organization, the contractor will spend approximately a third of a day conducting interviews with the activity leader, trainers or frontline staff, and participants, and conducting observations of the facility. These interviews will provide an in-depth view of the types of training and other activities conducted using ETA grant funds. In particular, interviews with activity leaders will capture detailed information about the planning process, collaboration among the cluster partners, perceptions of federal support for grantees’ activities, how activities unfolded, implementation successes and challenges, perceptions of program management, and sustainability efforts. Interviews with frontline staff will provide information on how implementation unfolded on the ground, including the role of partnerships in daily activities, the details of working with training participants, perceived program outcomes, and ground-level perceptions of implementation challenges and successes. Interviews with participants will cover their employment histories, the reasons they are participating in grant activities, their perceptions about the usefulness and quality of training, and suggestions for improvements. Findings from these interviews will not be representative of all participants but will provide a flavor for participants’ perspectives and might enable the evaluation team to illustrate overall findings in the reports with vignettes from actual workers affected by the grant.

Workforce and economic development agencies. Finally, the contractor will spend approximately a third of a day interviewing representatives from the local workforce investment board, the local economic development agency, and a local employer consortium. These interviews will provide a different perspective on the success of grantee cluster collaboration with the workforce and employer communities, the usefulness and quality of grant activities, the challenges faced and successes achieved by the cluster, and project sustainability efforts.

b. Survey

The survey will help ETA understand grantees’ perspectives on grant activities; characteristics of and changes to grantees, partnerships, and clusters as a result of the grant; and interactions with federal funders and technical advisors. The survey will allow for the collection of objective information (for example, tabulation of program activities and nature of cluster organization), as well as subjective information (for example, perspectives on the quality of technical assistance provided, the usefulness of the integrated work plan, and the likelihood of sustaining partnerships beyond the grant period). Differing, but largely overlapping, question sets developed for cluster managers, funding stream administrators, and partners enable many of the same questions to be asked of each group, while also tailoring the questions to fit the different experiences of each of these parties. The survey will enable the evaluation contractor to address some topics not covered by any other data source, and at the same time allow for triangulation of information on key topics covered by the document review, federal interviews, and site visits.

3. Uses of Technology for Data Collection to Reduce Burden

The evaluation contractor will use advanced technology in the administration of the evaluation’s survey to reduce burden on study participants. In particular, the survey will be conducted online, facilitating quick completion and submission and providing several burden-reducing benefits for respondents.

Plans for the online survey will reduce burden in several ways. First, advance emails to those selected for the survey will explain the study, request participation, and include a hyperlink to the survey website. This will reduce the effort and potential for error that could occur if respondents had to type the website address manually. Second, respondents may complete the survey at any time that is convenient for them, stopping and coming back to it later if necessary, obviating the need for them to dedicate the full block of time to the survey. Third, the web survey will employ skip logic, limiting questions to those that are appropriate for the respondent based on preloaded information on the individual’s position and on answers to prior questions. This allows fewer questions to be asked and is faster and less burdensome than paper-and-pencil surveys, which typically cannot involve complex skips or paths.
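The skip logic described above can be illustrated with a minimal sketch. It is not the survey vendor's implementation: the question identifiers, role labels, and routing rules below are hypothetical stand-ins, and the actual instruments are those in Attachments F through I. The sketch only shows the general idea of limiting questions by a preloaded role and by prior answers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Answers = Dict[str, str]


@dataclass
class Question:
    qid: str
    # Predicate deciding whether the question applies, given the preloaded
    # role and the answers collected so far.
    applies: Callable[[str, Answers], bool]


# Hypothetical question list; identifiers and rules are illustrative only.
QUESTIONS: List[Question] = [
    Question("funding_sufficiency",
             lambda role, a: role in {"cluster_manager", "funding_admin"}),
    Question("received_ta", lambda role, a: True),
    Question("ta_usefulness", lambda role, a: a.get("received_ta") == "yes"),
]


def next_questions(role: str, answers: Answers) -> List[str]:
    """Return ids of unanswered questions that apply to this respondent."""
    return [q.qid for q in QUESTIONS
            if q.qid not in answers and q.applies(role, answers)]


print(next_questions("partner", {}))                      # ['received_ta']
print(next_questions("partner", {"received_ta": "yes"}))  # ['ta_usefulness']
```

Because each rule is evaluated against the answers already stored, a respondent who stops and returns later would simply resume with the remaining applicable questions.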

When the web surveys are complete, data are stored on secure servers and are immediately available to the evaluation team. The site visit component of the project involves in-person interviews and thus does not have burden that could directly be reduced through use of technology.

4. Efforts to Identify and Avoid Duplication

The evaluation of the JIAC and AM-JIAC grants will follow two strategies to avoid duplication:

Choosing a different focus than other federal studies involving JIAC and AM-JIAC grants. Two other federal funding agencies (EDA and SBA) have sponsored studies on the JIAC and AM-JIAC grants that involve primary and secondary data collection. The evaluation team has investigated the focus and data collection activities of these evaluations to inform the design of ETA’s evaluation. EDA is gathering information on the JIAC and AM-JIAC grants in the context of a larger study examining different strategies for developing performance metrics that capture economic development. The SBA evaluation consists of a survey fielded to businesses and entrepreneurs that receive JIAC or AM-JIAC services to determine their progress toward business development outcomes. To avoid duplication, this evaluation will focus primarily on ETA activities and ETA-related output and outcome metrics. It will examine the activities of other funding partners only if these activities are closely linked to ETA activities. In such cases, the focus will be on understanding the synergies and complementarities in these efforts.

Gathering unique data from the evaluation’s four data sources. The evaluation involves four types of data collection activities: (1) document reviews, (2) interviews of federal officials, (3) site visits, and (4) a survey. The evaluation is designed so that each data collection activity makes unique contributions to the evaluation effort and supports the team’s effort to understand how the grants unfolded over time. The evaluation began by conducting a careful document and monitoring data review before the survey and site visits. The document review has already informed the design of primary data collection instruments and helped ensure that the instruments focus on topics for which information is not already available in the documents and monitoring data. Interviews with federal partners provided their high-level impressions of cluster progress and contributing factors. Site visits will provide more in-depth information on factors affecting progress toward ETA activities in nine sites. The survey will provide information on the same topic for all 30 sites, not only from ETA activity leaders but also from their partners.

5. Methods to Minimize Burden on Small Businesses or Entities

The SBA is one of the funding partners for both the JIAC and the AM-JIAC grants. Therefore, all of the grantees included some form of small business outreach or engagement in their grant applications. Although this evaluation focuses on the ETA-funded grant components, small businesses or entities might be involved in ETA-funded activities and might therefore be included as respondents for the survey, the site visits, or both. The evaluation team structured the survey instruments and the site visit protocols to minimize the burden on all respondents, including small businesses or entities. If individuals from small businesses are specified as site visit respondents, their interviews will last approximately 30 minutes and will be scheduled at respondents’ convenience. Item 3 addresses how the burden has been reduced using technology.

6. Consequences of Not Collecting the Data

The JIAC Grants Evaluation provides an important opportunity for ETA to understand how grant resources are used and to learn lessons from its investment in the grants. If the information collection requested by this clearance package is not conducted, policymakers and grantees will lack high quality information on the synergies between activities funded by ETA and other agencies. This study will enable ETA and other policymakers to understand the advantages and disadvantages of this groundbreaking strategy of multiagency collaboration; the development of regional clusters, programs, and partnerships; project outcomes; and program replicability.

The primary data collection activities that the evaluation contractor will conduct will ensure that the evaluation team gains a comprehensive understanding of the grants and their implementation. In particular, site visit interviews will provide information about the implementation of the JIAC and AM-JIAC grants. Without information collected through site visit interviews, ETA will be limited to the information provided in grantees’ quarterly reports. Through site visit interviews, ETA will gain information on the inputs to and implementation of the clusters’ activities as well as the degree to which grant activities are likely to be sustained. In addition to site visit interviews, the survey of up to 11 individuals in each of the 30 clusters will enable ETA to learn about cluster organization, communication, funding sufficiency, the types and usefulness of federal support, and program management and sustainability across all funded clusters.

7. Special Data Collection Circumstances

There are no special circumstances surrounding data collection. All data will be collected in a manner consistent with federal guidelines.

8. Federal Register Notice and Consultations Outside of the Agency

a. Federal Register Notice and Comments

A Federal Register notice announcing plans to submit this data collection package to OMB was published on October 27, 2014 (Federal Register, vol. 79, no. 207, p. 63945), consistent with the requirements of 5 CFR 1320.8(d). The Federal Register notice described the evaluation and provided the public an opportunity to review and comment on the data collection plans within 60 days of the publication, in accordance with the Paperwork Reduction Act of 1995. A copy of this 60-day notice is included as Attachment K. No comments were received during the 60-day period.

A second Federal Register notice, providing the public with another opportunity to comment, will be published for a 30-day period, coinciding with this submission to OMB.

b. Consultations Outside of the Agency

The data collection instrument, research design, sample design, and analysis plan have been developed based on the expertise of DOL and the contractor and in consultation with a technical working group (TWG).6 They have also been influenced by, and modified based on, a first round of interviews with federal staff involved with the grants. The survey instrument was piloted with nine individuals in various positions in two clusters, and improvements were made accordingly. The purposes of these consultations were to ensure the technical soundness of the evaluation and the relevance of its findings and to verify the importance, relevance, and accessibility of the information sought in the study.

9. Respondent Payments

No incentives will be provided. Participation in an evaluation was a condition of the grants awarded to the participating clusters. Each cluster has received a two- to four-year grant to fund implementation of its project goals. Cluster members and their partners will not be compensated, from grant or other funds, for interviews conducted during the site visits or over the telephone. Most of those interviewed for the study could reasonably be expected to participate in such activities as part of their job responsibilities. Program participants who engage in semistructured interviews will be recruited on site as they are available, rather than in advance. Evaluators will simply ask if training recipients are willing to participate without being offered an incentive. This strategy should be sufficient to yield the small number of interviews planned because participants will not be setting aside time to keep an appointment, will not incur travel costs to participate, and will likely be waiting to receive services when approached for a possible interview.

10. Privacy

The evaluation team will take a number of measures to safeguard the data that are part of this clearance request, including data collected through the survey and site visits. The evaluation team will assure respondents of the confidentiality of their responses, both in advance materials and at the start of the surveys and interviews. Individual respondents will be informed that their participation is voluntary, that they can skip any questions that they prefer not to answer, and that all responses will be used for research purposes only. Further, they will be assured that no individual respondents will be identified, unless required by law, and that all data will be securely stored. The contractor complies with DOL data security requirements by implementing the security controls and processes that it routinely employs in projects that involve sensitive data. The contractor’s procedures for handling secure data are consistent with the Privacy Act of 1974, the Computer Security Act of 1987, the Federal Information Security Management Act of 2002, OMB Circular A-130, and National Institute of Standards and Technology computer security standards. The contractor secures personally identifiable information and other sensitive project information and strictly controls access on a need-to-know basis. In addition, the contractor uses Federal Information Processing Standard 140-2 compliant cryptographic modules to encrypt data in transit and at rest.

11. Questions of a Sensitive Nature

There are no questions of a sensitive nature in the site visit protocols or the survey instrument.

12. Estimated Hour Burden of the Collection of Information

The survey of grantees and their partners, to be administered by the evaluation contractor, will collect quantitative data from all 30 grantee clusters about activities, outputs, and outcomes. Several types of respondents—including cluster managers, ETA funding stream administrators, and other partners—will complete the online survey for each cluster, each moving through a series of questions tailored to their role and prior responses. Up to 11 individuals per cluster will be contacted to complete the survey, and will spend an average of 23 minutes completing the survey. The length of the survey will vary by the individual’s position. About 20 individuals are predicted to hold the roles of both cluster manager and ETA funding stream administrator and will require about 55 minutes to complete their surveys. Another 20 individuals are expected to hold one of these two roles, and will require 35 to 40 minutes for their survey. The majority of respondents, about 290 individuals, are expected to answer the partner survey, requiring about 20 minutes. In addition to survey completion, grantees will provide information that will enable the evaluation team to assemble the sample frame.
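The 23-minute average can be checked against the respondent counts given above. The sketch below is illustrative only: it assumes the midpoint (37.5 minutes) of the stated 35-to-40-minute range for respondents holding a single administrative role, and the variable names are hypothetical.

```python
# Respondent groups from the paragraph above: (count, minutes per survey).
# 37.5 is an assumed midpoint of the stated 35-40 minute range.
survey_groups = {
    "dual_role": (20, 55.0),     # cluster manager and ETA administrator
    "single_role": (20, 37.5),   # one of the two roles
    "partner": (290, 20.0),      # partner survey
}

total_respondents = sum(n for n, _ in survey_groups.values())
total_minutes = sum(n * minutes for n, minutes in survey_groups.values())
average_minutes = total_minutes / total_respondents

print(total_respondents)          # 330
print(round(average_minutes, 1))  # 23.2
```

Under that assumption the weighted average rounds to the 23 minutes used in the burden estimate.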

Table A.6 presents the number of respondents, the average burden per response, and the total burden hours for each data collection activity for which clearance is sought in this package. The estimated total hour burden to participants in the site visits and survey for the JIAC Evaluation is 284.00 hours. Details on the time and cost burdens are provided in the following subsections for each of the separate data collection activities.

Table A.6. Burden Estimates for Site Visits and Surveys

| Activity | Average Number of Respondents per Interview | Total Number of Respondents | Average Burden per Response (minutes) | Total Burden Hours |
| Site Visits | | | | |
| Cluster manager | 1 | 9 | 120 | 18.00 |
| ETA funding stream administrator | 1 | 6 | 105 | 10.50 |
| Oversight board and other funding stream administrators | 4 | 24 | 60 | 24.00 |
| Local workforce investment board | 2 | 18 | 30 | 9.00 |
| Local economic development agency | 2 | 18 | 30 | 9.00 |
| Employer consortium | 2 | 18 | 30 | 9.00 |
| Activity leader | 1 | 18 | 90 | 27.00 |
| Trainer/frontline staff | 2 | 36 | 45 | 27.00 |
| Program participant | 1 | 18 | 30 | 9.00 |
| Total across 9 clusters | - | 165 | 52 | 142.50 |
| Total Site Visit Burden | 142.50 hours |
| Survey | | | | |
| Cluster Member Contact Information Submission | 1 | 30 | 30 | 15.00 |
| Online Survey Completion | 1 | 330 | 23 | 126.50 |
| Total Survey Burden | 141.50 hours |
| Total Burden | 284.00 hours |

Note: Estimated burden hours are based on pretest interview timings and scheduled site visit interview lengths.

Burden Estimates for Site Visits

Approximately half of the burden estimate, specifically 142.50 hours, represents time for semistructured interviews conducted during site visits to nine clusters. During these two- to three-day visits, the evaluator will interview individuals involved in implementing the initiatives. Respondents will work for various organizations in different locations, so travel might be necessary to meet with some respondents who are unable to convene in a central location. Of course, the individuals interviewed and the positions they hold will vary based on the structure of the cluster and on the number and types of organizations participating. However, the following discussion provides an illustrative general picture of the likely structure of these visits.

The largest portion of burden during the site visits will result from interviews with cluster management staff. On average, the evaluator expects to spend one day per site at a central location conducting interviews with the cluster manager (120 minutes), the ETA funding stream administrator (105 minutes), and an average of four individuals from the cluster oversight board and other non-ETA funding stream administrators (60 minutes each). There are two possible scenarios in which these interviews could overlap.

Single grantee organization. Grants from the three federal funding agencies for JIAC and five federal funding agencies for AM-JIAC could be awarded to a single organization or to multiple organizations. When a single entity within a cluster received all of the JIAC or AM-JIAC grants, the cluster administrator and the ETA funding stream administrator might be the same individual. In addition, there might not be an oversight board or non-ETA funding stream administrators in these cases. If the same individual holds multiple grant administrator positions, the contractor will conduct only one interview. To give a sense of the frequency of this scenario, all of the federal JIAC grants were awarded to a single entity in nine of the 20 selected clusters, and all of the AM-JIAC grants were awarded to a single entity in two of the 10 selected clusters. Assuming that the same proportions hold in the nine sites selected for evaluation visits, one-third or three of the site visits would be conducted in sites where a single entity holds all of the grants and only a cluster manager interview would be conducted.

Multiple grantee organizations. The remaining six clusters selected for site visits are presumed to have multiple entities serving as grantees. In these sites, the cluster manager and ETA funding stream administrator might or might not be the same individual. As an upper bound, the burden estimate assumes that all three types of interviews (cluster manager, ETA funding stream administrator, and oversight board) will be conducted at each of these sites.

Using these assumptions about the structure of grantees’ organizations, Table A.6 includes a total of nine interviews with cluster managers, six interviews with ETA funding stream administrators, and interviews with 24 oversight board members (about four members in each of six sites).
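The interview counts in the preceding paragraph follow from the single-grantee proportions stated above. The short sketch below retraces that arithmetic; the variable names are hypothetical, and rounding the expected number of single-grantee sites to a whole number is an assumption made here to match the "one-third or three" figure in the text.

```python
# Clusters where a single entity holds all grants: (single-entity, selected).
single_entity = {"JIAC": (9, 20), "AM-JIAC": (2, 10)}

single = sum(s for s, _ in single_entity.values())      # 11 clusters
clusters = sum(t for _, t in single_entity.values())    # 30 clusters
share = single / clusters                               # about 0.37

sites_visited = 9
expected_single = round(share * sites_visited)          # 3.3 rounds to 3
expected_multiple = sites_visited - expected_single     # 6

# Upper-bound counts used in the burden estimate.
cluster_manager_interviews = sites_visited              # every site
eta_admin_interviews = expected_multiple                # multi-grantee sites only
oversight_board_respondents = 4 * expected_multiple     # about four per site

print(cluster_manager_interviews, eta_admin_interviews, oversight_board_respondents)
# 9 6 24
```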

The rest of the site visit will consist of a range of interviews with other participating organizations. The evaluation team will conduct 30-minute interviews with an average of two representatives from the local workforce investment board, two officials from the local economic development agency, and two representatives from a local employer consortium. The team will also visit two partner organizations that provide services funded under the ETA JIAC or AM-JIAC grants. A 90-minute interview will be conducted with the activity leader at each organization, 45-minute interviews will be conducted with trainers or frontline staff at each site, and 30 minutes will be spent observing the site and interviewing a program participant. Some interviews might be conducted with small groups of individuals to facilitate data collection.
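As a cross-check, the site visit totals in Table A.6 can be recomputed from the respondent counts and interview lengths described above. This is only a sketch of the arithmetic, with hypothetical variable names.

```python
# (role, total respondents, minutes per response), as listed in Table A.6.
site_visit_rows = [
    ("Cluster manager", 9, 120),
    ("ETA funding stream administrator", 6, 105),
    ("Oversight board and other funding stream administrators", 24, 60),
    ("Local workforce investment board", 18, 30),
    ("Local economic development agency", 18, 30),
    ("Employer consortium", 18, 30),
    ("Activity leader", 18, 90),
    ("Trainer/frontline staff", 36, 45),
    ("Program participant", 18, 30),
]

total_respondents = sum(n for _, n, _ in site_visit_rows)
total_hours = sum(n * minutes for _, n, minutes in site_visit_rows) / 60
average_minutes = total_hours * 60 / total_respondents

print(total_respondents)       # 165
print(total_hours)             # 142.5
print(round(average_minutes))  # 52
```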

Burden Estimates for the Survey

The survey effort will result in burden for those helping to develop the sample frame as well as the survey respondents themselves. The evaluator anticipates that grantees within each of the 30 clusters will spend 30 minutes providing contact information for members of their cluster from which the survey sample will be drawn, resulting in a total of 15 hours for the effort as a whole. Among those selected to complete the survey, the instrument is designed to take an average of 23 minutes. With a sample of 330 individuals across 30 clusters, the total burden for the survey effort represents 141.5 hours.7
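The survey burden figures above reduce to one formula: respondents multiplied by minutes per response, divided by 60. A minimal sketch follows; the helper name `burden_hours` is hypothetical, and the site visit figure is taken from Table A.6.

```python
def burden_hours(respondents: int, minutes_each: float) -> float:
    """Convert a respondent count and per-response minutes into hours."""
    return respondents * minutes_each / 60


contact_info_hours = burden_hours(30, 30)   # sample frame information
survey_hours = burden_hours(330, 23)        # online survey completion
total_survey_hours = contact_info_hours + survey_hours

site_visit_hours = 142.5                    # from Table A.6
total_hours = site_visit_hours + total_survey_hours

print(contact_info_hours, survey_hours, total_survey_hours)  # 15.0 126.5 141.5
print(total_hours)                                           # 284.0
```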

13. Estimated Total Annual Cost Burden to Respondents and Record Keepers

There will be no start-up or ongoing financial costs incurred by respondents that result from the data collection efforts for the JIAC evaluation. The proposed information collection plan will not require respondents to purchase equipment or services or to establish new data retrieval mechanisms.

Indirect cost burden will be incurred as respondents spend time providing contact information, completing the survey, and participating in site visits. In calculating the estimated burden cost for grantees, survey respondents, and cluster members, an estimated hourly cost of $20.26 is used. This is the average hourly earnings of production and nonsupervisory workers on private, nonfarm payrolls in October 2013 as reported by the Bureau of Labor Statistics, Office of Current Employment Statistics. This hourly cost is multiplied by the number of hours all of the respondents of that type are expected to spend. For example, each of 30 lead grantees is expected to spend 30 minutes providing contact information, resulting in 15 hours of burden: (30 people × 30 minutes) / 60 minutes per hour = 15 hours. The cost associated with this burden is $303.90 (15 hours × $20.26). Similarly, for program participants, an estimated hourly cost of $15.95 was derived from the 2011 Workforce Investment Act Standardized Record Data databook, which indicated that dislocated workers with some postsecondary education had pre-dislocation quarterly earnings of $8,294.

Table A.7. Indirect Cost Burden to Contact Providers, Survey Respondents, and Site Visit Participants

| Respondents | Total Responses | Average Time per Response (minutes) | Burden (hours) | Estimated Hourly Cost | Burden Cost |
| Lead Grantee | 30 | 30 | 15 | $20.26 | $303.90 |
| Survey Respondents | 330 | 23 | 126.5 | $20.26 | $2,562.89 |
| Cluster Members | 201 | 55 | 183.75 | $20.26 | $3,722.78 |
| Program Participants | 18 | 30 | 9 | $15.95 | $143.55 |
| Total Burden Cost | | | | | $6,733.12 |

Note: The $20.26 estimated hourly cost used here is the average hourly earnings of production and nonsupervisory workers on private, nonfarm payrolls in October 2013 as reported by the Bureau of Labor Statistics, Office of Current Employment Statistics at www.bls.gov/webapps/legacy/cestab8.htm . The $15.95 is derived from the 2011 Workforce Investment Act Standardized Record Data databook, indicating that dislocated workers with some postsecondary education have predislocation quarterly earnings of $8,294. www.doleta.gov/performance/results/WIASRD_state_data_archive.cfm.
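The cost figures in Table A.7 are the burden hours multiplied by the hourly cost for each respondent type. The sketch below reproduces them from the hours shown in the table. Two points are assumptions made here rather than statements from the source: amounts are rounded half-up to the cent, and the $15.95 rate is treated as quarterly earnings divided by 520 hours (13 weeks of 40 hours), which happens to reproduce the stated figure.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def to_cents(amount: Decimal) -> Decimal:
    """Round a dollar amount half-up to the nearest cent (assumed rule)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


# (burden hours, hourly cost) per respondent type, as shown in Table A.7.
rows = {
    "Lead Grantee": ("15", "20.26"),
    "Survey Respondents": ("126.5", "20.26"),
    "Cluster Members": ("183.75", "20.26"),
    "Program Participants": ("9", "15.95"),
}

costs = {name: to_cents(Decimal(hours) * Decimal(rate))
         for name, (hours, rate) in rows.items()}
total_cost = sum(costs.values(), Decimal("0"))

# Assumption: 13 weeks x 40 hours = 520 paid hours per quarter.
participant_hourly = to_cents(Decimal("8294") / Decimal("520"))

print(costs["Cluster Members"])  # 3722.78
print(total_cost)                # 6733.12
print(participant_hourly)        # 15.95
```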

14. Estimated Annualized Cost to the Federal Government

The estimated annualized cost to the government is $172,789 for site visits, $182,240 for administration of the grantee and partner surveys, and $23,611 for federal oversight. The oversight cost corresponds to 20 percent of the annual rate for a GS-14, step 4 employee in Washington, DC: $118,057 × 0.2 = $23,611 (see http://www.opm.gov/policy-data-oversight/pay-leave/salaries-wages/salary-tables/15Tables/html/DCB.aspx).

Site visits $172,789

Survey Administration $182,240

Federal Oversight $23,611

Total $378,640
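The federal cost total can be retraced in a few lines. This is only an arithmetic sketch with hypothetical variable names; rounding the oversight amount to the nearest dollar is an assumption that matches the figure reported above.

```python
site_visit_cost = 172_789
survey_admin_cost = 182_240

gs14_step4_salary = 118_057   # annual rate cited above
oversight_share = 0.2         # 20 percent of one employee's time
oversight_cost = round(gs14_step4_salary * oversight_share)

total_federal_cost = site_visit_cost + survey_admin_cost + oversight_cost

print(oversight_cost)      # 23611
print(total_federal_cost)  # 378640
```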

15. Changes in Burden

The data collection efforts for the JIAC Evaluation are new and will count as 284.00 total hours toward ETA’s information collection burden.

16. Publication Plans and Project Schedule

The data collection for which this supporting statement seeks clearance will result in three reports: a design report, an interim report, and a final report. Table A.8 shows a schedule for these deliverables, followed by a fuller explanation.

Table A.8. Deliverable Schedule

| Deliverable | Date |
| Design Report | January 2014* |
| Interim Report | February 2015** |
| Final Report | May 2016 |

* Actual date. ** Actual date; does not include the ICR data collection.

Design report. The JIAC Grants Evaluation’s design report details the evaluation team’s strategy for carrying out the evaluation’s activities. This report specifies the study’s conceptual goals, key research questions, and logic model; details the data collection procedures for the study; and describes the analysis strategy and deliverables. The design report also contains copies of all data collection instruments and preliminary outlines for the interim and final reports.

Interim report. The interim report presents findings on the context, planning, and inputs for the demonstration from early internal and administrative data sources—including the grant document review and federal staff interviews—as well as a discussion of the site selection process for site visits. This report provides a preliminary look at implementation across all 30 of the grantees and describes the types of support federal agencies provided to grantees. It also includes a discussion of factors considered in the selection of the nine grantee clusters that will be asked to participate in site visits.

Final report. The final report will present a systematic and integrated analysis that triangulates information across all of the study’s data sources—including the document review, federal interviews, site visits, and the survey. It will provide a comprehensive description of the multiagency collaboration required for this grant and the clusters’ progress in implementing ETA-funded activities and achieving related outputs and outcomes. The final analysis will follow the key research questions closely and integrate qualitative and quantitative findings.

17. Reasons for Not Displaying Expiration Date of OMB Approval

The expiration date for OMB approval will be displayed on all forms distributed as part of the data collection.

18. Exceptions to the Certification Statement

Exception to the certification statement is not requested for the data collection.

1 The study also involves two data collection efforts that are not part of this submission. First, the evaluation team will collect and analyze copies of the quarterly progress reports submitted by grantee clusters to ETA. These reports were approved under Office of Management and Budget (OMB) Approval Number 1205-0507. Given that ETA will supply copies of the reports to the evaluator, the evaluation will place no additional burden on grantees. Second, the evaluation team conducted two rounds of interviews with federal employees. The first round was conducted with representatives from four of the five funding agencies in November 2013 and provided background for and feedback on the evaluation design. The second round included 23 interviews with federal employees during summer 2014 to provide historical context for the federal multi-agency collaboration required for the grants, provide the federal perspective on regional implementation efforts, and inform the process of selecting clusters for the evaluation site visits. Data collections with federal employees are exempt from information collection requests and, therefore, are not included as part of this submission.

2 The unemployment statistics cited in this paragraph are based on data maintained by the Bureau of Labor Statistics and available at http://data.bls.gov/timeseries/lns14000000.

3 Sperling, Gene, and Ginger Lew. “New Obama Administration Jobs and Innovation Initiative to Spur Regional Economic Growth.” May 21, 2011. Available at [http://www.whitehouse.gov/blog/2011/05/21/new-obama-administration-jobs-and-innovation-initiative-spur-regional-economic-growt]. Accessed November 21, 2013.

4 U.S. Department of Labor, Office of Policy Development and Research. “Request for Quote – Office of Policy Development and Research (OPDR), Jobs and Accelerator Challenge Grants Evaluation.” Washington, DC: U.S. Department of Labor, July 8, 2013.

5 Because the evaluation does not include resources to independently measure program outputs or outcomes, the evaluator must rely on grantees’ self-reported data. Clusters must report on specific outputs and outcomes for each funding agency in their quarterly progress reports for the JIAC and AM-JIAC grants. As such, the evaluation’s analyses of grantees’ progress toward target outcomes will be purely descriptive: the evaluation team will assess what can be learned regarding grantees’ short-term outputs and outcomes based on clusters’ self-reports. To probe the accuracy of these data and the robustness of the monitoring systems used for these grants, the evaluation will examine how cluster monitoring and reporting was conducted and examine factors influencing the quality of the clusters’ data.

6 Members of the TWG are listed in Part B Section 5 of this submission.

| File Type | application/msword |

| Author | Samia Amin |

| Last Modified By | Windows User |

| File Modified | 2015-03-06 |

| File Created | 2015-03-03 |
