Bundling OMB Part B final_4.21.15


Opportunity Youth Evaluation Bundling Study

OMB: 3045-0174

SUPPORTING STATEMENT FOR

AMERICORPS OPPORTUNITY YOUTH BUNDLING EVALUATION SURVEY

B. Collection of Information Employing Statistical Methods

B1. Describe (including numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

Study Sample

The AmeriCorps Opportunity Youth Evaluation sample will consist of AmeriCorps members who fit the definition of opportunity youth and comparison non-members who match members on key characteristics. Opportunity youth, or disconnected youth, are defined in the Consolidated Appropriations Act of 2014 as “individuals between the ages of 14 and 24 who are low income and either homeless, in foster care, involved in the juvenile justice system, unemployed, or not enrolled in or at risk of dropping out of an educational institution.” Because AmeriCorps members must be at least 16 years old, for the purpose of the evaluation opportunity youth are defined as individuals between the ages of 16 and 24 who meet the above criteria.

Treatment Group

The treatment group will consist of the total population of AmeriCorps members at 20 program sites (from 9 AmeriCorps State and National programs). The program sites share a common approach to improving opportunity youth member outcomes and were selected using a rigorous screening approach that established their eligibility in terms of similarity of activities and outcomes measured (two memos describing the creation of a screening rubric and the results of its application are available from CNCS upon request). All programs were in their first or second year of a three-year funding cycle at the outset of the study to allow for completion of the pre/post-test within the timeframe of the three-year funding cycle.

The expected number of participants at each site, based on the number of positions funded in FY14, is presented in the table below.

Table 1. AmeriCorps Members - Opportunity Youth Study Participants

| Program | Sites | Number of Members |
| --- | --- | --- |
| Program #1 | 1 | 40 |
| Program #2 | 1 | 50 |
| Program #3 | 12 | 177 |
| Program #4 | 1 | 6 |
| Program #5 | 1 | 18 |
| Program #6 | 1 | 16 |
| Program #7 | 1 | 65 |
| Program #8 | 1 | 30 |
| Program #9 | 1 | 20 |
| Total | 20 | 422 |

Comparison Group

Propensity score matching will be used to ensure that comparison group members are observationally equivalent to treatment group members. Comparison participants in the study will be drawn from the following groups:

Applicants to the program who were selected for inclusion as members, but declined to participate in the program;

Applicants to the program who are eligible for inclusion but did not become members due to limitations in the availability of positions or minor logistical conflicts; and,

Potential applicants recruited from sources used by participating AmeriCorps programs to recruit applicants, such as partnering social service or community agencies.

These participants will be recruited by the evaluators, with the assistance of program leaders and contacts, at a target ratio of two comparison participants per treatment participant (2:1) to maximize the likelihood of a good match.

All participating programs were screened for eligibility in the evaluation. One criterion for selection in this evaluation was access to additional opportunity youth who could serve as comparison group members. Most commonly, this occurs when programs are oversubscribed, so that some potentially qualified applicants are not accepted into the programs. Applicants who were selected but declined to participate, or who would have been selected but were not because of limits on program size or minor logistical conflicts (e.g., being unable to attend mandatory training because of time conflicts), are expected to be demographically similar to treatment participants and have demonstrated motivation to participate in the program. Therefore, they are ideal potential comparison group members.

The use of non-randomized comparison group members to estimate a counterfactual condition presents a potential threat to validity in the form of selection bias, as outcomes may be attributable to member characteristics that lead to program participation rather than to program participation itself. Propensity score matching addresses selection bias by identifying comparison group members who match treatment group members on pre-treatment characteristics, so that the two groups have similar probabilities of program participation (Caliendo & Kopeinig, 2008).

Comparison group members will be matched to treatment group members within each program site. For each site, if a sufficient number of comparison group members cannot be culled from applicants, the remaining comparison group members will be recruited by evaluators based on the specific program’s context, recruitment methods, and selection criteria. In most cases, program applicants fitting the definition of opportunity youth are referred from local agencies (e.g., social service agencies) or institutions (e.g., schools). Evaluators will first contact organizations that provide referrals to each program in order to determine whether there are any additional youth that may also meet selection criteria for the program. Comparison group members will be screened using the same minimum eligibility criteria used to screen treatment group participants, including English language proficiency, basic literacy, and ability to legally work in the United States.

This combination of methods is expected to yield a sufficient number of comparison group members to obtain the targeted 2:1 ratio. If additional recruitment is needed in some sites, evaluators will work with programs to develop alternative recruitment strategies, such as identifying and contacting local organizations that are similar to existing referring organizations to obtain contact information for youth who may meet eligibility criteria and can be further screened for inclusion. This may also include contacting organizations that work with opportunity youth in geographically proximal and demographically similar regions to that served by the program. If the latter strategy is used, regions will be matched on key county-level characteristics obtained from the U.S. Census, including county-level measures of unemployment rates and on-time graduation rates.

Surveys: Respondent Universe, Sample Size, and Target Response Rates

The respondent universe is the complete population of opportunity youth at the 20 site locations who are potentially eligible for inclusion in the AmeriCorps programs. This includes the projected 422 opportunity youth AmeriCorps members, as well as all opportunity youth who may potentially serve as comparison group members in the 20 sites. The number of eligible opportunity youth at the 20 sites cannot be precisely determined, since no exact census of these youth exists at the community level, and the definition of “opportunity youth” used by the Opportunity Index, the key source of data at the county level, differs from the definition used in this study. However, an estimate of the number of potential respondents is given, based on estimates of the number of individuals between the ages of 16 and 24 who are neither in school nor employed in the primary counties served by the included programs, obtained from U.S. Census data via the Opportunity Index (Opportunity Nation, 2014). Comparison group members will be recruited at a 2:1 ratio to AmeriCorps members, and one or more comparison group members will be matched to each AmeriCorps member.

Table 2 below presents the size of the population and size of the survey sample to be selected.

Table 2. Respondent Universe and Sample Population

| Sample Unit | Population | Sample to be Selected |
| --- | --- | --- |
| AmeriCorps program members from 9 selected programs | 422 opportunity youth members | 422 opportunity youth members |
| Comparison group members | Approx. 277,000 opportunity youth non-members | Up to 844 opportunity youth non-members |

Table 3 below summarizes the projected number of treatment and comparison group members for each of the nine programs (20 sites), assuming that 80% of the baseline (T1) sample responds at the second wave (T2) and 80% of the second-wave sample responds at the third wave (T3).

Table 3. AmeriCorps and Comparison Group Members: Targeted Response Rates

| Program | Sites | Treatment T1 | Treatment T2 (80%) | Treatment T3 (80%) | Comparison T1 | Comparison T2 (80%) | Comparison T3 (80%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Program #1 | 1 | 40 | 32 | 26 | Up to 80 | Up to 64 | Up to 51 |
| Program #2 | 1 | 50 | 40 | 32 | Up to 100 | Up to 80 | Up to 64 |
| Program #3 | 12 | 177 | 142 | 113 | Up to 354 | Up to 283 | Up to 227 |
| Program #4 | 1 | 6 | 5 | 4 | Up to 12 | Up to 10 | Up to 8 |
| Program #5 | 1 | 18 | 14 | 11 | Up to 36 | Up to 29 | Up to 23 |
| Program #6 | 1 | 16 | 13 | 10 | Up to 32 | Up to 25 | Up to 20 |
| Program #7 | 1 | 65 | 52 | 42 | Up to 130 | Up to 104 | Up to 83 |
| Program #8 | 1 | 30 | 24 | 19 | Up to 60 | Up to 48 | Up to 38 |
| Program #9 | 1 | 20 | 16 | 13 | Up to 40 | Up to 32 | Up to 26 |
| Total | 20 | 422 | 338 | 270 | Up to 844 | Up to 675 | Up to 540 |
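The projections in Table 3 follow from compounding the 80% response assumption: T2 is 0.8 × T1 and T3 is 0.8 × T2, or 0.64 × T1. A minimal sketch of that arithmetic is shown below; the function and variable names are hypothetical, and the baseline counts are taken from Table 1 with the comparison arm at the 2:1 target.

```python
# Sketch: projected respondents per wave, assuming 80% of the previous
# wave responds (T2 = 0.8 * T1, T3 = 0.8 * T2 = 0.64 * T1).
RETENTION = 0.8  # assumption taken from the Table 3 column headers

# Baseline (T1) treatment counts from Table 1; comparison targets are 2:1.
TREATMENT_T1 = {
    "Program #1": 40, "Program #2": 50, "Program #3": 177,
    "Program #4": 6, "Program #5": 18, "Program #6": 16,
    "Program #7": 65, "Program #8": 30, "Program #9": 20,
}


def expected_waves(baseline, retention=RETENTION):
    """Unrounded expected counts at T1, T2, and T3."""
    t2 = baseline * retention
    return baseline, t2, t2 * retention


def projected_totals(baselines, ratio=1):
    """Sum baselines across programs (times `ratio`), then round each wave once."""
    t1 = sum(count * ratio for count in baselines.values())
    return tuple(round(x) for x in expected_waves(t1))


print("Treatment: ", projected_totals(TREATMENT_T1))
print("Comparison:", projected_totals(TREATMENT_T1, ratio=2))
```

This reproduces the Table 3 totals (338 and 270 for treatment; 675 and 540 for comparison). Rounding each program row separately reproduces most cells, but a few differ by one (e.g., Program #5 treatment T3: 18 × 0.64 = 11.52, shown as 11; Program #6 comparison T2: 32 × 0.8 = 25.6, shown as 25), which appears to reflect adjustment so that the rows sum to the totals.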

B2. Information Collection Procedures/Limitations of the Study

B2. Describe the procedures for the collection of information, including: Statistical methodology for stratification and sample selection; Estimation procedure; Degree of accuracy needed for the purpose described in the justification; Unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Primary Data Collection

A survey conducted at three time points will be used to assess outcomes. Prior to data collection, program staff will complete the Baseline Survey Tracking Sheet (see Attachment B-2) and give participants consent materials (see Attachments B-4, B-5, and B-6), following pre-specified instructions (see Attachments B-3 and B-7).[1] The initial baseline survey will include questions regarding demographic characteristics and baseline outcome characteristics, which will be used for propensity score matching and, in some cases, included as controls in the impact analysis regression models. The variables to be collected will be: age, gender, caregiver status, military service, race/ethnicity, criminal history, physical/mental disability, educational level/degrees, prior employment history and income, financial support, and area of residence. These measures were designed to encompass the various factors that may determine both the likelihood that an applicant will be selected for participation in the AmeriCorps program and an individual’s education level and employment status.

The study’s outcomes will be measured during the pre-service (pre-test) and post-service (post-test) surveys. The latter will include an immediate post-service survey at the end of members’ program participation and a follow-up survey administered six months after the initial post-survey. Surveys will be available in multiple modalities, including proctored paper surveys, online surveys, and telephone interviews. Members of the treatment group (opportunity youth AmeriCorps members) will complete the pre-survey and first post-survey using the proctored paper survey mode during member on- and off-boarding. For the follow-up post-service survey administered to the treatment group, and for all surveys administered to the comparison group, respondents will complete the online survey, with the telephone interview mode used only when participants do not have email addresses or do not respond to emailed invitations and emailed or texted reminders to complete the online survey. Since treatment and comparison groups will be demographically similar and statistically matched to ensure that there are no major differences, there is no expectation that any mode effects found would differ for treatment or comparison group members. Therefore, current opportunity youth AmeriCorps members can be considered representative of the complete target audience. The timing of survey administration to the treatment group will vary with each site’s program start and end dates; comparison group youths’ surveys will be administered at a similar time to those of their matched AmeriCorps members.

Outcomes will be assessed in the three domains of interest: education, employment, and community engagement. Questions will assess changes in knowledge, attitudes, behavior, and skills in each of these areas. These interim changes are expected to be measurable at the initial post-test. Survey items include questions from the following sources: the generic AmeriCorps member application, the AmeriCorps Member Exit Survey, the AmeriCorps Alumni Survey, the National Longitudinal Study of Youth (NLSY) questionnaire, the Career Competencies Indicator (CCI), and the Career Decisions Self-Efficacy Scale (CDSE). With the exception of the member application, each scale used to construct this study’s survey has been extensively tested or validated with groups similar to those in this study (Attachment A-2 matches each question to its source scale). In addition, the survey contains checklists, based on program input about participants’ experiences and activities, that were created to assess behaviors related to each of the three outcome domains, such as completion of college applications or job applications.

Additional impacts in each of the three outcome areas will also be assessed, though it is expected that some or all of these will not be apparent until data are collected from the follow-up survey six months after program completion. Long-term impacts, anticipated to be visible at least three to six months after program completion, include changes in education status, employment status, civic engagement (e.g., voting), and decreased interactions with the criminal justice system.

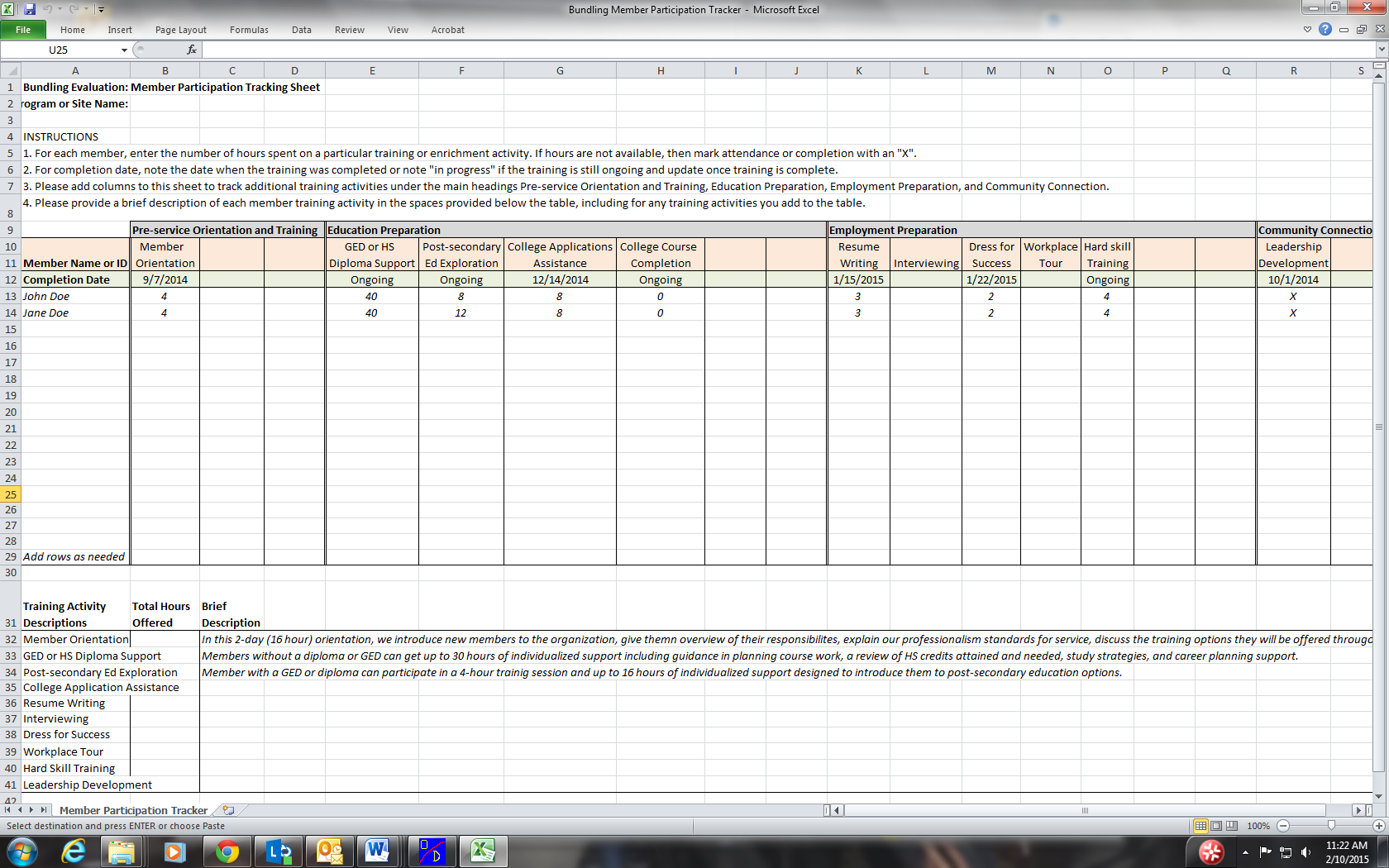

Secondary Data Collection

Though it is not proposed that they be included in the primary impact regression model, program participation variables for each AmeriCorps program participant will be collected. Such variables can be used to gain exploratory insight into the heterogeneity of participants’ experiences as AmeriCorps members, and into the association between the nature of participation activities and education, employment, and community engagement outcomes. Measures include the number of hours of service completed, the number and type of development sessions attended, and the types of services received or tutorials attended. These measures will be collected directly from program records and entered into the Evaluation Participant Tracking Sheet (see Attachment B-8). To the extent possible, comparison group members will also be asked about their participation in programs similar to AmeriCorps, or receipt of services in the domains of interest, in order to reduce the potential for Type II errors.

Statistical Methodology and Sample Selection

Within each site, propensity score matching will be used to make the groups of participants and non-participants as statistically equivalent as possible. A baseline analysis will be conducted during program activities (Year 1), which will include a test of the baseline equivalence of the program and comparison groups before and after matching. The impact analysis will be conducted twice. The initial impact analysis will occur at the end of the program participation period, the timing of which differs by program: for 8 of the programs, this will occur 9-12 months after program enrollment; one program site is summer-only, and its initial impact analysis will take place in August 2016. For all program sites, the second impact analysis will be conducted six months after the end of the program participation period.

Matching Procedure

Propensity score matching (PSM) techniques facilitate the construction of a sample of nonparticipants with characteristics that closely correspond to those of program participants. Propensity score matching will, to the extent possible, adjust the analysis sample so that the observational equivalence of the treatment and comparison groups is maximized. Implementing this approach involves:

Collecting survey data from members and non-members of the programs, including pre-program measures of education and employment.

Applying matching methods to all participant data to construct appropriate comparison groups, which are composed of non-participants with the same observable characteristics as program participants.

Constructing common outcome measures for the treatment and the comparison group members based on assessment and survey/interview data.

Producing rigorous estimates of program impacts through outcome comparisons between the treatment and the comparison group.

Matching methods have emerged as a reliable approach for producing rigorous evaluations of education and employment programs, particularly when a random assignment design is not feasible. Matching methods rely on the conditional independence assumption: the outcome that would be observed if an individual did not participate in the program is independent of program membership, conditional on observed characteristics. The implication is that non-participants who are observationally comparable to participants can be used as a comparison group for the evaluation. Matching methods provide credible impact estimates when: 1) the data include large samples of non-participants; and 2) matching is performed based on rich information on participant and non-participant characteristics, such as education and employment history.
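In potential-outcomes notation, the assumption can be written compactly. The symbols below are introduced only for illustration: D_i = 1 if individual i is an AmeriCorps member and 0 otherwise, X_i is the vector of baseline characteristics, and Y_i(0) is the outcome i would have without program participation. The second line defines the propensity score together with the overlap (common support) condition, a standard companion assumption that the text above does not state explicitly. The third line is the standard propensity score result that justifies matching on a single score rather than on every covariate.

$$ Y_i(0) \perp D_i \mid X_i $$

$$ p(X_i) = \Pr(D_i = 1 \mid X_i), \qquad 0 < p(X_i) < 1 $$

$$ Y_i(0) \perp D_i \mid X_i \;\Longrightarrow\; Y_i(0) \perp D_i \mid p(X_i) $$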

PSM will be implemented using the following steps:

Step 1: Merge data – All study participant data collected at the first stage of data collection will be merged based on pre-assigned participant ID numbers. The merged data will include all available characteristics and outcomes of participants and non-participants. The data will include a dichotomous program participation variable indicating whether the participant was an AmeriCorps member identified as an opportunity youth (coded 1) or was in the comparison group (coded 0).

Step 2: Produce propensity score – The evaluator will use a logit model to estimate the likelihood of program membership based on available baseline control variables: age, gender, race, ethnicity, criminal history, physical/mental disability, education level/degrees, prior employment history and income, financial support, caregiver status, military status, and area of residence. The model results will be used to produce a propensity score for each member and non-member in the data; the propensity score is equal to the predicted probability of program membership based on observed member characteristics.

Step 3: Use propensity score to match members with non-members – Initially, radius matching will be used to match each participant case to one or more comparison cases with identical or nearly identical propensity scores (i.e., scores within a specified radius of the participant’s score). Other matching methods (e.g., kernel matching) are available if the match is unsuccessful.

Step 4: Test comparison sample and modify specification if necessary – Once matching is achieved, the evaluator will test whether members and comparison individuals share similar characteristics. These tests involve comparisons of variable means and standard deviations between the treatment and comparison groups. If treatment-comparison differences in characteristics are detected, the evaluator will modify the logit model specification (e.g., by including polynomial terms to capture nonlinearities or multiplicative terms to capture variable interactions) or change the method of matching, in order to eliminate such differences and ensure that a successful match is achieved. Because program assignment is not random, this step is particularly important for the internal validity of the impact analysis.
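Steps 2 and 3 can be illustrated with a minimal, dependency-free sketch. It assumes that covariates are already numerically coded and roughly standardized, fits the logit by plain gradient ascent rather than the maximum-likelihood routine of a statistical package, and uses an illustrative radius of 0.05 that is not taken from the study. All function names and the toy data are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear_index(beta, x):
    """b0 + b1*x1 + ... + bk*xk for one case."""
    return beta[0] + sum(b * xj for b, xj in zip(beta[1:], x))


def fit_logit(X, d, lr=0.5, iters=3000):
    """Step 2 (sketch): fit a logit model of membership by batch gradient ascent.

    X: rows of numeric, roughly standardized baseline covariates.
    d: 0/1 membership indicator (1 = AmeriCorps member, 0 = comparison).
    Returns [intercept, b1, ..., bk].
    """
    n, k = len(X), len(X[0])
    beta = [0.0] * (k + 1)
    for _ in range(iters):
        grad = [0.0] * (k + 1)
        for x, di in zip(X, d):
            resid = di - sigmoid(linear_index(beta, x))
            grad[0] += resid
            for j in range(k):
                grad[j + 1] += resid * x[j]
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


def propensity_scores(X, beta):
    """Predicted probability of membership for every case."""
    return [sigmoid(linear_index(beta, x)) for x in X]


def radius_match(scores, d, radius=0.05):
    """Step 3 (sketch): pair each member with every non-member whose
    propensity score lies within `radius`; members with no such
    comparison case get an empty list (i.e., remain unmatched)."""
    comparison = [j for j, dj in enumerate(d) if dj == 0]
    return {
        i: [j for j in comparison if abs(scores[i] - scores[j]) <= radius]
        for i, di in enumerate(d)
        if di == 1
    }


# Toy data (hypothetical): one standardized covariate per case.
X = [[-1.5], [-1.0], [-1.0], [-0.5], [0.0], [0.0], [0.5], [0.5], [1.0], [1.5]]
d = [0, 0, 1, 0, 0, 1, 1, 0, 1, 1]
beta = fit_logit(X, d)
scores = propensity_scores(X, beta)
matches = radius_match(scores, d, radius=0.05)
```

Because matching is specified within each site, these functions would in practice be run separately on each site's data. Members left with an empty match list are the cases that would prompt Step 4's respecification or a switch to another matching method.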

Degree of Accuracy Needed for the Purpose Described in the Justification

Because the proposed study will sample the full population of eligible program participants and recruited comparison group members, the minimum detectable effect size (MDES) of the difference between the treatment and comparison groups at 80% power, rather than the degree of accuracy (e.g., the confidence interval of a point estimate), was calculated to determine the sample size for the study. The study will have sufficient power to detect a small-to-moderate standardized effect size, between δ = .24 and .37, as described below.

Minimum Detectable Effect Size

As the number of available AmeriCorps grantees serving opportunity youth was a limiting factor for the study, JBS calculated the Minimum Detectable Effect Size (MDES) for the study under a number of different assumptions, keeping constant a target of 80 percent power and a p=.05 level for statistical significance. Because AmeriCorps opportunity youth serve within programs or program sites in diverse geographic locations, the effects of service are assumed to be nested within sites.

It was anticipated that each program site would have an average of 16 youth members[2] at Time 2 (assuming 80% retention), with whom at least 16 comparison youth would be propensity score matched. It is anticipated that comparison youth will be drawn from within the program site (e.g., unselected applicants) or recruited from each program site’s referring partners. Therefore, comparison group participants are considered nested within each program site. The MDES was calculated assuming 20 groups of 32 participants each, based on the projected sample size at Time 2 (assuming 80% retention). Optimal Design software was used to calculate the MDES for a series of scenarios that varied in: 1) the intra-class correlation (rho), ranging from very low to moderate (0 to .05); and 2) the outcome variance accounted for by covariates, ranging from very low (R2 = .1) to higher (R2 = .30). The estimates are provided in Table 4 below. The lowest (and therefore best-case) minimum detectable effect size was a standardized effect size of δ = .24, which is slightly above what would be considered small (Cohen, 1988) and could be attainable for the outcomes this study is targeting. The highest MDES values, though less likely, are in the range of .36-.37, which would require a fairly robust program effect to be detected. Additional attrition at Time 3 will result in higher required effect sizes.

Table 4. Number of Clusters, Number of Participants and Minimum Detectable Effect Size

| Number of Available Programs and Participants | MDES with R2=.15, rho=0 | MDES with R2=.15, rho=.02 | MDES with R2=.15, rho=.05 | MDES with R2=.30, rho=0 | MDES with R2=.30, rho=.02 | MDES with R2=.30, rho=.05 |
| --- | --- | --- | --- | --- | --- | --- |
| Treated vs. non-treated within site (treating sub-sites and sites as sites; 20 sites with N = 32 in each site) | 0.24 | 0.30 | 0.37 | 0.24 | 0.29 | 0.36 |

Analysis of Survey Results

The bundled evaluation study will take data collected from program participants and non-participants from each of the 20 sites, collapsing them into a single dataset for the impact analysis. A single, dichotomous program participation indicator (1=participated in an AmeriCorps opportunity youth program as a member; 0=did not participate in an AmeriCorps program as a member) will be the variable used to evaluate the program impact on education, employment, and community engagement outcomes.

Descriptive Analyses of Evaluation Sample

Prior to conducting any impact analyses, the evaluator will develop descriptive analyses for treatment and matched comparison group members based on data from the surveys/interviews and administrative data taken from before the program began. These analyses will provide an overview of the baseline characteristics of treatment and matched comparison group members, including age, gender, race, criminal history, physical/mental disability, education level/degrees, prior employment history and income, financial support, and area of residence.

The results will provide an overall characterization of AmeriCorps program members and their matched comparison cases. These analyses, as mentioned above, will also provide evidence on whether the matching approach was effective in identifying comparison cases that are observationally equivalent to the treatment cases. To show that matching was done effectively, the study will use comparisons of variable means and standard deviations between the treatment and the comparison group.
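The means-and-standard-deviations comparison described above is often summarized with a standardized mean difference (SMD) for each covariate. The sketch below computes the means, SDs, and SMD for one covariate. The SMD summary and the 0.25 flag threshold are illustrative assumptions rather than criteria stated in this document, and the function name and ages are hypothetical.

```python
from statistics import mean, stdev


def balance_row(treated, comparison, threshold=0.25):
    """Means, SDs, and standardized mean difference (SMD) for one covariate.

    SMD = (mean_t - mean_c) / sqrt((sd_t**2 + sd_c**2) / 2).
    `threshold` is a hypothetical cutoff used only to flag large differences.
    """
    m_t, m_c = mean(treated), mean(comparison)
    s_t, s_c = stdev(treated), stdev(comparison)
    pooled = ((s_t ** 2 + s_c ** 2) / 2) ** 0.5
    smd = (m_t - m_c) / pooled if pooled > 0 else 0.0
    return {
        "mean_t": m_t, "mean_c": m_c,
        "sd_t": s_t, "sd_c": s_c,
        "smd": smd, "flag": abs(smd) > threshold,
    }


# Hypothetical matched-sample ages (illustrative values, not study data).
row = balance_row([18, 19, 20, 21, 22], [18, 19, 21, 21, 23])
print(row)
```

Running the same function on the pre-match and post-match samples gives a side-by-side view of how much matching reduced each baseline difference.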

Additionally, the evaluator will produce descriptive statistics of treatment and matched comparison group outcomes at two points in time after program completion: at the end of the program participation (post-test) and six months after program completion.

Descriptive analyses of these measures will enable us to observe patterns in education, employment, and community engagement-related outcomes for treatment and comparison group members following program completion. These outcomes can be mapped to performance measures and pre-assigned target levels, so that the data can also be applied to other reporting and performance requirements of the AmeriCorps program.

Impact Analyses

To estimate the impact of AmeriCorps programs on participants’ education, employment, and community-engagement-related outcomes, the evaluator will examine outcome differences between the treatment group (AmeriCorps program members) and the comparison group. Because all members of the treatment group are included, regardless of whether they complete their service or service hours, the estimated effect of treatment group membership on post-test outcomes will be interpreted as the Intent-to-Treat (ITT) impact estimate. An additional Treatment-on-the-Treated (TOT) analysis, which adjusts for hours of service completed and participation in key member development activities, will also be conducted.

To estimate program impacts with increased statistical efficiency, the evaluator will use multivariate multilevel regression models on the post-match sample that control for available participant characteristics. Group-level covariates will be added to the model to account for site-level contextual differences in socio-economic conditions, based on public information about the county in which each site operates. The dependent variables in this model will be participants’ education, employment, and community engagement-related outcomes of interest, namely educational enrollment, educational attainment, employment attainment, employment retention, civic engagement, interactions with the criminal justice system, and connection to community resources.

The parameter constituting the ‘impact estimate’ in this model will be the regression-adjusted treatment effect of the AmeriCorps program on each outcome of interest. We will perform a design-based analysis of the following regression model:

y = Xβ + Zu + ε

where y is an n × 1 vector of responses, X is an n × p matrix containing the fixed-effect regressors, β is a p × 1 vector of fixed-effect parameters, Z is an n × q matrix of random-effect regressors, u is a q × 1 vector of random effects, and ε is an n × 1 vector of errors.

Fixed-effect parameters include treatment and comparison group assignment as well as the following individual-level covariates:

Age

Gender

Race/ethnicity

Caregiver status

Employment history

Education history

Criminal history

County-level unemployment and on-time graduation rates will be considered for inclusion as site-level (group-level) covariates. However, due to the small number of groups in the multilevel study, it is unlikely that site-level differences will be statistically detectable.
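To make the notation of y = Xβ + Zu + ε concrete, the sketch below builds X and Z for a hypothetical random-intercept specification in which u holds one intercept per site, so q equals the number of sites and each row of Z has a single 1 in its site's column. It only assembles the matrices and the systematic part Xβ + Zu; estimating β and the variance of u, typically done with a mixed-model routine, is not shown. The names, parameter values, and data are all hypothetical.

```python
def build_design(rows, site_ids):
    """Build X (n x p) and a site-indicator Z (n x q) for a random-intercept model.

    rows: one tuple per participant, (treatment, covariate_1, ...).
    site_ids: the site label for each participant.
    X gets a leading column of 1s for the intercept; Z has one column per site.
    """
    sites = sorted(set(site_ids))
    col = {s: k for k, s in enumerate(sites)}
    X = [[1.0] + [float(v) for v in r] for r in rows]
    Z = [[1.0 if col[s] == k else 0.0 for k in range(len(sites))] for s in site_ids]
    return X, Z


def linear_predictor(X, beta, Z, u):
    """X*beta + Z*u for each participant (y without the error term)."""
    return [
        sum(x * b for x, b in zip(xr, beta)) + sum(z * uk for z, uk in zip(zr, u))
        for xr, zr in zip(X, Z)
    ]


# Hypothetical example: 4 participants in 2 sites; covariate = age.
X, Z = build_design([(1, 19), (0, 20), (1, 22), (0, 18)], ["A", "A", "B", "B"])
eta = linear_predictor(X, [0.5, 0.3, 0.01], Z, [0.1, -0.1])
```

Here the second entry of β plays the role of the treatment effect, and each site's entry in u shifts the intercept for everyone at that site.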

Where outcome measures are not normally distributed across participants, the type of regression estimated will be changed (e.g., Poisson, negative binomial, or logistic), and the model estimation procedures can be adjusted to relax the assumption that outcomes are normally distributed.

Reporting of Survey Results

It is anticipated that reports will be produced at three points in time for this evaluation. The first report, to be developed following the recruitment of the comparison group participants, will provide information on the baseline equivalence of the treatment and comparison groups. The second set of reports will describe outcomes and treatment-comparison results for each participating program and for the evaluation as a whole at program completion (post-test); these reports will be produced during the third year of the evaluation. The third set of reports will present the impact analysis six months after program completion, including participant outcomes and treatment-comparison results for each participating program and for the evaluation as a whole, based on the survey conducted at the six-month follow-up. Reports for the overall evaluation will be shared with CNCS and with all participating programs. Reports for individual programs will be shared with CNCS and the corresponding individual program.

The analyses of treatment and comparison groups at the second and third waves will examine changes in question responses relative to the first wave; e.g., “XX percent change in self-efficacy ratings of treatment group members, compared to YY percent change in comparison group members, an estimated difference-in-difference of -/+WW percent, which is statistically significant if p-value < 0.05.” See below for a sample table shell.

Table 5: Sample Table Shell for Reporting Pre-/Post-Test Treatment and Comparison Group Survey Results

| Survey Question | Change in Treatment Group | Change in Comparison Group | Difference-in-Change | p-value |
| --- | --- | --- | --- | --- |
| Self-efficacy | XX.X | YY.Y | -/+WW.W** | 0.VVV |

Notes: **p-value<0.01, *p-value<0.05
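The "Difference-in-Change" column is the mean change in the treatment group minus the mean change in the comparison group. The sketch below computes these three descriptive figures from pre- and post-wave responses keyed by participant ID, keeping only respondents observed at both waves. That complete-case choice is an assumption made for illustration, and the p-value would come from the impact regression model rather than from this calculation. All names and values are hypothetical.

```python
from statistics import mean


def change_scores(pre, post):
    """Post minus pre for respondents observed at both waves (keyed by ID)."""
    return [post[pid] - pre[pid] for pid in pre if pid in post]


def difference_in_change(t_pre, t_post, c_pre, c_post):
    """Return (treatment change, comparison change, difference-in-change)."""
    dt = mean(change_scores(t_pre, t_post))
    dc = mean(change_scores(c_pre, c_post))
    return dt, dc, dt - dc


# Hypothetical self-efficacy ratings keyed by participant ID (not study data);
# "t3" has no follow-up response and is dropped from the change scores.
t_pre = {"t1": 3.0, "t2": 2.5, "t3": 3.5}
t_post = {"t1": 3.5, "t2": 3.0}
c_pre = {"c1": 3.0, "c2": 2.0, "c3": 3.0, "c4": 2.5}
c_post = {"c1": 3.2, "c2": 2.2, "c3": 3.0, "c4": 2.6}
dt, dc, did = difference_in_change(t_pre, t_post, c_pre, c_post)
```

With these toy values, the treatment group changes by 0.5, the comparison group by 0.125, and the difference-in-change is 0.375.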

Sample Retention

A successful evaluation must minimize sample loss due to missing data and attrition. Program members (i.e., the treatment group) are expected to be much easier to locate and survey, because most will still be attending the AmeriCorps program during the first year and will have some degree of connection with the program during the following year. Thus, focused retention efforts may be necessary for treatment group participants[3] who drop out of their program early and for comparison group participants. The evaluation team will use multiple approaches, as appropriate, potentially including obtaining information on key friends and family members at intake and maintaining regular contact (e.g., every other month) through calls, texts, postcards, and/or social networking sites (e.g., Facebook). Small but useful incentives will be offered at follow-up. Differential rates of attrition between program and comparison participants will be monitored closely and reported in program status updates.

In order to minimize differential attrition between the program and comparison groups, the contractor will work with AmeriCorps program staff to equalize survey conditions as much as possible for all participants (program participants, program participants who drop out of the program, and members of the comparison group) while remaining flexible to the needs and preferences of different participants. The survey will be made available in multiple modalities (paper, online, phone), and participants will have the option of receiving reminders in the format of their choosing. JBS believes that these flexible strategies will make it possible to keep survey conditions reasonably comparable across all participants and to maximize accessibility. These strategies are intended to minimize overall and differential attrition of the sample.

Unusual Problems Requiring Specialized Sampling Procedures

None.

Use of Periodic (Less Frequent than Annual) Data Collection Cycles

Data will be collected at three points, timed to treatment members' program participation. Eight of the nine programs last 9-12 months; one is a summer-only program. For programs shorter than 12 months, pre- and post-program surveys will be collected within the same year. For all programs, follow-up surveys will be collected six months after the end of the program. This shorter follow-up cycle is necessary to maximize response rates and to fit within grantee funding cycles.

B3. Methods for Maximizing the Response Rate and Addressing Issues of Nonresponse

B3. Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield “reliable” data that can be generalized to the universe studied.

Maximizing the response rate is a key priority for this study. The evaluators will employ a variety of strategies to maximize response rates and to address nonresponse. This approach is consistent with research suggesting that using several different strategies increases the success of retention efforts (Robinson, Dennison, Wayman, Pronovost, & Needham, 2007).

Maximizing the Response Rate

The specific strategies that will be used to maximize the response rate and ensure the target sample size is met include:

Oversampling;

Targeted communications via the survey introduction, telephone script, and email message;

Using multiple reminders and multiple modes to contact and follow-up with respondents;

Incentive offers.

Oversampling

Oversampling of comparison group members will be used to ensure the target sample size is met and to maximize the likelihood of a good match.

Targeted Communication

The instructions for survey proctors (see Attachment B-8), the introduction and follow-up emails (see Attachments B-12 and B-10), and the telephone script for the phone survey (see Attachment B-11), are designed to encourage participation and reduce nonresponse. They provide information about the purpose and nature of the study, and assurances of the confidentiality of responses. The evaluation team will use multiple communication approaches, potentially including obtaining information on key friends and family members at intake, and maintaining regular contact with respondents during non-survey periods (e.g., every other month) through calls, texts, postcards, and/or social networking sites (e.g., Facebook) as appropriate. Programs will also be encouraged to facilitate communication about the study with participants before and during data collection periods.

Using Multiple Contacts and Multiple Modes

A multimodal survey will be used to improve the overall response rate and reduce attrition. The survey will be distributed to respondents by email, by telephone, and/or on paper. Nonrespondents will be contacted at least three times, with reminder emails or phone calls at four, eight, and twelve days after initial contact.
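The reminder protocol above can be expressed as a simple schedule. The sketch below is illustrative only: it assumes reminders are keyed to calendar days from the initial contact date, and the function names and example date are hypothetical rather than part of the study's tracking system.

```python
from datetime import date, timedelta

# Reminder offsets, in days after initial contact, as stated in the text above.
REMINDER_OFFSETS_DAYS = (4, 8, 12)


def reminder_dates(initial_contact, offsets=REMINDER_OFFSETS_DAYS):
    """Return the dates on which a nonrespondent should receive a reminder."""
    return [initial_contact + timedelta(days=d) for d in offsets]


def pending_reminders(initial_contact, today, responded):
    """Reminders still to be sent on or after `today`; none once the person responds."""
    if responded:
        return []
    return [d for d in reminder_dates(initial_contact) if d >= today]


if __name__ == "__main__":
    start = date(2015, 9, 1)  # hypothetical initial-contact date
    print(reminder_dates(start))
    print(pending_reminders(start, today=date(2015, 9, 6), responded=False))
```

Keeping the offsets in one constant means the schedule can be changed in one place if programs request a different reminder cadence.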

All respondents will be informed of the significance of the survey to encourage their participation. In addition, processes to increase the efficiency of the survey (e.g., ensuring that the survey is as short as possible through pilot testing, using branching to ensure that respondents read and respond to only questions that apply to them) and the assurance of confidentiality will encourage survey completion.

Incentive Offers

Although sometimes controversial, offering incentives to human research subjects is a relatively common and ethical way to increase response rates and reduce attrition. According to Dillman (2000), incentives increase response rates with no adverse effects on reliability. Although participation in this project is voluntary, respondents are likely to perceive a time cost and burden associated with participation. Incentives are particularly important when collecting data from hard-to-reach populations (e.g., opportunity youth comparison group members). Based on the definition of opportunity or disconnected youth in the Consolidated Appropriations Act of 2014, which is the definition used in this study, the research team has classified the intended treatment and comparison groups as hard-to-reach.

This classification is consistent with existing research both on defining hard-to-reach populations and on studying opportunity or disconnected youth. For example, Marpsat and Razafindratsima (2010) describe several, often overlapping, characteristics that may qualify a population as hard-to-reach, including being difficult to identify (many youth may not realize they fit the definition of opportunity youth), engagement in illicit or sensitive activities (e.g., drug use, criminal history), and reluctance to disclose membership in the population, usually to avoid stigmatization.

Incentives have a documented record of success in increasing survey response. Church (1999) found that monetary incentives increased the response rate of mail-in surveys by 19.1 percentage points over no incentives and by 11.2 percentage points over non-monetary incentives. There also appears to be a positive, linear relationship between incentive amount and response rate. These findings hold for telephone, mail-in, and face-to-face or proctored surveys (Church, 1999; Yu & Cooper, 1983; VanGeest et al., 2007; Hawley et al., 2009; Singer & Kulka, 2002). Additionally, Singer et al. (1999) reported that incentives have “significantly greater” impacts in populations where a low response rate is expected. Given the overall response rate for the pilot test, the research team believes incentives will be necessary to reach the 80% response rate set forth by OMB.

Therefore, contingent upon survey completion, cash-equivalent incentives (gift cards) will be offered in lieu of cash payments. Gift cards from a major retailer (e.g., Target) will be used as an incentive for opportunity youth to complete the survey. Both treatment and comparison group respondents will receive a $10 gift card after completing the pre-test and another after completing the post-test survey. Given the “disconnected” and difficult-to-track nature of the population under study, as well as the length of time between the post-test and the follow-up, a $20 gift card will be given after completing the six-month follow-up post-test.

Addressing Issues of Nonresponse

Even with the most aggressive and comprehensive retention efforts, some attrition is possible: some baseline respondents may not be found for the follow-up (e.g., the respondent is deceased), or may decline to participate in the follow-up even if found. All completion rates at follow-up are conditioned on the respondent having responded at baseline, since follow-up data collection will be attempted only for baseline respondents. If the completion rate is less than 80 percent at either the second or third wave of data collection, we will conduct a non-response bias analysis to determine whether follow-up respondents differ significantly from the baseline treatment and comparison group members who enrolled in the study, and whether attrition differs between the two groups.
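As a minimal sketch of how the conditional completion rate and the 80 percent trigger might be computed, the Python below assumes each case is recorded as a (group, baseline responded, follow-up responded) tuple. The record layout, the group labels, and the rule of flagging when the overall rate or either group's rate falls below 80 percent are illustrative assumptions, not the contractor's specification.

```python
from collections import Counter

TARGET_RATE = 0.80  # 80 percent threshold stated in the text above


def completion_rates(records):
    """Conditional follow-up completion rates per group and overall.

    records: iterable of (group, baseline_responded, followup_responded).
    Only baseline respondents enter the denominator, because follow-up is
    attempted only for people who responded at baseline.
    """
    eligible, completed = Counter(), Counter()
    for group, baseline, followup in records:
        if not baseline:
            continue
        eligible[group] += 1
        completed[group] += int(bool(followup))
    rates = {g: completed[g] / eligible[g] for g in eligible}
    total = sum(eligible.values())
    rates["overall"] = sum(completed.values()) / total if total else float("nan")
    return rates


def needs_bias_analysis(rates, target=TARGET_RATE):
    # Assumption: flag if the overall rate or any group's rate is below target.
    return any(rate < target for rate in rates.values())


def differential_attrition(rates, a="treatment", b="comparison"):
    """Difference in conditional completion rates between two groups (a minus b)."""
    return rates[a] - rates[b]
```

For example, 9 of 10 treatment and 7 of 10 comparison baseline respondents completing the follow-up gives an overall rate of 80 percent but still triggers the analysis, because the comparison group falls below the threshold.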

If necessary, we will calculate a non-response weight adjustment. The evaluator will compute survey weights that account for differential non-response at each round of data collection. Follow-up survey weights will account for the propensity to participate in both the baseline survey and the follow-up, and will be used in analyzing the combined baseline and follow-up data so that weighted analyses represent the original study population. The contractor will also evaluate whether weight adjustments are needed for additional missing data arising from the matching process.
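One common way to implement this kind of adjustment is the weighting-class (adjustment cell) method: the response propensity in each cell is estimated as the weighted share of eligible cases that responded, and each respondent's weight is multiplied by the inverse of that share. The sketch below applies it twice, first at baseline and then at follow-up among baseline respondents, so the final weights reflect both propensities. The cell definition, field names, and data are hypothetical; a model-based propensity (e.g., logistic regression on baseline characteristics) is an alternative the evaluator may prefer.

```python
from collections import defaultdict


def adjustment_factors(cases, cell_of, responded):
    """Inverse of the estimated response propensity in each adjustment cell.

    cases: list of dicts for everyone eligible at this round; an optional
    "weight" key carries the weight from the previous round.
    cell_of: maps a case to its adjustment cell (hypothetical, e.g., group x gender).
    responded: returns True if the case responded at this round.
    """
    eligible = defaultdict(float)
    responding = defaultdict(float)
    for case in cases:
        w = case.get("weight", 1.0)
        eligible[cell_of(case)] += w
        if responded(case):
            responding[cell_of(case)] += w
    # Propensity = weighted share of eligible cases that responded.
    return {cell: eligible[cell] / responding[cell]
            for cell in eligible if responding[cell] > 0}


def adjust_weights(cases, cell_of, responded):
    """Return copies of the respondents with nonresponse-adjusted weights."""
    factors = adjustment_factors(cases, cell_of, responded)
    adjusted = []
    for case in cases:
        if responded(case):
            new_case = dict(case)
            new_case["weight"] = case.get("weight", 1.0) * factors[cell_of(case)]
            adjusted.append(new_case)
    return adjusted


def by_group(case):
    return case["group"]


# Hypothetical sample: baseline adjustment first, then follow-up among baseline respondents.
sample = (
    [{"id": i, "group": "T", "base": i < 3, "fu": i < 2} for i in range(4)]
    + [{"id": i, "group": "C", "base": True, "fu": i % 2 == 0} for i in range(4, 8)]
)
baseline_resp = adjust_weights(sample, by_group, lambda c: c["base"])
followup_resp = adjust_weights(baseline_resp, by_group, lambda c: c["fu"])
```

In this example the four follow-up respondents each carry a weight of 2, so their weights sum to the original sample size of 8. In practice, cells with very few respondents would usually be collapsed first; in this sketch a cell with no respondents simply drops out, and its weight is not carried forward.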

Because almost all survey questions require a response, item nonresponse is likely to be minimal and to result primarily from early termination of the survey. Such cases will be classified either as incomplete but included cases or as insufficient responses. We will assess whether partial survey completion and answers to survey items differ significantly by survey mode (proctored paper survey, online survey, or computer-assisted telephone interview [CATI]). We will use multivariate logistic regression to analyze response completion by mode, and logistic, multinomial, or Ordinary Least Squares (OLS) regression to analyze item responses, with the model chosen according to the nature of the item (binary, categorical with more than two categories, or continuous). All models will control for individual demographic characteristics. The same models can also estimate how item response varies by mode, by replacing the ‘response’ and ‘nonresponse’ categories with contrasts between response conditions (e.g., online versus other formats).
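The multivariate models described above control for demographics. As a simpler bivariate first look, completion by mode can be screened with a Pearson chi-square test of independence. The sketch below assumes exactly three modes (paper, online, CATI), which gives 2 degrees of freedom and lets the p-value be computed in closed form as exp(-x/2) without a statistics library. The mode labels and counts are hypothetical, and this screen is not a substitute for the regression models.

```python
import math


def completion_by_mode_chi2(counts):
    """Pearson chi-square test of independence: mode x {complete, partial}.

    counts: {mode: (n_complete, n_partial)} for exactly three modes.
    Returns (statistic, degrees_of_freedom, p_value).
    """
    modes = list(counts)
    df = (len(modes) - 1) * (2 - 1)
    if df != 2:
        raise ValueError("the closed-form p-value here assumes exactly three modes")
    col_totals = [sum(counts[m][j] for m in modes) for j in (0, 1)]
    grand = sum(col_totals)
    stat = 0.0
    for m in modes:
        row_total = sum(counts[m])
        for j in (0, 1):
            expected = row_total * col_totals[j] / grand
            stat += (counts[m][j] - expected) ** 2 / expected
    # For 2 degrees of freedom, the chi-square survival function is exp(-x / 2).
    p_value = math.exp(-stat / 2)
    return stat, df, p_value


stat, df, p = completion_by_mode_chi2(
    {"paper": (45, 5), "online": (35, 15), "cati": (40, 10)}  # hypothetical counts
)
```

With pilot-sized samples, expected cell counts below about 5 make the chi-square approximation unreliable, so an exact test would be preferable in that case.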

Missing Data

In order to minimize missing data and inaccuracies in data derived from hand-completed surveys, the evaluator will provide oversight for data entry and will conduct regular audits of the survey and assessment data to ensure the completeness and accuracy of entered information. Similarly, administrative data will be audited for completeness and accuracy.

For phone interviews or online surveys, respondents may decline to answer some questions, so some data will be missing from the final analysis dataset. If the response rate for any item is less than 70%, we will conduct an item nonresponse analysis to determine whether the data are missing at random at the item level.
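A minimal sketch of the 70 percent item response check follows. It assumes each completed survey is stored as a dictionary of item identifiers to answers, with None (or an absent key) marking a skipped item. The item identifiers are hypothetical, and items legitimately skipped through branching would need to be excluded from the denominator, which this sketch does not do.

```python
ITEM_THRESHOLD = 0.70  # 70 percent item response threshold from the text above


def item_response_rates(responses, items):
    """Share of surveys with a non-missing answer for each item.

    responses: list of dicts mapping item id -> answer; None or a missing
    key counts as item nonresponse.
    """
    if not responses:
        raise ValueError("no surveys to evaluate")
    n = len(responses)
    return {item: sum(1 for r in responses if r.get(item) is not None) / n
            for item in items}


def items_below_threshold(responses, items, threshold=ITEM_THRESHOLD):
    """Items whose response rate falls below the threshold, in sorted order."""
    rates = item_response_rates(responses, items)
    return sorted(item for item, rate in rates.items() if rate < threshold)
```

Items returned by `items_below_threshold` would be the candidates for the item-level missing-at-random analysis.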

Cases in the matched dataset with missing data will be included in the final analysis using Full Information Maximum Likelihood (FIML) or, if necessary because of sample size and outcome distribution, Bayesian estimation. This approach is preferable to listwise deletion, which can produce biased parameter estimates unless data are missing completely at random. Multiple imputation, another alternative to FIML, can be cumbersome when data are being collected continually and datasets are being managed and adjusted. Cases with missing values on the outcomes will be included in the analysis.

B4. Tests of Procedures or Methods

Survey pilot tests were completed in March 2015 with AmeriCorps opportunity youth members (n = 37). In addition, cognitive interviews with nine survey respondents (n = 9) were conducted to ensure data quality and to allow JBS researchers to determine the extent to which respondents’ understanding of the questions matched their intended meaning. Pilot test participants were randomly assigned to one of three modes (paper survey, online survey, or phone) to determine whether mode affected responses. The pilot test also assessed how easily respondents were able to complete and return the survey in each mode. Across all respondents, the average time to complete the instrument was 0.33 hours (20 minutes).

Assessment of the survey instrument included the following:

Analysis of mode effects on items with potential for differential responses due to sensitivity, social desirability, or comprehension;

Univariate descriptive statistics to ensure sufficient variability of responses; and

Reliability and variability of scales.

Mode effects were not found for items identified as sensitive (e.g., criminal history) or prone to social desirability bias (e.g., voting behavior). However, respondents assigned to the online survey condition gave significantly different responses to a question about earnings (revised Q.12 in Attachment A-1), owing to problems with comprehension.

Items demonstrated sufficient variability, and all scales demonstrated satisfactory internal consistency, with Cronbach’s alpha values ranging from .74 to .90.
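For reference, Cronbach's alpha for a k-item scale is alpha = (k / (k - 1)) * (1 - sum of item variances / variance of total scores). The sketch below computes it from numerically coded responses (e.g., Likert responses coded 1 to 5), assuming complete cases only. It illustrates the formula and is not the procedure used to produce the values reported above.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one scale.

    rows: one list of numeric item scores per respondent (complete cases only).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    item_columns = list(zip(*rows))                   # scores grouped by item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in rows])  # variance of scale totals
    return (k / (k - 1)) * (1 - sum_item_var / total_var)
```

Using the sample variance for both the items and the totals keeps the ratio consistent; the population variance would give the same alpha, as long as it is used for both.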

The survey results and feedback from the cognitive interviews indicate that in most cases, the items made sense to respondents, the response choices provided a sufficient range of options to show variability of response, and the respondents interpreted the items as intended. However, both the results of the mode effects analysis of the pilot data and information from proctors, phone interviewees, and cognitive interviewees suggest that participants had difficulty comprehending some questions and response options. Revisions were made to the survey in response to pilot test results.

For a complete and extensive description of pilot testing procedures, results, and changes made to the survey as a result of the pilot, please see the separate attachment titled “Results of pilot testing for Opportunity Youth AmeriCorps Member Survey”.

B5. Names and Telephone Numbers of Individuals Consulted (Responsibility for Data Collection and Statistical Aspects)

B5. Provide the name and telephone number of individuals consulted on statistical aspects of the design, and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

The following individuals were consulted regarding the statistical methodology: Susan Gabbard, Ph.D., JBS International, Inc. (Phone: 650-373-4949); Annie Georges, Ph.D., JBS International, Inc. (Phone: 650-373-4938); Gina Cardazone, Ph.D., JBS International, Inc. (Phone: 650-373-4901); Peter Lovegrove, Ph.D., JBS International, Inc. (Phone: 650-373-4915); and Nicole Vicinanza, Ph.D., JBS International, Inc. (Phone: 650-373-4952).

The representative of the contractor responsible for overseeing the planning of data collection and analysis is:

Nicole Vicinanza, Ph.D.

Senior Research Associate

JBS International, Inc.

555 Airport Blvd., Suite 400

Burlingame, California 94010

Telephone: 650-373-4952

Agency Responsibility

Within the agency, the following individual will have oversight responsibility for all contract activities, including the data analysis:

Adrienne DiTommaso

Research Analyst, Research & Evaluation

Corporation for National and Community Service

1201 New York Ave, NW

Washington, District of Columbia 20525

Phone: 202-606-3611

E-mail: [email protected]

B6. Other information – Study Limitations

Limitations of the current study and its design include the following:

This is a quasi-experimental design using propensity score matching on observable characteristics of treatment and comparison group members. Non-observable characteristics (e.g., motivation) are assumed to be correlated with observable characteristics (e.g., employment history). However, non-observable characteristics, or characteristics that are observable but unmeasured, may influence individual outcomes. Examining changes in outcomes over time helps to mitigate this concern, but the possibility remains that non-observable or unmeasured characteristics may differentially influence change in outcomes over time. Alternative analytic strategies, such as three-level models of individual change over time, are possible given the nature of data collection and may provide additional information on participant growth.

The relatively small number of program sites (20) limits the ability to assess group-level outcomes. Although this study is concerned with individual-level outcomes, this is a study limitation.

The programs are similar but not identical. The inclusion of one summer-only program introduces the potential for increased variability in outcome differences, which may interfere with the ability to detect significant effects. In addition to differences in the duration of this program, there are also differences in the targeted participant age and potentially in overall program dosage. Although we will conduct an alternative analysis excluding this program to determine whether it affects results, this alternative analysis will have reduced statistical power compared with the original analysis.

Anticipated attrition at the third wave of data collection will reduce the ability to detect long-term changes, such as changes in employment status, that are expected to occur months after the program has ended.

Since the majority of comparison group participants will be program applicants or referrals from agencies, we expect that many of them will receive services similar to those received by treatment group members (e.g., employment services). However, we will not have access to administrative data on the extent and type of services used. Although we will ask a question about other services used, which will be particularly helpful in ensuring that comparison group members do not receive identical treatment (e.g., have not enrolled as AmeriCorps members at another site), this lack of detailed administrative information on comparison group members is a limitation.
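The first limitation above concerns propensity score matching, which balances treatment and comparison groups only on observed characteristics. To make the matching step concrete, the sketch below shows one common variant, greedy 1:1 nearest-neighbor matching without replacement within a caliper, in the spirit of the guidance in Caliendo and Kopeinig (2008). It assumes propensity scores have already been estimated. The caliper value, identifiers, and scores are hypothetical, and the study's actual matching algorithm may differ.

```python
def greedy_nn_match(treated, comparison, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching on propensity scores, without replacement.

    treated, comparison: dicts mapping person id -> estimated propensity score.
    caliper: maximum allowed absolute score difference (hypothetical default).
    Returns (treated_id, comparison_id) pairs; treated cases with no comparison
    case inside the caliper are left unmatched.
    """
    available = dict(comparison)
    pairs = []
    # Match treated cases in descending score order (one common convention).
    for t_id, t_score in sorted(treated.items(), key=lambda kv: -kv[1]):
        if not available:
            break
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # without replacement: each comparison case used once
    return pairs


treated = {"t1": 0.80, "t2": 0.40, "t3": 0.10}
comparison = {"c1": 0.78, "c2": 0.42, "c3": 0.30, "c4": 0.79}
matched = greedy_nn_match(treated, comparison)
```

Here t3 stays unmatched because no remaining comparison case lies within the caliper. No matching algorithm can balance characteristics that were never measured, which is why the limitation above remains.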

Works Cited Part B:

Caliendo, M., & Kopeinig, S. (2008). Some practical guidance for the implementation of propensity score matching. Journal of Economic Surveys, 22(1), 31-72.

Church, A. H. (1999). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57, 62-79.

Consolidated Appropriations Act, 2014. (2014). Retrieved from http://www.gpo.gov/fdsys/pkg/BILLS-113hr3547enr/pdf/BILLS-113hr3547enr.pdf

Dillman, D. A. (2000). Mail and Internet surveys: The tailored design method (2nd ed.). New York, NY: John Wiley and Sons.

Hawley, K. M., Cook, J. R., & Jensen-Doss, A. (2009). Do noncontingent incentives increase survey response rates among mental health providers? A randomized trial comparison. Administration and Policy in Mental Health, 36(5), 343-348.

Marpsat, M., & Razafindratsima, N. (2010). Survey methods for hard-to-reach populations: Introduction to the special issue. Methodological Innovations Online, 5(2), 3-16.

Opportunity Nation. (2014). The Opportunity Index. Retrieved from http://opportunityindex.org/

Robinson, K. A., Dennison, C. R., Wayman, D. M., Pronovost, P. J., & Needham, D. M. (2007). Systematic review identifies number of strategies important for retaining study participants. Journal of Clinical Epidemiology, 60(8), 757.e1-757.e19.

Singer, E., Van Hoewyk, J., Gebler, N., Raghunathan, T., & McGonagle, K. (1999). The effect of incentives on response rates in interviewer-mediated surveys. Journal of Official Statistics, 15(2), 217-230.

Singer, E., & Kulka, R. A. (2002). Paying respondents for survey participation. Survey Methodology Program, 092.

VanGeest, J. B., Johnson, T. P., & Welch, V. L. (2007). Methodologies for improving response rates in surveys of physicians: A systematic review. Evaluation and the Health Professions, 30, 303-321.

Yu, J., & Cooper, H. (1983). A quantitative review of research design effects on response rates to questionnaires. Journal of Marketing Research, 20(1), 36-44.

Attachments

Attachment A-1: Survey OMB Control #

Youth Employment and Education Study

Thank you for your willingness to complete this survey. Your responses will be kept confidential and will only be reported in summary form, combined with other responses. This is NOT a test. There are no right or wrong answers to the questions, so please choose the responses that best apply to you. This survey will take approximately 20 minutes to complete. Upon completion of this survey, you will receive a [$10/$20] [electronic] gift card.

This study will take place over the course of two years. In order to track your responses confidentially over time, we are asking that you create a code that will enable us to link your surveys to each other.

First three letters of the city or town in which you were born: _ _ _ [For example: S P R for Springfield]

Number of letters in your last name: _ _ [For example: 0 5 for Smith]

First 2 letters of your mother’s first name: _ _ [For example: M A for Mary. If unknown, enter “AA”.]

1. Date of birth

[Month/day/year]

2. Gender

Male

Female

Other (specify): ____

3a. Are you a parent or primary caregiver of a child?

No

Yes, and all of my children live with me

Yes, and some of my children live with me

Yes, and none of my children live with me

3b. Are you a primary caregiver of a parent or other adult (e.g., disabled or sick relative)? [No, Yes]

4. Have you served on active duty in the military?

[No, Yes]

5. How do you identify yourself in terms of ethnicity/race? Please select all that apply.

Black, not of Hispanic origin

American Indian or Alaskan Native

Asian or Pacific Islander

White, not of Hispanic origin

Hispanic

Other (specify): [open-ended text box]

6. Where do you currently live?

City or town _______

Zip code ______

7. Check the highest level of education that you have completed:

Middle school

Some high school

High school diploma or GED

Technical school / Apprenticeship

Some college

Associate’s degree

Bachelor’s degree

Graduate degree

Other (please specify): __________

[If applicable] When did you receive a high school diploma or GED? What month and year?

8. When were you last enrolled in school? What was the month and year?

[Month/year]

9. What school did you most recently attend?

Middle school

High school

Vocational/technical/alternative school (e.g., online school, trade school)

Community college

4-year college

10. Were you employed at any point in the last 6 months?

[No; Yes]

11. Are you currently employed [AMERICORPS MEMBERS ONLY: AmeriCorps service does not qualify as employment]?

[No; Yes]

11a. [If Yes] Please describe your current employment status:

Part-time (1-20 hours per week)

Part-time (21-39 hours per week)

Full-time (40 or more hours per week)

11b. [If Yes] How long have you held your current position?

Less than 1 month

1 to 3 months

4 to 6 months

More than 6 months

11c. [If No] Please describe your current employment status:

Looking for work

Not looking for work

Disabled, not able to work

Pursuing school or training instead of work

Engaged in part-time volunteer position, internship, or apprenticeship

Engaged in full-time volunteer position, internship, or apprenticeship

12. In your most recent job, what was your hourly pay rate (in dollars)? ________

13. Have you ever done any of the following?

A paid part-time job

A paid full-time job

A paid internship

An unpaid internship

Volunteer work that was not part of a requirement for high school graduation

Received a stipend for attending a program (note: does not include AmeriCorps)

Baby-sitting, yard-work or chores that you were paid for by a friend or neighbor

14. What is the longest amount of time you have been at a single job?

I have never been employed

Less than a month

1 to 3 months

4 to 6 months

7 to 11 months

1 to 2 years

More than 2 years

15. How much do you agree or disagree that each of the following statements describes you?

[Strongly Agree; Agree; Neither Agree nor Disagree; Disagree; Strongly Disagree]

I can always manage to solve difficult problems if I try hard enough.

If someone opposes me, I can find the means and ways to get what I want.

It is easy for me to stick to my aims and accomplish my goals.

I am confident that I could deal efficiently with unexpected events.

Thanks to my resourcefulness, I know how to handle unforeseen situations.

I can solve most problems if I invest the necessary effort.

I can remain calm when facing difficulties because I can rely on my coping abilities.

When I am confronted with a problem, I can usually find several solutions.

If I am in trouble, I can usually think of a solution.

I can usually handle whatever comes my way.

16. If you found out about a problem in your community that you wanted to do something about, how well do you think you would be able to do each of the following?

[I definitely could do this; I probably could do this; Not sure; I could not do this; I definitely could not do this]

Create a plan to address the problem

Get other people to care about the problem

Organize and run a meeting

Express your views in front of a group of people

Identify individuals or groups who could help you with the problem

Express your views on the Internet or through social media

Call someone on the phone you had never met before to get their help with the problem

Contact an elected official about the problem

17. How much confidence do you have that you could:

[No confidence at all; Very little confidence; Moderate confidence; Much confidence; Complete confidence]

Use the internet to find information about occupations that interest you

Select one occupation from a list of potential occupations you are considering

Determine what your ideal job would be

Prepare a good resume

Decide what you value most in an occupation

Find out about the average yearly earnings of people in an occupation

Identify employers, firms, and institutions relevant to your career possibilities

Successfully manage the job interview process

Identify some reasonable career alternatives if you are unable to get your first choice

Determine the steps to take if you are having academic trouble

Complete a college or trade school application

Apply for financial aid to further your educational goals

Obtain formal training needed to support your career goals

Pass a college course

Obtain certification in a technical or vocational field (e.g., construction, landscaping, health)

Sign up for health care

Obtain housing vouchers or other housing assistance

Find community resources that address your needs

18. How much do you agree or disagree with the following:

[Strongly Agree; Agree; Neither Agree nor Disagree; Disagree; Strongly Disagree]

I have a strong and personal attachment to a particular community

I am aware of the important needs in the community

I feel a personal obligation to contribute in some way to the community

I am or plan to become actively involved in issues that positively affect the community

I believe that voting in elections is a very important obligation that a citizen owes to the country

19. Generally speaking, would you say that you can trust all the people, most of the people, some of the people, or none of the people in your neighborhood?

All of the people

Most of the people

Some of the people

None of the people

20. Please indicate how much you agree or disagree with the following statements.

[Strongly Agree; Agree; Neither Agree nor Disagree; Disagree; Strongly Disagree]

I have a clear idea of what my career goals are

I have a plan for my career

I intend to pursue education beyond high school (e.g., college, trade school)

I know what to seek and what to avoid in developing my career path

21. In the last 6 months, have you done any of the following?

Sent in a resume or completed a job application

Written or revised your resume

Interviewed for a job

Contacted a potential employer

Talked with a person employed in a field you are interested in

Taken a GED test

Completed a course in high school, college, or an alternative school

Completed a college or trade school application

Completed a financial aid application (e.g., FAFSA - Free Application for Federal Student Aid)

Enrolled in a college, trade school, or a certification course

22. In the last 6 months, have you looked for any of the following?

Full-time work

Part-time work

Internship or apprenticeship

Volunteer position

23. Please assess whether the following factors are barriers to employment for you personally:

[Large barrier to employment; A barrier but can be overcome; Not a barrier to employment]

No jobs available where I live

Do not have enough work experience for the job I want

Do not have enough education for the job I want

Have family or other responsibilities which interfere

Do not have transportation

Not good at interviews or do not know how to create a resume

Can make more money not in an “official” job

Criminal record makes it difficult to find a job

Credit issues make it hard to find a job

Illness or injury makes it challenging to find a job

Do not wish to work

24. Are you currently using or visiting any of the following?

Local employment development division (for unemployment insurance or for help with finding a job)

Housing center (for help with finding housing)

Job center

Crisis center

Homeless shelter

Food bank

Community health clinic

Adult school / community college extension programs

Mutual support or other assistance programs (e.g., AA, NA, Al-Anon, grief support groups)

25. Are you currently accessing any of the following federal or state government supports?

Food assistance (e.g., WIC, SNAP)

Health care assistance (e.g., Medicaid or other health insurance)

Housing assistance (e.g., housing vouchers)

Other financial or practical assistance (e.g., TANF, child care assistance programs)

26. Have you ever been convicted as an adult, or adjudicated as a juvenile offender, of any offense by either a civilian or military court, other than minor traffic violations?

[No, Yes]

27. Are you currently facing charges for any offense or on probation or parole?

[No, Yes]

28. Are you limited in any way in any activities because of physical, mental, or emotional problems?

[No, Yes]

29. How many times have you moved in the last 12 months?

I have not moved

Once

Two or more times

30. Were you registered to vote in the last presidential election?

Yes

No

No, I was not eligible to vote

Don’t know

31. Did you vote in the last presidential election?

Yes

No

Don’t know

32. In the last 12 months, how often did you participate in the following activities? [Basically every day, a few times a week, a few times a month, once a month, less than once a month, not at all]

Participate in community organizations (school, religious, issue-based, recreational)

Keep informed about news and public issues

Help to keep the community safe and clean

Volunteer for a cause or issue that I care about

Donate money or goods to a cause or issue that I care about

33. What programs are you participating in, or what services are you receiving?

AmeriCorps or similar national or community service program (e.g., Job Corps, YouthBuild, City Year, Public Allies, Year Up)

Employment supports, [AMERICORPS MEMBERS ONLY: other than AmeriCorps] (e.g., job training)

Educational supports, [AMERICORPS MEMBERS ONLY: other than AmeriCorps] (e.g., tutoring, GED classes, college enrollment assistance)

Attachment A-2: Survey Questions and Sources

Table 1: Matching Variables

Variable | Question(s) | Source(s) |

Age | 1 | AmeriCorps application |

Gender | 2 | AmeriCorps Alumni survey |

Race | 5 | AmeriCorps application (adapted) |

Criminal history | 26, 27 | AmeriCorps application (adapted) |

Physical/mental disability | 28 | Behavioral Risk Factor Surveillance System [BRFSS] |

Education level/degrees | 7, 8, 9 | AmeriCorps application (adapted) (7), National Longitudinal Survey of Youth [NLSY] (8), NLSY (adapted) (9) |

Prior employment history and income | 10, 11a-c, 12, 13 | NLSY (adapted) (10, 11), Gates (12), DC Alliance of Youth Advocates [DCAYA] (adapted) (13) |

Financial support | 25 | New item |

Caregiver status | 3a, 3b | AmeriCorps Alumni survey |

Military status | 4 | AmeriCorps Alumni survey |

Area of residence | 6 | AmeriCorps application (adapted) |

Similar services | 33 | New item |

Table 2: Outcomes and Impacts

Outcome | Question(s) | Source(s) |

Members increase desire/expectations for postsecondary education | 20 | New item (Career Competencies Indicator [CCI] adaptation) |

Members gain knowledge about postsecondary education | 17 (j, k, l, m, n, o) | Career Decision Self-Efficacy scale [CDSE] (adapted) |

Members gain knowledge of job search skills | 17 (a, b, c, d, e, f, g, h, i) | CDSE (adapted) |

Members increase positive attitudes about obtaining and maintaining employment | 20 (a, b, d) | CCI (adapted) |

Members gain knowledge of community resources | 17 (p, q, r) | New items (CDSE adaptation) |

Members increase positive attitudes / sense of community | 18, 19 | AmeriCorps Exit survey |

Members increase self-efficacy | 15, 16 | AmeriCorps Exit survey (originally from Competencies for Civic Action scale) |

Members complete job search components | 21 (a, b, c, d, e), 22 | New items |

Members gain job experience | 10, 11a-c, 12, 13 | NLSY (adapted) (10, 11), Gates (12), DCAYA (13) |

Members complete coursework and/or take GED test | 8, 9, 21 (f, g) | NLSY (8), NLSY (adapted) (9), New items (21) |

Members or alumni increase in GED certificates / H.S. diplomas | 7 | AmeriCorps application (adapted) |

Members or alumni increase access to community products (e.g., housing applications, TANF) | 24, 25 | New items |

Members or alumni obtain a job / internship / apprenticeship | 10, 11a-c | NLSY (adapted) |

Members increase civic engagement (registered to vote, engaged in the community) | 30, 31, 32 | AmeriCorps Exit survey (30, 31), AmeriCorps Alumni survey (32) |

Members or alumni increase in completed college/trade school applications | 21 (h, i) | New items |

Members or alumni decrease recidivism / interaction with the criminal justice system | 26, 27 | AmeriCorps application (adapted) |

Members or alumni increase in college enrollment | 7, 8, 9, 21 (j) | AmeriCorps application (adapted) (7), NLSY (8), NLSY (adapted) (9), New item (21j) |

Members or alumni maintain employment | 10, 11a-c | NLSY (adapted) |

AmeriCorps survey instruments used:

AmeriCorps application

AmeriCorps Exit survey

AmeriCorps Alumni survey

Additional instruments used:

Behavioral Risk Factor Surveillance System survey (BRFSS). http://www.cdc.gov/brfss/

Career Decision Self-Efficacy scale (CDSE). Betz, N. E., Klein, K. L., & Taylor, K. M. (1996). Evaluation of a short form of the career decision-making self-efficacy scale. Journal of Career Assessment, 4(1), 47-57.

Career Competencies Indicator (CCI). Francis-Smythe, J., Haase, S., Thomas, E., & Steele, C. (2013). Development and validation of the career competencies indicator (CCI). Journal of Career Assessment, 21(2), 227-248.

Competencies for Civic Action scale. Flanagan, C. A., Syvertsen, A. K., & Stout, M. D. (2007). Civic Measurement Models: Tapping Adolescents' Civic Engagement. CIRCLE Working Paper 55. Center for Information and Research on Civic Learning and Engagement (CIRCLE).

DC Alliance of Youth Advocates survey (DCAYA). http://www.dc-aya.org/

Gates Foundation survey. http://www.gatesfoundation.org/

National Longitudinal Survey of Youth (NLSY). https://nlsinfo.org/

Attachment B-1: Table of Grantee Screening and Selection Results

Screening Criteria | Program #1 | Program #2 | Program #3 | Program #4 | Program #5 | Program #6 | Program #7 | Program #8 | Program #9
Completed application | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
First or second year of funding cycle | First | Second | Second | Second | First | Second | Second | First | Second
Program officer support | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Opportunity Youth members | 40 | 50 | 177 | 6 | 18 | 16 | 65 | 30 | 20
Proportion of OY population reached | 40% | 33% | NA | NA | 100% | 33% | NA | 70% | 25%
Proposed activities appropriate to bundle | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Focus of intended program outcomes | Education | Education | Education | Employment | Education | Education | Education | Education | Education
Performance measures | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Participation in CNCS performance measures | O13-O16 | O12-O15 | O12-O15 | O12-O15 | O13-O16 | O12-O15 | O12-O15 | O3-O10, O12-O15, O13-O16 | O12-O15, O13-O16, O14-O17
Data systems at the program level | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Access to existing records | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Existing data and measurement | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Staff closely connected to PM efforts | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Staff closely connected to evaluation efforts | NA | Yes | NA | NA | NA | NA | NA | NA | NA
Consistent person across calls | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes
Alternate fully “deputized” | Yes | NA | Yes | NA | NA | NA | Yes | Yes | Yes
Program is summer-only | Yes | No | No | No | No | No | No | No | No

Explanatory Notes for Attachment B-1: Table of Grantee Screening and Selection Results

Completed application: Grantee has a completed AmeriCorps application on file with CNCS.

First or second year of funding cycle: Grantee is in either the first or second year of a three-year funding cycle.

Program officer support: Program officer believes the program is an appropriate choice for inclusion in the bundle.

Opportunity Youth members: Approximate number of Opportunity Youth (OY) currently participating in the program.

Proportion of OY population reached: Approximate percentage of Opportunity Youth applicants who are accepted into the program. For example, 50 percent means that approximately half of potential or actual applicants are accepted. Some potential applicants are not invited to apply because they are not deemed ready to make the required commitment.

Proposed activities appropriate to bundle: The program's activities are consistent with the intended outcomes that the evaluation will measure.

Focus of intended program outcomes: The program's intended outcomes focus on education and/or employment outcomes that the evaluation will measure.

Performance measures: The program’s performance measures address the same (or similar) outcomes as the evaluation will measure.

Participation in CNCS performance measures: The grantee is participating in one or more CNCS performance measures in the focus area of economic opportunity, as identified by CNCS's codes for standard measures in this area. The following aligned output-outcome pairs are reported by one or more of the bundling candidates in the table above.

O3: Number of economically disadvantaged individuals receiving job placement services.

O10: Number of economically disadvantaged individuals placed in jobs.

O12: Number of economically disadvantaged National Service Participants who are unemployed prior to their term of service.

O15: Number of economically disadvantaged National Service Participants that secure employment during their term of service or within one year after finishing the program.

O13: Number of economically disadvantaged National Service Participants who have not obtained their high school diploma or equivalent prior to the start of their term of service.

O16: Number of economically disadvantaged National Service Participants that obtained a GED/diploma while serving in a CNCS program or within one year after finishing the program.

O14: Number of economically disadvantaged National Service Participants who have their high school diploma or equivalent but have not completed a college degree prior to their term of service.

O17: Number of members that complete a college course within one year after finishing a CNCS-sponsored program.

Data systems at the program level: The grantee has set up data collection instruments and other systems to collect and aggregate raw data for performance measurement.

Access to existing records: Grantee can provide access to existing program records.

Existing data and measurement: The grantee has data collection systems and protocols in place that are amenable to an impact evaluation.

Staff closely connected to PM efforts: Staff representing the program for the bundled evaluation is closely involved in current performance measurement efforts.

Staff closely connected to evaluation efforts: Staff representing the program for the bundled evaluation is closely involved in any evaluation efforts currently underway. "NA" indicates a grantee is not undertaking an evaluation.

Consistent person across calls: The grantee can provide one or more staff persons to consistently participate in all group calls.

Alternate fully “deputized”: Alternate or backup staff available to participate in group calls are able to speak on the program director’s behalf.

Program is summer-only: Programs that are summer-only may not be able to collect evaluation data on the same schedule as other programs in the bundle.

Attachment B-2: Baseline Survey Tracking Sheet

Attachment B-3: Instructions for Collecting Baseline Data


Baseline data should be collected from AmeriCorps applicants as soon as possible. If the applicant is 18 or older, collect the data the first time the applicant is face-to-face with a trained program staff member (e.g., while filling out the initial application). If the applicant is under 18, parental consent must be obtained before any data collection occurs; follow the instructions for underage participants below.

Instructions for Underage Participants:

Participants under the age of 18 must obtain parental consent to participate in the study before any data is collected from them. If an applicant is under the age of 18, give them a Parental Consent form and a Youth Assent form.

Inform them that they may choose to participate in the study or not, and that their choice will in no way affect decisions about AmeriCorps membership.

Enter their first name and last initial under “Name.”

Write “Y” in the column labeled “Under 18?”

Once both forms have been turned in, write “Y” in the column labeled “Parental Consent Received?” Then follow the general instructions below.

General Instructions:

For each applicant to the program, enter their first name and last initial (e.g., John S.) under “Name” in the Baseline Survey Tracking Sheet.

Give the participant a copy of the survey. As you give the participant the survey, write down the survey ID in the “ID” column. You can find the ID in the upper right-hand corner of the first page of the survey.

Instruct the participant to read and complete the consent form on the first page. Verbally remind them of the following:

They may choose to participate in the study or not; their choice will in no way affect decisions about AmeriCorps membership.

Their answers will be confidential, and the survey will be placed in a sealed envelope as soon as it is completed.

If they consent, the evaluation contractor will be given their contact information so that the contractor can follow up with them, if necessary, to complete the next two surveys.

Once the participant has read and understood the instructions, they may choose either to participate in the study or not. Ask them to detach the first page from the rest of the survey and give it to you. Confirm that the ID number at the top of that page matches the ID recorded for that individual in the Baseline Survey Tracking Sheet.