HPOG NIE and Impact Supporting Statement_Part B REVISED 111814 clean

Health Profession Opportunity Grants (HPOG) program

OMB: 0970-0394

Supporting Statement for OMB Clearance Request

Part B

HPOG Impact Study 36-Month Follow Up and National Implementation Evaluation of the Health Profession Opportunity Grants (HPOG) to Serve TANF Recipients and Other Low-Income Individuals

November 2014

Submitted by:

Office of Planning, Research & Evaluation

Administration for Children & Families

U.S. Department of Health and Human Services

Federal Project Officers:

Hilary Forster and Mary Mueggenborg

Table of Contents

B.1 Respondent Universe and Sampling Methods

B.1.1 HPOG Grantees, Partners, Stakeholders, and Employers

B.1.2 HPOG Participants and Control Group Members

B.2 Procedures for Collection of Information

B.2.3 Degree of Accuracy Required

B.2.4 Who Will Collect the Information and How It Will Be Done

B.2.5 Procedures with Special Populations

B.3 Methods to Maximize Response Rates and Deal with Non-response

B.3.1 Grantees, Management and Staff, Partners and Stakeholders, and Employers

B.3.2 HPOG Participant Survey

B.4.1 Grantee, Management and Staff, Stakeholder/Network and Employer Surveys

B.4.2 15-Month Participant Follow-Up Survey

B.5 Individuals Consulted on Statistical Aspects of the Design

Appendices

A. HPOG Logic Model

B. HPOG-Impact 36-month Follow-Up Survey – Treatment Group Version

C. HPOG-Impact 36-month Follow-up Survey – Control Group Version

D. HPOG-Impact 36-month Follow-up Survey – Table of Contents

E. HPOG-NIE Screening Questionnaire

F. HPOG-NIE Semi-Structured Discussion Guide

G. OMB 60-Day Notice

H. HPOG-Impact Contact Update Letter and Contact Update Form

I. HPOG-Impact Advance Letter

Part B: Statistical Methods

In this document, we discuss the statistical methods to be used with new data collection under this OMB number (for statistical methods used with previously approved instruments please see the information collection request (0970-0394) approved August 2013). This new data collection includes: longer-term (36-month) follow-on data collection activities for the HPOG Impact Study (HPOG-Impact) and additional data collection activities for the Health Profession Opportunity Grants National Implementation Evaluation (HPOG-NIE). Both studies are sponsored by the Office of Planning, Research and Evaluation (OPRE) in the Administration for Children and Families (ACF) in the U.S. Department of Health and Human Services (HHS).

B.1 Respondent Universe and Sampling Methods

Thirty-two HPOG grants were awarded to government agencies, community-based organizations, post-secondary educational institutions, and tribal-affiliated organizations to conduct these activities. Of these, 27 were awarded to agencies serving TANF recipients and other low-income individuals. All 27 participate in HPOG-NIE. Twenty of these grantees participate in HPOG-Impact. Three of these grantees participate in the Pathways for Advancing Careers and Education (PACE)1 project.

This data collection request for HPOG-NIE and HPOG-Impact includes two major respondent universes: (1) HPOG program managers, and (2) current and future HPOG participants and potential participants, including HPOG-Impact treatment and control group members.

Below we describe each of the HPOG-NIE and HPOG-Impact respondent subgroups and respective data collection strategies.

B.1.1 HPOG Program Managers

For HPOG-NIE, the universe includes program managers in all of the grantee sites participating in the studies. The research team will ask managers from the total universe of HPOG grantees to respond to a screening questionnaire. Grantees will respond to the screener on-line (Appendix E). This web-based instrument will ask whether grantees made important changes to program practices, focus or structure and whether performance measurement information played a role in making these changes. Semi-structured interviews will be conducted by telephone with a subset of grantees. These interviews will be guided by a semi-structured discussion guide (Appendix F) which includes open-ended questions to understand the process by which decisions to change programs were made and how performance measurement information was used in making those decisions.

B.1.2 HPOG Participants and Control Group Members

For HPOG-Impact, the universe includes program participants and control group members. Program staff recruited these individuals and determined eligibility. For those individuals deemed eligible for the program who also agreed to be in the study, program staff obtained informed consent. (Individuals who do not agree to be in the study are not eligible for HPOG services.)

For HPOG-Impact, at this time the expected enrollment for the study is 10,950 individuals across the 20 participating grantees, including two treatment groups and a control group. (This sample will be supplemented with a sample of an additional 3,679 would-be students from the four HPOG programs that are participating in PACE.2 This data collection is included in OMB submission 0970-0397.) HPOG grantees vary greatly in size; the target amount of study sample from each grantee is proportional to the grantee’s annual numbers of HPOG participants.

B.1.3 Target Response Rates

Overall, we expect response rates to be sufficiently high in this study to produce valid and reliable results that can be generalized to the universe of the study. For HPOG-NIE and HPOG-Impact, we expect the following response rates:

HPOG management. We expect a very high response rate (at least 80 percent) among grantee management for the screening questionnaire. We expect responses from all 20 grantee managers participating in the semi-structured interviews.

36-Month Follow-up survey of HPOG-Impact participants. We are targeting an 80 percent response rate, which is based on experience in other studies with similar populations and follow-up intervals. Because data collection for the HPOG 15-month survey began only within the past two months, we do not yet have sufficient data to provide reliable information about response rates. However, data collection for the PACE 15-month survey has been underway for 8 months and can be used as a proxy for HPOG. Based on response rates to date, the PACE team expects to achieve an 80 percent response rate on the 15-month survey. The following table shows response rates for cohorts released to date.

| Random Assignment Month | Number of Cases | Percent of Total Sample | Expected Response Rate | Response Rate to Date |
|---|---|---|---|---|
| November 2011 | 48 | 1% | 75% | 75% |
| December 2011 | 67 | 1% | 78% | 78% |
| January 2012 | 69 | 1% | 64% | 64% |
| February 2012 | 73 | 1% | 77% | 77% |
| March 2012 | 79 | 1% | 72% | 72% |
| April 2012 | 130 | 1% | 77% | 77% |
| May 2012 | 132 | 1% | 80% | 80% |
| June 2012 | 170 | 2% | 81% | 81% |
| July 2012 | 209 | 2% | 74% | 74% |
| August 2012 | 305 | 3% | 77% | 74% |
| September 2012 | 145 | 2% | 77% | 68% |
| October 2012 | 202 | 2% | 80% | 70% |
| November 2012 | 238 | 3% | 80% | 67% |
| December 2012 | 220 | 2% | 80% | 57% |
| January 2013 | 356 | 4% | 80% | 53% |
| February 2013 | 363 | 4% | 81% | 36% |
| March 2013 | 319 | 3% | 81% | 21% |
| April 2013 | 330 | 4% | 81% | 4% |
| Total Sample Released to Date | 3455 | 37% | 79% | 55% |
| Total Projected Sample | 9232 | 100% | 80% | |

As the table shows, the PACE survey sample is released in monthly cohorts 15 months after random assignment. As of August 4, 2014, the overall response rate is 55 percent, but this figure includes a mix of monthly cohorts that are finalized and cohorts released in the previous week or month for which survey work has just started. The nine earliest monthly cohorts (November 2011 through July 2012), comprising 977 sample members, are closed. The overall response rate for these completed cohorts is about 76 percent. These cohorts were released prior to the approval and adoption of a contact information update protocol. The fact that the survey team obtained a 76 percent response rate with these cohorts in the absence of updated contact information gives ACF and the survey team confidence that an 80 percent response rate overall will be achieved. Although none of the cohorts in which the contact information update protocol was employed are completed, the survey team has achieved higher completion rates in the first few months of the survey period than it did for the earlier cohorts. For the later cohorts, the survey team obtained a 35 to 40 percent response rate in the telephone center in the first eight weeks. This is generally the threshold for transferring a case to the field interviewing team. The survey team took twice as long (16 weeks) to reach this benchmark with the earlier cohorts (those without contact updates). The survey team expects to obtain response rates above 80 percent for these later cohorts to compensate for the 76 percent rate in the early cohorts, and thus reach the 80 percent response rate goal.
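The arithmetic behind these figures can be checked with a short sketch. The cohort counts and response rates to date are transcribed from the table above; because the table reports rounded percentages, the results are approximate. The variable names and the "required rate" calculation are illustrative assumptions, not part of the survey team's procedures.

```python
# Cohort data transcribed from the response-rate table:
# (random assignment month, number of cases, response rate to date).
COHORTS = [
    ("2011-11", 48, 0.75), ("2011-12", 67, 0.78), ("2012-01", 69, 0.64),
    ("2012-02", 73, 0.77), ("2012-03", 79, 0.72), ("2012-04", 130, 0.77),
    ("2012-05", 132, 0.80), ("2012-06", 170, 0.81), ("2012-07", 209, 0.74),
    ("2012-08", 305, 0.74), ("2012-09", 145, 0.68), ("2012-10", 202, 0.70),
    ("2012-11", 238, 0.67), ("2012-12", 220, 0.57), ("2013-01", 356, 0.53),
    ("2013-02", 363, 0.36), ("2013-03", 319, 0.21), ("2013-04", 330, 0.04),
]
TOTAL_PROJECTED_SAMPLE = 9232
TARGET_RATE = 0.80
LAST_CLOSED_COHORT = "2012-07"  # cohorts through July 2012 are closed


def pooled_rate(cohorts):
    """Case-weighted response rate across a list of cohorts."""
    cases = sum(n for _, n, _ in cohorts)
    completes = sum(n * rate for _, n, rate in cohorts)
    return completes / cases


# ISO-style month strings sort chronologically, so a string comparison works.
closed = [c for c in COHORTS if c[0] <= LAST_CLOSED_COHORT]
closed_cases = sum(n for _, n, _ in closed)
closed_rate = pooled_rate(closed)
overall_rate = pooled_rate(COHORTS)

# Rate needed among all remaining sample to reach the overall target,
# given what the closed cohorts achieved (illustrative calculation).
remaining_cases = TOTAL_PROJECTED_SAMPLE - closed_cases
required_rate = (
    TARGET_RATE * TOTAL_PROJECTED_SAMPLE - closed_rate * closed_cases
) / remaining_cases

print(f"Closed cohorts: {closed_cases} cases, pooled rate {closed_rate:.1%}")
print(f"All released cohorts: pooled rate {overall_rate:.1%}")
print(f"Rate required in remaining sample: {required_rate:.1%}")
```

Under these inputs the closed cohorts pool to roughly 76 percent and all released cohorts to roughly 55 percent, consistent with the text. The remaining sample would need a rate only slightly above 80 percent to reach the overall goal.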

B.2 Procedures for Collection of Information

B.2.1 Sample Design

The sample frame includes all of the HPOG-NIE grantees, including the HPOG grantees who are participating either in HPOG-Impact or in PACE. This section first describes the sample design related to HPOG staff involved in performance reporting (HPOG management). We then describe the sample frame for HPOG-Impact treatment and control group members.

Grantee Management—HPOG-NIE

The research team will collect data from HPOG-NIE managers who work directly with the HPOG Performance Reporting System and are familiar with the Performance Progress Report (PPR).

HPOG Study Participants—HPOG-Impact

The universe of HPOG study participants consists of those adults who gave informed consent and were randomly assigned to the treatment or control group. In sites that agreed to test an approved program enhancement, individuals are randomly assigned to a control group or to one of two treatment groups: a basic HPOG treatment group or an enhanced HPOG treatment group. Therefore, the baseline sample is projected to include:

3,623 individuals in the no-HPOG control group;

7,260 total individuals in an HPOG treatment group, including:

An anticipated 5,945 individuals in the HPOG basic treatment group; and

An anticipated 1,315 individuals in the enhanced HPOG treatment group, located in the grantees that agree to test the enhancement selected for the study.

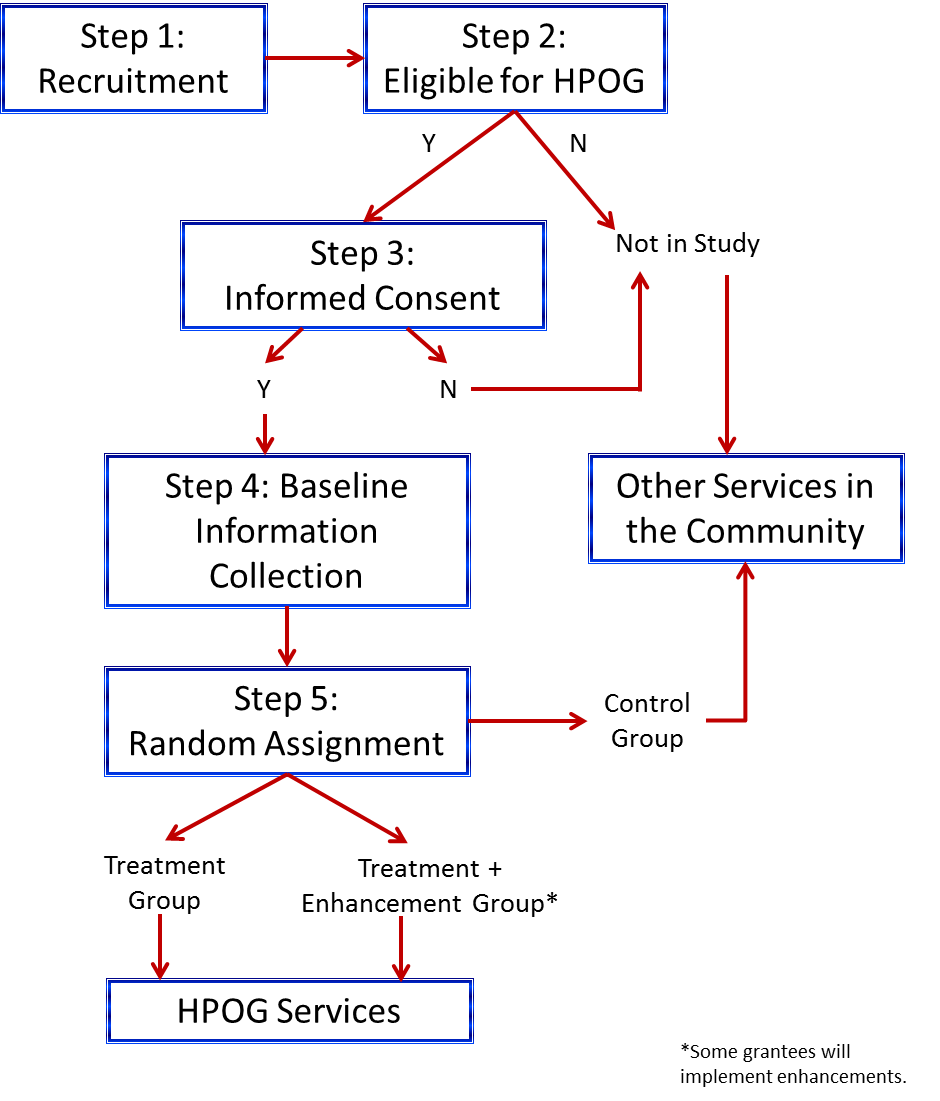

Those assigned to the treatment group are offered HPOG services. Those assigned to the control group are not offered HPOG services but can access other services in the community. Exhibit B-1 summarizes the general process described above.

Exhibit B-1: HPOG-Impact Study Participant Recruitment and Random Assignment Process

Sampling Plan for Study of Impacts on Child Outcomes

In order to assess the program impacts on children, we will administer a set of questions to respondents with children as part of the 36-month survey. The child module will be asked of all respondents who report at least one child in the household between the ages of 3 and 18 years who has resided with the respondent more than half time during the 12 months prior to the survey. Each household will be asked about a single focal child, regardless of how many children are eligible. The sampling plan calls for selecting approximately equal numbers of children from each of three age categories: preschool-age children aged 3 through 5 and not yet in kindergarten; children in kindergarten through grade 5; and children in grades 6 through 12. Based on information from the HPOG child rosters (clearance received August 2013), we estimate that at 36 months post random assignment, approximately two-thirds of the households in the sample will have one or more children in K – 5th grade, one-third will have one or more preschool-age children, and another one-third will have at least one child in grades 6 – 12. As a result of this distribution, we anticipate that in households with children in multiple age groups, we will sample children in the youngest and oldest groups at a higher rate than children in K – 5th grade. The procedure for selecting a focal child from each household will depend on the configuration of children from each age category present in the household. Specifically, there are seven possible household configurations of age groups in households with at least one child present:

1. Preschool child(ren) only

2. Child(ren) in K – 5th grade only

3. Child(ren) in 6th – 12th grades only

4. Preschool and K – 5th grade children

5. K – 5th grade and 6th – 12th grade children

6. Preschool and 6th – 12th grade children

7. Preschool-age, K – 5th grade, and 6th – 12th grade children

The sampling plan is as follows:

For household configurations 1, 2, and 3, only one age group will be sampled; if there are multiple children in that age group, one child will be selected at random.

For household configurations 4 and 5, a K – 5th grade child will be selected from 30 percent of households and a child from the other age category in the household (preschool-age or 6th – 12th grade) will be selected from 70 percent of households.

For household configuration 6, a preschool child will be selected from 50 percent of the households, and a child in 6th – 12th grade will be selected from 50 percent of the households.

For household configuration 7, a child in K – 5th grade will be selected from 20 percent of households, a preschool-age child will be selected from 40 percent of households, and a child in 6th – 12th will be selected from 40 percent of households.

Sampling weights will be used to account for the differential sampling ratios for some child age categories in some household configurations. By applying the sampling weights, the sample for estimating program impacts on children will represent the distribution of the seven household configurations among study households.
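The selection rules above can be sketched as follows. The age-group selection probabilities come directly from the sampling plan. Two parts are assumptions made for illustration: that a child is drawn at random within the selected age group for every configuration, and that the sampling weight is the inverse of the child's overall selection probability. The names `SELECTION_PROBS`, `configuration`, and `select_focal_child` are hypothetical.

```python
import random

# Probability of selecting each age group, by household configuration (1-7),
# as stated in the sampling plan.
SELECTION_PROBS = {
    1: {"preschool": 1.0},
    2: {"k5": 1.0},
    3: {"g6_12": 1.0},
    4: {"preschool": 0.7, "k5": 0.3},
    5: {"k5": 0.3, "g6_12": 0.7},
    6: {"preschool": 0.5, "g6_12": 0.5},
    7: {"preschool": 0.4, "k5": 0.2, "g6_12": 0.4},
}

# Which age groups are present determines the configuration number.
CONFIG_BY_GROUPS = {
    frozenset({"preschool"}): 1,
    frozenset({"k5"}): 2,
    frozenset({"g6_12"}): 3,
    frozenset({"preschool", "k5"}): 4,
    frozenset({"k5", "g6_12"}): 5,
    frozenset({"preschool", "g6_12"}): 6,
    frozenset({"preschool", "k5", "g6_12"}): 7,
}


def configuration(children):
    """Map a household (dict: age group -> list of child ids) to configuration 1-7."""
    present = frozenset(group for group, kids in children.items() if kids)
    return CONFIG_BY_GROUPS[present]


def select_focal_child(children, rng):
    """Return (child id, age group, weight) for one household."""
    probs = SELECTION_PROBS[configuration(children)]
    groups = list(probs)
    # Step 1: pick an age group with the configuration-specific probabilities.
    group = rng.choices(groups, weights=[probs[g] for g in groups], k=1)[0]
    # Step 2 (assumption): pick one child at random within that age group.
    child = rng.choice(children[group])
    # Assumed weight: inverse of the child's overall selection probability.
    selection_prob = probs[group] / len(children[group])
    return child, group, 1.0 / selection_prob


household = {"preschool": ["a"], "k5": ["b", "c"], "g6_12": ["d"]}
print(select_focal_child(household, random.Random(2014)))
```

In this sketch, a K – 5th grade child in a configuration 7 household with two such children would carry a weight of 1 / (0.2 / 2) = 10, reflecting the lower rate at which that age group is sampled.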

B.2.2 Unusual problems requiring specialized sampling procedures

There are no problems requiring specialized sampling procedures.

B.2.3 Estimation Procedures

Procedures for HPOG-NIE

Estimation procedures will be, for the most part, very simple. The screening questionnaire will collect data on a census basis, removing the need for survey weights. There will be one screening questionnaire per grantee or subgrantee. Twenty grantees will be purposively selected to participate in telephone interviews based on responses to the screening questionnaire and a review of available grantee-specific materials (grant applications, PPRs, annual goal projection reports, etc.). Grantees will be selected for telephone interviews if they meet one of the following criteria: 1) they appear to make a direct connection between performance measurement information and program changes; 2) they have large changes in program targets and subsequent program changes, although the reviewed materials do not identify these as due to performance measurement information; or 3) they reported in the completed screening questionnaire that performance measurement information was an important factor in making program changes.

Procedures for HPOG-Impact

For overall treatment impact estimation, the research team will use multivariate regression (specified as a multi-level model to account for the multi-site clustering). The team will include individual baseline covariates to improve the power to detect impacts. The research team will pre-select the covariates. The team will pool primary findings across sites and will prepare them in a manner appropriate for intention-to-treat (ITT) analysis, and may also prepare estimates of the effect of treatment on the treated (TOT). In general, analyses will use everyone who gives informed consent during the randomization period for HPOG-Impact. Nonresponse will not be an issue for analyses based on NDNH data. Although analyses based on Participant Follow-Up survey data will have to deal with nonresponse, including covariates in the regressions will reduce the risk of nonresponse bias as effectively as preparing nonresponse-adjusted weights using those same covariates. No sampling weights will be used for the HPOG-Impact analysis.

In addition to the straightforward experimental impact analysis (or the two- and three-arm trials), the research team will use additional analytic methods to attempt to determine which program components are more effective. The team plans to use innovative procedures that will exploit the fact that a subset of the grantees will be implementing a three-arm test. While the main impact analysis will be experimental, innovative analytic methods—capitalizing on individual- and site-level variability—will exploit the experimental design to estimate the impact of specific program components. As detailed in the project’s Evaluation Design Report, the research team will examine the extent to which varied methods can/do lead us to reach the same conclusion as the experimental analysis. This exercise has the potential to increase the confidence placed in non-experimental analyses in other areas too. All assumptions will be carefully spelled out and appropriate caveats will accompany findings in all reports.

B.2.4 Degree of Accuracy Required

The research team considers the implications of the sample size for two selected impact estimates. The first focal impact, quarterly earnings, relies on data from the National Directory of New Hires (NDNH) and therefore uses the full sample, including those the team cannot successfully reach for the 36-month Participant Follow-Up survey. With 7,260 individuals randomized in selected sites to HPOG services (either the basic HPOG program or the enhanced HPOG program) and 3,623 to the control group, estimates suggest that the study will be able to detect an average impact of HPOG participation of $141 in the most recent quarter’s earnings (see Exhibit B-2), or $120 when sample from the HPOG/PACE programs is included. In addition, the sample size will allow detection of the following earnings impacts when comparing earnings between HPOG participants receiving standard HPOG services and those receiving enhanced services, assuming the sample sizes reported in Exhibit B-2:3

Contrast between HPOG-enhanced program/peer support with HPOG basic program: $399;

Contrast between HPOG-enhanced program/emergency assistance with HPOG basic program: $404; and

Contrast between HPOG-enhanced program/non-cash incentives with HPOG basic program: $377.

These sample sizes are sufficient to yield policy-relevant findings regarding the enhancements, especially given that we expect to use both experimental results and natural variation in our analysis. With the current projection of individuals expected to be randomized in selected sites to the HPOG program or the enhanced HPOG program with peer support, the study will be able to detect an average impact on most recent quarter’s earnings of $399 per person using data from the three-arm tests alone. If natural variation on these same enhancement components can also be added from other sites, the MDE of the enhancement will decrease from what we report here. To respond to the concern about these MDEs relative to findings from the ITA demonstration, the relative effects estimated in that demonstration are somewhat greater than $301 (D’Amico, Salzman & Decker, 2004).

The experimental comparison between enhanced and standard HPOG participants will also contribute to bias reduction in the study’s larger analysis of natural variation in program components across sites. Refinements to the natural variation impact model (from Bloom et al., 2003) that move the natural variation-based impact estimate of the effect of an enhancement feature closer to the experimental estimate of that effect have been shown to reduce the bias of all natural variation-based estimates (Bell, 2013), even when sample sizes for the experimental estimates are not large enough to yield separate policy estimates. Hence, data from all three randomized enhancements will contribute to policy findings on other program components, irrespective of their own sample sizes.

To put these estimated impacts on quarterly earnings into perspective, consider that the relative effects of various approaches to training estimated for the U.S. Department of Labor’s Individual Training Account (ITA) Demonstration are somewhat greater than $301 (McConnell et al., 2006). For example, that evaluation found that providing intensive case management and educational counseling, relative to simply offering individuals a training voucher and the opportunity to choose a training program, produced earnings impacts of $328 per quarter during the first two quarters after randomization. This is on par with the minimum detectable effects (MDEs)4 for the peer support program enhancement to be tested in this study.

ACF selected these particular enhancements to test with the possibility of being able to detect their effects and also to learn more about what components and features of career pathways programs (which by definition include a number of different components and features) are more influential. ACF will be able to use the information gained to understand if programs that do include a given component or use an implementation practice produce better participant outcomes and are “worth” the added cost of doing so. Connecting treatment explicitly to costs will allow for a better understanding of the implication for policy and practice—i.e., are the incremental effects of a given enhancement worth the cost of adding it to the standard program?

Also note that this is a study of an existing grant program where the legislation mandated that grantees use a career pathway approach and grantees were offered choices in how they put together different programmatic components and features and how they implement their programs. This study will allow ACF to understand if this investment in sector-based career pathways programs (which we currently have very little evidence about) is effective overall, and if specific components and features are more influential (and improve outcomes over and above the "standard" treatment). The research team has some ability to try to encourage grantees to include a specific enhancement and to try to increase sample in order to better detect effects; the team is using that ability to increase power as much as possible so that these important questions can be answered.

Exhibit B-2. Minimum Detectable Effects for Most Recent Quarter’s Earnings and Credential Receipt

| Treatment Type, Experimental Group Sizes (# programs) | Most Recent Quarter’s Earnings (MDEs) | Credential Attainment (MDEs) |
|---|---|---|
| MDE for Standard HPOG Treatment | | |
| 5,945 [4,756] Standard HPOG Treatment group vs. 3,623 [2,898] Control group (20 grantees) | $141 | 2.1% |
| 7,545 [6,036] Standard HPOG + PACE Treatment group vs. 5,223 [4,178] Control group (24 grantees) | $120 | 1.8% |
| MDE for Enhanced HPOG Treatment | | |
| 432 [345] Enhanced HPOG Treatment group assigned to Peer Support vs. 801 [640] Standard HPOG Treatment group (3 programs) | $399 | 5.9% |
| 422 [337] Enhanced HPOG Treatment group assigned to Emergency Assistance vs. 774 [619] Standard HPOG Treatment group (11 programs) | $404 | 6.0% |
| 461 [368] Enhanced HPOG Treatment group assigned to Non-Cash Incentives vs. 982 [785] Standard HPOG Treatment group (5 programs) | $377 | 5.6% |

Note: MDEs are based on 80 percent power with a 5 percent significance level in a one-tailed test, assuming estimation in a model in which baseline variables explain 20 percent of the variance in the outcome. MDEs for earnings are based on standard deviations using data for adult women from the National JTPA Study.5 The number of grantees and corresponding sample sizes are current as of August 2014. Sample sizes outside brackets are those associated with administrative data (earnings outcome); sample sizes in brackets are those associated with survey data (credential outcome) and are expected to be 80 percent of the administrative-data totals.
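As a rough check on the earnings MDEs, the sketch below applies the conventional minimum detectable effect formula for a two-group comparison under the assumptions stated in the note (80 percent power, 5 percent one-tailed significance, baseline covariates explaining 20 percent of outcome variance). The source does not state the formula or the earnings standard deviation it used, so both are assumptions here. `ASSUMED_EARNINGS_SD` is a hypothetical value chosen so that the output lands close to the reported figures; it is not taken from the National JTPA Study.

```python
from math import sqrt
from statistics import NormalDist


def mde(n_treat, n_control, sd, r_squared=0.20, alpha=0.05, power=0.80):
    """Conventional MDE for a one-tailed test comparing two group means.

    MDE = (z_{1-alpha} + z_{power}) * sd * sqrt((1 - R^2) * (1/nT + 1/nC))
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha) + z.inv_cdf(power)
    return multiplier * sd * sqrt((1 - r_squared) * (1 / n_treat + 1 / n_control))


# Hypothetical quarterly earnings standard deviation (dollars); an assumption
# back-derived for illustration, not a figure from the source.
ASSUMED_EARNINGS_SD = 3005.0

# Administrative-data sample sizes from Exhibit B-2: (label, first group, comparison group).
CONTRASTS = [
    ("Standard HPOG vs. control", 5945, 3623),
    ("Standard HPOG + PACE vs. control", 7545, 5223),
    ("Peer support vs. standard", 432, 801),
    ("Emergency assistance vs. standard", 422, 774),
    ("Non-cash incentives vs. standard", 461, 982),
]

for label, n_first, n_second in CONTRASTS:
    print(f"{label}: ${mde(n_first, n_second, ASSUMED_EARNINGS_SD):.0f}")
```

With this assumed standard deviation, each computed value falls within about a dollar of the corresponding earnings MDE in Exhibit B-2, which suggests the exhibit is consistent with a formula of this form. The sketch also shows why the enhancement contrasts have much larger MDEs: the term 1/nT + 1/nC grows quickly as group sizes fall.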

The far right-hand column of Exhibit B-2 shows estimates for the MDEs on credential receipt. The data source for this will be the 36-Month Participant Follow-Up survey and therefore sample sizes are 20 percent smaller than for quarterly earnings (assuming an 80 percent survey response rate). With these sample sizes, estimates suggest that the study will be able to detect an average impact of HPOG participation of 1.9 percentage points in the earning of credentials, from an assumed base of 30 percent. Alternatively expressed, the power will be adequate to detect a boost in the percentage of the population who earn credentials from 30.0 to 31.9 percent (which corresponds to a 6 percent effect size). In addition, the sample size will permit detecting the following impacts on credential receipt when comparing HPOG participants receiving standard HPOG services with those receiving enhanced services:

Contrast between HPOG-enhanced program/peer support with HPOG basic program: 5.9 percentage points;

Contrast between HPOG-enhanced program/emergency assistance with HPOG basic program: 6.0 percentage points; and

Contrast between HPOG-enhanced program/non-cash incentives with HPOG basic program: 5.6 percentage points.

To put these estimated impacts on credentials into perspective, consider the effects of Job Corps estimated for the U.S. Department of Labor’s national study. That evaluation found that providing comprehensive and consistent services produced large effects on receiving a vocational certificate: 38 percent of the treatment group received a vocational certificate compared to 15 percent of the control group, an estimated impact of 23 percentage points (a more than twofold increase in the receipt of credentials) (Shochet et al., 2008). This is much greater than even the largest MDE among the program enhancements to be tested in this study, and so the research team is comfortable with the level of power in this study to detect relative increases in this outcome of interest.

B.2.5 Who Will Collect the Information and How It Will Be Done

Grantee Management

HPOG-NIE

The research team will collect the data for HPOG-NIE from management at grantees.

As soon as OMB clearance is obtained, the team will send grantee managers an email introducing the purpose of the additional data collection activities (see Appendix E). A second email will then be sent that contains the link to the screening questionnaire (see Appendix E). Following the screener, the team will follow up with staff at selected grantees to conduct semi-structured individual telephone calls that provide more in-depth information about their use of performance measurement information.

Screening Questionnaire: The survey will target grantee management.

Semi-Structured Discussion Guide: The sample of respondents for the semi-structured interviews will be grantee management who regularly use the HPOG Performance Reporting System and are familiar with the Performance Progress Report (PPR).

As stated above, the screening questionnaire will be hosted on the Internet and accessed via a live secure web-link. This approach is particularly well-suited to the needs of these surveys in that respondents can easily stop and start if they are interrupted and review and/or modify responses. The evaluation staff will send those grantees selected to participate in a semi-structured discussion interview an email asking for their participation (see Appendix F). Staff will then telephone the grantee to schedule a convenient time for the interview. Evaluation staff will conduct all interviews by telephone.

HPOG Participant Survey

HPOG-Impact

The evaluation staff will conduct 36-month post random assignment follow up activities with treatment and control group participants:

Send HPOG participants periodic contact update requests. These requests for contact information updates (Appendix H) provide HPOG participants the opportunity to update their contact information and provide alternative contact information. Participants can send back the updated information in an enclosed self-addressed stamped envelope. Participants will be offered a $2 token of appreciation with the contact information update request. The research team will send contact update letters 4, 8, and 12 months following the 15-month survey, pending OMB approval.

Conduct a 36-month Participant Follow-Up survey. The HPOG data collection team will contact study participants with an advance letter that includes $5 (see Appendix I) reminding them that they will soon receive a call from an HPOG interviewer who will want to interview them over the telephone. The letter will remind sample members that their participation in the survey is voluntary and that they will receive a $40 token of appreciation upon completion of the interview. Centralized interviewers using computer-assisted telephone interviewing (CATI) software will conduct the follow-up survey. Interviewers will be trained in the study protocols and their performance will be regularly monitored. The interviewers will first try to reach the sample member by calling the specified contact numbers. For sample members who cannot be reached at the original phone number, interviewers will attempt to locate new telephone numbers by calling secondary contacts and doing on-line directory searches. Once the centralized interviewers have exhausted all leads, cases will be transferred to field staff to find the sample member in person. When field staff succeed in finding a sample member and convince him or her to answer the survey, they will administer the survey using computer-assisted personal interviewing (CAPI) software.

B.2.6 Procedures with Special Populations

All study materials designed for HPOG participants will be available in English and Spanish. Interviewers will be available to conduct the Participant Follow-Up survey interview in either language. Persons who speak neither English nor Spanish, deaf persons, and persons on extended overseas assignment or travel will be ineligible for follow-up, but we will collect information on reasons for ineligibility. Persons who are incarcerated or institutionalized will be eligible for follow-up only if the institution authorizes contact with the individual.

B.3 Methods to Maximize Response Rates and Deal with Non-response

B.3.1 Grantee Management

The in-house “survey support desk” will carefully monitor screening questionnaire response rates and data quality on an ongoing basis. The support desk will send an email reminder to non-respondents who have not opened the screening questionnaire within the first week, and again after two weeks (Appendix E). A final reminder will be sent by email three days later. The screening questionnaire website will display the phone number and email address of the support desk. The research team will contact selected grantee management by telephone to schedule the semi-structured interviews. They will contact grantee management weekly until the interview is scheduled or the respondent refuses to participate.

B.3.2 HPOG Participant Survey

For the 36-month follow-up, the following methods will be used to maximize response:

Participant contact updates and locating;

Tokens of appreciation; and

Sample control during the data collection period.

Participant Contact Update and Locating

The HPOG team has developed a comprehensive participant tracking system to maximize response to the 15-month survey. The same strategy will be followed for the 36-month survey. This multi-stage locating strategy blends active locating efforts (which involve direct participant contact) with passive locating efforts (which rely on various consumer database searches). At each point of contact with a participant (through contact update letters and at the end of the survey), the research team will collect updated name, address, telephone and email information. In addition, the team will use information collected at baseline for contact data for up to three people who did not live with the participant, but will likely know how to reach him or her. Interviewers only use secondary contact data if the primary contact information proves to be invalid—for example, if they encounter a disconnected telephone number or a returned letter marked as undeliverable. Appendix H shows a copy of the contact update letter. The research team proposes sending contact update letters at 4, 8, and 12 months after the 15-month survey data collection (pending OMB approval) and will offer a $2 token of appreciation with the contact update request.

In addition to direct contact with participants, the research team will conduct several database searches to obtain additional contact information. Passive contact update resources are comparatively inexpensive and generally available, although some sources require special arrangements for access.
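The proposed timing of the contact update letters (4, 8, and 12 months after the 15-month survey data collection) can be illustrated with a short date calculation. This is a sketch under stated assumptions: the helper names and the example survey date are hypothetical, and clamping to the last day of a shorter month is a convention chosen here, not one stated in this document.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Add whole calendar months, clamping the day to the target month's length."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def contact_update_mailings(survey_15_month_date: date) -> list[date]:
    """Return mailing dates 4, 8, and 12 months after the 15-month survey."""
    return [add_months(survey_15_month_date, offset) for offset in (4, 8, 12)]


# Hypothetical 15-month survey date, used only to show the spacing.
mailings = contact_update_mailings(date(2015, 10, 31))
for mailed_on in mailings:
    print(mailed_on.isoformat())
```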

Tokens of Appreciation

Offering appropriate monetary gifts to study participants in appreciation for their time can help ensure a high response rate, which is necessary to ensure unbiased impact estimates. Study participants will be provided $5 with the survey advance letter and $40 after completing the 36-month follow-up survey. As noted above, in addition to the survey, at three time points between the 15-month and 36-month follow-up surveys (4, 8, and 12 months following 15-month data collection, pending OMB approval) the participants will receive a contact update letter with a contact update form that lists the contact information they had previously provided. The letter will ask them to update this contact information by calling a toll-free number or returning the contact update form in the enclosed postage-free business reply envelope. Study participants will receive $2 with the contact update request, in appreciation for their time.
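As a worked illustration of the amounts above, the total a participant could receive between the 15-month and 36-month surveys can be tallied as follows. This is a sketch, assuming the participant receives the advance letter and all three contact update mailings; the constant and function names are hypothetical.

```python
# Dollar amounts stated in the text above.
ADVANCE_LETTER_TOKEN = 5      # sent with the survey advance letter
SURVEY_COMPLETION_TOKEN = 40  # provided after completing the 36-month survey
CONTACT_UPDATE_TOKEN = 2      # sent with each contact update request
CONTACT_UPDATE_MAILINGS = 3   # at 4, 8, and 12 months after the 15-month survey


def total_tokens(completed_survey: bool) -> int:
    """Total dollars received, assuming every mailing reaches the participant."""
    total = ADVANCE_LETTER_TOKEN + CONTACT_UPDATE_TOKEN * CONTACT_UPDATE_MAILINGS
    if completed_survey:
        total += SURVEY_COMPLETION_TOKEN
    return total


print(total_tokens(completed_survey=True))   # 5 + 3*2 + 40 = 51
print(total_tokens(completed_survey=False))  # 5 + 3*2 = 11
```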

Sample Control during the Data Collection Period

During the data collection period, the research team will minimize non-response levels and the risk of non-response bias in the following ways:

Using trained interviewers who are skilled at working with low-income adults and skilled in maintaining rapport with respondents, to minimize the number of break-offs and risk of non-response bias.

Using a contact update letter and contact update form to keep the sample members engaged in the study and to enable the research team to locate them for the follow-up data collection activities. (See Appendix H for a copy of the contact update letter.)

Using an advance letter that clearly conveys to study participants the purpose of the survey, the tokens of appreciation, and reassurances about privacy, so they will perceive that cooperating is worthwhile. (See Appendix I for a copy of the advance letter.)

Providing a toll-free study hotline number, included in all communications to participants, that allows them to ask questions about the survey, update their contact information, and indicate a preferred time to be called for the survey.

Taking additional contact update and locating steps, as needed, when the research team does not find sample members at the phone numbers or addresses previously collected.

Using an automated sample management system that will permit interactive sample management and electronic searches of historical contact update and locating data.

B.4 Tests of Procedures

In designing the 36-month follow-up survey, the research team included items used successfully in previous studies or in national surveys. Consequently, many of the survey questions have been thoroughly tested on large samples.

B.4.1 Grantee Management Screening Questionnaire and Semi-Structured Discussion Guide

The screening questionnaire will be pretested with two respondents from grantees serving TANF recipients and other low-income individuals. Experienced interviewers will call each respondent after he or she completes the screening questionnaire to discuss the clarity and flow of survey items, ease of completion, and time requirements. The semi-structured discussion guide will be pretested with the same grantees, who will again be asked to discuss clarity, ease of completion, and time requirements. After pretesting, we will revise the instruments based on the feedback and trim them, as needed, to stay within the proposed administration time. Any edits based on pretesting will be submitted to OMB.

B.4.2 36-Month Participant Follow-Up Survey

To ensure that the length of the instrument is within the burden estimate, we pretested and edited the instruments to keep burden to a minimum. During internal pretesting, all instruments were closely examined and questions deemed unnecessary were eliminated. External pretesting was conducted with four respondents, and the length of the instrument was found to be consistent with the burden estimate. Some instrument revisions were made to improve question clarity and response categories. Edits made as a result of the pretest have been incorporated in the instruments attached in Appendices B and C.

B.5 Individuals Consulted on Statistical Aspects of the Design

The individuals listed in Exhibit B-4 below contributed to the design of the evaluation.

Exhibit B-4: Individuals Consulted

| Name | Role in Study |
| Dr. Maria Aristigueta | Implementation, Systems and Outcome Evaluation of the Health Profession Opportunity Grants to Serve TANF Recipients and Other Low-Income Individuals (HPOG-ISO) Technical Working Group member |
| Dr. Stephen Bell | Impact Study Project Quality Advisor |
| Ms. Maureen Conway | HPOG-ISO Technical Working Group member |
| Dr. David Fein | Key staff on PACE evaluation |
| Dr. Olivia Golden | HPOG-ISO Technical Working Group member |
| Dr. Larry Hedges | Impact Study Technical Working Group member |
| Mr. Harry Hatry | HPOG-ISO Study Team Member |
| Dr. Carolyn Heinrich | NIE and Impact Study Technical Working Group member |
| Dr. John Holahan | HPOG-ISO Technical Working Group member |
| Dr. Kevin Hollenbeck | HPOG-ISO Technical Working Group member |
| Dr. Philip Hong | External expert (measures of hope) |
| Dr. Chris Hulleman | HPOG-ISO Technical Working Group member |
| Mr. David Judkins | Key staff on NIE, Impact Study, and PACE |
| Dr. Christine Kovner | HPOG-ISO Technical Working Group member |
| Dr. Robert Lerman | HPOG-ISO Technical Working Group member |
| Dr. Karen Magnuson | External expert (child outcomes) |
| Ms. Karin Martinson | Key staff on PACE evaluation |
| Dr. Rob Olsen | Impact Study Team member |
| Dr. Laura R. Peck | Impact Study, Co-Principal Investigator |
| Dr. James Riccio | HPOG-ISO Technical Working Group member |
| Dr. Howard Rolston | Key staff on PACE evaluation |
| Dr. Jeff Smith | Impact Study Technical Working Group member |
| Dr. Alan Werner | NIE Co-Principal Investigator; Impact Study Co-Principal Investigator |
| Dr. Joshua Wiener | HPOG-ISO Technical Working Group member |

Inquiries regarding the statistical aspects of the study’s planned analysis should be directed to:

Ms. Gretchen Locke, Project Director, HPOG-Impact

Ms. Robin Koralek, Project Director, HPOG-NIE

Dr. Laura Peck, Principal Investigator, HPOG-Impact

Dr. Alan Werner, Principal Investigator, HPOG-NIE

Hilary Forster, Federal Contracting Officer’s Representative (COR), HPOG-Impact & HPOG-NIE, Administration for Children and Families, HHS

References

Cantor, D., O'Hare, B. C., & O'Connor, K. S. (2008). The use of monetary incentives to reduce nonresponse in random digit dial telephone surveys. Advances in telephone survey methodology, 471-498. John Wiley and Sons.

Church, A. H. (1993). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57(1), 62-79.

Edwards, Phil, Ian Roberts, Mike Clarke, Carolyn DiGuiseppi, Sarah Pratap, Reinhard Wentz, and Irene Kwan. 2002. “Increasing Response Rates to Postal Questionnaires: Systematic Review.” British Medical Journal 324:1883-85.

Little, T.D., Jorgensen, M.S., Lang, K.M., and Moore, W. (2014). On the joys of missing data. Journal of Pediatric Psychology, 39(2), 151-162.

McConnell, S., Stuart, E., Fortson, K., Decker, P., Perez-Johnson, I., Harris, B., & Salzman, J. 2006. Managing Customers’ Training Choices: Findings from the Individual Training Account Experiment. Washington, DC: Mathematica Policy Research.

Nisar, H., Klerman, J., & Juras, R. 2012. Estimation of Intra Class Correlation in Job Training Programs. Unpublished draft manuscript. Bethesda, MD: Abt Associates Inc.

Schochet, P. Z., Burghardt, J., & McConnell, S. 2008. Does Job Corps Work? Impact Findings from the National Job Corps Study. The American Economic Review 98(5), 1864–1886.

Singer, Eleanor, Robert M. Groves, and Amy D. Corning. 1999. “Differential Incentives: Beliefs about Practices, Perceptions of Equity, and Effects on Survey Participation.” Public Opinion Quarterly 63:251–60.

Singer, E., Van Hoewyk, J., & Maher, M. P. (2000). Experiments with incentives in telephone surveys. Public Opinion Quarterly, 64(2), 171-188.

Yammarino, F. J., Skinner, S. J., & Childers, T. L. (1991). Understanding mail survey response behavior: A meta-analysis. Public Opinion Quarterly, 55(4), 613-639.

1 From the project inception in 2007 through October 2014 the project was called Innovative Strategies for Increasing Self-Sufficiency.

2 Three PACE programs are also HPOG programs (that is, HPOG funding is used for all participants). A fourth PACE program has some participants funded by HPOG and thus is included in the HPOG calculations.

3 Note that when examining the effect of enhancements we are looking at the effect of something additive (not at competing, stand-alone treatments). Therefore, the enhanced version will need to be relatively more effective than the basic version to permit us to detect the estimated effect of that enhancement.

4 Minimum detectable effects are the smallest impacts that the experiment has a strong chance of detecting if such impacts are actually caused by HPOG.

5 The standard deviations for the female population in the P/PV Sectoral Employment Study (D’Amico, Salzman and Decker, 2004) and the Welfare-to-Work Voucher Evaluation were higher and lower, respectively; thus the figure from the National JTPA study was around the average of those two studies. The binary outcome is assumed to be 70%, which is what the employment rates were in year one in both the JTPA and NEWWS studies.

