CSE SS Part A for SAMHSA_031116

Community Support Evaluation

OMB: 0930-0363

Supporting Statement

Justification

The Substance Abuse and Mental Health Services Administration’s (SAMHSA’s) Community Support Branch of the Center for Mental Health Services (CMHS) is requesting clearance for a new data collection for the Community Support Evaluation (CSE). Individuals with serious mental illness (SMI), substance use disorders, and co-occurring mental and substance use disorders often face challenges in identifying and receiving appropriate care and supports for recovery, as well as in obtaining competitive employment. In response, SAMHSA funded the Behavioral Health Treatment Court Collaborative (BHTCC) and Transforming Lives through Supported Employment (SE) Programs. The programs are rooted in the belief that recovery is a holistic process bolstered by trauma-informed care and individual- and community-level support.

The CSE consists of two studies, the BHTCC and SE Studies. In total, 15 data collection instruments and activities compose the CSE, including web-based surveys, key informant interviews (KIIs), existing data abstractions, and focus groups.

Biannual Program Inventory (BPI)—BHTCC Version

System-Level Assessment (SLA) KII—Court Personnel Version

SLA KII—Service Provider Version

SLA KII—Consumer Version

Concept Mapping—Brainstorming Activity

Concept Mapping—Sorting/Rating Activity

18-Month Client-Level Abstraction Tool (18-Month Tool)

Comparison Study Abstraction Tool—Baseline Version

Comparison Study Abstraction Tool—6-Month Version

Comparison Study Abstraction Tool—18-Month Version

Biannual Program Inventory—SE Version

Scalability/Sustainability Assessment (SSA) KII—Administrator Version

SSA KII—Service Provider Version

Employment Needs Focus Group (ENFG)—Employer Version

ENFG—Employment Specialist Version

In 1977, the National Institute of Mental Health (NIMH) created community support programs (CSPs) “to assist states and communities in improving opportunities and services for adults with seriously disabling mental illnesses” (Department of Health and Human Services [HHS], 1993, p. i). In the CSP model, community support systems provide a comprehensive system of care, including “not only mental health services but an array of rehabilitation and social services, as well: client identification and outreach; crisis response services; housing; income support and benefits; health care; rehabilitation, vocational training and employment assistance; alcohol and/or other drug abuse treatment; consumer, family, and peer support; and protection and advocacy.”

The BHTCC and SE programs embrace recovery supports and put them into practice by adhering to the CSP model and its principles. The BHTCC program aims to bridge the gap in service provision created by the traditionally separate criminal justice and mental health service systems. The program offers the opportunity to build collaborations among criminal courts, specialized treatment courts, and alternative programs. These collaborations coordinate the screening, referral, and treatment of adults with behavioral health conditions while they are in the justice system. The SE Program seeks to increase self-sufficiency among individuals with behavioral health conditions by enhancing the capacity of States and local communities to help them acquire and maintain employment. SE program grantees are accomplishing this by implementing evidence-based supported employment approaches, which have been shown to help individuals achieve and sustain recovery. In 2014, SAMHSA awarded 17 BHTCC program grants to courts and 7 SE grants to States. The CSE will provide a comprehensive understanding of the following:

Collaborations developed as a result of the programs

Effect of collaborations on key outcomes

CSP factors associated with consumer outcomes

Recovery supports associated with consumer outcomes

Approval is being requested for data collection associated with 15 instruments and activities: 10 to be conducted with BHTCC program grantees and 5 to be conducted with SE program grantees. (A description of each data collection activity can be found in Section A.2.b.)

Circumstances of Information Collection

Background

The CSP model is a central tenet of the BHTCC and SE Programs. The model acknowledges that receiving behavioral health services and supports is paramount to achieving an optimal quality of life for individuals with SMI or co-occurring disorders living in the community. CSPs use systems of care to help adults with these behavioral health conditions recover, live independently and productively in the community, and avoid inappropriate use of inpatient services. The CSP philosophy states that services should be consumer-centered/consumer-empowered, culturally competent, designed to meet special needs, community-based, flexible, coordinated, accountable, and strengths-based (Pennsylvania Department of Public Welfare, 2013). Further, CSPs focus on self-directed services, coordinated community approaches and collaborations that promote recovery, and meaningful involvement of individuals in recovery. Ultimately, CSPs are vehicles for transforming community-, local-, and/or State-level mental health and substance use systems through enhanced coordination of service delivery across systems.

For some individuals, SMI or co-occurring disorders are not identified until they come into contact with the criminal justice system. A successful transition back into the community depends on recovery, yet resources for treatment in the traditional criminal justice system are limited. The BHTCC program aims to prevent and interrupt the cycle of offending and recidivism by establishing collaborations among local courts and diverting these individuals to appropriate treatment and services. BHTCCs are rooted in the history of “problem-solving courts.” These courts began in the 1990s to address offender needs that traditional courts could not (Bureau of Justice Assistance [BJA], n.d.-a). Until that time, the criminal justice system treated most individuals with a diagnosed mental illness the same as those without one. Ideally, these courts use their legal authority to “forge new responses to chronic social, human, and legal problems” (Berman & Feinblatt, 2001). Problem-solving courts were initially limited to drug courts. They have since expanded to include mental health/behavioral health, community, domestic violence, gambling, truancy, gun, and homeless courts (National Association of Drug Court Professionals, n.d.). Behavioral health courts arose in response to the overrepresentation of people with mental illness in the criminal justice system. An estimated 15–20% of the correctional population has a serious mental illness, a rate substantially higher than that of the general population (Lurigio & Snowden, 2009). The current BHTCC program builds on the first cohort of BHTCC grantees, funded between 2011 and 2014. It includes a focus on veteran populations and requires each collaborative to involve municipal courts.

This recent approach combines previous and current SAMHSA-funded criminal justice–treatment linkage programs with infrastructure planning and development activities. Together, these create new court and community networks that transform the behavioral health system at the community level.

Further, research has shown that SE helps individuals achieve and sustain recovery. SE allows individuals with disabilities, including those with behavioral health conditions, to function to the fullest extent possible in integrated and competitive work settings alongside people who do not have disabilities. Such integrated settings give individuals with disabilities opportunities to live and work in the community and to receive health, social, and behavioral health services there. The goal of SAMHSA’s SE Program is to support the development of statewide infrastructure to provide and sustain evidence-based SE programming for adults with behavioral health conditions. Through the program, State mental health authorities create and begin implementing strategic sustainability plans, which include components addressing workforce training, policy change, and sustainable funding. Grantees are also tasked with establishing two community-based SE pilot programs. They must also create a training program for professionals who help individuals with SMI, substance use disorders, and co-occurring disorders find competitive jobs. In addition, grantees must create a plan to scale up the SE program statewide. The plan includes developing supportive policy, training a statewide SE workforce, establishing a Supported Employment Coordinating Committee (SECC), and developing a sustainable funding plan to continue services after the grant program ends. The expected outcome of the program is that States will have the infrastructure needed to maintain and expand evidence-based SE programs statewide. States should also increase the number of individuals with SMI, substance use disorders, or co-occurring disorders who obtain and retain competitive employment.

The Need for Evaluation

In 2014, SAMHSA issued a request for task order proposals (RFTP) for the CSE with the following objectives:

Conduct a cross-site process and intermediate outcome evaluation and disseminate findings for the BHTCC program and for the SE program

Provide technical assistance (TA) and support for BHTCC and SE grantees to effectively meet the data collection requirements of the cross-site evaluation and the local evaluation

Coordinate data collection and analyze, aggregate, and synthesize the data

Disseminate findings

The purpose of the CSE is to (1) describe and assess BHTCC and SE grantee activities and procedures, including the intermediate or direct effects of the programs on participants; (2) document the application and sanctioned adaptations of BHTCC programs in the justice system and of the SE Program; and (3) design and implement plans to disseminate knowledge about how to replicate effective projects in other States, territories, tribal nations, and communities. The legal basis and authorizing law for conducting the CSE can be found in Section 290aa of the Public Health Service Act (42 U.S.C. 241).

A government contractor will coordinate data collection for the evaluation and provide support for its local-level implementation. Each grantee is required to participate in the CSE. In this partnership, the contractor:

- provides TA on data collection and research design
- collects data directly
- receives data from grantee data collection efforts
- monitors data quality
- provides feedback to grantees

The CSE comprises separate BHTCC and SE Studies, each consisting of system- and client-level components. The BHTCC Study also includes a quasi-experimental comparison study. The mixed-method design of the CSE reflects SAMHSA’s goal of conducting a process and intermediate outcomes evaluation of the two programs and disseminating its findings. Findings will inform current grantees, policymakers, and the field about ways to transform the behavioral health system in three respects: cultivating resiliency and recovery; actively collaborating with and engaging individuals in recovery from serious mental, substance use, and co-occurring disorders; and improving service delivery for these individuals.

Clearance Request

SAMHSA is requesting approval for a new effort entitled Community Support Evaluation. OMB clearance is requested for three years of data collection. The CSE is a multicomponent evaluation of two SAMHSA programs, the BHTCC and SE Programs, that promote recovery for individuals with mental, substance use, and co-occurring disorders. The CSE will allow SAMHSA to examine the degree to which (1) participants’ lives are improved as a result of the programs; (2) engagement/participation is enhanced among consumers, people in recovery, and their families; and (3) the programs are successfully implemented. Findings from the CSE will be used to inform policymakers and the field on critical aspects of future CSPs.

Purpose and Use of Information Collected

CSE Overview

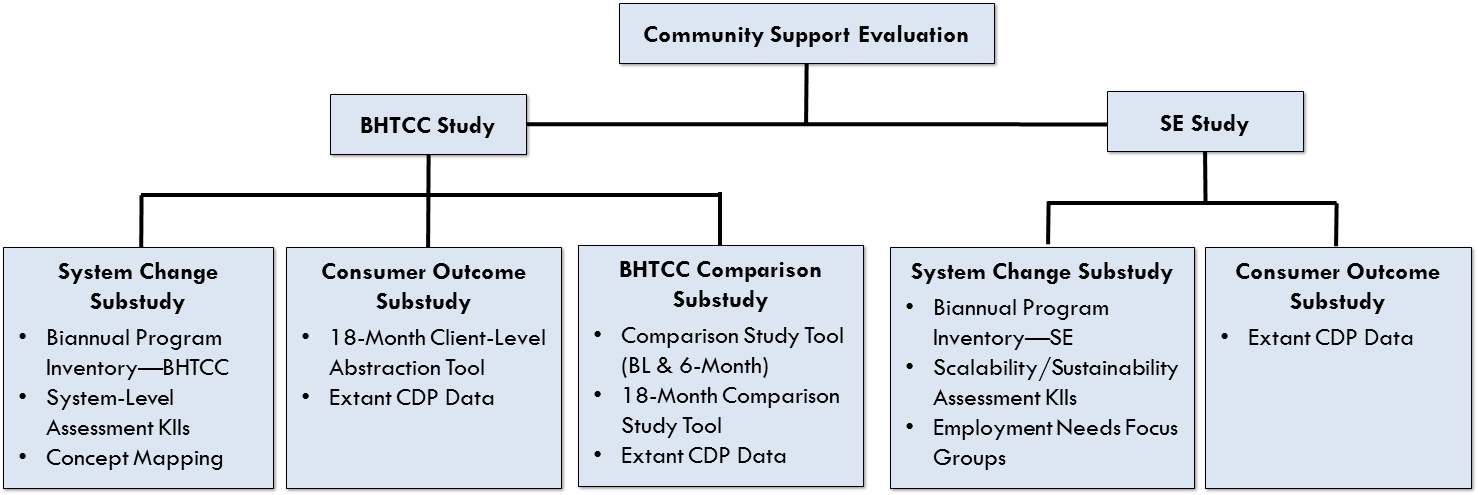

The CSE comprises separate BHTCC and SE Studies and uses a mixed-method design to assess the process and intermediate outcomes of the two programs. The programs view collaboration and partnerships among key stakeholders (including consumers, peers, and family members) as essential. The evaluation therefore incorporates a range of program participants and stakeholders, either directly (as respondents/participants) or indirectly (via expert panel input on the evaluation design). A participatory evaluation framework is essential to meeting the needs and goals of the CSE. Specifically, it supports understanding (1) the extent to which program implementation and consumer outcomes, partnerships and collaboration, and engagement of individuals in recovery from mental and substance use disorders are achieved and (2) how the agencies or grantees contributed to the success of the program. Exhibit 1 depicts an overview of the CSE studies, substudies, and data sources. Additional details are provided in Section A.2.b.

Exhibit 1. CSE Overview

BHTCC Study

The BHTCC Study consists of three substudies: the system change substudy (SCS), the consumer outcome substudy (COS), and the comparison substudy (CS). Data collection activities will assess:

- participant characteristics
- key behavioral, functional, and recidivism outcomes
- the system-level capacity, change, and characteristics of BHTCCs

Exhibit 2 contains a matrix of the primary BHTCC Study questions and associated data sources. BHTCC Study components are described below, and detailed descriptions of data collection activities are provided in Exhibit 5.

Exhibit 2. BHTCC Study Questions and Data Sources

| BHTCC Study Question | BPI–BHTCC | SLA KIIs | Concept Mapping | 18-Month Tool | Comparison Study Tools | Extant Client-Level Data |
|---|---|---|---|---|---|---|
| Does the collaborative model enhance access to treatment and recovery support for persons with mental illness, substance use disorders, and co-occurring disorders? | X | X | X | | | |
| Are different collaborative configurations (e.g., those with or without municipal courts) associated with different service delivery and client outcomes? | X | | | X | X | X |
| What are the advantages and disadvantages of a collaborative partnership program model versus a single problem-solving court model (e.g., drug courts)? | | X | X | | | |
| How do the intra- and interagency characteristics of the collaboration impact client outcomes? | | X | X | X | | X |

BHTCC System Change Substudy

The BHTCC SCS combines qualitative and quantitative data to understand program implementation processes and associated system-level outcomes. Findings will describe how key stakeholders collaborate and use the principles of CSPs to expand service capacity, improve access to services, and enhance infrastructure. The substudy focuses particularly on the following:

- management structure (how each community is collaborating with court staff to expand services or better serve participants)
- the process for recruiting, screening, and retaining participants
- the practices used to ensure treatment adherence and criminal justice compliance
- the involvement of consumers in all phases of program planning and implementation

Grantees will be asked to report on the trauma-informed programs and evidence-based practices being implemented and on infrastructure development over time. This reporting will show how these activities evolve over the course of the program. Specific objectives to be addressed include the following:

Describe the system models, including management and staffing, for the BHTCC programs

Determine the impact of interagency collaboration on service planning, delivery, and coordination

Document the processes for participant recruitment, intake, and retention

Define how consumer-driven practices are incorporated into the BHTCC programs

Data collection activities include the BPI–BHTCC Version, SLA KIIs (Court Personnel, Service Provider, and Consumer Versions), and concept mapping.

Concept Mapping

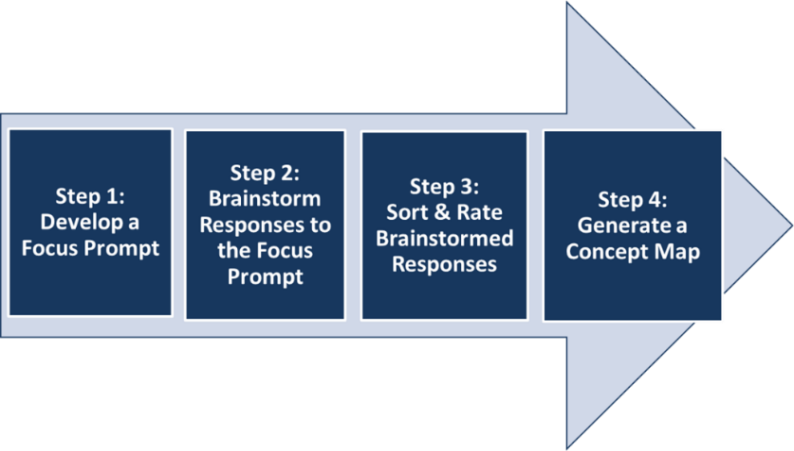

Concept mapping is a recognized tool that supports the interaction among evaluation practice, organizational learning, and community-level change. This mixed-method, participatory technique involves brainstorming, sorting, and rating ideas. It incorporates two CSP principles: consumer-centered and consumer-empowered services. In total, four concept mapping exercises will be conducted as part of the SLA. The exercises have two purposes:

1. To understand, with involvement from key stakeholders, which dimensions of programming are considered most crucial for program implementation
2. To identify a roadmap for improving services

Concept mapping will integrate the perspectives of four stakeholder types: BHTCC peers, consumers, family members of consumers, and court personnel. Some of these stakeholders might otherwise lack a voice in, or a way to advocate for, what works well in these systems. The process gives them a voice, as well as equal footing with the evaluators and each other, in the collection, analysis, and interpretation of data. Participants will engage in brainstorming and sorting/rating activities at the local level as part of the process (see Exhibit 3). Information will be entered into Concept Systems software for analysis and map creation (see Section A.16.c, Data Analysis Plan).

Exhibit 3. Steps in the Concept Mapping Process

BHTCC Consumer Outcome Substudy

The BHTCC COS makes maximum use of client-level data from existing performance monitoring requirements to determine BHTCC participant outcomes, including functional, clinical, and program-specific outcomes. The CDP provides outcomes data on program participants. In addition, data on these participants will be abstracted at 18 months to allow assessment of long-term recidivism outcomes (rearrests, recommitments, and revocations). Specific objectives to be addressed include the following:

Document participants’ experiences and satisfaction with service delivery and program participation

Assess the short-term programmatic and behavioral health outcomes for BHTCC participants

Document the participants’ experiences with peer-based supports

Determine the short- and long-term criminal justice (recidivism) outcomes for BHTCC participants

Data collection will occur via the 18-Month Tool.

BHTCC Comparison Substudy

As an enhanced component of the COS, the comparison substudy involves compiling and analyzing data on a matched sample of up to 260 offenders not participating in the BHTCC program (130 from each of the two comparison sites). The aim is to understand the impact of BHTCC programs on client outcomes.

For a subset of BHTCC grantees, it will be possible to identify adequate sources of comparison cases, such as subjects receiving regular services instead of enhanced services under service enhancement grants. Grantees proposing service enhancement were required to report the number of additional clients to be served in each year of the proposed grant. Similarly, grantees proposing service expansion were required to report how the expansion would be achieved (e.g., reducing waiting lists or partnering with a new agency to provide the specific service enhancement). This information will be used to identify areas where eligibility currently exceeds service capacity and where recruitment from a waiting list is most achievable. If a waiting list approach is not feasible, a matched comparison study will instead use individuals who are eligible but opt out of services (in no more than two comparison sites). Comparison cases are subjects who, although eligible, are not receiving grant services for reasons unrelated to their possible outcomes, such as a lack of service provider capacity.

For comparison cases, a reduced data protocol will be used to gather information at baseline and 6 months. This protocol is a reduced version of the client-level data that BHTCC grantees gather for performance monitoring purposes. Data collection activities include the Comparison Study Data Abstraction Tools (Baseline and 6-Month Versions) and the Comparison Study 18-Month Tool.

SE Study

The SE Study uses quantitative and qualitative methods to understand the outcomes of SE program participants and to assess service infrastructure, capacity, and delivery at the system level. Like the BHTCC Study, the SE Study comprises system change and consumer outcome substudies. Activities will assess program operations from the perspectives of consumers and employers, with emphasis on system needs for sustained models of SE. Exhibit 4 contains a matrix of the primary SE Study questions and relevant data sources. Study components are described below, and data collection activity details can be found in Exhibit 5.

Exhibit 4. SE Study Questions and Data Sources

| SE Study Question | BPI–SE | SSA KIIs | ENFGs | Extant Client-Level Data |
|---|---|---|---|---|
| How has the state-level collaboration been structured to enhance service planning, coordination, and delivery of services? How has it supported the scalability and sustainability of the supported employment program? | X | X | X | |
| What are the motivating factors associated with the SE project in each state? Do the motivating factors vary within and across states? | X | X | | |
| What actions have the state and local sites taken to provide sustainable and scalable organizational, policy, and financial resources to supported employment programs? How scalable and sustainable does their approach to supported employment appear to stakeholders? | X | | X | |
| What program, contextual, and cultural factors were associated with participant outcomes? | X | X | X | X |

SE System Change Substudy

The SE SCS will examine how infrastructure is developed to support the full adoption of SE practices within each state and how states plan to sustain those practices over the long run. The SCS combines primary and secondary data to understand how key stakeholders collaborate to:

- expand the service capacity of sites
- improve access to and financing for services
- engage employers

All of these activities reflect the principles of community support programs. Data collection activities include the BPI–SE Version, SSA KIIs (Administrator and Service Provider Versions), and ENFGs (Employer and Employment Specialist Versions). Specific objectives to be addressed include the following:

Understand the state-level collaboration and infrastructure to enhance service planning, coordination, and delivery of services

Assess the scalability and sustainability of the SE Program

Document and assess the factors motivating adoption of the SE model in each state and variation of motivating factors across states

Document the actions that state and local sites have taken to ensure sustainable and scalable organizational, policy, and financial resources for supported employment programs

SE Consumer Outcome Substudy

The goal of the SE COS is to understand the employment-related outcomes that consumers experience during and after their participation in the SE program. The SE COS does not impose any data collection burden. Instead, it will analyze client-level data from existing performance monitoring requirements. Additional details can be found in Section A.16.c.

Data Collection Activities and Methods

Exhibit 5 describes CSE data collection activities and methods by study component. (Note: Informed consent materials for these activities are located in Attachments P through S.)

Exhibit 5. Data Collection Activities and Methods

BHTCC System Change Substudy

Biannual Program Inventory–BHTCC Version

The BPI–BHTCC Version is a web-based survey that will capture infrastructure development and direct services that are part of the BHTCC programs. Data include the types of planning, infrastructure, and collaboration grantees are implementing; trainings conducted; and direct services offered as part of the program. Grantee evaluation staff will complete the BPI twice yearly (April and October) over the grant period. Each administration takes 45 minutes. See Attachment A.

System-Level Assessment KIIs–Court Personnel, Service Provider, and Consumer Versions

The SLA KIIs will be conducted with multiple stakeholders to assess:

- collaboration strategies to expand services or better serve participants
- processes for recruiting, screening, and retaining participants
- practices to ensure treatment adherence and criminal justice compliance
- involvement of consumers in program planning and implementation

Data include implementation processes/outcomes; service infrastructure, capacity, entry, and delivery processes; management structure; reward and sanction models; trauma-informed practices; collaboration among BHTCC participants; and facilitators of and barriers to collaboration. There are three versions of the SLA KIIs (Court Personnel, Service Provider, and Consumer), each tailored to its intended audience.

Grantee staff will assist with respondent recruitment by collecting consent-to-contact forms from potential participants and forwarding the forms to the contractor. The SLA KIIs will be conducted in grant years 2 and 4 via telephone or Skype and take 60 minutes to complete. The KIIs will cover the same information in both years; however, the Year 4 KIIs also will ask about specific plans for future implementation. See Attachments B (SLA KII Court Personnel Y2 and Y4), C (SLA KII Service Provider Y2 and Y4), D (SLA KII Consumer), and P (SLA KII Verbal Consent Script).

Concept Mapping Brainstorming and Sorting/Rating Activities

A total of three concept mapping brainstorming activities and four sorting/rating activities will be conducted with BHTCC stakeholders (peers, consumers, family members, service providers, and/or court personnel). These activities are part of the four concept mapping exercises described below. The brainstorming and sorting/rating activities will take place through a web-based program. Accommodations (e.g., telephone or paper-and-pencil by mail) will be made for those without computer access. All concept mapping exercises will be coordinated at the local level with assistance from the contractor.

Description of Concept Mapping Exercises: Beginning in Year 2, each BHTCC grantee will generate a local concept map identifying the priority supports for recovery. In Year 4, varying numbers of grantees will participate in three concept mapping exercises to generate cross-site Keys to Recovery (KTR) maps. These maps will identify key recovery supports and inform SAMHSA about continued and needed resources for delivery models that contain these supports. It is expected that stakeholders will be asked to participate in no more than two of these three cross-site maps.

BHTCC Consumer Outcome and Comparison Substudies

18-Month Client-Level Abstraction Tool

The 18-Month Tool is an Excel-based tool that collects existing data on long-term client recidivism outcomes. Data include:

1. rearrest dates (from the NCIC database)
2. recommitment dates (from State departments of corrections and local/county jails and corrections)
3. revocation dates (from State and local corrections)
4. the quantitative risk assessment score

Grantee evaluation staff will complete the tool from existing sources at 18 months from the baseline period for each client enrolled in the BHTCC program. Beginning in Year 2, grantees will upload all extracted data quarterly. Their final upload (in the last month of grant activity) will include data for all clients not yet submitted, including those enrolled for less than 18 months. The 18-Month Tool takes 10 minutes to complete. See Attachment G.

Comparison Study Data Abstraction Tool–Baseline

The Comparison Study Tools are Excel-based tools that collect existing data on comparison cases at baseline and 6 months. Comparison cases are individuals who are not participating in the BHTCC program but are comparable in program eligibility. Baseline data include demographics, co-occurring disorder screening status, employment status, and probation/parole status. Respondents will be court staff (e.g., court clerks) at comparison courts who interact regularly with clients during their involvement in the justice system. Respondents will complete the tool on the basis of (1) court paperwork and (2) information discussed during regular court-assigned interactions. No data will be collected directly from clients. The Comparison Study Tool–Baseline takes 10 minutes to complete. See Attachment H.

Comparison Study Data Abstraction Tool–6-Month

The Comparison Study Tool–6-Month is an Excel-based tool that collects existing data on comparison cases at 6 months. Comparison cases are individuals who are not participating in the BHTCC program but are comparable in program eligibility. Data abstracted through the 6-Month Tool include:

- employment status
- probation/parole status
- services received (i.e., case management, treatment, medical care, aftercare, peer-to-peer recovery support, and education)
- the number of days services were received

Respondents will be court staff (e.g., court clerks) at comparison courts who interact regularly with clients during their involvement in the justice system. They will complete the tool on the basis of (1) court paperwork and (2) information discussed during regular court-assigned interactions. No data will be collected directly from clients. The Comparison Study Tool–6-Month takes 10 minutes to complete. See Attachment I.

Comparison Study Data Abstraction Tool–18-Month

The 18-Month Tool–Comparison Study is identical to the 18-Month Tool but collects existing data on long-term recidivism outcomes for comparison cases. Data include:

1. rearrest dates (from the NCIC database)
2. recommitment dates (from State departments of corrections and local/county jails and corrections)
3. revocation dates (from State and local corrections)
4. the quantitative risk assessment score

Court clerks from the 2 comparison study courts will complete the tool from existing sources at 18 months from the baseline period for comparison cases, who are not enrolled in the BHTCC program. Beginning in Year 2, comparison study courts will upload all extracted data quarterly. In their final upload (in the last month of grant activity), court clerks will submit data for all comparison cases not yet submitted. The 18-Month Tool–Comparison Study takes 10 minutes to complete. See Attachment J.

SE System Change Substudy

Biannual Program Inventory–SE Version

The BPI–SE is a web-based survey that captures the infrastructure development and direct services that are part of the SE programs. Data include the types of planning that SE grantees and local implementation sites are undertaking, as well as the activities and infrastructure developed as part of the project. The BPI is administered twice yearly (April and October) over the grant period and takes 45 minutes to complete at each administration. See Attachment K.

Scalability/ Sustainability Assessment KIIs–Administrator & Service Provider Versions |

The SSA KIIs will be conducted with various stakeholders to assess local SE program resources, infrastructure, outcomes, sustainability, and scalability from stakeholders. Data include changes in outcomes, workforce development, State-level collaboration, partnerships and policies, and scalability and sustainability. There are two versions of the SSA KIIs—each is tailored to the intended audience:

The SSA KIIs will be conducted remotely by telephone and/or Skype in years 2 and 4 of the evaluation and take 60 minutes to complete. The KIIs cover the same information in both years; however, the Year 4 KIIs will follow up on how the infrastructure and activities initiated in Year 2 have come to fruition. See Attachments L (SSA KII Administrator Y2 and 4); M (SSA KII Service Provider Y2 and 4); and Q (SSA KII Verbal Consent Script). |

Employment Needs FGs—Employer and Employment Specialist Versions |

The ENFGs will be conducted to gather information about the needs and experiences of employment specialists, consumers, and employers as they relate to IPS principles and program goals. Data include local program implementation, the adoption of policies and practices for sustainability and scalability, and recommendations for program improvement and implementation best practices. ENFGs will be conducted with employment specialists and employers (who have and have not participated in the program). Specific topics are tailored to respondent type.

The ENFGs will be conducted virtually using a web-based platform (such as JoinMe) in years 2 and 4 of grant funding. The ENFG—Employment Specialist Version will last 90 minutes and the ENFG—Employer Version will take 60 minutes. See Attachments N (ENFG Employer Version), O (ENFG Employment Specialist Version), R (ENFG Employer Informed Consent), and S (ENFG Employment Specialist Informed Consent). |

Uses of Information Collected

Informing SAMHSA’s Strategic Initiatives

In FY 2014, based on accomplishments to date, SAMHSA updated its strategic plan, entitled Leading Change 2.0: Advancing the Behavioral Health of the Nation 2015-2018 (Leading Change 2.0). Leading Change 2.0 identifies 6 strategic initiatives (SIs). The CSE is aligned in particular with SI-3, Trauma and Justice, and SI-4, Recovery Support. Information collected through the CSE will help SAMHSA assess these essential strategies aimed at influencing comprehensive change across the justice and behavioral health service systems. It will also support SAMHSA's aim of implementing more rigorous evaluations of the processes, outcomes, and impacts of its initiatives.

SI-3: Trauma and Justice

Goal 3.1: Implement and study a trauma-informed approach throughout health, behavioral health, and related systems. (BHTCC Study)

Goal 3.2: Create capacity and systems change in the behavioral health and justice systems. (BHTCC System Change Substudy)

SI-4: Recovery Support

Goal 4.1: Improve the physical and behavioral health of individuals with mental illness and/or substance use disorders and their families. (BHTCC and SE Consumer Outcome Substudies)

Goal 4.3: Increase competitive employment and educational attainment for individuals with mental illness and/or substance use disorders. (SE System Change Substudy)

Goal 4.4: Promote community living for individuals with mental and/or substance use disorders and their families. (Community Support Evaluation)

Advancing the Field

Information gathered through the CSE will be useful to SAMHSA and its partners, other Federal agencies and administrators, current grantees, policymakers, and the field. It will show ways to transform the behavioral health system to cultivate resiliency and recovery, to actively collaborate with and engage individuals with serious mental, substance use, and co-occurring disorders who are in recovery, and to improve service delivery for them. Further, the CSE's focus on assessing the process and intermediate outcomes of the BHTCC and SE Programs will help advance the fields of recovery and resilience and expand the evidence base. For example, findings on the effectiveness of the BHTCC and SE Programs can inform efforts to replicate these programs in other States, territories, tribal nations, and communities. Information gathered also will be used to identify critical aspects of future CSPs.

Without this evaluation, Federal and local officials will not be able to determine whether the BHTCC and SE Programs are having the intended impact on the recovery of individuals with serious mental, substance use, and co-occurring disorders. They also could not determine whether the programs are affecting the availability and competitiveness of these individuals' employment opportunities, or whether grantees are meeting the individual goals of the programs.

Use of Improved Information Technology

Every effort has been made to use technology to limit the burden on individual respondents who participate in the CSE. Data collection instruments will be administered via the web and Skype. The following sections describe how the web-based data collection and management system, web-based programs, and Skype will be used for data collection.

Web-based Data Collection and Management System

The contractor will work with SAMHSA to develop a web-based data collection and management system, the CSE Data System (CSEDS), on a SAMHSA-hosted website. The CSEDS will support the collection, management, and dissemination of data. It will also serve as a centralized hub where program partners can locate comprehensive information. Features of the CSEDS are described in Exhibit 6. The contractor will request virtual machines in the SAMHSA AWS GovCloud environment for system development, testing, staging, and production. The contractor will work with SAMHSA to certify and accredit the IT system; an IT plan and Information Systems Security Professional (ISSP) plan were submitted to SAMHSA on August 31, 2015. The contractor will follow all relevant SAMHSA, HHS, and Federal policies and regulations. These include the HHS Automated Data Processing Systems Security Policy; the E-Government Act of 2002; Federal Acquisition Regulation Clause 52.239-1; the HHS Information Technology General Rules of Behavior; and other applicable laws, regulations, and guidelines. The contractor will comply with all information privacy and confidentiality regulations, including the Privacy Act of 1974. It will also conduct and maintain a privacy impact assessment (PIA) in accordance with HHS PIA guidance.

Exhibit 6. Features of the CSEDS

Feature |

Description |

Data Collection |

The CSEDS supports direct administration of web-based surveys. It also supports direct entry, through the BPI, of data on training, service delivery, collaboration, infrastructure development, and implementation of evidence-based practices. Client-level data collected from program participants in the CDP system and through the 18-month data abstraction tool will be uploaded to and stored on CSEDS. All methods will incorporate data quality checks. |

Repository |

During the design phase, the contractor will coordinate with SAMHSA to understand the structure and format of client-level and Infrastructure Development, Prevention and Mental Health Promotion (IPP) data collected and submitted by CSP grantees and design tables to accommodate these structures. Where feasible and desirable, data from these and other secondary data sources will be used to contextualize the process evaluation and to provide a data source for the outcome evaluation. Doing so will streamline data collection, minimize reporting burden on grantees and clients reached through these programs, and provide a standardized set of measures that can be used as explanatory and/or outcome variables in statistical analyses. |

Response Monitoring |

A response-monitoring feature will be built into the system to allow the contractor to monitor BPI data submissions. This feature will show the latest submission dates, enabling the TA team to follow up with grantees that are not submitting according to established timelines. |

Evaluation Resources |

All evaluation support materials, such as manuals, data dictionaries, data collection protocols, and instruments, will be housed on CSEDS to ensure grantee access. Additionally, recordings of training webinars will be posted to CSEDS so grantees can use them to train new staff or provide refresher training to existing staff. |
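The response-monitoring feature in Exhibit 6 comes down to comparing each grantee's latest BPI submission date against the most recent administration window. The Python sketch below illustrates that check under several assumptions. The function names, the dictionary input format, and the treatment of April 1 and October 1 as window start dates are hypothetical and are not part of the CSEDS specification.

```python
from datetime import date
from typing import Dict, List, Optional

# Assumption: BPI windows open on the first day of April and October
# (the text states only that the BPI is administered in April and October).
BPI_MONTHS = (4, 10)


def most_recent_window(today: date) -> date:
    """Return the start of the latest April/October BPI window on or before today."""
    candidates = [date(today.year, month, 1) for month in BPI_MONTHS]
    # Include the previous October so January-March dates resolve correctly.
    candidates.append(date(today.year - 1, BPI_MONTHS[-1], 1))
    return max(d for d in candidates if d <= today)


def grantees_to_follow_up(
    last_submission: Dict[str, Optional[date]], today: date
) -> List[str]:
    """List grantees with no BPI submission since the current window opened.

    `last_submission` maps a (hypothetical) grantee label to the date of its
    most recent BPI submission, or None if it has never submitted.
    """
    window_start = most_recent_window(today)
    return sorted(
        grantee
        for grantee, submitted in last_submission.items()
        if submitted is None or submitted < window_start
    )


if __name__ == "__main__":
    # Hypothetical grantee labels and dates, for illustration only.
    last = {
        "Grantee A": date(2016, 10, 20),
        "Grantee B": date(2016, 5, 12),
        "Grantee C": None,
    }
    print(grantees_to_follow_up(last, date(2016, 11, 15)))  # ['Grantee B', 'Grantee C']
```

A real system would likely add a grace period after each window opens before flagging a grantee. That refinement is left out here to keep the comparison itself visible.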

Web-based Programs

Web-based programs will be used to facilitate group participation in focus groups and concept mapping. Concept mapping exercises will be conducted using Concept System software, which supports brainstorming, sorting/rating, and interpretation through a web-based program on personal computers. Conducting the ENFGs and concept mapping via the web has three advantages. It will allow large numbers of stakeholders to participate, it will reduce the time burden and travel costs traditionally associated with in-person data collection, and it will let respondents participate from convenient locations. Before these activities are conducted, the feasibility of web-based participation will be assessed. Plans will also be put in place so that respondents who lack computer access can participate by telephone or another method.

Respondents to the ENFGs will participate virtually through a web-based platform, such as JoinMe. Conducting focus groups virtually raises potential issues with technology access and capabilities across participants. Participants will therefore join the discussion by audio only and will not appear on camera. This reduces problems with internet connection speeds while still providing some visual cues and opportunities for rapport building. The online meeting platform will, however, include features that let participants visually signal to the group that they would like to speak (e.g., a “raise hand” feature). The virtual platform may also enable participants to share information they would not be willing to voice aloud. A built-in instant messaging system lets them send a private message to the moderator, who can read it to the group. Although participants will not appear on camera, the group moderator will appear on video to build rapport and guide the discussion more effectively.

Skype/Web-based Videoconferencing

The SLA KIIs and SSA KIIs will be conducted with individuals via Skype or another web-based videoconferencing platform. Skype is a web-based software application that allows users to have spoken conversations while viewing one another by webcam and chatting via instant message. Conducting the KIIs via Skype rather than by telephone will help interviewers build rapport with respondents. In addition, using Skype will eliminate the time and cost of traveling to in-person interviews and allow respondents to participate from a location of their choosing.

Efforts to Identify Duplication

The current BHTCC program builds on the accomplishments of a first cohort of BHTCC grantees funded by SAMHSA between 2011 and 2014. It combines previous and current SAMHSA-funded criminal justice–treatment linkage programs with infrastructure planning and development activities. Together, these create new court and community networks that transform the behavioral health system at the community level. A key difference in the second BHTCC funding cohort is that each collaborative must involve municipal courts. In addition, veterans are a population of focus.

In developing the data collection activities and updated design for the evaluation, the CSE team conducted a literature review to avoid duplicating data collection activities or the use of similar information. The specific primary data to be collected for the CSE do not exist elsewhere. An evaluation of the first BHTCC cohort was conducted; however, its consumer outcome data were limited to 6 months. The current data collection uses existing administrative data to supplement required GPRA data on criminal justice and other offender outcomes. Several studies of alternative treatment courts have also been conducted. However, these studies are typically single-site and conducted as part of research supported by the Department of Justice. Thus, these parallel efforts may focus on the implementation of justice programs rather than on treatment and recovery. The 18-Month Abstraction Tool and the Comparison Study Abstraction Tools use two kinds of existing information: program and administrative data, and information already gathered through interactions with adult justice-involved populations. Neither is captured through existing client-level GPRA reporting. The BPIs, SLA KIIs, Concept Mapping, SSA KIIs, and ENFGs are specific to the CSE and are not collected elsewhere.

Impact on Small Businesses or Other Small Entities

Some data collection activities involve individuals from public agencies, such as the mental health and criminal justice systems. Respondents to the SLA KIIs and SSA KIIs, Concept Mapping, and ENFG–Employer Version may be employed by small businesses or other small entities; however, these data collections will not have a significant impact on the agencies or entities.

Consequences if Information Collected Less Frequently

The rigor of the CSE design and its ability to answer the primary evaluation questions are dependent on the frequency of the data collected. Additionally, because the CSE is aligned with the foci of the BHTCC and SE Programs, the frequency with which data collection activities are administered is critical to SAMHSA’s overall assessment of the programs. Exhibit 7 describes the consequences if data are collected less frequently.

Exhibit 7. Consequences If Information Collected Less Frequently by Activity

Activity |

Rationale |

BPI–BHTCC & BPI–SE Versions |

Grantees will be required to complete the BPI upon the receipt of OMB approval and on a twice-yearly basis thereafter. Collecting this information biannually is necessary to document progress related to grantee infrastructure development and program goals. The consequences of collecting the BPI less frequently include losing the ability to track and assess change over time related to these factors. |

SLA KIIs & SSA KIIs |

The SLA and SSA KIIs will be conducted twice with key stakeholders, in grant years 2 and 4. KII administration is intentionally scheduled toward the beginning and end of the BHTCC and SE grant periods. This timing allows the evaluation to capture and assess change over time related to progress toward program goals and infrastructure/capacity development. If the KIIs are conducted less frequently, it will not be possible to capture change over time, fidelity to program plans, infrastructure development, and service enhancements/expansions. |

Concept Mapping |

Concept mapping will be conducted with a range of program stakeholders, including court personnel, service providers, and consumers as part of the participatory approach to the CSE. Exercises will be conducted in grant years 2 and 4 to coincide with full implementation of the BHTCC program and allow for greater understanding of the most important aspects of the BHTCC that support recovery from these multiple perspectives. Two Year 4 exercises will include new brainstorming of the most important aspects of the BHTCC program from a subset of grantees who are purposefully selected based on preliminary findings on key consumer outcomes (e.g., program court models including veterans courts, offender inclusion criteria, geographical setting). Conducting concept mapping less frequently will negatively impact the degree to which the CSE implements a participatory approach and incorporates the perspectives and opinions of consumers. |

18-Month Client-Level Data Abstraction Tool |

The 18-Month Tool will capture long-term recidivism data for BHTCC participants and comparison substudy controls that are not collected through other measures. If these data are not collected for BHTCC participants, it will not be possible to assess their long-term outcomes. Further, the longitudinal implementation of the Comparison Study Tool and 18-Month Tool serves as the basis for the BHTCC Comparison Study. Collecting this information less frequently will impair the rigor of the quasi-experimental design and the ability to assess and fully understand the impacts of the BHTCC Program. |

Comparison Study Tool—BL, 6-Month and 18-Month |

The longitudinal implementation of the Comparison Study Tools at BL, 6 months, and 18 months serves as the basis for the BHTCC Comparison Study. If these data are not collected, it will not be possible to compare short- and long-term outcomes for BHTCC and non-BHTCC participants. Collecting this information less frequently will impair the rigor of the quasi-experimental design and the ability to assess and fully understand the impacts of the BHTCC Program. |

Consistency with the Guidelines of 5 CFR 1320.5(d)(2)

The data collection fully complies with the requirements of 5 CFR 1320.5(d)(2).

Consultation Outside the Agency

Federal Register Notice

SAMHSA published a notice in the Federal Register on December 21, 2015 (80 FR 79349), soliciting public comment on this study.

Consultation Outside the Agency

Two steering committees (BHTCC and SE) were established for the CSE. Feedback on the data collection instruments and protocols, as well as on the overall evaluation design, was solicited from the steering committees; the SAMHSA/CMHS Contracting Officer’s Representative (COR) and Alternate COR; the SAMHSA Center for Behavioral Health Statistics and Quality Evaluation Desk Officer; the evaluation contractor; SAMHSA grantee project officers; and grantee representatives, including project directors and local evaluators. BHTCC and SE Study-specific webinars were conducted to present the details of the evaluation and the proposed data collection for review and comment. Based on feedback from the steering committees and the other reviewers described above, modifications were made to the instruments, evaluation questions, and evaluation protocols. Organizations and individuals that reviewed the data collection activities are listed in Exhibit 8.

Exhibit 8. Consultation outside the Agency

Name/Title |

Contact Information |

Robin Davis, PhD Principal Investigator/Project Director |

ICF International 3 Corporate Square, NE Suite 370 Atlanta, GA 30329 404-321-3211 |

Christine Walrath, PhD |

ICF International 40 Wall Street, Suite 3400 New York, NY 10005 646-695-8154 |

Samantha Lowry, MS BHTCC Study Manager |

ICF International 9300 Lee Highway Fairfax, VA 22031 703-251-0368 |

Emily Appel-Newby SE Study Manager |

ICF International 9300 Lee Highway Fairfax, VA 22031 703-225-2409 |

Lucas Godoy Garraza, MA Senior Statistician |

ICF International 40 Wall Street, Suite 3400 New York, NY 10005 |

Megan Brooks, MA Data Manager |

ICF International 3 Corporate Square, NE 651-330-6085 |

Janeen Buck Willison, MSJ Evaluation Advisor |

Urban Institute 2100 M Street NW Washington, DC 20037 202-261-5746 |

Janine Zweig, PhD Evaluation Advisor |

Urban Institute 2100 M Street NW Washington, DC 20037 518-791-1058 |

Steven Belenko, PhD Evaluation Advisor |

Consultant 1115 Polett Walk 5th Floor Gladfelter Hall Philadelphia, PA 19122 215-204-2211 |

Carrie Petrucci, PhD, MSW |

EMT Associates, Inc. 818.667.9167 |

BHTCC Steering Committee |

|

Michael Endres, PhD |

Office of Program Improvement and Excellence for the State of Hawaii Department of Health P.O. Box 3378; Honolulu, HI. 96801-3378 808-586-4132 |

Joan Gillece, PhD |

SAMHSA National Center for Trauma Informed Care |

Honorable Stephen Goss, JD |

Dougherty Judicial Circuit Superior Court 225 Pine Avenue, Room 222 Albany, Georgia 31701 229-434-2683 |

Tara Kunkel, MSW |

National Center for State Courts 300 Newport Avenue Williamsburg, VA 23185 757-259-1575 |

Margaret Baughman Sladky, PhD |

Begun Center for Violence Prevention Research and Education at Case Western Reserve University 11402 Bellflower Rd. Cleveland, OH 44106 216-368-0160 |

Jana Spalding, MD |

Arizona State University 500 N. Third St., Ste. 200 Phoenix, AZ 85004 602-496-1470 |

Doug Marlowe, PhD |

National Association of Drug Court Professionals |

SE Steering Committee |

|

Sean Harris, PhD |

Recovery Institute 1020 South Westnedge Avenue Kalamazoo, MI 49008 269.343.6725 |

Crystal Blyler, PhD |

Mathematica Policy Research, Inc. 1100 1st Street, NE, 12th Floor Washington, DC 20002-4221 202.250.3502 |

Jonathan Delman, PhD, JD |

Technical Assistance Collaborative 31 St James Avenue Suite 950 Boston, MA |

Payment to Respondents

The CSE uses a participatory evaluation approach and requires the participation of individuals beyond grantee program staff, such as consumers and employers. Consequently, remuneration is proposed for respondents who are not directly affiliated with the BHTCC and SE programs at the time of their participation. It compensates them for the associated burden, potential inconvenience, and related costs (e.g., transportation, mobile phone minutes or data, time). The CSE also involves longitudinal data collection. Remuneration is standard practice in longitudinal studies, partly because respondents are typically not directly affiliated with the program being evaluated. Given the longitudinal data collection and the hard-to-reach nature of these populations, compensation will be provided to respondents to the following activities: SLA KII–Consumer Version ($10) and ENFG–Employer Version ($50). Respondents to other data collection activities are primarily staff of the BHTCC and SE programs or close affiliates; therefore, no remuneration is planned for those activities.

Assurances of Confidentiality

To protect human subjects and ensure the privacy of the data compiled, the contractor’s institutional review board (IRB) will review the CSE data collection protocol and instruments before any covered or protected data are collected. The contractor’s IRB holds a Federalwide Assurance (FWA00000845; expiration April 13, 2019) from the HHS Office for Human Research Protections (OHRP). This review ensures compliance with the spirit and letter of HHS regulations governing such projects. All protected data will be stored on the contractor’s secure servers as described in the IT Plan and IT Data Security Plan submitted to SAMHSA on August 31, 2015. In addition, the secure web-based data collection and management system, the CSEDS, will facilitate data entry and management for the evaluation.

CSE respondents will be selected on the basis of their roles in the BHTCC and SE Programs. Descriptive information will be collected on respondents, but no identifying information will be entered or stored in the CSEDS. For the SLA KIIs and SSA KIIs, grantee staff will collect consent to contact from potential participants and forward the forms to the contractor. All hard copy forms with identifying information will be stored in locked cabinets; contact information will be entered into a password-protected database accessible to the limited number of individuals who require access (selected contractor staff such as data analysts and administrative staff for administering the incentives). These individuals have signed privacy, data access, and data use agreements. Identifying information (e.g., name, e-mail address) collected to facilitate the administration of surveys, interviews, and focus groups will not be stored with responses. Further, datasets will be stripped of any identifying information prior to use by data analysts. Once final data collection is complete and incentives have been distributed (as appropriate), respondent contact information will be deleted from the database and the hard copy forms will be destroyed. Data collection activities requiring the collection of identifying information and specific procedures to protect respondent privacy are described in Exhibit 9.

Exhibit 9. Procedures to Protect Respondent Privacy

Activity |

Rationale |

BPI–BHTCC & BPI–SE |

Information to complete the inventories will be directly entered into the web-based system. To access the system, respondents receive an individual username and password to protect their privacy and no identifying information is requested on the inventories. |

SLA KIIs, SSA KIIs, & ENFGs |

Identifying information for respondents to the SLA KIIs, SSA KIIs, and ENFGs will be necessary to facilitate the administration of the interviews and focus groups. Contact information will be limited to affiliations, names, email addresses, and telephone numbers and will be entered into and maintained in a password-protected database. KIIs will be audiotaped and transcribed for analysis. Audio files will be destroyed once transcription is complete, and respondent names will be redacted from transcripts. Individual respondents’ names will not be used in any reports, but reports and datasets will contain the name of the BHTCC or SE program. Although unlikely, it is possible that an individual may be identifiable when results are reported. Respondents are informed of possible identification in the consent language at the start of the activity. |

Concept Mapping |

Local grantees will take primary responsibility for coordinating and conducting concept mapping. However, identifying information for some concept mapping participants may be necessary for the contractor to facilitate participation, depending on the recruitment and implementation methods used. Contact information will be limited to name, email address, and/or telephone number and will be entered into and maintained in a password-protected database. |
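A short Python sketch can illustrate the practice of keeping identifying information apart from responses and redacting names from transcripts. It assumes hypothetical field names (`name`, `email`, `phone`, `affiliation`) and uses a random study ID as the only link between responses and contact details. It is an illustration of the general practice, not the contractor's actual procedure.

```python
import re
import secrets
from typing import Dict, List, Tuple

# Hypothetical field names for contact details; the document lists
# affiliations, names, email addresses, and telephone numbers.
IDENTIFYING_FIELDS = {"name", "email", "phone", "affiliation"}

Record = Dict[str, object]


def split_identifiers(records: List[Record]) -> Tuple[List[Record], Dict[str, Record]]:
    """Separate contact details from responses.

    Returns (analysis_rows, linkage). Each analysis row holds only
    non-identifying fields plus a random study ID. The linkage maps each
    study ID to the contact fields, so it can be stored, and later deleted,
    separately.
    """
    analysis_rows: List[Record] = []
    linkage: Dict[str, Record] = {}
    for record in records:
        study_id = secrets.token_hex(8)  # random; not derived from identity
        while study_id in linkage:  # guard against an unlikely collision
            study_id = secrets.token_hex(8)
        linkage[study_id] = {k: v for k, v in record.items() if k in IDENTIFYING_FIELDS}
        row = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
        row["study_id"] = study_id
        analysis_rows.append(row)
    return analysis_rows, linkage


def redact_names(transcript: str, names: List[str]) -> str:
    """Replace each listed respondent name in a transcript with a placeholder."""
    for name in names:
        pattern = r"\b" + re.escape(name) + r"\b"
        transcript = re.sub(pattern, "[REDACTED]", transcript, flags=re.IGNORECASE)
    return transcript


if __name__ == "__main__":
    # Hypothetical respondent, for illustration only.
    rows, linkage = split_identifiers(
        [{"name": "Jane Doe", "email": "jdoe@example.org", "role": "service provider", "q1": 4}]
    )
    print(sorted(rows[0]))  # ['q1', 'role', 'study_id']
    print(redact_names("Thanks, Jane Doe.", ["Jane Doe"]))  # Thanks, [REDACTED].
```

The linkage table is kept apart from the analysis rows. Deleting it at the end of data collection (in this sketch, `linkage.clear()`) therefore leaves datasets with no contact information.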

Questions of a Sensitive Nature

Respondents will not be asked any questions of a personally sensitive nature. The subject matter of the BPIs, SLA KIIs, Concept Mapping, SSA KIIs, and ENFGs will be limited to the perceptions of planning and implementation activities among key stakeholders of the grants.

Estimates of Annualized Burden Hours and Costs

Clearance is being requested for 3 years of data collection for the Community Support Evaluation for 17 BHTCC and 7 SE Program grantees (24 grantees total). Exhibit 10 describes the burden and costs associated with CSE data collection activities and Exhibit 11 describes burden by respondent type. The cost was calculated based on the hourly wage rates for appropriate wage rate categories using data collected as part of the National Compensation Survey (BLS, 2014) and from the U.S. Department of Labor Federal Minimum Wage Standards.

Exhibit 10. Estimated Annualized Burden Hours and Costs

(Across the 3-Year Clearance Period)

Type of Respondent | Instrument | Number of Respondents | Responses per Respondent | Total Number of Responses | Burden per Response (hours) | Annual Burden (hours) | Hourly Wage Rate ($) | Total Cost ($) |

BHTCC Study |

Project Evaluators | Biannual Program Inventory BHTCC Version | 17 | 2 | 34 | 0.75 | 26 | 36.72 | 955 |

Court Personnel | SLA KII Court Personnel Version | 23 | 1 | 23 | 1 | 23 | 62.21 | 1,410 |

BHTCC Service Providers | SLA KII Service Provider Version | 23 | 1 | 23 | 1 | 23 | 22.03 | 499 |

Consumers | SLA KII Consumer Version | 12 | 1 | 12 | 1 | 12 | 7.25 | 87 |

Project Evaluators | 18-Month Tool | 17 | 1 | 17 | 5.4 | 92 | 36.72 | 3,378 |

Court Clerks | Comparison Study 18-Month Tool | 2 | 1 | 2 | 5.4 | 11 | 17.05 | 188 |

Court Clerks | Comparison Study Tool BL Version | 2 | 1 | 2 | 7 | 14 | 17.05 | 239 |

Court Clerks | Comparison Study Tool 6-Month Version | 2 | 1 | 2 | 7 | 14 | 17.05 | 239 |

BHTCC Stakeholders | Concept Mapping Brainstorming Activity | 180 | 1 | 180 | 0.5 | 90 | 24.69 | 2,222 |

BHTCC Stakeholders | Concept Mapping Sorting/Rating Activity | 294 | 1 | 294 | 0.5 | 147 | 24.69 | 3,629 |

SE Study |

Project Directors | Biannual Program Inventory SE Version | 7 | 2 | 14 | 0.75 | 11 | 32.56 | 342 |

Administrators | SSA KII Administrator Version | 14 | 1 | 14 | 1 | 14 | 56.35 | 789 |

SE Service Providers | SSA KII Service Provider Version | 14 | 1 | 14 | 1 | 14 | 22.03 | 308 |

Hiring Managers | ENFG Employer Version | 28 | 1 | 28 | 1 | 28 | 30.09 | 203 |

Employment Specialists | ENFG Employment Specialist Version | 28 | 1 | 28 | 1.5 | 42 | 29.58 | 1,242 |

Total | | 462ᵃ | | 687 | | 561 | | 15,730 |

Exhibit 11. Annualized Summary Burden by Respondent Type

Respondents | Number of Respondents | Responses/Respondent | Total Responses | Total Annualized Hour Burden |

BHTCC Study |

Project Evaluators | 17 | 3 | 51 | 118 |

Court Personnel | 23 | 1 | 23 | 23 |

BHTCC Service Providers | 23 | 1 | 23 | 23 |

Consumers | 12 | 1 | 12 | 12 |

Court Clerks | 2 | 3 | 6 | 39 |

BHTCC Stakeholders | 294 | 1.612 | 474 | 237 |

SE Study |

Project Directors | 7 | 2 | 14 | 11 |

Administrators | 14 | 1 | 14 | 14 |

SE Service Providers | 14 | 1 | 14 | 14 |

Hiring Managers | 28 | 1 | 28 | 28 |

Employment Specialists | 28 | 1 | 28 | 42 |

Total | 462 | | 687 | 561 |
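The respondent, response, and hour totals in Exhibits 10 and 11 follow from row-level arithmetic. Total responses equal respondents × responses per respondent, and annual burden equals total responses × burden per response. The Python sketch below reproduces those totals from the Exhibit 10 rows under two assumptions, both inferred from the exhibit figures rather than stated in the text. First, each row's annual burden is rounded half up to whole hours. Second, within a respondent type the same individuals complete every instrument, so the unduplicated respondent count is the largest single-instrument count (e.g., the 180 brainstorming participants are counted among the 294 sorting/rating participants).

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict, List, Tuple

# Exhibit 10 rows: (respondent type, instrument, respondents,
# responses per respondent, burden per response in hours).
EXHIBIT_10: List[Tuple[str, str, int, int, str]] = [
    ("Project Evaluators", "BPI BHTCC Version", 17, 2, "0.75"),
    ("Court Personnel", "SLA KII Court Personnel Version", 23, 1, "1"),
    ("BHTCC Service Providers", "SLA KII Service Provider Version", 23, 1, "1"),
    ("Consumers", "SLA KII Consumer Version", 12, 1, "1"),
    ("Project Evaluators", "18-Month Tool", 17, 1, "5.4"),
    ("Court Clerks", "Comparison Study 18-Month Tool", 2, 1, "5.4"),
    ("Court Clerks", "Comparison Study Tool BL Version", 2, 1, "7"),
    ("Court Clerks", "Comparison Study Tool 6-Month Version", 2, 1, "7"),
    ("BHTCC Stakeholders", "Concept Mapping Brainstorming", 180, 1, "0.5"),
    ("BHTCC Stakeholders", "Concept Mapping Sorting/Rating", 294, 1, "0.5"),
    ("Project Directors", "BPI SE Version", 7, 2, "0.75"),
    ("Administrators", "SSA KII Administrator Version", 14, 1, "1"),
    ("SE Service Providers", "SSA KII Service Provider Version", 14, 1, "1"),
    ("Hiring Managers", "ENFG Employer Version", 28, 1, "1"),
    ("Employment Specialists", "ENFG Employment Specialist Version", 28, 1, "1.5"),
]


def row_hours(total_responses: int, hours_per_response: str) -> int:
    """Annual burden for one row, rounded half up to whole hours (assumed convention)."""
    hours = Decimal(total_responses) * Decimal(hours_per_response)
    return int(hours.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


def summarize(rows) -> Dict[str, Dict[str, int]]:
    """Aggregate Exhibit 10 rows by respondent type, as in Exhibit 11."""
    summary: Dict[str, Dict[str, int]] = {}
    for respondent_type, _instrument, respondents, per_respondent, hours in rows:
        total_responses = respondents * per_respondent
        entry = summary.setdefault(
            respondent_type, {"respondents": 0, "responses": 0, "hours": 0}
        )
        # Assumption: the same individuals complete every instrument for their
        # type, so the unduplicated count is the largest single-instrument count.
        entry["respondents"] = max(entry["respondents"], respondents)
        entry["responses"] += total_responses
        entry["hours"] += row_hours(total_responses, hours)
    return summary


if __name__ == "__main__":
    summary = summarize(EXHIBIT_10)
    totals = {key: sum(v[key] for v in summary.values()) for key in ("respondents", "responses", "hours")}
    print(totals)  # {'respondents': 462, 'responses': 687, 'hours': 561}
```

The sketch uses `Decimal` with `ROUND_HALF_UP` because Python's built-in `round()` rounds halves to even. With `round()`, the 10.5 hours for the BPI SE Version would become 10 rather than the 11 shown in Exhibit 10.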

Estimates of Annualized Cost Burden to Respondents or Record Keepers

There are no startup, maintenance, or operational costs associated with the CSE.

Estimates of Annualized Cost to the Government

CMHS has planned and allocated resources for the management, processing and use of the collected information in a manner that shall enhance its utility to agencies and the public. Including the Federal contribution to local grantee evaluation efforts, the contract with the contractor, and Government staff to oversee the evaluation, the annualized cost to the Government is estimated at $1,674,392. These costs are described below.

Assuming an annual cost of no more than 20% of grant awards for performance measurement and assessment, the annual cost at the BHTCC grant level is estimated at $69,628. These monies are included in the grant awards. Each SE State grant allocates up to 20% of the total award and 15% of each subgrant award (to 2 local behavioral health agencies) for data collection, performance measurement, and performance assessment, for an annual total of $256,000 per SE grantee. It is estimated that participation in the CSE will require 20% of the grant funds set aside for these purposes.

SAMHSA funded the CSE contract to conduct the evaluation for 17 BHTCC and 7 SE grantees for 5 years (i.e., base year and 4 option years) at a value of $4,935,908. Assuming that all option years are funded, the estimated average annual cost of the contract will be $987,182. This covers expenses related to developing and monitoring the CSE including, but not limited to, developing the evaluation design and instrumentation; developing training and TA resources (i.e., manuals, training materials, etc.); conducting in-person or telephone training and TA; monitoring grantees; traveling to grantee sites and relevant meetings; and analyzing and disseminating data. In addition, these funds will support the development of the web-based data collection and management system and fund staff support for data collection. It is estimated that CMHS will allocate 0.25 of a full-time equivalent each year for Government oversight of the evaluation. Assuming an annual salary of $80,000, these Government costs will be $20,000 per year.

Changes in Burden

This is a new data collection.

Time Schedule, Publication, and Analysis Plans

Time Schedule

The time schedule for implementing the CSE is summarized in Exhibit 12. A 3-year clearance is requested for this project.

Exhibit 12. Time Schedule

Activity | Timeframe |

Begin data collection for 17 BHTCC and 7 SE grantees | 1 month after OMB clearance (expected April 2016) |

Biannual Program Inventory – Baseline | May 2016 |

Begin data abstraction for the 18-Month Tool | May 2016 |

Begin data abstraction for BHTCC Comparison Substudy | July 2016 (ongoing) |

KIIs and Employment Needs FGs – Administration 1 | May–August 2016 |

Concept Mapping Exercise #1 – Local Concept Maps | May 2016 – April 2017 |

Biannual Program Inventory #2 | October 2016 |

Biannual Program Inventory #3 | April 2017 |

Biannual Program Inventory #4 | October 2017 |

Concept Mapping Exercises #2–4: Keys to Recovery Maps | March 2018 – July 2018 |

Biannual Program Inventory #5 | April 2018 |

KIIs and Employment Needs FGs – Administration 2 | March 2018 – July 2018 |

Biannual Program Inventory #6 | October 2018 |

Biannual Program Inventory #7 | April 2019 |

Publication Plans

Dissemination of evaluation findings across the life of the CSE to SAMHSA, HHS, and key stakeholders will be a priority. Reporting and dissemination will include quarterly progress reports, annual evaluation reports, annual briefings on evaluation findings, and ad hoc reports and presentation products (upon special request). The contractor will also submit a State-level grantee report on Year 1 activities for the BHTCC. Although the contractor is not contractually required to publish findings from the CSE in peer-reviewed articles, examples of journals that would be appropriate vehicles for publication include the following:

Community-Based Public Health: Policy and Practice

Community Mental Health Journal

Criminology

Evaluation and Program Planning

Journal of Disability Policy Studies

Journal of Rehabilitation

Journal of Substance Abuse Treatment

Journal of Vocational Rehabilitation

Justice Quarterly

The Justice System Journal

Data Analysis Plan

Data collected through the CSE components will be analyzed to address key evaluation questions (see Section A.2.a). Analysis plans for each study are described below.

BHTCC Study

BHTCC System Change Substudy

SLA analyses will focus on characterizing service expansion and enhancement over the grant cycle, as well as the level and characteristics of collaboration, particularly between the local courts and local community treatment and recovery providers. Consistent with the focus of the study and given the limited number of grantees, the SLA quantitative analysis will provide an accurate and rich description of the system processes resulting from program implementation, rather than emphasizing the identification of statistically significant differences or the use of elaborate statistical models. A full understanding of these system-level processes will come from systematic analysis of qualitative information, particularly program stakeholders’ perceptions of results and of barriers to and facilitators of success.

Information gathered through the SLA will be used to describe the developmental status of systems and to examine differences among communities in their system development. Quantitative data are derived from items linked to framework indicators. System-generated variables from CSEDS will allow tracking of when infrastructure development, activities, coordination of services, and the provision of direct services are first entered into the BPI. These data, along with mean ratings, will be used to examine how systems have developed, are developing, and are sustaining their programs on the basis of key system principles. Together, these data will show how the BHTCC programs develop over time (e.g., What are the first things most BHTCC grantees do? What activities are commonly implemented in the second year of the grant? What developments occur later in the funding period? What are staff attitudes about collaboration, the value of TIC, and evidence-based practices?).
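As a rough illustration of how first-entry timing and mean ratings could be summarized, the sketch below assumes a hypothetical flat export of BPI records with one row per grantee, inventory wave, and activity category. The field names, the 1–5 rating scale, and the use of the inventory wave as a proxy for the CSEDS entry date are all assumptions, not the actual CSEDS schema.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical BPI export: field names and the 1-5 rating scale are assumptions.
records = [
    {"grantee": "G1", "wave": 1, "category": "infrastructure", "rating": 2},
    {"grantee": "G1", "wave": 1, "category": "coordination", "rating": 1},
    {"grantee": "G1", "wave": 2, "category": "direct services", "rating": 3},
    {"grantee": "G2", "wave": 1, "category": "infrastructure", "rating": 3},
    {"grantee": "G2", "wave": 3, "category": "direct services", "rating": 4},
    {"grantee": "G2", "wave": 3, "category": "coordination", "rating": 2},
]


def first_entry_wave(records):
    """Earliest inventory wave in which each grantee reports each category."""
    first = {}
    for r in records:
        key = (r["grantee"], r["category"])
        first[key] = min(first.get(key, r["wave"]), r["wave"])
    return first


def mean_onset_by_category(first):
    """Average first-entry wave per category across grantees (earlier = done first)."""
    by_category = defaultdict(list)
    for (_, category), wave in first.items():
        by_category[category].append(wave)
    return {c: mean(waves) for c, waves in sorted(by_category.items())}


def mean_rating_by_wave(records):
    """Mean BPI rating at each inventory wave, pooled across grantees."""
    by_wave = defaultdict(list)
    for r in records:
        by_wave[r["wave"]].append(r["rating"])
    return {w: mean(vals) for w, vals in sorted(by_wave.items())}


onset = mean_onset_by_category(first_entry_wave(records))
ratings = mean_rating_by_wave(records)
```

In this toy data, infrastructure appears earliest on average, which is the kind of pattern the question "What are the first things most BHTCC grantees do?" is meant to surface.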

Qualitative data will provide in-depth information useful for interpreting quantitative findings, describing which features of the system enhance its development, and identifying key indicators that facilitate successful program implementation. After the KIIs and data abstraction, data collectors on the evaluation team will collaborate to write a descriptive, comprehensive, and synthesized report of the findings from the data collected. Thematic analyses of qualitative data in the narrative reports will be conducted using ATLAS.ti software according to a set of defined codes that are assigned to segments of the text. Data collected through the BPI, concept mapping, and KIIs will be triangulated to support a more thorough understanding of system change mechanisms. Results will be compared and contrasted with results of the SLA measure related to the level of grantee program development in relation to key system principles and elements of the core evaluation data, including consumers served, services and supports provided, ability to engage multiple partners, and collaborative activities. The SLA KII guides involve questions specifically designed to elicit information about fidelity to the CSP model across BHTCC grantees. Qualitative analysis will examine the level of fidelity to these principles within each grantee’s service implementation through content analysis, code application and consensus-building across the coding team.

Fidelity

Once the interviews have been thematically coded, members of the qualitative analysis team will review data from all respondents within each grantee to assess agreement across respondents (e.g., whether consumers and court personnel agree on the extent to which BHTCC services are culturally relevant) and the overall perceived fidelity to each principle. The team will then compare results across grantees to determine the scoring system that maximizes variation among them. The form of the scoring system will depend on the content of the qualitative data. For example, if some CSP principles are met by all grantees while others are met by some grantees but not others, scoring would classify grantees into two groups: those that incorporate the principle and those that do not. Alternatively, if all grantees are working toward the CSP principles but differ substantially in the progress they have made, scoring would use a Likert scale on which grantees are rated according to how successfully they have incorporated each principle. Fidelity to the CSP principles will be examined in KII data from Year 2 and Year 4 so that change over time can be explored if grantees show progress on the principles during the grant. The qualitative analysis team will work with the quantitative analysis team during scoring to ensure that data are integrated across components. For more information on how these scores will be used quantitatively, see Linking Data from Two Levels of the BHTCC Study.
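One possible way to operationalize the two-step process in the Fidelity paragraph is sketched below: checking agreement across respondent types within a grantee, then choosing between dichotomous and Likert scoring based on how much grantees vary. This is only an illustration under stated assumptions. The 1–5 coded fidelity levels, the one-point agreement tolerance, the "met" threshold of 4, the use of the lower median as a consensus level, and the rule of "at most two distinct levels means dichotomous" are all hypothetical choices, not the evaluation team's method.

```python
from statistics import median_low

# Hypothetical coded KII data: grantee -> principle -> respondent type -> level,
# where level is an assumed 1-5 rating of perceived fidelity assigned by coders.
CODED = {
    "Grantee A": {
        "culturally relevant": {"consumer": 4, "court": 5, "provider": 4},
        "consumer centered": {"consumer": 5, "court": 5, "provider": 5},
    },
    "Grantee B": {
        "culturally relevant": {"consumer": 2, "court": 4, "provider": 3},
        "consumer centered": {"consumer": 5, "court": 5, "provider": 5},
    },
    "Grantee C": {
        "culturally relevant": {"consumer": 2, "court": 2, "provider": 2},
        "consumer centered": {"consumer": 1, "court": 2, "provider": 1},
    },
}


def respondents_agree(levels, tolerance=1):
    """True if all respondent types rate within `tolerance` points of one another."""
    return max(levels.values()) - min(levels.values()) <= tolerance


def grantee_score(levels):
    """Consensus level for one grantee and principle (lower median of respondents)."""
    return median_low(list(levels.values()))


def choose_scheme(coded, principle, met_threshold=4):
    """Pick a scoring scheme for one principle from cross-grantee variation.

    If grantees fall into at most two distinct levels, score them
    dichotomously (meets / does not meet); otherwise keep Likert levels.
    """
    scores = {g: grantee_score(p[principle]) for g, p in coded.items()}
    if len(set(scores.values())) <= 2:
        return "dichotomous", {g: s >= met_threshold for g, s in scores.items()}
    return "likert", scores
```

Under these assumptions, "consumer centered" splits grantees into two groups and is scored dichotomously, while "culturally relevant" shows graded progress and keeps Likert levels.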

Concept Map Generation

Information generated through the facilitated brainstorming and subsequent sorting and rating procedures for concept mapping will be entered into Concept Systems software. Multidimensional scaling and cluster analysis will be applied to generate a series of concept maps that identify the BHTCC components that stakeholders consider most important in supporting recovery. The analysis will yield themes of recovery support. The final number of general themes, or “clusters,” in the concept map (i.e., groupings of concepts related to BHTCC components that support recovery) will be determined by the evaluation team in conjunction with SAMHSA, with input and interpretation from the BHTCC grantees, taking into account the conceptual content and the average rating of each cluster. Concept maps will reduce the brainstormed ideas (i.e., those generated during the facilitated brainstorming session) to three to six groups or conceptual themes.
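Concept Systems performs the scaling and clustering internally. To make the general technique concrete, the sketch below shows one common way to do it: sort data are aggregated into a co-occurrence matrix, embedded in two dimensions with classical (metric) multidimensional scaling, and grouped with Ward's hierarchical clustering. The software's exact procedures and settings may differ. The sort piles, ratings, and the choice of two clusters are toy, hypothetical values; in practice the number of clusters would be chosen in the three-to-six range with stakeholder input.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

# Toy data (hypothetical): 6 brainstormed statements sorted into piles by 4 stakeholders.
SORTS = [
    [[0, 1, 2], [3, 4, 5]],
    [[0, 1], [2, 3], [4, 5]],
    [[0, 1, 2], [3, 4, 5]],
    [[0, 1, 2], [3, 4], [5]],
]
# Hypothetical mean importance rating for each statement.
RATINGS = np.array([4.5, 4.0, 3.5, 2.5, 3.0, 2.0])


def similarity_matrix(sorts, n_items):
    """Count how many sorters placed each pair of statements in the same pile."""
    sim = np.zeros((n_items, n_items))
    for piles in sorts:
        for pile in piles:
            for i in pile:
                for j in pile:
                    sim[i, j] += 1
    return sim


def classical_mds(dist, dims=2):
    """Classical (Torgerson) MDS: embed a distance matrix in `dims` dimensions."""
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ (dist ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(b)
    top = np.argsort(eigvals)[::-1][:dims]
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0, None))


def concept_map(sorts, ratings, n_clusters):
    """Return 2-D map coordinates, cluster labels, and mean rating per cluster."""
    n_items = len(ratings)
    # Statements sorted together less often are placed farther apart.
    dist = len(sorts) - similarity_matrix(sorts, n_items)
    coords = classical_mds(dist)
    labels = fcluster(linkage(coords, method="ward"), t=n_clusters, criterion="maxclust")
    cluster_ratings = {int(c): float(ratings[labels == c].mean()) for c in np.unique(labels)}
    return coords, labels, cluster_ratings


coords, labels, cluster_ratings = concept_map(SORTS, RATINGS, n_clusters=2)
```

The per-cluster average ratings correspond to the "average ratings of each cluster" that the paragraph above says will inform the final number of themes.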

Site-specific concept maps on priority supports for recovery will be produced in Years 2 and 3 of the evaluation. These maps will identify the most important aspects of the BHTCC that support recovery, from the varied perspectives of BHTCC stakeholders. Program sites can use these maps to refine program delivery, ensuring that key recovery supports are in place and continue to be supported, and that additional attention is given to the supports that peers and consumers find most important. In Year 4 of the evaluation, final concept maps will be developed through cross-site analysis of mapping activities to yield Keys to Recovery maps that identify, for each BHTCC role, the core BHTCC components that support recovery across grantees. Separate maps will therefore be generated for court personnel (judges, attorneys, administrators), treatment providers (case managers, service/treatment providers), and consumers (participants, peer supports, families). These maps can identify key recovery supports from the perspective of each stakeholder group and can inform SAMHSA about the resources that should be continued or added to ensure delivery models that contain these key supports.

BHTCC Consumer Outcome Substudy

To determine the outcomes of program participation among individuals with behavioral health conditions served by the BHTCC, ICF will rely on client-level data from existing performance monitoring requirements. In addition, information collected through the 18-Month Client-Level Abstraction Tool will allow assessment of long-term outcomes related to recidivism (rearrests, recommitments, and revocations). Making full use of this information will require specialized analytical techniques that take into account both the longitudinal and the hierarchical organization of the data. Furthermore, missing data, particularly those arising from attrition, are expected to require specialized treatment.

For the COS, two approaches appropriate to the analysis of longitudinal data are proposed: (1) “marginal” models using Generalized Estimating Equations (GEE), and (2) mixed-effect models (also called hierarchical or multilevel models). Both approaches are suited to repeated observations from the same individuals, which are not necessarily independent. Both can be used to test for change over time in the variables of interest (mental health issues, employment status, time in prison) while accounting for possible correlation over time within individuals. In addition, unlike paired t tests and other techniques for the analysis of “balanced” experiments, the proposed approaches can use all available information (not only cases with complete follow-up) and can account for observed differences between cases with complete and incomplete follow-up information (such as differences in demographic characteristics).

Longitudinal and Multilevel Data

The data have some basic characteristics that must be taken into account in the analysis. Essentially, a set of measures is obtained repeatedly over time from each client. In turn, clients receiving services from the same organizational entity (e.g., a mental health agency) presumably share characteristics. These features introduce correlation among observations that makes the most classical analytical approaches, such as ordinary regression or analysis of variance, inadequate.

Several alternatives exist for dealing with longitudinal correlation, in particular GEE and mixed-effect models (also called multilevel or hierarchical models). Mixed-effect models involve more realistic assumptions about patterns of missing data (see below): likelihood-based mixed models remain valid when data are missing at random, whereas standard GEE assumes data are missing completely at random. Mixed-effect models are, however, more demanding in terms of parametric assumptions. GEE, on the other hand, can be considered a semiparametric approach, in the sense that the correlation structure needs to be specified only on a “provisional” (working) basis, while inference remains robust to misspecification of that structure.

The correlation of observations among clients within the same provider organizations presents additional challenges. The limited number of grantees favors inferential approaches that treat each grantee as a stratum rather than a cluster (i.e., a unit sampled from a larger set of potential grantees). The influence of differences in client characteristics across grantees on performance can still be explored, particularly through the inclusion of grantee-level fixed effects.

Missing Data

Missing data are a pervasive issue in evaluation research, particularly in longitudinal studies, and missingness is expected to be an important issue for the longitudinal client-level information collected in the CSE. For example, the ATCC evaluation reports that 24% of participants with baseline information lacked 6-month follow-up information (Stainbrook & Hanna, 2014).
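A basic first step in handling attrition is to quantify it and check whether clients with and without follow-up differ on baseline characteristics. Observed differences of this kind can then be included as covariates in the longitudinal models. The sketch below uses a small hypothetical set of records; the field names and values are illustrative only and are not CSE or ATCC data.

```python
from statistics import mean

# Hypothetical client records: a baseline characteristic plus a flag for
# whether a 6-month follow-up record exists. Values are illustrative only.
clients = [
    {"id": 1, "age": 34, "has_6mo": True},
    {"id": 2, "age": 41, "has_6mo": True},
    {"id": 3, "age": 29, "has_6mo": False},
    {"id": 4, "age": 52, "has_6mo": True},
    {"id": 5, "age": 23, "has_6mo": False},
    {"id": 6, "age": 38, "has_6mo": True},
    {"id": 7, "age": 45, "has_6mo": True},
    {"id": 8, "age": 31, "has_6mo": True},
]


def attrition_rate(records, flag="has_6mo"):
    """Share of clients with baseline data but no follow-up at the given wave."""
    return sum(not r[flag] for r in records) / len(records)


def compare_baseline(records, var, flag="has_6mo"):
    """Mean of a baseline variable for clients with and without follow-up."""
    complete = [r[var] for r in records if r[flag]]
    incomplete = [r[var] for r in records if not r[flag]]
    return mean(complete), mean(incomplete)


rate = attrition_rate(clients)
age_complete, age_incomplete = compare_baseline(clients, "age")
```

In this toy example, clients lacking follow-up are younger on average, which is the kind of observed difference the GEE and mixed-effect models can adjust for.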