Performance Partnership Pilots for Disconnected Youth Program National Evaluation

OMB: 1290-0013

Part A: Justification for the Collection of Data for the National Evaluation of the Performance Partnership Pilots for Disconnected Youth (P3)

Evaluation of the Performance Partnership Pilots for Disconnected Youth (P3)

September 2016

Submitted to:

Office of Management and Budget

Submitted by:

Chief Evaluation Office

Office of the Assistant Secretary for Policy

United States Department of Labor

200 Constitution Avenue, NW

Washington, DC 20210

CONTENTS

Part A: Justification for the Study

A.1. Circumstances making the collection of information necessary

1. Overview of P3

2. Overview of the P3 evaluation

3. Data collection activities requiring clearance

A.2. Purposes and use of the information

1. Site visit interviews

2. Youth focus groups

3. Survey of partner managers

4. Survey of partner service providers

A.3. Use of technology to reduce burden

A.4. Efforts to avoid duplication

A.5. Methods to minimize burden on small entities

A.6. Consequences of not collecting data

A.7. Special circumstances

A.8. Federal Register announcement and consultation

1. Federal Register announcement

2. Consultation outside of the agency

A.9. Payments or gifts

A.10. Assurances of privacy

1. Privacy

2. Data security

A.11. Justification for sensitive questions

A.12. Estimates of hours burden

1. Hours by activity

2. Total estimated burden hours

A.13. Estimates of cost burden to respondents

A.14. Annualized costs to the federal government

A.15. Reasons for program changes or adjustments

A.16. Plans for tabulation and publication of results

1. Data analysis

2. Publication plan and schedule

A.17. Approval not to display the expiration date for OMB approval

A.18. Explanation of exceptions

REFERENCES

TABLES

Table A.1. Description of the nine pilots

Table A.2. Data collection activity and instruments included in the request

Table A.3. Examples of topics for site visit interviews and focus groups, by respondent

Table A.4. Annual burden estimates for data collection activities (over 36 months)

Table A.5. Monetized burden hours, over 36 months

Table A.6. Estimated annual federal costs for the National Evaluation of P3

Table A.7. Schedule for the National Evaluation of P3

FIGURES

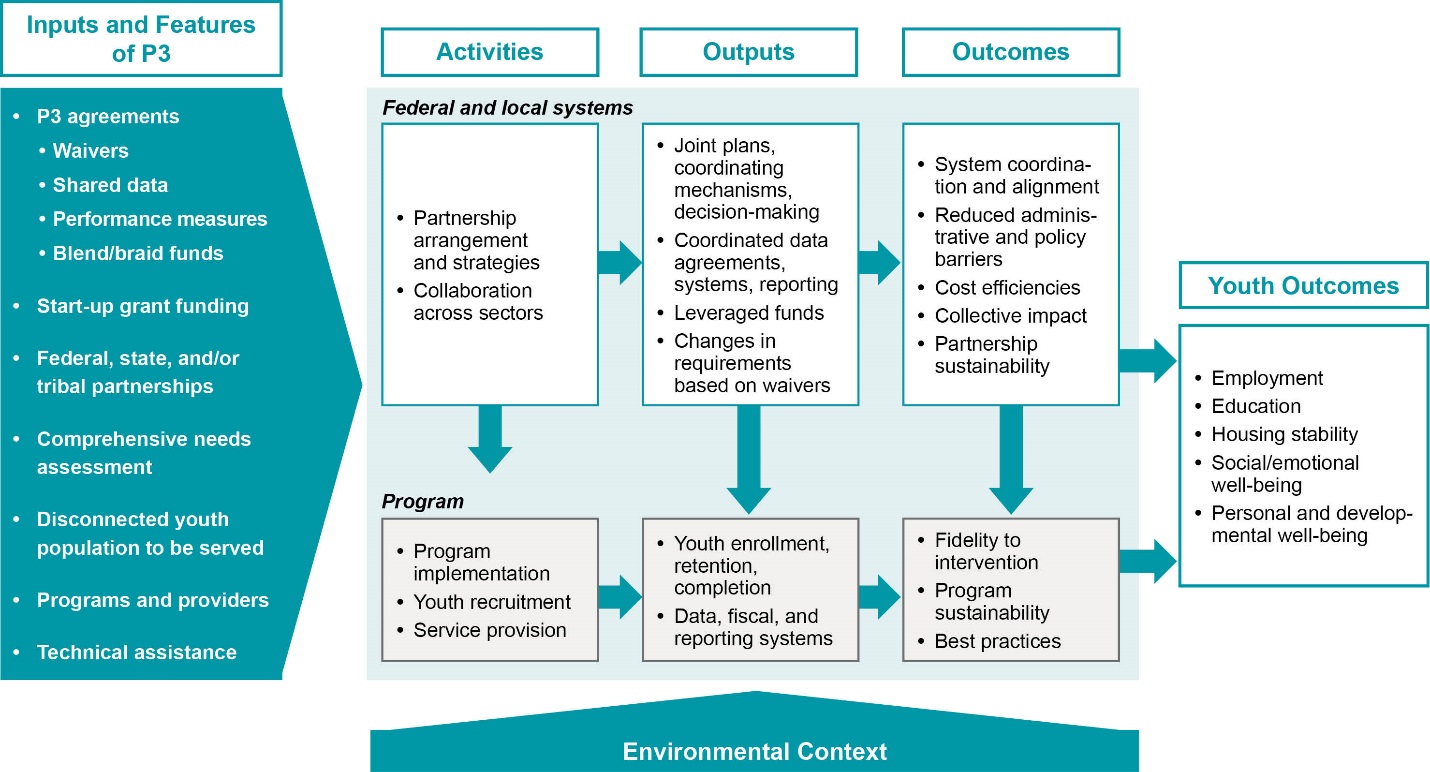

Figure A.1. P3 program model

Part A: Justification for the Study

The Chief Evaluation Office (CEO) of the U.S. Department of Labor (DOL) has contracted with Mathematica Policy Research and Social Policy Research Associates (hereafter “the study team”) to evaluate the Performance Partnership Pilots for Disconnected Youth (P3) program. In partnership, the P3 federal agencies—the Department of Education (ED), DOL, the Department of Health and Human Services (HHS), the Corporation for National and Community Service (CNCS), and the Institute of Museum and Library Services (IMLS)—awarded grants to nine P3 pilots to test innovative, cost-effective, and outcome-focused strategies for improving results for disconnected youth. This study provides information to policymakers and administrators that they can use to determine whether allowing states, localities, and Indian tribes greater flexibility to pool funds and waive programmatic requirements will help them overcome significant hurdles in providing effective services to and improving outcomes for disconnected youth. This package requests clearance for four data collection activities conducted as part of the evaluation’s implementation and systems analyses: (1) site visit interviews; (2) focus group discussions with P3 youth participants; (3) a survey of partner managers; and (4) a survey of partner service providers.

A.1. Circumstances making the collection of information necessary

It is vital that young people today develop the skills, knowledge, and behaviors to help them successfully transition to adulthood, fulfilling their potential and advancing our nation’s social and economic prospects. Many, but not all, youth will gain these skills, knowledge, and behaviors and become self-sufficient, productive members of society and families (Dion et al. 2013). Youth who need additional supports for this transition have been called “at risk” or “disconnected,” but they also have been called “opportunity youth” by the White House Council for Community Solutions (2012) when it highlighted their promise and drew attention to them as a top national priority.

Although improving the outcomes of these youth has been a priority, stakeholders have identified critical barriers to serving these youth, including multiple federally funded programs with different eligibility and reporting requirements and multiple data systems across the local network of youth providers. P3 is testing whether granting the flexibility to blend and braid discretionary program funding and seek appropriate waivers will ameliorate the barriers to effective services identified by the field and ultimately improve the outcomes of these youth (U.S. Government 2014). Through a competitive grant process, the federal agencies are awarding grantees this flexibility, starting with a cohort in fall 2015 and awarding two additional cohorts that Congress has authorized. The evaluation of the first cohort of P3 grantees represents an important opportunity to study the implementation outcomes and system changes that the pilots can achieve, as well as the pilot programs’ outcomes for and impacts on youth participants. This request focuses on data collection for the implementation and systems analyses.

1. Overview of P3

In October 2015, nine competitively awarded grantees were announced as the first P3 cohort. They received up to $700,000 in start-up funds and the flexibility to blend or braid discretionary funds from fiscal years 2014 and 2015 to improve the outcomes of disconnected youth. The first cohort grantees are located in eight states (California, Florida, Illinois, Indiana, Kentucky, Louisiana, Oklahoma, and Washington) and a federally recognized Indian tribe located in Texas. As required in the legislation authorizing P3, the grantees are serving disconnected youth, defined as low-income youth ages 14 to 24 who are either homeless, in foster care, involved in the juvenile justice system, unemployed, or not enrolled in or at risk of dropping out of school. Several grantees are serving in-school and out-of-school youth, and some are focusing on specific populations such as youth in foster care or public housing. Almost all the grantees are relying on their Workforce Innovation and Opportunity Act Title I Youth funds along with other DOL, ED, HHS, CNCS, or IMLS funds. Table A.1 provides additional information about each pilot.

Table A.1. Description of the nine pilots

| Pilot name | Location of pilot services | Anticipated number of participants | Estimated number of partners | Target population | Brief description of intervention |
|---|---|---|---|---|---|
| Baton Rouge P3 | Baton Rouge, Louisiana | 84 | 6 | 14- to 24-year-olds who are 2 or more years behind in school | Youth will develop an individual success plan. Program staff will develop training activities, and will encourage youth to participate in other training and education programs provided by partners. |
| Best Opportunities to Shine and Succeed (BOSS) | Broward County, Florida | 420 | 4 | At-risk youth in six high schools | Students will be provided a case manager. The case managers will have a 1:35 ratio of case manager to youth. The case manager will connect each participant with “evidence-based and evidence-informed” educational, employment, and personal development services that specifically address the needs of the student in regard to graduation and post-secondary success. The BOSS program will provide intensive, comprehensive, and sustained service pathways via a coordinated approach that helps youth progress seamlessly from high school to post-secondary opportunities. |
| Chicago Young Parents Program (CYPP) | Chicago, Illinois | 140 | 3 | Low-income women ages 16 to 24 with at least one child younger than six | CYPP is a parent engagement, education, and employment program that combines two successful, research-based program models: employment and mentoring for youth and high-quality comprehensive Head Start programming for children and families. All participants receive basic Head Start services plus additional mentoring, home visits, socializations, education planning, enrichment sessions, and employment. |
| Indy P3 | Indianapolis, Indiana | 80 | 8 | At-risk, low-income youth ages 14 to 24; target youth in public housing | Indy P3 will provide comprehensive, concentrated, and coordinated services for cohorts of very high-risk disconnected youth. Staff members called connectors (each serving 40 youth and families at a time) will develop individual service and success plans, link participants to core service providers, and share data across programs. Partners will emulate best practices and lessons learned from evidence-based models. |
| Los Angeles P3 (LAP3) | Los Angeles, California | 8,000 | 24 | Youth ages 16 to 24 | LAP3 is a comprehensive service delivery system that coordinates and integrates the delivery of education, workforce, and social services to disconnected youth. Partner agencies and WIOA youth contractors in the city of Los Angeles provide the program services. These are existing services: the aim of LAP3 is to enhance the availability of these services through the enhanced coordination of partner agencies. |
| P3-OKC | Oklahoma County, Oklahoma | 60-70 | 12 | Foster youth ages 14 to 21 | Youth will receive: (1) modified wraparound services more consistent with child welfare services; (2) an integrated plan of services to promote service integration and foster partnerships across nonprofit and public organizations; (3) the Check and Connect intervention designed to monitor school attendance, participation, and performance; and (4) enhanced vocational development, work, and/or career opportunities achieved through wraparound, educational options, and career aspects of students enrolled in career academies. |
| Seattle-King County Partnership to Reconnect | Seattle-King County, Washington | 200 | 3 | Youth ages 16 to 24 | The program will have three components: (1) strategic coordination of workforce development services with the state’s unique Open Doors policy, which provides K-12 funding for reengagement programs; (2) using AmeriCorps members to develop a regional outreach strategy aimed at placing the hardest-to-serve youth in programs that best reflect their interests and needs; and (3) advancing efforts toward a shared data system and common intake process that will enhance the coordination and targeting of services across Seattle-King County. |
| Southeast Kentucky Promise Zone P3 | 7 rural southeast Kentucky counties | 1,000 | 3 | At-risk youth ages 14 to 24 | The program will include a teen pregnancy prevention program, career assessments and exploration trips, academic and career mentoring and tutoring, professional development for teachers and community members, two generations of family engagement focused on youths’ parents, and paid work experience. |
| Tigua Institute of Academic and Career Development Excellence | Ysleta Del Sur Pueblo tribe (Texas) | 45-50 | 2 | Tribal youth members ages 14 to 17 enrolled in two local high schools | Youth will receive group sessions of an integrated Leadership curriculum based on nation building theory and the Pueblo Revolt Timeline, which includes the Tigua lecture series to teach youth about their history, language, and tribal government and the various services offered by the departments. Youth will also receive individually based wraparound services. |

Sources: Grantees’ presentations at annual P3 conference in June 2016, grantees’ draft evaluation plans, and grant applications.

2. Overview of the P3 evaluation

The National Evaluation of P3 includes the provision of evaluation technical assistance to the P3 grantees and their local evaluators, an outcomes analysis based on administrative data already being collected by the grantees, and systems and process analyses. These latter two analyses, as depicted in the program model (Figure A.1), will seek to determine how the pilots operate at both the systems and program levels. They are the focus of this data collection package.

At the systems level, grantees seek to establish partnerships and work on goals such as integrating data systems and procedures or seeking approaches with more established evidence of effectiveness. Ideally these activities will promote effective collaboration and produce cost efficiencies, among other outputs—or achieve the “collective impact” model of broad participation and intensive focus of resources—leading to better system coordination and alignment, more integrated data systems, fewer barriers to effective supports for disconnected youth, and greater knowledge of what works to improve youth outcomes.

At the program/participant level, pilots may implement or expand programs or services for youth based on evidence-based models, recruit participants, and engage and retain youth. The goal of these activities and outputs is improved outcomes for youth such as employment, engagement or retention in education, and well-being. Activities, outputs, and outcomes at both levels will be influenced by contextual factors, such as the local economy and community conditions, and a set of challenges and opportunities that limit or enhance their progress.

The data collection activities described in this package will provide data for a systems analysis and an implementation evaluation of the first cohort of P3 grantees. This information will address five main research questions:

How do the pilots use the financial and programmatic flexibilities offered by P3 to design and implement interventions to improve the outcomes of disconnected youth?

How has each pilot aimed to leverage the P3 flexibilities to enhance its partnerships and work across partners to provide effective services to disconnected youth? To what extent have these aims materialized?

Who are the youth who participate in P3 and what services do they receive?

What systems and programmatic changes and efficiencies resulted from P3?

What lessons can be drawn for developing integrated governance and service strategies to improve the outcomes of disconnected youth?

3. Data collection activities requiring clearance

This package requests clearance for four data collection activities conducted as part of the evaluation’s implementation and systems analyses: (1) site visit interviews; (2) focus group discussions with program participants; (3) a survey of partner managers; and (4) a survey of partner service providers. The data collection instruments associated with these activities that require Office of Management and Budget (OMB) approval are:

Site visit master protocol. In-person visits to the nine grantees will provide information on implementation of P3. The study team will conduct interviews with grantee administrators and staff, partner leaders and managers, and frontline staff. We will conduct two site visits to each pilot. The first visits, planned for early 2017, will be two-person visits led by a member of the evaluation leadership team, and the second visits, planned for around spring 2018, will be one-person visits. Depending on the scope of the pilot, each visit will last two to three days. This protocol is provided as Instrument 1.

Youth focus group discussion guide. Focus group discussions with P3 youth participants will provide important information about youths’ program experiences, views on whether the program is meeting their needs, challenges that interfere with their participation, and suggestions for program improvements. To collect this information, the study team will conduct an average of three focus groups at each of the nine pilots across the two site visits. The study team anticipates that each focus group will include an average of eight youth and will last about one hour. The youth focus group protocol is provided as Instrument 2, with Instruments 3 and 4 providing consent forms.

Partner manager survey. A survey of partner managers will provide systematic information about how partner managers perceive the P3 collaboration and the relationships across P3 partners. The site visitor will administer a short survey (about 5 minutes) to partner managers after concluding the site visit interview with the partner manager. We anticipate administering this survey to an average of 10 partner managers per grantee. We provide the survey as Instrument 5.

Partner network survey. A survey of partners will provide systematic information about the relationships across providers. The study team will administer a short survey (about 10 minutes) to direct service staff of each pilot partner. We anticipate administering this survey once, to up to 10 provider staff at each grantee. The survey will use social network analysis questions to focus on partners’ interactions with one another. The study team will field the survey in each pilot shortly after completing its first site visit. We provide the partner network survey as Instrument 6.

Table A.2 lists each instrument included in this request.

Table A.2. Data collection activity and instruments included in the request

| Data collection activity | Instrument |
|---|---|
| Site visit interviews | 1. P3 site visit master protocol |
| Youth focus groups | 2. Focus group discussion protocol |
| Survey of partner managers | 3. Partner manager survey |
| Survey of partner service providers | 4. Partner network survey |

A.2. Purposes and use of the information

The study team will use the data collected through activities described in this request to thoroughly document and analyze (1) the grantees’ local networks or systems for serving disconnected youth and how these systems changed as a result of P3; and (2) the grantees’ implementation of program services under P3. In Section A.16, Plans for tabulation and publication of results, we provide an outline of how the study team will analyze and report on all data collected.

1. Site visit interviews

The most important source of data for understanding local systems and program services will be in-person interviews with the staff of P3 grantees, partners, and service providers. During two site visits to each P3 pilot at different phases of operation, the study team will collect information from several sources. The first visit, occurring in early 2017, will focus on the pilots’ start-up efforts, including initial discussions about the potential areas for blending and braiding of funds and programmatic waivers; the work of the lead agency in managing the P3 collaboration; the planned system changes—for example, those related to partners’ sharing of customers’ information, performance agreements, and management information systems; the process for mobilizing and communicating across key partners; and early implementation of the P3 program, including successes and challenges. The second visit, planned for spring 2018, will collect information on how systems, service models, and partnerships are evolving, including the process for identifying modifications to the grants, any new waivers, or funding changes (whether they required P3 authority or not); the pilots’ cost implications; and pilots’ sustainability plans, ongoing implementation challenges, and their solutions. During both visits, we will discuss potential efficiencies created in the P3 system, the service paths for youth, and staff experiences, among other topics.

The two site visits, lasting from two to three days, will include semi-structured interviews with administrators and staff from the grantees and partners. The researchers conducting the visits will use a modular interview guide, organized by major topics that they can adapt based on the respondent’s knowledge base, to prompt discussions on topics of interest to the study.

Researchers with substantial experience conducting visits to programs serving youth in different settings, including tribal communities, will conduct all site visits. Although they are experienced, all site visitors will be trained before each site visit round to ensure they have a common understanding of the P3 program, site visit goals, the data collection instruments, and the procedures for consistent collection and documentation of the data. A senior member of the P3 study team, who also has extensive qualitative research experience related to youth programs, will lead each first-round visit. An experienced qualitative researcher will support the lead visitor on the first round and conduct the second visit.

The P3 Site Visit Master Protocol (Instrument 1) will guide these on-site activities, and Table A.3 displays the topics that the study team will address with each on-site activity.

2. Youth focus groups

Across the two visits to each pilot, the study team will conduct three focus groups with participating youth to learn about their initial interest and enrollment in P3, program experiences, views on whether the program is meeting their needs, challenges that interfere with their participation, suggestions for program improvement, and expectations for the future. We will coordinate with the lead agency to ensure that we invite and recruit a diverse set of program participants. Possible dimensions of diversity include gender, race/ethnicity, age, and length of time in the program. Even though the focus group participants will not be representative of all pilot participants, they will offer perspectives on program operations and experiences that differ from those of staff members. The study team anticipates that each focus group will include an average of eight youth and will last about one hour. We will offer a $20 incentive to encourage participation. The Youth Focus Group Protocol (Instrument 2) will guide these on-site discussions, and Table A.3 displays the topics that the study team will address with focus group participants.

Table A.3. Examples of topics for site visit interviews and focus groups, by respondent

Respondent columns: interviews with the grantee lead, pilot manager, data systems manager, partner managers, and front-line staff; focus groups of youth.

| Topic area | Subtopic | Topics of interest |
|---|---|---|
| Community context | | Local labor market conditions; Local network of organizations; Network factors affecting delivery of services to youth |
| Defining the pilots | Organizational structure/system | Lead agencies and roles; Partners and roles; Other community organizations/stakeholders |
| Defining the pilots | Program model and stage of development | Theory of change; Program model; Needs identified; Stage of development |
| Defining the pilots | Flexibility | Identified areas for flexibility; Role of state and federal governments |
| Defining the pilots | Funding | Discretionary and other funding used for P3; Braiding and blending of funds; Leveraged or other funding; Pilot’s use of start-up funds; Funding challenges |
| Defining the pilots | Waivers | Waivers requested and those approved; Requests or considerations for additional waivers |
| Partnerships, management, and communications | Partner network | Collaboration on designing P3; Prior (pre-P3) relationship; Type(s) of partnership arrangements; P3 partners’ shared vision; P3 effect on partnerships; P3 partnership effect on youth outcomes; Partnership strengths and weaknesses |
| Partnerships, management, and communications | Management and continuous program improvement | Decision-making processes; Management tools; Assessment of performance |
| Partnerships, management, and communications | Communications | Mode and frequency; P3-engendered changes; Communications strengths and weaknesses |
| The P3 program | Development of P3 program/intervention | Origins of intervention; Use of evidence-based practices; Differences in partners’ vision of program design |
| The P3 program | Staff | Structure and number; Training, cross-training; Communication across frontline staff |
| The P3 program | Youth participants | Target population; Participant characteristics; Eligibility criteria; Recruitment process; Any changes since design |
| The P3 program | Intake and enrollment | Intake/enrollment process and integration across partners; Information sharing, access, and use; P3-engendered changes to process, and challenges |
| The P3 program | Youth services | Menu of services and for which youth; Roles of partners in service delivery; Length and dosage of participation; Definition of “completion” of the program; Follow-up services |
| Data systems and sharing | Context | Existing climate for sharing data; Prior (pre-P3) data sharing and agreements; Existing challenges |
| Data systems and sharing | P3 data systems and data | Systems used to track participation and outcomes; Systems shared and how; Data agreements; Data collected on participants; Length, type, and methods of data collection; How data are shared; Follow-up data collected; Data reports generated; P3-engendered changes in systems, data, and sharing; P3-related systems and data challenges |
| Federal role and technical assistance | | Awareness of P3; Federal role and supports; Interactions with federal agencies; Interactions with other pilots; Satisfaction with assistance; P3-related interactions compared with other grant programs |
| Assessing P3 | Assessing P3’s potential for local change and innovation | Ways P3 has affected community, network; Factors hindering/facilitating innovation |
| Assessing P3 | Service delivery and systems efficiencies | Indications of improved efficiency; Factors hindering/facilitating efficiencies; Impact of P3 funding flexibility on number of youth served |
| Assessing P3 | Sustainability | Plans; Potential for sustainability |
| Assessing P3 | Perceptions of P3 and pilot’s success | Assessment of overall initiative; Perception of P3 as a governance model; Perception of youth response; Perception that pilot goals achieved |
| Assessing P3 | Lessons learned | Overall challenges and strategies to address them; Plans for applying lessons learned |


3. Survey of partner managers

The survey of partner managers will provide important systematic information about how the partner managers view collaboration within their communities. Although site visit interviews will provide important information on the P3 system and how the P3 partners have worked together from the initial application design through the implementation of the P3 pilot, the survey, which draws upon the Wilder Collaboration Factors Inventory (Wilder 2013), will provide a unique opportunity to assess key aspects of the partnership using quantitative data.

Following the conclusion of the on-site interview with a partner manager, we will request that the manager complete the short paper survey. The site visitor will strive to collect the survey while on site; but, if that is not feasible, he or she will provide a pre-addressed, pre-stamped envelope for the respondent to return the completed survey. With this in-person contact, we will seek a response rate of 100 percent.

4. Survey of partner service providers

Following the first site visit, we will administer by email a short (about 10 minutes) survey to staff of partners who provide direct services to the P3 youth participants (we estimate up to 10 respondents per pilot). Drawing on prior network surveys Mathematica has conducted, the survey will ask about partner interactions with one another, enabling us to obtain more systematic and discrete information about partner relationships than we could obtain through site visit interviews alone. During the first site visit, we will introduce the survey, talk with grantee and partner staff about each partner’s appropriate respondent for the survey, and encourage the partners’ participation when they receive the emailed survey. We will seek a response rate of 90 percent or higher by relying on support from the grantee, developing relationships with pilot staff during the first visit and grantee conferences, and encouraging survey completion during on-site interviews.

A.3. Use of technology to reduce burden

The National Evaluation of P3 will use multiple methods to collect study information and, when feasible and appropriate, will use technology to reduce the burden of the data collection activities on providers of the data.

Site visits. Site visit interviews have relatively low burden, and the qualitative data to be collected do not benefit from technology, other than digitally recording interviews upon approval. We will avoid unnecessary data collection burden by covering only topics not available from other sources.

Youth focus group. The study team will conduct youth focus group discussions in person without the use of information technology, other than digital recordings.

Partner manager survey. The site visit team will administer the survey on-site without the use of information technology.

Partner network survey. We will distribute the partner network survey by email. It does not contain or request sensitive or personally identifiable information (PII). Given the instrument’s brevity and the fact that it does not request or contain PII, using a PDF document attached to email is the least burdensome and most accessible means of collecting the data. Partner respondents can open the PDF attachment to the introductory email, enter their responses, and return the email to the sender with the completed document attached. They can do so at a convenient time and not be held to a scheduled appointment, as would be the case if data collection took place by phone or in person. The survey will ask each partner staff member the same three questions about the other partners at that grantee.

The use of email enables self-administration of the P3 partner network survey, as well as tracking of completed surveys. We will use partner contact information, gathered during the P3 site visit, to distribute the survey to the partner staff identified as respondents for the survey. We will preload the full list of partners into the PDF document to obtain a response that relates to each partner. The PDF will allow respondents to enter responses (only check marks or Xs are necessary) but prevent them from revising any other text or information in the questionnaire. The survey does not contain complex skip patterns, and respondents will be able to view the question matrix with each possible category of response (across the top) and the full range of partners (down the side) on one sheet. This approach is commonly used for network analysis data collection to help respondents consider their levels of connectivity with all partners of the network and assess their relationships using a common set of considerations regarding the question of interest. The approach can only be used when the network is known ahead of time and the number of partners is relatively small, and it has the added advantage of facilitating data entry and analysis in that respondents provide information about all partners in the network. If respondents are not able to complete the survey in one sitting, they may save the document and return to it at another time, further reducing the burden on the respondent.
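To illustrate why a roster-style question matrix eases data entry and analysis, the following is a minimal sketch in Python of how responses to one network question might be tabulated. It is not the study team's analysis code. The partner names, the 0-3 rating scale, and the tie threshold are all hypothetical assumptions made for illustration; the survey instrument's actual response categories are not reproduced here.

```python
from typing import Dict, List, Optional

# Hypothetical partner roster, standing in for the list preloaded into the form.
PARTNERS: List[str] = ["Grantee", "Partner A", "Partner B", "Partner C"]

# Hypothetical answers to one question: respondent -> {other partner: rating}.
# The 0-3 scale (0 = no contact, 3 = frequent contact) is an assumption.
RESPONSES: Dict[str, Dict[str, int]] = {
    "Grantee": {"Partner A": 3, "Partner B": 2, "Partner C": 1},
    "Partner A": {"Grantee": 3, "Partner B": 0, "Partner C": 0},
    "Partner B": {"Grantee": 2, "Partner A": 1, "Partner C": 0},
    # Partner C did not return the survey in this made-up example.
}

Matrix = List[List[Optional[int]]]


def build_matrix(partners: List[str], responses: Dict[str, Dict[str, int]]) -> Matrix:
    """Rows are respondents, columns are the partners they rated.

    Self-ratings and answers from nonrespondents are stored as None.
    """
    matrix: Matrix = []
    for rater in partners:
        answers = responses.get(rater)
        row: List[Optional[int]] = []
        for rated in partners:
            if rater == rated or answers is None:
                row.append(None)
            else:
                row.append(answers.get(rated))
        matrix.append(row)
    return matrix


def density(matrix: Matrix, threshold: int = 1) -> float:
    """Share of reported ratings that meet the (assumed) tie threshold."""
    reported = [v for row in matrix for v in row if v is not None]
    if not reported:
        return 0.0
    return sum(1 for v in reported if v >= threshold) / len(reported)


def in_degree(partners: List[str], matrix: Matrix, threshold: int = 1) -> Dict[str, int]:
    """Number of respondents who report a tie to each partner."""
    counts = {p: 0 for p in partners}
    for row in matrix:
        for j, value in enumerate(row):
            if value is not None and value >= threshold:
                counts[partners[j]] += 1
    return counts


if __name__ == "__main__":
    m = build_matrix(PARTNERS, RESPONSES)
    print(round(density(m), 3))
    print(in_degree(PARTNERS, m))
```

Because every respondent rates the same roster, each returned form fills one row of the matrix, and missing surveys show up as empty rows rather than as ambiguous gaps.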

A.4. Efforts to avoid duplication

The data the study team is collecting from the site visits, youth focus groups, and partner network survey for the National Evaluation of P3 are not otherwise available from existing sources. We will conduct interviews with the same staff during the two site visits, but the questions will not be the same. The first visit will focus on pilots’ planning, partnership building, and early program implementation. The second visit will focus on understanding any changes that have occurred in the system since the first visit and program service levels, challenges and successes, and plans for sustainability. We will not ask youth participants to participate in more than one focus group, and we will conduct the partner network survey only once following the first site visits. We will conduct the partner manager survey in both rounds of site visits in order to track any changes in the P3 partnership.

A.5. Methods to minimize burden on small entities

We have developed the instruments to minimize burden and collect only critical evaluation information.

A.6. Consequences of not collecting data

The federal government is intent on learning how P3 has helped local communities overcome barriers to effectively serve disconnected youth and improve youths’ outcomes. Without the information collected as part of the study, federal policymakers will not have an analysis of how P3 has affected local systems for serving disconnected youth and P3-engendered programmatic changes to improve the outcomes of disconnected youth. Information collected will be important for informing future rounds of P3 as well as other federal initiatives granting administrative flexibilities to grantees of discretionary funding.

A.7. Special circumstances

No special circumstances apply to this data collection. In all respects, we will collect the data in a manner consistent with federal guidelines. No plans require respondents to report information more often than quarterly, submit more than one original and two copies of any document, retain records, or submit proprietary trade secrets.

A.8. Federal Register announcement and consultation

1. Federal Register announcement

The 60-day notice (81 FR 31664) to solicit public comments was published in the Federal Register on May 19, 2016. No comments were received.

2. Consultation outside of the agency

Consultations on the research design, sample design, and data needs were part of the study design phase of the National Evaluation of P3. These consultations ensured the technical soundness of study sample selection and the relevance of study findings and verified the importance, relevance, and accessibility of the information sought in the study.

Mathematica Policy Research

Jeanne Bellotti

Cay Bradley

Karen Needels

Linda Rosenberg

Social Policy Research Associates

Andrew Wiegand

A.9. Payments or gifts

The study team plans to offer gift cards to youth participating in the focus groups as part of the data collection activities described in this clearance request. Each youth participant will receive a $20 gift card in appreciation of his or her contributions toward the research. Previous research has shown that sample members with certain socioeconomic characteristics, particularly those with low incomes and/or low educational attainment, are responsive to incentives (Duffer et al. 1994; Educational Testing Service 1991). We will not offer site visit interview respondents and partner network survey respondents any payments or gifts because their participation can be considered part of their regular work responsibilities, given the grant.

A.10. Assurances of privacy

We are conducting the National Evaluation of P3 in accordance with all relevant regulations and requirements, including the Privacy Act of 1974 (5 U.S.C. 552a); the Privacy Act Regulations (34 CFR Part 5b); and the Freedom of Information Act (5 U.S.C. 552) and related regulations (41 CFR Part 1-1, 45 CFR Part 5b, and 40 CFR 44502). Before they participate in a focus group, we will ask youth 18 or older for their consent to participate. For potential participants who are younger than 18, we will ask for their parent’s or guardian’s consent for their child to participate and then collect the youths’ assent to participate before proceeding with the focus group.

We will notify all interview respondents that the information they provide is private, that all data reported in project reports will be de-identified, and that the study team will carefully safeguard study data. All study team site visitors and interviewers will receive training in privacy and data security procedures.

1. Privacy

Site visits and youth focus groups. No reports shall identify P3 sites and youth focus group participants, nor will the study team share interview notes with DOL or anyone outside of the team, except as otherwise required by law. Site visit interviews and focus groups will take place in private areas, such as offices or conference rooms. At the start of each interview, the study team will read the following statement to assure respondents of privacy and ask for their verbal consent to participate in the interview:

Everything that you say will be kept strictly private within the study team. The study report will include a list of the P3 grantees and their partners. All interview data, however, will be reported in the aggregate and, in our reports, we will not otherwise identify a specific person, grantee, or partner agency. We might identify a pilot by name or a type of organization or staff position if we identify a promising practice.

This discussion should take about <duration> minutes. Do you have any questions before we begin? Do you consent to participate in this discussion?

<If recording interview>: I would like to record our discussion so I can listen to it later when I write up my notes. No one outside the immediate team will listen to the recording. We will destroy the recording after the study is complete. If you want to say something that you do not want recorded, please let me know and I will be glad to pause the recorder. Do you have any objections to being part of this interview or to my recording our discussion?

This statement is available at the top of the P3 Site Visit Master Protocol (Instrument 1).

We will ask youth recruited for and participating in focus groups to sign a consent form. If the youth is younger than 18, we will ask program staff to collect parent/guardian consent before allowing the minor to participate. The consent forms are attached (Instruments 3 and 4). At the start of the focus group, the facilitator will also indicate that the comments will be kept private:

To help us better understand how [PROGRAM NAME] is working, we would like to ask you some questions about how you came to participate in it and your experiences. This discussion will be kept private. We will not share any information you provide with staff from [PROGRAM NAME]. In addition, our reports will never identify you by name. Instead, we will combine information from this discussion with information from discussion groups in other programs. Participants’ comments will be reported as, “One person felt that. . .” or “About half of the participants did not agree with…”

I hope you will feel free to talk with us about your experiences. I ask that none of you share what you hear with others outside the group. It will also help me if you speak clearly and if you will speak one at a time. The discussion should last about one hour.

I’d like to record the discussion so we don’t have to take detailed notes and can listen carefully to what you are saying. The recording is just to help me remember what you say. No one outside of the research team will have access to the tape. Are there any objections?

Let’s get started. [HIT THE RECORD BUTTON].

I have hit the record button. Any objections to recording this discussion?

This statement is available at the top of the P3 Youth Focus Group Protocol (Instrument 2).

Partner manager survey. We will administer the survey so that we maintain respondents’ privacy. The introduction to the survey contains the following statement: “All of your responses will remain private and will not be shared with anybody from outside the evaluation team; nobody from the grantee, the community partners, or federal partners will see your responses.” This statement is available at the beginning of the survey (Instrument 5).

However, for the study team’s analysis of partners’ perceptions of collaboration and how it changes over time, it will be important to identify the respondent and his or her partner organization. Thus, prior to the site visit, the survey team will generate identification numbers for each partner manager and affix labels with the number onto the survey handed to the respondent. The site visitor will be responsible for handing the appropriate survey to each partner manager. We will further protect respondents’ privacy by providing them with a pre-addressed, pre-stamped envelope to return the survey in the event that they are unable to hand it directly to the site visitor upon completion.

Partner network survey. No reports shall identify the survey respondents. The survey instrument will request only the name of the organization and the respondent’s job title and responsibilities. All other data items that identify survey respondents—respondent name and contact information—will be stored in a restricted file that only the study team can access. As the study team is not requesting respondent names as part of the survey, analysis files will also not contain respondent names. The introduction to the partner network survey contains the following statement assuring respondents of privacy: “Your name and responses will be kept private to the extent of the law. Findings from the survey will be reported in aggregate form only so that no person can be identified.” This statement is available at the beginning of the survey (Instrument 6).

To further remove any connection between individuals and their partner network survey responses, we will save each completed survey immediately upon receipt in a secure project folder on Mathematica’s restricted network drives. The saved survey will indicate only the organizational affiliation of the respondent and the P3 partner. We will then delete the survey document from the return email to prevent it from being backed up on the email servers.

2. Data security

Mathematica’s security staff and the study team will work together to ensure that all data collected as part of the study are handled securely. This includes data collected during site visits (interviews, interview recordings, focus groups, and partner manager surveys) and through the partner network survey. As a frequent user of data obtained from and on behalf of federal agencies, Mathematica has adopted federal standards for the use, protection, processing, and storage of data. These safeguards are consistent with the Privacy Act, the Federal Information Security Management Act, OMB Circular A-130, and National Institute of Standards and Technology security standards. Mathematica strictly controls access to information on a need-to-know basis. Data are encrypted in transit and at rest using Federal Information Processing Standard 140-2-compliant cryptographic modules. Mathematica will retain the data collected on the National Evaluation of P3 for the duration of the study. We will completely purge data processed for the National Evaluation of P3 from all data storage components of the computer facility in accordance with instructions from DOL. Until this takes place, Mathematica will certify that any data remaining in any storage component will be safeguarded to prevent unauthorized disclosure.

A.11. Justification for sensitive questions

The instruments associated with the National Evaluation of P3 do not contain questions of a sensitive or personal nature. We will not request any personal information from respondents interviewed during site visits, other than the number of years served in their current employment position (interviews) or their age (focus groups). The interviews focus on respondents’ knowledge, experiences, and impressions of P3. Nonetheless, we will inform respondents that they do not have to respond to any questions they do not feel comfortable answering.

A.12. Estimates of hours burden

1. Hours by activity

Table A.4 provides the annual burden estimates for each of the four data collection activities for which this package requests clearance. All of the activities will take place over 36 months. Total annual burden is 194.5 hours, or about 195 hours.

Site visits. Interviews with P3 administrators/managers and frontline staff will last, on average, 1.25 hours. Most will be one-on-one interviews, but we anticipate that some of the frontline-staff interviews will be with small groups of two to three staff. We estimate the maximum total hours for P3 data collection at the nine sites will be 337.5, which includes 168.75 hours for each of the two rounds of site visits (9 sites × 15 respondents × 1.25 hours per interview).

Youth focus groups. We expect to conduct an average of three one-hour focus group discussions with youth participants at each of the nine P3 sites across the two rounds of site visits. We expect that eight youth will attend each focus group. Thus, we estimate the total maximum reporting burden for the youth focus groups will be 216 hours (9 sites × 3 focus groups per pilot × 8 respondents in each group × 1 hour per group discussion).

Partner manager survey. We expect to conduct the survey with approximately 90 partner managers (9 P3 sites with an average of 10 partner managers per site). The survey will take an average of 5 minutes (0.08 hours) for the partner manager to complete. We will administer the survey in each round of site visits.

The total estimated reporting burden for the P3 partner managers participating in the survey is 15 hours (9 P3 sites × 10 partners × 5 minutes, or about 0.08 hours, per survey × 2 survey rounds).

Partner network survey. We expect to conduct the survey with approximately 90 partners (9 P3 sites with up to 10 partner staff per site). We expect the survey will take about 10 minutes (0.17 hours) to complete, on average, per respondent. We will stagger survey administration, enabling us to test administration procedures in one pilot before administering to all sites.

The total estimated reporting burden for the P3 partners participating in the survey is 15 hours (9 P3 sites × 10 partners × 10 minutes, or about 0.17 hours, per survey × 1 survey round).

Table A.4. Annual burden estimates for data collection activities (over 36 months)

| Respondents | Total number of respondents over evaluation | Number of responses per respondent | Annual number of responses | Average burden time per response | Total burden hours over evaluation | Annual burden hours |
| Site visit interviews | | | | | | |
| Administrators and staff | 135 | 2 | 90 | 1.25 hours | 337.50 | 112.5 |
| Focus group discussions | | | | | | |
| Youth | 216 | 1 | 72 | 1 hour | 216 | 72.0 |
| Partner manager survey | | | | | | |
| Partner managers | 90 | 2 | 60 | 5 minutes | 15 | 5.0 |
| Partner network survey | | | | | | |
| Frontline staff | 90 | 1 | 30 | 10 minutes | 15 | 5.0 |
| Total | 531 | -- | 252 | -- | 583.5 | 194.5 |

2. Total estimated burden hours

The total estimated maximum hours of burden for the data collection included in this request for clearance is 584 hours (see Table A.4), which equals the sum of the estimated burden for the semi-structured interviews, youth focus groups, the partner manager survey, and the partner network survey (337.5 + 216 + 15 + 15 = 583.5, rounded to 584).
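The burden arithmetic in Table A.4 can be reproduced with a short script. The following is a minimal sketch, not part of the study's tooling; the class and variable names are illustrative. Per-response times are entered in minutes (75, 60, 5, and 10) so that the 5- and 10-minute surveys are not affected by rounding to 0.08 and 0.17 hours.

```python
from dataclasses import dataclass

STUDY_YEARS = 3  # data collection spans 36 months


@dataclass(frozen=True)
class Activity:
    """One respondent row of Table A.4 (field names are illustrative)."""
    name: str
    respondents: int
    responses_per_respondent: int
    minutes_per_response: int

    @property
    def total_responses(self) -> int:
        return self.respondents * self.responses_per_respondent

    @property
    def total_hours(self) -> float:
        return self.total_responses * self.minutes_per_response / 60


ACTIVITIES = [
    Activity("Site visit interviews", 135, 2, 75),
    Activity("Youth focus groups", 216, 1, 60),
    Activity("Partner manager survey", 90, 2, 5),
    Activity("Partner network survey", 90, 1, 10),
]

# Totals over the evaluation and per year
total_hours = sum(a.total_hours for a in ACTIVITIES)
annual_hours = total_hours / STUDY_YEARS
annual_responses = sum(a.total_responses for a in ACTIVITIES) / STUDY_YEARS

for a in ACTIVITIES:
    print(f"{a.name}: {a.total_hours:.1f} hours total, "
          f"{a.total_hours / STUDY_YEARS:.1f} hours per year")
print(f"Total: {total_hours:.1f} hours, {annual_hours:.1f} hours per year")
```

Working in minutes keeps each row exact; the totals match the unrounded figures in the table (583.5 hours over the evaluation and 194.5 hours per year).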

The total monetized burden estimate for this data collection is $10,784 (Table A.5). The average hourly wage of social and community service managers, taken from the U.S. Bureau of Labor Statistics, National Compensation Survey, 2015 (http://www.bls.gov/ncs/ncspubs_2015.htm), is $33.38. Therefore, the maximum cost estimate for grantee and partner managers to participate in interviews is $4,506.30 (135 hours × $33.38). The cost for partner managers to participate in the partner manager survey is $500.70 (15 hours × $33.38), or $501 when rounded. The average hourly wage of miscellaneous community and social service specialists, taken from the U.S. Bureau of Labor Statistics, National Compensation Survey, 2015, is $19.36. Therefore, the cost estimate for frontline staff from across different grantees and partners to participate in site visit interviews is $3,920.40 (202.5 hours × $19.36). The cost for frontline staff to participate in the partner network survey is $290.40 (15 hours × $19.36). We assume that the cost of youth participation is the federal minimum wage ($7.25 per hour), for a cost of $1,566 (216 hours × $7.25).

Table A.5. Monetized burden hours, over 36 months

| Respondents | Total maximum burden (hours) | Type of respondent | Estimated hourly wages | Total indirect cost burden | Annual monetized burden hours |
| Semi-structured interviews | | | | | |
| Grantee and partner managers | 135 | Manager | $33.38 | $4,506 | $1,502 |
| Frontline staff | 202.5 | Frontline staff | $19.36 | $3,920 | $1,307 |
| Subtotal | 337.50 | -- | -- | $8,427 | $2,809 |
| Youth focus groups | | | | | |
| Youth | 216 | Youth | $7.25 | $1,566 | $522 |
| Partner manager survey | | | | | |
| Partner managers | 15 | Partner manager | $33.38 | $501 | $167 |
| Partner network survey | | | | | |
| Frontline staff | 15 | Partner staff | $19.36 | $290 | $97 |
| Total | 583.5 | -- | -- | $10,784 | $3,595 |
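The monetized burden in Table A.5 is each group's burden hours multiplied by its assumed hourly wage. The sketch below recomputes those products with decimal arithmetic; the row labels, the `dollars` helper, and the choice to round halves up are illustrative assumptions, not something the source specifies.

```python
from decimal import Decimal, ROUND_HALF_UP

STUDY_YEARS = 3  # the table covers 36 months

# (respondent group, burden hours, hourly wage) as listed in Table A.5
ROWS = [
    ("Grantee and partner managers, interviews", "135", "33.38"),
    ("Frontline staff, interviews", "202.5", "19.36"),
    ("Youth, focus groups", "216", "7.25"),
    ("Partner managers, survey", "15", "33.38"),
    ("Frontline staff, network survey", "15", "19.36"),
]


def dollars(amount: Decimal) -> Decimal:
    """Round to whole dollars (assumed convention: halves round up)."""
    return amount.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


# Cost per group = hours x wage, kept unrounded until display
costs = {name: Decimal(hours) * Decimal(wage) for name, hours, wage in ROWS}
total_cost = sum(costs.values(), Decimal("0"))
annual_cost = total_cost / STUDY_YEARS

for name, cost in costs.items():
    print(f"{name}: ${dollars(cost):,}")
print(f"Total: ${dollars(total_cost):,}; annual: ${dollars(annual_cost):,}")
```

Rounding only at the display step avoids accumulating rounding differences across rows.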

A.13. Estimates of cost burden to respondents

There will be no direct costs to respondents for the National Evaluation of P3.

A.14. Annualized costs to the federal government

DOL, like most other federal agencies, uses contracts with firms that have proven experience with program evaluation to conduct all evaluation activities. Federal employees will rely on contract staff to perform the majority of the work described in this package, and have no direct role in conducting site visit discussions or focus groups, developing study protocols or designs, the direct collection of data using these instruments, or the analysis or production of reports using these data. The role of federal staff is almost entirely restricted to managing these projects. The costs incurred by contractors to perform these activities are essentially direct federal contract costs associated with conducting site visits, discussions, and focus groups.

This estimate of federal costs is a combination of (1) direct contract costs for planning and conducting this research and evaluation project, including any necessary information collection and (2) salary associated with federal oversight and project management.

Estimates of direct contract costs. There are two categories of direct costs to the federal government associated with conducting this project. These costs are routine and typical for studies such as this. The first category is design and planning, including external review of the design by a technical working group of outside subject matter experts, and development of instruments. This work is estimated to cost $677,850. The second category is data collection and reporting, which will occur through the project period, and is estimated to cost $2,237,216. The total estimated direct costs are:

$677,850 (design) + $2,237,216 (data collection and reporting) = $2,915,066

Although this project is expected to last five years, the annualized direct contract cost will vary considerably from year to year because the tasks are focused on specific periods in the project life cycle. The design and planning costs are front-loaded, the data collection costs will be incurred throughout the project, and the analysis and reporting costs will occur close to the end of the project. As a very basic estimate, the total estimated direct costs can be divided by the five years of the study to produce an estimate of the average annualized cost (see Table A.6):

$2,915,066 / 5 years of study = $583,013 per year in estimated direct contract costs.

Estimates of federal oversight and project management costs. Staff in the Office of the Chief Evaluation Officer have regular duties and responsibilities for initiating, overseeing, and administering contracts to perform research and evaluation on behalf of agency programs and offices. In the event that OMB approves this information collection request, federal staff would need to perform certain functions that, although clearly part of their normal duties, would be directly attributable to this specific research and evaluation project. For purposes of calculating federal salary costs, DOL assumes:

A Senior Evaluation Specialist, GS-15, step 2, based in the Office of the Chief Evaluation Officer in Washington, D.C., who would earn $63.42 per hour to perform this work, and would spend approximately one-eighth of his or her annual time (2,080 hours / 8 = 260 hours) on this project. Total estimated annual federal costs for this individual are 260 hours × $63.42/hour = $16,489.20.

A Senior Evaluation Specialist, GS-14, step 2, based in the Office of the Chief Evaluation Officer in Washington, D.C., who would earn $53.91 per hour to perform this work, and would spend approximately one-fourth of his or her annual time (2,080 hours / 4 = 520 hours) on this project. Total estimated annual federal costs for this individual are 520 hours × $53.91/hour = $28,033.20.

Table A.6. Estimated annual federal costs for the National Evaluation of P3

| Estimates of annual federal costs | |
| Direct contracts costs | $583,013 |
| Federal oversight and management | |
| 1 GS-15 (1/8 time) | $16,489 |
| 1 GS-14 (1/4 time) | $28,033 |
| Subtotal for federal oversight and management | $44,522 |
| Total annual cost | $627,535 |

Note: Federal staff costs are drawn from the most current available estimates of wages and salaries available at https://www.opm.gov/policy-data-oversight/pay-leave/salaries-wages/salary-tables/16Tables/html/DCB_h.aspx.
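The figures in Table A.6 follow from the contract totals and the staffing assumptions stated above. The sketch below is a hedged reconstruction of that arithmetic; the variable names and the `dollars` rounding helper are illustrative assumptions. It sums the whole-dollar row values, which is how the table total of $627,535 appears to have been derived.

```python
from decimal import Decimal, ROUND_HALF_UP

PROJECT_YEARS = 5
FULL_TIME_HOURS = 2080  # hours in a full-time work year


def dollars(amount: Decimal) -> Decimal:
    """Round to whole dollars (assumed convention: halves round up)."""
    return amount.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


# Direct contract costs, averaged over the project period
design_cost = Decimal("677850")
collection_and_reporting_cost = Decimal("2237216")
direct_annual = (design_cost + collection_and_reporting_cost) / PROJECT_YEARS

# Federal oversight: (grade, hourly rate, fraction of year as 1/divisor)
STAFF = [
    ("GS-15, step 2", Decimal("63.42"), 8),
    ("GS-14, step 2", Decimal("53.91"), 4),
]
staff_costs = {
    grade: rate * (FULL_TIME_HOURS // divisor)
    for grade, rate, divisor in STAFF
}
oversight_annual = sum(staff_costs.values(), Decimal("0"))

# Table total: sum of the whole-dollar row values
table_total = dollars(direct_annual) + sum(
    (dollars(c) for c in staff_costs.values()), Decimal("0")
)

print(f"Direct contract costs per year: ${dollars(direct_annual):,}")
print(f"Federal oversight per year: ${dollars(oversight_annual):,}")
print(f"Total annual cost: ${table_total:,}")
```

Summing the unrounded components instead gives $627,535.60, so the total depends slightly on when rounding is applied.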

A.15. Reasons for program changes or adjustments

This is a new submission. There is no request for program changes or adjustments.

A.16. Plans for tabulation and publication of results

1. Data analysis

The National Evaluation of P3 will use the rich information collected from all sources to describe the P3 grantees’ systems and models for delivering services to disconnected youth. The analysis plan consists of a mixed-method approach with three steps: (1) organize the qualitative data from the site visits and focus groups; (2) identify themes and patterns in the data within and across grantees; and (3) conduct a network analysis using data from the partner network survey.

Organize the qualitative data. Analyzing qualitative data is inherently challenging because it requires combining information from different sources, a great deal of which is unstructured. Our first strategy to manage the volume of data will be to develop structured templates and checklists for site visitors to distill the information they collect during site visit interviews and focus groups. Through these templates, site visitors will respond to specific questions and avoid long narratives on particular topics of interest. Our second strategy will be to lay an analytic foundation by organizing the data from the site visits and focus groups using qualitative data analysis software, such as NVivo.

Identify themes and patterns in the data. A critical part of the analytic approach will be to draw on multiple sources, including different respondents within a P3 pilot, and interview and programmatic data, to triangulate the data. Both agreements and discrepancies in respondents’ responses or across data sources can provide useful information on pilots’ implementation experiences and their successes and challenges. Within each pilot, we will analyze information from interviews on the effects P3 has had on its system or network for providing services to disconnected youth and how the provision of services to these youth has changed. After the study team has analyzed and organized all of the site visit data across all pilots, it will examine the data across the pilots to look for similarities in system changes and models of organization, service delivery, or other characteristics.

Analyze the partner manager survey data. The survey will explore the quality of the P3 partnerships from the partner manager perspective. We will tabulate the responses of the survey by pilot and also explore responses by partner types, for example, public and private partners, to analyze differences between them. We also will conduct simple tabulations and analyses to analyze changes in collaboration between the first and second site visits.

Conduct a network analysis using data from the partner network survey. The survey will explore the structure and strength of the networks that P3 grantees created to serve disconnected youth by assessing a number of specific characteristics of each grantee network. The survey will gather information about the frequency of communication and the change in communication over time, and the helpfulness of various partners in serving disconnected youth. The study team will not request respondents’ names on the survey instrument, only organization names. Further, although the study team will conduct the analysis separately for each P3 grantee, individual partners will not be identified in the presentation of findings. Instead, we will discuss partner networks by types of partners, not specific partner entities. In this way, results from the partner network survey will not reveal identities of any respondents.

The study team will use two primary measures to describe and depict service delivery networks within and across the P3 grantees: density (interconnectedness) and centrality (prominence). Density is the proportion of possible relationships that are actually present and measures the extent to which each partner is connected with all others across the network as a whole. Centrality examines the prominence of individual entities within the network by identifying the partner entities that are most sought after (indegree centrality). The study team will examine the measures of prominence for specific partners within the select networks across the two measures for comparison. We expect that we may find differences in the network interconnectedness and centrality of partners based on any communication and based specifically on changes in communication.
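The two measures defined above can be illustrated on a small directed network. This is a minimal sketch under stated assumptions: the four partners and their ties are hypothetical, a tie from X to Y means X reported contact with Y, self-ties are excluded, and indegree centrality is normalized by the number of other partners (a common convention that the source does not specify).

```python
from typing import Dict, Set

# Hypothetical reported ties: respondent partner -> partners it contacts
TIES: Dict[str, Set[str]] = {
    "Partner A": {"Partner B", "Partner C"},
    "Partner B": {"Partner C"},
    "Partner C": {"Partner A"},
    "Partner D": {"Partner C"},
}


def density(ties: Dict[str, Set[str]]) -> float:
    """Share of possible directed ties (n * (n - 1)) that are present."""
    n = len(ties)
    possible = n * (n - 1)
    present = sum(len(targets) for targets in ties.values())
    return present / possible


def indegree_centrality(ties: Dict[str, Set[str]]) -> Dict[str, float]:
    """Incoming ties per partner, divided by the number of other partners."""
    n = len(ties)
    incoming = {partner: 0 for partner in ties}
    for targets in ties.values():
        for target in targets:
            incoming[target] += 1
    return {partner: count / (n - 1) for partner, count in incoming.items()}


print(f"Density: {density(TIES):.3f}")
for partner, score in indegree_centrality(TIES).items():
    print(f"{partner}: indegree centrality {score:.2f}")
```

In this hypothetical network, 5 of the 12 possible ties are present, and Partner C is the most sought-after partner because all three other partners report contact with it.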

Using sociograms, the study team will illustrate the patterns in the size of partner networks, the strength of the relationships across partners, and the direction of partnerships. These sociograms will depict the density and centrality of P3 networks based on (1) contact frequency and (2) change in contact frequency since becoming involved in P3.

In addition to sociograms, the study will produce tables that present network-level characteristics such as overall density and centralization (measures discussed above). The study team will also present figures of helpfulness ratings in the P3 pilots, illustrating the centrality of specific partners in each network.

2. Publication plan and schedule

We will present findings from the evaluation in interim and final reports. Table A.7 shows the schedule for the study.

Table A.7. Schedule for the National Evaluation of P3

| Activity | Date |
| Conduct round 1 data collection (site visit interviews and focus groups, partner manager survey, partner network survey) | January – March 2017 |
| Interim report | Fall 2017 |
| Conduct round 2 data collection (site visit interviews, focus groups, and partner manager survey) | March – May 2018 |
| Interim report | Winter 2018 |
| Final report | December 2020 |

A.17. Approval not to display the expiration date for OMB approval

The OMB approval number and expiration date will be displayed or cited on all forms completed as part of the data collection.

A.18. Explanation of exceptions

No exceptions are necessary for this information collection.

REFERENCES

Dion, R., M.C. Bradley, A. Gothro, M. Bardos, J. Lansing, M. Stagner, and A. Dworsky. “Advancing the Self-Sufficiency and Well-Being of At-Risk Youth: A Conceptual Framework.” OPRE Report 2013-13. Washington, DC: U.S. Department of Health and Human Services, Office of Planning, Research and Evaluation, Administration for Children and Families, 2013.

U.S. Government. “Consultation Paper. Changing the Odds for Disconnected Youth: Initial Design Considerations for the Performance Partnership Pilots.” Washington, DC: U.S. Government, 2014.

White House Council for Community Solutions. “Final Report: Community Solutions for Opportunity Youth.” 2012. Available at http://www.serve.gov/sites/default/files/ctools/12_0604whccs_finalreport.pdf. Accessed February 8, 2016.

Wilder Foundation. “Wilder Collaboration Factors Inventory.” 2013. Available at https://www.wilder.org/Wilder-Research/Research-Services/Documents/Wilder%20Collaboration%20Factors%20Inventory.pdf. Accessed February 9, 2016.

