Program for the International Assessment of Adult Competencies (PIAAC) 2017 National Supplement

OMB: 1850-0870

Supporting Statement Part A

Submitted by:

National Center for Education Statistics

U.S. Department of Education

Institute of Education Sciences

Washington, DC

July 2016

Updated April 2017

Table of Contents

A. Justification

A.1 Importance of Information

A.2 Purposes and Uses of the Data

A.3 Improved Information Technology (Reduction of Burden)

A.4 Efforts to Identify Duplication

A.5 Minimizing Burden for Small Entities

A.6 Frequency of Data Collection

A.8 Consultation outside NCES

A.9 Payments or Gifts to Respondents

A.10 Assurance of Confidentiality

A.13 Total Annual Cost Burden

A.14 Annualized Cost to Federal Government

A.15 Program Changes or Adjustments

A.16 Plans for Tabulation and Publication

Part B: Collections of Information Employing Statistical Methods

Appendix A: PIAAC 2017 National Supplement Screener

Appendix B: PIAAC 2017 National Supplement Background Questionnaire

Appendix C: PIAAC 2017 National Supplement Revisions to the Screener and Background Questionnaire

Appendix D: PIAAC 2017 National Supplement Core Tasks

[Unchanged since PIAAC 2014 approval in June 2013 (OMB# 1850-0870 v.4)]

Appendix E: PIAAC 2017 National Supplement Contact Letters and Brochure

Appendix F: PIAAC Reducing Nonresponse Bias and Preliminary Nonresponse Bias Analysis

[Unchanged since PIAAC 2014 approval in June 2013 (OMB# 1850-0870 v.4)]

Preface

The Program for the International Assessment of Adult Competencies (PIAAC) is a cyclical, large-scale study of adult skills and life experiences focusing on education and employment. PIAAC is designed internationally to assess adults in different countries over a broad range of abilities, from simple reading to complex problem-solving skills, and to collect information on individuals’ skill use and background. In the United States, PIAAC is conducted by the National Center for Education Statistics (NCES), within the U.S. Department of Education (ED), and NCES has contracted with Westat to administer the PIAAC 2017 data collection in the U.S. PIAAC defines four core competency domains of adult cognitive skills that are seen as key to facilitating the social and economic participation of adults in advanced economies: literacy, reading components, numeracy, and problem solving in technology-rich environments. All participating countries are required to assess the literacy and numeracy domains, but the reading components and problem solving in technology-rich environments domains are optional. The U.S. administers the PIAAC assessment in all four domains to a nationally representative sample of adults, along with survey questions about their educational background, work history, the skills they use on the job and at home, their civic engagement, and their sense of health and well-being. The results are used to compare participating countries on the skills capacities of their workforce-aged adults and to learn more about relationships between educational background, employment, and other outcomes.

PIAAC is coordinated by the Organization for Economic Cooperation and Development (OECD) and developed by participating countries with the support of the OECD. Twenty-four participating countries, including the U.S., completed the first international cycle of the PIAAC Main Study in 2012. A new set of nine countries completed it in 2014, and another new set of five countries will complete it in 2017 (Table 1). In addition to participating in the PIAAC Main Study data collection in 2012, the U.S. conducted a national supplement in 2014, and this request is to conduct a 2017 PIAAC National Supplement data collection.

Table 1. PIAAC data collection efforts

| Data Collection Name | International Cycle & Round | Time Period | # of Countries | U.S. Participation |
|---|---|---|---|---|
| PIAAC 2012 Main Study | Cycle 1 – 1st round | 2011-2012 | 24 | Yes |
| PIAAC 2014 Main Study | Cycle 1 – 2nd round | 2013-2014 | 9 | No |
| PIAAC 2014 National Supplement | n/a | 2013-2014 | 0 | U.S. Only |
| PIAAC 2017 Main Study | Cycle 1 – 3rd round | 2017 | 5 | No |
| PIAAC 2017 National Supplement | n/a | 2017 | 0 | U.S. Only |
| PIAAC 2022 | Cycle 2 – 1st round | 2021-2022 | TBD | Yes |

In the PIAAC 2012 Main Study (August 2011-April 2012), the U.S. sample included a nationally representative sample of 5,010 adults (ages 16-65) in 80 primary sampling units (PSUs), and the survey components included a screener, an in-person background questionnaire, and an assessment. In the PIAAC 2014 National Supplement (August 2013-May 2014), the U.S. sample included 3,660 new adults in the same 80 PSUs as in PIAAC 2012, using the same background questionnaire and assessment but a different screener to identify adults from four targeted (i.e., oversampled) subgroups of interest [unemployed adults (ages 16-65), young adults (ages 16-34), older adults (ages 66-74), and adults in prison (ages 16-74)]. The PIAAC 2017 National Supplement (February-September 2017) will be the third PIAAC data collection completed in the U.S. and will include a nationally representative sample of 3,800 new adults (ages 16-74) in a new sample of 80 PSUs, using the PIAAC 2012 Main Study screener, an enhanced version of the PIAAC 2012 Main Study background questionnaire, and the same assessment used during the prior two data collections. The PIAAC 2017 National Supplement will not oversample any subgroups and will not include adults in prison.

As noted above, PIAAC is a multi-cycle collaboration between the governments of participating countries, the Organization for Economic Cooperation and Development (OECD), and a consortium of various international organizations, referred to as the PIAAC Consortium [coordinated by the Educational Testing Service (ETS) and including: the German Institute for International Educational Research (DIPF); the German Social Sciences Infrastructure Services’ Centre for Survey Research and Methodology (GESIS-ZUMA); the University of Maastricht; the U.S. firm Westat; the International Association for the Evaluation of Educational Achievement (IEA); and the Belgian firm cApStAn].

An important element of PIAAC is its collaborative and international nature. Within the U.S., NCES collaborates on PIAAC with the U.S. Department of Labor (DoL). Staff from NCES and DoL serve as the U.S. co-representatives on PIAAC's international governing body, and NCES has consulted extensively with DoL, particularly on development of the job skills section of the background questionnaire. Internationally, expert panels, researchers, the PIAAC Consortium’s support staff, and representatives of the participating countries (from both Ministries or Departments of Education and Labor) work through an extensive series of international meetings and workgroups, assisted by OECD staff, to collaboratively develop the PIAAC framework for the assessment and background questionnaire, develop the common standards and data collection procedures, and guide the development of the software platform for uniformly administering the assessment on laptops. All countries must follow the common standards and procedures and use the same software when conducting the survey and assessment. As a result, PIAAC is able to provide a reliable and comparable measure of skills in the adult population across the participating countries. PIAAC is wholly a product of international and inter-department collaboration and, as such, represents compromises on the part of all participants.

This submission, in addition to the supporting statements (Parts A and B), includes the following appendices:

Appendix A – the PIAAC 2017 National Supplement screener – mostly unchanged from that used during the PIAAC 2012 Main Study (OMB# 1850-0870 v.2-3, approved in March 2011). PIAAC 2017 National Supplement will not oversample any subpopulations and thus will not utilize the PIAAC 2014 National Supplement screener (OMB# 1850-0870 v.4).

Appendix B – the PIAAC 2017 National Supplement Background Questionnaire – mostly unchanged from that used during the PIAAC 2014 National Supplement (OMB# 1850-0870 v.4, approved in June 2013).

Appendix C describes the revisions made to the PIAAC 2017 National Supplement screener and background questionnaire as compared to the previous rounds of PIAAC.

Appendix D – the PIAAC 2017 core tasks – unchanged from those used during the PIAAC 2012 Main Study and the PIAAC 2014 National Supplement (OMB# 1850-0870 v.2-4).

Appendix E – PIAAC 2017 respondent communication materials – unchanged from those that were used to contact households during the PIAAC 2014 National Supplement (omitting materials specific to the prison sample).

Appendix F – the OECD and PIAAC Consortium guidance regarding nonresponse bias (NRB) – unchanged from that which was used in the PIAAC 2014 National Supplement (OMB# 1850-0870 v.4); it discusses the issue of bias in PIAAC outcome statistics, the survey goals to reduce NRB, and preliminary plans for conducting NRB analysis.

A. Justification

A.1 Importance of Information

Over the past two decades, there has been growing interest by national governments and other stakeholders in an international assessment of adult skills to monitor how well populations are prepared for the challenges of a knowledge-based society. In the mid-1990s, the International Adult Literacy Survey (IALS) assessed the prose, document, and quantitative literacy of adults in 22 countries or territories, including the U.S. Between 2002 and 2006, the Adult Literacy and Lifeskills (ALL) Survey assessed prose and document literacy, numeracy, and problem-solving in ten countries, including the U.S., and one Mexican state. PIAAC builds on knowledge and experiences gained from IALS and ALL. The majority of its literacy and numeracy assessment items came from IALS and ALL. However, PIAAC extends beyond the previous adult assessments through the addition of the problem solving in technology-rich environments component, designed to measure information processing skills in a digital context. The PIAAC 2012 Main Study aimed to address the growing need to collect more sophisticated information to better match the needs of governments to develop a high-quality workforce capable of solving problems and dealing with complex information that is often presented electronically on computers. The PIAAC 2014 National Supplement provided an additional sample of U.S. adults that, when combined with the PIAAC 2012 Main Study data, enhanced the U.S. PIAAC sample and supported analyses of key U.S. subgroups, including young adults (ages 16-34), unemployed adults (ages 16-65), older adults (ages 66-74), and adults in prison (ages 16-74).

The PIAAC 2017 National Supplement will use the same instruments and procedures that were used during the administration of the PIAAC 2012 Main Study and the PIAAC 2014 National Supplement. By conducting PIAAC, NCES is able to provide policy-relevant data for international comparisons of the U.S. adult population’s competencies and skills, and help inform decision-making on the part of national, state, and local policymakers, especially those concerned with economic development and workforce training.

A.2 Purposes and Uses of the Data

The core objectives of the PIAAC 2017 National Supplement are to collect data from a nationally representative sample of U.S. adults ages 16 to 74 and to provide additional sample so that, when combined with the 2012 and 2014 samples, the data can support indirect, county-level estimates for the U.S. The previous U.S. PIAAC data collections featured large enough sample sizes to provide reasonably precise standard survey "direct" estimates of literacy levels for the nation's adults and for major population groups of interest, such as those defined by gender and age. However, the sample sizes in some states and in jurisdictions within states, such as counties, were not large enough to produce direct estimates of adequate precision (some larger states may have sufficient sample sizes, but the survey design did not support state-level estimation). Indeed, some states and most counties in the nation had no sample in the surveys. Nevertheless, policymakers, business leaders, and educators/researchers often need literacy information for states and counties.

The PIAAC 2017 National Supplement data set will be used to:

Provide a better understanding of the relationship of education and employment with adult skills by providing a nationally representative, mid-decade update of U.S. adult skills;

Provide additional sample for 66- to 74-year-olds, who were not included in the PIAAC 2012 Main Study;

Improve the representativeness of the U.S. sample by providing indirect county-level estimates for the U.S. when combined with the 2012 and 2014 samples. The county-level estimates are expected to be at about the same level of accuracy as the county-level estimates derived from the 2003 National Assessment of Adult Literacy (NAAL) survey;

Produce U.S. national estimates of proficiency in literacy, numeracy, and problem solving from the 2017 national sample and provide an additional set of U.S. data points for evaluating trends in proficiency as compared to estimates from the 2012/2014 combined sample and earlier adult literacy surveys. These data will also be comparable to the OECD international average and country-specific estimates produced as the result of all PIAAC Cycle 1 data collection rounds; and

Produce model-based estimates through small area estimation (SAE) methods using the data collected from all U.S. PIAAC data collection efforts (2012/2014/2017).
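To illustrate what an indirect, model-based estimate involves, the sketch below shows a generic area-level composite (shrinkage) estimator of the kind commonly used in small area estimation. It is an illustration only: this statement does not specify the SAE model that will be applied to the PIAAC data, and the function name, the variance inputs, and the example numbers are all hypothetical.

```python
def composite_estimate(direct, direct_var, synthetic, model_var):
    """Blend a direct survey estimate with a model-based prediction.

    direct     -- direct estimate for the area, or None if the area has no sample
    direct_var -- sampling variance of the direct estimate (unused if direct is None)
    synthetic  -- model prediction for the area based on auxiliary data
    model_var  -- between-area model variance (assumed known in this sketch)
    """
    if direct is None:
        # Areas with no sampled adults rely entirely on the model prediction.
        return synthetic
    # The weight on the direct estimate grows as its sampling variance shrinks.
    gamma = model_var / (model_var + direct_var)
    return gamma * direct + (1.0 - gamma) * synthetic


# Hypothetical values, for illustration only (not PIAAC results).
well_sampled = composite_estimate(0.20, 0.0001, 0.15, 0.0009)  # stays near the direct estimate
thin_sample = composite_estimate(0.20, 0.0081, 0.15, 0.0009)   # pulled toward the model
unsampled = composite_estimate(None, None, 0.15, 0.0009)       # model prediction only
```

In this form, a county with many sampled adults keeps an estimate close to its direct value, while a county with few or no sampled adults borrows strength from the model.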

Additionally, information from the PIAAC 2017 National Supplement will be used by:

Federal policymakers and Congress to (a) better understand the distribution of skills within and across segments of the population and (b) be able to plan Federal policies and interventions that are most effective in developing key skills among various subgroups of the adult population;

State and local officials to plan and develop education and training policies targeted to those segments of the population in need of skill development;

News media to provide more detailed information to the public about the distribution of skills within the U.S. adult population, in general, and the U.S. workforce more specifically; and

Business and educational organizations to better understand the skills of the U.S. labor force and to properly invest in skills development among key segments of the workforce to address skill gaps.

PIAAC 2017 National Supplement Components

The primary focus of the PIAAC 2017 National Supplement background questionnaire (BQ) and assessment is to measure a broad range of abilities, from simple reading to complex problem-solving skills, and to collect information on individuals’ skill use and background. PIAAC focuses on four core competency domains of adult cognitive skills that are seen as key to facilitating the social and economic participation of adults in advanced economies: literacy, reading components, numeracy, and problem solving in technology-rich environments.

Background Questionnaire (BQ)

The PIAAC BQ is meant to identify (a) what skills participants regularly use in their job and in their home environment, (b) how participants acquire those skills, and (c) how those skills are distributed throughout the population. To obtain this information, the BQ asks participants about their education and training; present and past work experience; the skills they use at work; their use of specific literacy, numeracy, and information and computer technology (ICT) skills at work and home; personal traits; and health and other background information. The BQ is administered as a computer-assisted personal interview (CAPI) by the household interviewer, on a study laptop, and takes approximately 45 minutes to complete.

For the PIAAC 2017 National Supplement, the PIAAC 2012 and 2014 BQ will be used, with a few questions added to obtain information about total household income, military service, underemployment, holding a GED, and professional certifications or state/industry licenses (see Appendices B and C).

Assessment

The PIAAC direct assessment evaluates the skills of adults in three fundamental domains: literacy, reading components, and numeracy. These domains are considered to constitute “key” information processing skills in that they provide a foundation for the development of other higher-order cognitive skills and are prerequisites for gaining access to and understanding specific domains of knowledge. The assessment also measures skills in problem solving in technology-rich environments among the adults who take the assessment on the computer.

The assessment was designed as a computer-based assessment; however, a paper version, which tests skills in the domains of literacy and numeracy only, is administered to respondents who indicate in the BQ that they have little or no familiarity with computers. Respondents with computer familiarity are initially routed to the computer-based assessment.

One of the unique aspects of PIAAC is the adaptive design of the computer-based assessment within the domains of literacy and numeracy that allows respondents to be directed to a set of easier or more difficult items based on their answers to a set of core items (Appendix D), which are automatically scored as correct or incorrect. The computer-based core items are designed to assess the respondent’s ability to complete the domain-based items and to assess his or her basic computer skills (e.g., the respondent’s capacity to use the mouse to highlight text). As a result, some respondents may begin with the computer-based assessment but at the completion of the computer-based core items be routed to the paper-and-pencil assessment.

In the adaptive design, the choice of assessment items for each participant depends upon an algorithm using a set of variables that include: i) the participant's level of education; ii) the participant's status as a native or non-native language speaker; and iii) the participant's performance on the computer-based assessment core tasks and on the computer-based items as he or she advances through the assessment. The problem-solving domain does not have an adaptive design. The key advantage of an adaptive design is that it provides a more accurate assessment of participants' abilities while using a smaller number of items than a traditional test design, in which respondents must answer all test items, including all of the easiest and the most difficult items.

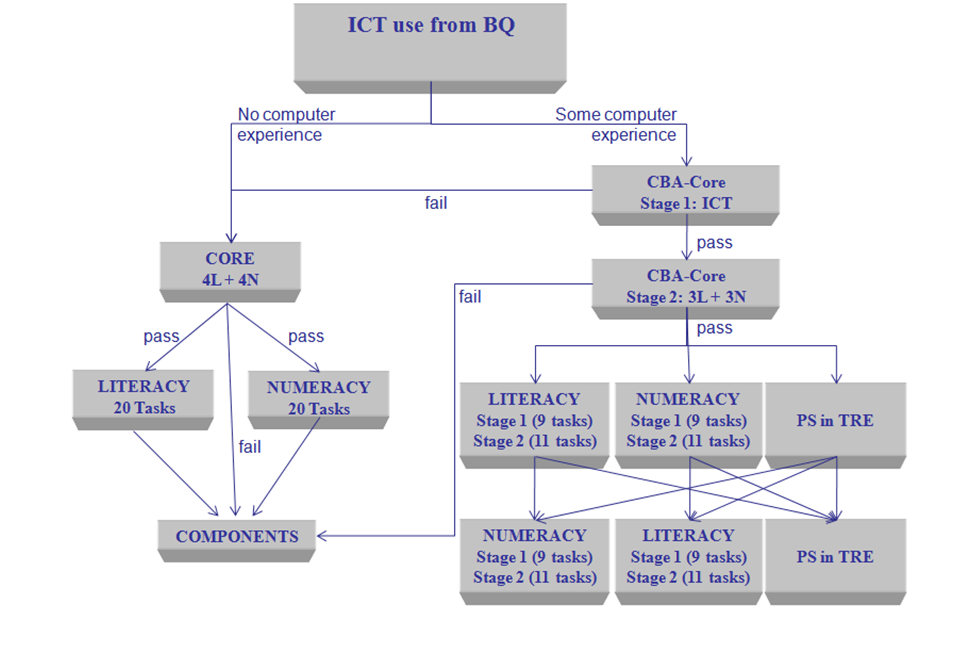

At the completion of the BQ, the participant is administered the computer-based core or, if the participant cannot or will not use the computer, the paper-and-pencil based core. Upon the completion and scoring of the core tasks, the respondent is routed to the computer-based assessment (CBA), the paper-based assessment (PBA) of literacy and numeracy, or the paper-based reading components. Routing through the assessment is controlled by the respondent’s performance on various assessment items. Figure 1 demonstrates the various paths through the assessment.

Figure 1. The PIAAC 2017 National Supplement assessment paths
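The routing just described can be summarized as a small decision function. The following is a minimal sketch under stated assumptions, not the PIAAC platform's actual routing logic: the function name and the pass/fail flags are hypothetical, the scoring thresholds that determine "passing" a core are not given in this statement, and the sketch assumes that a respondent who does not pass the paper-based core proceeds to the paper-based reading components.

```python
def route_after_core(uses_computer, passed_computer_core=False, passed_paper_core=False):
    """Return the assessment path that follows the core tasks (illustrative only)."""
    if uses_computer and passed_computer_core:
        # Computer core completed successfully: continue on the laptop.
        return "computer-based assessment (CBA)"
    # Respondents who cannot or will not use the computer, or who do not pass
    # the computer-based core, are handled on paper (assumption: via the paper core).
    if passed_paper_core:
        return "paper-based assessment (PBA) of literacy and numeracy"
    # Assumption: not passing the paper core leads to the reading components.
    return "paper-based reading components"


# Example paths for three hypothetical respondents.
path_a = route_after_core(uses_computer=True, passed_computer_core=True)
path_b = route_after_core(uses_computer=True, passed_computer_core=False, passed_paper_core=True)
path_c = route_after_core(uses_computer=False, passed_paper_core=False)
```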

A.3 Improved Information Technology (Reduction of Burden)

Technology is a large component of the PIAAC 2017 National Supplement. The screener (Appendix A) and the BQ (Appendix B) are CAPI administered. The interviewer will read the items aloud to the respondent from the screen on a laptop computer and will record all responses on the computer. The use of a computer for these questionnaires allows for automated skip patterns to be programmed into the database as well as data to be entered directly into the database for analyses. In addition, for the screener, the computer will run a sampling algorithm to determine who, if anyone, in the household is eligible to participate in the study, and it will select a respondent or respondents for the BQ and the assessment.
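As a rough illustration of what such a screener selection step might look like, the sketch below filters household members to the 16-74 age range stated in this document and then draws a random selection. The actual PIAAC sampling algorithm is not described here, so the equal-probability draw, the `max_selected` parameter, and the household record layout are hypothetical assumptions.

```python
import random


def select_respondents(household, min_age=16, max_age=74, max_selected=1, rng=None):
    """Pick respondents from a household roster (illustrative sketch only).

    household    -- list of dicts with an "age" key (hypothetical record layout)
    max_selected -- hypothetical cap on the number of people selected
    rng          -- optional random.Random instance, for reproducible draws
    """
    rng = rng or random.Random()
    # Keep only members in the target age range; the result may be empty.
    eligible = [person for person in household if min_age <= person["age"] <= max_age]
    # Draw without replacement, never asking for more people than are eligible.
    return rng.sample(eligible, min(max_selected, len(eligible)))


# A hypothetical household: only the 40-year-old is age-eligible.
roster = [{"name": "A", "age": 12}, {"name": "B", "age": 40}, {"name": "C", "age": 80}]
selected = select_respondents(roster, rng=random.Random(0))
```

A household with no age-eligible members yields an empty selection, which corresponds to the "if anyone" case in the paragraph above.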

Although PIAAC is designed to be an adaptive, computer-administered assessment, not all sampled adults may be able to use a computer. Most respondents in the PIAAC 2017 National Supplement will complete the assessment via computer. For those who are routed to complete the assessment on paper, an automated interviewer guide, containing prompts to be read aloud to the respondent, will assist the interviewer in administering the assessment.

Additionally, the Field Management System (FMS) used in PIAAC in 2012 and 2014 will be used for the PIAAC 2017 National Supplement study. The FMS comprises three basic modules: the Supervisor Management System, the Interviewer Management System, and the Home Office Management System. The Supervisor Management System will be used to manage data collection and case assignments and to produce productivity reports. The Interviewer Management System allows interviewers to administer the automated instruments, manage case status, transmit data, and maintain information on the cases. The Home Office Management System supports the packaging and shipping of cases to the field, shipping of booklets for scoring, report production, processing of cases, receipt control, and the receipt and processing of automated data, and it is integrated with processes for editing and analysis.

Based on our previous experience with PIAAC 2012 and 2014, it is estimated that approximately 97% of all responses collected across all of the PIAAC instruments, including the screener, background questionnaire, core tasks, and the assessment, will be collected electronically.

A.4 Efforts to Identify Duplication

None of the previous adult literacy assessments conducted in the U.S., including ALL and NAAL, has used computer-based assessments to measure adult skills. Moreover, PIAAC is the first study in the U.S. to incorporate a technology component among the skills being measured. The international nature of the study will allow comparisons of the prevalence of these skills in the U.S. versus other PIAAC participating countries.

In total, in the U.S., 8,670 adults participated in PIAAC in 2012 and 2014, which is not enough respondents to produce accurate estimates at the state or county level. Thus, in the U.S., PIAAC results can currently be reported only at the national level. The target sample of 3,800 respondents in the PIAAC 2017 National Supplement has been designed to obtain sufficient coverage of different types of counties such that, when combined with the PIAAC 2012 Main Study and the PIAAC 2014 National Supplement samples (about 12,470 adults in total), it can produce indirect small area estimates for U.S. counties and states. The last county-level estimates available were those derived from the 2003 National Assessment of Adult Literacy (NAAL).
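The sample totals cited in this section follow directly from the per-collection counts reported in the Preface. The short check below reproduces that arithmetic; the 2017 figure is a target rather than an achieved count, and the variable names are illustrative.

```python
# Respondent counts stated in this document; the 2017 figure is a target.
samples = {
    "PIAAC 2012 Main Study": 5010,
    "PIAAC 2014 National Supplement": 3660,
    "PIAAC 2017 National Supplement (target)": 3800,
}

# Adults who participated in 2012 and 2014 combined.
prior_total = samples["PIAAC 2012 Main Study"] + samples["PIAAC 2014 National Supplement"]

# Combined sample available for small area estimation if the 2017 target is met.
combined_total = sum(samples.values())

print(prior_total, combined_total)
```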

Content

PIAAC is a "literacy" assessment, designed to measure performance in certain skill areas at a broader level than school curricula, encompassing a broader set of skills that adults have acquired throughout life. The skills that are measured in PIAAC differ from those measured by other studies such as the Trends in International Mathematics and Science Study (TIMSS) and the Progress in International Reading Literacy Study (PIRLS), which are curriculum-based and designed to assess what students have been taught in school in specific subjects (such as science, mathematics, or reading) using multiple-choice and open-ended test questions. Another international assessment, the Program for International Student Assessment (PISA), assesses literacy, functional skills, and other broad learning outcomes and is designed to measure what 15-year-olds have learned inside and outside of school throughout their lives. Besides its different target age group, PISA measures skills at a much higher and more academic level than PIAAC, with PIAAC being more focused on the extensive range of skills needed in both everyday life and in the workforce. PIAAC contains tasks not included in the school-based assessments, and assesses a range of skills from a very basic level to the higher workplace-level skills that adults will encounter in everyday life.

PIAAC has also improved and expanded on the cognitive frameworks of previous large-scale adult literacy assessments (including the National Adult Literacy Survey (NALS), NAAL, IALS, and ALL) and includes an assessment of problem solving via computer, which was not a component of these earlier surveys. The most significant difference between PIAAC and previous large-scale assessments is that PIAAC is administered on laptop computers and is designed to be a computer-adaptive assessment, so respondents receive groups of items targeted to their performance levels (with respondents unable or unwilling to take the assessment on computer being provided with an equivalent paper-and-pencil booklet). Because of these differences, PIAAC introduced a new set of scales to measure adult literacy, numeracy, and problem solving. Scales from IALS and ALL have been mapped to the PIAAC scales so that trends in performance can be measured over time.

Adult Household Sample

As an international assessment of adult competencies, PIAAC differs from student assessments in several ways. PIAAC assesses adults across a wide range of ages (16-74), irrespective of their schooling background, whereas student assessments target a specific age (e.g., 15-year-olds in the case of PISA) or grade (e.g., grade 4 in PIRLS) and are designed to evaluate the effects of schooling. PIAAC is administered in individuals' homes, whereas international student assessments such as PIRLS, PISA, and TIMSS are conducted in schools.

Information collected

The kind of information PIAAC collects also reflects a policy purpose different from that of the other assessments. PIAAC provides policy-relevant data for international comparisons of the U.S. adult population’s competencies and skills and provides vital data to national, state, and local policymakers focused on economic development and adult workforce training.

A.5 Minimizing Burden for Small Entities

The PIAAC 2017 National Supplement will collect information from the 16-74 year-old population through households only. No small entities will be contacted to participate in the PIAAC data collection.

A.6 Frequency of Data Collection

PIAAC is a cyclical study that is intended to be updated internationally every 10 years. The first round of PIAAC was the PIAAC 2012 Main Study. The second round of PIAAC data collection in the U.S. was the PIAAC 2014 National Supplement and was completed only in the U.S. to provide data on special subgroups of interest. The PIAAC 2017 National Supplement will be the third round of U.S. data collection and will provide a mid-decade, nationally representative update as well as additional information to support indirect state- and county-level estimates of adult proficiency levels. The PIAAC 2017 National Supplement data collection will allow nationally representative estimates in the U.S. to be measured every five years (the next OECD cycle, Cycle 2, of PIAAC data collection is planned for 2022).

A.7 Special Circumstances

The special circumstances identified in the Instructions for Supporting Statement do not apply to this study.

A.8 Consultation outside NCES

PIAAC, like the other international studies conducted by NCES, is developed as a cooperative enterprise involving all participating countries. PIAAC was developed under the auspices of the OECD by a consortium of organizations. The following are the key persons from these organizations who are involved in the design, development, and operation of PIAAC:

Andreas Schleicher, Indicators and Analysis Division, Organization for Economic Cooperation and Development, 2, rue André Pascal, 75775 Paris Cedex 16, FRANCE

Irwin Kirsch, Project Director for PIAAC Consortium, Educational Testing Service (ETS) Corporate Headquarters, 660 Rosedale Road, Princeton, NJ 08541

Jacquie Hogan, Project Director, Westat, 1600 Research Boulevard, Rockville, Maryland 20850-3129

A.9 Payments or Gifts to Respondents

In recent years, in-person household-based survey response rates have been declining. Research indicates that incentives play an important role in gaining respondent cooperation. To meet the PIAAC 2017 National Supplement response rate goals, based on the PIAAC 2010 Field Test incentive experiment, and as was done in PIAAC 2012 and 2014, sampled respondents will be offered $50 to thank them for their time and effort spent participating in PIAAC (including completing the background questionnaire, the Core Task, and the Assessment).

A.10 Assurance of Confidentiality

Data security and confidentiality protection procedures have been put in place for PIAAC to ensure that Westat and its subcontractors comply with all privacy requirements, including:

The statement of work of this contract;

Privacy Act of 1974 (5 U.S.C. §552a);

Family Educational Rights and Privacy Act (FERPA) of 1974 (20 U.S.C. §1232g);

Privacy Act Regulations (34 CFR Part 5b);

Computer Security Act of 1987;

USA PATRIOT Act of 2001 (P.L. 107-56);

Education Sciences Reform Act of 2002 (ESRA 2002, 20 U.S.C. §9573);

Confidential Information Protection and Statistical Efficiency Act of 2002;

E-Government Act of 2002, Title V, Subtitle A;

Cybersecurity Enhancement Act of 2015 (6 U.S.C. §151);

The U.S. Department of Education General Handbook for Information Technology Security General Support Systems and Major Applications Inventory Procedures (March 2005);

The U.S. Department of Education Incident Handling Procedures (February 2009);

The U.S. Department of Education, ACS Directive OM: 5-101, Contractor Employee Personnel Security Screenings;

NCES Statistical Standards; and

All new legislation that impacts the data collected through the inter-agency agreement for this study.

Furthermore, Westat will comply with the Department’s IT security policy requirements as set forth in the Handbook for Information Assurance Security Policy and related procedures and guidance, as well as IT security requirements in the Federal Information Security Management Act (FISMA), Federal Information Processing Standards (FIPS) publications, Office of Management and Budget (OMB) Circulars, and the National Institute of Standards and Technology (NIST) standards and guidance. All data products and publications will also adhere to the revised NCES Statistical Standards, as described at the website: http://nces.ed.gov/statprog/2012/.

All study personnel will sign Westat and PIAAC confidentiality agreements, and notarized NCES nondisclosure affidavits will be obtained from all personnel who will have access to individual identifiers. The procedures for satisfying the confidentiality requirements for the PIAAC 2017 National Supplement have been approved by the Institute of Education Sciences (IES) Disclosure Review Board (DRB). PIAAC Consortium organizations, including ETS and Westat, will follow the same procedures used in PIAAC 2012 and 2014 to support the data delivery, cleaning, analysis, scaling, and estimation. NCES worked closely with the DRB and the PIAAC Consortium organizations to map out the details of the disclosure analysis plan for the PIAAC 2012 Main Study and the PIAAC 2014 National Supplement. The same disclosure analysis plan that was approved for 2012 and 2014 will be used in 2017.

The physical and/or electronic transfer of personally identifiable information (PII), particularly first names and addresses, will be limited to the extent necessary to perform project requirements. This limitation includes both internal transfers (e.g., transfer of information between agents of Westat, including subcontractors and/or field workers) and external transfers (e.g., transfers between Westat and NCES, or between Westat and another government agency or private entity assisting in data collection). For PIAAC, the only transfer of PII outside of Westat facilities is the automated transmission of case reassignments and completed cases between Westat and its field interviewing staff. The transmission of this information is secure, using approved methods of encryption. All field interviewer laptops are encrypted using full-disk encryption in compliance with FIPS 140-2 to preclude disclosure of PII should a laptop be lost or stolen. Westat will not transfer PIAAC files (whether or not they contain PII or direct identifiers) of any type to any external entity without the express, advance approval of NCES.

In accordance with NCES Data Confidentiality and Security Requirements, Westat will deliver hard-copy assessment booklets to Pearson, the scoring subcontractor, for scoring in a manner that protects this information from disclosure or loss. These hard-copy data will not include any PII. Specifically, for electronic files, direct identifiers will not be included (a Westat-assigned study identifier will be used to uniquely identify cases), and these files will be encrypted according to NCES standards. If these are transferred on media, such as CD or DVD, they will be encrypted in compliance with FIPS 140-2.

All PIAAC data files constructed to conduct the study will be maintained in secure network areas at Westat and will be subject to Westat’s regularly scheduled backup process, with backups stored in secure facilities on and off site. These data will be stored and maintained in secure network and database locations where access is limited to the specifically authorized Westat staff who are assigned to the project and have completed the NCES Affidavit of Non-disclosure. Identifiers will be maintained in files required to conduct survey operations that will be physically separate from other research data and accessible only to sworn agency and contractor personnel. Westat will deliver data files, accompanying software, and documentation to NCES at the end of the study. Neither respondents’ names nor addresses will be included in those data files.

The following protocols are also part of the approved plan: (1) training personnel regarding the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses; (2) controlling and protecting access to computer files under the control of a single database manager; (3) building in safeguards concerning status monitoring and receipt control systems; and (4) having a secured and operator-manned in-house computing facility.

The laws pertaining to the collection and use of personally identifiable information will be clearly communicated to participants in correspondence and prior to administering the PIAAC BQ and assessment:

An introductory study letter and brochure will be provided to households. These materials will describe the study and its voluntary nature and will convey the extent to which respondents and their responses will be kept confidential; they will include the following statement:

The National Center for Education Statistics is authorized to conduct this study under the Education Sciences Reform Act of 2002 (ESRA 2002, 20 U.S.C. §9543). All of the information you provide may only be used for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law (20 U.S.C. §9573 and 6 U.S.C. §151). Individuals are never identified in any reports. All reported statistics refer to the U.S. as a whole or to national subgroups.

Prior to administering the BQ, the household interviewer will review the study brochure and the confidentiality language cited above. In addition, the following statement is the first item in the BQ and will be read by the interviewer to the respondent before proceeding to administer the BQ and assessment.

The National Center for Education Statistics is authorized to conduct this study under the Education Sciences Reform Act of 2002 (ESRA 2002; 20 U.S.C. § 9543). All of the information you provide may be used only for statistical purposes and may not be disclosed, or used, in identifiable form for any other purpose except as required by law (20 U.S.C. §9573 and 6 U.S.C. §151). Individuals are never identified in any reports. All reported statistics refer to the U.S. as a whole or to national subgroups. According to the Paperwork Reduction Act of 1995, no persons are required to respond to a collection of information unless it displays a valid OMB control number. The valid OMB control number for this voluntary survey is 1850–0870. The time required to complete this survey is estimated to average two hours per response, including the time to review instructions, gather the data needed, and complete and review the PIAAC questionnaire and exercise. If you have any comments concerning the accuracy of the time estimate, suggestions for improving this survey, or any comments or concerns regarding this survey, please write directly to: PIAAC, National Center for Education Statistics, Potomac Center Plaza, 550 12th Street, SW, Washington, DC 20202. OMB No. 1850-0870. Approval expires 10/31/2019.

Because the BQ and assessment flow without a break from one to another and are administered together during a single session with the respondent, the statement above, read at the start of the BQ, is not reread prior to asking the respondent to complete the assessment.

A.11 Sensitive Questions

The screener and background questionnaire for the PIAAC 2017 National Supplement will include questions about race/ethnicity, place of birth, and household income. These questions are considered standard practice in survey research and will conform to all existing laws regarding sensitive information.

A.12 Estimates of Burden

For the PIAAC 2017 National Supplement, the total response burden is estimated at two hours per respondent, including the time to complete the screener (5 minutes), the background questionnaire (45 minutes), the Core Task and orientation module (10 minutes), and the assessment (60 minutes).

Table 2. Estimates of burden for PIAAC 2017 National Supplement

| Data collection instrument | Sample size | Expected Response Rate | Number of Respondents | Number of Responses | Burden per Respondent (Minutes) | Total burden hours |
| Households | | | | | | |
| PIAAC Screener | 5,632 | 86.5% | 4,872 | 4,872 | 5 | 406 |
| Individuals | | | | | | |
| Background Questionnaire | 4,763 | 81.4% | 3,877* | 3,877 | 45 | 2,908 |
| Core Task and Orientation Module | 3,877 | 100% | 3,877* | 3,877 | 10 | 646 |
| Assessment** | 3,877 | 98.0% | 3,800* | 3,800 | 60 | 3,800 |
| Total | NA | NA | 4,872 | 12,626 | NA | 3,960 |

* Duplicate counts of individuals are not included in the total number of respondents estimate.

** Assessments are exempt from Paperwork Reduction Act reporting and thus are not included in the burden total.

NOTE: See table 5 in Part B for details on the sample yield estimates (e.g., only 85 percent of households that take the Screener are expected to be eligible to take the BQ, but 6.6 percent of those households are expected to have two eligible adults).

Table 2 presents the estimates of burden for the PIAAC 2017 National Supplement. The target number of assessment respondents for the PIAAC 2017 National Supplement is 3,800, with a total burden time (excluding the assessment) of 3,960 hours and an expected overall response rate of about 69 percent. In the first row, 5,632 is the number of expected occupied households, computed as the total number of sampled dwelling units multiplied by the occupancy rate (6,626 * .85). Of these, 4,872 households are estimated to complete the screener (the number of expected occupied households multiplied by the screener response rate: 5,632 * .865). In the second row, 4,763 is the number of sampled persons, computed as the product of (a) the number of completed screeners (4,872), (b) the proportion of households having at least one eligible person age 16-65 (.832) and age 66-74 (.09), and (c) an adjustment for the proportion of households with two sample persons age 16-65 selected (1.066). In the third row, 3,877 is the number of sampled persons estimated to complete the BQ (4,763 multiplied by the BQ response rate of .814). Of these, 3,800 participants are expected to complete the assessment (based on the expected .98 assessment response rate).
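The arithmetic behind Table 2 can be checked with a short script. The following is a minimal sketch, not part of the approved burden methodology: all variable and function names are hypothetical, the BQ response rate is the 81.4 percent listed in Table 2, and the number of sampled persons (4,763) is taken from the table as given rather than derived from the eligibility proportions.

```python
# Illustrative check of the Table 2 burden arithmetic.
# All names are hypothetical; the figures are copied from Table 2 and its discussion.

SAMPLED_DWELLING_UNITS = 6626
OCCUPANCY_RATE = 0.85
SCREENER_RESPONSE_RATE = 0.865
BQ_RESPONSE_RATE = 0.814          # 81.4% as listed in Table 2
ASSESSMENT_RESPONSE_RATE = 0.98
SAMPLED_PERSONS = 4763            # taken from Table 2, not derived here

# Sample yield steps described in the text
occupied_households = round(SAMPLED_DWELLING_UNITS * OCCUPANCY_RATE)
completed_screeners = round(occupied_households * SCREENER_RESPONSE_RATE)
completed_bq = round(SAMPLED_PERSONS * BQ_RESPONSE_RATE)


def burden_hours(responses, minutes_per_response):
    """Convert a response count and per-response minutes to whole hours."""
    return round(responses * minutes_per_response / 60)


# Rows counted toward the burden total (the assessment is excluded)
counted_rows = {
    "PIAAC Screener": (completed_screeners, 5),
    "Background Questionnaire": (completed_bq, 45),
    "Core Task and Orientation Module": (completed_bq, 10),
}

hours_by_row = {
    name: burden_hours(responses, minutes)
    for name, (responses, minutes) in counted_rows.items()
}
total_burden_hours = sum(hours_by_row.values())
total_responses = sum(responses for responses, _ in counted_rows.values())

# Overall response rate as the product of the stage-level rates
overall_response_rate = (
    SCREENER_RESPONSE_RATE * BQ_RESPONSE_RATE * ASSESSMENT_RESPONSE_RATE
)

for name, hours in hours_by_row.items():
    print(f"{name}: {hours} hours")
print(f"Total responses: {total_responses}")
print(f"Total burden hours: {total_burden_hours}")
print(f"Overall response rate: {overall_response_rate:.1%}")
```

Under these assumptions the script reproduces the per-row burden hours, the 12,626 total responses, and the 3,960 total burden hours, and the product of the three stage-level response rates comes to about 69 percent.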

A.13 Total Annual Cost Burden

There are no additional costs to respondents and no record-keeping requirements.

A.14 Annualized Cost to Federal Government

The total cost to the federal government, including all direct and indirect costs of preparing for and conducting the PIAAC 2017 National Supplement, is estimated to be $7,516,341 (see Table 3 for cost detail).

Table 3. Cost for conducting the PIAAC 2017 National Supplement

| Item | Cost |
| Labor | 2,362,101 |
| Other Direct Costs | 2,077,156 |
| Respondent Incentives | 222,375 |
| Overhead, G&A, and Fee | 2,526,459 |
| Salaries of Federal Employees | 328,250 |
| TOTAL PRICE | $7,516,341 |

A.15 Program Changes or Adjustments

This request is a reinstatement, with change, of a previously approved collection, and as such it is reported as an increase in burden. However, the burden is actually lower than that of the PIAAC 2014 National Supplement because fewer households are being screened to obtain the target number of completed cases, largely because no subpopulations are being oversampled in PIAAC 2017.

A.16 Plans for Tabulation and Publication

The data from the PIAAC 2017 National Supplement will be used to produce national estimates and, when combined with the PIAAC 2012 Main Study and the PIAAC 2014 National Supplement data, will also be used to produce indirect state- and county-level estimates for the U.S. Analyses of the data will include examinations of the literacy, reading components, numeracy, and problem solving in technology-rich environments domains.

Planned publications and reports for the PIAAC 2017 National Supplement include the following:

General Audience Report

This report will present information on national estimates for literacy, reading components, numeracy, and problem solving in technology-rich environments, written for a non-specialist, general U.S. audience. This report will present the results of analyses in a clear and non-technical way, conveying how the 2017 U.S. sample compares to its international peers who participated in PIAAC Cycle 1, and which differences from earlier results are statistically significant. This report may also be used to describe results for smaller groups: combining the data from 2012, 2014, and 2017 will provide adequate sample sizes to report on these subgroups at a more granular level.

The General Audience Report will also introduce the PIAAC 2017 National Supplement sample design and will announce the future release of the state- and county-level small area estimates. These estimates will be generated for all counties and states in the U.S. by combining the data collected during the 2012, 2014, and 2017 U.S. PIAAC data collection efforts with data from other sources, such as the decennial censuses, that provide information on characteristics of states and counties. Using the same method and procedures applied to produce indirect estimates for NAAL 2003, NCES will produce estimates of the percentages of adults with low-level literacy skills for each state and county in the U.S. NCES expects that these results will be published on the NCES website and will include a series of informational webpages as well as a web-based data tool that will allow users to select and compare estimates across states and counties and compare these estimates to the international and U.S. estimates.

Technical Report

NCES will produce a Technical Report for the PIAAC 2017 National Supplement. This document will detail the procedures used in the PIAAC 2017 National Supplement (e.g., sampling, recruitment, data collection, scoring, weighting, and imputation) and describe any problems encountered and the contractor’s response to them. The primary purpose of the Technical Report is to document the steps undertaken in conducting and completing the study. This report will include a nonresponse bias analysis, which will assess the presence and extent of bias due to nonresponse. As required by NCES standards, selected characteristics of respondents will be compared with those of nonrespondents to provide information about whether and how the two groups differ along dimensions for which data are available for the nonresponding units. Electronic versions of each publication will be made available on the NCES website (see Table 4 for planned schedule).

Table 4. The PIAAC 2017 National Supplement production schedule

| Date | Activity |
| October - December 2016 | Finalize data collection manuals, forms, systems, laptops, and interview/assessment materials for the PIAAC 2017 National Supplement. |
| February - September 2017 | Collect the PIAAC 2017 National Supplement data. |
| September 2017 | Receive the PIAAC 2017 National Supplement raw data for delivery to the international consortium. |
| March 2018 | Receive the PIAAC 2017 National Supplement preliminary cleaned data and scores from PIAAC consortium. |
| June 2018 - October 2019 | Produce the U.S. PIAAC 2017 National Supplement General Audience Report and Technical Report. |
| November 2019 - December 2021 | Produce the U.S. PIAAC indirect state- and county-level estimates. |

A.17 Display OMB Expiration Date

The OMB expiration date will be displayed on all data collection materials.

A.18 Exceptions to Certification Statement

No exceptions to the certifications are requested.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | PIAAC OMB Clearance Part A 12-15-09 |

| Author | Michelle Amsbary |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
