50037 Full OMB Package Section A_revised 42417 CLEAN

Assessing the Implementation and Cost of High Quality Early Care and Education: Comparative Multi-Case Study, Phase 2

OMB: 0970-0499

OMB Information Collection Request

NEW

Supporting Statement

Part A

May 2017

Submitted by:

Office of Planning, Research and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

330 C Street, SW

Fourth Floor

Washington, DC 20024

Project officers:

Ivelisse Martinez-Beck, Senior Social Science Research Analyst and

Child Care Research Team Leader

Meryl Barofsky, Senior Social Science Research Analyst

Contents

A1. Necessity for the data collection

A2. Purpose of survey and data collection procedures

A3. Improved information technology to reduce burden

A4. Efforts to identify duplication

A5. Involvement of small organizations

A6. Consequences of less frequent data collection

A8. Federal Register Notice and consultation

A9. Incentives for respondents

A12. Estimation of information collection burden

A13. Cost burden to respondents or record keepers

A14. Estimate of cost to the federal government

A16. Plan and time schedule for information collection, tabulation, and publication

A17. Reasons not to display OMB expiration date

A18. Exceptions to certification for Paperwork Reduction Act submissions

Attachments

ATTACHMENT A: RECRUITMENT AND ENGAGEMENT CALL SCRIPTS FOR CENTER DIRECTORS AND PROGRAM DIRECTORS

ATTACHMENT B: IMPLEMENTATION INTERVIEW

ATTACHMENT C: COST WORKBOOK

ATTACHMENT D: TIME-USE SURVEY

ATTACHMENT E: FEDERAL REGISTER NOTICE

ATTACHMENT F: PUBLIC COMMENT

ATTACHMENT G: RESPONSE TO PUBLIC COMMENT

ATTACHMENT H: INITIAL EMAIL TO CENTER DIRECTORS

ATTACHMENT I: TIME USE SURVEY ROSTER

ATTACHMENT J: TIME USE SURVEY ADVANCE LETTER, AND FOLLOW-UP LETTER

ATTACHMENT K: ADDITIONAL RECRUITMENT MATERIALS

Tables

A.1 Research questions

A.2 Phases of data collection for the ECE-ICHQ multi-case study

A.3 Data collection activity for Phase 2 of the ECE-ICHQ Multi-Case Study, by timing, respondent, and format

A.4 ECE-ICHQ technical expert panel members

A.5 Total burden requested under this information collection

A.6 Multi-case study schedule

Figures

A.1 Draft conceptual framework for the ECE-ICHQ project

A1. Necessity for the data collection

The Administration for Children & Families (ACF) at the U.S. Department of Health and Human Services seeks approval to collect information to inform the development of measures of the implementation and costs of high quality early care and education. This information collection is part of the project Assessing the Implementation and Cost of High Quality Early Care and Education (ECE-ICHQ).

Study background

Support at the federal and state level to improve the quality of early care and education (ECE) services for young children has increased based on evidence about the benefits of high quality ECE, particularly for low-income children. However, there is a lack of information about how to effectively target funds to increase quality in ECE. ACF’s Office of Planning, Research and Evaluation (OPRE) contracted with Mathematica Policy Research and consultant Elizabeth Davis of the University of Minnesota to conduct the ECE-ICHQ project with the goal of creating an instrument that will produce measures of the implementation and costs of the key functions that support quality in center-based ECE serving children from birth to age 5. The study team, together with OPRE and a Technical Expert Panel (TEP), created a framework for measuring implementation of ECE center functions (from classroom instruction and monitoring individual child progress to strategic program planning and evaluation) using principles of implementation science.

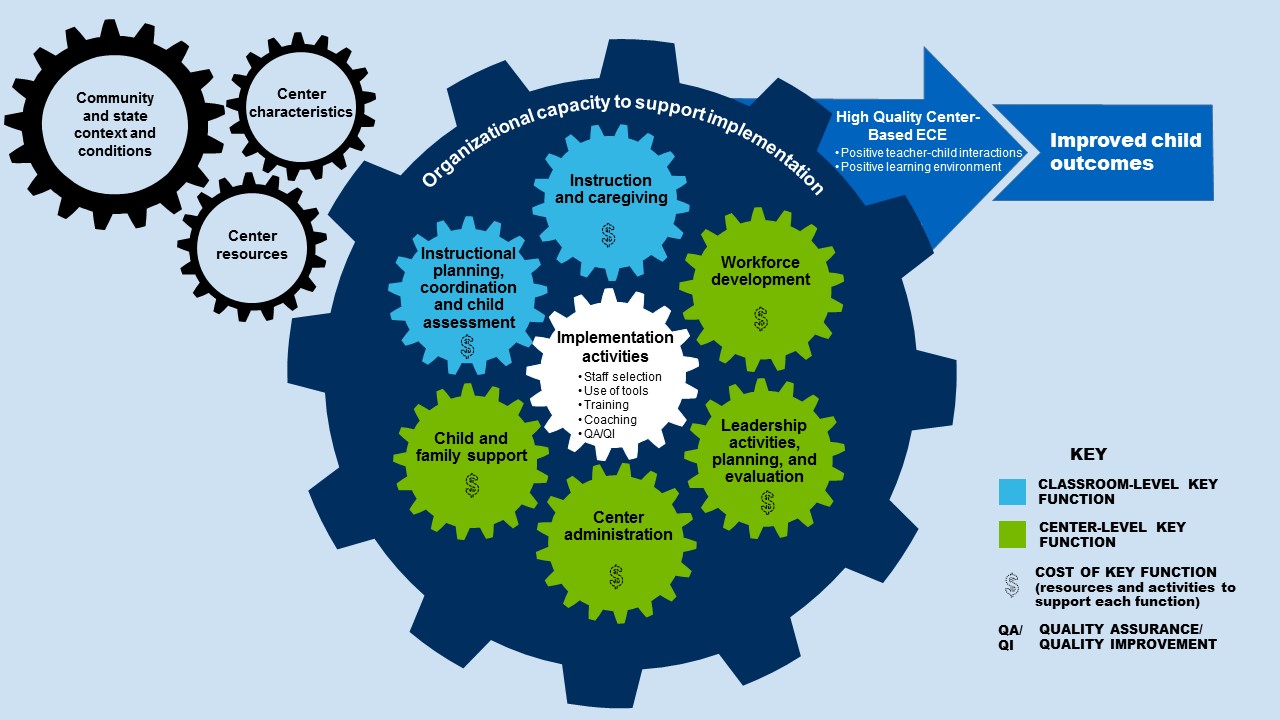

The premise of the ECE-ICHQ project is that centers vary in their investments in and capacities to implement key functions in ways that support quality. The draft conceptual framework in Figure A.1 will guide the study’s approach to data collection. The framework depicts the key functions of a center-based ECE provider (a term shortened to ECE center throughout), the costs underlying them, and how these functions are driven by a number of elements that influence whether and how a center can achieve high quality and improve child outcomes. The gears represent the key functions (that might support quality) that the study team expects to find in ECE centers. What the functions look like and how they are carried out within each ECE center is driven by (1) the implementation activities that support them, (2) the organizational capacity in which they operate, and (3) the resources and characteristics of the ECE center. All of this is further driven by the context and conditions within the broader community and state.

Implementation measures will capture what each center is doing to support quality and how these efforts are implemented. The study team will assign costs to each key function of an ECE center to describe how resources are distributed within total costs. By producing cost measures by function, the team will identify how the distribution of resources within ECE centers could influence quality.

Figure A.1. Draft conceptual framework for the ECE-ICHQ project

The goals of this two-phase comparative multi-case study are (1) to test and refine a mixed-methods approach to identify the implementation activities and costs of key functions within ECE centers serving children from birth to age 5 and (2) to produce data for creating measures of implementation and costs. Since the fall of 2014, the ECE-ICHQ study team has developed a conceptual framework; conducted a review of the literature (Caronongan et al., 2016); consulted with a technical expert panel (TEP); and collected and summarized findings from Phase 1 of the study. (Phase 1 was completed under ACF’s generic clearance 0970-0355.) Phase 1 included thoroughly testing data collection tools and methods, conducting cognitive interviews to obtain feedback from respondents about the tools, and refining and reducing the tools for this next phase. This information collection request is for Phase 2 of the study, which will further refine the data collection tools and procedures through additional quantitative study of the implementation of key functions of center-based ECE providers and an analysis of costs.

Legal or administrative requirements that necessitate the collection

There are no legal or administrative requirements that necessitate this collection. ACF is undertaking the collection at the discretion of the agency.

A2. Purpose of survey and data collection procedures

Overview of purpose and approach

The purpose of information collected under the current request is to further design and finalize the data collection tools and develop measures of implementation and costs of the six key functions (outlined in Figure A.1 above) that can support quality within ECE centers. Using data from Phase 1 of the study, the research team was able to test the usability of the ECE-ICHQ measurement items and refine and reduce the data collection tools and approach. The goals of Phase 2 are to (1) test the usability and efficiency of refined and reduced data collection tools across a range of ECE centers and (2) assess the alignment of draft measures of implementation and costs and the relationships between them. The research team will collect data using telephone interviews, a cost workbook, and a web-based survey.

Research questions

Table A.1 outlines the ECE-ICHQ research questions addressed by the multi-case study. In consultation with the TEP and from the literature review, the team developed these research questions to address gaps in knowledge and measurement.

Table A.1. Research questions

Questions focused on ECE centers:

What are the differences in center characteristics, contexts, and conditions that affect implementation and costs?

What are the attributes of the center-level and classroom-level functions that a center-based ECE provider pursues and what implementation activities support each function?

What are the costs associated with the implementation of key functions?

How do staff members use their time in support of key functions within the center?

Questions focused on measurement development:

How can time-use data from selected staff be efficiently collected and analyzed to allocate labor costs into distinct cost categories by key function?

What approaches to data collection and coding will produce an efficient and feasible instrument for broad use?

Questions focused on the purpose and relevance of the measures for policy and practice:

What are the best methods for aligning implementation and cost data to produce relevant and useful measures that will inform decisions about how to invest in implementation activities and key functions that are likely to lead to quality?

How will the measures help practitioners decide which activities are useful to pursue within a center or classroom and how to implement them?

How might policymakers use these measures to inform decisions about funding, regulation, and quality investments in center-based ECE?

How might the measures inform the use and allocation of resources at the practitioner, state, and possibly national level?

Study design

Table A.2 outlines the study’s two phases of data collection. Phase 1 included data collection from 15 ECE centers across three states to test data collection tools and procedures. The study team refined and reduced the data collection tools and procedures for Phase 2 based on the information collected and analyzed in Phase 1. In Phase 2, the study team will select 50 new centers within the same three states to respond to the updated data collection tools, via telephone and web.

Table A.2. Phases of data collection for the ECE-ICHQ multi-case study

| Phase | Purpose | Methods | Number of centers |
| --- | --- | --- | --- |
| 1 | Identify the range of implementation activities and key functions; test data collection tools and methods using cognitive interviewing techniques | Semi-structured, on-site interviews; electronic or paper self-administered questionnaires; electronic cost workbooks; paper time-use surveys | 15 |
| 2 | Test usability and efficiency of refined and reduced data collection tools and methods; specifically test web-based collection for time-use data for lead and assistant teachers | Telephone interviews; electronic cost workbook; web-based time-use survey | 50 |

Universe of data collection efforts

This information collection request covers the following data collection activities for Phase 2 of ECE-ICHQ. Table A.3 lists each activity, respondent type, and format.

Table A.3. Data collection activity for Phase 2 of the ECE-ICHQ Multi-Case Study, by timing, respondent, and format

| Data collection activity | Respondents | Format |
| --- | --- | --- |
| Center recruitment and engagement call | Site administrator or center director; umbrella organization administrator (as applicable) | Telephone (some in person for hard-to-reach centers) |
| Implementation interview | Site administrator or center director; education specialist; umbrella organization administrator (as applicable) | Telephone |
| Cost workbook | Financial manager at site; financial manager of umbrella organization (as applicable) | Excel workbook; telephone and email follow-up |
| Time-use survey | Site administrator or center director; education specialist; lead and assistant teachers | Web-based with hard copy option |

Center recruitment and engagement call. The study team will recruit 50 centers from the same three states identified in Phase 1. The study team will assemble initial contact lists for centers in the three states through state websites. They will gather public information from websites that provide detailed contact information and information about funding sources (such as whether a center accepts children who receive Child Care and Development Fund subsidies or offers the state pre-kindergarten program). The team prefers to use state websites that also list Head Start programs but, if necessary, can also use Head Start Program Information Report (PIR) data to build or supplement the list of Head Start programs. The team will use the contact information to send advance materials to centers and follow up by phone.

Project staff will call the director of each selected center to discuss the study and recruit the director to participate. The center recruitment and engagement call script (Attachment A) will guide the recruiter through the process of (1) explaining the study; (2) requesting participation from the center director; and, if the director agrees, (3) collecting information about the characteristics of the center, such as whether it is part of a larger, umbrella organization (such as a Head Start grantee with multiple sites, an ECE chain, or a community-based organization such as a YMCA), whether it provides Head Start or state-funded pre-kindergarten programs, and whether it serves children who receive subsidies for their care (for example, through the Child Care and Development Fund). The study team will send field staff to centers that require extra encouragement so staff can explain the study and answer the center directors’ questions in person. Finally, the recruiter will schedule the data collection activities. If the center is part of a larger organization that requires the organization’s agreement, the recruiter will contact the appropriate person to obtain that agreement before recruiting the center (also included in Attachment A).

Implementation interview. The team will structure the implementation interviews around a set of rubrics that focus on each of the key functions, as well as the center characteristics and resources to support implementation (Attachment B). Each rubric asks a set of questions to gather information about the structure of services or practices (the what), as well as the intentionality, accountability, and consistency with which each is implemented (the how). For example, each rubric includes questions about the factors that go into specific decisions (such as setting training priorities or evaluating staff performance), who is involved in the implementation process, and variations that can occur across teaching staff or classrooms. Interviewers will use the rubrics to guide data collection, checking response categories that apply or writing in responses to open-ended questions.

Cost workbook. The cost workbook (Attachment C) will collect information on costs, including salaries and fringe benefits for staff, nonlabor costs, and indirect (overhead) costs. The study team will send electronic workbooks for centers to complete. The workbook will be accompanied by clear, succinct definitions of key terms as well as instructions for completion. The project’s goal is to identify costs related to key functions, but the workbook’s arrangement focuses on resource categories, rather than key functions. Costs reported in the workbook will be allocated to key functions based on data from the time-use survey (for personnel costs) or the purpose of the cost item (for nonpersonnel costs). For example, costs associated with the purchase of materials, training, and technical assistance or coaching to support the adoption of a new curriculum or child assessment tool will be allocated to the relevant key function.
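
The allocation of personnel costs described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each staff member's compensation (salary plus fringe benefits) is spread across key functions in proportion to the hours that person reports for each function in the time-use survey. The function name `allocate_labor_costs`, the record layout, the key-function labels, and all dollar and hour figures are hypothetical and are not taken from the study instruments.

```python
from collections import defaultdict


def allocate_labor_costs(staff):
    """Spread each person's compensation across key functions by time share.

    ASSUMPTION: proportional allocation by reported hours; the study's
    actual allocation rule is not specified in this document.
    """
    totals = defaultdict(float)
    for person in staff:
        compensation = person["salary"] + person["fringe"]
        hours = person["hours_by_function"]
        total_hours = sum(hours.values())
        if total_hours <= 0:
            raise ValueError(f"No reported hours for {person['title']}")
        for function, reported in hours.items():
            totals[function] += compensation * reported / total_hours
    return dict(totals)


# Hypothetical records: staff are identified by title only, not by name.
staff = [
    {
        "title": "Lead teacher",
        "salary": 30000.0,
        "fringe": 6000.0,
        "hours_by_function": {"instruction": 30, "child_assessment": 6, "planning": 4},
    },
    {
        "title": "Center director",
        "salary": 50000.0,
        "fringe": 10000.0,
        "hours_by_function": {"planning": 20, "staff_development": 10, "instruction": 10},
    },
]

costs = allocate_labor_costs(staff)
for function, cost in sorted(costs.items()):
    print(f"{function}: ${cost:,.2f}")
```

Whatever rule is used, the allocated amounts should sum to total compensation, which provides a simple consistency check on the time-use data.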

Although the goal will be to obtain the most complete information possible, based on Phase 1 it is clear that centers differ in the expenditure records they keep and the types of costs they might be able to report in the cost workbook. The data collection approach will prioritize gathering complete and accurate information on resource categories that will inform the creation of base cost estimates across all centers, which will include categories likely to comprise the largest proportion of costs, such as salaries, fringe benefits, and facilities.

Time-use survey. The purpose of the survey (Attachment D) is to collect information on staff time use that will help transform labor time into costs associated with the key functions. The study team will target select administrators and teaching staff to complete the survey. Teaching staff targeted will be those staff who provide direct instruction or care to children ages 0-5 and work consistently with children in a specific classroom, including teachers and assistant teachers, but not substitutes or floaters. The time-use survey will be web-based, but respondents will also have the option to complete a paper copy if they prefer.

A3. Improved information technology to reduce burden

Using feedback collected in Phase 1, the research team will alter the approach to data collection for Phase 2 to increase the use of information technology. The research team will shift to reliance on telephone interviews and web-based time-use surveys. The team will continue to provide the cost workbooks in an electronic spreadsheet format with individualized telephone and email follow-up as necessary.

A4. Efforts to identify duplication

None of the study instruments will ask for information that can be reliably obtained from alternative data sources in a format that assigns costs to key functions. No comparable data have been collected on the costs of key functions associated with providing quality services at the center level for ECE centers serving children from birth to age 5.

Furthermore, the design of the study instruments ensures minimal duplication of data collected through each instrument. There is one cost workbook and one set of implementation rubrics that will guide data collection with each center; they have been developed to be complementary data collection tools to gather the necessary information with the least burden to respondents. The team will administer the time-use surveys to multiple respondents—directors, managers, and teachers—to provide a full picture of staff members’ time use.

A5. Involvement of small organizations

The team will recruit small ECE centers (those serving fewer than 100 children and having fewer than five classrooms) to participate. To minimize the burden on these centers, the study team will carefully schedule telephone interviews with the directors and managers at times that are most convenient for them and that will not interfere with the care of children. For example, the team will schedule interviews with directors in the early mornings or late afternoons when there are fewer children at the center. The team will not be interviewing teachers; teachers will be able to complete the web-based time-use surveys when it is convenient for them.

A6. Consequences of less frequent data collection

This is a one-time data collection.

A7. Special circumstances

There are no special circumstances for the proposed data collection efforts.

A8. Federal Register Notice and consultation

Federal Register Notice and comments

In accordance with the Paperwork Reduction Act of 1995 (Pub. L. 104-13) and Office of Management and Budget (OMB) regulations at 5 CFR Part 1320 (60 FR 44978 August 29, 1995), ACF published a notice in the Federal Register announcing the agency’s intention to request an OMB review of this information collection activity. This notice was published on August 25, 2016, (vol. 81, no. 165, p. 58515) and provided a 60-day period for public comment. A copy of the notice is attached as Attachment E. ACF received one comment in response to this notice (Attachment F). The agency’s response to the comment is included in Attachment G.

Phase 1 was conducted under ACF’s generic clearance (0970-0355). The Federal Register notice announcing the agency’s intention to request an OMB review of information collection activities of this nature was published on September 15, 2014, (vol. 79, no. 178, p. 54985) and provided a 60-day period for public comment. ACF did not receive any comments in response to the notice.

Consultation with experts outside of the study

In designing the study, the team drew on a pool of experts to complement the knowledge and experience of the study team (Table A.4). To ensure the representation of multiple perspectives and areas of expertise, the expert consultants included program administrators, policy experts, and researchers. Collectively, the study team and external experts have specialized knowledge in measuring child care quality, cost-benefit analysis, time-use analysis, and implementation associated with high quality child care.

Through a combination of in-person meetings and conference calls, study experts have provided input to help the team (1) define what ECE-ICHQ will measure; (2) identify elements of the conceptual framework and the relationships between them; and (3) make key decisions about the approach, sampling, and methods of Phase 1 of the study. Select members of the expert panel also reviewed findings from Phase 1 and gave input on revisions to the data collection process and tools for Phase 2 that would reduce the burden on respondents, improve the accuracy of data collection, and support development of systematic measures of implementation and costs across a range of ECE centers.

Table A.4. ECE-ICHQ technical expert panel members

| Name | Affiliation |
| --- | --- |
| Melanie Brizzi | Currently director of Child Care Services for Child Care Aware of America. She joined the TEP when she was director of the Office of Early Childhood and Out of School Learning, Indiana Family and Social Services Administration |
| Rena Hallam | Delaware Institute for Excellence in Early Childhood, University of Delaware |
| Lynn Karoly | RAND Corporation |
| Mark Kehoe | Brightside Academy |
| Henry Levin | Teachers College, Columbia University |
| Katherine Magnuson | School of Social Work, University of Wisconsin–Madison |
| Tammy Mann | The Campagna Center |
| Nancy Marshall | Wellesley Centers for Women, Wellesley College |
| Allison Metz | National Implementation Research Network, Frank Porter Graham Child Development Institute, University of North Carolina at Chapel Hill |
| Louise Stoney | Alliance for Early Childhood Finance |

A9. Incentives for respondents

With OMB approval, the study team will offer each participating center a gift card valued at $350 for its involvement in the study, including participating in interviews, completing the cost workbook, and connecting the study team with the appropriate staff to complete the time-use surveys. The team will also provide a $10 gift card to each center staff member who completes the time-use survey. These amounts were determined based on the estimated burden to participants and are consistent with those offered in Phase 1. The study team used these same center-level ($350) and respondent-level ($10) incentives in Phase 1, after learning through recruitment challenges that the prior lower levels of appreciation were not in line with the staff’s perception of the level of effort they would need to commit to the study.

Prior to the launch of Phase 1, the project team conducted a pilot (under the OPRE generic clearance OMB #0970-0355) to test the data collection process and tools using a $100 token of appreciation to participating centers. The experience from the pilot suggested that an increased level of appreciation was necessary to gather the information needed, for two main reasons. First, the $100 was not viewed favorably relative to the time center staff were asked to commit to the data collection. All three centers approached for the pilot mentioned this disconnect and, ultimately, only one center participated. Second, some centers may incur direct expenses for data collection. For example, one of the pilot centers paid an accountant by the hour for assistance with bookkeeping and financial management, and this person needed to complete the cost workbook.

Based on these experiences, the project team determined that the level of appreciation for centers was not well-aligned with the level of effort requested from the center. The project team looked to the FACES Redesign Pilot study and the Assessing Early Childhood Teachers’ Use of Child Progress Monitoring to Individualize Teaching Practices study (EDIT) as the closest, recent comparable data collection efforts in terms of burden. The FACES Redesign Pilot provided each participating program with $200 for coordinating data collection. After weighing both the time and logistics factors (which are greater for the ECE-ICHQ study), the team recommended a $350 center-level incentive to align the appreciation with the time needed from center staff. In the EDIT study, teachers participated in a 20-minute debriefing telephone call following the EDIT site visit and received a $20 gift card as a token of appreciation for this discussion. The ECE-ICHQ study will request that each teacher and select program administrators (such as the center director and education manager) complete a 15-minute time-use survey. Because the survey will be web-based and respondents will have the ability to complete it at their convenience, the study team recommends a token of appreciation of $10 per respondent.

Once the center-level incentive was increased and the respondent-level appreciation was added (as approved by OMB), the study team was successful in recruiting and completing data collection in 15 centers representing a range of characteristics in Phase 1. The amount of time needed to recruit and collect data from the 15 centers in Phase 1 was substantially shorter than the time needed for the three pilot centers, which were offered only a $100 token of appreciation (Questions and Answers When Designing Surveys for Information Collections, https://obamawhitehouse.archives.gov/sites/default/files/omb/assets/omb/inforeg/pmc_survey_guidance_2006.pdf).

A10. Privacy of respondents

The team will keep information collected private to the extent permitted by law. The study team will inform respondents of all planned uses of data, that their participation is voluntary, and that their information will be kept private to the extent permitted by law. The study team will submit all materials planned for use with respondents as part of this information collection to the New England Institutional Review Board for approval.

As specified in the contract, Mathematica will protect respondents’ privacy to the extent permitted by law and will comply with all federal and departmental regulations for private information. Mathematica has developed a data safety and monitoring plan that assesses all protections of respondents’ personally identifiable information. Mathematica will ensure that all of its employees and consultants who perform work under this contract are trained on data privacy issues and comply with the above requirements. Upon hire, every Mathematica employee signs a Confidentiality Pledge stating that any identifying facts or information about individuals, businesses, organizations, and families participating in projects conducted by Mathematica are private and are not for release unless authorized.

As specified in the evaluator’s contract, Mathematica will use Federal Information Processing Standard (currently, FIPS 140-2) compliant encryption (Security Requirements for Cryptographic Modules, as amended) to protect all instances of sensitive information during storage and transmission. Mathematica will securely generate and manage encryption keys to prevent unauthorized decryption of information, in accordance with the Federal Information Processing Standard. Mathematica will (1) ensure that this standard is incorporated into the company’s property management and control system; and (2) establish a procedure to account for all laptop computers, desktop computers, and other mobile devices and portable media that store or process sensitive information. Any data stored electronically will be secured in accordance with the most current National Institute of Standards and Technology requirements and other applicable federal and departmental regulations. In addition, Mathematica must submit a plan for minimizing to the extent possible the inclusion of sensitive information on paper records and for protecting any paper records, field notes, or other documents that contain sensitive or personally identifiable information to ensure secure storage and limits on access.

Information will not be maintained in a paper or electronic system from which data are actually or directly retrieved by an individual’s personal identifier.

A11. Sensitive questions

Calculating accurate estimates of center costs requires collecting information on staff salaries and center operating costs. The study team will explain the importance of this information to respondents and will ask sites to report salary information only by staff title, not personal name.

A12. Estimation of information collection burden

Newly requested information collections

Table A.5 summarizes the estimated reporting burden and costs for each of the study tools included in this information collection request. The estimates include time for respondents to review instructions, search data sources, complete and review their responses, and transmit or disclose information. Figures are estimated as follows:

Advance materials to center directors. To reach a large number of centers early in the recruitment process, the study team will send an advance email to directors of 400 centers that will invite them to participate (Attachment H). Based on Phase 1 experience, the team expects that reaching out to this number of centers will allow it to recruit 50 centers for the study. The team expects it will take about 5 minutes for directors to review this email.

Center recruitment and engagement call script. There are two parts to the script: Part 1 focuses on recruitment and will take about 20 minutes, and Part 2 focuses on obtaining information from centers that agree to participate in the study and will take about 25 minutes. Based on past studies, the study team expects to reach out to 400 centers to secure the participation of the 50 centers necessary for this study. The study team therefore expects to conduct Part 2 with the 50 centers that agree to participate. Based on Phase 1, the study team anticipates that for one-third of the centers that agree to participate (15 centers), they will need to speak with a director of a larger organization with which the center is affiliated to fully obtain the center’s participation in the study. The team plans that this discussion will be similar to Part 1 of the center director recruitment call and will take about 20 minutes, on average.
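
As a rough check on the recruitment figures above, the following minimal sketch totals the respondent time implied by the advance email and the recruitment calls. It assumes that Part 1 of the call takes place with all 400 contacted centers, which the text implies but does not state outright; the variable names are illustrative, and the totals are not taken from Table A.5, which may annualize or round the figures differently.

```python
# Figures stated in the text; burden is expressed in minutes per response.
CENTERS_CONTACTED = 400
CENTERS_RECRUITED = 50
UMBRELLA_CONTACTS = 15

EMAIL_MINUTES = 5
PART1_MINUTES = 20
PART2_MINUTES = 25
UMBRELLA_MINUTES = 20

# ASSUMPTION: Part 1 of the call is held with every contacted center.
burden_minutes = {
    "advance_email": CENTERS_CONTACTED * EMAIL_MINUTES,
    "call_part_1": CENTERS_CONTACTED * PART1_MINUTES,
    "call_part_2": CENTERS_RECRUITED * PART2_MINUTES,
    "umbrella_call": UMBRELLA_CONTACTS * UMBRELLA_MINUTES,
}
total_hours = sum(burden_minutes.values()) / 60

for activity, minutes in burden_minutes.items():
    print(f"{activity}: {minutes / 60:.1f} hours")
print(f"total: {total_hours:.1f} hours")
```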

Implementation interview. The team will conduct the three-hour implementation interview with the center director at each of the 50 centers. Based on the experience in Phase 1, the team anticipates that in 60 percent of the centers (30 centers), additional respondents will be involved in parts of the interview. On average, the team estimates that two additional respondents in the 30 centers will be involved in up to 30 minutes of the interview each. The additional respondents could include an assistant center director, education program manager or specialist, or executive staff from an umbrella organization (such as a Head Start grantee, or corporate office of a chain).
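
The interview estimate above combines two components, which the minimal sketch below adds up. Because additional respondents take part in "up to" 30 minutes each, the second component is an upper bound. The variable names are illustrative, and the result is not taken from Table A.5.

```python
# Figures stated in the text.
CENTERS = 50
DIRECTOR_INTERVIEW_HOURS = 3.0
CENTERS_WITH_ADDITIONAL = 30        # 60 percent of the 50 centers
ADDITIONAL_PER_CENTER = 2           # average number of additional respondents
ADDITIONAL_HOURS_EACH = 0.5         # up to 30 minutes each (upper bound)

director_hours = CENTERS * DIRECTOR_INTERVIEW_HOURS
additional_respondents = CENTERS_WITH_ADDITIONAL * ADDITIONAL_PER_CENTER
additional_hours = additional_respondents * ADDITIONAL_HOURS_EACH
total_interview_hours = director_hours + additional_hours

print(f"Center directors: {director_hours:.0f} hours")
print(f"Additional respondents ({additional_respondents}): {additional_hours:.0f} hours")
print(f"Total: {total_interview_hours:.0f} hours")
```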

Cost workbook. The financial manager at each center or umbrella organization will be the primary person to complete the cost workbook with support from the data collection team as necessary. In Phase 1, one individual served as the respondent for completing the cost workbook in half the centers. In the other half, one additional respondent was required to assist in gathering information necessary to complete the workbook. For example, in some centers, an additional respondent provided information on in-kind contributions from a sponsoring organization or other source. In Phase 1, two-thirds of the centers relied heavily on the data collection team to help complete the cost workbook. In these cases, respondents provided financial documents, and data collectors then extracted the information necessary for completing the workbook. For Phase 2, in which they will use a streamlined version of the workbook, the study team anticipates that a higher proportion of centers will be able to complete (or nearly complete) the revised workbook on their own.

Given the considerations of the Phase 1 experience and revisions to the cost workbook, the study team estimates that it will take 7.5 hours, on average, for respondents at each center to complete the cost workbook by assembling records, entering data, and responding to follow-up communication. The estimated average assumes some variation among centers in the extent to which respondents complete the workbook independently or with the assistance of the study team. The team assumes some centers will complete most of the workbook independently, some will complete it partially, and some will not complete the workbook but will provide financial records that enable the study team to complete it on their behalf. The team further assumes that respondents in all centers will participate in follow-up communication to confirm the information provided and review portions of the workbook with members of the study team.

Time-use survey. The team will target the time-use survey to an average of 14 staff per center (1 or 2 administrators, 12 or 13 teaching staff) at each of the 50 centers, for a total of 700 center staff. Field staff will work with the center director to obtain a roster with contact information for all the staff targeted for the time-use survey in a center (Attachment I). The team expects this roster to take about 15 minutes for the center director to complete. The field staff will also distribute an advance letter inviting each staff member to participate in the survey, which the team estimates will take about 5 minutes for each staff person to review (Attachment J). The team plans on an 80 percent response rate (560 respondents) and expects the time-use survey to take 15 minutes to complete.

Table A.5. Total burden requested under this information collection

| Instrument | Total/Annual number of respondents | Number of responses per respondent | Average burden hours per response | Annual burden hours | Average hourly wage | Total annual cost |
| --- | --- | --- | --- | --- | --- | --- |
| Initial email to selected center directors | 400 | 1 | .08 | 32 | $25.37 | $811.84 |
| Center recruitment and engagement call script (Part 1) | | | | | | |
| Center director | 400 | 1 | .33 | 132 | $25.37 | $3,348.84 |
| Umbrella organization administrator | 15 | 1 | .33 | 5 | $25.37 | $126.85 |
| Center recruitment and engagement call script (Part 2) | 50 | 1 | .42 | 21 | $25.37 | $532.77 |
| Implementation interview | | | | | | |
| Center director | 50 | 1 | 3 | 150 | $25.37 | $3,805.50 |
| Additional center staff | 60 | 1 | .5 | 30 | $25.37 | $761.10 |
| Cost workbooks | 50 | 1 | 7.5 | 375 | $25.37 | $9,513.75 |
| Time use survey staff roster | 50 | 1 | .25 | 13 | $25.37 | $329.81 |
| Time use survey advance letter and follow-up letter | 700 | 1 | .08 | 56 | $25.37 | $1,420.72 |
| Time use surveys | 560 | 1 | .25 | 140 | $17.01 | $2,381.40 |
| Estimated annual burden total | | | | 954 | | $23,032.58 |

Total annual cost

The team based average hourly wage estimates for deriving total annual costs on data from the Bureau of Labor Statistics, Occupational Employment Statistics (2015). For each instrument included in Table A.5, the team calculated the total annual cost by multiplying the annual burden hours by the average hourly wage.
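The arithmetic behind Table A.5 can be checked with a short script. The sketch below is illustrative only: it assumes that annual burden hours are rounded half-up to whole hours before being multiplied by the wage, an assumption inferred from the published figures rather than stated in the text, and the shortened row labels and function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Rows of Table A.5: (label, respondents, responses per respondent,
# average burden hours per response, average hourly wage).
ROWS = [
    ("Initial email to selected center directors", 400, 1, ".08", "25.37"),
    ("Recruitment call Part 1, center director", 400, 1, ".33", "25.37"),
    ("Recruitment call Part 1, umbrella administrator", 15, 1, ".33", "25.37"),
    ("Recruitment call Part 2", 50, 1, ".42", "25.37"),
    ("Implementation interview, center director", 50, 1, "3", "25.37"),
    ("Implementation interview, additional staff", 60, 1, ".5", "25.37"),
    ("Cost workbooks", 50, 1, "7.5", "25.37"),
    ("Time use survey staff roster", 50, 1, ".25", "25.37"),
    ("Time use survey advance and follow-up letter", 700, 1, ".08", "25.37"),
    ("Time use surveys", 560, 1, ".25", "17.01"),
]


def burden_hours(respondents, responses, hours_per_response):
    """Annual burden hours, rounded half-up to a whole hour (assumed rule)."""
    raw = Decimal(respondents) * Decimal(responses) * Decimal(hours_per_response)
    return raw.quantize(Decimal("1"), rounding=ROUND_HALF_UP)


def annual_cost(hours, wage):
    """Total annual cost: annual burden hours times average hourly wage."""
    return (hours * Decimal(wage)).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


total_hours = Decimal(0)
total_cost = Decimal(0)
for label, respondents, responses, hours_each, wage in ROWS:
    hours = burden_hours(respondents, responses, hours_each)
    cost = annual_cost(hours, wage)
    total_hours += hours
    total_cost += cost
    print(f"{label}: {hours} hours, ${cost:,.2f}")

print(f"Estimated annual burden total: {total_hours} hours, ${total_cost:,.2f}")
```

Under the stated rounding assumption, the row values and the totals agree with the figures reported in Table A.5.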

The mean hourly wage of $25.37 for education administrators of preschool and child care centers or programs (occupational code 11-9031) is used for center directors, education managers, and financial managers and applies to all data collection tools except the time-use survey. The mean hourly wage for preschool teachers (occupational code 25-2011) of $15.62 is used for teachers and assistants. The study team calculated the average hourly wage for the time-use survey as a weighted average based on 2 staff per center (an administrator and an education specialist) at $25.37 and 12 child care staff per center at $15.62, for an average of $17.01.
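The blended wage for the time-use survey is a staff-weighted average of the two occupational wages. A minimal sketch of that calculation follows; the constant names are hypothetical, and rounding to the nearest cent is an assumption.

```python
from decimal import Decimal, ROUND_HALF_UP

ADMIN_WAGE = Decimal("25.37")     # occupational code 11-9031
TEACHER_WAGE = Decimal("15.62")   # occupational code 25-2011
ADMIN_STAFF_PER_CENTER = 2        # an administrator and an education specialist
TEACHING_STAFF_PER_CENTER = 12    # child care staff

# Weight each wage by the number of staff per center paid at that wage.
weighted_sum = (ADMIN_STAFF_PER_CENTER * ADMIN_WAGE
                + TEACHING_STAFF_PER_CENTER * TEACHER_WAGE)
staff_per_center = ADMIN_STAFF_PER_CENTER + TEACHING_STAFF_PER_CENTER

blended_wage = (weighted_sum / staff_per_center).quantize(
    Decimal("0.01"), rounding=ROUND_HALF_UP
)
print(f"Blended time-use survey wage: ${blended_wage}")
```

The result matches the $17.01 figure used for the time-use survey row in Table A.5.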

A13. Cost burden to respondents or record keepers

There are no additional costs to respondents.

A14. Estimate of cost to the federal government

The total/annual cost for the data collection activities under this current request will be $1,253,920. This includes direct and indirect costs of data collection.

A15. Change in burden

This is a new data collection.

A16. Plan and time schedule for information collection, tabulation, and publication

Table A.6 shows the schedule for the multi-case study. The multi-case study report, expected in August 2018, will present findings based on data collected from the 50 centers in Phase 2. Methodological findings of interest from Phase 1 (completed under ACF’s generic clearance 0970-0355) may also be included.

Table A.6. Multi-case study schedule

| Task | Date |
| --- | --- |
| Phase 1 data collection | April 2016 to December 2016 |
| Phase 2 data collection | August 2017 to January 2018* |
| Multi-case study report | August 2018 |

*Actual dates dependent on OMB approval

The analytic goals for Phase 2 focus on creating and using variables necessary to produce measures that will assess (1) the associations between the implementation activities and costs of key functions within ECE centers and (2) variations across ECE centers in how costs are allocated across key functions based on center resources, characteristics, and implementation activities. The analytic process the study team will follow to build the measures includes a series of incremental steps: first, organizing, coding, and analyzing the data at the item level; next, creating summary variables for analysis by key function; and finally, analyzing summary variables or scales to examine associations among implementation, cost, and center characteristics.

Coding scheme. The team has developed a coding scheme based on the elements of the conceptual framework using data collected in Phase 1. The implementation rubrics are organized around the six key functions. Before data collection, the team will assign numerical values to the discrete items (response categories) on the implementation rubrics. After data collection, members of the team will review the open-ended questions to identify and code additional response categories, both for reporting during this phase and for further revising the rubrics for efficiency.

The cost workbook will be the source of data on costs incurred by centers across categories, such as staff salaries and benefits, facilities, and supplies and equipment. Based on data from Phase 1, the cost data collection team developed a codebook to assign costs to each of the six key functions. Some costs might relate to multiple key functions. For such costs, the team will develop a method to assign costs to multiple key functions, possibly proportionally, based on information from the implementation interview. Labor costs are another example of costs that span multiple key functions. The team will code data from the time-use survey to facilitate the allocation of labor resources to specific key functions—not just based on the roles of specific employees, but also based on the amount of time spent on various activities.
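One way to picture a proportional assignment of a cost that spans several key functions is to split it by the share of time reported for each function. The sketch below is only an illustration under that assumption; the allocation method had not been finalized at the time of writing, and the function name, salary, and weekly hours shown are hypothetical.

```python
def allocate_cost(total_cost, hours_by_function):
    """Split one cost across key functions in proportion to reported hours."""
    total_hours = sum(hours_by_function.values())
    if total_hours <= 0:
        raise ValueError("reported hours must sum to a positive number")
    return {
        function: total_cost * hours / total_hours
        for function, hours in hours_by_function.items()
    }


# Hypothetical teacher: annual salary and weekly hours from a time-use survey.
teacher_salary = 40000.0
teacher_hours = {
    "instruction and caregiving": 30.0,
    "instructional planning, coordination, and child assessment": 6.0,
    "workforce development": 4.0,
}

allocation = allocate_cost(teacher_salary, teacher_hours)
for function, cost in allocation.items():
    print(f"{function}: ${cost:,.2f}")
```

Allocating by reported time rather than by job title reflects the point above that labor resources are assigned based on the amount of time spent on various activities, not only on staff roles.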

Item-level analysis. All data from the implementation interview, cost workbook and time-use surveys will be exported into Excel or SAS for analysis. To inform tool refinement and enhance the data collection approach, the study team will inspect item-level responses to identify patterns of nonresponse or issues with questions or tools. The team will examine item-level descriptive statistics to assess the variance on individual items and the extent to which it occurs in expected ways. For example, the researchers will examine correlations between select item-level costs and key center characteristics to determine whether items function similarly for different types of centers. They might identify items that must be dropped or revised because of limited variation, particularly in the implementation data.
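The item-level screening described above could be sketched as follows. This is an illustration, not the study team's procedure: the thresholds for nonresponse and variance, the item names, and the response codes are all hypothetical.

```python
from statistics import pvariance


def screen_item(responses, max_missing_rate=0.2, min_variance=0.05):
    """Return the flags raised for one item.

    responses: one numeric code per center, with None marking nonresponse.
    Both thresholds are illustrative assumptions.
    """
    if not responses:
        raise ValueError("responses must not be empty")
    answered = [r for r in responses if r is not None]
    missing_rate = 1 - len(answered) / len(responses)
    flags = []
    if missing_rate > max_missing_rate:
        flags.append("high nonresponse")
    # Too few answers, or answers that barely differ, signal limited variation.
    if len(answered) < 2 or pvariance(answered) < min_variance:
        flags.append("limited variation")
    return flags


# Hypothetical item-level data for ten centers.
items = {
    "uses_curriculum": [1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    "coaching_frequency": [0, 1, 2, 3, None, 2, 1, 3, 0, 2],
    "family_conferences": [1, None, None, None, 2, 3, None, 1, 2, 3],
}

report = {name: screen_item(values) for name, values in items.items()}
for name, flags in report.items():
    print(name, flags or "no flags")
```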

Creating summary variables and measures. The study team will create summary variables that will serve to assess each key function by implementation construct (drawing on data from the implementation interview) and cost category (drawing on data from the cost workbook and time-use survey). The cost measures will include: total annual cost, total cost by key function (for example, the team will combine costs into a single analytic variable for instruction and caregiving costs), proportion of total costs allocated to each key function, and total cost per child care hour. The study team will develop implementation measures that represent a descriptor of each key function (summary of what the center is doing in each area), the intensity of implementation by key function (summary of how it implements the function), and the extent of organizational capacity to support implementation.
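The cost measures listed above follow directly from costs by key function and a count of child care hours. The sketch below uses made-up figures for a single center; how child care hours are counted is not specified in this section, so that input is an assumption, as are the function and variable names.

```python
def cost_measures(cost_by_function, child_care_hours):
    """Compute summary cost measures for one center."""
    if child_care_hours <= 0:
        raise ValueError("child_care_hours must be positive")
    total = sum(cost_by_function.values())
    if total <= 0:
        raise ValueError("total cost must be positive")
    return {
        "total_annual_cost": total,
        "cost_by_function": dict(cost_by_function),
        "proportion_by_function": {
            function: cost / total for function, cost in cost_by_function.items()
        },
        "cost_per_child_care_hour": total / child_care_hours,
    }


# Hypothetical annual costs for one center, by the six key functions.
center_costs = {
    "instruction and caregiving": 600000,
    "workforce development": 50000,
    "leadership activities, planning, and evaluation": 100000,
    "center administration": 150000,
    "child and family support": 40000,
    "instructional planning, coordination, and child assessment": 60000,
}

measures = cost_measures(center_costs, child_care_hours=200000)
print(measures["total_annual_cost"])
print(measures["cost_per_child_care_hour"])
```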

To examine implementation, the researchers will develop summary variables that assess constructs through binary indicators (for example, the presence or absence of a center characteristic, such as serving infants or toddlers) and scales (for example, ratings of the use of and training on a curriculum). The team will inspect descriptive statistics for all summary variables or scales they create and examine correlations between constructs and key center characteristics to determine whether summary variables function similarly for centers of different types. They will compare the distribution of variables by funding mix, inclusion of infants or toddlers, or center size, for example. The team will conduct psychometric analyses to document internal consistency reliability (using Cronbach’s alpha) as well as tests for skewed distributions that could be problematic in analysis (reducing variability).

To assess the validity and reliability of draft scales (that is, the sets of items in the implementation rubrics), the study team will conduct factor analysis to identify key implementation factors and how they work together within each of the six functions as well as how factors work together across functions (as the data allow). With 50 centers, the team expects to have sufficient statistical power to test how well the model fits the data. Factor analysis is particularly useful in measurement development because, through an iterative process, the research team can reduce a large number of variables that measure a concept, such as implementation, down to a few underlying factors. The team will pursue an iterative approach to the analysis by reviewing output to assess how well items are working together (whether they are correlating or not) and the items that emerge as important in explaining variation in the key function. They plan to first run Exploratory Factor Analyses to identify which items cohere as factors and how many factors exist for each function (establishing the validity of the constructs in the measure). Then they will conduct Confirmatory Factor Analyses to test the reliability of the factors identified. The team will maintain the factors that emerge that have strong reliability and will make any necessary revisions to the rubrics.
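For reference, the internal consistency statistic named above, Cronbach's alpha, can be computed from item-level scores with the standard formula: alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below applies it to hypothetical rubric ratings; the data and the function name are illustrative and do not come from the study.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one row of item scores per center)."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items, with equal-length rows")
    # Sample variance of each item across centers.
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of each center's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings on three rubric items for five centers.
ratings = [
    [1, 2, 1],
    [2, 3, 2],
    [3, 3, 3],
    [4, 5, 4],
    [5, 5, 5],
]

alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.3f}")
```

Values closer to 1 indicate that the items in a scale vary together; the threshold treated as acceptable is an analytic choice and is not specified here.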

There may be some variables that the team finds to have little or no variation, or that may not fit any of the factor models, but that still address topics important to the field. The team will identify these variables and consider whether they should be maintained as descriptive variables. The resulting measures of the implementation of each key function are likely to have two types of variables: those based purely on descriptive analyses and those derived from the factor analyses (factors). Measuring “what” a center does within each key function may lend itself to the descriptive variables, while measuring “how” a center implements each key function will be the focus of the factor analysis.

Descriptive analysis. The study team will analyze the implementation and cost data by key function, first to assess the degree of variation within each area (implementation and costs) across the 50 centers, and then to examine the patterns of variation between implementation and costs. The analysis can inform how implementation decisions might affect costs of the key function, as well as the overall allocation of time and resources across ECE centers. The researchers will also examine the data based on center characteristics such as funding mix, size, or rating level of the quality rating and improvement system to determine if patterns exist in ways that the team can expect or explain. Although center sample sizes will not be large enough to establish definitive links between implementation and cost, or between costs and center characteristics, the team will conduct descriptive analyses to explore whether identifiable patterns or differences exist. The team will design the analysis to identify the center characteristics and activities that affect the implementation and costs of key functions.

Final report. The final report on the multi-case study will draw from across both data collection phases to discuss the project goals, conceptual framework, and data collection and measurement development methods. Data from Phase 1 will not be publicly reported (because of the limitations of using a generic OMB clearance), but findings about the methodology used during Phase 1 will be integrated in the final report. Findings from the analysis of data collected from the 50 ECE centers in Phase 2 will address the study questions about implementation and costs.

A17. Reasons not to display OMB expiration date

All instruments will display the expiration date for OMB approval.

A18. Exceptions to certification for Paperwork Reduction Act submissions

No exceptions are necessary for this information collection.

REFERENCES

Bureau of Labor Statistics. “May 2015 Occupation Profiles, Occupational Employment Statistics.” Washington, DC: U.S. Department of Labor. https://www.bls.gov/oes/current/oes_stru.htm.

Caronongan, P., G. Kirby, K. Boller, E. Modlin, J. Lyskawa. “Assessing the Implementation and Cost of High Quality Early Care and Education: A Review of Literature.” OPRE Report 2016-31. Washington, DC: U.S. Department of Health and Human Services, Administration for Children and Families, Office of Planning, Research and Evaluation, 2016.

1 The ECE-ICHQ conceptual framework includes six key functions: (1) instruction and caregiving; (2) workforce development; (3) leadership activities, planning, and evaluation; (4) center administration; (5) child and family support; and (6) instructional planning, coordination, and child assessment.

2 If the study team finds that one or more of the states must be replaced (for example, because of limitations in the number of potential centers available to recruit), they will select another state with similar characteristics. In particular, they will select a state or states with similar QRIS and stringency of licensing requirements. The team will also seek to maintain geographic variation across the three states.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | 50037 Full OMB Package Section A_to OPRE_2.17.17 |

| Subject | Assessing the Implementation and Cost of High Quality Early Care and Education: Comparative Multi-Case Study, Phase 2 |

| Author | Mathematica Staff |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
