Supporting Statement URI Part B 20170706


Risk Preferences and Demand for Crop Insurance and Cover Crop Programs (RPDCICCP)

OMB: 0536-0076

Supporting Statement for

Risk Preferences and Demand for Crop Insurance and Cover Crop Programs

Section B

B. COLLECTION OF INFORMATION USING STATISTICAL METHODS

1. Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection has been conducted previously, include the actual response rate achieved during the last collection.

The data collected will be used for research activities that address an important question about the link between risk preferences and demand for crop insurance, rather than to produce estimates about a population. We will conduct this experiment using a convenience sample of student subjects. It is common practice in academic research to test experiments with student subjects before replicating the experiment with harder-to-reach populations (Gneezy and Potters, 1997; Harrison and List, 2004; Levitt and List, 2007). Additionally, our proposed experiment will test the theoretical predictions of behavioral economics risk models; results of tests of theoretical predictions are more likely to generalize across diverse subject pools than measurements of idiosyncratic preferences alone.1

The study uses a convenience sample of 500 students recruited from a respondent pool of 2,000 students at the University of Rhode Island who have expressed interest in participating in economics experiments and who have shared their email addresses with the Department of Environmental and Natural Resource Economics. Participants will be randomly assigned to one of four risk environment treatments. Results of the study are not meant to be used to make policy decisions, and the sample is not meant to be nationally representative.

2. Describe the procedures for the collection of information.

Frequency of Collection:

Each respondent will participate in at most one session.

Recruitment:

Potential participants will be recruited to participate in the experiment via email (Attachment J: Recruitment Message) and in-class solicitations. The respondent pool consists of undergraduate and graduate students at the University of Rhode Island who take classes within the Department of Environmental and Natural Resource Economics and/or register to participate in the SimLab database.

Because no-shows and last-minute cancellations are common for any given experiment session, each session will also be “overbooked” by 2 potential participants as backups to ensure efficient use of scarce economics laboratory time. In cases where more people show up than can be accommodated in a session, any extras who show up before the scheduled start time will receive a $10 payment for time and travel and can reschedule for another session. This is common practice among experimentalists and the norm for experiments conducted at SimLab.

Prior to giving their consent, potential participants at the beginning of each experimental session will be provided with: (1) a Consent Form (Attachment F: Consent Form) that contains a description of the study, including details of study purpose, data collection methodology, and burden estimate, as well as the use of the information collected and the voluntary nature of the study; and (2) a copy of the data protection disclaimer (Attachment G: Disclaimer).

Experiment:

The goals of this experiment are to (1) characterize the relationship between cover crop usage and crop insurance purchases, and (2) explore how this relationship depends on individuals’ risk preferences and demographic characteristics.

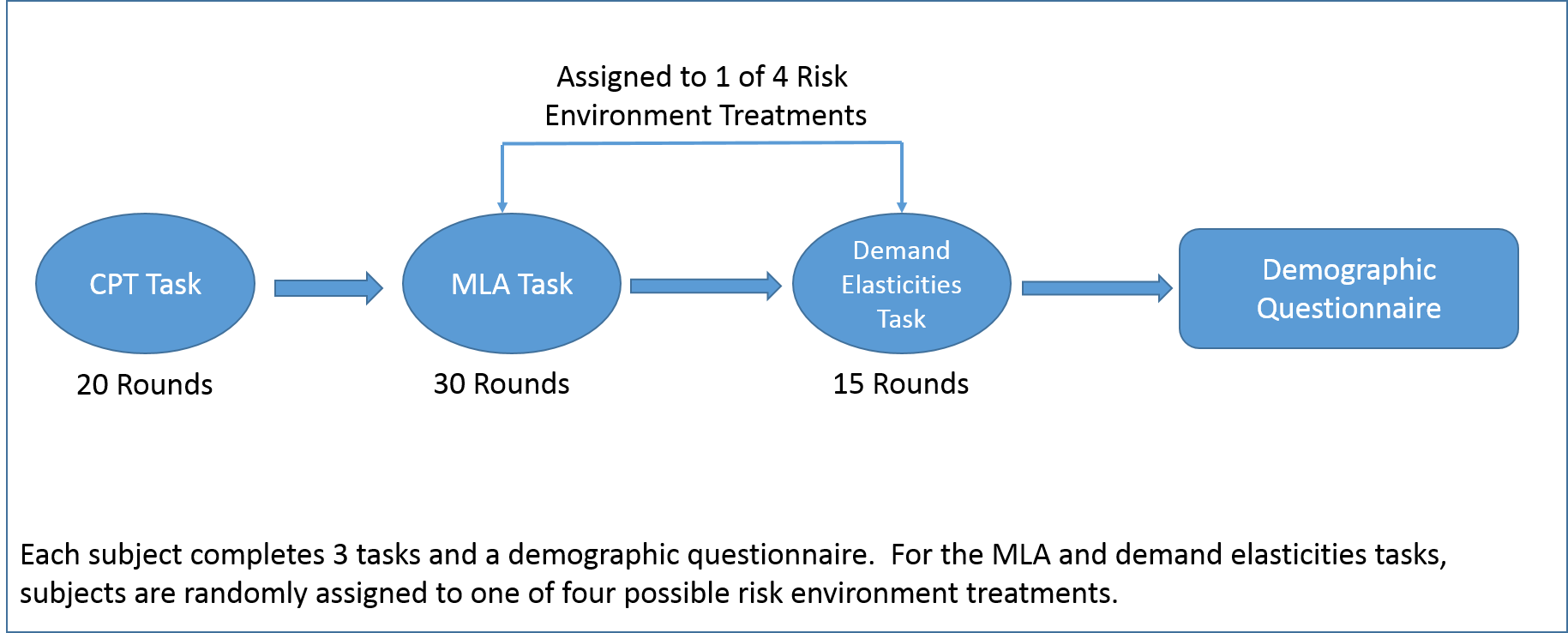

Figure 1 provides a schematic of the experimental design. Each subject will be randomly assigned to one of four possible risk environments. Within their assigned risk environment treatment, each subject will complete three different tasks: a task to measure Cumulative Prospect Theory (CPT) risk preferences, a task to measure Myopic Loss Aversion (MLA) risk preferences, and a task to measure insurance and cover crop demand elasticities. The CPT and MLA tasks will measure different types of risk preferences using methods that are standard in the academic literature. The third task will measure demand elasticities jointly for stylized crop insurance purchases and cover crop decisions.

The CPT, MLA, and demand elasticities tasks will each consist of a number of rounds in which subjects make a series of decisions involving risk. The CPT task will consist of 20 rounds, the MLA task will consist of 30 rounds, and the demand elasticities task will consist of 15 rounds. Each round of each task will require a subject to evaluate one or more risky decisions. Detailed descriptions of all tasks are included in Attachment B: Experimental Design Protocol. Each subject will complete all 65 rounds and a brief demographic questionnaire within a 90-minute period.

Figure 1: Schematic of Experimental Design

Risk environment treatments will vary the amount of risk attached to subjects’ earnings in the MLA and demand elasticities tasks. The four possible risk environment treatments are (1) low expected revenue and low revenue risk, (2) high expected revenue and low revenue risk, (3) low expected revenue and high revenue risk, and (4) high expected revenue and high revenue risk. Detailed descriptions of all risk environment treatments are included in Attachment B: Experimental Design Protocol. Subjects will be allocated equally across the four treatments, as shown in Table 1.

Table 1: Allocation of Subjects to Risk Environment Treatments

| | Low Expected Revenue | High Expected Revenue | Total |
| --- | --- | --- | --- |
| Low Revenue Risk | 125 | 125 | 250 |
| High Revenue Risk | 125 | 125 | 250 |
| Total | 250 | 250 | 500 |
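The statement does not describe how the random assignment is implemented. As a minimal sketch, assuming subjects are identified by hypothetical integer IDs and that the full roster is known in advance, shuffling the roster and dealing subjects round-robin across the four treatments produces the equal allocation shown in Table 1. The function name, the IDs, and the seed are illustrative and are not taken from the experiment software.

```python
import random
from collections import Counter

# The four risk environment treatments named in the text.
TREATMENTS = [
    ("low expected revenue", "low revenue risk"),
    ("high expected revenue", "low revenue risk"),
    ("low expected revenue", "high revenue risk"),
    ("high expected revenue", "high revenue risk"),
]


def assign_treatments(subject_ids, seed=None):
    """Randomly assign each subject to exactly one treatment.

    Subjects are shuffled and then dealt round-robin, so group sizes
    differ by at most one (and are equal when the roster size is a
    multiple of four). This is an assumed procedure, not the authors'.
    """
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    return {
        sid: TREATMENTS[i % len(TREATMENTS)]
        for i, sid in enumerate(shuffled)
    }


# Hypothetical roster of 500 subject IDs; the seed only makes the run repeatable.
assignment = assign_treatments(range(1, 501), seed=2017)
counts = Counter(assignment.values())
```

With a roster of 500, each treatment receives 125 subjects. In practice sessions are scheduled incrementally, so an implementation would more likely randomize within each session; that variant is not shown here.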

Respondents will participate in at most one session and will be randomly assigned to only one risk environment treatment. Participants will accrue earnings based on decisions made in all elicitation tasks, in addition to a $10 show-up fee. Earnings will be paid to them in cash at the end of the experiment. Subjects will complete all components of the experiment within 90 minutes. More details on the experimental design are presented in Attachment B: Experimental Design Protocol.

Once an experimental session is scheduled, it is the responsibility of the respondents to travel to the experiment site. URI’s SimLab is located in Rm. 200A Coastal Institute Building, University of Rhode Island, 1 Greenhouse Rd., Kingston RI 02881.

The experiment is programmed in Python and accessible via a Chrome web browser on laboratory computers. At the beginning of each session and elicitation task, participants are given detailed explanations and instructions for all procedures (Attachment D: Instructions). A short demographic questionnaire (Attachment E: Questionnaire) will also be given to participants at the end of the experiment, for the purposes of collecting control variables useful in basic regression analysis on laboratory experimental data.

Debriefing and Payment:

At the end of each session, all respondents will be debriefed. This gives an opportunity to address respondents' questions regarding the study.

Each participant will also receive a cash payment at the end of each 90-minute session. The cash payment will consist of earnings from the experiment plus a $10 show-up fee. The total cash payment cannot be known before the experiments take place, but average payments are expected to be between $20 and $25. Earnings from the experiment range from -$4.50 to $100, so feasible total payments including the show-up fee range from $5.50 to $110. While subjects may lose up to $4.50 through their choices in the experiment, all subjects are certain to receive a positive payment for the session overall. The probability of realizing a payment of $50 or more is 2.29%, conditional on the subjects’ decisions within the experiment. The conditional probability of realizing a payment of $75 or more is 0.692%.

The payments listed above are for the entire 90-minute session. Within the session, subjects will complete three elicitation tasks (65 rounds total) and a demographic questionnaire. Subjects will be paid based on the results of one randomly selected round from the full experiment. By paying subjects for one randomly drawn round, we prevent any wealth effects from distorting the findings of the experiment.2 This practice is standard in the literature (Azrieli et al., 2012). A more detailed explanation of the payment method and its justification is given in Supporting Statement A (section 9) and in Attachment B: Experimental Design.
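The payment rule described above can be sketched as follows. This is an illustration only: it assumes the paid round is drawn uniformly from all 65 rounds and that each round's earnings are already known as a number, neither of which the statement spells out. The function and variable names are hypothetical.

```python
import random

SHOW_UP_FEE = 10.00  # dollars, from the text
ROUNDS_PER_TASK = {"CPT": 20, "MLA": 30, "demand elasticities": 15}
TOTAL_ROUNDS = sum(ROUNDS_PER_TASK.values())  # 20 + 30 + 15


def session_payment(round_earnings, rng=None):
    """Pay the show-up fee plus the earnings of one randomly drawn round.

    round_earnings: one dollar amount per round, in session order.
    Returns (index of the paid round, total cash payment).
    The uniform draw over all rounds is an assumption.
    """
    if len(round_earnings) != TOTAL_ROUNDS:
        raise ValueError(f"expected {TOTAL_ROUNDS} rounds of earnings")
    rng = rng or random.Random()
    paid_round = rng.randrange(TOTAL_ROUNDS)
    return paid_round, SHOW_UP_FEE + round_earnings[paid_round]
```

Because only one round is paid, earnings do not accumulate across rounds during the session. The bounds in the text follow directly: a subject whose paid round loses $4.50 still receives $5.50, and one whose paid round earns $100 receives $110.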

Power Analysis:

The experiment has four between-subjects treatments that vary the risk environment in which subjects make their insurance purchases. We chose the sample size to provide a minimum detectable effect (MDE) of a 20% average difference in insurance demand elasticities across treatments. We selected a 20% MDE because it corresponds to the range of demand elasticities reported in the literature.

We use the power command in Stata/IC 14.1 to calculate the required sample size for a two-sample means test (independent samples t-test) of a 20% MDE between treatments against the null hypothesis of no difference in average insurance demand elasticity between treatments. Following the literature, we assume a population mean difference of -0.32, a standard deviation of the population mean difference equal to 0.1582, and 80% power for a two-tailed test at the 5% significance level (95% confidence).

Under these assumptions, we require at least 111 subjects per treatment group to have adequate power to detect differences in average insurance demand elasticities across treatments. This implies a minimum total sample size of 444 subjects for the full experiment. We plan to recruit no more than 500 subjects. We have selected our sample size at slightly higher than the minimum required sample size to accommodate (1) any subjects who respond to recruitment emails but are unable to participate because the session is over-subscribed, and (2) any subjects who decide to withdraw from the experiment before completing the full procedure.
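For readers who want to see the shape of such a calculation, the sketch below uses the standard normal-approximation formula for a two-sided, two-sample comparison of means with equal group sizes, n = 2((z_{1-α/2} + z_{power})·σ/δ)² per group. It is not the Stata computation used in the study: Stata's power command works with the t distribution, and the statement does not say what absolute difference δ the 20% MDE corresponds to, so δ is left as an input and the sketch should not be expected to reproduce the reported 111. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def per_group_n(delta, sd, alpha=0.05, power=0.80):
    """Approximate subjects needed per group to detect a mean difference.

    delta: absolute difference in means to detect (an assumed input).
    sd:    common standard deviation of the outcome in each group.
    Uses the normal approximation, so it runs slightly below a
    t-based calculation for the same inputs.
    """
    if delta == 0:
        raise ValueError("delta must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)          # quantile for target power
    return math.ceil(2 * ((z_alpha + z_power) * sd / abs(delta)) ** 2)


# Bookkeeping taken directly from the statement.
N_TREATMENTS = 4
REPORTED_N_PER_GROUP = 111   # result reported from the Stata calculation
RECRUITMENT_CAP = 500

minimum_total = N_TREATMENTS * REPORTED_N_PER_GROUP   # minimum total sample
buffer_subjects = RECRUITMENT_CAP - minimum_total     # allowance above minimum
```

The bookkeeping lines confirm the totals in the text: four groups of 111 give the minimum of 444, leaving an allowance of 56 subjects under the cap of 500 for over-subscribed sessions and withdrawals.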

3. Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling a special justification must be provided for any collection that will not yield “reliable” data that can be generalized to the universe studied.

Potential participants are recruited via email and in-class solicitations. Many participants will have participated in similar economic experiments conducted on the campus of the University of Rhode Island. Typically, a single solicitation email or in-class request is sufficient to recruit enough subjects to complete an experimental session.

Because no-shows and last-minute cancellations are common for any given experiment session, each session will also be “overbooked” by 2 potential participants as backups to ensure efficient use of scarce economics laboratory time. In cases where more people show up than can be accommodated in a session, any extras who show up before the scheduled start time will receive a $10 payment for time and travel and can reschedule for another session. This is common practice among experimentalists and the norm for experiments conducted at SimLab.

Experimental procedures were carefully designed to optimize the accuracy and reliability of information collected. In designing our experimental procedures and payment levels, we took into consideration academic standards for normal payoff ranges for student and non-student populations, statistical power considerations, budgetary limitations, and the history of discussions between OMB and ERS regarding other research approved under the previous generic clearance (OMB control # 0536-0070). More detailed explanations of the calibration of the risk elicitation tasks are provided in Attachment B: Experimental Design.

Results will inform future experiments that study risk management decision-making with farmer subjects. Results may be shared with other agencies within USDA for research purposes, but will not be used to evaluate existing policies or directly inform new policy making. Results may be disseminated as presentations and publications for academic and professional audiences, but will not be used to prepare official agency statistics.

4. Describe any tests of procedures or methods to be undertaken.

The methods and procedures used in this experiment have been used widely in the literature (see, for example, Holt and Laury (2002, 2005); Tanaka, Camerer and Nguyen (2010); Bruhin, Fehr-Duda and Epper (2010); and Gneezy and Potters (1997)).

Pre-tests were conducted at SimLab with 9 students over the period 12-15 April 2016 to test the experimental procedures, instructions, and interface, and to observe the distribution of payments and completion times. Subjects were undergraduate and graduate students recruited at the University of Rhode Island.

Specifically, the pre-tests:

1) Checked for bugs within the experimental software.
Result: Minor software bugs identified and corrected with the layout of menus in the CPT task.

2) Collected subject feedback on aspects of instructions that were unclear during the pretest.
Result: Feedback received and instructions updated accordingly.

3) Tested total running time for the experiment, including completing informed consent, reading instructions, completing all experimental rounds, completing demographic questionnaire, and paying subjects.
Result: Session lengths ranged from 47-74 minutes, with a median run time of 62 minutes.

4) Tested the distribution of subject payments.
Result: Payments ranged from $10-29.80, with a median payment of $23.33.

Additional details are available in Attachment H: Pretest Report.

5. Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

The contact individual for this research is Stephanie Rosch, Ph.D., Economic Research Service, USDA, telephone (202) 694-5049.

The research is being conducted under a cooperative agreement between the Economic Research Service and the University of Rhode Island. Data will be collected, entered, and provided to ERS by Professor Tom Sproul at the University of Rhode Island, telephone (401) 874-9197.

REFERENCES

Azrieli, Y., Chambers, C. P., & Healy, P. J. (2012). Incentives in experiments: A theoretical analysis. Working paper.

Bardsley, N. (2010). Experimental economics: Rethinking the rules. Princeton University Press.

Bruhin, A., Fehr-Duda, H., & Epper, T. (2010). Risk and rationality: Uncovering heterogeneity in probability distortion. Econometrica, 78(4), 1375-1412.

Camerer, C. (2011). The promise and success of lab-field generalizability in experimental economics: A critical reply to Levitt and List. Available at SSRN 1977749.

Croson, R., & Gächter, S. (2010). The science of experimental economics. Journal of Economic Behavior & Organization, 73(1), 122-131.

Druckman, J. N., & Kam, C. D. (2009). Students as experimental participants: A defense of the 'narrow data base'. Available at SSRN 1498843.

Gneezy, U., & Potters, J. (1997). An experiment on risk taking and evaluation periods. The Quarterly Journal of Economics, 631-645.

Guala, F. (2005). The methodology of experimental economics. Cambridge University Press.

Harrison, G. W., & List, J. A. (2004). Field experiments. Journal of Economic Literature, 42(4), 1009-1055.

Holt, C. A., & Laury, S. K. (2002). Risk aversion and incentive effects. American Economic Review, 92(5), 1644-1655.

Holt, C. A., & Laury, S. K. (2005). Risk aversion and incentive effects: New data without order effects. American Economic Review, 95(3), 902-904.

Levitt, S. D., & List, J. A. (2007). What do laboratory experiments measuring social preferences reveal about the real world? Journal of Economic Perspectives, 21(2), 153-174.

Plott, C. R. (1991). Will economics become an experimental science? Southern Economic Journal, 901-919.

Tanaka, T., Camerer, C. F., & Nguyen, Q. (2010). Risk and time preferences: Linking experimental and household survey data from Vietnam. American Economic Review, 100(1), 557-571.

1 Druckman and Kam (2009) provide extensive discussion of the external validity of experiments with student subjects that are designed specifically to test economic theories. See also Plott (1991), Guala (2005), Bardsley (2010), Croson and Gächter (2010), and Camerer (2011).

2 Wealth effects are the theoretical changes in behavior that occur after an individual’s wealth increases.


| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Higgins, Nathaniel - ERS |

| File Modified | 0000-00-00 |

| File Created | 2021-01-21 |
