NCS_2017MailDesignTest_AttachmentA_StudyPlan


American Community Survey Methods Panel Tests


OMB: 0607-0936

AMERICAN COMMUNITY SURVEY (ACS)

April 17, 2017

ACS RESEARCH & EVALUATION ANALYSIS PLAN

2017 ACS Mail Design Test

Table of Contents

1. Introduction

2. Research Questions and Methodology

3. Literature Review

4. Potential Actions

5. Major Schedule Tasks

6. Project Oversight

7. References

List of Tables

Table 1. Description of Mail Materials for all Treatments in the 2017 ACS Mail Design Test

Table 2. Research Questions for the Mail Design Test

1. Introduction

In 2015, the U.S. Census Bureau conducted the “2015 Summer Mandatory Messaging Test” (SMMT) using the September 2015 ACS panel (Oliver et al., 2016). The test assessed how four sets of proposed design changes to the American Community Survey (ACS) mail materials affected response, cost, and the reliability of survey estimates. The proposed changes aimed to improve the way we communicate the importance and benefits of the ACS while updating the look and feel of the mail materials. Of the four designs evaluated, the Revised Design proved to be the most promising. It used different logos on the envelopes and letters, bold lettering and boxes to highlight elements of the materials, and a box on the envelopes that read “Open Immediately.” The Revised Design significantly increased self-response rates and increased the precision of the survey estimates.

However, the Revised Design does not address the concern of some members of the public about the strong mandatory messages found throughout the ACS mail materials. Of the remaining candidate treatments, a variation of the Revised Design called the Softened Revised Design, in which references to the mandatory nature of the survey were either removed or softened throughout the mail materials, offered the best compromise. Unfortunately, the Softened Revised Design lowered self-response (Oliver et al., 2016). Therefore, we resumed our research into mail designs that soften the mandatory messages, with the goal of achieving higher self-response rates than those of the Softened Revised Design.

In June 2016, we presented the results of the SMMT and other related research (Clark, 2015a; Clark, 2015b; Barth, 2015; Heimel et al., 2016), as well as recommendations made by the White House’s Social and Behavioral Science Team (SBST), to the National Academies’ Committee on National Statistics (CNSTAT) to obtain feedback from experts in the field. In July 2016, we held a series of meetings with Don Dillman, a leading expert in survey methodology, to help us redesign the ACS mail materials to soften the mandatory messages while maximizing self-response. The result is two proposed experimental treatments that are derivatives of the Softened Revised Design: the Partial Redesign and the Full Redesign (Roberts, 2016).

For the “2017 ACS Mail Design Test,” we will evaluate these two new experimental treatments and a third, a modification of the Softened Revised Design treatment. These experimental treatments are designed to increase awareness of the ACS through new messaging and an updated look and feel that increase respondent engagement and self-response while softening the tone of the survey’s mandatory requirement.

Modifications to the original Softened Revised Design include placing the phrase “our toll-free number” before the toll-free number, an updated confidentiality statement, and a new design for the reminder letter outgoing envelope that incorporates the Reingold design recommendations (U.S. Census Bureau, 2015). The Partial Redesign and the Full Redesign treatments depart from the Softened Revised Design in the following ways:

Removal of the “Multilingual Brochure” to reduce the number of mail pieces. This information is now included in the enclosed letter.

The addition of a “Why We Ask” pamphlet, a color pamphlet designed to engage the recipient and provide summary information about the benefits of the ACS.

Design changes to the front page of the questionnaire to provide instruction information that would have been contained on the “Instruction Card.”

The use of a letter instead of a postcard for the final reminder to allow us to include login information on the letter to make responding easier. The accompanying envelope contains a new message, “Final Notice Respond Now” to make a strong push for response.

Unlike recent research (Clark, 2015a; Clark, 2015b; Barth, 2015; Oliver et al., 2016; Heimel et al., 2016), which focused on changes to specific mail materials, the Partial Redesign and Full Redesign treatments test holistic changes to the mailing strategy. The Full Redesign treatment differs from the Partial Redesign in the wording of its mail materials. Per Don Dillman’s recommendations, the messages in the letters are written in a “folksy,” deferential style that is “non-threatening” (see Dillman et al., 2014, for more on this approach).

Table 1 provides an overview of the differences among the four treatments (1 control and 3 experimental treatments). The data are organized by the five possible mailings that an address in sample could receive. The current production ACS mail contact strategy begins with an “initial mailing”. Prospective respondents are invited to complete the ACS online or wait for a paper questionnaire. About seven days later, these recipients receive a reminder letter. About fourteen days later, recipients who have not responded receive a “questionnaire package” containing a paper questionnaire. About four days later, these recipients receive a reminder postcard. About fourteen days later, the nonrespondents who are not eligible for the Computer-Assisted Telephone Interview (CATI) operation (we do not have a telephone number for them) receive a final reminder postcard.
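The production contact sequence described above can be summarized as cumulative day offsets from the initial mailing. The sketch below is illustrative only: it treats the approximate intervals in the text (“about seven days,” “about fourteen days,” and so on) as exact, and the mailing labels are our own shorthand rather than official operation names.

```python
# Approximate gaps (in days) between consecutive ACS production mailings,
# as described in the text. Treating "about N days" as exactly N days is an
# illustrative simplification; actual mail dates vary by panel.
MAILINGS = [
    ("Initial mailing", 0),
    ("Reminder letter", 7),
    ("Questionnaire package", 14),
    ("Reminder postcard", 4),
    ("Final reminder (non-CATI nonrespondents only)", 14),
]


def cumulative_schedule(mailings):
    """Return (label, day offset from the initial mailing) pairs."""
    schedule = []
    day = 0
    for label, gap in mailings:
        day += gap
        schedule.append((label, day))
    return schedule


if __name__ == "__main__":
    for label, day in cumulative_schedule(MAILINGS):
        print(f"Day {day:>2}: {label}")
```

Under these simplified intervals, the final reminder goes out about 39 days after the initial mailing.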

See Appendices A – D for facsimiles of the mail materials listed in Table 1. The Appendices are presented in the following order:

Appendix A: Current Production Treatment Materials (pages 1-12)

Appendix B: Softened Revised Design Treatment Materials (pages 1-12)

Appendix C: Partial Redesign Treatment Materials (pages 1-13)

Appendix D: Full Redesign Treatment Materials (pages 1-13)

Table 1. Description of Mail Materials for all Treatments in the 2017 Mail Design Test

| Mailing | Material | Current Production (control) | Softened Revised Design (experimental) | Partial Redesign (experimental) | Full Redesign (experimental) |
|---|---|---|---|---|---|
| Initial Mailing | Outgoing Envelope | Your Response is Required by Law; 11.5 x 6 (envelope size) | Your Response is Important to Your Community; Open Immediately; 11.5 x 6 | Your Response is Important to Your Community; Open Immediately; 11.5 x 6 | Your Response is Important to Your Community; 9.5 x 4.375 |
| | Frequently Asked Questions Brochure | YES | NO | NO | NO |
| | Letter | No Callout Box | Callout Box; Softened Wording | Callout Box; Softened Wording | Callout Box; Wording Changes |
| | Instruction Card | YES | YES | YES* | NO |
| | Multilingual Information | Brochure Included | Brochure Included | Included in the letter | Included in the letter |
| | “Why We Ask” pamphlet | NO | NO | YES | YES |
| Reminder Letter | Outgoing Envelope | No message | No message | No message | No message |
| | Letter | | | | Wording changes |
| Questionnaire Package | Outgoing Envelope | Your Response is Required by Law | Your Response is Important to Your Community; Open Immediately | Your Response is Important to Your Community; Open Immediately | Your Response is Important to Your Community |
| | Questionnaire | Current | Current | Design Changes** | Design Changes** |
| | Frequently Asked Questions Brochure | YES | NO | NO | NO |
| | Letter | Current | Softened Wording | Softened Wording | Wording Changes |
| | Instruction Card | YES | YES | NO | NO |
| | Return Envelope | YES | YES | YES | YES |
| Reminder Postcard | | Postcard | Postcard; Softened Wording | Postcard; Softened Wording | Postcard; Wording changes |
| Final Reminder (only nonrespondents not eligible for CATI) | | Postcard | Postcard; Softened Wording | Letter***; Softened Wording; ‘Final Notice Respond Now’ on envelope | Letter; Wording changes; ‘Final Notice Respond Now’ on envelope |

* Necessary because the letter did not have space to print both the respondent address and the login information.

**The front page will include instruction information that would have been placed on the instruction card.

***The wording placement is slightly different compared to the Softened Revised Design Treatment postcard.

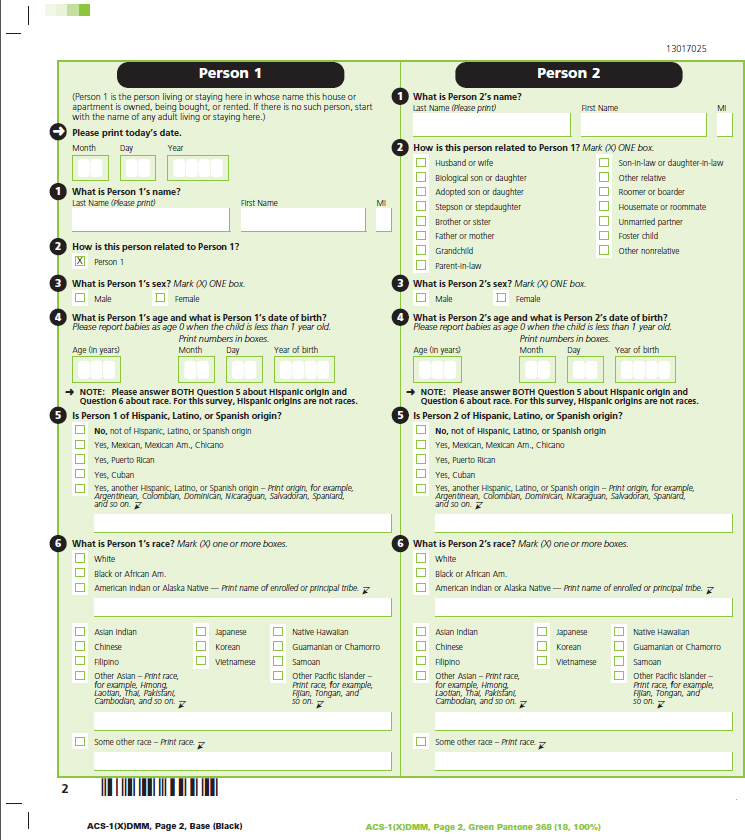

Because we provide more instruction information about the ACS on the first page of the paper questionnaire associated with the Partial and Full Redesign treatments, the “please print today’s date” question found on the first page of the current production questionnaire (see page 9 of Appendix A) has been moved to the second page of the revised questionnaire (see page 11 of Appendix E). This move could potentially affect analysts’ ability to calculate a person’s age when not provided.

When a person’s age is not provided and only the month and year of birth are reported (see question 4 of Appendix E), analysts calculate the missing age using the “please print today’s date” information. Historically, such cases have been uncommon. However, they could be affected by moving the “please print today’s date” question to the second page of the questionnaire. We will assess this change in this study.
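Why the date matters can be illustrated with a small sketch. Assuming, hypothetically, that age is derived only from the birth month and year plus the month and year the form was completed (with the year of completion known, for example from the sample panel), the age is exact when the two months differ, but is ambiguous by one year when they coincide (the birth day is unknown) or when the month of today’s date is left blank. The function name is ours, and this is not the ACS editing rule, only an illustration of the dependency.

```python
from typing import Optional, Tuple


def age_bounds(birth_month: int, birth_year: int,
               form_month: Optional[int], form_year: int) -> Tuple[int, int]:
    """Return (min_age, max_age) consistent with the reported dates.

    Hypothetical illustration: the day of birth is unknown, so when the
    form month equals the birth month, or the form month is missing, the
    birthday may or may not have passed and two ages are possible.
    """
    years = form_year - birth_year
    if form_month is None or form_month == birth_month:
        return (years - 1, years)  # birthday may or may not have occurred yet
    if form_month > birth_month:
        return (years, years)      # birthday already passed this year
    return (years - 1, years - 1)  # birthday not yet reached this year
```

For example, `age_bounds(6, 1980, 9, 2017)` yields a single age, while `age_bounds(6, 1980, None, 2017)` leaves two possible ages.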

2. Research Questions and Methodology

2.1 Research Questions

The results of the “2017 ACS Mail Design Test” have the potential to improve the respondent experience by addressing two concerns: increasing respondent engagement and reducing respondent burden. We hope that addressing these concerns will result in a higher self-response rate for the Partial Redesign or Full Redesign treatment than for the Softened Revised Design. Table 2 presents the research questions that this test will answer at specified points in time.

Table 2. Research Questions for the Mail Design Test

| Research Question | Treatment Comparison | When to Compare |
|---|---|---|
| What is the impact on self-response return rates of placing the multilingual information in a letter instead of a brochure and including a “Why We Ask” pamphlet? | Partial Redesign vs. Softened Revised Design | |
| What is the impact on self-response return rates of placing the multilingual information in a letter instead of a brochure, including a “Why We Ask” pamphlet, and using “folksy” wording that conveys a deferential tone? | Full Redesign vs. Softened Revised Design | |
| What is the impact on self-response return rates of using “folksy” wording that conveys a deferential tone? | Full Redesign vs. Partial Redesign | |
| What is the overall impact of each experimental treatment vs. the control treatment on final response rates, data collection costs, and reliability of survey estimates? | All Experimental Treatments vs. Current Production | |
| Is the item missing data rate for the month/day fields of the “please print today’s date” question the same across treatments? | Partial and Full Redesign Treatments vs. Current Production | |

The self-response return rates for the treatment comparisons will be calculated at the following points in time:

Date the mail questionnaire package is mailed to households that did not respond online:

Rationale: Determine the effect of each treatment on self-response for households provided one mode for self-response (Internet) and a reminder letter (M1 mailing universe).

Date the final reminder postcard/letter is mailed to households that did not respond to the survey online or by mail:

Rationale: Determine the cumulative effect of each treatment on self-response for nonrespondent households that now have two choices for self-response (Internet and mail) and have received a reminder postcard (M2 mailing universe).

Through the Computer-Assisted Telephone Interview (CATI) closeout:

Rationale: Determine the cumulative effect of each treatment on self-response for the households that have received a final reminder postcard or letter and still have not responded (M3 mailing universe).

The final response rates will be calculated at closeout, after all data collection operations, including the CATI and Computer-Assisted Personal Interview (CAPI) nonresponse operations, are completed.

Rationale: Determine the effect of each treatment on overall survey response and the impact on costs and survey estimates.

Self-response return rates, final response rates, data collection costs, and effect on survey estimates will play a role in evaluating the experimental treatments. See Section 2.2 for the formulae for the self-response return rates and the final response rates.

To assess whether moving the date question from the first page of the questionnaire to the second page has an impact, we will first answer the following question: Is the item missing data rate for the month/day fields of the “please print today’s date” question the same for the treatment and production questionnaires? The item missing data rates will be calculated for the control treatment, the Partial Redesign treatment, and the Full Redesign treatment. If the answer to this question is “yes,” then there is no impact. If there is an impact, we will report the number of cases for which the age calculation would be affected.

2.2 Methodology

2.2.1 Sample Design

The monthly ACS production sample of approximately 295,000 addresses is divided into 24 groups, where each group contains approximately 12,000 addresses. Each group is a representative subsample of the entire monthly sample and each monthly sample is representative of the entire yearly sample and the country. We will use two randomly selected groups for each treatment. Hence, each treatment will have a sample size of approximately 24,000 addresses. In total, approximately 96,000 addresses will be used for the four treatments, which includes the current production treatment group. The current production treatment will have the same mail materials as the rest of production, but will be sorted and mailed at the same time as the other treatment materials.
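The allocation above can be sketched as drawing eight distinct groups out of 24 and giving two to each treatment; at roughly 12,000 addresses per group, that yields about 24,000 addresses per treatment and about 96,000 in total. The group IDs 1 to 24, the function name, and the seed below are hypothetical; this plan does not describe the Census Bureau’s actual selection procedure beyond random selection.

```python
import random

N_GROUPS = 24             # monthly sample divided into 24 groups (from the text)
GROUPS_PER_TREATMENT = 2  # two randomly selected groups per treatment
TREATMENTS = [
    "Current Production",
    "Softened Revised Design",
    "Partial Redesign",
    "Full Redesign",
]


def assign_groups(seed=None):
    """Randomly assign distinct, hypothetical group IDs 1-24 to each treatment."""
    rng = random.Random(seed)
    chosen = rng.sample(range(1, N_GROUPS + 1),
                        GROUPS_PER_TREATMENT * len(TREATMENTS))
    return {
        t: sorted(chosen[i * GROUPS_PER_TREATMENT:(i + 1) * GROUPS_PER_TREATMENT])
        for i, t in enumerate(TREATMENTS)
    }


if __name__ == "__main__":
    for treatment, groups in assign_groups(seed=2017).items():
        # About 12,000 addresses per group, so about 24,000 per treatment.
        print(treatment, groups, "approx.", 12_000 * len(groups), "addresses")
```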

The sample sizes were chosen so that we can conduct hypothesis tests at the 90-percent confidence level to determine whether the differences between control and experimental treatment self-response return rates are greater than 1.25 percent. In calculating the sample size, we assume that the survey achieves a 50-percent response rate and that our statistical test has 80-percent power (the probability of discerning a true 1.25-percent difference).
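For intuition, the textbook normal-approximation formula for comparing two independent proportions gives a per-group size of n = (z_{1-α/2} + z_{1-β})² · 2p(1 − p) / δ². The sketch below applies it with the stated inputs, assuming a two-sided test, p = 0.50, and reading the 1.25 percent difference as 1.25 percentage points. It ignores the ACS’s complex sample design and is not the Census Bureau’s calculation, so it need not reproduce the planned 24,000 addresses per treatment; under these simplifications it gives a little under 20,000 per group.

```python
import math
from statistics import NormalDist


def n_per_group(p: float, delta: float,
                alpha: float = 0.10, power: float = 0.80) -> int:
    """Textbook sample size per group for a two-sided, two-sample test of proportions.

    Assumes equal group sizes with response rates near p; ignores design
    effects from the ACS's complex sample design.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.645 at the 90% level
    z_beta = NormalDist().inv_cdf(power)           # about 0.842 for 80% power
    return math.ceil((z_alpha + z_beta) ** 2 * 2 * p * (1 - p) / delta ** 2)


if __name__ == "__main__":
    # Inputs from the text: 50% response rate, 1.25-point difference,
    # 90% confidence level, 80% power.
    print(n_per_group(p=0.50, delta=0.0125))
```

Halving the detectable difference roughly quadruples the required sample, which is why small expected effects drive the size of these tests.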

2.2.2 Self-Response Return Rates

$$\text{Self-Response Return Rate} = \frac{\text{Number of mailable/deliverable sample addresses that provided a non-blank}^{1}\text{ paper questionnaire via mail or TQA, OR a complete or sufficient partial Internet response}^{2}}{\text{Total number of mailable/deliverable sample addresses}^{3}} \times 100$$

Note: The numerator encompasses all self-response modes, including Telephone Questionnaire Assistance (TQA) (part of the mail mode), which is available throughout all phases of data collection. Depending on the point in time, however, direct response via paper questionnaire may not be possible (for example, before the questionnaire package has been mailed).
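The rate above can be sketched as a weighted ratio evaluated at a cutoff day. This is a minimal sketch under stated assumptions: the `Address` record and its field names are hypothetical, all qualifying self-responses (non-blank paper form via mail or TQA, or a complete or sufficient partial Internet response) are collapsed into a single response day, and base weights are used because the rates are computed with weights that reflect sampling probabilities. Addresses whose initial mailing was undeliverable and that never responded in any mode are dropped, per footnote 3.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Address:
    """Hypothetical per-address record; field names are illustrative."""
    base_weight: float                # reflects sampling probability only
    undeliverable: bool               # initial mailing returned as undeliverable
    any_response: bool                # responded in any mode (incl. CATI/CAPI)
    self_response_day: Optional[int]  # day a qualifying self-response arrived


def self_response_return_rate(addresses: Iterable[Address],
                              cutoff_day: Optional[int] = None) -> float:
    """Weighted self-response return rate (percent) as of cutoff_day."""
    num = den = 0.0
    for a in addresses:
        if a.undeliverable and not a.any_response:
            continue  # not mailable/deliverable: excluded (footnote 3)
        den += a.base_weight
        if a.self_response_day is not None and (
                cutoff_day is None or a.self_response_day <= cutoff_day):
            num += a.base_weight
    return 100.0 * num / den
```

Evaluating the function at cutoff days matching the questionnaire package mailing, the final reminder mailing, and CATI closeout mirrors the three comparison points in Section 2.1.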

2.2.3 Final Response Rates

$$\text{Final Response Rate} = \frac{\text{Number of addresses that provided a complete or sufficient partial response}}{\text{Total number of mailable/deliverable sample addresses, excluding nonrespondent addresses that were not subsampled for CAPI, and addresses determined to be out-of-scope}} \times 100$$

Note: The numerator will be calculated across mode and by mode (Internet, mail, CATI, and CAPI).
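A minimal sketch of the final response rate, computed overall and by mode, follows. The `Case` record, its field names, and the mode labels are hypothetical; the sketch assumes each case carries a weight reflecting its sampling probabilities, and it excludes out-of-scope and non-mailable addresses and nonrespondents to self-response and CATI that were not subsampled for CAPI, as in the denominator above.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class Case:
    """Hypothetical per-address record; field names are illustrative."""
    base_weight: float
    mailable: bool                # mailable/deliverable address
    out_of_scope: bool            # determined to be out-of-scope
    capi_subsampled: bool         # selected for CAPI follow-up
    response_mode: Optional[str]  # "internet", "mail", "cati", "capi", or None


def final_response_rates(cases: Iterable[Case]) -> Dict[str, float]:
    """Weighted final response rates (percent), overall ("all") and by mode."""
    denom = 0.0
    by_mode: Counter = Counter()
    for c in cases:
        if not c.mailable or c.out_of_scope:
            continue
        responded_before_capi = c.response_mode in ("internet", "mail", "cati")
        if not responded_before_capi and not c.capi_subsampled:
            continue  # nonrespondent not subsampled for CAPI: excluded
        denom += c.base_weight
        if c.response_mode is not None:
            by_mode[c.response_mode] += c.base_weight
    rates = {mode: 100.0 * w / denom for mode, w in by_mode.items()}
    rates["all"] = 100.0 * sum(by_mode.values()) / denom
    return rates
```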

2.2.4 Item Missing Data Rates

$$\text{Item Missing Data Rate} = \frac{\text{Number of eligible housing units that did not provide a required response for the item}}{\text{Total number of eligible housing units required to provide a response to the item}} \times 100$$
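Applied to the “please print today’s date” question, the rate above can be sketched as follows. The record layout and the rule that a form is missing the item when either the month or the day field is blank are our assumptions, and every record passed in is assumed to be eligible (required to answer the item).

```python
from typing import Iterable, Optional, Tuple

# Hypothetical record: (month, day) as written in the "please print today's
# date" item on a returned paper questionnaire; None marks a blank field.
DateFields = Tuple[Optional[int], Optional[int]]


def item_missing_rate(records: Iterable[DateFields]) -> float:
    """Percent of eligible forms missing the month or day of today's date."""
    records = list(records)
    missing = sum(1 for month, day in records if month is None or day is None)
    return 100.0 * missing / len(records)
```

Computing this rate separately for the control, Partial Redesign, and Full Redesign questionnaires gives the three rates to be compared.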

2.2.5 Standard Error of the Estimates

We will estimate the variances of the point estimates using the Successive Differences Replication (SDR) method with 80 replicates, the standard method used in the ACS (see U.S. Census Bureau, 2014, Chapter 12). In calculating the self-response return rates and final response rates, we will use replicate base weights, which account only for sampling probabilities. We will calculate the variance for each rate and for the difference between rates using the formulas below.

$$\operatorname{Var}(\hat{X}_0) = \frac{4}{80}\sum_{r=1}^{80}\left(\hat{X}_r - \hat{X}_0\right)^2$$

$$\operatorname{Var}(\hat{X}_0 - \hat{Y}_0) = \frac{4}{80}\sum_{r=1}^{80}\left[\left(\hat{X}_r - \hat{Y}_r\right) - \left(\hat{X}_0 - \hat{Y}_0\right)\right]^2$$

Where:

$\hat{X}_r$ = the $X$ estimate calculated using the $r$th replicate

$\hat{Y}_r$ = the $Y$ estimate calculated using the $r$th replicate

$\hat{X}_0$ = the $X$ estimate calculated using the full sample

$\hat{Y}_0$ = the $Y$ estimate calculated using the full sample
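The SDR calculation can be sketched as below, assuming the full-sample and replicate estimates have already been computed with the replicate base weights. The function names are ours, and the two-sided z comparison at the 90 percent level is our illustration of the hypothesis tests described in Section 2.2.1, not a documented Census Bureau routine.

```python
import math
from statistics import NormalDist
from typing import Sequence, Tuple


def sdr_variance(full: float, replicates: Sequence[float]) -> float:
    """SDR variance: (4 / R) * sum over r of (replicate_r - full)^2."""
    R = len(replicates)
    return 4.0 / R * sum((x_r - full) ** 2 for x_r in replicates)


def sdr_difference_test(x_full: float, x_reps: Sequence[float],
                        y_full: float, y_reps: Sequence[float],
                        alpha: float = 0.10) -> Tuple[float, float, bool]:
    """Return (difference, standard error, significant?) for X - Y."""
    if len(x_reps) != len(y_reps):
        raise ValueError("X and Y must use the same set of replicates")
    diff = x_full - y_full
    # The variance of a difference uses replicate-by-replicate differences.
    diff_reps = [xr - yr for xr, yr in zip(x_reps, y_reps)]
    se = math.sqrt(sdr_variance(diff, diff_reps))
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.645 at the 90% level
    return diff, se, abs(diff / se) > z_crit
```

Differencing replicate by replicate, rather than adding the two variances, lets the replicate weights carry any covariance between the two estimates.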

2.2.6 Effect of Response on Cost and Survey Estimates

In evaluating the three experimental treatments, it is not sufficient to compare only their self-response return rates and final response rates. A treatment’s data collection costs and effect on the survey estimates, if adopted, are also important. Past and recent research (Dillman et al., 1996; Barth, 2015; Oliver et al., 2016) has shown that treatments that soften or remove the mandatory language in the ACS reduce self-response. As a result, the CATI and CAPI workloads, which cost more per case to complete than self-response cases, increase.

For each experimental treatment, we will evaluate impacts under the following three scenarios:

Maintain the current sample size: this scenario will apply the results from this test to a full year of ACS sample to evaluate the effect on the cost and reliability of estimates of using the given experimental treatment methodology for an entire ACS data collection year.

Maintain current reliability: this scenario will use the results from this test to determine the initial sample size and cost necessary to maintain the reliability achieved using the current ACS methodology.

Maintain costs: this scenario applies the results from this test to determine how much the sample size would need to decrease or how much it could increase to collect the ACS data using the test strategies within the 2017 budget. The effect on reliability of the survey estimates will also be determined.
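The arithmetic behind the “maintain costs” scenario can be sketched with a simple expected-cost model: if a treatment shifts cases from self-response into costlier follow-up, the cost per sampled address rises and a fixed budget buys fewer addresses. Everything here is hypothetical: the stage labels, the mode mix, the unit costs, and the function names are illustrative placeholders, not figures or methods from this plan, and the effect on reliability is not modeled.

```python
from typing import Dict

# Hypothetical inputs, not taken from this plan:
#   mix:       share of sampled addresses resolved at each stage (sums to 1)
#   unit_cost: assumed average cost per address resolved at that stage
Mix = Dict[str, float]
Costs = Dict[str, float]


def cost_per_address(mix: Mix, unit_cost: Costs) -> float:
    """Expected data collection cost per sampled address for a given mix."""
    return sum(share * unit_cost[stage] for stage, share in mix.items())


def sample_size_at_fixed_budget(current_n: int, current_mix: Mix,
                                new_mix: Mix, unit_cost: Costs) -> int:
    """'Maintain costs': largest sample the current budget buys under new_mix."""
    budget = current_n * cost_per_address(current_mix, unit_cost)
    return int(budget // cost_per_address(new_mix, unit_cost))
```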

3. Literature Review

Between October 2013 and November 2014, the U.S. Census Bureau collaborated with Reingold Inc. to research and propose design and messaging changes to the ACS mail materials that could potentially increase the ACS self-response rates (U.S. Census Bureau, 2015). The high-level recommendations from the report are:

Emphasize the Census brand in ACS mail materials.

Use visual design principles to draw attention to key messages and help respondents better navigate ACS material.

Use deadline-oriented messages to attract attention and create a sense of urgency.

Prioritize an official “governmental” appearance over a visually rich “marketing” approach.

Emphasize effective “mandatory” messaging.

Demonstrate benefits of ACS participation to local communities.

Draw a clearer connection between objectionable questions and real-world applications and benefits.

Streamline mail packages and individual materials.

Based on these and other recommendations, the ACS conducted five field tests in 2015 to improve the mail materials and messaging and simultaneously address respondent burden, respondent concerns about the perceived intrusiveness of the ACS, and self-response rates. A description of these five tests is provided below:

Paper Questionnaire Package Test: conducted March 2015 to examine ways to reduce the complexity of this package (Clark, 2015a).

Mail Contact Strategy Modification Test: conducted April 2015 to examine ways to streamline the mail materials (Clark, 2015b).

Envelope Mandatory Messaging Test: conducted May 2015 to study the impact of removing mandatory messages from the envelopes (Barth, 2015).

Summer Mandatory Messaging Test: conducted September 2015 to study the impact of removing or modifying the mandatory messages from the mail materials (Oliver et al., 2016).

Why We Ask: conducted November 2015 to study the impact of including a flyer in the paper questionnaire mailing package explaining why the ACS asks the questions that it does (Heimel et al., 2016).

In June 2016, we presented the results of this research, as well as recommendations made by the White House’s Social and Behavioral Science Team (SBST), to the National Academies’ Committee on National Statistics (CNSTAT) to obtain feedback from experts in the field (Plewes, 2016). In July 2016, we also held a series of meetings with Don Dillman, who critiqued our current mail materials, messaging, and 2015 research and offered suggestions for improvement. The result is two newly proposed mail treatments that are derivatives of the Softened Revised Design treatment from the “2015 Summer Mandatory Messaging Test” (Oliver et al., 2016): the Partial Redesign and the Full Redesign. These two new treatments involve changes to the ACS mail materials and communication strategy based on social exchange theory. See Dillman et al. (2014) for details.

4. Potential Actions

The conclusions drawn from the “2017 ACS Mail Design Test” could result in new mail materials being put into production, as well as revisions to the current materials.

5. Major Schedule Tasks

| Tasks (minimum required) | Planned Start (mm/dd/yy) | Planned Completion (mm/dd/yy) |
|---|---|---|
| Author drafts REAP, obtains CR feedback, updates and distributes Final REAP | 12/07/16 | 04/18/17 |
| PM/Author conducts research activities | 07/20/17 | 11/09/17 |
| Author drafts initial report, obtains CR feedback, updates and obtains final report sign off by the CRs and Division Chief | 11/13/17 | 05/09/18 |
| Post report to the Internet | 05/10/18 | 05/31/18 |

7. References

Barth, D. (2015). “2015 Envelope Mandatory Messaging Test”. Washington, DC, U.S. Census Bureau. Retrieved on February 10, 2017 from

http://www.census.gov/content/dam/Census/library/working-papers/2016/acs/2016_Barth_01.pdf

Clark, S. (2015a). “2015 Replacement Mail Questionnaire Package Test”. Washington, DC, U.S. Census Bureau. Retrieved on March 23, 2017 from

https://www.census.gov/content/dam/Census/library/working-papers/2015/acs/2015_Clark_02.pdf

Clark, S. (2015b). “2015 Mail Contact Strategy Modification Test”. Washington, DC, U.S. Census Bureau. Retrieved on March 23, 2017 from

https://www.census.gov/content/dam/Census/library/working-papers/2015/acs/2015_Clark_03.pdf

Dillman, D., Singer, E., Clark, J., & Treat, J. (1996). “Effects of Benefits Appeals, Mandatory Appeals, and Variations in Statements of Confidentiality on Completion Rates for Census Questionnaires,” Public Opinion Quarterly, 60, 376-389.

Dillman, D., Smyth, J., & Christian, L. (2014). Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method (4th ed.). Hoboken, NJ: Wiley.

Heimel, S., Barth, D., & Rabe, M. (2016). “‘Why We Ask’ Mail Package Insert Test”. Washington, DC, U.S. Census Bureau. Retrieved on February 21, 2017 from

http://www.census.gov/content/dam/Census/library/working-papers/2016/acs/2016_Heimel_01.pdf

Oliver, B., Risley, M., & Roberts, A. (2016). “2015 Summer Mandatory Messaging Test”. Washington, DC, U.S. Census Bureau. Retrieved on February 10, 2017 from

https://www.census.gov/content/dam/Census/library/working-papers/2016/acs/2016_Oliver_01.pdf

Plewes, T.J. (2016). “Reducing Response Burden in the American Community Survey”. Proceedings of a Workshop at the National Academies of Sciences, Engineering, and Medicine, Committee on National Statistics, Washington, DC, March 8-9, 2016.

Roberts, A. (2016). “2017 ACS Mail Design Test”. Washington, DC, U.S. Census Bureau. Retrieved on February 10, 2017 from the “2017 Mail Design Test” folder located in the ACSO SharePoint site.

U.S. Census Bureau (2014). “American Community Survey Design and Methodology”. Retrieved on February 10, 2017 from

U.S. Census Bureau (2015). “2014 American Community Survey Messaging and Mail Package Assessment Research: Cumulative Findings Report”. Retrieved on February 10, 2017 from https://www.census.gov/content/dam/Census/library/working-papers/2014/acs/2014_Walker_02.pdf

Appendix A: Current Production Treatment Materials

Initial Mailing – Outgoing Envelope

Initial Mailing - FAQ Brochure:

Current Production Treatment Materials

Initial

Mailing - Letter

Current Production Treatment Materials

Initial Mailing - Instruction Card

Current Production Treatment Materials

Initial Mailing - Multilingual Brochure

Reminder Letter – Outgoing Envelope

Current Production Treatment Materials

Reminder Letter

Current Production Treatment Materials

Questionnaire Package – Outgoing Envelope

Questionnaire Package – Return Envelope

Current Production Treatment Materials

Questionnaire Package – Letter

Current Production Treatment Materials

Questionnaire Package – Instruction Card

Current Production Treatment Materials

Questionnaire Package– Page One of Questionnaire

Current Production Treatment Materials

Questionnaire Package - FAQ Brochure:

Current Production Treatment Materials

Reminder

Postcard

Current Production Treatment Materials

Final Reminder Postcard

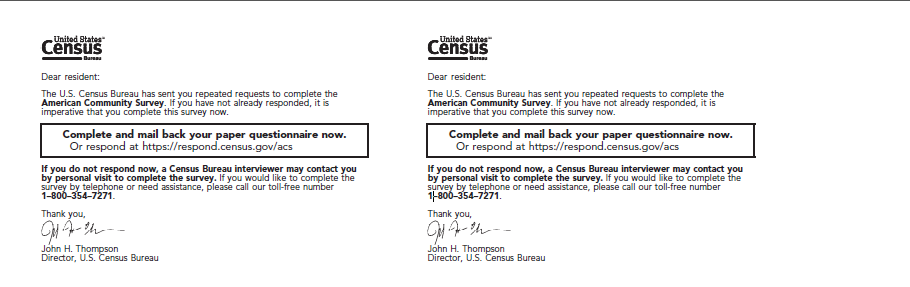

Appendix B: Softened Revised Design Treatment Materials

Initial Mailing – Outgoing Envelope

Softened Revised Design Treatment Materials

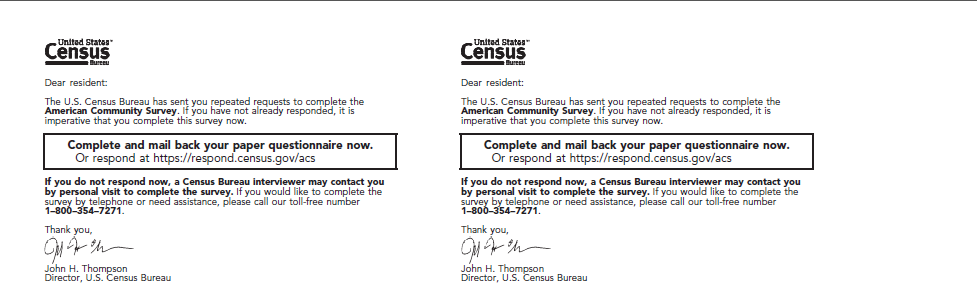

Initial Mailing – Letter

Softened Revised Design Treatment Materials

Initial Mailing – Instruction Card

Softened Revised Design Treatment Materials

Initial Mailing – Multilingual Brochure

Softened Revised Design Treatment Materials

Reminder Letter – Outgoing Envelope

Softened Revised Design Treatment Materials

Reminder Letter

Softened Revised Design Treatment Materials

Questionnaire

Package – Outgoing Envelope

Questionnaire Package – Return Envelope

Softened Revised Design Treatment Materials

Questionnaire Package – Letter

Softened Revised Design Treatment Materials

Questionnaire Package – Instruction Card

Softened Revised Design Treatment Materials

Questionnaire Package - Page One of Questionnaire

Softened Revised Design Treatment Materials

Reminder Postcard

Softened Revised Design Treatment Materials

Final Reminder Postcard

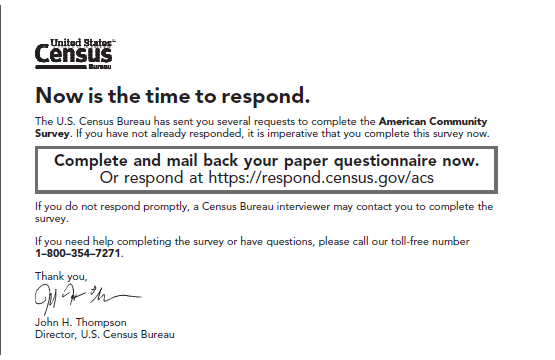

Appendix C: Partial Redesign Treatment Materials

Initial Mailing – Outgoing Envelope

Partial Redesign Treatment Materials

Initial Mailing – Why We Ask Brochure (front)

Partial Redesign Treatment Materials

Initial Mailing – Why We Ask Brochure (back)

Partial Redesign Treatment Materials

Initial Mailing – Letter

Partial Redesign Treatment Materials

Partial Redesign Treatment Materials

Initial Mailing – Instruction Card

Partial Redesign Treatment Materials

Reminder Letter – Outgoing Envelope

Partial Redesign Treatment Materials

Reminder Letter

Partial Redesign Treatment Materials

Questionnaire Package – Outgoing Envelope

Questionnaire Package – Return Envelope

Partial Redesign Treatment Materials - DRAFT

Questionnaire Package – Letter

Partial Redesign Treatment Materials

Questionnaire Package – Page One of Questionnaire

Partial Redesign Treatment Materials

Reminder Postcard

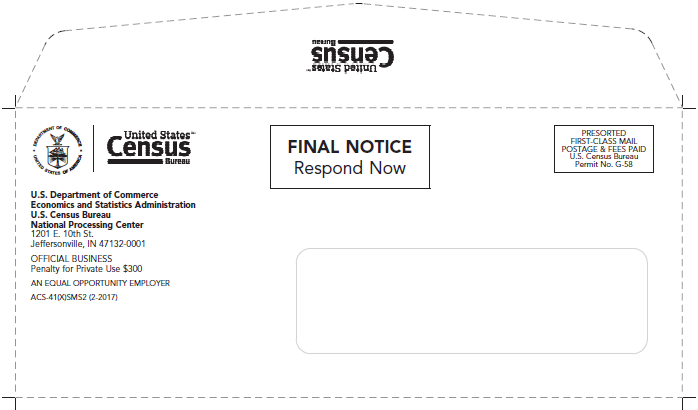

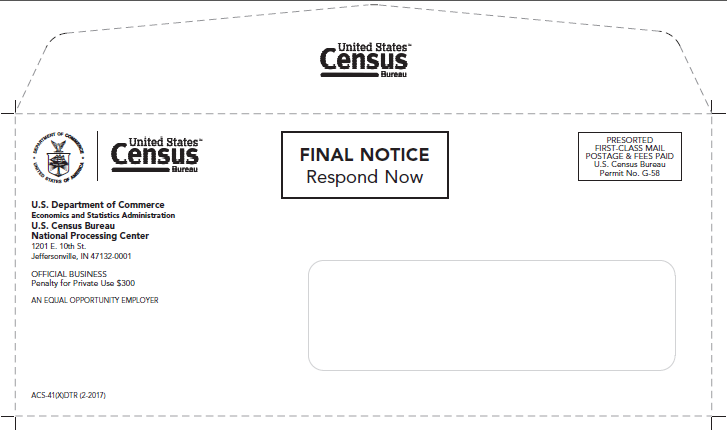

Final Reminder Letter – Outgoing Envelope

Partial Redesign Treatment Materials

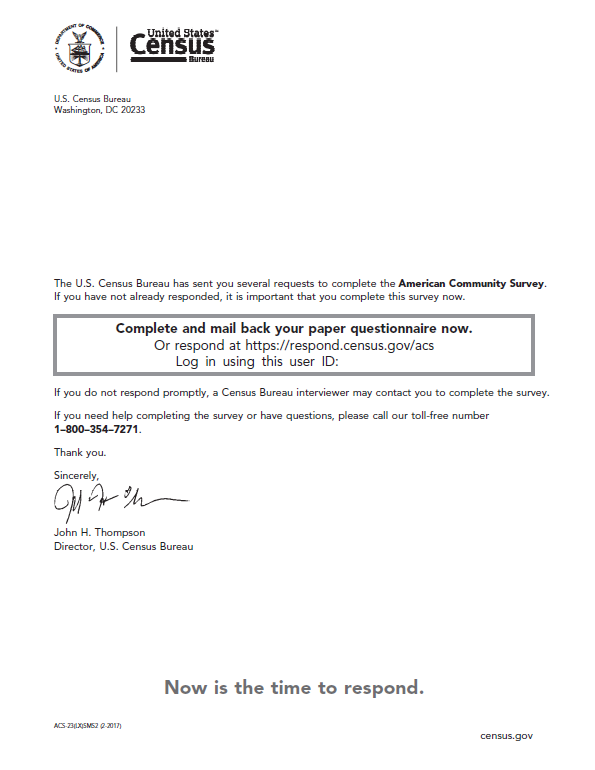

Final Reminder Letter

Appendix D: Full Redesign Treatment Materials

Initial Mailing – Outgoing Envelope

Initial Mailing – Why We Ask Brochure

Initial Mailing – Why We Ask Brochure (back)

Initial Mailing – Letter

Reminder Letter – Outgoing Envelope

Reminder Letter

Questionnaire Package – Outgoing Envelope

Questionnaire Package – Return Envelope

Questionnaire Package – Letter

Questionnaire Package – Page One of Questionnaire

Questionnaire Package – Page Two of Questionnaire

Reminder Postcard

Final Reminder Letter – Outgoing Envelope

Final Reminder Letter

1 A blank paper form is one that contains no data-defined persons and no respondent-provided telephone number.

2 A sufficient partial Internet response is one in which the respondent reaches the first question in the detailed person questions section for the first person in the household.

3 We will remove addresses where the initial mailing was returned by the postal service as undeliverable-as-addressed and for which we did not receive a response in any mode.
