VIQI OMB Supporting Statement A - REVISED - 05082018 clean - with incentives substudy


Variations in Implementation of Quality Interventions (VIQI)

OMB: 0970-0508


OMB Information Collection Request

New Collection

Supporting Statement

Part A

Original Submission: November 2017

Updated as of April 2018

Submitted By:

Office of Planning, Research, and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

4th Floor, Mary E. Switzer Building

330 C Street, SW

Washington, D.C. 20201

Project Officers:

Ivelisse Martinez-Beck

Amy Madigan

A1. Necessity for the Data Collection

The Office of Planning, Research, and Evaluation (OPRE) in the Administration for Children and Families (ACF) at the U.S. Department of Health and Human Services (HHS) has launched the Variations in Implementation of Quality Interventions (VIQI): Examining the Quality-Child Outcomes Relationship in Child Care and Early Education Project. VIQI is a large-scale, experimental study that aims to inform policymakers, practitioners, and stakeholders about effective ways to strengthen the quality and effectiveness of early care and education (ECE) centers in promoting young children’s learning and development. To do so, it will build rigorous evidence to: 1) identify dimensions of quality within ECE settings that are key levers for promoting children’s outcomes; 2) determine what levels of quality are necessary to support children’s developmental gains; 3) identify drivers that facilitate and inhibit successful implementation of interventions aimed at strengthening quality; and 4) understand how these relations vary across different ECE settings, staff, and children. Each of these is a noted gap in the knowledge base guiding policy, investments, and practice in the ECE field. VIQI is being conducted by MDRC in partnership with Abt Associates, Frank Porter Graham Child Development Institute, and MEF Associates.

Background

Prompted by converging evidence about the importance of early childhood for creating a foundation for lifelong success, and by concern that children from low-income and racially and ethnically diverse families tend to face greater risk for poorer outcomes than their higher-income peers, public support and government investments in ECE are at an all-time high. The literature and theory point to classroom quality (the quality of children’s learning opportunities and experiences in the classroom) as potentially influential for promoting child outcomes. Yet there is considerable variation in the overall quality of ECE services, and instructional quality, a hypothesized key driver of children’s gains, is often low across ECE programs nationally despite a focus on quality improvement at the national, state, and local levels. Further, the relationship between quality and child outcomes may vary for children of different ages. Indeed, there are still many open questions about how best to design and target investments to ensure that children, particularly low-income children, receive and benefit from high-quality ECE programming on a large scale.

There is a growing, but imperfect, knowledge base about which dimensions of quality are most important to strengthen and what levels of quality need to be achieved to promote child outcomes across ECE settings. The ECE literature has identified several basic dimensions of classroom quality, such as structural, process, and instructional quality, that are hypothesized to promote child outcomes. Nonexperimental evidence shows an intriguing pattern of correlational findings suggesting that quality may need to reach certain levels before effects on child outcomes become evident, and that different dimensions of quality may interact with each other in synergistic ways to affect child outcomes. But existing evidence has not pinpointed the exact levels of quality that are consistently linked with child outcomes. Further, there is relatively little causal evidence showing that efforts to strengthen ECE quality will yield improvements in child outcomes, and without such rigorous evidence it is difficult to draw implications for policy and practice. What the field needs is a stronger, causal evidence base that provides a better understanding of the quality-child outcomes relationship, the dimensions of quality that are most related to child outcomes, and the program and classroom factors that aid delivery of quality teaching and caregiving in ECE settings.

VIQI aims to fill a particular gap in the ECE literature by conducting a rigorous experimental study of two promising interventions. Both interventions consist of curricular and professional development supports, but they target different dimensions of classroom quality. The study will build evidence about the effectiveness of the interventions and investigate the relationship between classroom quality (and each of its dimensions) and children’s outcomes in mixed-age ECE classrooms that serve both three- and four-year-old children. VIQI focuses in particular on three-year-old children in these classrooms, because much of the ECE literature to date has centered on four-year-old children. Below we situate the study design within the broader literature about the quality of ECE and child outcomes.

Legal or Administrative Requirements that Necessitate the Collection

There are no legal or administrative requirements that necessitate the collection. ACF is undertaking the collection at the discretion of the agency.

A2. Purpose of Survey and Data Collection Procedures

Overview of Purpose and Approach

To achieve these aims, the VIQI Project will partner with community-based child care and Head Start programs and centers that serve 3- and 4-year-old children. VIQI will be conducted in two phases (see Table 1 for an overview of the study phases). The first phase is a year-long pilot study that will test two curricular and professional development models. The information generated from the pilot study will be used to refine the design and data collection instruments for the second phase of the project. The second phase is a year-long impact evaluation and process study that tests two curricular and professional development models aimed at strengthening teacher practices, the quality of classroom processes, and children’s outcomes.

The curricular and professional development models were selected based on prior evidence and theories of change suggesting that they can generate impacts on child outcomes, as well as differential impacts on different dimensions of quality, on a large scale. Such differential impacts will allow us to rigorously examine the nature of the quality-to-child outcomes relationship.

The pilot study will take place in about 40 community-based and Head Start ECE centers (split as evenly as possible between the two setting types) located in about three metropolitan areas in the United States. The impact evaluation and process study will take place in about 165 community-based and Head Start ECE centers spread across seven metropolitan areas in the United States.

To leverage the extent to which the curricular and professional development models affect different dimensions of quality and child outcomes, the VIQI Project will use a 3-group experimental design in both the pilot study and the impact evaluation and process study, in which the initial quality and other characteristics of ECE centers are measured. In the impact evaluation and process study, centers will be stratified on selected baseline information, namely setting type (Head Start or community-based ECE center) and initial level of quality, and then randomly assigned either to one of two intervention conditions, in which they will be offered different curricular and professional development supports aimed at strengthening the quality of classroom and teacher practices, or to a business-as-usual comparison condition.
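The stratified random assignment described above can be illustrated with a short sketch. It assumes, for illustration only, that each center’s stratum is defined by its metropolitan area (Table 1 notes assignment within each locality), its setting type (Head Start or community-based), and a binned level of initial quality, and that centers are split evenly across three conditions as in the impact evaluation. The `Center` record, the condition labels, the quality bands, and the seed are all hypothetical; this is not the study’s actual randomization procedure.

```python
import random
from collections import Counter, defaultdict
from dataclasses import dataclass

# Hypothetical labels for the two intervention conditions and the
# business-as-usual (BAU) comparison condition.
CONDITIONS = ("intervention_1", "intervention_2", "bau_comparison")


@dataclass(frozen=True)
class Center:
    center_id: str
    site: str          # metropolitan area (assignment is done within locality)
    setting: str       # "HS" (Head Start) or "CBO" (community-based)
    quality_band: str  # hypothetical binning of initial quality, e.g. "lower"/"higher"


def assign_within_strata(centers, conditions=CONDITIONS, seed=None):
    """Randomly assign centers to conditions separately within each stratum.

    A stratum is a (site, setting, quality_band) combination. Within each
    stratum, centers are shuffled and dealt to the conditions in turn, so the
    number of centers per condition differs by at most one in any stratum.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for center in centers:
        strata[(center.site, center.setting, center.quality_band)].append(center)

    assignment = {}
    for key in sorted(strata):  # fixed iteration order, reproducible for a given seed
        members = list(strata[key])
        rng.shuffle(members)
        # Shuffle the dealing order too, so leftover centers in strata whose size
        # is not a multiple of len(conditions) do not always go to one condition.
        order = list(conditions)
        rng.shuffle(order)
        for i, center in enumerate(members):
            assignment[center.center_id] = order[i % len(order)]
    return assignment


if __name__ == "__main__":
    # Hypothetical stratum sizes (all multiples of 3) summing to 165 centers.
    sizes = {("HS", "lower"): 42, ("HS", "higher"): 42,
             ("CBO", "lower"): 42, ("CBO", "higher"): 39}
    centers = [Center(f"{s}-{q}-{i}", "metro_1", s, q)
               for (s, q), n in sizes.items() for i in range(n)]
    result = assign_within_strata(centers, seed=2019)
    print(Counter(result.values()))  # 55 centers in each condition
```

Dealing shuffled centers to conditions within each stratum keeps the conditions balanced on the stratifying characteristics, which is the purpose of stratifying before random assignment.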

In the pilot study, about 40 centers in about three metropolitan areas will participate in the VIQI Project. Information about center and staff characteristics and classroom and teacher practices will be collected 1) to recruit and randomly assign centers; 2) to describe how the different interventions are implemented and experienced by centers and teachers; 3) to assess the extent to which the interventions are implemented with fidelity, including identifying challenges and barriers to implementing them with fidelity; 4) to assess the extent to which our data collection strategies and measures appropriately capture key constructs of interest; 5) to explore changes over time in different dimensions of quality for each intervention, to gauge its potential for achieving sufficiently large impacts on quality in the Impact Evaluation and Process Study; and 6) to describe the characteristics of families and children served by centers participating in the pilot study. These insights will inform whether it may be possible to generate impacts on quality, and in turn on child outcomes, that are large enough to address the guiding questions of the impact evaluation and process study, and what adjustments to the activities and supports used to install the interventions may be necessary to do so. As part of this effort, information about the background characteristics of all consented families and children served in the centers will be collected in the pilot. We may also collect measures of children’s skills at the beginning and end of the pilot study for a subset of children in these centers. The information will then be used to adjust and refine the research design and measures for the impact evaluation and process study the following year.

In the impact evaluation and the process study, about 165 centers in seven metropolitan areas will participate in the VIQI Project. Information about center and staff characteristics and classroom and teacher practices will be collected 1) to recruit, stratify, and randomly assign centers; 2) to identify subgroups of interest; 3) to describe how the interventions are implemented and experienced by centers and teachers; 4) to document the treatment differentials across research conditions; and 5) to assess the impacts of each intervention on different dimensions of quality, compared with a business-as-usual comparison condition, for all participating centers in the impact evaluation and separately for subgroups of interest. In addition, information about the background characteristics of families and children served in the centers will be collected, as well as measures of children’s skills at the beginning and end of the year-long impact evaluation for a subset of children in these centers. This information will be used 1) to define subgroups of interest based on family and child characteristics, and 2) to assess the impacts of each intervention on children’s skills for all participating centers in the impact evaluation and separately for subgroups of interest. Lastly, the information on quality, teacher practices, and children’s skills, and the impacts on these outcomes, will be used in a set of analyses that rigorously examine the nature of the quality-to-child outcomes relationship for all participating centers in the impact evaluation and separately for subgroups of interest.

Table 1. Overview of the VIQI Pilot Study, Impact Evaluation and Process Study

| | Pilot Study | Impact Evaluation and Process Study |
|---|---|---|
| Goals | Refine and assess feasibility of Impact Evaluation and Process Study designs; anticipate and address implementation barriers; document potential treatment contrast and counterfactual conditions; pilot and streamline measures; describe the participating centers, classrooms, and children served during the pilot study; explore the extent to which the interventions have the potential to generate differences in different dimensions of quality (and possibly in children’s outcomes, if the interventions are implemented with strong enough fidelity to warrant doing so) | Build rigorous evidence; describe the participating centers, classrooms, and children served during the impact evaluation and process study; determine effects of interventions on quality and children; examine effects of quality on children; explore subgroups to inform when and for whom interventions are more or less effective; document counterfactual conditions and treatment contrast; document implementation fidelity and drivers |
| Timing of data collection instruments | Q1 2018 (or upon receipt of approval of this OMB package) to Q2 2019 | Q3 2019 to Q2 2021 |
| Metropolitan areas | About 3 cities | About 7 cities (including pilot cities) |
| Sample | 40 centers; 120 classrooms (3 classes/center); 480 3-year-olds (4 per class); split across CBO and HS | 165 centers; 495 classrooms (3 classes/center); 1,980 3-year-olds (4 per class); split across CBO and HS; stratified by initial quality |
| Duration of implementation of interventions | 1 academic year (Q3 2018 to Q2 2019) | 1 academic year (Q3 2020 to Q2 2021) |
| Conditions | 3 conditions: centers randomly assigned within each locality, across CBO and HS, to 2 interventions with PD or to a BAU control condition; 15 centers (45 classrooms) in each intervention condition and 10 centers (30 classrooms) in the BAU control condition | 3 conditions: centers randomly assigned within each locality, across CBO and HS and stratified by initial quality, to 2 interventions with PD or to a BAU control condition; 55 centers (165 classrooms) in each condition, with the sample split 33:33:33 across conditions in the 3-group design |
| Data sources | | |

Note: CBO = community-based organization; HS = Head Start; PD = professional development; BAU = business as usual.
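The sample sizes in Table 1 follow from a few design parameters: three classrooms per center and four three-year-olds per classroom. The minimal sketch below recomputes them; the condition labels are hypothetical, while the center counts per condition are taken from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseDesign:
    name: str
    centers_by_condition: dict        # condition label -> number of centers
    classrooms_per_center: int = 3    # "3 classes/center" in Table 1
    children_per_classroom: int = 4   # "4 per class" (three-year-olds) in Table 1

    def centers(self) -> int:
        return sum(self.centers_by_condition.values())

    def classrooms(self) -> int:
        return self.centers() * self.classrooms_per_center

    def children(self) -> int:
        return self.classrooms() * self.children_per_classroom

    def classrooms_by_condition(self) -> dict:
        return {cond: n * self.classrooms_per_center
                for cond, n in self.centers_by_condition.items()}


# Condition labels are hypothetical; the center counts come from Table 1.
pilot = PhaseDesign("Pilot Study",
                    {"intervention_1": 15, "intervention_2": 15, "bau": 10})
impact = PhaseDesign("Impact Evaluation and Process Study",
                     {"intervention_1": 55, "intervention_2": 55, "bau": 55})

for phase in (pilot, impact):
    print(phase.name, phase.centers(), phase.classrooms(), phase.children(),
          phase.classrooms_by_condition())
```

Running it reproduces the Table 1 totals: 40 centers, 120 classrooms, and 480 children for the pilot study (45, 45, and 30 classrooms by condition), and 165 centers, 495 classrooms, and 1,980 children for the impact evaluation (165 classrooms per condition).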

The study received a generic OMB clearance on 02/25/2017 to gather information from staff responsible for Head Start and child care programs on existing services and populations served to better understand the landscape of early care and education programs and to aid in the refinement of the study design (OMB #0970-0356). The current request covers all of the data collection activities for the pilot study, impact evaluation, and process study. The data collection activities will include the following:

Instruments for Screening and Recruitment of ECE Centers that will be used in the pilot study, impact evaluation, and process study to assess ECE centers’ eligibility, to inform the sampling strategy, and to recruit ECE centers to participate in the VIQI Project;

Baseline Instruments for the Pilot Study, Impact Evaluation, and Process Study will be used to collect background information about centers, classrooms, center staff, and families and children being served in the centers. All of the instruments will be administered at the beginning of the pilot study, impact evaluation, and process study;

Follow-Up Instruments for the Pilot Study, Impact Evaluation, and Process Study will be used to document how centers, classrooms, teachers, and children changed and to assess the impacts of each of the interventions over the course of the pilot study, impact evaluation, and process study. All of the instruments will be administered at the end of the pilot study, impact evaluation, and process study; and,

Fidelity of Implementation Instruments for the Pilot Study and Process Study will be used to capture administrators’, coaches’, teachers’, and assistant teachers’ experiences with the curricular and professional development models they are being trained on and delivering, to document treatment differentials across research conditions, and to provide context for interpreting the findings of the impact evaluation.

The data collection activities described in this request are intended to obtain complementary, but not overlapping, information. Each planned data source and data collection activity will gather unique and crucial information to capture implementation of the interventions, the drivers of implementation, and differential impacts of the interventions on children’s learning experiences in ECE classrooms and their developmental outcomes, to inform the quality-to-child outcomes relationship. In designing the data collection instruments, the study team has aimed to minimize duplication of information, except where multiple perspectives provide different lenses and insights into the constructs and processes of interest. In all cases, attention has been paid to leveraging existing or administrative data sources whenever possible. However, as is often the case in early childhood education settings, there are few or no existing administrative data sources that can inform the constructs and processes of interest. As a result, each of the planned data collection activities under this request is needed to gather the information required to achieve the research aims and goals underlying the VIQI Project. Detailed information for each data collection activity is provided in the section: Universe of Data Collection.

Data collection timeline

The timeline of the two phases of the VIQI Project and planned data collection activities is shown in Table 1, and more details are provided below in Section A16. The Pilot Study is scheduled to begin in Winter 2018 (or following OMB approval of this request) and end in Spring 2019. The activities are as follows:

Starting Winter 2018 (or following OMB approval of this request), VIQI will begin screening potential centers to assess whether they meet the sampling criteria for the Pilot Study, continuing until the targeted number of centers (about 40) has been recruited;

Beginning in Fall 2018, Baseline Instruments will be collected, including self-administered surveys distributed to center administrators (e.g., directors or executive directors), lead and assistant teachers, and coaches serving the participating centers, as well as classroom observations at two time points. In addition, self-administered parent/guardian information forms will be distributed to parents/guardians of children served in the participating classrooms and centers, and a subset of these children may be asked to complete a set of direct child assessments. The baseline data collection will be conducted in Fall 2018;

Beginning in Fall 2018, Fidelity of Implementation Instruments will be collected. This will include collection of logs completed by lead teachers and assistant teachers, as well as logs completed by coaches. Administrators, lead teachers, assistant teachers, and coaches will also be asked to participate in semi-structured interviews, conducted in a small-group or one-on-one format in Winter 2019, to talk about their experiences installing the interventions and completing the data collection instruments. A subset of classrooms will be observed by an external observer using an implementation fidelity observation protocol to document their fidelity of implementation and the treatment contrast in teaching practices across different conditions; and

Beginning in Winter 2019 and ending in Spring 2019, Follow-Up Instruments will be collected. This will consist of classroom observations at three points in time in the Winter and/or Spring, as well as self-administered surveys collected from administrators, lead teachers, assistant teachers, and coaches in Spring. In addition, a subset of children being served in participating centers may be asked to complete a set of direct child assessments and lead teachers will be asked to complete reports on those selected children in Spring.

The Impact Evaluation and Process Study are scheduled to begin in Summer/Fall 2019 and end in Spring 2021. However, the timing and design of the Impact Evaluation and Process Study will be finalized based on lessons learned from the Pilot Study. These activities are as follows:

Starting Summer/Fall 2019, VIQI will begin screening potential centers to assess whether they meet the sampling criteria for the Impact Evaluation and Process Study, continuing until all 165 centers have been recruited;

Once centers are recruited into the VIQI Project, Baseline Instruments will be collected. This battery of instruments includes self-administered surveys distributed to center administrators, lead and assistant teachers, and coaches serving the participating centers, as well as classroom observations at two time points. These baseline data collection activities will begin in Winter 2020 and end in Fall 2020, with most data collected prior to random assignment. In addition, in Fall 2020, self-administered parent/guardian information forms will be distributed to parents/guardians of children served in the participating centers, and a subset of these children will be asked to complete a set of direct child assessments.

Beginning in Fall 2020, Fidelity of Implementation Instruments will be collected. This will include collection of logs completed by lead teachers and assistant teachers, as well as logs completed by coaches. Administrators, lead teachers, assistant teachers, and coaches will also be asked to participate in semi-structured interviews, conducted in a small-group or one-on-one format in Winter 2021, to talk about their experiences installing the interventions and completing the data collection instruments. A subset of classrooms will be observed to document their fidelity of implementation and the treatment contrast in teaching practices across different conditions; and,

Beginning in Winter 2021 and ending in Spring 2021, Follow-Up Instruments will be collected. This will consist of classroom observations at three points in time in the Winter and/or Spring, and self-administered surveys collected from administrators, lead teachers, assistant teachers, and coaches in Spring. In addition, a subset of children being served in participating centers will be asked to complete a set of direct child assessments and lead teachers will be asked to complete reports on those selected children in Spring.

Research Questions

The VIQI Project aims to address a set of fundamental questions for the early care and education field across several key areas. The specific questions vary by phase of the project, as follows:

Pilot Study Research Questions

Did screening and recruitment protocols operate as expected in recruiting the targeted group of study participants during the Pilot Study? What modifications to the screening and recruitment protocols are necessary for the Impact Evaluation and Process Study?

Did data collection protocols and procedures operate as expected during the Pilot Study? What modifications to data collection procedures are necessary for the Impact Evaluation and Process Study?

To what extent did the measures operate as expected during the Pilot Study? What modifications to the measures are necessary for the Impact Evaluation and the Process Study?

How were the interventions implemented during the Pilot Study? How were the critical components of the interventions that are hypothesized to drive effects on quality implemented during the Pilot Study? What successes and challenges were encountered in each research condition over the course of the Pilot Study? What are the readiness and capacity of the developers to support the installation of the interventions during the pilot year? What modifications to the protocols and procedures for installing the interventions are necessary for the Impact Evaluation and Process Study?

Did quality improve during the pilot year in centers implementing the interventions? What do these improvements in quality suggest about the likelihood of achieving impacts on quality in the Impact Evaluation and Process Study that are large enough to assess the nature of the quality-child outcomes relationship?

Who are the families and children being served by the centers participating in the Pilot Study? What are the potential effects of each intervention on children’s outcomes?

Based upon insights from the Pilot Study, what adjustments to the study design and research questions for the Impact Evaluation and the Process Study are recommended?

Impact Evaluation Research Questions

What are the effects of the interventions on different dimensions of quality and on teacher and child outcomes? For whom and under what circumstances are the interventions more or less effective?

What are the causal effects of different dimensions of quality on children’s outcomes?

Are there thresholds in the effects of quality on child outcomes?

Do the effects of quality on child outcomes differ, depending on child, staff and center characteristics, including centers that vary in their initial levels of quality?

Process Study Research Questions

What are the characteristics of the participants at the center, staff, and child levels in the Impact Evaluation? Which of these characteristics are drivers of fidelity of implementation? How do these drivers relate to each other?

What are the implementation systems (e.g., professional development, training, coaching, assessments) that support the delivery of the interventions in classrooms? How much variation is there in participation in these supports? What drivers seem to support or inhibit participation?

To what degree are the interventions delivered in the classrooms as intended? How much variation is there in fidelity of implementation of the interventions? What drivers seem to facilitate or inhibit successful implementation and fidelity to the intended intervention model(s)?

What is the relative treatment contrast achieved in teacher practices targeted by the intervention(s)?

Brief Review of Scientific Literature

Below, we situate the study design within the broader literature on what we know and what we need to know about how to deliver quality early care and education at scale to produce positive impacts for young children, particularly those from low-income or disadvantaged backgrounds.

Impact of ECE on children’s outcomes. As ECE policy has come to the forefront, healthy debate has ensued about how best to ensure that investments reliably and effectively support young children. Some research shows that ECE produces substantial impacts on children’s early learning and longer-term outcomes (Heckman et al., 2013; Yoshikawa et al., 2013). Seminal studies, such as the often-cited High Scope/Perry Preschool and Abecedarian Projects, show that some model, high-quality, intensive programs can have large and lasting impacts for disadvantaged children, with returns of $4 to $10 in benefits per dollar spent, by preventing later risky behavior and boosting academic and labor market success (Currie, 2001; Heckman et al., 2010; Masse & Barnett, 2002). Looking to the current context, several recent large-scale evaluations of publicly funded ECE programs have also produced positive short-term effects on a range of children’s early outcomes (Burchinal et al., 2015), yet these effects are generally smaller than those of the earlier studies. Thus, despite evidence of positive effects, the research does not provide clear guidance about how to design, target, and implement public investments in ECE programming so that young children benefit on a large scale, particularly across the mix of settings that provide such services.

Relationship between classroom quality and child outcomes. The ECE field has broadly defined classroom quality along several dimensions:

Structural quality features, or how programs and classrooms are designed and configured, as well as the characteristics of classrooms and the staff that work directly with children (e.g., teacher-student ratios, class size and composition, teacher compensation, education and training, classroom safety);

Process quality features, or the quality of children’s interactions with teachers and others in the classroom, including the warmth and sensitivity of these interactions and the overall classroom management and organization; and,

Instructional quality features, which include a constellation of developmentally appropriate, intentional teaching practices and organized activities that aim to: create a language-rich environment through discourse and use of vocabulary; promote children’s higher-order skills (e.g., broader world knowledge, language and vocabulary, deeper understanding of concepts within domains, and critical analytic and problem-solving skills) and extend children’s learning; and intentionally align or individualize children’s instructional experiences to their skill level in different developmental domains (e.g., language, literacy, math, science, or social-emotional), with instructional content that is rich and broad in scope and follows a developmental sequence within specific domains.

A review of large-scale studies and evaluations reveals considerable variation in quality (Burchinal et al., 2015); almost all evaluations of Head Start (HS) and pre-k programs report adequate process quality (as measured by the Classroom Assessment Scoring System, or CLASS), but quality of instructional support is low to moderate (Burchinal et al., 2015; Moiduddin et al., 2012). Further attempts to understand how these different dimensions of quality influence children’s gains have yielded intriguing patterns of findings that raise critical questions about which dimensions matter for young children, at what thresholds, and how they might interact with each other. The literature suggests that structural features set the stage for more positive interactions rich in content and stimulation, but do not guarantee that such interactions will occur; accordingly, they are not consistently linked with children’s gains (Cassidy et al., 2005; NICHD ECCRN, 2002; Zaslow et al., 2010). Aspects of process quality are hypothesized to be more closely linked with children’s gains. Yet recent studies have found that commonly used measures of quality, which capture a mix of structural and/or process quality features, have not been consistently or strongly linked with child outcomes (Burchinal, Kainz & Cai, 2011; Weiland, Ulvestad, Sachs & Yoshikawa, 2013). Some nonexperimental work suggests that certain levels or thresholds of quality must be met before associations between quality and child outcomes become evident, while other work underscores the potential interplay between thresholds and the quality dimensions underlying child development (Burchinal et al., 2016). Elsewhere, measures of instruction within specific content areas (such as math or language) appear to be more strongly associated with children’s outcomes in those domains than are more global measures of process quality (Burchinal et al., 2016).
Emerging findings like these highlight specific instructional “moves,” or teacher practices, that might be more directly linked with improvements in children’s learning and development. But the evidence to date has not been consistent. Therefore, while the literature suggests that the quality-child outcomes relationship may be nonlinear and may involve interactions among quality dimensions, further work is needed to document the nature of these effects on children’s learning and development more clearly.
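The threshold idea discussed above can be stated more precisely. One common way to formalize it, shown here only as an illustrative sketch and not as the study’s analytic model, is a piecewise-linear (hinge) specification in which the slope of a child outcome on classroom quality changes at a hypothesized threshold:

```latex
Y_{ij} = \beta_0 + \beta_1 Q_j + \beta_2 \, (Q_j - \tau)_{+} + \varepsilon_{ij},
\qquad (x)_{+} = \max(x, 0)
```

Here Y_ij is the outcome of child i in classroom j, Q_j is a measure of one dimension of classroom quality in that classroom, and the threshold τ and coefficients β0, β1, β2 are hypothetical parameters. Below the threshold the slope is β1; above it the slope is β1 + β2. The pattern described in the nonexperimental literature, in which associations appear only once quality reaches a certain level, would correspond to β1 near zero and β2 greater than zero.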

Curricular and professional development models for strengthening the quality of ECE. A review of the ECE literature concludes that one of the most effective strategies for strengthening the quality of ECE is implementing curricular models aligned with ongoing professional development supports, such as coaching and training.

Curricula. Implementing explicit, intentional curricula, which provide content, tools, and specific pedagogical guidance for instruction (Stein, Remillard, & Smith, 2007), is thought to promote children’s gains because it ensures continued emphasis on particular skills needed for children’s school success, keeps children engaged and challenged, and maintains classroom quality (Klein & Knitzer, 2006). A review of ECE curricula identifies three general categories, with varying theories of change and levels of effectiveness across a variety of ECE settings, populations, and circumstances:

“Whole-child” curricula (e.g., Creative Curriculum, High Scope) provide a range of activities designed to create safe, organized, well-managed, warm, and nurturing environments that promote a wide range of child outcomes. Typically, teachers select activities without an explicit focus on a scope and sequence of learning;

Domain-specific curricula, often delivered as curricular enhancements, focus on enhancing instructional quality in a single content area and follow a set scope and sequence where prerequisite skills are taught and mastered before more complex skills are introduced; and,

Integrated or interdisciplinary curricula purposefully aim to enhance instructional quality in more than one content area and, like single domain-specific curricula, follow a set scope and sequence in which prerequisite skills are taught and mastered before more complex skills are introduced. The content and activities are designed to be highly interconnected across areas, creating a language-rich environment that reinforces children’s learning and skill development across domains while also developing children’s higher-order thinking skills that cut across multiple content and skill areas.

Professional development (PD). A review of the PD literature suggests that targeted PD models have been shown to enhance classroom quality and teachers’ practices, especially when well-designed training is followed by in-classroom coaching that helps teachers transfer what they learned in training to their work with children (Joyce & Showers, 1981). Within the PD literature, coaching models typically expect coaches to: 1) build relationships with teachers; 2) observe, model, and advise in the classroom; 3) meet with teachers to discuss classroom practices, provide support and feedback, and assist with problem-solving; and 4) monitor progress toward identified goals. Coaching differs from typical ECE PD, which generally consists of “one-shot” workshops that do not allow for breadth or depth of exploration of a particular topic. In fact, prior studies indicate that training alone is not enough to improve teacher practices over time (Koh & Neuman, 2009; Neuman & Cunningham, 2009). Across the evaluation literature, most curricula with demonstrated effectiveness are coupled with integrated, intensive PD. However, this review also suggests that although such interventions can generate robust impacts on teacher practice, they do not always yield corresponding improvements in child outcomes. More evidence is therefore needed to guide which combination of targets (dimensions of quality, content areas, and children’s skills) should be the focus of curricular and PD supports to reliably strengthen the effectiveness of ECE programming at scale.

Challenges to replication at scale and implications for the VIQI project. Last, scaling effective, high-quality ECE services can be challenging, in part because of the variety of service systems providing ECE in the U.S. Multiple federal, state, and private funding streams, laws, and regulations oversee and support ECE services delivered via a mix of settings and teachers with varying education and training. Studies often find that effects are not replicated when an intervention is scaled and tested. Two potential explanations are a decrease in fidelity to the program model when an intervention is scaled up in relatively uncontrolled real-world settings (Hulleman & Cordray, 2009) and changes in the counterfactual context. Regardless of how well an intervention is implemented, programs operating under “business-as-usual” (counterfactual) conditions may implement something similar and, consequently, exhibit similar outcomes. This is particularly relevant in today’s evaluation context. Earlier studies like Abecedarian and Perry Preschool compared intervention groups to control groups that received few, if any, services. Because of ECE growth in ensuing decades, more recent studies typically compare intervention groups to control groups of children who are also heavily served by ECE. As found in the Head Start Impact Study and the later Head Start Variation Study, the changing counterfactual condition likely depresses the size of net impacts when interventions are tested at scale (Bloom & Weiland, 2015; U.S. Department of Health & Human Services, 2010). Despite these issues, evaluations rarely collect detailed information on how well the intervention is being delivered, or a parallel assessment of key outputs in counterfactual conditions, to understand whether the desired strength of treatment contrast has been achieved. This makes it difficult to know whether inconsistency in impacts is due to problems with “infidelity,” the intervention’s theory of change, relatively strong counterfactual conditions, or some combination. The implementation research and measurement plans described in this package are designed to meet these needs.

Conceptual framework underlying the VIQI project. The VIQI project aims to leverage two types of variation to build evidence for the field: variation across settings, staff, and children that is already evident across the ECE landscape nationally, and experimentally induced variation in quality dimensions. At the core of the VIQI project is a multi-group randomized controlled experimental design in which the effectiveness of two interventions (supported by intensive professional development) targeting different dimensions of quality will be tested across community-based and Head Start centers that vary in their initial levels of quality. In doing so, the project addresses a pressing need in the ECE field for further evidence rigorously unpacking the “black box” of quality by illuminating: 1) the causal effects of different dimensions of quality on child outcomes; 2) the effectiveness of different interventions (combinations of curricular and PD supports) across a variety of ECE settings, populations, and circumstances; and 3) the reasons why the curricular and PD supports are or are not effective at shaping quality and child outcomes, and what factors might drive those results. The VIQI project focuses primarily on Head Start and community-based child care centers, because these are the settings for which the literature on the effects of different dimensions of quality and of curricular and PD supports is more fully developed. This project will not focus on home-based or other informal child care settings, such as family-based child care, as the literature provides much less guidance on which aspects of quality and which interventions might be more or less important and effective in those settings.

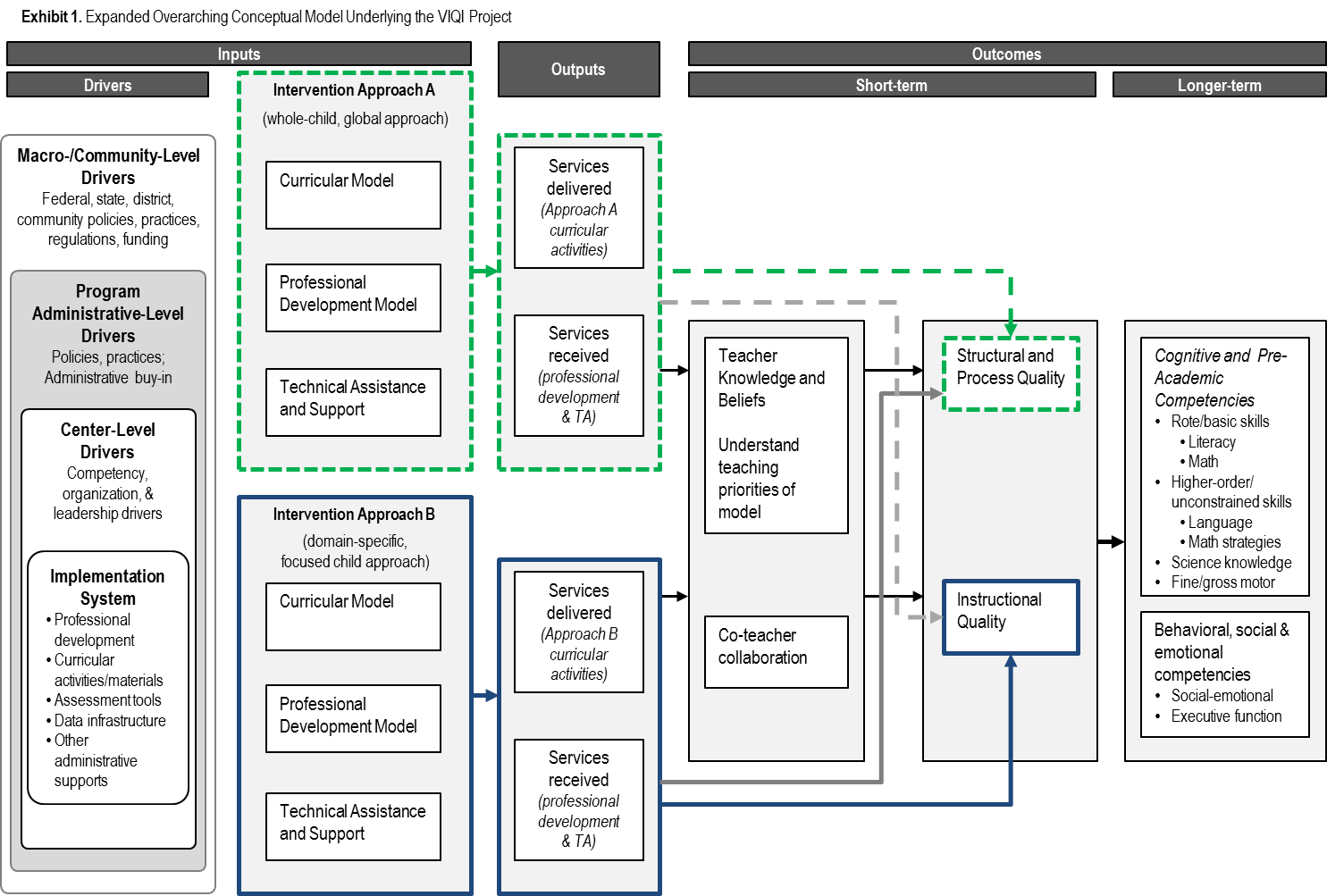

The conceptual framework shown in Exhibit 1, which was developed by taking stock of the ECE evidence base, implementation science, and developmental theory and research, serves as the foundation for the VIQI project and guides our planned approach to the study design, data sources, and measurement plan.

In the middle of the figure, we depict the “black box” of quality (the structural, process, and instructional quality features thought to characterize classrooms’ overall global quality and functioning) that we aim to unpack in the VIQI project. The features are grouped to represent two pathways that are hypothesized to promote child outcomes in ECE programs: one through a combined construct of structural and process quality features, and one through instructional quality features. Two is the number of pathways, and in turn dimensions of quality, that can reasonably and rigorously be teased apart from each other to unpack the effects of different dimensions of quality on child outcomes, given the operational realities of conducting a study of this magnitude within the project’s existing resources.

To the left of these dimensions of quality in Exhibit 1 are two interventions (each a combination of curricula and professional development supports) that are hypothesized to differentially influence these pathways, both directly and through a set of associated mechanisms (discussed in more detail below). Intervention Approach A is conceptualized as a whole-child, global intervention approach that is expected to affect children’s classroom experiences primarily through structural and process quality features. The Creative Curriculum has been selected as the intervention that best represents the whole-child, global approach. In contrast, Intervention Approach B is conceptualized as an integrated or interdisciplinary approach with broad scope and explicit sequencing of content that is hypothesized to affect children’s classroom experiences primarily through instructional quality features. Connect4Learning has been selected as the intervention that best represents the integrated or interdisciplinary approach. The dotted lines from each intervention approach to the other dimension of quality indicate that each intervention primarily targets one pathway over the other.

Stemming from this, at the core of the VIQI project, we envision a multi-group randomized controlled experimental design in which the effectiveness of the two interventions is tested. The design can be thought of as a test of two interventions that take different approaches to, and focus on different aspects of, quality. Such a test would allow us to rigorously compare two contrasting mechanisms for strengthening the quality of children’s classroom experiences to affect child outcomes, and to determine which approach (and, in turn, which dimensions of quality) yields the greatest improvements in children’s development.

The planned data collection activities and measures covered under this request are also guided by this conceptual framework, which provides a theory of change underlying ECE programs and how they achieve their intended effects on children’s classroom experiences and child outcomes. Reading Exhibit 1 from left to right, it is hypothesized that multilevel drivers and inputs influence the outputs (the activities and services delivered through installation of the interventions), which lead to a set of shorter-term outcomes both for teachers (in terms of knowledge, beliefs, understanding, and co-teacher collaboration) and for classrooms (in terms of multiple aspects of quality), as well as longer-term outcomes for children (in terms of their cognitive, pre-academic, behavioral, and social-emotional competencies, including both basic skills and higher-order skills). Below we further describe each of these aspects of the conceptual framework.

Implementation drivers. On the far left side of Exhibit 1, we denote the implementation drivers that have been identified in the literature as promoting high-fidelity implementation (Fixsen, Blase, Naoom, & Wallace, 2009; Han & Weiss, 2005; Metz et al., 2014). The conceptual model takes an ecological systems approach, focusing on various multilevel influences, and uses the National Implementation Research Network (NIRN) model (NIRN, 2016) as a base to provide a wider picture of the proximal and distal drivers of implementation. In doing so, we identify three categories of drivers at the organizational level (in our case, the ECE center level). These drivers are conceptualized as interactive processes that will help ECE centers and their staff support effective and consistent implementation of an intervention as intended, leading to reliable benefits in quality and teacher practice and ultimately in children’s outcomes. They include:

Competency Drivers – This set of drivers posits that the background, experience, attitudes, and knowledge of the staff selected to implement the intervention, along with the training and coaching provided to them, are essential for changing educator behavior and supporting implementation.

Organization Drivers – This set of drivers assumes that a well-developed infrastructure, including a positive climate and readiness to take on an initiative, is essential for enabling and supporting the competency drivers and implementation.

Leadership Drivers – This set of drivers posits that leadership, including good management and an effective leadership style that can resolve adaptive and technical issues and problems, can help manage change and, in turn, support implementation.

We nest an Implementation System within the center-level drivers. The Implementation System identifies specific processes embedded within the competency and organization drivers, namely the existing professional development supports (e.g., training, coaching infrastructure), curricular activities and materials, and data support systems already in place within centers, because these existing capacities or processes are thought to facilitate the installation and implementation of the VIQI interventions.

Because implementation will take place in classrooms within centers, many of which are also nested within larger umbrella organizations or grantee agencies (which we refer to as the program administrative level) and within a changing landscape of ECE priorities, policies, and practices at the community, district, state, and federal levels (e.g., Durlak & Dupre, 2008; Han & Weiss, 2005), the figure also depicts a layer of influences within the larger macro-system context. The influence of drivers at these higher levels of the ecological system on implementation is thought to be largely indirect, that is, filtered through the center-level drivers. Therefore, our planned measurement strategy focuses more heavily on these center-level drivers.

Interventions. To the right of the drivers in Exhibit 1, the interventions being tested (i.e., Interventions A and B) are depicted as key implementation inputs within our conceptual framework. In VIQI, each intervention will entail a curricular model that includes a particular set of curricular materials and activities, a professional development model that includes teacher training and coaching, and ongoing provision of technical assistance and support, all with the aim of promoting the dimension of quality that the intervention is hypothesized to target.

Outputs. The middle portion of the figure, outputs, represents the extent to which the intervention services are delivered and received as intended; in VIQI, this means implementation of the curricular and professional development models as well as monitoring of that implementation and provision of technical assistance. This box implicitly highlights the importance of fidelity of implementation (delivering the intervention as intended) as a necessary link in the chain for the interventions to achieve the intended effects on quality and children’s outcomes.

Fidelity of implementation is multidimensional and consists of two main aspects: (1) implementation fidelity, or the extent to which the professional development model and other supports are received as intended; and (2) intervention fidelity, or the extent to which the curricular model is delivered by teachers as intended (Hulleman et al., 2012). Typically assessed fidelity constructs in the literature include adherence, which refers to whether teachers conform to the curriculum “protocol” or deliver the component pieces of a curriculum (e.g., whether teachers used the materials that developers intended them to use); dosage, which refers to an index of quantity of delivery such as number of coaching sessions implemented or the length of sessions; quality, which refers to the qualitative aspects of the manner in which the intervention is delivered, or the skill with which facilitators (e.g., trainers, teachers) deliver material and interact with participants (e.g., teachers, children); and participant responsiveness, which refers to the level of involvement displayed by intervention recipients (e.g., were children actively engaged with curricular components) (Dane & Schneider, 1998).

Mechanisms for changing short-term and longer-term outcomes. Continuing from left to right, Exhibit 1 then shows the general theory of change stemming from the installation of an intervention like those planned in VIQI: (1) short-term improvements in teacher outcomes, such as their knowledge, beliefs, and relationships with co-teachers, lead to (2) improvements in different dimensions of classroom quality, which in turn result in (3) increases in children’s competencies. As discussed earlier, two primary pathways are depicted, through which the two interventions being tested in the VIQI project are expected to have differential impacts on different dimensions of classroom quality (i.e., a combined construct of structural and process quality features vs. instructional quality features). The two dimensions of quality are in the same gray box because we view them as interrelated but not completely overlapping. Together, they are thought to characterize classrooms’ overall quality and functioning. Lastly, Exhibit 1 depicts the two hypothesized pathways as directly shaping a range of children’s cognitive, pre-academic, behavioral, and social-emotional competencies.

Study Design

As discussed above, the VIQI project will be conducted in two phases: a year-long pilot study followed by a year-long impact evaluation and process study.

Screening and Recruitment for the Pilot Study, Impact Evaluation and Process Study

The design for each of these phases will involve a screening and recruitment process in which eligible centers will be asked to participate in the respective phase of the VIQI project. The goal for the pilot study is to successfully recruit about 40 centers, and the goal for the impact evaluation and process study is to recruit 165 centers. Although the results of this study are designed to be generalizable to the center-classroom-child combinations eligible for this study, the study will not provide results that are statistically representative of populations of children, classrooms, or centers. Convenience methods and qualitative judgment are necessary to ensure both diversity and feasibility.

The eligibility screening and recruitment process will aim to identify and engage centers in the study that meet the sampling criteria for the VIQI project. To identify the pool of eligible centers for the VIQI project, a staged and tiered screening and recruitment process is planned. This approach will be tested in the pilot study. Information from the pilot study will then be used to adjust and refine the approach for screening and recruitment for the impact evaluation and process study.

In planning for the VIQI project, the team has reviewed publicly available data sources recommended by OPRE, the Office of Child Care, and the Office of Head Start. Yet, these sources are limited in aiding the identification of localities and centers that meet the sampling criteria for the VIQI project. For this reason, the study team will need to contact key informants to gather information to enable us to successfully screen and recruit centers that meet our sampling criteria. A combination of phone calls and in-person visits using semi-structured protocols to ask a series of questions tailored to informants will be used to gather detailed information to inform the recruitment and selection of centers for the respective phase of the study.

A first step in the plan is to gather detailed information about the ECE landscape at state and local levels to explore whether particular localities will be a good fit for the VIQI project. Key informants will be identified through a purposeful, snowball sampling process and information gathered under the generic clearance package, in addition to recommendations from the Contracting Officer’s Representative (COR) for VIQI, organizations recognized as experts in the ECE field, other recommendations for state and local experts within large metropolitan areas, and independent internet searches and reviews of existing, publicly available information (such as reports and websites, when available) about the landscape of ECE programming at national, state, and local levels. Many of these landscaping activities are currently being conducted under the generic clearance package. From this work, we expect to identify promising metropolitan areas that will be targeted for more focused screening and recruitment activities for the pilot study or the impact evaluation and process study, depending upon the phase of the project.

As the list of candidate metropolitan areas is narrowed down, the study team will reach out to key informants at local administrative entities that are connected to large numbers of Head Start and community-based child care centers. In some cases, these entities may be Head Start grantee or delegate agencies that receive funding directly from the Office of Head Start. In others, these entities may be community-based child care oversight agencies that operate or oversee multiple child care centers. Together, we refer to these entities as “umbrella agencies”. Upon obtaining initial screening and eligibility information, the study team will then refine and narrow the list of prospective umbrella agencies and begin outreach to informants at individual centers (as warranted). The study team will continue gathering information to further refine and narrow the list of potential centers in an iterative fashion where we take stock of the information learned from sets of conversations to guide the next set of conversations and contacts.

Our screening and recruitment activities will aim to generate a group of participating centers that varies geographically across several metropolitan areas in the United States. However, the study participants will not be a probability sample; therefore, we will not be able to statistically generalize our findings to a broader population of ECE centers in the United States. Nonetheless, our extensive landscaping of ECE programming conducted under the generic clearance will allow us to describe how the centers recruited into the study fit within the broader ECE landscape across the United States.

Once centers agree to participate in the VIQI project, classrooms will be identified and selected to participate in the pilot study or the impact evaluation and process study. Information gathered from the screening and recruitment activities will be used to inform the selection of classrooms within participating centers. In line with the target populations for the interventions being tested, classrooms serving a mix of 3- and 4-year-old children that provide a full day of Head Start or child care services will be selected. (For the VIQI project, “full-day” is defined as providing early care and education for at least six consecutive hours per day, five days per week, with a consistent lead teacher for that schedule.) We anticipate identifying about 3 classrooms per center that meet these criteria, and we expect that most centers will not have more than 3 classrooms serving a mix of 3- and 4-year-old children. As such, the selected classrooms are expected to closely represent the universe of classrooms serving 3- and 4-year-old children in the centers participating in the VIQI project. We also aim to collect data from all of the administrators, lead and assistant teachers, and coaches serving these classrooms. Therefore, analyses of data from these classrooms will be generalizable to all of the classrooms serving 3- and 4-year-old children, and their related staff, in participating centers. We do not expect the results to generalize to classrooms and staff in participating centers that serve other combinations of age groups.
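The classroom selection criteria above can be expressed as a simple screening rule. The sketch below is illustrative only: the `Classroom` record and its field names are hypothetical, not VIQI data elements. It also assumes that a classroom serving any other age combination (for example, 3- to 5-year-olds) is excluded, and that “five days per week” means at least five.

```python
from dataclasses import dataclass

# Hypothetical screening record for one classroom; the field names are
# illustrative assumptions, not VIQI data elements.
@dataclass
class Classroom:
    child_ages: set[int]              # ages (in years) of enrolled children
    consecutive_hours_per_day: float  # longest consecutive block of care per day
    days_per_week: int
    consistent_lead_teacher: bool     # same lead teacher for the full-day schedule

def is_full_day(room: Classroom) -> bool:
    """At least six consecutive hours per day, five days per week (assumed to
    mean at least five), with a consistent lead teacher for that schedule."""
    return (room.consecutive_hours_per_day >= 6
            and room.days_per_week >= 5
            and room.consistent_lead_teacher)

def is_eligible(room: Classroom) -> bool:
    """Assumes only rooms serving exactly a 3- and 4-year-old mix qualify."""
    return room.child_ages == {3, 4} and is_full_day(room)

# Usage: a 6.5-hour, five-day classroom with a consistent lead teacher.
print(is_eligible(Classroom({3, 4}, 6.5, 5, True)))  # True
```

Keeping the full-day test in its own function mirrors the document’s separate definition of “full-day,” so that rule can be checked or adjusted independently of the age-mix criterion.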

After centers agree to participate in the respective phase of the VIQI project and eligible classrooms are identified and selected, lead and assistant teachers in these classrooms will be asked to participate in any training and professional development activities related to the installation of the interventions, depending upon the center’s assigned research condition. Potential study participants in each center will be asked to complete a set of data collection activities to address the research questions guiding each phase of the study. At baseline, the study team will administer baseline instruments that gather background information about centers, classrooms, and center staff. The targeted respondents for these data collection activities are all administrators in participating centers, who will be asked to complete an administrator baseline survey; all lead and assistant teachers in classrooms selected to participate in this study, who will be asked to complete a teacher/assistant teacher baseline survey; and all coaches serving the participating centers, who will be asked to complete a coach baseline survey. All participating classrooms will also participate in two time-points of classroom observations at baseline to capture information about the classrooms’ initial quality. A baseline information form will also be administered to parents or guardians of children enrolled in participating ECE centers at the beginning of the pilot study and the impact evaluation and process study. The baseline information form will be used to screen and select eligible children to participate in the protocol for baseline assessments of children’s skills. (A more detailed description of the recruitment and screening process for families and children is provided below.)

Together, the baseline instruments will gather information that will be used to describe the group of participants in the pilot study and in the impact evaluation and process study. The information will be used to: (a) assess the extent to which the screening and recruitment processes in the pilot study and the impact evaluation and process study generated participants that met the selection criteria for the VIQI project, and compare the resulting group of participants with the samples of other ECE studies and the broader set of centers across the ECE landscape nationally; (b) stratify the group of participating centers based upon the extent to which they provide Head Start or community-based child care services and their levels of initial quality (in the impact evaluation and process study only); (c) assess the equivalence of research conditions prior to the installation of the interventions, describe subgroups of interest, and provide covariates for any impact or subsequent analyses aimed at causally estimating the quality-to-child outcome relationships; and (d) explore the potential influence of drivers in facilitating or inhibiting successful installation and implementation of the interventions in the process study.

After baseline information is collected, a stratified, cluster-based, multi-group random assignment design will be used. In the pilot study, centers will be stratified by locality and then randomly assigned to one of the research conditions. In the impact evaluation and process study, centers will first be stratified by whether they provide community-based child care or Head Start services and by their initial levels of quality, and will then be randomly assigned to one of the research conditions. For the pilot study, each of the participating centers (about 40) will be randomly assigned to one of three groups: a group that receives Intervention 1 (Group 1), a group that receives Intervention 2 (Group 2), or a group that continues to conduct “business as usual” (Control). About three classrooms per center serving 3- and 4-year-old children will be asked to participate in the study. In the pilot study, centers will be unevenly assigned across groups, such that 30 centers install one of the targeted interventions (split evenly across the two interventions) and 10 centers are in a business-as-usual control condition. For the impact evaluation and process study, centers will be randomly assigned in equal proportions to one of two intervention conditions or a business-as-usual control condition. All of the classrooms serving 3- and 4-year-old children that are selected to participate in the study will be asked to participate in the training and professional development activities prescribed for the assigned research condition.
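As a minimal sketch of the stratified, cluster-based, multi-group random assignment described above, the Python function below assigns whole centers (the clusters) to groups separately within each stratum. The function name, its parameters, and the use of integer weights to encode group proportions are assumptions for illustration, not the study’s actual randomization procedure. Weights of 3:3:2 correspond to the pilot’s 15/15/10 split of 40 centers, and weights of 1:1:1 give the equal three-way split planned for the impact evaluation. Within each stratum the counts follow the weights as closely as the stratum size allows, so overall totals match the target exactly only when each stratum’s size is a multiple of the weight total.

```python
import random
from collections import Counter, defaultdict

def assign_centers(centers, stratum_of, weights, seed=None):
    """Assign whole centers to research groups within each stratum.

    centers:    list of center IDs (the units of random assignment).
    stratum_of: function mapping a center ID to its stratum key, e.g. a
                locality (pilot) or a (setting, initial_quality) tuple.
    weights:    dict mapping group name -> integer share (hypothetical encoding).
    Returns a dict mapping each center ID to a group name.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for center in centers:
        strata[stratum_of(center)].append(center)

    # One "block" of labels holds each group in proportion to its weight.
    base_block = [group for group, w in weights.items() for _ in range(w)]
    assignment = {}
    for key in sorted(strata):
        members = sorted(strata[key])   # fixed order so a seed is reproducible
        block = base_block[:]
        rng.shuffle(block)              # randomize which groups get leftover slots
        labels = [block[i % len(block)] for i in range(len(members))]
        rng.shuffle(labels)             # random mapping of labels to centers
        for center, group in zip(members, labels):
            assignment[center] = group
    return assignment

# Usage: a single-stratum pilot of 40 centers with 3:3:2 weights.
pilot_ids = [f"C{i:02d}" for i in range(40)]
pilot = assign_centers(pilot_ids, lambda c: "all",
                       {"Group 1": 3, "Group 2": 3, "Control": 2}, seed=1)
print(Counter(pilot.values()))  # 15 Group 1, 15 Group 2, 10 Control
```

Randomizing within strata guarantees that each stratum (for example, Head Start centers with low initial quality) is spread across all research conditions, which is the balance that stratification is meant to protect.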

Over the course of the year of the pilot study and the process study, the study team will collect a set of fidelity of implementation instruments across research conditions. All lead and assistant teachers across research conditions will be asked to complete weekly teacher logs. All coaches who support the installation of the interventions will be asked to complete coach logs after completing coaching sessions with teachers on their caseload. A randomly selected subset of classrooms across research conditions will also be asked to participate in implementation fidelity observations to gather information about the implementation and delivery of the interventions and the treatment differential across research conditions. This subset of classrooms will be selected with an eye toward equal representation of classrooms across all of the research conditions, across centers that provide Head Start and community-based child care services, and across centers with high and low initial levels of quality. In the pilot study, this information will be used to (a) describe the implementation of the interventions; (b) identify potential factors that appear to either facilitate or inhibit successful installation of the interventions; (c) revise and finalize the data collection activities and measures that will be included in the impact evaluation and process study; and (d) guide the selection of piloted interventions that have reasonable prospects for generating impacts on dimensions of quality and child outcomes for inclusion in the impact evaluation and process study.

In the process study, the information will be used to (a) document how the interventions are delivered and received by staff; (b) inform the degree to which the interventions are implemented with fidelity to the intended intervention models; and (c) identify factors at the center, staff, and child levels that appear to facilitate or inhibit successful implementation of the intervention models, to provide context for interpreting the findings of the impact evaluation.
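The selection of the fidelity-observation subset can be sketched as a stratified random draw of an equal number of classrooms from each combination of research condition, service type (Head Start or community-based child care), and initial quality level. The function below is a hypothetical illustration: its name, the tuple layout, and the `per_cell` parameter are assumptions, and the number of classrooms drawn per cell is not specified here.

```python
import random
from collections import defaultdict

def select_fidelity_subset(classrooms, per_cell, seed=None):
    """Draw up to `per_cell` classrooms at random from each cell.

    classrooms: iterable of (classroom_id, condition, setting, quality) tuples,
                e.g. ("R017", "Control", "Head Start", "low").
    Cells with fewer than `per_cell` classrooms contribute all of them.
    Returns a list of selected classroom IDs.
    """
    rng = random.Random(seed)
    cells = defaultdict(list)
    for room_id, condition, setting, quality in classrooms:
        cells[(condition, setting, quality)].append(room_id)

    selected = []
    for key in sorted(cells):        # deterministic cell order for a given seed
        ids = sorted(cells[key])
        selected.extend(rng.sample(ids, min(per_cell, len(ids))))
    return selected
```

Drawing a fixed number per cell, rather than a fixed share of all classrooms, is what yields equal representation across conditions and strata even when the cells differ in size.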

At follow-up, the study team will administer follow-up instruments to inform how centers, classrooms, teachers, and children changed as a function of the interventions over the course of the pilot study and the impact evaluation and process study. All the instruments will be administered at the end of the pilot study and at the end of the impact evaluation and process study. The targeted respondents for these data collection activities are all administrators in participating centers, who will be asked to complete an administrator follow-up survey; all lead and assistant teachers in classrooms selected to participate in this study, who will be asked to complete a teacher/assistant teacher follow-up survey; and all coaches serving the participating centers, who will be asked to complete a coach follow-up survey. All participating classrooms will also participate in three time-points of classroom observations at follow-up to capture information about the classrooms’ quality. For the pilot study and the impact evaluation and process study, follow-up assessments of children’s skills (using the follow-up protocol for child assessments), as well as teacher reports on children’s social and behavioral skills, will also be collected for the selected group of children in each of the participating classrooms (the sampling approach for children is discussed in the “Sampling” Section of B1 in Supporting Statement B).

The follow-up information will be used in the pilot study to (a) identify challenges and barriers to installing the interventions; (b) understand the extent to which the interventions are delivered with fidelity; (c) refine hypotheses about the extent to which the interventions are likely to change targeted dimensions of quality in line with their respective theories of change; (d) inform the extent to which the interventions are likely to generate impacts on different dimensions of quality in the impact evaluation and process study that are sufficient to explore the key questions of that phase; (e) guide the finalization of the procedures and supports used to install the interventions that will be included in the impact evaluation and process study, to help ensure reasonable prospects for generating impacts on dimensions of quality and child outcomes; (f) revise and finalize the data collection activities and measures that will be included in the impact evaluation and process study; (g) describe the population of families and children being served in participating classrooms and centers; and (h) assess the potential of each intervention to change child outcomes. For the impact evaluation, the information will be used to (a) describe the settings, classrooms, and child outcomes and, for information collected at both time points, how they may have changed from baseline to follow-up; (b) document treatment contrasts across research conditions; (c) estimate the impacts of the interventions on quality and child outcomes for all participating centers and classrooms and for subgroups of interest; and (d) estimate the causal effects of quality on child outcomes for all participating centers and classrooms and for subgroups of interest.

As mentioned above, the design of the pilot study and the impact evaluation and process study will involve recruiting, screening, and selecting a group of families and children who are open to participating in the data collection activities and who meet the study's eligibility and selection criteria. See the “Sampling” section of B1 in Supporting Statement B for more details on the selection of families and children.

Universe of Data Collection Efforts

The VIQI study will take a multi-method, multi-informant approach to collecting the detailed information needed to address the research questions underlying the VIQI project and to fulfill ACF's learning agenda. See Exhibit 2 for a matrix detailing the data collection instruments.

Exhibit 2. VIQI Data Collection Instruments

Data Collection Instrument | Data Type
Landscaping protocol with stakeholder agencies | Site call/visit
Screening protocol for phone calls | Site call/visit
Protocol for in-person visits for screening and recruitment activities | Site call/visit
Baseline administrator survey | Survey
Baseline teacher/assistant teacher survey | Survey
Baseline coach survey | Survey
Baseline classroom observation protocol | Observation
Baseline parent/guardian information form | Survey
Baseline protocol for child assessments | Direct assessments
Follow-up administrator survey | Survey
Follow-up teacher/assistant teacher survey | Survey
Follow-up coach survey | Survey
Follow-up classroom observation protocol | Observation
Follow-up protocol for child assessments | Direct assessments
Teacher reports to questions about children in classroom | Teacher report
Teacher/assistant teacher log | Log
Coach log | Log
Implementation fidelity observation protocol | Observation
Interview/focus group protocol | Interview

See Exhibit 3 for a matrix detailing the data constructs of interest that will be collected by the data collection instruments.

Exhibit 3. Matrix of Measurement Constructs


Instruments for Screening and Recruitment of ECE Centers

Attachment A.1 – Landscaping Protocol with Stakeholder Agencies and Related Materials

The VIQI team will conduct a series of phone and in-person discussions with state and local informants. These discussions will use a semi-structured protocol tailored to each informant's expertise and background and to gaps in the study team's knowledge about the ECE landscape in different metropolitan areas. The goal of these discussions is to better understand:

- the landscape and structure of ECE programming and the population served in different metropolitan areas;
- current and upcoming initiatives to improve quality;
- variation in levels of quality;
- details about state and local implementation of curricula and professional development initiatives;
- data infrastructure; and
- the feasibility of conducting the VIQI project.

The group for this data collection protocol is expected to consist of informants from Head Start (HS) grantees and community-based child care agencies. A total of 120 individuals from different metropolitan areas will be asked to participate in these discussions across the pilot study and the impact evaluation and process study of the VIQI project. The information is expected to be gathered in a discussion (and follow-up conversations, if needed) lasting a total of approximately 1.5 hours per participant.

Attachment A.2 – Screening Protocol for Phone Calls and Related Materials

The VIQI team will conduct a series of semi-structured phone discussions with staff from multiple organizations. These organizations will include a mix of Head Start grantee agencies, delegate agencies, organizations that operate multiple child care centers, and independent ECE centers. The conversations will use a semi-structured protocol tailored to each staff person's expertise and background and to gaps in the study team's knowledge about the programming structure and services provided by each organization.

Grantees and centers will be asked to provide information about each center's history, enrollment, structure and staffing, children's demographics, curricula, and teacher professional development. Information collected during screening calls will help determine whether the grantee or center is eligible for an in-person site visit. The group for this data collection protocol is expected to consist of two sets of respondents across the pilot study and the impact evaluation and process study of the VIQI project:

- 132 individuals from HS grantee and child care oversight agencies; and
- 336 individuals from Head Start and child care centers.

Because we expect to speak with more respondents at the center level than at the grantee or agency level, we present separate burden estimates for the two groups in Exhibit 5 below, even though a single protocol is used. The information is expected to be gathered in a discussion (and follow-up conversations, if needed) lasting a total of approximately 1.2 to 2 hours per participant.

Attachment A.3 – Protocol for In-person Visits for Screening and Recruitment Activities and Related Materials

A subset of the grantees and centers will receive up to two rounds of in-person site visits by the VIQI team. This subset will consist of grantees and centers that complete the screening phone discussions, are interested in participating in the project, and meet preliminary eligibility requirements for study inclusion. The visits will use a semi-structured protocol tailored to each staff person's expertise and background and to gaps in the study team's knowledge about the ECE programming and services provided by each organization.

During these visits, the project team will:

- clarify information obtained during the screening call;
- explore program- and center-level operations in more detail; and
- discuss plans for potential implementation of the selected interventions, including professional development, research procedures, and the roles and responsibilities of the programs and the project team.

The group for this data collection protocol is expected to consist of two sets of respondents across the pilot study and the impact evaluation and process study of the VIQI project:

- 610 individuals from HS grantee and child care oversight agencies; and
- 950 individuals from Head Start and child care centers.

Because we expect to speak with more respondents at the center level than at the grantee or agency level, we present separate burden estimates for the two groups in Exhibit 5 below, even though a single protocol is used. The information is expected to be gathered in a discussion (and follow-up conversations, if needed) lasting a total of approximately 1.2 to 1.5 hours per participant.

Baseline Instruments for the Pilot Study, Impact Evaluation, and Process Study

Attachment B.1 – Baseline Administrator Survey

All center administrators in participating centers in the pilot study and the impact evaluation and process study will be asked to complete a baseline survey. The survey will capture measures of demographics, professional experience, center staffing and services provided, training, coaching, and implementation drivers (e.g., beliefs, burnout/stress, center readiness). The target group for this data collection instrument is expected to consist of administrators employed by participating centers (or their overarching program) prior to random assignment. We expect some turnover in administrators. We will therefore seek to collect the baseline administrator survey from any newly hired administrators at the beginning of the academic or school year in which a given center is participating in the study. In total, we expect to ask up to 246 administrators to complete the baseline survey across the pilot study and the impact evaluation and process study of the VIQI project: up to 48 during the pilot study and up to 198 during the impact evaluation and process study.

Attachment B.2 – Baseline Teacher Survey

All lead and assistant teachers in participating classrooms in the pilot study and the impact evaluation and process study will be asked to complete a baseline survey. Teacher surveys will collect data on teacher demographics, professional experience, and classroom resources (e.g., materials and supplies). They will also collect data on implementation drivers, or moderators of implementation, as indicated in the Conceptual Model (Exhibit 1). The target group for this data collection instrument is expected to consist of lead and assistant teachers in selected classrooms that meet the VIQI project's eligibility criteria in participating centers prior to random assignment. We expect some turnover in lead and assistant teachers during the year. We will therefore seek to collect the baseline teacher survey from any newly hired lead and assistant teachers at the beginning of the academic or school year. In total, we expect up to 1,538 teachers to be asked to complete the baseline survey across the pilot study and the impact evaluation and process study of the VIQI project: up to 300 during the pilot study and up to 1,238 during the impact evaluation and process study.

Attachment B.3 – Baseline Coach Survey

Two groups of coaches will be asked to complete a baseline survey:

- all coaches serving intervention centers in the pilot study and the impact evaluation and process study; and
- all coaches identified as serving teachers in participating control centers.

Coach surveys include many of the same measures as the teacher surveys (e.g., demographics, professional experience, beliefs, pedagogical content knowledge), plus some questions about coaching style. Coaches serving intervention centers will also be asked about their motivation to implement the assigned intervention. These coaches will be surveyed just after random assignment is conducted but before the interventions associated with some research conditions are installed. We expect some turnover in coaches during the year. We will therefore seek to collect the baseline coach survey from any newly hired coaches over the course of the year in which a given center is participating in the study. In total, we expect up to 230 coaches to be asked to complete the baseline survey across the pilot study and the impact evaluation and process study of the VIQI project: up to 22 during the pilot study and up to 208 during the impact evaluation and process study.

Attachment B.4 – Baseline Protocol for Classroom Observations

All selected classrooms will be asked to participate in classroom observations at two time points at baseline for the pilot study or the impact evaluation and process study. The observations aim to capture initial quality levels across different dimensions of structural, process, and instructional quality. The protocol guiding the observations will be administered with the lead teachers of the targeted classrooms. It includes:

- guidelines for scheduling observations;
- a pre-observation teacher interview;
- multiple observational measures of classroom quality; and
- a post-observation teacher interview.

The pre- and post-observation teacher interviews are intended to collect updated information on classroom staffing, the schedule for the day of the observation, and the curricular sources used for instruction during the observation. In total, we expect up to 615 teachers to complete the baseline protocol for classroom observations: up to 120 in the pilot study and up to 495 during the impact evaluation and process study.

Attachment B.5 – Baseline Parent/Guardian Information Form