NSVSP OMB Supporting Statement B_final

National Survey of Victim Service Providers (NSVSP), 2019

OMB: 1121-0363

B. Statistical Methods

1. Respondent Universe and Sampling Methods

The sample universe for the 2019 National Survey of Victim Service Providers (NSVSP) is all entities nationwide identified through the National Census of Victim Service Providers (NCVSP) as actively providing services or assistance to victims of crime or abuse either as the primary mission of the organization or through dedicated personnel or a named program. For the NSVSP, a sample of approximately 7,237 entities will be selected from this national roster of VSPs. The sample will be large enough to account for non-response and ineligibility, while also ensuring an adequate number of completed surveys for national estimates and state-level estimates for the 14 states with the most VSPs. Based on the NCVSP response rate (83%) and the resources dedicated to nonresponse follow-up with VSPs, the response rate for the much longer NSVSP is expected to be approximately 70%. Aside from nonresponse, we predict a 15% ineligibility rate given that more than a year will have passed since the sampling frame was developed. We expect that some VSPs will have stopped providing direct services to victims, merged with other providers, or become ineligible for other reasons.

It is anticipated that a total of 4,306 VSPs will complete the survey (7,237 sampled × 70% response rate × 85% eligibility rate ≈ 4,306).
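The arithmetic behind this figure can be checked with a minimal sketch. The function name is illustrative only and is not part of the project's software; the inputs are the values stated above.

```python
def expected_completes(sample_size, response_rate, eligibility_rate):
    """Expected completed surveys, rounded to the nearest whole VSP."""
    return round(sample_size * response_rate * eligibility_rate)


# Figures taken from the text: 7,237 sampled, 70% response, 85% eligible.
print(expected_completes(7237, 0.70, 0.85))  # 4306
```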

Frame

Because there are no other complete rosters of VSPs nationwide, BJS, Rand, NORC at the University of Chicago, and the National Center for Victims of Crime (NCVC) worked collaboratively to create this frame of VSPs under OMB #1121-0355. VSPs that identified as informal or for-profit are not eligible for the NSVSP because these agencies are qualitatively different from other VSPs. In addition, VSPs without dedicated staff or programs to serve crime victims are ineligible for the NSVSP. After removing these ineligible VSPs from the NCVSP roster, the NSVSP frame file includes 11,877 eligible civilian entities in the United States that provide services to victims as the primary function of the organization or through dedicated programs or personnel.

Sampling

The NSVSP data will be used to produce estimates at the desired precision levels for the nation as a whole and for 14 U.S. states. Although national-level estimates are important for informing federal policy and funding decisions, and for individual VSPs to compare themselves to national averages, sub-national estimates are of particular interest to a wide range of stakeholders for this first NSVSP. In part, the strong interest in sub-national estimates is due to the great diversity in the VSP field; some of the variation in how agencies operate, which crime victims they serve, and the rules governing their funding occurs at the state level. Much of the federal funding for victim services is allocated to states by the federal government and then administered by states, meaning that VSPs adhere to both federal and state policies governing how the funding can be spent. Sub-national estimates, especially at the state level, would greatly increase the utility of the data for state agencies tasked with allocating VSP funding, VSPs interested in comparing their data to state averages, and other stakeholders making critical decisions about the local VSP field.

Therefore, the project team designed the sample to support as many state-level estimates as possible without drawing a sample so large that it would burden the field and potentially result in a lower response rate. The sample is designed to produce estimates for the 14 states that have the largest numbers of VSPs and enough VSPs to produce reliable estimates with a 70% response rate. These states are California, Texas, New York, Ohio, Florida, Virginia, Illinois, North Carolina, Pennsylvania, Georgia, South Carolina, Missouri, Indiana, and Massachusetts. To benefit states for which the sample does not support state-wide estimates, the sample will also be drawn to support sub-national estimates for the geographical regions defined by the U.S. Census Bureau as the South, West, Midwest, and Northeast. The goal for the sample design is to be able to produce the following estimates at acceptable precision levels (e.g., a margin of error [MOE] of 0.05 for an estimated proportion of 50% at the state level and an MOE of 0.02 for an estimated proportion of 50% at the national level):

National estimates of the proportion of VSPs with various specific characteristics (e.g., number of victims served, types of services provided) and estimates of these same characteristics by type of VSP (i.e., government-based; nonprofit or faith-based; hospital, medical, or emergency; campus or educational; and tribal); national estimates of the average number of trafficking victims served per VSP and by government-based versus non-government-based VSPs.

State-level estimates of the proportion of VSPs with various specific characteristics (e.g., type of VSP, number of victims served (large, medium, or small), types of crime victim served).

To fulfill these goals, the VSPs will first be grouped into 39 primary strata, defined by crossing geographical areas with VSP types. Each of the 14 stand-alone states is treated as a separate geographical area and the remaining states are grouped into four Census regions, for a total of 18 geographical areas. These 18 areas are crossed with the two major VSP types (government vs. non-government) after removing hospitals, tribal organizations and campus organizations, which are put into three separate strata due to their small size. This initial stratification will improve the efficiency of the sample estimates since the sample variance is decreased as the stratum means diverge and there is within-stratum homogeneity. Samples will be drawn independently from each stratum to support precise estimates. Table 1 summarizes the sample size allocation by primary stratum.
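The count of 39 primary strata follows from crossing 18 geographical areas with two VSP types and adding three stand-alone strata. A minimal sketch of that construction is shown here; the variable and function names are illustrative, and for simplicity the three stand-alone strata reuse their name as the VSP type label.

```python
from itertools import product

# Geographical areas in the order used in Table 1: 14 stand-alone states
# plus the four Census regions covering the remaining states.
AREAS = ["CA", "FL", "GA", "IL", "IN", "MA", "MO", "Midwest", "NC", "NY",
         "Northeast", "OH", "PA", "SC", "South", "TX", "VA", "West"]
VSP_TYPES = ["Non-government", "Government"]
SPECIAL_STRATA = ["Campus", "Hospital", "Tribal"]  # kept outside the crossing


def build_primary_strata():
    """Return (stratum_number, area, vsp_type) tuples numbered as in Table 1."""
    crossed = list(product(AREAS, VSP_TYPES))          # 18 x 2 = 36 strata
    special = [(name, name) for name in SPECIAL_STRATA]  # 3 more strata
    return [(number, area, vsp_type)
            for number, (area, vsp_type) in enumerate(crossed + special, start=1)]


strata = build_primary_strata()
print(len(strata))  # 39
```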

Table 1. Sample size allocation by primary stratum

| Primary stratum | Geographical area | VSP type | Population size | Sample size | Sampling rate (%) |
| --- | --- | --- | --- | --- | --- |
| 1 | CA | Non-government | 534 | 277 | 51.9 |
| 2 | CA | Government | 325 | 169 | 51.9 |
| 3 | FL | Non-government | 208 | 169 | 81.1 |
| 4 | FL | Government | 204 | 165 | 81.1 |
| 5 | GA | Non-government | 193 | 177 | 91.6 |
| 6 | GA | Government | 128 | 117 | 91.6 |
| 7 | IL | Non-government | 227 | 184 | 81.2 |
| 8 | IL | Government | 184 | 149 | 81.2 |
| 9 | IN | Non-government | 128 | 128 | 100.0 |
| 10 | IN | Government | 117 | 117 | 100.0 |
| 11 | MA | Non-government | 127 | 127 | 100.0 |
| 12 | MA | Government | 118 | 118 | 100.0 |
| 13 | MO | Non-government | 122 | 122 | 100.0 |
| 14 | MO | Government | 138 | 138 | 100.0 |
| 15 | Midwest | Non-government | 595 | 234 | 39.3 |
| 16 | Midwest | Government | 665 | 261 | 39.3 |
| 17 | NC | Non-government | 221 | 183 | 82.7 |
| 18 | NC | Government | 176 | 145 | 82.7 |
| 19 | NY | Non-government | 282 | 225 | 79.9 |
| 20 | NY | Government | 142 | 113 | 79.9 |
| 21 | Northeast | Non-government | 294 | 207 | 70.5 |
| 22 | Northeast | Government | 238 | 168 | 70.5 |
| 23 | OH | Non-government | 204 | 165 | 81.0 |
| 24 | OH | Government | 209 | 169 | 81.0 |
| 25 | PA | Non-government | 185 | 170 | 91.7 |
| 26 | PA | Government | 135 | 124 | 91.7 |
| 27 | SC | Non-government | 70 | 70 | 100.0 |
| 28 | SC | Government | 190 | 190 | 100.0 |
| 29 | South | Non-government | 915 | 276 | 30.2 |
| 30 | South | Government | 841 | 254 | 30.2 |
| 31 | TX | Non-government | 403 | 206 | 51.0 |
| 32 | TX | Government | 478 | 244 | 51.0 |
| 33 | VA | Non-government | 172 | 139 | 80.6 |
| 34 | VA | Government | 245 | 197 | 80.6 |
| 35 | West | Non-government | 842 | 274 | 32.5 |
| 36 | West | Government | 758 | 247 | 32.5 |
| 37 | Campus | Campus VSPs | 247 | 247 | 100.0 |
| 38 | Hospital | Hospitals | 356 | 311 | 87.2 |
| 39 | Tribal | Tribal VSPs | 261 | 261 | 100.0 |
| Total | | | 11,877 | 7,237 | 60.9 |

Note: The sample sizes in the table are calculated to achieve an MOE of 0.05 for an estimated proportion of 50% within a state, a Census region, or one of the three types of VSPs (tribal, campus, hospital). The sample sizes account for (1) the expected 70% response rate and (2) the expected 15% ineligibility rate.
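The calculation described in this note can be approximated with the sketch below. It assumes simple random sampling within each area, a 95% confidence level (z = 1.96), and a standard finite population correction; none of these details is stated in the note, so the function is an illustration and not the project's actual allocation procedure. Under these assumptions the result for California (859 VSPs across strata 1 and 2) comes out close to the 446 units sampled there, and Indiana (245 VSPs) becomes a take-all area.

```python
def required_sample(pop_size, moe=0.05, p=0.5, z=1.96,
                    response_rate=0.70, eligibility_rate=0.85):
    """Units to sample so that expected completes meet the MOE target."""
    # Completes needed for the target MOE from an effectively infinite population.
    n0 = z ** 2 * p * (1 - p) / moe ** 2
    # Finite population correction for a frame of pop_size units (assumed form).
    n_completes = n0 / (1 + (n0 - 1) / pop_size)
    # Inflate for expected nonresponse and ineligibility.
    n_fielded = n_completes / (response_rate * eligibility_rate)
    # A geographical area cannot contribute more units than it contains.
    return min(pop_size, round(n_fielded))


print(required_sample(534 + 325))  # California: 859 VSPs on the frame
print(required_sample(128 + 117))  # Indiana: 245 VSPs on the frame
```

Small differences from Table 1 can arise from rounding and from how the sample is split between the government and non-government strata within an area.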

Most of the primary strata have a sampling rate higher than 80%, and in some of them the sampling rates are 100%, indicating all the VSPs are selected in the sample due to their relatively small population sizes. In those primary strata with high sampling rates, the large VSPs will be selected with certainty. The large VSPs are defined by number of employees (including both full time and part time) using the 90th percentile as a threshold, defined as those having 23 or more employees nationally. In the strata with a sampling rate of 50% or lower, the VSPs will be sorted and grouped by the number of employees. The VSPs with more employees will be selected with higher probabilities than the VSPs with fewer employees. This is to ensure that large VSPs will be adequately represented in the sample as there are a small number of them in the universe. The NCVSP data show that the large VSPs can have quite different characteristics from the smaller ones. Sample sizes will be allocated to each substratum to satisfy both the national and subnational measurement goals.
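As an illustration only, the certainty selection of large VSPs within one primary stratum could be sketched as follows. The function name, the tuple layout, the fixed seed, and the use of simple random sampling for the remaining units are assumptions made for this sketch; the substratum allocation actually used is not specified above.

```python
import random

LARGE_THRESHOLD = 23  # employees; the 90th percentile cited in the text


def select_stratum_sample(units, sample_size, seed=0):
    """Select VSP ids from one stratum.

    units: list of (vsp_id, employee_count) tuples.
    Large VSPs are taken with certainty; the rest of the sample is filled
    by simple random sampling from the smaller VSPs (an assumption).
    If the large VSPs alone exceed sample_size, all of them are returned.
    """
    rng = random.Random(seed)
    certainty = [u for u in units if u[1] >= LARGE_THRESHOLD]
    others = [u for u in units if u[1] < LARGE_THRESHOLD]
    remaining = max(0, sample_size - len(certainty))
    sampled = rng.sample(others, min(remaining, len(others)))
    return [vsp_id for vsp_id, _ in certainty + sampled]


# Hypothetical stratum of six VSPs, two of them large.
example_units = [("a", 30), ("b", 5), ("c", 25), ("d", 2), ("e", 10), ("f", 1)]
print(select_stratum_sample(example_units, sample_size=4))
```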

2. Procedures for collecting data

The NSVSP will be a multimode data collection effort, primarily administered as a web survey. The instrument will be programmed as a web survey but VSPs will also have the option to complete the survey by telephone in subsequent non-response follow-up efforts. Web-based data collection is the preferred means to increase response rates, expedite the data collection process, simplify data verification, and facilitate report preparation. In the NCVSP, about 86% of respondents completed the web survey, with another 14% completing the instrument over the phone, and only 11 VSPs requesting a paper version. This suggests that most VSPs are capable of and even prefer to complete the survey online, but that response rates can be increased by offering a different mode of response as part of the nonresponse follow-up procedures.

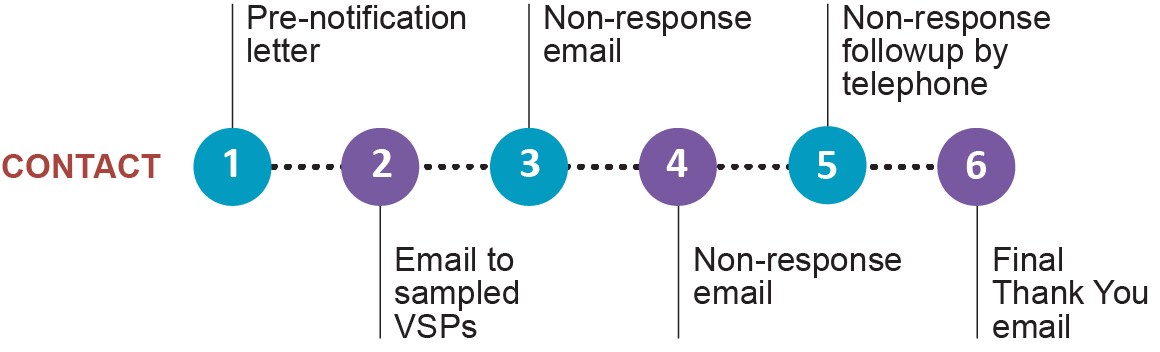

Figure 1 shows the proposed data collection steps and is followed by descriptions of each contact with respondents.

Contact 1: Pre-Notification Letter and FAQs mailed to Sampled VSPs – VSPs will be mailed a pre-notification letter and a set of FAQs from BJS and OVC, addressed to a contact name obtained from the NCVSP data collection effort. The letter will announce the start of the survey data collection, describe its purpose, list the types of data that will be collected on the survey, and give information on which representative in the organization will be contacted via email to complete the survey (see attachment 5 for survey administration materials). This letter will also note support from other organizations, including those represented in the expert panel meeting. Recipients of the letter will be asked to contact Westat, either through a toll-free number or by an online portal, if the survey invitation should be directed to someone else within the program or organization or if they have any questions about the survey. We will allow 3 weeks for this process before initiating Contact 2, which allows time for respondents to get in touch with Westat with changes in the point of contact and time to search for new addresses for VSPs with returned mail. Including this stage in our administration process will help us avoid continually attempting to contact the wrong person within an organization to complete the survey and will yield significant benefits in later phases of the survey administration.

Contact 2: Email to Sampled VSPs – Approximately 3 weeks after the mailing of the pre-notification letter, all sampled VSPs will receive an email that includes access information to the online NSVSP (e.g., a web link and login credentials). The email will include a due date for completing the survey (approximately 8 weeks from Contact 2), and a toll-free telephone number to call for assistance. We will initiate tracing efforts for any emails that have been returned as undeliverable within a specified time period (1 to 2 weeks). Specifically, research staff will conduct a web search for alternative contact information, including an alternative email address. This effort will be supplemented with phone calls to the VSPs to collect a working email address.

The vast majority of respondents provided an email on the NCVSP (all but about 400). About 6% of campus-based, 4% of tribal, 4% of government-based, 3% of non-profit or faith-based, and 1% of hospital-based VSPs did not provide an email address. If sampled VSPs are missing an email address, or the email address bounces back and a new email cannot be located, this second contact will be made via mail instead of email.

For VSPs without valid email addresses, all future follow-up attempts will be made via the telephone.

Contact 3: Non-Response Email – Approximately 1 month before the survey completion due date, non-responding VSPs will receive the Contact 3 email to encourage a response.

Contact 4: Non-Response Email – About 1 week before the due date, non-responding VSPs will receive the Contact 4 email reminding them of the due date and encouraging a response.

Contact 5: Non-Response Follow-up by Telephone – On the Monday following the survey’s due date, follow-up telephone interviews will begin with participants who have not started the survey and those who have started but did not complete the survey. Telephone follow-up will proceed throughout the remainder of the data collection period, approximately 8 weeks, with some flexibility depending on response rate and progress toward securing completed surveys.

Contact 6: Final Thank You Email – The survey management system will be programmed so that an automated email will be delivered to respondents to express appreciation and state that they may be contacted by telephone at a later date if we have any questions. Once all the data have been collected and reviewed, respondents will receive an email informing them that the data collection effort is complete and thanking them once more. This last contact can help keep organizations engaged in the overall mission of the program for possible future administrations of the NSVSP.
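The timing of Contacts 1 through 5 described above can be laid out as a simple date calculation. This is a sketch under stated assumptions: the function name and dictionary keys are illustrative, "approximately 1 month" is treated as 4 weeks, and the start date in the example is hypothetical.

```python
from datetime import date, timedelta


def contact_schedule(prenotification_date):
    """Approximate dates for Contacts 1-5, given the Contact 1 mailing date."""
    contact_2 = prenotification_date + timedelta(weeks=3)  # email invitation
    due_date = contact_2 + timedelta(weeks=8)               # survey due date
    contact_3 = due_date - timedelta(weeks=4)               # about 1 month before due
    contact_4 = due_date - timedelta(weeks=1)               # about 1 week before due
    # Contact 5 starts on the Monday strictly after the due date (Monday = 0).
    days_to_monday = (7 - due_date.weekday()) % 7 or 7
    contact_5 = due_date + timedelta(days=days_to_monday)
    phone_end = contact_5 + timedelta(weeks=8)               # about 8 weeks of calls
    return {
        "contact_1_letter": prenotification_date,
        "contact_2_email": contact_2,
        "contact_3_reminder": contact_3,
        "contact_4_reminder": contact_4,
        "due_date": due_date,
        "contact_5_phone_start": contact_5,
        "phone_follow_up_end": phone_end,
    }


# Hypothetical mailing date, used only to show the spacing between contacts.
schedule = contact_schedule(date(2019, 1, 7))
for step, when in schedule.items():
    print(step, when.isoformat())
```

From Contact 2 to the end of telephone follow-up, this spacing spans roughly 4 months.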

The following are data quality assurance steps that the research team will employ during the data collection and processing period:

Data retrieval. The telephone follow-up effort will also include a data quality/data retrieval effort. As questionnaires are received they will be reviewed, and if needed, the VSP data provider will be re-contacted to clarify responses or provide missing information. Although we will program the web instrument to include logic and range checks for submitted data, there may be important quality checks that will require data retrieval efforts (e.g., edits that are too complicated to convey in a message within the web survey).

Data quality monitoring. Respondents completing the survey via the web instrument will enter their responses directly into the online instrument. To confirm that editing rules are being followed, the data management team will review frequencies for the entered data after the first 100 cases are submitted. Any issues will be investigated and resolved. Throughout the remainder of the data collection period, project staff will conduct regular data frequency reviews to evaluate the quality and completeness of data captured in both the data entry and web modes.
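The logic and range checks mentioned above might look like the following sketch. The item names (`victims_served_total`, `trafficking_victims_served`) and the upper bound are hypothetical and are not taken from the NSVSP instrument; the point is only to show a range check on each item followed by a cross-item consistency check.

```python
def check_response(response, max_count=1_000_000):
    """Return a list of problems found in one submitted questionnaire."""
    problems = []
    valid = {}
    # Range checks: each count must be a whole number between 0 and max_count.
    for name in ("victims_served_total", "trafficking_victims_served"):
        value = response.get(name)
        if value is None:
            problems.append(f"{name}: missing")
        elif not isinstance(value, int) or not 0 <= value <= max_count:
            problems.append(f"{name}: out of range")
        else:
            valid[name] = value
    # Logic check: a subgroup count cannot exceed the overall count.
    if len(valid) == 2 and (
        valid["trafficking_victims_served"] > valid["victims_served_total"]
    ):
        problems.append("trafficking_victims_served exceeds victims_served_total")
    return problems


print(check_response({"victims_served_total": 100,
                      "trafficking_victims_served": 200}))
```

Responses flagged this way would be candidates for the data retrieval follow-up described above.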

3. Methods to maximize response rates and address non-response

Methods to maximize response rates

Instrument development. The NSVSP instrument and the procedures to collect, clean, and analyze the data have been developed based on technological advances that enhance data quality and minimize burden to survey participants and researchers. Based on the NCVSP results, we expect the vast majority of VSPs to complete the survey via web. As discussed in more detail in Part A of this package, the web interviewing capabilities are designed to assist respondents in completing their questionnaires by providing a high-quality user experience and including features that reduce respondent burden and ensure complete and accurate data. In addition, the web survey has a user-friendly interface, is easy to share and allows for easy navigation to specific sections in the event that multiple people at the VSP need to complete it.

Further, the instrument itself includes several design elements intended to increase the ease of reading and understanding the questionnaire. First, related questions are grouped together in topical sections. In addition, the survey instrument begins with the most salient items. Questions and instructions are presented in a consistent manner on each page in order to allow respondents to comprehend question items more readily. Proper alignment and vertical spacing are also used to help respondents mentally categorize the information on the page and to aid in a neat, well-organized presentation. Because we expect that some items may need to be answered by different individuals within the organization, the web survey features navigational tools that allow a survey respondent to easily see which sections have been completed, and which are still outstanding. The web survey will also feature a pane that contains a link to frequently asked questions and a glossary of terms, so that no matter what question is being answered, the survey respondent can easily find this information. A helpdesk will be staffed to provide assistance by phone and email to all respondents during normal business hours (Eastern Time) and will be available to all respondents through a toll-free number.

Before fielding the NSVSP, nine VSPs will be asked to complete the web-based survey to examine issues related to the usability, specifically the presentation and ease of navigating through the web-based instrument. This will provide an opportunity to modify the design and presentation of the survey to be more user friendly if needed, protecting against break offs or item skips.

Although the web will be emphasized as the preferred mode of survey completion, data collected over the phone during the nonresponse follow-up phase of the fielding will be collected using the same software as the VSPs will use to enter their data. Furthermore, telephone interviewers will receive extensive training specific to the project to ensure high response rates and data quality. First, trainees complete self-paced modules to become familiar with the study background, purpose, and the nature of the content of the survey. In the second phase, trainees attend a trainer-led session that focuses on gaining cooperation, addressing respondent questions and concerns, and the unique details of the survey. The third phase consists of role-play exercises where one trainee administers the survey to another trainee over the telephone as if conducting a live interview. Westat supervisors monitor trainees to evaluate their readiness for live data collection. Once approved, trainees are assigned as interviewers for the project.

In addition to administration issues, the research team devoted significant attention to the NSVSP survey length and content. The project team worked to balance the many data priorities identified by the field with the need to keep the survey to 45 minutes or less. When possible, item wording or content was selected to be similar to wording or content familiar to large parts of the VSP field that are required to report on performance measures; for example, VSPs with OVC funding report numbers of victims served by crime type each year. The NSVSP is intended to be a more detailed, longer follow-up to the NCVSP, but was kept to an average of 45 minutes. The expert panel members and cognitive testing participants felt this was an acceptable length, and offered additional guidance for encouraging participation in this longer survey. Many stakeholders felt participation would be high simply given the great interest in making data available about the VSP field, and recommended explaining why these data are being collected in a set of frequently asked questions. VSPs also recommended providing respondents with an outline of the topics covered in the questionnaire when they receive the initial survey request, so that they can pre-identify the resources and individual staff members that will be needed to complete the survey. Experts felt this would decrease the likelihood of break offs from the survey and increase data quality. This advice will be implemented in the NSVSP recruitment methodology (see attachments 5a and 5b).

Outreach and recruitment efforts. The recruitment strategies have also been designed to maximize response rates. Outreach efforts will include broad scale activities to raise awareness of the survey and promote enthusiasm for its success. The project team also plans to utilize external stakeholders, including OVC and OVW, and the expert panel members to encourage and increase cooperation among VSPs. This is expected to be particularly helpful in legitimizing the proposed NSVSP. Working through trusted intermediaries who are part of the project expert panel, project staff will:

Distribute short informational blurbs to be shared in organization newsletters that encourage participation if an entity is selected for the sample. (One month prior to launch.)

Ask expert panel members to post an NSVSP icon on their own websites with a link to the project website and share similar information on their social media accounts (e.g., Twitter, Facebook). (One month prior to launch.)

Ask expert panel members to distribute through their networks a reminder blurb urging service providers to take part in the survey. (At launch.)

Each of those awareness materials will link to a website that provides more information about the project and the importance of participation for selected entities. That website will include a list of the trusted victim advocacy leaders and other stakeholders who are involved in the project, which should give participants additional confidence in the survey project.

Nonresponse follow-up protocols. Once the data collection has launched, the approach that will be used for nonresponse follow-up is consistent with best practices in survey research and entails the steps described in Contacts 3 – 5 in Figure 1 above. For example, the phone-based follow-up efforts will include completing surveys with full and partial non-respondents as well as efforts to collect missing data from submitted web surveys.

Telephone non-response follow-up data collection will use a call scheduler to ensure that call attempts are spread across a variety of days of the week and times of day to maximize contact likelihood. Once a call attempt results in a contact, further calls to non-final cases will be made based on the respondent’s anticipated availability as identified during the contact. The goal of the call strategy will be to optimize response rates while maintaining efficiency and minimizing frustration among participants. Given the nature of this questionnaire, a single respondent may not have all of the required data upon initial contact; thus, we envision that several callbacks may be required to complete the survey. While our primary goal at this stage will be to obtain completed interviews by telephone, a secondary benefit of the calls is that they will likely prompt some respondents to complete the web survey on their own.

Based on the NCVSP, we anticipate a total response rate on this survey of 70 percent (approximately 4,306 completes among eligible sampled VSPs, as calculated in Section 1), with 30 percent of completes being done by telephone. However, it is anticipated that 60 percent of respondents will require telephone calls since some web completes will come after the beginning of the telephone non-response follow-up. The telephone data collection (and potentially retrieval) period will be conducted over the last 2 months of the 4-month data collection period.

Over the course of data collection, Westat will provide BJS with biweekly production reports on paradata monitoring participation rates by geography and provider type, length of time required to complete the survey, and other indicators related to securing a high participation rate. These frequent reports will help to ensure the protocol is working correctly, and Westat will make quick modifications if needed. Monitoring response rate and performance paradata will also allow the team to quickly identify problems with participation, and if needed, implement an adaptive design to ensure a representative distribution of VSPs at the national level (e.g., targeting outreach toward specific groups that are not responding at high rates).

Addressing nonresponse and missing data

While the majority of sampled VSPs will participate after multiple contact attempts, a nonresponse bias analysis (NRBA) will be conducted to assess the extent to which nonresponding VSPs differ from responders on key characteristics available through the NCVSP frame. The NCVSP provides data on the basic characteristics of VSPs that can be used to conduct NRBA.

Westat, the contractor for this data collection effort, has developed a SAS macro (WesNRBA) that facilitates a non-response bias analysis. It offers users the opportunities to conduct a basic analysis that uses auxiliary data and a more extensive analysis that uses external totals and/or survey outcome data. The purpose of the basic NRBA is to evaluate the relationship of response status to available outcome-related auxiliary variables to inform the weighting process. The purpose of the extended analysis is to take a deeper look into different ways to gauge the extent of the potential for bias. Should the analysis reveal evidence of nonresponse bias, Westat will work with BJS to assess the impact to estimates. Unit nonresponse will then be handled by creating weights to compensate for the reduced sample size and to reduce the impact of the bias.
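To make the weighting step concrete, the sketch below shows a generic weighting-class nonresponse adjustment. It is not the WesNRBA macro and does not reproduce Westat's procedures; the function names, the record layout, and the choice of adjustment cells are assumptions for illustration, and ineligible units are ignored for simplicity. Response rates are first compared across cells, and the base weights of respondents are then inflated so that respondents in each cell carry the weight of the full sample in that cell.

```python
from collections import defaultdict


def response_rates_by_cell(units):
    """Unweighted response rate within each adjustment cell."""
    sampled, responded = defaultdict(int), defaultdict(int)
    for u in units:
        sampled[u["cell"]] += 1
        responded[u["cell"]] += int(u["responded"])
    return {cell: responded[cell] / sampled[cell] for cell in sampled}


def nonresponse_adjusted_weights(units):
    """Inflate respondents' base weights to cover nonrespondents in their cell."""
    total_w, resp_w = defaultdict(float), defaultdict(float)
    for u in units:
        total_w[u["cell"]] += u["base_weight"]
        if u["responded"]:
            resp_w[u["cell"]] += u["base_weight"]
    return {
        u["id"]: u["base_weight"] * total_w[u["cell"]] / resp_w[u["cell"]]
        for u in units if u["responded"]
    }


# Hypothetical sampled units; base weight = stratum population / stratum sample.
sample_units = [
    {"id": 1, "cell": "gov", "base_weight": 2.0, "responded": True},
    {"id": 2, "cell": "gov", "base_weight": 2.0, "responded": False},
    {"id": 3, "cell": "nongov", "base_weight": 3.0, "responded": True},
    {"id": 4, "cell": "nongov", "base_weight": 3.0, "responded": True},
]
print(response_rates_by_cell(sample_units))
print(nonresponse_adjusted_weights(sample_units))
```

In practice the cells would be formed from frame variables that the NRBA shows to be related to response status.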

Among responding VSPs, there may be item nonresponse, which will be addressed through imputation, to the extent feasible. The general approach for imputation is to use the information available for a record to assign values for the record’s missing items. Two already-developed SAS macros for performing imputation have been used successfully by Westat on many surveys. The Westat-developed SAS macro, WESDECK, performs hot-deck imputation and the Westat-developed SAS macro, AutoImpute, can be used to preserve complex skip patterns and covariance structure while imputing entire questionnaires with minimal human intervention (Piesse, Judkins, and Fan, 2005)¹. These methods for imputation have been tested on several other large surveys and found to be effective tools. One of these SAS macros will be chosen depending upon the amount of missing data and the complexity of the questionnaire.
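For readers unfamiliar with hot-deck imputation, the general idea can be sketched as below. This is a generic random hot deck within imputation classes, not WESDECK or AutoImpute; the function name, the record layout, the `_imputed` flag, and the fixed seed are assumptions for illustration. A missing item is filled with the reported value of a randomly chosen donor from the same class, and records in classes with no donors are left missing.

```python
import random


def hot_deck_impute(records, item, class_key, seed=0):
    """Return copies of records with missing `item` values filled from donors."""
    rng = random.Random(seed)
    # Pool of reported values (donors) for each imputation class.
    donors = {}
    for r in records:
        if r.get(item) is not None:
            donors.setdefault(r[class_key], []).append(r[item])
    imputed = []
    for r in records:
        new = dict(r)  # leave the input records unchanged
        pool = donors.get(new[class_key])
        if new.get(item) is None and pool:
            new[item] = rng.choice(pool)
            new[item + "_imputed"] = True  # flag imputed values for analysts
        imputed.append(new)
    return imputed


# Hypothetical records: staff counts are missing for ids 2, 5, and 6.
example_records = [
    {"id": 1, "vsp_type": "gov", "staff": 10},
    {"id": 2, "vsp_type": "gov", "staff": None},
    {"id": 3, "vsp_type": "nongov", "staff": 4},
    {"id": 4, "vsp_type": "nongov", "staff": 6},
    {"id": 5, "vsp_type": "nongov", "staff": None},
    {"id": 6, "vsp_type": "tribal", "staff": None},
]
completed = hot_deck_impute(example_records, "staff", "vsp_type")
print(completed)
```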

4. Test of procedures or methods

The NSVSP instrument has gone through two rounds of cognitive testing to finalize the survey content and questions (for example, assessing how best to word questions, testing response options, and determining the feasibility of reporting certain data). In addition, the web-based survey will go through a round of usability testing before administration to ensure the presentation and programming of the instrument are user friendly.

Cognitive testing – Round 1

Thirty VSPs were recruited to participate in round 1 of cognitive testing of the NSVSP instrument. The 30 VSPs represented domestic violence shelters, rape crisis centers, homicide survivor groups, prosecutor-based providers, hospital-based providers, and campus providers. The interviews were conducted in November and December of 2017. Round 1 of testing focused on the survey content and ability to provide reliable responses to the survey items (e.g., were there items most VSPs would not be able to complete, did the response categories make sense, etc.). Interviewers discussed each item with VSPs, but did not ask VSPs to complete the survey. Most of the feedback from the cognitive interviews resulted in minor edits to wording of questions or tweaks to particular response items. During this round of cognitive testing, many cognitive interview participants reported that they would likely participate; however, many respondents noted they would need assistance from additional staff or data reports (e.g., from a case management system) to complete the survey. Survey length was estimated at 45 minutes. See attachment 3 for additional cognitive testing findings.

Cognitive testing – Round 2

Subsequent to the Expert Panel meeting held in April 2018, changes were made to the content and wording of the NSVSP, necessitating a second round of cognitive testing. This round of cognitive testing, conducted in June 2018, assessed the completeness of the information collected (were respondents able to furnish the requested information), the uniformity of understanding of the survey from one respondent to the next (e.g., did each respondent define a domestic violence victim in the same way), and the level of burden needed to complete the instrument (in terms of number of minutes to complete, types of record look-up that were required, and number of staff members needed to address different sections of the instrument). See attachment 4 for the cognitive testing report.

The instrument performed well overall in round 2 of testing, and resulted in only minor revisions to item wording or response options and changes to improve readability and further clarify key terms. However, the time it took to complete the instrument ranged widely (15 minutes to 2.75 hours; 5 of the 9 participants took 30 to 45 minutes). To ensure that the majority of VSPs can complete the survey in 45 minutes or less, the Westat and BJS project team further reduced the burden of the survey to prevent survey breakoffs or nonresponse. The team focused on simplifying the instrument by cutting items or reducing detail, while not modifying the item stems or wordings so as to not modify the interpretation of the items. Some of the high-burden items that required VSPs to look up information in their case management system were removed (e.g., a few non-priority items asking for victim counts). Additional respondent burden was minimized by reducing the level of detail in response options (e.g., rather than asking for 5 different age categories for trafficking victims, the item focuses on whether victims were youth under 18 or adults age 18 or older), or by breaking longer items into more than one question (e.g., rather than asking VSPs to provide a count of all their fulltime, part-time, and volunteer employees broken down by job description in one table, this item was separated into a few questions). Lastly, some items were removed because the burden was not justified by their value given the potential for different types of VSPs to interpret the questions in different ways, leading to inconsistent responses. The final instrument is in attachment 2.

Usability testing

Before launching the NSVSP, nine VSPs will be asked to complete the web instrument and a short online questionnaire to share their experiences specific to the presentation and programming of the survey. This final test will ensure the survey is easy to access and navigate. The open-ended online questionnaire will be administered at the end of the web instrument and will focus on the presentation of the survey questions and response options, perceptions of places where the online instrument could be more user friendly, respondents’ experiences stopping and restarting the survey, and other issues related to the general ease or burden in navigating through the online survey. In addition, the research team will examine paradata for a number of feasibility indicators, for example number of times VSPs log into and out of the survey, overall time spent across all sessions, section time spent across sessions, number of timeouts, errors thrown, and help documents accessed. The usability testing is not expected to result in any changes to the content of the instrument. Should any issues arise that result in substantive changes, BJS would follow procedures for alerting the public and obtaining additional OMB approval of such changes.

5. Individuals consulted on statistical aspects and collecting/analyzing data

The victimization unit of BJS takes responsibility for the overall design and management of the activities described in this submission, including data collection procedures, development of the questionnaires, and analysis of the data. BJS contacts are –

Barbara Oudekerk, Ph.D.

Statistician

Bureau of Justice Statistics

810 Seventh St., NW

Washington, DC 20531

(202) 616-3904

Jeffrey H. Anderson

Director

Bureau of Justice Statistics

810 Seventh St., NW

Washington, DC 20531

(202) 307-0765

The administration of the survey, as well as analysis of the data and reporting on findings, will be completed under a cooperative agreement with Westat. Principal Investigators are –

Sarah Vidal

Westat

RB3125

1600 Research Blvd

Rockville, MD 20850

Phone: (240) 453-2765

Jennifer O’Brien

Westat

RB3251

1600 Research Blvd

Rockville, MD 20850

Phone: 301-251-4272

¹ Piesse, A., Judkins, D., and Fan, Z. (2005). Item imputation made easy. Proceedings of the Section on Survey Research Methods of the American Statistical Association, pp. 3476–3479.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Oudekerk, Barbara Ann |

| File Modified | 0000-00-00 |

| File Created | 2021-01-20 |
