EPSEP OMB Supporting Statement Part A_7-10-19 rev

Evaluation of Preschool Special Education Practices Efficacy Study

OMB: 1850-0916

Part A: Supporting Statement for Paperwork Reduction Act Submission

OMB Information Collection Request 1850-0916

Submitted to:

Institute of Education Sciences

Project Officer: Yumiko Sekino

Contract Number: ED-IES-14-C-0001

Submitted by:

Mathematica Policy Research

P.O. Box 2393

Princeton, NJ 08543-2393

Telephone: (609) 799-3535

Facsimile: (609) 799-0005

Project Director: Cheri Vogel

Reference Number: 40346

CONTENTS

Part A. SUPPORTING STATEMENT for paperwork reduction act submission

Justification

A1. Circumstances necessitating the collection of information

A2. Purpose and use of data

A3. Use of technology to reduce burden

A4. Efforts to avoid duplication

A5. Methods of minimizing burden on small entities

A6. Consequences of not collecting data

A7. Special circumstances

A8. Federal Register announcement and consultation

A9. Payments or gifts

A10. Assurances of confidentiality

A11. Justification for sensitive questions

A12. Estimates of hours burden

A13. Estimate of cost burden to respondents

A14. Annualized cost to the federal government

A15. Reasons for program changes or adjustments

A16. Plans for tabulation and publication of results

A17. Approval not to display the expiration date for OMB approval

A18. Explanation of exceptions

References

APPENDIX A: INDIVIDUALS WITH DISABILITIES EDUCATION ACT 2004, SECTION 664

APPENDIX B: CLASS ROSTER AND DATA REQUEST FORM

APPENDIX C: PARENT LETTER AND CONSENT FORM

APPENDIX D: ADMINISTRATIVE RECORDS REQUEST FORM

APPENDIX E: OBSERVATION INSTRUMENTS WITH A TEACHER INTERVIEW COMPONENT

APPENDIX F: TEACHER FOCUS GROUP FORMS AND PROTOCOL

APPENDIX G: TEACHER BACKGROUND AND EXPERIENCES SURVEY

APPENDIX H: TEACHER-CHILD REPORTS

APPENDIX I: CONFIDENTIALITY PLEDGE

TABLES

A.1 Data collection activities

A.2 How data collection activities will address the research questions

A.3 Schedule of major study activities

A.4 Experts who consulted during the study design

A.5 Estimated response time for data collection

FIGURES

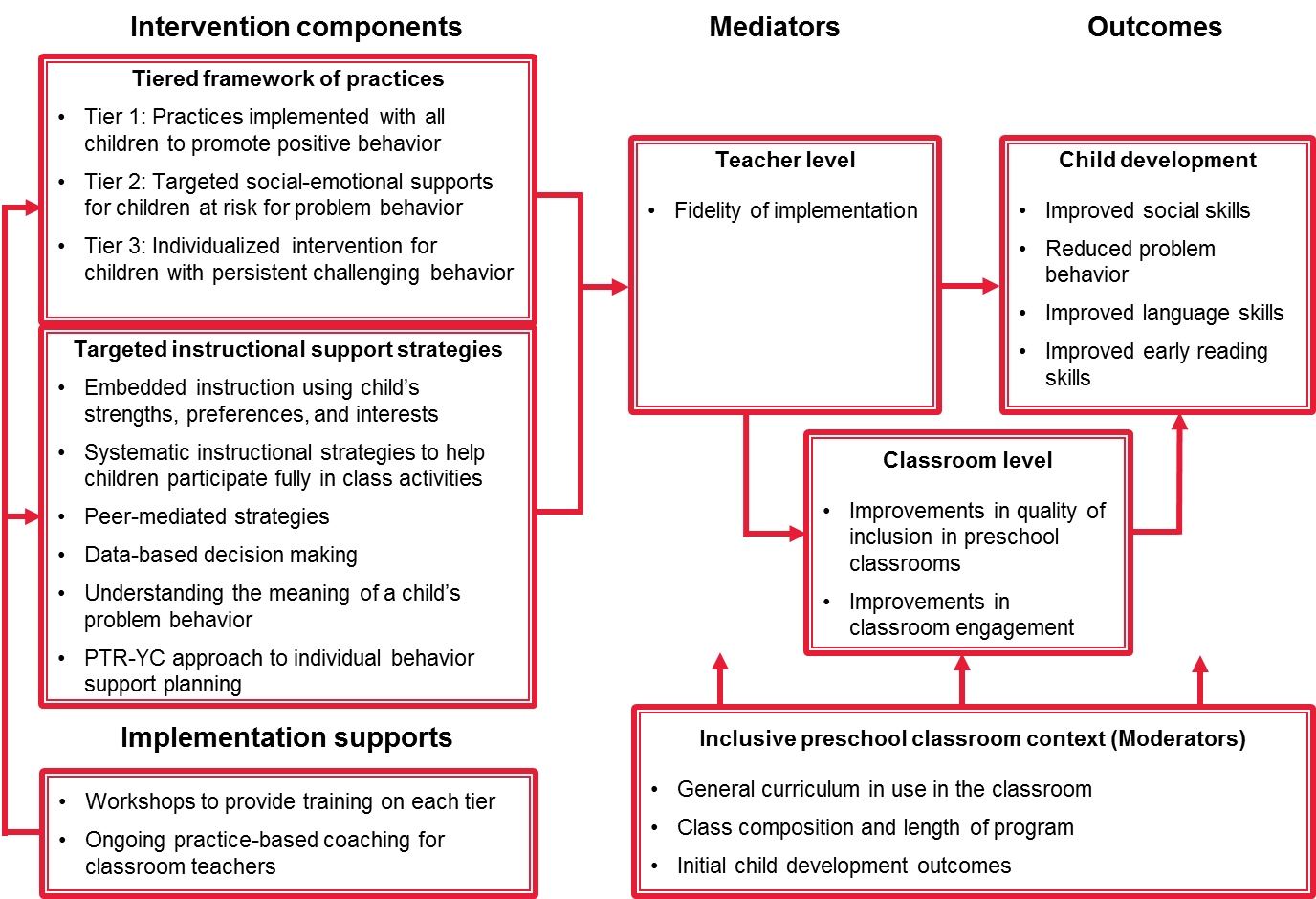

A.1 Logic model to guide the efficacy study

Part A. SUPPORTING STATEMENT for paperwork reduction act submission

This package requests clearance for data collection activities to support a rigorous efficacy study of an instructional framework designed to address the needs of all preschool children in inclusive classrooms.1 The efficacy study is part of the Evaluation of Preschool Special Education Practices (EPSEP), which is exploring the feasibility of a large-scale effectiveness study of an intervention for preschool children in inclusive classrooms. The Institute of Education Sciences (IES) in the U.S. Department of Education has contracted with Mathematica Policy Research and its partners, the University of Florida, the University of North Carolina at Chapel Hill, and Vanderbilt University, to conduct EPSEP (ED-IES-14-C-0001).

The main objective of the efficacy study is to test whether the Instructionally Enhanced Pyramid Model (IEPM) can be implemented with fidelity. IEPM comprises three established interventions for children with disabilities, integrated into a single comprehensive intervention for use with all children in inclusive preschool classrooms (Section A1.c describes IEPM in detail). The secondary objective is to provide initial evidence about IEPM’s impacts on classroom and child outcomes. This study provides an important test of whether strategies for delivering content in a manner that meets the needs of each child with a disability can be integrated with an existing framework of teaching practices for inclusive preschool classes, thus helping all children participate and make progress in the general preschool curriculum. These strategies, called targeted instructional supports, have been tested separately but not as part of this framework.

Findings from an earlier EPSEP survey data collection (OMB 1850-0916, approved March 26, 2015) and systematic review provided little evidence that curricula and interventions that integrate targeted instructional supports are available for school districts to use in inclusive preschool classrooms. These earlier findings justify the need for an efficacy study to obtain more information before IES decides whether to conduct a large-scale evaluation. In addition, the results can inform preschool instructional practices and policy objectives in the Individuals with Disabilities Education Act (IDEA) that support inclusion.

The efficacy study will include data collection to conduct both implementation and impact analyses. The implementation analysis will use observation data to describe the fidelity of training and implementation. It also will draw on coaching logs and coach interviews to describe program implementation. 2 In addition, responses to a teacher survey and teacher focus groups will provide information on teachers’ backgrounds, professional experiences, and perspectives on IEPM implementation. The impact analysis will use data from observations of classroom inclusion quality and engagement, a child observation, a direct child assessment, and teacher reports on child outcomes. The implementation and impact analyses also will use district administrative records to offer additional contextual and background information on the preschool program, its teachers, and enrolled children.

This supporting statement provides a detailed discussion of the procedures for these data collection activities, as well as copies of the forms and instruments the study team has developed and that we are submitting for OMB review and approval.

Justification

A1. Circumstances necessitating the collection of information

a. Policy context and statement of need

Nationally, more than 750,000 preschool children with disabilities (ages 3 through 5) receive special education and related services through IDEA. IDEA is a long-standing federal law last reauthorized by Congress in 2004 (Public Law 108-446) that supports children and youth with disabilities. Since the law’s 1986 reauthorization (Public Law 99-457), Part B Section 619 of IDEA has ensured that preschool children with disabilities have access to a free appropriate public education and authorized funding to help education agencies meet their individual learning needs. A goal of IDEA is to provide preschool children with disabilities the supports they need to learn the pre-academic, social-emotional, and behavioral readiness skills important for later school success.

Recent reauthorizations of IDEA and federal policy guidance have supported educating preschool children with disabilities in inclusive classes where they learn with other children and can participate and make progress in the general early childhood curriculum (U.S. Department of Health and Human Services and U.S. Department of Education 2015). Studies covering a range of child populations and preschool contexts have found positive effects of preschool inclusion on the development of cognitive, social-emotional, and behavioral skills both for all children (Hemmeter et al. 2016) and for children with disabilities (Green et al. 2014; Odom et al. 2004; Strain and Bovey 2011). No study has found that inclusion harms children without disabilities (Buysse and Bailey 1993; Cross et al. 2004; Diamond and Huang 2005).

When delivering instruction in inclusive preschool classes, the field’s recommendation is to integrate a framework of evidence-based practices with targeted instructional supports for children with disabilities (Division for Early Childhood 2014; Horn and Banerjee 2009; Snyder et al. 2018). The framework of practices is the instructional content that promotes positive outcomes for all children. The targeted instructional supports are strategies for delivering the content in a manner that meets the behavioral, social, communication, and learning needs of children with disabilities. The combination of an evidence-based framework of practices and targeted instructional supports is thought to help children with disabilities participate in the general preschool curriculum and make progress on their individualized goals.

However, a systematic review and district surveys conducted earlier in EPSEP (OMB 1850-0916, approved March 26, 2015) found little evidence that integrated intervention programs addressing the needs of all children with disabilities in inclusive preschool classrooms exist or that districts use them. The existing evidence comes from intervention programs that either do not include targeted instructional supports or, if they do, are aimed mainly at children with specific types of disabilities.

The EPSEP efficacy study will help fill this gap by providing rigorous evidence on the efficacy of an instructional framework that integrates instructional content and targeted instructional supports. It will provide a necessary test of whether the framework’s components can be implemented together, along with initial evidence of its impacts, to help IES decide whether to proceed with a large-scale effectiveness study.

This study is authorized by Section 664(b) of IDEA 2004, which requires IES to carry out a national assessment of activities that receive assistance through IDEA funds (Appendix A). The scope of the national assessment includes assessing the implementation of professional development activities and their impacts on instruction and child outcomes. EPSEP is one of several studies that IES has sponsored to meet this mandate.

b. Study design and research questions

The efficacy study will be a small-scale randomized controlled trial conducted during the 2019–2020 and 2020–2021 school years. The study team held a competition to select the intervention to test; based on expert panel recommendations, IEPM was selected. (See the next section for more information on IEPM.) Implementation of IEPM involves two years of teacher training and coaching. Based on evidence from prior studies of the individual IEPM components, the developer/provider team that will lead the IEPM training and coaching believes that two years of implementation support are necessary for teachers to achieve fidelity. The study will recruit 80 teachers from 40 inclusive preschool classrooms in 26 public schools in three school districts. The schools will be randomly assigned to one of two groups: (1) an intervention group that receives full training and coaching support on IEPM for two years or (2) a control group that continues its typical classroom practices (“business as usual”). The child samples will include all children in study classrooms. This design will allow us to examine implementation fidelity, which will provide context for interpreting the impact results and shed light on how IEPM may affect classroom and child outcomes. We will also estimate the impacts of IEPM, relative to the control group, after each year of implementation for preschool children with and without disabilities, separately and combined.
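Because whole schools, not individual classrooms or children, are randomized, every classroom and child inherits its school’s group. The sketch below illustrates that school-level (cluster) assignment under stated assumptions. The statement does not say whether assignment was blocked by district, so the district blocking, the school and district identifiers, and the seed are all hypothetical.

```python
import random
from collections import defaultdict


def assign_schools(schools, seed=2019):
    """Randomly assign whole schools (clusters) to 'intervention' or 'control'.

    schools: list of (school_id, district_id) pairs; identifiers are hypothetical.
    Blocking by district is an assumption: within each district the schools are
    shuffled and the first half (rounded down) go to the intervention group.
    Every classroom, teacher, and child in a school inherits that assignment.
    """
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    by_district = defaultdict(list)
    for school_id, district_id in schools:
        by_district[district_id].append(school_id)

    assignment = {}
    for district_id in sorted(by_district):
        ids = sorted(by_district[district_id])  # canonical order before shuffling
        rng.shuffle(ids)
        n_intervention = len(ids) // 2
        for position, school_id in enumerate(ids):
            assignment[school_id] = "intervention" if position < n_intervention else "control"
    return assignment


# Hypothetical example: 26 schools spread across three districts (9, 9, and 8 schools).
schools = [(f"S{i:02d}", f"D{i % 3}") for i in range(26)]
groups = assign_schools(schools)
print(sum(g == "intervention" for g in groups.values()))  # 12 (4 per district)
```

Because the number of classrooms differs across schools, assignment at the school level does not by itself guarantee an even split of classrooms (for example, 20 intervention classrooms out of 40). An actual design might also balance on classroom counts.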

The efficacy study’s seven research questions are listed below.

1. What are the characteristics of schools, classrooms, staff, and children in the intervention and control groups?

2. What implementation supports do staff and children in the intervention group receive?

3. What level of intervention fidelity do teaching staff achieve after each year?

4. What are the experiences and perspectives of coaches and of staff in the intervention and control groups, including on challenges to implementation?

5. What is the cost of implementing IEPM?

6. What are IEPM’s impacts on the classroom environment?

7. What are IEPM’s impacts on the social-emotional, behavioral, language, and early reading skills of children with disabilities and of children without disabilities?

c. Overview of the Instructionally Enhanced Pyramid Model

IEPM organizes evidence-based practices and targeted instructional supports in a tiered approach to address the social, emotional, and behavioral needs of all preschool children in inclusive classrooms, including children with, or at risk for, disabilities. It includes practices implemented with all children in the classroom that focus on building nurturing and responsive relationships and creating a high-quality, supportive classroom environment (Tier 1); social-emotional supports for children at risk for problem behavior or social-emotional delays (Tier 2); and individualized interventions for children with severe and persistent challenging behavior (Tier 3).

A distinguishing feature of IEPM is that it integrates targeted instructional support strategies into all three tiers. In this regard, it enhances the three tiers in the Pyramid Model for Promoting Social and Emotional Competence in Young Children (Fox et al. 2003; Hemmeter et al. 2006) by offering teachers specific guidance on how to implement practices at each tier. The targeted instructional supports are drawn from two evidence-based interventions: (1) the Learning Experiences Alternate Program for Preschools and Their Parents (LEAP; Strain and Bovey 2011); and (2) Prevent-Teach-Reinforce for Young Children (PTR-YC; Dunlap et al. 2018). At Tiers 1 and 2, IEPM offers strategies from LEAP for how teachers can embed learning opportunities into ongoing activities and routines, support children to participate fully in class activities, use peer-mediated strategies, make data-based decisions, and understand the meaning of children’s problem behavior. At Tier 3, IEPM uses the PTR-YC process to guide individual behavior support planning. All three of IEPM’s component interventions have evidence of positive impacts on child outcomes from rigorous studies conducted in public preschool classrooms (Dunlap et al. 2018; Hemmeter et al. 2016; Strain and Bovey 2011).

Figure A.1 shows the components of IEPM as part of a logic model for the efficacy study.

Figure A.1. Logic model to guide the efficacy study

Implementation support for teachers on IEPM will be aligned with the tested practices and requirements of its three components (Pyramid Model, LEAP, and PTR-YC). Each tier will have one set of integrated training materials and guides to support implementation. A coach will be assigned to each classroom to work with its teachers during each of the training workshops and to provide ongoing support during the school year. An initial workshop in summer 2019 will introduce teachers to the Tier 1 practices. Two subsequent workshops, in fall and winter of the 2019–2020 school year, will introduce Tier 2 and Tier 3 practices, respectively. Throughout the school year, teachers will receive near-weekly practice-based coaching and feedback (28 to 31 coaching sessions). Training and support during 2020–2021 will involve a refresher workshop in summer 2020, followed by additional practice-based coaching (nine sessions).

d. Data collection

The efficacy study includes several data collections. The study team will collect data from districts, schools, and teachers to describe the implementation of IEPM and measure its impacts on classroom and child outcomes. We also will obtain permission from parents or guardians of children in study classrooms to collect data on their children. Table A.1 summarizes all these data, and we describe them in the text that follows the table.

Table A.1. Data collection activities

| Instrument | Data need | Respondent | Mode | Sample | Schedule |
|---|---|---|---|---|---|
| Sampling, consent, and contextual information |  |  |  |  |  |
| Class rosters from schools | List of children in study teachers’ classes used to prepare parent consent forms; IEP and ELL status | School | Paper or electronic list of students enrolled in study teachers’ classrooms | Treatment and control | Fall 2019, fall 2020 |
| Parent consent form | Active consent from parent or guardian for child to be included in data collection for the study | Parent or guardian | Paper form indicating consent or nonconsent for children to be included in data collection for the study | Treatment and control | Fall 2019, fall 2020 |
| Administrative records from districts | Child characteristics, discipline, kindergarten screener, curriculum-linked assessments, staff rates, facilities costs | Districts | Electronic records | Treatment and control | Summer 2020, summer 2021 |
| Implementation |  |  |  |  |  |
| Training fidelity observations for workshops on IEPM | Degree to which the workshops met the content requirements | Study team | Paper checklists based on the training fidelity tool for the Pyramid Model | Treatment | Summer 2019, fall and winter 2019, summer 2020 |
| Intervention fidelity observations and interviews | Degree to which teachers implement IEPM as intended in classrooms | Study team | Observation lasting 1.5 school days using the TPOT and QPI; includes time for a teacher interview for the TPOT (20 minutes) and the PTR-YC procedural fidelity checklist (5 minutes) | Treatment and control | Fall 2019, spring 2020, fall 2020, spring 2021 |
| Provider’s coaching logs and cost records | Coaching frequency and length, progress on action plans, procedures followed, strategies used, number of children at IEPM tier levels, IEPM costs | Coaches | Electronic form completed by coaches after each feedback session | Treatment | Throughout the 2019–2020 and 2020–2021 school years |
| Coach interviews | Perspectives on implementation successes and challenges | Coaches | 60-minute interview | Treatment | Spring 2020, spring 2021 |
| Teacher focus groups | Perspectives on implementation successes and challenges; opportunity costs of IEPM support | Teachers | 90-minute focus group | Treatment | Spring 2020, spring 2021 |
| Teacher background and experiences survey | Teacher experience and education, professional development received, attitudes, use of data | Teachers | 10-minute paper survey | Treatment and control | Spring 2020, spring 2021 |
| Classroom quality |  |  |  |  |  |
| Classroom inclusion and engagement observations and interviews | Classroom inclusion quality and child engagement | Study team | 3-hour observation that includes the ICP (2.5 hours) and Engagement Check II (0.5 hours); the ICP also involves a 20-minute teacher interview | Treatment and control | Fall 2019, spring 2020, fall 2020, spring 2021 |
| Child outcomes |  |  |  |  |  |
| Child observations | Frequency of child social interactions and problem behaviors | Study team | 60-minute observation per child using the TCOS | Treatment and control (four children per classroom) | Spring 2020, spring 2021 |
| Teacher-child reports | Child functional abilities, social-emotional skills, problem behaviors, and language skills | Teachers | 27-minute (fall) or 22-minute (spring) paper form using the ABILITIES Index (fall only), the SSIS, and the CELF P2 pragmatic profile | Treatment and control | Fall 2019, spring 2020, fall 2020, spring 2021 |
| Child assessment | Early reading skills | Children | 30-minute assessment using the TERA-3 | Treatment and control | Spring 2020, spring 2021 |

Notes: CELF P2 = Clinical Evaluation of Language Fundamentals Preschool 2; ELL = English language learner; ICP = Inclusive Classroom Profile; IEP = individualized education program; PTR-YC = Prevent-Teach-Reinforce for Young Children; QPI = Quality Program Indicators; SSIS = Social Skills Improvement System; TCOS = Target Child Observation System; TERA-3 = Test of Early Reading Ability, Third Edition; TPOT = Teaching Pyramid Observation Tool.

Sampling, consent, and contextual information

Class rosters from schools. We will provide schools with a class roster and data request form (Appendix B) to obtain lists of the children in study teachers’ classrooms in fall 2019 and fall 2020. The information requested will include school, teacher, and student identifiers, as well as indicators of English language learner (ELL) and individualized education program (IEP) status. The identifiers (name and ID) will enable the study team to match individuals to other data requested as part of the study (for example, attendance records and teacher survey responses). We will use the class roster data to develop parent permission packets.

Parent consent form. We will provide a paper consent form for parents or guardians to give permission for the study team to collect data on their child in fall and spring through one-on-one assessments, teacher reports, and observations (Appendix C). We will collect the consent forms in fall 2019 and fall 2020. To achieve high consent rates, we will use a two-step process. First, a field staff member will visit each school to explain the study to teachers. We will then ask teachers to explain the study to families and to distribute and collect the consent forms. We will offer to talk by telephone with any parents who have questions before they sign the form.

Administrative records from districts. Following each implementation year, in summer 2020 and summer 2021, we will collect administrative records for all study children from districts (Appendix D). To describe the child sample, the records will include demographic information, such as gender, age, and free or reduced-price lunch status; IDEA status and disability category; and ELL status. For the impact analysis, we also will collect data on attendance, suspensions, and expulsions, as well as scores on any curriculum-linked assessments and kindergarten screeners that the districts administer. Finally, we will collect data to confirm each child’s teacher and school and that the child is enrolled in a preschool grade. In addition to child-level records, we will ask districts for cost information, including staff, substitute teacher, and facilities rates, to assess implementation costs.

Implementation

Training fidelity observations for workshops on IEPM. Study team members will attend each IEPM workshop to rate the content fidelity of the trainings using paper checklists. The study team and IEPM provider will develop the checklists, which will address the key content areas for each training. We will use data from the training fidelity checklists to address research question 2. No respondent burden is associated with this data collection activity.

Intervention fidelity observations and interviews. We will visit all study schools in the fall and spring of both the 2019–2020 and 2020–2021 school years to conduct intervention fidelity observations and interviews (Appendix E). We will use this data collection activity to determine whether teachers are implementing IEPM as intended. We will use the existing fidelity measures for IEPM’s three intervention components: the Teaching Pyramid Observation Tool (TPOT, for the Pyramid Model), the Quality Program Indicators (QPI, for LEAP), and the PTR-YC procedural fidelity checklist. The TPOT addresses 14 key practices through items that assess teachers’ capacity to implement the Pyramid Model’s universal, targeted, and intensive intervention practices. It requires a half-day observation that includes time for a 20-minute teacher interview. The QPI assesses the fidelity with which classroom staff implement LEAP targeted instructional supports. It requires a full-day classroom observation. The PTR-YC procedural fidelity checklist assesses whether staff followed procedures for any children who receive Tier 3 intervention. The study team will complete this checklist for children in Tier 3 based on a 5-minute teacher interview on the day of the QPI observation. The only respondent burden associated with this data collection activity is for the interviews; the observations impose no respondent burden.

Provider’s coaching logs and cost records. We will use information from coaching logs adapted from the logs used for the Pyramid Model. Study coaches will complete the coaching logs to document key aspects of the sessions they hold with teachers. The logs will include information on (1) coaching frequency, (2) the length of the coaching meeting, (3) progress on steps outlined in teacher action plans, (4) coaching procedures followed, (5) coaching strategies used, and (6) the number of children at each IEPM tier level. Provider cost records will provide information for estimating the costs of implementing IEPM. No respondent burden is associated with this data collection activity.

Coach interviews. Study team members will conduct a 60-minute interview with study coaches in spring 2020 and spring 2021. We anticipate the study will need five coaches to support the 20 treatment classrooms. The interviews will provide information for the implementation analysis on coaches’ perspectives on IEPM implementation successes and challenges. No respondent burden is associated with this data collection activity.

Teacher focus groups. We will conduct 90-minute focus groups with all study teachers in each treatment group school in spring 2020 and spring 2021 (Appendix F). The focus groups will provide information on teachers’ perspectives on IEPM implementation successes and challenges. We also will ask teachers to describe activities or resources they give up in order to participate in IEPM training and coaching (the “opportunity cost” of participation).

Teacher background and experiences survey. All teachers in the study (two per classroom in treatment and control schools) will complete a 10-minute self-administered hard-copy survey in spring 2020 and spring 2021 to gather key information on their teaching backgrounds and experiences (Appendix G). The survey will ask teachers about their years of teaching experience and educational attainment; the professional development and coaching they have received, as well as the challenges they perceive related to teaching preschool children with disabilities; and how they use progress monitoring data to make instructional decisions.

Classroom quality

Classroom inclusion and engagement observations and interviews. We will observe all study classrooms in the fall and spring of each implementation year to assess the quality of classroom inclusion and the level of child engagement. We will measure inclusion quality using the Inclusive Classroom Profile (ICP). ICP observations take approximately 2.5 hours. Observers assess most items through structured observation, focusing on adults’ interactions with, and support for, children with disabilities. A few items require asking the teacher questions (a 20-minute interview) or reviewing documents (included in Appendix E). In conjunction with the ICP observation, observers also will use the Engagement Check II to record children’s engagement in activities or interactions during sessions totaling 30 minutes. The only respondent burden associated with this data collection activity is for the interviews; the observations impose no respondent burden.

Child outcomes

Child observations. In the spring of each implementation year, we will conduct a 60-minute observation of each selected child’s social interactions and problem behaviors in all study classrooms. The Target Child Observation System (TCOS) directly measures key constructs that IEPM targets. We will complete it for four children per classroom: those demonstrating the greatest difficulties with social interaction and behavior, as measured by their fall Social Skills Improvement System (SSIS) scores (see Teacher-child reports, below). This direct, micro-level information on children’s behavior will augment the other child behavior data we plan to collect. No respondent burden is associated with this activity.
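The TCOS sampling rule amounts to ranking children within each classroom by their fall SSIS results and keeping the top four. The statement does not say how the SSIS Social Skills and Problem Behaviors scores are combined, so the difficulty index below (Problem Behaviors score minus Social Skills score, on a common standard-score metric), the record layout, and the field names are hypothetical; this is a sketch of the selection logic, not the study’s procedure.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FallSSIS:
    child_id: str
    classroom_id: str
    social_skills: float      # SSIS Social Skills score; higher = stronger skills
    problem_behaviors: float  # SSIS Problem Behaviors score; higher = more problems


def select_tcos_children(records, per_classroom=4):
    """Return the child IDs with the greatest fall difficulties in each classroom.

    Hypothetical difficulty index: problem_behaviors - social_skills, so a child
    with low social skills and high problem behavior ranks first. Ties are broken
    by child_id so the selection is reproducible.
    """
    by_classroom = defaultdict(list)
    for record in records:
        by_classroom[record.classroom_id].append(record)

    selected = {}
    for classroom_id, children in by_classroom.items():
        ranked = sorted(
            children,
            key=lambda r: (-(r.problem_behaviors - r.social_skills), r.child_id),
        )
        selected[classroom_id] = [r.child_id for r in ranked[:per_classroom]]
    return selected


# Hypothetical fall scores for one classroom of six consenting children.
records = [
    FallSSIS("C1", "A", 100, 100),
    FallSSIS("C2", "A", 80, 120),
    FallSSIS("C3", "A", 90, 110),
    FallSSIS("C4", "A", 110, 90),
    FallSSIS("C5", "A", 85, 115),
    FallSSIS("C6", "A", 95, 105),
]
print(select_tcos_children(records))  # {'A': ['C2', 'C5', 'C3', 'C6']}
```

Classrooms with fewer than four consenting children simply contribute all of them.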

Teacher-child reports. We will ask study teachers in treatment and control schools to complete a paper form reporting on children’s functional abilities, social-emotional skills, problem behaviors, and language skills (Appendix H). We will use the ABILITIES Index (5 minutes) to measure functional abilities, which we can use to control for initial ability in the impact analysis. We will use the Social Skills Improvement System (SSIS) rating scales (15 minutes) to measure social skills and problem behaviors, and the Clinical Evaluation of Language Fundamentals Preschool 2 (CELF P2) pragmatic profile to measure language use in social situations. Teachers will report on all three measures for each child in fall 2019 and fall 2020, and on the SSIS and CELF P2 only in spring 2020 and spring 2021. We assume that the two study teachers in each classroom will divide responsibility for completing the teacher-child reports evenly between them.
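The per-child times in Table A.1 (27 minutes in fall, 22 minutes in spring) can be reconciled with the component times given here, and combined with the even split between the two teachers to estimate each teacher’s reporting load. In the sketch below, the 7-minute CELF P2 figure is inferred by subtraction rather than stated in the text, and the class sizes of 16 and 15 consenting children are hypothetical.

```python
import math

# Component times stated in the text and in Table A.1 (minutes per child).
ABILITIES_MIN = 5       # ABILITIES Index, fall only
SSIS_MIN = 15           # SSIS rating scales, fall and spring
FALL_TOTAL_MIN = 27     # full fall form
SPRING_TOTAL_MIN = 22   # spring form without the ABILITIES Index

# Inferred, not stated: the time left over for the CELF P2 pragmatic profile.
celf_fall = FALL_TOTAL_MIN - ABILITIES_MIN - SSIS_MIN
celf_spring = SPRING_TOTAL_MIN - SSIS_MIN
assert celf_fall == celf_spring, "fall and spring totals imply different CELF P2 times"


def minutes_per_teacher(children_in_class, minutes_per_child, teachers=2):
    """Reporting minutes for the busier teacher when children are split evenly.

    With an odd class size one teacher reports on one extra child, so the
    per-teacher count is rounded up.
    """
    children_per_teacher = math.ceil(children_in_class / teachers)
    return children_per_teacher * minutes_per_child


print(celf_fall)                                  # 7
print(minutes_per_teacher(16, FALL_TOTAL_MIN))    # 216
print(minutes_per_teacher(15, SPRING_TOTAL_MIN))  # 176
```

Because the fall and spring totals imply the same CELF P2 time, the two figures in Table A.1 are internally consistent with the component times.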

Child assessment. All study children in treatment and control schools will complete a 30-minute assessment of early reading using the Test of Early Reading Ability, Third Edition (TERA-3). The TERA-3 includes three subtests: alphabet, conventions, and meaning. The 30 minutes include 5 minutes for short breaks between subtests. We will administer the assessment one-on-one in English during the school day in the spring of each implementation year. No respondent burden is associated with this activity.

A2. Purpose and use of data

Mathematica and its partners will collect and analyze the data for this efficacy study. The work will be completed under contract number ED-IES-14-C-0001. The data will be used to address the study’s research questions, as shown in Table A.2, and to inform IES’s assessment of whether to conduct a large-scale effectiveness study.

Table A.2. How data collection activities will address the research questions

| Research questions | Data collection activities |
|---|---|
| IEPM implementation across two school years |  |
| 1. What are the characteristics of schools, classrooms, staff, and children in the intervention and control groups? |  |
| 2. What implementation supports do staff and children in the intervention group receive? |  |
| 3. What level of intervention fidelity do teaching staff achieve after each year? |  |
| 4. What are the experiences and perspectives of coaches and of staff in the intervention and control groups, including on challenges to implementation? |  |
| 5. What is the cost of implementing IEPM? |  |
| IEPM impacts relative to a business-as-usual control after each year of implementation |  |
| 6. What are IEPM’s impacts on the classroom environment? |  |
| 7. What are IEPM’s impacts on the social-emotional, behavioral, language, and early reading skills of children with disabilities and of children without disabilities? |  |

The efficacy study builds on, and is justified by, a survey data collection (OMB 1850-0916, approved March 26, 2015) and a systematic review conducted earlier in EPSEP. The surveys were administered during the 2014–2015 school year to preschool special education coordinators in the 50 states and the District of Columbia and in a nationally representative sample of 1,200 districts. Survey findings indicated that, although many school districts have inclusive preschool classrooms in their public schools, few use curricula and interventions that integrate targeted instructional supports for children with disabilities. The systematic review identified few evidence-based curricula and interventions that feature both instructional content and targeted instructional supports.

The efficacy study is the next step in the EPSEP feasibility assessment. It will test the implementation and impacts of a program that integrates instructional content and targeted instructional supports.

The efficacy study will be completed in three years. Table A.3 shows the schedule of data collection activities and the overall evaluation timeline.

Table A.3. Schedule of major study activities

| Activity | Summer 2019 | Fall 2019 | Spring 2020 | Summer 2020 | Fall 2020 | Spring 2021 | Summer 2021 | Fall 2022 |
|---|---|---|---|---|---|---|---|---|
| Sampling, consent, and contextual information | | | | | | | | |
| School and teacher participation forms | X | | | | | | | |
| Class roster form | | X | | | X | | | |
| Parent consent forms | | X | | | X | | | |
| Administrative records from districts | | | | X | | | X | |
| Implementation | | | | | | | | |
| Training fidelity observations for workshops on IEPM | X | X | | X | | | | |
| Intervention fidelity observations and interviews | | X | X | | X | X | | |
| Coach interviews | | | X | | | X | | |
| Provider’s coaching logs and records | | X | X | | X | X | | |
| Teacher focus groups | | | X | | | X | | |
| Teacher background and experiences survey | | | X | | | X | | |
| Classroom quality | | | | | | | | |
| Classroom inclusion and engagement observations and interviews | | X | X | | X | X | | |
| Child outcomes | | | | | | | | |
| Child observations | | | X | | | X | | |
| Teacher-child reports | | X | X | | X | X | | |
| Child assessment | | | X | | | X | | |
| Study findings and next steps | | | | | | | | |
| Report on study findings | | | | | | | | X |
| IES decision whether to conduct large-scale study | | | | | | | | X |

A3. Use of technology to reduce burden

The data collection plan is designed to obtain information in an efficient way that minimizes respondent burden. Data collection will use information technologies to reduce the burden on study participants. For example:

Extant data. When feasible, we will gather information from existing online sources. For example, we will obtain information on school calendars from copies published online.

Electronic submission. We will ask districts to provide electronic copies of student records. We will specify the required data elements; however, to reduce burden on the district, we will accept any format in which the data are provided. To help ensure study participants’ confidentiality, we will use a site that allows data to be uploaded securely. We will store data in a secure folder with access limited to the study team.

Support for respondents. The study team will designate a site liaison who will be the main point of contact for district staff. Site liaisons will be accessible by telephone and email to field inquiries from district staff. In addition, the study will have a toll-free number and email address.

We will not include a web-based mode for the teacher-child reports or the teacher background and experiences survey. In prior studies of the IEPM components, high response rates were obtained by giving teachers hard-copy packets to complete sequentially, in stages. Because we will provide the teacher background and experiences survey to teachers during an on-site visit, a web-based mode is not necessary.

A4. Efforts to avoid duplication

IEPM integrates three existing interventions that have not been combined before or tested together. Therefore, no other evaluations of IEPM are being conducted, and there is no other source for the information to be collected. Moreover, the data collection plan reflects careful attention to the potential sources of information for this study, particularly to the reliability of the information and the efficiency in gathering it. We will use existing administrative information as much as possible, primarily for constructing the child sample and for data on child characteristics.

Information obtained from the training fidelity observations, provider’s coaching logs and records, coach interviews, teacher focus groups, and teacher background and experiences survey is not available elsewhere. No database includes fidelity data from the TPOT, QPI, and PTR-YC together to study the intervention fidelity of IEPM or a related program, let alone one that also includes the classroom and child outcome data to be collected under this request.

A5. Methods of minimizing burden on small entities

The primary entities for the evaluation are district and school staff. We will minimize burden for all respondents by requesting only the minimum data required to meet evaluation objectives. Burden on respondents will be further minimized through the careful specification of information needs. We will also keep our data collection instruments short and focused on the data of most interest.

A6. Consequences of not collecting data

The data collection plan described in this submission is necessary for IES to meet its objective of testing the implementation of IEPM through a rigorous efficacy study. Collecting these data will allow us to (a) describe implementation experiences, particularly whether IEPM’s components can be implemented together with fidelity, and (b) estimate the impact of IEPM on classroom and child outcomes.

Here, we outline the consequences of not collecting specific data:

Without the provider’s coaching logs, coach interviews, and teacher focus groups, we would not have data to understand the coaching that teachers received, perspectives on implementation successes and challenges, and opportunity costs associated with IEPM.

Without the teacher background and experiences survey, we would not have the data needed to compare teachers in the treatment and control groups in terms of the types of professional development they are receiving, their perspectives on teaching preschool children in inclusive classrooms, and how they use data to make decisions.

Without the child observations, teacher-child reports, and child assessment, we would not be able to measure the impact of IEPM on critical child outcomes. These constructs include children’s social-emotional skills, problem behaviors, language pragmatics, and early reading skills.

Without the district administrative records, we would not have data to construct or fully describe the study sample. We also would not have data on suspensions and expulsions for the impact analysis.

A7. Special circumstances

There are no special circumstances associated with this data collection.

A8. Federal Register announcement and consultation

a. Federal Register announcement

The 60-day Federal Register notice was published on January 29, 2019 (volume 84, no. 19, page 454). One comment was received in response to the 30-day Federal Register notice, but no changes were made because the comment concerned the intervention being tested by the evaluation rather than the information collection instruments.

b. Consultation outside the agency

In selecting IEPM and the implementation support provider, the study team and IES have sought input from several people with expertise in early childhood special education. In addition, with IES, we formed a technical working group (TWG) to provide input on the design and data collection plans for the efficacy study. Table A.4 lists the experts who have provided consultation on the efficacy study.

Table A.4. Experts who consulted during the study design

| Name | Affiliation |
|---|---|
| Consultation on selection of program and provider | |
| Margaret Burchinal | Frank Porter Graham Child Development Institute, University of North Carolina at Chapel Hill |
| Ed Feil | Oregon Research Institute |
| Laura Justice | College of Education and Human Ecology, Ohio State University |
| TWG members | |
| Karen Bierman | Department of Psychology, Pennsylvania State University |
| Geoffrey Borman | Department of Educational Policy Studies, University of Wisconsin-Madison |
| Judy Carta | School of Education and Juniper Gardens Children’s Project, University of Kansas |
| Bridget Hamre | Curry School of Education and Human Development, University of Virginia |
| Annemarie Hindman | College of Education, Temple University |
| Laura Justice | College of Education and Human Ecology, Ohio State University |
| Scott McConnell | Department of Educational Psychology, University of Minnesota |
| Carol Trivette | Department of Early Childhood Education, East Tennessee State University |

c. Unresolved issues

There are no unresolved issues.

A9. Payments or gifts

To maximize the success of our data collection effort, we have proposed incentives for teachers and children participating in the study. Because high response rates are needed to make the study findings reliable, we propose incentives to teachers to compensate them for their time and effort completing the data collection activities. We also propose a small gift to offer each child after completing the assessment. The proposed amounts are within the incentive guidelines outlined in the March 22, 2005, memo, “Guidelines for Incentives for NCEE Evaluation Studies,” prepared for OMB.

Teacher incentive for collecting parent consent forms. We propose to provide teachers with an incentive for collecting parent consent forms that allow us to collect data from and about their child. Teachers (one per classroom) will receive $25 for distributing and collecting parent consent forms. Because it will be critical for the study to obtain parental permission for as many children in study classrooms as possible, we will offer teachers an additional $25 for collecting parent consent forms for at least 85 percent of their students. This represents a maximum of $50 for any one teacher (roughly $2.75 per form, which is less than the NCEE guideline of $3 per low-burden student report). We expect teachers will have to remind children and call or email parents to obtain 85 percent of the forms. Our goal is to include as many children in the classroom as possible in the data collection so that we can accurately evaluate children’s behavior and practices in the classroom. Field staff will collect the consent forms from the teachers. We have used this tiered incentive on multiple studies, including the Impact Study of Feedback for Teachers Based on Classroom Videos, in which 70 percent of the teachers obtained consent from 85 percent or more of the students in their classrooms.
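The tiered consent incentive reduces to a simple rule. The Python sketch below is illustrative only; it assumes that the $25 base amount goes to every participating teacher who distributes and collects forms, and that the 85 percent threshold is computed as consent forms collected divided by students enrolled in the classroom. The function and constant names are hypothetical.

```python
"""Illustrative sketch of the tiered parent-consent incentive (hypothetical names)."""

BASE_PAYMENT = 25         # dollars for distributing and collecting consent forms
BONUS_PAYMENT = 25        # additional dollars for reaching the threshold
BONUS_THRESHOLD_PCT = 85  # percent of students with a collected consent form


def consent_incentive(forms_collected: int, students: int) -> int:
    """Return one teacher's incentive in dollars (one teacher per classroom).

    Assumes the threshold is forms collected / students enrolled; the
    comparison uses integer arithmetic so exactly 85 percent qualifies.
    """
    if students <= 0:
        raise ValueError("students must be a positive count")
    if not 0 <= forms_collected <= students:
        raise ValueError("forms_collected must be between 0 and students")
    meets_threshold = forms_collected * 100 >= BONUS_THRESHOLD_PCT * students
    return BASE_PAYMENT + (BONUS_PAYMENT if meets_threshold else 0)


if __name__ == "__main__":
    print(consent_incentive(17, 20))  # 85 percent -> 50
    print(consent_incentive(16, 20))  # 80 percent -> 25
```

Because the bonus is all-or-nothing, no teacher can receive more than $50 under this rule.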

Teacher respondent payment. We propose to offer a $25 classroom-level incentive in the form of materials and/or books. This is a thank you for allowing field staff to conduct several days (up to 5) of observations and/or child assessments twice a year, which will result in some disruption to the normal flow of the school day for a week. We also propose to give teachers $20 for each teacher-child report they complete. It will take 25 minutes, on average, to complete each teacher-child report. We will ask the study teachers to complete a teacher-child report for each study child in their classroom in fall 2019, spring 2020, fall 2020, and spring 2021 (to limit burden on any one teacher, this responsibility will be divided between the two study teachers in each class). These reports will provide data on the child’s functional abilities, social-emotional skills, problem behaviors, and language pragmatics skills. Given the length and number of teacher-child reports to be completed twice a year, we consider this a high-burden activity and consider the combination of classroom-level and teacher-level incentives critical to achieving the necessary response rates.

Teacher focus group payments. Teachers receiving the IEPM program will be invited to participate in a 90-minute focus group to share their opinions on the training and coaching received. Those who participate in the focus group will receive $50. Focus groups will include approximately 10 teachers per group, with discussions taking place in each district in the spring of both years.

Child assessment payments. Children who complete the child assessment in the spring will receive a book valued at $7 as a thank you for participating in this activity. Children will receive different books in spring 2020 and spring 2021.

A10. Assurances of confidentiality

Mathematica and its research partners will conduct all data collection activities for this study in accordance with relevant regulations and requirements, which are:

The Privacy Act of 1974, P.L. 93-579 (5 U.S.C. 552a)

The Family Educational Rights and Privacy Act (FERPA) (20 U.S.C. 1232g; 34 CFR Part 99)

The Protection of Pupil Rights Amendment (PPRA) (20 U.S.C. 1232h; 34 CFR Part 98)

The Education Sciences Reform Act of 2002, Title I, Part E, Section 183

The research team will protect the confidentiality of all data collected for the study and will use it for research purposes only. The Mathematica project director will ensure that all individually identifiable information about respondents remains confidential. All data will be kept in secured locations and identifiers will be destroyed as soon as they are no longer required. All members of the study team having access to the data will be trained and certified on the importance of confidentiality and data security. When reporting the results, data will be presented only in aggregate form, such that individuals, schools, and districts are not identified. Included in all voluntary requests for data will be the following statement under the Notice of Confidentiality:

“Information collected for this study comes under the confidentiality and data protection requirements of the Institute of Education Sciences (The Education Sciences Reform Act of 2002, Title I, Part E, Section 183). Responses to this data collection will be used only for statistical purposes. The reports prepared for the study will summarize findings across the sample and will not associate responses with a specific district or individual. We will not provide information that identifies you or your district to anyone outside the study team, except as required by law.”

The following safeguards are routinely employed by Mathematica to carry out confidentiality assurances, and they will be consistently applied to this study:

All Mathematica employees sign a confidentiality pledge (Appendix I) that emphasizes the importance of confidentiality and describes employees’ obligations to maintain it.

Personally identifiable information (PII) is maintained on separate forms and files, which are linked only by sample identification numbers.

Access to hard copy documents is strictly limited. Documents are stored in locked files and cabinets. Discarded materials are shredded.

Access to computer data files is protected by secure usernames and passwords, which are only available to specific users.

Sensitive data is encrypted and stored on removable storage devices that are kept physically secure when not in use.

Mathematica’s standard for maintaining confidentiality includes training staff regarding the meaning of confidentiality, particularly as it relates to handling requests for information, and providing assurance to respondents about the protection of their responses. It also includes built-in safeguards concerning status monitoring and receipt control systems.

Data will be stored both electronically and on paper. Records will be retrievable by ID and will be maintained and disposed of in accordance with the Department’s Records Disposition requirements. The electronic file will be kept on a password-protected server. The paper copy will be kept in a locked file cabinet, and all access to data in both electronic and paper form will be restricted to study staff on a need-to-know basis.
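The safeguard of keeping personally identifiable information on separate files linked only by sample identification numbers can be sketched as follows. This Python example is an illustration under stated assumptions, not Mathematica’s actual system: the field names (`name`, `birth_date`, `district_student_id`), the use of random hexadecimal study IDs, and the `split_records` and `write_csv` helpers are all hypothetical.

```python
"""Hypothetical sketch: split records into a PII file and an analytic file
that share only a randomly assigned study ID."""
import csv
import secrets
from pathlib import Path

# Hypothetical identifying fields; a real data specification would differ.
PII_FIELDS = ("name", "birth_date", "district_student_id")


def new_study_id(used: set) -> str:
    """Draw a random 8-character hex ID that has not been assigned yet."""
    while True:
        study_id = secrets.token_hex(4)
        if study_id not in used:
            used.add(study_id)
            return study_id


def split_records(records):
    """Return (pii_rows, analytic_rows); analytic rows carry no PII fields."""
    used, pii_rows, analytic_rows = set(), [], []
    for record in records:
        study_id = new_study_id(used)
        pii_rows.append({"study_id": study_id, **{f: record[f] for f in PII_FIELDS}})
        analytic_rows.append(
            {"study_id": study_id,
             **{k: v for k, v in record.items() if k not in PII_FIELDS}}
        )
    return pii_rows, analytic_rows


def write_csv(path: Path, rows) -> None:
    """Write rows to a CSV file; the PII file would be kept under restricted access."""
    with path.open("w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
```

Random IDs are used here so that the linking number reveals nothing about sample order; sequential IDs would work equally well for the linkage itself.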

A11. Justification for sensitive questions

No questions of a sensitive nature will be included in this study.

A12. Estimates of hours burden

Table A.5 provides an estimate of time burden for the data collections, broken down by instrument and respondent. These estimates are based on our experience collecting administrative data from districts and obtaining parent permission. The estimates for administering surveys to teachers and items in the teacher participation forms are based on pre-test findings.

The number of targeted respondents is 1,739, and the expected number of respondents is 1,471. The total burden is estimated at 1,398 hours, or an average annual burden of 466 hours calculated across three years.

The total of 1,398 hours includes the following: (1) up to 8 hours annually for two years for each of the three districts to assemble student records data for students participating in the evaluation; (2) up to 26 hours annually for two years for class rosters provided by schools (1 hour for each of 26 schools); (3) 90 minutes for each of 10 teachers per district (in the treatment schools) to participate in a focus group in the spring of both years; (4) 10 minutes annually for two rounds of the teacher background and experiences survey (95 percent of the anticipated sample of 80 teachers in both years); (5) 25 minutes for teacher interviews that are part of the intervention fidelity observations each fall and spring; (6) 20 minutes for teacher interviews that are part of the classroom inclusion and engagement observations twice a year for two years; (7) 25 minutes for teachers to complete each teacher-child report, for up to 14 teacher-child reports each fall and 13 teacher-child reports each spring; and (8) 10 minutes for each of 590 parents each year to complete a consent form.

Table A.5. Estimated response time for data collection

| Respondent/data request | Number of targeted respondents | Expected response rate (percentage) | Expected number of respondents | Unit response time (hours) | Responses per respondent per year | Years of responses | Total burden time (hours) | Response time averaged across three years (hours) |
|---|---|---|---|---|---|---|---|---|
| Districts | | | | | | | | |
| Student records data (one time per year for two years) | 3 | 100 | 3 | 8.0 | 1 | 2 | 48 | 16.0 |
| Schools | | | | | | | | |
| Class roster form (one time per year for two years) | 26 | 100 | 26 | 1.0 | 1 | 2 | 52 | 17.3 |
| Teachers | | | | | | | | |
| Teacher focus groups (one per district in spring 2020 and spring 2021) | 30 | 100 | 30 | 1.5 | 1 | 2 | 90 | 30.0 |
| Teacher background and experiences survey (one per teacher in spring 2020 and spring 2021) | 80 | 95 | 76 | 0.17 | 1 | 2 | 26 | 8.6 |
| Interviews that are part of intervention fidelity observations (fall 2019, spring 2020, fall 2020, and spring 2021) | 40 | 100 | 40 | 0.42 | 2 | 2 | 67 | 22.3 |
| Interviews that are part of classroom inclusion and engagement observations (fall and spring each year) | 40 | 100 | 40 | 0.33 | 2 | 2 | 53 | 17.6 |
| Teacher-child report (fall and spring each year; consented children divided between both classroom teachers) | 80 | 95 | 76 | 0.42 | 13.5 | 2 | 862 | 287.3 |
| Parents | | | | | | | | |
| Parent consent form (year 1) | 720 | 82 | 590 | 0.17 | 1 | 1 | 100 | 33.4 |
| Parent consent form (year 2) | 720 | 82 | 590 | 0.17 | 1 | 1 | 100 | 33.4 |
| Total (rounded) | 1,739 | | 1,471 | | | | 1,398 | 466 |
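The columns of Table A.5 are related by simple arithmetic: expected respondents equal targeted respondents times the expected response rate; total burden equals expected respondents times unit response time times responses per respondent per year times years of responses; and the final column divides total burden by three study years. The Python sketch below recomputes the summary figures from the row inputs as a consistency check. Row labels are shortened, the function names are illustrative, and it assumes row totals are summed before rounding, which is one plausible reading of "Total (rounded)".

```python
"""Sketch: recompute the summary figures in Table A.5 from its row inputs."""

# Each row: (data request, targeted respondents, expected response rate in
# percent, unit response time in hours, responses per respondent per year,
# years of responses). Values are copied from Table A.5; labels are shortened.
ROWS = [
    ("Student records data", 3, 100, 8.0, 1, 2),
    ("Class roster form", 26, 100, 1.0, 1, 2),
    ("Teacher focus groups", 30, 100, 1.5, 1, 2),
    ("Teacher background survey", 80, 95, 0.17, 1, 2),
    ("Intervention fidelity interviews", 40, 100, 0.42, 2, 2),
    ("Inclusion and engagement interviews", 40, 100, 0.33, 2, 2),
    ("Teacher-child report", 80, 95, 0.42, 13.5, 2),
    ("Parent consent form (year 1)", 720, 82, 0.17, 1, 1),
    ("Parent consent form (year 2)", 720, 82, 0.17, 1, 1),
]

STUDY_YEARS = 3  # the last column averages burden across three years


def expected_respondents(targeted, rate_pct):
    """Targeted respondents times the expected response rate, rounded."""
    return round(targeted * rate_pct / 100)


def burden_hours(targeted, rate_pct, unit_hours, per_year, years):
    """Respondents x unit time x responses per respondent per year x years."""
    return expected_respondents(targeted, rate_pct) * unit_hours * per_year * years


def summarize(rows):
    """Return (targeted, expected respondents, total hours, average annual hours)."""
    targeted = sum(row[1] for row in rows)
    respondents = sum(expected_respondents(row[1], row[2]) for row in rows)
    total = sum(burden_hours(*row[1:]) for row in rows)  # unrounded row totals
    return targeted, respondents, round(total), round(total / STUDY_YEARS)


if __name__ == "__main__":
    for label, *inputs in ROWS:
        print(f"{label:36s} {burden_hours(*inputs):8.2f}")
    print(summarize(ROWS))  # (1739, 1471, 1398, 466)
```

Summing the rounded row totals (48 + 52 + 90 + 26 + 67 + 53 + 862 + 100 + 100) gives the same 1,398 hours, so the two rounding conventions agree for this table.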

A13. Estimate of cost burden to respondents

There are no costs to respondents associated with this study.

A14. Annualized cost to the federal government

The total cost to the federal government for this data collection (the current request) is $6,705,860. This includes costs of recruiting districts, designing and administering all data collection instruments, processing and analyzing the data, and preparing a report. The estimated average annual cost is $1,676,465 (the total cost divided by the four years of the study).

A15. Reasons for program changes or adjustments

Although the burden hours are lower than in the previous collection, this request is reported as a program change because it is a reinstatement.

A16. Plans for tabulation and publication of results

The efficacy study will assess whether IEPM can be implemented with fidelity and estimate its impact on classroom and child outcomes. The contractor will use the data collected to prepare a report that clearly describes how the data address the key study questions, highlights key findings of interest to policymakers and educators, and includes charts and tables to illustrate key findings. The report will be written in a manner suitable for distribution to a broad audience of policymakers and educators and will be accompanied by a one-page and a four-page summary brief. We anticipate that ED will clear and release this report by fall 2022. The final report and briefs will be made publicly available on both the ED website and on the Mathematica website.

A17. Approval not to display the expiration date for OMB approval

All instruments will display the expiration date for OMB approval.

A18. Explanation of exceptions

No exceptions to the certification statement are requested or required.

REFERENCES

Buysse, V., and D.B. Bailey. “Developmental and Behavioral Outcomes in Young Children with Disabilities in Integrated and Segregated Settings: A Review of Comparative Studies.” Journal of Special Education, vol. 26, no. 4, 1993, pp. 434–461.

Cross, A.F., E.K. Traub, L. Hutter-Pishgahi, and G. Shelton. “Elements of Successful Inclusion for Children with Significant Disabilities.” Topics in Early Childhood Special Education, vol. 24, no. 3, 2004, pp. 169–183.

Diamond, K.E., and H.H. Huang. “Preschoolers’ Ideas About Disabilities.” Infants and Young Children, vol. 18, no. 1, 2005, pp. 37–46.

Division for Early Childhood. “DEC Recommended Practices in Early Intervention/Early Childhood Special Education.” Arlington, VA: DEC, 2014. Available at www.dec-sped.org/recommendedpractices. Accessed September 14, 2018.

Doksum, K. “Empirical Probability Plots and Statistical Inference for Nonlinear Models in the Two-Sample Case.” The Annals of Statistics, vol. 2, 1974, pp. 267–277.

Dunlap, G., P.S. Strain, J. Lee, J.D. Joseph, and N. Leech. “A Randomized Controlled Evaluation of Prevent-Teach-Reinforce for Young Children.” Topics in Early Childhood Special Education, vol. 37, 2018, pp. 195–205.

Firpo, S. “Efficient Semiparametric Estimation of Quantile Treatment Effects.” Econometrica, vol. 75, no. 1, 2007, pp. 259–276.

Fox, L., G. Dunlap, M.L. Hemmeter, G.E. Joseph, and P.S. Strain. “The Teaching Pyramid: A Model for Supporting Social Competence and Preventing Challenging Behavior in Young Children.” Young Children, vol. 58, 2003, pp. 48–52.

Green, K., N. Terry, and P. Gallagher. “Progress in Language and Literacy Skills Among Children with Disabilities in Inclusive Early Reading First Classrooms.” Topics in Early Childhood Special Education, vol. 33, no. 4, 2014, pp. 249–259.

Hemmeter, M.L., M. Ostrosky, and L. Fox. “Social and Emotional Foundations for Early Learning: A Conceptual Model for Intervention.” School Psychology Review, vol. 35, 2006, pp. 583–601.

Hemmeter, M.L., P.A. Snyder, L. Fox, and J. Algina. “Evaluating the Implementation of the Pyramid Model for Promoting Social-Emotional Competence in Early Childhood Classrooms.” Topics in Early Childhood Special Education, vol. 36, no. 3, 2016, pp. 133–146.

Horn, E., and R. Banerjee. “Understanding Curriculum Modifications and Embedded Learning Opportunities in the Context of Supporting All Children’s Success.” Language, Speech, and Hearing Services in Schools, vol. 40, 2009, pp. 406–415.

Koenker, R., and G. Bassett. “Regression Quantiles.” Econometrica, vol. 46, no. 1, 1978, pp. 33–50.

Lehmann, E. Nonparametrics: Statistical Methods Based on Ranks. San Francisco: Holden-Day, 1974.

Odom, S.L., J. Vitztum, R. Worley, J. Lieber, S. Sandall, M.J. Hanson, P. Beckman, I.S. Schwartz, and E. Horn. “Preschool Inclusion in the United States: A Review of Research from an Ecological Systems Perspective.” Journal of Research in Special Educational Needs, vol. 4, no. 1, 2004, pp. 17–49.

Schochet, P.Z., M. Puma, and J. Deke. “Understanding Variation in Treatment Effects in Education Impact Evaluations: An Overview of Quantitative Methods.” Methods report submitted to the Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance. Princeton, NJ: Mathematica Policy Research, April 2014.

Snyder, P., M.L. Hemmeter, M. McLean, S. Sandall, T. McLaughlin, and J. Algina. “Effects of Professional Development on Preschool Teachers’ Use of Embedded Instruction Practices.” Exceptional Children, vol. 84, 2018, pp. 213–232. doi.org/10.1177/0014402917735512.

Strain, P.S., and E. Bovey. “Randomized Controlled Trial of the LEAP Model of Early Intervention for Young Children with Autism Spectrum Disorders.” Topics in Early Childhood Special Education, vol. 31, no. 3, 2011, pp. 133–154.

U.S. Department of Health and Human Services and U.S. Department of Education. “Policy Statement on Inclusion of Children with Disabilities in Early Childhood Programs.” Washington, DC: HHS and ED, 2015. Available at https://www2.ed.gov/about/inits/ed/earlylearning/inclusion/index.html. Accessed September 14, 2018.


www.mathematica-mpr.com

Improving public well-being by conducting high quality, objective research and data collection

Princeton, NJ ■ Ann Arbor, MI ■ Cambridge, MA ■ Chicago, IL ■ Oakland, CA ■ Seattle, WA ■ Tucson, AZ ■ Washington, DC ■ Woodlawn, MD

1 We define inclusive classrooms as classrooms in which children with disabilities are educated alongside other children and receive most or all of their special education.

2 IES is not requesting approval for the collection of data that the study team will collect and that will not impose any burden on teachers or district staff. Examples include coaching logs, coach interviews, and observations.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Stephen Lipscomb |

| File Modified | 0000-00-00 |

| File Created | 2021-01-15 |
