PMAPS- PM Supp Stat A_022817_clean_UPDATED_072919_2


Collection of Performance Measures - Personal Responsibility Education Program (PREP)

OMB: 0970-0497

U.S. Department of Health and Human Services, Office of Planning, Research, and Evaluation, and Family and Youth Services Bureau, Administration for Children and Families, Switzer Building, 4th floor, 330 C Street, SW, Washington, DC 20201. Project Officer: Caryn Blitz


Part A: Justification for the Collection of Performance Measures - Personal Responsibility Education Program July 2019


Part A Introduction

A.1. Circumstances Making the Collection of Information Necessary

1. Legal or Administrative Requirements that Necessitate the Collection

A.2. Purpose and Use of the Information Collection

A.3. Use of Information Technology to Reduce Burden

A.4. Efforts to Identify Duplication and Use of Similar Information

A.5. Impact on Small Businesses

A.6. Consequences of Not Collecting the Information/Collecting Less Frequently

A.7. Special Circumstances

A.8. Federal Register Notice and Consultation Outside the Agency

A.9. Incentives for Respondents

A.10. Assurance of Privacy

A.11. Justification for Sensitive Questions

A.12. Estimates of the Burden of Data Collection

1. Annual Performance Measures Burden for Youth Participants

2. Annual Performance Measures Burden for Grantees and Sub-Awardees

3. Core Measures for Local Impact Evaluations

4. Total Annual Burden and Cost Estimates

A.13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

A.14. Annualized Cost to Federal Government

A.15. Explanation for Program Changes or Adjustments

A.16. Plans for Tabulation and Publication and Project Time Schedule

1. Analysis Plan

2. Time Schedule and Publications

A.17. Reason(s) Display of OMB Expiration Date is Inappropriate

A.18. Exceptions to Certification for Paperwork Reduction Act Submissions

SUPPORTING REFERENCES FOR INCLUSION OF SENSITIVE QUESTIONS OR GROUPS OF QUESTIONS

TABLES

A2.1. Collection Frequency for PREP Performance Measures Data

A2.2. Collection Frequency for PREIS Core Measures

A11.1. Summary of Sensitive Questions to Be Included on the Participant Entry and Exit Surveys, the Core Measures for PREIS Grantees' Local Evaluations, and Their Justification

A12.1. Annual Performance Measures Burden for Youth Participants

A12.2. Calculations of Burden for Grantees and Sub-awardees

A12.3. Core Measures for Local Impact Evaluations

A12.4. Total Annual Burden and Cost Estimates

INSTRUMENTS

INSTRUMENT #1 – PARTICIPANT ENTRY SURVEY

INSTRUMENT #2 – PARTICIPANT EXIT SURVEY

INSTRUMENT #3 – PERFORMANCE REPORTING SYSTEM DATA ENTRY FORM

INSTRUMENT #4 – SUBAWARDEE DATA COLLECTION AND REPORTING FORM

INSTRUMENT #5 – CORE MEASURES FOR PREIS GRANTEES’ LOCAL EVALUATIONS

ATTACHMENTS

ATTACHMENT A: 60-DAY FEDERAL REGISTER NOTICE

ATTACHMENT B: CONSENT FORMS

The Administration for Children and Families (ACF) Office of Planning, Research, and Evaluation (OPRE) seeks approval to collect a revised set of performance measures, previously approved under OMB Control #0970-0398, for the Personal Responsibility Education Program (PREP).

In March 2010, Congress authorized PREP as part of the Patient Protection and Affordable Care Act (ACA); it was reauthorized in 2015 for an additional two years of funding through the Medicare Access and CHIP Reauthorization Act of 2015. PREP provides grants to states, tribes and tribal communities, and community organizations to support evidence-based programs to reduce teen pregnancy and sexually transmitted infections (STIs). The programs are required to provide education on both abstinence and contraceptive use. The programs also offer information on adulthood preparation subjects such as healthy relationships, adolescent development, financial literacy, parent–child communication, education and employment skills, and healthy life skills. Grantees are encouraged to target their programming to high-risk populations, such as youth in foster care, homeless youth, youth with HIV/AIDS, pregnant youth who are under 21 years of age, mothers who are under 21 years of age, and youth residing in geographic areas with high teen birth rates.

Per legislation, there are four PREP programs:

States and other entities can access allotted PREP funding through a formula grants program, known as State PREP, or SPREP.

If a state does not access PREP funding, competitive grants are available for programs in that particular state: the grants are known together as Competitive PREP, or CPREP.

Grants to tribes and tribal communities are made through a competitive process: the grants are known together as Tribal PREP, or TPREP.

Funding is also available for new and innovative approaches to teen pregnancy prevention: the grants are known together as Personal Responsibility Education – Innovative Strategies, or PREIS. These grantees are also conducting grantee-specific evaluations, called local evaluations. These evaluations are conducted by independent evaluators, called local evaluators, selected by grantees.

ACF is seeking approval to collect performance measures across all PREP programming (i.e., SPREP, CPREP, TPREP, and PREIS) and to collect a small set of additional “core measures” for local evaluations for PREIS grantees.1 This approval will support PREP-funded programs in collecting and reporting their performance data through the PREP data warehouse. We also plan to develop streamlined data analysis and reporting through a Performance Measures Dashboard to provide grantees and federal program staff with near real-time data for program monitoring and improvement. Both the warehouse and Dashboard will be housed by the contractor.

Performance measures. We plan to collect data via a revised set of instruments used previously (OMB Control #0970-0398) for the collection of PREP performance measures:

Instrument #1: Participant entry survey

Instrument #2: Participant exit survey

Instrument #3: Performance Reporting System Data Entry Form

Instrument #4: Subawardee Data Collection and Reporting Form

The collection and analysis of PREP performance measures (PM) play a unique role in the mix of current federal evaluation efforts to expand the evidence base on teen pregnancy prevention programs. The objective of the PREP PM effort is to document how PREP-funded programs are operationalized in the field and assess program outcomes. The PM effort will provide information on large-scale (national) replication of evidence-based programs, with particular emphasis on (1) lessons learned from replication among high-risk populations in new settings, such as youth in foster care group homes, in the juvenile justice system, or living on tribal lands; (2) lessons learned from how states, tribes, and localities implement evidence-based programs most appropriate for their local contexts; and (3) adaptations made to support the unique PREP requirements, such as the inclusion of adulthood preparation subjects.

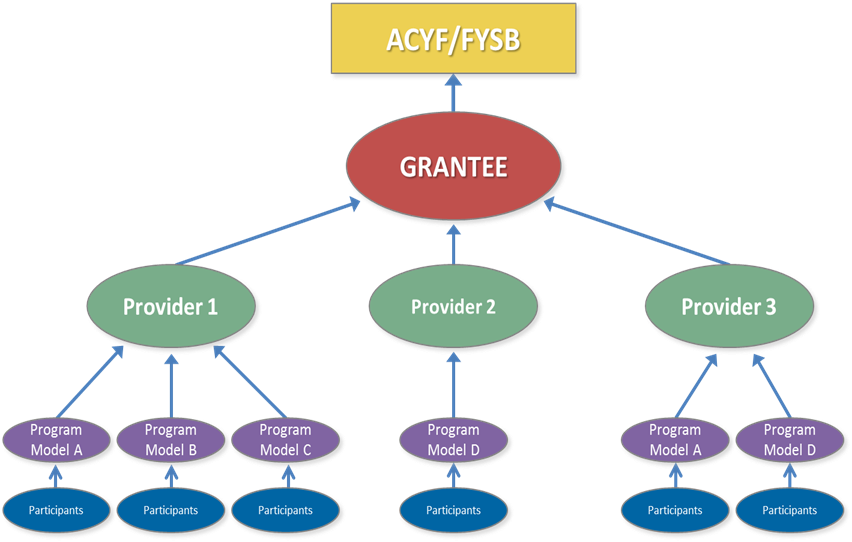

The plan for collecting and reporting the performance measures data reflects the multiple layers that states, tribes and tribal communities, and community organizations are using to support program delivery. For example, some grantees may directly implement the programs. In other arrangements, grantees may deliver programs through other providers (e.g., sub-awardees). Ultimately, the grantees will be responsible for ensuring that all performance measures are reported to ACF. The data that the grantees report to ACF will originate from three levels – the grantee, grantees’ sub-awardees, and the youth completing entry and exit surveys. For some performance measures, grantees will provide data about activities or decisions that they undertake directly at the grantee level. For other measures, data will come from the sub-awardees to the grantee because sub-awardees oversee the activities to be documented. In addition, some data will come from the youth themselves, who will be asked to complete entry and exit surveys. The efforts expected to be undertaken at each level and the estimated level of burden are further explained in Section A.12.

Figure 1: Levels of PREP Performance Measures Data

The performance measures data will be reported by grantees to the PREP PM data warehouse. Mathematica Policy Research will maintain the warehouse and make adjustments or updates as needed.

Core measures for PREIS grantees’ local evaluations. This request also includes another instrument:

Instrument #5: Core measures for PREIS grantees’ local evaluations

All PREIS grantees are expected to conduct grantee-specific impact evaluations. The impact evaluations are led by independent evaluators, called local evaluators, selected by the grantee. The objective of the PREIS local evaluations is to assess the impacts of PREIS grants. Since PREIS grantees are implementing innovative teen pregnancy prevention strategies, the local impact evaluations will help ACF determine the effectiveness of innovative strategies in improving key outcomes related to teen pregnancy, STIs, and associated sexual risk behaviors.

For their local evaluations, PREIS grantees will be expected to collect a small set of core measures at three time points: post-program, short-term follow-up and long-term follow-up. These measures will already be collected at baseline through Instrument #1 (the Participant Entry Survey). The collection of a common set of core measures will allow grantees to assess the effectiveness of their program on a set of key measures.

PREIS grantees will work closely with their selected local evaluation teams to develop strong evaluation plans in order to implement a rigorous impact evaluation. ACF will work closely with each grantee/local evaluator pair to ensure that local evaluations are conducted in such a way that they meet the standards of HHS’ Teen Pregnancy Prevention Evidence Review. Specifically, ACF will ensure that grants are implemented in a way that is amenable to impact evaluation (e.g., randomized controlled trial or high-quality quasi-experimental design), and that evaluations have a strong treatment and control group counterfactual with sufficient sample to detect impacts.

Instrument #5, core measures for PREIS grantees’ local evaluations, includes outcome measures specific to HHS’ Teen Pregnancy Prevention Evidence Review. Approval of Instrument #5 will ensure that all PREIS local evaluations collect the same core measures, so that there is uniformity in reporting and, ultimately, information to determine whether PREIS grantees demonstrate evidence of effectiveness that meets HHS’ Teen Pregnancy Prevention Evidence Review standards.

PREIS grantees, in concert with their local evaluators, will be responsible for all data collection efforts and analyses. PREIS grantees will work with the evaluation team to ensure that data are collected systematically from all participants. Additionally, PREIS grantees will work with the evaluation team to ensure that they have a strong analysis plan to support scientifically rigorous findings. ACF will provide training and technical assistance throughout the entire evaluation period, from the planning process through data analysis.

A.1. Circumstances Making the Collection of Information Necessary

Legal or Administrative Requirements that Necessitate the Collection

On March 23, 2010, the President signed into law the Patient Protection and Affordable Care Act (ACA), H.R. 3590 (Public Law 111-148, Section 2953). In addition to its other requirements, the act amended Title V of the Social Security Act (42 U.S.C. 701 et seq.) to include $55.25 million in formula grants to states to “replicate evidence-based effective program models or substantially incorporate elements of effective programs that have been proven on the basis of scientific research to change behavior, which means delaying sexual activity, increasing condom or contraceptive use for sexually active youth, or reducing pregnancy among youth.” Beyond the $55.25 million for the State PREP program, the PREP legislation also established a $10 million PREP Innovative Strategies (PREIS) program and a $3.25 million Tribal program.

Section 215 of the Medicare Access and CHIP Reauthorization Act of 2015 extends funding through FY2017 for PREP formula grants to states. The legislation mandates that the Secretary evaluate the programs and activities carried out with funds made available through PREP. To meet this requirement, the Family and Youth Services Bureau (FYSB) and OPRE within ACF contracted with Mathematica Policy Research and its subcontractors to collect performance measures and conduct a local evaluation of PREIS grantees.

The collection of performance measures supports compliance with the GPRA Modernization Act of 2010 (Public Law 111-352).

A.2. Purpose and Use of the Information Collection

Performance measures (PM). The purpose of measuring performance is to track inputs, outputs and outcomes over time to provide information on how all PREP grantees and their programs are performing. Through the PM, grantees will be required to submit data twice a year on PREP program structure and PREP program delivery.

PREP program structure refers to how grant funds are being used, the program models selected, the ways in which grantees and sub-awardees support program implementation, and the characteristics of the youth served.

PREP program delivery refers to the extent to which the intended program dosage was delivered, youths’ attendance, youths’ perceptions of program effectiveness and their experiences in the programs, and challenges experienced implementing the programs.

To understand PREP program structure, grantees will be asked to provide the amount of the grant allocated for various activities, including direct service provision; approach to staffing PREP at the grantee level; grantee provision of training, technical assistance, and program monitoring; number of sub-awardees, their funding, program models, populations, settings, and coverage of adulthood preparation subjects; number of program facilitators, their training on the program model, and the extent to which they are monitored to ensure program quality; and the characteristics of the youth entering the PREP programs. This information will be collected from the grantees (Instrument 3) and their sub-awardees (Instrument 4). Sub-awardees will submit their data to grantees, who will then compile this information and submit it to ACF twice a year (Instrument 3).

To understand PREP program delivery, grantees will be asked to provide the number of completed program hours for each cohort; number of youth who ever attended a PREP program, overall and by subpopulation, such as youth in foster care or the juvenile justice system; youths’ attendance; youths’ perceptions of program effectiveness and program experiences; and challenges providers face implementing their programs. This information will be collected from sub-awardees (Instrument 4) and submitted to ACF by the grantees twice a year (Instrument 3).

The frequency with which performance data will be collected from grantees is summarized in Table A2.1.

Table A2.1. Collection Frequency for PREP Performance Measures Data

| Instrument/Category | Frequency of Collection (a) | Frequency of Reporting to ACF |
|---|---|---|
| #1 Participant Entry Survey | | |
| Demographics; Sexual behaviors and intentions; Pregnancy history; Recent contraceptive use; Associated protective/risk behaviors | Program Entry | Twice a year |
| #2 Participant Exit Survey | | |
| Demographics; Program impacts on sexual intentions, contraceptive use, and protective/risk factors; Participant perceptions of program effects; Participant assessments of program experiences | Program Exit | Twice a year |
| #3 Grantee Performance Reporting System Data Entry Form | Ongoing, as programs are implemented | |
| Total respondent counts of measures in the Entry and Exit surveys | Program Entry and Exit | Twice a year |
| Total respondent counts by measures of attendance, reach, and dosage | At cohort completion | Twice a year |
| Program completion by cohort | At cohort completion | Twice a year |
| Implementation challenges and technical assistance needs | Twice a year | Twice a year |
| Administrative data on program features and structure, cost, and support for program implementation | Twice a year | Twice a year |
| #4 Subawardee Data Collection and Reporting Form | Ongoing, as programs are implemented | |
| Fidelity to evidence-based program models (e.g., intended program delivery hours, target populations, and adulthood preparation topics) | Twice a year | Twice a year |
| Staff perceptions of implementation challenges and technical assistance needs | Twice a year | Twice a year |

(a) “Collection frequency” refers to when grantees, their sub-awardees, and program staff collect the data that will later be compiled and reported to ACF. Grantees will be reporting the data twice a year to ACF in order to inform continuous quality improvement.

A major objective of the performance measures analysis will be to construct, for grantees, ACF, and Congress, a picture of PREP implementation in the form of a basic set of statistics across all grantees. These statistics, for example, will answer questions for the overall PREP program, such as:

What programs were implemented, and for how many youth?

What are the characteristics of the populations served?

To what extent were members of vulnerable populations served?

How many youth participated in most program sessions or activities?

How many entities are involved at the sub-awardee level in delivering PREP programs?

How do grantees allocate their resources?

How do participants feel about the programs, and how do they perceive the programs’ effects on them?

What challenges do grantees and their partners see in implementing PREP programs on a large scale?

ACF will then use the performance measures data to (1) track how grantees are allocating their PREP funds; (2) assess whether PREP objectives are being met (e.g., in terms of the populations served); and (3) help drive PREP programs toward continuous improvement of service delivery. In addition, ACF will use this information to fulfill reporting requirements to Congress and the Office of Management and Budget concerning the PREP initiative. ACF also intends to share grantee and sub-awardee level findings with each grantee to inform their own program improvement efforts.

The Participant Entry Survey (Instrument #1), the Participant Exit Survey (Instrument #2), the Performance Reporting System Data Entry Form (Instrument #3), and the Sub-awardee Data Collection and Reporting Form (Instrument #4) are attached.

Core measures for PREIS grantees’ local evaluations. Data collected with the PREIS core measures will be used to examine youth outcomes and the impacts of the PREIS programs. The core measures data collection will assess sexual risk outcomes, including the extent and nature of sexual activity, use of contraception if sexually active, and pregnancy.

Core measures will be used to address the following research questions on program impact:

Are the approaches effective at reducing adolescent pregnancy?

What are their effects on related outcomes, such as postponing sexual activity and reducing or preventing sexual risk behaviors and STIs?

The frequency with which core measures will be collected is summarized in Table A2.2.

Table A2.2. Collection Frequency for PREIS Core Measures

| Instrument/Category | Frequency of Collection by Grantee | Frequency of Reporting to ACF |
|---|---|---|
| Instrument #5: Core measures for PREIS grantees’ local evaluations | | |
| Teen pregnancy prevention associated risk behaviors and outcomes | Program Exit, Short-term Follow-up, and Long-Term Follow-up | At the end of the grant period |

Instrument #5 will be used to collect core measures for PREIS grantees’ local evaluations.

A.3. Use of Information Technology to Reduce Burden

Performance measures. To comply with the Paperwork Reduction Act of 1995 (Pub. L. 104-13) and to reduce grantee burden, ACF is (1) providing common data element definitions across PREP grantees and program models, (2) collecting these data in a uniform manner through the PREP data warehouse, (3) using the PREP data warehouse to calculate common performance measures across grantees and program models, and (4) developing a Performance Measures Dashboard (Dashboard) that is interoperable with the PREP data warehouse to provide near-real-time data reporting for PREP grantees, FYSB project officers, and other ACF staff. Using the PREP data warehouse reduces reporting burden and minimizes grantee and sub-awardee costs related to implementing the reporting requirements. Implementing the Dashboard reduces data analysis and report production time so that grantees can receive near-real-time data through an interactive data dashboard.

Core measures for PREIS grantees’ local evaluations. ACF will help grantees find methods to reduce burden through information technology.

A.4. Efforts to Identify Duplication and Use of Similar Information

Performance measures. ACF has carefully reviewed the information collection requirements to avoid duplication with existing studies or other ongoing federal teen pregnancy prevention evaluations and believes that this requested data collection complements, rather than duplicates, the existing literature and the other ongoing federal teen pregnancy prevention evaluations and projects.

As background, there are many federal teen pregnancy prevention-related projects currently in the field or in the beginning stages of development.2 Although the information from these federal efforts will increase our understanding of strategies to reduce teenage sexual risk behavior, the focus of the PREP PM effort is different from the foci of the other federal efforts. Specifically, the PREP PM effort provides the following unique opportunities:

Opportunity to learn about using a state formula grant to scale up evidence-based programs. The PREP PM effort will allow us to learn about both the opportunities and the challenges of scaling up evidence-based teen pregnancy prevention programs through both state formula grants (SPREP) and competitive discretionary grants (TPREP, CPREP, and PREIS). It is the only federal evaluation to examine both.

Opportunity to understand the special components of PREP programs. The PREP PM effort will help us to understand the unique components of the programs funded through PREP, such as the adulthood preparation topics, which are being incorporated in the teen pregnancy prevention programming funded through PREP. These components are not part of the other teen pregnancy prevention programs.

Core measures for PREIS grantees’ local evaluations. Likewise, ACF has reviewed other teen pregnancy prevention efforts and has not identified similar efforts underway. The PREIS grantees are responsible for implementing new and innovative approaches to teen pregnancy prevention. By collecting a core set of measures, grantees will help fill gaps in the teen pregnancy prevention field and continue to grow this field.

A.5. Impact on Small Businesses

Performance measures and core measures for PREIS grantees’ local evaluations. Programs in some sites may be operated by community-based organizations. ACF and its contractor teams will provide thorough training and technical assistance throughout the entire data collection effort, from the planning period all the way through data analysis. This training and technical assistance should help to minimize the burden on small businesses.

A.6. Consequences of Not Collecting the Information/Collecting Less Frequently

Performance measures. The Government Performance and Results Act (GPRA) requires federal agencies to report annually on measures of program performance. Therefore, it is essential that grantees report the performance data described in this ICR to ACF. Failure to collect performance measures across all grantees will inhibit ACF from carrying out its reporting requirements to Congress. Further, failure to collect data will inhibit grantees and ACF from reporting to other key stakeholders on PREP program design, implementation, and outcomes.

Core measures for PREIS grantees’ local evaluations. Outcome data are essential to conducting rigorous evaluations required of PREIS grantees supported under PREP legislation. Failure to require a standard set of outcome data will inhibit grantees’ capabilities to submit information that meets the standards of HHS’ Teen Pregnancy Prevention Evidence of Effectiveness. Further, it will hinder ACF’s efforts to look across PREIS programs’ impacts.

A.7. Special Circumstances

There are no special circumstances for the proposed data collection efforts.

A.8. Federal Register Notice and Consultation Outside the Agency

Performance measures and core measures for PREIS grantees’ local evaluations.

In accordance with the Paperwork Reduction Act of 1995 (Pub. L. 104-13) and Office of Management and Budget (OMB) regulations at 5 CFR Part 1320 (60 FR 44978, August 29, 1995), ACF published a notice in the Federal Register announcing the agency’s intention to request an OMB review of this information collection activity. This notice was published on April 11, 2016, Volume 81, Number 69, page 21353, and provided a 60-day period for public comment. A copy of this notice is included as Attachment A. During the notice and comment period, the government received no substantive comments.

To develop the original PREP performance measures (OMB Control # 0970-0398), ACF consulted with staff of Mathematica Policy Research, Child Trends, and RTI International. In reconsidering measures, and in identifying core measures for PREIS grantees’ local evaluations, ACF consulted internal staff and nine tribal grantees for feedback.

A.9. Incentives for Respondents

Performance measures. No incentives are proposed for the PM information collection.

Core measures for PREIS grantees’ local evaluations. ACF does not propose to provide incentives for the PREIS grantees’ local evaluations.

A.10. Assurance of Privacy

Performance measures.

Participant-level data. Participant-level data required for PM reporting will be gathered by grantees and their sub-awardees. Grantees will then enter this information in aggregated form into the PREP data warehouse. Grantees and sub-awardees will be responsible for ensuring the privacy of participant-level data and securing institutional review board (IRB) approvals to collect these items, as necessary. Some grantees may need IRB approval based on their local jurisdiction mandates. Therefore, we are informing grantees that they should determine whether they need IRB approval and follow the proper procedures of their locality. Grantees will be required to inform participants of the measures that are being taken to protect the privacy of their answers.

These data will be reported by grantees only as aggregate counts. There will be no means by which individual responses can be identified by ACF, Mathematica Policy Research, or other end-users of the data.

Grantee-level data. Grantees will enter all data into the PREP data warehouse, which is currently being used for the PREP Multicomponent Evaluation and will be transferred from RTI to Mathematica Policy Research. The PREP data warehouse is designed to ensure the security of the data maintained in it. Electronic data from the PMAPS projects will be stored in a location within the Mathematica Policy Research network that provides the appropriate level of security based on the sensitivity or identifiability of the data. Further, all data reported by grantees related to program participants will be aggregated; no personal identifiers or data on individual participants will be submitted to ACF. Data generated by the warehouse will be in aggregate form only.

Mathematica Policy Research will create and house a Performance Dashboard to provide authorized stakeholders with self-service access to various views of performance indicators that support the management and improvement of PREP programs. The Dashboard application will interface with the data warehouse and allow authorized users to obtain data visualizations of the full suite of PREP performance measures. The Dashboard will display data at the grantee and national levels and will allow users to drill down along dimensions of interest such as funding stream, time, geographic region, curriculum, or adulthood preparation subject. As needed, Mathematica Policy Research will enforce security roles to prohibit grantees from accessing others’ data.

The Dashboard will be interoperable with the PREP data warehouse. It will have a near-real-time interface with the warehouse so it can display the status of data submissions and help monitor agencies’ compliance with reporting requirements. This is in contrast to the current functionality, which requires several days to extract, process, and review data in response to ACF’s requests, after which months are needed to analyze the data, and to write and refine reports.

Consent Forms. Grantees will receive guidance for active or passive consent (see Consent Forms, Attachment B). The following language is also included on the first page of Instrument 1 and Instrument 2:

The purpose of the information collection and how the information is planned to be used to further the proper performance of the functions of the agency;

An estimate of the time to complete the instrument;

That the collection of information is voluntary; and

That responses will be kept private to the extent permitted by law.

The statement: An agency may not conduct or sponsor, and a person is not required to respond to, a collection of information unless it displays a currently valid OMB control number. The OMB number for this information collection is 0970-0497 and the expiration date is 04/30/2020.

Core measures for PREIS grantees’ local evaluations. PREIS grantees will be collecting and analyzing their own data. They will be responsible for maintaining the privacy of all data. All grantees will be required to have their own IRB approval and thus will need to have a plan to protect participant privacy.

Prior to collecting data at PREIS sites, the local evaluation team will seek the appropriate consent or assent needed for data collection with participants. The consent and assent forms will be approved by the grantees’ local IRBs. Participants will be told that, to the extent allowable by law, individual identifying information will not be released or published; rather, data will be published only in summary form with no identifying information at the individual level.

At the end of PREIS local evaluations, grantees will submit de-identified data sets to ACF. ACF will provide technical assistance to grantees on how to strip data of identifiers, in order to protect participant privacy.

Grantees submit aggregate data, so no personally identifiable information is collected by ACF and its contractors. ACF will work with the ACF and HHS Offices of the Chief Information Officer (OCIO) to complete any necessary requirements related to privacy and security, as needed. Information will not be maintained in a paper or electronic system from which it is actually or directly retrieved by an individual’s personal identifier.

A.11. Justification for Sensitive Questions

Performance measures and core measures for PREIS grantees’ local evaluations. A key objective of PREP programs is to prevent teen pregnancy through a decrease in sexual activity and/or an increase in contraceptive use. We understand that issues pertaining to the sexual behavior of and contraceptive use among youth and young adults can be very sensitive in nature; however, the questions for the programs’ PM and the core measures for the PREIS local evaluations are necessary to understanding program functioning.

Table A11.1 provides a list of sensitive questions that will be asked on the participant entry and exit surveys and the justification for their inclusion.

Table A11.1. Summary of Sensitive Questions to Be Included on the Participant Entry and Exit Surveys, the Core Measures for PREIS Grantees’ Local Evaluations, and Their Justification

| Topic | Justification |
| Participant Entry Survey (Instrument #1) | |
| Sexual orientation (Question 6) | ACF has a strong interest in improving programming that serves lesbian, gay, bisexual, transgender, and questioning (LGBTQ) youth. This question will allow us to document the extent to which PREP programs serve this subpopulation. This item is also Question 6 on the Exit Survey. |
| Sexual activity, incidence of pregnancy and STIs, and contraceptive use (Questions 14-18) | Level of sexual activity, incidence of pregnancy, and contraceptive use are all central to the PREP evaluation. Collecting this information will allow us to document the characteristics of the population served by PREP and the degree to which they engage in risky behavior. |
| Participant Exit Survey (Instrument #2) | |
| Participants’ perceptions of PREP’s effects on their sexual activity and contraceptive use (Questions 15 and 17) | Reducing intentions to engage in sexual activity, reducing risky adolescent sexual behavior, and increasing contraceptive use among those who are sexually active are among the central goals of PREP-funded programs. Examining whether participating youth consider PREP programs to be effective in achieving these goals is an important element of gauging the success of these programs. |
| PREIS Local Evaluation Core Measures (Instrument #5) | |
| Sexual activity, incidence of pregnancy, and contraceptive use (Questions 1-7) | The level of sexual activity, incidence of pregnancy/STI, and contraceptive use are all central to PREIS evaluations. Collecting this information will allow us to demonstrate the impacts of PREIS programs, per the HHS Teen Pregnancy Prevention Evidence Review. |

Grantees will inform program participants that their participation is voluntary and they may refuse to answer any or all of the questions in the entry and exit surveys. All grantees will have the opportunity to opt out of asking sensitive questions if necessary.

A.12 Estimates of the Burden of Data Collection

Tables A12.1 and A12.2 provide the estimated annual burden calculations for the performance measures reporting. These are broken out separately as burden for youth participants (Table A12.1) and for grantees and their sub-awardees (Table A12.2). Table A12.3 provides the burden estimates for the core measures for grantees’ local evaluation, and Table A12.4 provides the overall burden estimates.

1. Annual Performance Measures Burden for Youth Participants

Table A12.1 presents the hours and cost burden for the participant entry and exit surveys. The number of participants completing these surveys is based on data collected with state, tribal, and competitive PREP grantees involved in performance measures data collection for the PREP Multicomponent Evaluation from 2012-2015 (OMB Control No.: 0970-0398) and the anticipated number of PREIS grantees estimated by FYSB program staff. The amount of time it will take for youth to complete the entry and exit surveys is estimated based on previous experience administering similar surveys to youth participants. The cost of this burden is estimated by assuming that 10 percent of the youth served by state, tribal, and competitive PREP grantees and 20 percent of youth served by PREIS grantees will be age 18 or older, and then assigning a value to their time of $7.25 per hour, the federal minimum wage. The estimate of the proportion of youth served by PREP programs who will be 18 or older is based on the data collected with state, tribal, and competitive PREP grantees involved in performance measures data collection for the PREP Multicomponent Evaluation from 2012-2015 (OMB Control No.: 0970-0398) and the anticipated number of youth 18 or older served by PREIS grantees, estimated by FYSB program staff.

Participant entry survey. PREP grantees are expected to serve approximately 436,575 participants over the three-year OMB clearance period, for an average of about 145,525 new participants per year.3 Once we apply a 95 percent response rate to the participants, we anticipate 138,249 respondents to the entry survey each year (145,525 x 0.95 = 138,249). Based on previous experience with similar instruments, the participant entry survey is estimated to take 9 minutes (0.15 hour) to complete. The total annual burden for this data collection is estimated to be 138,249 x 0.15 hours = 20,737 hours. The annual cost of this burden is estimated to be 2,170 hours for youth age 18 or older x $7.25 = $15,731.

Participant exit survey. It is estimated that about 20 percent of the participants will drop out of the program prior to completion, leaving approximately 116,420 (145,525 x .80 = 116,420) participants at the end of the program annually.4 Of those, we expect 95 percent, or approximately 110,599 participants, will complete the participant exit survey each year.5 Based on previous experience with similar surveys, the exit survey is estimated to take youth 8 minutes (0.13333 hours) to complete. The total annual burden for this data collection is estimated to be 110,599 x .13333 hours = 14,746 hours. The cost of this burden is estimated to be 1,543 hours (for youth age 18 and older) x $7.25 = $11,186.
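As an illustrative check on the arithmetic above, the following Python sketch reproduces the respondent counts and burden hours for the entry and exit surveys. The variable names are hypothetical, and rounding each step to the nearest whole number is an assumption; the inputs are the figures quoted in the text and in footnote 4.

```python
# Illustrative check of the entry and exit survey burden arithmetic.
# Inputs come from the text; names and rounding choices are assumptions.

participants_three_years = 436_575
new_participants_per_year = participants_three_years / 3   # about 145,525

response_rate = 0.95
entry_hours_per_response = 0.15      # 9 minutes
exit_hours_per_response = 0.13333    # 8 minutes

# Entry survey
entry_respondents = round(new_participants_per_year * response_rate)
entry_hours = round(entry_respondents * entry_hours_per_response)

# Footnote 4: 60% of youth in school-based programs (90% complete),
# 40% in out-of-school programs (65% complete).
completion_rate = 0.60 * 0.90 + 0.40 * 0.65

# Exit survey
completers = round(new_participants_per_year * completion_rate)
exit_respondents = round(completers * response_rate)
exit_hours = round(exit_respondents * exit_hours_per_response)

print(f"Entry: {entry_respondents:,} respondents, {entry_hours:,} hours")
print(f"Completion rate: {completion_rate:.2f}")
print(f"Exit: {exit_respondents:,} respondents, {exit_hours:,} hours")
```

Under these assumptions the sketch yields the same respondent counts and burden hours reported in the text for both surveys.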

Additionally, sixteen grantees will be involved in local evaluations that will include collection of sexual behavior outcomes at short-term and long-term follow-up. The estimated burden for this data

Table A12.1. Annual Performance Measures Burden for Youth Participants

| Data Collection Instrument | Type of Respondent | Total Number of Respondents | Annual Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Annual Burden Hours | Annual6 Burden Hours for Age 18 or Older | Hourly Wage Rate | Total Annualized Cost |
| Participant Entry Survey | PREP State and Tribal Participants | 327,277 | 109,092 | 1 | 0.15 | 16,364 | 1,636 | $7.25 | $11,864 |
| | CPREP Participants | 68,270 | 22,757 | 1 | 0.15 | 3,414 | 341 | $7.25 | $2,475 |
| | PREIS Participants | 19,200 | 6,400 | 1 | 0.15 | 960 | 192 | $7.25 | $1,392 |
| | Total Participants | 414,747 | 138,249 | 1 | 0.15 | 20,737 | 2,170 | $7.25 | $15,731 |
| Participant Exit Survey (Instrument 2) | PREP State and Tribal Participants | 261,821 | 87,274 | 1 | 0.13333 | 11,636 | 1,164 | $7.25 | $8,436 |
| | CPREP Participants | 54,616 | 18,205 | 1 | 0.13333 | 2,427 | 243 | $7.25 | $1,760 |
| | PREIS Participants | 15,360 | 5,120 | 1 | 0.13333 | 683 | 137 | $7.25 | $990 |
| | Total Participants | 331,797 | 110,599 | 1 | 0.13333 | 14,746 | 1,543 | $7.25 | $11,186 |
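The cost totals in Table A12.1 can be traced row by row. The sketch below is one possible reading, not a documented method: it assumes each row's cost is the row's annual burden hours, times the assumed share of respondents age 18 or older (10 percent for state, tribal, and CPREP; 20 percent for PREIS), times $7.25, rounded to the nearest dollar and then summed. The names are hypothetical. This reading reproduces the reported totals of $15,731 and $11,186, whereas multiplying the rounded total hours directly (for example, 2,170 x $7.25) gives a slightly different figure.

```python
# Row-level reconstruction of the Table A12.1 cost column.
# The rounding order (round each row, then sum) is an assumption.

MIN_WAGE = 7.25  # federal minimum wage, dollars per hour

# grantee type: (annual burden hours, assumed share age 18 or older)
ENTRY_ROWS = {
    "state_tribal": (16_364, 0.10),
    "cprep": (3_414, 0.10),
    "preis": (960, 0.20),
}
EXIT_ROWS = {
    "state_tribal": (11_636, 0.10),
    "cprep": (2_427, 0.10),
    "preis": (683, 0.20),
}


def row_costs(rows):
    """Return the rounded dollar cost for each row."""
    return {
        name: round(hours * share * MIN_WAGE)
        for name, (hours, share) in rows.items()
    }


entry_costs = row_costs(ENTRY_ROWS)
exit_costs = row_costs(EXIT_ROWS)
entry_total = sum(entry_costs.values())
exit_total = sum(exit_costs.values())

print(entry_costs, entry_total)
print(exit_costs, exit_total)
```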

2. Annual Performance Measures Burden for Grantees and Sub-Awardees

The 93 grantees7 will report performance measures data into a national data warehouse developed for the PREP initiative. They will gather this information with the assistance of their sub-awardees (estimated to be 416 across all grantees).8 The grantee and sub-awardee data collection efforts described below are record-keeping tasks.

Table A12.2. Annual Performance Measures Burden for Grantees and Sub-Awardees

| Data Collection Instrument | Type of Respondent | Total Number of Respondents | Annual Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Annual Burden Hours | Annual Burden Hours for Age 18 or Older | Hourly Wage Rate | Total Annualized Cost |
| Performance Reporting Data Entry Form (Instrument 3) | State/Tribal Grantee Administrators | 177 | 59 | 2 | 18 | 2,124 | N/A | $21.35 | $45,347 |
| | CPREP Grantees | 63 | 21 | 2 | 14 | 588 | N/A | $20.76 | $12,207 |
| | PREIS Grantees | 39 | 13 | 2 | 14 | 364 | N/A | $20.76 | $7,557 |
| | Total N across Grantees | 279 | 93 | 2 | 18 for S/T; 14 for CPREP and PREIS | 3,076 | N/A | $21.35 for S/T; $20.76 for CPREP and PREIS | $65,111 |
| Subawardee Data Collection and Reporting Form (Instrument 4) | State/Tribal Subawardees | 1,116 | 372 | 2 | 14 | 10,416 | N/A | $20.76 | $216,236 |
| | CPREP Subawardees | 132 | 44 | 2 | 12 | 1,056 | N/A | $20.76 | $21,923 |
| | Total N across Sub-awardees | 1,248 | 416 | 2 | 14 for S/T; 12 for CPREP | 11,472 | N/A | $20.76 | $238,159 |

Total Annual Burden and Cost for Grantees

Twice per year, all 93 grantees9 will be required to submit all of the required performance measures into the national data warehouse. The burden estimates include time for a designated PREP grantee administrator to aggregate the data across each of the grantee’s sub-awardees and submit all of the required data into the warehouse, along with time to collect grantee-level information on grantee structure, cost, and support for program implementation. The Performance Reporting System Data Entry Form includes all of these required data elements that the grantee will collect, aggregate, and submit into the national warehouse (see Instrument 3). Time for these activities is estimated to be 36 hours per year per state and tribal grantee and 28 hours per year per CPREP and PREIS grantee. The total annual burden for these activities is estimated to be 3,076 hours (59 x 36 hours = 2,124 hours plus 34 x 28 hours = 952 hours). The cost burden for this activity is estimated to be $65,111 (2,124 x $21.35 for state and tribal grantees and 952 x $20.76 for competitive PREP and PREIS grantees). The hourly wage rates represent the mean hourly wage rate for all occupations ($21.35) and the mean hourly wage rate for community and social service occupations ($20.76) (National Occupational Employment and Wage Estimates, Bureau of Labor Statistics, Department of Labor, May 2010).

Total Annual Burden and Cost for Sub-Awardees

The 416 estimated sub-awardees will conduct multiple activities to support the Performance Measures Study each year (see Instrument 4). They will aggregate data on participant level entry and exit surveys and on attendance and program session hours, report to the grantee on implementation challenges and needs for technical assistance, and report to the grantee on sub-awardee structure, cost, and support for program implementation. The total estimated annual time for sub-awardees is 28 hours for state and tribal and 24 hours for CPREP. The total annual burden for this data collection activity is estimated to be 11,472 hours (372 state and tribal sub-awardees x 28 hours = 10,416 plus 44 CPREP sub-awardees x 24 hours = 1,056 hours). The cost burden for this activity is estimated to be $238,159 (11,472 hours x $20.76).
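The grantee and sub-awardee estimates above follow the same pattern: number of respondents x annual hours per respondent x hourly wage. A minimal Python sketch of that pattern, using the figures from the text, is given here; the dictionary layout and function name are hypothetical, and rounding the summed cost to the nearest dollar is an assumption.

```python
# Illustrative recomputation of grantee and sub-awardee burden.
# group: (number of respondents, hours per year each, hourly wage)
GRANTEES = {
    "state_tribal": (59, 36, 21.35),
    "cprep_preis": (34, 28, 20.76),
}
SUB_AWARDEES = {
    "state_tribal": (372, 28, 20.76),
    "cprep": (44, 24, 20.76),
}


def annual_burden(groups):
    """Return (total hours, total cost rounded to the nearest dollar)."""
    hours = sum(n * h for n, h, _ in groups.values())
    cost = sum(n * h * wage for n, h, wage in groups.values())
    return hours, round(cost)


grantee_hours, grantee_cost = annual_burden(GRANTEES)
sub_hours, sub_cost = annual_burden(SUB_AWARDEES)

print(f"Grantees: {grantee_hours:,} hours, ${grantee_cost:,}")
print(f"Sub-awardees: {sub_hours:,} hours, ${sub_cost:,}")
```

Under these assumptions the results match the hours and costs stated for both groups.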

3. Core Measures for Local Impact Evaluations

Based on the enrollment total from cohort 1, it is expected that 19,200 youth will be enrolled in the evaluation sample across the anticipated 16 local impact evaluations that will be conducted by PREIS grantees. Sample intake will take place over three years, for an average of 6,400 participants per year. The eight core measures will be asked of youth three times: at an immediate post-program follow-up, at a short-term follow-up (estimated to be 6 months post-program), and at a long-term follow-up (estimated to be 12 months post-program). After anticipated attrition from the original sample intake (N = 6,400), the average annual expected number of respondents across the three follow-up surveys is 5,333 per year, for a total of 16,000 youth across all three years of data collection. Based on previous experience with similar questionnaires, it is estimated that it will take youth 5 minutes (0.08 hours) to complete the 8 questions (with skip patterns), on average. Therefore, the total annual burden for this data collection is estimated to be 16,000 x 0.08 = 1,280 hours. The cost of this burden is estimated to be 1,280 hours x 0.20 (average proportion of youth expected to be age 18 or older at each follow-up) x $7.25 (federal minimum wage) = $1,856.

| Data Collection Instrument | Type of Respondent | Total Number of Respondents | Annual Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Annual Burden Hours | Annual Burden Hours for Age 18 or Older | Hourly Wage Rate | Total Annualized Cost |
| Core Measures for local impact evaluations (Instrument 5) | PREIS youth participants | 16,000 | 5,333 | 3 | 0.08 | 1,280 | 256 | $7.25 | $1,856 |
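The core measures burden can be checked from the table columns. The sketch below assumes the annual burden is annual respondents x responses per respondent x hours per response, rounded to whole hours; that reading and the variable names are assumptions, not something the source states.

```python
# Illustrative check of the core measures burden (Instrument 5).
annual_respondents = 5_333
responses_per_respondent = 3
hours_per_response = 0.08       # 5 minutes
share_age_18_or_older = 0.20
min_wage = 7.25                 # federal minimum wage, dollars per hour

annual_hours = round(
    annual_respondents * responses_per_respondent * hours_per_response
)
hours_age_18_or_older = round(annual_hours * share_age_18_or_older)
annual_cost = round(hours_age_18_or_older * min_wage)

print(annual_hours, hours_age_18_or_older, annual_cost)
```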

4. Total Annual Burden and Cost Estimates

Table A12.4 details the overall burden requested for performance measures data collection under PMAPS. A total of 51,311 hours (and a cost of $332,043) is requested in this ICR. This includes time and cost for performance measures data collection associated with participants, grantees and sub-awardees.

Table A12.4. Total Annual Burden and Cost Estimates

| Data Collection Instrument | Type of Respondent | Total Number of Respondents | Annual Number of Respondents | Number of Responses per Respondent | Average Burden Hours per Response | Total Annual Burden Hours | Annual Burden Hours for Age 18 or Older | Hourly Wage Rate | Total Annualized Cost |
| Entry Survey (Instrument 1) | Participants | 414,747 | 138,249 | 1 | 0.15 | 20,737 | 2,170 | $7.25 | $15,731 |
| Exit Survey (Instrument 2) | Participants | 331,797 | 110,599 | 1 | 0.13333 | 14,746 | 1,543 | $7.25 | $11,186 |
| Core measures (Instrument 5) | Participants | 16,000 | 5,333 | 3 | 0.08 | 1,280 | 256 | $7.25 | $1,856 |
| Performance Measures Data Report Form (Instrument 3) | Grantees | 279 | 93 | 2 | 18 for S/T; 14 for CPREP and PREIS | 3,076 | N/A | $21.35 for S/T; $20.76 for CPREP and PREIS | $65,111 |
| Performance Measure Data Report Form (Instrument 4) | Sub-awardees | 1,248 | 416 | 2 | 14 for S/T; 12 for CPREP | 11,472 | N/A | $20.76 | $238,159 |
| Estimated Total Annual Burden | 51,311 |
| Estimated Total Annualized Cost | $332,043 |
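The overall totals in Table A12.4 are the sums of the instrument-level rows. A short Python sketch of that check, using the hours and costs from the table, is given here; the list structure and names are hypothetical.

```python
# Illustrative check that the Table A12.4 totals equal the sum of the rows.
# (instrument, total annual burden hours, total annualized cost in dollars)
ROWS = [
    ("Entry Survey (Instrument 1)", 20_737, 15_731),
    ("Exit Survey (Instrument 2)", 14_746, 11_186),
    ("Core measures (Instrument 5)", 1_280, 1_856),
    ("Grantee report form (Instrument 3)", 3_076, 65_111),
    ("Sub-awardee report form (Instrument 4)", 11_472, 238_159),
]

total_hours = sum(hours for _, hours, _ in ROWS)
total_cost = sum(cost for _, _, cost in ROWS)

print(f"Total annual burden: {total_hours:,} hours")
print(f"Total annualized cost: ${total_cost:,}")
```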

A13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

These information collection activities do not place any other annual cost burden on respondents and record keepers.

A.14. Annualized Cost to Federal Government

The estimated cost for development, collection, and analysis of the PREP performance measures and the core measures to be included in local impact evaluations conducted by a subset of PREP grantees is $856,257 over the three years of requested clearance. The annual cost to the federal government is estimated to be $285,419.

A.15. Explanation for Program Changes or Adjustments

This is a new data collection for new cohorts of PREP grantees.

A16. Plans for Tabulation and Publication and Project Time Schedule

1. Analysis Plan

Performance measures. The PM effort involves collecting performance measures data that will be used to monitor and analyze grantee performance.

Using the performance data for accountability requires constructing indicators for many of the same measures, but separately for each grantee and even sub-awardee. Indicators at the grantee level help fulfill federal responsibilities to hold grantees accountable for performance. Indicators at the sub-awardee level will help grantees in their efforts to hold accountable those to whom they are providing resources for PREP implementation. The structure of the data will also allow for examining several of these questions by program model to better understand successes and challenges implementing the various programmatic approaches.

The results of the performance measures analysis will help ACF and grantees pinpoint areas for possible improvement of program implementation. For example, ACF will be able to determine which grantees deliver their complete program content and hours to a high percentage of participant cohorts, and for which program models that is true. Grantees will be able to determine from performance data which of the implemented program models are succeeding in getting participants to complete at least 75 percent of the program sessions. ACF will be able to generate statistics showing how programs serving vulnerable populations compare to programs serving more general teen populations with regard to participant completion, participants’ assessments and perceived effects. ACF will learn which implementation challenges are most evident to grantees and their sub-awardees, and which are seen as topics for technical assistance. Over time, data can demonstrate which grantees and sub-awardees are improving with respect to elements of program delivery and which areas of technical assistance require on-going attention.

Core measures for PREIS grantees’ local evaluations. Analyses for PREIS local evaluations will be conducted by each individual grantee. ACF and its contractors will provide training and technical assistance to ensure that impact analyses are appropriately conducted. Results will help PREIS grantees understand the efficacy of their programs.

2. Time Schedule and Publications

Performance measures. Performance measures are expected to be continuously collected and analyzed. This request is for a three-year period, and subsequent packages will be submitted as necessary for new collections or to extend collection periods. The performance analysis reporting schedule is designed to complement the timing of grantees’ program implementation and the availability of the tools to support the data collection. OMB approval for the PREP PM data collection is anticipated in early 2017. Below is a schedule of the data collection and reporting efforts for the PM:

July 2017: PREP PREIS and Tribal grantees will collect performance measures and core measures for the PYP evaluation on sexual behavior and communication with a caring adult (Instrument #5) at program exit and at short-term and long-term follow-up.

Fall 2017 and twice a year thereafter: All PREP grantees will begin collecting data on characteristics of the individual youth served, youths’ perceptions of program effectiveness and program experiences, and data on participants’ enrollment, attendance, and delivered program hours (i.e., program delivery data); and how grant funds are being used, the program models selected, and the ways in which grantees and sub-awardees support program implementation (i.e., program structure data).

The analytical results based on grantee-reported program delivery data will be compiled into full written reports once each year, with data profiles more immediately available (within one to two months) through the Performance Measures Dashboard.

Core measures for PREIS grantees’ local evaluations. Analyses for PREIS local evaluations will be conducted by each individual grantee at the conclusion of its evaluation, generally in 2019 or 2020. ACF and its contractors will provide training and technical assistance to assist with dissemination of findings. Because this ICR, if approved, will last only through 2018, a subsequent request will discuss specifics of PREIS local evaluation publications.

A17. Reason(s) Display of OMB Expiration Date is Inappropriate

All instruments, consent and assent forms, and letters will display the OMB Control Number and expiration date.

A18. Exceptions to Certification for Paperwork Reduction Act Submissions

No exceptions are necessary for this information collection.

SUPPORTING REFERENCES FOR INCLUSION OF SENSITIVE QUESTIONS OR GROUPS OF QUESTIONS

Blake, Susan M., Rebecca Ledsky, Thomas Lehman, Carol Goodenow, Richard Sawyer, and Tim Hack. "Preventing Sexual Risk Behaviors among Gay, Lesbian, and Bisexual Adolescents: The Benefits of Gay-Sensitive HIV Instruction in Schools." American Journal of Public Health, vol. 91, no. 6, 2001, pp. 940-46.

Boyer, Cherrie B., Jeanne M. Tschann, and Mary-Ann Shafer. "Predictors of Risk for Sexually Transmitted Diseases in Ninth Grade Urban High School Students." Journal of Adolescent Research, vol. 14, no. 4, 1999, pp. 448-65.

Buhi, Eric R. and Patricia Goodson. "Predictors of Adolescent Sexual Behavior and Intention: A Theory-Guided Systematic Review." Journal of Adolescent Health: Official Publication of the Society for Adolescent Medicine, vol. 40, no. 1, 2007, pp. 4.

Davis, E. C., and Friel, L. V. “Adolescent Sexuality: Disentangling the Effects of Family Structure and Family Context.” Journal of Marriage & Family, vol. 63, no. 3, 2001, pp. 669-681.

Dermen, K. H., M. L. Cooper, and V. B. Agocha. "Sex-Related Alcohol Expectancies as Moderators of the Relationship between Alcohol use and Risky Sex in Adolescents." Journal of Studies on Alcohol, vol. 59, no. 1, 1998, pp. 71.

Fergusson, David M. and Michael T. Lynskey. "Alcohol Misuse and Adolescent Sexual Behaviors and Risk Taking." Pediatrics, vol. 98, no. 1, 1996, pp. 91.

Goodenow, C., J. Netherland, and L. Szalacha. "AIDS-Related Risk among Adolescent Males Who have Sex with Males, Females, or both: Evidence from a Statewide Survey." American Journal of Public Health, vol. 92, 2002, pp. 203-210.

Li, Xiaoming, Bonita Stanton, Lesley Cottrell, James Burns, Robert Pack, and Linda Kaljee. "Patterns of Initiation of Sex and Drug-Related Activities among Urban Low-Income African-American Adolescents." Journal of Adolescent Health: Official Publication of the Society for Adolescent Medicine, vol. 28, no. 1, 2001, pp. 46.

Magura, S., J. L. Shapiro, and S.-Y. Kang. "Condom use among Criminally-Involved Adolescents." AIDS Care, vol. 6, no. 5, 1994, pp. 595.

Raj, Anita, Jay G. Silverman, and Hortensia Amaro. "The Relationship between Sexual Abuse and Sexual Risk among High School Students: Findings from the 1997 Massachusetts Youth Risk Behavior Survey." Maternal and Child Health Journal, vol. 4, no. 2, 2000, pp. 125-134.

Resnick, M. D., P. S. Bearman, R. W. Blum, K. E. Bauman, K. M. Harris, J. Jones, J. Tabor, T. Beuhring, R. Sieving, M. Shew, L. H. Bearinger, and J. R. Udry. "Protecting Adolescents from Harm: Findings from the National Longitudinal Study on Adolescent Health." JAMA: The Journal of the American Medical Association, vol. 278, no. 10, 1997, pp. 823.

Santelli, John S., Leah Robin, Nancy D. Brener, and Richard Lowry. "Timing of Alcohol and Other Drug use and Sexual Risk Behaviors among Unmarried Adolescents and Young Adults." Family Planning Perspectives, vol. 33, no. 5, 2001.

Sen, Bisakha. "Does Alcohol-use Increase the Risk of Sexual Intercourse among Adolescents? Evidence from the NLSY97." Journal of Health Economics, vol. 21, no. 6, 2002, pp. 1085.

Tapert, Susan F., Gregory A. Aarons, Georganna R. Sedlar, and Sandra A. Brown. "Adolescent Substance use and Sexual Risk-Taking Behavior." Journal of Adolescent Health: Official Publication of the Society for Adolescent Medicine, vol. 28, no. 3, 2001, pp. 181.

Upchurch, D. M., and Y. Kusunoki. "Associations Between Forced Sex, Sexual and Protective Practices, and STDs Among a National Sample of Adolescent Girls." Women's Health Issues, vol. 14, no. 3, 2004, pp. 75-84.

1 Our efforts to define and collect performance measure data will be supported by two contracts: PREP Studies of Performance Measures and Adult Preparation Subject (PMAPS) and the Promising Youth Programs (PYP).

2 These include (1) the Pregnancy Assistance Fund (PAF) Evaluation Cross-Grantee Implementation Study (sponsored by the Office on Adolescent Health (OAH) in HHS; OMB # 0990-0416); (2) the Teen Pregnancy Prevention (TPP) Performance Measures (OAH; OMB # 0990-0438); (3) the Teen Pregnancy Prevention Program Feasibility and Design Study (TP3 FADS) (OAH); (4) Evaluation of the Teen Pregnancy Prevention Program: Replicating Evidence-Based Teen Pregnancy Prevention Programs to Scale in Communities with the Greatest Need (tier 1B) (OAH); (5) TPP Grantee-Led Impact Evaluations (OAH); and (6) Young Men and Teen Pregnancy Prevention (YM TPP) (OAH).

Each of the efforts has a specific focus. The PAF evaluation is focused on randomized-controlled trials of 2 programs and a quasi-experimental design of 1 program for pregnant and parenting youth; it also includes implementation analysis. The TPP performance measures effort is collecting performance measures every 6 months from all OAH TPP grantees. The TP3 FADS is conducting randomized-controlled trials to test (1) replications of commonly used but understudied evidence-based teen pregnancy prevention programs, (2) significant or meaningful adaptations to existing evidence-based approaches, and (3) selected core components, key activities, and implementation strategies of common programs. The Tier 1B evaluation is focused on rigorous, multi-grantee evaluation that includes impact evaluation, implementation evaluation, and case studies. The TPP Grantee-Led Impact Evaluations include randomized-controlled trials or quasi-experimental designs examining impacts of grantee-led, independent evaluations of new or innovative approaches to preventing teen pregnancy. The YM TPP evaluation is conducting randomized-controlled trials examining impacts of 3 different teen pregnancy prevention interventions targeting young men, ages 15-24.

3 The three-year period for which we are requesting clearance covers the first three years of the PMAPS project.

4 Based on our review of data from the PREP Multicomponent Evaluation Performance Analysis Study (PAS), we estimate that 60 percent of youth served in PREP programs will be in school-based programs and that 40 percent will be served in out-of-school programs. We assume that 90 percent of youth in school-based PREP programs will complete the program and that 65 percent of youth in out-of-school PREP programs will complete the program. These assumptions yield an overall program completion rate of 80 percent.

5 We are currently requesting clearance for three years; over the three years for which we are requesting clearance, we expect that 349,260 youth will complete the programs and 331,797 will complete a participant exit survey.

6 Annual burden hours for youth 18 and over are included in the total annual burden hours. Only a subsample of the total population is expected to be over 18.

7 The 93 grantees include 49 states and territories, 10 grants to tribes and tribal communities, 21 grants under Competitive PREP, and 13 PREIS grantees.

8 Our estimates are based upon the number of sub-awardees observed through the PREP Multi-Component evaluation and the growth in sub-awardees annually.

9 As mentioned previously, the 93 grantees include 49 states and territories, 10 grants to tribes and tribal communities, 21 grants under Competitive PREP, and 13 PREIS grantees.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | BCollette |

| File Modified | 0000-00-00 |

| File Created | 2021-01-11 |
