SSB_Phase II_NSCAW III_2017_clean


National Survey of Child and Adolescent Well-Being Second Cohort (NSCAW III): Data Collection (Phase II)

OMB: 0970-0202

National Survey of Child and Adolescent Well-Being—Third Cohort (NSCAW III): Phase II

DRAFT OMB Information Collection Request

0970-0202

Supporting Statement

Part B

February 2017

Submitted By:

Office of Planning, Research and Evaluation

Administration for Children and Families

U.S. Department of Health and Human Services

4th Floor, Mary E. Switzer Building

330 C Street, SW

Washington, D.C. 20201

Project Officers:

Mary Bruce Webb

Christine Fortunato

Contents

B1. Respondent Universe and Sampling Methods

B1.1 Target Population

B1.2 Sampling Frame Used and Its Coverage of the Target Population

B1.3 Design of the Sample (Including Any Stratification or Clustering)

B1.4 Size of the Sample and Precision Needed for Key Estimates

B1.5 Expected Response Rate

B1.6 Expected Item Nonresponse Rate for Critical Questions

B2. Procedures for Collection of Information

B3. Methods to Maximize Response Rates and Deal with Nonresponse

B3.1 Expected Response Rates

B3.2 Dealing with Nonresponse

B3.3 Maximizing Response Rates

List of Exhibits

Exhibit B1.1. Phase II of NSCAW III Target Population

Exhibit B1.2. Second-Stage Sampling Domains

Exhibit B1.3. Flow Diagram of the Sampling Process

Exhibit B1.4. Calculations for Child-Level Sample for Phase II of NSCAW III

B1. Respondent Universe and Sampling Methods

For the sake of comparability across cohorts, the sample design for the third cohort of the National Survey of Child and Adolescent Well-Being (NSCAW III) will mirror the original design used in NSCAW I and replicated in NSCAW II. However, unlike NSCAW II, which reused the NSCAW I primary sampling units (PSUs), NSCAW III will select a new sample of PSUs using a procedure that maximizes overlap with the prior NSCAW PSU sample. The sample design chosen for NSCAW III is based on lessons learned from NSCAW I and NSCAW II and incorporates enhancements to improve sampling precision.

Key features of the NSCAW III sampling design are as follows:

Rather than carry over all former PSUs from prior cohorts of NSCAW, a new sample of 83 PSUs will be selected in order to update the probability proportional to size (PPS) selection probabilities for the current distribution of the child welfare population.

A “maximal PSU sampling coordination” approach will be used that maximizes the probability of selecting PSUs (or agencies) that were in the NSCAW II sample.

AFC states—i.e., states having legal statutes requiring the agencies to contact families and obtain written permission to allow their information to be released—will be removed from the sample once they have been identified through the recruitment process.

The plan for sampling PSUs was outlined in detail in Phase I of the study, approved by OMB in November 2016 (OMB # 0970-0202, Expiration Date: 11/30/2019). Phase I activities began in November 2016 and are ongoing; they include the recruitment of the child welfare agencies and the collection of sample frame files that will be used to select the sample of children involved with the child welfare system (CWS). This OMB submission covers Phase II, which includes all tasks related to collecting data from the CWS-involved children, their caregivers, and their caseworkers.

B1.1 Target Population

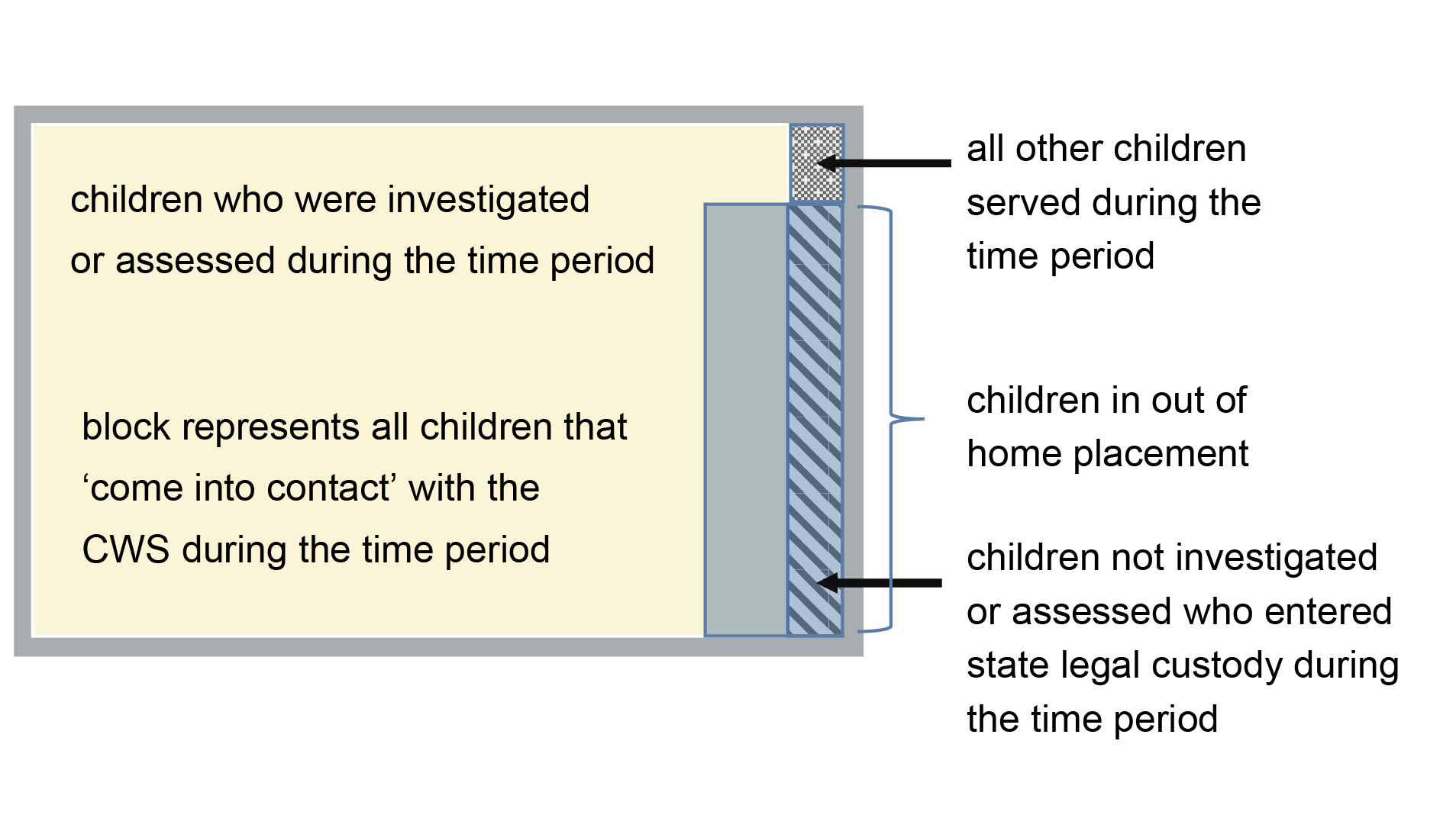

For the baseline and 18-month follow-up data collection, the target population for NSCAW III includes all children ages 0-17½ who come into contact with the CWS during the 12-month sampling period. Specifically, the target population includes children who (1) were investigated or assessed for child abuse or neglect or (2) entered state legal custody through other pathways (e.g., juvenile justice). Children in the second group, who are placed into legal guardianship, may comprise as much as 20 percent of children in out-of-home placement. The target population for Phase II of NSCAW III is shown in Exhibit B1.1.

Exhibit B1.1. Phase II of NSCAW III Target Population

According to 2014 data from the National Child Abuse and Neglect Data System (NCANDS), an estimated 3.6 million referrals of abuse or neglect, concerning approximately 6.6 million children, were received by child protective services (CPS). Almost 61 percent of those referrals were accepted for investigation or assessment.

B1.2 Sampling Frame Used and Its Coverage of the Target Population

NSCAW III will construct a sampling frame consisting of all counties in the U.S. except (1) very small counties, namely those expected to produce fewer than 55 completed NSCAW III interviews, and (2) counties in states whose law (AFC states) prohibits the release of information. The first exclusion, which was also used for previous NSCAW samples, is made for cost efficiency. Only about 1-2 percent of the child welfare population resides in these small counties, so their exclusion has a negligible effect on population coverage and estimation bias. The second exclusion (AFC states) is necessary because child welfare agencies in these states are prevented by state law from participating in the NSCAW.

The NSCAW I target population represented approximately 94.6 percent of the U.S. population of children investigated or assessed for child abuse or neglect during the sampling period. In NSCAW II, coverage was approximately 88 percent as a result of the additional AFC states that were dropped from the study. In NSCAW III, population coverage for this same group is likely to be close to the NSCAW II level, or perhaps slightly lower if more states have passed AFC legislation necessitating their removal from the target population. Neither NSCAW I nor NSCAW II included children who enter and are served by the CWS without a maltreatment investigation. These children will be included in the NSCAW III target population (see Exhibit B1.1), so coverage of this more broadly defined population could be greater than that of NSCAW I.

B1.3 Design of the Sample (Including Any Stratification or Clustering)

NSCAW III proposes to use a stratified, cluster sample design, similar to prior cohorts of NSCAW.

Phase I: Sampling of Child Welfare Agencies (Previously Approved; 0970-0202, Nov 2016)

The first stage of sampling involves the selection of primary sampling units (PSUs), which for this study are U.S. counties. Please see the Phase I OMB submission approved in November 2016 (OMB # 0970-0202, Expiration Date: 11/30/2019) for details of the plan for sampling PSUs. The frame PSUs will be ordered by Census region, by state within Census region, and then by urban/rural status to ensure that regions and states, in both urban and rural areas, will be sampled in proportion to their child welfare populations. In a few very large counties, such as Los Angeles County, child welfare agencies will be sampled proportionately. A frame of all children in the target population will be developed for each sample agency using lists obtained from the agencies during each month of the sampling period. Children will then be selected disproportionately in each PSU according to their sampling domain to achieve the desired sample size in each domain.

Biemer (2007) determined that a sample size of 55 to 60 completed cases per PSU, per year, is ideal for the general NSCAW design in terms of cost versus error optimization. Thus, for an overall sample size of 4,565 completed cases, 83 PSUs (cooperating child welfare agencies), or 55 completed cases per PSU, is optimal.

Using a maximal sampling coordination approach, a sample of 83 PSUs (cooperating child welfare agencies) will be selected via PPS sampling, using composite size measures that incorporate the population sizes of the selected domains in each PSU. Data from the most recent NCANDS file will supply these population counts. The composite size measure method (Folsom, Potter, and Williams, 1987) provides a means to control domain sample sizes that maximizes the efficiency of the design by minimizing weight variation for units within sampling domains. PSUs will be defined essentially as they were in NSCAW II (i.e., geographic areas that encompass the population served by a single child welfare agency). In most cases, these areas correspond to counties or contiguous areas of two or more counties. In larger metropolitan areas with branch offices, the county will be subdivided into areas served by a single agency or office.

As in NSCAW I, the selection of primary sampling units (PSUs) will involve the following four steps:

1. Partition the target population into PSUs (i.e., counties)

2. Compute a size measure for each PSU

3. Stratify the PSU sampling frame

4. Select the sample of PSUs

The activities for carrying out each of these four steps are outlined below:

Step 1: Partitioning the Target Population into PSUs. The administrative structure of the child welfare system varies considerably across states and even within states. Therefore, a single definition of a PSU is not feasible, since it depends on the administrative structure of the state system as well as the jurisdictions of child welfare agencies within the state. For most areas of the country, the best definition of a PSU is the county, since it corresponds to a clearly defined political entity and a geographic area of manageable size. In other areas, the definition of a PSU is not as straightforward, as when a single child welfare agency has jurisdiction over several counties. In such instances, the PSU will be defined as part or all of the area over which the child welfare agency has jurisdiction. Extremely large counties or metropolitan statistical areas (MSAs) have child welfare agencies with many branch offices, each with its own data system. Such PSUs will be divided into smaller units, such as areas delineated by branch office jurisdictions, to create manageable PSUs. For simplicity, counties are referred to as PSUs in the first-stage sampling discussion.

Phase II: Sampling of Children – Current Information Collection Request

Step 2: Compute a Size Measure for Each PSU. The second-stage sampling units will be stratified into nine domains of interest to control the second-stage sample allocation so that domains of interest have sufficient sample sizes. The second-stage NSCAW III domains and the allocation of achieved sample sizes are shown in Exhibit B1.2. The nine sampling domains are defined by columns 1 and 2 of the table (age group by services). Column 3 is the expected sample size under proportionate sampling. Because some of these sample sizes are inadequate for research purposes, they will be increased to the levels shown in Column 4 (Target sample size). The table also shows the unequal weighting effect (UWE), the clustering effect (based on the expected intracluster correlation), the effective sample size (the target sample size divided by the product of the UWE and the clustering effect), and the minimum detectable effect size (MDES), which is the smallest effect size that can be detected with 80 percent power and a Type I error rate of 5 percent.

Exhibit B1.2. Second-Stage Sampling Domains

| Age group | Services | Proportionate sample size | Target sample size | UWE | Clustering effect | Effective sample size | MDES |
|---|---|---|---|---|---|---|---|
| Infant (under 1 year) | Services in home | 109 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Infant (under 1 year) | Services out of home | 73 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Infant (under 1 year) | No services | 182 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Ages 1 to 11 | Services in home | 914 | 397 | 1.000 | 1.250 | 318 | 0.222 |
| Ages 1 to 11 | Services out of home | 291 | 189 | 1.000 | 1.084 | 174 | 0.300 |
| Ages 1 to 11 | No services | 1,799 | 782 | 1.000 | 1.556 | 503 | 0.177 |
| Ages 12 to 17 | Services in home | 366 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Ages 12 to 17 | Services out of home | 123 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Ages 12 to 17 | No services | 708 | 533 | 1.000 | 1.358 | 392 | 0.200 |
| Total | | 4,565 | 4,565 | 1.754 | 4.564 | 570 | 0.166 |
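The derived columns of Exhibit B1.2 can be reproduced from the sample sizes with standard design-effect formulas. The script below is our reconstruction, not a statement of how the exhibit was originally computed. It assumes that the clustering effect is 1 + (b - 1)ρ, with b = n/83 completes per PSU and ρ = 0.066 (the intracluster correlation cited later in this section); that the total UWE is Kish's weighting effect for a disproportionate allocation; and that the MDES refers to a comparison of two groups of equal effective size at a two-sided 5 percent level with 80 percent power. Under those assumptions, and rounding as the exhibit does, the script matches every row of the table.

```python
from math import sqrt
from statistics import NormalDist

N_PSUS = 83   # sample PSUs (from the text)
ICC = 0.066   # intracluster correlation assumed in the text

# (age group, services, proportionate n, target n), copied from Exhibit B1.2
DOMAINS = [
    ("Infant", "In home", 109, 533),
    ("Infant", "Out of home", 73, 533),
    ("Infant", "No services", 182, 533),
    ("1-11", "In home", 914, 397),
    ("1-11", "Out of home", 291, 189),
    ("1-11", "No services", 1799, 782),
    ("12-17", "In home", 366, 533),
    ("12-17", "Out of home", 123, 533),
    ("12-17", "No services", 708, 533),
]

# z_{0.975} + z_{0.80}: two-sided 5% test with 80% power
Z_SUM = NormalDist().inv_cdf(0.975) + NormalDist().inv_cdf(0.80)


def clustering_effect(n: float) -> float:
    """Design effect 1 + (b - 1) * ICC, with b = n / N_PSUS completes per PSU."""
    return 1 + (n / N_PSUS - 1) * ICC


def kish_uwe(prop: list, target: list) -> float:
    """Kish UWE of a disproportionate allocation: sum_d W_d**2 / w_d, where
    W_d is the domain's population share and w_d its sample share."""
    p_tot, t_tot = sum(prop), sum(target)
    return sum((p / p_tot) ** 2 / (t / t_tot) for p, t in zip(prop, target))


def mdes(n_eff: float) -> float:
    """Standardized MDES for comparing two groups of effective size n_eff each."""
    return Z_SUM * sqrt(2 / n_eff)


def summarize(n: int, uwe: float) -> tuple:
    """Clustering effect, effective n, and MDES, rounded as in the exhibit."""
    deff = round(clustering_effect(n), 3)
    n_eff = round(n / (round(uwe, 3) * deff))
    return deff, n_eff, round(mdes(n_eff), 3)


# Within a domain every child has the same sampling rate, so the domain UWE is 1.
rows = {(age, svc): summarize(t, 1.0) for age, svc, _, t in DOMAINS}
total_uwe = kish_uwe([d[2] for d in DOMAINS], [d[3] for d in DOMAINS])
total = summarize(4565, total_uwe)

for key, vals in rows.items():
    print(key, vals)
print("Total", round(total_uwe, 3), total)
```

The agreement suggests the MDES values are two-group comparisons; a one-sample formula would give values smaller by a factor of √2.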

The composite size measure method, described in Folsom, Potter, and Williams (1987), will provide a method for controlling domain sample sizes while maximizing the efficiency of the design. The composite size measure reflects the size of the sample that would fall into the PSU if a national random sample of children were selected with the desired sampling rates for all domains but without PSU clustering.
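As a minimal sketch of the idea, assuming the standard formulation of the composite size measure: S_k = Σ_d f_d N_dk, where f_d = n_d / N_d is the desired national sampling rate for domain d and N_dk is the number of domain-d children in PSU k. If PSUs are selected with probability π_k proportional to S_k and domain d is sampled within PSU k at rate f_d / π_k, every child in domain d has the same overall selection probability f_d, and every selected PSU has the same expected workload. The frame, domain names, and targets below are hypothetical.

```python
from typing import Dict, List, Tuple

Counts = Dict[str, int]  # domain -> number of frame children in one PSU


def composite_design(psus: List[Counts], targets: Dict[str, float], m: int
                     ) -> Tuple[List[float], List[float], List[Dict[str, float]]]:
    """Composite size measures, PPS probabilities, and within-PSU domain rates."""
    # National domain totals N_d and desired overall sampling rates f_d = n_d / N_d.
    totals = {d: sum(p.get(d, 0) for p in psus) for d in targets}
    f = {d: targets[d] / totals[d] for d in targets}
    # S_k = sum_d f_d * N_dk: expected sample from PSU k under an unclustered
    # national sample drawn at rates f_d.
    sizes = [sum(f[d] * p.get(d, 0) for d in targets) for p in psus]
    s_plus = sum(sizes)  # equals the total target sample, sum_d n_d
    # PPS probabilities pi_k = m * S_k / S_+ (assumed <= 1 in this sketch).
    pis = [m * s / s_plus for s in sizes]
    # Within-PSU rates f_d / pi_k give every child in domain d an overall
    # selection probability of pi_k * (f_d / pi_k) = f_d.
    rates = [{d: f[d] / pi for d in targets} for pi in pis]
    return sizes, pis, rates


# Hypothetical frame: three PSUs, two illustrative domains.
psus = [{"infant": 40, "older": 400},
        {"infant": 10, "older": 600},
        {"infant": 50, "older": 200}]
sizes, pis, rates = composite_design(psus, {"infant": 20, "older": 60}, m=2)
# Expected sample in PSU k if selected: sum_d rate_dk * N_dk = S_+ / m.
workloads = [sum(r[d] * p[d] for d in p) for r, p in zip(rates, psus)]
print(sizes, pis, workloads)
```

Here each selected PSU is expected to yield S_+ / m = 40 cases, whatever its domain mix, which is what keeps weights equal within domains while also equalizing field workloads.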

After the composite size measures are computed, each of the approximately 3,140 counties on the initial PSU frame will be checked to determine whether it is large enough to support the planned completion of at least 55 valid interviews per PSU during the 12-month data collection period. In NSCAW I, approximately 700 counties with an expected number of 55 or fewer eligible children were deleted from the frame; these counties accounted for approximately 1 percent of the target population. We expect a similar final coverage rate for NSCAW III.

Step 3: Stratifying the First-Stage Frame. The PSU frame will be implicitly stratified by nine census regions and by urbanicity within region prior to sampling. The urbanicity of a PSU will be defined by whether the county is part of a metropolitan statistical area (MSA). Stratifying PSUs by region and urbanicity allows for controlled allocation of sample PSUs in these implicit strata.
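Implicit stratification of this kind is commonly implemented by sorting the frame and drawing a systematic PPS sample, so that each region, state, and urbanicity group receives close to its proportional share of selections. The sketch below illustrates that general technique under our own assumptions (a hypothetical PSU record with a precomputed composite size). It is not the overlap-maximizing selection the study actually uses, which is described in Step 4.

```python
import random
from typing import List, NamedTuple, Optional


class PSU(NamedTuple):
    name: str
    region: str
    state: str
    urban: bool
    size: float  # composite size measure


def systematic_pps(frame: List[PSU], n: int,
                   rng: Optional[random.Random] = None) -> List[PSU]:
    """Systematic PPS selection from a frame sorted by region, state, urbanicity.

    A PSU whose size exceeds the sampling interval can be hit more than once,
    which is why the text speaks of a selection frequency.
    """
    rng = rng or random.Random()
    # Implicit stratification: sorting makes the systematic pass spread the
    # sample across regions, states, and urban/rural areas in proportion to size.
    ordered = sorted(frame, key=lambda p: (p.region, p.state, p.urban))
    total = sum(p.size for p in ordered)
    step = total / n
    start = rng.uniform(0, step)
    points = [start + i * step for i in range(n)]
    selected: List[PSU] = []
    cum, j = 0.0, 0
    for p in ordered:
        cum += p.size
        # Every remaining selection point below the running total hits this PSU.
        while j < n and points[j] < cum:
            selected.append(p)
            j += 1
    return selected


frame = [PSU("A", "South", "TX", True, 100.0),
         PSU("B", "Northeast", "NY", False, 25.0),
         PSU("C", "West", "CA", True, 25.0)]
sample = systematic_pps(frame, n=3, rng=random.Random(7))
print([p.name for p in sample])  # "A" appears twice: its size is twice the interval
```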

Step 4: Selecting the PSUs. Given the first-stage stratification and the size measure $S_{hk}$, the selection frequency of the kth PSU in the hth first-stage stratum is calculated as

$$\pi_{hk} = \frac{m_h S_{hk}}{S_{h+}} \qquad (2)$$

where $m_h$ is the number of PSUs selected from the hth first-stage stratum and $S_{h+} = \sum_k S_{hk}$ is the total size measure of all PSUs in the hth first-stage stratum.

PSUs will be selected using an algorithm that maximizes the expected number of PSUs that will overlap NSCAW I and NSCAW III while assuring that the unconditional selection probability is as in (2). Given the sample of NSCAW I PSUs in stratum h, denoted $s_h$, the algorithm produces a set of conditional probabilities $\pi_{hk \mid s_h}$ while preserving the unconditional probabilities $\pi_{hk}$ specified in (2). PSUs will then be sampled from each stratum using the conditional probabilities produced by the algorithm in Ernst (1995).
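The overlap idea can be shown in its simplest form. Ernst (1995) handles any number of PSUs per stratum; as a stand-in, the sketch below uses Keyfitz's classic procedure for the special case of one PSU per stratum, which is a different and simpler method. It retains the previously selected PSU with probability min(1, π_new/π_old) and otherwise moves to a PSU whose probability increased, in proportion to the increase. Exact fractions confirm that the unconditional probabilities equal the new ones and that the expected overlap equals Σ_k min(π_old,k, π_new,k), the largest overlap possible for one PSU per stratum. The stratum and probabilities are hypothetical.

```python
import random
from fractions import Fraction
from typing import Dict

Probs = Dict[str, Fraction]


def keyfitz_conditional(old: Probs, new: Probs, old_pick: str) -> Probs:
    """Conditional new-design probabilities given the PSU picked in the old
    design (one PSU per stratum; both dicts list the same PSUs, summing to 1)."""
    # Retain the old PSU with probability min(1, new/old).
    keep = min(Fraction(1), new[old_pick] / old[old_pick])
    probs = {k: Fraction(0) for k in new}
    probs[old_pick] = keep
    # Otherwise move to a PSU whose probability grew, in proportion to its gain.
    gain = {k: max(new[k] - old[k], Fraction(0)) for k in new}
    total_gain = sum(gain.values())
    if total_gain > 0:
        for k, g in gain.items():
            probs[k] += (1 - keep) * g / total_gain
    return probs


def unconditional(old: Probs, new: Probs) -> Probs:
    """Average the conditional probabilities over the old design."""
    out = {k: Fraction(0) for k in new}
    for j, pj in old.items():
        if pj > 0:
            for k, q in keyfitz_conditional(old, new, j).items():
                out[k] += pj * q
    return out


def draw(old: Probs, new: Probs, old_pick: str, rng: random.Random) -> str:
    """Select the new-design PSU given the old pick."""
    cond = keyfitz_conditional(old, new, old_pick)
    return rng.choices(list(cond), weights=[float(q) for q in cond.values()])[0]


# Hypothetical stratum: D is new to the frame (old probability 0).
F = Fraction
old = {"A": F(1, 2), "B": F(3, 10), "C": F(1, 5), "D": F(0)}
new = {"A": F(3, 10), "B": F(2, 5), "C": F(1, 5), "D": F(1, 10)}
overlap = sum(old[j] * keyfitz_conditional(old, new, j)[j] for j in old if old[j] > 0)
print(unconditional(old, new) == new, overlap)
```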

Design of Second Stage Unit Sample and Overview of the Process

After selecting the PSUs for the study, the process of recruiting the child welfare agencies associated with the PSUs will begin. As these agencies are recruited, we will work with them individually to refine our projections of the expected sizes of the domains of analysis for sampling. The nine domains for the study are shown in Exhibit B1.2. As shown in this exhibit, the number of children selected in each domain will be sufficient to achieve a minimum detectable effect size (MDES) of 0.2 or smaller in seven of the nine domains, and of 0.222 and 0.300 for children ages 1 to 11 receiving services in home and out of home, respectively. When calculating the necessary sample sizes, we assumed an intracluster correlation of 0.066 based on an analysis of key NSCAW II characteristics.

As previously noted, a sample size of about 55 completed interviews per PSU is ideal for NSCAW for cost and error optimization. We will use the data available from both NSCAW I and II to establish initial sample allocations for each domain within each PSU. We will then adjust those sample allocations throughout the data collection process using the following steps:

1. Each month, the contractor (RTI International) will receive files from each child welfare agency containing all children with completed investigations/assessments as well as children entering legal custody through alternative pathways such as the juvenile justice system.

2. These files will be processed and any duplications will be removed.

3. The contractor will compute the number of cases to select in each domain, in each PSU, in any given month using an algorithm we developed in NSCAW II. The algorithm determines the number of cases to select so that target sample sizes are achieved by the end of data collection, then the algorithm optimizes the allocation of sample across PSUs so that UWEs are minimized while equalizing interviewer assignments.

4. The sample for each domain in each PSU is selected, reviewed for accuracy, and transmitted to the field. These steps are depicted in Exhibit B1.3.
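The steps above can be sketched in a few lines. The NSCAW II allocation algorithm is not specified here, so this is a deliberately simplified, hypothetical version: it removes duplicate child records, tallies the frame by PSU and domain, and sets a domain's monthly release so that the remaining shortfall is spread evenly over the remaining months. It does not perform the cross-PSU optimization of UWEs and interviewer workloads described in step 3. The record layout, domain label, and yield of 0.525 (roughly 4,565 completes from 8,695 sampled cases in Exhibit B1.4) are assumptions.

```python
from collections import Counter
from math import ceil
from typing import Iterable, List, Tuple

Record = Tuple[str, str, str]  # hypothetical layout: (child_id, psu, domain)


def dedupe(records: Iterable[Record]) -> List[Record]:
    """Step 2: keep the first record for each child ID."""
    seen, out = set(), []
    for rec in records:
        if rec[0] not in seen:
            seen.add(rec[0])
            out.append(rec)
    return out


def frame_counts(records: Iterable[Record]) -> Counter:
    """Number of frame children by (PSU, domain) in this month's files."""
    return Counter((psu, domain) for _, psu, domain in records)


def monthly_quota(target: int, completed: int, months_left: int,
                  yield_rate: float) -> int:
    """Step 3 (simplified): cases to release this month in one domain so the
    target is met by the end of data collection, spreading the remaining
    shortfall evenly over the remaining months."""
    remaining = max(target - completed, 0)
    return ceil(remaining / (yield_rate * months_left))


frame = dedupe([("c1", "p1", "infant_in_home"),
                ("c1", "p1", "infant_in_home"),   # duplicate across files
                ("c2", "p2", "infant_in_home")])
print(frame_counts(frame))
print(monthly_quota(target=533, completed=200, months_left=6, yield_rate=0.525))
```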

In prior NSCAW studies, some child welfare agencies were slow to enter cases and their outcomes into their agency data systems. In fact, there could be a lag of up to three months before an investigated case was finally entered into the system. In these agencies, a sampling process that obtained only cases completed in month t would miss cases that were not entered into the system until, say, month t+1. For that reason, we will obtain four files from the agency for each month of sampling: the month t file as of month t, as well as the month t file after it has been updated in months t+1, t+2, and t+3. This will ensure that data-entry delays of up to three months do not result in a loss of coverage.
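A minimal sketch of how the four extracts for one sampling month might be combined, assuming each extract can be reduced to a set of case IDs (the IDs below are hypothetical). Cases that first appear in a later extract are added to the month-t frame rather than lost.

```python
from typing import Dict, Set


def first_appearance(extracts: Dict[int, Set[str]]) -> Dict[int, Set[str]]:
    """Given the month-t case IDs as extracted at lags 0-3 (months t to t+3),
    return the cases that first appear at each lag."""
    seen: Set[str] = set()
    new_by_lag: Dict[int, Set[str]] = {}
    for lag in sorted(extracts):
        new_by_lag[lag] = extracts[lag] - seen
        seen |= extracts[lag]
    return new_by_lag


# Hypothetical case IDs for one agency and one sampling month t.
extracts = {0: {"a", "b"}, 1: {"a", "b", "c"},
            2: {"a", "b", "c", "d"}, 3: {"a", "b", "c", "d"}}
late = first_appearance(extracts)
month_t_frame = set().union(*extracts.values())
print(late, sorted(month_t_frame))
```

Tracking the lag at which each case first appears also makes it easy to check whether three months of updates is enough for a given agency.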

Exhibit B1.3. Flow Diagram of the Sampling Process

B1.4 Size of the Sample and Precision Needed for Key Estimates

The sampling of agencies (or PSUs) during the agency recruitment phase will result in the selection of 83 participating agencies. From the 83 participating agencies, approximately 4,565 CWS-involved children, their caregivers, and their caseworkers will be interviewed. It is not particularly meaningful to specify the statistical power of NSCAW child-level analysis overall because it will include so many different research questions, variables, and subpopulations. However, Exhibit B1.2 shows the MDES for estimates within the primary domains of analysis. The target sample size for NSCAW III is 4,565 completed cases, where a completed case is defined as a completed interview for the key respondent (the caregiver if the child is under age 11, and the child if the child is 11 or older). Based on experience with NSCAW II, a sample of this size was adequate for many types of cross-sectional and longitudinal analysis.

To determine the number of cases to draw from each PSU, the required initial sample was estimated at 8,695 sampled children to reach a completed sample size of 4,565. The rationale is presented in Exhibit B1.4.

Exhibit B1.4. Calculations for Child-Level Sample for Phase II of NSCAW III

| Steps | Number |
|---|---|
| 1. Initial sample | 8,695 |
| 2. Assume 25% of the initial cases will be ineligible due to factors including the following: | .75 x 8,695 = 6,521 |
| 3. Assume 60% of the eligible cases will cooperate with the initial data collection efforts | .60 x 6,521 = 3,913 |
| 4. Subject 50% of the remaining nonresponders (n = 2,609) to intensive data collection efforts | .50 x 2,609 = 1,304 |
| 5. Assume 50% of the subsampled nonresponders ultimately participate | .50 x 1,304 = 652 |
| Total number of completed cases | 3,913 + 652 = 4,565 |
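The arithmetic in Exhibit B1.4 can be checked, and inverted, with a short script. The rates are those stated in the exhibit. The weighted response rate at the end is our own derivation, assuming subsampled nonresponders are weighted by the inverse of the 50 percent subsampling rate; under that assumption the design yields 0.60 + 0.40 × 0.50 = 0.80, consistent with the 80 percent baseline goal stated in B1.5.

```python
ELIGIBLE = 0.75     # step 2: share of sampled cases that are eligible
INITIAL_RR = 0.60   # step 3: cooperation in initial data collection
SUBSAMPLE = 0.50    # step 4: share of nonresponders kept for intensive follow-up
FOLLOWUP_RR = 0.50  # step 5: response among subsampled nonresponders


def completes(initial: float) -> float:
    """Expected completed cases from an initial sample (steps 2-5)."""
    eligible = ELIGIBLE * initial
    first_phase = INITIAL_RR * eligible
    followed_up = SUBSAMPLE * (eligible - first_phase)
    return first_phase + FOLLOWUP_RR * followed_up


def initial_needed(target: int) -> int:
    """Invert completes(): initial sample needed for a target number of completes."""
    yield_per_case = ELIGIBLE * (INITIAL_RR + (1 - INITIAL_RR) * SUBSAMPLE * FOLLOWUP_RR)
    return round(target / yield_per_case)


# Weighted response rate among eligible cases: subsampled nonresponders carry
# weight 1 / SUBSAMPLE, so the subsample stands in for all initial nonresponders.
weighted_rr = INITIAL_RR + (1 - INITIAL_RR) * FOLLOWUP_RR

print(initial_needed(4565), round(completes(8695)), weighted_rr)
```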

Given the target number of completed cases, the average number of completes per PSU, and the oversampled domains, the extensive data available from NSCAW I and II will be used to update response rates by domain and by PSU in order to establish initial sample allocations for each domain.

B1.5 Expected Response Rate

An important requirement of the NSCAW III design is to maximize both agency and child-level response rates. Obtaining a response rate of 80 percent for the key respondent at baseline is a high priority. The contractor will use a number of response rate enhancement features that will maximize response rates without appreciably increasing data collection costs. Central among these features are the following:

Incorporating both contact and response propensity models in the field work to identify sample members who are either difficult to contact, have a low probability of cooperating when contacted or both,

Using two-phase sampling to select a 50 percent sample of nonrespondents to pursue during the nonresponse followup phase of data collection, and

Using matched substitution for select groups of nonrespondents in order to further reduce nonresponse bias and boost response rates.
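The two-phase feature can be illustrated with a minimal sketch, assuming a simple random subsample of nonrespondents. Subsampled cases have their weights multiplied by the inverse of the subsampling fraction, so that they represent all nonrespondents from the first phase. Case IDs and base weights are hypothetical.

```python
import random
from typing import Dict, Optional


def two_phase_followup(nonrespondents: Dict[str, float], rate: float = 0.5,
                       rng: Optional[random.Random] = None) -> Dict[str, float]:
    """Draw a simple random subsample of nonrespondents for the follow-up phase
    and multiply their weights by the inverse of the subsampling fraction."""
    rng = rng or random.Random()
    ids = sorted(nonrespondents)
    k = round(rate * len(ids))
    if k == 0:
        return {}
    chosen = rng.sample(ids, k)
    factor = len(ids) / k  # exact inverse of the realized fraction k / N
    return {i: nonrespondents[i] * factor for i in chosen}


# Hypothetical: ten nonrespondents, each with base weight 1.0
followup = two_phase_followup({f"case{i}": 1.0 for i in range(10)},
                              rng=random.Random(3))
print(len(followup), sum(followup.values()))
```

Because the follow-up weights sum to the total weight of all first-phase nonrespondents, effort can be concentrated on half the cases without losing their share of the population.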

See section B.3 for additional discussion about maximizing response rates and dealing with nonresponse.

B1.6 Expected Item Nonresponse Rate for Critical Questions

In an omnibus survey with an extensive and diverse research community such as the NSCAW, identifying critical questions is complicated because questions that are not critical for one discipline (such as child health) may nonetheless be critical for another (such as child education). Nevertheless, a nonresponse analysis conducted on the NSCAW II questionnaire data (RTI, 2013) attempted to address this question for all areas regarded as critical by sizeable factions of the NSCAW data user community. The analysis identified questionnaire modules as well as key questions that have high item nonresponse within the three different types of interviews: child, caseworker, and caregiver. The total item missing rate for an item was calculated by summing the frequencies of the components of nonresponse (a Refusal, a Don’t Know response, or an Inapplicable coded by the interviewer) and dividing that sum by the number of respondents asked the question. The items included in this analysis were confined to items contained in the Restricted Release data file (i.e., the most complete data files available to researchers whose organizations qualify for the release of highly sensitive and possibly identifying data).

The nonresponse analysis found that only a few items on the child instrument (a total of 24 items out of several hundred) had high missing rates, defined as item nonresponse of 10 percent or greater, mostly due to “Don’t Know” responses. The level of item nonresponse is higher in the caregiver instrument, with a total of 29 items having 20 percent or more missing and 22 items having 10 to 19.99 percent missing.
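The missing-rate definition described above, together with the 10 and 20 percent bands used to summarize it, amounts to the following calculation. The field names and counts are hypothetical.

```python
from typing import Dict

MISSING_CODES = ("refused", "dont_know", "inapplicable")  # hypothetical field names


def item_missing_rate(counts: Dict[str, int]) -> float:
    """(Refused + Don't Know + Inapplicable) / number of respondents asked."""
    return sum(counts.get(c, 0) for c in MISSING_CODES) / counts["asked"]


def band(rate: float) -> str:
    """Bands used in the summary of the NSCAW II nonresponse analysis."""
    if rate >= 0.20:
        return "20 percent or more"
    if rate >= 0.10:
        return "10 to 19.99 percent"
    return "under 10 percent"


rate = item_missing_rate({"asked": 400, "refused": 10, "dont_know": 30, "inapplicable": 2})
print(rate, band(rate))
```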

Over half (n=14) of the child instrument items with high missing rates (between 10 and 27 percent) are from modules administered to children living in out-of-home care, including questions such as whether the child wants to be adopted and the frequency of biological family visits. Another six items ask about drug or alcohol abuse (between 12 and 34 percent missing), with “Refused” responses accounting for the missing data. For the caregiver instrument, the items with high missing rates were distributed among several modules. Examples of these items are questions asking about household income and the child’s height.

With regard to the caseworker instrument, caseworkers at baseline were less able to respond to questions about service referrals and provision. In some agencies investigative caseworkers do not have access to information regarding the referral or provision of services; in these instances, NSCAW field representatives were instructed to ask the questions and record Don’t Know. As a result, approximately 20 percent of investigators could not fully answer the questions regarding services. For example, the Services to Children (SC) section had 39 items at or above a 10 percent missing rate, with 15 items at or above 20 percent missing.

Given the thousands of items in the NSCAW instruments, the items with high item nonresponse rates are a small proportion of all critical items. Further, none of the main well-being indicators (e.g., physical health; cognitive development; and social, emotional, and behavioral well-being) had missing rates greater than 5 percent.

B2. Procedures for Collection of Information

Procedures for data collection closely mirror those developed for and used successfully on NSCAW I and NSCAW II. NSCAW is a longitudinal study with multiple informants associated with each sampled child, in order to get the fullest possible picture of that child. Baseline and 18-month follow-up waves of face-to-face interviews/assessments will be conducted with the sampled child, the child’s current caregiver (e.g., biological parents, foster parents, kin caregivers, group home caregivers), and the child’s caseworker. All interviews will be conducted via Computer-Assisted Personal Interview (CAPI). Administrative data will be collected and merged with survey data at the child level to enhance the robustness and overall utility of the study. Baseline data collection is scheduled to begin in August 2017, pending OMB approval. The 18-month follow-up data collection is scheduled to begin in 2019. Both children who remain in the CWS and those who exit the system will be followed for the full study period.

Caregiver Interview

Contacting Families and Children Selected into the Sample. Because of the diversity in agency policies and agencies’ concerns about releasing client names, several different procedures were developed in NSCAW I and II by which the field representative acquired names and contacting information for selected children and families. In most agencies, a designated “agency liaison” provides the field representative with names and contacting information for the current primary caregiver of each child selected into the sample.

Before the field representative’s first phone contact, a lead letter and project fact sheet are mailed to the child’s current caregiver. Versions of the lead letters and project fact sheets are provided in Appendix F. Each version is intended for a specific respondent type. Specifically:

Biological, foster, or adoptive parents, as well as kin caregivers, receive the Caregiver version of the lead letter and fact sheet.

Legal guardians of the child who are not the child’s current caregiver receive the Legal Guardian version of the lead letter and fact sheet. This version is designed for non-custodial parents, judges, agency personnel, etc.

All advance letters and fact sheets emphasize the importance of the study, ACF’s sponsorship, the protections that private interview data will receive, and the fact that participation in the study provides each family the opportunity to share their experiences with the CWS.

Selection of the Adult Caregiver. The NSCAW field representative will attempt to interview the adult caregiver in the household who knows the sampled child best and who can accurately answer as many of the questions as possible. Specifically, for children who remain in the custody of their parent(s) and for whom there is more than one caregiver in the household, we will ask to interview the adult “most knowledgeable” about the child who has co-resided with the child for two months or more. If there are multiple possible respondents who are most knowledgeable and meet the co-residency requirement, the hierarchy of parent-child relationships employed on both NSCAW I and NSCAW II will be used. This selection hierarchy typically results in the selection of the mother or mother figure in the child’s living environment. In situations in which the child is in out-of-home placement, such as foster care or kin care, we will seek to identify the adult in the household who is “most knowledgeable” about the child and has been co-resident with the child for at least two months. Less frequently, sampled children are found to be residing in group homes or residential treatment centers. These cases will be carefully examined to identify the most knowledgeable adult respondent for the sampled child.

Informed Consent Procedures. The child’s current caregiver will be asked to consent to study participation for both her/himself and the selected minor child. Field representatives will be carefully trained to confirm with the caseworker that the adult respondent chosen has legal guardianship and the legal right to consent to the child’s participation. If the chosen adult respondent does not have guardianship rights, the field representative will identify and contact the person who does have the authority to consent for the child. In some sites the agency will have guardianship for out-of-home placement children; in other sites the family court or juvenile court may hold guardianship. The field representative will contact the guardian, explain the study and the child’s selection, and seek permission to interview the child. Appendix G contains the Caregiver and Legal Guardian consent and permission for child interview forms to be administered on NSCAW III. Field representatives will read each form aloud to the respondent and also leave a copy for them to keep.

Caregiver Interview Content. The Caregiver interview is focused on the child’s health and functioning, the caregiver’s health and functioning, services received by the child and the family, the family environment, and experiences with the child welfare system. The interview is expected to average approximately 100 minutes. As noted in Supporting Statement A, Caregiver interview modules and items are provided in Appendix C.

Child Interview and Assessments

Informed Consent and Assent Procedures. As noted above, signed informed consent will be obtained from the legal guardian of each sampled child before approaching that child for participation in the study. NSCAW I pretest findings indicated that children younger than 7 years of age do not adequately comprehend some of the fundamental concepts necessary to meaningfully process informed assent information. However, children ages 7 and older will be asked to provide their assent to participate. Appendix G contains the Assent Forms for Youth ages 7 to 10 and ages 11 to 17, respectively.

The field representative will read the assent form aloud in order to introduce the child to the study, assure the child that what they tell us will be kept private to the extent permitted by law (with the exceptions surrounding expressed suicidal intent and suspected maltreatment), and to provide the child with an understanding of the voluntary nature of participation and their right to refuse to answer any question we ask of them. To assess the child’s comprehension of the assent form, we will use the teach-back method (Isles, 2013) in our assent process to confirm whether or not the child understands what is being explained to them. After reading the assent form to the child, the field representative will follow a script and ask the child to respond to some follow-up questions using their own words to check for their understanding of the material and, if needed, to re-explain what is included in the form. If the child is unable to demonstrate comprehension after three attempts, the field representative will discontinue the interview.

Sampled children who are confirmed to be legally emancipated per the legal requirements of their state of residence will be approached for an interview (see Appendix F for the Emancipated Youth version of the lead letter and fact sheet) and will provide their own consent for interview. If the emancipated youth was living with a caregiver in the 3 months prior to the interview, the field representative will attempt to collect the youth’s permission to contact the prior caregiver to request an interview. See Appendix G for the Emancipated Youth and Caregiver of Emancipated Youth consent forms.

At the 18-month follow-up, a subset of children in the NSCAW III cohort will be 18 years of age or older. These young adults will be contacted directly during the 18-month follow-up (see Appendix F for the Young Adult version of the lead letter and fact sheet) and will consent for their own interview (see Appendix G for the Young Adult version of the consent form). A prior caregiver interview will not be attempted.

Child Interview Content. Where possible, the field representative will schedule the sampled child to be interviewed during the same visit as the adult caregiver. Based on prior NSCAW experience, this is possible in approximately 70% of cases.

The interview protocol varies considerably depending on the age of the child. Younger children are assessed primarily through physical development measures (length, weight, and head circumference) and standardized child assessments focused on developmental and cognitive status; children ages 5 and older are asked to self-report on their experiences at school and with peers. The interview protocol for children ages 11 and older includes more sensitive questions administered using Audio Computer-Assisted Self-Interview (ACASI) software. The ACASI sections include questions on substance abuse, sexual activity, delinquency, injuries, and maltreatment. Interviews with children will average 60 to 90 minutes, depending on the child’s age and experiences. Interviews with emancipated youth and young adults will average approximately 100 minutes. As noted in Supporting Statement A, child interview modules and items are provided in Appendix B.

Caseworker Interview

Trained field representatives will obtain the name and contact information for the caseworker assigned to the child or family from the agency liaison. Appendix F contains the Caseworker versions of the lead letter and fact sheet, which will be sent before the field representative contacts the caseworker by phone to schedule the interview. At baseline, the child’s investigative or foster care caseworker will be contacted. At the 18-month follow-up, the child’s services caseworker will be contacted.

At the 18-month follow-up, some caseworkers providing services to children will be “new” to the study. These are likely to be caseworkers in agencies subcontracted by the sampled agency to provide services. These caseworkers will receive the New Agency Caseworker version of the lead letter and fact sheet (also provided in Appendix F).

Informed Consent Procedures. To maximize convenience for the caseworkers and to safeguard private case record data, caseworker interviews will be conducted at agency offices. Before conducting the interviews, field representatives will read aloud and administer the Caseworker version of the consent form (see Appendix G).

Caseworker Interview Content. The baseline caseworker interview focuses on the circumstances surrounding the maltreatment investigation or other event that brought the child into the agency’s custody. The interview also assesses various risk factors that were present at the time of the event that triggered the child/family entering CWS, any history of prior CWS involvement, plans for reunification for children placed out of home, and any referrals made or services received by the child or family. The baseline caseworker interview will average approximately 45 minutes.

An 18-month follow-up services caseworker interview will be conducted if the child is in out-of-home care or if the child or family has received CWS services since the baseline interview. The follow-up interview collects information about the child’s and caregiver’s need for and receipt of services, any additional reports of maltreatment or other CWS involvement, child placement and adoption, and court visits. It also collects information on caseworker characteristics and on the organizational climate within the caseworker’s agency. The follow-up services caseworker interview takes approximately 60 minutes to administer.

As noted in Supporting Statement A, caseworker interview modules and items are provided in Appendix D.

Collection of Administrative Data

Federal agencies are encouraged to leverage existing administrative data to increase the utility of their research in a cost-efficient manner (OMB, 2014). To enhance the richness and usefulness of NSCAW, we plan to link the NSCAW interview and assessment data to administrative data from the following sources:

National Child Abuse and Neglect Data System (NCANDS) data on maltreatment reports

Adoption and Foster Care Analysis and Reporting System (AFCARS) data on adoptions and placements

National Directory of New Hires (NDNH) data on wages and unemployment insurance

Social Security Administration (SSA) quarterly earnings and disability benefits (SSI) data

Medicaid Analytic eXtract (MAX) Medicaid claims data

NCANDS and AFCARS data files will be provided by the participating child welfare agencies recruited during Phase I of the study approved by OMB in November 2016 (OMB # 0970-0202, Expiration Date: 11/30/2019). Agencies will provide a crosswalk of encrypted and unencrypted identification numbers to allow the project team to identify participating children and to link their administrative data to their survey data.

In Phase II of the project, NCANDS and AFCARS data, as well as administrative data from other sources, will be linked to interview and assessment data. Data from the NDNH’s national repository of wage and employment information will be requested from the Office of Child Support Enforcement (OCSE), an office within ACF. Master Earnings Files containing quarterly wage and self-employment earnings, and SSI files containing disability benefits data, will be requested from SSA. Finally, the MAX files containing Medicaid claims data will be requested from the Research Data Assistance Center (ResDAC), the organization that manages the MAX files for the Centers for Medicare & Medicaid Services (CMS).

During the Phase II baseline and 18-month follow-up interviews, caregivers, legal guardians, and children 13 years and older will be asked to sign a form consenting to have their survey data linked to administrative data sources (see Data Linkage forms in Appendix H). For minor children who are not emancipated, consent from both the caregiver or legal guardian and the child must be obtained before their survey data can be linked to administrative data. If either declines, neither the caregiver’s nor the child’s survey data will be linked to other data sources.
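The consent rule above interacts with the agency crosswalk described earlier: a child’s NCANDS or AFCARS records can be pulled only when linkage is permitted and the child appears in the crosswalk. A minimal sketch, covering only non-emancipated minors whose caregiver or legal guardian and child were both asked to sign; the record layout, field names, and the function `link_child_admin` are hypothetical and do not describe the project’s actual linkage procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dyad:
    """One caregiver/child pair (hypothetical record layout)."""
    child_agency_id: str       # unencrypted ID as known to the child welfare agency
    caregiver_consents: bool   # caregiver or legal guardian signed the linkage form
    child_consents: bool       # child (13 or older) signed the linkage form


def link_child_admin(dyads, crosswalk, admin_by_encrypted_id):
    """Return {child_agency_id: [admin records]} for dyads where linkage is permitted.

    crosswalk: agency-supplied map from unencrypted to encrypted child ID.
    admin_by_encrypted_id: NCANDS/AFCARS-style records keyed by encrypted ID.
    """
    linked = {}
    for d in dyads:
        # Both-or-neither rule: if either person declines, neither the
        # caregiver's nor the child's survey data are linked.
        if not (d.caregiver_consents and d.child_consents):
            continue
        encrypted_id = crosswalk.get(d.child_agency_id)
        if encrypted_id is None:
            continue  # child not in the crosswalk, so there is nothing to link
        linked[d.child_agency_id] = admin_by_encrypted_id.get(encrypted_id, [])
    return linked
```

For example, a dyad in which the child consents but the caregiver declines is skipped entirely, even if the child appears in the crosswalk.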

A separate linkage form will be used to request that the minor child’s survey data be linked to their Medicaid claims data (see HIPAA Authorization forms in Appendix I). If the current caregiver is not the child’s legal guardian, the legal guardian will be asked to sign a separate authorization form to link the child’s survey data to Medicaid data.

All Personally Identifiable Information (PII) from the administrative data sources will be protected by storing the data files in RTI’s Enhanced Security Network (ESN), a NIST-Moderate environment. The project will obtain all necessary IRB approvals and data use agreements (DUAs) before conducting the data linkage.

B3. Methods to Maximize Response Rates and Deal with Nonresponse

B3.1 Expected Response Rates

The expected response rate of 80 percent for key respondents is discussed in Section B1.5. This response rate is based on the use of several response rate enhancement features described in Section B3.3.

B3.2 Dealing with Nonresponse

Sampling weights are used to produce approximately unbiased estimates by accounting for unequal selection probabilities at the various sampling stages and by applying nonresponse, poststratification, and extreme-weight adjustments that reduce the bias and variance of estimates. The sampling weights will be derived from each stage of sampling and calculated as the inverse of the probability of selection of the unit of observation at each stage. These weights, referred to as design-based weights, will be calculated when the samples are selected. After data collection, we will adjust the design-based weights for nonresponse, for under- and over-coverage of certain demographic groups, and for extreme weights, resulting in fully adjusted analysis weights. These adjustments are intended to minimize nonresponse bias and the variance of estimates.
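The design-based weight for a case is the product of the inverse selection probabilities from each stage (components 1 through 4 of Exhibit B3.1). A minimal sketch; it treats component 3 (the family rejection/list method adjustment) as one more probability-like multiplier, which is an assumption, and the probabilities in the usage example are made up for illustration.

```python
from math import prod


def design_weight(stage_probs):
    """Design-based weight: product of inverse selection probabilities.

    stage_probs holds, in order: P(PSU selected at Stage 1), P(child selected
    at Stage 2 within the PSU), a screening factor for component 3 (treated
    here as a probability-like multiplier; an assumption), and P(selected
    into the nonresponse follow-up subsample), which is 1.0 if not applicable.
    """
    for p in stage_probs:
        if not 0.0 < p <= 1.0:
            raise ValueError("selection probabilities must lie in (0, 1]")
    return prod(1.0 / p for p in stage_probs)
```

With a PSU selection probability of 0.25, a within-PSU child selection probability of 0.02, no screening adjustment (1.0), and a follow-up subsampling probability of 0.5, `design_weight([0.25, 0.02, 1.0, 0.5])` gives 4 × 50 × 1 × 2 = 400, up to floating-point rounding.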

We will create a sample weight for every key respondent that reflects the varying probabilities of selection, and we will use RTI’s generalized exponential model (GEM) to adjust for unit nonresponse, coverage error, and extreme weights. The weight will account for the purposive oversampling of the nine domains as well as for bias that can be introduced by nonresponse. We used GEM to create the sample weight adjustments for both NSCAW I and II, so the NSCAW III weighting approach will be consistent with that used for the two earlier cohorts.
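GEM itself is a model-based calibration procedure and is not reproduced here. As a much simpler stand-in that shows what a nonresponse adjustment does, the sketch below uses the classic weighting-class adjustment: within each adjustment class, respondents’ weights are scaled up so that they also carry the weight of the class’s nonrespondents. The class labels and record layout are hypothetical.

```python
from collections import defaultdict


def weighting_class_adjustment(cases):
    """Weighting-class nonresponse adjustment (a simple stand-in, not GEM).

    cases: list of dicts with keys 'cls' (adjustment class), 'weight'
    (design-based weight), and 'responded' (bool).
    Returns adjusted weights in the same order; nonrespondents get 0.0.
    """
    class_total = defaultdict(float)  # sum of weights, all eligible cases
    class_resp = defaultdict(float)   # sum of weights, respondents only
    for c in cases:
        class_total[c["cls"]] += c["weight"]
        if c["responded"]:
            class_resp[c["cls"]] += c["weight"]

    adjusted = []
    for c in cases:
        if c["responded"]:
            # Respondents absorb the weight of nonrespondents in their class.
            factor = class_total[c["cls"]] / class_resp[c["cls"]]
            adjusted.append(c["weight"] * factor)
        else:
            adjusted.append(0.0)
    return adjusted
```

The weight total within each class is preserved. A class with no respondents would lose its weight, so in practice such classes are collapsed with similar classes before adjusting.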

Exhibit B3.1 lists the eight weight components for NSCAW data. The first four are design-based components, and the last four are adjustment factors. To limit weight variation and thus increase estimation precision, GEM constrains the weights during the nonresponse and poststratification adjustments to stay within statistician-specified bounds. This form of weight trimming controls bias and weight variation simultaneously. To check the quality of the adjustments and uncover any unusual effect of an adjustment on the initial weights, we will compare the following statistics before and after each adjustment: weight sums by subdomain, to check for failure to match control totals (slippage); proportions of extreme weights; unequal weighting effects (UWEs); distributions of the weights; and ratios of maximum weight to mean weight.
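Several of the before-and-after checks listed above can be computed from a single vector of weights. A minimal sketch: the weight sum and its slippage from a control total, the share of weights above a cutoff, the Kish unequal weighting effect (UWE = 1 + CV², equivalently n·Σw²/(Σw)²), and the ratio of maximum to mean weight. The extreme-weight cutoff is a hypothetical parameter, since the document does not state how extreme weights are defined.

```python
def weight_diagnostics(weights, control_total=None, extreme_cutoff=None):
    """Summary statistics for comparing weights before and after an adjustment."""
    n = len(weights)
    if n == 0:
        raise ValueError("need at least one weight")
    total = sum(weights)
    mean = total / n
    stats = {
        "sum": total,
        # Kish unequal weighting effect: 1 + CV^2 = n * sum(w^2) / (sum w)^2
        "uwe": n * sum(w * w for w in weights) / total ** 2,
        "max_to_mean": max(weights) / mean,
    }
    if control_total is not None:
        # Slippage: how far the weight sum misses its control total.
        stats["slippage"] = total - control_total
    if extreme_cutoff is not None:
        # Hypothetical definition: share of weights above a fixed cutoff.
        stats["share_extreme"] = sum(w > extreme_cutoff for w in weights) / n
    return stats
```

Running it on the same subdomain’s weights before and after an adjustment and comparing the two dictionaries gives a quick check for slippage or an unexpected jump in the UWE.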

Exhibit B3.1. Sample Weight Components

| Weight Component | Purpose |
| Design-Based Weights | |
| 1. inverse probability of selecting PSU | reflects selection made at Stage 1 |
| 2. inverse probability of selecting child | reflects selection made at Stage 2 |
| 3. selection probability adjustment for family rejection/list method | reflects screening |
| 4. inverse probability of selecting a household for nonresponse follow-up | reflects a subsample of nonrespondents selected for intensive efforts to obtain a completed interview |
| Adjustment Factors | |
| 5. household-level nonresponse adjustment | reduces bias; modeled using RTI’s GEM |
| 6. person-level nonresponse adjustment | reduces bias; modeled using RTI’s GEM |
| 7. person-level poststratification adjustment | reduces variance and bias; modeled using RTI’s GEM; forces the sum of the respondents’ weights to equal current population estimates for the civilian noninstitutionalized population aged 15 to 44 in the United States |
| 8. person-level extreme weight adjustment | reduces variance; modeled using RTI’s GEM |

As noted in the Analysis Plan (Section 16.1 of Supporting Statement A), a set of special “calibration weights” will also be developed for NSCAW III. Since NSCAW I and II were implemented on overlapping but not identical PSUs, new methods for calibrating sampling weights were developed to ensure cross-cohort comparisons could be made (Biemer, 2012; Biemer and Wheeless, 2011, 2013; Kott, 2012; Kott and Liao, 2012; RTI, 2012). These weight adjustments compensate for the differences in population coverage between the two cohorts.
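Poststratification (component 7 in Exhibit B3.1) is the simplest special case of calibration: within each cell, weights are scaled so that they sum to an external control total. A minimal sketch of plain cell-based poststratification, not GEM and not the NSCAW cross-cohort calibration methods cited above; the cell labels and control totals are hypothetical.

```python
from collections import defaultdict


def poststratify(weights, cells, control_totals):
    """Scale weights so each cell's weights sum to its control total.

    weights: respondent weights; cells: the cell label for each weight;
    control_totals: {cell: population total}. A cell without a control
    total raises KeyError; cells with no respondents must be collapsed first.
    """
    cell_sums = defaultdict(float)
    for w, c in zip(weights, cells):
        cell_sums[c] += w
    return [w * control_totals[c] / cell_sums[c] for w, c in zip(weights, cells)]
```

For example, `poststratify([10.0, 30.0, 20.0], ["a", "a", "b"], {"a": 80.0, "b": 30.0})` doubles the weights in cell "a" and multiplies the weight in cell "b" by 1.5, returning `[20.0, 60.0, 30.0]`.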

As described in Section A9 of Supporting Statement A, we also plan to offer tokens of appreciation to child and caregiver respondents to reduce nonresponse. Child and adolescent respondents will be offered gifts of appreciation appropriate to their developmental level. For caregiver respondents, we propose to test differential gifts of appreciation and to track their effectiveness using a responsive design framework.

B3.3 Maximizing Response Rates

NSCAW III’s ability to gain the cooperation of potential respondents and maintain their participation through the 18-month follow-up is key to the success of this endeavor. A respondent’s willingness to participate, both initially and long-term, is affected by a combination of circumstances surrounding the nature of each selected case. It is a challenge to achieve an 80 percent baseline response rate with this target population because often NSCAW families are recruited for study participation during a time of crisis.

For NSCAW III, we propose dividing the Phase II data collection into two stages: an initial stage that uses standard fieldwork data collection procedures and a second stage that uses extra efforts to locate and complete interviews with respondents. For the second stage, a subsample of cases not yet reached or completed will be selected for more intensive data collection. The subsample will be selected using propensity models that identify which cases are most likely to respond and which cases would most improve data quality if completed. These cases will be assigned to our most successful interviewers, who will try to locate the respondents and gain their cooperation. Interviewers will have extra tools, such as additional labor hours to work the case, letters of endorsement, and a slightly higher gift of appreciation for caregiver respondents. More information about our differential incentive approach can be found in Section A9 of Supporting Statement A.
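Weight component 4 in Exhibit B3.1 (inverse probability of selecting a household for nonresponse follow-up) requires the follow-up subsample to be drawn with known selection probabilities. A minimal sketch, assuming Poisson sampling with case-specific probabilities that could, for example, be set from response-propensity scores (the propensity model itself is not shown): each pending case is selected independently with probability p and, if selected, receives a weight factor of 1/p. The function and parameter names are hypothetical.

```python
import random


def nrfu_subsample(case_ids, selection_prob, seed=None):
    """Poisson-sample pending cases for intensive nonresponse follow-up.

    selection_prob maps case ID -> probability of selection, 0 < p <= 1
    (for example, derived from a response-propensity model; not shown).
    Returns {case_id: weight factor 1/p} for the selected cases only.
    """
    rng = random.Random(seed)
    factors = {}
    for case_id in case_ids:
        p = selection_prob[case_id]
        if not 0.0 < p <= 1.0:
            raise ValueError(f"selection probability for {case_id} must be in (0, 1]")
        # Independent Bernoulli draw; selected cases represent unselected ones.
        if rng.random() < p:
            factors[case_id] = 1.0 / p
    return factors
```

Because each selected case carries 1/p, the follow-up subsample still represents all pending cases in expectation; cases given p = 1.0 are always followed up with a factor of 1.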

In addition, we will experiment with an approach referred to as “matched case substitution,” which may further reduce nonresponse bias and is theoretically superior to relying solely on weighting adjustments for bias reduction. Matched case substitution identifies a child on the within-agency sampling frame who closely matches a nonresponding child. The matching child is then interviewed, and this child’s data are substituted for the nonresponding child’s data in the analysis file. The costs and bias-reduction effectiveness of this method will be evaluated in the early months of NSCAW III data collection. This evaluation will determine the extent to which acceptable matches can be found on the sampling frame, as well as the cost of fielding additional cases as substitutes for nonrespondents. Following this evaluation, a determination will be made as to whether matched case substitution offers a viable and cost-effective approach to bias reduction.
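The document does not specify the matching variables or distance measure used for matched case substitution. The sketch below assumes a simple nearest-neighbor rule within the same agency: an exact match on a hypothetical out-of-home placement flag, then the closest age within a tolerance. Returning `None` models the case in which no acceptable match exists on the frame, which is one of the outcomes the evaluation is meant to measure. All field names and the tolerance are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class FrameRecord:
    """One child on an agency sampling frame (hypothetical matching variables)."""
    child_id: str
    agency: str
    age: int
    out_of_home: bool


def find_substitute(
    nonrespondent: FrameRecord,
    frame: Iterable[FrameRecord],
    excluded_ids: set[str],
    max_age_gap: int = 1,
) -> Optional[FrameRecord]:
    """Return the closest acceptable unsampled match, or None if none exists.

    excluded_ids should hold every child already sampled or used as a substitute.
    """
    candidates = [
        r for r in frame
        if r.agency == nonrespondent.agency              # same agency frame
        and r.out_of_home == nonrespondent.out_of_home   # exact match on placement
        and r.child_id != nonrespondent.child_id
        and r.child_id not in excluded_ids
        and abs(r.age - nonrespondent.age) <= max_age_gap
    ]
    if not candidates:
        return None
    # Smallest age difference wins; child_id breaks ties deterministically.
    return min(candidates, key=lambda r: (abs(r.age - nonrespondent.age), r.child_id))
```

Each accepted substitute’s ID would then be added to `excluded_ids` so that the same frame record is not used twice.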

B4. Tests of Procedures or Methods to be Undertaken

No pretests will be conducted. Most of the methods and procedures for the child, caregiver, and caseworker interviews and assessments have been successfully administered in NSCAW I and II.

B5. Individual(s) Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

RTI International and its subcontractors (University of North Carolina at Chapel Hill, Native American Management Services, Inc., and HR Directions, LLC) are conducting this project under contract number HHSP233201500039. RTI International developed the plans for statistical analyses for this study. The team is led by the following individuals:

Mary Bruce Webb, ACF, Contracting Officer’s Representative

Christine Fortunato, ACF, Co-Contracting Officer’s Representative

Melissa Dolan, RTI International, Project Director

Heather Ringeisen, RTI International, Co-Investigator

Paul Biemer, RTI International, Co-Investigator

Mark Testa, University of North Carolina at Chapel Hill, Co-Investigator

In addition, statistical consulting for the sampling plan was provided by M. A. Hidiroglou, PhD, from Statistics Canada in Ottawa, Ontario.

References

Biemer, P. P. (2007). “NSCAW II Design Methodology and Recommendations,” internal RTI International design report, May 4, 2007.

Biemer, P. (2012). Weighting the NSCAW sample for comparisons between NSCAW I and II, internal RTI memorandum.

Biemer, P. and Wheeless, S. (2011). Analysis of the coverage bias for the NSCAW I and NSCAW II comparison weights, internal RTI memorandum.

Biemer, P. and Wheeless, S. (2013). Comparing NSCAW I and NSCAW II estimates using children in the calibrated weights, internal RTI International memorandum.

Ernst, L.R. (1995). Maximizing and minimizing overlap of ultimate sampling units. In JSM Proceedings, Survey Research Methods Section. Alexandria, VA: American Statistical Association. 706-711.

Folsom, R.E., Potter, F.J., and Williams, S.R. (1987). Notes on a composite size measure for self-weighting samples in multiple domains. In Proceedings of the American Statistical Association, Section on Survey Research Methods, 792-796.

Isles, A.F. (2013). Understood Consent Versus Informed Consent: A New Paradigm for Obtaining Consent for Pediatric Research Studies. Frontiers in Pediatrics, 1:38.

Kott, P. (2012). Some thoughts on comparing estimates from NSCAW I and NSCAW II, internal RTI memorandum.

Kott, P. and Liao, D. (2012). Providing double protection for unit nonresponse with a nonlinear calibration-weighting routine. Survey Research Methods, 6(2), 105-111.

U.S. Office of Management and Budget (1999). National Survey of Child and Adolescent Well-Being (NSCAW). OMB Information Collection Request. OMB Control No: 0970-0202, ICR Reference No: 199906-0970-002, Conclusion Date: 8/18/1999.

U.S. Office of Management and Budget (2008). National Survey of Child and Adolescent Well-Being. OMB Information Collection Request. OMB Control No: 0970-0202, ICR Reference No: 200803-0970-002, Conclusion Date: 3/4/2008.

U.S. Office of Management and Budget (2014). Guidance for providing and using administrative data for statistical purposes. (Publication No. M-14-06). Retrieved from https://www.whitehouse.gov/sites/default/files/omb/memoranda/2014/m-14-06.pdf.

Research Triangle Institute (2012). SUDAAN Language Manual, Volumes 1 and 2, Release 11. Research Triangle Park, NC: Research Triangle Institute.

Research Triangle Institute (2013). National Survey of Child and Adolescent Well-Being 2nd Cohort (NSCAW II) Data File Users Manual. Washington, DC: Administration for Children and Families.

1 The NSCAW II OMB package contains additional detail about the previous sample designs and can be found here: http://www.reginfo.gov/public/do/PRAViewDocument?ref_nbr=200803-0970-002

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Sroka, Christopher |

| File Modified | 0000-00-00 |

| File Created | 2021-01-15 |
