Unemployment Insurance Data Validation Handbook

Unemployment Insurance Data Validation (DV) Program

OMB: 1205-0431

UNEMPLOYMENT INSURANCE

DATA VALIDATION HANDBOOK

Tax

OFFICE OF UNEMPLOYMENT INSURANCE

DEPARTMENT OF LABOR

August 2019

OMB No: 1205-0431

OMB Expiration Date: August 31, 2019

Estimated Average Response Time: 730 hours.

OMB Approval. The reporting requirements for ETA Handbook 361 are approved by OMB according to the Paperwork Reduction Act of 1995 under OMB No. 1205-0431 to expire August 31, 2019. The respondents' obligation to comply with the reporting requirements is required to obtain or retain benefits (Section 303(a)(6), SSA). Persons are not required to respond to this collection of information unless it displays a currently valid OMB control number.

Burden Disclosure. SWA response time for this collection of information is estimated to average 730 hours per response (this is the average of a full validation every third year with an estimated burden of 900 hours, and partial validations in the two intervening years), including the time for reviewing instructions, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden to the U. S. Department of Labor, Employment and Training Administration, Office of Workforce Security (Attn: Rachel Beistel), 200 Constitution Avenue, NW, Room S-4519, Washington, D.C. 20210 (Paperwork Reduction Project 1205-0431).

TABLE OF CONTENTS

B. Data Errors Identified Through Validation

C. Data Sources for Federal Reporting and Validation

D. Basic Validation Approach

E. Reconstructing Federal Report Items

G. Walkthrough of Data Validation Methodology

B. Report Quarter Terminology

D. Population Table Specifications

INTRODUCTION

A. Objectives

States regularly report to the U.S. Department of Labor (DOL) under the Unemployment Insurance Required Reports (UIRR) system. In particular, states document their performance in collecting UI employer contributions (taxes) and employer reports on the Employment and Training Administration (ETA) 581 report entitled “Contribution Operations.” (Figure A)

Data from the ETA 581 report are used for three critical purposes: (1) allocation of UI administrative funding based on state workload, (2) performance measurement to ensure the quality of state UI program operations, and (3) calculation of state and national economic statistics. Table A summarizes the types and uses of the data. Figure A displays the ETA 581 report.

Table A Types and Uses of ETA 581 Data

| Data Type | Funding/Workload | Performance/Tax Performance System (TPS) Computed Measures | Economic Statistics |
|---|---|---|---|
| Active Employers | | | |
| Report Filing | | | |
| Status Determinations | | | |
| Accounts Receivable | | | |
| Field Audits | | | |
| Wage Items | | | |

Because ETA 581 data have these critical uses, it is essential that states report their activities accurately and uniformly. Data validation measures the accuracy of state reporting on employer contribution activities. Two principles underlie a comprehensive data validation process:

• If data are collected, they should be valid and usable.

• Given the high degree of automation of UI systems, it is feasible and cost-effective to validate most report cells.

Figure A

Form ETA 581

States conduct the validation themselves and report the results to ETA. This handbook provides detailed validation instructions for each state. ETA also provides states with a Sun-based data validation software application (referred to as the Sun-based system in UIPL 22-05) to use in conducting the validation.

Validation is administered using a “validation year” that coincides with the State Quality Service Plan (SQSP) performance year. It comprises all reports for the four quarters beginning April 1 and ending March 31. Once validity is established by a passing validation, states are required to validate reported data every third year, except for data elements used to calculate the Government Performance and Results Act (GPRA) measures. GPRA data are validated annually. The SQSP is the vehicle through which states submit plans to implement validation or to revalidate failed items.

If states modernize their UI tax data management system, partially, completely, or in any way that impacts reporting and therefore data validation, states must validate every benefits population the following year once the new system is deployed or goes live. This requirement includes populations with passing scores that were previously valid for three years and not yet due for validation. Similarly, if a state does not submit the underlying benchmark populations (Benefits Populations 5 and 8; Tax Populations 3 and 5) to complete Module 4 within the three-year validation cycle, the scores will not be considered valid until these populations are transmitted. If a state revises an ETA UI Required Report for the same time period it used for data validation, the data validation score will not be considered valid until the state resubmits the results using the revised report counts.

B. Data Errors Identified Through Validation

Systematic errors and random errors are the two major types of data error in federal UIRR reports. Systematic errors involve faulty design or execution of reporting programs. Random errors involve judgment and input errors. Reporting system errors are always systematic, while errors stemming from human judgment can be either systematic or random. Both systematic and random errors must be addressed in the validation design.

• Systematic errors are addressed through validation of the reporting programs that states use to create federal reports. Systematic errors tend to be constant and fall into one of three categories: 1) too many transactions (overcounts), 2) too few transactions (undercounts), or 3) misclassified transactions. Systematic human errors occur when staff are using incorrect definitions or procedures. For example, a reporting unit may establish its own definition for a data element that conflicts with the federal definition (this can happen deliberately or inadvertently). Systematic errors are the most serious because they occur repeatedly. They are also the easiest to detect and correct. Systematic errors do not need to be assessed very frequently, and each system error only needs to be corrected once. A one-time adjustment in a retrieval code or calculation specification, or staff retraining on a corrected definition or procedure, will usually correct systematic errors.

• Random errors are more variable. They include problems such as input errors or judgment errors such as misunderstanding or misapplying Federal definitions. In general, random errors occur intermittently. For example, a few data entry errors may occur even when most information is entered correctly. Correcting one error does not ensure that similar errors will not occur in the future.

Consistent and accurate reporting requires both good practice and accurate systems for reporting the data. Data validation and Tax Performance System (TPS) reviews together test whether data are accurately posted to the state employer contributions system and reported correctly on the ETA 581.

C. Data Sources for Federal Reporting and Validation

States use different methods to prepare the ETA 581 report. Some states produce the Federal reports directly from the employer contributions database: computer programs scan the entire database to select, classify, and count transactions. Other states produce a database extract or statistical file as transactions are processed, essentially keeping a running count of items to be tabulated for the report. Still other states use a combination of these methods. The basic approach to data validation is the same no matter how the report is developed: using standard national criteria, states reconstruct the report counts using only transactions that should have been reported, and then compare what they reported to this reconstructed “validation” count.

The validation methodology is flexible in accommodating the different approaches used by states. However, validation is most effective when validation data are produced directly from the employer contributions database. For cost reasons and to minimize changes in data over time, some states prefer to use daily, weekly, or monthly statistical extract files instead. When extract files are used, other types of system errors may occur. Reportable transactions may be improperly excluded from the employer master file. Furthermore, the statistical file may contain corrupt data. Because the statistical file is not used as part of the daily tax system, errors are not likely to be detected and corrected through routine agency business.

The only way to test for these problems is to independently reconstruct or query the employer master file. States that produce validation data from the same statistical extract files used to produce the ETA 581 instead of directly from the database must ensure that the extract files contain all the appropriate employer transactions and statuses. The way to do this is to recreate the logic used to produce the ETA 581. This handbook includes a validation tool, “independent count validation,” specifically for this purpose.1 See Appendix B.

Table B outlines variations in the validation methodology, based on typical state approaches to ETA 581 reporting and data validation reconstruction. To determine the specific validation methodology to be implemented, the state validator or federal representative should identify the state’s ETA 581 report source and validation reconstruction source for each population to be validated.

D. Basic Validation Approach

The basic approach used in data validation is to reconstruct the numbers that should have been reported on the ETA 581. Because state UI records are highly automated, states can develop computer programs that extract from electronic databases all transactions or statuses that they believe should have been counted on the report. Each extracted transaction or status is compiled as a record containing information on every dimension needed to classify it properly for reporting purposes. Automation reduces the burden on validators and state information systems (IS) staffs to extract records from state files, assemble those records for analysis, and assess validation results.

Once transactions and statuses are extracted, they are subjected to a series of quality tests. The DV software contains logic rules to ensure that the classifying elements in each record have values consistent with classification into report cells. The validator examines samples of the records to assess whether states have used the most definitive source of information and have adhered to Federal definitions. After it is determined that the extract data meet the quality tests, the data are used to produce “validation counts” that are compared to what the state has reported. If reported counts are within the appropriate tolerance (usually ± 2%) of the validation counts, the reporting system is judged valid.

States conduct validation using standardized web-based software that runs on DOL Sun computers in state UI offices. Results are transmitted to the same UI database used for UI required reports, from which they are extracted to monitor DV program compliance.

Table B Variations in Validation Methodologies Based on State Approaches to Reporting and Reconstruction

| Scenario | Transactions Overwritten on Database | ETA 581 Program Type | ETA 581 Source | ETA 581 Timing | Data Validation Program Type | Data Validation Source | Data Validation Timing | Independent Count Required | Source Documentation Review Required | Comments |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | No | Count | Database | Snapshot | DRE | Database | Snapshot | No | No | Best scenario because comparing snapshots eliminates timing discrepancies |
| 2 | No | Count | Statistical file | Daily | DRE | Database | Snapshot | No | No | Database is only reconstruction source. There could be changes in transaction characteristics (but will find all transactions). |
| 3 | No | DRE | Database | Snapshot | DRE | Database | Snapshot | Yes | No | Reporting and validation are the same program. Independent count may mirror that program. |
| 4 | No | DRE | Statistical file | Daily | DRE | Statistical file | Daily | Yes | Yes | Since transactions are not overwritten, states should be able to do Scenario 2 instead. |
| 5 | Yes | DRE | Statistical file | Daily | DRE | Statistical file | Daily | NA | NA | No alternative validation source. Cannot reconstruct from the database. Not thorough validation. |
| 6 | Yes | Count | Statistical file | Daily | Must create a daily extract | NA | NA | NA | NA | Cannot reconstruct from database. Must change reporting process to Scenario 5. |

NOTE: Snapshot is of the last day of the reporting period.

DRE = Detail Record Extract

NA = Not Available

Data validation provides a reconstruction or audit trail to support the counts and classifications of transactions that were submitted on the ETA 581 report. Through this audit trail, the state proves that its UIRR data have been correctly counted and reported. For example, if a state reports 5,000 active reimbursable employers at the end of the quarter, then the state must be able to create a file listing all 5,000 employers as well as relevant characteristics such as the Employer Account Number (EAN), employer type, date the liability threshold was met, number of liable quarters, and wages in each of those liable quarters. Analysis of these characteristics can assure validators that the file contains 5,000 reimbursable employers correctly classified as active, and that the reported number is valid.

E. Reconstructing Federal Report Items

There are 37 ETA 581 report items to validate.2 A single employer account transaction or status may be counted in several different ETA 581 report items. For example, a contributions report that is filed on time is counted in two items for the current report quarter (timely reports and secured reports) and in one item in the following report quarter (resolved reports).

A general principle of the validation design is to streamline the validation process as much as possible. Transactions and statuses are analyzed only once, even if they appear in multiple items. The streamlining is accomplished by classifying them into mutually exclusive groups, which match to one or more items on the federal report. Specifically, tax validation identifies five types of employer transactions or statuses (called populations), which are further divided into 46 mutually exclusive groups (subpopulations). All validation counts are built from these subpopulations. The five populations are: (1) Active Employers, (2) Report Filing, (3) Status Determinations, (4) Accounts Receivable, and (5) Field Audits.
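The roll-up from mutually exclusive subpopulations to report items can be sketched as follows. This is a minimal illustration only: the subpopulation labels and the mapping to report items are hypothetical stand-ins, and the actual definitions are the ones given in Appendix A.

```python
from collections import Counter

# Hypothetical mapping, for illustration only: each subpopulation feeds one
# or more report items. The real subpopulation definitions are in Appendix A.
SUBPOP_TO_ITEMS = {
    "timely": ["timely_reports", "secured_reports"],
    "secured_not_timely": ["secured_reports"],
}


def validation_counts(record_subpops):
    """Count each record once by subpopulation, then roll up to report items."""
    subpop_counts = Counter(record_subpops)
    item_counts = Counter()
    for subpop, n in subpop_counts.items():
        for item in SUBPOP_TO_ITEMS[subpop]:
            item_counts[item] += n
    return dict(item_counts)


# Three records: two filed on time, one secured but not on time.
counts = validation_counts(["timely", "timely", "secured_not_timely"])
print(counts)
```

Because each record belongs to exactly one subpopulation, it is examined once even though it may contribute to several report items.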

Table C lists the ETA 581 populations and subpopulations that are reconstructed and the number of report items validated by each population. It also describes the dimensions used to divide populations into subpopulations.

Table C ETA 581 Report, by Transaction Population

| Population | ETA 581 Line Numbers | Dimensions Used to Create Subpopulations | Number of Report Items | Number of Subpopulations |
|---|---|---|---|---|
| 1. Active Employers | 101 | Employer status | 2 | 2 |
| 2. Report Filing | 201 | Timing of report receipt and resolution | 6 | 16 |
| 3. Status Determinations | 301 | Type of status determination | 7 | 8 |
| 4. Accounts Receivable | 401, 402, 403, 404 | Receivable amounts | 10 | 16 |
| 5. Field Audits | 501, 502 | By employer size; by audit result | 11 | 4 |
| | 101 | | 1 | N/A |

F. Handbook Overview

To determine the extent to which reported data are accurate and meet federal reporting definitions, five separate validation “modules” have been developed. Four of these modules—1, 2, 4, and 5—are processes that include various tools to use in validating the quantity and quality of federally reported data. Module 3 is not a process but a key map linking state data sources to Federal reporting definitions that is essential to building and testing extract files. The modules and accompanying appendices are outlined below.

Module 1—Report Validation (RV)

Module 1 describes how to validate whether state ETA 581 reporting programs are functioning correctly. The Sun-based software systematically processes reconstruction files and compares the count in each federal report item to the count in the corresponding subpopulation. The validator examines transactions that the software rejects as invalid and determines whether the rejected records should be eliminated from the validation files because they represent uncountable transactions (e.g., their dates put them out of range for the validated quarter), or whether they are improperly built but potentially countable records that must be fixed in a regenerated file.

Module 2—Data Element Validation (DEV)

Module 2 describes how to test the extract files to validate that the correct data elements are used, and thus that validation counts can be trusted as accurate. Two tests are conducted as part of DEV:

(2.1) Minimum Samples (formerly called File Integrity Validation, or FIV), two records from each subpopulation, are examined to see that the correct data were extracted from the database to build the reconstruction file.

(2.2) Sort Tests check whether the primary letter codes in the validation files are supported by state database values, or whether Employer Account Numbers have the prefix, suffix, or range values the state uses to differentiate contributory from reimbursing employers. Not all sorts are applicable in all states.
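The minimum-sample selection in (2.1) can be sketched as below. This is not the DV software's own logic: the handbook states only that two records are examined per subpopulation, so the random selection, the `subpop` field name, and the function name are assumptions made for illustration.

```python
import random
from collections import defaultdict


def minimum_samples(records, per_subpop=2, seed=None):
    """Pick up to `per_subpop` records from each subpopulation.

    `records` is a list of dicts with a hypothetical "subpop" key.
    Random selection is an assumption, not a documented rule.
    """
    rng = random.Random(seed)
    groups = defaultdict(list)
    for rec in records:
        groups[rec["subpop"]].append(rec)
    return {
        subpop: rng.sample(recs, min(per_subpop, len(recs)))
        for subpop, recs in groups.items()
    }


records = [
    {"obs": 1, "subpop": "1.1"},
    {"obs": 2, "subpop": "1.1"},
    {"obs": 3, "subpop": "1.1"},
    {"obs": 4, "subpop": "1.2"},
]
samples = minimum_samples(records, seed=0)
print({k: len(v) for k, v in samples.items()})
```

A subpopulation with fewer than two records simply contributes all of its records to the sample.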

Module 3—State-Specific Data Element Validation Instructions

Module 3 provides the state-specific instructions that the validator uses for investigating minimum samples and applying sort tests, and helps guide programmers in building extract files. Module 3 documents the system screens that display the data to be validated as well as the rules that must be applied to each data element to determine its accuracy. State definitions or procedures that affect validation are also documented to help state and federal staff interpret the validation results and improve procedures.

Module 4—TPS Validation

Module 4 determines whether the state’s TPS acceptance samples were selected randomly from the correct universe of transactions. The quality reviews are a key indicator of the state’s performance, so it is important to review the sampling methodology to ensure the results are statistically valid.

Module 5—Wage Item Validation

This module explains how wage item counts are validated.

Appendix A—Report Validation File Specifications

Appendix A includes specifications for the five validation files that the state needs to generate. Its key element is a table for each population that shows how each subpopulation is defined by values of a record’s data elements, where each element is defined in Module 3, and how each subpopulation relates to federal report cells validated. In addition, Appendix A provides information about timing issues for each population.

Appendix B—Independent Count

Appendix B describes how to determine whether any transactions have been excluded from an ETA 581 report item. These procedures are applicable to states that create the ETA 581 from the same extract files used to generate the reconstruction files.

G. Walkthrough of Data Validation Methodology

Figure B is a schematic illustration of the DV process. This section provides IS and validation staff with a step-by-step walkthrough, using ETA 581 active employers as an example.3 It references the handbook module in which that aspect of the data validation process is described. Readers should review the referenced modules for further information.

State IS staff generate the ETA 581 report from the state’s UI employer database(s) or from statistical files of counts or detailed records. The report item in the upper left corner of Figure B represents the count of contributory and reimbursing employers reported on the ETA 581.

At the same time, guided by the file layout (DV Operations Guide, Appendix B), file specifications (Appendix A) and definitions (Module 3), IS staff extract detailed records for the reported transactions to reconstruct and provide an audit trail for the reported count. (See Module 1.) This may be an iterative process; the software may reject many observations from the initial version of the file as errors. The validator and programmers must examine errors and determine which records should be removed from the file (e.g., dates out of range, duplicate transactions) and which records are incorrectly built but are countable transactions and thus must be corrected and the file rebuilt. The file is not ready for its final import from which samples are drawn and RV results examined until all errors are dealt with.

The state should generate the ETA 581 and the validation file (the reconstructed “audit trail”) from the employer database(s) at the same time. Ideally, to prevent inconsistencies due to timing, the state would then immediately import the validation file into the software so that minimum samples can be drawn and the state can generate supporting documentation (for example, query screens) from the employer database(s).4

The DV software compares the reconstructed count with the reported count. In this example, the validation screen shows the detailed records for the three contributory employers reported on the ETA 581.

The software selects a sample of two records per subpopulation and displays them on the sample worksheet. (See Module 2.) The validator assembles the materials—Module 3, reconstruction files, sample worksheets, and screens—to be used during validation and for review by DOL auditors.

The validator, following the “step” numbers in each column heading on the sample worksheet on the DV software, tests the accuracy of the reconstructed data using the state-specific instructions under the corresponding step number in the state’s Module 3. The bottom right portion of Figure B shows a page from Utah’s Module 3. (See Module 2.)

Module 3 refers to state source documentation (usually query screens) and to specific fields on the screens.

To complete the minimum sample reviews, the validator follows the rules for 2A (contributory employer) in Module 3. The rule for Step 2A requires the validator to compare the employer-type indicator on the screen to the employer-type indicator on the sample worksheet. Using the down arrow, the validator selects ‘PASS’ if the two indicators match; otherwise, ‘FAIL.’

The validator repeats the process for each data element on the worksheet guided by the step numbers in each column heading. (See Module 2.)

MODULE 1

Tax

REPORT VALIDATION

A. Purpose

The report validation process is used to determine the accuracy of counts reported on the ETA 581 report. Five validation extract files are constructed according to specifications in the DV Operations Guide and Appendix A. These files are used to reconstruct the counts for the five types of employer contributions populations that the state is validating. The report validation files enable the validator to determine the accuracy of the ETA 581 report item counts. Table 1.1 lists the five report validation population files and the parts of the ETA 581 report they validate.

Table 1.1

Populations

| Population | Population Description | ETA 581 Line Number |
|---|---|---|
| 1 | Active employers | 101 |
| 2 | Report filing | 201 |
| 3 | Status determinations | 301 |
| 4 | Accounts receivable | 401, 402, 403, 404 |
| 5 | Field audits | 501, 502 |

B. Methodology

Step 1 Produce Report Validation Extract Files

State staff produce five report validation extract files, one for each of the five populations of UI contributions transactions and statuses. State staff should use the following specifications to prepare the five files:

• Extract File Specifications (Appendix B of the DV Operations Guide; also available on the DV Web page at http://ows.doleta.gov/dv/)

• Population tables and timing specifications in Appendix A

• Duplicate Detection Criteria (Appendix D of the DV Operations Guide)

• State’s Module 3

The extract file format is ASCII, comma delimited. See Figure 1.2 for an example of a record layout. Data must be in the order listed in the record layouts. The Data Type/Format column on the layouts indicates generic values for text fields. The generic values must be followed by a dash and the state-specific value. See Figure 1.3 for an example of an extract file.

It is best to generate the validation files at the same time as the ETA 581 to eliminate differences in data caused by changes in the employer database over time. Because the ETA 581 provides a “snapshot” of transactions and employer statuses during a specific time period, the validation is intended to verify the status of transactions at the time the report was run, even if data later changed. It is less efficient to compare a set of transactions or statuses captured at one point in time with those captured at another point in time, because many discrepancies will represent legitimate changes in a dynamic database, instead of systems errors or faulty data. For example, an employer’s status can legitimately change from active to inactive. If states have a complete audit trail, timing should not affect the reconstruction of transactions. For example, states should maintain records of status determinations even if the employer’s status changed in the same quarter. The validator can use these audit trails to verify that a transaction was correct at the time of reporting.

Step 2 Import Extract Files

The extract files are imported into the DV software following the instructions in the DV Operations Guide. The software processes each extract file and builds the subpopulations as specified in Appendix A. The subpopulations are based on the unique types of transactions and statuses that can occur and that can be reported on the federal reports. For example, Population 1, active employers, includes all employers who were active on the last day of the quarter. The software assigns each record to a subpopulation defined by unique combinations of characteristics such as employer status, employer type, liability date, and termination date. See Figure 1.4 for a sample of a validation file imported into the software.

Step 3 Examine Error Reports and Reload Extracts If Necessary

When the extract files are loaded, the DV software reads each record to ensure that all fields are valid. Any records with invalid data, missing mandatory data, or records which appear to be duplicates are rejected and an error report is produced. The record layouts in the DV Operations Guide specify the valid data formats for each field, and the population tables in Appendix A specify the valid values. The software uses the duplicate detection criteria in the DV Operations Guide to identify duplicate records that the validator must review.

After reviewing any error reports that are generated, state staff should determine if the extracts are correct or whether they need to be regenerated or reformatted and reloaded into the DV software. This process should be repeated until the extract files have no errors.
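The kind of screening described in this step can be sketched as follows. This is an illustrative approximation, not the DV software's logic: the number of mandatory fields, the choice of fields used as a duplicate key, and the error messages are all assumptions. The actual formats and duplicate detection criteria are those in the DV Operations Guide and Appendix A.

```python
import csv
import io


def screen_extract(text, n_required=4, dup_key=(1, 3)):
    """Split extract rows into accepted rows and (line_no, reason) errors.

    n_required and dup_key are hypothetical: the first four fields are
    treated as mandatory, and EAN plus determination type as the duplicate key.
    """
    accepted, errors, seen = [], [], set()
    for line_no, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if not row:
            continue  # skip blank lines
        if len(row) < n_required or any(not f.strip() for f in row[:n_required]):
            errors.append((line_no, "missing mandatory data"))
            continue
        if not row[0].isdigit():
            errors.append((line_no, "invalid OBS"))
            continue
        key = tuple(row[i] for i in dup_key)
        if key in seen:
            errors.append((line_no, "possible duplicate"))
            continue
        seen.add(key)
        accepted.append(row)
    return accepted, errors


sample = (
    "000000001,000000001,C-100,T-345,0\n"
    "000000002,000000002,C-800,,0\n"
    "000000003,000000001,C-100,T-345,0\n"
)
accepted, errors = screen_extract(sample)
print(len(accepted), errors)
```

Rows flagged as possible duplicates are reported rather than silently dropped, since the validator must review them before the extract is regenerated.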

See Figure 1.4 for an example of an error report.

Step 4 Report Validation

The DV software calculates the validation count or dollar amount for each subpopulation specified in Appendix A. The validation values are compared to the corresponding reported values in the national UI database. The software then calculates the difference between the validation and reported values and also calculates an error rate. A reported value is considered valid and “passes” report validation if the error rate falls within the established tolerance (± 1% for data used in Government Performance and Results Act (GPRA) measures and ± 2% for all others). The GPRA Tax measure is the percent of all New Status determinations made within 90 days; the elements with a ±1% tolerance are Total New Status Determinations and New Status determinations made within 90 Days.

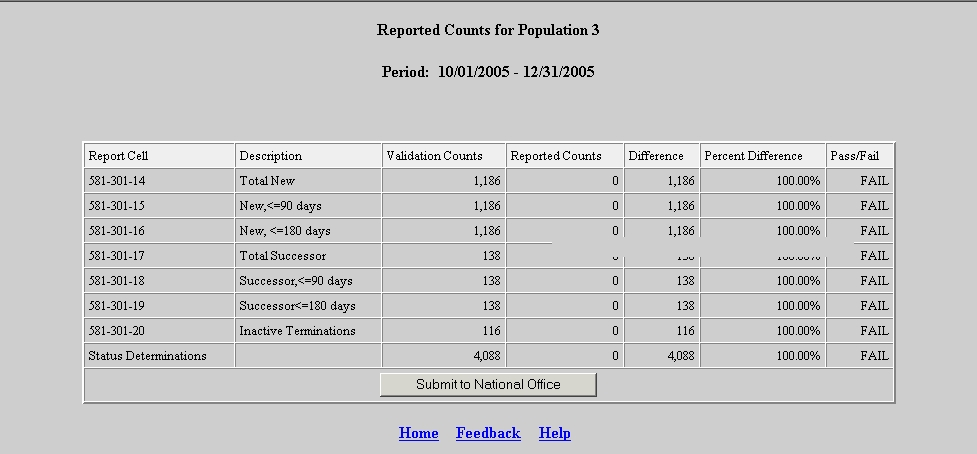

The software produces a summary report that displays all of this information. This summary report is submitted to the UI national office.
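The pass/fail comparison in Step 4 can be sketched as below. The tolerances (± 1% for GPRA elements, ± 2% otherwise) come from the text above. The error-rate formula itself, the difference between reported and validation values expressed as a percentage of the validation value, is an assumption made for illustration, as are the function and parameter names.

```python
def report_validation(reported, validation, gpra=False):
    """Return (error_rate_percent, passes) for one report item.

    Assumed formula: (reported - validation) / validation * 100.
    """
    tolerance = 1.0 if gpra else 2.0
    if validation == 0:
        # No validation records: only an exact zero report can match.
        error_rate = 0.0 if reported == 0 else float("inf")
    else:
        error_rate = (reported - validation) / validation * 100.0
    return error_rate, abs(error_rate) <= tolerance


# 5,000 employers reported, 4,950 reconstructed: roughly a 1.01% overcount.
rate, passes = report_validation(5000, 4950)
_, passes_gpra = report_validation(5000, 4950, gpra=True)
print(round(rate, 2), passes, passes_gpra)
```

Under these assumptions the same discrepancy passes at the general ± 2% tolerance but fails at the tighter ± 1% tolerance applied to GPRA elements.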

Overview of Module 1

Table 1.2 and Figure 1.1 show an overview of Module 1 methodology.

Table 1.2

Overview of Module 1

| Step | Description of Step |
|---|---|
| 1A – 1D | Analyze the validation program specifications, including: |
| 1A | Record Layouts (DV Operations Guide): contains specifications to build the validation extract files. |
| 1B | Data Element Validation State Specific Instructions (Module 3): includes instructions and the state’s specific screen names, element names, and value codes for validating each data element. |
| 1C | Duplicate Detection Criteria (DV Operations Guide): contains the criteria that the software uses to detect duplicates. |
| 1D | Subpopulation Specifications (Appendix A): contains reporting and sampling specifications for each population. |
| 2 | Extract transaction records from the state database(s), including all of the data elements specified in the record layouts. The extract process should include a routine to ensure that invalid duplicates are excluded from the file, as specified in the duplicate detection criteria in the DV Operations Guide. |
| 3 | Import the validation files into the DV software, which processes the files and assigns transactions to the subpopulations specified in Appendix A. |
| 4 | The RV screen compares the validation counts to the reported counts and displays the error rates and a pass/fail population score. |

Figure 1.1

Overview of Module 1

The following figures are examples of:

Population 3 Record Layout (Figure 1.2)

Population 3 Sample Extract File (Figure 1.3)

Population 3 Validation File after Processed through DV Software (Figure 1.4)

Population 3 RV Summary (Figure 1.5)

Figure 1.2

Population 3 Record Layout (portion)

The record layout provides the format for the validation extract file. The extract file type must be ASCII, comma delimited columns. Data must be in the order listed in the record layout. The Data Format column indicates the generic values for text fields. These must be followed by a dash and the state-specific value. The Module 3 reference indicates the step where the state-specific values are documented.

Example: If the state-specific code for New Status Determination is NEW, then the data format would be N-NEW.

| No. | Field Name | Module 3 Reference | Field Description | Data Type/Format | DVWS | Constraint |
|---|---|---|---|---|---|---|
| 1 | OBS | | Sequential number, start at 1 | Number - 00000000 (Required) | INTEGER | NOT NULL |
| 2 | EAN | Step 1A | Employer Account Number | Number - 000000000 (Required) | CHAR (20) | NOT NULL |
| 3 | Employer Type | Step 2A | Indicate whether the employer type is contributory or reimbursable. | Text - C; R | CHAR (20) | NOT NULL |
| 4 | Status Determination Type Indicator | Step 11A-D | Indicate status determination type by New, Successor, Inactivation or Termination. | Text - N; S; I; T | CHAR (10) | NOT NULL |
| 5 | Time Lapse | Step 12 | Place a zero (0) in this field. (Software generates the time lapse) | Number - 0 | INTEGER | |
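The layout above, including the convention of a generic value followed by a dash and a state-specific value, can be read with a short parser such as the sketch below. It covers only the five fields shown in this portion of the layout; the dictionary keys and function names are hypothetical, and any fields after the fifth are ignored because they are not documented here.

```python
import csv

# Hypothetical key names for the five fields shown in the layout portion.
FIELDS = ("obs", "ean", "employer_type", "status_determination_type", "time_lapse")


def split_code(value):
    """Split a value such as 'N-NEW' into generic 'N' and state-specific 'NEW'."""
    generic, _, state_specific = value.partition("-")
    return generic, state_specific


def parse_record(line):
    """Parse the first five comma-delimited fields of a Population 3 record."""
    row = next(csv.reader([line]))
    rec = dict(zip(FIELDS, row))  # zip drops fields beyond the fifth
    employer = split_code(rec["employer_type"])
    determination = split_code(rec["status_determination_type"])
    if employer[0] not in {"C", "R"}:
        raise ValueError("employer type must start with C or R")
    if determination[0] not in {"N", "S", "I", "T"}:
        raise ValueError("determination type must start with N, S, I, or T")
    return {
        "obs": int(rec["obs"]),
        "ean": rec["ean"],  # kept as text to preserve leading zeros
        "employer_type": employer,
        "status_determination_type": determination,
        "time_lapse": int(rec["time_lapse"]),
    }


line = "000000003,000000003,C-100,N-106,0,04/02/2003,12/09/2002,,04/02/2003,,,,,,20031021140204"
parsed = parse_record(line)
print(parsed)
```

Keeping the EAN as text rather than converting it to a number preserves leading zeros, which matters when the value is compared against state query screens.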

Figure 1.3

A Population 3 Extract File in ASCII Comma-delimited Format

000000001,000000001,C-100,T-345,0,05/16/2003,03/31/2003,,,,,,,05/16/2003,20031021140204

000000002,000000002,C-800,I-306,0,05/08/2003,09/30/2002,,,,,,05/08/2003,,20031021140204

000000003,000000003,C-100,N-106,0,04/02/2003,12/09/2002,,04/02/2003,,,,,,20031021140204

000000004,000000004,C-100,I-306,0,06/13/2003,06/30/2002,,,,,,06/13/2003,,20031021140204

000000005,000000005,C-100,I-325,0,06/16/2003,03/31/2002,,,,,,06/16/2003,,20031021140204

000000006,000000006,C-100,N-101,0,04/02/2003,03/31/2003,,04/02/2003,,,,,,20031021140204

000000007,000000007,C-100,I-306,0,06/13/2003,03/31/2003,,,,,,06/13/2003,,20031021140204

000000008,000000008,C-040,S-186,0,04/03/2003,01/01/2003,,04/03/2003,,04/03/2003,000281498,,,20031021140204

000000009,000000009,C-040,I-370,0,04/03/2003,12/31/1997,,,,,,04/03/2003,,20031021140204

000000010,000000010,C-420,S-186,0,04/03/2003,07/01/2002,,04/03/2003,,04/03/2003,000149776,,,20031021140204

000000011,000000011,C-420,I-370,0,04/03/2003,12/31/1980,,,,,,04/03/2003,,20031021140204

000000012,000000012,C-130,S-186,0,04/03/2003,10/02/2002,,04/03/2003,,04/03/2003,000051455,,,20031021140204

000000013,000000013,C-130,I-370,0,04/03/2003,03/31/1971,,,,,,04/03/2003,,20031021140204

000000014,000000014,C-000,S-165,0,04/21/2003,01/01/2002,,04/21/2003,,04/21/2003,000283912,,,20031021140204

000000015,000000015,C-100,I-306,0,06/03/2003,09/30/2002,,,,,,06/03/2003,,20031021140204

000000016,000000016,C-100,S-161,0,04/08/2003,01/24/2003,,04/08/2003,,04/08/2003,000309296,,,20031021140204

000000017,000000017,C-100,N-101,0,04/02/2003,12/31/2001,,04/02/2003,,,,,,20031021140204

000000018,000000018,C-100,N-101,0,04/02/2003,12/31/2002,,04/02/2003,,,,,,20031021140204

000000019,000000019,C-100,N-106,0,04/02/2003,12/22/2002,,04/02/2003,,,,,,20031021140204

000000020,000000020,C-100,N-101,0,04/02/2003,03/31/2003,,04/02/2003,,,,,,20031021140204

000000021,000000021,C-800,N-120,0,04/02/2003,06/30/2002,,04/02/2003,,,,,,20031021140204

000000022,000000022,C-800,I-306,0,04/02/2003,03/31/2002,,,,,,04/02/2003,,20031021140204

000000023,000000023,C-100,N-101,0,04/02/2003,12/31/2002,,04/02/2003,,,,,,20031021140204

000000024,000000024,C-100,N-101,0,04/02/2003,03/31/2002,,04/02/2003,,,,,,20031021140204
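The record format above can be read programmatically. The following is a minimal sketch, not part of the DV software: it parses one comma-delimited line and splits each coded text field into its generic and state-specific parts. The function names and the dictionary keys are hypothetical. Only the first five columns are named, because only those appear in the portion of the record layout shown in Figure 1.2; the remaining columns are kept as an unnamed list.

```python
import csv
import io

# Names for the first five columns, taken from the portion of the record
# layout shown in Figure 1.2. Later columns are not described in that
# portion, so this sketch keeps them as an unnamed list.
KNOWN_FIELDS = ["obs", "ean", "employer_type", "status_determination_type", "time_lapse"]


def split_code(value):
    """Split a value such as 'N-106' into generic code 'N' and state code '106'."""
    generic, _, state_specific = value.partition("-")
    return generic, state_specific


def parse_record(line):
    """Parse one comma-delimited extract line into a dictionary."""
    row = next(csv.reader(io.StringIO(line)))
    record = dict(zip(KNOWN_FIELDS, row))
    record["obs"] = int(record["obs"])
    record["time_lapse"] = int(record["time_lapse"])
    record["employer_type"] = split_code(record["employer_type"])
    record["status_determination_type"] = split_code(record["status_determination_type"])
    record["remaining_columns"] = row[len(KNOWN_FIELDS):]
    return record


sample = "000000003,000000003,C-100,N-106,0,04/02/2003,12/09/2002,,04/02/2003,,,,,,20031021140204"
parsed = parse_record(sample)
print(parsed["employer_type"])
print(parsed["status_determination_type"])
```

The EAN is kept as text rather than converted to a number, since the layout stores it as CHAR (20) and leading zeros are significant.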

Figure 1.4

A Population 3 Validation File After Processing by DV Software

Figure 1.5

Population 3 Report Validation Summary

MODULE 2

Tax

DATA ELEMENT VALIDATION

A. Purpose

The most important goal of the validation process is to determine how accurately employer contributions transactions and statuses have been reported on the ETA 581. After the report validation files have been built and each transaction has been assigned to a specific subpopulation, the key question is whether the data in each record are correct. This process is called data element validation (DEV). During DEV the validator will test whether the validation file is built from the correct elements, i.e., elements that are consistent with Federal reporting definitions. DEV comprises two separate testing procedures to ascertain whether key data elements in the report validation file have the values that should be used for building the file and validating the ETA 581 report counts. These are minimum samples and sorts/range tests. Only when a file has passed both procedures can it be considered the basis for judging the accuracy of ETA 581 report counts.

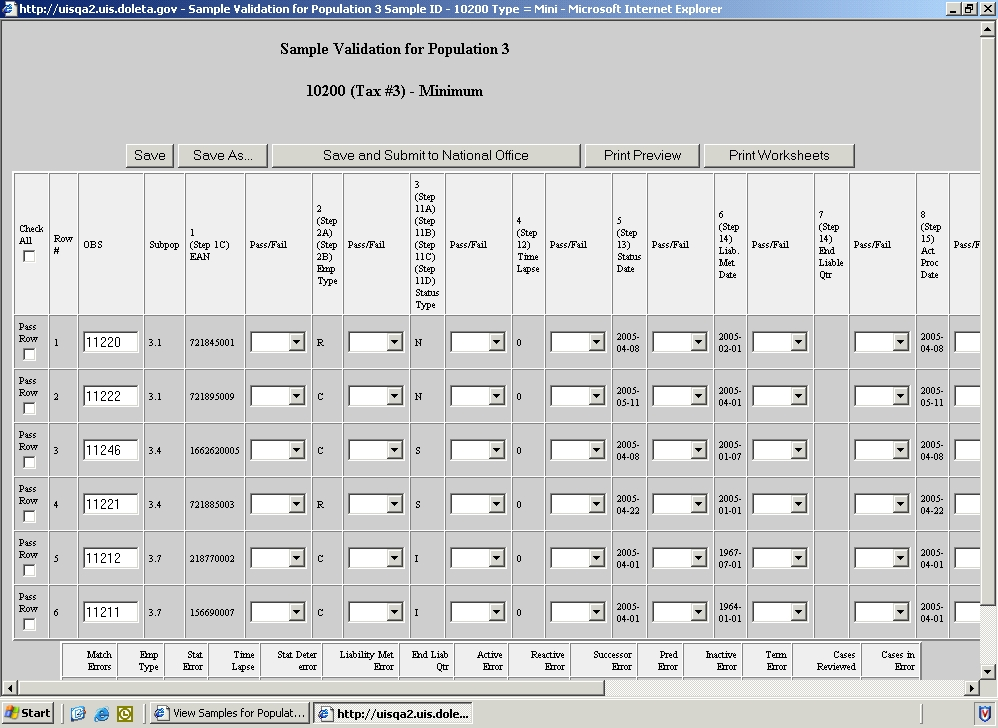

Module 2.1 Minimum Samples (formerly called File Integrity Validation (FIV)). The DV software selects a sample of two (2) records per subpopulation that are then displayed on the sample worksheet. The validator reviews the sampled records using the state-specific data values and instructions in Module 3, which point the validator to the appropriate supporting documentation (such as employer history screens). The validator uses this documentation to validate that the data elements on the worksheets are accurate and that the transactions are assigned to the appropriate subpopulations. This test ensures that the data in the reconstruction file accurately reflect the correct employer records in the state’s database(s). All states’ populations have minimum samples.

Module 2.2 Sorts Tests/Range Validation provides additional validity tests that examine whether the primary letter codes used in building the extract file (e.g., N for New or S for Successor status determinations) correspond to the proper state-specific codes, and whether Employer Account Numbers (EANs) have the right prefix, suffix or range values for Contributory or Reimbursing employer accounts in the state. Not all of these tests apply in all states.

MODULE 2.1—MINIMUM SAMPLES

B. Procedures

Task 1: Select Minimum Samples

The sampling function of the UI Tax DV software randomly selects two records from each subpopulation. See the tax tutorial for detailed instructions on how to use the Sun-based DV software. For each population, the software creates a Minimum Samples Worksheet listing all data elements for each sampled record (see example in Figure 2.1).

Minimum samples, rather than the larger random samples used in benefits, are sufficient for tax because tax database data are simpler than benefits data, and so the test of whether a file has been built correctly is whether the correct data have been retrieved for the extract file. The validator does not have to apply the kind of logic tests used in benefits to assess whether the correct data element has been used to build the file. The extract process that states use to build the extract files is highly automated, and automated processes are repetitive. If, for example, a certain field in the employer history file is extracted and placed in the fifth column of the reconstruction file for one record, that same field will be used for the fifth column of every record in that file. Thus, if we know that all data elements have been transferred correctly for the sampled records, we can be reasonably sure that all similar records are constructed correctly.
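The selection described in Task 1 can be sketched as follows. This is an illustration only and is not the DV software's actual routine; the function name, the `(subpopulation, record_id)` input shape, and the handling of subpopulations with fewer than two records are all assumptions.

```python
import random
from collections import defaultdict


def select_minimum_samples(records, per_subpopulation=2, seed=None):
    """Randomly pick up to `per_subpopulation` records from each subpopulation.

    `records` is a list of (subpopulation, record_id) pairs; this shape is
    assumed for illustration only.
    """
    rng = random.Random(seed)
    by_subpop = defaultdict(list)
    for subpop, record_id in records:
        by_subpop[subpop].append(record_id)

    samples = {}
    for subpop, ids in sorted(by_subpop.items()):
        # A subpopulation with a single record contributes just that record.
        k = min(per_subpopulation, len(ids))
        samples[subpop] = rng.sample(ids, k)
    return samples


records = (
    [("3.1", n) for n in range(1, 11)]
    + [("3.7", n) for n in range(11, 16)]
    + [("3.8", 16)]
)
samples = select_minimum_samples(records, seed=1)
for subpop, ids in samples.items():
    print(subpop, ids)
```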

Task 2: Conduct Sample Investigation

For each data element in the sampled records, the validator compares the data value on the worksheet to source screens following instructions in Module 3. Based on that comparison, the validator records whether or not the value matches what is in the state database. The source data can be found by referring to query screens from the state data system. These screens display information on transactions and the status of employer accounts.5

Figure 2.2 is a sample page from Module 3. For each step listed in Module 3, Minimum Sample Instructions are provided. These instructions help the validator locate and compare specific data elements in the state database corresponding to the data on the worksheet, and to determine the validity of the information (pass or fail).

The instructions for each step or substep identify the supporting documentation (screen and field names) that the validator will need to examine. A set of logic tests, called validation rules, determines the accuracy of each characteristic of a given transaction. A subsection, called function, explains the purpose of each rule.

Definitions listed for each step in Module 3 give the federal definition of the item being validated. This definition is followed by further information on the data element: examples, inclusions (situations falling within the definition), and exclusions.

Definitional Issues identify known discrepancies between state and federal definitions. This section provides a place for states to systematically document validation issues, letting validators and auditors know when problems are anticipated. Where state and federal definitions differ, be sure to follow the federal rules as required by the reporting instructions.

Comments provide additional information identified by states that state staff or federal auditors may need in order to handle unusual situations.

Task 3: Produce Sample Results

Using the down-arrow on the software, select ‘PASS’ on the worksheet next to each data element that successfully passes a step. Select ‘FAIL’ if a data element does not pass the step. Each column on the worksheet must be validated before the record is considered complete, and Pass or Fail must be selected for every item of every record in the sample or else the summary line will indicate “Incomplete.” The software will not transmit results of populations with incomplete DEV items. Based on the pass/fail entries, the worksheet will provide an item-by-item count of the number of data elements that failed. All sampled records must be completely free of errors for a state to pass a Minimum sample. Once the state validator has finished reviewing all of the sampled records for a population, the results should be saved following the instructions in the DV Operations Guide.
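The scoring rules in Task 3 can be expressed as a short sketch. It assumes a hypothetical worksheet structure (a dictionary of records, each mapping a data element name to "PASS", "FAIL", or `None` for an item not yet marked); the DV software's own data structures are not described in this handbook.

```python
def summarize_worksheet(worksheet):
    """Summarize pass/fail entries for one population's minimum sample.

    `worksheet` maps record id -> {data element name: "PASS", "FAIL", or None}.
    None stands for an item the validator has not yet marked.
    """
    failures_by_element = {}
    incomplete = False
    for entries in worksheet.values():
        for element, result in entries.items():
            failures_by_element.setdefault(element, 0)
            if result is None:
                incomplete = True
            elif result == "FAIL":
                failures_by_element[element] += 1

    if incomplete:
        status = "Incomplete"
    elif any(failures_by_element.values()):
        # A single failed data element is enough to fail the minimum sample.
        status = "Fail"
    else:
        status = "Pass"
    return status, failures_by_element


worksheet = {
    1: {"EAN": "PASS", "Employer Type": "PASS"},
    2: {"EAN": "PASS", "Employer Type": "FAIL"},
}
status, failures = summarize_worksheet(worksheet)
print(status, failures)
```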

C. Examples

1. Example of Minimum Samples Worksheet (Figure 2.1)

2. Sample Page from Module 3 (Figure 2.2)

3. Minimum Sample Validation Procedures (Table 2.1 and Figure 2.3)

Figure 2.1 Example of Minimum Samples Worksheet

Figure 2.2

Sample Page from Module 3

Overview of Module 2.1 (Figure 2.3)

Table 2.1 and Figure 2.3 summarize the tasks in the Minimum Sample review process.

Figure 2.3

Task No. | Description of Task | Who Performs Task
1 | Open Minimum Samples Worksheet, which lists all data elements for two records from each subpopulation. A single worksheet is generated for each population. | Validator
2 | Produce necessary query screens at the same time reconstruction file is created. | IS Staff, Validator
3 | The validator turns to the designated step in Module 3. Each step will have one or more rules listed. The purpose or “Function” of each rule is provided. In addition, each step includes the definition from the ETA 581. Use “Definitional Problems” to document instances where state regulations or practices conflict with the federal definitions. The validator can use the “Comments” field to record notes or document issues that may be helpful for future validations. | Validator
4 | The validator locates the source Document listed to check each rule. The document is the source used to compare the data on the worksheet with the data residing in the state database or state files. In some cases, it will not be necessary to pull any additional documents when all of the data elements have been included on the worksheet. In other instances, it will be necessary for the validator to refer to screens and/or case files. | Validator
5 | The validator determines whether the data element being validated passes all of the validation rules using the required documents. | Validator
6 | If any of the rules for the step fail validation, the validator selects ‘FAIL’ on the worksheet for that step. | Validator
7 | If the data element passes all of the rules, the validator selects ‘PASS’ on the worksheet for that step. | Validator

Module 2.2—SORTS TESTS

Sorts is the name given to a series of tests used in certain states to determine whether data element values such as C for Contributory or N for New status determinations used to build the extract file records are supported by underlying state database codes or Employer Account Number (EAN) values.

The validation software assigns records to subpopulations, in part by using the generic codes used by all states to build the validation files. For example, all states use ‘C’ to mean contributory followed by a dash and the state-specific code for contributory. Sorts tests must be conducted when states have multiple state-specific codes that could be assigned to a single generic code. A separate query test is also necessary when the state uses the prefix, or suffix, or value range of the EAN to identify whether the employer type is contributory or reimbursing.

Table 2.2 shows a variety of codes that one state uses to classify a status determination that an employer is either newly liable or a successor to an existing employer. In building its population 3 validation file, this state might have several acceptable state-specific codes to map to the generic status determination type indicators of “N” (new) and “S” (successor). For example, this state’s validation file might show new status determination type indicator values of N-01, N-02, N-03, N-07, N-08, N-09 or N-10.

For most of the sorts, the validation software produces a distribution of all records in populations 1 – 4 in the extract file by certain key primary codes. It shows the relationship of the records with the primary code to the state’s secondary codes captured when the file was built. The validator examines these codes to identify any state-specific codes that are not acceptable matches for the generic code. In the example above, any code other than 01-03 or 07-10 would not be consistent with a correct value of N on the record. For sorts 1.1, 1.2, 2.1 and 2.2, the software allows the validator to query Populations 1 and 2 to determine whether the prefix, suffix, or value range of the EAN is consistent with state procedures for classifying employers by type (contributory or reimbursing). A data element passes the sort test if no more than 2% of the sorted transactions include an incorrect state-specific code or the wrong EAN value. Unless a population extract file passes all applicable sorts tests and has no minimum samples with errors, the population cannot pass report validation because the integrity of the extract file used for the validation is not established.

The DV software provides a data entry screen on which the validator records, for each sort, the number of records subjected to the test and the number of records that are “out of range.” It also allows the validator to check N/A if the sort does not apply in the state.
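To illustrate the distribution described above, the sketch below counts state-specific codes for one generic code and totals the records whose code is not an acceptable match. It uses the example from the text (acceptable codes 01-03 and 07-10 for N). The function names and the sample values are hypothetical, and this is not the DV software's implementation.

```python
from collections import Counter

# Acceptable state-specific codes for the generic code N, following the
# example in the text (01-03 and 07-10).
ACCEPTABLE_NEW_CODES = {"01", "02", "03", "07", "08", "09", "10"}


def sort_distribution(values, generic_code):
    """Count state-specific codes among values whose generic code matches."""
    counts = Counter()
    for value in values:
        generic, _, state_specific = value.partition("-")
        if generic == generic_code:
            counts[state_specific] += 1
    return counts


def out_of_range(counts, acceptable):
    """Number of records whose state-specific code is not an acceptable match."""
    return sum(n for code, n in counts.items() if code not in acceptable)


# Made-up field values for illustration.
values = ["N-01", "N-02", "N-07", "N-04", "S-04", "N-01", "S-05"]
counts = sort_distribution(values, "N")
print(dict(counts))
print(out_of_range(counts, ACCEPTABLE_NEW_CODES))
```

In this made-up data, the record coded N-04 is the one a validator would flag, since 04 is a successor reason in the example state.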

Table 2.2 Status Determination Reason Codes

This table lists codes that a state used to indicate the reason employers were subject to the provisions of UI law as either a ‘new’ employer or as a ‘successor.’

Code | Reason
01 | Payroll
02 | Employment 13th week
03 | FUTA
04 | Whole Successor allowed
05 | Part Successor
06 | Consolidation allowed
07 | Revived with new number
08 | Payroll domestic
09 | Payroll agriculture
10 | Employment agricultural

D. Procedures

Task 1: Identify Applicable Sorts Tests

Examine the sort criteria in Table 2.3 (below). For each potential sort, look at the column entitled “When to Do Sorts Validation” to determine whether the test is applicable to the state. A sort is only applicable when there are multiple state codes that map to a single generic indicator, or when the state uses the Employer’s Account Number (EAN) to identify whether an employer is contributory or reimbursing.

Task 2: Conduct Appropriate Test

To begin range validation, log onto the Sun-based DV software and select the appropriate population from the Tax Selection Criteria menu.

Select View Data Element Sorts from the drop-down list. The software presents a DATA ELEMENT SORTS table listing every sort for the population and a box for entering the number of errors you identify for that sort. The table displays the # of Cases to which the sort is applicable; the software has calculated them by summing the cases in the relevant subpopulations.

Click on the link for an applicable sort. If a sort is not applicable in your state, click the N/A box.

The DV software only queries records in the subpopulations applicable to the selected sort. Except for the EAN sorts, all of the sorts in Table 2.3 involve instances where a state may have multiple codes for employer status, employer type, or types of transactions. The software produces a distribution of the primary code by the state-specific secondary codes; validators can examine the individual sorted records for each secondary code by clicking on a link. See the DV Operations Guide for a detailed explanation of how to conduct EAN range validation (sorts 1.1, 1.2, 2.1, 2.2).

Task 3: Produce Range Validation Results

After examining the sorted records, the validator enters the total of errors on the Data Element Sorts screen. As soon as the SAVE button is clicked, the DV software calculates the error rate and indicates whether the sort passed or failed. The results are sent to the Population Scores table. When all sorts and Minimum samples are complete, the validator may transmit the results to the national office.

States have passed range validation when they have established that no more than 2% of the records in the tax extract files include incorrect state-specific codes or incorrect EANs representing employer type.
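The 2% tolerance can be checked with simple arithmetic. The sketch below is a hypothetical helper, not the DV software's calculation; it compares in whole numbers so that a result exactly at the tolerance is not affected by floating-point rounding.

```python
def sort_result(cases, errors, tolerance_percent=2):
    """Return (error_rate_percent, passed) for one sort.

    The sort passes when errors are no more than `tolerance_percent`
    percent of the cases examined.
    """
    if cases <= 0:
        raise ValueError("the sort must cover at least one case")
    if not 0 <= errors <= cases:
        raise ValueError("errors must be between 0 and the number of cases")
    error_rate_percent = errors * 100 / cases
    # Integer comparison, equivalent to errors / cases <= tolerance_percent / 100.
    passed = errors * 100 <= tolerance_percent * cases
    return error_rate_percent, passed


print(sort_result(500, 10))  # exactly at the tolerance
print(sort_result(500, 11))  # just over the tolerance
```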

Task 4: Correcting Validation Errors

Validation is not an end in itself; it is a means toward correct reporting. If validation identifies reporting errors, the state should correct the reporting errors as soon as possible. To document the corrective action for resolving reporting errors, and the timetable for completion, the state must address the problem to its ETA Regional Office in accordance with the annual State Quality Service Plan (SQSP). This will be either in the SQSP narrative or as part of a Corrective Action Plan (CAP). (Any state that fails to conduct and submit the validation for one or more benefits or tax populations must address this failure in a CAP.) The narrative or CAP should contain the following information on every validated report element that exceeds the validation error rate tolerance:

Report element(s) in error.

Magnitude of error found.

Status/Plan/Schedule for correcting. If reporting errors were corrected in the course of the first validation, the report should simply note “corrected during validation.” (Validation of the affected transactions should occur immediately after these corrections have been made.)

Timing of CAP or Narrative. The plan for correcting the errors should be submitted within the established deadlines of the SQSP.

Revalidation. Populations that failed any validation must be revalidated the following year. A revalidation should confirm the success of the corrective action or, if the state has not completed corrective action, identify the current extent of the error.

Errors Discovered Outside the Validation Process. During the validation process, errors in reporting may be identified that are outside the scope of the validation program. Such errors should be included in the comments section of the state’s data validation reports.

Table 2.3 Sorts Validation Criteria

Population | Sort | Subpopulations Sorted | When to Do Sorts Validation | Test Data Element | Test Criteria | Module 3 References
1 | S1.1 | 1.1 | When the employer’s account number indicates that the employer type is contributory. | EAN | All EANs must be in ranges allocated to contributory employers | Step 2A
1 | S1.2 | 1.2 | When the employer’s account number indicates that the employer type is reimbursing. | EAN | All EANs must be in ranges allocated to reimbursing employers | Step 2B
1 | S1.3 | 1.1 and 1.2 | When more than one employer status code is used to indicate that the employer’s status is active. | Employer Status Indicator | All status codes must represent active employers | Step 3A
1 | S1.4 | 1.1 | When more than one employer type code is used to indicate that the employer type is contributory. | Employer Type Indicator | All employer type codes must represent contributory employers | Step 2A
1 | S1.5 | 1.2 | When more than one employer type code is used to indicate that the employer type is reimbursing. | Employer Type Indicator | All employer type codes must represent reimbursing employers | Step 2B
2 | S2.1 | 2.1-2.8 | When the employer’s account number indicates that the employer type is contributory. | EAN | All EANs must be in ranges allocated to contributory employers | Step 2A
2 | S2.2 | 2.9-2.18 | When the employer’s account number indicates that the employer type is reimbursing. | EAN | All EANs must be in ranges allocated to reimbursing employers | Step 2B
2 | S2.3 | 2.1-2.8 | When more than one employer type code is used to indicate that the employer type is contributory. | Employer Type Indicator | All employer type codes must represent contributory employers | Step 2A
2 | S2.4 | 2.9-2.18 | When more than one employer type code is used to indicate that the employer type is reimbursing. | Employer Type Indicator | All employer type codes must represent reimbursing employers | Step 2B
3 | S3.1 | 3.1-3.3 | When the state uses more than one code to indicate that the status determination type is new. | Status Determination Type | All status determination type codes must represent ‘new’ status determination type | Step 11A
3 | S3.2 | 3.4-3.6 | When the state uses more than one code to indicate that the status determination type is successor. | Status Determination Type | All status determination type codes must represent ‘successor’ status determination type | Step 11B
3 | S3.3 | 3.7 | When the state uses more than one code to indicate that the status determination type is inactivation. | Status Determination Type | All status determination type codes must represent ‘inactivation’ status determination type | Step 11C
3 | S3.4 | 3.8 | When the state uses more than one code to indicate that the status determination type is termination. | Status Determination Type | All status determination type codes must represent ‘termination’ status determination type | Step 11D
4 | S4.1 | 4.1, 4.9 | When the state uses more than one code to indicate that the transaction type is establishment. | Transaction Type Indicator | All transactions must be establishment of accounts receivable | Step 21A
4 | S4.2 | 4.2, 4.10 | When the state uses more than one code to indicate that the transaction type is liquidation. | Transaction Type Indicator | All transactions must be liquidations of accounts receivable | Step 21B
4 | S4.3 | 4.3, 4.4, 4.11, 4.12 | When the state uses more than one code to indicate that the transaction type is declared uncollectible. | Transaction Type Indicator | All transactions must be accounts receivable declared uncollectible | Step 21C

Module 3

Tax

DATA ELEMENT VALIDATION

STATE SPECIFIC INSTRUCTIONS

A. Purpose

Module 3 provides the set of actual state-specific instructions that the validator uses in data element validation. It lists the state system screens or documents that contain the data from which the extract files are built as well as the rules to validate them. State definitions or procedures that affect validation are also documented to help state and federal staffs interpret the validation results and improve procedures. Although the intent of Module 3 is to identify state-specific data that are consistent with Federal reporting definitions, the inclusion of state-specific information in this module is not to be deemed a finding by itself that such information is in compliance with federal reporting data definitions.

Module 3 is not included in this handbook. It is maintained in a database that contains data for every state. Since these instructions are state-specific, each state is responsible for reviewing and updating its Module 3 regularly. Every year by June 10, states must certify that they have reviewed their Module 3 and that it is up to date.

B. Methodology

Table 3.1 outlines each step in the state-specific validation instructions and its component substeps. Table 3.2 indicates the combination of validation steps required for validation of each population. The worksheet guides the validator to the necessary steps by the presence or absence of data in each column for a given transaction. Each column header identifies the steps to use in validating the data in that column. Once the validator learns the instructions and rules listed under each step and substep, it may not be necessary to refer to them for each transaction or element being validated.

The validator begins the validation by looking at the first transaction (first row) on the worksheet and then by looking at the first step listed in the column header at the top of the worksheet. The validator then locates that step in the state-specific instructions in Module 3.

If there are substeps, but the substep is not specified in the column heading, the first page for the step number will direct the validator to the appropriate substep.

Note: Some steps in Module 3 indicate that they do not require validation or they are no longer required. The step numbers, however, have been retained in Module 3 to document the states’ procedures for these steps.

Table 3.1

Data Element Validation Steps and Substeps

Step | Substep A | Substep B | Substep C | Substep D | Substep E
1 | Active Employers | Employer Report Filing | Status Determinations | Accounts Receivable | Field Audits
2 | Contributory | Reimbursable | | |
3 | Active | Inactive/Terminated | | |
4 | Initial | Reopen | | |
5 | | | | |
6 | Combined | Inactivation | Termination | |
7 | Quarterly Wages | Number of Liable Quarters | | |
8 | Timely | Secured | Resolved | |
9 | | | | |
10 | | | | |
11 | New | Successor | Inactivation | Termination |
12 | | | | |
13 | | | | |
14 | | | | |
15 | | | | |
16 | | | | |
17 | | | | |
18 | | | | |
19 | Transaction Date | Established Date | | |
20 | | | | |
21 | Receivable Established | Receivable Liquidated | Declared Uncollectible | |
22 | | | | |
23 | | | | |
24 | | | | |
25 | | | | |
26 | | | | |
27 | Contributory | Reimbursable | | |
28 | Large | Not Large | | |
29 | Change | No Change | | |
30 | | | | |
31 | Pre-Audit | Post-Audit | Under Reported | Over Reported | Reconciled
32 | Pre-Audit | Post-Audit | Under Reported | Over Reported | Reconciled
33 | Pre-Audit | Post-Audit | Under Reported | Over Reported | Reconciled

Table 3.2

Relevant Data Element Validation Steps, by Population

Population | Relevant Data Element Validation Steps (a)
1 | 1, 2, 3, 5, 7, 14, 15, and 16
2 | 1, 2, 4, 5, 6, 8, 9, 10, and 14
3 | 1, 2, 6, 11, 12, 13, 14, 15, 16, 17, and 18
4 | 1, 2, 19, 20, 21, 22, 23, 24, 25, 26, and 27
5 | 1, 28, 29, 30, 31, 32, and 33

(a) The population tables in Appendix A specify the appropriate substeps for each population.

MODULE 4

Tax

QUALITY SAMPLE VALIDATION

A. Introduction

One of the Tax Validation responsibilities is to review the integrity of four key Tax Performance System (TPS) acceptance samples: the three Status Determination samples and the Field Audit sample. The objective of the DV reviews is to ensure that the samples are drawn randomly from the correct population or universe. The validator (a) reviews how the sample was selected and (b) compares the universe from which the sample is drawn to a reference count (ideally the validated reference count) from the ETA 581 report. To ensure that only random acceptance samples are investigated, the randomness of each TPS sample should be validated before the cases are given to the TPS reviewer for investigation. To keep from delaying the case investigations, do (a) as soon as the sample is drawn, as it does not depend on the 581 reference data. Comparing the universe count with the 581 reference count, (b), can be done later, when 581 data are submitted. That will be approximately February 20 for the Status Determination samples and November 20 or February 20 for the Field Audit sample (per UIPL 13-10, states have discretion in the review period for the Field Audit sample).

States may select their TPS samples in either of two ways. The first is a conventional interval sample: the programmer (or a utility program) divides the size of the population (say 300) by the size of the desired sample (say 30) to derive the sample interval (every 10th observation). A random start point, in this instance between 1 and 10, is then applied, and the program selects every tenth case from that point. The National Office provides states with random start numbers for all TPS samples in December for the upcoming calendar year. The second is a sampling utility program that randomizes the file and selects the first 30 observations. This approach is somewhat more difficult to validate, but validation could involve reviewing the sample against the source file (see Task 2 below) or reviewing the utility program specifications.
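The interval method can be sketched in a few lines. This is an illustration under stated assumptions, not a state's actual sampling program: the function name is hypothetical, and the use of whole-number (floor) division when the population is not an exact multiple of the sample size is an assumption the handbook does not address.

```python
def interval_sample(population_size, sample_size, random_start):
    """Return the 1-based positions selected by an interval sample."""
    # Floor division is an assumption for populations that are not an
    # exact multiple of the sample size.
    interval = population_size // sample_size
    if interval < 1:
        raise ValueError("population must be at least as large as the sample")
    if not 1 <= random_start <= interval:
        raise ValueError("random start must fall between 1 and the interval")
    return [random_start + i * interval for i in range(sample_size)]


# Population of 300, sample of 30, random start of 7: interval is 10.
positions = interval_sample(300, 30, 7)
print(positions[:3], positions[-1])
```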

B. Procedures

Task 1: Compare Universe Counts

From IS staff the validator should obtain copies of the universe files for Status Determinations and Field Audits. For status determinations there will be three TPS universes: (1) New, (2) Successor, and (3) Inactive/Terminated. The universe listings should cover all quarters for which the actual acceptance sample was drawn. These are as follows:

Status Determinations: the four quarters of the calendar year

Field Audits: Give period used for acceptance sample.

UIPL 13-10 changed the period from the first three calendar-year quarters to four quarters, but a state may select either four calendar-year or four Federal fiscal-year quarters, e.g., 1/1/2010 – 12/31/2010 or 10/1/2009 – 9/30/2010.

Compare the count of each status determination universe and the field audit universe to the reference count reported on the ETA 581 (see below) for the same period. If the universe is within ±2% of the reference count, this indicates that the correct universe was used. Please note that you may need to adjust the TPS universe count of Inactivation/Termination determinations to make a proper comparison with the 581 reference count. The TPS universe includes all inactivation/termination transactions actually entered on the state’s system. In some states, there may be differences from the counts required to meet ETA 581 reporting requirements.

Reference Counts by line and UIDB cell number:

New Status Determinations: ETA 581 line 301, column 14 (c11)

Successor Determinations: ETA 581 line 301, column 17 (c68)

Inactivations/Terminations: ETA 581 line 301, column 20 (c63)

Field Audits: ETA 581, line 501, column 47 (c256)

NOTE: The TPS universe is to include all actual determinations to inactivate and/or terminate accounts during a calendar year. Federal reporting instructions may require different counts for Inactivations/Terminations on the 581; for example:

An account that was first inactivated and later terminated would appear twice in the TPS universe, but would only be counted once on the 581 (581 instructions allow only the inactivation/termination of an active account).

The 581 instructions also stipulate that the count of active employer accounts cannot include employers that have not reported wages for eight consecutive calendar quarters. However, some states’ policies do not allow accounts to be inactivated until additional steps occur beyond the Federal eight-quarter rule. Since it is the state’s actual determination to terminate or inactivate an account that adds it to the TPS universe, such accounts are included in the 581 count of Inactivations/Terminations, but will not appear in the TPS universe until the actual inactivation or termination decision is issued.

If such discrepancies arise, adjustments need to be made to the counts before comparison can be made. If the adjusted TPS universe is within ±2% of the 581 count, the TPS universe will be considered to contain the correct transactions.
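The comparison in Task 1 reduces to a percentage difference against the 581 count. The sketch below is a hypothetical helper: it treats the adjustment as a signed number added to the TPS universe, which is an assumption about how a state would record it, and the figures in the example are made up.

```python
def compare_universe(universe, count_581, adjustments=0, tolerance_percent=2.0):
    """Compare a TPS universe count with the ETA 581 reference count.

    Returns (adjusted_universe, difference_percent, within_tolerance).
    `adjustments` is signed: negative removes transactions from the
    universe, positive adds them (an assumption of this sketch).
    """
    if count_581 <= 0:
        raise ValueError("the 581 reference count must be positive")
    adjusted = universe + adjustments
    difference_percent = (adjusted - count_581) * 100 / count_581
    return adjusted, difference_percent, abs(difference_percent) <= tolerance_percent


# Made-up figures: 1,045 inactivation/termination transactions in the TPS
# universe, 30 of them second transactions on accounts counted once on the 581.
print(compare_universe(1045, 1000, adjustments=-30))
```

Without the adjustment, the same universe would differ from the 581 count by 4.5% and fall outside the tolerance.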

Task 2: Review Sample Selection

Determine whether an interval sample was drawn (and how it was drawn) or whether the file was randomized such that the first set of cases could be selected without establishing intervals.

If an interval sample was drawn, check to see that the correct random start number was used and that proper cases were selected (for example, if the random start was 10 and the interval was every 40th case, check to see that cases 50, 90, 130, and so forth were selected).

If the sample was drawn from a randomized file, print the file and ensure that it was not ordered by date, employer, or some other nonrandom means. The validator can compare the printout with the way the file was ordered prior to randomization to ensure that the file was randomly reordered.
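For an interval sample, the check in Task 2 can be sketched as a comparison of the selected case positions against the positions the random start and interval imply. The function name and the input shape (a list of 1-based case positions in selection order) are assumptions for illustration.

```python
def check_interval_selection(selected_cases, random_start, interval):
    """List the mismatches between selected and expected case positions.

    Each mismatch is (index in sample, expected position, selected position).
    An empty list means every selected case is where it should be.
    """
    mismatches = []
    for i, case in enumerate(selected_cases):
        expected = random_start + i * interval
        if case != expected:
            mismatches.append((i, expected, case))
    return mismatches


# Random start 10, interval 40, as in the example in the text.
print(check_interval_selection([10, 50, 90, 130], 10, 40))
print(check_interval_selection([10, 50, 91, 130], 10, 40))
```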

Task 3: Record Findings on the Template

The Sun-based software does not include a screen for forwarding the results of the quality reviews. Document the results of the quality review validation in a Word® file using the format below and email the file to the National Office at [email protected]. Note any problems in the Comments field.

State:

New Status Determinations (Tax Pop 3)

Calendar Year: YYYY

Universe: XXX,XXX

581 Count: XXX,XXX

Difference as % of 581 Count:

Sampling Method: (Interval or Randomized File)

Problems/Comments:

State:

Successor Status Determinations (Tax Pop 3)

Calendar Year: YYYY

Universe: XXX,XXX

581 Count: XXX,XXX

Difference as % of 581 Count:

Sampling Method: (Interval or Randomized File)

Problems/Comments:

State:

Terminations/Inactivation Status Determinations (Tax Pop 3)

Calendar Year: YYYY

Universe: XXX,XXX

Adjustments: XXX,XXX

Adj Universe: XXX,XXX

581 Count: XXX,XXX

Difference as % of 581 Count:

Sampling Method: (Interval or Randomized File)

Problems/Comments:

State:

Field Audit Quality (Tax Population 5)

Four Quarters Ending: YYYYQ

Universe: XXX,XXX

581 Count: XXX,XXX

Difference as % of 581 Count:

Sampling Method: (Interval or Randomized File)

Problems/Comments:

C. Results and Actions

If the sampling method was not correct or was not implemented properly, the validator should ensure that the sample is redrawn and is random before it is given to the TPS reviewer. The problems should be discussed with the programmer to ensure that next year’s sample is drawn randomly. If the programmer confirms that the process was incorrect, the validator should record the problems in the comments section of the template.

If the universe for any TPS acceptance sample differs from the 581 reference count by more than ±2%, the review must be repeated the following year. Otherwise, the review need not be repeated for three years.

MODULE 5

Tax

WAGE ITEM VALIDATION

A. Purpose

Each quarter, employers report employee wages to the state agency on a wage report (WR) and summary wage and contributions data on a contribution report (CR). Reports arrive on various media, including paper, magnetic tapes, diskettes, CD-ROMs, or files transmitted over the Internet. A WR includes a wage record for each employee: the employee’s name, social security number (SSN), and earnings in covered employment during the quarter. The agency creates a record in its files, called a wage item, that identifies the individual, the employer, and the individual’s earnings for the quarter. The agency reports the number of wage items on the ETA 581 report; this count is one of the workload items used to allocate UI administrative funds. Wage item validation assesses the accuracy of the count of wage items reported on the 581 and alerts the state to correct any inaccuracies. This helps ensure equitable funding for this state workload activity. The following box gives a typical flow for processing reports and contributions.

It would be ideal to validate the count of wage items by building a reconstruction file as is done for the five Tax Populations. This is impractical for two reasons: (1) the size of the extract file (California’s file would contain over 18 million records) and (2) the inability to conduct Data Element Validation (employers, not the agency, have the original wage information). Instead, validators select small samples of wage records before they are processed, recount them, and compare this count with the count after those same records have been processed into wage items. DV then infers the accuracy of the 581 count from those sample recounts.

In this recount, validators make sure that (a) every wage record was included as a wage item and (b) the wage items did not include any:

Corrections (the system must be able to process corrections without double counting the item); or

Incomplete wage records (for example, if the identifier or wage amount is missing or 0 for the employee); or

Duplicate records.

This approach allows states to validate wage items at any time as long as the original wage records can be retrieved. The validation approach involves selecting samples of wage records for a particular quarter that cover all modes through which employers submit wage records to the state. The validator then manually determines how many of them should have been processed as wage items, and compares the count with the wage items the state obtained when it processed the same wage records. The relationship between the original count and the validated count is used to infer the accuracy of the state’s 581 wage item count for that quarter.

In general, states process Wage Records into Wage Items and count them for reporting on the 581 as follows:

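[Flowchart: processing of wage records into wage items and counting them for the 581; not reproduced here.]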

B. Methodology

Task 1: Determine the modes the state uses to receive wage records, how each mode is processed, and how the 581 count is obtained.

Modes. Identify the specific modes your state uses to receive or “capture” wage records.

Processing. It is essential to understand the process your state uses to enter the records of each mode into your system as wage items and corrections. It may be helpful to build a simple flowchart, along the lines of the model above, of how wage records are received, processed into wage items and counted for the 581.

How does your state process wage records?

If wage records are received on paper, how are they data-entered?

For records that are batch-processed, are the totals for wage items indicated for each batch, so that a recount of the batch can be compared with the original count of wage items?

Are items received subjected to front-end edits to look for probable SSN errors (fewer than 9 digits, impossible sequences), missing wages, missing names, etc.?

How are corrections handled and recorded so that you as a validator can note corrections?
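As an illustration of what front-end edits might look like, the sketch below flags the kinds of problems named in the questions above. It is not any state's actual edit routine: the function name and flag labels are hypothetical, and "impossible sequences" is interpreted here using the Social Security Administration's never-assigned ranges (area 000, 666, or 900-999; group 00; serial 0000), which is an assumption about what a state would test.

```python
import re


def front_end_edit_flags(name, ssn, wages):
    """Return a list of edit flags for one incoming wage record."""
    flags = []
    digits = re.sub(r"[\s-]", "", ssn or "")
    if not digits.isdigit() or len(digits) != 9:
        flags.append("ssn_not_9_digits")
    elif (
        digits[:3] in ("000", "666")
        or digits[0] == "9"
        or digits[3:5] == "00"
        or digits[5:] == "0000"
    ):
        flags.append("ssn_impossible_sequence")
    if not (name or "").strip():
        flags.append("missing_name")
    if wages is None:
        flags.append("missing_wages")
    return flags
```

A record that returns an empty list has passed these illustrative edits; knowing which flags your state's system applies, and whether flagged records are still counted as wage items, is what matters for the flowchart.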

Counting. How is the 581 count taken?

Built up as a sum of counts of items from each batch?

As a snapshot of the wage file at the end of the report quarter?

Other?

Task 2: Set up the Worksheet in the Tax Validation Software

Establish a row on the Wage Item Validation Worksheet in the UI Tax Data Validation software for each mode in which your state receives wage items. See Figure 5.1 below and DV Operations Guide.

Task 3: Select Samples

States typically group or “batch” wage and contribution reports, by mode received, to process them and organize their accounting records. The definition of a “batch” may vary depending on the size of reports and how they are received. For example, you may batch paper wage reports in groups of 50 or 100 documents with a batch number identifier and summary transaction totals. Or, you may process a large wage file from a tape or an FTP transmission, each of which could be a single batch of thousands of records.

It has been determined that a sample of 150 records from each mode, investigated in two stages, will allow a sufficiently precise inference to be made about the accuracy of processing wage records into wage items. Stage 1 is a random set of 50 of the 150 records, and if necessary, Stage 2 is the remaining 100 records. On the basis of your study and flowcharting of wage record processing, select a point at which you can identify at least 150 wage records obtained by each mode of receipt.

For Each Mode:

Pick a Batch of Wage Reports containing at least 150 wage records.

Randomly select 150 of these records.

Divide the 150 records into a Stage 1 set of 50 records and Stage 2 set of the remaining 100 records in such a way that the division between Stage 1 and Stage 2 is also random.

In a spreadsheet program such as Excel or a database, set up a means to track results of your investigations of the wage records.
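For states that prefer a script to a spreadsheet, the selection and the Stage 1/Stage 2 split can be sketched as below. The function name and the idea of identifying records by a list of IDs are assumptions for illustration. Python's `random.sample` returns records in random selection order, so taking the first 50 of the 150 gives a random Stage 1 set.

```python
import random


def draw_two_stage_sample(record_ids, seed=None, total=150, stage1=50):
    """Randomly pick `total` records from a batch and split them into stages.

    Returns (stage1_ids, stage2_ids). Pass a seed only if the draw needs
    to be reproducible for later review.
    """
    record_ids = list(record_ids)
    if len(record_ids) < total:
        raise ValueError("batch must contain at least %d wage records" % total)
    rng = random.Random(seed)
    sample = rng.sample(record_ids, total)
    return sample[:stage1], sample[stage1:]


# Hypothetical batch of 400 wage records numbered 1 through 400.
stage1_ids, stage2_ids = draw_two_stage_sample(range(1, 401), seed=2019)
```

Recording the seed and the batch identifier on the tracking worksheet lets another validator reproduce the same draw.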

Task 4: Review Sampled Records for Each Mode and Compare Count with Wage Items

Task 4a: Review and Compare Count for First 50 Records in each Mode

The validator must count only wage items that are complete. This means each countable entry must include each of the following elements:

Employee Identifier (Name or SSN)

Employer Identifier (Name or EAN)

Wage dollar amount

Ensure that only complete records are counted, including corrected records. Enter the recount for each mode on your tracking worksheet.
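The completeness test can be expressed as a small function applied to each sampled record. This is a sketch only: the dictionary keys (`employee_name`, `ssn`, `employer_name`, `ean`, `wages`) are hypothetical field names, and an identifier or wage amount of 0 is treated as missing, following the description of incomplete wage records in Section A.

```python
def _present(value):
    """True if an identifier is neither blank nor made up only of zeros."""
    if value is None:
        return False
    text = str(value).strip()
    return text != "" and text.strip("0") != ""


def is_complete(record):
    """A record is countable only if all three required elements are present."""
    has_employee = _present(record.get("employee_name")) or _present(record.get("ssn"))
    has_employer = _present(record.get("employer_name")) or _present(record.get("ean"))
    wages = record.get("wages")
    has_wages = wages is not None and wages != 0
    return has_employee and has_employer and has_wages


def recount(records):
    """Number of sampled wage records that should have become wage items."""
    return sum(1 for record in records if is_complete(record))
```

The value returned by `recount` for each mode is the figure to enter on the tracking worksheet.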

Task 4b: Obtain the System Wage Item Count

How this is done will depend on how your state counts wage items.

Batch cumulating of Wage Items. If your state obtains its 581 count by cumulating batch counts, and you have the original records with an indication of whether they were included as wage items or not, you can compare your results with those originals.

It may be easier to retrieve your wage history files to see which of the records in your set were counted.

Snapshot counting of Wage Items. Ask the programmer to extract and count all wage items from your system for the validated quarter that have the combination of EAN and SSN included in your mode samples.

Enter this count as the “581 count for batch” on your worksheet.

Task 4c: Identify and Count Erroneous Records

If there are any discrepancies between your sample recounts and the 581 counts from the system, search for duplicates—including corrections counted as records—and incomplete records among the extracted records.
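The search for erroneous records can be sketched as a single pass over the extracted wage items. The field names (`ean`, `ssn`, `wages`, `is_correction`) are hypothetical, and the sketch assumes an employee has at most one wage item per employer in the validated quarter, so a repeated EAN and SSN combination signals a duplicate.

```python
from collections import Counter


def count_errors(extracted_items):
    """Count erroneous wage items by type among the extracted records."""
    seen = set()
    errors = Counter()
    for item in extracted_items:
        key = (item.get("ean"), item.get("ssn"))
        if not item.get("ean") or not item.get("ssn") or not item.get("wages"):
            errors["incomplete"] += 1          # missing identifier or wage amount
        elif item.get("is_correction"):
            errors["correction_counted"] += 1  # correction counted as a new item
        elif key in seen:
            errors["duplicate"] += 1           # same employer/employee counted twice
        else:
            seen.add(key)
    return dict(errors)
```

The counts by type correspond to the error columns on the Wage Item Validation Worksheet; how your system marks a correction will determine what replaces the hypothetical `is_correction` flag.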

Task 4d: Determine Whether Stage 2 Investigation is needed

Proceed as follows at this point:

If the difference between your recount and the 581 count is:

= 0, the mode passes at Stage 1. The mode is done.

≥ 4, the mode fails at Stage 1. The mode is done.

= 1, 2, or 3, review the remaining 100 cases for the mode, following Tasks 4a-4c.

Even when a mode fails at Stage 1, you may also want to review the remaining 100 cases to estimate the error rate in wage item processing for the mode.
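The Stage 1 decision rule can be written as a short function. The name and return labels are hypothetical, and the difference is taken as an absolute value, which is an assumption (the handbook does not say whether the sign matters).

```python
def stage1_outcome(recount, count_581):
    """Apply the Stage 1 rule to the first 50 records of a mode."""
    difference = abs(recount - count_581)
    if difference == 0:
        return "pass"
    if difference >= 4:
        return "fail"
    return "review_stage_2"  # a difference of 1, 2, or 3
```

For example, a recount of 48 against a system count of 50 gives a difference of 2, so the remaining 100 cases must be reviewed.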

Task 4e: Combine Results for Stages 1 and 2 if second stage was investigated.
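Combining the stages amounts to adding the Stage 1 and Stage 2 error counts and comparing the total with the full-sample threshold given under Task 6 (no more than 6 errors in 150 records). The sketch below assumes that "errors" means the discrepancies found in each stage; the function name and the returned keys are hypothetical.

```python
def combine_stages(stage1_errors, stage2_errors, max_errors=6, sample_size=150):
    """Combine error counts from both stages for one mode."""
    total = stage1_errors + stage2_errors
    return {
        "total_errors": total,
        "error_rate": total / sample_size,  # observed rate in the full sample
        "passes": total <= max_errors,
    }
```

A mode with 2 errors in Stage 1 and 3 in Stage 2 has 5 errors in 150 records and passes; one with 3 and 4 has 7 and does not.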

Task 5: Enter Combined Results in Wage Item Validation Worksheet

From your tracking worksheet, enter the total number of valid wage items in the “Recount for Batch” column on the Wage Item Validation Worksheet on the DV software. If any duplicates or other errors have been identified, enter the count by type in the appropriate columns on the worksheet.

Task 6: UI Tax Validation Software

The UI Tax Data Validation software calculates the difference between the recount and reported counts for the validated sample of wage items. Wage Item Validation passes if, based on the sample results, each mode is likely to contain no more than 2% errors. Pass and fail for a mode are determined as follows:

Pass:

The Stage 1 sample of 50 wage records contains no errors.

The full sample of 150 wage records contains no more than 6 errors.

Fail:

The Stage 1 sample of 50 wage records contains 4 or more errors.