WISEWOMAN National Program Evaluation

OMB: 0920-1279

Supporting Statement

Part A: Justification

August 2, 2019

Contact: Joanna Elmi

Division of Heart Disease and Stroke Prevention

Centers for Disease Control and Prevention

Atlanta, Georgia

Email address: [email protected]

CONTENTS

A. Justification

1. Circumstances Making the Collection of Information Necessary

2. Purpose and Use of the Information Collection

3. Use of Improved Information Technology and Burden Reduction

4. Efforts to Identify Duplication and Use of Similar Information

5. Impact on Small Businesses or Other Small Entities

6. Consequences of Collecting the Information Less Frequently

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

9. Explanation of Any Payment or Gift to Respondents

10. Protection of the Privacy and Confidentiality of Information Provided by Respondents

11. Institutional Review Board (IRB) and Justification for Sensitive Questions

12. Estimates of Annualized Burden Hours and Costs

13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

14. Annualized Cost to the Federal Government

15. Explanation for Program Changes or Adjustments

16. Plans for Tabulation and Publication and Project Time Schedule

17. Reason(s) Display of OMB Expiration Date is Inappropriate

18. Exceptions to Certification for Paperwork Reduction Act Submissions

LIST OF ATTACHMENTS

Attachment A: BREAST AND CERVICAL CANCER MORTALITY PREVENTION ACT OF 1990

Attachment B: PUBLIC HEALTH SERVICE ACT

Attachment C: PROGRAM SURVEY INSTRUMENT AND SUPPLEMENTARY DOCUMENTS

C1: PROGRAM SURVEY

C2: PROGRAM SURVEY INVITATION EMAIL

C3: PROGRAM SURVEY REMINDER EMAIL

Attachment D: SITE VISIT DATA COLLECTION INSTRUMENTS

D1: DISCUSSION GUIDE - GROUP INTERVIEW WITH KEY ADMINISTRATIVE STAFF

D2: DISCUSSION GUIDE - GROUP INTERVIEW WITH HEALTHY BEHAVIOR SUPPORT STAFF

D3: DISCUSSION GUIDE - GROUP INTERVIEW WITH STAFF AT PARTNER CLINICAL PROVIDERS

D4: DISCUSSION GUIDE - COMMUNITY PARTNERS

D5: DISCUSSION GUIDE - INNOVATION FUNDING RECIPIENTS

Attachment E: CONSENT FORM

Attachment F: FEDERAL REGISTER NOTICE

TABLES

Table A.1. Data collection efforts and evaluation component

Table A.2. Estimated annualized burden hours

Table A.3. Estimated annualized burden costs

Table A.4. Estimated annualized federal government cost distribution

Table A.5. Analytic approaches to answering evaluation questions

Table A.6. Illustrative table shell - Average baseline outcomes and average change in outcomes among WISEWOMAN participants

Table A.7. Illustrative table shell - Marginal effects of the WISEWOMAN program on outcomes (multivariate regression results)

Table A.8. Proposed project timeline

Goal of the study: The goal of the study is to assess the implementation of the WISEWOMAN program and measure the effect of the program on individual-, organizational-, and community-level outcomes.

Intended use of the resulting data: Results from the evaluation efforts will provide information about the annual and long-term performance of the program and help determine progress toward WISEWOMAN goals and outcomes. In addition, the study will document emerging, promising, and best practices that could be replicated and scaled up.

Methods to be used to collect: The study will use primary data collected through semi-structured site visit interviews and a program survey of WISEWOMAN funding recipients, as well as secondary participant-level service data provided by recipients.

The subpopulation to be studied: The evaluation will collect data from WISEWOMAN recipients (i.e., state, territorial, and tribal health departments) and their clinical providers, healthy behavior support providers, and community partners.

How data will be analyzed: Data will be analyzed using qualitative analysis methods, summary statistics such as mean outcome values at different follow-up periods, regressions (including linear and logistic models), and regression trees.

Supporting Statement Part A (Justification)

1. Circumstances Making the Collection of Information Necessary

This statement requests Office of Management and Budget (OMB) approval for three years to conduct new data collection for the evaluation of the Well-Integrated Screening and Evaluation for Women Across the Nation (WISEWOMAN) program under Funding Opportunity Announcement (FOA) DP18-1816. The WISEWOMAN program is authorized under a legislative supplement to the Breast and Cervical Cancer Mortality Prevention Act of 1990 (Public Law 101-354, see Attachment A). The Centers for Disease Control and Prevention’s (CDC’s) authority to collect information from WISEWOMAN program recipients is established by Section 301 of the Public Health Service Act [42 U.S.C. 241] (Attachment B).

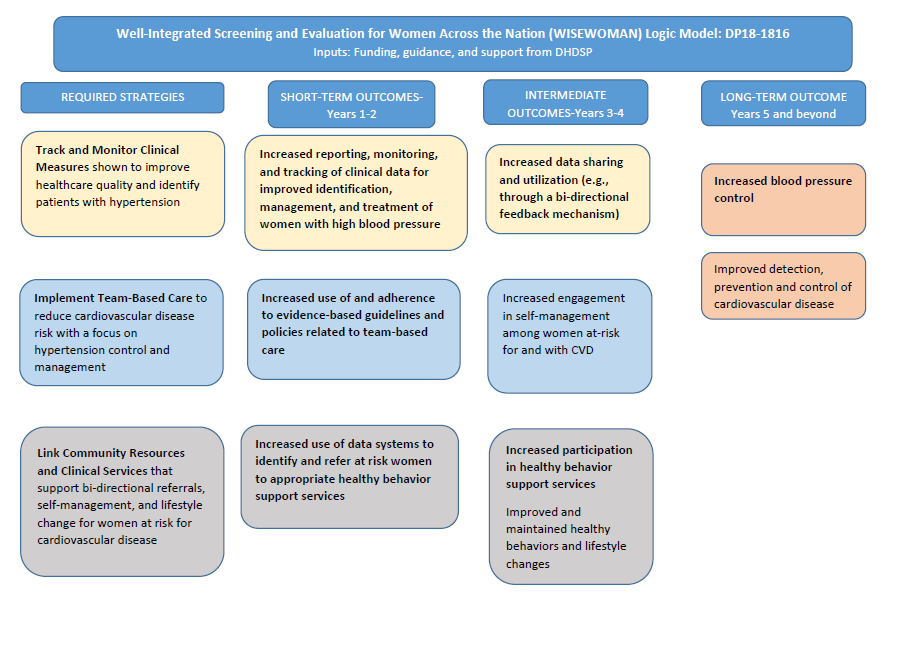

To address cardiovascular disease (CVD) risk among women and improve the health of the nation, the WISEWOMAN program has provided low-income, underinsured, or uninsured women ages 40 to 64 with services to support prevention, management, and treatment of cardiovascular disease since 1995. The program provides a unique combination of cardiovascular and chronic disease risk screening, healthy lifestyle support programs, and linkages to community resources. In 2018, CDC’s National Center for Chronic Disease Prevention and Health Promotion (NCCDPHP), Division of Heart Disease and Stroke Prevention (DHDSP) released its fifth notice of funding opportunity (NOFO) (DP18-1816) for the current WISEWOMAN program, which resulted in five-year cooperative agreements with 24 state, territorial, and tribal health departments, including 6 new and 18 continuing recipients from the previous NOFO. The current WISEWOMAN program emphasizes three strategies to reduce CVD risk and support hypertension control and management, including: (1) tracking and monitoring clinical measures, (2) implementing team-based care, and (3) linking community resources and clinical services to support care coordination, self-management, and lifestyle change. The 2018 cooperative agreement also included a competitive component that provided additional funding to seven recipients to support the implementation and evaluation of a small set of innovative strategies designed to reduce risks, complications, and barriers to the prevention and control of heart disease and stroke.

The purpose of the WISEWOMAN comprehensive evaluation is to assess the implementation of the program and measure the effect of the program on individual-, organizational-, and community-level outcomes. Results from evaluation efforts will provide information about the annual and long-term performance of the program activities to determine progress toward WISEWOMAN goals and outcomes and, to the extent feasible, document emerging, promising, and best practices that could be replicated and scaled up. DHDSP will use the results of the evaluation to improve interventions for disadvantaged women at risk for CVD that will contribute to reductions in morbidity and mortality in the nation. The WISEWOMAN evaluation is consistent with the needs of the CDC to meet its Government Performance and Results Act requirements.

The information collection for the evaluation of the WISEWOMAN program represents new data collection to complement the current data collection of minimum data elements (MDEs) (OMB # 0920-0612). The MDEs are collected on an ongoing basis and capture individual-level information about participants’ outcomes, services received, and demographics. Although the evaluation will use the MDEs to assess participant-level changes in outcomes, the MDEs do not provide sufficient information about qualitative measures of program implementation, lessons learned, and emerging, promising, and best practices used by WISEWOMAN recipients. To address these gaps, the new data collection includes a program survey and site visits with qualitative interviews. Information collected through these two activities will be used together with MDE data and other publicly available secondary data to evaluate the effect of the program in improving cardiovascular health among disadvantaged women.

2. Purpose and Use of the Information Collection

The information collected under this OMB request will provide the data necessary to support a thorough evaluation of the WISEWOMAN program and meet the specific goals of the evaluation. Underlying the evaluation of WISEWOMAN is the program logic model (Figure A.1). This framework was used to identify data elements related to program implementation and outcomes that are required in addition to MDEs, which are collected on an ongoing basis separately from these proposed data activities and capture individual-level information about participants’ outcomes, receipt of services, and demographics.

The purposes of the evaluation are aligned with WISEWOMAN program needs and objectives for accountability, programmatic decision making, and ongoing quality improvement. The evaluation of the WISEWOMAN program is focused around the following goals:

Provide information to assess implementation of the program

Provide evidence of program contribution to outcomes

Assess the relationship between program components and outcomes to identify the relative contribution of components to desired outcomes for programmatic decision making

Identify emerging, promising, and best practices in implementation, continued program improvement, replication, and dissemination [1]

Strengthen the evidence base for the WISEWOMAN program model, including use of community-clinical interventions to support cardiovascular health

Figure A.1. WISEWOMAN Logic Model

To reach these goals, the evaluation will consist of four components: a process evaluation, an outcomes evaluation, a targeted analysis of WISEWOMAN innovation funding recipients, and a summative evaluation. Each evaluation component will address one or more key evaluation questions:

Process evaluation: What are the emerging, promising, and best practices for program implementation? What are the challenges to program implementation?

Outcomes evaluation: What is the overall effect of WISEWOMAN on changes in outcomes?

Innovation analysis: What are the innovative approaches that WISEWOMAN recipients are implementing to reduce risks, complications, and barriers to the prevention and control of cardiovascular disease? [2]

Summative evaluation: What WISEWOMAN components and pathways are associated with improvements in outcomes? [3] What are recipients’ plans for sustaining this work?

The strength of the data collected for monitoring and evaluation will be critical to developing credible results. Table A.1 summarizes each data collection method and the evaluation components into which it will feed. The mixed-mode data collection approach will capture both quantitative measures of program activities, outputs, and outcomes and qualitative impressions of program implementation, lessons learned, and emerging, promising, and best practices. This data collection approach will generate results useful to policymakers and practitioners, informing them about the implementation and value of WISEWOMAN as a multifaceted intervention to promote cardiovascular health.

Table A.1. Data collection efforts and evaluation component

| Data collection method | Respondents | Process evaluation | Outcomes evaluation | Innovation analysis | Summative evaluation |
|---|---|---|---|---|---|
| Data collection requested under this OMB package | | | | | |
| Program survey | All WISEWOMAN recipients | ✓ | | ✓ | ✓ |
| Site visits | Recipient staff, providers, and partners from all WISEWOMAN recipients | ✓ | ✓ | ✓ | ✓ |
| Data from existing data sources | | | | | |
| Minimum data elements | All participants | ✓ | ✓ | ✓ | ✓ |
| Recipient applications, data management plans, evaluation and performance measurement plans, work plans, and evaluation products | All WISEWOMAN recipients | ✓ | ✓ | ✓ | ✓ |
| Recipient evaluation reports | All WISEWOMAN recipients | ✓ | | ✓ | ✓ |

Below, we discuss the specific use of the information collected under each method.

The program survey (Attachment C1) is designed to provide systematic information about the implementation of the WISEWOMAN program across its specified activities. The program survey will be fielded twice to collect data in the early and mature stages of program implementation. These data will be used for the process evaluation, innovation analysis, and summative evaluation to provide variables related to program components and intervention models that may explain outcomes. For the process evaluation, the information will be used to assess services offered and provided, intervention models used by recipients, and program achievements. For the innovation analysis, survey findings will provide context around program implementation that will help determine whether strategies used by the innovation funding recipients may be replicated by other recipients or programs. For the summative evaluation, the data will be used to assess specific program components or models and their association with outcomes.

Site visits (Attachments D1, D2, D3, and D4) will include key informant interviews of administrative staff, clinic staff, healthy behavior support service staff, and other partners that will cover several aspects of program activities, including staffing, services provided, populations reached and served, partnerships, networks, and reflections on challenges and successes. In addition to these topics, key informant interviews with the seven innovation funding recipients and their partner organizations will include questions about innovation strategy implementation, effectiveness, and scalability (Attachment D5). Qualitative information from the site visits will be used mainly to assess program implementation and identify and describe emerging, promising, and best practices throughout the process evaluation and the innovation analysis. In addition, qualitative information about the nuances of program implementation may provide context to quantitative outcomes for the outcomes and summative evaluations.

3. Use of Improved Information Technology and Burden Reduction

Program survey. The program survey will comply with the Government Paperwork Elimination Act (Public Law 105-277, Title XVII) by employing technology efficiently in an effort to reduce burden on respondents. The program survey for 24 respondents will use an editable PDF format. The editable PDF allows respondents to easily change responses. This format was used in 2015 and 2018 to administer a similar program survey of WISEWOMAN administrative staff, and respondents reported that the mode was easy to use. The self-administered format allows respondents to complete the survey at a day and time that is most convenient for them, with the option of completing the questionnaire over multiple sessions, as needed. The instrument solicits only information that corresponds to the specific research items discussed in Section A.2, above. No superfluous or unnecessary information is being requested of respondents.

Site Visits. Because these are qualitative data collection efforts, CDC will not use information technology to collect information from the 189 persons contacted in the site visits. These staff, providers, and partners account for 7 key informant interviews at each of 17 WISEWOMAN recipients and 10 key informant interviews at each of the 7 WISEWOMAN recipients that also received innovation funding. Because the data collection is qualitative in nature and requires information from a relatively small number of individuals, it is not appropriate, practical, or cost-beneficial to build electronic instruments to collect the information. All information will be collected orally and in person using discussion guides, supported by digital recordings. Site visit transcripts will be analyzed in NVivo, a software system used for the qualitative analysis of large amounts of data collected in text format.
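The respondent total above can be checked with a short calculation. The following is a minimal sketch in Python that uses only the counts stated in this section; the constant and function names are illustrative and are not part of any CDC or contractor system.

```python
# Site visit respondent counts, taken from the text of Section A.3.
# All names below are illustrative assumptions, not an existing tool.
STANDARD_RECIPIENTS = 17       # recipients visited with the standard interview set
INNOVATION_RECIPIENTS = 7      # recipients that also received innovation funding
INTERVIEWS_STANDARD = 7        # key informant interviews per standard site visit
INTERVIEWS_INNOVATION = 10     # key informant interviews per innovation site visit


def total_site_visit_respondents() -> int:
    """Return the total number of persons contacted across all site visits."""
    standard = STANDARD_RECIPIENTS * INTERVIEWS_STANDARD          # 17 * 7 = 119
    innovation = INNOVATION_RECIPIENTS * INTERVIEWS_INNOVATION    # 7 * 10 = 70
    return standard + innovation


print(total_site_visit_respondents())  # 189
```

The two recipient groups sum to the 24 recipients funded under the cooperative agreement.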

4. Efforts to Identify Duplication and Use of Similar Information

The information that we propose to collect under this OMB package is not available elsewhere. The WISEWOMAN program currently supports data collection of MDEs from recipients on screening and assessment, lifestyle program, and health coaching activities, outputs, cardiovascular risk, and outcomes (OMB # 0920-0612). While we plan to use the MDE data collected, there are no existing data sources that provide systematic or in-depth data on recipient implementation, which will be necessary to assess program implementation in relation to outcomes. In addition, we will use program data collected through other mechanisms, such as funding applications and recipient evaluation reports, whenever possible to supplement requested data. To the extent that they are available, we will use data from secondary sources to provide contextual community and program information over the period of the cooperative agreement. However, data from existing sources are not sufficient to evaluate the program. We describe the efforts to identify duplication and use of similar information for each data collection effort below.

Program Survey. CDC sought to avoid duplication of effort in the design of the form by adapting questions from the previous WISEWOMAN program survey (OMB #0920-1068). Twenty-one questions were deleted because they were no longer required to address the evaluation questions (presented in A.6 below). In addition, 15 questions were added and 13 questions were revised to reflect changes in program priorities, such as an emphasis on use of data to conduct program activities and strategies used to address disparities in cardiovascular health.

Site Visit Data Collection Instruments. CDC revised the site visit data collection instruments (Attachments D1, D2, D3, and D4) that were used during the last cooperative agreement (OMB #0920-1068). Questions were added, deleted, and revised to address changes in program priorities. In addition, CDC developed a new interview protocol to collect information from the seven recipients receiving innovation funding (Attachment D5).

5. Impact on Small Businesses or Other Small Entities

The comprehensive WISEWOMAN evaluation does not target small businesses or other small entities for participation in data collection activities. Individuals selected for interviews may be representatives of small businesses, but there are no specific requirements for small businesses to participate.

Program Survey. The program survey will be conducted with all 24 WISEWOMAN recipients. The survey will occur during the second and fourth program years. The WISEWOMAN recipients are state, territorial, and tribal health departments. We minimize burden by designing the instrument to include only the questions needed for the evaluation. The program survey instrument will be administered in editable PDF format so that respondents can stop and return to the survey as their schedules allow.

Site Visits. This component of the evaluation was designed to minimize the burden on key informants and participants. In each of Program Years 2, 3, 4, and 5, a small burden will be placed on between five and seven WISEWOMAN recipients, when a few of their staff and partner organization representatives will be invited to participate in a site visit. Each program will be visited once during the cooperative agreement. The method for selecting the recipients that will be visited each year is described in Supporting Statement B. During the site visits, the key informant interviews will be conducted in person. Burden will be minimized by restricting the interviews to 45 to 120 minutes and conducting them at a time and location that is convenient for the key informant.

6. Consequences of Collecting the Information Less Frequently

Below, we discuss the consequences of collecting the information less frequently for each data collection activity.

Program survey. To obtain a complete picture of WISEWOMAN implementation and contribution to systems over time, recipients will be asked to complete the program survey twice: during Program Years 2 and 4. Recipients will respond to the same questions in both rounds of the survey to capture changes in implementation and systems between the beginning and end of the cooperative agreement. The information collected from the first round will be used in the process evaluation to assess program implementation, and in the innovation analysis to assess the scalability of strategies implemented by the innovation funding recipients. Information from both survey periods will be used in the summative evaluation to measure variation in implementation and systems progress over the course of the cooperative agreement, which can be used by CDC to identify gaps in implementation and approaches to improve it. Changes over time in implementation and systems will also be linked to changes in outcomes to identify factors associated with better outcomes. The findings from these analyses can be used to identify the best and promising practices associated with better outcomes for purposes of replication and scale-up. Collecting information from all recipients at a single point in time (one round of the program survey) would allow implementation and systems measured at that point to be linked to changes in outcomes. However, if the program survey were limited to a single round, CDC could not examine how implementation progressed over the cooperative agreement or link changes in implementation to changes in outcomes in the summative evaluation.

Site visits. Between five and seven recipients will participate in site visits in each of Program Years 2 through 5, resulting in 24 visits total across the three calendar years of data collection. Each recipient will receive one site visit. There will be no additional qualitative information collection under this OMB request. Data collection at the site level will enable us to observe program implementation directly and provide opportunities to interact with a wide variety of program staff and partners to understand the program context at a deeper level. Information collected through the site visits can be used to identify emerging, promising, and best practices in the process evaluation. In addition, this information can be used to describe recipients’ characteristics in the outcomes and summative evaluations and the innovation analysis, which can help identify promising and best practices.

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

This request fully complies with 5 CFR 1320.5. There are no special circumstances.

8. Comments in Response to the Federal Register Notice and Efforts to Consult Outside the Agency

A 60-day Federal Register Notice was published in the Federal Register on May 30, 2019, Vol. 84, No. 104, pp. 25058-25059 (see Attachment F). CDC did not receive any comments on the 60-day Notice.

There were no additional efforts to consult outside the agency.

9. Explanation of Any Payment or Gift to Respondents

Program survey respondents will not receive a monetary token of appreciation for their participation. Likewise, respondents for the in-depth interviews conducted during the site visits will not be provided with a monetary token of appreciation, as information will be collected as part of the participation process for recipients and will be essential for providing, targeting, and improving services for program participants. Participation in the survey data collection and the site visit interviews is part of WISEWOMAN administrative staff members’ professional positions as members of recipient organizations or their partners.

10. Protection of the Privacy and Confidentiality of Information Provided by Respondents

The CIO’s Information Systems Security Officer reviewed this submission; the determination of whether the Privacy Act applies is still under review. CDC’s contractors, General Dynamics Information Technology (GDIT) and Mathematica Policy Research, will have access to personally identifiable information for program survey respondents and site visit respondents. This information will be used to contact potential respondents to invite them to participate in the program survey and site visits, and for non-response follow-up for the program survey.

Below is an overview of the steps to be taken to ensure the privacy of respondents for the two data collection efforts under this request for OMB clearance, including the mode of data collection and targeted respondents; identifiable information to be collected; parties responsible for data collection, transmission, and storage; and parties with access to the data and uses of the data. Ultimately, all data files shared with CDC by the study contractors will be stripped of identifying information to maintain the privacy of those who participated in the evaluation.

The Program Survey (Attachment C1) is designed for self-administration through an editable PDF. Program managers may delegate completion of sections of the survey to other WISEWOMAN staff, but only one survey will be submitted per recipient in each survey round. No individually identifiable information about the respondents will be collected; only the identifying information for the recipient agencies will be included with the survey submission. Mathematica will assist CDC in administering the survey. Respondents will be instructed on how to return the survey by email, and data will be stored on secure servers by Mathematica. Data from the program survey will be compiled into a SAS dataset for analysis. In the program survey data file, personally identifiable information, such as the name of the respondent, his or her email address, and the name of the organization, may be included in the initial data files. However, these identifiers will be delinked and ultimately removed from the final dataset, as unique identifiers will be assigned to each case. At the end of data collection and analysis, data will be permanently destroyed on the contractor servers. Mathematica will provide CDC with descriptive summary tables of survey results to facilitate discussions about evaluation findings. Data will then be analyzed and presented in the aggregate in tables and figures in reports. Because the number of potential respondents to the program survey is small (N=24), care will be taken in the reporting of findings to minimize the potential of identifying any single respondent in any reports or publications associated with the evaluation. Activities of specific recipient agencies may be mentioned in reports; however, individual respondents will not be identified in any materials.

Site Visits (Attachments D1, D2, D3, D4, and D5) will include key informant interviews with four types of informants: WISEWOMAN program directors and administrative staff, WISEWOMAN healthy behavior support staff, health care providers, and partner organization representatives. Mathematica staff will conduct the site visits, and CDC may choose to attend the site visits to listen in on the interviews. The interviews will be recorded and transcribed (only first names of respondents and the recipient agencies’ identifying information will be collected); all information will be transmitted and stored securely on the Mathematica servers. Site visit transcriptions will be coded and uploaded into a qualitative database by Mathematica, using software such as NVivo. Key themes will be developed based on the qualitative data analysis. Identified themes and quotes may be included in reports; specific quotes will not be attributed to any single person in any reports. Original recordings and transcriptions from the site visits will not be shared with CDC to protect key informant privacy, though de-identified notes highlighting key findings may be shared with CDC. To protect key informant privacy, recordings and transcripts will be destroyed at the end of the project.

Participation in data collection efforts will be voluntary for all recipients, their staff, and their partners identified as potential respondents. As part of establishing communication for the data collection efforts, potential respondents will be sent information about the study and what is required for participation. The elements of consent will be explained in these communications (see Attachments B, C and D). Respondents will be informed that they may refuse to answer any question, and can stop at any time without any known risks to participation. All data collected from the survey and site visits will be treated in a secure manner and will not be disclosed, unless otherwise compelled by law. Survey and site visit interview data will be stored by Mathematica on secure servers. Only approved members of the project team at GDIT and Mathematica will have access to the data collected through the two data collection efforts for the purposes of analysis and reporting. Data management procedures have not changed since the previous approval (OMB #0920-1068).

11. Institutional Review Board (IRB) and Justification for Sensitive Questions

IRB approval

In addition to specific security procedures for the various data collection activities, two approaches cut across the entire study. First, all contractor employees will sign a pledge to protect the privacy of data and respondent identity, and breaking that pledge is grounds for immediate dismissal and possible legal action. Second, the contractor provided the Institutional Review Board (IRB) with an overview of all of the data collection activities supporting the evaluation. The IRB determined that the proposed project does not involve research with human subjects, and that IRB approval is not required.

Sensitive Questions

The program survey and in-depth interviews conducted during the site visits will not contain any sensitive items. Although the Privacy Act does not apply to organizations, CDC acknowledges that information collection pertaining to organizational policies, performance data, or other practices may be viewed as sensitive if disclosure of such information could result in liability or competitive disadvantage to the organization. No such ramifications will exist for WISEWOMAN recipients. The information they provide will focus on program operations, challenges, and impacts on the populations they serve. These data will be used to identify areas for program improvement broadly, with no negative consequences for any single recipient or recipient partner. This information will be communicated in writing during the survey introductions and is part of the consent form signed by all persons engaging in site visit interviews.

12. Estimates of Annualized Burden Hours and Costs

In this section, we provide detailed information about the anticipated burden and cost estimates for each component of data collection in the WISEWOMAN evaluation. Tables A.2 and A.3 provide a summary of the annual burden hours and costs across the three years of data collection.

Program survey (Attachment C1). The burden estimate for this data collection effort is 60 minutes per respondent per survey year. The survey instrument is preceded by a survey invitation (Attachment C2) and may be followed by one or more reminder emails (Attachment C3). The survey instrument will be completed twice, once in Program Year 2 and once in Program Year 4; we annualize the burden across the three data collection years in Tables A.2 and A.3. We anticipate that the survey will be completed by the recipient program manager who is most closely involved in WISEWOMAN implementation activities. The annualized cost burden is estimated using an hourly wage of $48.27, the BLS median hourly wage for managerial positions (general, operational) as of 2017 [4]. The burden estimate for the program survey was confirmed through pre-testing activities conducted with recipient respondents in 2014. Although CDC revised the survey instrument to reflect changes in the program model, the length of the survey is not expected to change because a similar number of items were added and deleted. Furthermore, the level of effort required to respond to new and deleted items is similar: the question format and length of new items mirror the deleted survey items.
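The annualization described above can be illustrated with a short calculation. This is a sketch only, written in Python and assuming that one hour per recipient per round is spread evenly over the three data collection years and that the invitation and reminder emails carry no separate burden (the text assigns them none). The names are illustrative, and the figures in Tables A.2 and A.3 remain the authoritative estimates.

```python
# Annualized program survey burden, using only figures stated in the text.
# Names are illustrative; Tables A.2 and A.3 are the authoritative source.
RESPONDENTS = 24            # one survey per WISEWOMAN recipient
SURVEY_ROUNDS = 2           # Program Years 2 and 4
HOURS_PER_RESPONSE = 1.0    # 60 minutes per respondent per survey year
COLLECTION_YEARS = 3        # years over which the burden is annualized
HOURLY_WAGE = 48.27         # BLS median hourly wage cited in the text (2017)


def annualized_survey_burden():
    """Return (annual burden hours, annual cost in dollars) for the survey."""
    total_hours = RESPONDENTS * SURVEY_ROUNDS * HOURS_PER_RESPONSE
    annual_hours = total_hours / COLLECTION_YEARS
    annual_cost = round(annual_hours * HOURLY_WAGE, 2)
    return annual_hours, annual_cost


hours, cost = annualized_survey_burden()
print(f"{hours:.1f} hours per year, ${cost:,.2f} per year")
```

Under these assumptions, the 48 total survey hours annualize to 16 hours per year.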

Site visits. The site visits will occur at 24 recipient programs. Site visitors will conduct six site visits per year in Program Years 2 and 3, seven site visits in Program Year 4, and five site visits in Program Year 5, for a total of 24 site visits across the three years of data collection. Each site visit will include a standard set of interviews with four types of staff (estimates are total burden estimates per respondent): 1 program administrator (Attachment D1; 90 minutes); 2 healthy behavior support staff (Attachment D2; 60 minutes each); 2 medical providers (Attachment D3; 60 minutes each); and 2 partner organization staff (Attachment D4; 60 minutes each). In addition, the seven site visits conducted during Program Year 4 with innovation funding recipients will include a supplemental set of interview questions about recipients’ innovative strategies to deliver WISEWOMAN services (Attachment D5; 45 minutes per respondent). The supplemental questions will be administered to the following respondents: the program administrator (for a total of 135 minutes of interviews); an additional healthy behavior support staff member; an additional medical provider; and an additional partner organization staff member. The cost burden for program administrator staff (recipient and partners) is estimated at $48.27 per hour based on the BLS median hourly wage for all managerial positions as of 2017.5 The cost burden for recipient partners is estimated at $47.29 per hour based on the median wage for managerial positions in medical or health services management organizations as of 2017.6 For the healthy behavior support staff, the cost burden is estimated at $26.28 per hour based on the BLS median wage for health care social workers as of 2017.7 For medical providers participating in site visits, the cost burden is estimated at $95.55 per hour based on the BLS median hourly wage for family and general practitioners as of 2017.8
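The interview time described above can be tallied with a short calculation. The following is a minimal sketch in Python; the variable names are illustrative only, and every number is taken from the paragraph above.

```python
# Site visits planned per program year, as stated in the text.
site_visits_by_year = {"PY2": 6, "PY3": 6, "PY4": 7, "PY5": 5}

# Standard interview set per site visit: (number of respondents, minutes each).
standard_interviews = {
    "program administrator (D1)": (1, 90),
    "healthy behavior support staff (D2)": (2, 60),
    "medical providers (D3)": (2, 60),
    "partner organization staff (D4)": (2, 60),
}

SUPPLEMENT_MINUTES = 45      # innovation questions (D5), per respondent
DATA_COLLECTION_YEARS = 3    # burden is annualized over three years

total_visits = sum(site_visits_by_year.values())
minutes_per_visit = sum(n * m for n, m in standard_interviews.values())
total_standard_hours = total_visits * minutes_per_visit / 60
annualized_standard_hours = total_standard_hours / DATA_COLLECTION_YEARS
admin_minutes_with_supplement = 90 + SUPPLEMENT_MINUTES

print(total_visits)                   # 24
print(minutes_per_visit)              # 450
print(annualized_standard_hours)      # 60.0
print(admin_minutes_with_supplement)  # 135
```

The 60 annualized hours agree with the sum of the standard site visit rows in Table A.2 (12 + 16 + 16 + 16).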

No pre-testing is planned for the site visit interview guides. During the development and implementation process, careful attention will be paid to fitting the content covered within the time allocated. Staff conducting these interviews will reduce the number of items covered, as needed, during the course of the interview to adhere to the burden estimates described above.

Table A.2. Estimated annualized burden hours

| Type of Respondents | Form Name | No. of Respondents | No. of Responses per Respondent | Avg. Burden per Response (in hr) | Total Burden (in hr) |
| --- | --- | --- | --- | --- | --- |
| WISEWOMAN Recipient Administrators | Program Survey (Attachment C1) | 16 | 1 | 1 | 16 |
| | Site Visit Discussion Guide (Attachment D1) | 8 | 1 | 90/60 | 12 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 1 | 45/60 | 2 |
| Recipient Partners | Site Visit Discussion Guide (Attachment D4) | 16 | 1 | 1 | 16 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 1 | 45/60 | 2 |
| Healthy Behavior Support Staff | Site Visit Discussion Guide (Attachment D2) | 16 | 1 | 1 | 16 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 1 | 45/60 | 2 |
| Clinical Providers | Site Visit Discussion Guide (Attachment D3) | 16 | 1 | 1 | 16 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 1 | 45/60 | 2 |
| Total | | | | | 84 |
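The row totals in Table A.2 follow from multiplying respondents, responses per respondent, and hours per response. The sketch below reproduces that arithmetic in Python. The rounding rule (half up, to whole hours) is an assumption inferred from the 2 x 45/60 rows, which the table reports as 2 hours; the names are illustrative.

```python
import math
from fractions import Fraction

# Rows of Table A.2:
# (respondent type, attachment, respondents, responses each, hours per response)
TABLE_A2 = [
    ("WISEWOMAN Recipient Administrators", "C1", 16, 1, Fraction(1)),
    ("WISEWOMAN Recipient Administrators", "D1", 8, 1, Fraction(90, 60)),
    ("WISEWOMAN Recipient Administrators", "D5", 2, 1, Fraction(45, 60)),
    ("Recipient Partners", "D4", 16, 1, Fraction(1)),
    ("Recipient Partners", "D5", 2, 1, Fraction(45, 60)),
    ("Healthy Behavior Support Staff", "D2", 16, 1, Fraction(1)),
    ("Healthy Behavior Support Staff", "D5", 2, 1, Fraction(45, 60)),
    ("Clinical Providers", "D3", 16, 1, Fraction(1)),
    ("Clinical Providers", "D5", 2, 1, Fraction(45, 60)),
]


def burden_hours(respondents, responses, hours_per_response):
    """Total burden for one row, rounded half up to whole hours (assumed rule)."""
    exact = respondents * responses * hours_per_response
    return math.floor(exact + Fraction(1, 2))


row_totals = [burden_hours(n, r, h) for _, _, n, r, h in TABLE_A2]
total_burden_hours = sum(row_totals)

print(row_totals)          # [16, 12, 2, 16, 2, 16, 2, 16, 2]
print(total_burden_hours)  # 84
```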

For the recipient partners, healthy behavior support staff, and clinical providers, we estimate that approximately 60% of respondents will be from the state/local/tribal government sector, and 40% of respondents will be from the private sector.

The total estimated annualized cost to respondents is $4,492.

Table A.3. Estimated annualized burden costs

| Type of Respondents | Form Name | No. of Respondents | Total Burden (in hr) | Hourly Wage Rate | Total Cost |
| --- | --- | --- | --- | --- | --- |
| WISEWOMAN Recipient Administrators | Program Survey (Attachment C1) | 16 | 16 | $48.27 | $772 |
| | Site Visit Discussion Guide (Attachment D1) | 8 | 12 | $48.27 | $579 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 2 | $48.27 | $97 |
| Recipient Partners | Site Visit Discussion Guide (Attachment D4) | 16 | 16 | $47.29 | $756 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 2 | $47.29 | $95 |
| Healthy Behavior Support Staff | Site Visit Discussion Guide (Attachment D2) | 16 | 16 | $26.28 | $420 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 2 | $26.28 | $53 |
| Clinical Providers | Site Visit Discussion Guide (Attachment D3) | 16 | 16 | $95.55 | $1,529 |
| | Innovation Site Visit Discussion Guide (Attachment D5) | 2 | 2 | $95.55 | $191 |
| Total | | | | | $4,492 |
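The total in Table A.3 is the sum of burden hours multiplied by the hourly wage rate for each respondent type. The sketch below checks that arithmetic in Python using exact decimal values. It rounds only the grand total to whole dollars, which is an assumption; the table reports each row in whole dollars, and individual rows may differ by a dollar depending on the rounding rule applied.

```python
from decimal import Decimal, ROUND_HALF_UP

# (respondent type, annualized burden hours summed across forms, hourly wage)
# Hours and wages are taken from Table A.3.
COST_GROUPS = [
    ("WISEWOMAN Recipient Administrators", 16 + 12 + 2, Decimal("48.27")),
    ("Recipient Partners", 16 + 2, Decimal("47.29")),
    ("Healthy Behavior Support Staff", 16 + 2, Decimal("26.28")),
    ("Clinical Providers", 16 + 2, Decimal("95.55")),
]


def whole_dollars(amount):
    """Round a Decimal dollar amount half up to the nearest dollar."""
    return int(amount.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


group_costs = {name: hours * wage for name, hours, wage in COST_GROUPS}
total_cost = sum(group_costs.values(), Decimal("0"))

print(total_cost)                 # 4492.26
print(whole_dollars(total_cost))  # 4492
```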

13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

There are no capital or start-up costs to respondents associated with this data collection.

14. Annualized Cost to the Federal Government

Table A.4 presents the two types of costs to the government that will be incurred: (1) external contracted data collection and analyses and (2) government personnel.

1. The project is being conducted under a contract that was awarded on September 24, 2018. The contract is for a total of 5 years, including the three years of data collection activities. The annualized cost of the data collection task for the data contractor is estimated at $150,000, including travel for site visits.

2. Governmental costs for this project include personnel costs for federal staff involved in providing oversight and guidance for the planning and design of the assessment, refinement of the data collection tools, development of OMB materials, collection and analysis of the data, and reporting. These activities involve approximately 5% of the time of two GS-12 health scientists and 5% of the time of one GS-13 health scientist. The annualized cost of federal staff to the federal government is $12,671.

The total annualized cost to the federal government for the duration of this data collection is $162,671.

Table A.4. Estimated annualized federal government cost distribution

| Type of Government Cost | Annualized Cost |
| --- | --- |
| Data contractor | $150,000 |
| Federal staff | $12,671 |
| GS-12 health scientist at 5% FTE | $3,785 |
| GS-12 health scientist at 5% FTE | $3,785 |
| GS-13 health scientist at 5% FTE | $5,101 |
| Total | $162,671 |
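The totals in Table A.4 can be verified with a simple sum. A minimal sketch in Python, using only the figures in the table (the names are illustrative):

```python
# Annualized contractor cost, from Table A.4.
contractor_cost = 150_000

# Annualized federal staff costs at 5% FTE each, from Table A.4.
federal_staff_costs = {
    "GS-12 health scientist (first)": 3_785,
    "GS-12 health scientist (second)": 3_785,
    "GS-13 health scientist": 5_101,
}

federal_staff_total = sum(federal_staff_costs.values())
total_government_cost = contractor_cost + federal_staff_total

print(federal_staff_total)    # 12671
print(total_government_cost)  # 162671
```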

15. Explanation for Program Changes or Adjustments

This is a new data collection.

16. Plans for Tabulation and Publication and Project Time Schedule

Analysis plan

The overarching evaluation design is a mixed-methods approach that will provide a comprehensive assessment of the WISEWOMAN program. Each component of the design builds on the previous components and informs the subsequent components. The evaluation also considers the multiple levels at which the program operates to improve outcomes (participant, recipient, and community levels) and the increased program emphasis on use of innovative strategies for CVD identification and treatment and engaging women in becoming informed and activated in their own CVD self-management.

Each proposed evaluation component and its corresponding analytic approaches are intended to answer one of the four evaluation questions. The proposed data collection activities support one or more of the four evaluation components. Table A.5 lists the four evaluation questions linked to the evaluation components, data collection activities, and analytic approaches that will provide the evidence to help answer the questions. The outputs and outcomes assessed are those shown in the logic model (Figure A.1 in Section A.2).

Table A.5. Analytic approaches to answering evaluation questions

| | Evaluation Question (Evaluation Component) | Data Source(s)a | Analytic Approaches |
| --- | --- | --- | --- |
| 1 | What are the emerging, promising, and best practices for program implementation? What are the challenges to program implementation? (Process evaluation) | Program survey, site visits, and existing data sources | Qualitative assessment to examine the processes and procedures recipients use to recruit and enroll participants; conduct cardiovascular disease risk screenings; provide healthy behavior support services; link to community resources; and track participation, service receipt, and outcomes. The qualitative assessment relies on coding of transcripts and notes from site visits (as well as various existing data sources). The purpose of the coding is to triangulate on key themes within the qualitative data collected and to organize it in a manner that permits comparisons of data from different sources. Quantitative assessment to describe program participation and receipt of services and referrals. The quantitative descriptive assessment includes the development of metrics (primarily from the program survey) to evaluate implementation and performance, such as progress toward program performance and enumeration of services provided by participants’ characteristics and risk. Both assessments can be used to examine the differences in stages of implementation to assess facilitators and barriers to implementation. |
| 2 | What is the effect of the WISEWOMAN program on changes in outcomes? (Outcomes evaluation) | Site visits and existing data sources | Longitudinal analysis of changes in outcomes among WISEWOMAN participants over time: descriptive and multivariate analyses and an analysis of disparities. The primary data source for the outcomes is the MDEs collected for all participants over time by CDC. The descriptive analysis includes summaries of the mean values for outcomes at the first available time period and the mean values for changes in outcomes at each subsequent time period. (See Table A.6 for an example of how outcomes results can be presented using contextual information from new data collection.) The multivariate analysis adds key participant-, recipient-, and community-level variables to the bivariate analysis of changes in outcomes over time. The explanatory variables of interest will be taken from the MDEs as well as the site visits. Methods include ordinary least squares and logistic regression frameworks with an indicator variable for the time period to capture the change over time. (See Table A.7 for an example of how results can be presented using contextual information from new data collection.) One approach to the disparities analysis is to examine how the difference between the outcomes for two groups of WISEWOMAN participants (for example, white participants and black/African American participants) changes over time. This analysis will highlight whether different subgroups of women benefit similarly from the program. Key variables to examine in this analysis include race, ethnicity, and education level. |
| 3 | What are the innovative approaches that WISEWOMAN recipients are implementing to reduce risks, complications, and barriers to the prevention and control of cardiovascular disease? (Innovation analysis) | Program survey, site visits, and existing data sources | Qualitative assessment to describe the work of the seven recipients who have received innovation funding, highlight best practices and lessons learned among these recipients, and examine whether these strategies could be replicated and scaled. |
| 4 | What WISEWOMAN components and pathways are associated with improvements in outcomes? What are recipients’ plans for sustaining this work? (Summative evaluation) | Program survey, site visits, and existing data sources | Combines and synthesizes the information collected through the three years of data collection and the findings of the process evaluation and outcomes evaluation. The objective is to identify community, recipient, and participant characteristics associated with better or poorer participant outcomes. In the quantitative component, we will use both multivariate longitudinal analysis, as already described, and regression trees. Regression trees will help to identify characteristics and combinations of characteristics associated with changes in outcomes over time in a compact, succinct way. We will also consider statistical approaches, including hierarchical linear modeling, to account for our expectation that women receiving services from the same WISEWOMAN recipients are likely to have more similar changes in outcomes over time than women receiving services from different WISEWOMAN recipients. |

a In addition to new data collection, there are existing data sources available for the evaluation including: MDEs, recipient applications, annual performance reports, data management plans, evaluation and performance measurement plans, work plans, and evaluation products.
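To illustrate the regression framing named in Table A.5 (ordinary least squares with an indicator variable for the time period), the sketch below fits a one-indicator model in plain Python. It is a simplified illustration only, not the evaluation's analysis code: the blood pressure values are made-up numbers rather than WISEWOMAN data, the helper name is hypothetical, and the planned models would add participant-, recipient-, and community-level covariates and use logistic regression for binary outcomes.

```python
from statistics import mean


def ols_slope_intercept(x, y):
    """Closed-form OLS fit of y = intercept + slope * x for a single regressor."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Made-up systolic blood pressure readings (mm Hg), for illustration only.
baseline = [140, 150, 145, 155]
follow_up = [135, 142, 140, 147]

# Indicator variable: 0 = baseline, 1 = follow-up period.
period = [0] * len(baseline) + [1] * len(follow_up)
outcome = baseline + follow_up

slope, intercept = ols_slope_intercept(period, outcome)

# With only an intercept and a 0/1 indicator, the slope equals the difference
# in mean outcome between follow-up and baseline, and the intercept equals
# the baseline mean.
print(slope)      # -6.5
print(intercept)  # 147.5
```

The coefficient on the time-period indicator is the estimated change from baseline, which is the quantity the table shells in Tables A.6 and A.7 are designed to report.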

Table A.6. Illustrative table shell - Average baseline outcomes and average change in outcomes among WISEWOMAN participants

| | Average baseline level | | | Average change | | | Average change | | | Average change | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Outcomes domains | Overall | Program trait 1b | Program trait 2 | Overall | Program trait 1 | Program trait 2 | Overall | Program trait 1 | Program trait 2 | Overall | Program trait 1 | Program trait 2 |
| Risk reduction counseling | | | | | | | | | | | | |
| Hypertension/blood pressure control | | | | | | | | | | | | |
| Blood pressure monitoring | | | | | | | | | | | | |
| Cholesterol | | | | | | | | | | | | |
| Diabetes | | | | | | | | | | | | |
| Medication adherence | | | | | | | | | | | | |
| Cardiovascular risk factors | | | | | | | | | | | | |
| Diet | | | | | | | | | | | | |
| Exercise | | | | | | | | | | | | |
| Tobacco use | | | | | | | | | | | | |
| Alcohol use | | | | | | | | | | | | |
| BMI | | | | | | | | | | | | |
| Quality of life | | | | | | | | | | | | |
| Alert values | | | | | | | | | | | | |
| Referrals | | | | | | | | | | | | |
| Completed referrals | | | | | | | | | | | | |

a WISEWOMAN participants return for follow-up on a rolling basis, so rather than examining change over time based on program years, we use the number of follow-ups each woman has received.

b For a full detailed list of the outcomes to be examined in the outcomes evaluation, see section III in Table B.1 (Attachment C)

c We can examine how outcomes and changes in outcomes vary by various characteristics of the communities and programs and aggregate participant characteristics. The program traits represent the characteristics that can be defined using the information to be collected in the program survey and site visits.

BMI = body mass index

Table A.7. Illustrative table shell – Marginal effects of the WISEWOMAN program on outcomes (multivariate regressions results)

| Outcomes domainsa | Follow-Up Period 1 | Follow-Up Period 2 | Follow-Up Period 3 |
| --- | --- | --- | --- |
| Risk reduction counseling | | | |
| Overall | Marginal effect (SE)b | Marginal effect (SE) | Marginal effect (SE) |
| Program trait 1c | … | | |
| Program trait 2 | | | |
| Hypertension/blood pressure control | | | |
| Overall | | | |
| Program trait 1 | | | |
| Program trait 2 | | | |
| Cholesterol | | | |
| Overall | | | |
| Program trait 1 | | | |
| Program trait 2 | | | |
| Diabetes | | | |
| Overall | | | |
| Program trait 1 | | | |
| Program trait 2 | | | |

a For a full detailed list of the outcomes to be examined in the outcomes evaluation, see Table B.1 (Attachment C).

b In the longitudinal analysis, we will report the marginal effect, that is, the estimated change in outcomes, comparing baseline to each follow-up period (follow-up periods are defined based on participants’ follow-up visits).

c We can examine how outcomes and changes in outcomes vary by various characteristics of the communities and programs and aggregate participant characteristics. The program traits represent the characteristics that can be defined using the information to be collected in the program survey and site visits.

BMI = body mass index

Reports

Results from the evaluations will be summarized in four brief reports—one report for each of the process (Program Year 2), outcomes (Program Year 3), and summative evaluations (Program Year 5), as well as an updated outcomes evaluation report in Program Year 4 using available rescreening data. In addition, findings from the Program Year 4 evaluation of recipients receiving innovation funding will be summarized in recipient case studies. For each program year, infographics will be developed based on evaluation findings, and recipient profiles will be developed or updated.

Each product plays an important role, and taken together, the findings presented provide the most complete picture of the WISEWOMAN program. In addition to the annual evaluation reports and innovation recipient case studies, opportunities will be identified to present preliminary findings throughout the evaluation period (for example, sharing results tables during calls with recipients or briefings to CDC staff). The findings presented in these preliminary products do not represent additional findings beyond what will ultimately be presented in the four evaluation reports. They are chiefly an opportunity to share the findings prior to the full reports. Additional publications may include peer-reviewed journal articles and issue briefs to disseminate results to the broader community of policymakers and practitioners involved in the prevention and study of cardiovascular disease.

All four reports will include a description of the relevant evaluation methodology, data collection instruments, data analysis procedures, a summary of and results from quantitative and qualitative analyses, as well as conclusions on program performance and implications for program planning. The reports will be tailored to stakeholder needs, recognizing that these reports may be used for a variety of purposes. We provide a brief summary of the timing and content of each of the products produced as part of the evaluation:

Process evaluation report. The process evaluation report will provide a detailed description of and findings from the process evaluation conducted in Program Year 2. The report will synthesize the information collected in the first round of the program survey and the initial site visits conducted in Program Year 2 regarding how the WISEWOMAN program is being implemented, with a particular focus on facilitators and barriers to successful implementation.

Outcomes evaluation report. The outcomes evaluation report will detail the results from the outcomes evaluation conducted in Program Year 3. The report will focus on estimating changes in outcomes among WISEWOMAN participants (measured using the MDEs). The outcomes evaluation report will be updated in Program Year 4 using newly available MDE data to further estimate changes in outcomes among WISEWOMAN participants.

Innovation funding recipient case studies. Case studies developed in Program Year 4 will highlight recipients receiving the additional innovation component funding, which is intended to support the implementation and evaluation of a small set of innovative strategies designed to reduce risks, complications, and barriers to the prevention and control of heart disease and stroke. Case studies will describe best practices and promising approaches based on data collected during site visits conducted with innovation funding recipients in Program Year 4.

Summative evaluation report. The summative evaluation report will detail the findings from the summative evaluation conducted in Program Year 5. The report will synthesize the findings from the process evaluation and outcomes evaluation along with additional information provided by the program survey and site visits (including another year of MDE data and the second round of the program survey). The report will provide the most comprehensive picture of how outcomes have changed over the cooperative agreement and the community, recipient, and participant factors (including changes in these factors) that are associated with these changes.

Evaluation infographics. Infographics based on evaluation findings will be developed in each of Program Years 2, 3, 4, and 5 to provide a visual representation of data collected through the evaluation.

Recipient profiles. Profiles highlighting each recipient and describing their partners, targeted population, strategies and activities, and performance measures and outcomes will be developed in Program Year 2 based on data collected through the site visits, program survey, MDEs, and other recipient documents. The recipient profiles will be updated in each of Program Years 3, 4, and 5.

Peer-reviewed journal publications. To disseminate evaluation results to the broader practice and research community focused on cardiovascular disease, findings from the evaluation may be summarized in a peer-reviewed journal article or series of articles developed by CDC. These articles may provide valuable insight about best practices in program implementation, changes in outcomes over time, and the influence of factors at multiple levels on outcomes.

Presentations and webinars. In Program Years 2 through 5, findings from the evaluation may be presented to WISEWOMAN funded programs and other WISEWOMAN stakeholders at CDC-sponsored meetings, including in-person trainings, teleconferences or webinars, and professional conferences.

Analysis plan for pre-test

CDC has completed a pre-test of the program survey with four recipients. All pre-tests were conducted using a paper version of the survey. The pre-test allowed us to debrief with respondents and collect information that will help to inform refinements and clarifications to the wording of new items. The instrument has been revised based on results of the pre-test and feedback from CDC staff.

Timeline

The evaluation timeline considers the need for evidence throughout the five-year project period and data collection over this period to ensure that information is gathered at appropriate points in time to support the various analyses under each of the four complementary evaluation components. The estimated schedule for key data collection, analysis, and reporting tasks relevant to this request for OMB approval is presented in Table A.8. The evaluation timeline is indicated by Program Years 2 through 5.

Key milestones after Program Year 1 are listed in relation to the estimated date of OMB clearance (beginning of Program Year 2). In Program Year 2, new data collection begins; activities include the first round of the program survey and six site visits to funded recipients. Program Year 3 will also include six site visits. In Program Year 4, the final round of the program survey will be conducted and seven programs will be visited. Finally, five programs will be visited in Program Year 5. The maximum three years of clearance is requested with the expectation that data collection will commence at the beginning of Calendar Year 2020 and close by the end of Calendar Year 2022.

In Program Years 2 through 5, updates to the evaluation plan will reflect any refinements to the specific evaluation design component for the program year, including the prioritization of questions and further specifications to the design approach. In addition, analysis and the development of evaluation reports will be conducted in Program Years 2 through 5 after the data collection in each year is complete.

Table A.8. Proposed project timeline

| Activity | Anticipated timeline |
| --- | --- |
| Program Year 2: Process evaluation | |
| Data collection | |
| Develop data collection systems | 1 month after OMB approval (Spring 2020) |
| Field program survey | 3-4 months after OMB approval (Spring – Summer 2020) |
| Conduct 6 site visits | 2-5 months after OMB approval (Spring – Summer 2020) |
| Develop and submit evaluation plan and report | |
| Updated evaluation plan | 1 month after OMB approval (Spring 2020) |
| Analyze and synthesize data | 5-9 months after OMB approval (Summer – Fall 2020) |
| Final evaluation report | 10 months after OMB approval (Winter 2021) |
| Program Year 3: Outcomes evaluation | |
| Data collection | |
| Conduct 6 site visits | 14-17 months after OMB approval (Spring 2021) |
| Develop and submit evaluation plan and report | |
| Updated evaluation plan | 12 months after OMB approval (Winter 2021) |
| Analyze and synthesize data | 13-21 months after OMB approval (Spring – Fall 2021) |
| Final evaluation report | 22 months after OMB approval (Winter 2022) |
| Program Year 4: Updated outcomes evaluation and focused qualitative analysis | |
| Data collection | |
| Field program survey | 27-28 months after OMB approval (Spring – Summer 2022) |
| Conduct 7 site visits with innovation funding recipients | 26-29 months after OMB approval (Spring – Summer 2022) |
| Develop and submit evaluation plan and report | |
| Updated evaluation plan | 24 months after OMB approval (Winter 2022) |
| Analyze and synthesize data | 28-33 months after OMB approval (Summer – Fall 2022) |
| Final evaluation report | 34 months after OMB approval (Winter 2023) |
| Program Year 5: Summative evaluation | |
| Data collection | |
| Conduct 5 site visits | 32-35 months after OMB approval (Fall 2022 – Winter 2023) |
| Develop and submit evaluation plan and report | |
| Updated evaluation plan | 32 months after OMB approval (Fall 2022) |
| Analyze and synthesize data | 36-39 months after OMB approval (Winter 2022 – Spring 2023) |
| Final evaluation report | 40 months after OMB approval (Summer 2023) |

17. Reason(s) Display of OMB Expiration Date is Inappropriate

There are no exceptions to the certification; the expiration date will be displayed.

18. Exceptions to Certification for Paperwork Reduction Act Submissions

There are no exceptions to the certification.

1 Best practices in this case are those shown to be effective across organizations based on research. In contrast, emerging and promising practices are those shown effective in a particular situation or under a specific circumstance and hold promise for adoption by other organizations.

2 Innovation funding recipients must focus on one or more strategies related to: (1) identifying and targeting hard to reach and underserved women; (2) working with healthcare systems or other stakeholders to expand use of telehealth technology to promote management of hypertension and high cholesterol; (3) implementing novel strategies to enhance referral, participation, and adherence in cardiac rehabilitation programs in traditional and community settings, including home-based settings; (4) implementing novel approaches to facilitate bi-directional referral between community programs/resources and health care systems (e.g., using electronic health records, digital blood pressure monitoring, 800 numbers, 211 referral systems, etc.); and (5) developing a statewide infrastructure to promote long-term sustainability/coverage for community health workers. Seven recipients received innovation funding.

3 Components refer to the activities conducted by WISEWOMAN recipients, and pathways are the ways in which the components or activities are translated into better outcomes. For example, health coaching sessions are an activity, and improved health knowledge and behaviors would be a pathway from health coaching to better outcomes, such as lower cardiovascular risk.

4 Source: BLS Website, as of March 1, 2019. [http://www.bls.gov/oes/current/oes111021.htm]

5 Source: BLS Website, as of March 1, 2019. [http://www.bls.gov/oes/current/oes111021.htm]

6 Source: BLS Website, as of March 1, 2019. [http://www.bls.gov/oes/current/oes119111.htm]

7 Source: BLS Website, as of March 1, 2019. [http://www.bls.gov/oes/current/oes211022.htm]

8 Source: BLS Website, as of March 1, 2019. [http://www.bls.gov/oes/current/oes291062.htm]

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Emily Wharton |

| File Modified | 0000-00-00 |

| File Created | 2021-01-15 |
