Coordinated Collection - Research & Analysis Plan


Service Annual Survey


OMB: 0607-0422


Odyssey Coordinated Collection

Research & Analysis Plan

Version 1.0

October 24, 2019

Table of Contents

Introduction

Research Questions

Design

Analysis

Decisions

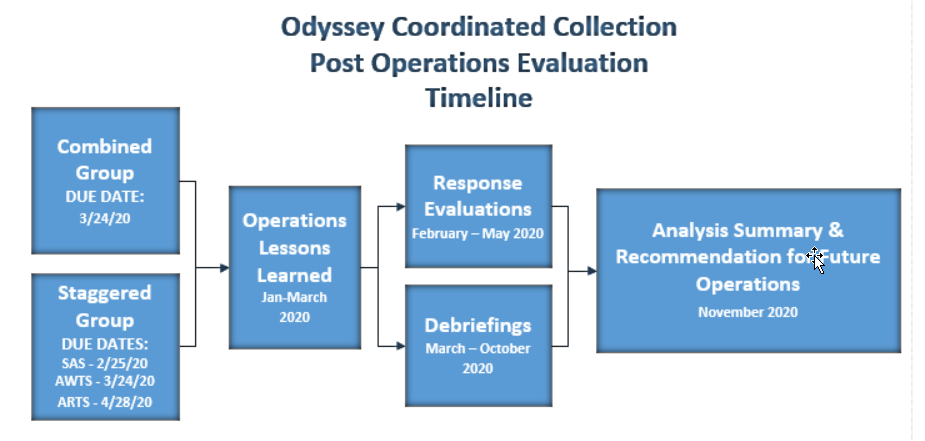

Timeline

Approvals

The Odyssey Coordinated Collection Team is conducting a pilot to better understand the processing, setup requirements, and data collection operations necessary to implement coordinated collection across the Annual Retail Trade Survey (ARTS), the Annual Wholesale Trade Survey (AWTS), and the Service Annual Survey (SAS), with the future goal of expanding to other Economic surveys.

To evaluate the outcomes of this pilot, we have prepared the following research and analysis plan. It sets the stage for an organized post-collection review that will help us implement improvements based on the successes, contingencies, and lessons learned during the pilot.

What are we hoping to learn from this coordinated collection test?

Workload measurement: Does consolidating surveys with multiple contacts change the type and amount of work for analysts handling feedback and changes (e.g., inbound calls, customer service assistance requirements, requests to break consolidation)?

Impact of one contact: Does consolidating surveys with multiple contacts into one contact point affect survey performance indicators and respondent burden?

Due dates: Do companies that have a single due date respond differently from those that have staggered due dates?

Data quality: Does combining survey mailings change data quality?

How will this test be set up and conducted?

Universe: Companies selected for at least two of the following surveys: ARTS, AWTS, and SAS.

Post-Collection: Review paradata, conduct statistical analysis, and conduct debriefings with respondents and nonrespondents.

Sample Design: Randomly assign cases in the universe to either the single due date group or the staggered due date group, controlling for the number of unique contact names across surveys and the number of surveys/industries. Cases in all three surveys will be analyzed separately because account managers are assigned to them.
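The sample design above amounts to stratified random assignment. A minimal Python sketch follows, assuming each case record carries the number of surveys the company is in and the number of unique contact names across those surveys; the `Case` fields and the `assign_treatments` function are hypothetical names for illustration, not part of any existing Census system. Cases are grouped into strata, shuffled within each stratum, and alternately assigned, so every stratum is split as evenly as possible between the two due date groups.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Case:
    # Hypothetical case record; field names are illustrative only.
    company_id: str
    n_surveys: int          # number of surveys (ARTS, AWTS, SAS) the company is in
    n_unique_contacts: int  # distinct contact names across those surveys


def assign_treatments(cases, seed=0):
    """Stratified random assignment to 'single' vs. 'staggered' due dates.

    Strata are defined by (n_surveys, n_unique_contacts). Within each stratum,
    cases are shuffled and assigned alternately to the two groups, so group
    sizes differ by at most one per stratum.
    """
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    strata = defaultdict(list)
    for case in cases:
        strata[(case.n_surveys, case.n_unique_contacts)].append(case)

    assignment = {}
    for key in sorted(strata):
        members = strata[key][:]  # copy so the caller's list order is untouched
        rng.shuffle(members)
        # Randomize which group receives the extra case in odd-sized strata.
        groups = ["single", "staggered"]
        rng.shuffle(groups)
        for i, case in enumerate(members):
            assignment[case.company_id] = groups[i % 2]
    return assignment
```

Because three-survey cases form their own strata in this sketch (`n_surveys == 3`), they can be pulled out for the separate analysis described above without changing the assignment logic.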

From data collection:

Overall Comparison of the Impact of Coordinated Collection:

Review and analyze paradata from eCorr. This includes:

How often the delegation function was used

Accepted delegation vs pending/unaccepted delegation

Delegation to one contact, multiple contacts, or none

Number of contacts needed to complete the survey, compared to the prior year

The length of time between authentication code usage and check-ins

Use of time extensions across surveys

Analyze the timing of response (via check-in). This includes:

Number of days to respond to each survey, compared to the prior year

Whether companies responded to a survey they had not responded to in the past, or vice versa

Feedback from analysts. This includes:

Lessons learned

Changes in work (type, amount)

Review the help desk calls. This includes:

Number of phone calls received

Frequency of contact with the selected companies

Reasons for inbound calls

Comments from outbound calls

Analyze the quality of responses by comparing them to the prior year.
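One simple way to compare current-year responses with the prior year is a ratio check, a common survey editing technique that flags items whose year-over-year ratio falls outside a tolerance band. The sketch below assumes reported values keyed by (company, item); the function name `flag_ratio_changes` and the default bounds of 0.5 and 2.0 are hypothetical placeholders, since the items and tolerances to measure have not yet been determined.

```python
def flag_ratio_changes(current, prior, lower=0.5, upper=2.0):
    """Flag items whose current/prior-year ratio falls outside [lower, upper].

    current, prior: dicts mapping (company_id, item) -> reported value.
    Only keys present in both years are compared. Returns a sorted list of
    (key, ratio) pairs; ratio is None when the prior value is zero but the
    current value is not, since that change cannot be expressed as a ratio.
    """
    flagged = []
    for key in sorted(current.keys() & prior.keys()):
        cur, prev = current[key], prior[key]
        if prev == 0:
            if cur != 0:
                flagged.append((key, None))
            continue  # zero in both years: nothing to flag
        ratio = cur / prev
        if not (lower <= ratio <= upper):
            flagged.append((key, ratio))
    return flagged
```

Keys present in only one year are skipped here; in practice those cases would fall under the response timing and response pattern review rather than the quality comparison.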

Comparison of Single versus Staggered Due Date Treatment Groups:

Analyze the number of days to respond for each current-year survey

Analyze the number of days to respond compared to the prior year

Analyze the length of time between authentication code usage and check-ins
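The timing comparisons above reduce to date arithmetic summarized by treatment group. The following Python sketch assumes days to respond are counted from the date a survey opened for collection to its check-in date, and that prior-year days to respond are already available per case; the `Response` record, its fields, and `summarize` are hypothetical. The same approach would apply to the interval between authentication code usage and check-in by swapping in that start date.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class Response:
    # Hypothetical per-survey response record; field names are illustrative.
    company_id: str
    survey: str                # e.g., "ARTS", "AWTS", "SAS"
    group: str                 # "single" or "staggered"
    opened: date               # date the survey opened for collection
    checkin: Optional[date]    # current-year check-in date; None if no response
    prior_days: Optional[int]  # prior-year days to respond, if known


def days_to_respond(r):
    """Days from collection opening to check-in, or None if not checked in."""
    return None if r.checkin is None else (r.checkin - r.opened).days


def summarize(responses):
    """Per group: count of responses, median days to respond, median change vs. prior year."""
    out = {}
    for group in sorted({r.group for r in responses}):
        rows = [r for r in responses if r.group == group]
        current = [d for r in rows if (d := days_to_respond(r)) is not None]
        deltas = [days_to_respond(r) - r.prior_days for r in rows
                  if r.checkin is not None and r.prior_days is not None]
        out[group] = {
            "n_responded": len(current),
            "median_days": median(current) if current else None,
            "median_change_vs_prior": median(deltas) if deltas else None,
        }
    return out
```

Medians are used here because response times are often skewed by a few late responders; means or full distributions could be substituted depending on the statistical analysis chosen.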

From debriefings:

Overall

Obtain feedback on the communication strategy to assess its effectiveness from the respondent's perspective

For those selected as the primary contact, perceived versus actual burden of coordinating completion of the surveys

Distinguishing surveys:

Was messaging via letters, follow-up phone calls, outbound emails, and respondent portal design clear and beneficial?

Were concerns raised via inbound phone calls and emails resolved clearly?

Thoughts on due date(s)

Issues due to multiple contacts within the business

Did providing the names of previous respondents in the pre-notification aid the primary contact?

Did the respondent (the primary contact) use the delegation function in eCorr? Why or why not?

Was the delegation function easy or difficult to use?

Review other surveys that respondents are in for consideration of future expansion

Single vs Staggered Due Date Approach:

Did the respondent pay attention to the due date?

Did we get the message across that there are 2 or 3 separate requests?

Once respondents complete one survey, do they recognize that one or two more remain?

Did someone who responded to just one survey last year now respond to the others when consolidating the contact?

Does timing matter, with respect to the date the surveys open for collection and/or with respect to the due date?

Do respondents express more or less satisfaction with one approach vs the other? More or less confusion?

Do respondents differ in their preferences for a single contact person, depending on the approach?

Any other insights into one delivery strategy vs another?

Decisions to be impacted by test results:

Addition of surveys to coordinated collection going forward

What does a fully coordinated collection look like in the future state?

Communications for primary contacts who either complete all surveys themselves or coordinate their completion

Updates needed in our processing systems to better handle coordinated collection

Measurable success criteria to determine future operations/expansion (GO/NO GO DECISION):

Response rates

Count of cases that broke consolidation

Changes in workload

Quality of data (items to measure TBD; potentially comparisons of current-year to prior-year data and of annual data to indicator data, and flags such as edited data)

Cost impact (mail/materials, resources/staffing)

| Approver | Date Approved |
|---|---|
| Kimberly Moore, Chief, Economy-wide Statistics Division (EWD) | 10/25/19 |
| Lisa Donaldson, Chief, Economic Management Division (EMD) | 10/30/19 |
| Carol Caldwell, Chief, Economic Statistical Methods Division (ESMD) | 10/30/19 |
| Stephanie Studds, Chief, Economic Indicators Division (EID) | 10/25/19 |
| Michelle Karlsson, Assistant Division Chief for Collection (EMD) | 10/30/19 |
| Diane Willimack, Assistant Division Chief for Measurement and Response Improvement | 10/31/19 |

