OASH Task 3_OMB PRA_SSB


Evaluation of Pregnancy Prevention Program Replications for High Risk and Hard to Reach Youth

OMB: 0990-0472

Supporting Statement B for the Office of the Assistant Secretary for Health Evaluation of Pregnancy Prevention Program Replications for High Risk and Hard to Reach Youth

Submitted to

Office of Management and Budget

Office of Information and Regulatory Affairs

Submitted by

Department of Health and Human Services

Office of the Assistant Secretary for Health

01/07/2019

B1. Respondent Universe and Sampling Methods

B2. Procedures for the Collection of Information

B3. Methods to Maximize Response Rates and Address Nonresponse

B4. Tests of Procedures or Methods to be Undertaken

B5. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

References

Exhibits

Exhibit 1. Implementing organizations and program characteristics

Exhibit 2. Estimated Sample Sizes

Exhibit 3. Stepwise Attrition Assumptions

Exhibit 4. Power Analyses and Minimal Detectable Effect Sizes

Exhibit 5. Individuals Consulted on Design, Data Collection, and Analyses

B1. Respondent Universe and Sampling Methods

Sampling Frame: Purposively Selected IOs. The evaluation will include multiple Implementing Organizations (IOs) selected through a competitive acquisition process to replicate adolescent pregnancy prevention programs. In accordance with the statutory language, MITRE will fund each selected IO to implement one medically accurate and age-appropriate program that has been “proven effective through rigorous evaluation to reduce teen pregnancy, behavioral risk factors underlying teen pregnancy, or other associated risk factors.” Through their MITRE subcontracts, the IOs will be funded to deliver the components of their pregnancy prevention programs, including curricula and associated materials, as well as contextual and preparatory activities such as staff recruitment and training and youth participant recruitment and enrollment.

The respondent universe for the evaluation includes program youth in each of the selected IOs and a matched comparison group of youth from the communities served by three of the selected IOs. In addition, the respondent universe for parent and guardian consent forms will include the parents or guardians of program and comparison group youth. This package makes assumptions about the respondent universe based on the current stage of the competitive acquisition process, which is nearly, but not entirely, complete. Characteristics of the potential IOs that have reached the final round of the acquisition process are described below (Exhibit 1), including the previously proven effective programs being replicated, their proposed settings, target populations, and sample sizes. Because this information collection is intended to gather preliminary data on replications of previously proven effective programs in high-risk and hard-to-reach populations, we emphasized diversity in the locations and target populations served across the IOs during the acquisition and selection process.

Exhibit 1. Implementing Organization and Program Characteristics

| IO | Program | Location | Maximum sample size | Target youth population | Setting for program delivery |
|---|---|---|---|---|---|
| 1 | Love Notes | Pima County, AZ | 120 | Predominantly Hispanic youth | School-based settings |
| 2 | Love Notes | Cincinnati, OH | 105 | Predominantly low-income and African American youth | School-based setting + alternative school |
| 3 | Promoting Health Among Teens - Abstinence Only | Houston, TX | 500 | Youth who are adjudicated or attending alternative schools | Juvenile detention, residential facilities, and alternative schools |
| 4 | Teen Outreach Program | Kayenta, AZ | 361 | Youth in the Navajo Nation (94% Native American) | School-based setting + community-based service learning |
| 5 | Making Proud Choices | Warsaw, VA | 60 | Predominantly African American youth | Community-based setting |
| 6 | HIPTeens | Atlanta, GA | 45 | Refugee youth, 100% female | Community-based setting |
| 7 | Love Notes | Southern California; San Antonio, TX; N. Chicago, IL; Bronx, NY | 650 | Predominantly low-income Latinx youth in neighborhoods with disproportionately high birth rates | Community-based settings |
| 8 | Power Through Choices | Chattanooga, TN | 30 | Lower-resourced Appalachian youth | Community-based settings |

IOs, sites, or programs based in clinics, private non-group and non-licensed residential homes, or one-on-one settings will not be eligible for funding under the acquisition, as described in Supporting Statement A and Appendix F.

Projected Sample Sizes for Respondent Universe. Based on our current knowledge about the potential IOs being included in the information collection, the potential respondent universe for program youth at enrollment is estimated to be 1,871 youth across 8 IOs, the sum of the maximum sample sizes in Exhibit 1 (Exhibit 2).

Exhibit 2. Estimated Sample Size

| Instrument | Maximum Respondent Universe of Enrolled Youth | Expected Maximum Response Rate (Stepwise) | Total Expected Completed Instruments |
|---|---|---|---|
| Program Youth | | | |
| Parental consent | 1,871 | 65.0% | 1,216 |
| Baseline survey/youth assent | 1,216 | 100.0% | 1,216 |
| First follow-up survey | 1,216 | 60.0% | 730 |
| 3-month follow-up survey | 730 | 60.0% | 438 |
| Youth assent for focus group | 730 | 65.0% | 474 |
| Focus groups | 474 | 60.0% | 285 |
| Comparison Youth (Stage One, Initial Pool) | | | |
| Parental consent/youth assent | 4,533 | 65.0% | 2,946 |
| Baseline survey | 2,946 | 100.0% | 2,946 |
| Comparison Youth (Stage Two, Final Group) | | | |
| Baseline survey | 1,216 | 100.0% | 1,216 |
| First follow-up survey | 1,216 | 60.0% | 730 |
| 3-month follow-up survey | 730 | 60.0% | 438 |

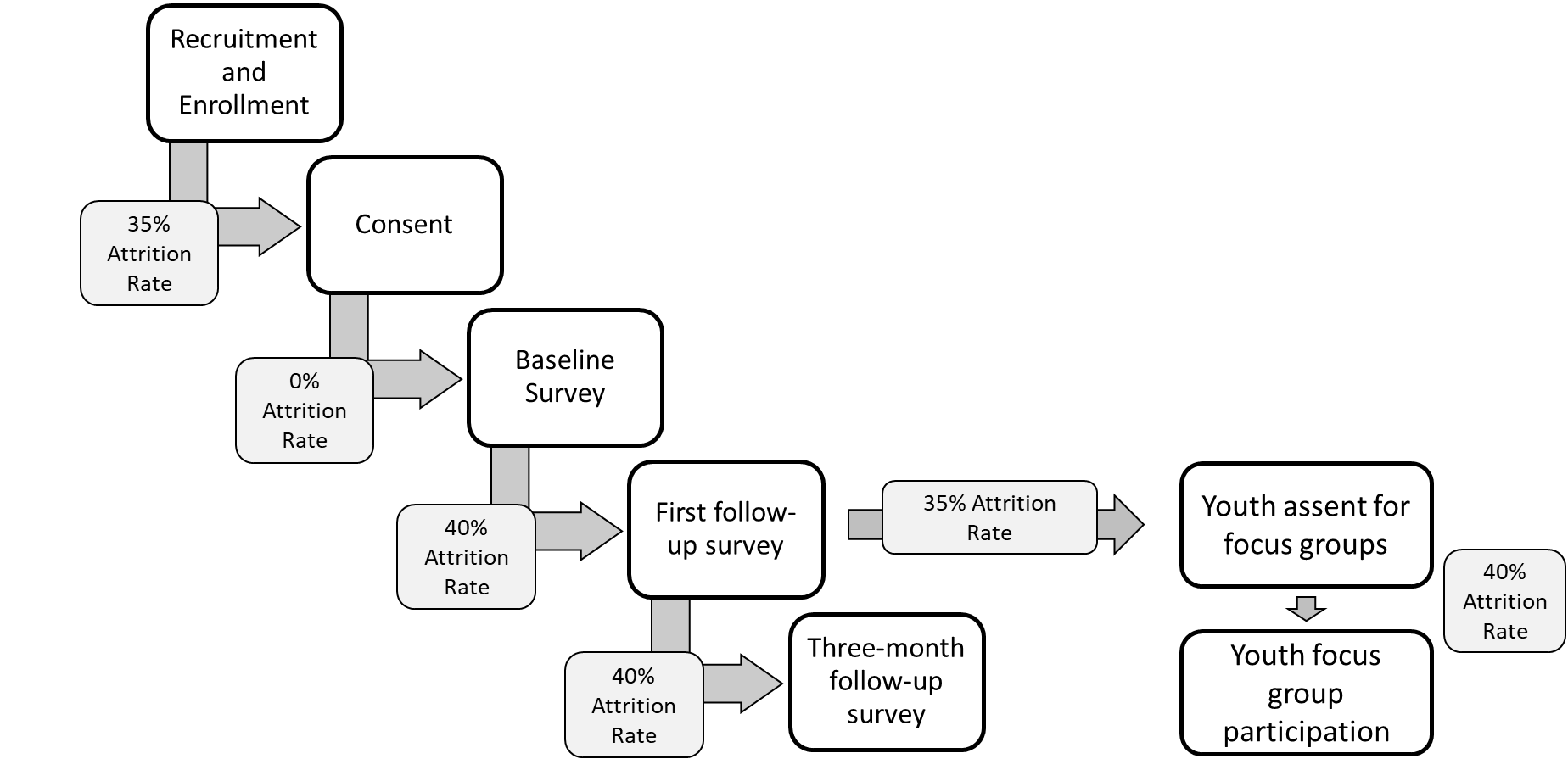

Our sample size estimates in Exhibit 2 are linked to the attrition rate assumptions in Exhibit 3 and to the sampling frame for the matched comparison group, discussed in more detail below. For all youth in this evaluation, there is a stepwise sample size pipeline from consent to baseline survey, from baseline to first follow-up survey, and from first follow-up to 3-month follow-up survey. Comparison youth will not be asked to participate in focus groups, so attrition rates related to focus groups apply only to program youth.

Exhibit 3. Stepwise Attrition Assumptions for Youth Data Collection

Parent/Guardian Consent. We assume that 35% of enrolled youth will not receive parent/guardian consent to participate in data collection. A 35% attrition rate from enrollment to consent is supported by evidence from previous evaluations of a variety of pregnancy prevention interventions (LaChausse, 2016; Abe, Barker, Chan, & Eucogco, 2016; Oman, Vesely, Green, & Clements-Nolle, 2018). Consent rates for these studies range from 57% to 98%. Given the proposed evaluation’s focus on youth who may be hard-to-reach, vulnerable, or underserved, we estimate a parent/guardian consent rate on the lower end of this range at 65% to avoid overestimating statistical power.

Baseline and Follow-up Surveys. All youth who receive parent/guardian consent will be invited to complete baseline surveys. Because consent will be provided on the same day as the baseline survey (i.e., youth who participate in the survey must have consent before receiving it), we assume a 0% attrition rate from consent to baseline. Attrition rates from baseline to the first follow-up survey and from the first follow-up to the 3-month follow-up survey were estimated based on best practices from HHS (Cole & Chizek, 2014), reports from the prior evaluations listed above, and adjustments for OASH’s focus on hard-to-reach and underserved youth. We expect a 40% attrition rate from baseline to first follow-up and an additional 40% attrition rate from first follow-up to 3-month follow-up.

Focus Groups. Two attrition rates are relevant for estimating youth focus group sample sizes. We assume that 35% of the youth completing the first follow-up survey will not assent to participate in a focus group. (Focus group assent and first follow-up surveys will both be collected during the final program session.) In addition, we assume that 40% of the youth who assent to participate in focus groups will not attend, given the additional burden of traveling to another data collection session and the time needed to participate. Attrition estimates between focus group assent and focus group participation were based on prior literature and informed by a plan to use best practices for retaining assenting program youth (Peterson-Sweeney, 2005), including reminder phone calls, emails, and/or texts about the focus group time and location.
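The stepwise pipeline described in Exhibits 2 and 3 can be reproduced with a short calculation. The Python sketch below is illustrative only. The step names are hypothetical labels. It also assumes that expected counts are carried forward unrounded and rounded only when reported. That convention reproduces the program youth counts in Exhibit 2; for example, it gives 285 focus group participants, whereas applying 60% to the already-rounded 474 would give 284.

```python
# Sketch of the stepwise attrition pipeline (retention rates from Exhibit 3).
# Step names are hypothetical labels; expected counts are carried forward
# unrounded and rounded to whole youth only for reporting.

ENROLLED = 1871  # maximum enrolled program youth across the 8 IOs (Exhibit 1)

# (step name, step it follows from, share retained from that step)
PROGRAM_STEPS = [
    ("parental_consent", "enrolled", 0.65),
    ("baseline", "parental_consent", 1.00),
    ("first_follow_up", "baseline", 0.60),
    ("three_month_follow_up", "first_follow_up", 0.60),
    ("focus_group_assent", "first_follow_up", 0.65),
    ("focus_group", "focus_group_assent", 0.60),
]


def project_pipeline(enrolled, steps):
    """Return the expected number of completed instruments at each step."""
    expected = {"enrolled": float(enrolled)}
    for name, source, retention in steps:
        expected[name] = expected[source] * retention  # full precision between steps
    return {name: round(value) for name, value in expected.items()}


if __name__ == "__main__":
    for step, n in project_pipeline(ENROLLED, PROGRAM_STEPS).items():
        print(f"{step:>22}: {n:,}")
```

The same function applies to the Stage One comparison pool, for example `project_pipeline(4533, [("consent_assent", "enrolled", 0.65), ("baseline", "consent_assent", 1.00)])`.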

Sampling Frame for Matched Comparison Youth. Matched comparison youth will be recruited from the communities served by three of the IOs, that is, the same communities as those IOs’ program youth. These IOs were selected for the comparison group analyses based on their organizational capacity to recruit a comparison group large enough for analysis and on the target populations they serve. The comparison group for the overall evaluation will be composed of the comparison groups for these three IOs, functioning essentially as a within-program comparison group receiving no treatment.

Comparison group youth will be selected in a two-stage process. Recruitment for the initial pool of comparison group youth will target youth whose demographic characteristics (sex, age, race, ethnicity), living situations (presence of mother and/or father in the home, socioeconomic status), and risky health behaviors (drug and alcohol use) are similar to those of program youth. The treatment group will include youth in special populations (e.g., foster youth) who may differ substantially, on average, from other youth in their communities on baseline sexual attitudes, beliefs, and behaviors. Therefore, in the initial matching phase, we assume that we will need three times as many comparison youth as program youth to obtain a final 1:1 matched sample.

The 3:1 ratio of initial comparison youth to program youth was chosen because identifying comparison youth who match program youth on key demographic and baseline variables is likely to be difficult in samples of hard-to-reach and underserved youth. Further, a recent evaluation of a school-based sexual health education program had a full comparison group sample about twice as large as the final matched sample (Rotz, Goesling, Crofton, Manlove, & Welti, 2016).

During the initial phase of comparison group selection, youth and their parents will complete assent and consent forms respectively, and assented/consented youth will complete the baseline survey.

After baseline survey completion, propensity scores will be calculated from key demographic survey items, answers to survey items about the likelihood of program participation, and baseline measures of sexual attitudes, beliefs, intentions, and behaviors. Program and comparison youth whose propensity score is less than .10 or greater than .90 will be discarded to reduce bias in the estimated treatment effect in final analyses (Angrist & Pischke, 2009; Crump, Hotz, Imbens, & Mitnik, 2009; McKenzie, Stillman, & Gibson, 2010).
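The trimming rule and the subsequent 1:1 match can be sketched as follows. This is a minimal illustration under stated assumptions, not the evaluation’s specified matching algorithm. It assumes that propensity scores have already been estimated, for example by a logistic regression on the baseline covariates listed above. The document does not specify the matching method, so the greedy nearest-neighbor matching without replacement used here is a swapped-in, commonly used option. The `Youth` record and its field names are hypothetical.

```python
# Sketch: propensity-score trimming (keep .10 <= score <= .90) followed by
# greedy 1:1 nearest-neighbor matching without replacement.
# Assumes scores were already estimated; record fields are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Youth:
    youth_id: str
    treated: bool  # True = program youth, False = comparison youth
    pscore: float  # estimated probability of being a program youth


def trim(youth, low=0.10, high=0.90):
    """Drop youth whose propensity score is below `low` or above `high`."""
    return [y for y in youth if low <= y.pscore <= high]


def match_one_to_one(youth):
    """Pair each program youth with the closest unused comparison youth.

    Program youth are processed in ascending score order; each comparison
    youth can be used at most once. Returns (program_id, comparison_id) pairs.
    """
    program = sorted((y for y in youth if y.treated), key=lambda y: y.pscore)
    pool = [y for y in youth if not y.treated]
    pairs = []
    for p in program:
        if not pool:
            break  # no comparison youth left to match
        best = min(pool, key=lambda c: abs(c.pscore - p.pscore))
        pairs.append((p.youth_id, best.youth_id))
        pool.remove(best)  # matching without replacement
    return pairs


if __name__ == "__main__":
    sample = [
        Youth("p1", True, 0.40), Youth("p2", True, 0.95), Youth("p3", True, 0.60),
        Youth("c1", False, 0.05), Youth("c2", False, 0.42),
        Youth("c3", False, 0.58), Youth("c4", False, 0.70),
    ]
    print(match_one_to_one(trim(sample)))  # p2 and c1 are trimmed before matching
```

Greedy matching results depend on the processing order. Optimal matching, or matching within each IO’s community, are alternatives that the final analysis plan might prefer.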

Three-month follow-up surveys will be collected only from comparison group youth included in the 1:1 matched sample. Because of the lag between data collection, data cleaning, and calculation of propensity scores, first follow-up survey data may be collected from the larger initial sample of comparison group youth. However, to avoid overestimating power at first follow-up, the additional sample this could yield is not included in the current proposal.

Power Analysis. We examined whether the estimated sample sizes are large enough to detect statistically meaningful differences in proximal or behavioral outcomes between program and comparison group members if such differences exist. The evaluation’s analyses address two aims, as described in Supporting Statement A (SSA) and repeated here.

Aim 1: To what degree can the effects of previously proven-effective pregnancy prevention programs be replicated on youth knowledge, attitudes, intentions, beliefs, and behaviors related to sexual activity and health, particularly among hard-to-reach and high-risk youth?

Aim 2: To what degree do knowledge, attitudes, intentions, beliefs, and behaviors related to sexual activity and health change after exposure to previously proven effective sexual health programs among hard-to-reach and high-risk youth?

To determine our capacity to address our research questions with acceptable levels of power, we estimated the minimal detectable effect size (MDES), or the smallest true effect that is detectable for a given sample size (Bloom, 1995). The MDES is expressed as a standardized effect size: the intervention’s impact divided by the standard deviation of the outcome. A lower MDES means that the design can detect a smaller program effect. We calculated the MDES for the current evaluation using PowerUp! (Dong, Kelcey, Maynard, & Spybrook, 2015; Dong & Maynard, 2013), which incorporates well-established formulas and methods for power calculations in multilevel (clustered) research designs. To avoid overestimating power, we assumed that meaningful clustering exists at the implementing organization level. This assumption will be tested using intraclass correlations once data are collected, and analyses will be adjusted accordingly.

The power analyses required us to make assumptions about the proportion of variance (R²) in proximal or behavioral outcomes explained by covariates, including baseline measures of the outcomes, youth demographics, and a fixed effect for each IO. Based on prior research and published reports about best practices in evaluation, we conservatively assumed an R² of 0.28.¹ We also assumed two-tailed tests of significance and the standard power level of 0.80.
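The MDES figures can be approximated with the standard formula for an individual-level two-group design (see Bloom, 1995): MDES ≈ M × sqrt((1 − R²) / (P(1 − P)n)). In this formula, n is the analytic sample size, P is the share of that sample in one group, and M is the sum of the critical values for the chosen significance level and power. The Python sketch below is not PowerUp! and does not model clustering. It uses normal rather than t quantiles, and it assumes α = 0.05 (two-tailed) and an even split P = 0.5, neither of which is stated above. With R² = 0.28 and power = 0.80, it yields values close to several Exhibit 4 entries, including about 0.179 for n = 707 and about 0.227 for n = 438.

```python
# Sketch: individual-level MDES approximation (no clustering, normal quantiles).
# alpha = 0.05 and p_treat = 0.5 are assumptions, not values stated in the text.
from math import sqrt
from statistics import NormalDist


def mdes_individual(n_total, r2=0.28, p_treat=0.5, alpha=0.05, power=0.80):
    """Approximate minimum detectable effect size (in standard deviation units)."""
    z = NormalDist()
    # Multiplier M: two-tailed critical value plus the quantile for the target power (about 2.80).
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    # Covariates that explain a share r2 of outcome variance shrink the standard error.
    return multiplier * sqrt((1 - r2) / (p_treat * (1 - p_treat) * n_total))


if __name__ == "__main__":
    for n in (1179, 730, 707, 438):
        print(n, round(mdes_individual(n), 3))
```

Because the MDES scales with 1/sqrt(n), attrition that shrinks the 3-month sample raises the MDES. This is why the 3-month values in Exhibit 4 are larger than the first follow-up values.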

Exhibit 4 shows the MDES for Aim 1 (between-subjects effects of programs on outcomes, comparing program and comparison youth) and Aim 2 (within-subjects changes in outcomes over time among program youth only). Overall, these analyses indicate that the proposed information collection will be adequately powered to detect effects of the size reported in prior evaluations for all of the primary outcomes of interest for Aim 1 and almost all of the primary outcomes of interest for Aim 2.

We compared effect sizes previously reported in the literature for studies with positive and statistically significant findings with the estimated MDES for the current evaluation.² Effect sizes from prior evaluations were extracted from the following programs: Project TALC, Teen Outreach Program, Love Notes, Children’s Aid Society-Carrera Program, Reducing the Risk, SiHLE, Project IMAGE, Raising Healthy Children, Sisters Saving Sisters, COMPAS, Keeping it Real, It’s Your Game, Generations, Horizons, Positive Prevention PLUS, and two unnamed programs. These programs represent a range of program types, including sexual health education, sexual risk avoidance, and youth development programs, implemented in a variety of settings, so that the comparison reflects the types of programs included in this evaluation.

Exhibit 4. Power Analyses and Minimal Detectable Effect Sizes

Aim 1: Between-Subjects Analysis for 3 IOs (Program + Comparison Group). Aim 2: Within-Subjects Analysis for All 8 IOs (Program Youth Only).

| Construct | Effect Sizes from Prior Evaluations | Aim 1 Sample Size* | Aim 1 MDES | Aim 2 Sample Size* | Aim 2 MDES |
|---|---|---|---|---|---|
| Behavioral Outcomes (3-Month Follow-up only) | | | | | |
| Pregnancy³ | 0.220 | Total: n = 707 | 0.179 | Total: n = 438 | 0.227 |
| STI⁴ | 0.587 | Total: n = 707 | 0.179 | Total: n = 438 | 0.227 |
| Protected sexual activity⁵ | 0.352 | Total: n = 707 | 0.179 | Total: n = 438 | 0.227 |
| KABI Outcomes (First Follow-Up and 3-Month Follow-up) | | | | | |
| Knowledge⁶ | 0.518 | First follow-up: n = 1,179; 3-month follow-up: n = 707 | First follow-up: 0.139; 3-month follow-up: 0.179 | First follow-up: n = 730; 3-month follow-up: n = 438 | First follow-up: 0.176; 3-month follow-up: 0.227 |
| Attitudes & Beliefs⁷ | 0.240 | First follow-up: n = 1,179; 3-month follow-up: n = 707 | First follow-up: 0.139; 3-month follow-up: 0.179 | First follow-up: n = 730; 3-month follow-up: n = 438 | First follow-up: 0.176; 3-month follow-up: 0.227 |
| Intentions⁸ | 0.224 | First follow-up: n = 1,179; 3-month follow-up: n = 707 | First follow-up: 0.09 to 0.11; 3-month follow-up: 0.139 | First follow-up: n = 730; 3-month follow-up: n = 438 | First follow-up: 0.176; 3-month follow-up: 0.227 |

*All sample sizes account for attrition estimates at the indicated time points.

Estimated MDES values range from 0.139 to 0.227. These MDES values are all smaller than the effect sizes reported in prior evaluations (range: 0.220 to 0.587), with the exception of the within-subjects MDES for changes in pregnancy and sexual activity intentions among program youth at 3 months (0.227, compared with prior effect sizes of 0.220 and 0.224, respectively). Because pregnancies are long-term sexual health outcomes, are relatively rare, and are difficult to change (as reflected in the relatively small effect sizes from prior evaluations), this result is not unexpected. Prior evaluations have also found sexual activity intentions difficult to change (again reflected in relatively small prior effect sizes), and the intentions measured vary across studies (e.g., some evaluations measured intentions to use condoms, while others measured intentions to engage or not engage in sexual activity generally). Accordingly, findings for pregnancy and intentions outcomes in within-subjects analyses at 3 months will be interpreted only as exploratory and preliminary.
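The comparison described above can be expressed as a simple check. A construct is flagged when its prior effect size is smaller than the MDES, which means an effect of that size might go undetected. The Python sketch below uses only the values reported in Exhibit 4. The dictionary and function names are illustrative.

```python
# Sketch: flag constructs whose prior effect size falls below the MDES.
# Values are copied from Exhibit 4; names are illustrative only.

PRIOR_EFFECT_SIZE = {
    "Pregnancy": 0.220,
    "STI": 0.587,
    "Protected sexual activity": 0.352,
    "Knowledge": 0.518,
    "Attitudes & Beliefs": 0.240,
    "Intentions": 0.224,
}

# Exhibit 4 reports the same 3-month within-subjects (Aim 2) MDES for every construct.
AIM2_MDES_3_MONTH = 0.227


def constructs_below_mdes(prior_effects, mdes):
    """Return constructs (sorted) whose prior effect size is smaller than `mdes`."""
    return sorted(name for name, es in prior_effects.items() if es < mdes)


if __name__ == "__main__":
    print(constructs_below_mdes(PRIOR_EFFECT_SIZE, AIM2_MDES_3_MONTH))  # ['Intentions', 'Pregnancy']
```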

B2. Procedures for the Collection of Information

This section describes the procedures for consent and assent, survey administration and completion, focus group implementation, and data management.

Parent/Guardian Consent: The parent consent forms are provided in Appendix B. The forms ask parents (or guardians) to consent to their child taking the surveys and to indicate whether they consent to their child’s demographic data being used in analyses, regardless of whether they consent to their child’s participation in the evaluation. This additional step will allow the evaluation team to collect and analyze demographic data to assess the extent of nonresponse bias.

The parents/guardians of program youth will also be asked to consent to focus groups.

For program youth, parents/guardians must sign the form and have the youth return it to the health educator or other IO staff. If a consent form for the evaluation cannot be obtained at the time of enrollment, the health educator will give the youth a parental consent form to take home and complete. Survey and focus group data will be collected only from youth with completed, affirmative consent and assent forms available by the end of the program.

For comparison youth, MITRE or the data collection subcontractor will obtain consent forms. The proposed methods for improving response rates are described in Section B3 and will be updated and included in the final evaluation plan.

Youth Baseline, First Follow-Up, and Three-Month Follow-Up Surveys: Each survey is expected to take approximately 50 minutes to complete. At baseline, youth will also be asked to provide assent, which will take an additional 10 minutes. The data collection subcontractor for this evaluation will collect all pre- and post-survey data. Surveys will be administered electronically to both program and comparison youth.

For program youth, the contractor, MITRE, will train and supervise data collection staff at the IOs and provide technical assistance to IOs to facilitate data collection. For comparison youth, MITRE will either conduct all data collection itself or train and supervise a data collection subcontractor.

The surveys are included in Appendices D and E. For a given IO, program and comparison youth will complete each survey at approximately the same time. For both groups, the baseline survey will be administered shortly before the program begins (ideally within one week), and the first follow-up survey will be administered after the program ends (ideally on the last day of the program).

Focus Groups

The focus group protocol is described in Appendix F. In addition to the primary data provided directly by youth, focus group facilitators’ observations and audio recordings of the focus group sessions will augment the study team’s understanding of youth’s lived experiences.

B3. Methods to Maximize Response Rates and Address Nonresponse

To maximize response rates, we intend to use strategies described by the HHS Office of Adolescent Health (OAH) (Cole & Chizek, 2014). These strategies include planned follow-up protocols (flyers, phone calls, and other reminders), an emphasis on in-person data collection for program and comparison youth, the use of incentives, and collection of follow-up data from as many consented youth as possible, including those who do not complete the program or who receive a low dose. The following methods will be used to account for and evaluate attrition, survey nonresponse, and item-level nonresponse.

Attrition. To assess overall study attrition, we will analyze youth participant attrition at each step of the data collection process described in Exhibit 3, along with the extent to which attrition differs between program and comparison group members.
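Attrition can be summarized for any step using overall and differential rates. The sketch below assumes two definitions. Overall attrition is the share of the starting sample that is not retained. Differential attrition is the absolute difference between the program and comparison group rates. These definitions follow common usage in federal evidence reviews, but the text above does not state them, so treat them as an assumption. The function names are hypothetical.

```python
# Sketch: overall and differential attrition for one data collection step.
# Definitions are assumed (common evidence-review usage); names are hypothetical.


def attrition_rate(started, retained):
    """Share of youth who started a step but were not retained at the later step."""
    return 1 - retained / started


def attrition_summary(prog_start, prog_kept, comp_start, comp_kept):
    """Group-specific, overall (pooled), and differential attrition rates."""
    program = attrition_rate(prog_start, prog_kept)
    comparison = attrition_rate(comp_start, comp_kept)
    return {
        "program": program,
        "comparison": comparison,
        "overall": attrition_rate(prog_start + comp_start, prog_kept + comp_kept),
        "differential": abs(program - comparison),
    }


if __name__ == "__main__":
    # Planning figures from Exhibit 2: baseline (1,216) to 3-month follow-up (438) in each group.
    print(attrition_summary(1216, 438, 1216, 438))
```

With the Exhibit 2 planning figures, both groups lose about 64% of the baseline sample by 3 months, and differential attrition is zero by construction. Observed rates will differ from these figures.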

Nonresponse bias analysis. Available demographic data (including data from enrollment forms) will be used to conduct nonresponse bias analyses that assess differences between youth who complete at least one survey item and youth who do not complete the survey.
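The text above does not name a specific statistic for comparing respondents and nonrespondents. One common option for a binary demographic characteristic is the standardized difference in proportions, which divides the difference by a pooled standard deviation. The Python sketch below is a minimal illustration of that option. The function names and the 0/1 coding of the characteristic are assumptions.

```python
# Sketch: standardized difference in a binary demographic characteristic
# between survey respondents and nonrespondents (one common option; assumed).
from math import sqrt


def proportion(flags):
    """Share of youth with the characteristic, given 0/1 (or bool) indicators."""
    return sum(flags) / len(flags)


def standardized_difference(p_resp, p_nonresp):
    """(p_resp - p_nonresp) divided by the pooled standard deviation of the two proportions."""
    pooled_sd = sqrt((p_resp * (1 - p_resp) + p_nonresp * (1 - p_nonresp)) / 2)
    if pooled_sd == 0:
        # Only possible when each group is entirely 0 or entirely 1.
        if p_resp == p_nonresp:
            return 0.0
        raise ValueError("standardized difference undefined: groups are perfectly separated")
    return (p_resp - p_nonresp) / pooled_sd


if __name__ == "__main__":
    respondents = [1, 1, 1, 0, 0]     # hypothetical indicator, e.g., female
    nonrespondents = [1, 1, 0, 0, 0]
    print(round(standardized_difference(proportion(respondents), proportion(nonrespondents)), 3))
```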

Survey item nonresponse. In addition to examining survey nonresponse, we will report statistics on missing data for each youth survey item and examine patterns in item-level nonresponse (e.g., by gender, age, and race/ethnicity). Tests of missing data patterns and frequencies by item and by case will be conducted. Although imputation may be considered as an approach for addressing survey item nonresponse, it will not be applied to demographic data or key outcome variables.
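Item-level missing data rates by subgroup can be tabulated as shown below. This is a minimal Python sketch, not the study’s analysis code. It assumes a hypothetical coding convention in which each youth’s responses are stored as a dictionary and an item counts as missing if its key is absent or its value is `None`. The field names in the example are also hypothetical.

```python
# Sketch: per-item missing data rates, overall or by subgroup.
# Assumes one dict per youth; a missing item is an absent key or a None value.
from collections import defaultdict


def item_missing_rates(responses, items, group_key=None):
    """Return {subgroup: {item: share missing}}; group_key=None pools all youth."""
    groups = defaultdict(list)
    for row in responses:
        label = row.get(group_key, "unknown") if group_key else "all"
        groups[label].append(row)
    return {
        label: {item: sum(row.get(item) is None for row in rows) / len(rows) for item in items}
        for label, rows in groups.items()
    }


if __name__ == "__main__":
    responses = [
        {"gender": "F", "q1": 1, "q2": None},
        {"gender": "F", "q1": None, "q2": 2},
        {"gender": "M", "q1": 3, "q2": 4},
    ]
    print(item_missing_rates(responses, ["q1", "q2"], group_key="gender"))
```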

B4. Tests of Procedures or Methods to be Undertaken

As much as possible, the data collection instruments for the study draw on surveys, forms, and protocols that have been used successfully in previous federal studies. In this section, we describe which existing resources informed the surveys and focus groups, and the cognitive testing planned to evaluate the clarity of certain items or protocols.

Youth Baseline, First Follow-up, and Three-Month Follow-Up Surveys. The baseline, first follow-up, and three-month follow-up surveys were modeled on instruments used in previous studies addressing similar topics with similar populations. These instruments are listed and cited in Appendices D and E in the survey matrix.

Most of the questions in the baseline questionnaire are drawn from previous questionnaires. We will conduct cognitive testing of the surveys with 9 youth aged 12 to 16. Cognitive testing may identify questions that are not clearly worded or protocols that are not clearly articulated. The study team will revise the questionnaire accordingly and will report how many youth were able to complete the questionnaire within the estimated time (50 minutes). If some youth are not able to complete the questionnaire within that time, the study team may shorten the questionnaire to ensure it can be completed with minimal participant burden.

Focus group protocol. Similarly, questions in the focus group protocol were derived from existing sources on best practices for qualitative research and for focus groups on sexual and reproductive health with youth and adolescents (Hollis, Openshaw, & Goble, 2002; International Women’s Health Coalition, 2015; Kennedy, Kools, & Krueger, 2001; Krueger & Casey, 2015; Liamputtong, 2006; Liamputtong, 2011; McDonagh & Bateman, 2012; Peterson-Sweeney, 2005; U.S. Department of Health and Human Services, Office of Adolescent Health, n.d.).

We will conduct cognitive testing of the focus group protocol with 9 youth aged 12 to 16. Cognitive testing may identify questions that are not clearly worded, timing miscalculations, and additional resource needs. The study team will revise the protocol accordingly and will report the average time needed to complete the focus group protocol. If facilitators are unable to deliver the semi-structured interview guide (i.e., script) or any other component of the focus group protocol in the allotted time, the study team may adapt the protocol to ensure it can be implemented with minimal participant burden.

Study team field staff will be available to answer questions about implementing these data collection procedures throughout the data collection period. Staff will be trained to respond to questions about the study and individual forms, so they can provide technical assistance and report any issues that come up in the field.

B5. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

Individuals consulted on the statistical aspects of the study are listed in Exhibit 5.

Exhibit 5. Individuals Consulted on Design, Data Collection, and Analysis

| Name | Design | Collect | Analyze | Other/Additional |
|---|---|---|---|---|
| Stefanie Schmidt, PhD | | | | Deliverable review |
| Carol Ward, DrPH | | | | Deliverable review |
| Lauren Honess-Morreale, MPH, PMP, MITRE Corporation | | | | Deliverable review and approval |
| Kim Sprague, Ed.M., MITRE Corporation | | | | |
| Beth Linas, MPH, PhD, MITRE Corporation | | | | Deliverable review |
| Jaclyn Saltzman, MPH, PhD, MITRE Corporation | | | | Deliverable review |
| Sarah Kriz, PhD, MITRE Corporation | | | | Deliverable review |
| Angie Hinzey, EdD, MPH, CHES, MITRE Corporation | | | | Deliverable review |
| Nanci Coppola, MD, Office of the Assistant Secretary for Health | | | | Deliverable review and approval |
| Alicia Richmond Scott, MSW | | | | Deliverable review and approval |

References

Abe, Y., Barker, L. T., Chan, V., & Eucogco, J. (2016). Culturally Responsive Adolescent Pregnancy and Sexually Transmitted Infection Prevention Program for Middle School Students in Hawai‘i. American Journal of Public Health, 106(S1), S110-S116.

Angrist, J. D., & Pischke, J.-S. (2009). Mostly harmless econometrics: An empiricist's companion. Princeton: Princeton University Press.

Barbee, A. P., Cunningham, M. R., van Zyl, M. A., Antle, B. F., & Langley, C. N. (2016). Impact of two adolescent pregnancy prevention interventions on risky sexual behavior: A three-arm cluster randomized control trial. American Journal of Public Health, 106, S85-S90. doi:10.2105/AJPH.2016.303429

Bloom, H. S. (1995). Minimum detectable effects: A simple way to report the statistical power of experimental designs. Evaluation Review, 19(5), 547-556.

Bull, S., Devine, S., Schmiege, S. J., Pickard, L., Campbell, J., & Shlay, J. C. (2016). Text messaging, teen outreach program, and sexual health behavior: A cluster randomized trial. American Journal of Public Health, 106, S117-S124. doi:10.2105/AJPH.2016.303363

Champion, J. D., & Collins, J. L. (2012). Comparison of a theory-based (AIDS risk reduction model) cognitive behavioral intervention versus enhanced counseling for abused ethnic minority adolescent women on infection with sexually transmitted infection: Results of a randomized controlled trial. International Journal of Nursing Studies, 49(2), 138-150.

Cole, R., & Chizek, S. (2014). Evaluation Technical Assistance Brief for OAH & ACYF Teenage Pregnancy Prevention Grantees: Sample Attrition in Teen Pregnancy Prevention Impact Evaluations (Contract #HHSP233201300416G). Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Covington, R. D., Goesling, B., Clark Tuttle, C., Crofton, M., Manlove, J., Oman, R. F., & Vesely, S. (2016). Final Impacts of the POWER Through Choices Program. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Crump, R. K., Hotz, V. J., Imbens, G. W., & Mitnik, O. A. (2009). Dealing with limited overlap in estimation of average treatment effects. Biometrika, 96(1), 187-199.

Daley, E. M., Buhi, E. R., Wang, W., Singleton, A., Debate, R., Marhefka, S., et al. (2015). Evaluation of Wyman’s Teen Outreach Program® in Florida: Final Impact Report for Florida Department of Health. Findings from the Replication of an Evidence-Based Teen Pregnancy Prevention Program. Tampa, FL: The University of South Florida.

DiClemente, R. J., Wingood, G. M., Rose, E. S., Sales, J. M., Lang, D. L., Caliendo, A. M., et al. (2009). Efficacy of sexually transmitted disease/human immunodeficiency virus sexual risk-reduction intervention for African American adolescent females seeking sexual health services: A randomized controlled trial. Archives of Pediatrics & Adolescent Medicine, 163(12), 1112-1121.

Dierschke, N., Gelfond, J., Lowe, D., Schenken, R. S., & Plastino, K. (2015). Evaluation of Need to Know (N2K) in South Texas: Findings From an Innovative Demonstration Program for Teen Pregnancy Prevention Program. San Antonio, Texas: The University of Texas Health Science Center at San Antonio.

Dong, N., Kelcey, B., Maynard, R., & Spybrook, J. (2015). PowerUp! Tool for power analysis. www.causalevaluation.org.

Dong, N., & Maynard, R. A. (2013). PowerUp!: A tool for calculating minimum detectable effect sizes and minimum required sample sizes for experimental and quasi-experimental design studies. Journal of Research on Educational Effectiveness, 6(1), 24-67.

Espada, J. P., Orgilés, M., Morales, A., Ballester, R., & Huedo-Medina, T. B. (2012). Effectiveness of a School HIV/AIDS Prevention Program for Spanish Adolescents. AIDS Education and Prevention, 24(1), 500–513.

Hawkins, J. D., Kosterman, R., Catalano, R. F., Hill, K. G., & Abbott, R. D. (2008). Effects of Social Development Intervention in Childhood 15 Years Later. Archives of Pediatrics & Adolescent Medicine, 162(12), 1133-1141.

Hollis, V., Openshaw, S., & Goble, R. (2002). Conducting focus groups: Purpose and practicalities. British Journal of Occupational Therapy, 65(1), 2-8.

International Women’s Health Coalition (2015). Ensuring Youth Participation in Sexual and Reproductive Health Programs: What We Know. Retrieved from: https://iwhc.org/wp-content/uploads/2015/03/youth-participation.pdf

Jemmott, J. B., Jemmott, L. S., Braverman, P. K., & Fong, G. T. (2005). HIV/STD risk reduction interventions for African American and Latino adolescent girls at an adolescent medicine clinic: A randomized controlled trial. Archives of Pediatrics & Adolescent Medicine, 159(5), 440-449.

Jemmott, J. B., Jemmott, L. S., & Fong, G. T. (1998). Abstinence and safer sex HIV risk-reduction interventions for African American adolescents: a randomized controlled trial. JAMA, 279(19), 1529-1536.

Kennedy, C., Kools, S., & Krueger, R. (2001). Methodological considerations in children’s focus groups. Nursing Research, 50(3), 184-187.

Krueger, R., & Casey, M. A. (2015). Focus groups: A practical guide for applied research (5th ed.). Thousand Oaks, CA: SAGE Publications, Inc.

LaChausse, R. G. (2016). A clustered randomized controlled trial of the positive prevention PLUS adolescent pregnancy prevention program. American Journal of Public Health, 106, S91-S96. doi:10.2105/AJPH.2016.303414

Lewin, A., Mitchell, S., Beers, L., Schmitz, K., & Boudreaux, M. (2016). Improved contraceptive use among teen mothers in a patient-centered medical home. Journal of Adolescent Health, 59(2), 171-176.

Liamputtong, P. (2006). Researching the vulnerable: A guide to sensitive research methods. Thousand Oaks, CA: SAGE Publications, Ltd.

Liamputtong, P. (2011). Focus group methodology: Principle and practice. Thousand Oaks, CA: SAGE Publications, Ltd.

Mahat, G., Scoloveno, M.A., Leon, T.D., & Frenkel, J. (2008). Preliminary Evidence of an Adolescent HIV/AIDS Peer Education Program. Journal of Pediatric Nursing, 23(1), 358–363.

McDonagh, J., & Bateman, B. (2012). Nothing about us without us: Considerations for research involving young people. Archives of Disease in Childhood: Education and Practice Edition, 97, 55–60. doi:10.1136/adc.2010.197947

McKenzie, D., Stillman, S., & Gibson, J. (2010). How important is selection? Experimental vs. non-experimental measures of the income gains from migration. Journal of the European Economic Association, 8(4), 913-945.

Morales, A., Espada, J. P., & Orgilés, M. (2015). A 1-year follow-up evaluation of a sexual-health education program for Spanish adolescents compared with a well-established program. The European Journal of Public Health, 26(1), 35-41.

Oman, R. F., Vesely, S. K., Green, J., Clements-Nolle, K., & Lu, M. (2018). Adolescent pregnancy prevention among youths living in group care homes: a cluster randomized controlled trial. American Journal of Public Health, 108(S1), S38-S44.

Peskin, M.F., Shegog, R., Markham, C.M., Thiel, M., Baumler, E.R., Addy, R.C., Gabay, E.K., & Emery, S.T. (2015). Efficacy of It's Your Game-Tech: A Computer-Based Sexual Health Education Program for Middle School Youth. Journal of Adolescent Health, 56, 515–521.

Peterson-Sweeney, K. (2005). The Use of Focus Groups in Pediatric and Adolescent Research. Journal of Pediatric Health Care, 19, 104-110.

Philliber, S., Kaye, J. W., Herrling, S., & West, E. (2002). Preventing pregnancy and improving health care access among teenagers: An evaluation of the Children's Aid Society-Carrera program. Perspectives on Sexual and Reproductive Health, 34(5), 244-251.

Piotrowski, Z. H., & Hedeker, D. (2016). Evaluation of the Be the Exception sixth-grade program in rural communities to delay the onset of sexual behavior. American Journal of Public Health, 106(S1), S132-S139.

Roberto, A. J., Zimmerman, R. S., Carlyle, K. E., & Abner, E. L. (2007). A computer-based approach to preventing pregnancy, STD, and HIV in rural adolescents. Journal of Health Communication, 12(1), 53-76.

Robinson, W.T., Kaufman, R. & Cahill, L. (2016). Evaluation of the Teen Outreach Program in Louisiana. New Orleans, LA. Louisiana State University Health Sciences Center at New Orleans, School of Public Health, Initiative for Evaluation and Capacity Building.

Rotheram-Borus, M. J., Lee, M., Leonard, N., Lin, Y. Y., Franzke, L., Turner, E., ... & Gwadz, M. (2003). Four-year behavioral outcomes of an intervention for parents living with HIV and their adolescent children. AIDS, 17(8), 1217-1225.

Rotz, D., Goesling, B., Crofton, M., Manlove, J., & Welti, K. (2016). Final Impacts of Teen PEP (Teen Prevention Education Program) in New Jersey and North Carolina High Schools. Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Ruwe, M.B., McCloskey, L., Meyers, A., Prudent, N., & Foureau-Dorsinville, M. (2016). Evaluation of Haitian American Responsible Teen: Findings from the Replication of an Evidence-based Teen Pregnancy Prevention Program in Eastern Massachusetts. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Schwinn, T., Kaufman, C. E., Black, K., Keane, E. M., Tuitt, N. R., Big Crow, C. K., Shangreau, C., Schaffer, G., & Schinke, S. (2015). Evaluation of mCircle of Life in Tribes of the Northern Plains: Findings from an Innovative Teen Pregnancy Prevention Program. Final behavioral impact report submitted to the Office of Adolescent Health. Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Slater, H.M., & Mitschke, D.B. (2015). Evaluation of the Crossroads Program in Arlington, TX: Findings from an Innovative Teen Pregnancy Prevention Program. Arlington, TX: University of Texas at Arlington.

Smith, K. V., Dye, C., Rotz, D., Cook, E., Rosinsky, K., & Scott, M. (2016). Interim Impacts of the Gender Matters Program. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Smith, T., Clark, J. F., & Nigg, C. R. (2015). Building Support for an Evidence-Based Teen Pregnancy and Sexually Transmitted Infection Prevention Program Adapted for Foster Youth. Hawaii Journal of Medicine & Public Health, 74(1).

The United States Department of Health and Human Services, Office of Adolescent Health (n.d.). Using human-centered design to better understand adolescent and community health. Retrieved from: https://www.hhs.gov/ash/oah/sites/default/files/oah-human-centered-design-brief.pdf

Tolou-Shams, M., Houck, C., Conrad, S. M., Tarantino, N., Stein, L. A. R., & Brown, L. K. (2011). HIV prevention for juvenile drug court offenders: A randomized controlled trial focusing on affect management. Journal of Correctional Health Care, 17(3), 226-232.

Tortolero, S. R., Markham, C. M., Peskin, M. F., Shegog, R., Addy, R. C., Escobar-Chaves, S. L., & Baumler, E. R. (2010). It's Your Game: Keep It Real: delaying sexual behavior with an effective middle school program. Journal of Adolescent Health, 46(2), 169-179.

Usera, J. J., & Curtis, K. M. (2015). Evaluation of Ateyapi Identity Mentoring Program in South Dakota: Findings from the Replication of an Evidence-Based Teen Pregnancy Prevention Program. Sturgis, SD: Delta Evaluation Consulting, LLC.

Vyas, A., Wood, S., Landry, M., Douglass, G., & Fallon, S. (2015). The Evaluation of Be Yourself/Sé Tú Mismo in Montgomery & Prince Georges Counties, Maryland. Washington, DC: The George Washington University Milken Institute School of Public Health.

Walker, E. M., Inoa, R., & Coppola, N. (2016). Evaluation of Promoting Health Among Teens Abstinence-Only Intervention in Yonkers, NY. Princeton, NJ: Sametric Research.

Wingood, G. M., DiClemente, R. J., Harrington, K. F., et al. (2006). Efficacy of an HIV prevention program among female adolescents experiencing gender-based violence. American Journal of Public Health, 96, 1085-1090.

1 https://tppevidencereview.aspe.hhs.gov/pdfs/rb_TPP_QED.pdf

2 All studies were found via the Teen Pregnancy Prevention Evidence Review at https://tppevidencereview.youth.gov/FindAProgram.aspx

3 Project TALC (Rotheram-Borus et al., 2003), Teen Outreach Program (Daley et al., 2015), Love Notes (Barbee et al., 2016), Reducing the Risk (Barbee et al., 2016), Children’s Aid Society-Carrera Program (Philliber et al., 2002).

4 SiHLE (Wingood et al., 2006), Project IMAGE (Champion & Collins, 2012), Raising Healthy Children (Hawkins et al., 2008), Sisters Saving Sisters (Jemmott et al., 2005).

5 Generations (Lewin et al., 2016), Horizons (DiClemente et al., 2009), Love Notes (Barbee et al., 2016), Reducing the Risk (Barbee et al., 2016), Positive Prevention PLUS (LaChausse, 2016).

6 COMPAS (Espada et al., 2012; Morales et al., 2015), Keeping it Real (Tortolero et al., 2010), It's Your Game (Peskin et al., 2015), Peer Education Program (Mahat et al., 2008).

7 COMPAS (Espada et al., 2012; Morales et al., 2015), Keeping it Real (Tortolero et al., 2010), It's Your Game (Peskin et al., 2015), Computer-based STD/HIV Education (Roberto et al., 2007), Sisters Saving Sisters (Jemmott et al., 2005).

8 COMPAS (Espada et al., 2012), ¡Cuídate! (Morales et al., 2015), Keeping it Real (Tortolero et al., 2010), Sisters Saving Sisters (Jemmott et al., 2005).

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Cullen, Katie |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
