OASH Task 3_OMB PRA_SSA_4.9.2020

Evaluation of Pregnancy Prevention Program Replications for High Risk and Hard to Reach Youth

OMB: 0990-0472

Supporting Statement A for the Office of the Assistant Secretary for Health

Evaluation of Pregnancy Prevention Program Replications for High Risk and Hard to Reach Youth

Submitted to

Office of Management and Budget

Office of Information and Regulatory Affairs

Submitted by

Department of Health and Human Services

Office of the Assistant Secretary for Health

04/09/2019

EXECUTIVE SUMMARY

Status of Evaluation: This is a new information collection request specific to Pregnancy Prevention Programs for Adolescents: A Replication Study. The request is for 24 months.

Purpose/Aim: To conduct an independent evaluation examining whether programs that have been proven effective through rigorous evaluation can be replicated with similarly successful and consistent results among hard-to-reach, high-risk, vulnerable, or understudied youth.

Design: The independent evaluator, MITRE, will use a quasi-experimental design to measure program effects on youth knowledge, attitudes, beliefs, intentions, and behaviors related to sexual health. The evaluation will also examine youth perspectives regarding participation in adolescent pregnancy prevention programs.

Sample Size: Up to eight implementing organizations—organizations focused on health, education, and social services that have experience working with community-based organizations to implement pregnancy prevention programs for adolescents—will implement previously proven-effective adolescent pregnancy prevention programs for up to 1,900 youth. Baseline data will be collected from a matched comparison group that will yield a 1:1 match with a subset (n = 982) of these participating program youth.

Utility of the information collection: Findings will inform OASH’s programmatic efforts to improve sexual health knowledge, attitudes, beliefs, intentions, and behaviors among hard-to-reach, vulnerable, or understudied youth.

Contents

1. Circumstances Making the Collection of Information Necessary

2. Purpose and Use of Information Collection

3. Use of Improved Information Technology and Burden Reduction

4. Efforts to Identify Duplication and Use of Similar Information

5. Impact on Small Businesses or Other Small Entities

6. Consequences of Not Collecting the Information or of Collecting Less Frequently

7. Special Circumstances Relating to the Guidelines

8. Comments in Response to the Federal Register Notice, and Outside Consultation

9. Explanation of Any Payment/Gifts to Respondents

10. Assurance of Confidentiality Provided to Respondents

11. Justification for Sensitive Questions

12. Estimates of Annualized Hour and Cost Burden

13. Estimates of other Total Annual Cost Burden to Respondents or Recordkeepers/Capital Costs

14. Annualized Cost to Federal Government

15. Program Changes or Adjustments

16. Plans for Tabulation and Publication and Project Time Schedule

17. Display of Expiration Date for OMB Approval

Exhibits

Exhibit 1. Key Constructs and Operational Definitions

Exhibit 2. Quasi-Experimental Design

Exhibit 3. Implementing Organizations and Program Characteristics

Exhibit 4. Description of Data Collection Forms, Timing, and Justification

Exhibit 5. Consultation with Technical Experts

Exhibit 6. Estimated Annualized Burden Hours

Exhibit 7. Estimated Annualized Cost to Respondents

Exhibit 8. Approximate Schedule for the Evaluation

A. Justification

1. Circumstances Making the Collection of Information Necessary

The Office of the Assistant Secretary for Health (OASH) in the Department of Health and Human Services (HHS) is requesting Office of Management and Budget (OMB) clearance for information collections related to the evaluation of adolescent pregnancy prevention programs with previously demonstrated positive outcomes in a sample of high-risk and hard-to-reach youth. In accordance with the statutes described below, the evaluation aims to understand the effects of previously proven adolescent pregnancy prevention programs on knowledge, attitudes, beliefs, intentions, and behaviors related to sexual activity and health among high-risk and hard-to-reach youth.

Statutory basis. OASH is authorized to conduct this evaluation, which is aimed at “replicating programs that have been proven effective through rigorous evaluation to reduce teenage pregnancy, behavioral risk factors underlying teenage pregnancy, or other associated risk factors,” by the Public Health Service Act (42 U.S.C. 241) and the FY2018 Consolidated Appropriations for General Departmental Management (Appendix A). To implement this project, OASH contracted with MITRE, an independent, not-for-profit company that operates the Health Federally Funded Research and Development Center (FFRDC), to conduct an independent evaluation in a manner consistent with the statutory language, that is, via “competitive contracts and grants to public and private entities to fund medically accurate and age appropriate programs that reduce teen pregnancy.” Hence, MITRE plans to competitively and independently award subcontracts to organizations that will replicate adolescent pregnancy prevention programs that have been proven effective through rigorous evaluation. A broad range of proven-effective programs, including but not limited to sexual health education programs, youth development programs, and sexual risk avoidance programs, are eligible for these subcontracts.

Need. Rates of pregnancy among hard-to-reach, high-risk, vulnerable, or understudied youth are significantly higher than in the general population. However, few evaluations have assessed whether programs that have previously been proven successful can be delivered successfully to these youth. Hence, this evaluation is intended to help fill the evidence gap about the efficacy and effectiveness of existing pregnancy prevention programs among high-risk, vulnerable, or understudied youth.

Thus, although samples from other populations may also be included in the evaluation, the priority populations of interest for this evaluation are:

High-risk, vulnerable, and culturally under-represented youth populations, including youth in the juvenile justice system, youth in foster care, minority youth (especially Native American and Alaska Native youth), pregnant or parenting youth and their partners, and youth experiencing housing insecurity; or

Geographic areas and populations that are underserved by other pregnancy prevention programs (e.g. some rural communities and areas with high birth rates for youth).

Study Background. The teen birth rate has continued to drop, yet data from 2017-2018 show continuing disparities in teen birth rates by race and ethnicity (Centers for Disease Control and Prevention, 2019). Youth living in foster care experience a disproportionately higher prevalence and incidence of teen pregnancy (Boonstra, 2011). Youth who are racial/ethnic minorities also tend to have higher teen pregnancy rates. For example, almost a third (32.9%) of American Indian/Alaska Native girls aged 15-19 had given birth in 2017 (U.S. Department of HHS & OAH, 2019).1

The proposed evaluation will help fill the gap in evidence examining the replicability of proven effective pregnancy prevention programs among underserved or hard-to-reach youth. HHS has conducted multiple evaluations and replication studies of programs focused on adolescent sexual health in the general population. Teen pregnancy prevention programs targeting large swaths of the population are predominantly school based.2 Some subgroups of youth experiencing disproportionately higher rates and risk for teen pregnancy may not be effectively served by school-based programs.

2. Purpose and Use of Information Collection

Use of information: To address the gaps in understanding described in section 1, OASH plans to use the findings of this evaluation to inform guidance to HHS grantees and prospective grantees on approaches for replication of pregnancy prevention programs for hard-to-reach and underserved youth.

Evaluation overview: The proposed evaluation aims to address the following research questions:

Aim 1: To what degree can the effects of previously proven-effective pregnancy prevention programs be replicated on youth knowledge, attitudes, intentions, beliefs, and behaviors related to sexual activity and health, particularly among hard-to-reach and high-risk youth?

Aim 2: To what degree do knowledge, attitudes, intentions, beliefs, and behaviors related to sexual activity and health change after exposure to previously proven effective sexual health programs among hard-to-reach and high-risk youth?

That is, the evaluation is designed, and intended to be powered, to determine whether any sexual health programming, regardless of program type, is related to change in youth sexual health outcomes among high-risk and hard-to-reach youth, commensurate with effects that have been reported by prior evaluations. Additionally, the evaluation aims to understand whether sexual health programming is associated with changes in these outcomes of interest among high-risk and hard-to-reach youth, regardless of whether these changes are commensurate with the effects reported previously. This secondary research question was deemed necessary given that high-risk and hard-to-reach populations may respond differently than the populations in which the programs were originally tested. For example, a youth development program found to be marginally (but still statistically significantly) effective in a homogeneous school-based setting may be more effective in a community-based format with higher-risk youth.

Implementing organizations (IOs)—organizations focused on health, education, and social services that have experience working with community-based organizations to implement pregnancy prevention programming—will deliver programs for high-risk and hard-to-reach youth in a variety of settings. The programs, settings and program types for the selected IOs vary. Program settings include schools, after-school programs, community-based programs, faith-based organizations, and licensed group or residential programs.3 Additional program characteristics that differ are group and sample size, location, and populations served. Program types include comprehensive sexual health education programs, sexual risk avoidance programs, and youth development programs.

To address the research questions, surveys and focus groups will be conducted with youth participating in these programs. Youth surveys will be delivered at three points in time: baseline, first follow-up (immediately after the program group receives the intervention), and three-month follow-up (three months after the intervention is implemented). Surveys will be taken by youth in these pregnancy prevention programs, and by comparison youth recruited from the same communities/populations. Exhibit 1 describes the key constructs (and their operational definitions) that will be measured in the surveys and focus groups. The proximal outcomes measured, such as knowledge, attitudes, beliefs, and intentions (KABI), have been previously found to shape sexual activity and health behaviors and long-term outcomes in adolescents (Koniak-Griffin & Stein, 2006). Baseline surveys will also measure background (control or covariate) variables, such as demographic information, that have been theorized or empirically shown to account for some variance in youth proximal outcomes or youth sexual health outcomes.

Exhibit 1. Key constructs and operational definitions.

| Sexual Health Construct | Operational Definition | Source of Information |
| --- | --- | --- |
| Proximal Outcomes | Changes in knowledge, attitudes, beliefs, and intentions that are expected to occur as a result of an effective program. | Surveys |
| Knowledge | Understanding and recall of medically accurate information regarding sexual and reproductive health. | Surveys |
| Attitudes | Evaluative reactions, either positive or negative, to sexual activity, romantic relationships, and reproductive health. | Surveys |
| Beliefs | Perspectives and opinions regarding topics relevant to sexual and reproductive health. | Surveys |
| Intentions | Plans regarding one’s sexual health, romantic relationships, and sexual activity behaviors. | Surveys |
| Behaviors | Actions and interactions regarding sexual activity, relationships, and related activities such as drug use and communication with parents/guardians. | Surveys |
| Youth Feedback | Factors that promote or inhibit youth participation in pregnancy prevention programs. | Youth focus groups |

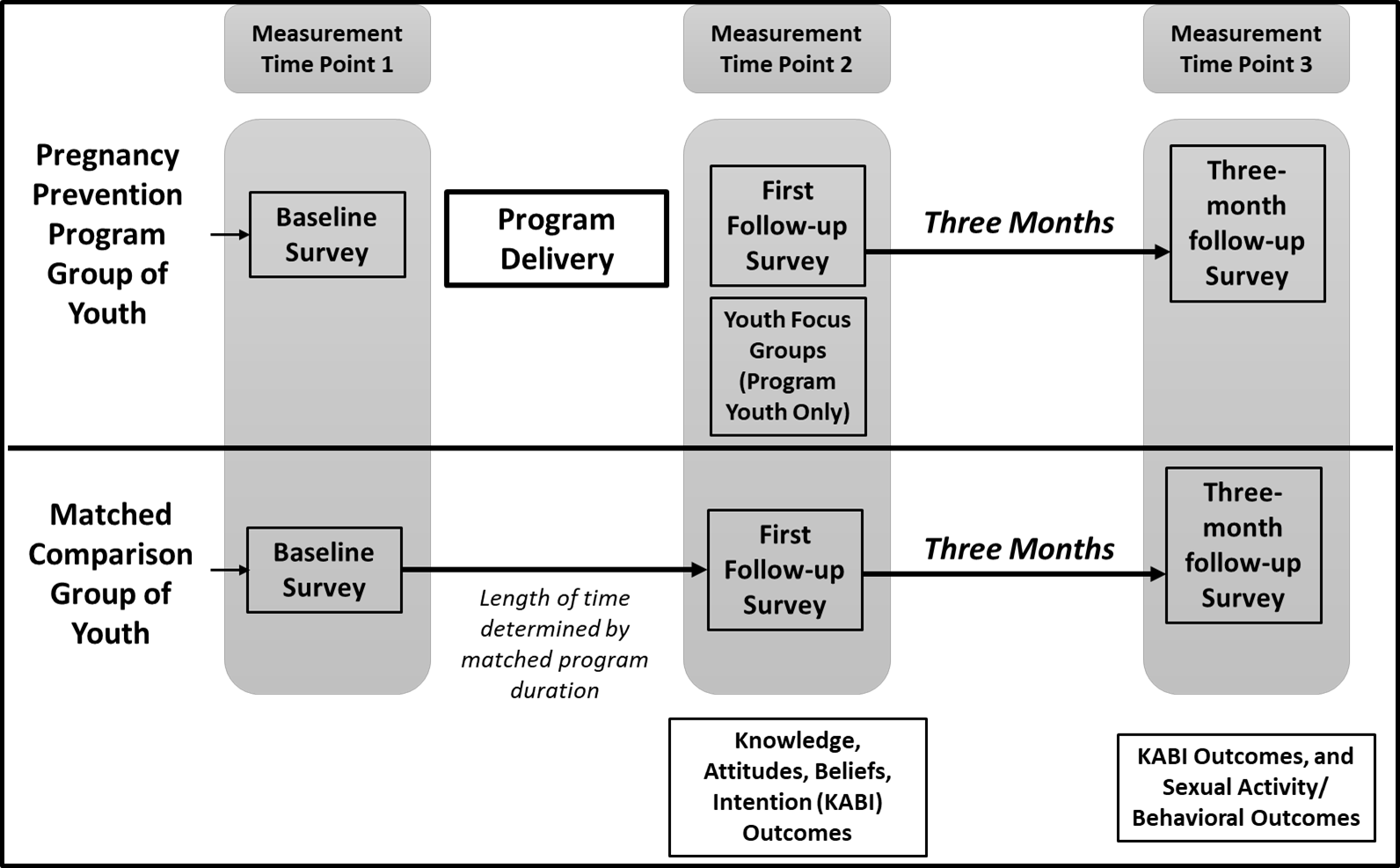

In addition, focus groups of program youth will be conducted at program completion in order to examine the factors that may promote or inhibit participation of hard-to-reach and underserved adolescents and to gain insights on which program components, if any, youth identified as helpful for program participation. As shown in Exhibit 2, we anticipate that any changes in proximal (KABI) outcomes could be observed at both the first and three-month follow-up surveys. However, we anticipate any changes in behavioral outcomes (e.g. engagement in sexual intercourse, use of contraceptives) would not be observed until the three-month follow-up.

Exhibit 2. Quasi-Experimental Design with program youth and matched comparison youth

Matched Comparison Group Design. Youth in the comparison group will be selected in a two-stage process. First, an initial pool of comparison group youth will be recruited, provide consent, and complete the baseline survey in the communities served by a subset of the IOs (Exhibit 3). Specifically, comparison groups will only be created for IOs where there is a large enough pool of program youth to justify the additional burden, in an effort to adequately power the analyses that address Aim 1 of this information collection. Thus, matched comparison youth will only be sampled from communities represented by IOs #3, #4, and #7 as described in Exhibit 3.

Surveys from these comparison groups will provide data for between-person analyses for Aim 1, modeling the comparison group youth as receiving no treatment. To ensure that comparison groups are matched and to aid in achieving and measuring baseline equivalence, recruitment for the initial pool of comparison group youth will target individuals with similar demographic characteristics (sex, age, race, ethnicity), living situations (presence of mother and/or father in the home, socioeconomic status), and risky health behaviors (drug and alcohol use) compared to the program youth. Second, a final sample of comparison youth who match program youth on key demographic and baseline variables will be selected from this initial pool. The initial pool will be recruited at a 3:1 ratio of comparison youth to program youth. This ratio was chosen based on the likely difficulty of identifying comparison youth who match program youth on key demographic and baseline variables in samples of hard-to-reach and underserved youth, as well as extant literature showing initial comparison groups about twice as large as the final matched group (Rotz, Goesling, Crofton, Manlove, & Welti, 2016).
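The second stage of the selection process can be illustrated with a minimal sketch. This section does not specify a matching algorithm, so the greedy nearest-neighbor approach, the `Youth` record, the `mismatch` distance, its weights, and the `max_distance` caliper below are all hypothetical and serve only to show how a 1:1 matched sample might be drawn from a larger initial pool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Youth:
    """Hypothetical baseline record; field names are illustrative only."""
    youth_id: str
    age: int
    sex: str
    race_ethnicity: str
    substance_use: bool


def mismatch(a: Youth, b: Youth) -> int:
    """Assumed distance: age gap in years plus fixed penalties for categorical mismatches."""
    return (
        abs(a.age - b.age)
        + 5 * (a.sex != b.sex)
        + 5 * (a.race_ethnicity != b.race_ethnicity)
        + 2 * (a.substance_use != b.substance_use)
    )


def match_one_to_one(program, pool, max_distance=3):
    """Greedy 1:1 matching without replacement.

    Each program youth is paired with the closest remaining comparison youth,
    provided the distance does not exceed the (assumed) caliper.
    """
    available = list(pool)
    pairs = []
    for p in program:
        if not available:
            break
        best = min(available, key=lambda c: mismatch(p, c))
        if mismatch(p, best) <= max_distance:
            pairs.append((p.youth_id, best.youth_id))
            available.remove(best)  # each comparison youth is used at most once
    return pairs


program = [
    Youth("P1", 15, "F", "AIAN", False),
    Youth("P2", 17, "M", "Hispanic", True),
]
pool = [
    Youth("C1", 16, "F", "AIAN", False),
    Youth("C2", 17, "M", "Hispanic", True),
    Youth("C3", 14, "M", "White", False),
]
pairs = match_one_to_one(program, pool)
```

In this toy example the pool is larger than the program sample, so an unmatched comparison youth (C3) is simply left out of the final matched group, which is the reason for recruiting a larger initial pool.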

Exhibit 3. Implementing Organizations and Program Characteristics

| IO | Program | Location | Maximum sample size | Target youth population | Setting for program delivery |
| --- | --- | --- | --- | --- | --- |
| 1 | Love Notes | Pima County, AZ | 120 | Predominantly Hispanic youth | School-based settings |
| 2 | Love Notes | Cincinnati, OH | 105 | Predominantly low income and African American youth | School-based setting + Alternative school |
| 3* | Promoting Health Among Teens- Abstinence Only | Houston, TX | 500 | Youth who are adjudicated or attending alternative schools | Juvenile detention, residential facilities, and alternative schools |
| 4* | Teen Outreach Program | Kayenta, AZ | 361 | Youth in the Navajo Nation (94% Native American) | School-based setting + community-based service learning |
| 5 | Making Proud Choices | Warsaw, VA | 60 | Predominantly African American youth | Community-based setting |
| 6 | HIPTeens | Atlanta, GA | 45 | Refugee youth, 100% female | Community-based setting |
| 7* | Love Notes | Southern California; San Antonio, TX; N. Chicago, IL; Bronx, NY | 650 | Predominantly low-income Latinx youth in neighborhoods with disproportionately high birth rates | Community-based settings |
| 8 | Power Through Choices | Chattanooga, TN | 30 | Lower-resourced Appalachian youth | Community-based settings |

* Matched comparison groups will be created for these IOs.

Surveys from all participating youth—regardless of whether their IO participated in the comparison group study—will be used for within-person analyses examining trends in change over time after program exposure, as addressed in Aim 2.

List of all data collection forms. In addition to the youth surveys and focus groups described above, the evaluation will also include parent/guardian consent forms and youth assent forms. Exhibit 4 provides additional detail about each of the data collection forms, their timing, and the rationale for their collection.

As part of standard reporting and organizational practices, the subcontracting IOs will be providing additional information about program delivery and enrollment in order for MITRE to conduct a comprehensive implementation evaluation to contextualize findings in final reports.

Study Design Limitations. The study results will not be generalizable to the larger population of teen pregnancy prevention programs because the IOs have been purposively selected to focus on those serving hard-to-reach and underserved youth. The evaluation may not have adequate statistical power to examine the effects of individual programs, subgroups of programs (e.g. all sexual health education programs, all sexual risk avoidance programs, or all youth development programs), or cross-program effects. We will analyze trends in findings in order to provide preliminary information on program effectiveness overall (Aim 1) and program effects specifically (Aim 2) in these understudied populations. Findings are not intended to inform policy or funding decisions, but are intended to inform practice regarding the replicability of pregnancy prevention programs in high-risk and hard-to-reach youth populations.

Exhibit 4. Description of data collection forms, timing, and justifications

| Data collection form (Location in package) | Reason for data collection | Timing |
| --- | --- | --- |
| Consent form (Appendix B) | Parents/guardians will provide or decline consent for their child to participate in each one of the evaluation activities (including enrollment forms, surveys, and focus groups). The consent forms are estimated to take no more than 15 minutes to complete. | First day of program |
| Assent form (Appendix C) | Youth will provide or decline assent to participate in each one of the evaluation activities (including enrollment forms, surveys, and focus groups). The assent forms are estimated to take no more than 10 minutes to complete. | First day of program, prior to each survey, prior to focus group |
| Surveys (Appendices D, E, I, J, K, and L) | Measuring KABI and behavioral baseline values and outcomes among youth program participants. Each survey is anticipated to take approximately 50 minutes to complete, except for the baseline survey, which also includes the assent form described above. MITRE or MITRE-trained data collection subcontractor(s) will collect all survey data. Surveys will be administered electronically and by in-person administrators. | First day of program (Baseline), Last day of program (first follow-up), Three months after program (3-month follow-up) |
| Focus groups (Appendix F; Focus group protocol) | Gain insights into the aspects of youth lived experiences and program characteristics that may be associated with program participation. Each focus group is anticipated to take approximately 90 minutes to conduct. MITRE or a MITRE-trained data collection subcontractor will conduct all focus groups. | Within 3 days and not more than 2 weeks after last day of program |

3. Use of Improved Information Technology and Burden Reduction

For all surveys, both baseline and follow-up, state-of-the-art technology will be used to (1) increase efficiency, (2) ensure data security, (3) improve comprehension and accuracy of responses, and (4) reduce the burden of data collection for the youth included in the evaluation. All (100%) of the baseline and follow-up surveys for youth will be administered electronically by subcontracted data collection staff using the Qualtrics Research Core survey platform. This software allows online administration via a web browser or offline administration via an application for tablet devices. Electronically administered surveys will increase efficiency by allowing youth to use a touchscreen or mouse rather than requiring pencil and paper responses and may improve self-disclosure by shielding youth responses with changing screens (Kays, Gathercoal, & Behrow, 2012; Materia et al., 2016). By eliminating paper surveys, we also increase the efficiency with which youth survey responses are collected and shared with MITRE. In terms of improved comprehension and accuracy, electronic surveys allow skip and display patterns, so respondents only see questions that are relevant to them. This avoids the confusion that paper surveys often cause, because paper surveys must display all questions and instruct respondents to skip certain ones.
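The skip and display patterns mentioned above can be sketched in a few lines. This is an illustration of the general idea only, not the Qualtrics configuration or API; the question identifiers and the `show_if` rule are hypothetical and are not items from the evaluation's survey instruments.

```python
# Hypothetical survey items; "show_if" is an assumed display rule that
# receives the answers given so far and returns True if the item applies.
QUESTIONS = [
    {"id": "in_relationship", "show_if": None},
    {
        "id": "relationship_length",
        "show_if": lambda answers: answers.get("in_relationship") == "yes",
    },
    {"id": "talked_with_parent", "show_if": None},
]


def visible_questions(answers):
    """Return the ids of the questions a respondent should see.

    Items without a display rule are always shown; items with a rule are
    shown only when the rule evaluates to True for the current answers.
    """
    return [
        q["id"]
        for q in QUESTIONS
        if q["show_if"] is None or q["show_if"](answers)
    ]


shown_if_no = visible_questions({"in_relationship": "no"})
shown_if_yes = visible_questions({"in_relationship": "yes"})
```

A respondent who answers "no" to the first item never sees the follow-up item, whereas a paper form would have to print it along with a skip instruction.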

4. Efforts to Identify Duplication and Use of Similar Information

The evaluation does not duplicate other efforts by HHS. Several HHS efforts, including the TPP replication study and the Adolescent Pregnancy Prevention Approaches Study (Office of Adolescent Health, U.S. Department of Health and Human Services, 2019), have evaluated pregnancy prevention programs for adolescents. The current evaluation differs from prior and current efforts by focusing on all types of pregnancy prevention programs, including sexual health education, sexual risk reduction, and youth development programs, and by specifically targeting underserved and hard-to-reach youth.

Efforts to identify duplication included comprehensive scans of the literature, examination of previously conducted HHS evaluations, and in-depth conversations with HHS representatives to identify areas of overlap and novelty. Previously conducted HHS evaluations informed our proposed evaluation plans, including but not limited to the Teen Pregnancy Prevention (TPP) Replication Study (Abt Associates, Inc., 2015), the Evaluation of Adolescent Pregnancy Prevention Approaches (PPA) Study (Smith & Coleman, 2012), and the PREP study (Goesling, Wood, Lee, & Zief, 2017; 2018). The information collection requirements for the evaluation have been carefully reviewed to determine what information is already available from these existing and relevant evaluations and what will need to be collected for the first time. We have also reviewed previously published and relevant studies and data collection instruments and procedures available from HHS.4,5 OASH program staff have also provided recommendations for review as well as feedback on the plans we present in this submission.

5. Impact on Small Businesses or Other Small Entities

Respondents in this evaluation will be youth and their parents or legal guardians who provide consent. This collection will not involve small businesses or other small entities.

6. Consequences of Not Collecting the Information or of Collecting Less Frequently

The collection of baseline and follow-up youth survey data and youth focus group data is essential to conducting an evaluation for OASH regarding replication of proven-effective pregnancy prevention programs. In the absence of such data, the ability to replicate and implement these proven-effective programs with fidelity and similar effects in different locations and with different populations will be unknown or the quality of evidence will be very weak. This information is critical to the field and to OASH for use in informing future evaluation and funding decisions regarding pregnancy prevention programs for youth, especially for underserved and high-need populations.

Given the need to use a rigorous quasi-experimental study design, it is necessary to collect data from program youth respondents at baseline and both follow-up points. Similarly, the quasi-experimental design requires the same data collection schedule for the comparison group youth, who do not participate in a program. If data are not collected at this frequency, OASH will not meet its statutory obligations.

7. Special Circumstances Relating to the Guidelines

There are no special circumstances for the proposed data collection. The proposed data collection is consistent with guidelines set forth in 5 CFR 1320.5.

8. Comments in Response to the Federal Register Notice, and Outside Consultation

Federal Register Notice Comments. A 60-day Notice was published on November 7th, 2019, vol. 84, page 216, and no public comments were received (84 FR 216). A 30-day Notice was published on January 29th, 2020, vol. 85, page 5217, and no public comments were received (85 FR 5217).

Outside Consultation. To inform the design of the evaluation and data collection, OASH consulted the organization responsible for conducting the research and providing substantive expertise (the MITRE Corporation) and outside technical experts. Exhibit 5 presents the name, affiliation, and contact information of members of the outside consultation panel.

Exhibit 5. Consultation with technical experts

| Name | Title | Affiliation |
| --- | --- | --- |
| Nanci Coppola, DPM, MS | Expert Consultant | OASH |
| Lauren Honess-Morreale, PMP, MPH | Project Leader, Principal Public Health/Healthcare | MITRE |
| Jaclyn Saltzman, MPH, PhD | Task Leader, Senior Epidemiologist and Public Health Scientist | MITRE |
| Sarah Kriz, PhD | Deputy Project Leader, Lead Cognitive Psychologist | MITRE |
| Angie Hinzey, MPH, EdD | Task Leader, Senior Organizational Change Management | MITRE |
| Carol Ward, MPH, DrPH | Task Leader, Principal Public Health/Healthcare | MITRE |
| Stefanie Schmidt, PhD | Senior Technical Advisor, Principal Health Economist | MITRE |
| Beth Linas, MHS, PhD | Task Leader, Lead Epidemiologist and Public Health Specialist | MITRE |

9. Explanation of Any Payment/Gifts to Respondents

Our proposed incentives are comparable to those used in previous evaluations and are critical to ensuring the return of parent or guardian consent forms.

Incentives for Returning Consent Forms. To increase receipt of consent forms—and thus increase our capacity to collect accurate recruitment and enrollment data—we will offer small incentives to youth and/or their parents or guardians in return for signed consent forms. Incentives will be provided regardless of whether youth and/or parents/guardians ultimately consent to participate in any aspect of data collection. The type of incentive will vary by setting and population, will be decided in collaboration with implementing organizations, and will be worth no more than $20.00. For consent/assent form completion, incentives provided to youth in intervention groups will be identical to those provided to youth in matched-comparison groups.

Incentives for Survey Completion. To increase response rates for surveys at baseline, post-implementation, and at 3-month follow-up, we will also offer small incentives to youth in return for completed surveys at each time point. Although this remuneration is not compensation for the youth’s time, we are sensitive to the fact that data collection activities involve additional time that youth need to spend at a particular location, which may require alternate transportation or logistical plans.

The type of incentive provided to youth for completing surveys will vary by setting and population, and will be decided in collaboration with the implementing organizations. To ensure that we are able to collect an adequate sample and to account for our expectation of higher attrition by the 3-month follow-up, remuneration rates will be identical at baseline and post-implementation, but will increase at the 3-month follow-up point. Incentives for the baseline and first follow-up surveys will be valued at no more than $25.00 each, and the incentive for the three-month follow-up survey will be valued at no more than $40.00. Options for incentives at survey completion may include, but are not limited to, an Amazon or iTunes gift card. Incentives provided to program youth will be identical to those provided to youth in matched-comparison groups.

Incentives for Focus Group Attendance. To increase participation in focus groups immediately following program implementation, a small incentive will be offered to youth in return for their attendance in the focus groups. As in the incentive for survey completion, the incentive for focus group attendance is designed to thank the youth participants for their time and to be sensitive to the additional resources (e.g. transportation) needed to be at the focus group location. Incentives for focus group participation will be valued at no more than $40.00. The type of incentive provided to youth for attending the focus group will vary by setting and population, and will be decided in collaboration with implementing organizations. Options for incentives for focus groups include, but are not limited to, Amazon or iTunes gift cards for participation.

10. Assurance of Confidentiality Provided to Respondents

Parental consent and youth assent forms are provided in Appendices B and C. These forms present the study and its purpose, data collection, and participants’ rights and privacy protections to respondents and their parents.

Personally Identifiable Information. The study team will only have access to personally identifiable information (PII) for those youth who have provided their assent as well as parental/guardian consent to participate in one or more data collection aspects of the evaluation.

Youth PII in the form of a unique identifier will be used to track individual youth attendance/dosage, to track the administration and completion of surveys and focus groups, and to track program and evaluation attrition. To protect PII, the evaluation has completed a review of privacy protections consistent with those required by HHS, and the information collection has been issued a Certificate of Confidentiality from NIH (Appendix G). Appendix H contains the IRB approval. Some PII (e.g., demographics) will also be used as covariates for analysis. PII data concerning attendance and youth demographics will be shared by the organizations implementing the programs with the MITRE project team via MITRE’s Secure File Transfer (SFT) environment. The SFT environment is approved by the MITRE Privacy Office for PII storage and transfer. To protect youth privacy, demographic and attendance data will only be shared in conjunction with unique identifiers, and never with youth names or other identifying information. Only the unique identification number will be stored with the data.

MITRE will use Qualtrics, which is a software as a service (SaaS) survey tool, to collect responses from youth participants. Qualtrics is an online survey platform that will allow MITRE to design electronic surveys that will be electronically administered to youth on tablets or computers. PII contained in the youth surveys will be accessible to MITRE via Qualtrics. Qualtrics encrypts data in transit and at rest in their data centers. Online administration of surveys allows instant uploading of responses to the Qualtrics server. Offline administration is not instantaneous, but allows secure data export from the application on a tablet or other device, to the Qualtrics server without revealing respondents’ survey responses to the user who exports the data. This method ensures greater privacy and security of the data collection, compared to paper administration. No one except for the respondent and the study team member managing access to the Qualtrics server will be able to see survey responses in Qualtrics. No person involved in the delivery/implementation of the pregnancy prevention programs will be able to see youth survey responses.

After receiving data via the SFT environment or Qualtrics, MITRE will encrypt all data files. MITRE will also ensure that survey and focus group responses are stored separately. Privacy training and controlled administrative access approvals will be required for project team members to access all files and environments containing PII.

All hardcopy files that could be used to link individuals with their responses will be in locked file cabinets at the project team offices. Any computer data files that contain this information will be encrypted. Three years after the conclusion of the project and with the approval of the Federal Project Officer, all files containing information that might link youth with their survey responses or focus group answers will be destroyed, including audio recordings. Interview and data management procedures that ensure confidentiality will be a major part of training for data collection.

The evaluation procedures will comply with HHS procedures for maintaining privacy and data protections. These include the following:

notarized nondisclosure affidavits obtained from all evaluation team members who will have access to individual identifiers;

training regarding the meaning of privacy for team members;

controlled and protected access to computer files for team members; and

built-in safeguards concerning status monitoring and receipt control systems.

Additional procedures for maintaining privacy and data protections for subcontractors (that is, the IOs and all data collection subcontractors) will include:

signed agreements for all involved in collecting data (including interpreters if used) to strictly maintain the privacy of youth;

signed agreements for youth who take the survey not to discuss the contents with anyone else (included as part of assent within and prior to the administration of the survey); and

documented verbal assent for youth who participate in the focus group to follow specific instructions for protecting participants’ privacy should they need to discuss the focus group with a trusted adult.

Per HHS 45 CFR 46, all data will be retained for 3 years after MITRE submits its final expenditure report. Then it will be destroyed. A de-identified dataset may be provided to OASH upon completion of the evaluation. Before delivery of any de-identified datasets to OASH, the project team will conduct a privacy analysis and will aggregate reporting categories for demographic variables to ensure that no fewer than five individuals are in any combination of key demographic categories to mitigate the risk of re-identification. The evaluation has completed a review of privacy protections consistent with those required by HHS, and the information collection has been issued a Certificate of Confidentiality from NIH (Appendix G). Appendix H contains the IRB approval.
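The minimum cell size rule described above can be illustrated with a short sketch. This is not the project team's actual procedure: the helper `small_cells`, the field names (`age`, `age_band`, `sex`), and the sample records are hypothetical and chosen only to show how aggregating a reporting category can remove cells with fewer than five individuals.

```python
from collections import Counter

MIN_CELL_SIZE = 5  # threshold stated in the text: no fewer than five per cell


def small_cells(records, key_fields, min_size=MIN_CELL_SIZE):
    """Return each combination of key_fields held by fewer than min_size records."""
    counts = Counter(tuple(r[f] for f in key_fields) for r in records)
    return {combo: n for combo, n in counts.items() if n < min_size}


# Hypothetical records for illustration only (not study data).
records = (
    [{"age": 12, "sex": "F"}] * 5
    + [{"age": 13, "sex": "F"}] * 3
    + [{"age": 14, "sex": "F"}] * 2
)

# Single-year ages leave two cells below the threshold.
flagged = small_cells(records, ["age", "sex"])

# Aggregating ages 13 and 14 into one reporting category removes the small cells.
banded = [
    {"age_band": "12" if r["age"] == 12 else "13-14", "sex": r["sex"]}
    for r in records
]
flagged_after = small_cells(banded, ["age_band", "sex"])

print(flagged)        # {(13, 'F'): 3, (14, 'F'): 2}
print(flagged_after)  # {}
```

In practice the set of key demographic variables and the choice of aggregated categories would come from the privacy analysis itself; the sketch only shows the counting step.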

Information about the primary outcomes of interest in the current evaluation (knowledge, attitudes, beliefs, intentions, and behaviors related to sexual activity and health) can only be provided by the adolescent participants in the study. The questions asked in this evaluation may include whether youth have ever engaged in voluntary sexual intercourse and their perspectives about sexual activity. Display and skip patterns will be built into the survey to minimize respondents’ exposure to sensitive questions. For example, youth who respond that they have never engaged in sexual behavior will not view further questions that probe additional details about timing and partners. In contrast, youth who respond that they have engaged in sexual behavior will be asked additional questions. Despite the sensitivity of these questions, questions about a respondent’s attitudes and beliefs about sex, past sexual behavior and intention to engage in future sexual behaviors, and knowledge about sex and reproductive health are necessary to measure the primary proximal outcomes in the current study.

The voluntary nature of the questions, the data protection protocol, and the purposes of and uses for the data collection will be stated in the parental consent and youth assent forms. The participants will be reassured, in writing before completing the survey and in person before the focus groups, that their participation in the evaluation is completely voluntary. Participants may also choose to conclude their participation in any of the surveys or the focus group at any time. The privacy of all survey and focus group responses will be maintained, and individual responses or answers will not be reported to any program or agency except at summary levels.

Estimated Number of Respondents. The estimated numbers of respondents are based on the current state of the competitive source selection process for implementing organizations. At this point, eight IOs have been selected to enter preliminary negotiations; therefore, our burden estimates are based on the information provided by these eight IOs.

MITRE has stipulated that IOs must run at least two, but no more than three consecutive program replications between subcontract start date (earliest possible date is approximately May 2020, pending OMB approval) and December 2020, and that IOs must enroll at least 10 youth in the evaluation prior to implementation. Based on these requirements and the sample sizes estimated by the IOs during the source selection process, we estimate that up to 1,871 program youth will be enrolled and asked for parental consent. The burden estimates below rely on assumptions about response rates at each stage of the data collection process that are described in more detail in Supporting Statement B, and substantiated by prior reports. 6

Exhibit 6. Estimated Annualized Burden Hours

| Respondents | Form Name | Max No. of Respondents | Average Burden per Response (hours) | Total Max Burden (hours) |
|---|---|---|---|---|
| Youth Program Participants/Youth Comparison Participants | Baseline survey and youth assent | 4,163 | 15/60 | 1,041 |
| Youth Program Participants/Youth Comparison Participants | First follow-up survey | 1,460 | 50/60 | 1,217 |
| Youth Program Participants/Youth Comparison Participants | 3-month follow-up survey | 876 | 50/60 | 730 |
| Youth Program Participants/Youth Comparison Participants | Focus group assent | 474 | 15/60 | 119 |
| Youth Program Participants/Youth Comparison Participants | Focus group protocol | 474 | 1.50 | 711 |
| Parents/Guardians | Parental consent | 4,163 | 15/60 | 1,041 |
| Total Burden | | 11,610 | | 4,859 |

Estimated Annualized Burden Hours (Exhibit 6). Based on the assumptions described above, the annualized burden is estimated to be 4,859 hours. This hour-burden estimate includes time spent by program youth, comparison group youth, and parents/guardians of both groups.
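The arithmetic behind Exhibit 6 can be checked with a short sketch. Each row's total is the maximum number of respondents multiplied by the average burden per response. The rounding rule (half-up to whole hours) is an assumption inferred from the reported figures, and the names `EXHIBIT_6` and `round_half_up` are illustrative only.

```python
import math
from fractions import Fraction

# (form name, max respondents, average burden per response in hours), from Exhibit 6
EXHIBIT_6 = [
    ("Baseline survey and youth assent", 4163, Fraction(15, 60)),
    ("First follow-up survey", 1460, Fraction(50, 60)),
    ("3-month follow-up survey", 876, Fraction(50, 60)),
    ("Focus group assent", 474, Fraction(15, 60)),
    ("Focus group protocol", 474, Fraction(3, 2)),
    ("Parental consent", 4163, Fraction(15, 60)),
]


def round_half_up(x):
    """Round a non-negative value to the nearest whole hour (assumed rule)."""
    return math.floor(x + Fraction(1, 2))


burden_hours = [round_half_up(n * hours) for _, n, hours in EXHIBIT_6]
total_respondents = sum(n for _, n, _ in EXHIBIT_6)
total_hours = sum(burden_hours)

for (form, _, _), hours in zip(EXHIBIT_6, burden_hours):
    print(f"{form}: {hours:,} hours")
print(f"Total: {total_respondents:,} responses, {total_hours:,} hours")
```

Under these assumptions the row totals come to 1,041, 1,217, 730, 119, 711, and 1,041 hours, which sum to the 4,859 hours reported in the exhibit.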

Estimated Annualized Respondent Cost Burden (Exhibit 7). Given that youth will be between ages 12 and 16 (and thus many may not be eligible to work), we used the federal minimum hourly wage of $7.25 as an approximation of the maximum hourly wage that youth could earn. We used the average federal hourly wage of $24.98 for all adults to estimate parent/guardian hourly wages. 7 The total maximum respondent costs are $53,684.

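Exhibit 7. Estimated Annualized Cost to Respondents

| Respondents | Form Name | Total Max Burden (hours) | Adjusted Hourly Wage | Total Max Respondent Costs |
|---|---|---|---|---|
| Youth Program Participants/Youth Comparison Group Participants | Baseline survey | 1,041 | $7.25 | $7,547.25 |
| Youth Program Participants/Youth Comparison Group Participants | First follow-up survey | 1,217 | $7.25 | $8,823.25 |
| Youth Program Participants/Youth Comparison Group Participants | 3-month follow-up survey | 730 | $7.25 | $5,292.50 |
| Youth Program Participants/Youth Comparison Group Participants | Focus group assent | 119 | $7.25 | $862.75 |
| Youth Program Participants/Youth Comparison Group Participants | Focus group protocol | 711 | $7.25 | $5,154.75 |
| Parents/Guardians | Parental consent | 1,041 | $24.98 | $26,004 |
| Total Burden | | 4,859 | | $53,684.50 |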

This information collection entails no respondent costs other than the cost associated with response time burden, and no non-labor costs for capital, startup or operation, maintenance, or purchased services.
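The respondent cost figures in Exhibit 7 follow from multiplying each row's burden hours by the applicable hourly wage. The sketch below reproduces that arithmetic. The variable names are illustrative, and rounding the parent/guardian row to whole dollars is an assumption inferred from the $26,004 shown in the exhibit (the unrounded product is $26,004.18).

```python
from decimal import Decimal, ROUND_HALF_UP

YOUTH_WAGE = Decimal("7.25")    # federal minimum hourly wage used for youth
PARENT_WAGE = Decimal("24.98")  # average hourly wage used for parents/guardians

# (form name, total max burden hours, hourly wage), from Exhibit 7
EXHIBIT_7 = [
    ("Baseline survey", 1041, YOUTH_WAGE),
    ("First follow-up survey", 1217, YOUTH_WAGE),
    ("3-month follow-up survey", 730, YOUTH_WAGE),
    ("Focus group assent", 119, YOUTH_WAGE),
    ("Focus group protocol", 711, YOUTH_WAGE),
    ("Parental consent", 1041, PARENT_WAGE),
]

# Exact cost per row: hours multiplied by wage.
costs = {form: hours * wage for form, hours, wage in EXHIBIT_7}

youth_total = sum(c for form, c in costs.items() if form != "Parental consent")
parent_exact = costs["Parental consent"]
# Assumed: the exhibit reports the parent row rounded to whole dollars.
parent_reported = parent_exact.quantize(Decimal("1"), rounding=ROUND_HALF_UP)
total_reported = youth_total + parent_reported

print(f"Youth subtotal:   ${youth_total:,.2f}")
print(f"Parent (exact):   ${parent_exact:,.2f}")
print(f"Total (reported): ${total_reported:,.2f}")
```

With the parent/guardian row rounded as in the exhibit, the total matches the $53,684.50 shown in Exhibit 7.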

Annualized Cost to Federal Government

The cost estimate for the design and conduct of the evaluation will be $16 million over the course of three years, with $3 million the first year, $9 million the second year, and $4 million the third year. This total cost covers all evaluation activities, including the estimated cost of coordination among OASH, the contractor (MITRE), and the IOs; OMB package development; project planning and schedule development; Institutional Review Board applications; study design; technical assistance to IOs for data collection; data collection procedures and implementation; and data analysis and reporting.

This will be a new information collection.

This section describes the planned reports and project schedule, including planned time to collect information and complete analyses and writing. Data will be collected between May and December 2020. Data will be tabulated and presented to OASH in September 2021. See Exhibit 8 for the anticipated schedule.

Exhibit 8. Approximate Schedule for the Evaluation

| Activity | # of Months Following OMB Approval Activity Started | Start Date | End Date |
|---|---|---|---|
| MITRE Awards Subcontracts to Multiple Implementing Organizations | N/A | December 2019 | February 2020 |
| Collection of parental consent | Less than 1 month | May 2020 | December 2020 |
| Youth data collection | Less than 1 month | May 2020 | December 2020 |
| Youth focus groups | 2-3 months | May 2020 | December 2020 |
| Summary report | 12 to 16 months | May 2021 | September 2021 |

The expiration date for OMB approval will be displayed on all data collection instruments.

There are no exceptions to the certification.

References

Abt Associates, Inc. (2015). Teen Pregnancy Prevention (TPP) Replication Study: Impact Study Design Report. Abt Associates, Inc. Technical Report. Cambridge, MA. https://aspe.hhs.gov/system/files/pdf/164426/Impact.pdf

Barbee, A. P., Cunningham, M. R., van Zyl, M. A., Antle, B. F., & Langley, C. N. (2016). Impact of two adolescent pregnancy prevention interventions on risky sexual behavior: A three-arm cluster randomized control trial. American Journal of Public Health, 106, S85-S90. doi:10.2105/AJPH.2016.303429

Boonstra, H. D. (2011). Teen pregnancy among women in foster care: A primer. Guttmacher Policy Review, 14(2). Retrieved from https://www.guttmacher.org/gpr/2011/06/teen-pregnancy-among-young-women-foster-care-primer

Bull, S., Devine, S., Schmiege, S. J., Pickard, L., Campbell, J., & Shlay, J. C. (2016). Text messaging, teen outreach program, and sexual health behavior: A cluster randomized trial. American Journal of Public Health, 106, S117-S124. doi:10.2105/AJPH.2016.303363

Centers for Disease Control and Prevention (CDC). (2019). Reproductive Health: Teen Pregnancy. Retrieved from https://www.cdc.gov/teenpregnancy/about/index.htm.

Cole, R. & Chizek, S. (2014). Evaluation Technical Assistance Brief for OAH & ACYF Teenage Pregnancy Prevention Grantees: Sample Attrition in Teen Pregnancy Prevention Impact Evaluations (Contract #HHSP233201300416G). Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Covington, R. D., Goesling, B., Clark Tuttle, C., Crofton, M., Manlove, J., Oman, R. F., and Vesely, S. (2016) Final Impacts of the POWER Through Choices Program. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Cunningham, M. R., van Zyl, M. A., & Borders, K. W. (2016). Evaluation of Love Notes and Reducing the Risk in Louisville, Kentucky. Final Evaluation Report to the University of Louisville Research Foundation. Louisville, KY: University of Louisville.

Dierschke, N., Gelfond, J., Lowe, D., Schenken, R.S., and Plastino, K. (2015). Evaluation of Need to Know (N2K) in South Texas: Findings From an Innovative Demonstration Program for Teen Pregnancy Prevention Program. San Antonio, Texas: The University of Texas Health Science Center at San Antonio.

Goesling, B., Wood, R.G., Lee, J., & Zief, S. (2017). Adapting an Evidence-based Curriculum in a Rural Setting: The Early Impacts of Reducing the Risk in Kentucky, OPRE Report # 2017-43, Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Goesling, B., Wood, R.G., Lee, J., & Zief, S. (2018). Adapting an Evidence-based Curriculum in a Rural Setting: The Longer-Term Impacts of Reducing the Risk in Kentucky, OPRE Report # 2018-27, Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Jemmott, J. B., Jemmott, L. S., & Fong, G. T. (1998). Abstinence and safer sex HIV risk-reduction interventions for African American adolescents: a randomized controlled trial. JAMA, 279(19), 1529-1536.

Kays, K., Gathercoal, K., & Buhrow, W. (2012). Does survey format influence self-disclosure on sensitive question items? Computers in Human Behavior, 28(1), 251-256.

Kirby, D. & Lepore, G. (2007). Sexual risk protective factors: Factors affecting teen sexual behavior, pregnancy, childbearing, and sexually transmitted disease. Which are important? Which can you change? Retrieved from ETR Associates website: http://recapp.etr.org/recapp/documents/theories/RiskProtectiveFactors200712.pdf

Koniak-Griffin, D., & Stein, J. A. (2006). Predictors of sexual risk behaviors among adolescent mothers in a human immunodeficiency virus prevention program. Journal of Adolescent Health, 38(3), 297-e1.

Office of Adolescent Health, U.S. Department of Health and Human Services. (2019). Teen Pregnancy Prevention Program Evaluations. https://www.hhs.gov/ash/oah/evaluation-and-research/teen-pregnancy-prevention-program-evaluations/index.html

Office of the Assistant Secretary for Planning and Evaluation, Office of Human Services Policy, U.S. Department of Health and Human Services. (2015). Improving the Rigor of Quasi-Experimental Impact Evaluations. ASPE Research Brief. https://tppevidencereview.aspe.hhs.gov/pdfs/rb_TPP_QED.pdf.

Piotrowski, Z. H., & Hedeker, D. (2016). Evaluation of the Be the Exception sixth-grade program in rural communities to delay the onset of sexual behavior. American Journal of Public Health, 106(S1), S132-S139.

Robinson, W.T., Kaufman, R. & Cahill, L. (2016). Evaluation of the Teen Outreach Program in Louisiana. New Orleans, LA. Louisiana State University Health Sciences Center at New Orleans, School of Public Health, Initiative for Evaluation and Capacity Building.

Rotz, D., Goesling, B., Crofton, M., Manlove, J., and Welti, K. (2016). Final Impacts of Teen PEP (Teen Prevention Education Program) in New Jersey and North Carolina High Schools. Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Ruwe, M.B., McCloskey, L., Meyers, A., Prudent, N., and Foureau-Dorsinville, M. (2016) Evaluation of Haitian American Responsible Teen. Findings from the Replication of an Evidence-based Teen Pregnancy Prevention Program in Eastern Massachusetts. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Schwinn, T., Kaufman, C. E., Black, K., Keane, E. M., Tuitt, N. R., Big Crow, C. K., Shangreau, C., Schaffer, G., & Schinke, S. (2015). Evaluation of mCircle of Life in Tribes of the Northern Plains: Findings from an Innovative Teen Pregnancy Prevention Program. Final behavioral impact report submitted to the Office of Adolescent Health. Washington, D.C.: U.S. Department of Health and Human Services, Office of Adolescent Health.

Slater, H.M., and Mitschke, D.B. (2015). Evaluation of the Crossroads Program in Arlington, TX: Findings from an Innovative Teen Pregnancy Prevention Program. Arlington, TX: University of Texas at Arlington.

Smith, T., Clark, J. F., & Nigg, C. R. (2015). Building Support for an Evidence-Based Teen Pregnancy and Sexually Transmitted Infection Prevention Program Adapted for Foster Youth. Hawaii Journal of Medicine & Public Health, 74(1).

Smith, K. & Coleman, S. (2012). Evaluation of Adolescent Pregnancy Prevention Approaches: Design of the Impact Study. Mathematica Technical Report 06549.070. Princeton, NJ. https://www.hhs.gov/ash/oah/sites/default/files/ash/oah/oah-initiatives/assets/ppa_design_report.pdf

Smith, K. V., Dye, C., Rotz, D., Cook, E., Rosinsky, K., & Scott, M. (2016). Interim Impacts of the Gender Matters Program. Washington, DC: U.S. Department of Health and Human Services, Office of Adolescent Health.

Tolou-Shams, M., Houck, C., Conrad, S. M., Tarantino, N., Stein, L. A. R., & Brown, L. K. (2011). HIV prevention for juvenile drug court offenders: A randomized controlled trial focusing on affect management. Journal of Correctional Health Care, 17(3), 226-232.

U.S. Department of Health and Human Services, Office of Adolescent Health. (2019). Trends in Teen Pregnancy and Childbearing. Retrieved from https://www.hhs.gov/ash/oah/adolescent-development/reproductive-health-and-teen-pregnancy/teen-pregnancy-and-childbearing/trends/index.html#_ftn8

Usera, J. J. & Curtis, K. M. (2015). Evaluation of Ateyapi Identity Mentoring Program in South Dakota: Findings from the Replication of an Evidence-Based Teen Pregnancy Prevention Program. Sturgis, SD: Delta Evaluation Consulting, LLC.

Vyas, A., Wood, S., Landry, M., Douglass, G., and Fallon, S. (2015). The Evaluation of Be Yourself/Sé Tú Mismo in Montgomery & Prince Georges Counties, Maryland. Washington, DC: The George Washington University Milken Institute School of Public Health.

Walker, E. M., Inoa, R., & Coppola, N. (2016). Evaluation of Promoting Health Among Teens Abstinence-Only Intervention in Yonkers, NY. Princeton, NJ: Sametric Research.

1 Furthermore, this birth rate does not account for the proportion of youth who may have given birth more than once. Indeed, about a fifth of all births to youth aged 15 to 19 are repeat births (second or more pregnancies; CDC, 2019), indicating that programs targeting pregnant or parenting youth are needed. Although adolescent pregnancy rates overall are declining, these disparities persist, suggesting that programs targeting these high-risk, vulnerable, or understudied youth are sorely needed.

2 http://www.ncsl.org/research/health/state-policies-on-sex-education-in-schools.aspx

3 Programs delivered in clinics and private non-group and non-licensed homes will not be eligible for funding under the MITRE acquisition.

5 https://www.hhs.gov/ash/oah/evaluation-and-research/teen-pregnancy-prevention-program-evaluations/meta-analysis/index.html

6 Prior evaluations of adolescent pregnancy prevention programs that were used to inform attrition estimates included:

School-based programs: the Ateyapi Identity Mentoring Program (Usera & Curtis, 2015), Crossroads (Slater & Mitschke, 2015), Need to Know (Dierschke et al., 2015), Be the Exception (Piotrowski & Hedeker, 2016), and the Promoting Health Among Teens! Programs (Walker, Inoa, & Coppola, 2016)

After-school programs: the Be Yourself/Sé Tú Mismo (Vyas, Wood, Landry, Douglass, & Fallon, 2015), Haitian American Responsible Teen (Ruwe et al., 2016), and Multimedia Circle of Life (Schwinn et al., 2015) programs

Programs serving adjudicated or foster care youth: the PATH Program (Tolou-Shams et al., 2011), the POWER through Choices Program (Covington et al., 2016), and the Making Proud Choices Program (Smith, Clark & Nigg, 2015; Jemmott, Jemmott, & Fong, 1998)

Community-based programs: Gender Matters (Smith et al., 2016), the Haitian American Responsible Teen (also assessed in an after-school setting; Ruwe et al., 2016), Reducing the Risk (Cunningham et al., 2016; Barbee et al., 2016), Love Notes (Cunningham et al., 2016; Barbee et al., 2016), Teen Outreach Program (Bull et al., 2016), Youth All Engaged (Bull et al., 2016), and the Children’s Aid Society-Carrera (Robinson, Kaufman, & Cahill, 2016) Programs

7 Source: “Occupational Employment and Wage Estimates May 2017,” U.S. Department of Labor, Bureau of Labor Statistics. http://www.bls.gov/oes/current/oes_nat.htm

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Schmidt, Stefanie R. |

| File Modified | 0000-00-00 |

| File Created | 2021-01-22 |
