HPOG 1 Impact 72 Mo Follow-up OMB Part B 06062017

Health Profession Opportunity Grants (HPOG) program: Third Follow-Up Data Collection

OMB: 0970-0394

OMB Information Collection Request

Supporting Statement

Part B

Submitted by:

Nicole Constance

Office of Planning, Research & Evaluation

Administration for Children & Families

U.S. Department of Health and Human Services

4th Floor, Mary E. Switzer Building

330 C Street, SW

Washington, D.C. 20201

Table of Contents

B.1 Respondent Universe and Sampling Methods

B.1.1 HPOG Programs and Study Participants

B.1.2 Sample Design

B.1.3 Target Response Rates

B.1.4 Estimation Procedures for HPOG-Impact Analyses

B.1.5 Degree of Accuracy Required

B.2 Procedures for Collection of Information

B.2.1 Preparation for the Interviews

B.2.2 In-Person Interviewing

B.2.3 Procedures with Special Populations

B.3 Methods to Maximize Response Rates and Deal with Nonresponse

B.3.1 Participant Contact Updates and Locating

B.3.2 Tokens of Appreciation

B.3.3 Sample Control to Maximize the Response Rate

B.3.4 Nonresponse Bias Analysis and Nonresponse Weighting Adjustment

B.5 Individuals Consulted on Statistical Aspects of the Design

Appendices:

Appendix A: HPOG Logic Model

Appendix B: HPOG-Impact 72-month Follow-up Survey

Appendix C: HPOG-Impact 72-month Follow-up Survey – Sources

Appendix D: HPOG-Impact Federal Register Notice

Appendix E: HPOG-Impact Previously Approved Contact Update Form

Appendix F: Contact Update Letters

F1: HPOG-Impact Previously Approved Contact Update Letter for 36-Month Survey

F2: HPOG-Impact 72-Month Contact Update Letter

Appendix G: HPOG-Impact Previously Approved Consent Form

Appendix H: HPOG-Impact 72-Month Participant Newsletter

Appendix I: HPOG-Impact 72-Month Survey Flyer

Appendix J: HPOG-Impact 72-Month Email Text

Appendix K: Survey Advance Letters

K1. HPOG-Impact Previously Approved 36-Month Survey Advance Letter

K2. HPOG-Impact 72-Month Survey Advance Letter

Appendix L: Previously Approved Surveys

L1. HPOG-Impact 36-month Follow-up Survey – Control Group Version

L2. HPOG-Impact 36-month Follow-Up Survey – Treatment Group Version

Appendix M: Contact Update Call Script

Part B: Statistical Methods

This document presents Part B of the Supporting Statement for the 72-month follow-up data collection activities for the first round of Health Profession Opportunity Grants (HPOG) Impact Study (HPOG-Impact). The study is sponsored by the Office of Planning, Research and Evaluation (OPRE) in the Administration for Children and Families (ACF) in the U.S. Department of Health and Human Services (HHS).

B.1 Respondent Universe and Sampling Methods

For the 72-month follow-up data collection, the respondent universe for the HPOG evaluation includes a sample of HPOG study participants.

B.1.1 HPOG Programs and Study Participants

Thirty-two grants were awarded to government agencies, community-based organizations, post-secondary educational institutions, and tribal-affiliated organizations to conduct the training and support service activities needed to implement the HPOG program. Of these, 27 were awarded to agencies serving TANF recipients and other low-income individuals. Twenty of those 27 grantees participate in HPOG-Impact. Three more participate in the Pathways for Advancing Careers and Education (PACE) project. Together, these 23 grantees run 42 programs, which comprise the HPOG-Impact sample.

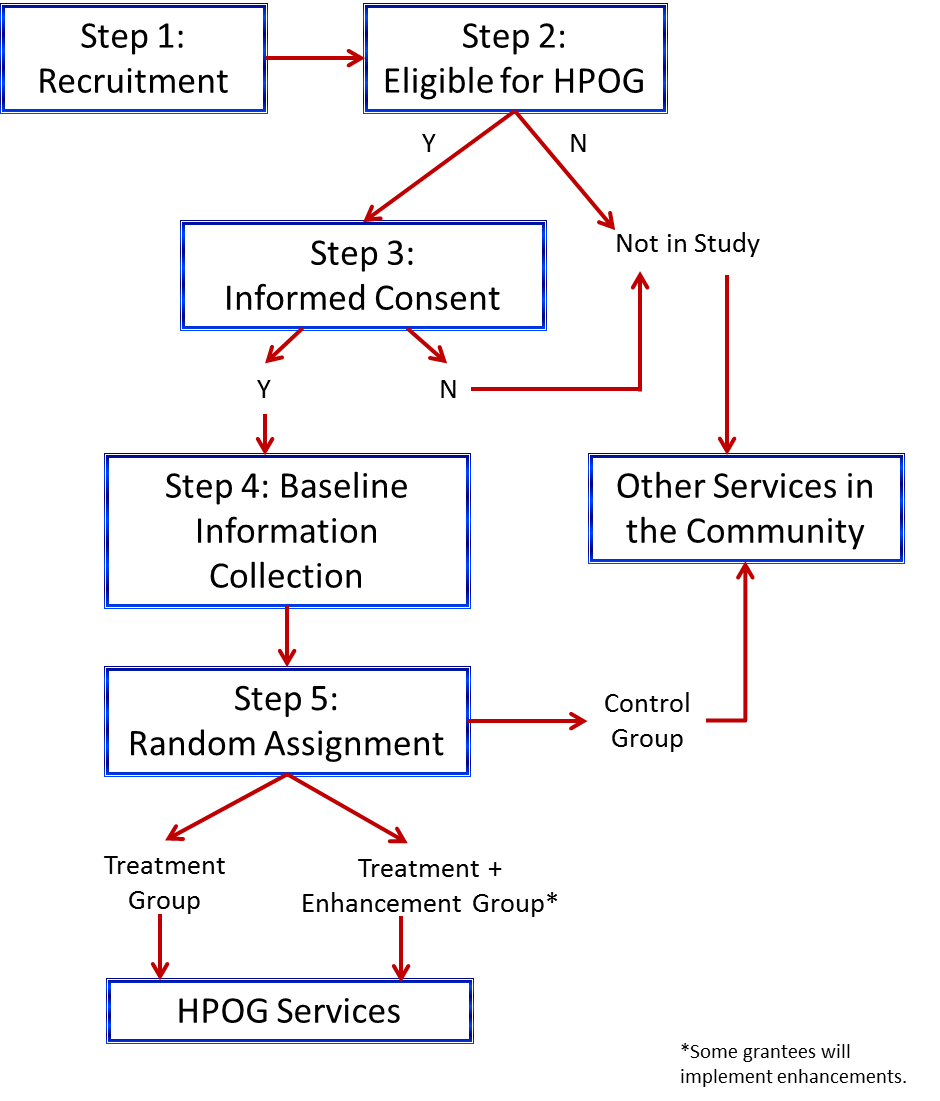

For HPOG-Impact, the universe for the current proposed data collection includes all study participants: both treatment group members who had access to HPOG services and control group members who did not receive HPOG services. Program staff recruited these individuals and determined eligibility. For those individuals deemed eligible for the program who also agreed to be in the study, program staff obtained informed consent. (If individuals did not agree to be in the study, they were not eligible for services under the first round of HPOG grants.)

For HPOG-Impact, 10,617 participants enrolled in the study across the 20 participating grantees, including two treatment groups and a control group. Ten of the 20 grantees (including 19 of the 42 programs) randomized applicants into two treatment arms (a basic and an enhanced version of the intervention) and a control group. Those in the enhanced treatment group participate in one of three enhanced HPOG services, in which the basic HPOG program is augmented by an additional program component. In this subset of grantees, program applicants were randomly assigned to (1) the “standard” HPOG program, (2) an “enhanced” HPOG program (i.e., the HPOG program plus an enhancement), or (3) a control group that is not offered the opportunity to enroll in HPOG.1

Exhibit B-1 summarizes the study’s general randomization process.

Exhibit B-1: HPOG-Impact Study Participant Recruitment and Random Assignment Process

B.1.2 Sample Design

For the 15-month and 36-month surveys, all HPOG study participants were targeted for the survey. Additionally, for every HPOG participant with minor children, a focal child was selected for a child outcome module at 36 months. Given budget constraints, ACF has decided to sample at most 4,000 HPOG study participants for the 72-month survey, with an expected response rate of 74 percent, or 2,960 completed interviews. The sample selection plan is described below, followed by a description of how the focal children were selected for the child outcome module. At 72 months, focal child sample selection will be based on whether or not a focal child was selected at the time of the 36-month follow-up. That is, if a participant had a focal child selected for the child outcome module at 36 months, that same child will be selected for the child outcome module at 72 months.

Selection of HPOG Programs for 72-Month Survey

This section describes the plan for deciding at which HPOG programs to administer the 72-month survey to participants.

The research team will focus on the following criteria when selecting programs for the follow-up survey:

The likelihood of longer-term impacts on the outcomes (such as credential attainment, poverty, welfare dependence, economic self-sufficiency, health insurance, being employed with benefits, food security, mental health, debt, resilience to financial shocks, and child well-being) measured in the 72-month survey; and

The likely practical and policy-relevant magnitude of those impacts.

Based on its cumulative experience, the research team came to the judgment that factors such as the plausibility of a program’s logic model or the quality of implementation by themselves are poor predictors of impacts in the long term. Instead, the study team will primarily use an empirically driven approach that looks for shorter-term impacts on educational progress, employment and earnings to suggest a program is likely to produce long-term impacts on broad (survey-based) measures of economic independence and family well-being.

The decision will primarily be based on shorter-term impacts on earnings from the 15-month follow-up survey and from the National Directory of New Hires (NDNH). NDNH data permit estimation of impacts at a slightly longer follow-up period, 24 months. (The 36-month survey cannot be used because it is still underway.) In addition to examining impacts on employment and earnings at 24 months with the NDNH, the study team will also use 15-month survey results on educational outcomes and employment in the healthcare sector (a key outcome for HPOG) as predictors of future success.

The research team will examine these impacts at the program level and also consider the precision with which the impacts are estimated and the sample sizes available at the program level.

Sampling Plan for Study of Impacts on Child Outcomes

Like the 36-month survey, the 72-month survey will have a child module to assess program impacts on the participants’ children. The 72-month survey will use the same focal child sample selected for the 36-month survey. The child module in the 72-month survey will be asked of all respondents who had at least one focal child selected for the 36-month follow-up survey.

The focal child selection at 36 months was confined to participants who reported at least one child in the household at baseline who would be between the ages of 3 and 18 years at the time of the 36-month survey. A given respondent was asked to confirm that the selected focal child resided with the respondent more than half time during the 12 months prior to the 36-month survey administration. Each household was asked about a specific focal child in the household regardless of how many children were eligible. The 36-month sampling plan selected approximately equal numbers of children from each of three age categories at baseline: preschool-age children aged 3 through 5 and not yet in kindergarten; children in kindergarten through grade 5; and children in grades 6 through 12. The procedure for selecting a focal child from each household depended on the configuration of children from each age category present in the household. Specifically, there are seven possible household configurations of age groups in households with at least one child present:

1. Preschool child(ren) only

2. Child(ren) in K – 5th grade only

3. Child(ren) in 6th – 12th grade only

4. Preschool and K – 5th grade children

5. K – 5th grade and 6th – 12th grade children

6. Preschool and 6th – 12th grade children

7. Preschool-age, K – 5th grade, and 6th – 12th grade children

The sampling plan was as follows:

For household configurations 1, 2, and 3, only one age group was available for the sample, so the focal child was selected from that age group; if there were multiple children in that age group, one child was selected at random.

For household configurations 4 and 5, a K – 5th grade child was selected from a random 30 percent of households and a child from the other age category in the household (preschool-age or 6th – 12th grade) was selected from 70 percent of households.

For household configuration 6, a preschool child was selected from a random 50 percent of the households, and a child in 6th – 12th grade was selected from the remaining 50 percent of the households.

For household configuration 7, a child in K – 5th grade was selected from a random 20 percent of households, a preschool-age child was selected from a random 40 percent of households, and a child in 6th – 12th grade was selected from the remaining 40 percent of households.

Sampling weights will be used to account for the differential sampling ratios for some child age categories in some household configurations. By applying the sampling weights, the sample for estimating program impacts on children will represent the distribution of children’s ages across study households.
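The selection rules above can be sketched in code. This is a minimal illustration rather than the study's actual procedure: the function and variable names are hypothetical, each household is drawn independently (the study may instead have allocated fixed shares of households), and the weight shown, the inverse of a child's selection probability within the household, is an assumed, standard construction because the text does not give the weighting formula.

```python
import random

# Probability that each age group is chosen, keyed by the set of age groups
# present in the household (the seven configurations listed above).
# "pre" = preschool, "k5" = K-5th grade, "g6_12" = 6th-12th grade.
GROUP_PROBS = {
    frozenset({"pre"}): {"pre": 1.0},
    frozenset({"k5"}): {"k5": 1.0},
    frozenset({"g6_12"}): {"g6_12": 1.0},
    frozenset({"pre", "k5"}): {"k5": 0.3, "pre": 0.7},
    frozenset({"k5", "g6_12"}): {"k5": 0.3, "g6_12": 0.7},
    frozenset({"pre", "g6_12"}): {"pre": 0.5, "g6_12": 0.5},
    frozenset({"pre", "k5", "g6_12"}): {"k5": 0.2, "pre": 0.4, "g6_12": 0.4},
}


def select_focal_child(children, rng=random):
    """Pick one focal child from a household.

    children: list of (child_id, age_group) tuples for one household.
    Returns (child_id, age_group, weight), where weight is the inverse of
    the child's selection probability (an assumed weighting formula).
    """
    by_group = {}
    for child_id, group in children:
        by_group.setdefault(group, []).append(child_id)

    probs = GROUP_PROBS[frozenset(by_group)]
    groups = sorted(probs)
    # First choose the age group, then a child at random within that group.
    group = rng.choices(groups, weights=[probs[g] for g in groups])[0]
    child_id = rng.choice(by_group[group])

    p_select = probs[group] / len(by_group[group])
    return child_id, group, 1.0 / p_select


# Hypothetical household: one preschooler and two K-5th grade children.
household = [("c1", "pre"), ("c2", "k5"), ("c3", "k5")]
print(select_focal_child(household, random.Random(0)))
```

Under these assumptions, a K – 5th grade child in this household is selected with probability 0.3 / 2 = 0.15 and so carries a larger weight than the preschooler, who is selected with probability 0.7.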

B.1.3 Target Response Rates

Overall, the research team expects response rates to be sufficiently high in this study to produce valid and reliable results that can be generalized to the study’s overall participant group. The response rate for the 15-month follow-up survey is 76 percent and, as of April 11, 2017, the response rate for the ongoing 36-month follow-up survey is 73 percent for the sample cohorts that have been completed. We expect this to rise slightly based on experience with the 15-month survey and with early cohorts on the PACE 36-month survey. Based on these prior experiences with the HPOG-Impact survey and the PACE survey, we expect to conclude the HPOG 72-Month Follow-up Survey with a 74 percent overall response rate.

B.1.4 Estimation Procedures for HPOG-Impact Analyses

For overall treatment impact estimation, the research team will use multivariate regression (specified as a multi-level model to account for the multi-site clustering). The team will include individual baseline covariates to improve the power to detect impacts. The research team has already pre-selected the covariates, as documented in the study’s Analysis Plan (Harvill, Moulton & Peck, 2015; http://www.career-pathways.org/acf-sponsored-studies/HPOG/). The team will estimate the intention-to-treat (ITT) impact for all sites pooled and will conduct pre-specified subgroup analyses. In general, analyses that use administrative data will use everyone who gave informed consent during the randomization period for HPOG-Impact. Analyses that rely on survey data will use everyone who gave informed consent during the randomization period and who responds to the follow-up survey. Analyses based on the survey data will deal with nonresponse by including covariates in the impact estimation models as well as using nonresponse weights, as described in the earlier study’s Analysis Plan.
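As a simplified illustration of how nonresponse weights enter an impact estimate, the sketch below computes a weighted difference in mean outcomes between treatment and control respondents. It is not the multi-level regression described above: it omits baseline covariates and site clustering, and the record layout and function names are hypothetical.

```python
def weighted_mean(values, weights):
    """Weighted average of values, using the given nonresponse weights."""
    total_weight = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total_weight


def weighted_itt(records):
    """Weighted treatment-control difference in mean outcomes.

    records: iterable of (assigned_to_treatment, outcome, weight) tuples,
    one per survey respondent, grouped by original random assignment.
    """
    arms = {True: ([], []), False: ([], [])}
    for treated, outcome, weight in records:
        values, weights = arms[bool(treated)]
        values.append(outcome)
        weights.append(weight)
    return weighted_mean(*arms[True]) - weighted_mean(*arms[False])
```

Respondents are grouped by the arm to which they were randomly assigned, regardless of the services they actually received, which is what makes the contrast an intention-to-treat estimate.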

In addition to the straightforward experimental impact analysis, the study’s 15-month analysis undertakes a supplemental (non-experimental) analysis of which program components are the more important contributors to program impact. Future analyses may build on that earlier work. All of the study’s design and analytic plans are publicly available in a series of documents that includes Health Profession Opportunity Grants Impact Study Design Report (Peck, et al., 2014); Health Profession Opportunity Grants Impact Study Technical Supplement to the Evaluation Design Report: Impact Analysis Plan (Harvill, Moulton & Peck, 2015); and Health Profession Opportunity Grants (HPOG) Impact Study: Amendment to the Technical Supplement to the Evaluation Design Report (Harvill, Moulton & Peck, 2017).

B.1.5 Degree of Accuracy Required

The research team has estimated the study’s minimum detectable effects (MDEs), which are shown in Exhibit B-2 below. The MDE is the smallest true impact that the study will have an 80 percent probability of detecting when the test is for the hypothesis of “no impact” and has just a 10 percent chance of finding an impact if the true impact is zero.

Three survey measures of particular importance are used here for calculating MDEs: employment in a healthcare occupation, household income, and a measure of a parental report of child well-being. Exhibit B-2 relies on findings from the current HPOG Impact Study to inform the future MDEs.

Exhibit B-2: Minimum Detectable Effects for Employment in a Healthcare Occupation, Household Income, and Parental Report of Child Well-being

| Outcome of interest (measure) | Sample size (N = number of completed interviews) | Associated MDE |
|---|---|---|
| Employment in healthcare (rate) | 2,960 | 3.7 percentage points |
| Household income (monthly) | 2,960 | $133 |
| Parental report of children’s school compliance (z-score) | 2,960 | 0.07 |

Note: MDEs are based on 80 percent power with a 10 percent significance level in a one-tailed test, assuming impacts are estimated in a model where baseline variables explain 20 percent of the variance in the outcome. Mean and standard deviation values of each variable come from analyses of the HPOG Impact Study’s data: the 15-month survey for employment in a healthcare occupation and household income, and the 36-month survey for the parental report of child well-being. Sample sizes associated with the parental report MDE assume that the entire survey subsample is parents; if only two-thirds of the survey subsample were parents (as is their representation in the research sample), then the MDE would be 0.09.

These MDEs are sufficient to detect likely impacts of policy relevance. They reflect a survey subsample of about 40 percent of the HPOG-Impact research participants, or about 4,000 individuals in the survey sample. Given the anticipated 74 percent response rate, this results in an analysis sample of 2,960. The MDEs assume that the 2:1 treatment-control ratio is maintained in the sampling strategy; and, as such, the MDEs are a conservative estimate: if the research team chooses to select a survey sample with a 1:1 treatment-control ratio, then the MDEs would shrink slightly.
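The arithmetic behind these figures can be sketched as follows. The document does not state the formula the team used; this sketch assumes a common formula for individual-level random assignment, MDE = (z for the significance level + z for power) × sqrt((1 − R²) / (N × p × (1 − p))), expressed in standard deviation units, where p is the share assigned to treatment. It ignores site clustering, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mde_in_sd_units(n, treat_share=2 / 3, r_squared=0.20,
                    alpha=0.10, power=0.80):
    """MDE in standard deviation units for a one-tailed test.

    Assumed formula (not stated in the source document):
    (z_{1-alpha} + z_{power}) * sqrt((1 - R^2) / (n * p * (1 - p))).
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha) + z.inv_cdf(power)
    variance_factor = (1 - r_squared) / (n * treat_share * (1 - treat_share))
    return multiplier * sqrt(variance_factor)


completes = round(4000 * 0.74)  # 2,960 expected completed interviews

print(round(mde_in_sd_units(completes), 2))          # 0.07
print(round(mde_in_sd_units(completes * 2 / 3), 2))  # 0.09 if two-thirds are parents

# A 1:1 treatment-control ratio yields a slightly smaller MDE than 2:1.
print(mde_in_sd_units(completes, treat_share=0.5) < mde_in_sd_units(completes))  # True
```

Under these assumptions the sketch reproduces the 0.07 and 0.09 standard deviation figures reported for the child measure. Multiplying the result by an outcome's standard deviation converts it to the outcome's natural units.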

The MDE for employment in the healthcare sector is 3.7 percentage points. This is smaller than the impacts that the study is currently observing, and so provides an opportunity for the impacts to wane over time and still be detectably non-zero.

Next, the $133 effect on monthly income is sufficiently small (< $1,600 annually) that it is a non-trivial, but reasonable threshold to determine the program’s impact over the long term. This impact magnitude is on par with what the study is currently observing for this outcome measure.

Third, a new set of measures from the 36-month follow-up survey considers child well-being as reflected in parents’ assessments of their children’s development, academic performance, and school compliance behavior. The measure used above to gauge future MDEs is a scale of children’s school attendance, tardiness, suspension and expulsion behavior: the average control group measure is about 0.5 absolute units (where zero is neutral and a positive value reflects favorable school compliance), and the scores are standardized to a mean of zero. As such, the forecasted MDE on such a measure is 0.07 standard deviations. This is a modestly sized impact for this kind of measure, one that is expected to be achievable (if the intervention has effects on children via their parents’ training, education and subsequent employment experiences) and therefore detectable.

B.2 Procedures for Collection of Information

Experience with the 15- and 36-month survey data collection efforts shows that the study population is more responsive to outreach attempts by local interviewers with local phone numbers than it is to calls from a telephone research center. In response, for the 72-month follow-up survey, local field interviewers will conduct all interviews. Interviewers will conduct the 72-month follow-up survey using encrypted devices equipped with computer-assisted personal interviewing (CAPI) technology. CAPI technology, by design, will not allow inadvertent missing responses and will reduce the number of outlying responses to questions, such as exceptionally high or low hours worked per week. CAPI technology also houses the complete participant contact history, interviewer notes, and case disposition summaries, eliminating the need for paper records in the field. Abt’s survey team will conduct the survey.

B.2.1 Preparation for the Interviews

Prior to the interview, interviewers will be hired and trained, an advance letter will be sent, and the interviewer will send an email reminder.

Interviewer Staffing: An experienced, trained staff of interviewers will conduct the HPOG 72-Month Follow-Up Survey. The training includes didactic presentations, numerous hands-on practice exercises, and role-play interviews. Special emphasis will be placed on project knowledge and sample complexity, gaining cooperation, refusal aversion, and refusal conversion.

Abt maintains a roster of approximately 1,700 experienced in-person interviewers across the country. To the extent possible, the new study will recruit in-person interviewers who worked successfully on the HPOG 15-month and 36-month surveys. These interviewers are familiar with the study and have already developed rapport with respondents and gained valuable experience locating difficult-to-reach respondents.

All potential in-person interviewers will be carefully screened for their overall suitability and ability to work within the project’s schedule, as well as the specific skill sets and experience needed for the study (e.g., previous in-person data collection experience, strong test scores for accurately recording information, attention to detail, reliability, and self-discipline).

Advance Letter: To support the 72-month survey effort, an advance letter will be mailed to participants approximately one and a half weeks before we start to call them for interviews. The advance letter serves as a way to re-engage participants in the study and alert them to the upcoming effort so that they are more prepared for the interviewer’s call. (Advance letters are in Appendix K.)

The sample file is updated prior to mailing using Accurint to help ensure that the letter is mailed to the most up-to-date address. This is a personalized letter that will remind participants of their agreement to participate in the study and that interviewers will be calling them for the follow-up interview. The letter also assures them that their answers will be kept private and provides them with a toll-free number that they can call to set up an interview. We may also send an email version of the advance letter to participants for whom we have email addresses.

Email Reminder: Interviewers attempt to contact participants by telephone first. If initial phone attempts are unsuccessful, interviewers can use their project-specific email accounts to introduce themselves as the local staff, explain the study and attempt to set up an interview. They send this email, along with the advance letter, about halfway through the time during which they are working the cases. (See Appendix J for the email reminder text.)

B.2.2 In-Person Interviewing

Data collection begins when local interviewers attempt to reach the study participants by telephone. The interviewers will call the numbers provided for the respondent first and then attempt any alternate contacts. Interviewers will have access to the full history of respondent telephone numbers collected at baseline and updated throughout the 72-month follow-up period.

Interviewers will dial through the complete phone number history for participants as well as any alternate contact information. After they exhaust phone efforts, they will start to work non-completed cases in person. Interviewers may leave specially designed project flyers and Sorry-I-Missed-You cards with family members or friends (see Appendices J1 and J2 for the 36-month and 72-month surveys respectively).

B.2.3 Procedures with Special Populations

All study materials designed for HPOG participants will be available in English and Spanish. Interviewers will be available to conduct the Participant Follow-Up survey interview in either language. Persons who speak neither English nor Spanish, deaf persons, and persons on extended overseas assignment or travel will be ineligible for follow-up, but interviewers will collect information on reasons for ineligibility. Persons who are incarcerated or institutionalized will also be ineligible for follow-up.

B.3 Methods to Maximize Response Rates and Deal with Nonresponse

For the 72-month follow-up, the following methods will be used to maximize response:

Participant contact updates and locating;

Tokens of appreciation; and

Sample control during the data collection period.

B.3.1 Participant Contact Updates and Locating

The HPOG team has developed a comprehensive system to maintain updated contact information and maximize response to the 15-month and 36-month survey efforts. A similar strategy will be followed for the 72-month survey. This multi-stage locating strategy blends active locating efforts (which involve direct participant contact) with passive locating efforts (which rely on various consumer database searches). At each point of contact with a participant (through contact update letters), the research team will collect updated name, address, telephone and email information. In addition, the team will use information collected in earlier surveys on contact data for up to three people who did not live with the participant, but will likely know how to reach him or her. Interviewers only use secondary contact data if the primary contact information proves to be invalid, for example, if they encounter a disconnected telephone number or a returned letter marked as undeliverable. Appendix F1 shows a copy of the contact update letter currently in use for the 36-month survey and Appendix F2 shows the contact update letter for use in the 72-month survey efforts. The research team will continue to send contact update letters periodically leading up to the 36-month survey, for study participants who have not yet completed it, and for all participants selected for interviewing prior to the 72-month follow-up survey (pending OMB approval).

Given the time between the 15-month or 36-month follow-up surveys and the 72-month follow-up surveys, it is likely that many study participants will have changed telephone numbers (landlines as well as cell phones) and addresses, in some cases multiple times. Accurate locating information is crucial to achieving high survey response rates in any panel study; it is particularly relevant for a 72-month follow-up study.

The research team has developed a contact update approach that includes innovative and creative new ideas to supplement protocols used on the earlier surveys. The team will still use a mix of activities that build in intensity over time. We will use passive searches early on (batch Accurint processing) and build up to more intensive active contact update efforts in the 12 months leading up to the release of the 72-month survey. Abt Associates has used these approaches on other studies of traditionally difficult-to-reach populations, such as homeless families and TANF recipients. Exhibit B-3 shows our planned contact update activities and the schedule for conducting each one, in terms of the number of months prior to the 72-month survey data collection.

Exhibit B-3: Participant Contact Update and Data Collection Schedule

| Contact Update Activity | Timing (months prior to 72-month survey) |
|---|---|
| Accurint (Passive) Look-up | Every 6 months starting in mid-2017 |
| Participant Contact Update | 12 |
| Participant Newsletter | 8 |
| Contact Update Check-in Call | 4 |
| Long-Term Survey | 0 |
| Participant Locating in the Field | From start of survey to survey completion or cohort closure |

Our first contact update activity will be a passive database search followed by our standard participant contact update mailing (see Appendices E and F). Passive database searches allow us to gain updated respondent contact data using proprietary databases of vendors such as Accurint. This way, the research team can update contact data quickly and efficiently, without any respondent burden. Accurint lookups for the study sample will be conducted every six months.

Twelve months before the scheduled interview, all potential survey sample members will be mailed a standard contact update letter. The letter will invite them to update their contact information as well as information for up to three alternative contacts. This contact update will help reestablish communication with study participants who have not had any recent interaction with the study.

Participant newsletter (Appendix H): To re-engage the study participants selected for the 72-month long-term survey sample, our team needs to catch their attention and draw them back into the study. This participant newsletter will start by thanking participants for their continued cooperation and emphasize the importance of their participation, even if they were assigned to the control group and did not participate in the program. It will include some study highlights from the HPOG-Impact study. Finally, it will explain the remaining data collection activities and offer participants an opportunity to update their contact information via the online system or on paper. This newsletter will be sent eight months prior to the release of the 72-month survey, replacing the standard contact update letter that would have gone out at that time.

Contact Update Check-in Call (Appendix M): The local interviewers will attempt to conduct a short check-in call with the study participants four months prior to the release of the 72-month survey. This call allows the local interviewers to build upon their existing rapport with participants established during the 15-month and 36-month follow-up data collection efforts. Interviewers will inform study participants about the next survey data collection effort and address any questions about the study such as “Why are you calling me again?” or “Why should I participate?” Interviewers will conclude the call by collecting updated contact data. All new contact information will be appended to the study database prior to the start of the 72-month follow-up survey.
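The timing in Exhibit B-3 can be turned into calendar months for a given cohort. The sketch below is illustrative only: the function names and the example release date are hypothetical, and the recurring Accurint look-ups and field locating are left out because they are not tied to a single month offset.

```python
from datetime import date

# Months before the 72-month survey release, taken from Exhibit B-3.
MONTHS_PRIOR = {
    "Participant Contact Update": 12,
    "Participant Newsletter": 8,
    "Contact Update Check-in Call": 4,
    "Long-Term Survey": 0,
}


def month_offset(release, months_prior):
    """Return the first day of the month `months_prior` months before `release`."""
    index = release.year * 12 + (release.month - 1) - months_prior
    year, month_index = divmod(index, 12)
    return date(year, month_index + 1, 1)


def contact_schedule(release):
    """Map each contact update activity to the month in which it falls."""
    return {name: month_offset(release, months)
            for name, months in MONTHS_PRIOR.items()}


# Hypothetical cohort whose 72-month survey is released in March 2020.
for activity, when in contact_schedule(date(2020, 3, 1)).items():
    print(f"{when:%B %Y}: {activity}")
```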

B.3.2 Tokens of Appreciation

Monetary incentives show study participants that the research team respects and appreciates the time they spend participating in study information collection activities. Although evidence of the effectiveness of incentives in reducing nonresponse bias appears to be nearly nonexistent, it is well established that incentives strongly reduce attrition in panel studies.2

In longitudinal studies such as HPOG-Impact, panel retention during the follow-up period is critical to minimizing the risk of nonresponse bias and to achieving sufficient sample for analysis. Although low response rates do not necessarily lead to nonresponse bias and it is at least theoretically possible to worsen nonresponse bias by employing some techniques to boost response rates (Groves, 2006), most statisticians and econometricians involved in the design and analysis of randomized field trials of social programs agree that it is generally desirable to obtain a response rate as close to 80 percent as possible in all arms of the trial (Deke & Chiang, 2016). The work of Deke and Chiang underlies the influential guidelines of the What Works Clearinghouse (WWC). Under those guidelines, the evidential quality rating of an evaluation is downgraded if the differential in response rates between the arms of the trial exceeds a certain tolerance (e.g., 4 percentage points when the overall response rate is 80 percent).

Mindful of these risks and the solid empirical base of research demonstrating that incentives do increase response rates, OPRE and OMB authorized incentives for the prior rounds of data collection at 15 and 36 months (OMB control number 0970-0397). At 15 months, the incentive was $30 for completing the interview and $5 for updating contact information in advance of the scheduled interview time. With these incentives, HPOG-Impact achieved response rates for the treatment group varying from 65.2 percent to 92.3 percent across all sites. Response rates for the control groups were generally lower, varying from 59.8 percent to 80.5 percent. Overall, HPOG-Impact experienced a differential response rate of 6.9 percentage points between the treatment and control groups. Site-specific differences ranged from -3.4 to +17.7 percentage points.
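The overall and differential response rates discussed above are simple to compute from completed-interview counts by arm. The sketch below uses hypothetical function names and hypothetical counts, not HPOG-Impact data. The tolerance is passed in as a parameter because the acceptable differential depends on the overall response rate; only the one example cited above (4 percentage points at an 80 percent overall rate) comes from the text.

```python
def response_rates(treat_completes, treat_sample, ctrl_completes, ctrl_sample):
    """Return (overall response rate, treatment-minus-control differential)."""
    treat_rate = treat_completes / treat_sample
    ctrl_rate = ctrl_completes / ctrl_sample
    overall = (treat_completes + ctrl_completes) / (treat_sample + ctrl_sample)
    return overall, treat_rate - ctrl_rate


def exceeds_tolerance(differential, tolerance):
    """True if the absolute differential is larger than the allowed tolerance."""
    return abs(differential) > tolerance


# Hypothetical counts for illustration only.
overall, differential = response_rates(800, 1000, 365, 500)
print(f"overall {overall:.1%}, differential {differential * 100:.1f} points")
print(exceeds_tolerance(differential, tolerance=0.04))
```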

Given these response rates and gaps, at 36 months, the conditional incentive for completing the main interview was increased to $40, the $5 incentive for updating contact information was changed to a $2 prepayment included in the request for a contact update, and a prepayment of $5 was added to the advance letter package to remind participants of the study and alert them that a legitimate interviewer would be calling shortly to learn about their experiences since study enrollment.

In most longitudinal studies, response rates decline over follow-up rounds. Abt Associates is currently about midway through data collection for the 36-month follow-up. Perhaps due to the increased incentives, among other efforts, as of April 11, 2017, the average response rate is only three percentage points lower than it was for the 15-month follow-up. Of course, the 72-month follow-up is three years later and locating challenges will be even greater. Given a target of a 74 percent response rate for the 72-month follow-up, it would be wise to further increase incentives, as well as to slightly restructure them.

The amounts proposed (subject to OMB approval) for the 72-month survey and contact update responses are as follows:

$5 token of appreciation for responding to the first contact update letter;

$10 token of appreciation for completing the check-in call;

$45 token of appreciation for completing the survey.

Finally, the team does not rely solely on the use of tokens of appreciation to maximize response rates—and therefore reduce nonresponse bias. The next section summarizes other efforts to maximize response rates.

B.3.3 Sample Control to Maximize the Response Rate

During the data collection period, the research team will minimize nonresponse levels and the risk of nonresponse bias by:

Using trained interviewers who are skilled at working with low-income adults and skilled in maintaining rapport with respondents, to minimize the number of break-offs and incidence of nonresponse bias.

Providing a Spanish language version of the survey instrument. Interviewers will be available to conduct the interview in Spanish to help achieve a high response rate among study participants with Spanish as a first language. Based on our experience on the earlier surveys, we know which sites need Spanish language interviewers and Abt will hire the most productive bilingual interviewers from these survey efforts.

Using a mixed-mode approach (a telephone interview with in-person follow-up), but having local interviewers conduct both the telephone and in-person interviews. Our experience on the earlier surveys is that local interviewers are more likely than the phone center to get their calls answered. This approach also allows local interviewers to begin working the sample earlier, so they will have firsthand knowledge of the obstacles encountered if a phone interview cannot be completed.

Using contact information updates, a participant newsletter, and check-in calls to keep the sample member engaged in the study and to enable the research team to locate them for the follow-up data collection activities. (See Appendix F2 for a copy of the contact information update letter.)

Using the participant newsletter to clearly convey the purpose of the survey to study participants and provide reassurances about privacy, so they will perceive that cooperating is worthwhile. (See Appendix H for a copy of the participant newsletter.)

Sending email reminders to non-respondents (for whom we have an email address) informing them of the study and allowing them the opportunity to schedule an interview (Appendix J).

Providing a toll-free study hotline number—which will be included in all communications to study participants—for them to use to ask questions about the survey, to update their contact information, and to indicate a preferred time to be called for the survey.

Taking additional locating steps in the field, as needed, when the research team does not find sample members at the phone numbers or addresses previously collected.

Using customized materials in the field, such as “tried to reach you” flyers with study information and the toll-free number (Appendix I).

Requiring the survey supervisors to manage the sample to ensure that a relatively equal response rate for treatment and control groups is achieved.

Through these methods, the research team anticipates being able to achieve the targeted 74 percent response rate for the follow-up survey. As discussed in Section B.1, the targeted rate of 74 percent is based on our response rates from the 15-month survey and the early results from the 36-month survey.

B.3.4 Nonresponse Bias Analysis and Nonresponse Weighting Adjustment

If, as expected, interviewers achieve a response rate below 80 percent, the research team will conduct a nonresponse bias analysis. Regardless of the final response rate, the team will construct and use nonresponse weights, using the same approach as in the study’s 15-month survey data analysis (details appear in Harvill, Moulton & Peck, 2015). Using both baseline survey data and administrative data from the NDNH, the research team will (1) estimate response propensity with a logistic regression model, separately for each randomized arm; (2) group study participants into intervals of response propensity; and (3) assign each individual a weight equal to the inverse of the empirical response rate within that interval.
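The binning and weighting steps described above can be illustrated with a minimal sketch. It is not the study's actual code: the function name, the choice of five equal-size bins, and the convention of giving nonrespondents a weight of zero are assumptions made here for illustration. The sketch also assumes that response propensities have already been estimated upstream (for example, by the logistic regression model) and that the function is called once per randomized arm.

```python
from collections import defaultdict


def nonresponse_weights(propensity, responded, n_bins=5):
    """Return one weight per sample member (0.0 for nonrespondents).

    propensity: estimated response propensities from an upstream model
    responded:  parallel list of booleans (True = completed the survey)
    n_bins:     number of equal-size propensity intervals (an assumption)
    """
    n = len(propensity)

    # Rank sample members by propensity, then cut the ranking into n_bins groups.
    order = sorted(range(n), key=lambda i: propensity[i])
    bin_of = [0] * n
    for rank, i in enumerate(order):
        bin_of[i] = rank * n_bins // n

    # Count sample members and completed interviews in each bin.
    totals = defaultdict(int)
    completes = defaultdict(int)
    for i in range(n):
        totals[bin_of[i]] += 1
        completes[bin_of[i]] += int(responded[i])

    # Respondent weight = 1 / (empirical response rate in the bin).
    weights = []
    for i in range(n):
        b = bin_of[i]
        if responded[i]:
            weights.append(totals[b] / completes[b])
        else:
            weights.append(0.0)
    return weights
```

When every bin contains at least one respondent, the respondent weights in each bin sum to the bin's full sample size, so the weighted respondents stand in for the whole arm.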

B.4 Tests of Procedures

In designing the 72-month follow-up survey, the research team included items used successfully in previous waves (15-month or 36-month follow-up surveys) or in other national surveys. Consequently, many of the survey questions have been thoroughly tested on large samples.

To ensure the length of the instrument is within the burden estimate, the research team pretested the instrument with fewer than 10 people and edited it to keep burden to a minimum. During internal pretesting, all instruments were closely examined, and questions deemed unnecessary were eliminated to reduce respondent burden.

B.5 Individuals Consulted on Statistical Aspects of the Design

The individuals listed in Exhibit B-4 below contributed to the design of the evaluation and assisted ACF in its statistical design.

Exhibit B-4: Individuals Consulted

| Name | Role in Study |
| Larry Buron, Abt Associates Inc. | Project Director, 36-month and 72-month follow-up studies |
| Laura R. Peck, Abt Associates Inc. | Co-PI on HPOG-Impact 15-month study; 36- and 72-month follow-up studies |
| Alan Werner, Abt Associates Inc. | Co-PI on HPOG-Impact 15-month study; 36- and 72-month follow-up studies |
| David Fein, Abt Associates Inc. | Principal Investigator on PACE 15-month study and Co-PI of 36- and 72-month follow-up studies |
| David Judkins, Abt Associates Inc. | Sampling Statistician |

Inquiries regarding the statistical aspects of the study’s planned analysis should be directed to:

Larry Buron, Project Director

Laura Peck, Co-Principal Investigator

Alan Werner, Co-Principal Investigator

Nicole Constance, Federal Contracting Officer’s Representative (COR), Administration for Children and Families, HHS

References

Deke, J. and Chiang, H. (2016). The WWC attrition standard: Sensitivity to assumptions and opportunities for refining and adapting to new contexts. Evaluation Review. [epub ahead of print]

Groves, R. (2006). Nonresponse rates and nonresponse bias in household surveys. Public Opinion Quarterly, 70, 646-675.

Harvill, Eleanor L., Shawn Moulton, and Laura R. Peck. (2015). Health Profession Opportunity Grants Impact Study Technical Supplement to the Evaluation Design Report: Impact Analysis Plan. OPRE Report No. 2015-80. Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Harvill, Eleanor L., Shawn Moulton, and Laura R. Peck. (2017). Health Profession Opportunity Grants (HPOG) Impact Study: Amendment to the Technical Supplement to the Evaluation Design Report. OPRE Report #2016-07. Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Judkins, D., Morganstein, D., Zador, P., Piesse, A., Barrett, B., Mukhopadhyay, P. (2007). Variable Selection and Raking in Propensity Scoring. Statistics in Medicine, 26, 1022-1033.

Little, T.D., Jorgensen, M.S., Lang, K.M., and Moore, W. (2014). On the joys of missing data. Journal of Pediatric Psychology, 39(2), 151-162.

Lynn, P. (ed.) (2009). Methodology of Longitudinal Surveys. Chichester: John Wiley & Sons.

Peck, Laura R., Alan Werner, Alyssa Rulf Fountain, Jennifer Lewis Buell, Stephen H. Bell, Eleanor Harvill, Hiren Nisar, David Judkins and Gretchen Locke. (2014). Health Profession Opportunity Grants Impact Study Design Report. OPRE Report #2014-62. Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

1 Participants assigned to the HPOG enhanced group receive regular HPOG program services and, depending on the program, one of the following enhancements: emergency assistance, non-cash incentives, or facilitated peer support.

2 See Chapter 12 of Lynn (2009), in particular, section 12.5 that reviews the effects of incentives in several prominent longitudinal studies.
