CMS-10769_Sup_Statement_Part_B 6-7-21

Evaluation of the CMS Network of Quality Improvement and Innovation Contractors Program (NQIIC) (CMS-10769)

OMB: 0938-1424

Supporting Statement – Part B

Collection of Information Employing Statistical Methods

The Independent Evaluation Contractor (IEC) will recruit two distinct healthcare groups for participation in the surveys: nursing home administrators and hospital administrators. The target samples for both surveys will be divided into two groups: 1) administrators of facilities that are supported by the Centers for Medicare & Medicaid Services (CMS) Network of Quality Improvement and Innovation Contractors (NQIIC) Program, and 2) administrators of facilities with no or low participation in these programs. Nursing homes and hospitals are served by different types of contractors under the NQIIC Program: nursing homes are supported by Quality Innovation Network-Quality Improvement Organizations (QIN-QIOs) and hospitals are supported by Hospital Quality Improvement Contractors (HQICs).

The sample universe will be the nursing home and hospital administrators who are most familiar with the quality improvement initiatives of their organizations and who represent organizations that are qualified to participate in the QIN-QIO and HQIC programs being evaluated. Currently, IEC estimates that 9,213 nursing homes and 2,645 hospitals qualify to participate in these programs. IEC maintains a list of all qualifying and enrolled nursing homes and hospitals. Prior to sampling, IEC will remove any closed facilities from its lists so that all nursing homes and hospitals in the sample are active billing facilities.

Among the qualifying nursing homes and hospitals, some are not enrolled in an NQIIC program. For nursing homes, this target subpopulation is small (84 nursing homes, less than 1% of qualifying nursing homes), so it will be combined with nursing homes that are enrolled in the program but have low or no participation. Low participation in the NQIIC program will be determined using participation data updated at the time the final sample is drawn.1 The contractors continuously update the participation data for each nursing home enrolled by a QIN-QIO in a dataset housed in CMS’ host environment. These data describe each encounter between the QIN-QIO and the nursing home, including the time spent (in hours). Additional participation information will come from records of nursing home management and staff who completed a series of CMS-hosted online training modules, promoted by QIN-QIOs, on preventing and containing COVID-19 infections. For the hospital sample, 673 hospitals are eligible for the program but not enrolled, which is sufficient to meet the needs of the sample design. No data are currently available to gauge the participation level of enrolled hospitals, although the survey questionnaire measures level of engagement. Therefore, the sample that would otherwise be allocated to a low-participation hospital group is combined with the moderate-to-high participation group.
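A minimal sketch of how a sampling frame could map qualifying nursing homes to the two Table 1a strata. The rule is an assumption for illustration only: the text does not say how encounter hours and training completion are combined, and footnote 1 gives only a 1-2 hour range, so the 2-hour cut-off, the field names, and the `assign_stratum` helper are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical cut-off. Footnote 1 cites 1-2 hours of encounters before any
# benefit is expected; 2.0 is an illustrative choice, not a documented rule.
LOW_PARTICIPATION_HOURS = 2.0


@dataclass
class NursingHome:
    facility_id: str                     # hypothetical identifier field
    enrolled: bool                       # enrolled with a QIN-QIO
    encounter_hours: List[float] = field(default_factory=list)  # hours per logged encounter
    completed_training: bool = False     # staff finished the COVID-19 online modules


def assign_stratum(home: NursingHome, threshold: float = LOW_PARTICIPATION_HOURS) -> str:
    """Return the Table 1a stratum for one qualifying nursing home (hypothetical rule)."""
    if not home.enrolled:
        # Non-enrolled homes (<1% of the frame) are folded into the low stratum.
        return "low_or_not_enrolled"
    if sum(home.encounter_hours) < threshold and not home.completed_training:
        return "low_or_not_enrolled"
    return "moderate_or_high"


frame = [
    NursingHome("A", enrolled=False),
    NursingHome("B", enrolled=True, encounter_hours=[0.5]),
    NursingHome("C", enrolled=True, encounter_hours=[0.5], completed_training=True),
    NursingHome("D", enrolled=True, encounter_hours=[1.5, 3.0]),
]
for home in frame:
    print(home.facility_id, assign_stratum(home))
```

Treating any training completion as enough to leave the low stratum is only one possible design choice; the participation data could equally be combined by converting training into hours or by requiring both signals.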

For the nursing home survey, IEC will collect 250 completed surveys from administrators of facilities actively participating in the QIN-QIO programs. An additional target of 250 completed surveys will be allocated to nursing homes that are enrolled but have low participation, or that qualify but did not enroll. For hospitals, IEC will seek a target of 375 completes among hospitals that are enrolled (regardless of participation level). An additional target of 125 completed surveys will be allocated to hospitals that qualify but are not enrolled.

Table 1a: Summary of Sample Targets for Nursing Homes by Strata

| Strata | Nursing Homes | Description |
|---|---|---|
| Enrolled, Moderate or High Level of Participation | 250 | Enrolled facilities demonstrating moderate or high participation based on encounter and training data |
| Enrolled Low Participation & Not Enrolled | 250 | Enrolled but demonstrating low participation, or qualifying but not enrolled |
| Total | 500 | |

Table 1b: Summary of Sample Targets for Hospitals by Strata

| Strata | Hospitals | Description |
|---|---|---|
| Enrolled, Low, Average or High Level of Participation | 375 | Enrolled facilities at any level of participation (low, average, or high) |
| Not Enrolled | 125 | Qualifying, but not enrolled |
| Total | 500 | |

IEC expects a response rate of 40% among the nursing home administrators asked to participate, a rate limited by the difficulty of scheduling and conducting telephone interviews during normal working hours. This anticipated rate is based on previous surveys of this population conducted by IEC team members. For hospital administrators, IEC anticipates a somewhat lower response rate (35%) because of incomplete contact information, administrators’ broader responsibilities, and fewer available staff at the hospitals eligible for the program. Response rates may vary by stratum, particularly in the not-enrolled strata of both surveys. We expect these administrators to be difficult to recruit, because difficulty contacting them is a primary barrier that the QIN-QIOs and HQICs cite in recruiting these organizations.
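As a rough illustration of what these rates imply for fieldwork, the sketch below divides each stratum’s target completes by the expected survey-level response rate to get the number of facilities that would need to be released. Applying one rate to every stratum is a simplifying assumption (the text expects lower rates in the not-enrolled strata), and the stratum keys are hypothetical labels.

```python
# Target completes per stratum (Tables 1a and 1b) and expected response rates
# from the text, in whole percent so the arithmetic stays exact.
TARGETS = {
    ("nursing_home", "moderate_or_high"): 250,
    ("nursing_home", "low_or_not_enrolled"): 250,
    ("hospital", "enrolled"): 375,
    ("hospital", "not_enrolled"): 125,
}
RESPONSE_RATE_PCT = {"nursing_home": 40, "hospital": 35}


def records_to_release(target: int, rate_pct: int) -> int:
    """Smallest sample size whose expected completes (n * rate) reach the target."""
    # Integer ceiling of target / (rate_pct / 100), avoiding float rounding.
    return -(-target * 100 // rate_pct)


releases = {
    key: records_to_release(target, RESPONSE_RATE_PCT[key[0]])
    for key, target in TARGETS.items()
}
for (survey, stratum), n in releases.items():
    print(f"{survey:13} {stratum:20} {n}")
```

Under these assumptions the hospital not-enrolled stratum would need 358 releases, well within the 673 facilities available; lower-than-expected response in that stratum would consume more of that margin.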

IEC will draw a stratified random sample for each survey (see Table 1a and Table 1b above for targets).

Within each stratum, IEC will use systematic random selection to ensure that the sample is representative of the population. This selection process can incorporate several balancing variables, including the QIN-QIO or HQIC serving the facility, facility performance (star rating), facility characteristics (e.g., hospital setting [urban/rural] and size), and program data (e.g., level of participation and qualification category). Before drawing the sample, IEC will conduct analyses to determine which balancing variables to use for each survey, the most relevant categories (and number of categories) for each variable while limiting small cells, and the optimal sort order. This methodology will produce samples that are more representative across the categories of the balancing variables.
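Systematic selection from a frame sorted on the balancing variables (often called implicit stratification) is one standard way to implement this. The sketch below assumes a frame held as a list of dictionaries; the function name, the field names (`hqic`, `rural`, `stars`), and the fractional-interval variant are illustrative choices, not IEC’s documented procedure.

```python
import random
from typing import Dict, List, Sequence


def systematic_sample(frame: List[Dict], n: int, sort_keys: Sequence[str],
                      rng: random.Random) -> List[Dict]:
    """Select n records with a random start and a fixed (fractional) skip interval.

    Sorting the frame on the balancing variables first spreads the selections
    across their categories roughly in proportion to the frame.
    """
    if not 0 < n <= len(frame):
        raise ValueError("n must be between 1 and the frame size")
    ordered = sorted(frame, key=lambda rec: tuple(rec[k] for k in sort_keys))
    interval = len(ordered) / n              # skip interval k = N / n (>= 1)
    start = rng.random() * interval          # random start in [0, k)
    last = len(ordered) - 1                  # guard against float round-up
    return [ordered[min(int(start + i * interval), last)] for i in range(n)]


# Hypothetical stratum of 20 hospitals, 10 served by each of two HQICs.
frame = [
    {"id": i, "hqic": "H1" if i < 10 else "H2", "rural": i % 2 == 0, "stars": 1 + i % 5}
    for i in range(20)
]
picked = systematic_sample(frame, 4, ["hqic", "rural", "stars"], random.Random(2021))
print([rec["hqic"] for rec in picked])  # ['H1', 'H1', 'H2', 'H2']
```

Because the interval here is 5 and the frame is sorted by HQIC first, every possible random start yields exactly two hospitals per HQIC. Sorting in a serpentine order (alternating ascending and descending within nested variables) is a common refinement that avoids abrupt changes at group boundaries; the text does not say whether IEC uses it.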

Estimation Procedure

IEC’s evaluation plan outlines the analytic method for each task’s evaluation questions, which address administrators’ opinions about the QIN-QIO and HQIC programs and the perceived importance of the programs to their facilities’ quality improvement efforts. The analysis will begin with descriptive statistics, including percentages and means, overall and across subgroups. IEC will then apply appropriate statistical tests, including t-tests, chi-square tests, and analysis of variance (ANOVA).
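As an example of the chi-square tests mentioned above, the sketch below compares the share of administrators giving a particular answer in two strata with a Pearson chi-square test on a 2x2 table, using only the standard library. The counts are made up for illustration. In practice a statistics package would normally be used (for example, `scipy.stats.chi2_contingency`, which applies Yates’ continuity correction to 2x2 tables by default), and weighted survey data would call for design-based variants of these tests.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square test of independence for the 2x2 table

        group 1: a (yes)  b (no)
        group 2: c (yes)  d (no)

    without continuity correction. Returns (statistic, p_value), 1 df.
    """
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    n = row1 + row2
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom the statistic is a squared standard normal,
    # so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical: 150 of 250 moderate/high-participation administrators agree
# with an item, versus 100 of 250 in the low-participation/not-enrolled stratum.
stat, p = chi_square_2x2(150, 100, 100, 150)
print(f"chi-square = {stat:.1f}, p = {p:.1e}")
```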

Degree of accuracy needed for the purpose described in the justification

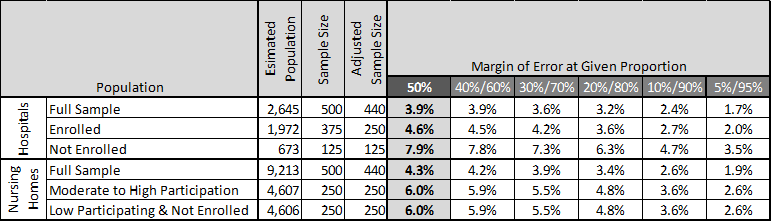

The margin of error (MOE) at the 95% confidence level for each survey combined across strata (500 completes) is slightly larger than that of a simple random sample of 500 (+/-4.4%) because of the disproportionate sampling. While the degree of disproportionality is currently unknown, a rough estimate yields an effective sample size of 440, which would produce an MOE of +/-4.7%. Applying a finite population correction, the MOE decreases to +/-4.6% for nursing homes and +/-4.3% for hospitals.
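For reference, the figures in this paragraph are consistent with the standard normal-approximation MOE for a proportion at p = 0.5, with the finite population correction applied to the effective sample size (the unadjusted MOE simply drops the second square root). The text does not say how the effective sample size of 440 was estimated; Kish’s approximation from the weights w_i is shown only as one common way to relate n and n_eff, not as IEC’s method.

```latex
\[
\mathrm{MOE} = z_{0.975}\,\sqrt{\frac{p(1-p)}{n_{\mathrm{eff}}}}\;\sqrt{\frac{N - n_{\mathrm{eff}}}{N - 1}},
\qquad z_{0.975} = 1.96,\quad p = 0.5
\]
\[
n_{\mathrm{eff}} = \frac{n}{\mathrm{deff}},
\qquad
\mathrm{deff}_{\mathrm{Kish}} = \frac{n \sum_{i=1}^{n} w_i^{2}}{\left(\sum_{i=1}^{n} w_i\right)^{2}}
\]
\[
n = 500,\; n_{\mathrm{eff}} = 440 \;\Rightarrow\; \mathrm{deff} = \tfrac{500}{440} \approx 1.14,
\qquad
1.96\sqrt{\tfrac{0.25}{440}} \approx 0.0467,
\qquad
0.0467\sqrt{\tfrac{9213 - 440}{9212}} \approx 0.0456
\]
```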

Within each stratum with 250 target completes, the sample will be proportionate, yielding an MOE of +/-6.2%. Most strata will require only a small finite population adjustment. The hospital non-enrolled stratum (125 completes) has the smallest target population. For this stratum, the MOE is +/-7.9% after adjusting for the relatively small population (673 hospitals), compared with an unadjusted MOE of +/-8.8%.
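The MOEs quoted in the two paragraphs above can be reproduced with the usual normal-approximation formula at p = 0.5, applying the finite population correction to the effective sample size. The helper names are illustrative, and the effective sample size of 440 is the text’s rough estimate, not something the code derives.

```python
import math
from typing import Optional

Z_95 = 1.96  # two-sided 95% critical value of the standard normal


def moe(n: float, population: Optional[int] = None, p: float = 0.5) -> float:
    """Margin of error for an estimated proportion p based on n completes.

    If a population size N is given, the finite population correction
    sqrt((N - n) / (N - 1)) is applied.
    """
    se = math.sqrt(p * (1 - p) / n)
    if population is not None:
        se *= math.sqrt((population - n) / (population - 1))
    return Z_95 * se


def pct(x: float) -> float:
    """Proportion -> percentage rounded to one decimal place."""
    return round(100 * x, 1)


cases = [
    ("SRS of 500 completes", moe(500)),                           # 4.4
    ("effective n = 440", moe(440)),                              # 4.7
    ("n_eff = 440, nursing homes (N = 9,213)", moe(440, 9213)),   # 4.6
    ("n_eff = 440, hospitals (N = 2,645)", moe(440, 2645)),       # 4.3
    ("250-complete stratum", moe(250)),                           # 6.2
    ("hospital not enrolled, unadjusted", moe(125)),              # 8.8
    ("hospital not enrolled (N = 673)", moe(125, 673)),           # 7.9
]
for label, value in cases:
    print(f"{label:40} +/-{pct(value)}%")
```

Passing a smaller `p` (for example 0.1) shows why MOEs shrink for estimates away from 50%, which is why the 50% case gives the maximum margin of error.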

Table 2 shows the planned MOEs for different levels of estimates under these sample plans, for the total sample of 500 and for the subsamples of participating and non-participating facilities. The MOEs are corrected for their finite populations. MOEs are largest for an estimate of 50%, where the maximum margin of error is ±4.90%. The MOEs do not account for any design effect resulting from the balancing methodology or from any weights IEC may apply to correct for differential unit non-response.

Table 2: Sample Size, Estimated Effective Sample Size, and Margin of Error (MOE)*

* Use of the balancing methodology in the sample (described above) will result in slightly lower MOEs to the extent that the balancing variables are predictors of survey responses.

Unusual problems requiring specialized sampling procedures.

IEC does not foresee any unusual problems that require specialized sampling procedures.

Any use of periodic (less frequent than annual) data collection cycles to reduce burden.

In this round of data collection, the nursing home administrator and hospital administrator surveys are intended to measure attitudes at a single point in time. The surveys will collect cross-sectional data, so IEC will not request information from the same participants more than once.

Pre-survey notification letters that provide more information about a study increase respondents’ confidence in its validity and importance, resulting in higher response rates.2 IEC will use a pre-survey notification letter to introduce and explain this data collection effort. The content of the pre-notification letters is provided in Appendix C.1 (nursing homes) and Appendix C.3 (hospitals).

Based on IEC’s experience conducting surveys among health care professionals, leaving a voicemail message on the first and second call attempts improves response rates, but leaving additional messages does not. IEC’s interviewers will leave one voicemail message for any respondent whose call reaches an answering machine, providing a toll-free number the respondent can call back to complete the survey. IEC will also send an email with similar text inviting respondents to reply to a secure email address. The content of these messages is provided in Appendix C.2 (nursing homes) and Appendix C.4 (hospitals).

If a respondent is unable to complete the survey at the time of the initial call, the interviewer will arrange the best date and time for a second attempt and record this information in the call record. Interviewers will not contact the respondent again until the specified date and time.

Interviewers will accommodate respondents who need to stop before completing the survey. They will schedule a callback for a date and time of the respondent’s choosing and code the record as “incomplete.” When the callback time arrives, the interviewer will resume from the last completed item so that the respondent can finish the survey.

Interviewers will make up to six attempts for each telephone number before removing that number from the sample.

Because CMS is using widely accepted data collection techniques and devoting substantial resources to minimizing non-response, we expect the response rates for these surveys to be comparable to or better than those achieved in other healthcare administrator surveys previously conducted by IEC team members. IEC has also conducted numerous surveys on a variety of topics that achieved response rates comparable to, or exceeding, those estimated here.

If a respondent refuses to complete the survey, the refusal will be classified as either a hard refusal or a soft refusal. Hard refusals are cases in which respondents adamantly state that they do not wish to be called again. Records coded with this disposition do not reappear in interviewers’ call queues for the remainder of the project.

Soft refusals include cases in which respondents simply hang up on an interviewer or decline the initial contact less adamantly. These cases remain “active” but are placed on hold and recontacted as needed when the viable sample dwindles.
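The contact rules in the preceding paragraphs amount to a small state machine for each telephone record. The sketch below encodes them under stated assumptions: every dial counts toward the six-attempt limit, soft refusals stay active but are skipped until holds are released, and the class, field, and outcome names are hypothetical rather than taken from any actual call-management system.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum
from typing import Optional

MAX_ATTEMPTS = 6  # attempts per telephone number before it leaves the sample


class Outcome(Enum):
    NO_ANSWER = "no answer"
    ANSWERING_MACHINE = "answering machine"
    CALLBACK = "callback scheduled"   # also used when a partial survey is coded "incomplete"
    SOFT_REFUSAL = "soft refusal"
    HARD_REFUSAL = "hard refusal"
    COMPLETE = "complete"


@dataclass
class CallRecord:
    phone: str
    attempts: int = 0
    voicemail_left: bool = False
    callback_at: Optional[datetime] = None
    on_hold: bool = False             # soft refusal: active, but held back
    final: Optional[str] = None       # final disposition, if any


def log_attempt(rec: CallRecord, outcome: Outcome,
                callback_at: Optional[datetime] = None) -> None:
    """Update a record after one call attempt."""
    rec.attempts += 1
    if outcome is Outcome.COMPLETE:
        rec.final = "complete"
    elif outcome is Outcome.HARD_REFUSAL:
        rec.final = "hard refusal"    # never returns to a call queue
    elif outcome is Outcome.SOFT_REFUSAL:
        rec.on_hold = True
    elif outcome is Outcome.CALLBACK:
        rec.callback_at = callback_at
    elif outcome is Outcome.ANSWERING_MACHINE and not rec.voicemail_left:
        rec.voicemail_left = True     # one message with the toll-free number
    if rec.final is None and rec.attempts >= MAX_ATTEMPTS:
        rec.final = "max attempts"


def in_queue(rec: CallRecord, now: datetime, release_holds: bool = False) -> bool:
    """Should this record be offered to an interviewer at time `now`?"""
    if rec.final is not None:
        return False
    if rec.on_hold and not release_holds:
        return False                  # released only as the viable sample dwindles
    if rec.callback_at is not None and now < rec.callback_at:
        return False                  # wait for the respondent's chosen time
    return True


rec = CallRecord("555-0100")
log_attempt(rec, Outcome.CALLBACK, callback_at=datetime(2021, 9, 1, 14, 0))
print(in_queue(rec, datetime(2021, 9, 1, 9, 0)))   # False: before the callback
print(in_queue(rec, datetime(2021, 9, 1, 14, 0)))  # True
```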

IEC will conduct a thorough non-response analysis when data collection is complete. This analysis will examine whether systematic patterns of non-response lead to the under- or over-representation of particular subpopulations. If such distortions are detected, IEC will apply non-response weights sparingly, correcting the distortions while limiting the design effect introduced by weighting.
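One common way to build such weights is a weighting-class adjustment: within each class (for example, a stratum crossed with a balancing variable), respondents’ base weights are inflated so that they also carry the weight of the nonrespondents in the same class. The sketch below also computes Kish’s approximate design effect, one way to monitor the precision cost of the weights. The class definitions, field names, and counts are hypothetical; the text does not specify which adjustment IEC will use.

```python
from collections import defaultdict
from typing import Dict, List


def nonresponse_adjust(cases: List[Dict]) -> List[float]:
    """Return adjusted weights for respondents, in input order.

    Within each weighting class, factor = (sum of base weights of all
    sampled cases) / (sum of base weights of respondents). Classes with no
    respondents must be collapsed with a neighbor before calling this.
    """
    sampled = defaultdict(float)
    responded = defaultdict(float)
    for c in cases:
        sampled[c["cell"]] += c["base_weight"]
        if c["responded"]:
            responded[c["cell"]] += c["base_weight"]
    return [
        c["base_weight"] * sampled[c["cell"]] / responded[c["cell"]]
        for c in cases
        if c["responded"]
    ]


def kish_deff(weights: List[float]) -> float:
    """Kish's design effect from unequal weights: n * sum(w^2) / (sum(w))^2."""
    return len(weights) * sum(w * w for w in weights) / sum(weights) ** 2


# Hypothetical: 10 rural and 10 urban facilities sampled with base weight 1;
# 3 rural and 6 urban administrators respond.
cases = (
    [{"cell": "rural", "base_weight": 1.0, "responded": i < 3} for i in range(10)]
    + [{"cell": "urban", "base_weight": 1.0, "responded": i < 6} for i in range(10)]
)
weights = nonresponse_adjust(cases)
deff = kish_deff(weights)
print(f"sum of weights = {sum(weights):.1f}, deff = {deff:.3f}, "
      f"effective n = {len(weights) / deff:.1f}")
```

A design effect near 1 means the weights cost little precision; collapsing sparse classes or trimming extreme weights are common ways to keep it low when weights are applied sparingly.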

Generalizing to the Universe Studied

Because IEC is drawing a stratified random sample, we expect the information collected to yield reliable data that can be generalized to the universe studied.
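Generalizing from a stratified sample means weighting each stratum by its share of the population. The sketch below uses the hospital strata described earlier (673 qualifying but not enrolled, so 2,645 - 673 = 1,972 enrolled) with made-up stratum proportions, and the textbook stratified variance with a finite population correction (Cochran’s form). It treats completes as a simple random sample within each stratum and ignores non-response adjustment, so it is an illustration rather than IEC’s estimator.

```python
import math
from typing import Dict, Tuple


def stratified_proportion(strata: Dict[str, Tuple[int, int, float]]) -> Tuple[float, float]:
    """strata maps name -> (N_h, n_h, p_h): population size, completes, sample proportion.

    Returns (estimate, standard error), where the estimate is sum(W_h * p_h),
    W_h = N_h / N, and the variance is
    sum(W_h^2 * (1 - n_h / N_h) * p_h * (1 - p_h) / (n_h - 1)).
    """
    total = sum(N_h for N_h, _, _ in strata.values())
    estimate = 0.0
    variance = 0.0
    for N_h, n_h, p_h in strata.values():
        w_h = N_h / total
        estimate += w_h * p_h
        variance += w_h ** 2 * (1 - n_h / N_h) * p_h * (1 - p_h) / (n_h - 1)
    return estimate, math.sqrt(variance)


# Hospital strata from the text; the proportions 0.60 and 0.40 are hypothetical.
hospitals = {
    "enrolled": (2645 - 673, 375, 0.60),
    "not_enrolled": (673, 125, 0.40),
}
est, se = stratified_proportion(hospitals)
print(f"estimate = {est:.3f}, 95% MOE = +/-{1.96 * se:.3f}")
```

Because the hospital allocation (375/125) nearly matches the population split (about 75%/25%), the weighted estimate (0.549) is close to the unweighted one (0.550) here; the nursing home allocation of 250/250 may depart further from proportional, since the text does not give those strata’s population sizes.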

As part of developing the survey instruments, IEC has already conducted internal beta-testing to assess the hour burden per respondent and to ensure the questions and responses are readily understandable and skip patterns are logical.

Additionally, before full-scale implementation, we will conduct pre-testing of the surveys. Respondents for pre-testing will be contacted and, once they agree to help CMS refine the survey, they will be given an opportunity to schedule a telephone interview. Once the interviews are completed, we will solicit these respondents’ feedback about possible improvements that can be made to the survey and the survey administration process.

This pre-testing will enable IEC to assess and correct any ambiguities or shortcomings in the survey questions and instructions. We do not anticipate that this process will result in substantive changes affecting the survey content or length.

Table 3 provides the names and affiliations of those consulted on the statistical aspects of the survey design and of those who will collect or analyze the information.

Table 3: Individuals Consulted on Statistical Aspects and Performing Data Collection & Analysis

| Name | Affiliation |
|---|---|
| Ping Yu, PhD | Booz Allen Hamilton |
| Sandy Lesikar, PhD | Booz Allen Hamilton |
| Kathryn Schulke, BSN | Booz Allen Hamilton |
| Elyse Levine, PhD | Booz Allen Hamilton |
| Stephen Tregear, PhD | Booz Allen Hamilton |
| Kevin Shang | Booz Allen Hamilton |
| Xiaoying Xiong | Booz Allen Hamilton |
| Jia Zhao, PhD | Booz Allen Hamilton |
| Mark Andrews, MA | Booz Allen Hamilton |
| Stephanie Fahy, PhD | Booz Allen Hamilton |
| Allen Dobson, PhD | Dobson DaVanzo & Associates, LLC |
| Joan DaVanzo, PhD | Dobson DaVanzo & Associates, LLC |
| Jeffrey C. Henne | The Henne Group |
| Sergio Garcia | The Henne Group |
| Catherine Shinners | The Henne Group |
| Nyree Young | The Henne Group |
| Stephen Schwartz | The Henne Group |

Table 4 shows the names of the CMS staff members who advised on the survey design.

Table 4: CMS Staff Members Who Advised on Survey Design

| Name | Affiliation |
|---|---|
| Nancy Sonnenfeld, PhD | Center for Clinical Standards and Quality |
| Kurt Herzer, MD, PhD | Center for Clinical Standards and Quality |
| Geoffrey Berryman | Center for Clinical Standards and Quality |
| Elizabeth Flow-Delwiche, PhD | Center for Clinical Standards and Quality |
| Ian Craig | Center for Clinical Standards and Quality |

1 Program administrators have indicated that, programmatically, the low-participation threshold should be 1-2 hours of encounters, because they would not expect any benefit from the program to be realized before then; the initial encounters are largely taken up by administrative tasks and needs assessments.

2 Dykema J, Stevenson J, Day B, Sellers SL, Bonham VL. Effects of incentives and prenotification on response rates and costs in a national web survey of physicians. Eval Health Prof. 2011;34(4):434-447. doi:10.1177/0163278711406113

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | IEC OMB Supporting Statement B |

| Subject | Sample, statistical methods, and analysis for nursing home survey for the Information Collection Request for the CMS Independent |

| Author | CMS |

| File Modified | 0000-00-00 |

| File Created | 2021-07-07 |
