MTSS-R OMB Part A 12.20.2021-Clean


Impact Evaluation of Training in Multi-Tiered Systems of Support for Reading in Early Elementary School

OMB: 1850-0953

December 2021


OMB Clearance Request: Supporting Statements, Part A

Data Collection

www.air.org Copyright © 2021 American Institutes for Research. All rights reserved.

Contents

Part A. Supporting Statement for Paperwork Reduction Act Submission

1. Circumstances Making Collection of Information Necessary

2. Purpose and Use of the Data

3. Use of Technology to Reduce Burden

4. Efforts to Avoid Duplication

5. Methods to Minimize Burden on Small Entities

6. Consequences of Less Frequent Data Collection

7. Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

8. Federal Register Comments and People Consulted Outside the Agency

9. Payment or Gifts to Respondents

10. Assurances of Confidentiality Provided to Respondents

11. Justification of Sensitive Questions

12. Estimates of Annualized Burden Hours and Costs

13. Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

14. Annualized Cost to the Federal Government

15. Explanation for Program Changes or Adjustments

16. Plans for tabulation and publication of results

17. Approval to Not Display OMB Expiration Date

18. Explanation of Exceptions to the Paperwork Reduction Act

Exhibits

Exhibit 1. Four Components of the Comprehensive MTSS-R Model

Exhibit 2. Student Cohorts Included in the MTSS-R Evaluation

Exhibit 3. Questions the MTSS-R Study Will Address

Exhibit 4. Timeline of Data Collection Activities

Exhibit 5. Technical Working Group Members

Exhibit 6. Estimated Annual Burden and Costs for Data Collection in the Current Request

Part A. Supporting Statement for Paperwork Reduction Act Submission

This package requests clearance from the Office of Management and Budget (OMB) to conduct initial data collection activities for the Impact Evaluation of Training in Multi-Tiered Systems of Support for Reading in Early Elementary School (the MTSS-R Study). The Institute of Education Sciences (IES), within the U.S. Department of Education (ED), awarded the MTSS-R Study contract to the American Institutes for Research (AIR) and its partners, Instructional Research Group (IRG) and School Readiness Consulting (SRC), in September 2018. The purpose of this evaluation is to provide information for policy makers, administrators, and educators on the effectiveness of two MTSS-R approaches in improving classroom reading instruction and students’ reading skills. In addition, the evaluation will examine implementation challenges and costs of the two MTSS-R approaches.

This package describes all evaluation activities in detail but requests clearance only for the teacher, reading interventionist, and MTSS-R team leader surveys; the MTSS-R team leader interviews; and the Tier I and II post-observation interviews, which will take place from the spring of SY2021–22 through SY2023–24. A previous package, submitted in June 2020 and cleared in December 2020 (OMB Control Number: 1850-0953), requested clearance for the data collection activities that occur in the fall of 2021: parent consent forms for student participation in data collection activities, district records requests to identify students in the sample, and district cost interviews.

Justification

Circumstances Making Collection of Information Necessary

Statement on the need for an evaluation of MTSS-R

Young children must acquire critical foundational reading skills (Fiester, 2010; Foorman et al., 2016) to succeed academically. Students who are not fluent readers by third grade often fall behind their peers and are more likely to drop out of high school (Hernandez, 2011). Alarmingly, nearly one third of all students and more than two thirds of students with disabilities do not reach reading proficiency by Grade 4 (National Assessment of Educational Progress, 2017). Although educators recognize the importance of helping young children learn to read, they may have limited resources or limited knowledge of how best to facilitate this learning.

Multitiered systems of support for reading (MTSS-R) have emerged as a promising solution. Federal policies—including the Individuals with Disabilities Education Act of 1990, the No Child Left Behind Act of 2002, and the Every Student Succeeds Act of 2015—have led to widespread adoption of MTSS-R in the early grades (e.g., Denton, Fletcher, Taylor, & Vaughn, 2014; Vadasy, Sanders, & Tudor, 2007).

Despite the widespread popularity of MTSS-R and policies encouraging its adoption, schools often struggle to implement MTSS-R, and a comprehensive MTSS-R model has not been rigorously evaluated on a large scale.1 An evaluation is thus warranted to determine whether rigorous, well-implemented MTSS-R approaches improve students’ general and foundational reading skills.

The goal of the MTSS-R Study is to rigorously evaluate two approaches to MTSS-R. The evaluation will describe the extent to which the MTSS-R approaches have an impact on classroom teachers’ and interventionists’ instructional practices and students’ reading outcomes. The evaluation also will examine classroom teachers’ and interventionists’ experiences with MTSS-R training and supports, their implementation of MTSS-R (e.g., fidelity of implementation and challenges to implementation), and the cost of the MTSS-R approaches.

The data collection described in this package is necessary because school districts and schools do not systematically collect student reading achievement data for Grade 1 and Grade 2 students or reading instruction observation data for teachers and reading interventionists.

MTSS-R logic model and approaches

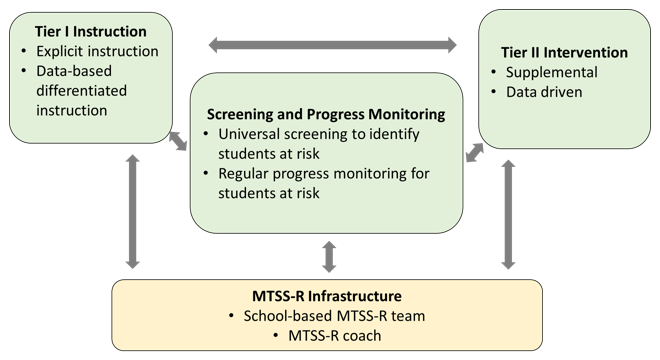

The MTSS-R model to be tested focuses on Grades 1 and 2 and includes four core components (see Exhibit 1): (a) Tier I instruction, (b) Tier II intervention, (c) screening and progress monitoring, and (d) MTSS-R infrastructure.2 We describe each of the four components of the MTSS-R model below.

Exhibit 1. Four Components of the Comprehensive MTSS-R Model

Explicit and Differentiated Tier I Instruction. In the MTSS-R model tested in this study, Tier I instruction includes an emphasis on teachers’ delivery of their core reading curriculum using explicit instruction and data-based differentiated instruction. To engage in explicit instruction, teachers offer supports or scaffolds (e.g., modeling, ongoing systematic review and feedback) that guide students through the learning process, starting with clear statements about the purpose and rationale for learning a new skill, clear explanations and demonstrations of the new skill, and supported practice with feedback until students reach independent mastery of the new skill. To engage in data-based differentiated instruction, teachers use a variety of data—such as assessment data, in-class work, homework, or notes from student observation—to tailor the content or delivery of instruction.

Tier II Intervention. Tier II intervention is supplemental instruction provided to a subset of students identified through screening and progress monitoring as needing additional support. Tier II emphasizes foundational reading/decoding skills and includes high-leverage instructional practices such as modeling, multiple opportunities to respond, and explicit feedback.

Screening and Progress Monitoring. Screening and progress monitoring tools guide student placement in Tier II reading intervention and movement between tiers. These brief, reliable, and valid student assessments measure foundational reading skills in Grades 1 and 2 (e.g., word identification and word and passage-reading fluency). Schools use a data system to support the systematic collection and analysis of these data. Within this study, schools will screen all students at least twice annually to determine if they need Tier II reading intervention. In addition, schools will collect progress-monitoring data on students receiving Tier II at least once every four weeks.

Multitiered Systems of Support for Reading Infrastructure. The MTSS-R model includes (a) a school-based MTSS-R team that meets regularly to lead and coordinate MTSS-R implementation, and (b) a district-based MTSS-R coach who will support school staff in implementing MTSS-R over three years. The MTSS-R teams, which typically include a wide range of staff (including administrators, reading specialists, classroom teachers, and interventionists), meet regularly to examine MTSS-R activities, support MTSS-R implementation, and oversee screening and progress monitoring. The MTSS-R coach will support each school in implementing MTSS-R with fidelity, support the MTSS-R and data/grade level teams, and support the Tier I and Tier II practices of teachers and interventionists.
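The screening and progress-monitoring expectations described for this model (screening all students at least twice a year, and progress monitoring of Tier II students at least once every four weeks) can be expressed as two simple checks. The Python sketch below is illustrative only: the function names and inputs are hypothetical, and "at least once every four weeks" is read here as "no interval longer than 28 days" between the start of Tier II, each progress-monitoring probe, and the end of the period examined.

```python
from datetime import date, timedelta

# Assumption: "at least once every four weeks" means no gap longer than 28 days.
MAX_GAP = timedelta(weeks=4)
MIN_SCREENINGS_PER_YEAR = 2  # "at least twice annually"


def screening_ok(screening_dates: list[date]) -> bool:
    """True if a student was screened on at least two distinct dates in the school year."""
    return len(set(screening_dates)) >= MIN_SCREENINGS_PER_YEAR


def monitoring_gaps(start: date, end: date, probe_dates: list[date]) -> list[tuple[date, date]]:
    """Return each interval longer than four weeks with no progress-monitoring probe.

    `start` and `end` bound the student's time in Tier II (hypothetical inputs);
    probes outside that window are ignored.
    """
    inside = sorted(d for d in probe_dates if start <= d <= end)
    checkpoints = [start] + inside + [end]
    return [(a, b) for a, b in zip(checkpoints, checkpoints[1:]) if b - a > MAX_GAP]
```

For example, a student who enters Tier II on January 10 and is probed on February 4, March 4, and April 15 would show one gap, from March 4 to April 15.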

Two Approaches Tested. Because this MTSS-R model can be operationalized in various ways, we plan to test two different approaches, each supported by a different training provider.

The first approach (Approach A) develops students’ foundational skills in Tier I by following principles of direct instruction (e.g., specific modeling of key reading skills and concepts with multiple opportunities to respond and immediate, clear feedback). This approach provides teachers with scripted lesson plans, including instructional routines mapped to teachers’ core reading program. The approach also treats the primary purpose of Tier II intervention as preteaching and reteaching Tier I content. Although prior research suggests Approach A is promising, those studies have been small-scale efficacy evaluations; this larger rigorous study will therefore provide important information for policy makers and practitioners about whether the approach is effective when implemented on a large scale.

The second approach (Approach B) develops students’ foundational skills in Tier I by increasing the use of explicit instructional strategies in the classroom (e.g., teachers offering supports or scaffolds that guide students through the learning process, starting with clear statements about the purpose and rationale for learning a new skill, clear explanations and demonstrations of the new skill, and supported practice with feedback until students reach independent mastery of the new skill). Approach B provides teachers with training on evidence-based reading instruction along with lesson plan templates teachers can use with their reading programs. In Tier II, the provider uses a separate, fully developed, commercially available evidence-based intervention program that is aligned to student needs and designed to develop foundational reading skills. Although Approach B is in common use, there is little rigorous research on its effectiveness as a whole; including Approach B in the planned study will therefore provide important information for policy makers and practitioners about the effectiveness of this prevalent approach.

Overview of the study design and research questions

To test the two approaches described above, we will randomly assign approximately 150 schools in approximately nine districts to one of three conditions: Approach A, Approach B, or a business-as-usual control group. Random assignment will take place within districts, and we will include additional blocking variables as needed to ensure baseline equivalence (e.g., percentage of students at risk for reading difficulties, school size). The study will collect outcome data over time, from Grades 1 through 5 (shown in Exhibit 2), for two cohorts of students. Cohort 1 students are those who enter first grade in SY2021–22; Cohort 2 students are those who enter first grade in SY2022–23.
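Random assignment within districts can be illustrated with a short sketch. The Python below assigns schools to the three conditions separately within each block (a district, optionally crossed with a hypothetical stratum such as a school-size category), dealing a shuffled list of schools across the conditions in turn so that condition counts within a block differ by at most one. The block definition, the rotation scheme, the dictionary keys, and the seed are assumptions for illustration; the study’s actual assignment procedure may differ.

```python
import random
from collections import defaultdict

CONDITIONS = ("Approach A", "Approach B", "Control")


def assign_within_blocks(schools: list[dict], seed: int = 2021) -> dict[str, str]:
    """Randomly assign schools to conditions separately within each block.

    Each school is a dict with hypothetical keys "id", "district", and optional "stratum".
    """
    rng = random.Random(seed)  # fixed seed makes the assignment reproducible
    blocks: dict[tuple, list[str]] = defaultdict(list)
    for school in schools:
        blocks[(school["district"], school.get("stratum"))].append(school["id"])

    assignment: dict[str, str] = {}
    for key in sorted(blocks, key=str):  # deterministic block order
        ids = blocks[key]
        rng.shuffle(ids)
        order = list(CONDITIONS)
        rng.shuffle(order)  # randomize which condition receives any leftover school
        for i, school_id in enumerate(ids):
            assignment[school_id] = order[i % len(order)]
    return assignment
```

Dealing schools in rotation after a shuffle keeps each district close to an even three-way split, which is one simple way to support baseline equivalence within blocks.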

Exhibit 2. Student Cohorts Included in the MTSS-R Evaluation

| Student Cohort | SY2021–22 | SY2022–23 | SY2023–24 | SY2024–25 | SY2025–26 | SY2026–27 |
|---|---|---|---|---|---|---|
| Cohort 1 | Grade 1 | Grade 2 | Grade 3 | Grade 4 | Grade 5 | |
| Cohort 2 | | Grade 1 | Grade 2 | Grade 3 | Grade 4 | Grade 5 |

The study will address the nine research questions (RQs) and their subquestions shown in Exhibit 3.

Exhibit 3. Questions the MTSS-R Study Will Address

| Research Questions About Impact | |
|---|---|
| RQ1 | What is the impact of each MTSS-R training and technical assistance (TA) strategy on reading instructional practices? |
| RQ2 | What is the impact of each MTSS-R training and TA strategy on student reading outcomes? |
| Research Questions About Implementation | |
| RQ3 | To what extent is the training and TA for the two MTSS-R strategies provided as intended in the treatment schools? |
| RQ4 | How does the training and TA for MTSS-R differ between the treatment and control schools? |
| RQ5 | To what extent are the components of the MTSS-R model implemented as intended in treatment schools? |
| RQ6 | How does implementation of the components of the MTSS-R model differ between the treatment and control schools? |
| Research Question About Costs | |
| RQ7 | What is the cost and cost-effectiveness of implementing each of the two MTSS-R strategies? |
| Supplemental Research Questions About Implementation | |
| RQ8 | What challenges are faced in the training and in implementing MTSS-R, and how are they addressed? |
| RQ9 | What are the characteristics of the screening tools used by districts, and to what extent do the screening tools identify struggling readers? |

Purpose and Use of the Data

Our proposed data collection activities will allow the study team to examine the impact of the two MTSS-R approaches on instructional practices and students’ reading skills and to provide comprehensive information describing the implementation fidelity, service contrast, cost, and cost-effectiveness of the two approaches. The purposes of the planned data collection activities are described below, and the timeline for data collection is shown in Exhibit 4. AIR and its partners (IRG and SRC) will collect all data. We have received OMB approval for the district cost interviews, parent consent forms, and district records requests. At this time, we are requesting approval for the teacher, reading interventionist, and MTSS-R team leader surveys; the MTSS-R team leader interviews; and the Tier I and II post-observation interviews.

Exhibit 4. Timeline of Data Collection Activities

| Data Collections | 2021–22 Fall | 2021–22 Spring | 2022–23 Fall | 2022–23 Spring | 2023–24 Fall | 2023–24 Spring | 2024–25 Fall | 2024–25 Spring | 2025–26 Fall | 2025–26 Spring | 2026–27 Fall | 2026–27 Spring |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Data collections that do not require clearance | | | | | | | | | | | | |
| Study-administered student tests | | | | | | | | | | | | |
| Tier I and II observations | | | | | | | | | | | | |
| Data collections under the prior clearance request | | | | | | | | | | | | |
| Parent consent forms | | | | | | | | | | | | |
| District cost interviews | | | | | | | | | | | | |
| District records requests | | | | | | | | | | | | |
| Data collections under the current clearance request | | | | | | | | | | | | |
| Teacher surveys | | | | | | | | | | | | |
| Reading interventionist surveys | | | | | | | | | | | | |
| MTSS-R team leader surveys | | | | | | | | | | | | |
| MTSS-R team leader interviews | | | | | | | | | | | | |
| Tier I and II post-observation interviews | | | | | | | | | | | | |

Data collections that do not require clearance

Study-administered student tests (RQ2). The study team will administer the Woodcock Reading Mastery Test to capture students’ reading skills in the fall of first grade (i.e., baseline) and in the spring of first and second grades. Spring student test data will be used as the main outcomes to address the impact of the two MTSS-R approaches on reading skills (RQ2), while the baseline student test will be used to identify which students are at risk for reading difficulties and as a covariate in the impact models.

Tier I and II observations (RQ1). The classroom observations will capture the explicitness of teachers’ instruction and teachers’ instructional intensity.
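For illustration only, one common way to use a baseline score as a covariate in a three-arm design randomized within districts is a two-level model with district intercepts. The specification below is an assumption, not the study’s stated impact model, and all symbols are introduced here.

$$
Y_{ijd} = \alpha_d + \beta_A A_{jd} + \beta_B B_{jd} + \gamma X_{ijd} + u_{jd} + \varepsilon_{ijd}
$$

Here $Y_{ijd}$ is the spring reading score of student $i$ in school $j$ in district $d$; $\alpha_d$ is a district (block) intercept; $A_{jd}$ and $B_{jd}$ equal 1 if school $j$ was assigned to Approach A or Approach B, respectively, so the business-as-usual control group is the reference category; $X_{ijd}$ is the student’s fall baseline score; $u_{jd}$ is a school-level random effect; and $\varepsilon_{ijd}$ is a student-level error. Under this specification, $\beta_A$ and $\beta_B$ are the impacts of each approach relative to the control condition.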

Data collections under the prior clearance request

Parent consent forms (RQ2). The study will obtain informed parent consent in all districts. Only students whose parents consent (in districts that require active parental consent) or whose parents do not opt them out (in districts that do not require active parental consent) will be included in the study-administered student tests.3 Two data collections obtain information about students: the study-administered student tests and the district records requests. Based on our conversations to date with districts that may participate, only two require active parental consent; the remaining districts have waived active parental consent. Parents in districts that require active consent will be asked to return a form indicating whether they allow or refuse testing of their child for the purpose of the study. Parents in districts that waive active parental consent will be informed about the study and study-related student testing and will have the opportunity to opt their child out of the testing.

District cost interviews (RQ7). The district cost interviews will capture the amount of time central office staff spend getting Approaches A and B up and running (e.g., time dedicated to identifying and hiring coaches and supporting implementation), as well as the additional resources each district devotes to implementation.

District records requests (RQs 1–7 and 9). The district records will be used to identify the sample teachers, reading interventionists, and students (e.g., through class rosters) and to capture teacher, reading interventionist, and student background characteristics. Screening and progress-monitoring data will be used to describe the sample and examine the characteristics of the screening tools. In addition, third-, fourth-, and fifth-grade achievement data will be used to examine the longer-term impacts of Approaches A and B on reading skills and on identification for special education. (See Appendix C for the extant data request form.)
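The inclusion rule for the study-administered tests described under parent consent forms depends on each district’s consent policy. The minimal Python sketch below expresses that rule; the enum, the function name, and the response values ("allow", "refuse", or no form returned) are hypothetical and chosen only for illustration.

```python
from enum import Enum
from typing import Optional


class ConsentPolicy(Enum):
    ACTIVE = "active"    # district requires a returned form granting permission
    PASSIVE = "passive"  # district waived active consent; parents may opt out


def eligible_for_testing(policy: ConsentPolicy, form_response: Optional[str]) -> bool:
    """Decide whether a student may take the study-administered tests.

    form_response is a hypothetical field: "allow", "refuse", or None if no form was returned.
    """
    if policy is ConsentPolicy.ACTIVE:
        # Only an affirmative response counts; a missing form means no consent.
        return form_response == "allow"
    # Opt-out consent: include the student unless the parent opted the child out.
    return form_response != "refuse"
```

The key asymmetry is how a missing form is treated: it excludes the student under active consent but includes the student under opt-out consent.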

Data collections under the current clearance request

Teacher and reading interventionist surveys (RQs 3–6 and 8). The teacher and reading interventionist surveys will capture teachers’ and interventionists’ experiences in and perceptions of the MTSS-R trainings and supports, their involvement with MTSS-R teams, their use of screening and progress-monitoring data, and their practices related to differentiated instruction. The surveys of teachers and interventionists in schools implementing Approach A or B will also ask about implementation challenges. (See Appendices A and B for the teacher and reading interventionist surveys.)

MTSS-R team leader survey (RQs 3–6). The MTSS-R team leader survey will capture information on schools’ policies regarding screening, progress monitoring, and team meetings. (See Appendix C for the MTSS-R team leader survey.)

MTSS-R team leader interview (RQ8). The MTSS-R team leader interviews will capture the challenges schools faced implementing the MTSS-R approaches and the schools’ proposed solutions to those challenges. We will use separate interview protocols for schools implementing Approaches A and B. (See Appendix D for the MTSS-R team leader interview.)

Tier I and II post-observation interviews (RQ1). The post-observation interviews will capture teachers’ rationales for any student grouping used during the observed lessons (e.g., whether the groupings were based on data and, if so, on what data), which will be used to measure the level of differentiated instruction. (See Appendix E for the Tier I and Tier II post-observation interview.)

Use of Technology to Reduce Burden

The interviews with district personnel will be conducted by phone to reduce the burden on respondents and to minimize travel costs for the evaluation. Additionally, all district records needed for this evaluation will be requested in electronic format and will be transferred to AIR through a secure file transfer protocol. Our district records requests will specify the data elements needed and provide example coding; however, to reduce burden on districts, we will accept the data in whatever format districts provide. Our analysts will convert all files to a consistent format so that they can be combined for analysis.
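Accepting records in whatever format districts provide means analysts must map each district’s field names onto one common layout before combining files. The minimal Python sketch below uses only the standard library and assumes CSV exports; the district names, column names, and mappings are hypothetical, and real files (spreadsheets, fixed-width extracts) would need their own readers.

```python
import csv
import io

# Hypothetical common layout for student roster records.
COMMON_FIELDS = ("student_id", "school_id", "grade")

# Hypothetical per-district mappings from local column names to the common layout.
FIELD_MAPS = {
    "District 1": {"StudentID": "student_id", "SchoolCode": "school_id", "Grade": "grade"},
    "District 2": {"stu_num": "student_id", "sch": "school_id", "grade_lvl": "grade"},
}


def harmonize(district: str, csv_text: str) -> list[dict]:
    """Rename one district's columns to the common layout and tag each row with its source."""
    mapping = FIELD_MAPS[district]
    missing = set(COMMON_FIELDS) - set(mapping.values())
    if missing:
        raise ValueError(f"{district} mapping lacks fields: {sorted(missing)}")
    rows = []
    for raw in csv.DictReader(io.StringIO(csv_text)):
        row = {common: raw[local].strip() for local, common in mapping.items()}
        row["district"] = district
        rows.append(row)
    return rows


def combine(files: dict[str, str]) -> list[dict]:
    """Stack harmonized rows from every district into a single list for analysis."""
    combined = []
    for district, text in files.items():
        combined.extend(harmonize(district, text))
    return combined
```

Keeping the mapping as data rather than code means a new district’s layout can be added without changing the conversion logic.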

Efforts to Avoid Duplication

Throughout the evaluation, efforts will be made to reduce the burden on respondents. Wherever possible, we rely on secondary data sources to reduce burden on district and school personnel. The data collection effort planned for this project will produce data that are unique, that target the research questions identified for this project, and that are not available from extant data for the participating districts and schools.

Methods to Minimize Burden on Small Entities

The data will be collected from district and school staff. No small businesses or entities will be involved in the data collection.

Consequences of Less Frequent Data Collection

The proposed data collections described in this submission are necessary to address the study’s research questions in reports and research briefs, and to support IES in reporting to Congress, other policy makers, and practitioners seeking effective ways to support student learning. The consequences of not collecting specific data are outlined below.

Data collections that do not require clearance

Without the study-administered student tests, we would not have the data to identify students’ baseline performance in reading, identify which students are at risk for reading difficulties, or address the impact of the two MTSS-R approaches on reading skills (RQ2).

Without the Tier I and II observations, we would not have the data to examine whether MTSS-R Approaches A or B improved the explicitness of teachers’ instruction, teachers’ instructional intensity, or the degree of differentiation of instruction (RQ1).

Data collections under the prior clearance request

Without the parent consent forms, we would not be able to administer the study tests to students, making it impossible to identify students’ baseline performance in reading, identify which students are at risk for reading difficulties, or address the impact of the two MTSS-R approaches on reading skills (RQ2).

Without the district cost interviews, we would not be able to capture the costs related to districts’ support for the two MTSS-R approaches, making the cost analyses incomplete (RQ7). We also would not be able to describe what activities took place at the district level to support implementation of the MTSS-R approaches.

Without the district records requests, we would not be able to identify the sample of students, teachers, interventionists, or team leaders; describe the sample; or examine the characteristics of the screening tools (RQs 1–7 and 9). Additionally, we would not be able to examine impacts on achievement in the third, fourth, or fifth grade (RQ2).

Data collections under the current clearance request

Without the teacher and reading interventionist surveys, we would not have the data required to understand school staff’s experiences with the four MTSS-R components, making it impossible to know the extent to which teacher or interventionist experiences differed by treatment condition (RQs 3–6). We would also be unable to assess treatment teachers’ and interventionists’ perceptions of implementation challenges (RQ8).

Without the MTSS-R team leader survey, we would not have the data required to understand schools’ infrastructure and procedures for MTSS-R teams, screening, and progress monitoring, and how the infrastructure and procedures differ by treatment condition (RQs 3–6).

Without the MTSS-R team leader interview, we would not have the data to understand the challenges schools faced implementing the MTSS-R approaches and the schools’ proposed solutions to these challenges (RQ8).

Without the Tier I and II post-observation interviews, we would not have the data to examine whether MTSS-R Approaches A or B improved the degree of differentiation of teachers’ instruction (RQ1).

Special Circumstances Relating to the Guidelines of 5 CFR 1320.5

No special circumstances apply to this study.

Federal Register Comments and People Consulted Outside the Agency

The 60-day Federal Register notice was published on August 16, 2021 (Vol. 86, page 45713). One supportive comment was received on August 31, 2021.

Consultants outside the agency

The individuals listed in Exhibit 5 serve on the Technical Working Group (TWG) for the Evaluation.

Exhibit 5. Technical Working Group Members

| Expert | Organization |
|---|---|
| David Francis | University of Houston |
| Elizabeth Tipton | Northwestern University |
| Julie Washington | Georgia State University |
| Lynne Vernon-Feagans | University of North Carolina |
| Matthew Burns | University of Minnesota |
| Michael Conner | Middletown Public Schools, Connecticut |
| Michael Coyne | University of Connecticut |
| Nathan Clemens | University of Texas at Austin |
| Nicole Patton Terry | Florida State University |
| Stephanie Al Otaiba | Southern Methodist University |
| Sylvia Linan-Thompson | University of Oregon |
| Yaacov Petscher | Florida State University |

To date, the TWG members have convened once in person, and a subset of the TWG members met virtually to discuss the study design and data collection plan. Project staff will continue to consult TWG members individually or in small groups on an as-needed basis.

Payment or Gifts to Respondents

Teachers and interventionists will be offered a $25 incentive to complete each survey; the surveys are expected to take approximately 25 minutes. MTSS-R team leaders will be offered $15 to complete the MTSS-R team leader survey and a $45 incentive to complete each interview; the interviews are expected to take approximately 45 minutes. The incentive amounts were determined based on NCEE guidance (NCEE, 2005). High response rates are needed to reach valid conclusions about the impact and implementation of the two MTSS-R approaches being tested, and offering honoraria to teachers and interventionists will help achieve high response rates on the end-of-year surveys.

The importance of providing data collection incentives in federal studies has been described by other researchers, given the recognized burden and need for high response rates.4 The use of incentives has been shown to be effective in improving response rates and reducing the level of effort required to obtain completions.5 Incentives in educational settings, in particular, have been shown to be effective; for example, in the Reading First Impact Study commissioned by IES, monetary incentives had significant effects on response rates among teachers. A substudy requested by OMB on the effect of incentives on survey response rates for teachers showed significantly higher response rates when an incentive of $15 or $30 was offered to teachers, as opposed to no incentive.6

Assurances of Confidentiality Provided to Respondents

All data collection activities will be conducted in full compliance with ED regulations to maintain the confidentiality of data obtained on private persons and to protect the rights and welfare of human research subjects. In addition, these activities will be conducted in compliance with other federal regulations, including the Privacy Act of 1974, P.L. 93-579, 5 U.S.C. 552a; the Family Educational Rights and Privacy Act of 1974, 20 U.S.C. 1232g, 34 CFR Part 99; and related regulations, including but not limited to 41 CFR Part 1-1 and 45 CFR Part 5b. Information collected for this study is subject to the confidentiality and data protection requirements of the Education Sciences Reform Act of 2002, Title I, Part E, Section 183.

Responses to this data collection will be used only for statistical purposes. Personally identifiable information (PII) about individual respondents will not be reported. We will not provide information that identifies an individual, school, or district to anyone outside the study team, except as required by law.

An explicit verbal or written statement describing the project, the data collection, and confidentiality will be provided to study participants. These participants will include teachers, interventionists, MTSS-R team leaders, district staff participating in interviews, and parents of students.

AIR takes the following steps to protect confidentiality:

All data collection staff at AIR and any data collection subcontractors will go through any required background clearances (i.e., e-QIP) and will sign confidentiality agreements that emphasize the importance of confidentiality and specify employees’ obligations to maintain it.

All staff will receive training regarding the meaning of confidentiality, particularly as it relates to handling requests for information and providing assurance to respondents about the protection of their responses. Measures to maintain confidentiality will include built-in safeguards concerning status monitoring and receipt control systems.

PII will be maintained on separate forms and files that will be linked only by study-specific identification numbers. All data containing such information will be stored in a cloud-based server system that meets ED’s security requirements.7

Access to a crosswalk file linking study-specific identification numbers to PII and contact information will be limited to a small number of individuals who have a need to know this information. All staff with access to these data will go through required background clearances (i.e., e-QIP) and will receive training about confidentiality.

Access to print documents will be strictly limited. Documents will be stored in locked files and cabinets. Discarded materials will be shredded.

Access to electronic files will be protected by secure usernames and passwords that will be available only to approved users. All data collected in the field will be saved on fully encrypted laptops until the data can be moved to a cloud-based server system that meets ED’s security requirements.
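The separation of PII from study data described in the steps above can be sketched briefly. This minimal Python example is an illustration rather than AIR’s procedure: it assumes records arrive as dictionaries, uses random hexadecimal strings from the standard `secrets` module as the study-specific identification numbers, and omits storage, encryption, and access controls. Field names are hypothetical.

```python
import secrets


def split_pii(records: list[dict], pii_fields: set[str]) -> tuple[list[dict], dict[str, dict]]:
    """Separate PII from analysis data, linking the two only through a study ID.

    Returns (analysis_rows, crosswalk). The crosswalk maps each study ID to the PII
    fields and would be stored separately, with access limited to approved staff.
    """
    analysis_rows: list[dict] = []
    crosswalk: dict[str, dict] = {}
    for record in records:
        study_id = secrets.token_hex(8)  # random ID carries no information about the person
        while study_id in crosswalk:     # regenerate in the unlikely event of a collision
            study_id = secrets.token_hex(8)
        crosswalk[study_id] = {k: v for k, v in record.items() if k in pii_fields}
        analysis = {k: v for k, v in record.items() if k not in pii_fields}
        analysis["study_id"] = study_id
        analysis_rows.append(analysis)
    return analysis_rows, crosswalk
```

Because the identifiers are random rather than derived from names or district IDs, the analysis file alone cannot be linked back to a person without the separately held crosswalk.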

Justification of Sensitive Questions

There are no sensitive questions in any of the data collections.

Estimates of Annualized Burden Hours and Costs

Exhibit 6 summarizes the reporting burden on respondents for the data collections included in the current request over the next three years. The estimated hour burden for these data collections is 628 hours in SY2021–22, 675 hours in SY2022–23, and 619 hours in SY2023–24. Assuming an average wage of $30 per hour for teachers and interventionists and $35 per hour for MTSS-R team leaders, the total burden cost is $59,659 over three years, or an average of $19,886 per year.

Exhibit 6. Estimated Annual Burden and Costs for Data Collection in the Current Request

| | Number of Respondents | Estimated Number of Responses‡ | Average Hours per Response | Total Burden (Hours) | Estimated Average Hourly Wage | Respondent Annual Cost Burden |
|---|---|---|---|---|---|---|
| Current Request | | | | | | |
| SY2021–22 | | | | | | |
| Teacher surveys | 900 | 810 | 0.42 | 338 | $30 | $10,125 |
| Reading interventionist surveys | 450 | 405 | 0.42 | 169 | $30 | $5,063 |
| MTSS-R team leader surveys | 150 | 135 | 0.40 | 54 | $35 | $1,890 |
| MTSS-R team leader interviews | 100 | 90 | 0.75 | 68 | $35 | $2,363 |
| Total for SY2021–22 | 1,600 | 1,440 | | 628 | | $19,440 |
| SY2022–23 | | | | | | |
| Teacher surveys | 900 | 810 | 0.42 | 338 | $30 | $10,125 |
| Reading interventionist surveys | 450 | 405 | 0.42 | 169 | $30 | $5,063 |
| MTSS-R team leader surveys | 150 | 135 | 0.33 | 45 | $35 | $1,575 |
| Tier I and II post-observation interviews | 1,650 | 1,485 | 0.08 | 124 | $35 | $4,331 |
| Total for SY2022–23 | 3,150 | 2,835 | | 675 | | $21,094 |
| SY2023–24 | | | | | | |
| Teacher surveys | 900 | 810 | 0.42 | 338 | $30 | $10,125 |
| Reading interventionist surveys | 450 | 405 | 0.42 | 169 | $30 | $5,063 |
| MTSS-R team leader surveys | 150 | 135 | 0.33 | 45 | $35 | $1,575 |
| MTSS-R team leader interviews | 100 | 90 | 0.75 | 68 | $35 | $2,363 |
| Total for SY2023–24 | 1,600 | 1,440 | | 619 | | $19,125 |
| Total Over Three Years | 6,350 | 5,715 | | 1,922 | | $59,659 |
| Average Over Three Years | 2,117 | 1,905 | | 641 | | $19,886 |

Note: Each data collection is administered no more than once per year.

‡ Assumes a 90% response rate for each data collection.
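The arithmetic behind Exhibit 6 can be checked with a short script. It is a sketch under two assumptions: responses equal 90% of respondents, as the ‡ note states, and the rounded hours per response stand for whole minutes (0.42 ≈ 25, 0.40 = 24, 0.33 ≈ 20, 0.75 = 45, 0.08 ≈ 5), which is inferred from the published totals rather than stated in the exhibit. Burden hours are responses times hours per response, cost is burden hours times the hourly wage, and rounding is applied only at the end, with halves rounding up. The helper names are illustrative.

```python
import math
from fractions import Fraction

RESPONSE_RATE = Fraction(9, 10)  # 90% response rate assumed in Exhibit 6

# (data collection, respondents, minutes per response, hourly wage in dollars).
# Minutes are inferred from the rounded hours shown in Exhibit 6:
# 0.42 h ~ 25 min, 0.40 h = 24 min, 0.33 h ~ 20 min, 0.75 h = 45 min, 0.08 h ~ 5 min.
STAFF_SURVEYS = [
    ("Teacher surveys", 900, 25, 30),
    ("Reading interventionist surveys", 450, 25, 30),
]
YEARS = {
    "SY2021-22": STAFF_SURVEYS + [
        ("MTSS-R team leader surveys", 150, 24, 35),
        ("MTSS-R team leader interviews", 100, 45, 35),
    ],
    "SY2022-23": STAFF_SURVEYS + [
        ("MTSS-R team leader surveys", 150, 20, 35),
        ("Tier I and II post-observation interviews", 1650, 5, 35),
    ],
    "SY2023-24": STAFF_SURVEYS + [
        ("MTSS-R team leader surveys", 150, 20, 35),
        ("MTSS-R team leader interviews", 100, 45, 35),
    ],
}


def half_up(x):
    """Round a nonnegative Fraction to the nearest integer, with halves rounding up."""
    return math.floor(x + Fraction(1, 2))


def year_totals(rows):
    """Unrounded (respondents, responses, burden hours, cost) for one school year."""
    respondents = responses = hours = cost = Fraction(0)
    for _name, people, minutes, wage in rows:
        n = people * RESPONSE_RATE        # expected completed responses
        h = n * Fraction(minutes, 60)     # burden hours for this collection
        respondents += people
        responses += n
        hours += h
        cost += h * wage                  # respondent cost burden in dollars
    return respondents, responses, hours, cost


def burden_summary():
    """Per-year totals, three-year total, and three-year average, rounded at the end."""
    yearly = {year: year_totals(rows) for year, rows in YEARS.items()}
    grand = [sum(column) for column in zip(*yearly.values())]
    summary = {year: [half_up(v) for v in vals] for year, vals in yearly.items()}
    summary["Total"] = [half_up(v) for v in grand]
    summary["Average"] = [half_up(v / len(YEARS)) for v in grand]
    return summary


if __name__ == "__main__":
    for label, (people, responses, hours, cost) in burden_summary().items():
        print(f"{label:<10} {people:>6,} {responses:>6,} {hours:>6,} ${cost:,}")
```

Keeping exact fractions until the end matters: rounding each row first would give 629 hours for SY2021–22 (338 + 169 + 54 + 68) instead of the 628 reported.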

Estimates of Other Total Annual Cost Burden to Respondents and Record Keepers

Not applicable. The information collection activities do not impose any capital costs or costs of maintaining capital on respondents.

Annualized Cost to the Federal Government

The total cost of the study is $37,447,225 over 11 years and 3 months. Annual costs are $1,852,792 in Year 1; $3,698,282 in Year 2; $5,547,423 in Year 3; $9,787,528 in Year 4; $8,934,847 in Year 5; $6,092,095 in Year 6; $642,580 in Year 7; $384,995 in Year 8; $394,867 in Year 9; $158,962 in Year 10; and $58,853 in Year 11. The average annualized cost to the federal government is $3,404,293 per year.

Explanation for Program Changes or Adjustments

This impact evaluation includes school staff surveys and interviews that will take place from the spring of the 2021–22 school year through the 2023–24 school year in nine geographically dispersed U.S. school districts and 150 elementary schools. This collection is a revision of a previously approved package (OMB Control Number 1850-0953) that covered parent consent forms and district records, along with interviews about districts’ spending to support the study training. The previously approved collection will continue concurrently with the revised information request. Therefore, the hours from the approved collection carry over to the revised request and are added to the hours requested for the new information collection. The resulting increase in hours is considered a program change.

Plans for tabulation and publication of results

Analysis Plan

AIR anticipates that the project will produce a final report and five supplementary briefs. Below, we describe the main analyses for each:

Final report: The final report will provide an overview of the study (e.g., sample, MTSS-R models) and will succinctly address research questions 1–7, which examine the impact of the two MTSS-R approaches; the fidelity of implementation; the contrast between treatment and control schools in the MTSS-R training and support they receive and in their implementation of MTSS-R; and the cost and cost-effectiveness of the MTSS-R approaches. The appendices will provide more detail (e.g., detailed descriptions of the MTSS-R models, trainings and supports, and data collection response rates). The report will follow guidance in the National Center for Education Statistics (NCES) Statistical Standards⁸ and the IES Style Guide.⁹

Impact Analyses. We will estimate the impact of each MTSS-R approach on instructional practices (RQ1) and student reading outcomes (RQ2). Instructional practice will be assessed using classroom observations of teachers and interventionists conducted in the spring of Year 2. The observations will measure teacher-led explicit instruction; differentiated instruction will be captured both during the observations and in the post-observation interviews. Student reading outcomes will be assessed using the study-administered student tests and district records. Impact estimates will come from hierarchical linear models that account for the nesting of the data (i.e., observations within teachers within schools; students within classrooms within schools). The models will follow an intent-to-treat approach and will include covariates measured at baseline to improve precision.
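The logic of a covariate-adjusted, intent-to-treat impact estimate can be illustrated in simplified form. The sketch below is not the study’s hierarchical linear model: it swaps in a school-level regression of mean posttest scores on a treatment indicator and mean baseline scores. That keeps the two core ideas (schools are analyzed by their assigned condition, as intent-to-treat requires, and a baseline covariate absorbs pre-existing differences to sharpen the estimate) while dropping the classroom level and the explicit variance components. The data are simulated and hypothetical, only one approach is compared with control, and all function names are illustrative.

```python
import random
from statistics import fmean


def residualize(values, covariate):
    """Residuals from an OLS regression of `values` on an intercept and `covariate`."""
    mx, my = fmean(covariate), fmean(values)
    sxx = sum((x - mx) ** 2 for x in covariate)
    slope = sum((x - mx) * (y - my) for x, y in zip(covariate, values)) / sxx
    return [y - my - slope * (x - mx) for x, y in zip(covariate, values)]


def itt_estimate(schools):
    """Covariate-adjusted ITT impact from school-level means.

    `schools` is a list of (assigned_to_treatment, baseline_mean, outcome_mean).
    By the Frisch-Waugh-Lovell theorem, the treatment coefficient in
    outcome ~ 1 + treated + baseline equals the slope of the residualized
    outcome on the residualized treatment indicator.
    """
    treated = [float(t) for t, _, _ in schools]
    baseline = [b for _, b, _ in schools]
    outcome = [y for _, _, y in schools]
    rt = residualize(treated, baseline)
    ry = residualize(outcome, baseline)
    return sum(a * b for a, b in zip(rt, ry)) / sum(a * a for a in rt)


def simulate_schools(n_schools=100, students=40, effect=0.25, seed=1):
    """Hypothetical data: students nested in schools, every other school treated."""
    rng = random.Random(seed)
    schools = []
    for s in range(n_schools):
        treated = s % 2 == 0                    # school-level random assignment
        school_effect = rng.gauss(0, 0.3)       # variation shared within a school
        pre, post = [], []
        for _ in range(students):
            ability = rng.gauss(0, 1)
            pre.append(ability + school_effect + rng.gauss(0, 0.5))
            post.append(0.7 * ability + school_effect
                        + effect * treated + rng.gauss(0, 0.5))
        schools.append((treated, fmean(pre), fmean(post)))
    return schools


if __name__ == "__main__":
    print(f"Estimated ITT impact: {itt_estimate(simulate_schools()):.3f} SD")
```

With real data, `simulate_schools()` would be replaced by school-level means from the study tests or district records; a full hierarchical model would additionally estimate within-school and classroom-level variation rather than averaging it away.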

Implementation Analyses. We will examine the extent to which training was provided in the treatment schools (RQ3) and the fidelity of implementation of MTSS-R (RQ5) using data from the teacher, reading interventionist, and MTSS-R team leader surveys. RQ3 will be addressed through descriptive analyses of survey data on the training and supports provided to teachers in Approach A and Approach B schools. RQ5 will be addressed by examining each of the four components of MTSS-R (i.e., Tier I instruction, Tier II intervention, screening and progress-monitoring practices, and infrastructure) for each MTSS-R approach.

Service Contrasts. We will examine the differences between the treatment and control schools in the MTSS-R training received (RQ4) and in the implementation of MTSS-R (RQ6), drawing on survey data. Analyses will compare the survey responses of staff in the treatment schools (i.e., Approach A or Approach B) and the control schools, focusing on (1) the duration and focus of recent (e.g., prior summer) training related to MTSS-R, (2) the frequency, duration, and focus of coaching activities, (3) participation in and activities of MTSS-R teams, and (4) the use of data to inform Tier I instructional activities and Tier II intervention groupings.
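A service contrast comes down to comparing the same survey item across conditions. As a sketch, assuming a numeric item such as the number of coaching sessions a staff member reports for the past month (both the item and the answers below are hypothetical), the code reports the raw mean difference between each approach and the control group along with Hedges’ g, one common standardized effect size. The section does not say which statistic the study will report, and a full analysis would also need to account for staff being clustered within schools.

```python
from statistics import fmean, stdev


def hedges_g(group, comparison):
    """Standardized mean difference with Hedges' small-sample correction."""
    n1, n2 = len(group), len(comparison)
    pooled_var = ((n1 - 1) * stdev(group) ** 2
                  + (n2 - 1) * stdev(comparison) ** 2) / (n1 + n2 - 2)
    d = (fmean(group) - fmean(comparison)) / pooled_var ** 0.5
    return d * (1 - 3 / (4 * (n1 + n2) - 9))  # correction factor J with df = n1 + n2 - 2


def service_contrasts(responses, item):
    """Compare each treatment approach with control on one survey item.

    responses: mapping of condition -> {item name -> list of staff answers}.
    Returns {approach: (mean difference, Hedges' g)}.
    """
    control = responses["Control"][item]
    return {
        arm: (fmean(responses[arm][item]) - fmean(control),
              hedges_g(responses[arm][item], control))
        for arm in ("Approach A", "Approach B")
    }


# Hypothetical answers to "coaching sessions in the past month"; not study data.
example = {
    "Approach A": {"coaching_sessions": [4, 5, 6, 5, 4]},
    "Approach B": {"coaching_sessions": [3, 4, 3, 5, 4]},
    "Control": {"coaching_sessions": [1, 2, 2, 3, 2]},
}

if __name__ == "__main__":
    for arm, (diff, g) in service_contrasts(example, "coaching_sessions").items():
        print(f"{arm}: difference = {diff:+.1f} sessions, g = {g:.2f}")
```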

Cost Analyses. We will calculate the cost of each approach. Cost data will come from structured cost interviews with district personnel, from district accounting systems (through the extant data request), and from AIR’s accounting system. The cost interview data will capture the costs of (1) hiring or identifying the MTSS-R coaches, (2) any changes to the districts’ screening and progress-monitoring systems, (3) meetings to prepare schools to implement the MTSS-R approaches, and (4) ongoing district staff time spent supporting or monitoring implementation. The costs of each MTSS-R approach will then be combined with the results of the impact analysis to estimate its cost-effectiveness, expressed as the cost per additional unit of improvement in reading (e.g., the cost per one-standard-deviation increase in general reading).
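The cost-effectiveness ratio described above divides the cost per student by the impact expressed in standard deviations. The sketch below assumes the costs for one approach have already been totaled by category; the category names echo the four interview topics plus study-provided training, and every dollar amount, the student count, and the impact are hypothetical. The function name is illustrative.

```python
def cost_per_sd(costs, n_students, impact_sd):
    """Cost per student divided by the impact in standard-deviation units.

    costs: mapping of cost category -> dollars for one MTSS-R approach.
    n_students: number of students served by the approach.
    impact_sd: estimated impact on reading, in standard deviations.
    """
    if n_students <= 0 or impact_sd <= 0:
        raise ValueError("need a positive student count and a positive impact")
    cost_per_student = sum(costs.values()) / n_students
    return cost_per_student / impact_sd


# Hypothetical figures for one approach; none of these come from the study.
example_costs = {
    "hiring or identifying MTSS-R coaches": 300_000,
    "changes to screening/progress-monitoring systems": 50_000,
    "preparation meetings with schools": 25_000,
    "ongoing district support and monitoring": 125_000,
    "study-provided training (contractor accounts)": 500_000,
}

if __name__ == "__main__":
    ratio = cost_per_sd(example_costs, n_students=2_500, impact_sd=0.20)
    print(f"${ratio:,.0f} per student per 1 SD gain in reading")
```

With these made-up inputs, the approach costs $400 per student and yields a 0.20 SD gain, or $2,000 per student per standard deviation. Reading the ratio that way assumes effects scale linearly with cost, which is a simplification; the ratio is also undefined for impacts at or below zero, hence the guard.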

Supplementary briefs: Five briefs will focus on specific topics of interest to reading practitioners and researchers, such as implementation challenges and lessons learned; the screening tools used by districts and their predictive validity in identifying struggling readers; and the effects of MTSS-R on English learners and students with disabilities.

Publication Plan

The final report, which will include impact, implementation, service contrast, and cost findings, is anticipated to be released in 2027. Supplementary briefs will be released over the course of the evaluation as the relevant information becomes available.

Approval to Not Display OMB Expiration Date

All data collection instruments will include the OMB expiration date.

Explanation of Exceptions to the Paperwork Reduction Act

No exceptions are needed for this data collection.

References for Supporting Statements, Part A

Bailey, T. R. (2017). Hot topics in MTSS: Current research to address some of the big questions impacting implementation. Paper presented at the Council for Exceptional Children’s annual convention, Boston, MA.

Balu, R., Zhu, P., Doolittle, F., Schiller, E., Jenkins, J., & Gersten, R. (2015). Evaluation of response to intervention practices for elementary school reading. Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance.

Berry, S. H., Pevar, J., & Zander-Cotugno, M. (2008). The use of incentives in surveys supported by federal grants. Santa Monica, CA: RAND Corporation.

Bradley, M. C., Daley, T., Levin, M., O’Reilly, F., Parsad, A., Robertson, A., & Werner, A. (2011). IDEA national assessment implementation study (NCEE 2011-4027). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance.

Denton, C. A., Fletcher, J. M., Taylor, W. P., & Vaughn, S. (2014). An experimental evaluation of guided reading and explicit interventions for primary-grade students at risk for reading difficulties. Journal of Research on Educational Effectiveness, 7(3), 268–293.

Dillman, D. A. (2007). Mail and internet surveys: The tailored design method (2nd ed.). Hoboken, NJ: John Wiley & Sons.

Fiester, L. (2010). Early warning! Why reading by the end of third grade matters. Baltimore, MD: Annie E. Casey Foundation. Retrieved from https://files.eric.ed.gov/fulltext/ED509795.pdf

Foorman, B., Beyler, N., Borradaile, K., Coyne, M., Denton, C. A., Dimino, J., … Wissel, S. (2016). Foundational skills to support reading for understanding in kindergarten through 3rd grade (NCEE 2016-4008). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance. Retrieved from http://eric.ed.gov/?id=ED566956

Hernandez, D. J. (2011). Double jeopardy: How third-grade reading skills and poverty influence high school graduation. Baltimore, MD: Annie E. Casey Foundation.

James, T. (1997). Results of the Wave I incentive experiment in the 1996 Survey of Income and Program Participation. In Proceedings of the Survey Methods Section (pp. 834–839), American Statistical Association.

National Assessment of Educational Progress. (2017). Reading assessment. Retrieved from https://nces.ed.gov/nationsreportcard/reading

National Center for Education Evaluation. (2005, March 22). Guidelines for incentives for NCEE impact evaluations. Washington, DC: National Center for Education Evaluation.

National Center for Education Statistics. (2002). Statistical standards (NCES 2003-601). Washington, DC: National Center for Education Statistics. Retrieved from https://nces.ed.gov/pubs2003/2003601.pdf

Singer, E., & Kulka, R. A. (2002). Paying respondents for survey participation. In M. Ver Ploeg, R. A. Moffitt, & C. F. Citro (Eds.), Studies of welfare populations: Data collection and research issues (pp. 105–128). Washington, DC: National Academy Press.

U.S. Department of Education, Institute of Education Sciences. (2005). IES Style Guide. Retrieved from http://www.nces.ed.gov

Vadasy, P. F., Sanders, E. A., & Tudor, S. (2007). Effectiveness of paraeducator-supplemented individual instruction: Beyond basic decoding skills. Journal of Learning Disabilities, 40(6), 508–525.

ABOUT AMERICAN INSTITUTES FOR RESEARCH

Established in 1946, with headquarters in Washington, DC, American Institutes for Research (AIR) is an independent, nonpartisan, not-for-profit organization that conducts behavioral and social science research and delivers technical assistance both domestically and internationally. As one of the largest behavioral and social science research organizations in the world, AIR is committed to empowering communities and institutions with innovative solutions to the most critical challenges in education, health, workforce, and international development.

1000 Thomas Jefferson Street NW, Washington, DC 20007-3835

202.403.5000

www.air.org


LOCATIONS

Domestic

Washington, DC

Arlington, VA

Atlanta, GA

Austin, TX

Baltimore, MD

Cayce, SC

Chapel Hill, NC

Chicago, IL

Columbus, OH

Frederick, MD

Honolulu, HI

Indianapolis, IN

Metairie, LA

Naperville, IL

New York, NY

Rockville, MD

Sacramento, CA

San Mateo, CA

Waltham, MA

International

Egypt

Honduras

Ivory Coast

Kyrgyzstan

Liberia

Tajikistan

Zambia

1 According to Bradley et al. (2011), 61% of elementary schools reported using response to intervention (RTI), a framework similar to MTSS-R, to respond to academic needs. On the basis of data collected in 2011, Balu et al. (2015) found that 71% of a representative sample of schools in 13 states reported using RTI for primary-grade reading. A recent review of state policy found that all 50 states recommend MTSS to address student academic or behavioral needs (Bailey, 2017).

2 Although MTSS-R typically includes three tiers, providing support for intensive individualized Tier III supports is beyond the scope of the planned study.

3 We will include the district record requests on the consent form as well if the district requires it.

4 Berry, Pevar, & Zander-Cotugno, 2008; Singer & Kulka, 2002.

5 Dillman, 2007; James, 1997.

6 National Center for Education Evaluation, 2005.

7 The PII will be stored either on ED’s cloud-based system or AIR’s cloud-based system, Secure Analytics Workbench, which will have secured Authorization to Operate status before data collection.

8 National Center for Education Statistics, 2002.

9 U.S. Department of Education, Institute of Education Sciences, 2005.

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Title | The Impact Evaluation of Parent Messaging Strategies |

| Subject | OMB Clearance Request |

| Author | AIR |

| File Modified | 0000-00-00 |

| File Created | 2021-12-28 |
