National Longitudinal Survey of Youth 1997

OMB: 1220-0157

OMB Expiration Date: 08/31/2024

SUPPORTING STATEMENT FOR

The National Longitudinal Survey of Youth 1997

OMB CONTROL NO. 1220-0157

This Information Collection Request seeks to obtain clearance for the main fielding of Round 21 of the National Longitudinal Survey of Youth 1997 (NLSY97) and for an interim contact to be made after Round 21 but before Round 22. The main NLSY97 sample includes 8,984 respondents who were born in the years 1980 through 1984 and lived in the United States when the survey began in 1997. Sample selection was based on information provided during the first round of interviews. This cohort is a representative national sample of the target population of young adults. The sample includes an overrepresentation of Black and Hispanic people to facilitate statistically reliable analyses of these racial and ethnic groups. Appropriate weights are provided so that the sample components can be combined in a manner to aggregate to the overall U.S. population of the same ages.
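The role of the weights can be illustrated with a minimal sketch. The respondent values and weights below are hypothetical toy numbers chosen only for illustration; they are not NLSY97 data, and the function names are assumptions rather than part of any NLS tool. The sketch shows why an unweighted average over a sample that overrepresents some groups can differ from the weighted average that aggregates to the population.

```python
# Hypothetical toy data: (sample component, outcome value, sampling weight).
# Oversampled respondents carry smaller weights because each one
# represents fewer people in the population.
respondents = [
    ("cross-sectional", 40.0, 3000.0),
    ("cross-sectional", 50.0, 3000.0),
    ("oversample", 30.0, 1000.0),
    ("oversample", 20.0, 1000.0),
]


def weighted_mean(rows):
    """Average the outcome, letting each respondent count in proportion to weight."""
    total_weight = sum(weight for _, _, weight in rows)
    return sum(value * weight for _, value, weight in rows) / total_weight


def unweighted_mean(rows):
    """Average the outcome, treating every respondent equally."""
    return sum(value for _, value, _ in rows) / len(rows)


print("weighted:", weighted_mean(respondents))
print("unweighted:", unweighted_mean(respondents))
```

In this toy case the unweighted mean gives the oversampled component half of the influence, while the weighted mean restores each component to its assumed population share.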

The survey is funded primarily by the U.S. Bureau of Labor Statistics (BLS) at the U.S. Department of Labor (DOL). Additional funding has been provided in some years by various agencies including the Departments of Health and Human Services, Education, Defense, and Justice, and the National Science Foundation. The BLS has overall responsibility for the project. The BLS has contracted with NORC at the University of Chicago to conduct the survey. The contractor is responsible for survey design, interviewing, data preparation, documentation, and the preparation of public-use data files.

The data collected in this survey are part of a larger effort that involves repeated interviews administered to several cohorts in the U.S. Many of the questions are identical or very similar to questions previously approved by OMB that have been asked in other cohorts of the National Longitudinal Surveys (NLS). This round will be the fourth in which the NLSY97 interviews will be conducted primarily by telephone.

JUSTIFICATION

1. Explain the circumstances that make the collection of information necessary. Identify any legal or administrative requirements that necessitate the collection. Attach a copy of the appropriate section of each statute and regulation mandating or authorizing the collection of information.

This statement covers the main fielding of Round 21 of the National Longitudinal Survey of Youth 1997 (NLSY97) and an interim contact with NLSY97 sample members to be made between Rounds 21 and 22. The NLSY97 is a nationally representative sample of persons who were ages 12 to 16 on December 31, 1996. The BLS contracts with external organizations to interview these youths in order to study how young people make the transition from full-time schooling to the establishment of their families and careers. Interviews were conducted on a yearly basis through Round 15; beginning with Round 16, they have been conducted on a biennial basis. The longitudinal focus of this survey requires information to be collected about the same individuals over many years in order to trace their education, training, work experience, fertility, income, and program participation.

The mission of the DOL is, among other things, to promote the development of the U.S. labor force and the efficiency of the U.S. labor market. The BLS contributes to this mission by gathering information about the labor force and labor market and disseminating it to policymakers and the public so that participants in those markets can make more informed, and thus more efficient, choices. The charge to the BLS to collect data related to the labor force is extremely broad, as reflected in Title 29 U.S.C. Section 1:

“The general design and duties of the Bureau of Labor Statistics shall be to acquire and diffuse among the people of the United States useful information on subjects connected with labor, in the most general and comprehensive sense of that word, and especially upon its relation to capital, the hours of labor, the earnings of laboring men and women, and the means of promoting their material, social, intellectual, and moral prosperity.”

The collection of these data contributes to the BLS mission by aiding in the understanding of labor market outcomes faced by individuals in the early stages of career and family development. See attachment 1 for Title 29 U.S.C. Sections 1 and 2.

2. Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate the actual use the agency has made of the information received from the current collection.

The proposed collection will allow the NLSY97 to continue serving its broad purpose as a vital source of labor market information, by adding a 21st round to the survey’s longitudinal dataset. The NLSY97 is an omnibus study designed to understand labor market outcomes and serves a variety of labor market-related research interests. Among the major purposes of its collection is to examine the transition from school to the labor market and into adulthood. Additional major purposes fall into several categories as elaborated in attachment 2: (1) to explore factors affecting an individual’s orientation to the labor market and its long- and short-term effects on labor market outcomes; (2) to explore factors affecting an individual’s educational progress and its various repercussions in the labor market; (3) to study characteristics of the work environment experienced by individuals and the interplay between these characteristics and other labor market outcomes; (4) to examine race, sex, and ethnicity differences in employment and earnings; (5) to explore the relationships between economic and social factors, family transitions, and well-being; (6) to examine associations between geographic mobility, local and national levels of economic activity, and social, economic, and demographic characteristics of respondents and their families; and (7) to measure gross changes in the labor market status of adults in the reference population. In addition, the NLSY97 can be used to meet the data collection and research needs of various government agencies that have been interested in the relationships between child and maternal health, other child outcomes, drug and alcohol use, juvenile deviant behavior and education, employment, and family experiences.

The study relates each respondent’s educational, family, and community background to his or her success in finding a job and establishing a career. During Round 1, the study included a testing component sponsored by the Department of Defense that assessed the aptitude and achievement of the youths in the study so that these factors can be related to career outcomes. This study, begun when most participants were in middle school or high school, has followed them as they enter college or training programs and join the labor force. Continued biennial interviews will allow researchers and policymakers to examine the transition from school to work. This study will help researchers and policymakers to identify the antecedents and causes of the difficulties that some youths experience in making the school-to-work transition. By comparing these data to similar data from previous NLS cohorts, researchers and policymakers will be able to identify and understand some of the dynamics of the labor market and whether and how the experiences of this cohort of young people differ from those of earlier cohorts.

The NLSY97 has several characteristics that distinguish it from other data sources and make it uniquely capable of meeting the goals described above. The first of these is the breadth and depth of the types of information that it collects. It has become increasingly evident in recent years that a comprehensive analysis of the dynamics of labor force activity requires a theoretical framework that draws on several disciplines, particularly economics, sociology, and psychology. For example, the exploration of the determinants and consequences of the labor force behavior and experience of this cohort requires information about (1) the individual’s family background and ongoing demographic experiences; (2) the character of all aspects of the environment with which the individual interacts; (3) human capital inputs such as formal schooling and training; (4) a complete record of the individual’s work experiences; (5) the behaviors, attitudes, and experiences of family members, including spouses and children; and (6) a variety of social psychological measures, including attitudes toward specific and general work situations, personal feelings about the future, and perceptions of how much control one has over one’s environment.

A second major advantage of the NLSY97 is its longitudinal design. This design permits investigations of labor market dynamics that would not be possible with one-time surveys and allows directions of causation to be established with much greater confidence than cross-sectional analyses permit. Also, the considerable geographic and environmental information available for each respondent for each survey year permits a more careful examination of the impact that local labor market conditions have on the employment, education, and family experiences of this cohort.

Third, the NLSY97’s supplemental samples of Black and Hispanic people make possible more detailed statistical analyses of those groups than would otherwise be possible.

The NLSY97 is part of a broader group of surveys that are known as the BLS National Longitudinal Surveys program. In 1966, the first interviews were administered to persons representing two cohorts, Older Men ages 45-59 in 1966 and Young Men ages 14-24 in 1966. The sample of Mature Women ages 30-44 in 1967 was first interviewed in 1967. The last of the original four cohorts was the Young Women, who were ages 14-24 when first interviewed in 1968. The survey of Young Men was discontinued after the 1981 interview, and the last survey of the Older Men was conducted in 1990. The Young and Mature Women surveys were discontinued after the 2003 interviews. In 1979, the National Longitudinal Survey of Youth 1979 (NLSY79 – OMB Clearance Number 1220-0109), which includes persons who were ages 14–21 on December 31, 1978, began. The NLSY79 was conducted yearly from 1979 to 1994 and has been conducted every two years since 1994. One of the objectives of the National Longitudinal Surveys program is to examine how well the nation is able to incorporate young people into the labor market. These earlier surveys provide comparable data for the NLSY97.

The most recent BLS news release that examines NLSY97 data was published on March 29, 2022, available online at https://www.bls.gov/news.release/archives/nlsyth_03292022.htm. In addition to BLS publications, analyses have been conducted in recent years by other agencies of the Executive Branch, the Government Accountability Office, and the Congressional Budget Office. The surveys also are used extensively by researchers in a variety of academic fields. A comprehensive bibliography of journal articles, dissertations, and other research that have examined data from all National Longitudinal Surveys cohorts is available at http://www.nlsbibliography.org/.

In addition to collecting a 21st round for the NLSY97’s main fielding, NLS also proposes to contact sample members between Rounds 21 and 22 to ensure the continued strong connection of sample members with the survey. The details of such interim contact, which depend on NLS’ experiences during Round 21, will be submitted as a non-substantive change to this OMB package.

3. Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or other forms of information technology, e.g., permitting electronic submission of responses, and the basis for the decision for adopting this means of collection. Also, describe any consideration of using information technology to reduce burden.

The NLS program and its contractors have led the industry in survey automation and continue to use up-to-date methods for the NLSY97. This includes the continued use of computer-assisted interviewing (CAI) for the survey. CAI can reduce respondent burden and produce data that can be prepared for release and analysis faster and more accurately than is the case with pencil-and-paper interviews.

In Round 21, NLS proposes to collect data primarily by telephone, as in Rounds 18, 19, and 20. While historically an in-person survey, the NLSY97 was converted to a predominantly telephone survey in Round 18, when approximately 90 percent of interviews were completed by telephone. This reflected a dramatic increase over Rounds 16 and 17, in which 15 and 27 percent of interviews, respectively, were completed by telephone. In Round 19, the ability to collect information over the phone was a key factor in the survey’s ability to maintain response rates during the coronavirus pandemic. Approximately 98 percent of interviews were completed by telephone in Round 20.

NLS expects to supplement telephone interviewing with in-person contacts as a non-response follow-up strategy for a small number of cases that are identified by special circumstances, such as difficulty locating a respondent without in-person efforts, respondent’s lack of a telephone, or prison cases where only an in-person interview is approved. In-person interviewing has been deployed similarly in NLS surveys for many years. NLS does not propose to use self-administered modes in Round 21, but NLS is evaluating the results of a separate collection effort administered via a web application between Rounds 19 and 20 for potential use in future rounds; a small number of questions were asked in Round 20 to help in this analysis.

Mode effects on item non-response may vary. Compared to self-administered modes, interviewer-administered collection has been found to result in the under-reporting of sensitive items (Tourangeau and Yan, Psychological Bulletin 2007, Vol. 133, No. 5, 859–883). For drug use, for example, estimates of under-reporting range from 19 to 30 percent across multiple meta-analyses of experimental and quasi-experimental studies (Tourangeau and Yan 2007, Richman, Kiesler, Weisband, and Drasgow 1999). However, this effect may be ameliorated by the use of telephone interviewing, which has been found to lead to less under-reporting of sensitive items such as income and other financial items than face-to-face interviewing (de Leeuw E.D., van der Zouwen J. (1988). “Data quality in telephone and face to face surveys: a comparative meta-analysis.” In: Groves RM, Biemer PP, Lyberg LE, Massey JT, Nicholls WL II, Waksberg J, eds. Telephone Survey Methodology, New York: Wiley: 273:99). Indeed, item non-response did decrease from Round 17 to Round 18 for income and financial items. For instance, the percentage of respondents reporting “don’t know” on the item asking about wages or salary from work fell from 14 percent to 8 percent.

The collection effort will employ multiple modes of contact to encourage and facilitate its telephone interviews. These modes include mail, email, telephone, and text messaging, including mass texting. NLS targets its outreach methods based on its experiences with respondents in past rounds. As some respondents have indicated that text messaging is their preferred mode of communication, the use of texts is a significant tool for encouraging participation. Individual text messages are sent by interviewers through NLS-approved, project-issued phones, set to fully comply with all security requirements, including multiple layers of password protection, remote monitoring, and complete remote wipe if stolen or lost. The NLS project will also use a third-party mass-texting application to send reminder messages to respondents who prefer texting, at a rate of approximately one per month until survey completion. Except for the cell phone number, no identifying information is passed to this application. The mass texts follow a BLS-approved script and do not include names, links, the survey name, or other identifying characteristics.

In order to carefully meter the number of in-person interviews that will be conducted, NLS will employ practices that it has used for years. In-person interviewing will require approval, based on the facts of the case. Cases involving a hearing-related disability or inadequate telephone service will be readily approved for in-person interviewing. In limited situations where the respondent does not have their own phone or the ability to borrow someone’s phone for the length of the interview, NLS will send a pre-paid cell phone to the respondent with enough minutes to allow the respondent the opportunity to participate. When addressing a respondent preference, in-person interviewing will be approved only when the interviewer and field manager believe that the only alternative to an in-person interview is a non-interview. The staffing model for Round 21 will be geographically diverse to permit in-person outreach.

The Round 21 questionnaire is programmed in a single computer-assisted interviewing (CAI) platform that interviewers can use for either telephone or in-person administration. The programming of the questionnaire is highly complex and uses extensive information from prior interviews as well as different points in the current interview. Paper and pencil administration would likely involve a high rate of error. Using a single platform for both interviewing modes greatly improves the efficiency of questionnaire programming, data quality assurance, and post-data collection data processing activities relative to having separate platforms for different interviewing modes.

The CAI questionnaire offers additional features for data quality assurance. These include the capture of item-level timings, recordings of the interviewer-respondent interaction, and the ability to monitor when the interview might have been interrupted or resumed. The availability of these features allows the data collection team to monitor interviewer performance and inspect for data falsification with minimal additional burden on respondents; as described in Section 12 below, NLS expects to conduct fewer than 100 short validation interviews. Re-interviews are then conducted only in the event of concerns about how an interview was conducted based on the available recordings, timings, and other data.

The questionnaire’s consent statement indicates that the interview will be recorded for quality control purposes. If a respondent declines to be recorded, the timings and other data quality-related paradata can generally provide adequate information for data quality assurance. If necessary, re-interviews can be conducted with a small number of respondents. NLS expects that re-interviews will be conducted for less than 1 percent of all interviews.

4. Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use for the purposes described in Item A.2 above.

NLS does not know of a national longitudinal survey that samples this age bracket and explores an equivalent breadth of substantive topics including labor market status and characteristics of jobs, education, training, aptitudes, health, fertility, marital history, income and assets, participation in government programs, attitudes, sexual activity, criminal and delinquent behavior, household environment, and military experiences. Data collection for the National Longitudinal Study of Adolescent Health (Add Health) is less frequent and addresses physical and social health-related behaviors rather than focusing on labor market experiences. The studies sponsored by the National Center for Education Statistics do not include the birth cohorts 1980 through 1984. The Children of the NLSY79, also part of the NLS program, spans the NLSY97 age range and touches on many of the same subjects, but does not yield nationally representative estimates for these birth cohorts. Further, the NLSY97 is a valuable part of the NLS program as a whole, and other surveys would not permit the kinds of cross-cohort analyses that are possible using the various cohorts of the NLS program.

The repeated collection of NLSY97 information permits consideration of employment, education, and family issues in ways not possible with any other available data set. The combination of (1) longitudinal data covering the time from adolescence; (2) a focus on youths and young adults; (3) national representation; (4) large minority samples; and (5) detailed availability of education, employment and training, demographic, health, child outcome, and social-psychological variables makes this data set and its utility for social science policy research on youth issues unique.

5. If the collection of information impacts small businesses or other small entities, describe any methods used to minimize burden.

The NLSY97 is a survey of individuals in household and family units and therefore does not involve small organizations.

6. Describe the consequence to federal program or policy activities if the collection is not conducted or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

The frequency with which the NLSY97 is collected is carefully calibrated to balance informational needs against the cost and burden of collection. In its first 15 rounds, the survey was conducted annually, as necessary for accurately capturing the educational, training, labor market, and household transitions that young people typically experience. Starting in Round 16 data collection changed to a biennial schedule, diminishing the survey’s burden. This transition was made feasible by the lower frequency of educational and labor market transitions that NLSY97 respondents experience as they age.

Two other considerations affect the determination of collection frequency. First, among variables for which the survey generates a full event history (employment, marriage, fertility, and schooling), long lags between collections may create measurement error through inaccurate recall and may also increase the length of the interview. The NLSY97 mitigates these concerns by using memory aids and bounding techniques in its interviewing. However, diminishing the frequency of collection would heighten the concerns.

A second consideration is the potential for effects on response. As shown in Table 1, the NLSY97 experienced a decline in response rate during Rounds 16, 17, and 18 after the transition to biennial interviewing, as did the NLSY79 after its transition. Although the likely causes of the decline in Round 16 were manifold, including a pause in data collection related to a partial shutdown of the federal government and an extremely severe winter, the reduction in respondent contact inherent in the transition to biennial interviewing may have played a role. The decline in response in Round 18 was larger than expected and may be attributed, in part, to the change in primary mode from in-person to telephone; the rate rebounded in Round 19 (to 79.4 percent) after NLS made adjustments to its fielding procedures. It was 77.0 percent in Round 20, which was affected by the coronavirus pandemic. Based on this experience, NLS would anticipate that further reductions in the frequency of collection would further diminish response.

Table 1. NLSY79 and NLSY97 Response Rates Surrounding the Transition to Biennial Interviewing

| | NLSY79 Response Rate* (Year) (Round) | NLSY97 Response Rate* (Year) (Round) |
| --- | --- | --- |
| Two rounds prior to transition to biennial | 92.1 (1993) (R15) | 84.4 (2010) (R14) |
| One round prior to transition to biennial | 91.1 (1994) (R16) | 83.9 (2011) (R15) |
| First round after 2-year gap | 88.8 (1996) (R17) | 80.8 (2013) (R16) |
| Second round after transition to biennial | 86.7 (1998) (R18) | 80.6 (2015) (R17) |
| Third round after transition to biennial | 83.2 (2000) (R19) | 76.7 (2017) (R18)** |
| Fourth round after transition to biennial | 80.3 (2002) (R20)** | 79.4 (2019) (R19) |
| Fifth round after transition to biennial | 80.1 (2004) (R21) | 77.0 (2021) (R20) |

* Retention rates exclude deceased and out of sample cases.

** Collection transitioned to telephone as a primary mode.
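The trend in Table 1 can be summarized as round-to-round changes in percentage points. The following is a minimal sketch that uses only the rates transcribed from the table; the names `RATES`, `point_changes`, and `net_change` are illustrative assumptions, not part of any NLS tool.

```python
# Response rates (percent) transcribed from Table 1, ordered from two rounds
# before the biennial transition through the fifth round after it.
RATES = {
    "NLSY79": [92.1, 91.1, 88.8, 86.7, 83.2, 80.3, 80.1],
    "NLSY97": [84.4, 83.9, 80.8, 80.6, 76.7, 79.4, 77.0],
}


def point_changes(rates):
    """Change between consecutive rounds, in percentage points."""
    return [round(later - earlier, 1) for earlier, later in zip(rates, rates[1:])]


def net_change(rates):
    """Change from the first listed round to the last, in percentage points."""
    return round(rates[-1] - rates[0], 1)


for cohort, rates in RATES.items():
    print(cohort, "round-to-round:", point_changes(rates), "net:", net_change(rates))
```

Over the seven rounds shown, the net decline is 12.0 points for the NLSY79 and 7.4 points for the NLSY97, and the Round 19 rebound in the NLSY97 appears as the only positive round-to-round change.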

7. Explain any special circumstances that would cause an information collection to be conducted in a manner:

requiring respondents to report information to the agency more often than quarterly;

requiring respondents to prepare a written response to a collection of information in fewer than 30 days after receipt of it;

requiring respondents to submit more than an original and two copies of any document;

requiring respondents to retain records, other than health, medical, government contract, grant-in-aid, or tax records for more than three years;

in connection with a statistical survey, that is not designed to produce valid and reliable results that can be generalized to the universe of study;

requiring the use of statistical data classification that has not been reviewed and approved by OMB;

that includes a pledge of confidentiality that is not supported by authority established in statute or regulation, that is not supported by disclosure and data security policies that are consistent with the pledge, or which unnecessarily impedes sharing of data with other agencies for compatible confidential use; or

requiring respondents to submit proprietary trade secret, or other confidential information unless the agency can demonstrate that it has instituted procedures to protect the information's confidentiality to the extent permitted by law.

None of the listed special circumstances apply.

8. If applicable, provide a copy and identify the date and page number of publication in the Federal Register of the agency's notice, required by 5 CFR 1320.8(d), soliciting comments on the information collection prior to submission to OMB. Summarize public comments received in response to that notice and describe actions taken by the agency in response to these comments. Specifically address comments received on cost and hour burden.

Describe efforts to consult with persons outside the agency to obtain their views on the availability of data, frequency of collection, the clarity of instructions and recordkeeping, disclosure, or reporting format (if any), and on the data elements to be recorded, disclosed, or reported.

Consultation with representatives of those from whom information is to be obtained or those who must compile records should occur at least once every 3 years -- even if the collection-of-information activity is the same as in prior periods. There may be circumstances that may preclude consultation in a specific situation. These circumstances should be explained.

One comment was received as a result of the Federal Register notice published on March 3, 2023 (88 FR 13471). The comment, which was e-mailed to BLS on March 4, 2023, expressed the opinion that the survey does not benefit the citizens of the country.

BLS’ response to the comment is that the National Longitudinal Surveys are widely used by researchers to learn about the functioning of the labor market and the multiple factors that affect it. They have supported several thousand published studies. These studies, in turn, are used widely by policymakers to formulate and adjust policies that represent the interests of the Nation. Additionally, the NLS program has partnered extensively with other Federal agencies to discover facts that are especially relevant to particular policies and programs.

There have been numerous consultations regarding the NLSY97. In 1988, the National Science Foundation sponsored a conference to consider the future of the NLS. This conference consisted of representatives from a variety of academic, government, and nonprofit research and policy organizations. The participants endorsed the notion of conducting a new youth survey. The NLSY97 incorporates many of the major recommendations that came out of that conference.

Also, on a continuing basis, BLS and its contractors encourage NLS data users to suggest ways in which the quality of the data can be improved and to suggest additional data elements that should be considered for inclusion in subsequent rounds. NLS encourages this feedback through the public information office of each organization and through the ‘Suggested Questions for Future NLSY Surveys’ (available online at https://www.nlsinfo.org/nlsy-user-initiated-questions).

The NLS program also has a technical review committee that provides advice on interview content and long-term objectives. This group typically meets twice each year. Table 2 below shows the current members of the committee.

Table 2. National Longitudinal Surveys Technical Review Committee (January 2023)

| Member | Affiliation |
| --- | --- |
| Fenaba Addo | Department of Public Policy and Department of Sociology, University of North Carolina, Chapel Hill |
| Jennie Brand | Department of Sociology and Department of Statistics, University of California, Los Angeles |
| Sarah Burgard | Department of Sociology and Department of Epidemiology, University of Michigan |
| Lisa Kahn | Department of Economics, University of Rochester |
| Michael Lovenheim | Department of Policy Analysis and Management, Cornell University |
| Nicole Maestas | Harvard Medical School |
| Melissa McInerney (chair) | Department of Economics, Tufts University |
| Kristen Olson | Department of Sociology, University of Nebraska-Lincoln |
| Emily Owens | Department of Criminology, Law & Society and Department of Economics, University of California, Irvine |
| John Phillips | Division of Behavioral and Social Research, National Institute on Aging/NIH |
| Rebecca Ryan | Department of Psychology, Georgetown University |
| Narayan Sastry | Population Studies Center, University of Michigan |

9. Explain any decision to provide any payments or gifts to respondents, other than remuneration of contractors or grantees.

The NLSY97 is a long-term study in which the same respondents were interviewed on an annual basis for 15 rounds before moving to biennial interviewing; Round 21 will be the sixth round during the biennial phase. Because minimizing sample attrition is critical to sustaining this type of longitudinal study, respondents in all prior rounds have been offered financial and in-kind incentives as a means of securing their long-term cooperation and minimizing any decline in response rates. The goal of these incentives is to minimize non-response bias, both by promoting high overall response rates and by ensuring adequate response among subpopulations of analytical interest to data users.

Incentives are commonly used in longitudinal surveys, both because survey participation typically imposes a high burden on each respondent and because repeated cooperation has a particularly high value (Laurie and Lynn, 2009). The accumulated evidence shows that these incentives can have positive effects on both respondent and interviewer behavior. They are effective in gaining conversions among those least likely to participate as well as quicker cooperation among those more likely to participate. Small increases or one-time bonuses can have a halo effect that results in future participation (Wong, 2020). However, because of the relative scarcity of longitudinal studies, especially with the high response rates and intensive data collection approach of the NLSY97, the evidence from the literature on detailed design components is relatively sparse. The NLS program thus designs its incentive offerings by drawing on the literature as much as possible, but also by relying on its own accumulated experiences in incentivizing survey completions. To foster continual improvement in the efficacy of its incentives, it often seeks to build evidence through experimentation as it designs each succeeding round. Such experimentation has included, for example, the introduction of novel incentives and the imposition of randomization on some incentives to facilitate subsequent evaluation.

Respondent Segments

The incentive design for Round 21 complements a base incentive with a series of targeted incentive components that are tailored to appeal to specific segments of the sample, as defined by past response behavior. The segments comprise three categories,1 as described in Figure 1 below.

“Perfect” responders who have never missed an interview (4,219);

Round 20 completers who have previously missed at least one interview (2,494);

Round 20 non-completers (~1,950).

Within the first segment, a portion of the sample will be randomly chosen to receive a “Thank You” bonus in Round 21, continuing the three-round sequence begun in Round 20. Within the third segment, “Missed Round” incentives will vary depending on the length of time since the last completed interview. In addition to these incentive components targeted by segment, NLS will offer “Final Push” incentives to reluctant responders as the fielding period advances to its conclusion. The details of these incentive components are described below.

Figure 1: Round 21 Respondent Segments

Incentive Components

The Round 21 incentive strategy includes a base incentive, a thank you bonus to the most cooperative respondents, a graduated, missed round incentive for respondents who did not complete Round 20, and small, discretionary, in-kind supplements. In addition, a two-level final push incentive for individuals for whom the standard protocol has been unsuccessful in securing cooperation is proposed for Round 21. Table 7 at the end of this document summarizes the incentive components.

Base Incentive

A base incentive of $50 will be given to all three segments of respondents who complete the Round 21 interview. This base fee is identical to that offered in Round 20.

Segment 1: Perfect Responders

Thank You Bonus

In honor of the survey’s 20th round, NLS started offering a “Thank You Bonus” of $25 to acknowledge the high levels of cooperation exhibited by perfect responders who have completed interviews in every previous Round. As these respondents make up over half of the NLSY97 sample and have an expected completion rate of over 96 percent, they are extremely important to the ongoing, long-term success of the survey. (In comparison, individuals who completed the prior interview but have previously missed at least one round, who make up about 29 percent of the sample, have an expected completion rate of 85 percent.) By cultivating the spirit of reciprocity between the respondents and the survey program, this “Thank You Bonus” can reinforce the respondents’ sense that their responses are valued and important (Laurie and Lynn, 2009).

As discussed in the Supporting Statement for NLSY97 Round 20, the bonus will be implemented over the course of three data collection rounds. In Round 20, NLS randomly selected one-third of these 4,384 individuals and offered them a bonus of $25 if they completed the Round 20 interview. To continue this bonus in Round 21, the same $25 will be offered to another randomly selected portion of these individuals (half of the remaining perfect responders, excluding those selected in Round 20). The remainder will be offered the bonus in Round 22. NLS will convey that this is a one-time bonus to acknowledge their many rounds of cooperation, and that NLS will not award the same bonus in future rounds. This three-thirds design spreads the cost of the bonus across three rounds and also provides leverage for evaluating the incentive’s long-term impact.
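The three-round rollout described above can be illustrated with a minimal sketch. It assumes a simple shuffle-and-split of respondent identifiers; the function name, the seed, and the use of integer IDs are hypothetical and are not taken from NLS procedures. It also ignores attrition between rounds, which would shrink the later cohorts in practice.

```python
import random

def assign_bonus_rounds(respondent_ids, seed=0):
    """Randomly split perfect responders into three one-time bonus cohorts.

    Hypothetical helper: the actual NLS selection procedure is not
    described in detail in this statement.
    """
    rng = random.Random(seed)      # fixed seed only so the sketch is reproducible
    ids = list(respondent_ids)
    rng.shuffle(ids)

    third = len(ids) // 3
    round_20 = ids[:third]         # one-third offered the bonus in Round 20
    remaining = ids[third:]

    half = len(remaining) // 2
    round_21 = remaining[:half]    # half of the remainder offered it in Round 21
    round_22 = remaining[half:]    # everyone else is offered it in Round 22
    return {20: round_20, 21: round_21, 22: round_22}

# 4,384 perfect responders at the start of the sequence (figure from the text).
cohorts = assign_bonus_rounds(range(4384))
sizes = {rnd: len(ids) for rnd, ids in cohorts.items()}
print(sizes)
```

Because each respondent lands in exactly one cohort, respondents not yet offered the bonus in a given round can serve as a comparison group for those who were.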

NLS previously implemented a “Thank You Bonus” in Rounds 12 through 14 of the NLSY97 for respondents who did not miss a round in the first 11, and it showed an increase in completion rates, both in the round the bonus was offered and in the two subsequent rounds.

In Round 20, the completion rate was only slightly higher among the perfect responders who received the bonus than among those who did not (96.51% vs. 96.13%). Although this immediate impact is lower than that of the thank you bonus in Rounds 12-14, we will continue to track its effectiveness in future rounds, since the previous experience indicated an increase in completion rates over several rounds.

Segment 2: Completed Round 20: Not Perfect or Initially Refused

Segment 2 includes responders who participated in Round 20 but have missed one or more earlier rounds, as well as responders who initially refused but later participated in Round 20. Non-perfect responders will receive the standard base incentive of $50 in addition to any final push incentives for which they may qualify. Initial refusers will receive an additional $25 incentive in Round 21 (see the New Initial Refuser/Hard to Reach Incentive section below for details on the proposed experiment).

Segment 3: Missed Round 20

Missed Round 20 Incentive

NLS will continue to offer a graduated missed round incentive to encourage attriters to return to the study. Anyone returning after missing only Round 20 will receive a $40 missed round incentive in Round 21. The incentive increases by $20 for each additional round out, up to four rounds out; for example, a respondent returning after four rounds out would receive a $100 missed round incentive ($40 + 3*$20) in addition to the $50 base incentive. The missed round incentive for those out for 5 or more rounds would be $150. This graduated structure accounts both for the fact that the difficulty of gaining participation increases as the non-completion period increases, and for the fact that those returning from a long lapse in response will be asked to complete a longer interview (each interview traces the respondent’s job history back to the last completed interview). NLSY97 implemented the same structure of graduated missed round incentives in Round 20.
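The graduated schedule can be written as a short function. This is a sketch of the amounts stated in the paragraph above; the function and constant names are hypothetical, and `rounds_out` is assumed to mean the number of consecutive rounds missed immediately before Round 21.

```python
BASE_INCENTIVE = 50  # base incentive for every Round 21 completer, in dollars

def missed_round_incentive(rounds_out: int) -> int:
    """Missed round incentive in dollars for a respondent out `rounds_out` rounds."""
    if rounds_out < 0:
        raise ValueError("rounds_out must be non-negative")
    if rounds_out == 0:
        return 0                       # completed Round 20: no missed round incentive
    if rounds_out >= 5:
        return 150                     # flat amount for those out 5 or more rounds
    return 40 + 20 * (rounds_out - 1)  # $40 for one round out, plus $20 per extra round

for n in range(6):
    amount = missed_round_incentive(n)
    print(n, amount, BASE_INCENTIVE + amount)
```

Adding the base incentive to each amount gives the minimum payment for a returning respondent before any final push incentive is considered.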

Table 3 shows the completion rates of respondents by these completion groups for Round 17, when only the first tier of missed round incentives (out at least one round) was offered, and Rounds 18-20, which have employed a graduated structure like the one offered in Round 21. The results from Rounds 18 and 19 showed increased response rates in the higher tiers, suggesting that the increased payments targeted to respondents who had been out multiple rounds were effective. Results from Round 20 were less suggestive of this effect, although we note that the composition of these groups changes over time, so comparisons across rounds may not be indicative of the effect of a given incentive. We will continue to monitor these trends in subsequent rounds.

Table 3: Completion Rates by Prior Response Behavior, Rounds 17-20

| Round | Completed last two rounds | Responded to last round, out the round before (i.e., returners) | Missed prior round only (i.e., out one round) | Out two rounds | Out 3 rounds | Out 4 rounds | Missed all of the prior 5 rounds |
|---|---|---|---|---|---|---|---|
| R17 | 94.81% | 67.83% | 45.47% | 32.79% | 16.13% | 6.32% | 11.09% |
| R18 | 91.41% | 61.54% | 38.76% | 27.43% | 30.56% | 20.59% | 11.09% |
| R19 | 94.33% | 72.25% | 56.11% | 38.40% | 29.90% | 26.67% | 17.54% |
| R20 | 96.26% | 81.61% | 46.50% | 26.33% | 13.92% | 19.35% | 9.63% |

Incentivizing completion among those who refused to respond in earlier waves has been found to be effective in the Survey of Income and Program Participation (Martin et al. 2001), the Health and Retirement Study (Rodgers 2011), and the Survey of Program Dynamics (Creighton et al. 2011). The experience of the NLS has been consistent with these positive results. For example, an experiment in Round 18 showed that the completion rate for those receiving the missed round incentive was roughly double that of the control group, who did not receive the incentive. Results of the NIR bonus experiment in Round 18 are shown in Table 4; Panel A shows this roughly twofold difference in Round 18 completion rates. NLS then examined whether the increase in completes carried over to the round after the original bonus. Panel B shows the Round 19 completion rates for the Round 18 experimental groups. The results show a potential small effect of 2.38 percentage points. Panel C shows continued fade out in Round 20, with a difference of less than 1 percentage point remaining between the control and treatment groups.

Table 4: Big NIR Bonus Experiment Results

| NIR Bonus Received | Total Respondents | Completed Cases | % Complete (Total) |
|---|---|---|---|
| Panel A: Round 18 Completion | | | |
| Control | 389 | 33 | 8.48% |
| Treatment | 170 | 27 | 15.88% |
| Panel B: Round 19 Completion | | | |
| Control | 389 | 80 | 20.56% |
| Treatment | 170 | 39 | 22.94% |
| Panel C: Round 20 Completion | | | |
| Control | 389 | 66 | 16.97% |
| Treatment | 170 | 30 | 17.65% |
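The treatment-control gaps discussed for Table 4 can be recomputed from the case counts. The sketch below uses only the counts reported in the table; the variable and function names are hypothetical. Rates computed from the counts can differ from the printed percentages in the last decimal place, depending on how the printed values were rounded.

```python
def completion_rate(completes: int, total: int) -> float:
    """Completion rate in percent."""
    return 100.0 * completes / total

# (completed cases, total respondents) for each group, from Table 4.
panels = {
    "Round 18": {"control": (33, 389), "treatment": (27, 170)},
    "Round 19": {"control": (80, 389), "treatment": (39, 170)},
    "Round 20": {"control": (66, 389), "treatment": (30, 170)},
}

# Percentage-point difference: treatment rate minus control rate.
differences = {
    name: completion_rate(*groups["treatment"]) - completion_rate(*groups["control"])
    for name, groups in panels.items()
}

for name, diff in differences.items():
    print(f"{name}: {diff:.2f} percentage points")
```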

Token In-Kind Incentive

NLS plans to use in-kind gifts such as a cup of coffee, a reusable cup, or a pen. As in recent rounds of the NLSY97, we propose to use a maximum value of $10 for as many as 2,000 respondents and propose to use them in mailings or in door hangers. Many NLS interviewers report that having something to offer respondents, such as a monetary incentive, additional in-kind offering, or new conversion materials, allows them to open the dialogue with formerly reluctant respondents. They find it useful for gaining response if they have a variety of options to respond to the particular needs, issues, and objections of the respondent. NLS has used similar gifts in many recent rounds to strengthen the ongoing relationships between the program and its respondents. NLS would like to include a token in a mailing that either makes the envelope heavier or comes in a box, and thus may induce the sample member to open the mail, read the message, engage with the project through calls to the 800 number, or respond when the interviewer calls or stops by. Decisions regarding who receives this incentive would be made by a central office; interviewers would not have discretion over which respondents receive this incentive. Records will be maintained of which respondents received which gifts, to allow for future analysis.

Final Push Incentive

NLS will also employ additional incentives in the latter portions of the fielding period to induce reluctant respondents in all three segments to complete the survey. Starting after the first 12 weeks of the round, cases that have had at least seven contact attempts or at least one refusal will be offered a final push incentive of $25. To facilitate a timely close to the fielding, starting six months after the start of fielding, all cases will be offered this incentive. By the time of the final push offer, respondents are typically quite challenging, requiring many outreach attempts by telephone, mail or even in person. Even small reductions in the necessary outreach can offset the additional $25 conditional payment.
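The offer rule in the preceding paragraph can be sketched as a simple predicate. The names are hypothetical, `weeks_elapsed` is assumed to count completed weeks since fielding began, and "six months" is approximated as 26 weeks, which is an assumption rather than a figure from this statement.

```python
FINAL_PUSH_AMOUNT = 25     # dollars
TARGETED_START_WEEK = 12   # targeted offers begin after the first 12 weeks
UNIVERSAL_START_WEEK = 26  # assumption: "six months" taken as 26 weeks

def final_push_offered(weeks_elapsed: int, contact_attempts: int, refusals: int) -> bool:
    """Return True if a pending case would be offered the final push incentive."""
    if weeks_elapsed >= UNIVERSAL_START_WEEK:
        return True   # late in fielding, all remaining cases are offered it
    if weeks_elapsed >= TARGETED_START_WEEK:
        # Targeted phase: at least seven contact attempts or at least one refusal.
        return contact_attempts >= 7 or refusals >= 1
    return False      # too early in the fielding period

print(final_push_offered(weeks_elapsed=14, contact_attempts=9, refusals=0))
```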

This type of incentive has been shown to be effective in the Panel Study of Income Dynamics, with little effect on noncontact rates (McGonagle and Freedman 2016). In addition, NLS conducted an experiment in Round 29 of the NLSY79 to evaluate the effectiveness of the final push on the NLSY79 sample. This experiment was conducted by randomly assigning interviewers into two separate groups. The first group had their cases eligible for the final push and enhanced final push at the traditional time, while the second group had their cases eligible for the final push and enhanced final push 6 weeks later. This staggered implementation of the final push allowed an opportunity to evaluate the effectiveness of the final push by comparing the trajectories of completion rates and effort for cases eligible for final push to randomly assigned cases that were not eligible at the time.

If the final push incentive had no effect on completion, then NLS would expect cases randomly assigned to be eligible for final push to have completion rates similar to those of cases eligible for final push 6 weeks later. However, NLS found that final push incentives increased overall completion rates by about three percentage points. Significant increases in response were seen among each type of response probability group. Final push incentives almost doubled the completion rate for those in the high response probability group (see Table 5).

Table 5: Final Push Evaluation Results (six-week treatment period)

| Response Probability Group | Final Push Experiment Group | N | Completes | Pending | Completion Rate |
|---|---|---|---|---|---|
| High | Treatment | 202 | 57 | 127 | 28.22% |
| | Control | 260 | 37 | 205 | 14.23% |
| Mid | Treatment | 451 | 84 | 321 | 18.63% |
| | Control | 479 | 64 | 355 | 13.36% |
| Low | Treatment | 955 | 29 | 708 | 3.04% |
| | Control | 742 | 14 | 582 | 1.89% |
| Total | Treatment | 1,608 | 170 | 1,156 | 10.57% |
| | Control | 1,481 | 115 | 1,142 | 7.77% |

*All statistics in the table reflect only the experimental time period from 3/29/2021-5/9/2021 when the treatment group was eligible for the final push incentive and the control group was not.

These findings, in conjunction with the results from the Round 20 NLSY97 enhanced final push incentive experiment discussed below, further support the effectiveness of these later round incentives.

Enhanced Final Push

In order to support sample representativeness, NLS plans to increase the final push incentive for respondents in all three segments whose completion rate significantly lags the overall sample at a given point in time. NLS describes this increase as an enhanced final push incentive. Individuals offered the enhanced final push incentive can receive a $50 final push (instead of $25) by completing the survey. Individuals not having completed an interview in the last five rounds will be ineligible for the enhanced final push.

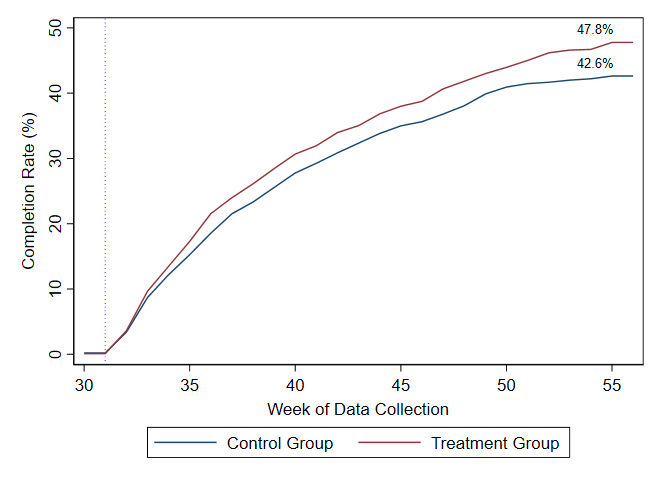

In Round 20, NLS conducted an experiment to evaluate the effectiveness of the enhanced final push incentive. The experiment found that the enhanced final push was effective in increasing completion rates during the data collection period. At the end of the first month of implementation, the treatment group had a completion rate of 13.5%, compared with 12.2% for the control group. The gap in completion rates between treatment and control respondents continued to grow slowly over the course of data collection, as shown by the widening gap between treatment and control completes in Figure 2. At the end of data collection, the completion rate for the treatment group was 5.2 percentage points higher than the completion rate for the control group, with 47.8% in the treatment group completing compared to 42.6% in the control group.

Figure 2: Results of the Round 20 Enhanced Final Push Experiment

In Round 21, NLS will keep the selection process of subgroups eligible for the enhanced final push similar to previous rounds. Subgroups are defined by the following measures in the most recent completed interview going back to Round 12: gender, race/ethnicity, score on the Armed Forces Qualifying Test (a measure of cognitive skill), weeks worked in the prior calendar year, educational attainment, household income in the prior calendar year, residence in a metropolitan area, and presence of biological children in the household, and each of these with gender and race/ethnicity. The subgroup definitions are based on variables that describe or affect labor market activity, the central topic of the NLSY97.

Given the success of the enhanced final push as shown in Round 20, we propose expanding the cutoff to include anyone who meets the eligibility criteria, making roughly 2,000 respondents eligible for the enhanced final push (an increase of about 1,000).

To assess the potential cost-effectiveness of this incentive, note that at the end of data collection in the R20 enhanced final push experiment, the completion rate for the treatment group (47.8%) was 5.2 percentage points higher than the completion rate for the control group (42.6%). This translates into an additional 48 completes attributable to the enhanced final push (450 completes in treatment, 402 in control). At a cost of $11,250, this translates to $234.38 per additional respondent. This amount translates to roughly 6.5 hours of additional interviewer time. Given that the enhanced final push is specifically targeted at difficult-to-reach respondents, for whom interviewers often spend substantial time on outreach to encourage completion, this represents a reasonable cost per additional completed case.
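The cost-per-additional-complete figure can be reproduced as follows. This sketch assumes that the $11,250 cost is the additional $25 paid to each of the 450 treatment completers, and that the treatment and control groups were of equal size so that completes can be differenced directly; both are inferences from the figures above, and the function name is hypothetical.

```python
def cost_per_additional_complete(treatment_completes: int,
                                 control_completes: int,
                                 extra_payment: float):
    """Return (total extra cost, additional completes, cost per additional complete)."""
    additional = treatment_completes - control_completes
    if additional <= 0:
        raise ValueError("treatment must yield more completes than control")
    total_cost = treatment_completes * extra_payment  # every treatment completer is paid
    return total_cost, additional, total_cost / additional

total_cost, additional, unit_cost = cost_per_additional_complete(450, 402, 25)
print(total_cost, additional, round(unit_cost, 2))
```

The unit cost is high relative to the $25 payment because the extra amount is paid to all treatment completers, including those who would have completed without it.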

New Initial Refuser/Hard to Reach Incentive

Because extensive information about sample members and their prior response behavior is known in longitudinal surveys, the NLS and other longitudinal surveys provide ideal opportunities for tailoring incentives, including incentives based on the level of response or level of effort required to get a completion in prior rounds (Laurie and Lynn, 2008). Relatedly, the Health and Retirement Study has provided larger incentives for those who initially refuse during a round or require additional persuasion and outreach efforts, but has not experimented directly with different incentive amounts for this group (Lengacher et al., 1995). To explore incentives for sample members who initially refused or required extensive outreach and persuasion, NLS will experiment with a new $25 incentive for Round 21. Two groups of respondents who completed in Round 20 will be considered eligible for the experiment: a) respondents who initially refused in Round 20 but went on to complete and b) respondents who needed at least 40 outreach attempts in order to complete. Prior round data show that the Round 20 completion rate was lower among those who refused at least once in Round 19 than among those who never refused (68.6% vs. 91.8%) and lower among those who needed 40 or more outreach attempts than among those with fewer than 40 attempts (72.4% vs. 98.3%). This incentive would target these prior round refusers and hard to reach respondents to try to increase their completion rate this round and potentially decrease the effort needed to get a completion.

In order to experimentally evaluate the efficacy of this incentive, NLS will randomly assign half of the sample members within each group to treatment and half to control, stratifying if possible by eligibility group (initial refusers, 40+ outreach attempts) and response probability group. The effect of the incentive will be evaluated by comparing the response rates of those receiving the incentive to those who do not; separate comparisons for the initial refusers will be explored as the data permit.

Table 6: Initial Refusers and Hard to Reach Incentive Assignment

| | Initial Refusers | 40+ Outreach Attempts | Total |
|---|---|---|---|
| R20 response rate | 68.6 | 72.4 | 71.6 |
| Sample Size | 358 | 1,263 | 1,621 |
| Treatment Group | 179 | 631 | 810 |
| Control Group | 179 | 632 | 811 |
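The half-and-half assignment within eligibility groups can be sketched as a stratified shuffle. This is an illustration only: the function name, seed, and placeholder identifiers are hypothetical, and the sketch stratifies by eligibility group alone, whereas the text also mentions stratifying by response probability group where possible.

```python
import random
from collections import Counter, defaultdict

def assign_treatment(members, seed=0):
    """Assign half of each stratum to treatment and the rest to control.

    `members` is an iterable of (respondent_id, stratum) pairs.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    by_stratum = defaultdict(list)
    for respondent_id, stratum in members:
        by_stratum[stratum].append(respondent_id)

    assignment = {}
    for stratum in sorted(by_stratum):
        ids = by_stratum[stratum]
        rng.shuffle(ids)
        cut = len(ids) // 2    # with an odd stratum, control gets the extra case
        for respondent_id in ids[:cut]:
            assignment[respondent_id] = "treatment"
        for respondent_id in ids[cut:]:
            assignment[respondent_id] = "control"
    return assignment

# Placeholder IDs sized to match the two eligibility groups in Table 6.
members = [(f"R{i}", "initial_refuser") for i in range(358)]
members += [(f"H{i}", "hard_to_reach") for i in range(1263)]
assignment = assign_treatment(members)
counts = Counter(assignment.values())
print(counts["treatment"], counts["control"])
```

Splitting within each stratum keeps the two arms balanced on eligibility group, which is what allows the separate comparison for initial refusers mentioned above.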

Summary of Incentive Components

Table 7 summarizes the incentive components described above, mapping them to the corresponding sample segments. Segment 1, “perfect responders,” includes some respondents who will be randomly chosen to be offered the “Thank You Bonus” and some that will not. Segment 2 includes those who completed Round 20 and are not “perfect” responders and those who completed Round 20 but initially refused during that round. Segment 3 includes several subgroups who will be offered different amounts of the missed round incentive. All segments may include some respondents who will receive the final push and/or the enhanced final push incentives.

Table 7. Summary of Round 21 Incentive Structure

| | Segment 1: “Perfect” | Segment 2: Completed R20, not “perfect” | Segment 2: Completed R20, initially refused | Segment 3: Missed R20 only | Segment 3: Missed R20 and R19 only | Segment 3: Missed R20, R19, and R18 only | Segment 3: Missed R20, R19, R18, and R17 only | Segment 3: Missed all of the prior 5 rounds |
|---|---|---|---|---|---|---|---|---|
| Base | $50 | $50 | $50 | $50 | $50 | $50 | $50 | $50 |
| Thank You* | $25 | - | - | - | - | - | - | |
| Initial Refuser | - | - | $25 | - | - | - | - | - |
| Missed Round | - | - | - | $40 | $60 | $80 | $100 | $150 |
| Final Push | $25 | $25 | $25 | $25 | $25 | $25 | $25 | $25 |
| Enhanced Final Push | $25 | $25 | $25 | $25 | $25 | $25 | $25 | |
| Min | $50 | $50 | $75 | $90 | $110 | $130 | $150 | $200 |
| Max | $125 | $100 | $125 | $140 | $160 | $180 | $200 | $225 |
| Typical | $50 | $50 | $75 | $90 | $110 | $130 | $150 | |

| Incentive Enhancements | |
|---|---|
| Thank You Bonus | $25 for an estimated 1,400 completed interviews out of roughly 1,450 eligible respondents. |
| Final Push | $25 for an estimated 1,000 respondents completing after the start of final push. |
| Enhanced Final Push | $25 for an estimated 500 completed interviews out of 2,000 eligible respondents. |
| Token | Up to $10 per respondent for up to 2,000 respondents may be spent on in-kind token items. The distribution of these respondents across the almost 9,000 respondents in the above four groups is not known ex ante. |
| Initial Refuser/ Hard to Reach Incentive | $25 for an estimated 703 completed interviews out of 810 eligible respondents (the 1,621 eligible respondents who refused in R20 or took 40 or more attempts before completing the interview would be divided equally among the treatment and control group). |

Incentive Payments and Costs

Respondents will be able to receive their payments electronically through PayPal or Zelle. Electronic payments were offered to all respondents in Rounds 18-20. NLS proposes to offer them again to all respondents. Table 8 lists the total cost of incentives by respondent pool.

Table 8. Round 21 Incentive Costs by Respondent Pool (based on approximately 6,570 expected total completes)

| Incentive Type | Segments 1 and 2: Completed R19 | Segment 3: Missed R19, Completed R18 | Segment 3: Missed R19 and R18, Completed R17 | Segment 3: Missed R19, R18, R17 | Segment 3: Missed R19, R18, R17, R16 | Segment 3: Missed all of the prior 5 rounds |
|---|---|---|---|---|---|---|
| Sample size* | 6,713 | 638 | 258 | 261 | 163 | 951 |
| Expected completion rate | 90.82% | 44.40% | 23.24% | 11.89% | 16.22% | |
| Expected completes | 6,097 | 283 | 60 | 31 | 26 | |
| Base | $50 | $50 | $50 | $50 | $50 | $50 |
| Missed Round(s) | | $40 | $60 | $80 | $100 | |
| Total from Base and Missed Rounds | $304,850 | $25,470 | $6,600 | $4,030 | $3,900 | $14,800 |
| Total from Above Row | $359,650 | | | | | |

| Incentive Enhancements | |
|---|---|
| Thank You Bonus | $35,000 |
| Final Push | $25,000 |
| Enhanced Final Push | |
| Token In-Kind | Maximum $20,000 |
| Initial Refuser/ Hard to Reach Incentive | $17,575 |
| Incentive Enhancement Total | |
| Total | |

* Note: Sample sizes exclude deceased and blocked cases. Expected completes based on slight reduction from Round 20 completes total. As a point of reference, total incentive costs in Round 20 were roughly $465,000 (including roughly $25,000 on a one-time supplement during the Covid-19 pandemic that will not be repeated).
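The base and missed round totals in Table 8 follow from multiplying expected completes by the per-respondent payment. The sketch below rechecks that arithmetic using the figures as printed; the variable names are hypothetical. For the final column the table leaves expected completes blank, so its printed subtotal of $14,800 is used directly rather than recomputed.

```python
BASE = 50  # base incentive per completed interview, in dollars

# (column label, expected completes, missed round incentive), from Table 8.
columns = [
    ("Completed R19", 6097, 0),
    ("Missed R19, Completed R18", 283, 40),
    ("Missed R19 and R18, Completed R17", 60, 60),
    ("Missed R19, R18, R17", 31, 80),
    ("Missed R19, R18, R17, R16", 26, 100),
]

subtotals = {label: completes * (BASE + missed) for label, completes, missed in columns}

# Expected completes are blank in the table for this group; use the printed subtotal.
OUT_FIVE_ROUNDS_SUBTOTAL = 14_800

base_and_missed_total = sum(subtotals.values()) + OUT_FIVE_ROUNDS_SUBTOTAL

# Enhancement costs that the summary table states as completes x $25.
thank_you_cost = 1400 * 25
final_push_cost = 1000 * 25
initial_refuser_cost = 703 * 25

print(base_and_missed_total, thank_you_cost, final_push_cost, initial_refuser_cost)
```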

10. Describe any assurance of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

a. BLS Confidentiality Policy

The information that NLSY97 respondents provide is protected by the Privacy Act of 1974 (DOL/BLS – 17 National Longitudinal Survey of Youth 1997 (67 FR 16818)) and the Confidential Information Protection and Statistical Efficiency Act (CIPSEA). CIPSEA is shown in attachment 3.

CIPSEA safeguards the confidentiality of individually identifiable information acquired under a pledge of confidentiality for exclusively statistical purposes by controlling access to, and uses made of, such information. CIPSEA includes fines and penalties for any knowing and willful disclosure of individually identifiable information by an officer, employee, or agent of the BLS.

Based on this law, the BLS provides respondents with the following confidentiality pledge/informed consent statement in the advance letter:

We want to reassure you that your confidentiality is protected by law. In accordance with the Confidential Information Protection and Statistical Efficiency Act, the Privacy Act, and other applicable Federal laws, the Bureau of Labor Statistics, its employees and agents, will, to the full extent permitted by law, use the information you provide for statistical purposes only, will hold your responses in confidence, and will not disclose them in identifiable form without your informed consent. All the employees who work on the survey at the Bureau of Labor Statistics and its contractors must sign a document agreeing to protect the confidentiality of your data. In fact, only a few people have access to information about your identity because they need that information to carry out their job duties.

Some of your answers will be made available to researchers at the Bureau of Labor Statistics and other government agencies, universities, and private research organizations through publicly available data files. These publicly available files contain no personal identifiers, such as names, addresses, Social Security numbers, and places of work, and exclude any information about the states, counties, metropolitan areas, and other, more detailed geographic locations in which survey participants live, making it much more difficult to figure out the identities of participants. Some researchers are granted special access to data files that include geographic information, but only after those researchers go through a thorough application process at the Bureau of Labor Statistics. Those authorized researchers must sign a written agreement making them official agents of the Bureau of Labor Statistics and requiring them to protect the confidentiality of survey participants. Those researchers are never provided with the personal identities of participants. The National Archives and Records Administration and the General Services Administration may receive copies of survey data and materials because those agencies are responsible for storing the Nation’s historical documents.

BLS policy on the confidential nature of respondent identifiable information (RII) states that “RII acquired or maintained by the BLS for exclusively statistical purposes and under a pledge of confidentiality shall be treated in a manner that ensures the information will be used only for statistical purposes and will be accessible only to authorized individuals with a need-to-know.”

By signing a BLS Agent Agreement, all authorized agents employed by the BLS, the prime contractor and associated subcontractors pledge to comply with the Privacy Act, CIPSEA, other applicable federal laws, and the BLS confidentiality policy. No interviewer or other staff member is allowed to see any case data until the BLS Agent Agreement, BLS Confidentiality Training certification, and Department of Labor Information Systems Security Awareness training certification are on file. Respondents will be provided a copy of the questions and answers shown in attachment 4 about uses of the data, confidentiality, and burden. These questions and answers will appear on the back of the letter that respondents will receive in advance of the Round 21 interviews. Attachment 4 also shows the combination advance letter and locating card for Round 21.

b. Contractor Confidentiality Safeguards

NLS contractors have safeguards to provide for the security of NLS data and the protection of the privacy of individuals in the sampled cohorts. These measures are used for the NLSY97 as well as the other NLS cohorts. Safeguards for the security of data include:

1. Like all federal systems, NLS and its contractors follow the National Institute of Standards and Technology (NIST) guidelines found in Special Publication 800-53 to ensure that appropriate security requirements and controls are applied to the system. This framework provides guidance, based on existing standards and best practices, for organizations to better understand, manage and reduce cybersecurity risk.

2. Storage of printed survey documents in locked space.

3. Protection of computer files against access by unauthorized individuals and groups. Procedures include using passwords, high-level “handshakes” across the network, data encryption, and fragmentation of data resources. As an example of fragmentation, should someone intercept data files over the network and defeat the encryption of these files, the meaning of the data files cannot be extracted except by referencing certain cross-walk tables that are neither transmitted nor stored on the interviewers’ laptops. Not only are questionnaire response data encrypted, but the entire contents of interviewers’ laptops are now encrypted. Interview data are frequently removed from laptops in the field so that only information that may be needed by the interviewer is retained.

4. Protection of computer files against access by unauthorized persons and groups. Especially sensitive files are secured via a series of passwords to restricted users. Access to files is strictly on a need-to-know basis. Passwords change every 90 days.

5. To ensure that NORC and its subcontractor, CHRR, comply with the Federal Information Security Modernization Act of 2014 (FISMA) and adequately monitor all cybersecurity risks to NLS assets and data, the NLS system undergoes, in addition to regular self-assessments, a full NIST 800-53 audit every 3 years by an outside independent cybersecurity auditing firm.

Protection of the privacy of individuals is accomplished through the following steps:

Oral permission for the interview is obtained from all respondents, after the interviewer ensures that the respondent has been provided with a copy of the appropriate BLS confidentiality information and understands that participation is voluntary.

Information identifying respondents is separated from the questionnaire and placed into a nonpublic database. Respondents are then linked to data through identification numbers.

The public-use version of the data, available on the Internet, masks data that are of sufficient specificity that individuals could theoretically be identified through some set of unique characteristics.

Other data files, which include variables on respondents’ State, county, metropolitan statistical area, zip code, and census tract of residence and certain other characteristics, are available only to researchers who undergo a review process established by BLS and sign an agreement with BLS that establishes specific requirements to protect respondent confidentiality. These agreements require that any results or information obtained as a result of research using the NLS data will be published only in summary or statistical form so that individuals who participated in the study cannot be identified. These confidential data are not released to researchers without express written permission from NLS and are not available on the public use internet site.

NLS has attempted to frame questions of a more private nature so that individuals overhearing only the respondent’s answers would not be able to infer content.

In Round 21, the project team will continue several training and procedural changes that were begun in Round 11 to increase protection of respondent confidentiality. These include an enhanced focus on confidentiality and data security in training materials, clearer instructions in the Field Interviewer Manual on what field interviewers may or may not do when working cases, continued reminders of respondent confidentiality in field communications throughout the field period, and formal separation procedures when interviewers complete their project assignments. Also, online and telephone respondent locating activities have been moved from geographically dispersed field managers to locating staff in central offices.

Date of birth will be verified at the beginning of the interview, thus preventing the interviewer from starting the interview with the wrong person and recognizing the error only later in the interview, when the preloaded data are questioned. This check has been in place since Round 16.

11. Provide additional justification for any questions of a sensitive nature, such as sexual behavior and attitudes, religious beliefs, and other matters that are commonly considered private. This justification should include the reasons why the agency considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

Continuing the practice of the last few rounds of the NLSY97, the Round 21 questionnaire includes a variety of items that permit respondents to provide more qualitative information about themselves. Informal feedback from interviewers and respondents indicates that respondents find this type of subjective data more informative about who they are than the behavioral data that are the mainstay of the NLSY97 questionnaire. The items selected for self-description are all hypothesized in the research literature to be predictive of or correlated with labor market outcomes. There are several broad sets of questions in the NLSY97 data-collection instruments that may be considered sensitive. NLS addresses each of these categories separately below.

a.) Anti-Social Behavior

The educational and labor force trajectory of individuals is strongly affected by their involvement in delinquent and risk-taking behaviors, criminal activity, and alcohol and drug use. There is widespread interest in collecting data on such behaviors. The challenge, of course, is to obtain accurate information on activities that are socially unacceptable or even illegal. Questions on these activities have been asked in the self-administered portions of the NLSY97 through Round 18, and asked by interviewers directly for the 90 to 95 percent of interviews conducted by telephone in Rounds 18, 19 and 20. Most of these items will again be asked by interviewers in Round 21 for those interviews conducted by telephone.

Criminal Justice. The longitudinal collection of arrests, convictions, and incarceration permits examination of the effects of these events on employment activity. Through Round 18, the NLSY97 collected data on criminal activity, permitting the study of whether and how patterns of criminal activity relate to patterns of employment. Using data on both self-reported behavior and official disciplinary and court actions allows NLSY97 users to separate the effects of criminal activity that led to an arrest or other legal action from criminal activity that remained unpunished. Beginning in Round 19, NLS stopped asking the questions on criminal activity but has maintained the self-reports on arrests, convictions, and time served.

Experiences with the correctional system. The Round 21 questionnaire continues to ask several questions on incarceration and parole that were added in Round 12. These include detailed questions on parole and probation status as well as violations of that status, questions about experiences and services received while incarcerated, and questions about experiences and behaviors since release from incarceration. The questions on experiences and services received while incarcerated will be asked of currently incarcerated respondents and those who were incarcerated and released since the last interview. Respondents who were released from incarceration since the last interview also will be asked about their experiences and behaviors since they were released. For those respondents who are interviewed in person, these questions will continue to appear in the self-administered section, but for those interviewed by telephone they will be asked by interviewers directly.

b.) Mental health

The literature linking mental health with various outcomes of interest to the NLSY97, including labor force participation, is fairly well established. The Round 19 and Round 20 questionnaires included questions on how many times the respondent has been treated for emotional, mental, or psychiatric problems and how many times the respondent missed work or activities because of such problems in the past year, as well as the seven-item Center for Epidemiologic Studies Depression Scale (CESD), a screening instrument for depression, and the Generalized Anxiety Disorder Screener-7 (GAD-7), a screening instrument for anxiety. NLS proposes to ask these questions again in Round 21. Note that NLS also collected the CESD measure for the NLSY97 sample in the interim supplement fielded between Rounds 19 and 20. Repeated collection of this measure at different points around the Coronavirus pandemic will be valuable for tracing the effect of the pandemic on mental health and on other outcomes that are mediated through mental health.

c.) Income, Assets, and Program Participation

The questionnaire asks all respondents about their income from wages, salaries, and other income received in the last calendar year. Other income is collected using a detailed list of income sources such as self-employment income, receipt of child support, interest or dividend payments, or income from rental properties. Respondents also are asked about their participation in government programs. Included are specific questions regarding a number of government assistance programs such as Unemployment Compensation, AFDC/TANF/ADC, and food stamps. For Unemployment Compensation, respondents will be asked about their application for Unemployment Insurance, as well as participation and income.

In addition to income, respondents are periodically asked about current asset holdings. Questions include the market value of any residence or business, whether the respondent paid property taxes in the previous year, and the amount owed on motor vehicles. Other questions ask about the respondent’s current checking and savings account balances, the value of various assets such as stocks or certificates of deposit, and the amount of any loans of at least $200 that the respondent received in the last calendar year. To reduce respondent burden, the asset questions are not asked of each respondent in every round. These questions have been asked in the first interview after the respondent turns 18, and the first interviews after the respondent’s 20th, 25th, 30th, and 35th birthdays. Because asset accumulation is slow at these young ages, this periodic collection is sufficient to capture changes in asset holdings. In Rounds 17 through 20, respondents were asked to report their assets as of age 35. Collection of assets as of age 40 began in Round 19 for the oldest members of the cohort.

Given the high fraction of household wealth associated with home ownership, the NLSY97 questionnaire collects home ownership status and (net) equity in the home from respondents each year that they are not scheduled for the full assets module. In Round 14 NLS added a question on present value of the home. NLS also asks respondents who owned a house or other dwelling previously and no longer live there about what happened to their house or dwelling. To allow for respondent-interviewer rapport to build before these potentially sensitive items are addressed, the housing value questions were moved from the (first) Household Information section to the Assets section, which occurs late in the interview. This question series also permits the respondent to report the loss of a house due to foreclosure.

The NLSY97 questionnaires in Rounds 1-13 collected month-by-month participation status information for several government programs. These questions were substantially reduced beginning with the Round 14 interview, so that monthly data are no longer collected except from respondents who did not complete the Round 13 interview. The relatively low levels of participation reported did not seem to justify the respondent burden imposed by these questions. More detailed questions about receipt of income from government programs are now asked in the Income section with other sources of income.

d.) Financial Health

To get a better understanding of the financial well-being of the respondents, in Round 21 NLS continues some of the questions that were introduced in Round 10 about a respondent’s financial condition. NLS asks a set of questions to measure the financial distress of the respondents in the past 12 months. In particular, NLS asks whether respondents have been 60 days late in paying their mortgage or rent, and whether they have been pressured to pay bills by stores, creditors, or bill collectors. In addition, NLS asks respondents to pick the response that best describes their financial condition from the following list:

very comfortable and secure

able to make ends meet without much difficulty

occasionally have some difficulty making ends meet

tough to make ends meet but keeping your head above water

in over your head

The goal of these questions is to understand better the financial status of these respondents and how this status affects and is affected by their labor market activities.

Respondents are free to refuse to answer any survey question, including the sensitive questions described above. NLS’ experience has been that participants recognize the importance of these questions and rarely refuse to answer.

12. Provide estimates of the hour burden of the collection of information. The statement should:

Indicate the number of respondents, frequency of response, annual hour burden, and an explanation of how the burden was estimated. Unless directed to do so, agencies should not conduct special surveys to obtain information on which to base hour burden estimates. Consultation with a sample (fewer than 10) of potential respondents is desirable. If the hour burden on respondents is expected to vary widely because of differences in activity, size, or complexity, show the range of estimated hour burden, and explain the reasons for the variance. Generally, estimates should not include burden hours for customary and usual business practices.

If this request for approval covers more than one form, provide separate hour burden estimates for each form.

Provide estimates of annualized cost to respondents for the hour burdens for collections of information, identifying and using appropriate wage rate categories. The cost of contracting out or paying outside parties for information collection activities should not be included here. Instead, this cost should be included in Item 14.

The Round 21 field effort will seek to interview each respondent identified when the sample was selected in 1997. NLS will attempt to contact approximately 8,720 sample members who are not known to be deceased. BLS expects that interviews with approximately 6,570 of those sample members will be completed. The content of the interview will be similar to the interview in Round 20, with a small number of additions and deletions. Based upon interview length in past rounds, NLS estimates the interview time will average about 74 minutes.
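As an illustration only, the figures in the preceding paragraph can be combined into an approximate hour burden for the main interview. The sketch below assumes the simple formula of expected completed interviews multiplied by average interview length; the function and variable names are hypothetical, and the calculation covers the main interview alone, so it may differ from the official burden estimate, which can include other components such as the interim contact.

```python
# Figures quoted in the paragraph above.
SAMPLE_MEMBERS_CONTACTED = 8_720    # sample members not known to be deceased
EXPECTED_COMPLETES = 6_570          # interviews expected to be completed
AVG_MINUTES_PER_INTERVIEW = 74      # estimated average interview length


def burden_hours(completes: int, minutes_each: float) -> float:
    """Hypothetical helper: total respondent hours for completed interviews."""
    return completes * minutes_each / 60


# Approximate main-interview burden, in hours.
total_hours = burden_hours(EXPECTED_COMPLETES, AVG_MINUTES_PER_INTERVIEW)

# Share of contacted sample members expected to complete an interview.
completion_rate = EXPECTED_COMPLETES / SAMPLE_MEMBERS_CONTACTED

print(f"Approximate main-interview burden: {total_hours:,.0f} hours")
print(f"Expected completion rate: {completion_rate:.1%}")
```

Under these assumptions the main interview accounts for roughly 8,100 respondent hours, with about three-quarters of contacted sample members expected to complete an interview.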

Interview length will vary across respondents. For example, the core of the interview covers schooling and labor market experience. Naturally, respondents vary in the number of jobs they have held, the number of schools they have attended, and their experiences at work and at school. The aim is to be comprehensive in the data NLS collects, and this leads to variation in the time required for the respondent to remember and relate the necessary information to the interviewer. For these reasons, the timing estimate is more accurate as an average across respondents than as a prediction for any individual case.

The estimated burden in Round 21 includes an allowance for attrition that takes place during the course of longitudinal surveys. To minimize the effects of attrition, NLS will seek to complete interviews with living respondents from Round 1 regardless of whether the sample member completed an interview in intervening rounds.