National Household Education Survey 2023 (NHES:2023) Full-scale Data Collection

OMB: 1850-0768

OMB# 1850-0768 v.20

Part B

Description of Statistical Methodology

February 2022

revised May 2023

TABLE OF CONTENTS

DESCRIPTION OF STATISTICAL METHODOLOGY

B.1 Respondent Universe and Statistical Design and Estimation

B.1.2 Within-Household Sampling

B.1.6 Nonresponse Bias Analysis

B.2 Statistical Procedures for Collection of Information

B.3 Methods for Maximizing Response Rates

B.4 Tests of Procedures and Methods

B.4.1 Experiments Influencing the Design of NHES:2023

B.4.2 Experimental Conditions Included in the Design of NHES:2023

B.5 Individuals Responsible for Study Design and Performance

List of Tables

Table

1 Sample allocation by race/ethnicity stratum and child stratum: NHES:2023

2 Expected percentage of households with eligible children, by topical domain

3 Expected numbers of sampled and completed screeners and topical surveys for households

4 Numbers of completed topical interviews in previous NHES administrations

5 Expected margins of error for NHES:2023 screener, by survey proportion estimates and subgroup size

6 Expected margins of error for NHES:2023 topical survey percentage estimates, by subgroup size, topical survey, and survey estimate proportion

List of Figures

Figure Page

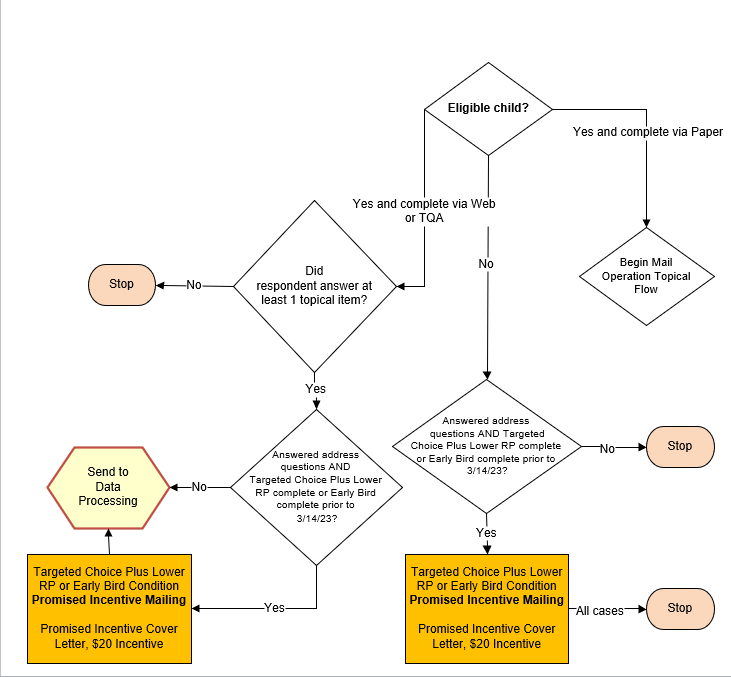

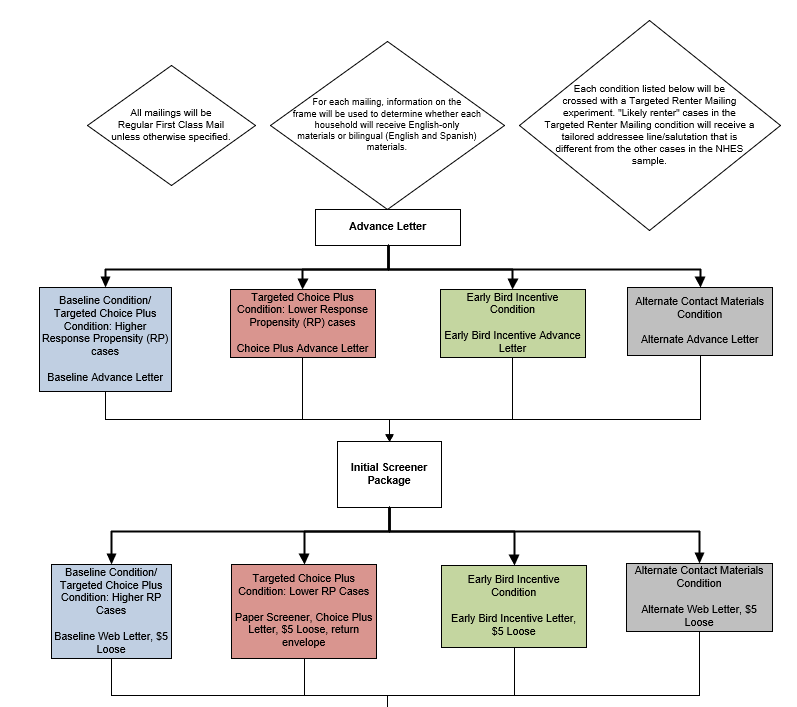

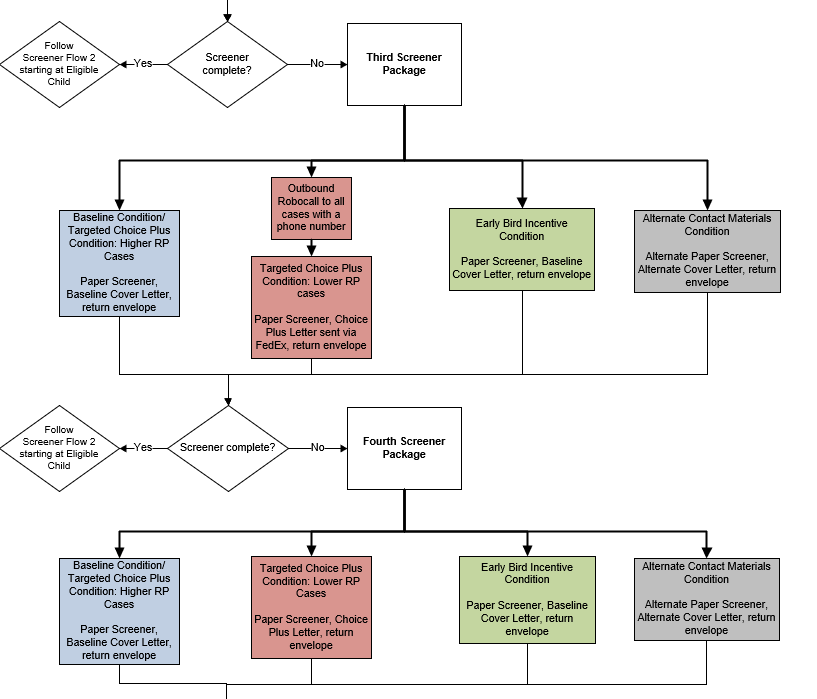

1 Screener Data Collection Plan Flow 1 15

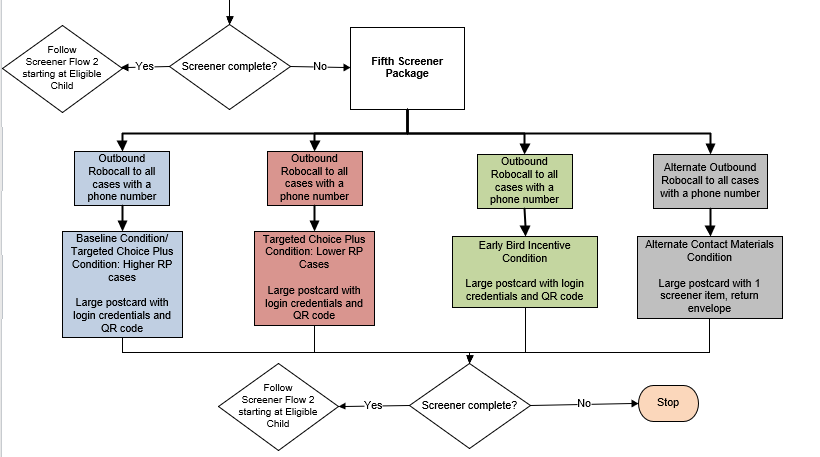

2 Screener Data Collection Plan Flow 2 19

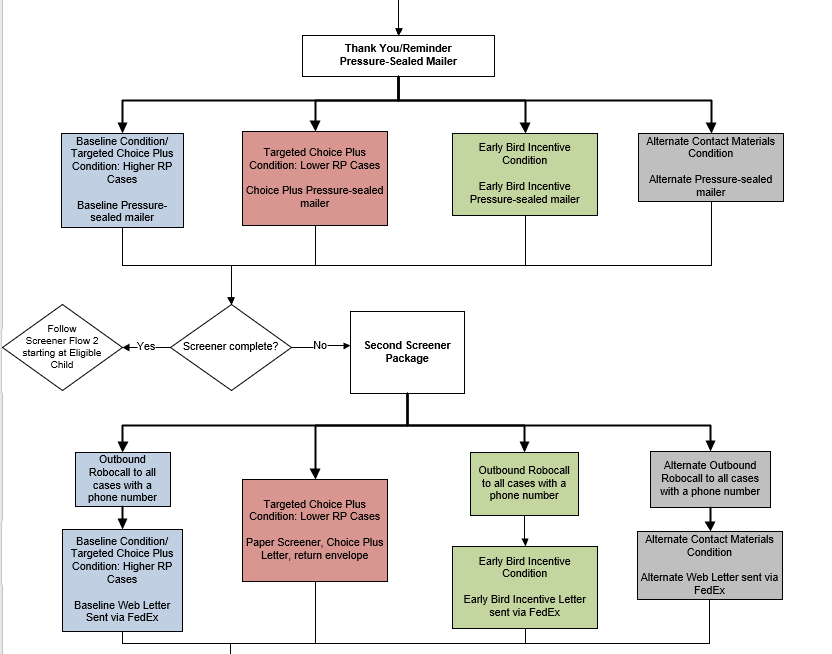

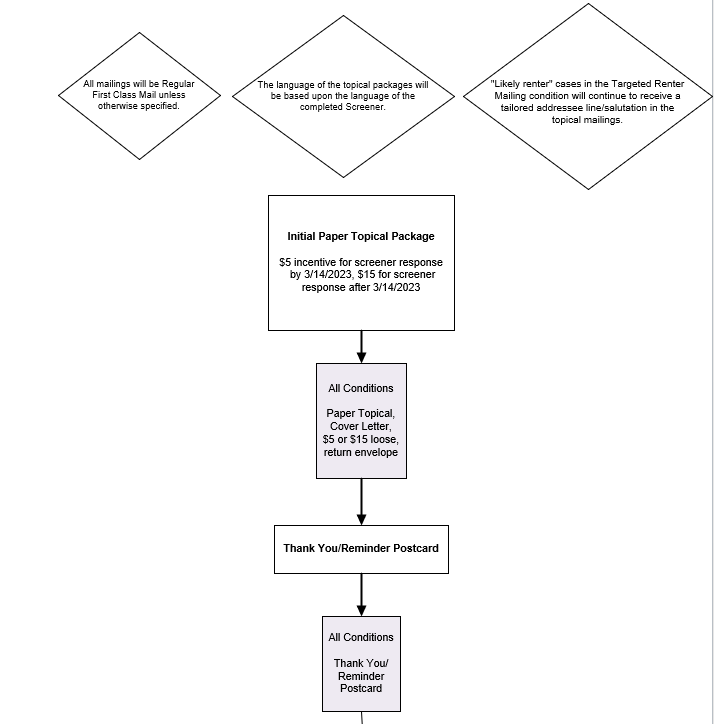

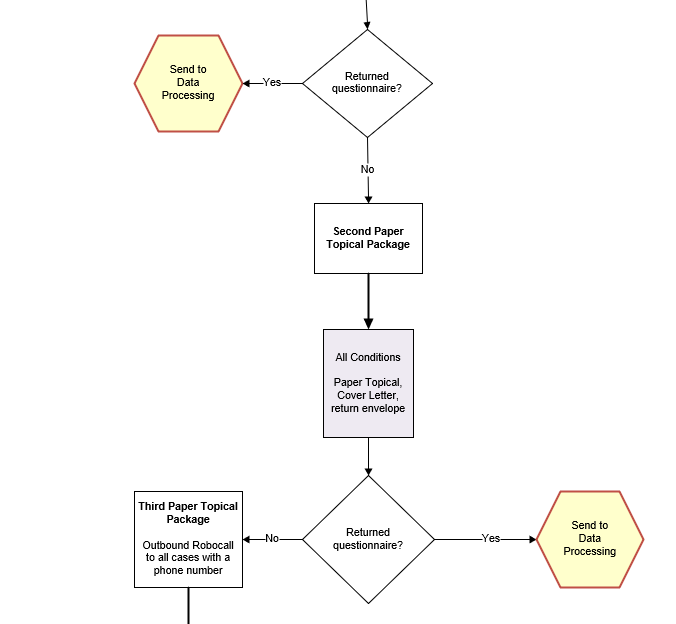

3 Topical Mail Out Data Collection Plan 20

DESCRIPTION OF STATISTICAL METHODOLOGY

B.1 Respondent Universe and Statistical Design and Estimation

Historically, an important purpose of the National Household Education Surveys Program (NHES) has been to collect repeated measurements of the same phenomena at different points in time. Decreasing response rates during the past decade required NCES to redesign NHES. This redesign involved changing the sampling frame from a list-assisted Random Digit Dial (RDD) frame to an Address-Based Sample (ABS) frame. The mode of data collection also changed from interviewer-administered telephone surveys to self-administered web and mail surveys.

The last NHES data collection prior to the redesign was conducted in 2007 and included the Parent and Family Involvement in Education (PFI) and the School Readiness (SR) surveys.1 NHES:2012, comprising the PFI and Early Childhood Program Participation (ECPP) topical surveys, was the first full-scale data collection using an address-based sample and a self-administered questionnaire. The overall screener plus topical response rates for the PFI and ECPP surveys were approximately 58 percent in 2012, 49 percent in 2016, and 53 to 54 percent in 2019, compared to the (pre-redesign) 2007 overall response rate of 39 to 41 percent. These results suggest that the new methodology did help to increase response rates and address coverage issues identified in the 2007 data collection.

In addition, NHES:2016 and 2019 had a web component to further help increase response rates and reduce costs. Sample members who were offered web in both administration years were offered paper surveys after the first two web survey invitations went unanswered; therefore, we refer to those cases as receiving a “sequential mixed-mode”2 survey protocol. For the sequential mixed-mode experiment, the overall screener plus topical response rate was approximately 52 percent in 2016 and 2019 for both the ECPP and PFI. The use of web components allowed for higher topical response rates than the use of paper questionnaires (by 4 to 5 percentage points) because the screener and topical survey on the web appear to the respondent to be one seamless instrument instead of two separate survey requests. NHES:2023 will use a similar mixed-mode web design based on lessons learned from NHES:2019.

NHES:2023 will be an address-based sample covering the 50 states and the District of Columbia, and will be conducted by web and mail from January through August 2023. The household target population is all residential addresses (excluding P.O. Boxes that are not flagged by the United States Postal Service [USPS] as the only way to get mail) and is estimated at 131,053,037 addresses.

Households will be randomly sampled as described in section B.1.1, and an invitation letter to complete the survey on the web and/or a paper screener questionnaire will be sent to each sampled household. Demographic information about household members provided on the screener will be used to determine whether the household is eligible for the ECPP survey or the PFI.

The target population for the ECPP survey consists of children age 6 or younger who are not yet in kindergarten. The target population for the PFI survey consists of children/youth ages 20 or younger who are enrolled in kindergarten through twelfth grade or who are homeschooled for those grades. Age and enrollment status will be determined based on information provided in the screener.

B.1.1 Sampling Households

A nationally representative sample of 205,000 addresses will be drawn in a two-stage process from a file of residential addresses maintained by a vendor, Marketing Systems Group (MSG; MSG has provided the sample for all NHES administrations since 2012). The MSG file is based on the USPS address-based Computerized Delivery Sequence (CDS) File. In the first sampling stage, the initial sample will consist of 305,000 addresses randomly selected by MSG from the USPS address-based frame. After invalid addresses are removed from the 305,000-household sample, a second stage of random sampling will be conducted by NCES’s sample design contractor, the American Institutes for Research (AIR), yielding a 205,000-household final screener sample. The remaining households will remain unused to protect respondent confidentiality.3

B.1.1.1 Black and Hispanic Oversample

As in past NHES surveys, NHES:2023 will oversample Black and Hispanic households using Census tract when the initial sample is drawn. This oversampling is necessary to produce more reliable estimates for subdomains defined by race and ethnicity. NHES:2023 will use the stratification methodology that was used for the NHES:2012, 2016 and 2019 administrations. The sampling frame includes information on Census tracts likely to contain relatively high proportions of these subgroups. The three strata for NHES:2023 are defined as households in:

1) Tracts with 25 percent or more Black persons;

2) Tracts with 40 percent or more Hispanic persons (and not 25 percent or more Black persons);

3) All other tracts.

The sample allocation to the three strata will be 20.7 percent to the Black stratum, 10.7 percent to the Hispanic stratum and 68.6 percent4 to the third stratum in the initial sample of 305,000 addresses. This allocation will improve the precision of estimates by race/ethnicity compared to the use of simple random selection of households across strata, and will protect against unknown factors that may affect the estimates for key subgroups, especially differential response rates. The Hispanic stratum contains a high concentration of households in which at least one adult age 15 or older speaks Spanish and does not speak English very well, and also will be used for the assignment of bilingual screener mailing materials.

In addition to stratifying by the race/ethnicity groups mentioned above, these three strata will be sorted by a poverty variable and the sample will be selected systematically from the sorted list to maintain the true poverty level proportions in the sample. The Census tracts will be used to define the two categories of poverty level as households in:

1) Tracts with 20 percent or more below the poverty line;

2) Tracts with less than 20 percent below the poverty line.
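The sort-then-select step described above can be sketched as follows. This is a minimal illustration only: the function name `systematic_sample`, the record layout, and the 1,000-address frame with a `high_poverty` flag are hypothetical and are not taken from the NHES sampling specifications.

```python
import random

def systematic_sample(frame, n, sort_key, rng=None):
    """Select n records by systematic sampling from a sorted frame.

    Sorting by sort_key before taking every k-th record spreads the
    sample across the sorted categories roughly in proportion to their
    share of the frame (implicit stratification).
    """
    rng = rng or random.Random()
    ordered = sorted(frame, key=sort_key)
    interval = len(ordered) / n          # fractional sampling interval
    start = rng.random() * interval      # random start in [0, interval)
    last = len(ordered) - 1
    return [ordered[min(int(start + i * interval), last)] for i in range(n)]

# Hypothetical stratum frame: 1,000 addresses, 30 percent in high-poverty tracts.
frame = [{"id": i, "high_poverty": i % 10 < 3} for i in range(1000)]
sample = systematic_sample(frame, 100, lambda r: r["high_poverty"],
                           random.Random(2023))

# Share of sampled addresses in high-poverty tracts.
share = sum(r["high_poverty"] for r in sample) / len(sample)
```

Because the frame is sorted before selection, the high-poverty share in the sample tracks the share in the frame without forming explicit poverty strata.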

B.1.1.2 Child Oversample

In NHES:2019, about 25 percent of the households who responded to the screener questionnaire had eligible children for the study. To increase the sampling efficiency for NHES:2023, the sample of 205,000 addresses will be further stratified into two strata by whether a household is predicted to have any NHES-eligible children. Households predicted to have NHES-eligible children will be selected at a higher probability than those predicted not to have eligible children.

This new approach is being adopted in NHES:2023 in order to (1) increase the topical eligibility rate; (2) maintain effective topical sample sizes, which helps reach the desired precision of ECPP and PFI survey estimates; and (3) facilitate trend comparisons to prior-year estimates.

Each household will be assigned a predicted probability indicating the likelihood that an NHES-eligible child is present, based on the results of a predictive model.5 Two child strata are defined as:

1) Households with a predicted probability of 0.4 or higher (the “likely-child” stratum);

2) Households with a predicted probability below 0.4 (the “not-likely-child” stratum).

The sample allocation will be 70,000 households to the likely-child stratum and 135,000 households to the not-likely-child stratum.

B.1.1.3 Subsampling for Each Stratum

A total of six screener strata will be formed by crossing the three race/ethnicity strata with the two child strata. The final screener sample will maintain the same proportion of Black and Hispanic households as in NHES:2019 (i.e., 20 percent from the Black stratum, 15 percent from the Hispanic stratum, and 65 percent from the All Other stratum) to ensure that enough survey responses are collected from the Black and Hispanic populations. The final sample sizes for each of the screener strata are shown in table 1.

Table 1. Sample allocation by race/ethnicity stratum and child stratum: NHES:2023

| Race/ethnicity stratum | Child stratum: Likely-child (%) | Child stratum: Not-likely-child (%) | Total |
|---|---|---|---|
| Black | 14,000 (20%) | 27,000 (20%) | 41,000 |
| Hispanic | 10,500 (15%) | 20,250 (15%) | 30,750 |
| All Other | 45,500 (65%) | 87,750 (65%) | 133,250 |
| Total | 70,000 (100%) | 135,000 (100%) | 205,000 |
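The cell sizes in table 1 follow from applying the race/ethnicity shares to each child stratum total. The short sketch below reproduces that arithmetic; the variable names are illustrative only.

```python
# Final screener sample sizes by child stratum (from the text above).
child_totals = {"likely_child": 70_000, "not_likely_child": 135_000}

# Share of each child stratum allocated to each race/ethnicity stratum.
race_shares = {"Black": 0.20, "Hispanic": 0.15, "All Other": 0.65}

# Six screener strata: race/ethnicity stratum crossed with child stratum.
allocation = {
    (race, child): round(total * share)
    for race, share in race_shares.items()
    for child, total in child_totals.items()
}

# Row totals by race/ethnicity stratum.
row_totals = {
    race: sum(n for (r, _), n in allocation.items() if r == race)
    for race in race_shares
}
```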

B.1.1.4 Assigning Final Screener Sample to Experiments

Households in the final screener sample will be randomly assigned to one of four data collection treatment conditions (baseline, early bird incentives, alternate materials, and targeted choice-plus conditions).

The baseline sample will mimic the web-push design protocols in 2019, with some modifications based on the lessons learned from that design. These cases will serve as a baseline for comparisons to other treatment conditions.

The sample allocated for early bird incentives condition will test the utility of a promised incentive contingent on response by a specified deadline, within the same web-push protocol as the baseline sample.

The alternate materials experiment will attempt to increase response rates (for households both with and without children), within the same web-push protocol as the baseline sample, by being more explicit in the screener materials about how the purpose and expectation of the survey requests differ depending on whether or not a household has children.

The targeted choice-plus treatment will mimic the 2019 choice-plus protocol.6 However, since this protocol is costly, the 2023 design will test the feasibility and utility of limiting choice-plus to relatively low-response-propensity (RP) cases while using web-push for the remaining cases. The lower-RP households will be provided web and paper options but will be incentivized to respond by web.

A total of 53,000 households will be chosen for the baseline condition, 53,000 for the early bird incentive condition, 4,000 for the alternate materials condition, and 89,500 for the targeted choice-plus condition.

In addition, the four data collection conditions will be fully crossed with a mailing treatment condition (targeted renter mailings) and two ECPP split panels (for health items, and for child care arrangement items), resulting in a 4x2x2x2 factorial design.

The targeted renter mailings condition will assess whether using a tailored addressee line and salutation, compared to the use of a standard addressee line and salutation, increases the response rate for the renter households. Half of the NHES:2023 sample (102,500) will be randomly assigned to tailored renter mailings, while the other half (102,500) will be assigned to standard mailings.

The ECPP health item split panel will test the new and redesigned child’s health items as potential replacements for the NHES:2019 health items. The health item split panel will be tested in both the web instrument and the paper survey. Half of the NHES:2023 sample (102,500) will be randomly assigned to receive the same health items as in NHES:2019, while the other half (102,500) will be assigned to the alternate health condition items.

The ECPP child care arrangement items split panel will test a new grid format of child care arrangements items in the web instrument only. A total of 40,000 households will be randomly assigned to receive the new version of the child care arrangement items, while the remaining 165,000 households will be assigned to the same version of the items as in NHES:2019.
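As a rough illustration of the 4x2x2x2 crossing, the sketch below enumerates the treatment cells. The condition labels are shorthand invented for this example, and the sketch does not attempt to reproduce the unequal sample sizes assigned to each condition.

```python
from itertools import product

# Shorthand labels for the four factors described above (illustrative only).
collection = ["baseline", "early_bird_incentive",
              "alternate_materials", "targeted_choice_plus"]
renter_mailing = ["tailored", "standard"]
health_items = ["nhes2019_items", "alternate_items"]
care_items = ["nhes2019_items", "grid_format"]

# Every combination of one level from each factor.
cells = list(product(collection, renter_mailing, health_items, care_items))
n_cells = len(cells)  # 4 * 2 * 2 * 2
```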

B.1.2 Within-Household Sampling

Among households that complete the screener and report children eligible for one or more topical surveys, a three-phase procedure will be used to select a single child to receive a topical questionnaire. In order to minimize household burden, only one eligible child from each household will be sampled; therefore, each household will receive only one of the two topical surveys. In households with multiple children, homeschooled children are chosen at higher rates than enrolled children, and young children who are eligible to be sampled for the ECPP survey are chosen at higher rates than K-12 enrolled students.

The topical sampling procedure works by first randomly assigning two pre-designations to each household. Because the sampling frame does not include information about the eligibility of the households for the NHES survey, two pre-designations will be assigned to all households in the screener sample to ensure the desired selection probabilities for homeschooled and ECPP children, once their eligibility is determined through their screener response. These pre-designations are a homeschooled domain flag and a child domain flag that assign children in a household to one of the three topical domains: PFI-Homeschooled (homeschooled K-12 students), PFI-Enrolled (K-12 students enrolled in school), and ECPP (children age 6 or younger who are not yet in kindergarten). Households selected for the PFI-Homeschooled and PFI-Enrolled domains will receive the PFI survey.7 Households selected for the ECPP domain will receive the ECPP survey.

Depending on the composition of the household, one or both of these pre-designations are used to assign the household to one of the two topical surveys. If the household has more than one child eligible for the survey for which it is selected, the final phase of sampling randomly selects one of these children for that survey. The remainder of this section provides additional detail on each of the three phases, and provides examples of how this procedure would work for three hypothetical households.

First-Phase Sampling: Assign Topical Pre-designation Flags

In the first phase of sampling, all households in the screener sample will be assigned two domain pre-designations (a homeschooling pre-designation and a child-domain pre-designation) prior to the screener data collection. The two designations will allow for oversampling of PFI-Homeschooled children and ECPP children, since they account for a smaller percentage of the child population than PFI-Enrolled children. These designations will be used in the second phase of sampling to assign each household to a sampling domain once households return their screener.

Assign Homeschooled Domain Flag (Homeschool or not)

Each address in the screener sample will be randomly pre-designated as either a “homeschool household” or an “other household.” A household will be pre-designated as a “homeschool household” with a 0.8 probability and as an “other household” with a 0.2 probability. In later sampling steps, this pre-designation is referred to as the homeschooling pre-designation. Depending on the child composition of a household, this pre-designation may not be used for sampling in the later steps.

The purpose of assigning a higher rate of the households as a “homeschool household” is to increase the number of homeschooled children for whom data are collected. NHES is the only nationally representative survey that collects information about homeschooled children in the United States. Because this is such a small sub-population (about 3 percent of K-12 enrollees), this sampling scheme was introduced in NHES:2016 (and used in both NHES:2016 and 2019) and resulted in a doubling of the number of homeschool respondents compared to the NHES:2012 administration. Due to the small number of households with homeschooling children, this oversampling of homeschooled children is expected to have a minimal impact on sample sizes and design effects for the topical surveys.

Assign Child-Domain Flag (ECPP or PFI)

In addition to homeschool pre-designation, each household in the screener sample will be pre-designated as an “ECPP household” with a 0.7 probability, or as a “PFI-Enrolled household” with a 0.3 probability. In later survey steps, this pre-designation is referred to as the child-domain pre-designation. Depending on the child composition of a household, this pre-designation may not be used for sampling in the later steps.

Because the ECPP population is smaller than the PFI-Enrolled population, fewer children are eligible for the ECPP survey than PFI survey. A higher rate of households is pre-designated as “ECPP household” to ensure a sufficient sample size for ECPP survey.
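The first-phase assignment can be sketched as two random draws per address. This is an illustration under stated assumptions: the function name `assign_predesignations` and the flag labels are hypothetical, and the two flags are assumed here to be drawn independently of each other, which the text does not state explicitly.

```python
import random

P_HOMESCHOOL = 0.8  # probability of the "homeschool household" pre-designation
P_ECPP = 0.7        # probability of the "ECPP household" pre-designation

def assign_predesignations(address_ids, seed=None):
    """Draw both pre-designation flags for every sampled address."""
    rng = random.Random(seed)
    flags = {}
    for address_id in address_ids:
        flags[address_id] = {
            # Assumption: the two flags are drawn independently.
            "homeschool_flag": "homeschool" if rng.random() < P_HOMESCHOOL else "other",
            "child_domain_flag": "ECPP" if rng.random() < P_ECPP else "PFI-Enrolled",
        }
    return flags

flags = assign_predesignations(range(10_000), seed=1)
homeschool_share = (
    sum(f["homeschool_flag"] == "homeschool" for f in flags.values()) / len(flags)
)
ecpp_share = (
    sum(f["child_domain_flag"] == "ECPP" for f in flags.values()) / len(flags)
)
```

With a large number of addresses, the realized shares should fall close to 0.8 and 0.7, respectively.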

Second-Phase Sampling: Sampling One Survey for Each Household

Once households return their screener response, the two domain pre-designations will be used sequentially to determine which survey a household should be sampled for. The homeschool pre-designation will be applied first to ensure homeschooled children are sampled at a higher rate (with a probability of 80 percent) than ECPP and PFI-Enrolled children. This pre-designation will only be used (1) when a household has any child eligible for the PFI-Homeschooled domain and any child eligible for the ECPP or PFI-Enrolled domain, or (2) when a household has children from all three domains, to determine which child a household will be sampled for. If a household meets one of these two conditions, a household pre-designated as “homeschool household” will be sampled for a homeschooled child and receive the PFI survey, while a household pre-designated as “other household” will be sampled for either the ECPP or PFI-Enrolled child, depending on its pre-designated value on the child-domain flag. The homeschool pre-designation will not be used if a household only has a child or children eligible for the PFI-Homeschooled domain, or if a household does not have any child eligible for the PFI-Homeschooled domain.

Next, the child-domain pre-designation will be used to determine the survey to which the household will be assigned if (1) the household has children eligible for both ECPP and PFI-Enrolled domains, and (2) a homeschooled child was not selected, either because the household is pre-designated as “other household”, or because the household does not have any homeschooled children. In these cases, a child’s age, among other factors, will determine if the child is eligible for the ECPP or the PFI-Enrolled domain. ECPP children will be sampled at a higher rate (with a probability of 70 percent) than PFI-Enrolled children for these households. The households pre-designated as “ECPP household” will be sampled for ECPP domain and receive the ECPP topical survey, while the households pre-designated as “PFI-Enrolled household” will be sampled for PFI-Enrolled domain and receive the PFI topical survey. The child-domain pre-designation will not be used if a homeschooled child is already selected based on the homeschool pre-designations, or a household only has children eligible for either the ECPP or the PFI-Enrolled domain.

If a household has children eligible for only one of the three domains, that household will be sampled for the only domain the children are eligible for, regardless of the two pre-designations. That is, if a household only has children eligible for the PFI-Homeschooled domain, and not the ECPP or PFI-Enrolled domains, a homeschooled child in that household will be sampled and the household will receive the PFI survey. Likewise, if a household has children eligible for only the ECPP domain and not the PFI-Enrolled or PFI-Homeschooled domains, that household will be sampled for the ECPP domain and receive the ECPP survey.

Third-Phase Sampling: Sampling Only One Child from Each Household

The third phase of sampling is at the child level. If a household has only one child that is eligible for the domain that was selected in the first two phases of sampling, that child is selected as the sample member about whom the topical survey is focused. If a household has two or more children eligible for the domain that was selected in the first two phases of sampling, one of those children will be randomly selected (with equal probability) as the sample member to be the focus of the appropriate topical survey. For example, if there are two children in the house eligible for the ECPP survey and the household was pre-designated an ECPP household, then one eligible child will be randomly selected (with equal probability). Therefore, at the end of the three phases of sampling, among households with eligible children who have a completed screener, only one eligible child within each household will be sampled for the ECPP or PFI topical survey, respectively.
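Taken together, the three phases imply a within-household selection probability for each eligible child. The sketch below derives those probabilities from the 0.8 and 0.7 pre-designation rates stated above. It is an illustration, not the production weighting code; the function names are hypothetical, and the sketch assumes the two pre-designations are independent.

```python
from fractions import Fraction

P_HOMESCHOOL = Fraction(8, 10)  # "homeschool household" pre-designation rate
P_ECPP = Fraction(7, 10)        # "ECPP household" pre-designation rate

def domain_probabilities(n_ecpp, n_enrolled, n_homeschooled):
    """Probability that a household is sampled for each topical domain."""
    probs = {"ECPP": Fraction(0), "PFI-Enrolled": Fraction(0),
             "PFI-Homeschooled": Fraction(0)}
    has_other = n_ecpp > 0 or n_enrolled > 0
    if n_homeschooled > 0:
        # The homeschool flag matters only when another domain is also present.
        probs["PFI-Homeschooled"] = P_HOMESCHOOL if has_other else Fraction(1)
    remaining = 1 - probs["PFI-Homeschooled"]
    if n_ecpp > 0 and n_enrolled > 0:
        # The child-domain flag matters only when both domains are present.
        probs["ECPP"] = remaining * P_ECPP
        probs["PFI-Enrolled"] = remaining * (1 - P_ECPP)
    elif n_ecpp > 0:
        probs["ECPP"] = remaining
    elif n_enrolled > 0:
        probs["PFI-Enrolled"] = remaining
    return probs

def child_selection_probability(domain, n_ecpp, n_enrolled, n_homeschooled):
    """Probability that one particular child in `domain` is selected."""
    counts = {"ECPP": n_ecpp, "PFI-Enrolled": n_enrolled,
              "PFI-Homeschooled": n_homeschooled}
    if counts[domain] == 0:
        return Fraction(0)
    probs = domain_probabilities(n_ecpp, n_enrolled, n_homeschooled)
    # Third phase: equal-probability selection among children in the domain.
    return probs[domain] / counts[domain]

# One ECPP child, two PFI-Enrolled children, one homeschooled child.
p_enrolled_child = child_selection_probability("PFI-Enrolled", 1, 2, 1)
```

For this household, each PFI-Enrolled child is selected with probability 0.2 x 0.3 x 0.5 = 0.03.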

Topical Sampling Examples

The use of the topical pre-designations can be illustrated by considering hypothetical households with members eligible for various combinations of the topical surveys. First, consider a household with four children: one child eligible for the ECPP survey, and three children eligible for the PFI survey, one of whom is homeschooled. Suppose that the household is pre-designated as an “other household” and a “PFI-Enrolled household”. The homeschool pre-designation indicates that we should not sample the homeschooled child; the child-domain pre-designation indicates that we should sample the PFI-Enrolled children. Because the household has two children eligible for the PFI-Enrolled domain, one of these non-homeschooled children is randomly selected for the PFI survey with 0.5 probability in the final sampling phase.

Second, consider a household with two children: one child eligible for the PFI survey who is homeschooled and one child eligible for the ECPP survey. Suppose that the household is pre-designated as a “homeschool household” and an “ECPP household”. Because the household has a child eligible for the PFI-Homeschooled domain, the homeschool pre-designation is used to assign the household to the PFI survey. Because a homeschooled child has been selected, the child-domain pre-designation is not used and in the final phase the homeschooled child is selected with certainty for the PFI survey.

Finally, consider a household with three children: one child eligible for the ECPP survey and two children eligible for the PFI survey (no children are homeschooled). Suppose that the household is pre-designated as a “homeschool household” and an “ECPP household”. Because the household has no children eligible for the PFI-Homeschooled domain, the homeschool pre-designation is not used. Since the household child-domain pre-designation is “ECPP household”, the household is assigned to receive the ECPP survey. Because only one child is eligible for the ECPP domain, this child is selected with certainty for the ECPP survey in the final sampling phase.
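The three hypothetical households can be traced through a small sketch of the second and third sampling phases. The function names and string labels are illustrative and are not taken from the NHES processing system.

```python
import random

def assign_topical_domain(n_ecpp, n_enrolled, n_homeschooled,
                          homeschool_flag, child_domain_flag):
    """Phase 2: choose the sampling domain for a household with eligible children.

    homeschool_flag is "homeschool" or "other"; child_domain_flag is
    "ECPP" or "PFI-Enrolled" (the phase 1 pre-designations).
    """
    has_other = n_ecpp > 0 or n_enrolled > 0
    # Homeschool flag is consulted only when another domain is also present.
    if n_homeschooled > 0 and (not has_other or homeschool_flag == "homeschool"):
        return "PFI-Homeschooled"
    # Child-domain flag is consulted only when both domains are present.
    if n_ecpp > 0 and n_enrolled > 0:
        return child_domain_flag
    if n_ecpp > 0:
        return "ECPP"
    if n_enrolled > 0:
        return "PFI-Enrolled"
    raise ValueError("household has no eligible children")

def select_child(children_in_domain, rng):
    """Phase 3: equal-probability selection of one child in the chosen domain."""
    return rng.choice(children_in_domain)

# Topical survey received for each domain.
SURVEY = {"ECPP": "ECPP", "PFI-Enrolled": "PFI", "PFI-Homeschooled": "PFI"}

# First example: 1 ECPP, 2 PFI-Enrolled, 1 homeschooled; "other" / "PFI-Enrolled".
example_1 = assign_topical_domain(1, 2, 1, "other", "PFI-Enrolled")
# Second example: 1 ECPP, 1 homeschooled; "homeschool" / "ECPP".
example_2 = assign_topical_domain(1, 0, 1, "homeschool", "ECPP")
# Third example: 1 ECPP, 2 PFI-Enrolled, none homeschooled; "homeschool" / "ECPP".
example_3 = assign_topical_domain(1, 2, 0, "homeschool", "ECPP")

# In the first example, one of the two enrolled children is then drawn at random.
chosen = select_child(["enrolled child A", "enrolled child B"], random.Random(0))
```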

B.1.3 Expected Yield

As described above, the final screener sample will consist of 205,000 addresses. An expected screener response rate of approximately 57.8 percent and an address ineligibility8 rate of approximately 8.6 percent are assumed, based on results from NHES:2019 and on expected differences in screener eligibility and response rates due to the child oversample design and across experimental treatment groups. Under these assumptions, the total number of expected completed screeners is 108,262.

The ECPP and PFI topical surveys will be administered to households with completed screeners that have eligible children. For NHES:2023, we expect to achieve a total percentage of households with eligible children of approximately 29.8 percent.9 Expected estimates of the percentage of households with eligible children overall and in each sampling domain are given in table 2, as well as the expected number of screened households in the nationally representative sample of 205,000, based on the distribution of household composition and assuming 108,262 total completed screeners.

Table 2. Expected percentage of households with eligible children, by topical domain

| Household composition | Percent of households | Expected number of screened households |
|---|---|---|
| Total households with eligible children | 29.789 | 32,250 |
| At least one ECPP-eligible child and no PFI-Enrolled- or PFI-Homeschooled-eligible children | 5.075 | 5,494 |
| At least one PFI-Enrolled-eligible child and no PFI-Homeschooled- or ECPP-eligible children | 18.560 | 20,094 |
| At least one PFI-Homeschooled-eligible child and no PFI-Enrolled- or ECPP-eligible children | 0.534 | 578 |
| At least one ECPP-eligible child, at least one PFI-Enrolled-eligible child, and no PFI-Homeschooled-eligible children | 5.130 | 5,553 |
| At least one ECPP-eligible child, at least one PFI-Homeschooled-eligible child, and no PFI-Enrolled-eligible children | 0.257 | 278 |
| At least one PFI-Enrolled-eligible child, at least one PFI-Homeschooled-eligible child, and no ECPP-eligible children | 0.193 | 209 |
| At least one PFI-Enrolled-eligible child, at least one PFI-Homeschooled-eligible child, and at least one ECPP-eligible child | 0.039 | 43 |

NOTE: The distribution in this table assumes 108,262 screened households. Detail may not sum to totals because of rounding. Estimates are based on calculations from NHES:2019 and adjusted for the child oversample design and experimental conditions in NHES:2023. See part A of the OMB package for the details of the experiments to be conducted in NHES:2023.
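The expected-yield arithmetic can be checked with a few lines of code. This is a sketch using only figures stated in this section; because the published percentages are rounded, recomputed household counts can differ from the table by about one household.

```python
sampled_addresses = 205_000
ineligibility_rate = 0.086      # expected share of ineligible addresses
completed_screeners = 108_262   # expected completed screeners (from the text)

# Eligible addresses, and the screener response rate implied by the stated total.
eligible_addresses = sampled_addresses * (1 - ineligibility_rate)
implied_response_rate = completed_screeners / eligible_addresses

# Percent of screened households by household composition (table 2).
percent_by_composition = {
    "ECPP only": 5.075,
    "PFI-Enrolled only": 18.560,
    "PFI-Homeschooled only": 0.534,
    "ECPP and PFI-Enrolled": 5.130,
    "ECPP and PFI-Homeschooled": 0.257,
    "PFI-Enrolled and PFI-Homeschooled": 0.193,
    "All three domains": 0.039,
}

expected_households = {
    label: round(completed_screeners * pct / 100)
    for label, pct in percent_by_composition.items()
}
total_eligible = round(completed_screeners * 29.789 / 100)
```

The implied screener response rate rounds to the 57.8 percent quoted in the text, and the total of households with eligible children comes to 32,250.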

Table 3 summarizes the expected numbers of sampled cases and completed questionnaires for NHES:2023 for each survey, and for homeschooled children. These numbers take into account within-household sampling. Based on results from NHES:2019 and the adjustments for the 2023 child oversample design and experimental treatments, a topical response rate of approximately 90.5 percent is expected for the ECPP and approximately 87.2 percent for the PFI.10 Based on a screener sample of 205,000 addresses, an expected address eligibility rate of 91.4 percent, and an expected screener response rate of approximately 57.8 percent, the expected number of completed screener questionnaires is 108,262. Of these, we expect approximately 9,443 households to be sampled for the ECPP survey and 22,807 for the PFI survey (1,002 of which are expected to be sampled for a homeschooled child). At the expected topical response rates, this yields approximately 8,542 completed questionnaires for the ECPP survey and 19,886 completed questionnaires for the PFI survey (797 of which are expected to be for homeschooled children).

Table 3. Expected number of sampled and completed screeners and topical surveys for households

| Survey | Expected number sampled, NHES:2023 | Expected number of completed interviews, NHES:2023 |
|---|---|---|
| Household screeners | 205,000 | 108,262 |
| ECPP | 9,443 | 8,542 |
| PFI | 22,807 | 19,886 |
| Homeschooled children | 1,002 | 797 |

Note: The PFI combined response rate is expected to be 87.192% but the expected response rates differ depending on the type of respondent. For PFI homeschooled children the response rate is 79.544% and for PFI children enrolled in school is 87.544%.
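The relationship between the two PFI response rates and the combined rate can be verified directly. The sketch below assumes, as an inference from the text, that the number sampled for the PFI-Enrolled domain is the PFI total minus the homeschooled count (22,807 minus 1,002).

```python
pfi_sampled = 22_807
homeschooled_sampled = 1_002

# Assumption: PFI-Enrolled cases are the PFI total minus homeschooled cases.
sampled = {
    "PFI-Enrolled": pfi_sampled - homeschooled_sampled,
    "PFI-Homeschooled": homeschooled_sampled,
}
response_rate = {"PFI-Enrolled": 0.87544, "PFI-Homeschooled": 0.79544}

# Expected completes by domain, then pooled across both PFI domains.
completes = {d: sampled[d] * response_rate[d] for d in sampled}
pfi_completes = sum(completes.values())
combined_rate = pfi_completes / pfi_sampled
```

The pooled completes round to the 19,886 shown in table 3, and the combined rate agrees with the stated 87.192 percent to within rounding.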

For comparison purposes, table 4 shows the number of ECPP and PFI completed questionnaires from previous NHES administrations.

Table 4. Numbers of completed topical questionnaires in previous NHES administrations

| Survey | 1993 | 1995 | 1996 | 1999 | 2001 | 2003 | 2005 | 2007 | 2012 | 2016 | 2019 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ECPP | † | 7,564 | † | 6,939 | 6,749 | † | 7,209 | † | 7,893 | 5,844 | 7,092 |
| PFI - Enrolled | 19,144 | † | 17,774 | 17,376 | † | 12,164 | † | 10,370 | 17,166 | 13,523 | 16,446 |
| PFI - Homeschooled | † | † | † | 285 | † | 262 | † | 311 | 397 | 552 | 519 |

† Not applicable; the specified topical survey was not administered in the specified year.

B.1.4 Reliability

The reliability of estimates produced from a given sample size is measured by the margin of error. The margins of error for selected percentages from the screener (based on a sample size of 108,262) are shown in table 5. As an example, if an estimated proportion is 30 or 70 percent, the margin of error will be below 1 percentage point for the overall population, as well as within subgroups that constitute 50 percent, 20 percent, and 10 percent of the population. The same is true for survey estimate proportions of 10, 20, 80, or 90 percent.

Table 5. Expected margins of error for NHES:2023 screener, by survey proportion estimate and subgroup size†

| Survey estimate proportion | Margin of error: all respondents | Margin of error: 50% subgroup | Margin of error: 20% subgroup | Margin of error: 10% subgroup |
|---|---|---|---|---|
| 10% or 90% | 0.19% | 0.27% | 0.43% | 0.60% |
| 20% or 80% | 0.25% | 0.36% | 0.57% | 0.81% |
| 30% or 70% | 0.29% | 0.41% | 0.65% | 0.92% |
| 40% or 60% | 0.31% | 0.44% | 0.70% | 0.99% |
| 50% | 0.32% | 0.45% | 0.71% | 1.01% |

† Based on NHES:2019, a design effect of 1.144 and an effective sample size of 94,670 were used in the calculations for this table. The design effect accounts for the unequal weighting at the screener level. The margins of error were calculated assuming a confidence level of 95 percent, using the following formula: 1.96*sqrt[p*(1-p)/ne], where p is the proportion estimate and ne is the effective sample size for the screener survey.
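As a worked instance of the formula in the table note, with n_e denoting the effective sample size (written "ne" in the note), the margins of error at p = 0.50 can be computed as follows. The subgroup calculation assumes that the effective sample size of a subgroup is its population share times n_e; that assumption is not stated in the note, but it reproduces the tabulated values.

```latex
\mathrm{MOE} = 1.96\sqrt{\frac{p(1-p)}{n_e}}, \qquad n_e = 94{,}670

% All respondents, p = 0.50
\mathrm{MOE} = 1.96\sqrt{\frac{0.50 \times 0.50}{94{,}670}} \approx 0.0032 = 0.32\%

% 10% subgroup, p = 0.50, effective sample size 0.10 \times 94{,}670 = 9{,}467
\mathrm{MOE} = 1.96\sqrt{\frac{0.50 \times 0.50}{9{,}467}} \approx 0.0101 = 1.01\%
```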

Table 6 shows the expected margins of error for topical respondents. A higher margin of error indicates a less reliable estimate. The margin of error for each estimate is expected to be between 0.68 and 16.00 percentage points, depending on the topical survey, the estimated proportion, and the subgroup size, with the highest margins of error derived from the (relatively small) PFI homeschooled sample.

Table 6. Expected margins of error for NHES:2023 topical survey percentage estimates, by subgroup size, topical survey, and survey estimate proportion†

| Topical survey | Survey estimate proportion | Margin of error within all respondents | Margin of error within 50% subgroup | Margin of error within 20% subgroup | Margin of error within 10% subgroup |
|---|---|---|---|---|---|
| ECPP | 10% or 90% | 0.92% | 1.31% | 2.07% | 2.92% |
| ECPP | 20% or 80% | 1.23% | 1.74% | 2.75% | 3.90% |
| ECPP | 30% or 70% | 1.41% | 2.00% | 3.16% | 4.46% |
| ECPP | 40% or 60% | 1.51% | 2.13% | 3.37% | 4.77% |
| ECPP | 50% | 1.54% | 2.18% | 3.44% | 4.87% |
| PFI | 10% or 90% | 0.68% | 0.96% | 1.51% | 2.14% |
| PFI | 20% or 80% | 0.90% | 1.28% | 2.02% | 2.85% |
| PFI | 30% or 70% | 1.03% | 1.46% | 2.31% | 3.27% |
| PFI | 40% or 60% | 1.11% | 1.56% | 2.47% | 3.50% |
| PFI | 50% | 1.13% | 1.60% | 2.52% | 3.57% |
| PFI homeschooled children | 10% or 90% | 3.04% | 4.29% | 6.79% | 9.60% |
| PFI homeschooled children | 20% or 80% | 4.05% | 5.72% | 9.05% | 12.80% |
| PFI homeschooled children | 30% or 70% | 4.64% | 6.56% | 10.37% | 14.66% |
| PFI homeschooled children | 40% or 60% | 4.96% | 7.01% | 11.08% | 15.67% |
| PFI homeschooled children | 50% | 5.06% | 7.15% | 11.31% | 16.00% |

† Based on preliminary analysis, the following design effects were used in the calculations for this table: 2.109 for the ECPP, 2.636 for the overall PFI, and 2.125 for the PFI homeschool children. These represent the design effects due to unequal weighting at the screener and topical levels. The expected effective sample size is 7,545 for the overall PFI, 375 for homeschoolers, and 4,051 for the ECPP. The margins of error were calculated assuming a confidence level of 95 percent, using the following formula: 1.96*sqrt[p*(1-p)/ne], where p is the proportion estimate and ne is the effective sample size for the topical survey.
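The formula in the table notes can be checked with a short calculation. The sketch below is illustrative only: the function name and the `subgroup_share` parameter are assumptions introduced here, and scaling the effective sample size by the subgroup share is an inference from the published values rather than a documented step.

```python
import math

Z_95 = 1.96  # critical value for a 95 percent confidence level, as in the table notes


def margin_of_error(p, effective_n, subgroup_share=1.0):
    """Return 1.96*sqrt[p*(1-p)/ne] as a proportion.

    subgroup_share is an assumption: the effective sample size for a
    subgroup is taken to be the overall effective sample size times the
    subgroup's share of respondents.
    """
    ne = effective_n * subgroup_share
    return Z_95 * math.sqrt(p * (1.0 - p) / ne)


# Effective sample sizes quoted in the Table 6 note.
EFFECTIVE_N = {"ECPP": 4051, "PFI": 7545, "PFI homeschooled children": 375}

for survey, ne in EFFECTIVE_N.items():
    row = [margin_of_error(0.5, ne, share) for share in (1.0, 0.5, 0.2, 0.1)]
    print(survey, " ".join(f"{100 * m:.2f}%" for m in row))
```

Because p*(1-p) is symmetric around 0.5, the same margin applies to complementary proportions, which is why the tables pair rows such as "10% or 90%".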

B.1.5 Estimation Procedures

The data sets from NHES:2023 will have weights assigned to households to facilitate estimation of nationally representative statistics. All households responding to the screener will be assigned weights based on their probability of selection and a nonresponse adjustment, making them representative of the target child population. All households in the national sample responding to the topical questionnaires will have a record with a person weight designed for the children selected from the households, such that the complete data set represents each targeted child population of the survey.

The final person-level weights for NHES:2023 surveys will be formed in stages. The first stage is the creation of a base weight for the household, which is the inverse of the probability of selection of the address. The second stage incorporates a screener nonresponse adjustment that is based on characteristics available on the household sampling frame.

These household-level weights then serve as the base weights for the person-level weights. For each completed topical questionnaire, the person-level weights also undergo a series of adjustments. The first stage is the adjustment of the household-level weights for the probability of selecting the child within the household. The second stage is the adjustment of the weights for topical survey nonresponse to be performed based on characteristics available on the household sampling frame and the screener (see next section). The third stage is the raking adjustment of the weights to Census Bureau estimates of the target population. The variables that may be used for raking at the person level include race and ethnicity of the sampled child, household income, home tenure (own/rent/other), region of the country where the household resides, child’s age, child’s grade, child’s gender, family structure (one parent or two parent), and highest educational attainment in household. These variables have been shown to be associated with response rates based on the weighting analysis from prior administrations.11 The final raked person-level weights include under-coverage adjustments as well as adjustments for nonresponse.
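The weighting stages described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the production weighting system: the function names, the adjustment factors, the two raking dimensions, and the control totals are all hypothetical, and the raking step is a generic iterative proportional fitting routine.

```python
def person_weight_before_raking(p_address, screener_nr_factor, p_child, topical_nr_factor):
    """Combine the stages into one unraked person-level weight."""
    # Household stage: base weight (inverse selection probability) times
    # the screener nonresponse adjustment factor.
    household_weight = (1.0 / p_address) * screener_nr_factor
    # Person stage: within-household selection, then topical nonresponse.
    return household_weight * (1.0 / p_child) * topical_nr_factor


def rake(records, weights, control_totals, max_iter=100, tol=1e-10):
    """Scale weights until each margin matches its control totals."""
    w = list(weights)
    for _ in range(max_iter):
        largest_change = 0.0
        for variable, totals in control_totals.items():
            # Current weighted total in each category of this variable.
            current = {}
            for rec, wi in zip(records, w):
                current[rec[variable]] = current.get(rec[variable], 0.0) + wi
            # Scale each weight so category totals match the controls.
            for i, rec in enumerate(records):
                factor = totals[rec[variable]] / current[rec[variable]]
                w[i] *= factor
                largest_change = max(largest_change, abs(factor - 1.0))
        if largest_change < tol:
            break
    return w


# Hypothetical example: four sampled children and two raking dimensions.
children = [
    {"region": "Northeast", "age_group": "0-5"},
    {"region": "Northeast", "age_group": "6-17"},
    {"region": "South", "age_group": "0-5"},
    {"region": "South", "age_group": "6-17"},
]
start = [person_weight_before_raking(0.001, 1.25, 0.5, 1.1)] * 4
controls = {  # made-up population totals, for illustration only
    "region": {"Northeast": 6000.0, "South": 5000.0},
    "age_group": {"0-5": 4000.0, "6-17": 7000.0},
}
raked = rake(children, start, controls)
```

In practice the control totals for each dimension must sum to the same population total, and sparse cells are usually collapsed before raking so that every category has respondents.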

Standard errors of the estimates will be computed using a jackknife replication method. The replication process repeats each stage of estimation separately for each replicate. The replication method is especially useful for obtaining reliable standard errors for NHES:2023 statistics that account for the variability in weights introduced through the multi-stage weighting process.
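As an illustration of replication-based standard errors, the sketch below uses a delete-a-group jackknife. This text does not specify the jackknife variant or the number of replicates, so both are assumptions here, as are all function names. The sketch also reuses the same weights in every replicate, whereas the procedure described above repeats each stage of weighting for each replicate.

```python
import math


def weighted_mean(values, weights):
    """Weighted estimate of a mean or proportion."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def delete_a_group_replicate_weights(weights, groups, n_groups):
    """Build one replicate per group: zero out that group, scale up the rest."""
    scale = n_groups / (n_groups - 1)
    return [
        [0.0 if grp == g else w * scale for w, grp in zip(weights, groups)]
        for g in range(n_groups)
    ]


def jackknife_se(values, weights, replicate_weights):
    """Standard error from the spread of replicate estimates around the full-sample estimate."""
    full = weighted_mean(values, weights)
    r = len(replicate_weights)
    squared_devs = sum((weighted_mean(values, rw) - full) ** 2 for rw in replicate_weights)
    return math.sqrt((r - 1) / r * squared_devs)


# Hypothetical data: five respondents, each forming its own jackknife group.
answers = [0, 1, 1, 0, 1]
full_weights = [1.0] * 5
reps = delete_a_group_replicate_weights(full_weights, groups=[0, 1, 2, 3, 4], n_groups=5)
se = jackknife_se(answers, full_weights, reps)
```

With equal weights and one unit per group, this estimator reduces to the familiar standard error of a simple random sample mean, which is a convenient sanity check.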

B.1.6 Nonresponse Bias Analysis

To the extent that those who do and do not respond to surveys differ in important ways, there is a potential for nonresponse biases in estimates from survey data. The estimates from NHES:2023 are subject to bias because of unit nonresponse to both the screener and the topical surveys, as well as nonresponse to specific items. Per NCES statistical standards, a unit-level nonresponse bias analysis will be conducted if the NHES:2023 overall unit response rate (the screener response rate multiplied by the topical response rate) falls below 85 percent (as is expected). Additionally, any item with an item-level response rate below 85 percent will be subject to an examination of bias due to item nonresponse.

Unit nonresponse

The main way to identify and correct for nonresponse bias is through the adjustment of the sample weights for nonresponse. The adjustment for nonresponse will be computed through a categorical search algorithm called Chi-Square Automatic Interaction Detection (CHAID). CHAID begins by identifying the characteristic of the data that is the best predictor of unit nonresponse. Then, within the levels of that characteristic, CHAID identifies the next best predictor(s) of unit nonresponse, and so forth, until a tree is formed with all of the predictors that were identified at each step. The result is a division of the entire data set into cells by attempting to determine sequentially the cells that have the greatest discrimination with respect to the unit response rates. In other words, it divides the data set into groups so that the unit response rate within cells is as constant as possible, and the unit response rate between cells is as different as possible.

Since the variables considered for use as predictors of unit nonresponse must be available for both respondents and nonrespondents, demographic variables from the sampling frame provided by the vendor (including household education level, household race/ethnicity, household income, age of head of household, whether the household owns or rents the dwelling, and whether there is a surname and/or phone number present on the sampling frame) will be included in the CHAID analysis at the screener level. Additionally, the same set of predictors from the screener CHAID analysis, plus data from the screener (including number of children in the household), will be included in the CHAID analysis at the topical level. The results of the CHAID analysis will be used to statistically adjust estimates for nonresponse. Specifically, nonresponse-adjusted weights will be generated by multiplying each household’s and person’s unadjusted weight (the reciprocal of the probability of selection, reflecting all stages of selection) by the inverse response rate within its CHAID cell.12
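The cell-based adjustment can be illustrated as follows. The sketch assumes the CHAID cells have already been formed and takes the cell label as given; it does not implement CHAID itself. The field names are hypothetical, and the use of a weighted (rather than unweighted) response rate within each cell is an assumption.

```python
from collections import defaultdict


def nonresponse_adjusted_weights(cases):
    """Multiply each respondent's weight by the inverse response rate of its cell.

    cases: list of dicts with keys 'cell', 'weight', and 'responded'.
    Nonrespondents receive an adjusted weight of zero.
    """
    eligible = defaultdict(float)
    responding = defaultdict(float)
    for case in cases:
        eligible[case["cell"]] += case["weight"]
        if case["responded"]:
            responding[case["cell"]] += case["weight"]

    adjusted = []
    for case in cases:
        if case["responded"]:
            rate = responding[case["cell"]] / eligible[case["cell"]]
            adjusted.append(case["weight"] / rate)
        else:
            adjusted.append(0.0)
    return adjusted


# Hypothetical sample: two cells with different response rates.
sample = [
    {"cell": "A", "weight": 100.0, "responded": True},
    {"cell": "A", "weight": 100.0, "responded": True},
    {"cell": "A", "weight": 100.0, "responded": False},
    {"cell": "A", "weight": 100.0, "responded": False},
    {"cell": "B", "weight": 150.0, "responded": True},
    {"cell": "B", "weight": 50.0, "responded": False},
]
adjusted = nonresponse_adjusted_weights(sample)
```

Within each cell, the adjusted weights of respondents sum to the unadjusted weights of all eligible cases, so respondents stand in for the nonrespondents that resemble them on the cell-defining characteristics.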

The extent of potential unit nonresponse bias in the estimates will be analyzed in several ways. First, the percentage distribution of variables available on the sampling frame will be compared between the entire eligible sample and the subset of the sample that responded to the screener. While the frame variables are of unknown accuracy and may not be strongly correlated with key estimates, significant differences between the characteristics of the respondent pool and the characteristics of the eligible sample may indicate a risk of nonresponse bias. Respondent characteristics will be estimated using both unadjusted and nonresponse-adjusted weights (with and without the raking adjustment), to assess the potential reduction in bias attributable to statistical adjustment for nonresponse. A similar analysis will be used to analyze unit nonresponse bias at the topical level, using both sampling frame variables and information reported by the household on the screener.

In addition to the above, the magnitude of unit nonresponse bias and the likely effectiveness of statistical adjustments in reducing that bias will be examined by comparing estimates computed using nonresponse-adjusted weights to those computed using unadjusted weights. In this analysis, differences in estimates that show statistical significance will be reported for key survey estimates including, but not limited to, the following:

All surveys

Age/grade of child

Census region

Race/ethnicity

Parent 1 employment status

Parent 1 home language

Educational attainment of parent 1

Family type

Household income

Home ownership

Early Childhood Program Participation (ECPP)

Child receiving relative care

Child receiving non-relative care

Child receiving center-based care

Number of times child was read to in past week

Someone in family taught child letters, words, or numbers

Child recognizes letters of alphabet

Child can write own name

Child is developmentally delayed

Child has health impairment

Child has good choices for child care/early childhood programs

Parent and Family Involvement in Education (PFI)

School type: public, private, homeschool, virtual school

Whether school assigned or chosen

Child’s overall grades

Contact from school about child’s behavior

Contact from school about child’s school work

Parents participate in 5 or more activities in the child’s school

Parents report school provides information very well

About how child is doing in school

About how to help child with his/her homework

About why child is placed in particular groups or classes

About the family's expected role at child’s school

About how to help child plan for college or vocational school

Parents attended a general school meeting (open house), back-to-school night, meeting of parent-teacher organization

Parents went to a regularly scheduled parent-teacher conference with child’s teacher

Parents attended a school or class event (e.g., play, sports event, science fair) because of child

Parents acted as a volunteer at the school or served on a committee

Parents check to see that child's homework gets done

The next component of the bias analysis will include comparisons between respondent characteristics and known population characteristics from extant sources including the Current Population Survey (CPS) and the American Community Survey (ACS). Additionally, for substantive variables, weighted estimates will be compared to prior NHES administrations if available. While differences between NHES:2023 estimates and those from external sources as well as prior NHES administrations could be attributable to factors other than bias, differences will be examined in order to confirm the reasonableness of the 2023 estimates. The final component of the bias analysis will include a comparison of the base-weighted estimates between early and late respondents of the topical surveys.

Item nonresponse

In order to examine item nonresponse, all items with response rates below 85 percent will be identified. Alternative sets of imputed values will be generated for these items by imposing extreme assumptions on the item nonrespondents. For most items, two new sets of imputed values—one based on a “low” assumption, and one based on a “high” assumption—will be created. For most continuous variables, a “low” imputed value variable will be created by resetting imputed values to the value at the fifth percentile of the original distribution; a “high” imputed value variable will be created by resetting imputed values to the value at the 95th percentile of the original distribution. For dichotomous and most polytomous variables, a “low” imputed value variable will be created by resetting imputed values to the lowest value in the original distribution, and a “high” imputed value variable will be created by resetting imputed values to the highest value in the original distribution (e.g., 0 and 1, respectively, for dichotomous yes/no variables). Both the “low” imputed value variable distributions and the “high” imputed value variable distributions will be compared to the unimputed distributions. This analysis helps to place bounds on the potential for item nonresponse bias using “worst case” scenarios.
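The bounding approach can be sketched as follows. The function names are hypothetical. The sketch assumes that the "original distribution" means the reported (non-imputed) values and that percentiles are computed with Python's default quantile method; neither detail is specified above.

```python
import statistics


def bounding_values(reported, continuous):
    """Return the (low, high) values to substitute for imputed responses."""
    if continuous:
        # statistics.quantiles with n=20 returns 19 cut points at 5%, 10%, ..., 95%.
        cuts = statistics.quantiles(reported, n=20)
        return cuts[0], cuts[-1]
    # Dichotomous and most polytomous items: lowest and highest observed value.
    return min(reported), max(reported)


def low_high_variants(values, imputed_flags, continuous=False):
    """Create 'low' and 'high' versions of an item by resetting imputed values."""
    reported = [v for v, flag in zip(values, imputed_flags) if not flag]
    low, high = bounding_values(reported, continuous)
    low_variant = [low if flag else v for v, flag in zip(values, imputed_flags)]
    high_variant = [high if flag else v for v, flag in zip(values, imputed_flags)]
    return low_variant, high_variant


# Hypothetical yes/no item (1 = yes, 0 = no) with two imputed responses.
item = [1, 0, 1, 1, 0]
was_imputed = [False, False, True, False, True]
low_item, high_item = low_high_variants(item, was_imputed)
```

Comparing estimates from the two variants with the unimputed distribution gives the range within which the estimate could move if every item nonrespondent had answered at one extreme.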

B.2 Statistical Procedures for Collection of Information

This section describes the data collection procedures to be used in NHES:2023. These procedures represent a combination of best practices to maximize response rates based on findings from NHES:2012, NHES:2016, NHES:2017 Web Test, and NHES:2019, within NCES’s cost constraints. NHES is a two-phase self-administered survey. In the first phase, households are screened to determine if they have eligible children. In the second phase, households with eligible children are provided a topical questionnaire. NHES employs multiple contacts with households to maximize response. These include an advance letter and up to four completion request mailings for the screener survey. Households that complete the screener on the web are automatically sampled for the topical survey and directly routed to the appropriate web topical questionnaire, while those that complete the paper survey and are selected for a topical survey subsequently receive topical request mailings. In addition, households will receive a reminder pressure sealed envelope after the initial mailing of a screener or topical questionnaire. New for NHES:2023, a fifth mailing will be included that will be an over-sized reminder postcard.13 Respondent contact materials are provided in Appendix 1, paper survey instruments in Appendix 2, and web screener and topical instruments in Appendix 3.

Screener Procedures

Advance letter. The NHES:2023 data collection will begin with the mailing of an advance notification letter in early January 2023. Advance letters have been found to increase response rates in the landline phone, paper, and web-first modes in previous NHES administrations.

Screener package. Following the advance letter, all sampled households will receive a screener package. Most households will receive an invitation to respond to the web survey in their first screener package. Figures 1-3 present the data collection plan for the web mode households. The initial mailing will include an invitation letter (which includes the web survey URL and log-in credentials) and a $5 cash incentive. However, for targeted choice-plus households, the package will also contain a paper questionnaire. The cover letter will explain to the household that they have the option to complete the survey by web or paper, but that the web survey will save the government money and thus is further incentivized (as detailed below).

Incentive. Many years of testing in NHES have shown the effectiveness of a small cash incentive on increasing response (see Part A, section A.9 of this submission). NHES:2023 will continue to use a $5 screener incentive.

Two incentive experiments will be conducted in NHES:2023 for a select number of cases. Both are intended to encourage sample members to respond via web or the helpdesk phone (Census Telephone Questionnaire Assistance (TQA) line) instead of by paper. In the first experiment (“targeted choice-plus”), lower response propensity sample members will be offered a $20 contingent incentive for responding to all the surveys they are eligible for via web or the TQA line. For example, if the household does not have an eligible child, they will receive the incentive for completing the screener online or by telephone. A household that does have an eligible child will receive the promised incentive for completion of the screener and topical surveys online or by telephone. In the second experiment (“early bird incentive”), a promised incentive of $20 cash will be offered for responding to all the surveys they are eligible for by web or telephone prior to March 14, 2023, the pull date for the third screener package (which is the package that switches to requesting paper response). The promised incentive for both experiments will be offered in addition to the $5 prepaid incentive and will be mailed to the respondent 3-5 weeks after they respond through the appropriate mode. Part A, section A.9 of this submission provides a detailed description of these experiments.

Reminders and nonresponse follow-up. The reminder and nonresponse follow-up protocol varies depending on the experimental treatment to which the case is assigned. The first reminder mailing, a pressure-sealed envelope, will be sent to sampled addresses approximately one week after the initial mailing. This pressure-sealed envelope will include the URL and log-in credentials to complete the web survey. Three nonresponse follow-up mailings follow this pressure-sealed envelope mailing. The mailings will contain reminder letters with URL and log-in credentials. The second reminder mailing will be sent to nonresponding households approximately two weeks after the pressure-sealed envelope. For most cases the second reminder mailing will be sent using rush delivery (FedEx or USPS Priority Mail14). However, cases receiving the choice-plus treatment in the targeted choice-plus condition will not receive FedEx at the second mailing; instead, approximately three weeks after the second mailing, a third reminder mailing will be sent to any nonresponding households targeted for the choice-plus treatment, using rush delivery. Most sampled cases will receive the third survey package by USPS. This mailing will include a paper screener questionnaire with a business reply envelope. The fourth screener questionnaire package will be sent to nonresponding households approximately three weeks after the third screener questionnaire mailing by USPS. Finally, a fifth screener mailing will be sent. For most cases this is a large reminder postcard with web login credentials and QR code that will be in a large postcard-size envelope. It was designed to allow sample members to prop the postcard in the keyboard while typing in the URL and web login information. For the alternate materials condition this fifth screener mailing will be slightly different; this mailing will be a large reminder postcard with one question asking if the household has children or youth 20 years or younger, and will include a return envelope.

Finally, if a sampled address has a phone number on the frame, two reminder calls will be conducted. The first reminder call will occur concurrently with the mailing date for the second screener package. However, for the targeted choice-plus condition cases, it will occur concurrently with the mailing date for the third screener package. The second reminder call will occur concurrently with the mailing date for the fifth screener mailing.

Language. To determine which addressees receive a bilingual screener package, the following criteria were used in NHES:2012, NHES:2016, and NHES:2019, and are proposed again for NHES:2023:

• First mailing criteria: An address is in the Hispanic stratum, or a Hispanic surname is associated with the address, or the address is in a Census tract where 10 percent or more of households meet the criterion of being linguistically isolated Spanish-speaking.

• Second mailing criteria: An address is in the Hispanic stratum, or a Hispanic surname is associated with the address, or the address is in a Census tract where 3 percent or more of households meet the criterion of being linguistically isolated Spanish-speaking.

• Third and fourth mailing criteria: An address is in the Hispanic stratum, or a Hispanic surname is associated with the address, or the address is in a Census tract where 2 percent or more of households meet the criterion of being linguistically isolated Spanish-speaking, or the address is in a Census tract where 2 percent or more of the population speak Spanish.

All pressure sealed envelopes and postcards will be bilingual.
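The mailing criteria above amount to a simple decision rule, sketched below. The function and parameter names are hypothetical, and tract percentages are expressed as proportions; the thresholds are the ones listed in the criteria.

```python
# Tract-level threshold for linguistically isolated Spanish-speaking
# households, by screener mailing number (from the criteria above).
ISOLATED_THRESHOLD = {1: 0.10, 2: 0.03, 3: 0.02, 4: 0.02}

# Additional tract-level threshold on Spanish speakers in the population,
# which applies to the third and fourth mailings only.
SPANISH_SPEAKING_THRESHOLD = 0.02


def gets_bilingual_package(mailing, hispanic_stratum, hispanic_surname,
                           share_isolated_spanish, share_spanish_speaking):
    """Return True if the address qualifies for a bilingual screener package."""
    if hispanic_stratum or hispanic_surname:
        return True
    if share_isolated_spanish >= ISOLATED_THRESHOLD[mailing]:
        return True
    if mailing in (3, 4) and share_spanish_speaking >= SPANISH_SPEAKING_THRESHOLD:
        return True
    return False
```

Because the thresholds loosen from the first mailing to the later ones, an address that does not qualify initially may still receive bilingual materials in a later mailing.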

Targeted addressee line. As an experiment, some households sampled for NHES:2023 will receive an addressee line and salutation (“Person living at this <CITY> address” and “Dear person living at this <CITY> address”, respectively) that are targeted to renters. Households in this group will follow the same sequence of contacts as those described above (two web invitations, pressure-sealed envelope, two paper survey invitations, and a fifth mailing). Materials sent to the treatment group for this experiment will include a different addressee and salutation line that should encourage renters to open the mailing and feel that the materials are meant for them to complete.

Topical Procedures

Topical survey mailings will follow procedures similar to the screener procedures. As described earlier, households with at least one eligible person will be assigned to one of two topical survey mailing groups:

Households that have been selected to receive an ECPP questionnaire and

Households that have been selected to receive a PFI questionnaire.

For the ECPP a split panel experiment will be conducted, as described in Part A, section A.2 of this submission. The tested ECPP questionnaires will differ depending on the mode in which the respondent completes the questionnaire. The questionnaires will test (1) revised child’s health items in both the web and paper instruments and (2) in the web instrument only, a single grid item at the start of the section asking about child care arrangements instead of an interleafed format.

Within each paper-respondent group, questionnaires will be mailed in waves to minimize the time between the receipt of the screener and the mailing of the topical. Regardless of wave, households with a selected sample member will have the same topical contact strategy. The initial topical mailing for sampled households will include a cover letter, questionnaire, business reply envelope, and a $5 or $15 cash incentive. A reminder/thank you postcard is then sent to all topical sample members. A total of three nonresponse follow-up mailings follow. All nonresponse follow-up mailings will contain a cover letter, replacement questionnaire, and business reply envelope. The third and fifth mailings will be sent rush delivery.

Incentive. The NHES:2011 Field Test, NHES:2012, NHES-FS, NHES:2016, and NHES:2019 showed that a $5 incentive was effective with most respondents at the topical level. The results from NHES:2011 Field Test indicated that, among later screener responders, the $15 incentive was associated with higher topical response rates compared to the $5 incentive. Based on these findings, we used an incentive model that provided a $5 topical incentive for early-screener responders and a $15 topical incentive for late-screener respondents for NHES:2016 and NHES:2019. As a result, for NHES:2023 we will offer late screener responders a $15 prepaid cash incentive with their first topical questionnaire mailing. All other respondents will receive $5 cash with their initial topical mailing.

As described above, a $20 contingent incentive will be offered to targeted choice-plus cases that respond via web or the TQA to all the surveys they are eligible for.

Language. Households completing the screener by paper will receive a topical package in English or Spanish depending on the language they used to complete the screener. Households that prefer to complete the topical questionnaire in a different language from that used to complete the screener will be able to contact the Census Bureau to request the appropriate questionnaire. Households responding by web will be able to toggle between Spanish and English at any time in the web topical instruments.

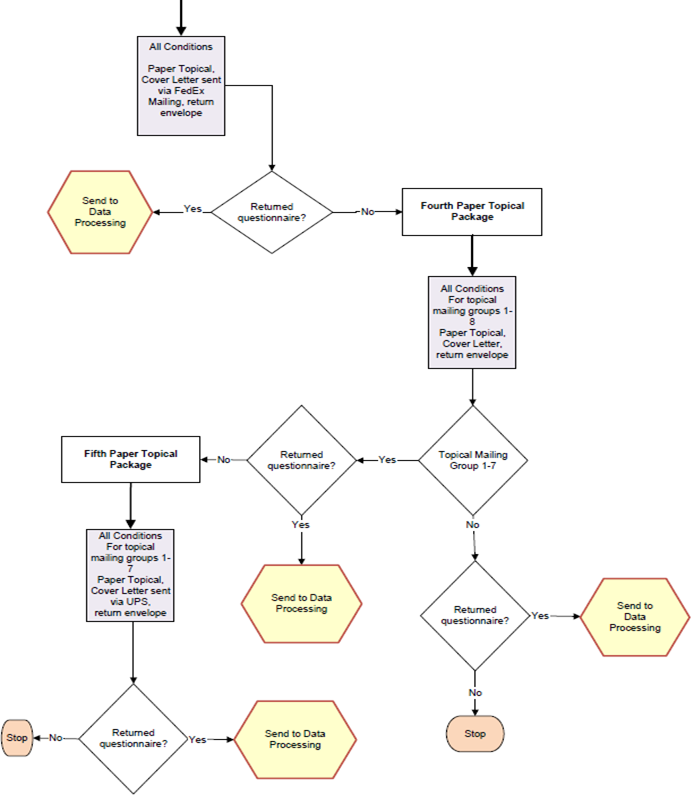

Reminders and nonresponse follow-up. Topical reminders and nonresponse follow-up activities will only be conducted for paper screener respondents. Web screener respondents will be routed directly to the topical survey and will not receive reminders if they do not start or complete the topical survey. Paper screener households that do not respond to the initial topical mailing will be mailed a second topical questionnaire approximately two weeks after the reminder postcard. If households that have been mailed a second topical questionnaire do not respond, a third package will be mailed by rush delivery (FedEx or USPS Priority Mail) approximately three weeks after the second mailing. Most households will be sent packages via FedEx delivery; only those households with a P.O. Box-only address will be sent USPS Priority Mail. If the household does not respond to the third mailing, a fourth mailing will be sent via USPS First Class mail. All households that received their first mailing before June 1, 2023 (topical mailing groups 1-7) and have received a fourth mailing but have not yet responded will receive a final fifth mailed package via UPS delivery, with P.O. Box-only addresses receiving packages via USPS Priority Mail. Figure 3 shows the topical data collection plan for mail treatment households for the ECPP and PFI.

Figure 1: Screener Data Collection Plan Flow 1

Figure 2: Screener Data Collection Plan Flow 2

Figure 3: Topical Mail Out Data Collection Plan

Web and mail survey returns will be processed upon receipt, and reports from the Census Bureau’s survey management system will be prepared at least weekly. The reports will be used to continually assess the progress of data collection.

B.3 Methods for Maximizing Response Rates

The NHES:2023 design incorporates several features to maximize response rates. This section discusses those features.

Total Design Method/Respondent-Friendly Design. Surveys that take advantage of respondent-friendly design have demonstrated increases in survey response (Dillman, Smyth, and Christian 2008; Dillman, Sinclair, and Clark 1993). To ensure a respondent-friendly design, we have honed the design of the NHES forms through multiple iterations of cognitive interviewing and field testing. These efforts have focused on the design and content of all respondent instruments and contact materials. In addition, in January 2018, an expert panel was convened at NCES to review contact materials center-wide. The NHES materials were reviewed, and the panel provided guidance for improvement to the letters that was applied to all NHES:2019 contact materials. Specifically, the panel advised that each letter convey one primary message, and that the sum total of all letters should constitute a campaign to encourage the sample member’s response. This is in contrast to previous contact strategies which tended to reiterate the same information in the letter for each stage of nonresponse follow-up.

Overall, the main components of the letters used in NHES:2019 will continue to be used in the NHES:2023, except for a few minor changes and one redesigned letter. Changes were made to the cover letter in the third screener mailing package for NHES:2023. Findings from the In-Person Study of NHES:2019 Nonresponding Households indicated that the language that said that a lack of response causes harm to NHES study results was found to be negative or condescending by some participants. The NHES:2023 letter removes this language. Findings from the In-Person Study of NHES:2019 Nonresponding Households also suggested that some sample members from households that did not include children assumed that the survey request was neither relevant to them nor intended for them and that they should not respond to it. Language has been added to the 2023 letters to make it clear that the study also wants to hear from households that do not have children. Additionally, some of the 2019 nonresponse study participants assumed that the federal government already had access to the kind of information asked about in the screener, and therefore there was no reason for them to respond to the survey request. Text explicitly noting the desire and need to hear from households was added to the letters to counteract these sample members’ interpretation of the request. All of these changes were tested in Spring 2021 (OMB# 1850-0803 v.290) and found to work well with participants and will be implemented in the 2023 administration.

As noted previously, we will include a respondent incentive in the initial screener invitation mailing. Respondent incentives will also be used in the initial topical mailing, where applicable. Many years of testing in NHES have shown the effectiveness of a small prepaid cash incentive for increasing response. The Census Bureau will maintain an email address and a TQA line to answer respondent questions or concerns. If a respondent chooses to provide their information to the TQA staff, staff will be able to collect the respondent’s information on Internet-based screener and topical instruments. Additionally, the web respondent contact materials, questionnaires, and web data collection instrument contain frequently asked questions (FAQs) and contact information for the Project Officer.

Engaging Respondent Interest and Cooperation. The content of respondent letters and FAQs is focused on communicating the legitimacy and importance of the study. Experience has shown that the NHES child survey topics are salient to most parents. In NHES:2012, Census Bureau “branding” was experimentally tested against Department of Education branding. Response rates to Census Bureau-branded questionnaires were higher than those to Department of Education-branded questionnaires, so Census Bureau branding was used in the NHES:2016 and NHES:2019 collections. NHES:2023 will continue to use Census Bureau-branded materials in the data collection for all except the final, fifth topical mailing, which will also have Department of Education branding.

Additionally, NCES has recently experimented with addressee and salutation lines in cover letters targeted to renters. A modified addressee line and salutation will be used for renter addresses, with the goal of encouraging response from this typically underrepresented subgroup. The primary motivation for conducting this experiment comes from the In-Person Study of NHES:2019 Nonresponding Households finding that—because renters were accustomed to regularly receiving mail for the property owner—some renters assumed that the standard NHES screener-phase addressee line and salutation (“Member of <CITY> household” and “Dear <CITY> household,” respectively) indicated that the mailings were meant for the property owner and not for them. Multiple addressee/salutation lines were cognitively tested in the Fall of 2021 (OMB# 1850-0803 v.296) and the addressee line “Person living at this <CITY> address” and salutation “Dear person living at this <CITY> address” were preferred over others and will be used for this experiment in the NHES:2023.

Nonresponse Follow-up. The data collection protocol includes several stages of nonresponse follow-up at each phase. In addition to the number of contacts, changes in contact method (USPS First Class mail, FedEx, and automated reminder phone calls) and materials (letters, pressure sealed envelopes, and oversized postcards) are designed to capture the attention of potential respondents.

B.4 Tests of Procedures and Methods

NHES has a long history of testing materials, methods, and procedures to improve the quality of its data. Section B.4.1 describes the NHES:2019 experiments that have most influenced the NHES:2023 design. Section B.4.2 describes the proposed experiments for NHES:2023.

The following experiments are planned for the NHES:2023 data collection:

Targeted choice-plus

Early bird incentive

Alternate materials

Targeted renter mailings

ECPP health items split panel

ECPP care arrangements items split panel

B.4.1 Experiments Influencing the Design of NHES:2023

NHES:2019 Updated Sequential Mixed Mode Experiments

NHES:2019 included experiments designed to increase the effectiveness of an “improved” sequential mixed mode design. Each of these experiments is briefly described below, along with its results and implications for the NHES:2023 design.

Opt-out screener and cover letters. This experiment was designed to evaluate whether using an opt-out screener would increase overall screener response rates, specifically for households flagged as not having children. All cases in this condition received an “opt-out” paper screener, which showed the first item (asking if any children live in the household) on the cover of the questionnaire rather than on the inside. The screener letters emphasized that the survey was very short for households without children.

The results of the opt-out screener and cover letters were the opposite of expectations. Among households flagged on the NHES sampling frame as not having children, the opt-out materials had no impact on the screener response rate. However, among households that were flagged as having children, the opt-out materials increased the screener response rate (by 5 percentage points). Hence, the NHES:2023 administration will not use the opt-out screener materials except within the alternate materials experiment discussed in B.4.2 below.

Advance Mailing Type. The purpose of this experiment was to determine the ideal number and types of advance mailings to send prior to the survey invitation in a web-push protocol. Sample members receiving the updated web-push mailing protocol were randomly assigned to one of three advance mailing conditions:

An advance letter-only condition, in which households were sent a letter a few days before the initial screener package letting them know that the survey invitation was coming soon.

An advance mailing campaign condition, in which households were sent two oversized, glossy postcards prior to the advance letter (for a total of three advance mailings). These postcards presented interesting statistics from NHES:2016, but they did not mention that the household had been sampled for NHES:2019. It was hypothesized that these mailings would increase familiarity with and build engagement with the NHES.

A no-advance mailings condition, in which households were not sent any mailings prior to the initial screener package. The purpose of this condition was to serve as a control condition in the context of an NHES web-push design.

The results from this experiment showed that while sending an advance letter did not increase the final screener response rate, it did increase early screener response (by 4 percentage points) and screener response by web (by 3 percentage points). This suggests that the advance letter helped reduce screener-phase costs by decreasing the number of follow-up mailings that needed to be sent and the number of paper questionnaire responses that needed to be processed. Sending an advance letter also increased the ECPP overall response rate (by 3 percentage points) but did not have a significant impact on the PFI overall response rate. Based on these results, the NHES:2023 administration will include an advance letter but will not incorporate the advance mailing campaign postcards.

Pressure-Sealed Envelope versus Reminder Postcard. In NHES:2019, cases assigned to the baseline web-push condition were sent a reminder postcard, and cases receiving the updated web-push mailing protocol were sent a pressure-sealed envelope. The purpose of both materials was to remind sample members who had not yet responded to do so and to thank those who had already responded. Both were sent out a week after the first NHES screener package. The pressure-sealed envelope included the web survey URL and the household’s unique web login credentials, but the reminder postcard did not (because the postcard format did not allow for sufficient protection of the household’s web login credentials). Sending a pressure-sealed envelope increased response to that reminder mailing by 4 percentage points as compared to sending a reminder postcard. Hence, NHES:2023 will use a pressure-sealed envelope as a reminder after the initial screener mailing, rather than a reminder postcard.

FedEx Timing Experiment. The goal of the NHES:2019 FedEx timing experiment was to determine the ideal timing for sending FedEx reminders in a mixed-mode design, balancing the potential response gains of sending FedEx mailings earlier with the additional shipping costs associated with doing so. Sample members in the updated mixed-mode condition were assigned to one of three conditions: random FedEx second (in which all sample members were sent the second screener package via FedEx), random FedEx fourth (in which all sample members were sent the fourth screener package via FedEx), and modeled FedEx (in which FedEx timing was based on a cost-weighted response propensity model that classified cases as FedEx-high and FedEx-low priority cases). In the modeled FedEx condition, FedEx-high-priority cases were sent the second screener package via FedEx and FedEx-low-priority cases were sent the fourth screener package via FedEx. These conditions also were compared to the baseline web-push condition, in which sample members were sent the third screener package via FedEx (a random FedEx third condition).

The final screener response rate in the random FedEx fourth condition was lower than in the random FedEx second, random FedEx third, and modeled FedEx conditions (by 1 to 2 percentage points). There were no statistically significant differences among the final screener response rates for the other three conditions.

However, in the random FedEx second condition, the screener response rate after the early mailings was higher than in the random FedEx third and FedEx fourth conditions (by 7 percentage points), suggesting that sending the FedEx mailing earlier helped reduce the number of follow-up mailings that needed to be sent and the number of paper questionnaire responses that needed to be processed.

The screener response rate by web also was higher in the random FedEx second condition than in the random FedEx third or fourth conditions (by 5 to 6 percentage points). Because web screener respondents tend to have higher topical response rates than paper screener respondents, this led to the overall response rates for both topical surveys being higher in the random FedEx second condition than in the random FedEx third or fourth conditions (by 2 to 3 percentage points).

The modeled FedEx results tended to fall between those of the random FedEx second and random FedEx fourth conditions. The cost per response in the modeled FedEx condition was slightly lower than in the random FedEx second condition. However, there do not appear to be sufficient cost savings associated with modeling FedEx timing to make it worth the increased operational complexity.

Based on these results, the NHES:2023 administration will send the second screener package via FedEx for all cases. Using FedEx at the second screener package helps to increase screener response by web, which in turn helps to increase the overall response rates. Although the response rate results were relatively similar for the random FedEx second and modeled FedEx conditions, delaying the FedEx mailing for some cases in the modeled condition did not result in much cost savings and would increase the operational complexity of the data collection.

B.4.2 Experimental Conditions Included in the Design of NHES:2023

To address declining response rates among households and to improve the quality of NHES data, a few experimental conditions will be included in the NHES:2023. Many of these conditions will seek to build on successes from the NHES:2019 administration. After discussing each condition, we provide the planned analysis. For all experimental comparisons except the ECPP split panel experiments, the minimum detectable difference in response rates will be 3 percentage points for the screener and 5 percentage points for the pooled ECPP-PFI topicals (as compared to the baseline condition or other conditions noted for the given analysis, and with 80 percent power and a significance level of 0.05). The minimum detectable difference for the ECPP split panel experiments is provided in the discussion below.
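As a rough illustration of how a minimum detectable difference relates to sample size, power, and significance level, the sketch below uses the standard normal approximation for comparing two independent proportions. The formula choice, the function name, the baseline rate of 0.5, the per-condition sample sizes, and the optional design effect are all illustrative assumptions and are not taken from the NHES:2023 design.

```python
from math import sqrt
from statistics import NormalDist


def min_detectable_difference(p, n1, n2, alpha=0.05, power=0.80, deff=1.0):
    """Approximate minimum detectable difference between two proportions.

    Uses a two-sided normal approximation with a common baseline rate p.
    deff is an optional design effect that inflates the variance (assumed 1.0).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, two-sided test
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    se = sqrt(deff * p * (1 - p) * (1 / n1 + 1 / n2))
    return (z_alpha + z_power) * se


# Hypothetical inputs: 10,000 cases per condition and a 50 percent response rate.
mdd = min_detectable_difference(p=0.5, n1=10_000, n2=10_000)
print(f"MDD: {100 * mdd:.1f} percentage points")
```

Under these assumed inputs the detectable difference shrinks as either condition's sample size grows, which is why smaller experimental conditions carry larger minimum detectable differences.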

Targeted choice-plus condition. As described in Part A, section A.2 and section B.2, NHES:2023 includes a targeted choice-plus condition that will test the utility of limiting the choice-plus option to relatively low-response-propensity (RP) cases while using a web-push protocol for the remaining cases. Each sampled address’s RP score will be determined using a logistic regression model estimated on NHES:2019 data. The approximately 89,500 cases not randomly assigned to other experimental conditions will be assigned to the targeted choice-plus condition. Among these 89,500 cases, all cases whose RP is below approximately the 57th percentile (about 51,250 cases) will receive a choice-plus protocol that uses a concurrent mixed-mode design and provides a $20 cash incentive for web or inbound telephone response, in addition to the NHES’s standard $5 prepaid incentive. The remaining cases whose RP is above approximately the 57th percentile will receive the baseline web-push protocol. This cut point was chosen to meet NCES’s goal of using choice-plus for approximately 25 percent of the total sample.
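The percentile-based assignment described above can be sketched as follows. The RP scores here are simulated random numbers standing in for the logistic regression predictions, which are not reproduced; the function name and the handling of the cut point are assumptions made for illustration only.

```python
import random


def assign_protocol(rp_scores, cut_percentile=57):
    """Return (labels, cut_value); cases scoring below the cut get choice-plus."""
    ranked = sorted(rp_scores)
    n_treated = round(len(ranked) * cut_percentile / 100)
    # Score of the first case at or above the cut; lower scores are treated.
    cut_value = ranked[n_treated] if n_treated < len(ranked) else float("inf")
    labels = ["choice-plus" if s < cut_value else "web-push" for s in rp_scores]
    return labels, cut_value


# Hypothetical RP scores standing in for the model's predictions.
rng = random.Random(2023)
scores = [rng.random() for _ in range(1_000)]
labels, cut_value = assign_protocol(scores)
n_choice_plus = labels.count("choice-plus")

# Arithmetic check on the figures quoted in the text.
share_of_condition = 51_250 / 89_500   # about 0.57 of the condition
implied_total_sample = 51_250 / 0.25   # total implied by the 25 percent goal
```

The last two lines show how the 57th percentile cut point and the 25 percent goal fit together: the treated cases are about 57 percent of the condition and about 25 percent of the total sample implied by that goal.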

Planned analyses for the targeted choice-plus condition

Does a targeted choice-plus design increase response rates overall, within the treated cohort (those whose RP score is below approximately the 57th percentile), or within the low-RP cohort (those whose RP score is below the 25th percentile)? This analysis will compare the early screener response rate, final screener response rate, and final topical response rate between the choice-plus condition and the baseline condition for each of the three groups (overall, treated, low-RP).

Does a targeted choice-plus design change the distribution of the mode of screener responses overall, within the treated cohort, or within the low-RP cohort? This analysis will compare the percent of screener respondents responding to the screener by web or telephone between those in the targeted choice-plus condition and those in the baseline condition, for all cases, for the treated cohort, and for the low-RP cohort.

Does using choice-plus only for lower-RP cases reduce screener-phase nonresponse bias over the whole condition? This analysis will compare R-indicators and the distribution of frame variables among screener respondents between all cases in the targeted choice-plus condition and all cases in the baseline condition.

Does using choice-plus only for lower-RP cases affect costs over the whole condition, relative to using web-push or choice-plus for all cases? This analysis will compare cost data (cost per case, cost per screener response, and cost per topical response) among the NHES:2023 choice-plus condition, the NHES:2023 baseline condition, and the NHES:2019 $20 choice-plus condition. The NHES:2019 $20 choice-plus condition will be used for some cost comparisons, since NHES:2023 will not include a condition that uses choice-plus for all cases regardless of their RP score. This comparison will be partially confounded by the fact that the NHES:2023 choice-plus condition will diverge from the NHES:2019 choice-plus condition in a few respects. However, these differences are minor enough that it should be possible to attain a reasonable estimate of the cost reduction associated with using choice-plus only for low-RP cases. If necessary, the comparison can be adjusted to account for general inflation in data collection costs from NHES:2019 to NHES:2023.
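For readers unfamiliar with the R-indicators mentioned in the planned analyses above, the sketch below shows one common formulation, R = 1 - 2S, where S is the standard deviation of estimated response propensities. How propensities will actually be estimated for NHES:2023 is not stated here; estimating them as response rates within cells of a single frame variable, along with the cell labels and the toy data, is an assumption made purely for illustration.

```python
from math import sqrt


def cell_propensities(cells, responded):
    """Estimate each case's propensity as the response rate of its frame cell."""
    totals, responses = {}, {}
    for cell, r in zip(cells, responded):
        totals[cell] = totals.get(cell, 0) + 1
        responses[cell] = responses.get(cell, 0) + r
    return [responses[cell] / totals[cell] for cell in cells]


def r_indicator(propensities):
    """R = 1 - 2 * S, with S the sample standard deviation of the propensities."""
    n = len(propensities)
    mean = sum(propensities) / n
    variance = sum((p - mean) ** 2 for p in propensities) / (n - 1)
    return 1 - 2 * sqrt(variance)


# Hypothetical frame cells and response outcomes (1 = responded, 0 = did not).
cells = ["renter"] * 4 + ["owner"] * 4
responded = [1, 0, 0, 0, 1, 1, 1, 0]
r_value = r_indicator(cell_propensities(cells, responded))
print(f"R-indicator: {r_value:.3f}")
```

A value closer to 1 indicates response propensities that vary less across frame subgroups, so comparing the indicator between conditions gives a summary of which protocol yields a more balanced respondent pool.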

Early bird incentive condition. As described in Part A, section A.2, NHES:2023 will also include an experimental condition designed to test the utility of a promised incentive contingent on response by a specified deadline, within a web-push protocol. The promised incentive will be $20 cash. It will be offered in addition to the $5 prepaid incentive that is sent with the initial screener package. To receive the incentive, sample members will need to respond to the survey by web or telephone prior to March 14, 2023, the pull date for the third screener package. There will be 53,000 cases randomly assigned to this condition. The deadline for obtaining the promised incentive will be communicated in the first two screener packages and in the pressure-sealed envelope.
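The eligibility rule for the promised incentive can be expressed as a short check. This is a minimal sketch: the function and constant names are hypothetical, and reading "prior to March 14, 2023" as strictly before that date is an assumption.

```python
from datetime import date

EARLY_BIRD_DEADLINE = date(2023, 3, 14)  # pull date for the third screener package
ELIGIBLE_MODES = {"web", "telephone"}    # paper responses do not qualify
PREPAID_INCENTIVE = 5                    # dollars, sent with the initial package
PROMISED_INCENTIVE = 20                  # dollars, contingent on early response


def total_incentive(mode, response_date):
    """Total dollars a responding household receives in this condition."""
    # Assumption: "prior to" the deadline means strictly before that date.
    earned = mode in ELIGIBLE_MODES and response_date < EARLY_BIRD_DEADLINE
    return PREPAID_INCENTIVE + (PROMISED_INCENTIVE if earned else 0)


print(total_incentive("web", date(2023, 3, 1)))     # early web response
print(total_incentive("paper", date(2023, 3, 1)))   # early paper response
print(total_incentive("web", date(2023, 3, 14)))    # web response on the pull date
```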

Planned analyses for the early bird incentive condition

The planned analyses will compare results of the early bird incentive condition to (a) the baseline condition and (b) the targeted choice-plus condition. Results will be compared to the targeted choice-plus condition because there is interest in assessing whether an early bird incentive can increase the screener response rate as much as a choice-plus protocol would—but without the expense of mailing and processing additional paper questionnaires that is incurred in a choice-plus protocol.

Does an early bird incentive increase response rates overall or within the low-RP cohort? This analysis will compare the early screener response rate (prior to the incentive deadline), final screener response rate, and final topical response rate between (a) the early bird incentive and baseline conditions and (b) the early bird incentive and targeted choice-plus conditions, for all cases and for those in the low-RP cohort.

Does an early bird incentive change the distribution of the mode of screener responses overall or within the low-RP cohort? This analysis will compare the percent of screener respondents responding to the screener by web or telephone between (a) the early bird incentive and baseline conditions and (b) the early bird incentive and targeted choice-plus conditions, for all cases and for those in the low-RP cohort.