
Generic Clearance for the Collection of Quantitative Data on Tobacco Products and Communications


OMB: 0910-0810

U.S. Food and Drug Administration

Copy Testing of Tobacco Prevention and Cessation Advertisements Research Study

OMB Control Number 0910-NEW

SUPPORTING STATEMENT B

B. Statistical Methods

1. Respondent Universe and Sampling Methods

The respondent universe for this study is youth aged 13 to 17 years who are current users of electronic nicotine delivery systems (ENDS) or cigarettes, susceptible never triers of ENDS or cigarettes, or susceptible lifetime users of ENDS or cigarettes. The study will recruit youth ages 13-17 through their parents using an online survey panel and youth ages 15-17 directly through social media advertising. The specific eligibility criteria vary slightly between these two recruitment sources, as shown in Table 1. The screener survey will also include questions on race, ethnicity, gender identity, and state of residence to ensure that the sample is reasonably diverse on these characteristics.

The study will use a convenience sample rather than a probability sample. We do not intend to generate nationally representative results or precise estimates of population parameters from the study; generating a representative sample of the size necessary for this study (e.g., using random digit dialing or a similar method) would be cost prohibitive. A representative sample is also unnecessary for a copy testing study, which compares reactions to ad versions rather than estimating population values.

Table 1. Eligibility and Exclusion Criteria for Youth Participants, by Recruitment Source

| Recruitment Source | Eligibility Criteria for Youth | Exclusion Criteria for Youth |
|---|---|---|
| Panel via parent | Must be: | Excluded if: |
| Social media direct to youth | Must be: | Excluded if: |

¹ Vaped or smoked cigarettes on 1 or more of the past 30 days.

² Never vaped or smoked cigarettes and responds anything but “Definitely not” to at least 1 of 3 survey items about susceptibility (whether they will vape/smoke in the next year, will try vaping/smoking soon, and would try vaping/smoking if offered by a friend).

³ Has vaped or smoked cigarettes at least once in lifetime and responds anything but “Definitely not” to at least 1 of the 3 susceptibility items.

⁴ Never vaped or smoked cigarettes and responds “Definitely not” to all 3 susceptibility items.

⁵ Has vaped or smoked cigarettes, but not in the past 30 days, and responds “Definitely not” to all 3 susceptibility items.

⁶ Defined as having smoked 100 cigarettes in lifetime.
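Read together, footnotes 1 to 5 define a decision rule for grouping screener respondents. The Python sketch below is one hypothetical encoding of that rule: the function, argument, and category names are assumptions, susceptibility responses are assumed to be stored as their response text, and footnote 6 is not modeled because the Table 1 cells that reference it were not recoverable. Current use is checked first because the lifetime-use definition in footnote 3 would otherwise also match current users.

```python
from enum import Enum


class ScreenerGroup(Enum):
    """Hypothetical labels for the groups defined in the Table 1 footnotes."""
    CURRENT_USER = "current user"                               # footnote 1
    SUSCEPTIBLE_NEVER_TRIER = "susceptible never trier"         # footnote 2
    SUSCEPTIBLE_LIFETIME_USER = "susceptible lifetime user"     # footnote 3
    NONSUSCEPTIBLE_NEVER_TRIER = "non-susceptible never trier"  # footnote 4
    NONSUSCEPTIBLE_FORMER_USER = "non-susceptible former user"  # footnote 5


def classify_respondent(days_used_past_30: int, ever_used: bool,
                        susceptibility: list[str]) -> ScreenerGroup:
    """Assign a screener respondent to a footnote group (illustrative only).

    days_used_past_30: days in the past 30 on which the youth vaped or smoked.
    ever_used: whether the youth has ever vaped or smoked cigarettes.
    susceptibility: response text for the 3 susceptibility items.
    """
    if len(susceptibility) != 3:
        raise ValueError("expected responses to exactly 3 susceptibility items")
    # Footnote 1: use on 1 or more of the past 30 days.
    if days_used_past_30 >= 1:
        return ScreenerGroup.CURRENT_USER
    # Susceptible: anything but "Definitely not" on at least 1 of the 3 items.
    susceptible = any(r != "Definitely not" for r in susceptibility)
    if not ever_used:
        return (ScreenerGroup.SUSCEPTIBLE_NEVER_TRIER if susceptible
                else ScreenerGroup.NONSUSCEPTIBLE_NEVER_TRIER)
    # Ever used, but not in the past 30 days.
    return (ScreenerGroup.SUSCEPTIBLE_LIFETIME_USER if susceptible
            else ScreenerGroup.NONSUSCEPTIBLE_FORMER_USER)
```

Under this reading, the first three groups correspond to the respondent universe described above, while groups 4 and 5 fall outside it.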

2. Procedures for the Collection of Information

This section provides an overview of the study procedures, describes the degree of accuracy required for the study, and discusses the estimation procedures.

2a. Study Procedures

This study includes the following research questions:

RQ1: To what extent do ad reactions vary between pre- and post-production ad versions? Compared with pre-production ads, are post-production versions perceived as less, more, or equally effective?

RQ2: To what extent are ad reactions for pre-production ads associated with ad reactions for post-production ads?

To address the research questions above, we will administer an online survey to youth aged 13-17 who meet the eligibility criteria described above in Table 1. As part of the survey, participants will be randomly assigned to one of four study conditions:

ENDS pre-production ads

ENDS post-production ads

Cigarette (CIGS) pre-production ads

Cigarette (CIGS) post-production ads

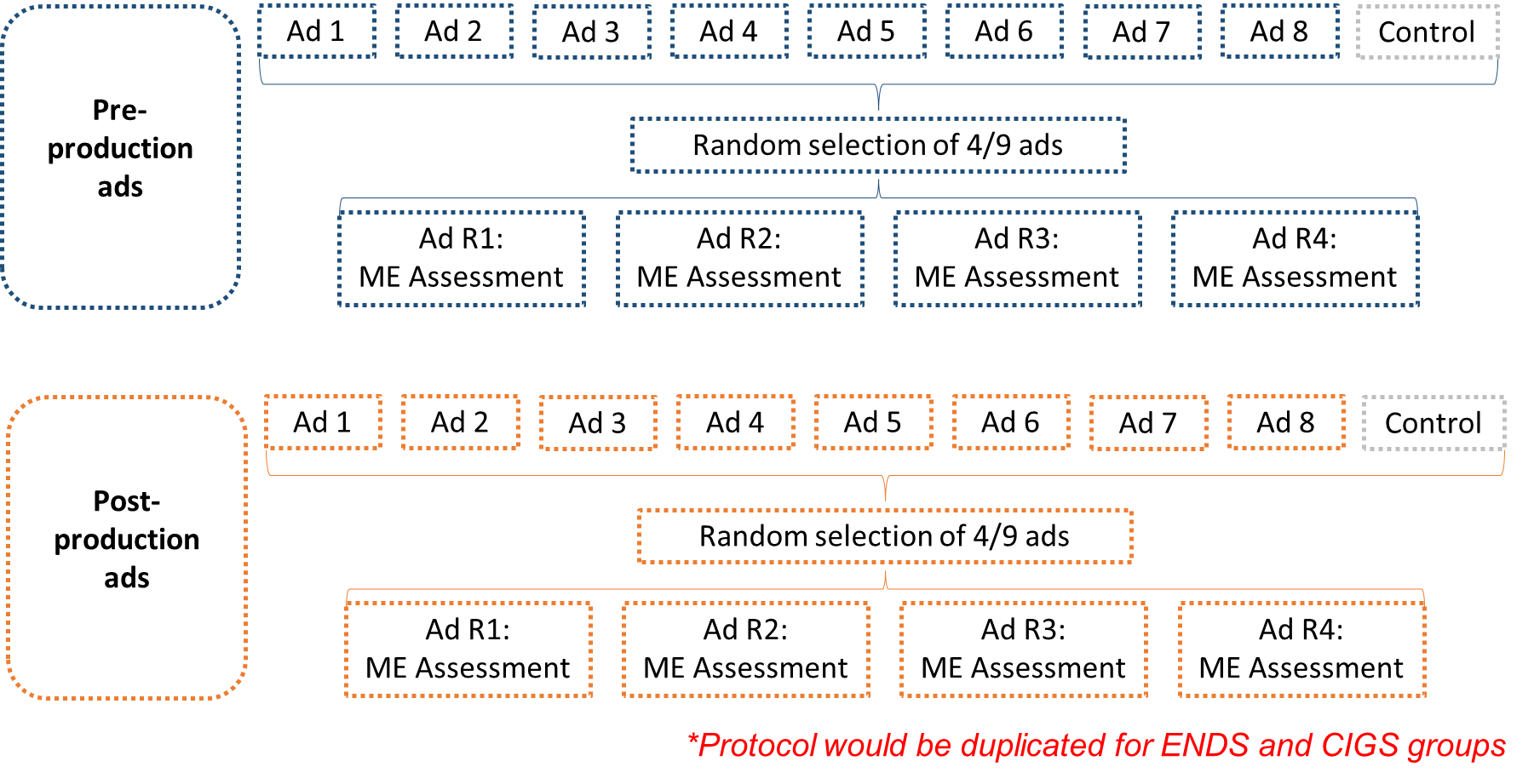

Within each study condition, participants will be shown a random selection of 4 ads from a set of 9 ads (8 from FDA’s Real Cost campaign and 1 “control” ad with informational text and voiceover only), with the ads varying by the tobacco product they address (ENDS vs. CIGS) and production status (pre-production vs. post-production). After viewing each ad, participants will answer message evaluation (ME) questions about it. Some items may be dropped based on skip-pattern testing and instrument length. Survey testing will improve clarity (e.g., by correcting typos and revising confusing wording) and will ensure that all survey questions are in accordance with the instruments approved by OMB and IRB. The survey will also assess awareness of antitobacco media campaigns and demographic characteristics. Exhibit 1 illustrates the study design.

Exhibit 1. Study Design
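The random assignment and ad selection described above can be sketched in Python as follows. This is a hypothetical illustration, not the study's survey-platform logic: it assumes simple (unblocked) randomization across the four conditions, a separate pool of 9 ads per condition, and made-up ad identifiers, since the document names neither the ads nor the randomization scheme.

```python
import random

# The four study conditions listed above.
CONDITIONS = [
    "ENDS pre-production",
    "ENDS post-production",
    "CIGS pre-production",
    "CIGS post-production",
]

# Hypothetical ad IDs: 8 campaign ads plus 1 control ad per condition.
EXAMPLE_POOLS = {
    condition: [f"{condition} ad {i}" for i in range(1, 9)] + [f"{condition} control"]
    for condition in CONDITIONS
}


def assign_participant(ad_pools: dict[str, list[str]], rng: random.Random,
                       ads_per_participant: int = 4) -> tuple[str, list[str]]:
    """Randomly assign one participant to a condition and draw the ads to show.

    Simple randomization is an assumption; the document states only that
    assignment is random.
    """
    condition = rng.choice(list(ad_pools))
    # Draw without replacement; the returned order is also random and could
    # serve as the viewing order.
    ads = rng.sample(ad_pools[condition], ads_per_participant)
    return condition, ads
```

For example, `assign_participant(EXAMPLE_POOLS, random.Random(2024))` returns a condition name and four distinct ad IDs from that condition's pool. Passing a seeded `random.Random` instance keeps assignments reproducible for auditing.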

Study participants will be recruited through one of these methods:

Panel Via Parent: An online survey panel vendor will identify adult panel members who may have children in the appropriate age range of 13-17 (see Sample E-mail Prompt attachment). The parent will provide affirmative consent for the youth to participate (see Parent and Legal Guardian ICF attachment), and the youth will then complete a screener (see Screener attachment) to determine eligibility, provide affirmative assent (see Assent ages 13-17 attachment), and complete the online survey (see Survey Instrument attachment). In appreciation of their child’s participation in the survey, adult panelists will receive non-monetary points that can be redeemed for goods, services, or cash.

Social Media Direct to Youth: Youth ages 15-17 recruited via social media will see social media ads (see Sample Social Media ads attachment), click on them, complete a screener to determine eligibility, provide affirmative assent (if eligible and interested in participating), and complete the online survey. As a token of appreciation, participants who qualify and complete the survey will receive one $10 digital gift card from Amazon within 1-2 weeks of survey completion.

For both recruitment methods, no effort will be made to convert refusals. If the parent or youth indicates that they do not wish to participate, they will not receive a message prompting them to reconsider; the screener simply closes.

2b. Degree of Accuracy Required for the Study

To address our primary research question (RQ1), we will examine whether ME scores for pre-production ads are substantively different from those for post-production ads using a statistical method called equivalence testing.¹ In equivalence tests, upper and lower equivalence bounds are specified based on the smallest practically meaningful difference, and the test is used to reject (or fail to reject) the presence of effects large enough to be considered meaningful.

To determine the sample size needed to address RQ1, we estimated the sample size required to test whether the pre- and post-production ad viewing groups are “equivalent” on ME scores, given the smallest effect size of interest, the variance of the measure, and the equivalence bounds. We estimated the smallest effect size of interest using aggregate perceived effectiveness² scores from a previous unpublished vaping prevention ad copy testing study conducted by FDA and RTI. Specifically, we calculated the difference between the overall mean perceived effectiveness score (mean = 3.93) and the lowest mean score for any ad tested (mean = 3.31), which served as a proxy for pre-production ads, resulting in a mean difference of 0.62 (3.93 − 3.31).

In the proposed study, individuals are randomly assigned to one of two ad viewing groups (pre-production vs. post-production) within each ad type (ENDS and CIGS). An ME score is recorded for each individual, and the difference in mean ME scores between the two groups is calculated. We test the null hypothesis that the difference in means is at least 0.62 or at most -0.62. Rejecting this null hypothesis provides statistical evidence for the alternative hypothesis that the difference in means lies between -0.62 and 0.62. Because differences in this range are not considered practically meaningful, rejecting the null hypothesis leads us to consider the two groups “equivalent” on this measure.
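In symbols, writing δ for the population difference in mean ME scores between the two groups and Δ = 0.62 for the equivalence bound, the hypotheses and the two one-sided test statistics can be written as below. The estimated standard error of the observed difference and the level α = 0.05 are assumptions of this sketch (the document does not state them), chosen to match the 90% confidence intervals used in Exhibit 2.

```latex
\begin{aligned}
H_0 &: \delta \le -\Delta \ \text{or} \ \delta \ge \Delta, \qquad \Delta = 0.62,\\
H_1 &: -\Delta < \delta < \Delta,\\[4pt]
t_L &= \frac{\hat{\delta} + \Delta}{\widehat{\mathrm{SE}}(\hat{\delta})}, \qquad
t_U = \frac{\hat{\delta} - \Delta}{\widehat{\mathrm{SE}}(\hat{\delta})}.
\end{aligned}
```

H₀ is rejected, and the groups declared equivalent, only if t_L is significantly greater than 0 and t_U is significantly less than 0, each in a one-sided test at level α. This is the same as requiring the 100(1 − 2α)% confidence interval for δ, here the 90% interval, to lie entirely within (−Δ, Δ).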

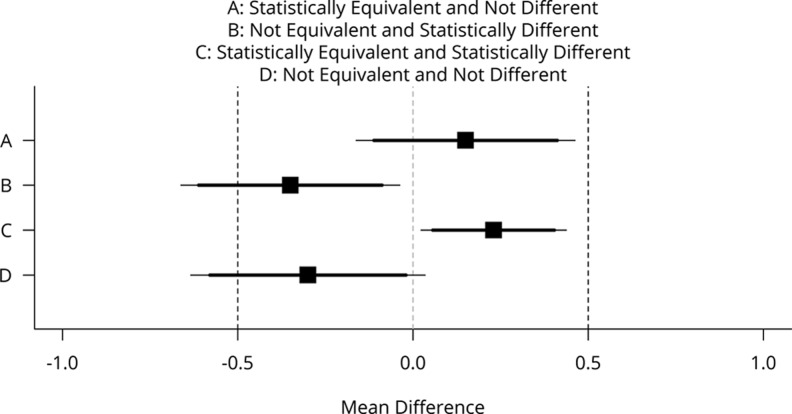

One test of equivalence is the two one-sided tests (TOST) procedure described by Schuirmann (1987)³ and presented in a more accessible form by Lakens (2017).⁴ Exhibit 2 (copied from Lakens, 2017) displays the four possible combinations of two outcomes: (1) whether the difference in means is statistically different from zero, and (2) whether the means in the two groups are statistically equivalent. For example, outcome A in Exhibit 2 is not different from zero, because its 95% confidence interval contains 0, and is equivalent, because its 90% confidence interval lies entirely between the equivalence bounds, in this case -0.5 and 0.5.

Exhibit 2: Depiction of the TOST procedure (Lakens, 2017)

Mean differences (black squares) and 90% confidence intervals (CIs; thick horizontal lines) and 95% CIs (thin horizontal lines) with equivalence bounds ΔL = −.5 and ΔU = .5 for four combinations of test results that are statistically equivalent or not and statistically different from zero or not.
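A minimal Python sketch of the TOST decision follows. It is an illustration rather than the study's analysis code: it uses a large-sample normal (z) approximation with an unpooled standard error in place of the t-based tests described by Schuirmann and Lakens, and it assumes α = 0.05, so the equivalence decision matches checking whether the 90% confidence interval lies inside the bounds, as in Exhibit 2. The function name `tost_z` and its parameters are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean, variance


def tost_z(group_a: list[float], group_b: list[float], bound: float = 0.62,
           alpha: float = 0.05) -> dict:
    """Two one-sided tests (TOST) for equivalence of two independent means.

    Large-sample z approximation with an unpooled standard error.
    """
    diff = fmean(group_a) - fmean(group_b)
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    z = NormalDist()
    # Lower test: H0 diff <= -bound versus H1 diff > -bound.
    p_lower = 1 - z.cdf((diff + bound) / se)
    # Upper test: H0 diff >= bound versus H1 diff < bound.
    p_upper = z.cdf((diff - bound) / se)
    p_tost = max(p_lower, p_upper)
    # Same decision as checking that the (1 - 2*alpha) CI lies inside the bounds.
    half_width = z.inv_cdf(1 - alpha) * se
    return {
        "diff": diff,
        "p_tost": p_tost,
        "ci": (diff - half_width, diff + half_width),
        "equivalent": p_tost < alpha,
    }
```

Reporting the larger of the two one-sided p-values is the usual TOST convention: equivalence is concluded only when both one-sided nulls are rejected.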

To determine the combinations of sample size and population mean difference that yield 80% power to reject the null hypothesis that the two study groups are not equivalent, we did the following for each combination of sample size and standard deviation:

simulated 3,000 trials of the data;

in each trial, tested the null hypothesis that the difference in means is at least 0.62 or at most -0.62;

using a grid search, calculated the proportion of trials in which the null hypothesis was rejected for different values of the population mean difference; and

identified the mean difference at which the null hypothesis is rejected 80% of the time as the difference with 80% power.
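The simulation steps above can be sketched in Python as follows. This is a hedged illustration, not the code used to produce Table 2: it assumes normally distributed ME scores, α = 0.05, a large-sample z version of TOST, and the same standard deviation in both groups, and all function names are hypothetical. Because the study's exact simulation settings are not fully specified here, the sketch should not be expected to reproduce Table 2's values.

```python
import random
from math import sqrt
from statistics import NormalDist
from typing import Optional


def _mean_var(xs: list[float]) -> tuple[float, float]:
    """Sample mean and unbiased sample variance."""
    n = len(xs)
    m = sum(xs) / n
    return m, sum((x - m) ** 2 for x in xs) / (n - 1)


def tost_rejects(a: list[float], b: list[float], bound: float, alpha: float) -> bool:
    """True if both one-sided z tests reject, i.e., equivalence is concluded."""
    mean_a, var_a = _mean_var(a)
    mean_b, var_b = _mean_var(b)
    diff = mean_a - mean_b
    se = sqrt(var_a / len(a) + var_b / len(b))
    crit = NormalDist().inv_cdf(1 - alpha)
    return (diff + bound) / se > crit and (diff - bound) / se < -crit


def simulated_power(true_diff: float, n_per_group: int, sd: float,
                    bound: float = 0.62, alpha: float = 0.05,
                    trials: int = 3000, seed: int = 0) -> float:
    """Proportion of simulated trials in which non-equivalence is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Normally distributed ME scores whose group means differ by true_diff.
        a = [rng.gauss(true_diff, sd) for _ in range(n_per_group)]
        b = [rng.gauss(0.0, sd) for _ in range(n_per_group)]
        rejections += tost_rejects(a, b, bound, alpha)
    return rejections / trials


def diff_with_target_power(n_per_group: int, sd: float, target: float = 0.80,
                           grid: Optional[list[float]] = None,
                           **kwargs) -> Optional[float]:
    """Grid search for the largest true difference with simulated power >= target.

    Assumes power falls as the true difference approaches the bound, so the
    search stops at the first grid value whose power drops below target.
    """
    if grid is None:
        grid = [i / 100 for i in range(63)]  # 0.00 to 0.62 in steps of 0.01
    best = None
    for d in sorted(grid):
        if simulated_power(d, n_per_group, sd, **kwargs) < target:
            break
        best = d
    return best
```

For example, `diff_with_target_power(200, 1.0)` searches true differences from 0.00 to 0.62 in steps of 0.01 with 3,000 trials each; reducing `trials` or coarsening `grid` trades precision for speed.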

We calculated power for three values of the standard deviation (SD) of ME scores in each study group: 1, 1.25, and 1.5. Because the study groups are independent, the variance of the difference is the sum of the two group variances. The SD of the difference in ME scores between study groups is therefore computed as follows, illustrated for the case where the SD in each arm is 1:

$$\sigma_{\text{diff}} = \sqrt{\sigma_{\text{pre}}^2 + \sigma_{\text{post}}^2} = \sqrt{1^2 + 1^2} = \sqrt{2} \approx 1.41$$

Table 2 shows, for each combination of sample size and standard deviation, the population mean difference at which the test has 80% power to reject the null hypothesis that the two study groups are not equivalent.

Table 2. Combinations of sample size and mean difference that yield 80% power to reject the null hypothesis that the two study groups are not equivalent

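| N per Study Group (pre- vs. post-production) | Standard Deviation = 1 | Standard Deviation = 1.25 | Standard Deviation = 1.5 |
|---|---|---|---|
| 50 | 0.466 | 0.423 | 0.318 |
| 75 | 0.491 | 0.458 | 0.417 |
| 100 | 0.506 | 0.478 | 0.449 |
| 125 | 0.520 | 0.495 | 0.470 |
| 150 | 0.528 | 0.504 | 0.480 |
| 175 | 0.532 | 0.510 | 0.488 |
| 200 | 0.538 | 0.518 | 0.497 |
| 225 | 0.542 | 0.523 | 0.503 |
| 250 | 0.547 | 0.528 | 0.510 |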

These results demonstrate that with N = 200 per study group and a standard deviation of 1, the study has 80% power to reject the null hypothesis of non-equivalence at a population mean difference of 0.538, which is close to the 0.62 equivalence bound; larger samples yield diminishing returns (e.g., increasing N to 250 per group raises this value only to 0.547). Based on these results, we will allocate N = 200 to each study group (pre-production vs. post-production) within each of the two ad types (ENDS and CIGS), for a total planned sample size of 800 (200 × 2 study groups × 2 ad types). Although the planned sample size is 800, we have conservatively assumed a sample of 900 in case of recruitment overages.

2c. Estimation Procedures

Statistical analyses will be conducted to address the study’s research questions.

For RQ1 (To what extent do ad reactions vary between pre- and post-production ad versions? Compared with pre-production ads, are post-production versions perceived as less, more, or equally effective?), we will use the TOST procedure described above to estimate differences in mean ME scores between the pre- and post-production study groups and to test whether scores in the two groups are substantively “equivalent.” Analyses will be repeated separately for the ENDS and CIGS ad groups.

For RQ2 (To what extent are ad reactions for pre-production ads associated with ad reactions for post-production ads?), we will descriptively examine correlations and statistical associations between pre- and post-production ME scores.

3. Methods to Maximize Response Rates and Deal with Nonresponse

To maximize participation, we will incorporate best practices from similar online surveys into our data collection procedures. These include:

Implementing a soft launch of the online survey with a small number of selected panel members to detect and resolve any technical difficulties.

Keeping the questionnaire at a reasonable length to minimize break-offs.

Including a brief introduction to the study that identifies FDA as the sponsor, states the purpose of the study, and provides toll-free telephone numbers for participants to call RTI with any questions about the study or their rights as a study participant.

Inviting panel members who appear to be eligible based on their member profile. As part of the process of registering with the survey panel, panelists provide information about a range of sociodemographic characteristics, including whether or not they have children, that can be used to target particular groups. The panel provider actively manages panelist profiles, requesting updated information on an ongoing basis to ensure that profile information is up to date.

Recruiting verified panelists. The panel provider uses a double opt-in registration process whereby panelists are invited to participate and then must sign up through an opt-in confirmation e-mail. This process protects against fraudulent account registrations and ensures that panelists are actively motivated to participate in surveys.

For participants recruited via social media, employing targeted advertising to best reach the desired sample.

To minimize nonresponse, the panel provider will conduct ongoing monitoring of response levels and drop-off rates. The panel provider will work with RTI project staff to address any problems that arise throughout the course of the collection of information.

4. Test of Procedures or Methods to be Undertaken

RTI International will conduct rigorous internal testing of the online survey instruments before fielding. Survey testers will use an online test version of the instrument to verify that skip patterns function properly and that all survey questions are worded correctly and in accordance with the instrument approved by IRB and OMB. The panel provider, Lightspeed, will begin data collection with a soft launch, sending invitations to a small subset of panel members and reviewing their responses to ensure that the online survey is working properly.

5. Individuals Consulted on Statistical Aspects and Individuals Collecting and/or Analyzing Data

The following individuals inside the agency have been consulted on the design and statistical aspects of this information collection as well as plans for data analysis:

Emily Peterson

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-402-9281

E-mail:

Lindsay Pitzer

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-620-9526

E-mail:

Emily Sanders

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-402-4269

E-mail:

Anh (Bao) Zarndt

Office of Health Communication & Education

Center for Tobacco Products

Food and Drug Administration

10903 New Hampshire Avenue

Silver Spring, MD 20993

Phone: 240-994-2023

E-mail:

The following individuals outside of the agency have been consulted on questionnaire development and/or will be collecting and/or analyzing data:

Matt Eggers

RTI International

3040 Cornwallis Road

Research Triangle Park, NC 27709

Phone: 919-541-6683

E-mail:

Jim Nonnemaker

RTI International

3040 Cornwallis Road

Research Triangle Park, NC 27709

Phone: 919-541-6683

E-mail:

1 Lakens, D. (2017). Equivalence tests: A practical primer for t tests, correlations, and meta-analyses. Social Psychological and Personality Science, 8(4), 355–362.

2 Davis, K. C., Nonnemaker, J., Duke, J., & Farrelly, M. C. (2013). Perceived effectiveness of cessation advertisements: The importance of audience reactions and practical implications for media campaign planning. Health Communication, 28(5), 461–472.

3 Schuirmann, D. J. (1987). A comparison of the two one-sided tests procedure and the power approach for assessing the equivalence of average bioavailability. Journal of Pharmacokinetics and Biopharmaceutics, 15, 657–680.

4 Lakens, D. (2017). Equivalence tests: A practical primer for t tests, correlations, and meta-analyses. Social Psychological and Personality Science, 8(4), 355–362. https://doi.org/10.1177/1948550617697177


| File Created | 2023-08-26 |
