0648-NERR Supporting Statement Part B


Assessing Public Preferences and Values to Support Coastal and Marine Management

OMB: 0648-0829

SUPPORTING STATEMENT

U.S. Department of Commerce

National Oceanic & Atmospheric Administration

Assessing Public Preferences and Values to Support Coastal and Marine Management

OMB Control No. 0648-NERR

B. Collections of Information Employing Statistical Methods

Describe (including a numerical estimate) the potential respondent universe and any sampling or other respondent selection method to be used. Data on the number of entities (e.g., establishments, State and local government units, households, or persons) in the universe covered by the collection and in the corresponding sample are to be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate expected response rates for the collection as a whole. If the collection had been conducted previously, include the actual response rate achieved during the last collection.

Potential Respondent Universe and Response Rate

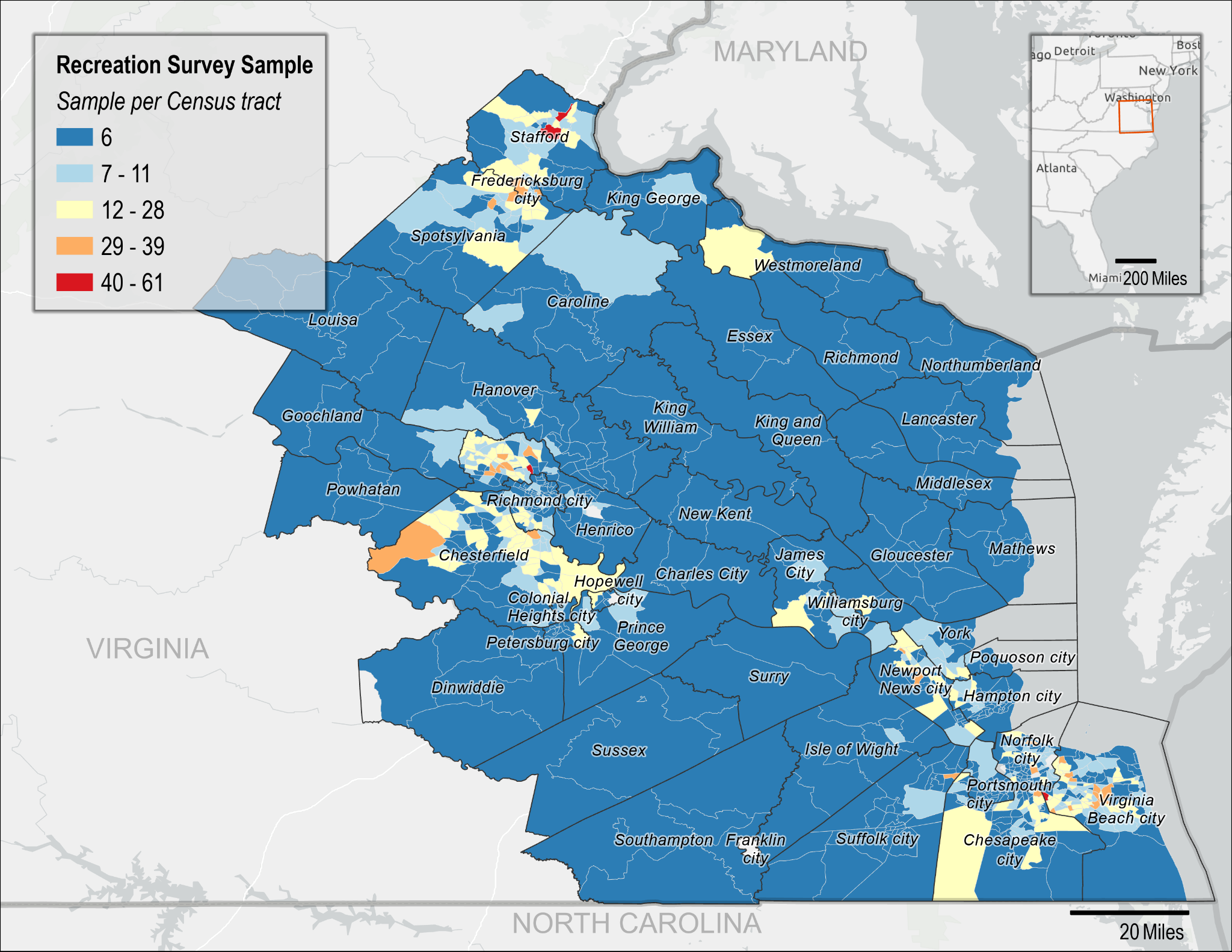

Coastal VA - Outdoor Recreation:

The potential respondent universe for this study includes residents aged 18 and over living within a one-hour drive of the York River. The one-hour driving radius for the Coastal Virginia study is based on human mobility data from 2022, which indicate that roughly two-thirds of visitors to the York River live within an hour's drive. The estimated total number of occupied households in this study area is 1,347,170 (US Census Bureau, 2020) and the estimated total population 18 years and over is 2,739,072 (US Census Bureau/ACS, 2020).

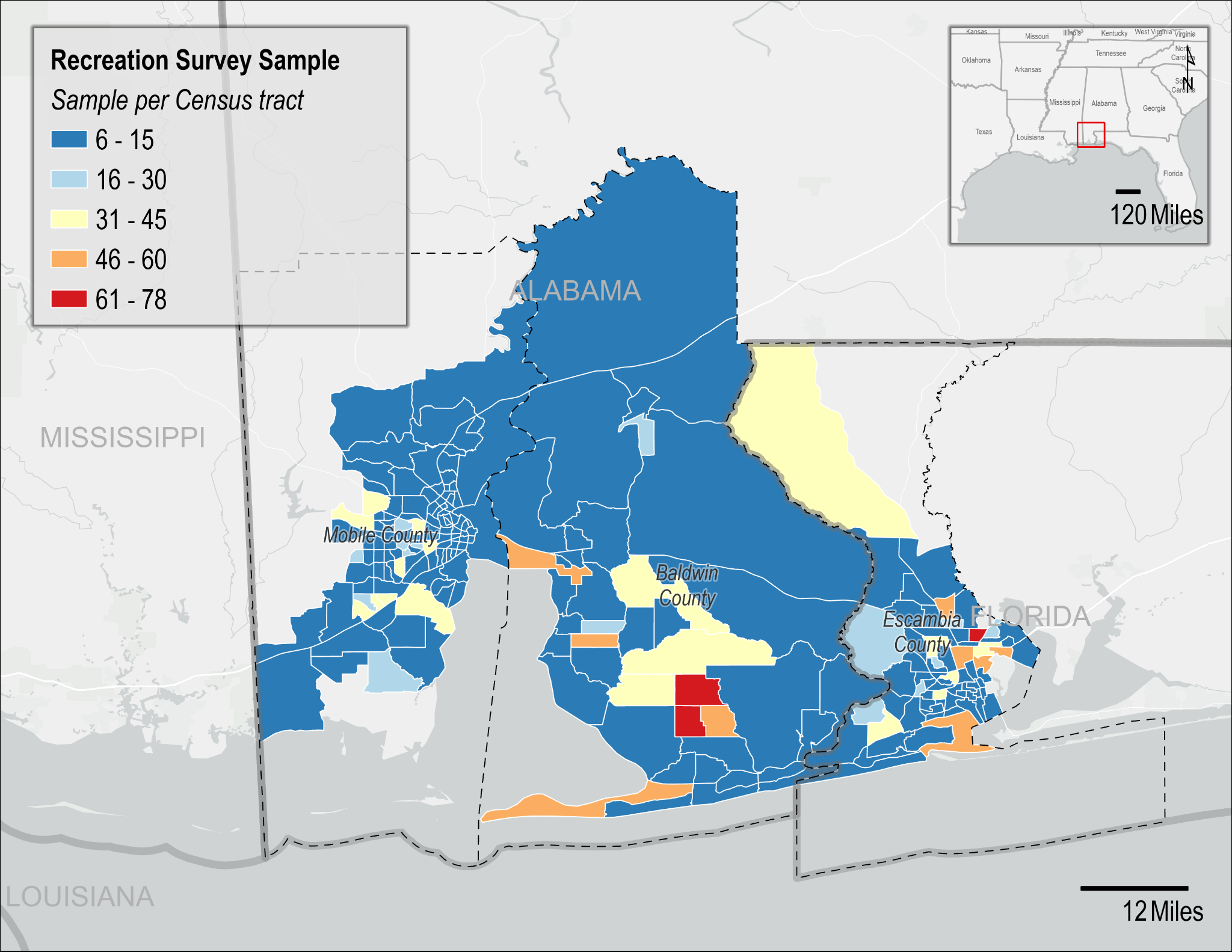

Gulf - Outdoor Recreation:

The potential respondent universe for this study includes residents aged 18 and over living within a one-hour drive of Weeks Bay. There is no existing human mobility data for this region, but researchers anticipate similar visitation patterns to the York River.

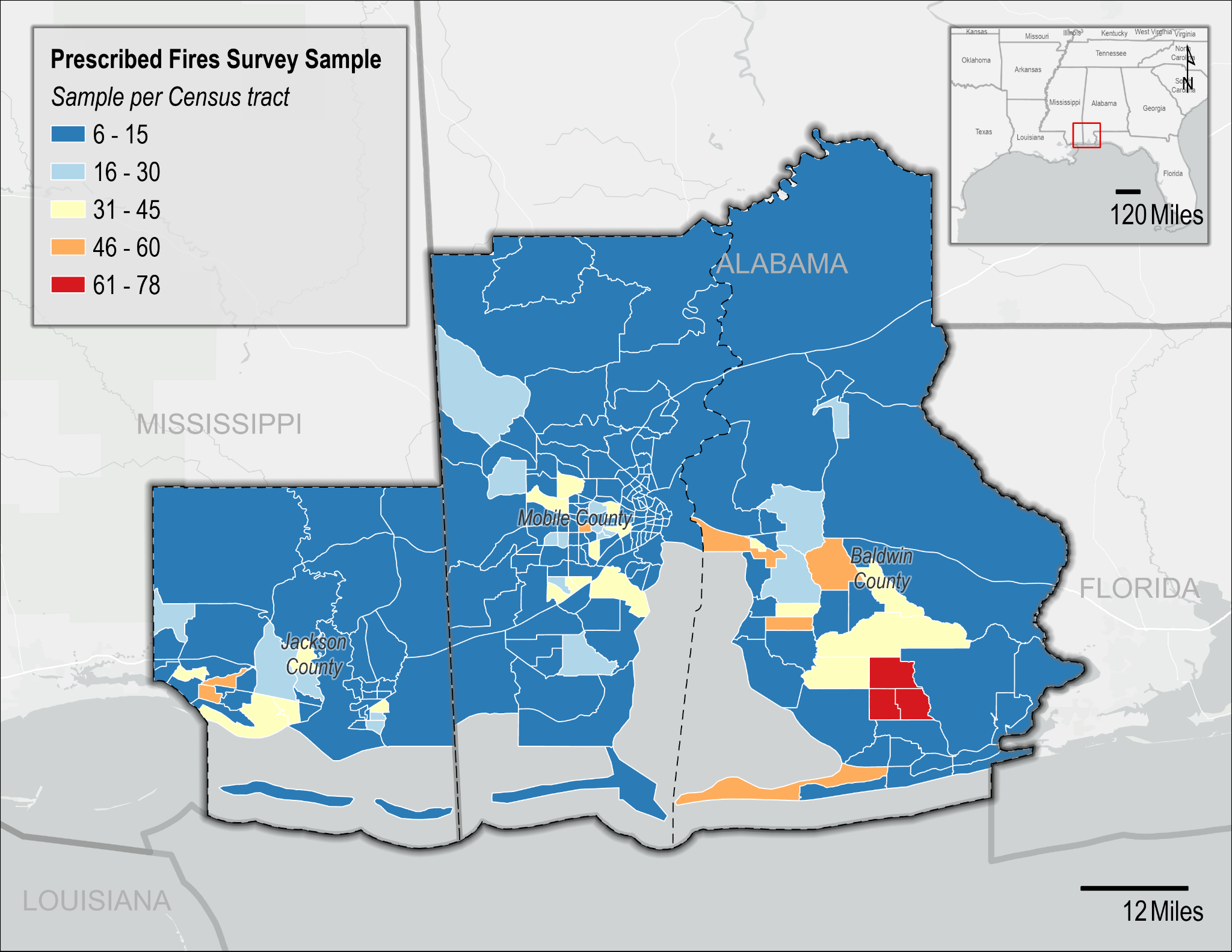

Gulf - Prescribed Fires:

The potential respondent universe for this study includes residents aged 18 and over living within Mobile and Baldwin Counties in Alabama and Jackson County in Mississippi. The estimated total number of occupied households in this study area is 299,418 (US Census Bureau, 2020) and the estimated total population 18 years and over is 604,394 (US Census Bureau/ACS, 2020).

All Surveys Combined:

Mail-back surveys typically achieve a response rate of 20-30%¹. Recent studies on similar topics have yielded similar response rates (Gómez and Hill, 2016; Schuett et al., 2016; Ellis et al., 2017; Guo et al., 2017; Murray et al., 2020; Knoche and Ritchie, 2022). However, studies have shown that response rates tend to be lower for minority populations (e.g., Sykes et al., 2010; Link et al., 2006; Griffin, 2002). Based on these estimates, researchers conservatively anticipate a response rate of 20% to 25% for each survey depending on the socio-demographics of the individual strata.

Sampling and Respondent Selection Method

Data will be collected using a two-stage stratified random sampling design. The study region will be stratified geographically by county and Census tract. Details of the strata are explained below. Within each stratum, households will be selected at random. To approximate random selection within each selected household, the individual aged 18 or older with the next upcoming birthday will be asked to complete the survey. The household is therefore the primary sampling unit (PSU), and the individual selected within each household is the secondary sampling unit (SSU).

The goals of the proposed strata are to ensure spatial representation and allow researchers to examine the influence of geographic proximity on responses. Additionally, researchers would like to develop estimates for specific Environmental Justice communities (as identified in the Environmental Protection Agency’s EJScreen tool²). Therefore, Census tracts with high proportions of those communities will be oversampled (see question 2.iii). The maps below show the final sample size for each Census tract for the Coastal Virginia study (Figure 1) and for the Gulf study (Figures 2 and 3). The sample sizes for the pre-test will be downscaled proportionately to the final sample sizes.

Figure 1: Coastal VA - Outdoor Recreation proposed sample size per Census tract.

Figure 2: Gulf - Outdoor Recreation proposed recreation survey sample size per Census tract.

Figure 3: Gulf - Prescribed Fires proposed prescribed fire survey sample size per Census tract.

Table 2 provides a breakdown of the tentative estimated number of completed surveys desired for each survey and stratum, along with the corresponding sample size. To obtain the estimated minimum number of respondents (d), the sample size needs to be increased to account for both non-response (e) and mail non-delivery (f). Estimated response rates vary by Census tract: tracts with at least 5% of the population within any Environmental Justice community are estimated to have a 20% response rate, and all other Census tracts are estimated to have a 25% response rate. Therefore, direct stratum-level calculations cannot be shown in Table 2; however, the estimated response rate for each stratum averages around 20%. For example, at least 5% of the population within every Census tract in the Coastal VA study area is within at least one Environmental Justice community, so the expected response rate for each of these Census tracts is 20%. Because partial respondents are rounded (e.g., 0.6 respondents would be rounded up to 1 respondent), the sample size is slightly larger than the estimated number of respondents divided by the response rate (i.e., 1,716/0.2 = 8,580 < 8,786). The exact discrepancy will vary.

Note that these response rates assume a $2 incentive (see Section 3). Therefore, based on the statistical sampling methodology discussed in detail in Question 2 below, the estimated response rate, and the 10% non-deliverable rate, the sample size for the final collections will be 20,323. See Section 2.v. below for more details on determining the minimum sample size.

Table 2: Estimates of sample size by survey

| Survey | Strata | Population 18+ (a) | Occupied Households (b) | Pretest Sample (c) | Estimated Min Number of Respondents (d) | Sample Size Adjusted for ~20% RR (e) = (d) ÷ ~20% | Sample Size Adjusted for 10% Non-Deliverable Rate (f) = (e) ÷ (1−10%) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Coastal VA | Census tracts | 2,739,072 | 1,347,170 | 1,182 | 1,716 | 8,786 | 9,762 |
| Gulf - Outdoor Recreation | Baldwin, AL Census tracts | 178,105 | 87,190 | 273 | 369 | 1,872 | 2,080 |
| | Mobile, AL Census tracts | 316,795 | 158,045 | 517 | 672 | 3,458 | 3,842 |
| | Jackson, MS Census tracts | 109,494 | 54,183 | 224 | 303 | 1,545 | 1,717 |
| | SUB-TOTAL | 604,394 | 299,418 | 1,014 | 1,344 | 6,875 | 7,639 |
| Gulf - Prescribed Fire | Baldwin, AL Census tracts | 227,131 | 87,190 | 157 | 157 | 794 | 882 |
| | Mobile, AL Census tracts | 414,620 | 158,045 | 242 | 242 | 1,256 | 1,395 |
| | Jackson, MS Census tracts | 142,993 | 54,183 | 114 | 114 | 581 | 645 |
| | SUB-TOTAL | 784,744 | 299,418 | 513 | 513 | 2,630 | 2,922 |
| TOTAL | | 4,128,210 | 1,946,006 | 2,709 | 3,573 | 18,291 | 20,323 |
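The adjustment from the minimum number of respondents (d) to the mailed sample (f) can be sketched as follows. This is a minimal illustration of the two divisions named in the column headers, not the calculation used to produce Table 2: the function name and the choice to round up at each step are assumptions, and the published figures are larger because response rates and rounding are applied at a finer level than the stratum totals.

```python
import math
from fractions import Fraction


def inflate_sample(min_respondents, response_rate, non_deliverable_rate="0.10"):
    """Inflate a target number of completes for non-response and non-delivery.

    Rates are passed as strings so that Fraction keeps the arithmetic exact.
    Rounding up at each step is an assumption made for this sketch.
    """
    rr = Fraction(response_rate)
    nd = Fraction(non_deliverable_rate)
    # (e) = (d) / response rate
    adjusted_for_response = math.ceil(min_respondents / rr)
    # (f) = (e) / (1 - non-deliverable rate)
    adjusted_for_delivery = math.ceil(adjusted_for_response / (1 - nd))
    return adjusted_for_response, adjusted_for_delivery


# Coastal VA target of 1,716 completes at a flat 20% response rate.
e, f = inflate_sample(1716, "0.20")
print(e, f)
```

With a flat 20% rate the first step gives 8,580, which matches the 1,716/0.2 figure quoted in the text and is below the 8,786 shown in Table 2.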

2. Describe the procedures for the collection of information including: statistical methodology for stratification and sample selection; estimation procedure; degree of accuracy needed for the purpose described in the justification; unusual problems requiring specialized sampling procedures; and any use of periodic (less frequent than annual) data collection cycles to reduce burden.

Stratification and Sample Selection

A two-stage stratified random sampling design will be used for data collection. First, the study region will be stratified geographically by county and Census tract. Then, residential households will be randomly selected from each stratum using an address-based frame procured from the U.S. Postal Service.
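The first-stage draw can be sketched as an independent simple random sample of addresses within each stratum. The frame contents, stratum labels, and function name below are hypothetical; the actual address frame and selection software will depend on the vendor.

```python
import random


def draw_households(frame_by_stratum, n_by_stratum, seed=None):
    """Draw a simple random sample of addresses within each stratum."""
    rng = random.Random(seed)
    sample = {}
    for stratum, addresses in frame_by_stratum.items():
        # Never request more addresses than the stratum contains.
        n = min(n_by_stratum[stratum], len(addresses))
        # random.sample draws without replacement.
        sample[stratum] = rng.sample(addresses, n)
    return sample


# Hypothetical address frame with two Census-tract strata.
frame = {
    "tract_A": [f"A-{i}" for i in range(50)],
    "tract_B": [f"B-{i}" for i in range(30)],
}
sizes = {"tract_A": 10, "tract_B": 6}
selected = draw_households(frame, sizes, seed=42)
print({stratum: len(addresses) for stratum, addresses in selected.items()})
```

Oversampling a stratum, as proposed for tracts with high proportions of Environmental Justice communities, amounts to raising its entry in `sizes`; the resulting unequal selection probabilities are then handled by the sampling weights.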

Estimation Procedures

For obtaining population-based estimates of various parameters, each responding household will be assigned a sampling weight. The weights will be used to produce estimates that:

are generalizable to the population from which the sample was selected;

account for differential probabilities of selection across the sampling strata;

match the population distributions of selected demographic variables within strata; and

allow for adjustments to reduce potential non-response bias.

These weights combine:

a base sampling weight which is the inverse of the probability of selection of the household;

a within-stratum adjustment for differential non-response across strata; and

a non-response weight.

Post-stratification adjustments will be made to match the sample to known population values (e.g., from Census data).

There are various models that can be used for non-response weighting. For example, non-response weights can be constructed based on estimated response propensities or on weighting class adjustments. Response propensities are designed to treat non-response as a stochastic process in which there are shared causes of the likelihood of non-response and the value of the survey variable. The weighting class approach assumes that within a weighting class (typically demographically defined), non-respondents and respondents have the same or very similar distributions on the survey variables. If this model assumption holds, then applying weights to the respondents reduces bias in the estimator that is due to non-response. Several factors, including the difference between the sample and population distributions of demographic characteristics and the plan for how to use weights in the regression models, will determine which approach is most efficient for estimating population parameters.
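The weight components described above can be sketched as follows, using the weighting class approach with the stratum itself as the class. All counts and cell labels are hypothetical, and the function names are illustrative only; the final weighting model has not been chosen.

```python
def stratum_weights(households_in_frame, households_sampled, households_responded):
    """Return (base weight, non-response adjustment, combined weight)."""
    # Base weight: inverse of the probability of selection.
    base = households_in_frame / households_sampled
    # Weighting class adjustment: respondents also stand in for non-respondents.
    nonresponse_adj = households_sampled / households_responded
    return base, nonresponse_adj, base * nonresponse_adj


def poststratify(weights, cells, population_totals):
    """Scale weights so weighted counts match known totals in each cell."""
    weighted = {}
    for w, c in zip(weights, cells):
        weighted[c] = weighted.get(c, 0.0) + w
    return [w * population_totals[c] / weighted[c] for w, c in zip(weights, cells)]


# Hypothetical stratum: 1,000 households, 100 sampled, 20 responded.
base, adj, combined = stratum_weights(1000, 100, 20)
print(base, adj, combined)

# Hypothetical post-stratification of four respondents into two cells.
final = poststratify([50.0] * 4, ["a", "a", "b", "b"], {"a": 120, "b": 80})
print(final)
```

In this toy stratum each respondent represents 50 households, so the 20 respondents together account for all 1,000 households in the frame.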

Degree of Accuracy Needed for the Purpose Described in the Justification

The following formula can be used to determine the minimum required sample size, $n$, for analysis:

$$n = \frac{z^{2}\, p\,(1-p)}{E^{2}}$$

where $z$ is the z-value required for a specified confidence level (here, 95%, so $z = 1.96$), $p$ is the proportion of the population with a characteristic of interest (here, $p = 0.5$ conservatively), and $E$ is the margin of error (here, 0.05). Therefore,

$$n = \frac{1.96^{2} \times 0.5 \times (1-0.5)}{0.05^{2}} \approx 384$$

This means a minimum sample size of 384 is required to be able to test for differences in means at the 95% confidence level with a 5% margin of error. This is met by our sampling plan for the study population as a whole, at the state level, and for some counties and EJ socio-demographic factors. For example, we expect roughly 800 respondents who are “not White alone” for the Coastal VA - Outdoor Recreation survey, and roughly 200 from each of the Gulf surveys.
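The 384 figure can be checked with a few lines of code. This sketch assumes the standard sample size formula for a proportion, z²·p·(1−p)/E², with the two-sided z-value taken from the standard normal distribution; the function and parameter names are illustrative.

```python
from statistics import NormalDist


def min_sample_size(confidence=0.95, p=0.5, margin=0.05):
    """Minimum sample size for estimating a proportion."""
    # Two-sided z-value, about 1.96 at 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z**2 * p * (1 - p) / margin**2


n = min_sample_size()
print(round(n))  # about 384.1, reported in the text as 384
```

Setting `p=0.5` maximizes `p * (1 - p)`, which is why it is the conservative choice: any other proportion would require a smaller sample for the same margin of error.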

Unusual problems requiring specialized sampling procedures

There are no unusual problems requiring specialized sampling procedures.

Use of periodic (less frequent than annual) data collection cycles to reduce burden

Data will not be collected annually from each individual site as we do not anticipate substantive changes in public preferences and values from year-to-year. Secondary data sources, such as human mobility data, may be used to track changes in visitation over time to reduce burden.

3. Describe methods to maximize response rates and to deal with issues of non-response. The accuracy and reliability of information collected must be shown to be adequate for intended uses. For collections based on sampling, a special justification must be provided for any collection that will not yield "reliable" data that can be generalized to the universe studied.

Focus Groups

The first step in achieving a high response rate is to develop a survey that is easy for respondents to complete. Researchers conducted focus groups to assess 1) whether questions are easy to understand, 2) the survey response process, 3) whether questions and responses are relevant and comprehensive, and 4) whether enough information is provided for individuals to respond confidently. Local partners assisted in a targeted recruitment of seven focus group participants per topic (prescribed fire (Gulf), recreation (Gulf, Virginia), and terminology (Virginia)) per implementation of the survey, with a goal of ensuring socio-demographic and geographic representation. Most questions were easy for focus group participants to understand, but the study areas, terminology (such as the use of the word “trip”), and management goals of prescribed fires were clarified. Prescribed fire focus group participants recommended survey revisions that included additional questions related to trust in public land managers; these survey modifications were accepted. Participants read and followed the survey instructions and were able to respond confidently. There was a need, however, to shorten and repeat instructions throughout the survey.

Implementation Techniques

The implementation techniques that will be used are consistent with methods that maximize response rates. Researchers propose a mixed-mode system, employing mail contact and recruitment, following the Dillman Tailored Design Method (Dillman et al., 2014), and online survey administration. To maximize response, potential respondents will be contacted multiple times via postcards and other mailings; this will include a pre-survey notification postcard, a letter of invitation, and follow-up reminders (see Appendix B for postcard and letter text). Final survey administration procedures and design of the survey administration tool will be subject to the guidance and expertise of the vendor hired to provide the data with regard to maximizing response rate, based on their experience conducting similar collections in the region of interest. One criterion in selecting this vendor will be existing trust they have established with the community of interest, such as local university survey centers, which will increase response rate (Ladik et al., 2007).

Additionally, the survey will be translated into additional languages to encourage participation by limited-English-speaking households and reduce the potential for non-response bias (Moradi et al., 2010; Smith, 2007). For Coastal VA - Outdoor Recreation, these languages may include Spanish, Arabic, Korean, and Chinese. For the Gulf surveys, these languages may include Spanish and Vietnamese.

Incentives

Incentives are consistent with numerous theories about survey participation (Singer and Ye, 2013), such as the theory of reasoned action (Ajzen and Fishbein, 1980), social exchange theory (Dillman et al., 2014), and leverage-saliency theory (Groves et al., 2000). Inclusion of an incentive acts as a sign of goodwill on the part of the study sponsors and encourages reciprocity of that goodwill by the respondent.

Dillman et al. (2014) recommend including incentives not only to increase response rates but also to decrease nonresponse bias. Specifically, an incentive amount between $1 and $5 is recommended for surveys of most populations.

Church (1993) conducted a meta-analysis of 38 studies that implemented some form of mail survey incentive to increase response rates and found that providing a prepaid monetary incentive with the initial survey mailing increases response rates by 19.1% on average. Lesser et al. (2001) analyzed the impact of financial incentives in mail surveys and found that including a $2 bill increased response rates by 11% to 31%. Gajic et al. (2012) administered a stated-preference survey of a general community population using a mixed-mode approach where community members were invited to participate in a web-based survey using a traditional mailed letter. A prepaid cash incentive of $2 was found to increase response rates by 11.6%.

Given these findings, we believe a small, prepaid incentive will boost response rates by at least 10% and would be the most cost-effective means to increase response rates. This increased response rate is reflected in Table 2. A $2 incentive was chosen due to considerations for the population being targeted and the funding available for the project.

Declining survey response rates are a growing concern because they increase the likelihood of non-response bias, which can limit the ability to develop population estimates from survey data. Non-response bias may still exist even with high response rates if non-respondents differ greatly from respondents; however, information on non-respondents is often unavailable. One approach to estimating non-response bias in the absence of this information is the “continuum of resistance” model (Lin and Schaeffer, 1995), which assumes that those who respond only after repeated contact attempts (delayed respondents) would have been non-respondents if the data collection had stopped early. Under this model, non-respondents are more similar to delayed respondents than to those who respond quickly (early respondents). Researchers will assess the potential for non-response bias by comparing responses across contact waves. If bias is found, a weighting procedure, as discussed in Section B.1.ii above, can be applied, and the implications for policy outcome preferences will be examined and discussed.
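One way to compare responses across contact waves is to test whether early and delayed respondents differ on a key survey variable. The statement does not name a specific test, so the use of Welch's t-statistic here is an assumption, and the response values are hypothetical.

```python
import math
from statistics import mean, variance


def welch_t(early, late):
    """Welch's t-statistic for the difference in means between two waves."""
    # statistics.variance is the sample variance (n - 1 denominator).
    se = math.sqrt(variance(early) / len(early) + variance(late) / len(late))
    return (mean(early) - mean(late)) / se


# Hypothetical 5-point ratings from early and delayed respondents.
early_wave = [4, 5, 4, 5]
late_wave = [2, 3, 2, 3]
t_stat = welch_t(early_wave, late_wave)
print(round(t_stat, 3))
```

A large absolute value suggests that delayed respondents, and by the continuum of resistance assumption non-respondents, answer differently from early respondents, which would motivate the weighting adjustment described above.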

4. Describe any tests of procedures or methods to be undertaken. Testing is encouraged as an effective means of refining collections of information to minimize burden and improve utility. Tests must be approved if they call for answers to identical questions from 10 or more respondents. A proposed test or set of tests may be submitted for approval separately or in combination with the main collection of information.

See response to Part B Question 3 above.

5. Provide the name and telephone number of individuals consulted on statistical aspects of the design and the name of the agency unit, contractor(s), grantee(s), or other person(s) who will actually collect and/or analyze the information for the agency.

Consultation on the statistical aspects of the study design was provided by Trent Buskirk ([email protected]).

This project will be implemented by researchers with NOAA’s National Centers for Coastal Ocean Science. The project Principal Investigator is:

Sarah Gonyo, PhD (Lead)

Economist

NOAA National Ocean Service

National Centers for Coastal Ocean Science

Email: [email protected]

Data collection will be contracted out to an external vendor which has yet to be solicited and selected. Data analysis will be conducted by the project principal investigators along with the following research team members, all members of the NOAA National Ocean Service National Centers for Coastal Ocean Science Social Science Team:

Heidi Burkart - [email protected]

Ramesh Paudyal - [email protected]

Uzma Aslam - [email protected]

REFERENCES

Ajzen, I., & Fishbein, M. (1980). Understanding attitudes and predicting social behavior. Englewood Cliffs, NJ: Prentice-Hall.

Ascher, T. J., Wilson, R. S., & Toman, E. (2012). The importance of affect, perceived risk and perceived benefit in understanding support for fuels management among wildland–urban interface residents. International Journal of Wildland Fire, 22(3), 267-276.

Baran, P. K., Smith, W. R., Moore, R. C., Floyd, M. F., Bocarro, J. N., Cosco, N. G., & Danninger, T. M. (2014). Park use among youth and adults: examination of individual, social, and urban form factors. Environment and Behavior, 46(6), 768-800.

Bell, S., Tyrväinen, L., Sievänen, T., and Pröbstl, U. (2007). Outdoor recreation and nature tourism: A European perspective. Living Rev. Landsc. Res. 1, 1–46.

Blanchard, Brian, and Robert L. Ryan. "Community perceptions of wildland fire risk and fire hazard reduction strategies at the wildland-urban interface in the northeastern United States." In Proceedings of the 2003 Northeastern Recreation Research Symposium, vol. 317, pp. 285-294. 2003.

Bryman, A. (2016). Social research methods. Oxford university press.

Church, A.H. (1993). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57(1): 62-80.

Cottrell, S. (2002). Predictive model of responsible environmental behaviour: application as a visitor monitoring tool. Monitoring and management of visitor flows in recreational and protected areas, 129-135.

Dillman, D. A., Smyth, J. D., and Christian, L. M. (2014). Internet, mail, and mixed-mode surveys: The tailored design method. Hoboken, NJ: Wiley.

Ellis, J.M., Yuan, R., He, Y., and Zaslow, S. (2017). 2017 Virginia Outdoors Demand Survey.

Engle, V. D. (2011). Estimating the provision of ecosystem services by Gulf of Mexico coastal wetlands. Wetlands, 31(1), 179-193.

Feagin, R. A., Williams, A. M., Martínez, M. L., & Pérez-Maqueo, O. (2014). How does the social benefit and economic expenditures generated by a rural beach compare with its sediment replacement cost? Ocean & coastal management, 89, 79-87. DOI: 10.1016/j.ocecoaman.2013.12.017

Firestone, J. and Kempton, W. (2007). Public opinion about large offshore wind power: Underlying factors. Energy Policy, 35(3): 1584-1598. DOI: 10.1016/j.enpol.2006.04.010.

Fleming, C. M., & Cook, A. (2008). The recreational value of Lake McKenzie, Fraser Island: An application of the travel cost method. Tourism Management, 29(6), 1197-1205.

Fleming, C.S., Gonyo, S.B., Freitag, A., and Goedeke, T.L. Engaged minority or quiet majority? Social intentions and actions related to offshore wind energy development in the United States. Energy Research and Social Science, 84 (2022) 102440. https://doi.org/10.1016/j.erss.2021.102440.

Flores, D., Falco, G., Roberts, N. and Valenzuela III, F. (2018). Recreation Equity: Is the Forest Service Serving its Diverse Publics? Journal of Forestry. 116(3): 266-272.

Fried, J. S., Gatziolis, D., Gilless, J. K., Vogt, C. A., & Winter, G. (2006). Changing beliefs and building trust at the wildland urban interface. Fire Management Today, 66(3), 51-54.

Friess, D. A., Yando, E. S., Alemu, J. B., Wong, L.-W., Soto, S. D., & Bhatia, N. (2020). Ecosystem services and disservices of mangrove forests and salt marshes. Oceanography and marine biology, 58.

Gajic, A., Cameron, D., & Hurley, J. (2012). The cost-effectiveness of cash versus lottery incentives for a web-based, stated-preference community survey. The European Journal of Health Economics, 13, 789-799. DOI: 10.1007/s10198-011-0332-0

Ghimire, R., Green, G., Poudyal, N. and Cordell, K. (2016). Who Recreates Where: Implications from a National Recreation Household Survey. Journal of Forestry. 114(4): 458-465.

Gill, J. K., Bowker, J., Bergstrom, J. C., & Zarnoch, S. J. (2010). Accounting for Trip Frequency in Importance-Performance Analysis. Journal of Park & Recreation Administration, 28(1).

Griffin, D. H. (2002, August). Measuring survey nonresponse by race and ethnicity. In Proceedings of the Annual Meetings of the American Statistical Association (pp. 11-15).

Gómez, E. and Hill, E. (2016). First Landing State Park: Participation Patterns and Perceived Health Outcomes of Recreation at an Urban-Proximate Park. Journal of Park and Recreation Administration, 34(1), 68-83. DOI: 10.18666/JPRA-2016-V34-I1-7034

Grooms, B., Rutter, J. D., Barnes, J. C., Peele, A., & Dayer, A. A. (2020). Supporting wildlife recreationists in Virginia: Survey report to inform the Virginia Department of Wildlife Resources' wildlife viewing plan. V. Tech.

Groves, R.M., Singer, E., and Corning, A. (2000). Leverage-Saliency Theory of Survey Participation: Description and an Illustration. Public Opinion Quarterly, 64(3): 299-308.

Guo, Z., Robinson, D., & Hite, D. (2017). Economic impact of Mississippi and Alabama Gulf tourism on the regional economy. Ocean & Coastal Management, 145, 52-61. https://doi.org/10.1016/j.ocecoaman.2017.05.006

Haspel, A. E., & Johnson, F. R. (1982). Multiple destination trip bias in recreation benefit estimation. Land economics, 58(3), 364-372.

Jacobson, S. K., Monroe, M. C., & Marynowski, S. (2001). Fire at the wildland interface: the influence of experience and mass media on public knowledge, attitudes, and behavioral intentions. Wildlife Society Bulletin, 929-937. https://www.jstor.org/stable/3784420

Jarrett, A., Gan, J., Johnson, C., & Munn, I. A. (2009). Landowner awareness and adoption of wildfire programs in the southern United States. Journal of Forestry, 107(3), 113-118.

Johnson, C.Y., Bowker, J., Green, G. and Cordell, H. (2007). “Provide it...But Will They Come?”: A Look at African American and Hispanic Visits to Federal Recreation Areas. Journal of Forestry. 105(5): 257-265.

Johnston, R. J., Grigalunas, T. A., Opaluch, J. J., Mazzotta, M., & Diamantedes, J. (2002). Valuing estuarine resource services using economic and ecological models: the Peconic Estuary System study. Coastal Management, 30(1), 47-65.

Kane, B., Zajchowski, C. A., Allen, T. R., McLeod, G., & Allen, N. H. (2021). Is it safer at the beach? Spatial and temporal analyses of beachgoer behaviors during the COVID-19 pandemic. Ocean & coastal management, 205, 105533. https://doi.org/10.1016/j.ocecoaman.2021.105533

Kliskey, A. D. (2000). Recreation terrain suitability mapping: A spatially explicit methodology for determining recreation potential for resource use assessment. Landscape and Urban Planning, 52, 33–43.

Kliskey, A. D., Lofroth, E. C., Thompson, W. A., Brown, S., & Schreier, H. (1999). Simulating and evaluating alternative resource-use strategies using GIS-based habitat suitability indices. Landscape and Urban Planning, 45,163–175.

Knoche, S. and Ritchie, K. (2022). A travel cost recreation demand model examining the economic benefits of acid mine drainage remediation to trout anglers. Journal of Environmental Management, 319: 115485. https://doi.org/10.1016/j.jenvman.2022.115485

Krymkowski, D., Manning, R. and Valliere, W. (2014). Race, ethnicity, and visitation to national parks in the United States: Tests of the marginality, discrimination, and subculture hypotheses with national-level survey data. Journal of Outdoor Recreation and Tourism. 7(8): 35-43.

Ladik, D. M., Carrillat, F. A., & Solomon, P. J. (2007). The effectiveness of university sponsorship in increasing survey response rate. Journal of Marketing Theory and Practice, 15(3), 263-271.

Lesser, V.M., Dillman, D.A., Carlson, J., Lorenz, F., Mason, R., and Willits, F. (2001). Quantifying the influence of incentives on mail survey response rates and nonresponse bias. Presented at the Annual Meeting of the American Statistical Association, Atlanta, GA

Lin, I., & Schaeffer, N.C. (1995). Using survey participants to estimate the impact of nonparticipation. Public Opinion Quarterly, 59, 236-258. DOI: 10.1086/269471

Link, M.W., Mokdad, A.H., Stackhouse, H.F., Flowers, N.T. (2006). Race, ethnicity, and linguistic isolation as determinants of participation in public health surveillance surveys. Prev Chronic Dis., 3(1): A09. Epub 2005 Dec 15. PMID: 16356362; PMCID: PMC1500943.

Lipton, D. (2004). The value of improved water quality to Chesapeake Bay boaters. Marine Resource Economics, 19(2), 265-270. https://doi.org/10.1086/mre.19.2.42629432

Manfredo, M.J., Driver, B.L., and Tarrant, M.A. (1996). Measuring Leisure Motivation: A Meta-Analysis of the Recreation Experience Preference Scales. Journal of Leisure Research. 28(3): 188-213.

Martínez-Espiñeira, R., & Amoako-Tuffour, J. (2009). Multi-destination and multi-purpose trip effects in the analysis of the demand for trips to a remote recreational site. Environmental management, 43, 1146-1161.

McCaffrey, S. (2009). Crucial factors influencing public acceptance of fuels treatments. Fire Management Today, 69(1), 9.

Midway, S. R., Adriance, J., Banks, P., Haukebo, S., & Caffey, R. (2020). Electronic self‐reporting: angler attitudes and behaviors in the recreational Red Snapper fishery. North American Journal of Fisheries Management, 40(5), 1119-1132.

Moradi T, Sidorchuk A, Hallqvist J. Translation of questionnaire increases the response rate in immigrants: filling the language gap or feeling of inclusion? Scand J Public Health. 2010 Dec;38(8):889-92. DOI: 10.1177/1403494810374220. Epub 2010 Jun 9. PMID: 20534633.

Murray, R., Wilson, S., Dalemarre, L., Chanse, V., Phoenix, J., and Baranoff, L. (2020). Should We Put Our Feet in the Water? Use of a Survey to Assess Recreational Exposures to Contaminants in the Anacostia River. Environmental Health Insights, 9(s2). https://doi.org/10.1177/EHI.S19594

National Science Foundation. (2012). Chapter 7. Science and Technology: Public Attitudes and Understanding. In Science and Engineering Indicators 2012.

Reja, U., Manfreda, K. L., Hlebec, V., & Vehovar, V. (2003). Open-ended vs. close-ended questions in web questionnaires. Developments in applied statistics, 19(1), 159-177.

Rideout, S., Oswald, B. P., & Legg, M. H. (2003). Ecological, political and social challenges of prescribed fire restoration in east Texas pineywoods ecosystems: a case study. Forestry, 76(2), 261-269. https://doi.org/10.1093/forestry/76.2.261

Roemmich, J. N., Johnson, L., Oberg, G., Beeler, J. E., & Ufholz, K. E. (2018). Youth and adult visitation and physical activity intensity at rural and urban parks. International Journal of Environmental Research and Public Health, 15(8), 1760.

Rosen, Z., Henery, G., Slater, K. D., Sablan, O., Ford, B., Pierce, J. R., Fischer, E. V., & Magzamen, S. (2022). A Culture of Fire: Identifying Community Risk Perceptions Surrounding Prescribed Burning in the Flint Hills, Kansas. Journal of Applied Communications, 106(4), 6.

Rushing, B.R., Leavell, M., Nzaku, K., and Black, N. (2021). Outdoor Recreation in Alabama.

Schuett, M., Ding, C., Kyle, G., & Shively, J.D. (2016) Examining the Behavior, Management Preferences, and Sociodemographics of Artificial Reef Users in the Gulf of Mexico Offshore from Texas. North American Journal of Fisheries Management, 36:2, 321-328. DOI: 10.1080/02755947.2015.1123204

Singer, E. and Ye, C. (2013). The Use and Effects of Incentives in Surveys. The Annals of the American Academy of Political and Social Science, 645: 112-141.

Smith, T.W. (2007) ‘An evaluation of Spanish questions on the 2006 General Social Surveys’. GSS Methodological Report No.109.

Starbuck, C. M., Berrens, R. P., & McKee, M. (2006). Simulating changes in forest recreation demand and associated economic impacts due to fire and fuels management activities. Forest Policy and Economics, 8(1), 52-66.

Strickler, M.J., Cristman, C.E., and Poole, D. (2018). Virginia Outdoors Plan 2018. Virginia Department of Conservation and Recreation.

Sykes, L. L., Walker, R. L., Ngwakongnwi, E., & Quan, H. (2010). A systematic literature review on response rates across racial and ethnic populations. Canadian Journal of Public Health, 101, 213-219. DOI: 10.1007/BF03404376

Thapa, B., Holland, S. M., & Absher, J. D. (2008). Perceived risk, attitude, knowledge, and reactionary behaviors toward wildfires among Florida tourists. In D. J. Chavez, J. D. Absher, & P. L. Winter (Eds.), Fire social science research from the Pacific Southwest research station: Studies supported by national fire plan funds. (Vol. General Technical Report PSW-GTR-209, pp. 87). USDA Forest Service: Pacific Southwest Research Station.

Toman, E., Stidham, M., McCaffrey, S., & Shindler, B. (2013). Social science at the wildland-urban interface: A compendium of research results to create fire-adapted communities. In Gen. Tech. Rep. NRS-111. Newtown Square, PA, USA.: USDA Forest Service, Northern Research Station.

US Census Bureau. (2020). "H1: Occupancy Status." 2020 Census Redistricting Data (Public Law 94-171).

US Census Bureau/American Community Survey. (2020). "B01001: Sex By Age." 2015-2020 American Community Survey.

US Census Bureau. (2021). Computer and internet use in the United States: 2018. Available online: https://www.census.gov/content/dam/Census/library/publications/2021/acs/acs-49.pdf

USDA Forest Service. (2018). Introduction to prescribed fire in southern ecosystems. Research & Development, Southern Research Station. Science Update SRS-054.

Usher, L. E. (2021). Virginia and North Carolina surfers’ perceptions of beach nourishment. Ocean & Coastal Management, 203, 105471. https://doi.org/10.1016/j.ocecoaman.2020.105471

English, E., von Haefen, R. H., Herriges, J., Leggett, C., Lupi, F., McConnell, K., ... & Meade, N. (2018). Estimating the value of lost recreation days from the Deepwater Horizon oil spill. Journal of Environmental Economics and Management, 91, 26-45. DOI: 10.1016/j.jeem.2018.06.010

Winter, P., Crano, W., Basáñez, T. and Lamb, C. (2020). Equity in Access to Outdoor Recreation - Informing a Sustainable Future. Sustainability. 12(1): 124.

Wu, H., Miller, Z. D., Wang, R., Zipp, K. Y., Newman, P., Shr, Y.-H., Dems, C. L., Taylor, A., Kaye, M. W., & Smithwick, E. A. (2022). Public and manager perceptions about prescribed fire in the Mid-Atlantic, United States. Journal of Environmental Management, 322, 116100.

1 Dillman, Don A., Jolene D. Smyth, and Leah Melani Christian. (2009). Internet, Mail and Mixed-Mode Surveys: The Tailored Design Method. 3rd ed. Hoboken, NJ: John Wiley & Sons, Inc.

2 https://www.epa.gov/ejscreen/overview-socioeconomic-indicators-ejscreen

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| File Modified | 0000-00-00 |

| File Created | 2024-07-27 |
