NHSBH-SSB _Final


National Household Survey on Behavioral Health (NHSBH)

OMB: 0930-0110

Center for Behavioral Health Statistics and Quality (CBHSQ) National Survey on Drug Use and Health (NSDUH)

SUPPORTING STATEMENT

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

*Note – For 2025, SAMHSA is proposing to change the name of the NSDUH to the National Household Survey on Behavioral Health (NHSBH). The justification for the change is discussed in Section A.1. In this document, the term NHSBH is used for all main study (i.e., non-MICS related) sections.

1. Respondent Universe and Sampling Methods

NHSBH Main Study:

The respondent universe for the NHSBH is the civilian, noninstitutionalized population aged 12 or older in each state and the District of Columbia. The NHSBH universe includes residents of noninstitutional group quarters (e.g., shelters, rooming houses, dormitories) and civilians residing on military bases. Persons excluded from the universe include those with no fixed household address (e.g., homeless transients not in shelters) and residents of institutional group quarters, such as jails and hospitals.

NHSBH data will continue to be collected from respondents using a combination of in-person and web-based data collection modes. As in previous NHSBHs, the sample design will be a stratified, multistage area probability design. Age categories for interview respondents (IRs) are 12-17, 18-25, 26-34, 35-49, and 50 or older. The sampling frame will be based on tract- and block group-level geographic units defined by the 2020 decennial census. The 6,096 sample segments will be selected so that the sampled areas are as representative of the population as possible. For the 2025 survey, approximately 290,000 dwelling units (DUs) nationwide will be contacted and screened for eligibility either in person or via web. From these DUs, we expect to complete approximately 68,467 interviews across both modes.

As in the 2023 and 2024 NHSBHs, the selection of smaller area segments within the second-stage sampling units (SSUs; one or more Census block groups) will be eliminated to reduce intracluster correlation and increase precision. In addition, a hybrid address-based sampling (ABS) and electronic listing (eListing) approach will be used to construct DU frames. ABS refers to the sampling of residential addresses from a list based on the U.S. Postal Service's Computerized Delivery Sequence file. The ABS frame is used in areas with high expected ABS coverage; eListing is used to construct the DU frames in all other areas. SSUs will be assigned to ABS or eListing based on ABS coverage, the proportion of group quarters units, and the proportion of ABS drop points.

The sample design will help ensure that the NHSBH can support comparisons of population subgroups defined by the intersection of race and gender, or by other demographic variables that may be associated with disparities in survey outcomes for these important subpopulations. As with most area household surveys, the NHSBH design minimizes interviewing costs by clustering the sample. The area design also maximizes coverage of the respondent universe, since an adequate dwelling unit or person-level sampling frame is not available. The main concern with area surveys is the potential increase in variance due to clustering and unequal weighting. The NHSBH addresses these concerns directly by selecting a relatively large sample of clusters at the early stages of selection and by selecting these clusters with probability proportionate to a composite size measure. This maximizes precision by making it possible to achieve an approximately self-weighting sample within strata at the later stages of selection. The composite size measure is also designed to make field interviewer (FI) workloads roughly equal among clusters within strata.
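As a rough illustration of selection with probability proportionate to a composite size measure, the sketch below assumes a common form of that measure: the sum over age groups of a target age-specific sampling rate times the area's population count in that age group, so the measure approximates the expected number of interviews in the area. The rates in `AGE_RATES`, the tract counts, and the use of systematic PPS as the selection method are all assumptions for illustration; this is not presented as the NHSBH production procedure.

```python
import random
from bisect import bisect_right
from itertools import accumulate
from typing import Dict, List

# HYPOTHETICAL target person-level sampling rates by age group (illustration only).
AGE_RATES: Dict[str, float] = {
    "12-17": 0.004, "18-25": 0.003, "26-34": 0.0008, "35-49": 0.0006, "50+": 0.0004,
}


def composite_size(counts: Dict[str, int], rates: Dict[str, float] = AGE_RATES) -> float:
    """Composite size measure: expected interviews if each age group is sampled at its target rate."""
    return sum(rate * counts.get(group, 0) for group, rate in rates.items())


def systematic_pps(sizes: List[float], n: int, rng: random.Random) -> List[int]:
    """Select n units by systematic PPS.

    Unit i's inclusion probability is n * sizes[i] / sum(sizes), provided no size
    exceeds sum(sizes) / n (a larger unit could be hit more than once).
    """
    cumulative = list(accumulate(sizes))
    step = cumulative[-1] / n
    start = rng.random() * step  # random start in [0, step)
    # A point in [cumulative[i-1], cumulative[i]) selects unit i; min() guards
    # against floating-point round-off at the upper end.
    return [min(bisect_right(cumulative, start + k * step), len(sizes) - 1) for k in range(n)]


# Hypothetical tracts within one stratum, with population counts by age group.
tracts = [
    {"12-17": 400, "18-25": 500, "26-34": 700, "35-49": 900, "50+": 1500},
    {"12-17": 150, "18-25": 300, "26-34": 400, "35-49": 500, "50+": 2200},
    {"12-17": 600, "18-25": 450, "26-34": 800, "35-49": 1100, "50+": 1200},
]
sizes = [composite_size(t) for t in tracts]
print(systematic_pps(sizes, 1, random.Random(2025)))
```

Because each area's selection probability is proportional to its expected interview yield, sampling a roughly fixed number of persons per selected area at later stages tends toward equal overall selection probabilities within an age group and similar FI workloads per area, which is the property the paragraph above describes.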

To accurately measure drug use and related mental health outcomes, the NHSBH design allocates the sample to the 12 to 17, 18 to 25, and 26 or older age groups in proportions of 25 percent, 25 percent, and 50 percent, respectively.
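Applied to the 68,467 targeted interviews, the 25/25/50 percent allocation does not divide evenly. The sketch below shows one way to turn the proportions into whole-number targets that sum exactly to the total, using largest-remainder rounding; the rounding method is an illustrative assumption, not a documented NHSBH rule.

```python
from math import floor
from typing import Dict


def allocate(total: int, shares: Dict[str, float]) -> Dict[str, int]:
    """Split `total` by `shares` (assumed to sum to 1) using largest-remainder rounding."""
    raw = {group: total * share for group, share in shares.items()}
    alloc = {group: floor(value) for group, value in raw.items()}
    shortfall = total - sum(alloc.values())
    # Give the leftover interviews to the groups with the largest fractional remainders.
    by_remainder = sorted(raw, key=lambda g: raw[g] - alloc[g], reverse=True)
    for group in by_remainder[:shortfall]:
        alloc[group] += 1
    return alloc


SHARES = {"12-17": 0.25, "18-25": 0.25, "26+": 0.50}
print(allocate(68_467, SHARES))  # {'12-17': 17117, '18-25': 17117, '26+': 34233}
```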

While age group is currently the only stratification variable at the person level, stratification by other demographic subgroups has been considered in the past. Prior to the 2014 sample redesign, estimates by four-level race and ethnicity (Hispanic, non-Hispanic White, non-Hispanic Black, and non-Hispanic Other) were deemed to have sufficient precision, based on a sample of 67,500 completed interviews, without stratifying or oversampling these subgroups. Because the NHSBH targets 68,467 interviews each year, estimates by four-level race and ethnicity continue to have sufficient precision without stratification or oversampling. Further, four of the 25 “key” measures for which precision is monitored each year are estimated for the American Indian and Alaska Native (AIAN) and Asian or Pacific Islander (API) populations. Finally, four of the annual detailed tables report estimates by four-level race and ethnicity (Hispanic, non-Hispanic White, non-Hispanic Black, and non-Hispanic Other) and gender using two years of combined data (e.g., 2019 and 2020): numbers (in thousands) of people aged 12 or older by age group and demographic characteristics; numbers (in thousands) of people aged 12 or older by underage and legal drinking age groups and demographic characteristics; alcohol use in lifetime, past year, and past month and binge alcohol and heavy alcohol use in past month among people aged 12 to 20 by demographic characteristics; and alcohol use in lifetime, past year, and past month and binge alcohol and heavy alcohol use in past month among people aged 21 or older by demographic characteristics. Estimates in these tables typically have sufficient sample size and precision to be reported. Thus, based on current reporting needs, there is no need to stratify and oversample by four-level race/ethnicity and gender.
However, options for stratifying the sample by finer race/ethnicity categories (e.g., subgroups under “Asian”) and gender, or for oversampling areas with higher concentrations of the demographic subgroups of interest, may be researched for future consideration.

Using 2020 decennial census data, a multiyear coordinated sample will be selected for the 2025-2027 NHSBHs. The sample selection procedures will begin by geographically partitioning each state into state sampling regions (SSRs) of roughly equal size. Regions will be formed so that each area within a state yields, in expectation, roughly the same number of interviews during each data collection period.
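The idea of forming regions that each yield roughly the same expected number of interviews can be sketched as cutting an ordered list of tracts into equal slices of cumulative expected yield. This minimal sketch assumes the tracts are already sorted in a geographic order (for example, a serpentine sort) and that an expected interview count per tract is available; the function name and inputs are hypothetical, and actual SSR formation may apply contiguity and other constraints not described here.

```python
from itertools import accumulate
from typing import List


def form_ssrs(expected_interviews: List[float], k: int) -> List[int]:
    """Assign geographically ordered tracts to k regions of roughly equal expected yield.

    Each tract goes to the region containing the midpoint of its cumulative
    expected-interview interval, so consecutive tracts stay together.
    """
    target = sum(expected_interviews) / k
    labels: List[int] = []
    previous = 0.0
    for cumulative in accumulate(expected_interviews):
        midpoint = (previous + cumulative) / 2
        labels.append(min(int(midpoint // target), k - 1))
        previous = cumulative
    return labels


# Ten hypothetical tracts in geographic order, split into two regions.
print(form_ssrs([3.2, 4.1, 2.7, 5.0, 3.9, 4.4, 2.8, 3.6, 4.0, 3.3], 2))
```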

Within each of the 762 SSRs formed for the 2025-2027 NHSBHs, a sample of Census tracts will be selected. Then, within sampled Census tracts, Census block groups or SSUs will be selected. A total of 80 SSUs per SSR will be selected: 24 to field the 2025-2027 surveys and 56 “reserve” SSUs to carry the sample through the next decennial census, if desired.
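The sample sizes quoted in this section fit together as shown below. The sketch assumes the 24 fielded SSUs per SSR are split evenly across the three survey years, which reproduces the 6,096 sample segments cited for 2025; the per-SSU and per-DU ratios are simple averages, not design parameters.

```python
N_SSRS = 762             # SSRs formed for the 2025-2027 NHSBHs
SSUS_PER_SSR = 80        # SSUs selected per SSR
FIELDED_PER_SSR = 24     # SSUs fielded in 2025-2027
SURVEY_YEARS = 3
DUS_SCREENED = 290_000   # approximate DUs contacted in 2025
INTERVIEWS = 68_467      # expected completed interviews in 2025

reserve_per_ssr = SSUS_PER_SSR - FIELDED_PER_SSR
total_ssus = N_SSRS * SSUS_PER_SSR
# Assumption: fielded SSUs are split evenly across the three survey years.
ssus_per_year = N_SSRS * FIELDED_PER_SSR // SURVEY_YEARS

print(f"Reserve SSUs per SSR: {reserve_per_ssr}")                        # 56
print(f"Total SSUs selected: {total_ssus:,}")                            # 60,960
print(f"SSUs fielded per year: {ssus_per_year:,}")                       # 6,096
print(f"DUs per fielded SSU: {DUS_SCREENED / ssus_per_year:.1f}")        # 47.6
print(f"Interviews per fielded SSU: {INTERVIEWS / ssus_per_year:.1f}")   # 11.2
print(f"Interviews per DU contacted: {INTERVIEWS / DUS_SCREENED:.3f}")   # 0.236
```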

In summary, the first-stage stratification for the 2025-2027 NHSBHs is states and SSRs within states, the first-stage sampling units are Census tracts, and the second-stage sampling units are Census block groups. This design for the 2025-2027 NHSBHs at the first stages of selection is desirable because of (a) the large person-level sample required at the latter stages of selection in the design and (b) continued interest among NHSBH data users and policymakers in state and other local-level statistics. Further, eliminating the selection of smaller area segments within SSUs increases precision by reducing intracluster correlation.

To construct dwelling unit frames for the fourth stage of sample selection, a combination of eListing and ABS will be used. ABS refers to the sampling of residential addresses from a list based on the U.S. Postal Service's Computerized Delivery Sequence file. Relative to field enumeration, ABS has the potential to greatly reduce costs, improve timeliness, and improve frame accuracy in areas with controlled access. However, ABS frame coverage is incomplete in some areas (e.g., areas with many group quarters units or mail drop points). To maximize coverage and minimize costs, many studies use a hybrid ABS design that combines ABS and field enumeration, depending on the expected coverage of the ABS frame in particular areas.1

For the 2025 NHSBH hybrid ABS design, ABS coverage estimates will first be computed for all selected second-stage sampling units (SSUs; one or more census block groups). In addition to having high expected ABS coverage (90 percent or more), SSUs will be required to meet separate criteria for group quarters and drop points.2 SSUs that meet all of these criteria will use the ABS frame; SSUs that do not will require eListing.
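The frame assignment rule can be expressed as a single check per SSU. In this sketch, the 90 percent coverage threshold comes from the text, while the group quarters and drop point cutoffs (`MAX_GQ_SHARE`, `MAX_DROP_POINT_SHARE`) and the `SSU` record fields are hypothetical placeholders, since the actual criteria are defined elsewhere.

```python
from dataclasses import dataclass

MIN_ABS_COVERAGE = 0.90       # from the text: 90 percent or more
MAX_GQ_SHARE = 0.10           # HYPOTHETICAL group quarters cutoff
MAX_DROP_POINT_SHARE = 0.05   # HYPOTHETICAL drop point cutoff


@dataclass
class SSU:
    ssu_id: str
    abs_coverage: float       # expected share of DUs covered by the ABS frame
    gq_share: float           # proportion of group quarters units
    drop_point_share: float   # proportion of ABS drop points


def frame_method(ssu: SSU) -> str:
    """Return "ABS" only if the SSU meets all three criteria; otherwise "eListing"."""
    meets_all = (
        ssu.abs_coverage >= MIN_ABS_COVERAGE
        and ssu.gq_share <= MAX_GQ_SHARE
        and ssu.drop_point_share <= MAX_DROP_POINT_SHARE
    )
    return "ABS" if meets_all else "eListing"


print(frame_method(SSU("ssu-001", 0.96, 0.01, 0.00)))  # ABS
print(frame_method(SSU("ssu-002", 0.96, 0.30, 0.00)))  # eListing
```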

Similar to previous NHSBHs, at the latter stages of selection, five age group strata are sampled at different rates. These five strata are defined by the following age group classifications: 12 to 17, 18 to 25, 26 to 34, 35 to 49, and 50 or older. Adequate precision for race/ethnicity estimates at the national level is achieved with the larger sample size and the allocation to the age group strata. Consequently, race/ethnicity groups are not over-sampled. However, consistent with previous NHSBHs, the 2025 NHSBH is designed to over-sample the younger age groups.

In addition, all estimates released as NHSBH data must meet published precision levels. Through a thorough review of all data, any direct NHSBH estimates designated as unreliable are not shown in reports or tables and are noted by an asterisk (*). The criteria used to define unreliability of direct NHSBH estimates are based on the prevalence (for proportion estimates), the relative standard error (RSE; the ratio of the standard error [SE] to the estimate), the nominal (actual) sample size, and the effective sample size for each estimate. These suppression criteria for various NHSBH estimates are summarized in the table below.

Summary of NHSBH Suppression Rules

-

Estimate

Suppress if:

Prevalence Estimate,

,

with Nominal Sample Size, n,

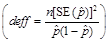

and Design Effect, deff

,

with Nominal Sample Size, n,

and Design Effect, deff

(1) The estimated prevalence estimate,

,

is < 0.00005 or > 0.99995, or

,

is < 0.00005 or > 0.99995, or(2)

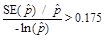

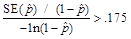

when

when

,

or

,

or when

when

,

or

,

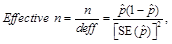

or(3) Effective n < 68, where

or

or(4) n < 100.

Note: The rounding portion of this suppression rule for prevalence estimates will produce some estimates that round at one decimal place to 0.0 or 100.0 percent but are not suppressed from the tables.2

Estimated Number

(Numerator of

)

)The estimated prevalence estimate,

,

is suppressed.

,

is suppressed.Note: In some instances when

is not suppressed, the estimated number may appear as a 0 in the

tables. This means that the estimate is greater than 0 but less

than 500 (estimated numbers are shown in thousands).

is not suppressed, the estimated number may appear as a 0 in the

tables. This means that the estimate is greater than 0 but less

than 500 (estimated numbers are shown in thousands).Note: In some instances when totals corresponding to several different means that are displayed in the same table and some, but not all, of those means are suppressed, the totals will not be suppressed. When all means are suppressed, the totals will also be suppressed.

Means not bounded between 0 and 1 (i.e., Mean Age at First Use, Mean Number of Drinks),

,

with Nominal Sample Size, n

,

with Nominal Sample Size, n(1)

,

or

,

or(2) n < 10.

deff = design effect; RSE = relative standard error; SE = standard error.

NOTE: The suppression rules included in this table are used for detecting unreliable estimates and are sufficient for confidentiality purposes in the context of NHSBH’s annual finding reports and detailed tables.

NOTE: Starting in 2020 for confidentiality protection, survey sample sizes greater than 100 were rounded to the nearest 10, and sample sizes less than 100 were not reported (i.e., are shown as “<100” in tables).
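The suppression rules and the sample size reporting convention can be sketched as small checks. The 0.175 cutoff on the RSE of the log-transformed prevalence and the 0.5 RSE cutoff for means follow the published NSDUH suppression rules; approximating the RSE of $-\ln(q)$ by the first-order Taylor expansion $\mathrm{SE}(\hat{p}) / (q \cdot -\ln q)$, computing effective $n$ as $\hat{p}(1-\hat{p})/\mathrm{SE}^2$, and rounding ties upward in `report_sample_size` are assumptions of this sketch, not documented production code.

```python
import math


def rse_of_log_term(p_hat: float, se: float) -> float:
    """Approximate RSE of -ln(p_hat) (p_hat <= 0.5) or -ln(1 - p_hat) (p_hat > 0.5).

    Assumption: SE[-ln(q)] is approximated by SE(p_hat) / q (first-order Taylor
    expansion), so the RSE is SE(p_hat) / (q * -ln(q)).
    """
    q = p_hat if p_hat <= 0.5 else 1.0 - p_hat
    return se / (q * -math.log(q))


def suppress_prevalence(p_hat: float, se: float, n: int) -> bool:
    """Return True if a prevalence estimate should be suppressed."""
    if p_hat < 0.00005 or p_hat > 0.99995:            # rule (1)
        return True
    if rse_of_log_term(p_hat, se) > 0.175:             # rule (2)
        return True
    # Rule (3): effective n = n / deff = p(1 - p) / SE^2.
    effective_n = math.inf if se == 0 else p_hat * (1.0 - p_hat) / se ** 2
    if effective_n < 68:
        return True
    return n < 100                                      # rule (4)


def suppress_mean(mean: float, se: float, n: int) -> bool:
    """Means not bounded by 0 and 1 (assumes mean > 0): suppress if RSE > 0.5 or n < 10."""
    return se / mean > 0.5 or n < 10


def report_sample_size(n: int) -> str:
    """Show n < 100 as "<100"; otherwise round to the nearest 10 (ties rounded up)."""
    if n < 100:
        return "<100"
    return f"{math.floor(n / 10 + 0.5) * 10:,}"


print(suppress_prevalence(0.20, 0.01, 1_000))  # False
print(suppress_prevalence(0.90, 0.037, 300))   # True: effective n is about 65.7 < 68
print(report_sample_size(1_234))               # 1,230
```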

Mental Illness Calibration Study:

The MICS samples will be selected from the 2023–2024 NSDUH samples. Unlike for the Clinical Validation Study (CVS) conducted as part of the 2020 NSDUH, there will be no alteration (i.e., increase) to the NSDUH sample to account for MICS minimum sample requirements. This is because everyone in the sample, whether or not they participate in the MICS, will receive the same version of the NSDUH main study survey. The only difference between the MICS and non-MICS groups of respondents is that those who participate in the MICS will receive a follow-up clinical interview after completing the main NSDUH survey.

The 2023 and 2024 MICS samples are designed to yield 2,000 interviews per year. Each year, the probability sample will be distributed across four calendar quarters, resulting in approximately 500 MICS follow-up clinical interviews per quarter. As in the 2008–2012 Mental Health Surveillance Study (MHSS), the probability sample will be embedded in the main study sample; therefore, the initial interviews for the validation cases will be included in the target sample of approximately 50,625 main study adult interviews.

Individuals eligible for the MICS are adults aged 18 or older who answer the NSDUH main study interview questions in English. Persons who complete the main study interview in Spanish will not be eligible for the MICS. Based on prior NSDUH main study experience, we expect that approximately 97 percent of main study respondents aged 18 or older will be eligible for the MICS. As in the MHSS, a subsample of NSDUH respondents will be selected with probabilities determined by their score on the K6 nonspecific distress scale (Kessler et al., 2003), their World Health Organization Disability Assessment Schedule (WHODAS) score, and their age group. A probability sampling algorithm will be programmed into the main study interview instrument so that selected respondents are recruited for the follow-up clinical interview at the end of the main study interview.
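The embedded selection step can be pictured as an eligibility check followed by a random draw at a stratum-specific probability. In this sketch the eligibility rules (aged 18 or older, interviewed in English) come from the text; the stratum cut points, the two age bands, and every value in `SELECTION_PROB` are hypothetical placeholders, and the function names are illustrative only.

```python
import random

# HYPOTHETICAL stratum cut points and selection probabilities (illustration only).
SELECTION_PROB = {
    ("high", "18-25"): 0.20, ("high", "26+"): 0.15,
    ("moderate", "18-25"): 0.08, ("moderate", "26+"): 0.06,
    ("low", "18-25"): 0.02, ("low", "26+"): 0.015,
}


def distress_stratum(k6: int, whodas: int) -> str:
    """Assign a screening stratum from the K6 score (0-24) and the WHODAS score."""
    if k6 >= 13:
        return "high"
    if k6 >= 8 or whodas >= 8:
        return "moderate"
    return "low"


def select_for_mics(age: int, language: str, k6: int, whodas: int, rng: random.Random) -> bool:
    """Decide at the end of the main interview whether to recruit the respondent for the MICS."""
    if age < 18 or language != "English":
        return False  # only adults interviewed in English are eligible
    age_band = "18-25" if age <= 25 else "26+"
    prob = SELECTION_PROB[(distress_stratum(k6, whodas), age_band)]
    return rng.random() < prob


rng = random.Random(42)
print(select_for_mics(34, "English", k6=15, whodas=10, rng=rng))
```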

As in the MHSS, the MICS will be a stratified sample selected from NSDUH respondents, with strata defined by their K6 and WHODAS scores. Neyman optimal allocation (Lohr, 1999) will be applied to the strata (i.e., stratum selection probabilities are proportional to the standard deviation of the measure in question). Assuming a fixed sample size and equal costs across strata, Neyman allocation increases the precision of key outcome variables (e.g., serious mental illness [SMI]) compared with other allocations. Neyman allocation is also robust to sample shortfalls: if sample sizes fall short in some strata, moderate deviations from the optimal allocation result in only a small loss of precision. This design minimizes the sample size required for the MICS in two ways: (1) by increasing the yield of SMI cases within the MICS sample (i.e., all else being equal, the closer the yield is to 50 percent, the smaller the sample size required for analysis), and (2) by reducing the design effect within the MICS sample through the Neyman allocation.
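Neyman allocation with equal per-unit costs sets $n_h = n \cdot N_h S_h / \sum_g N_g S_g$, so the stratum sampling rate $n_h / N_h$ is proportional to $S_h$, matching the parenthetical above. The sketch below applies this to three hypothetical strata; the stratum sizes and assumed SMI prevalences are invented for illustration, and $S_h = \sqrt{P_h(1 - P_h)}$ is the standard deviation of a binary SMI indicator.

```python
from math import sqrt
from typing import List


def neyman_allocation(pop_sizes: List[float], std_devs: List[float], n: float) -> List[float]:
    """Neyman allocation with equal per-unit costs: n_h = n * N_h * S_h / sum_g(N_g * S_g)."""
    weights = [N * S for N, S in zip(pop_sizes, std_devs)]
    total = sum(weights)
    return [n * w / total for w in weights]


# HYPOTHETICAL strata of eligible adults (low, moderate, high distress) and assumed SMI prevalence.
N_h = [30_000, 12_000, 7_000]
smi_prev = [0.01, 0.10, 0.40]
S_h = [sqrt(p * (1 - p)) for p in smi_prev]  # SD of a binary SMI indicator per stratum

n_h = neyman_allocation(N_h, S_h, 2_000)
rates = [nh / N for nh, N in zip(n_h, N_h)]  # proportional to S_h
for label, nh, r in zip(["low", "moderate", "high"], n_h, rates):
    print(f"{label:>8}: n_h = {nh:7.1f}, selection probability = {r:.4f}")
```

Because strata with higher expected SMI prevalence have larger $S_h$, they receive higher selection probabilities, which raises the SMI yield of the subsample as described above.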

2. Information Collection Procedures

NHSBH Main Study:

Prior to the FI’s arrival at the sample dwelling unit (SDU), a Lead Letter (Attachment A) will be mailed to the resident(s) briefly explaining the survey and requesting their cooperation. In this letter, adult residents of each SDU will be given the option to participate in NHSBH via the web-based instruments (screening and interview) or to contact the Contractor directly about participating in person, with screenings and interviews administered by an FI.

Respondents may choose to participate via web or in person. Throughout the data collection period, respondents may complete screenings and/or interviews online instead of in person with an FI, or vice versa, and may switch between modes when available.

In-Person Data Collection:

Upon arrival at the SDU, the FI will refer the resident to the Lead Letter and answer any questions. If the resident has no knowledge of the letter, the FI will provide another copy, explain that one was previously sent, and then answer any questions. If no one is home during the initial visit to the SDU, the FI may leave a Sorry I Missed You Card (included in Attachment D) informing the resident(s) that the FI plans to make another callback at a later date/time. The card also reminds respondents about their option to complete the screening (and/or interview) online. Callbacks will be made as soon as feasible following the initial visit. FIs will attempt to make at least four callbacks (in addition to the initial call) to each SDU to complete the screening process and complete an interview, if yielded.

If the FI is unable to contact anyone at the SDU after repeated attempts, that FI’s Field Supervisor (FS) may send an Unable-to-Contact (UTC) letter (included in Attachment F). These UTC letters reiterate information contained in the Lead Letter and present a plea for the resident to participate in the study.

When in‑person contact is made with an adult member of the SDU and introductory procedures are completed, the FI will present a Study Description (Attachment E) and answer any questions that person might have concerning the study.

FIs may also give respondents a Question & Answer Brochure (Attachment B) that answers commonly asked questions. In addition, FIs are supplied with copies of the NHSBH Highlights (Attachment O), which they may use to encourage participation and may leave with the respondent.

Also, the FI may use the multimedia capability of the touch-screen tablet to play one of two short videos (approximately 60 seconds each) for members of the SDU: one briefly explains the study (NHSBH Introductory Video), and the other explains the benefits of participating (NHSBH Benefits Video). The scripts for these videos are included in Attachment C.

If a potential respondent refuses to be screened, the FI is trained to accept the refusal in a positive manner, minimizing the possibility of creating an adversarial relationship that might preclude future contact. The FS may then request that one of several Refusal Letters (Attachment G) be sent to the residence. Each letter addresses common concerns and asks the resident to reconsider participating. Refusal letters are customized and include the FS’s phone number in case the potential respondent has questions or would like to set up an appointment with the FI. The refusal letters also explain how to complete the screening (and interview) via the web. Unless the respondent calls the FS, CBHSQ, or the Contractor’s office to refuse participation, an in-person conversion is then attempted by specially selected FIs with successful conversion experience.

For respondents who request to complete the screening (or interview) online or who refuse the in-person screening (or interview), the FI has the option to offer the SDU’s unique 10-digit alphanumeric participant code for online participation. If a respondent agrees to accept the code, the FI retrieves the code on their tablet, writes it on the Participant Code Card (Attachment P), and hands the card to the respondent. This card also provides instructions for accessing the NHSBH screening/interviewing website (including a QR code for quick access) and the toll-free number for the NHSBH respondent call line (RCL) in case the respondent has questions.

In addition, to show that a diverse group of people participate in NHSBH and thereby increase respondents’ interest, the FI has the option to give respondents the Engaging Respondents Handout (Attachment Q). This handout was designed to promote participation among underrepresented subpopulations, including LGBTQI+, Asian, and Native Hawaiian and Pacific Islander populations.

To help allay respondent fears about confidentiality and data security, FIs may also provide the Data Security Handout (Attachment R), which addresses respondents’ main barriers (e.g., feeling targeted, fear of being scammed, and concerns about how the federal government will handle their data). Furthermore, to help convey the legitimacy and importance of NHSBH, especially to respondents who want to know how NHSBH data are used in their state, FIs may give respondents the State Highlight for their state (see example in Attachment S). This material presents state-level statistics in graphs, which may reassure respondents who mistake NHSBH for a marketing survey.

With respondent cooperation, the FI will begin screening the SDU by asking either the Housing Unit Screening questions or the Group Quarters Unit Screening questions, as appropriate. The screening questions are administered using a touch-screen Android tablet computer. The housing unit and group quarters unit screening questions are shown in Attachment T.

Once all household members aged 12 or older have been rostered, the hand-held computer performs the within-dwelling-unit sampling process, selecting zero, one, or two members to complete the interview. For cases with no one selected, the FI asks for a name and phone number for use in verifying the quality of the FI’s work, thanks the respondent, and concludes the household contact.
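The within-dwelling-unit step, selecting zero, one, or two rostered members with higher rates for younger age groups, can be illustrated with ordered systematic sampling: each member's rate is laid end to end on a line, a uniform start $u \in [0, 1)$ is drawn, and members whose intervals contain $u$ or $u + 1$ are selected. This gives member $i$ a selection probability equal to its rate and never selects more than two people when the rates sum to at most 2. This is a swapped-in technique for illustration: the NHSBH's actual within-DU selection algorithm is not specified here, and the age-group rates are hypothetical. A production design would also need a rule for large households whose rates sum to more than 2; this sketch simply raises an error.

```python
import random
from bisect import bisect_right
from itertools import accumulate
from typing import Dict, List

# HYPOTHETICAL selection rates by age group; younger groups get higher rates.
AGE_GROUP_RATES: Dict[str, float] = {
    "12-17": 0.60, "18-25": 0.55, "26-34": 0.25, "35-49": 0.20, "50+": 0.15,
}


def age_group(age: int) -> str:
    """Map an age (12 or older) to one of the five age group strata."""
    if age <= 17:
        return "12-17"
    if age <= 25:
        return "18-25"
    if age <= 34:
        return "26-34"
    if age <= 49:
        return "35-49"
    return "50+"


def select_within_du(rates: List[float], rng: random.Random) -> List[int]:
    """Select 0, 1, or 2 roster members; member i is selected with probability rates[i].

    Requires each rate to be in [0, 1] and the total to be at most 2.
    """
    if any(r < 0 or r > 1 for r in rates) or sum(rates) > 2 + 1e-9:
        raise ValueError("each rate must be in [0, 1] and the total must not exceed 2")
    cumulative = list(accumulate(rates))
    u = rng.random()
    # Member i owns the interval [cumulative[i-1], cumulative[i]).
    return [bisect_right(cumulative, point) for point in (u, u + 1.0)
            if cumulative and point < cumulative[-1]]


roster_ages = [44, 41, 16, 13]  # a hypothetical four-person household
rates = [AGE_GROUP_RATES[age_group(a)] for a in roster_ages]
print(select_within_du(rates, random.Random(12)))
```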

For each person selected to complete the interview, the FI follows these steps:

If the selected individual is aged 18 or older and is currently available, the FI immediately seeks to obtain informed consent. Once consent is obtained, the FI begins to administer the questionnaire in a private setting within the dwelling unit. As necessary and appropriate, the FI may make use of the Appointment Card (included in Attachment D) for scheduled return visits with the respondent.

If the selected individual is 12 to 17 years of age, the FI reads the Parental Introductory Script (Attachment L) from the tablet to the parent or guardian before speaking with the youth about the interview. Subsequently, parental consent is sought from the selected individual’s parent or legal guardian using the parent section of the youth version of the Introduction and Informed Consent Scripts (Attachment J). Once parental consent is granted, the minor is then asked to participate using the youth section of the same document. If assent is received, the FI begins to administer the questionnaire in a private setting within the dwelling unit with at least one parent, guardian or another adult remaining present in the home throughout the interview.

As mentioned in Section A.3, the FI administers the interview in a prescribed and uniform manner, with sensitive portions of the interview completed via audio computer-assisted self-interviewing (ACASI). Regarding the guidelines for meeting the OMB minimum race/ethnicity categories, CBHSQ is requesting an exemption from the revisions to race and ethnicity data classification under revised Statistical Policy Directive No. 15: Standards for Maintaining, Collecting, and Presenting Federal Data on Race and Ethnicity (SPD 15) (see Section A.7 for more details).

In order to facilitate the respondent’s recollection of prescription-type drugs and their proper names, pill images will appear on the laptop screen during the ACASI portions of interviews as appropriate. Also, respondents will use an electronic reference date calendar, which displays automatically on the computer screens when needed throughout the ACASI parts of the interview. Finally, in the FI-administered portion of the questionnaire, showcards are included in the Showcard Booklet (Attachment K) that allow the respondent to refer to information necessary for accurate responses.

After the interview is completed, each respondent is given a $30 cash incentive and an Interview Incentive Receipt (Attachment H) signed by the FI.

For verification purposes, IRs are asked to complete an electronic Quality Control Form that requests their telephone number so that Contractor staff can call to confirm that the FI followed proper procedures. Respondents are informed that completing the Quality Control Form is voluntary. If they agree, the FI completes this form using the handheld tablet computer, and the data are securely delivered directly to the Contractor’s office as part of that FI’s next successful transmission.

FIs may give a Certificate of Participation (Attachment U) to interested respondents after the interview is completed. Respondents may attempt to use these certificates to earn school or community service credit hours; as stated on the certificate, SAMHSA and the Contractor do not guarantee that credit will be granted. The FI signs and dates the certificate, but for confidentiality reasons the section for the respondent’s name is left blank. The respondent can fill in their name later, so the FI does not learn the respondent’s identity. It is the respondent’s choice whether to identify themselves as an NHSBH respondent by using the certificate to seek school or community service credit.

A random sample of those who complete Quality Control Forms are contacted via telephone to answer a few questions verifying that the interview took place, that proper procedures were followed, and that the amount of time required to administer the interview was within expected parameters. The CATI Verification Scripts (Attachment V) contain the scripts for these interview verification contacts via telephone, as well as the scripts used when verifying a percentage of certain completed screening cases in which no one was selected for an interview or the SDU was otherwise ineligible (vacant, not primary residence, not a dwelling unit, dwelling unit contains only military personnel, respondents living at the sampled residence for less than half of the quarter).

All interview data are transmitted on a regular basis via secure encrypted data transmission to the Contractor’s offices in a FIPS-Moderate environment, where the data are subsequently processed and prepared for reporting and data file delivery.

In-Person Questionnaire:

As explained in Section A.3, the version of the NHSBH questionnaire to be fielded is a computerized instrument that combines computer-assisted personal interviewing (CAPI) with ACASI.

As in past years, two versions of the instrument will be prepared: an English version and a Spanish translation. Both versions will have the same essential content.

The proposed In-Person CAI Questionnaire is shown in Attachment N. While the actual administration will be electronic, the document shown is a paper representation of the content that is to be programmed. The interview process is designed to retain respondent interest, ensure confidentiality, and maximize the validity of responses. The questionnaire is administered in such a way that FIs do not know respondents’ answers to sensitive questions, including those on illicit drug use and mental health. These questions are self-administered using ACASI. The respondent listens to the questions privately through headphones so even those who have difficulty seeing or reading are able to complete the self-administered portion. Topics administered by the FI (i.e., the CAPI section) are limited to Demographics, Health Insurance, and Income. Respondents are given the option of designating an adult proxy who is at home to provide answers to questions in the Health Insurance and Income sections.

The interview consists of a combination of FI-administered and self-administered questions. FI-administered questions at the beginning of the interview consist of initial demographic items. The first set of self-administered questions pertains to the use of nicotine, alcohol, marijuana, cocaine, crack, heroin, hallucinogens, inhalants, methamphetamine, and prescription psychotherapeutic drugs (i.e., pain relievers, tranquilizers, stimulants, and sedatives). Similar questions are asked for each substance or substance class, ascertaining the respondent’s history in terms of age at first use, most recent use, number of times used in lifetime, and frequency of use in the past 30 days and past 12 months. These substance use histories allow estimation of the incidence, prevalence, and patterns of use for licit and illicit substances.

Additional self-administered questions or sections follow the substance use questions and ask about a variety of sensitive topics related to substance use and mental health issues. These topics include (but are not limited to) injection drug use, perceived risks of substance use, substance use disorders, arrests, treatment for substance use problems, pregnancy, mental illness, the utilization of mental health services, disability, and employment and workplace issues. Additional FI-administered CAPI sections at the end of the interview ask about the household composition, the respondent’s health insurance coverage, and the respondent’s personal and family income.

The detailed specifications for the proposed In-Person CAI Questionnaire are provided in Attachment N.

Web-based Data Collection:

The Lead Letter sent to all SDUs will contain the mailing address and the 10-digit alphanumeric participant code unique to each dwelling unit, for use by those choosing to participate via web. The letter also contains the address of the NHSBH respondent website, which provides general information about the study for all respondents, and the toll-free number for the NHSBH RCL. The letter also emphasizes that a resident must be 18 or older to serve as the screening respondent and that parental permission is required for interviews with youth respondents. Using the website address provided in the Lead Letter, an adult resident of the SDU (aged 18 or older) can access the NHSBH web screening from any device with internet access (computer, tablet, phone, etc.). The website’s HTTPS encryption will provide sufficient security for all information entered from any device via any internet connection (public Wi-Fi, cellular, home Wi-Fi, etc.).

The letter also includes a QR code that respondents can scan to access the web screening site without typing the web address, although respondents will still need to enter their unique participant code to begin the screening.

The web-based screening (and any subsequent interviews) will be available in English and Spanish. Residents of SDUs who cannot read English or Spanish well enough to complete the screening (and interview) without assistance will not be able to participate, because they will not be able to read and understand the Lead Letter they receive.

If the resident chooses to complete the online screening (Attachment WEB-6), all household members aged 12 or older will be rostered, with the screening respondent providing basic demographic information for each, and the selection algorithm will then determine whether any eligible residents are selected for the interview. At the end of the web-based screening, just as in the in-person screening, 0, 1, or 2 residents aged 12 or older may be selected to complete the NHSBH interview.

The IRs will be identified on the interview selection screen by their age and relationship to the screening respondent (SR) (e.g., 14-year-old child, 46-year-old spouse) and clearly labeled as Interview A and/or Interview B.

If one or both of the adult IRs are not the screening respondent, the screening respondent will be asked to inform those adult IR(s) verbally of the interview selection. (Also, as described below, each adult IR will receive a follow-up letter about completing the interview.)

If one or both of the IRs are youth respondents (aged 12-17), the screening respondent will be asked to verbally inform the parent(s) of the youth (if the screening respondent is not also the parent) that: 1) the youth was selected for an interview; 2) verbal parental permission is required via telephone before the youth can participate in the interview; and 3) the toll-free phone number to call to conduct the parental permission and youth assent process for the interview.

In addition to the Lead Letter, SDUs will receive a series of follow-up letters reminding them of their selection for NHSBH. A few days after the Lead Letter arrives, all SDUs will receive a Web Follow-up Letter (included in Attachment WEB-3) as a reminder to complete the screening. This letter will contain the address of the screening website (with a QR code for quick access) and the SDU’s participant code.

Then, once each week for the three weeks following the mailing of the Lead Letter, SDUs that have not yet completed the web screening will receive additional follow-up correspondence (included in Attachment WEB-3). Once an adult resident of an SDU completes the web screening, no further screening follow-up letters will be mailed to that SDU.
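As a rough illustration of the reminder sequence described above, the sketch below lists the mailings one SDU would receive. It is a hypothetical sketch, not the Contractor's mailing system. It assumes the Web Follow-up Letter goes out 4 days after the Lead Letter (the text says only "a few days") and that the weekly reminders fall 7, 14, and 21 days after the Lead Letter. The function and constant names are illustrative.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

# Hypothetical offset: the text says only "a few days" after the Lead Letter.
WEB_FOLLOW_UP_OFFSET_DAYS = 4
# Weekly reminders during the three weeks following the Lead Letter mailing.
WEEKLY_REMINDER_OFFSETS_DAYS = (7, 14, 21)


def screening_mailings(
    lead_letter_date: date, screening_completed: Optional[date] = None
) -> List[Tuple[str, date]]:
    """Return (mailing name, mail date) pairs for one SDU.

    The Web Follow-up Letter goes to every SDU; weekly reminders stop
    once an adult resident has completed the web screening.
    """
    mailings = [
        ("Lead Letter", lead_letter_date),
        ("Web Follow-up Letter",
         lead_letter_date + timedelta(days=WEB_FOLLOW_UP_OFFSET_DAYS)),
    ]
    for week, offset in enumerate(WEEKLY_REMINDER_OFFSETS_DAYS, start=1):
        mail_date = lead_letter_date + timedelta(days=offset)
        # Stop at the first reminder dated on or after screening completion.
        if screening_completed is not None and mail_date >= screening_completed:
            break
        mailings.append((f"Weekly reminder {week}", mail_date))
    return mailings
```

For example, `screening_mailings(date(2025, 1, 6), screening_completed=date(2025, 1, 15))` returns only the Lead Letter, the Web Follow-up Letter, and the first weekly reminder.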

If the screening respondent is one of the adult IRs, they have the option to begin the web interview immediately once selected. IRs can also access their interview at a later time of their choosing (until the end of the quarterly data collection period) by going to the website address provided on the Lead Letter (the same address used for the screening) and entering the participant code unique to that SDU.

When an IR first accesses the interview, they must review the informed consent documentation for the web-based interview. This text provides many of the same elements as the informed consent text that FIs read to in-person interview respondents, but it has been updated to include text specific to web administration.

This Intro to CAI text (included in the web-based CAI Questionnaire specifications, Attachment WEB-4) asks each adult IR to confirm they are 18 years old or older and that they are at home in a private location where no one else can see their answers. IRs are also encouraged to complete the interview in one sitting rather than breaking off and resuming later.

Also, the screen with this Intro to CAI text will contain a link to the NHSBH Web-based Study Description (Attachment WEB-2); when clicked, a separate window will display the Study Description for an IR to review, download as a PDF and/or print at their discretion.

At the end of the Intro to CAI, each respondent will be asked to confirm he/she is 18 years old or older, is a current resident of the SDU, is at home in a private location and has read and understands the information provided in the Intro to CAI about participating in the web interview. After clicking an acceptance box, the IR can then advance to the next screen. Those who do not wish to proceed are then given the option to exit the interview at that time.

As an additional layer of security, after advancing past the Intro to CAI text, each adult respondent will be required to set a unique 4-digit PIN code of their own choosing before they begin self-administering interview questions. This will prevent anyone else within the dwelling unit from accessing the interview and seeing answers to questions.

Before a web-based interview with a youth respondent can begin, a parent/guardian of that youth respondent (and the youth selected as the respondent) must speak with a telephone interviewer (TI) at the Contractor’s call center. Parents will be given a telephone number displayed at the end of the web screening (and in follow-up letters mailed after the screening) to place a call to speak with the TI.

Once the parent and TI are on the phone, the TI will ask the parent to confirm the SDU address and the unique Participant Code printed on the Lead Letter (and any follow-up letters) mailed to that SDU. This verifies that the TI is speaking with residents of the dwelling unit. All required elements of this call, including interactions with parents and youth respondents, will be scripted for each TI to read and are included as Attachment WEB-5.

The TI will read an informed consent script to the parent over the telephone. This script is read first to the parent. It covers the expectations that the parent and youth be at home, that the youth be in a private location, and that parents not view the youth's answers. It also covers the nature of the questions, among other topics. After speaking with the parent, the TI will read an assent script to the youth about the content of the interview.

A text version of this youth assent script will also be displayed again within the online interview for the youth to read and acknowledge before they begin the interview (described in detail below).

Once parental permission and youth assent have been given verbally, the youth interview can be accessed from the NHSBH website at any time. Until that permission is logged, the youth interview cannot be accessed.

Similar to the adult interviews, as an additional layer of security, after advancing past the Intro to CAI text, each youth respondent will be required to set a unique 4-digit PIN code of their own choosing before they begin self-administering interview questions. This will prevent anyone else within the dwelling unit from accessing the interview and seeing answers to questions.
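The access rules described above can be summarized as a few checks:

* Youth interviews stay locked until parental permission is logged.
* Questions start only after the Intro to CAI is accepted and a 4-digit PIN is set.
* Returning to an interview requires the same PIN.

The sketch below is hypothetical. The class and field names are illustrative and do not come from the Blaise 5 instrument. It also stores the PIN in plain text for brevity, whereas a real system would likely store only a hash.

```python
from dataclasses import dataclass


@dataclass
class InterviewAccess:
    """Hypothetical access state for one IR; field names are illustrative."""
    is_youth: bool
    parental_permission_logged: bool = False  # set after the parent/youth TI call
    intro_accepted: bool = False  # Intro to CAI acceptance box checked
    pin: str = ""  # 4-digit PIN chosen by the respondent (plain text here)


def valid_pin(pin: str) -> bool:
    """A PIN is exactly four ASCII digits, e.g., "0427"."""
    return len(pin) == 4 and pin.isascii() and pin.isdigit()


def can_open_interview(access: InterviewAccess) -> bool:
    """Adult interviews open directly; youth interviews need logged permission."""
    return access.parental_permission_logged or not access.is_youth


def can_start_questions(access: InterviewAccess) -> bool:
    """Questions begin only after the intro is accepted and a valid PIN is set."""
    return (can_open_interview(access)
            and access.intro_accepted
            and valid_pin(access.pin))


def can_resume(access: InterviewAccess, entered_pin: str) -> bool:
    """Returning to an interview requires re-entering the same PIN."""
    return valid_pin(access.pin) and entered_pin == access.pin
```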

Every respondent who completes the web-based interview, adult or youth, will be eligible for the $30 incentive and will select a preferred method for receiving it: either an electronic Visa or MasterCard gift card or a physical Visa or MasterCard gift card delivered to the SDU. Each respondent will also receive a thank-you email or letter for their participation (Attachment WEB-7).

Each IR will also be offered a link to the web-based version of the NHSBH Q&A brochure (included as Attachment WEB-1). This version is similar to the brochure given by FIs during in-person data collection, but it is formatted for the web and makes no mention of an in-person interview.

Web-based Questionnaire:

Respondents who choose to take part in the web-based interview will be asked to complete it at home, in a private location within the home. They will also be asked to allow enough time to complete it in one sitting. Respondents can access the interview from any device with internet access (smartphone, tablet, computer, etc.). However, they will be advised to use a laptop or desktop computer in their home for the best viewing experience with the Blaise 5 interview program. The entire questionnaire for the web interview is included as Attachment WEB-4.

Respondents who have difficulty accessing the web interview, need other assistance with the interview, or have questions about their participation can call the toll-free telephone number provided on the Lead Letter. There they can receive technical support and answers to their questions about participation in NHSBH.

For web-based interviewing, various design changes were implemented in 2020 to optimize the experience for web respondents. These changes particularly target respondents with lower literacy levels, who might have benefited from the ACASI text-to-speech feature of the in-person interview, which is not available in the web interview. The changes include the following:

use of bold and blue-colored text to draw attention to keywords;

transition of several screens to a more user-friendly grid format to limit the repetitiveness of some questions; and

spreading text-heavy items, such as the Intro to CAI, across multiple screens to avoid excessive scrolling, etc.

The decision not to include ACASI in the web-based interviews was made after consultation with subject matter experts. They concluded that offering audio would pose a greater risk of breaching confidentiality than offering no audio at all. For example, audio could allow others to overhear answers that a respondent endorsed earlier when those answers are “filled” into the text of a later question.

Prior to particularly sensitive modules in the ACASI section of the web-based interview, prompts are available to remind respondents to be sure they are in a private location. Also, similar to the in-person interview, respondents will have the option to enter “Don’t Know” or “Refused” to any question, with those options shown on screen with the other answer choices.

Respondents who answer “Yes” to any of the questions about past year suicidal ideation or attempts will receive a follow-up screen at the end of that series of questions. This screen reminds them of the helpline contact information in case they would like to speak with a mental health professional.
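The trigger for the helpline screen is a simple "any item endorsed" rule. A minimal sketch is below. The item names are hypothetical placeholders, since the actual variable names are in Attachment WEB-4. This sketch also assumes that "Don't Know", "Refused", and skipped items do not trigger the screen.

```python
from typing import Mapping

# Hypothetical item names; the real ones are defined in Attachment WEB-4.
SUICIDE_ITEMS = ("past_year_serious_thoughts", "past_year_attempt")


def show_helpline_screen(answers: Mapping[str, str]) -> bool:
    """True if any past year suicidal ideation/attempt item was answered "Yes".

    In this sketch, "Don't Know", "Refused", and missing answers do not
    trigger the follow-up screen.
    """
    return any(answers.get(item) == "Yes" for item in SUICIDE_ITEMS)
```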

The detailed specifications for the proposed web-based interview are provided in Attachment WEB-4.

Mental Illness Calibration Study:

All NSDUH main study respondents, whether or not they are selected for the MICS, will complete the same NSDUH main study interview. The NSDUH instrument does not differ based on whether the respondent is selected for MICS, as the selection process occurs after the main study interview is complete.

Respondents aged 18 years and older who complete the main study interview, either web-based or in-person, and are selected for the MICS interview, will be recruited to participate. FIs and respondents will be blind to the respondent selection criteria for the MICS and will not know a respondent has been selected for the MICS until the follow-up clinical interview recruitment scripts (included in Attachment MICS-2) appear at the end of the main study NSDUH interview.

In-person MICS Recruitment:

NSDUH FIs will be trained on the in-person MICS recruitment process as part of veteran and new-to-project FI training sessions. The FI will read the follow-up clinical interview recruitment scripts from the laptop verbatim to the selected respondent and provide them with a hard copy of the Follow-up Study Description (Attachment MICS-7). The Follow-up Study Description will include important details about the follow-up clinical interview, a clear description of the follow-up clinical interview procedures, and information about confidentiality protections identical to the information provided for the main study.

If the respondent agrees, the FI will ask for the respondent’s first name, phone number, and email address so the respondent can be contacted later to schedule the follow-up interview. The FI will then hand the respondent a scheduler card (Attachment MICS-3). The card gives access, via URL or QR code, to the project’s online scheduling system, where the respondent can select an interview date and time. After these recruitment screens are completed, the FI will have no further contact with the respondent, and all future contact will come from CIs or MICS systems.

Web-based MICS Recruitment:

The follow-up clinical interview recruitment scripts that appear at the end of the web-based main study interview are self-administered. The screens first let the respondent know they have been selected for the follow-up clinical interview. They then provide a web-based version of the Follow-up Study Description (Attachment MICS-7). If the respondent agrees, the screens ask for their first name, phone number, and email address so they can be contacted. Although this information will be collected within the main study interview, it will be used only for re-contact purposes.

If a respondent agrees to complete a follow-up clinical interview, the web-based main study interview will automatically open the project’s online scheduling system in a new browser window. This allows the respondent to select a follow-up clinical interview date and time on the spot. After these recruitment screens are completed, all future contact will come from CIs or MICS systems.

Online Scheduling System & Public and Private Sites:

After an in-person or web respondent agrees to participate in the follow-up clinical interview and provides a valid email address, the respondent will receive an email with information for accessing the project’s online scheduling system, including a respondent-specific URL. Respondents log in on a specific page of the NSDUH website in one of two ways. They can log in manually, using the passcode provided by the FI. They can also log in automatically through the URL in the email, which embeds the respondent-specific password.

CIs will have access to the project’s private website, which includes a calendar system that works in real-time alongside the project’s online scheduling system to offer interview appointment options for each respondent. Once a respondent selects an interview appointment time, that timeslot is no longer offered to other respondents, and the case is assigned to the CI.
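The booking behavior described above has two parts. A selected timeslot is withdrawn from the options offered to other respondents, and the case is assigned to the CI who owns that slot. The sketch below is hypothetical, and its class and method names are illustrative. It keeps everything in memory, whereas a real-time multi-user system would need the reservation to be atomic (for example, inside a database transaction) so that two respondents cannot book the same slot.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class AppointmentCalendar:
    """Hypothetical shared calendar: each open slot belongs to one CI."""
    open_slots: Dict[datetime, str] = field(default_factory=dict)  # slot -> CI
    bookings: Dict[str, datetime] = field(default_factory=dict)    # case -> slot
    assignments: Dict[str, str] = field(default_factory=dict)      # case -> CI

    def offered_slots(self) -> List[datetime]:
        """Slots still offered to respondents, in chronological order."""
        return sorted(self.open_slots)

    def book(self, case_id: str, slot: datetime) -> Optional[str]:
        """Reserve a slot for a case; return the assigned CI, or None if taken."""
        ci = self.open_slots.pop(slot, None)  # removal stops further offers
        if ci is None:
            return None
        self.bookings[case_id] = slot
        self.assignments[case_id] = ci
        return ci
```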

Follow-up Clinical Interview Contacting Procedures:

Follow-up contact with respondents will occur using two distinct, yet connected, processes – automated contacting and CI contacting – that differ based on case status.

Automated contacting methods will include email and text messaging that are generated by various project systems based on current case status.

Automated emails – Multiple systems work together to send emails automatically to respondents and CIs at different stages of data collection, based on the current case status or a recent event. These emails (included in Attachment MICS-10) will only be sent to respondents who provide a valid email address at the time of recruitment.

Text messaging – This method will be used to send respondents messages when an appointment is scheduled or missed (Attachment MICS-11). CIs will request text messages be sent to respondents through the MICS case management system (CMS). If respondents do not provide a valid phone number and/or do not consent to text messaging at the time of recruitment, these messages will not occur.
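The conditions above determine which electronic channels are available for each respondent:

* Email requires a valid email address.
* CI phone calls require a valid phone number.
* Text messages require both a valid phone number and consent to texting.

The sketch below is hypothetical, with illustrative names that do not come from the MICS CMS. Mailed letters are not modeled here.

```python
from dataclasses import dataclass
from typing import Set


@dataclass
class ContactInfo:
    """What was collected at MICS recruitment (illustrative field names)."""
    email_valid: bool
    phone_valid: bool
    text_consent: bool


def allowed_channels(info: ContactInfo) -> Set[str]:
    """Electronic channels that may be used for a MICS respondent."""
    channels: Set[str] = set()
    if info.email_valid:
        channels.add("email")  # automated emails and CI emails
    if info.phone_valid:
        channels.add("phone")  # CI phone calls
        if info.text_consent:
            channels.add("text")  # texts need a valid number AND consent
    return channels
```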

CI contacting methods include phone calls and emails from the assigned CI. CIs will be provided with talking points (Attachment MICS-12) to use when contacting respondents. All CIs will be required to call or email respondents both on the day they provide the interview’s Zoom meeting information (Attachment MICS-13) and the day before the scheduled interview.

All contacts between CIs and respondents will be documented within the MICS CMS on the day the contact occurs. This will ensure automated systems work hand-in-hand with CI contacting procedures.

Additionally, letters will be sent to respondents in three situations, each with its own letter:

* They have refused to participate (Attachment MICS-14).
* They cannot be contacted via phone or email (Attachment MICS-15).
* They have not scheduled an interview, and previous contacting attempts have been unsuccessful to date (Attachment MICS-16).

Project Data Collection Managers will request these letters on an ad hoc basis.

Missed appointments will be handled in several ways:

No-show protocol – CIs will adhere to the following procedure when a respondent is late or does not show up during their scheduled interview time:

If a respondent has not joined Zoom within the first 5 minutes, the CI calls the respondent (if a valid phone number was provided) to make sure they are not having technical difficulties. If the respondent doesn’t answer, the CI leaves a message. Next, the CI sends a follow-up email (if a valid email address was provided). The email reminds the respondent of the appointment and asks whether they need to reschedule. This process takes approximately 5 minutes. If the respondent has not joined within 15 minutes of the scheduled start time, the CI documents the event as a missed appointment.

First and second missed appointments – CIs will enter an event code based on how many appointments the respondent has missed. After each of the first two missed appointments, if the respondent has provided a valid email address, a missed appointment email will be sent automatically asking them to reschedule.

Third missed appointment – CIs will finalize the case as incomplete and no further contact is made.
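The no-show and missed appointment rules above can be written as two small functions. The first lists the CI actions due at a given number of minutes past the scheduled start. The second lists the follow-up steps after the nth missed appointment. This is a hypothetical sketch, and the function names and action labels are illustrative. The optional missed appointment text message is left out because it is at the CI's discretion.

```python
from typing import List

CALL_AFTER_MINUTES = 5  # CI reaches out if respondent hasn't joined Zoom by then
MISSED_AFTER_MINUTES = 15  # missed appointment documented 15 minutes after start
FINALIZE_AT_MISSES = 3  # the third missed appointment closes the case


def no_show_actions(
    minutes_late: int, phone_valid: bool, email_valid: bool
) -> List[str]:
    """CI actions due once `minutes_late` minutes have passed with no respondent."""
    actions: List[str] = []
    if minutes_late >= CALL_AFTER_MINUTES:
        if phone_valid:
            actions.append("call respondent (leave message if no answer)")
        if email_valid:
            actions.append("send reminder email")
    if minutes_late >= MISSED_AFTER_MINUTES:
        actions.append("document missed appointment")
    return actions


def after_missed_appointment(missed_count: int, email_valid: bool) -> List[str]:
    """Follow-up steps after the respondent's nth missed appointment."""
    if missed_count >= FINALIZE_AT_MISSES:
        return ["finalize case as incomplete; no further contact"]
    steps = ["enter missed-appointment event code"]
    if email_valid:
        steps.append("automated email asking respondent to reschedule")
    return steps
```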

In addition, CIs will have the option to send a missed appointment text to respondents who consent to texting and provide a valid phone number. However, CIs must never store respondents' contact information on personal telephones. They should also delete any call log entries for all incoming and outgoing text messages with respondents. As discussed above, if an email address is provided, automated emails will also be used.

Interviewing Procedures:

The total average interview time for MICS respondents is expected to be about 120 minutes: approximately 60 minutes for the NSDUH main study interview and approximately 60 minutes for the MICS follow-up clinical interview. The estimated time to complete the follow-up clinical interview is based primarily on timing observed during the Mental Disorder Prevalence Study (MDPS) sponsored by SAMHSA. The SCID-5 used for MDPS included the overview and nine full diagnostic modules, among them schizophrenia and substance use disorders. It averaged about 83 minutes, including about 20 minutes of back-end Blaise material that was part of MDPS but will not be part of MICS. The abbreviated SCID-5 being used for MICS does not include the schizophrenia or substance use disorder modules, each of which takes 10-20 minutes, on average, to complete. Although MICS includes more disorders, many are rare disorders and/or have much shorter administration times (fewer questions) than the modules shared with MDPS. In addition, the SCID screener items will allow respondents to skip out of modules entirely if they do not endorse specific gate items. Several factors will keep the MICS instrument close to a 60-minute administration time: those above, the use of the NetSCID (Attachment MICS-1), and seasoned CIs who gained efficiency working on MDPS.
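The timing argument above can be restated as rough arithmetic on the averages cited. This is an approximation only. It treats the dropped content as two modules of 10 to 20 minutes each and does not model actual administration time.

```latex
\begin{aligned}
T_{\text{MICS respondent}} &\approx \underbrace{60}_{\text{main study}} + \underbrace{60}_{\text{follow-up}} = 120 \text{ min} \\
T_{\text{MDPS SCID}} &\approx 83 - 20 = 63 \text{ min (excluding back-end Blaise)} \\
T_{\text{MDPS SCID}} - 2 \times 20 &\approx 23 \text{ min}, \qquad T_{\text{MDPS SCID}} - 2 \times 10 \approx 43 \text{ min}
\end{aligned}
```

On this reading, the retained MDPS content would take roughly 23 to 43 minutes. That leaves about 17 to 37 minutes within the 60-minute target for the disorders MICS adds and for MICS's own front-end and back-end Blaise sections. The text expects the added disorders to fit in that window because they are mostly rare or short and are often skipped via screener gate items.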

Follow-up clinical interviews must be completed within four weeks following the completion of the NSDUH main study interview. Except for Quarter 4 data collection, MICS interviews generated during the last month of the quarter will be completed up to four weeks into the next data collection quarter. For Quarter 4 2023, the final date to complete a MICS interview will be December 31, 2023. For Quarter 4 2024, the final date to complete a MICS interview will be December 31, 2024. These data collection procedures mirror those used on prior NSDUH follow-up studies.
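The completion window above amounts to a deadline of four weeks after the main study interview, with Quarter 4 cases capped at December 31 instead of carrying over into the next quarter. The sketch below is hypothetical. It assumes that "four weeks" means 28 calendar days and that data collection quarters are calendar quarters, which matches the December 31 Quarter 4 cutoffs given above.

```python
from datetime import date, timedelta

FOLLOW_UP_WINDOW = timedelta(weeks=4)  # "within four weeks", read as 28 days


def mics_deadline(main_interview_completed: date) -> date:
    """Last date to complete the follow-up clinical interview.

    Four weeks after the main study interview, except that Quarter 4 cases
    (assumed to be October-December) may not carry over past December 31.
    """
    deadline = main_interview_completed + FOLLOW_UP_WINDOW
    if main_interview_completed.month >= 10:  # Quarter 4
        deadline = min(deadline, date(main_interview_completed.year, 12, 31))
    return deadline
```

For example, a main study interview completed March 20, 2024, gives a deadline of April 17, 2024, while one completed December 15, 2024, is capped at December 31, 2024.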

The follow-up clinical interview will be conducted in English only. The interview will include four sections: front-end Blaise, SCID overview, pertinent SCID screener modules, and back-end Blaise. The total number of questions asked of each respondent will depend on their answers to each SCID screener module. At the end of the follow-up interview, after the respondent leaves the Zoom meeting room, CIs will answer debriefing questions about the interview quality and confidentiality on their own.

CIs will record respondents’ answers directly into the Blaise and NetSCID instruments. At the beginning of the interview, after the informed consent process, CIs will ask respondents if they consent to the interview being recorded. If not, the interview will not be recorded. If yes, the interview will be recorded, and CIs will upload recordings to the project’s secure private site, from which interviews can be reviewed for quality purposes.

3. Methods to Maximize Response Rates

NHSBH Main Study:

In 2022, the weighted response rates were 25 percent for screening and 47 percent for interviews, with an overall response rate (screening * interview) of 12 percent. Despite being able to collect data in most areas, field response rates continued to be lower than they were before the COVID-19 pandemic. Further, because web-based data collection was expected to result in a much lower overall response rate than in-person data collection, a very large sample of DUs was selected in 2022.
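The overall rate cited above is the product of the screening and interview rates. A minimal sketch of that calculation is below, using the 2022 weighted rates from the text. The function name is illustrative, and this is not the full weighted response rate methodology, which would start from weighted case dispositions.

```python
def overall_response_rate(screening_rate: float, interview_rate: float) -> float:
    """Overall response rate as screening rate times interview rate."""
    for rate in (screening_rate, interview_rate):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be proportions between 0 and 1")
    return screening_rate * interview_rate


# 2022 weighted rates from the text: 25% screening, 47% interview.
overall = overall_response_rate(0.25, 0.47)
print(f"{overall:.2%}")  # 11.75%, reported in the text as 12 percent
```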

SAMHSA and Contractor staff work continuously with FIs to ensure all necessary steps are taken to achieve the best possible response rates. Despite those efforts, as shown in Table 2 (“Screening, Interview, and Overall NHSBH Weighted Response Rates, by Year” in Section A.9), response rates continue to decline. This decline in the screening, interview, and overall response rates over the past 10 years can be attributed to four main factors:

Increase in respondent refusals;

Decrease in refusal conversion;

Increase in controlled access barriers (i.e., gated communities, secured buildings, etc.); and

Addition of the web mode option for respondents.

Refusals at the screening and interviewing level have historically been a problem for NHSBH due to:

1) respondents feeling they are too busy to participate;

2) respondents feeling inundated with market research and other survey requests;

3) growing concerns about providing personal information due to raised awareness of identity theft and leaks of government and corporate data; and

4) concerns about privacy and increased anti-government sentiment.

The $30.00 cash incentive for interview completion was implemented beginning in 2002 (Wright et al., 2005). The decision to offer an incentive was based largely on an experiment conducted in 2001, which showed that providing incentives of this amount appeared to increase response rates. Wright and his coauthors explored the effect that the incentive had on nonresponse bias. The sample data were weighted by likelihood of response between the incentive and non-incentive cases. Next, a logistic regression model was fit using substance use variables and controlling for other demographic variables associated with either response propensity or drug use. For past year marijuana use, the results indicate one of two effects. The incentive either encourages users to respond who otherwise would not respond, or it encourages respondents who would have participated without the incentive to report drug use more honestly. Therefore, it is difficult to determine whether the incentive money is reducing nonresponse bias, response bias, or both. However, reports of past year and lifetime cocaine use did not increase in the incentive group, and reports of past month cocaine use were actually lower in the incentive group than in the control group.

In addition to the $30.00 cash incentive and contact materials, to achieve the expected response rates, NHSBH will continue utilizing study procedures designed to maximize respondent participation. This begins with assignment of the cases prior to the start of field data collection, accompanied by weekly response rate goals that are conveyed to the FIs by the FS. When making assignments, FSs take into account which FIs are in closest proximity to the work, FI skill sets, and basic information about the segment (demographics, size, etc.). FSs assign cases to the FIs in order to ensure maximum production levels at the start of the data collection period. To successfully complete work in remote segments or where no local FI is available, a traveling FI (i.e., a veteran NHSBH FI with demonstrated performance and commitment to the study) or a “borrowed” FI from another FS region can be utilized to prevent delays in data collection.

Once FIs transmit their work, data are processed and summarized in daily reports posted to a web-based CMS accessed by FSs, which requires two-factor authentication for log-in as part of a FIPS-Moderate environment. On a daily basis, FSs use reports on the CMS to review response rates, production levels, and record of call information to determine an FI’s progress toward weekly goals, to determine when FIs should attempt contact with a case, and to develop plans to handle challenging cases such as refusal cases and cases where an FI is unable to access the dwelling unit. FSs discuss this information with FIs on a weekly basis. Whenever possible, cases are transferred to available FIs with different skill sets to assist with refusal conversion attempts or to improve production in areas where the original FI has fallen behind weekly response rate goals.

Additionally, FSs hold group calls with FI teams to continuously improve FIs’ refusal conversion skills. FIs report the types of refusals they are currently experiencing, and the FS leads the group discussion on how to best respond to the objections and successfully address these challenges. FSs regularly, on group and individual FI calls, work to increase the FI’s ability to articulate pertinent study information in response to common respondent questions.

Periodically throughout the year, response rate patterns are analyzed by state. States with significant changes are closely scrutinized to uncover possible reasons for the changes. Action plans are put in place for states with significant declines. Response and nonresponse patterns are also tracked by various demographics on an annual basis in the NHSBH Data Collection Final Report. The report provides detailed information about noncontacts versus refusals, including reasons for refusals. This information is reviewed annually for changes in trends.

As noted in Section B.2 above, FIs may use a Sorry I Missed You Card (included in Attachment D), NHSBH Highlights (Attachment O), and a Certificate of Participation (Attachment U) to help make respondent contact and encourage participation. To aid in refusal conversion efforts, Refusal Letters (Attachment G) can be sent to any case that has refused. Similarly, a UTC Letter (included in Attachment F) may be sent to a selected household if the FI has been unable to contact a resident after multiple attempts. In situations where a respondent requests to complete the screening (or interview) online or refuses the in-person screening (or interview), FIs may fill out and provide a Participant Code Card (Attachment P) to a respondent that includes instructions for accessing the NHSBH screening/interviewing website.

For cases where FIs have been unable to gain access to a group of SDUs due to some type of access barrier, such as a locked gate or doorperson, Controlled Access Letters (included in Attachment F) can be sent to the gatekeeper to obtain their assistance in gaining access to the units. In situations where a doorperson is restricting access, the FI can provide that doorperson a card (Attachment W) to read to selected residents over the phone or intercom seeking permission to allow the FI into the building.

Aside from refusals and controlled access barriers, other field challenges contributing to a decline in response rates include:

An increase in respondents who are home but simply do not answer the door. In these instances, FIs try various conversion strategies (e.g., sending unable-to-contact/conversion letters) to address potential concerns that a respondent may have, while also respecting their right to refuse participation.

An increase in homes with video doorbells (Ring, Nest, etc.), where a respondent can view someone at their door via a smartphone. In these situations, FIs introduce themselves, show their ID badge, and note that they are following up on the Lead Letter the respondent should have received in the mail.

An increase in residents posting messages on neighborhood social media platforms, such as Nextdoor.com and Facebook, about FIs working in the area. These posts falsely claim that the study is a scam and warn residents not to open their doors. In these situations, FIs notify the local police department that they are working in the area so that any concerns raised by residents can be addressed.

An increase in the number of respondents calling into the project’s toll-free number to refuse participation before ever being visited in person by an FI. In these instances, both the FS and FI are notified and the case is coded as a final refusal and no subsequent visits are made to that address. These respondents will also be reminded of their option to participate via web.

An increase in hostile and adamant refusals. These cases are coded out as a final refusal and no subsequent visits are made to that address.

In addition to procedures outlined in this section, NHSBH currently employs the following procedures to avert refusal situations in an effort to increase response rates:

All aspects of NHSBH are designed to project professionalism and thus enhance the legitimacy of the project, including the NHSBH respondent website and materials. FIs are instructed to always behave professionally and courteously.

The NHSBH FI Manual includes specific instructions to FIs for introducing both themselves and the study. Additionally, an entire chapter is devoted to “Obtaining Participation” and lists the tools available to field staff along with tips for answering questions and overcoming objections.

During new-to-project FI training, trainers cover details for contacting DUs and how to deal with reluctant respondents and difficult situations. During exercises and mock interviews, FIs practice answering questions and using letters and handouts to obtain cooperation.

During veteran FI training, time is spent reviewing various techniques for overcoming refusals. The exercises and ideas presented help FIs improve their skills and thus increase their confidence and ability to handle the many situations encountered in the field.

Data have also shown that experienced FIs are more successful in converting refusals. Beginning in 2018, NHSBH implemented a yearly FI Tenure-Incentive Plan to reward FIs monetarily for their dedication to the project and ultimately to reduce FI attrition and ensure more experienced FIs are retained.

Also, NHSBH data will continue to be collected from respondents using a combination of in-person and web-based data collection modes. Like respondent incentives and FI training and procedures, the web option has the potential to address nonresponse factors observed in recent NHSBH data. First, a second mode for completing the survey offers an alternative to respondents who would prefer not to complete the survey with an FI. A significant portion of respondents might simply have a strong preference for participating via web rather than in an FI-administered mode (Olson, Smyth & Wood, 2012). Others might be uncomfortable allowing an FI into their home and be more comfortable completing the survey via web. Web mode provides an option to respondents who would otherwise not participate due to their preferences or concerns regarding FI administration.

Second, adding web mode has the potential to address historical response rate challenges affecting NHSBH data collection. As noted above, controlled access barriers such as gated communities and secured buildings have increased significantly over the past 12 years. Field staff have developed and refined controlled access procedures and materials for overcoming these barriers, but FIs are still frequently unable to access these properties. Web mode provides an alternative means of response for members of sample DUs in controlled access settings that FIs cannot enter. Other increasingly common barriers include people not answering the door to unexpected visitors and community forums that broadcast false information about NHSBH or the FI assigned to their area. Web mode gives members of SDUs another way to determine the legitimacy and purpose of NHSBH and to participate in the study without interacting in person with an FI.

Nonresponse Bias Studies:

In addition to the investigations noted above, several studies have been conducted over the years to assess nonresponse bias in NHSBH. For example, the 1990 NHSBH was one of six large federal or federally-sponsored surveys used to compile a dataset that was then matched to the 1990 decennial census for analyzing the correlates of nonresponse (Groves and Couper, 1998). In addition, data from surveys of NHSBH FIs were combined with those from these other surveys to examine the effects of FI characteristics on nonresponse. One of the main findings was that households with lower socioeconomic status were no less likely to cooperate than those with higher socioeconomic status. Instead, households in high-cost housing tended to refuse survey requests, which was partially accounted for by residence in high-density urban areas. There was also some evidence that FIs with higher levels of confidence in their ability to gain participation achieved higher cooperation rates.

In follow-up to this research, a special study was undertaken on a subset of nonrespondents to the 1990 NHSBH to assess the impact of the nonresponse (Caspar, 1992). The aim was to understand the reasons people chose not to participate, or were otherwise missed in the survey, and to use this information in assessing the extent of the bias, if any, that nonresponse introduced into the 1990 NHSBH estimates. The study was conducted in the Washington, DC, area, a region with a traditionally high nonresponse rate. The follow-up survey design included a $10 incentive and a shortened version of the instrument. The response rate for the follow-up survey was 38 percent. Follow-up respondents appeared to have similar demographic characteristics to the original NHSBH respondents. Estimates of drug use for follow-up respondents showed patterns that were similar to the regular NHSBH respondents. Another finding was that among those who participated in the follow-up survey, one-third were judged by FIs to have participated in the follow-up because they were unavailable for the main survey request. Finally, 27 percent were judged to have been swayed by the incentive, and another 13 percent were judged to have participated in the follow-up due to the shorter instrument. Overall, the results did not demonstrate definitively either the presence or absence of a serious nonresponse bias in the 1990 NHSBH. Based on these findings, no changes were made to NHSBH procedures.

CBHSQ produced a report to address the nonresponse patterns obtained in the 1999 NHSBH (Eyerman et al., 2002). In 1999, the NHSBH changed from PAPI to CAI instruments. The report was motivated by the relatively low response rates in the 1999 NHSBH. The analyses presented in that report were produced to help provide an explanation for the rates in the 1999 NHSBH and guidance for the management of future projects. The report describes NHSBH data collection patterns from 1994 through 1998. It also describes the data collection process in 1999 with a detailed discussion of design changes, summary figures and statistics, and a series of logistic regressions comparing 1998 with 1999 nonresponse patterns. The results of this study were consistent with conventional wisdom within the professional survey research field and general findings in survey research literature: the nonresponse can be attributed to a set of FI influences, respondent influences, design features, and environmental characteristics. The nonresponse followed the demographic patterns observed in other studies, with urban and high crime areas having the worst rates. Finally, efforts taken in 1999 to improve the response rates were effective. Unfortunately, the tight labor market combined with the large increase in sample size caused these efforts to lag behind the data collection calendar. The authors used the results to generate several suggestions for the management of future projects. No major changes were made to NHSBH as a result of this research, although it—along with other general survey research findings—has led to minor tweaks to respondent cooperation approaches.

In 2004, focus groups were conducted with NHSBH FIs on the topic of nonresponse among the 50 or older age group to gather information on the root causes for differential response by age. The study examined the components of nonresponse (refusals, noncontacts, and other incompletes) among the 50 or older age group. It also examined respondent, environmental, and FI characteristics in order to identify the correlates of nonresponse among the 50 or older group, including relationships that are unique to this group. Finally, drawing on the FI focus group sessions, the study considered the root causes of differential nonresponse by age. The results indicated that the high rate of nonresponse among the 50 or older age group was primarily due to a high rate of refusals, especially among sample members aged 50 to 69, and a high rate of physical and mental incapability among those 70 or older. It appeared that the higher rate of refusals among the 50 or older age group may, in part, have been due to fears and misperceptions about the survey and FIs’ intentions. The authors suggested that increased public awareness about the study might allay these fears (Murphy et al., 2004).

In 2005, Murphy et al. sought a better understanding of nonresponse among the population 50 or older in order to tailor methods to improve response rates and reduce the threat of nonresponse error (Attachment X, Nonresponse among Sample Members Aged 50 and Older Report). Nonresponse to the NHSBH has historically been higher among the 50 or older age group than among younger age groups. Focus groups were again conducted, this time with potential NHSBH respondents, to examine the issue of nonresponse among persons 50 or older. Participants in these groups recommended that the NHSBH contact materials focus more on establishing the legitimacy of the sponsoring and research organizations, clearly conveying the survey objectives, describing the selection process, and emphasizing the importance of the selected individual’s participation.

Another examination of nonresponse was done in 2005. The primary goal was to develop a methodology to reduce item nonresponse to critical items in the ACASI portion of the NHSBH questionnaire (Caspar et al., 2005). Respondents providing “Don’t know” or “Refused” responses to items designated as essential to the study's objectives received tailored follow-up questions designed to simulate FI probes. Logistic regression was used to determine what respondent characteristics tended to be associated with triggering follow-up questions. The analyses showed that item nonresponse to the critical items is quite low, so the authors caution the reader to interpret the data with care. However, the findings suggest the follow-up methodology is a useful strategy for reducing item nonresponse, particularly when the nonresponse is due to “Don’t know” responses. In response, follow-up questions were added to the survey and asked when respondents indicated they did not know the answer to a question or refused to answer a question. These follow-up items encouraged respondents to provide their best guess or presented an assurance of data confidentiality in order to encourage response.

Biemer and Link (2007) conducted additional nonresponse research to provide a general method for nonresponse adjustment that relaxed the ignorable nonresponse assumption. Their method, which extended the ideas of Drew and Fuller (1980), used level-of-effort (LOE) indicators based on call attempts to model the response propensity. In most surveys, call history data are available for all sample members, including nonrespondents. Because the LOE required to interview a sample member is likely to be highly correlated with response propensity, this method is ideally suited to modeling nonignorable nonresponse. The approach was first studied in a telephone survey setting and then applied to data from the 2006 NHSBH, where LOE was measured by contact attempts (or callbacks) made by FIs.
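To make the LOE idea concrete, the following Python sketch fits a simplified Drew and Fuller-style geometric callback model within a single adjustment class. It assumes a constant per-attempt response probability, which is a simplification and not the Biemer and Link (2007) model itself; the `CallHistory` type, its fields, and the call-history values are hypothetical, and the callback approach was not adopted in NHSBH weighting. The point it illustrates is that nonrespondents still contribute information to the propensity estimate through their unsuccessful attempts.

```python
from dataclasses import dataclass


@dataclass
class CallHistory:
    attempts: int    # contact attempts made on the case (hypothetical field)
    responded: bool  # True if the case was completed on its final attempt


def per_attempt_propensity(cases: list[CallHistory]) -> float:
    """Maximum likelihood estimate of a constant per-attempt response
    probability p within one adjustment class (simplified geometric model).

    A respondent completed on attempt k contributes (1 - p)**(k - 1) * p to
    the likelihood; a nonrespondent with m unsuccessful attempts contributes
    (1 - p)**m. Maximizing gives p = respondents / total attempts.
    """
    respondents = sum(1 for c in cases if c.responded)
    total_attempts = sum(c.attempts for c in cases)
    if total_attempts == 0:
        raise ValueError("no call attempts recorded")
    return respondents / total_attempts


def cumulative_propensity(p: float, max_attempts: int) -> float:
    """Probability of responding within max_attempts under the geometric model."""
    return 1.0 - (1.0 - p) ** max_attempts


# Hypothetical class: three respondents (1, 2, 3 attempts), one nonrespondent (5 attempts).
cases = [
    CallHistory(1, True),
    CallHistory(2, True),
    CallHistory(3, True),
    CallHistory(5, False),
]
p = per_attempt_propensity(cases)               # 3 respondents / 11 total attempts
phi = cumulative_propensity(p, max_attempts=5)  # modeled response probability
adjustment_factor = 1.0 / phi                   # inverse-propensity factor for respondents
print(f"p = {p:.3f}, P(respond within 5) = {phi:.3f}, factor = {adjustment_factor:.3f}")
```

In this simplified framing, a class whose cases needed many attempts yields a lower per-attempt propensity and therefore a larger adjustment factor for its respondents. The sketch also makes the data-quality concern concrete: if FIs underreport attempts, the denominator shrinks and the estimated propensity is biased upward.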

The investigation of the callback modeling approach confirmed what was known from other studies of nonresponse adjustment approaches (i.e., there is no uniformly best approach for reducing the effects of nonresponse on survey estimates). Each model under consideration performed best at eliminating nonresponse bias in some situations and under some measures, but none was best in all. Furthermore, possible errors in the callback data reported by FIs, such as underreporting of callback attempts, raise concerns about the accuracy of the bias estimates. Unfortunately, it is very difficult to apply uniform callback reporting procedures among the large NHSBH interviewing staff, which is spread across the country. An updated study of the callback modeling approach was completed in 2013 and reached similar conclusions about errors in the callback data reported by FIs (Biemer, Chen, and Wang, 2013). For these reasons, the callback modeling approach was not implemented in the NHSBH nonresponse weighting adjustment process (Biemer and Link, 2007; Biemer, Chen, and Wang, 2013).

Methods to assess nonresponse bias vary, and each has its limitations (Groves, 2006). They include follow-up studies, comparisons with other surveys, comparisons of alternative post-survey adjustments to examine imbalances in the data, and analysis of trend data that may suggest a change in the characteristics of respondents. When NHSBH estimates were compared with those from other surveys such as Monitoring the Future (MTF), comparable trends were found even though the estimates themselves differ in magnitude (mostly due to differences in survey designs). For example, trends in NHSBH and MTF cigarette use between 2002 and 2012 show a consistent pattern:

https://www.samhsa.gov/data/sites/default/files/NSDUHresults2012/NSDUHresults2012.htm#fig8.2

Using a different approach, an NHSBH report suggests that alternative weighting methods based on variables correlated with nonresponse performed no better, or only slightly better, than the current weighting procedure:

https://www.samhsa.gov/data/sites/default/files/NSDUHCallbackModelReport2013/NSDUHCallbackModelReport2013.pdf

In April 2015, a study was conducted on the potential for declines in the screening response rate to introduce nonresponse bias in trend estimates. The primary purpose of these analyses was to examine whether the steep declines in the screening response rate observed in 2013 and the first three quarters of 2014 had any impact on nonresponse bias. The common approach across these analyses was to examine changes over time from 2007 through 2014, rather than to estimate nonresponse bias at only one point in time.

The first analysis evaluated whether there were any apparent changes in the reliance on variables in the screening nonresponse weighting adjustment models over time.

The second analysis used household-level demographic estimates from the Current Population Survey (CPS) as a “gold standard” basis of comparison for similar estimates constructed from the NHSBH screening. CPS quarterly estimates related to household size, age, gender, race, Hispanic origin, and active military duty status were compared with NHSBH selection-weighted estimates (i.e., NHSBH base weights that do not adjust for nonresponse and are not adjusted to population control totals).
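The benchmark comparison can be sketched as follows. This Python code computes a selection-weighted proportion (base weights only, with no nonresponse or calibration adjustment) for each quarter and subtracts an external benchmark value. The function names, the one-person-household indicator, and all numbers are hypothetical placeholders, not actual NHSBH or CPS figures. In this framing, a gap that drifts systematically as screening response rates fall would be one signal of a change in who responds.

```python
def weighted_share(weights: list[float], flags: list[bool]) -> float:
    """Weighted proportion of units whose flag is True."""
    if len(weights) != len(flags):
        raise ValueError("weights and flags must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w for w, f in zip(weights, flags) if f) / total


def benchmark_gaps(
    quarters: dict[str, tuple[list[float], list[bool], float]],
) -> dict[str, float]:
    """Selection-weighted survey estimate minus the benchmark, per quarter."""
    return {
        quarter: weighted_share(weights, flags) - benchmark
        for quarter, (weights, flags, benchmark) in quarters.items()
    }


# Hypothetical inputs: base weights for screened households, a flag for
# one-person households, and a made-up benchmark proportion per quarter.
quarters = {
    "2014Q1": ([100.0, 200.0, 300.0, 400.0], [True, False, True, False], 0.28),
    "2014Q2": ([150.0, 250.0, 350.0, 250.0], [False, True, False, True], 0.28),
}
gaps = benchmark_gaps(quarters)
for quarter, gap in gaps.items():
    print(quarter, round(gap, 3))
```

Using base weights rather than final weights is the design choice that matters here: final weights are calibrated to population control totals, which would force many demographic comparisons to agree with the benchmark by construction.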

The third analysis used data from the National Health Interview Survey (NHIS) to compare estimates of cigarette smoking and alcohol consumption over time with estimates from NHSBH. Overall, the results of these three analyses provided little evidence of any systematic change in trend estimates during a period of relatively steep declines in screening response rates. In January 2018, the NHSBH benchmark comparisons with CPS and NHIS were reproduced through the 2016 survey year, and the results confirmed the earlier findings.

In accordance with Office of Management and Budget (OMB) standards, SAMHSA conducted a new nonresponse bias study in 2022 using 2021 NHSBH data. The goals of this nonresponse bias analysis were (1) to identify potential sources of bias in the survey estimates due to nonresponse and (2) to determine the degree to which survey weight adjustments alleviate any bias that is found. The final report addressing both components of the 2022 nonresponse bias investigation was peer-reviewed by two external experts on nonresponse bias.

The first analysis examined potential nonresponse bias due to impeded in-person data collection by evaluating (1) the impact of omitting in-person data collection and (2) the impact of having reduced in-person data collection in some periods. For the former, population-weighted estimates based on the web data were compared with population-weighted estimates based on the combined web and in-person data. This comparison provided estimates of the nonignorable nonresponse bias that would result if no in-person data collection were used, that is, nonresponse bias that cannot be corrected through weighting. For the latter, the estimated nonresponse bias described above was compared between the first half of 2021, when in-person data collection was substantially limited, and the second half of 2021, when there were fewer restrictions on in-person data collection. This comparison provided estimates of nonignorable nonresponse bias due to limited in-person data collection. The analysis produced three findings on mode-related nonresponse bias, with implications for whether and when to pool data across years, whether and when to compare data across years, and how to weight the multimode design data. First, if in-person data collection is not possible, NHSBH estimates will remain affected even after weighting adjustments. The magnitude of the impact varies across estimates, and cigarette use estimates are of particular concern. Second, the average estimated bias is almost three times as large for youth aged 12 to 17 as for adults aged 18 and older. This larger average likely reflects the outcomes evaluated: of the outcomes used in the evaluation, the only mental health outcome reported for this age group had the highest estimated bias. Third, the estimated bias from omitting the in-person data is remarkably similar in the first and second halves of the year, at least for the variation in in-person data collection observed during 2021.
This finding indicates that although in-person data collection varied over 2021 as a function of COVID-19 conditions, the weighted estimates remained virtually unaffected. In addition, and perhaps more importantly, it implies that going forward, fluctuations in in-person data collection are unlikely to affect key survey estimates meaningfully.
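The mode-omission comparison can be illustrated with a short Python sketch. It assumes each web case carries two hypothetical weights, one calibrated on the web-only file and one calibrated on the combined file, and it treats the combined estimate as the reference, so that the web-only minus combined difference serves as the estimated bias from omitting in-person data. The `Case` type, weights, and outcomes are invented for illustration; the actual analysis averaged over many outcomes and used the full NHSBH weighting process.

```python
from dataclasses import dataclass


@dataclass
class Case:
    mode: str                # "web" or "in_person"
    outcome: bool            # hypothetical indicator, e.g., past-month cigarette use
    combined_weight: float   # weight calibrated on the combined web + in-person file
    web_weight: float = 0.0  # weight calibrated on the web-only file (unused for in-person cases)


def weighted_prevalence(pairs: list[tuple[float, bool]]) -> float:
    """Weighted proportion of (weight, outcome) pairs with outcome True."""
    total = sum(w for w, _ in pairs)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w for w, y in pairs if y) / total


def omission_bias(cases: list[Case]) -> tuple[float, float]:
    """Return (bias, relative bias) of the web-only estimate, using the
    combined web + in-person estimate as the reference value."""
    web = weighted_prevalence([(c.web_weight, c.outcome) for c in cases if c.mode == "web"])
    combined = weighted_prevalence([(c.combined_weight, c.outcome) for c in cases])
    bias = web - combined
    relative = bias / combined if combined else float("nan")
    return bias, relative


# Hypothetical half-year files used to check whether the bias is stable over time.
halves = {
    "first half": [
        Case("web", True, 1.0, 1.5),
        Case("web", False, 1.0, 1.5),
        Case("web", False, 1.0, 1.0),
        Case("in_person", True, 2.0),
    ],
    "second half": [
        Case("web", True, 1.0, 1.0),
        Case("web", False, 1.0, 2.0),
        Case("web", False, 1.0, 1.0),
        Case("in_person", True, 2.0),
    ],
}
for half, cases in halves.items():
    bias, rel = omission_bias(cases)
    print(f"{half}: bias = {bias:+.3f}, relative bias = {rel:+.1%}")
```

Comparing the per-half results mirrors the second part of the analysis: similar values across halves, despite different amounts of in-person collection, would suggest that the bias is not sensitive to that variation.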