
0704-0614_WGRC_SSB_9.25.24.docx

Workplace and Gender Relations Survey – Civilian

OMB: 0704-0614

SUPPORTING STATEMENT – PART B

B. COLLECTIONS OF INFORMATION EMPLOYING STATISTICAL METHODS

1. Description of the Activity

Describe the potential respondent universe and any sampling or other method used to select respondents. Data on the number of entities covered in the collection should be provided in tabular form for the universe as a whole and for each of the strata in the proposed sample. Indicate the expected response rates for the collection as a whole, as well as the actual response rates achieved during the last collection, if previously conducted.

The population of interest for the Department of Defense Civilian Employee Workplace and Gender Relations Survey (WGRC) consists of appropriated fund (APF) and nonappropriated fund (NAF) civilian employees who, at the time of sampling, are over 18 years of age, in a pay status, U.S. citizens, and not political appointees. To remain eligible for the survey, sampled employees must also indicate that they are Department of Defense (DoD) civilian employees at the time of the survey. Estimates will be produced for reporting categories using 95% confidence intervals, with the goal of achieving a precision of 5% or less. The Office of People Analytics (OPA) selects a single-stage, non-proportional stratified random sample to ensure a statistically adequate expected number of responses for the reporting categories (i.e., domains). For WGRC, OPA uses agency, pay plan/grade, gender, and age to define the initial strata for APF civilian employees. No stratification is needed for NAF civilian employees because they are surveyed as a census.

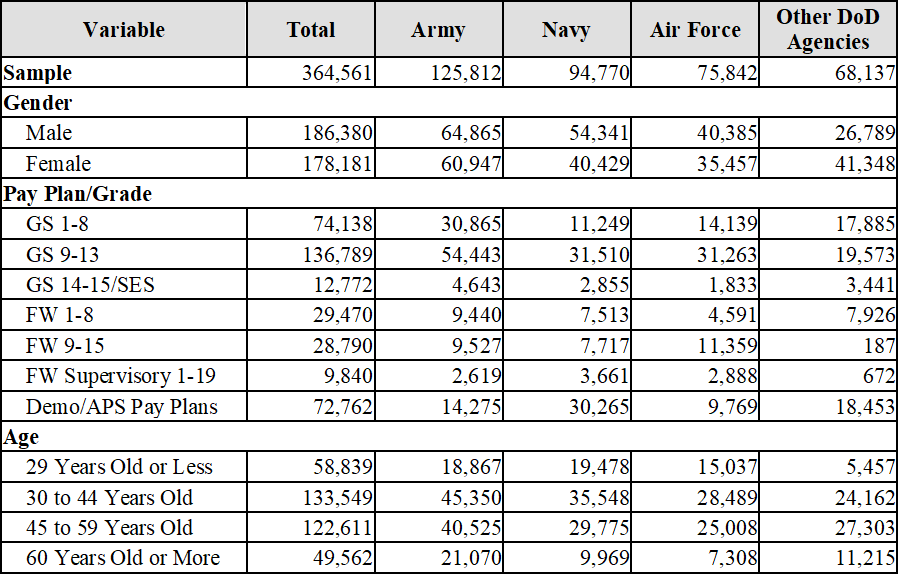

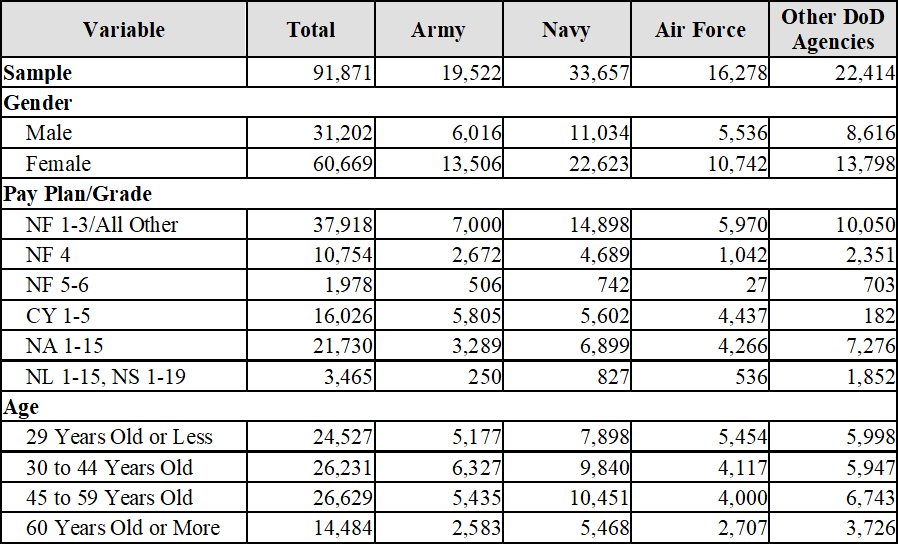

Anticipated eligibility for APF civilian employees on the WGRC will be based on the 2021 WGRC APF sample sizes provided in Table 1. The total APF sample will be approximately 364,000 employees, which will provide a sufficient sample even in the smaller domains (e.g., APF Air Force women). Because the NAF population is small, OPA will continue to conduct a census of NAF employees; anticipated eligibility for NAF civilian employees will be based on the 2021 WGRC NAF sample sizes provided in Table 2. The expected weighted response rate for this survey is about 20%, the same as the weighted response rate achieved in 2021 and consistent with other federal surveys that include DoD civilian employees (e.g., the Federal Employee Viewpoint Survey).
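As a rough planning sketch, the expected number of completed surveys in a domain is the sample size times the eligibility rate times the response rate, and the sample needed for a target number of completes inverts that product. The function names, the 90% eligibility rate, and the 400-respondent target below are hypothetical; only the 20% response rate and the 364,000 sample size come from the text. Applying a weighted response rate to raw counts is an approximation, and the actual allocation is done with the OPA Sample Planning Tool described in Section 2a.

```python
import math


def expected_completes(sample_size: int, eligibility_rate: float, response_rate: float) -> float:
    """Expected eligible completes for one domain (rates are proportions in [0, 1])."""
    return sample_size * eligibility_rate * response_rate


def required_sample(target_completes: int, eligibility_rate: float, response_rate: float) -> int:
    """Smallest sample size whose expected yield reaches target_completes."""
    return math.ceil(target_completes / (eligibility_rate * response_rate))


if __name__ == "__main__":
    # Hypothetical inputs: eligibility fixed at 1.0 here, i.e., eligibility losses ignored.
    print(expected_completes(364_000, 1.0, 0.20))
    # Hypothetical domain target of 400 completes with 90% eligibility.
    print(required_sample(400, 0.90, 0.20))
```

With these inputs the script prints 72800.0 and 2223, showing how quickly small domains require large samples when only about one in five sampled employees responds.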

Table 1. 2021 APF Sample Size by Key Estimation Domains

Table 2. 2021 NAF Sample Size by Key Estimation Domains

2. Procedures for the Collection of Information

Describe any of the following if they are used in the collection of information:

a. Statistical methodologies for stratification and sample selection;

OPA will use the OPA Sample Planning Tool, Version 2.1 (Dever and Mason, 2003), to allocate the sample across strata. The tool is based on the allocation method originally developed by J. R. Chromy (1987) and is described in Mason, Wheeless, George, Dever, Riemer, and Elig (1995). We use a single-stage, non-proportional stratified random sample to ensure a statistically adequate expected number of responses for the reporting domains. For WGRC, OPA uses agency, pay plan/grade, gender, and age to define the initial strata for APF civilian employees. Once OPA determines the stratum-level sample sizes, a random number is assigned to every member of the population, and the population is sorted by stratum and random number before sampling, which results in a randomly ordered population within each stratum. We then select the allocated number of APF civilian employees from each stratum. For NAF civilian employees, a census is conducted.
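The within-stratum selection step can be sketched as follows: each population member receives a uniform random number, records are sorted by stratum and then by that number, and the first n_h records in each stratum are taken, which is equivalent to a simple random sample without replacement within each stratum. This is an illustrative sketch, not OPA's production code; the record layout, the `stratum_of` function, and the allocation dictionary are assumptions, and the stratum sample sizes (from the Chromy allocation) are taken as given.

```python
import random
from collections import defaultdict


def select_stratified_sample(population, stratum_of, allocation, seed=None):
    """Select a stratified simple random sample without replacement.

    population: list of records (hypothetical layout, e.g., dicts)
    stratum_of: function mapping a record to its stratum key
    allocation: dict of stratum key -> sample size n_h
    """
    rng = random.Random(seed)
    # Attach a uniform random number to every population member.
    keyed = [(stratum_of(rec), rng.random(), rec) for rec in population]
    # Sort by stratum, then by random number: a random order within each stratum.
    keyed.sort(key=lambda t: (t[0], t[1]))
    taken = defaultdict(int)
    sample = []
    for stratum, _, rec in keyed:
        # Take the first n_h records encountered in each stratum.
        if taken[stratum] < allocation.get(stratum, 0):
            sample.append(rec)
            taken[stratum] += 1
    return sample


if __name__ == "__main__":
    pop = [{"id": i, "stratum": "A" if i < 60 else "B"} for i in range(100)]
    chosen = select_stratified_sample(pop, lambda r: r["stratum"], {"A": 10, "B": 5}, seed=1)
    print(len(chosen))
```

Under this design each selected member's selection probability is n_h / N_h, which is the quantity whose reciprocal becomes the base weight in Section 2b.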

b. Estimation procedures;

OPA weights the eligible respondents to make inferences about the entire DoD civilian employee population. The weighting methodology follows standard survey weighting steps. First, each sampled member is assigned a base weight equal to the reciprocal of their selection probability. Second, OPA adjusts the base weights for eligibility and nonresponse, using 20 to 30 administrative variables in an XGBoost implementation of a Generalized Boosted Model (GBM) to predict survey eligibility and completion. OPA's accurate and detailed administrative data on both survey respondents and nonrespondents provide confidence in our survey estimates. Finally, the adjusted weights are post-stratified to known population totals to reduce bias in the estimates.
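The three weighting steps can be sketched as below. This is a simplified illustration under stated assumptions: the response propensities are supplied directly (in practice they would come from a fitted model such as the XGBoost-based GBM described above), eligibility and completion are collapsed into a single propensity, and post-stratification is a simple ratio adjustment within cells. Field names such as `propensity` and `cell` are hypothetical.

```python
from collections import defaultdict


def compute_weights(respondents, stratum_pop, stratum_sample, poststrat_totals):
    """Return final weights for respondents.

    respondents: list of dicts with hypothetical keys 'stratum', 'propensity', 'cell'
    stratum_pop / stratum_sample: N_h and n_h for each sampling stratum
    poststrat_totals: known population count for each post-stratification cell
    """
    # Step 1: base weight = 1 / selection probability = N_h / n_h.
    weights = [stratum_pop[r["stratum"]] / stratum_sample[r["stratum"]] for r in respondents]

    # Step 2: divide by the predicted probability of being an eligible respondent.
    weights = [w / r["propensity"] for w, r in zip(weights, respondents)]

    # Step 3: ratio-adjust within each cell so the weights sum to the known total.
    cell_sums = defaultdict(float)
    for w, r in zip(weights, respondents):
        cell_sums[r["cell"]] += w
    return [w * poststrat_totals[r["cell"]] / cell_sums[r["cell"]]
            for w, r in zip(weights, respondents)]
```

Dividing by the propensity gives more weight to respondents who resemble nonrespondents, and the final ratio step forces each cell's weights to match the administrative population count.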

c. Degree of accuracy needed for the purpose discussed in the justification;

OPA creates variance strata so precision measures can be associated with each estimate. We produce precision measures for reporting categories using 95% confidence intervals with the goal of achieving a precision of 5% or less.
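If the 5% goal is read as a 95% confidence-interval half-width of 5 percentage points for a proportion, a rough check can use Kish's effective sample size to account for unequal weights: n_eff = (Σw)² / Σw², and the margin is 1.96 · sqrt(p(1 − p) / n_eff). This sketch ignores stratification and the variance strata described above, which imply a fuller design-based variance estimator, so treat it as an approximation only; the function name is hypothetical.

```python
import math


def weighted_proportion_moe(values, weights, z=1.96):
    """Return (p_hat, margin of error) for a weighted proportion.

    values: 0/1 indicators; weights: final survey weights.
    Uses Kish's effective sample size as a rough design-effect adjustment.
    """
    total_w = sum(weights)
    p_hat = sum(v * w for v, w in zip(values, weights)) / total_w
    # Kish effective sample size: n / deff, where deff = n * sum(w^2) / (sum w)^2.
    n_eff = total_w ** 2 / sum(w * w for w in weights)
    moe = z * math.sqrt(p_hat * (1 - p_hat) / n_eff)
    return p_hat, moe
```

With 400 equally weighted respondents and p̂ = 0.5, the margin is 1.96 × 0.025 = 0.049, just inside a 5-point goal; unequal weights lower n_eff and widen the interval.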

d. Unusual problems requiring specialized sampling procedures; and

None.

e. Use of periodic or cyclical data collections to reduce respondent burden.

To meet congressional requirements, OPA conducts the WGRC biennially. To reduce burden, we continue to refine survey questions, delete unnecessary questions, and examine the sample size needed to achieve the desired precision of estimates.

3. Maximization of Response Rates, Non-response, and Reliability

Discuss methods used to maximize response rates and to deal with instances of non-response. Describe any techniques used to ensure the accuracy and reliability of responses is adequate for intended purposes. Additionally, if the collection is based on sampling, ensure that the data can be generalized to the universe under study. If not, provide special justification.

To maximize response rates, OPA offers the survey on the web and sends multiple reminder emails to eligible DoD civilian employees during the survey field period. For the hard-to-reach NAF population, OPA also mails a letter announcing the survey to each employee's address of record. Email communications will be sent from senior DoD leadership and will include text highlighting the importance of the survey. To reduce respondent burden, the web survey uses "smart skip" technology so that respondents answer only the questions that apply to them. Given the sampling procedures employed, OPA expects to receive enough responses in all important reporting categories to produce estimates that meet the confidence and precision goals.
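"Smart skip" routing can be illustrated with a minimal sketch in which each question carries an optional display condition evaluated against earlier answers. The question IDs and conditions here are hypothetical and do not reflect the WGRC instrument.

```python
from typing import Callable, Dict, List, Optional, Tuple

Answers = Dict[str, str]
Question = Tuple[str, Optional[Callable[[Answers], bool]]]


def questions_to_show(questions: List[Question], answers: Answers) -> List[str]:
    """Return IDs of questions whose display condition is met (None = always shown)."""
    return [qid for qid, condition in questions if condition is None or condition(answers)]


if __name__ == "__main__":
    instrument: List[Question] = [
        ("Q1", None),                            # asked of everyone
        ("Q2", lambda a: a.get("Q1") == "Yes"),  # follow-up shown only after "Yes" to Q1
        ("Q3", None),
    ]
    print(questions_to_show(instrument, {"Q1": "No"}))  # ['Q1', 'Q3']
```

In a live web survey the conditions would be evaluated as each page is submitted, so respondents never see follow-ups that do not apply to them.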

OPA addresses nonresponse through the weighting adjustments described in Section 2b. To ensure the accuracy and reliability of responses, OPA conducts a nonresponse bias (NRB) analysis every third survey cycle. Historically, OPA has found little evidence of significant NRB in these studies; however, OPA statisticians still consider the risk of NRB high and regard it as likely the largest source of error in OPA surveys. OPA uses probability sampling and appropriate weighting to ensure the survey data can be generalized to the universe under study.
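One common form of nonresponse bias check, sketched below under assumptions, compares respondents with the full sample on an administrative variable known for everyone, such as a hypothetical `supervisor` indicator. The difference between the base-weighted respondent mean and the base-weighted full-sample mean estimates the bias that nonresponse would introduce for that variable before adjustment. This is an illustration, not necessarily the design of OPA's NRB studies.

```python
def nonresponse_bias(sample, var, weight_key="base_weight"):
    """Compare respondents with the full sample on a frame variable.

    sample: list of dicts holding `var`, `weight_key`, and a boolean 'responded'.
    Returns (respondent_mean, full_sample_mean, estimated_bias).
    """
    def weighted_mean(rows):
        total = sum(r[weight_key] for r in rows)
        return sum(r[weight_key] * r[var] for r in rows) / total

    respondents = [r for r in sample if r["responded"]]
    full_mean = weighted_mean(sample)
    resp_mean = weighted_mean(respondents)
    return resp_mean, full_mean, resp_mean - full_mean
```

The same comparison can be repeated using respondents' final adjusted weights against the base-weighted full-sample mean to gauge how much of the gap the weighting removes.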

4. Tests of Procedures

Describe any tests of procedures or methods to be undertaken. Testing of potential respondents (9 or fewer) is encouraged as a means of refining proposed collections to reduce respondent burden, as well as to improve the collection instrument utility. These tests check for internal consistency and the effectiveness of previous similar collection activities.

Items on this survey are used across all DoD Workplace and Gender Relations Surveys. Although the items were initially developed for military populations, they were tested with DoD civilian employees within a large, diverse DoD agency to ensure proper translation to civilian employee populations. The metrics and associated questions allow trend comparisons with the 2016, 2018, and 2021 WGRC surveys. In a signed May 2015 memorandum, the Secretary of Defense approved the metrics to assess sexual harassment, gender discrimination, and sexual assault as the only such metrics to be used within DoD (Secretary of Defense, 2015). The current WGRC has 42 fewer items than the 2021 WGRC survey. Finally, most participants are expected to complete the current survey in 20 to 30 minutes because it uses extensive skip logic to show respondents only the questions directly applicable to them based on their answers to previous questions. Only 32 questions are shown to all respondents.

5. Statistical Consultation and Information Analysis

a. Provide names and telephone number of individual(s) consulted on statistical aspects of the design.

Dr. Matthew Scheidt, Team Lead, Statistical Methods Branch – OPA; [email protected]

Mr. Stephen Busselberg, Researcher – Fors Marsh; [email protected]

b. Provide name and organization of person(s) who will actually collect and analyze the collected information.

The data will be collected by Data Recognition Corporation (DRC), which is OPA’s operations contractor.

Ms. Valerie Waller, Senior Managing Director – DRC; [email protected]

The data will be analyzed by OPA analysts. Contact information is listed below.

Dr. Jessica Marcon Zabecki, Chief Military Research Psychologist, Health & Resilience (H&R) Research Division – OPA; [email protected]

Dr. Ashlea Klahr, Acting Director – OPA; [email protected]

Dr. Rachel Lipari, Chief Military Sociologist, H&R Research Division – OPA; [email protected]

Ms. Lisa Davis, Deputy Director, H&R Research Division – OPA; [email protected]

Olga Schecter, Project Director – OPA; [email protected]

Ms. Kimberly Hylton, Chief of Survey Operations and Methodology, H&R Research Division – OPA; [email protected]

Mr. Andrew Luskin, Operations Analyst – Fors Marsh; [email protected]

Mr. DaCota Hollar, Operations Analyst – Fors Marsh; [email protected]

Ms. Ariel Hill, Operations Analyst – Fors Marsh; [email protected]

Ms. Jess Tercha, Associate Director – Fors Marsh; [email protected]

Ms. Amanda Barry, Director, Military Workplace Climate Research – Fors Marsh; [email protected]

Ms. Margaret H. Coffey – Fors Marsh; [email protected]


| Author | Hill, Ariel E CTR DMDC |


| File Created | 2024-09-27 |
