MUREP Outcome Suite of Surveys (NASA MUREP Student Survey and NASA MUREP PI Survey) - short form

NASA MUREP Outcome Assessment (Suite of Surveys MT Generic Clearance) SHORT FORM (4-2-23).docx

Generic Clearance for the NASA Office of STEM Engagement Performance Measurement and Evaluation (Testing)


OMB: 2700-0159

REQUEST FOR APPROVAL under the Generic Clearance for NASA STEM Engagement Performance Measurement and Evaluation, OMB Control Number 2700-0159, expiration 09/30/2024

_____________________________________________________________________________________

TITLE OF INFORMATION COLLECTION:

NASA MUREP Outcome Suite of Surveys (NASA MUREP Student Survey and NASA MUREP PI Survey)

TYPE OF COLLECTION:

Attitude/Behavior Scale

Baseline Survey

Cognitive Interview Protocol

Consent Form

Focus Group Protocol

Follow-up Survey

Instructions

Satisfaction Survey

Usability Protocol

GENERAL OVERVIEW: NASA Science, Technology, Engineering, and Mathematics (STEM) Engagement comprises a broad and diverse set of programs, projects, activities, and products developed and implemented by HQ functional Offices, Mission Directorates, and Centers. These investments are designed to attract, engage, and educate students and to support educators and educational institutions. NASA’s Office of STEM Engagement (OSTEM) delivers participatory, experiential learning and STEM challenge activities that help young Americans and educators learn and succeed. NASA STEM Engagement seeks to:

Create unique opportunities for students and the public to contribute to NASA’s work in exploration and discovery.

Build a diverse future STEM workforce by engaging students in authentic learning experiences with NASA people, content, and facilities.

Strengthen public understanding by enabling powerful connections to NASA’s mission and work.

To achieve these goals, NASA makes vital investments toward building a diverse future STEM workforce across its portfolio of projects, including the Minority University Research and Education Project (MUREP). NASA STEM Engagement strives to increase K-12 involvement in NASA projects, enhance higher education, support underrepresented communities, strengthen online education, and boost NASA's contribution to informal education. The intended outcome is a generation prepared to code, calculate, design, and discover its way to a new era of American innovation.

The Minority University Research and Education Project (MUREP) is administered through NASA's Office of STEM Engagement. Through MUREP, NASA provides financial assistance via competitive awards to Minority Serving Institutions (MSIs), including Historically Black Colleges and Universities, Hispanic Serving Institutions, Asian American and Native American Pacific Islander Serving Institutions, Alaska Native and Native Hawaiian-Serving Institutions, American Indian Tribal Colleges and Universities, Native American-Serving Nontribal Institutions, and other MSIs, as required by the MSI-focused Executive Orders. These institutions recruit and retain underrepresented and underserved students, including women and girls and persons with disabilities, into STEM fields. MUREP investments enhance the research, academic, and technology capabilities of MSIs through multiyear cooperative agreements. Awards assist faculty and students in research and provide authentic STEM engagement related to NASA missions. Additionally, awards provide NASA-specific knowledge and skills to learners who have historically been underrepresented and underserved in STEM. MUREP investments assist NASA in meeting the goal of a diverse workforce through student participation in internships and fellowships at NASA centers and the Jet Propulsion Laboratory (JPL).

The overarching purpose of this outcome assessment is to help NASA understand the outcomes the MUREP project and its activities are achieving with particular interest in the following areas: student recruitment, strategic partnerships, student STEM persistence, student STEM identity, student sense of belonging, and student academic self-efficacy. This outcome assessment will provide tools and evidence that can be used to better understand what achievements are being realized by MUREP investments to help guide future investment decisions.

The two instruments (principal investigator survey and student survey) collect stakeholders’ perceptions that can support NASA’s continued improvement of the MUREP portfolio, and measure student outcomes of participation in NASA MUREP-funded programs, activities, and/or resources. Recruitment, STEM Identity, Sense of Belonging, Retention and Self-Efficacy are measures of interest.

INTRODUCTION AND PURPOSE: In FY22, a NASA MUREP Outcome Assessment Framework and Plan was developed to help NASA understand the outcomes the MUREP project and its activities are achieving, with particular interest in the following constructs: student recruitment and retention, and strategic partnerships. This outcome assessment will provide tools and evidence that can be used to better understand what achievements are being realized by MUREP investments to help guide future investment decisions. This evaluation plan addresses NASA STEM Engagement goals and objectives by examining how MUREP and its activities achieve outcomes important to NASA’s work. Further, this evaluation plan will develop outcome assessment tools that MUREP activities may use in the future to understand their progress toward achieving NASA OSTEM goals and objectives. Prior to a full-scale administration of the outcome assessment, a small pilot study was conducted in the first quarter of FY23 to validate the survey instruments included in the outcome assessment for information collection. The pilot test served to validate the two surveys and to assess their robustness through expert review, psychometric analyses, and cognitive interviews. To ensure that the surveys were both valid and robust, a four-step survey validation process was completed: (1) reviewing survey construction; (2) conducting a small pilot test; (3) assessing validity and robustness with psychometric analyses; and (4) conducting cognitive interviews.

This evaluation study will use multiple modes of data collection: 1) participant surveys or questionnaires, 2) focus groups or interviews, 3) document analysis (of annual evaluation plans), and 4) student college enrollment and degree data from the National Student Clearinghouse (NSC). Survey research relies on self-report to obtain information about variables such as people’s attitudes, opinions, behaviors, and demographic characteristics. Although surveys cannot establish causality, they can explore, describe, classify, and establish associations among variables in the population of interest. There are multiple types of survey studies; this evaluation will use a cross-sectional survey method. That is, survey data will be collected from selected individuals at a single point in time. Cross-sectional designs are effective for providing a snapshot of current behaviors, attitudes, and beliefs in a population. This design also has the advantage of providing data relatively quickly, but it is limited in its ability to capture trends or development over time (Gay et al., 2012).

Focus groups and/or interviews will allow stakeholders of MUREP activities to characterize the outcomes accomplished in terms of their own perceptions and experiences. Document analysis will help reveal data reported by MUREP activities. Data from the NSC on student enrollment in a college or university STEM major will allow for the analysis of STEM persistence.

Our interest is to measure students’ immediate outcomes of participating in a NASA MUREP engagement activity, to assess the psychometric properties of the new constructs developed to measure students’ STEM Identity, Sense of Belonging, and Self-Efficacy, and to collect stakeholders’ perceptions that can support NASA’s continued improvement of the MUREP portfolio. Thus, the purpose of testing is to develop valid instruments that reliably explain the ways in which higher education participants are affected by participation in these activities. Guided by current STEM education and measurement methodologies, this rigorous instrument development and testing procedure aims to provide information that becomes part of the iterative outcome assessment and feedback process for the portfolio of NASA MUREP engagement activities.
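The specific psychometric statistics are not named here. As one illustration, internal-consistency reliability for a multi-item construct such as Sense of Belonging is commonly summarized with Cronbach’s alpha. The sketch below is a minimal Python version, assuming each respondent’s answers are stored as a list of item scores; the function name `cronbach_alpha` and the response data are hypothetical, not NASA data or NASA’s analysis code.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])  # number of items in the construct
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Variance of each item (column) across respondents
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    # Variance of each respondent's summed score across the k items
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 6-point Likert responses: 4 respondents x 3 items
sample = [
    [5, 6, 5],
    [3, 3, 4],
    [4, 5, 4],
    [2, 2, 3],
]
print(round(cronbach_alpha(sample), 3))
```

Because the same variance definition is used for items and totals, population and sample variance yield the same alpha; higher values indicate that the items vary together more consistently.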

Hence, the goal of this study is to assess outcomes related to participation in MUREP activities or collaboration with the MUREP program. To assess these short-term outcomes, we will use an outcome (also called effectiveness) evaluation design. It is important to distinguish an outcome evaluation study from an impact evaluation study. The goals of an outcome evaluation study are to identify the results or effects of a program and to measure program beneficiaries’ changes in knowledge, attitude(s), and/or behavior(s) that result from participation in the program. Outcome evaluation measures program effects in the target population by assessing progress on the outcomes or outcome objectives that the program is intended to achieve. An impact evaluation, by contrast, seeks to attribute changes causally to the program, typically by comparing participants with a counterfactual or comparison group.

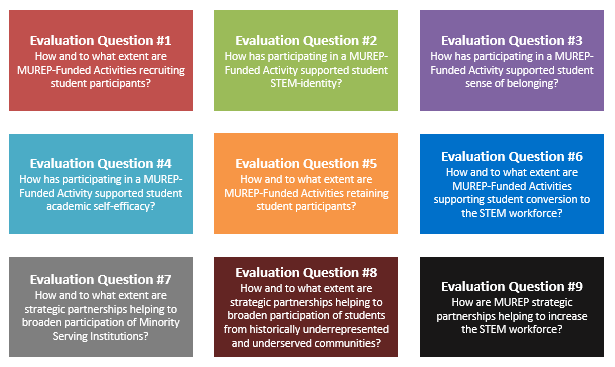

RESEARCH DESIGN OVERVIEW: NASA’s work in STEM Engagement is focused on serving students. MUREP provides support via competitive opportunities and awards to MSIs. MUREP investments enhance the research, academic, and technology capabilities of MSIs through multiyear cooperative agreements, bolstering their capacity to educate and prepare students for STEM careers. MUREP will continue to expand competitive opportunities to address specific gaps and needs while building capacity at institutions. The two instruments (principal investigator survey and student survey) collect stakeholders’ perceptions that can support NASA’s continued improvement of the MUREP portfolio and measure student outcomes of participation in NASA MUREP-funded programs, activities, and/or resources. Recruitment, STEM Identity, Sense of Belonging, Retention, and Self-Efficacy are measures of interest. This study is a program evaluation whose approach and design are guided by nine evaluation questions, presented in Figure 1 below.

Figure 1. Evaluation Questions

The two developed instruments will be placed into Survey Monkey online software, and a survey link will be distributed by email to ~500 NASA MUREP principal investigators and higher education student participants. Quantitative and qualitative methods will be used to analyze survey data. Quantitative data will be summarized using descriptive statistics such as the number of respondents, frequencies and proportions of responses, average responses when response categories are assigned to a Likert scale (e.g., 1 = “Strongly Disagree” to 6 = “Strongly Agree”), and standard deviations. Emergent coding will be used for the qualitative data to identify the most common themes in responses.
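The descriptive statistics listed above can be sketched for a single Likert item as follows. This assumes responses are coded 1 to 6 as in the example scale and that skipped items have already been removed; the function name `summarize` and the sample responses are hypothetical.

```python
from collections import Counter
from statistics import mean, stdev


def summarize(responses: list[int], scale_max: int = 6) -> dict:
    """Descriptive statistics for one Likert item coded 1..scale_max."""
    n = len(responses)
    counts = Counter(responses)
    categories = range(1, scale_max + 1)
    return {
        "n": n,
        # Frequency and proportion for every category, including unused ones
        "frequencies": {c: counts.get(c, 0) for c in categories},
        "proportions": {c: counts.get(c, 0) / n for c in categories},
        "mean": mean(responses),
        "sd": stdev(responses),  # sample standard deviation (n - 1 denominator)
    }


# Hypothetical responses to one item from eight students
item_responses = [6, 5, 5, 4, 6, 3, 5, 2]
summary = summarize(item_responses)
print(summary["n"], summary["mean"], round(summary["sd"], 3))
```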

The survey instruments to be used in this study have been created based on the research questions proposed. Survey items have either been created specifically for this evaluation or drawn from existing measures (e.g., the STEM-PIO-1). This evaluation effort proposes three indicators to measure student engagement and one indicator to measure student retention in the STEM pathway. The first measure of student engagement is the STEM Professional Identity Overlap (STEM-PIO-1), developed by McDonald et al. (2019), which measures students’ perception of the overlap between the image they have of themselves and the image they have of STEM professionals. A systematic review of the research literature by Kuchynka et al. (2020) indicates that long-term commitment to STEM among students from groups historically underrepresented and underserved in STEM disciplines is associated with the cultivation and incorporation of STEM into one’s self-concept. The STEM-PIO-1 is a single survey item that asks students to select the image that best depicts the current overlap between their image of themselves (“I”) and their image of a STEM professional.

The second measure of student engagement is adapted from the Departmental Sense of Belonging and Involvement (DeSBI) instrument developed by Knekta et al. (2020). Although many survey instruments measuring school belonging are available in the research literature, the DeSBI was selected because it specifically targets students’ sense of belonging to and involvement in university departments, a context similar to that of MUREP-funded activities. The original DeSBI will be modified to reflect the context of the current evaluation sites.

Because the DeSBI consists of 20 survey items, students may experience survey fatigue, which could lower response rates and data quality. To address this issue, the DeSBI will be shortened to a total of 9 items: the 3 items with the highest factor loadings on each of the latent constructs examined in the survey. Although this approach will lose some of the psychometric properties of the original survey, data collected from the modified DeSBI will help inform the development of a new survey that may be more appropriate for the context of the current evaluation efforts.
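The shortening rule (keep the 3 highest-loading items per latent construct) can be sketched as below. The construct names, item IDs, and loading values form a reduced, hypothetical item pool, not the published DeSBI loadings, and ranking by absolute loading is an assumption.

```python
def shorten_scale(
    loadings: dict[str, dict[str, float]], per_factor: int = 3
) -> dict[str, list[str]]:
    """Keep the `per_factor` items with the largest loadings on each construct."""
    short_form = {}
    for construct, items in loadings.items():
        # Rank items by absolute loading magnitude, strongest first
        ranked = sorted(items, key=lambda item: abs(items[item]), reverse=True)
        short_form[construct] = ranked[:per_factor]
    return short_form


# Hypothetical loadings for three constructs (not real DeSBI values)
loadings = {
    "construct_1": {"q1": 0.81, "q2": 0.64, "q3": 0.77, "q4": 0.72},
    "construct_2": {"q5": 0.58, "q6": 0.83, "q7": 0.69, "q8": 0.74, "q9": 0.61},
    "construct_3": {"q10": 0.70, "q11": 0.88, "q12": 0.66, "q13": 0.79},
}
short_form = shorten_scale(loadings)
for construct, kept in short_form.items():
    print(construct, kept)
```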

The third measure of student engagement is academic self-efficacy. Self-efficacy is defined as the self-appraisal of one’s ability to successfully execute a course of action to reach a desired goal (Bandura, 1977). This broad definition has been applied across various settings such as smoking cessation therapy (Gwaltney et al., 2009), work-related performance (Judge et al., 2007), exercise (Schutzer & Graves, 2004), and education (Honicke & Broadbent, 2016), to name a few. To distinguish it from general self-efficacy (Scholz et al., 2002), academic self-efficacy is defined as a student’s confidence in his or her ability to complete specific academic tasks. Several longitudinal research studies have revealed positive associations between academic self-efficacy and persistence in college (Gore, 2006) as well as course performance (Cassidy, 2012). The academic self-efficacy survey was modified to fit the contextual circumstances of NASA’s MUREP program and the Office of STEM Engagement goals and objectives.

TIMELINE: Testing of the two instruments (NASA MUREP Principal Investigator Survey and NASA MUREP Student Survey) will take place from April 2023 through March 2024 with principal investigators and higher education student participants from NASA MUREP engagements and activities, in coordination with project management.

SAMPLING STRATEGY: The universe of NASA MUREP Principal Investigators (PIs) and higher education student participants for testing is 500 or fewer. Items for the two instruments (NASA MUREP PI Survey and NASA MUREP Student Survey) will be placed into Survey Monkey online software, and a survey link will be distributed by email to ~500 NASA MUREP PIs and higher education student participants in NASA MUREP.

Table 1. Calculation chart to determine statistically relevant number of respondents

| Data Collection Source | (N) Population Estimate | (A) Sampling Error +/- 5% (.05) | (Z) Confidence Level 95%/Alpha 0.05 | (P) *Variability (based on consistency of intervention administration) 50% | Base Sample Size | Response Rate | (n) Number of Respondents |
|---|---|---|---|---|---|---|---|
| NASA MUREP Principal Investigators (PIs) | 75 | N/A | N/A | N/A | 75 | N/A | 75 |
| NASA MUREP Student Participants | 425 | N/A | N/A | N/A | 425 | N/A | 425 |
| TOTAL | | | | | | | 500 |
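Table 1 lists the sampling-error, confidence, and variability parameters as N/A and sets the number of respondents equal to the population estimate, so every PI and student participant is invited. For reference, the sketch below shows how the (N), (A), (Z), and (P) column parameters are conventionally combined, assuming Cochran’s formula with a finite population correction; this is an assumption about what the headings refer to, and the function name is hypothetical.

```python
import math


def cochran_sample_size(N: int, A: float = 0.05, Z: float = 1.96, P: float = 0.5) -> int:
    """Minimum respondents for a finite population of size N (Cochran's formula)."""
    n0 = (Z ** 2) * P * (1 - P) / (A ** 2)  # sample size for an infinite population
    n = n0 / (1 + (n0 - 1) / N)             # finite population correction
    return math.ceil(n)                     # round up to whole respondents


for source, N in [("PIs", 75), ("Student participants", 425)]:
    print(source, N, cochran_sample_size(N))
```

Under these assumptions the function returns 63 for N = 75 and 203 for N = 425; the table’s census approach invites the full population instead.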

BURDEN HOURS: Burden calculation is based on a respondent pool of individuals as follows:

| Data Collection Source | Number of Respondents | Frequency of Response | Total Minutes per Response | Total Response Burden in Hours |
|---|---|---|---|---|
| NASA MUREP Principal Investigators (PIs) | 75 | 1 | 15 | 18.75 |
| NASA MUREP Student Participants | 425 | 1 | 15 | 106.25 |
| TOTAL | | | | 125.00 |
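Each burden figure is respondents × frequency × minutes per response ÷ 60. A short check of the table’s arithmetic (the function name `burden_hours` is illustrative):

```python
def burden_hours(respondents: int, frequency: int, minutes_per_response: float) -> float:
    """Total response burden in hours for one data collection source."""
    return respondents * frequency * minutes_per_response / 60


# (respondents, frequency of response, minutes per response) from the table
rows = {
    "NASA MUREP Principal Investigators (PIs)": (75, 1, 15),
    "NASA MUREP Student Participants": (425, 1, 15),
}
hours = {source: burden_hours(*row) for source, row in rows.items()}
total = sum(hours.values())

for source, h in hours.items():
    print(f"{source}: {h:.2f}")
print(f"TOTAL: {total:.2f}")
```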

DATA CONFIDENTIALITY MEASURES: Any information collected under the purview of this clearance will be maintained in accordance with the Privacy Act of 1974, the e-Government Act of 2002, the Federal Records Act, and as applicable, the Freedom of Information Act in order to protect respondents’ privacy and the confidentiality of the data collected.

PERSONALLY IDENTIFIABLE INFORMATION:

Is personally identifiable information (PII) collected? Yes No

NOTE: First and last names are not collected, but demographic information is collected (e.g., gender, ethnicity, race, grade level).

If yes, will any information that is collected be included in records that are subject to the Privacy Act of 1974? Yes No

If yes, has an up-to-date System of Records Notice (SORN) been published? Yes No

The applicable System of Records Notice, published March 17, 2015, is NASA 10EDUA, NASA STEM Engagement Program Evaluation System - http://www.nasa.gov/privacy/nasa_sorn_10EDUA.html.

APPLICABLE RECORDS:

Applicable System of Records Notice (SORN): NASA 10EDUA, NASA STEM Engagement Program Evaluation System - http://www.nasa.gov/privacy/nasa_sorn_10EDUA.html

Completed surveys will be retained in accordance with NASA Records Retention Schedule 1, Item 68D. Records will be destroyed or deleted when ten years old or no longer needed, whichever is longer.

PARTICIPANT SELECTION APPROACH:

Does NASA STEM Engagement have a respondent sampling plan? Yes No

If yes, please define the universe of potential respondents. If a sampling plan exists, please describe it. The universe of NASA MUREP Principal Investigators (PIs) and higher education student participants for testing is 500 or fewer. Items for the two instruments (NASA MUREP PI Survey and NASA MUREP Student Survey) will be placed into Survey Monkey online software, and a survey link will be distributed by email to ~500 NASA MUREP PIs and higher education student participants in NASA MUREP.

If no, how will NASA STEM Engagement identify the potential group of respondents and how will they be selected? Not applicable.

INSTRUMENT ADMINISTRATION STRATEGY

Describe the type of Consent: Active Passive

How will the information be collected:

Web-based or other forms of Social Media

Telephone

In-person

Other

If multiple approaches are used for a single instrument, state the projected percent of responses per approach.

Will interviewers or facilitators be used? Yes No

DOCUMENTS/INSTRUMENTS ACCOMPANYING THIS REQUEST:

Consent form

Instrument (attitude & behavior scales, and surveys)

Protocol script (Specify type: Script)

Instructions NOTE: Instructions are included in the instrument

Other (Specify ________________)

GIFTS OR PAYMENT: Yes No If you answer yes to this question, please describe and provide a justification for amount.

ANNUAL FEDERAL COST: The estimated annual cost to the Federal government is $5,925. The cost is based on an annualized effort of 75 person-hours at the evaluator’s rate of $79/hour for administering the survey instrument, collecting and analyzing responses, and editing the survey instrument for ultimate approval through the methodological testing generic clearance with OMB Control Number 2700-0159, exp. 09/30/2024.
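The annual cost is the product of the stated effort and hourly rate; a one-line check using the figures given above (the variable names are illustrative):

```python
PERSON_HOURS = 75      # annualized evaluator effort stated above
HOURLY_RATE_USD = 79   # evaluator's hourly rate stated above

annual_cost = PERSON_HOURS * HOURLY_RATE_USD
print(f"${annual_cost:,}")  # prints $5,925
```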

CERTIFICATION STATEMENT:

I certify the following to be true:

The collection is voluntary.

The collection is low burden for respondents and low cost for the Federal Government.

The collection is non-controversial and does not raise issues of concern to other federal agencies.

The results will be made available to other federal agencies upon request, while maintaining confidentiality of the respondents.

The collection is targeted to the solicitation of information from respondents who have experience with the program or may have experience with the program in the future.

Name of Sponsor: Richard Gilmore

Title: Performance Assessment and Evaluation Program Manager, NASA

Office of STEM Engagement (OSTEM)

Email address or Phone number: [email protected]

Date:

References

Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191.

Cassidy, S. (2012). Exploring individual differences as determining factors in student academic achievement in higher education. Studies in Higher Education, 37(7), 793-810.

Gay, L. R., Mills, G. E., & Airasian, P. W. (2012). Educational research: Competencies for analysis and application (10th ed.). Pearson.

Gore, P. A., Jr. (2006). Academic self-efficacy as a predictor of college outcomes: Two incremental validity studies. Journal of Career Assessment, 14(1), 92-115.

Gwaltney, C. J., Metrik, J., Kahler, C. W., & Shiffman, S. (2009). Self-efficacy and smoking cessation: A meta-analysis. Psychology of Addictive Behaviors, 23(1), 56-66.

Honicke, T., & Broadbent, J. (2016). The influence of academic self-efficacy on academic performance: A systematic review. Educational Research Review, 17, 63-84.

Judge, T. A., Jackson, C. L., Shaw, J. C., Scott, B. A., & Rich, B. L. (2007). Self-efficacy and work-related performance: The integral role of individual differences. Journal of Applied Psychology, 92(1), 107-127.

Knekta, E., Chatzikyriakidou, K., & McCartney, M. (2020). Evaluation of a questionnaire measuring university students’ sense of belonging to and involvement in a biology department. CBE—Life Sciences Education, 19(3), ar27.

Kuchynka, S. L., Gates, A. E., & Rivera, L. M. (2020). Identity development during STEM integration for underrepresented minority students. Cambridge University Press.

McDonald, M. M., Zeigler-Hill, V., Vrabel, J. K., & Escobar, M. (2019). A single-item measure for assessing STEM identity. Frontiers in Education, 4, 78.

Scholz, U., Doña, B. G., Sud, S., & Schwarzer, R. (2002). Is general self-efficacy a universal construct? Psychometric findings from 25 countries. European Journal of Psychological Assessment, 18(3), 242.

Schutzer, K. A., & Graves, B. S. (2004). Barriers and motivations to exercise in older adults. Preventive Medicine, 39(5), 1056-1061.

NASA Office of STEM Engagement

| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Teel, Frances C. (HQ-JF000) |

| File Modified | 0000-00-00 |

| File Created | 2024-09-11 |
