Supporting Statement B- NHTS 2024_CLEAN_8.12.2024

2024 NextGen National Household Travel Survey (NHTS) Developing and Delivering Post-Pandemic National Traveler Behavior Core Data

OMB: 2125-0545

SUPPORTING STATEMENT

This is a request for Office of Management and Budget (OMB) approval of a revision to a currently approved information collection entitled "2024 Next Generation National Household Travel Survey" (NextGen NHTS).

Part B. Statistical Methods

Describe potential respondent universe and any sampling selection method to be used

The NHTS is a study conducted by the U.S. Department of Transportation (DOT) Federal Highway Administration (FHWA) to obtain data on key aspects of travel by the American public. The survey is focused on the household as the basic unit of observation.

The sample design for the 2024 NHTS uses address-based sampling (ABS), which draws from a frame that covers virtually all households. The design also places significant focus on the respondent experience to counter the overall decline in survey response rates. For further information about the survey design, contact Daniel Jenkins at FHWA, 202-366-1067, [email protected].

Respondent Universe

The population of inferential interest for the 2024 NextGen NHTS is defined as households living in the U.S., excluding group quarters.¹ The universe for sample selection is households within the 50 states and the District of Columbia.

The 2024 NextGen NHTS will comprise a sample of addresses that will be selected from the ABS frame maintained by Marketing Systems Group (MSG). MSG’s ABS frame originates from the U.S. Postal Service (USPS) Computerized Delivery Sequence file (CDS) and is updated monthly. The survey contractor team has decades-long experience in obtaining samples (both random digit dial and ABS) from MSG, and their evaluations have consistently found MSG’s sampling frames to be of very high quality.

MSG has made great strides in evaluating and enhancing the standard CDS-based list. For example, MSG has augmented simplified addresses (those with no specific street address) so that auxiliary variables can be matched to sampled addresses. In addition, the ABS frame can append characteristics such as Hispanic surname, which can be matched to about 90 percent of addresses. Other address characteristics (e.g., race/ethnicity, education, household income) may also be matched to some addresses. These items are subject to error (see Roth, Han & Montaquila, 2013²) but may still prove useful for data collection purposes. Another benefit of MSG addresses is that they are geocoded, so Decennial Census and American Community Survey characteristics at the block, block group, and tract level can be appended, which is very useful for data collection and/or weighting purposes.

The ABS frame is comprehensive, covering nearly 100 percent of the households in the United States. Variations in achieved coverage rates that have been described in the literature are due primarily to two factors: the treatment of households that receive mail only through P.O. box addresses (referred to as “only way to get mail,” or OWGM, P.O. box households) and the impact of geocoding errors. To ensure full coverage, OWGM P.O. box households will be included as a part of the sample frame. Additionally, since the NHTS is a national sample, geocoding errors (essentially, inaccurate placement of an address on a map) do not affect coverage.

Sample Selection

The 2024 NextGen NHTS will target the capture of travel behavior data from a sample of 7,500 households across the nation using the ABS frame discussed above. The sample will be stratified by Census Division and urban/rural classification (18 strata in total). The target number of responding households will initially be allocated among the strata in proportion to each stratum's share of occupied housing units, as counted in the 2020 Decennial Census.
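As an illustration of the proportional allocation, the following minimal Python sketch reproduces the completed-household columns of Table 2 from the occupied housing unit counts. The function name `allocate_proportionally` and the dictionary layout are hypothetical. Each stratum is rounded independently, so (as in Table 2) the cells may not add exactly to the 7,500 target.

```python
# Occupied housing units by (Census Division, urban/rural), as listed in Table 2 (2020 Census).
HOUSING_UNITS = {
    ("Middle Atlantic", "urban"): 15_148_182, ("Middle Atlantic", "rural"): 2_843_941,
    ("New England", "urban"): 5_233_371, ("New England", "rural"): 1_491_022,
    ("East South Central", "urban"): 5_102_039, ("East South Central", "rural"): 3_532_164,
    ("South Atlantic", "urban"): 22_945_567, ("South Atlantic", "rural"): 6_188_237,
    ("West South Central", "urban"): 12_978_221, ("West South Central", "rural"): 3_796_375,
    ("East North Central", "urban"): 15_903_089, ("East North Central", "rural"): 4_986_938,
    ("West North Central", "urban"): 6_495_301, ("West North Central", "rural"): 3_073_651,
    ("Mountain", "urban"): 8_686_243, ("Mountain", "rural"): 1_799_001,
    ("Pacific", "urban"): 18_200_305, ("Pacific", "rural"): 2_095_089,
}


def allocate_proportionally(target: int, sizes: dict) -> dict:
    """Allocate `target` completes to strata in proportion to stratum size.

    Each cell is rounded independently, so cells may not sum exactly to `target`.
    """
    total = sum(sizes.values())
    return {stratum: round(target * n / total) for stratum, n in sizes.items()}


alloc = allocate_proportionally(7_500, HOUSING_UNITS)
print(alloc[("Middle Atlantic", "urban")], alloc[("Middle Atlantic", "rural")])  # 809 152
```

A largest-remainder rule would force an exact total of 7,500, but simple rounding matches the published table.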

Once the target number of responding households has been allocated in this manner, the sample size will be inflated to account for expected losses due to ineligible addresses (an assumed rate of eight percent of addresses, based on other national ABS mail studies conducted by the survey contractor team), nonresponse to the recruitment effort (an assumed response rate of 26 percent of eligible addresses), and nonresponse to the diary survey (an assumed response rate of 60 percent of recruited households). An estimated 52,258 addresses will be sampled to yield 7,500 completed households.
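The inflation arithmetic can be checked with a short Python sketch. The helper `addresses_needed` is hypothetical, and the rates are the assumed national rates stated above. Dividing 7,500 by the expected yield per sampled address (0.92 × 0.26 × 0.60 = 0.14352) gives the 52,258 addresses in Table 1, and the implied 12,500 recruited households match Table 1's 5,000 households that are recruited but do not complete the diary.

```python
import math


def addresses_needed(target_completes: int, ineligible_rate: float,
                     recruit_rate: float, diary_rate: float) -> int:
    """Inflate the target number of completed households for expected losses."""
    # Expected completes per sampled address = eligible share x recruitment RR x diary RR.
    yield_per_address = (1 - ineligible_rate) * recruit_rate * diary_rate
    return math.ceil(target_completes / yield_per_address)


n = addresses_needed(7_500, ineligible_rate=0.08, recruit_rate=0.26, diary_rate=0.60)
recruited = n * (1 - 0.08) * 0.26          # expected recruited households
print(n)                                   # 52258
print(round(recruited) - 7_500)            # 5000 recruited but not completed
```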

An additional reserve sample of addresses will also be selected, to be fielded only if response rates fall below expectations. The rates given here are overall national rates; stratum-specific response rates will be assumed based on the relative rates observed in a prior NHTS (the 2022 NHTS, if available, or otherwise the 2017 NHTS). These differences (departures from proportional allocation of responding households and variations in response rates across strata) will result in a sample of addresses selected with variable sampling rates, which will be properly accounted for in the computation of the survey weights.

Within each of the 18 strata (9 Census Divisions by urban/rural classification), the ABS frame will be sorted in a prescribed manner prior to sample selection. The sort used by MSG is geographic, and addresses are selected systematically from the sorted list. As summarized in Table 1, the survey contractor's experience with similar past household travel surveys (HTS) suggests that a total of 52,258 addresses will need to be sampled to ensure that no fewer than 7,500 surveys are completed for the NHTS 2024. Table 2 presents the estimated sample sizes aggregated over the sub-strata described above.
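A minimal Python sketch of systematic selection from a sorted frame is shown below, assuming a fractional sampling interval and a single random start per stratum. MSG's actual sort keys and selection software are not described in this statement, so `systematic_sample` and the frame layout are hypothetical.

```python
import random


def systematic_sample(sorted_frame: list, n: int, rng: random.Random) -> list:
    """Systematic sample of n units from a frame that is already sorted (e.g., geographically).

    Uses a fractional sampling interval k = N / n and a random start in [0, k).
    """
    N = len(sorted_frame)
    if not 0 < n <= N:
        raise ValueError("n must be between 1 and the frame size")
    k = N / n
    start = rng.random() * k
    # Take every k-th unit after the random start; min() guards against float round-up.
    return [sorted_frame[min(int(start + i * k), N - 1)] for i in range(n)]


# Hypothetical stratum frame of 1,000 addresses, already in geographic order.
frame = [f"addr-{i:04d}" for i in range(1_000)]
sample = systematic_sample(frame, 50, random.Random(2024))
```

Because the frame is sorted geographically, selecting every k-th address spreads the sample across the stratum, which acts as implicit geographic stratification.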

Table 1. Universe and Sampling Assumptions

| Description | Households |
|---|---|
| Total U.S. | 140,498,736 |
| Sampled Addresses | 52,258 |
| Recruited but Not Completed | 5,000 |
| Completed NHTS 2024 Travel Diary surveys | 7,500 |

Table 2. Expected Sample Sizes for the National Sample by Primary Stratum

| Region | Division | Housing units: total | Housing units: urban | Housing units: rural | % urban | % rural | Sample allocation: overall | Sample allocation: urban | Sample allocation: rural | Completed households: overall | Completed households: urban | Completed households: rural |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Northeast | Middle Atlantic | 17,992,123 | 15,148,182 | 2,843,941 | 84.2% | 15.8% | 6,692 | 5,635 | 1,057 | 960 | 809 | 152 |
| | New England | 6,724,393 | 5,233,371 | 1,491,022 | 77.8% | 22.2% | 2,501 | 1,946 | 555 | 359 | 279 | 80 |
| South | East South Central | 8,634,203 | 5,102,039 | 3,532,164 | 59.1% | 40.9% | 3,211 | 1,898 | 1,313 | 461 | 272 | 189 |
| | South Atlantic | 29,133,804 | 22,945,567 | 6,188,237 | 78.8% | 21.2% | 10,836 | 8,539 | 2,297 | 1,555 | 1,225 | 330 |
| | West South Central | 16,774,596 | 12,978,221 | 3,796,375 | 77.4% | 22.6% | 6,239 | 4,829 | 1,410 | 895 | 693 | 203 |
| Midwest | East North Central | 20,890,027 | 15,903,089 | 4,986,938 | 76.1% | 23.9% | 7,770 | 5,913 | 1,857 | 1,115 | 849 | 266 |
| | West North Central | 9,568,952 | 6,495,301 | 3,073,651 | 67.9% | 32.1% | 3,559 | 2,417 | 1,142 | 511 | 347 | 164 |
| West | Mountain | 10,485,244 | 8,686,243 | 1,799,001 | 82.8% | 17.2% | 3,900 | 3,229 | 671 | 560 | 464 | 96 |
| | Pacific | 20,295,394 | 18,200,305 | 2,095,089 | 89.7% | 10.3% | 7,549 | 6,771 | 778 | 1,083 | 972 | 112 |
| Total | | 140,498,736 | 110,692,318 | 29,806,418 | 78.8% | 21.2% | 52,258 | 41,177 | 11,081 | 7,500 | 5,909 | 1,591 |

The ABS sample will be selected quarterly. The survey contractor team will select four samples, one each quarter, using the most current ABS frame as the sampling frame for each selection. This will allow the sample to reflect updates to the ABS frame after the original selection and will also allow adjustment to the sampling rates as needed (e.g., to account for response rates that differ from prior expectations).

One-fourth of the target number of sampled addresses in each sampling stratum will be selected prior to the start of fieldwork. If first-quarter results provide insufficient evidence that eligibility or response rates differ from expectations, the second sample will also be one-fourth of the target number of sampled addresses. If, on the other hand, the results show that response rates are higher or lower than expected, the size of the second sample will be adjusted downward or upward, respectively, to reflect the observed response rates. This process will be repeated for the third- and fourth-quarter draws.
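The statement does not give a formula for the quarterly adjustment. One simple rule, sketched below as an assumption, scales the next planned draw by the ratio of the planned to the observed yield (completes per sampled address). The name `next_quarter_size` is hypothetical, and in practice early-quarter yields are provisional because diary responses are still arriving.

```python
def next_quarter_size(planned_size: int, planned_yield: float, observed_yield: float) -> int:
    """Scale the next quarterly draw by planned/observed completes per sampled address.

    A higher-than-expected yield shrinks the draw; a lower one enlarges it.
    """
    if observed_yield <= 0:
        raise ValueError("observed yield must be positive")
    return round(planned_size * planned_yield / observed_yield)


# Planned yield from the stated assumptions: eligible x recruited x diary-complete.
PLANNED_YIELD = 0.92 * 0.26 * 0.60
QUARTER_SIZE = 13_065  # roughly one-fourth of 52,258 addresses

lower_yield_draw = next_quarter_size(QUARTER_SIZE, PLANNED_YIELD, observed_yield=0.12)
```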

When deciding whether to adjust the rates for the next quarter's sample, the survey contractor team will take into account that any adjustment results in over- or under-sampling the remainder of the year relative to earlier quarters. While the differences among the four quarterly samples will be accounted for in the weighting, the resulting variation in the weights (due to imbalances among the four quarterly samples) will be weighed in deciding how far to change the rates.
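One standard way to quantify the cost of weight variation is Kish's approximate design effect due to unequal weighting, deff = n Σw² / (Σw)², with effective sample size n / deff. The statement does not name this measure; the sketch below is an illustration, and the example weights are hypothetical.

```python
def kish_deff(weights: list[float]) -> float:
    """Kish's approximate design effect due to unequal weighting: n * sum(w^2) / (sum(w))^2."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2


def effective_sample_size(weights: list[float]) -> float:
    """Nominal sample size deflated by the unequal-weighting design effect."""
    return len(weights) / kish_deff(weights)


# Hypothetical: half the completes come from a quarter sampled at double the rate,
# so their weights are half as large as the others.
weights = [1.0] * 100 + [0.5] * 100
deff = kish_deff(weights)             # about 1.11
ess = effective_sample_size(weights)  # about 180 of 200 completes
```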

Sampled addresses will be randomly assigned a travel day of the week, with assignments distributed equally across all seven days to ensure a balanced day-of-week distribution. This is the approach the survey contractor has used in other travel surveys. Sample will be released weekly to balance travel days by month to the extent possible.
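A minimal sketch of balanced random day assignment follows, assuming it is applied to each weekly release batch. The function `assign_travel_days` is hypothetical. The sketch builds a near-equal pool of days and shuffles it, so day counts within a batch differ by at most one.

```python
import random
from collections import Counter

DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def assign_travel_days(address_ids: list, rng: random.Random) -> dict:
    """Randomly assign travel days so that day counts differ by at most one."""
    # Build a near-equal pool of days by cycling through the week, then shuffle it.
    pool = [DAYS[i % len(DAYS)] for i in range(len(address_ids))]
    rng.shuffle(pool)
    return dict(zip(address_ids, pool))


assignments = assign_travel_days([f"addr-{i}" for i in range(700)], random.Random(7))
counts = Counter(assignments.values())  # 100 per day for a batch of 700 addresses
```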

Describe procedures for collecting information, including statistical methodology for stratification, and sample selection, estimation procedures, degree of accuracy needed, and less than annual periodic data cycles:

Data Collection Procedures

As in previous cycles of the NHTS, the 2024 NextGen NHTS will maintain a two-phase design consisting of a household recruitment survey (phase 1) and a trip-level diary survey (phase 2) with all eligible household members aged 5 and older. As described in the sections above, data collection will use an ABS frame to randomly invite households to participate in the 2024 NextGen NHTS. Sampled addresses will be contacted by mail and asked to complete a brief household-level survey. In the diary phase, members of recruited households will be asked to keep track of all the places they go for one day and to report that information via a web survey, smartphone application, or telephone.

Accessibility. The survey website, online questionnaires, and respondent materials will be Section 508 compliant and available in both English and Spanish.

Privacy and Security. The study will use strong encryption at all data entry and transmission points. Online applications and data pipelines run in containers orchestrated by Kubernetes clusters hosted at the survey contractor team's data center. The study's compute environment and security controls meet the Federal Information Security Modernization Act (FISMA) moderate standard, and all web applications will pass an in-depth automated security vulnerability scan before data collection begins.

Cognitive Testing. Cognitive testing focused on new questions. Materials used in cognitive testing are included in Appendix 2. Experienced methodologists conducted all interviews with nine purposively recruited respondents.

Recruitment Materials. The field materials will be used to introduce the survey’s purpose, provide instructions for survey participation, remind households to complete survey requirements, and thank respondents for their participation. These materials include:

Invitation Letter. The proposed methodology includes an invitation letter (Appendix 3) that will be mailed to each sampled address in a 9x12 envelope (Appendix 4). The letter will introduce the NextGen NHTS, discuss the importance of participation, include a $2 cash pre-incentive, and offer a promised incentive of up to $20 per person for completing the entire survey (i.e., the recruitment and diary surveys). The promised incentive consists of a $10 per person base incentive (regardless of age), for which households are eligible regardless of completion mode (web, app, or telephone interview), plus an additional $10 for each person who reports travel using the app, for a total of $20 per person. The letter will encourage a household representative to complete a brief 5-minute survey online. To increase the perceived legitimacy of the study, the letter will include the USDOT logo and be signed by a USDOT official. The $2 cash "primer" incentive in the invitation letter is expected to encourage households to begin participating. The survey materials will provide a toll-free number and a QR code for easy access to the web survey. All data collected in the recruitment survey will be used to populate the household record in the diary survey database.
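The promised incentive structure reduces to simple per-person arithmetic. The sketch below is illustrative only: `promised_incentive` is a hypothetical helper, and it excludes the $2 prepaid cash incentive, which is mailed to every sampled address.

```python
BASE_PER_PERSON = 10       # promised to every participating person, in any mode
APP_BONUS_PER_PERSON = 10  # additional amount for each person who reports travel by app


def promised_incentive(n_persons: int, n_app_reporters: int) -> int:
    """Promised household incentive (dollars) for completing the recruitment and diary surveys."""
    if not 0 <= n_app_reporters <= n_persons:
        raise ValueError("app reporters must be a subset of household members")
    return BASE_PER_PERSON * n_persons + APP_BONUS_PER_PERSON * n_app_reporters


# A three-person household in which two members report by app: 3 x $10 + 2 x $10 = $50.
print(promised_incentive(3, 2))  # 50
```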

Reminder Mailings. Nonrespondents will receive three additional prompts after the invitation letter: two mailed postcards (Appendices 5 and 6) and a second letter (Appendix 7).

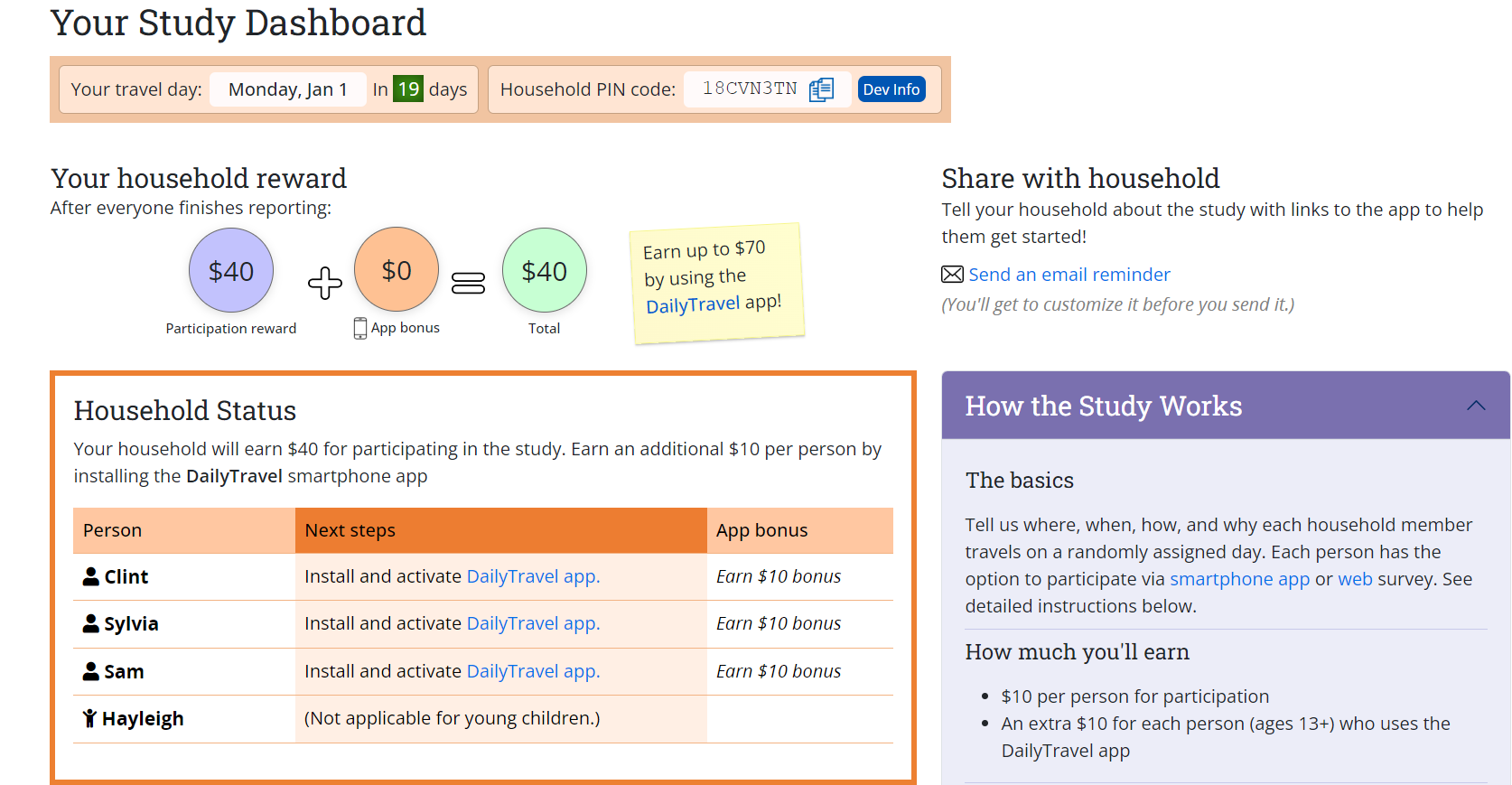

Travel Day Assignment. Once a participant completes the online recruitment survey, they will be routed to a participant dashboard (Figure 1 shows an example used on a state travel study). The dashboard is designed to improve participation: it presents the household's assigned travel date, promotes smartphone app use, communicates the steps for completing the travel diary survey, and uses gamification to show the total incentive the household is eligible to earn. Households that complete the recruitment survey by telephone interview (an expected 5 to 10 percent) will be given the URL and their credentials to log in to the participant dashboard.

Figure 1: Participant Dashboard Example

Diary Survey Invitation. The diary survey invitation method will vary with household characteristics, using the mode most likely to elicit participation. The overall content of the messaging will be consistent, but the mode (e.g., electronic or mailed letter invitation) will depend on the household's assumed propensity to use the smartphone app. Throughout the invitation and reminder process, participants will be continually encouraged to visit the participant dashboard, which provides further detail about how to participate, the incentives the household is eligible to earn, and a toll-free number to call for help.

For smartphone households, in which the main respondent owns a smartphone, provides contact information, and is younger than 75 years of age, invitations to the diary survey will be sent electronically (e.g., email, text messages) describing the smartphone-push approach, which guides participants in installing the app and recording their travel for 7 days. Although recording travel via the app will be emphasized because it yields higher-quality data, web and telephone options will also be offered so that households can participate in the mode that best suits them. Individual household members may also choose their own participation mode.

Non-smartphone households, in which the main respondent does not own a smartphone, does not provide contact information, or is 75 years of age or older, will be mailed a diary survey invitation package. The package includes a letter (Appendix 8) describing the multimode approach, which allows participants to report their travel by web or phone, or to record 7 days of travel using the smartphone app.³ The package will also include a travel log (Appendix 9) for each household member aged 5 and older to jot down all the places they go on their assigned travel date. Packages will be mailed within a few days of recruitment completion, with travel dates assigned at least 10 days in the future so that the package arrives a few days before the travel date.
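The routing rule in the two paragraphs above can be expressed as a single predicate. This sketch treats the two household definitions as complements, so a main respondent aged exactly 75 is routed to the non-smartphone track. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MainRespondent:
    owns_smartphone: bool
    provided_contact_info: bool
    age: int


def invitation_track(r: MainRespondent) -> str:
    """Return 'smartphone' (electronic, app-push invitation) or 'non-smartphone' (mailed package)."""
    if r.owns_smartphone and r.provided_contact_info and r.age < 75:
        return "smartphone"
    # Failing any one criterion routes the household to the mailed multimode package.
    return "non-smartphone"
```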

Statistical Methodology for Stratification

Please see the write-up under question 1.

Estimation Procedures

Sample weights for the 2024 NextGen NHTS will be developed and assigned to the records representing the various analytic units of the study, including responding households, persons within responding households, vehicles, and trips. The weights will be designed to permit inference to the corresponding target populations. These "standard" weights will reflect a seven-day week. A second set of weights will be developed to reflect Monday through Friday travel days (weekday-only weights), and a third set to reflect Saturday and Sunday travel days (weekend-only weights). The standard, weekday-only, and weekend-only weights will be created using the same procedures.

The primary household weights are the 'usable household' weights, in which a household is defined as complete when travel behavior core data are collected for all eligible persons aged 5 and older in the household. The household-level weights are designed to represent all households in the study area; the vehicle-level weights, all vehicles; the person-level weights, all persons; and the travel-day trip-level weights, all trips in the designated time period in the study area.

The weighting process has three major components. The first is the base weight, which is the inverse of each selected unit's overall selection probability. Base weights are needed to account for the varying probabilities of selection among sample units; here, selection probabilities vary by design to some extent (e.g., across strata). Since no subsampling within households will take place, household- and person-level base weights will be identical; that is, each person's base weight equals the corresponding household base weight.
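A minimal sketch of base weights within one stratum is shown below, assuming equal-probability selection within the stratum (probability n_h / N_h). The helper names are hypothetical. The example uses the Middle Atlantic urban housing-unit count as a stand-in for the frame count: the actual frame counts are ABS addresses, and quarterly draws or reserve releases would also change the probabilities.

```python
def base_weight(frame_count: int, sample_count: int) -> float:
    """Base weight = 1 / selection probability, where probability = n_h / N_h in stratum h."""
    return frame_count / sample_count


def person_base_weights(households: dict) -> dict:
    """Give every person the base weight of their household (no within-household subsampling).

    `households` maps household id -> (household base weight, number of persons).
    """
    return {
        (hh_id, person): weight
        for hh_id, (weight, n_persons) in households.items()
        for person in range(1, n_persons + 1)
    }


# Hypothetical stratum: 15,148,182 frame units with 5,635 sampled addresses.
w = base_weight(15_148_182, 5_635)  # roughly 2,688
persons = person_base_weights({"hh1": (w, 3), "hh2": (w, 1)})
```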

The second component of the weighting process consists of adjustments for nonresponse. Household-level nonresponse can occur at the recruitment stage, when a household declines to participate, or at the retrieval stage, when a household completes the recruitment survey but does not participate in the retrieval survey. In either case, selected households that should have participated (according to the sampling scheme) ultimately did not. Base weights alone do not account for these nonparticipating households, so nonresponse adjustments increase the weights of the participating sample to represent the full household population.
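This statement does not specify the adjustment method, which could be weighting classes or response-propensity models. The sketch below assumes a simple weighting-class adjustment, in which respondents in each adjustment cell absorb the base weight of the cell's eligible nonrespondents. The class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SampledHousehold:
    hh_id: str
    adj_class: str      # nonresponse adjustment cell (hypothetical, e.g., stratum x tract type)
    base_weight: float
    responded: bool     # only eligible sampled households are included


def nonresponse_adjust(households: list[SampledHousehold]) -> dict[str, float]:
    """Weighting-class adjustment: within each cell, respondents absorb nonrespondents' weight.

    Cells with no respondents must be collapsed with other cells beforehand.
    """
    total = defaultdict(float)
    resp = defaultdict(float)
    for h in households:
        total[h.adj_class] += h.base_weight
        if h.responded:
            resp[h.adj_class] += h.base_weight
    adjusted = {}
    for h in households:
        if h.responded:
            # Factor = (weighted eligible sample) / (weighted respondents) in the cell.
            adjusted[h.hh_id] = h.base_weight * total[h.adj_class] / resp[h.adj_class]
    return adjusted


sample = [SampledHousehold(f"A{i}", "A", 10.0, i < 2) for i in range(4)]
sample += [SampledHousehold(f"B{i}", "B", 5.0, i == 0) for i in range(3)]
adjusted = nonresponse_adjust(sample)
```

The adjustment preserves each cell's weighted total, so the respondents still sum to the weighted eligible sample.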

The third major component of the weighting is the application of additional adjustments, including weight trimming and calibration. Unlike the previous two components, this step is not directed primarily at minimizing the bias of survey estimates but at reducing their variance, permitting more precise statements about travel patterns and behavior and about comparisons among subgroups and over time. Trimming reduces the weight of any participating sample unit whose weight, after base weighting and nonresponse adjustment, makes an unduly large relative contribution to the total weighted data set. Calibration adjusts the weights of sample units in population subgroups so that their sum equals an independent, relatively reliable benchmark estimate of the size of that subgroup. Calibration will be done at both the household and person levels using raking procedures. Characteristics such as race, ethnicity, Census Division, MSA size, household size, number of household vehicles, and worker status will be considered for calibration. The weight trimming procedure will be implemented iteratively with the raking process so that the trimmed portions of the weights are redistributed across all the remaining weights. This ensures that the final weights are consistent with the external population distributions without any excessively large survey weights.
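The iterative trim-and-rake procedure can be sketched as iterative proportional fitting with a cap applied before each raking pass, so the next pass redistributes the trimmed excess. This sketch is an assumption: the actual trimming rule, cap, control totals, and convergence criteria are not specified here, and the function name is hypothetical. Because raking runs last, the final weights match the margins, but they may exceed the cap slightly.

```python
from collections import defaultdict


def rake_with_trimming(weights, units, targets, cap=None, max_iter=200, tol=1e-9):
    """Iterative proportional fitting (raking) with optional weight trimming.

    weights: starting (nonresponse-adjusted) weights
    units:   one dict per unit, e.g. {"division": "Pacific", "hh_size": "2"}
    targets: control totals per variable, e.g. {"hh_size": {"1": total_1, "2": total_2}}
    cap:     optional trimming cap, expressed as a multiple of the mean weight
    """
    w = list(weights)
    for _ in range(max_iter):
        if cap is not None:
            # Trim large weights; the raking pass below redistributes the excess.
            limit = cap * sum(w) / len(w)
            w = [min(x, limit) for x in w]
        worst = 0.0
        for var, totals in targets.items():
            sums = defaultdict(float)
            for x, u in zip(w, units):
                sums[u[var]] += x
            factors = {cat: totals[cat] / s for cat, s in sums.items()}
            worst = max(worst, max(abs(f - 1.0) for f in factors.values()))
            w = [x * factors[u[var]] for x, u in zip(w, units)]
        if worst < tol:  # every margin already matched before this pass
            break
    return w


units = [{"sex": "M", "region": "A"}, {"sex": "M", "region": "B"},
         {"sex": "F", "region": "A"}, {"sex": "F", "region": "B"}]
targets = {"sex": {"M": 60.0, "F": 40.0}, "region": {"A": 50.0, "B": 50.0}}
raked = rake_with_trimming([1.0, 2.0, 3.0, 4.0], units, targets)
```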

Deriving these components of survey weights and combining them to produce a final weight is standard practice in complex sample surveys with some nonresponse. The underlying principles and standard practices are described in texts on survey sampling (e.g., Särndal, Swensson, and Wretman, 1992; Lohr, 1999; Heeringa et al., 2010) and are reflected in the survey contractor team's standard weighting procedures.

In addition to the full-sample weights used to generate estimates from the survey, a set of replicate weights will be created to allow users to compute variances of survey estimates and to conduct inferential statistical analyses. Replication methods divide the sample into subsamples (also referred to as replicates) that mirror the sample design. A weight is calculated for each replicate using the same procedures as for the full-sample weight (as described above); that is, the nonresponse, trimming, and calibration adjustments will be replicated so that the replicate variance estimator correctly accounts for their effects. The variation among the replicate estimates is then used to estimate the variance of the survey estimates. Replicate weights for the NHTS sample will be generated using the jackknife procedure, in which sampled households are formed into groups reflecting the sample design and each replicate corresponds to dropping one group. The replicate weights can be used with software packages such as WesVar, SUDAAN, Stata, SAS, or R to produce consistent variance estimators for totals, means, ratios, linear and logistic regression coefficients, and other statistics. In addition, the survey contractor will provide the variables designating the variance strata and units to be used for Taylor series approximation.
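The statement does not say which jackknife variant will be used. The sketch below assumes the simple unstratified delete-one-group form (JK1). Unlike the production process, it only rescales the input weights; it does not rerun the nonresponse, trimming, and calibration adjustments within each replicate. The helper names are hypothetical.

```python
def jk1_replicate_weights(weights: list[float], groups: list[int]) -> list[list[float]]:
    """Delete-one-group jackknife (JK1): replicate g zeroes group g and scales the rest by G/(G-1)."""
    labels = sorted(set(groups))
    G = len(labels)
    return [
        [0.0 if grp == g else w * G / (G - 1) for w, grp in zip(weights, groups)]
        for g in labels
    ]


def jk1_variance(estimator, full_weights: list[float], replicates: list[list[float]]) -> float:
    """JK1 variance: (G-1)/G * sum over replicates of (theta_g - theta)^2."""
    G = len(replicates)
    theta = estimator(full_weights)
    return (G - 1) / G * sum((estimator(r) - theta) ** 2 for r in replicates)


# Toy example: weighted total of y, with each household forming its own group.
y = [1.0, 2.0, 3.0, 4.0]


def weighted_total(wts: list[float]) -> float:
    return sum(w * v for w, v in zip(wts, y))


reps = jk1_replicate_weights([1.0] * 4, [1, 2, 3, 4])
v = jk1_variance(weighted_total, [1.0] * 4, reps)  # equals n * s^2 = 20/3 in this toy case
```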

Degree of Accuracy Needed

The goal is to achieve valid statistical representation (VSR) for a national sample of 7,500 completed households at a margin of error that does not exceed 5 percent at a 95 percent confidence level. An effective sample size of 400 is required to meet this precision level. For national and regional estimates, the requirement will be exceeded with 7,500 observations.
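The figure of 400 is consistent with the standard sample-size formula for a proportion, n = z² p(1 − p) / e², evaluated at the most conservative value p = 0.5. Using z = 1.96 gives 385, and rounding z up to 2 gives exactly 400. This is a reading of where 400 comes from, not a derivation stated in this document. Design effects (for example, from unequal weights) make the effective size of 7,500 completes smaller than 7,500.

```python
import math


def required_n(moe: float, z: float, p: float = 0.5) -> int:
    """Effective sample size for a proportion: n = z^2 p (1 - p) / moe^2, rounded up."""
    return math.ceil(z ** 2 * p * (1 - p) / moe ** 2)


def moe_for_n(n: float, z: float = 1.96, p: float = 0.5) -> float:
    """Margin of error for a proportion estimated from an effective sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


print(required_n(0.05, 1.96))  # 385
print(required_n(0.05, 2.0))   # 400
```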

Table 3 shows the relative margins of error (MOEs) for trip rates for several subgroups of interest at both the 95 percent and 90 percent confidence levels. In the 95 percent column, cells with relative MOEs exceeding 0.05 (5 percent) are highlighted in red font; in the 90 percent column, cells exceeding 0.10 (10 percent) are highlighted in red font. The results show that a relative MOE within 5 percent at 95 percent confidence is not achievable for all categories of all items. However, many more categories of interest can meet the relaxed requirement of a relative MOE within 10 percent at 90 percent confidence.

Seven of the 28 categories (25 percent) have relative MOEs for trip rates above 0.10 at the 95 percent confidence level. As noted in Section 9 of Supporting Statement Part A, "as part of our survey approach to achieve a representative sample, we will recontact households that complete the recruitment survey but do not complete the travel diary survey. We will reassign them a new travel date and offer an additional $10 per household incentive, on top of their already promised incentive, for their participation." This activity is discussed further in Part B, following Table 3.

Table 3. Relative margins of error for estimated total number of trips at 95% and 90% confidence levels for a national sample of 7,500 households

| Subgroup | Relative MOE* (95% CI)** | Relative MOE (90% CI)*** |
|---|---|---|
| Travel mode | | |
| POV (privately owned vehicle) | 0.02 | 0.02 |
| Transit | 0.10 | 0.08 |
| Walk | 0.08 | 0.06 |
| Other | 0.07 | 0.06 |
| Household size | | |
| One | 0.05 | 0.04 |
| Two | 0.03 | 0.02 |
| Three | 0.04 | 0.04 |
| Four or more | 0.06 | 0.05 |
| Household vehicle ownership | | |
| 0 vehicles | 0.10 | 0.08 |
| 1 vehicle | 0.06 | 0.05 |
| 2 vehicles | 0.03 | 0.03 |
| 3 vehicles | 0.07 | 0.05 |
| 4 or more vehicles | 0.16 | 0.13 |
| Gender | | |
| Male | 0.03 | 0.03 |
| Female | 0.02 | 0.02 |
| Race | | |
| White | 0.03 | 0.02 |
| Black or African American | 0.03 | 0.03 |
| Asian | 0.13 | 0.11 |
| American Indian or Alaska Native | 0.38 | 0.32 |
| Native Hawaiian or other Pacific Islander | 0.33 | 0.28 |
| Multiple responses selected | 0.16 | 0.14 |
| Employment status | | |
| Working | 0.02 | 0.01 |
| Temporarily absent from a job or business | 0.12 | 0.10 |
| Looking for work / unemployed | 0.14 | 0.11 |
| A homemaker | 0.07 | 0.06 |
| Going to school | 0.09 | 0.08 |
| Retired | 0.04 | 0.03 |
| Something else | 0.07 | 0.06 |

* Relative MOE is the absolute MOE divided by the point estimate.

** Highlighted cells (red font) > 0.05

*** Highlighted cells (red font) > 0.10
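Because the relative MOE is z × SE divided by the point estimate, moving from 95 to 90 percent confidence only rescales it by 1.645/1.96 (about 0.84). The 90 percent column of Table 3 is consistent with this up to rounding of the published values. The sketch below uses Table 3's 95 percent values to check the conversion and to count the categories above 0.10. The helper names are hypothetical.

```python
from statistics import NormalDist


def z_value(confidence: float) -> float:
    """Two-sided critical value, e.g. about 1.96 for 95 percent confidence."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def convert_relative_moe(rel_moe: float, from_conf: float, to_conf: float) -> float:
    """Relative MOE = z * SE / estimate, so changing confidence rescales by the z ratio."""
    return rel_moe * z_value(to_conf) / z_value(from_conf)


# 95 percent relative MOEs from Table 3, in table order (28 categories).
REL_MOE_95 = [0.02, 0.10, 0.08, 0.07,                      # travel mode
              0.05, 0.03, 0.04, 0.06,                      # household size
              0.10, 0.06, 0.03, 0.07, 0.16,                # vehicle ownership
              0.03, 0.02,                                  # gender
              0.03, 0.03, 0.13, 0.38, 0.33, 0.16,          # race
              0.02, 0.12, 0.14, 0.07, 0.09, 0.04, 0.07]    # employment status

above_10 = sum(m > 0.10 for m in REL_MOE_95)
print(above_10, len(REL_MOE_95))                          # 7 28
print(round(convert_relative_moe(0.38, 0.95, 0.90), 2))   # 0.32
```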

Nonresponse Follow-up Methodology

A well-designed plan for following up with nonrespondents during data collection is critical to maximizing response rates. Because the information available for contacting sampled households differs between the two phases of data collection, nonresponse follow-up will be phase-specific. As described in Part A, for the recruitment phase, a sequence of mail contacts will be made to each sampled household using information from the sample frame. The recruitment survey then collects contact information for individual household members aged 16 and older, which will be used during the diary survey phase to send telephone, email, app notification, and text prompts. For example, a respondent who provided a phone number and email address could receive a text or email reminding them to start recording their travel tomorrow or prompting them to finish the survey. In addition, app respondents will receive push notifications on their phones via the app.

Nonresponse may bias survey estimates if the characteristics of respondents differ from those of nonrespondents. Traditionally, the size of the bias has been viewed as a deterministic function of the difference between respondents and nonrespondents and of the response rate (see, for example, Särndal & Lundström, 2005⁴). More recently, the emphasis has shifted toward a stochastic perspective that characterizes nonresponse bias through the relationship between the key variable and the response propensity (Groves et al., 2007⁵; Montaquila et al., 2007⁶). Adjustments to the survey weights aim to reduce bias due to nonresponse. However, even with such adjustments, it is important to have a plan to evaluate the potential for nonresponse bias, as described below.
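The two perspectives correspond to two standard expressions for the bias of an unadjusted respondent mean. In the deterministic view, the bias is (1 − RR)(ȳ_r − ȳ_nr). In the stochastic view, it is Cov(ρ, y) / ρ̄, where ρ is the response propensity. The sketch below illustrates both expressions; the numbers are hypothetical, not NHTS estimates.

```python
def deterministic_bias(response_rate: float, mean_resp: float, mean_nonresp: float) -> float:
    """Deterministic view: bias of the respondent mean = (1 - RR) * (ybar_r - ybar_nr)."""
    return (1 - response_rate) * (mean_resp - mean_nonresp)


def stochastic_bias(propensities: list[float], values: list[float]) -> float:
    """Stochastic view: bias of the respondent mean is approximately Cov(rho, y) / mean(rho)."""
    n = len(values)
    rho_bar = sum(propensities) / n
    y_bar = sum(values) / n
    cov = sum((p - rho_bar) * (y - y_bar) for p, y in zip(propensities, values)) / n
    return cov / rho_bar


# Hypothetical: respondents average 3.6 trips/day, nonrespondents 3.2, response rate 25 percent.
det = deterministic_bias(0.25, 3.6, 3.2)            # about 0.3 trips/day
# Hypothetical two-person population: the more mobile person is twice as likely to respond.
sto = stochastic_bias([0.2, 0.4], [2.0, 4.0])       # about 0.33 trips/day
```

If propensity is unrelated to the outcome (zero covariance), the stochastic view predicts no bias, however low the response rate.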

With respect to nonresponse, the largest concern for the 2024 NextGen NHTS is nonresponse in the recruitment phase. In previous NHTS cycles, the recruitment phase historically yielded the bulk of the nonresponse, and that is expected to be the case for the 2024 survey as well. A variety of methods are available to assess nonresponse bias. Previous research suggests that each method has strengths and weaknesses; thus, a multi-method approach is recommended for a comprehensive evaluation of nonresponse bias. The ABS approach affords the opportunity to link covariates at both the aggregate level (e.g., tract-level characteristics from the American Community Survey) and the address level for use in nonresponse adjustment and in bias analyses. In addition, the recruitment survey is designed to take just five minutes to complete, reducing the initial burden on respondents.

The recruitment survey contains several variables (e.g., number of household members, gender, and ethnicity) that may be associated both with nonresponse at the retrieval phase and with key survey outcome variables. This rich set of variables will be very useful for nonresponse adjustment and nonresponse bias analysis at the retrieval phase.

Describe methods to maximize response rate:

The study is implementing the best combination of low-cost and effective data collection modes to maximize response rates. As described in Part A, for the recruitment phase, a push-to-web design will be implemented with a series of mail contacts and an incentive strategy designed to get the household to complete a five-minute survey online. Subsequently, to improve cooperation, reduce burden, and improve data quality, the smartphone and web modes will be encouraged. The telephone mode is offered to gain modest additional participation from the few respondents who will not participate by smartphone or web. Engaging respondents through user-friendly instruments is essential to the success of any mode. Incentives are effective, but large incentives for low-cost alternatives are neither necessary nor even desirable.⁷ The survey design must appeal to the importance and relevance of the research and rely on social exchange theory⁸ as a way of generating responses. These principles have helped structure the research design.

The research approach is heavily influenced by the findings of previous research, especially Messer and Dillman (2011)⁹ and Millar and Dillman (2011),¹⁰ who explored ways of pushing sampled persons to respond by web. Although the requirements of their surveys were very different from those of the NextGen NHTS, their findings generalize to this work in several ways. Other work in this area is also informative, but the lack of adequate experimental design in many cases makes those results more difficult to generalize.¹¹,¹²

The survey approach for the 2024 NextGen NHTS will prioritize assigning age-qualifying participants (aged 13 years and older) to use the smartphone app to record their travel. Households will be offered a mixed-mode data collection structure that allows them the opportunity to participate in the survey in their preferred mode. The app can be used at the individual level in addition to the household level, and participants within the same household can use multiple modes if desired. The survey contractor anticipates that 50 percent of households will have at least one smartphone app user.

Post-Recruitment Contacts. Contact information collected in the recruitment survey will be used to send participants email, text, and interactive voice response (IVR) messages to increase engagement and prompt timely responses throughout data collection. Table 4 summarizes the planned contacts and reminders participants could receive. Electronic reminders (Appendix 10) will be sent daily, with alternating content and timing to heighten effectiveness. The reminder system will automatically stop contacting a household once it completes the survey or refuses to participate.

Table 4: Summary of Data Collection Contacts with Households

| Contact description | Time sent | Medium |
| --- | --- | --- |
| Invitation letter | Upon release of the sample | Mail |
| Initial reminder postcard 1 | 7 days after the sample is released | Mail |
| Follow-up reminder postcard 2 | 14 days after the sample is released | Mail |
| Nonresponse recruitment letter | 28 days after the sample is released | Mail |
| Diary survey invitation | Upon completion of recruitment | Dashboard/email/mail |
| Pre-travel date reminder | The day before the first travel date | Email/text/IVR |
| Post-travel reminders | Days 1 through 7 after the travel date | Email/text/IVR |
| Smartphone app start travel | The first travel day | Phone notification |
| Smartphone app daily survey | At the end of each travel day | Phone notification |
| Nonresponse diary survey follow-up | 28 days after the travel date | Email/text/mail |
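To make the timing in Table 4 concrete, the following is a minimal sketch of how the mail and electronic contact dates could be derived from a sample release date and an assigned travel date. It also applies the stop rule described for the reminder system, under which no further contacts go to a household that has completed the survey or refused. The function names, the `Household` fields, and the example dates are hypothetical illustrations, not the survey contractor's actual system. The smartphone app notifications are omitted because they are triggered by the app rather than by calendar offsets.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Recruitment-phase mail contacts: (description, days after sample release), per Table 4.
RECRUITMENT_CONTACTS = [
    ("Invitation letter", 0),
    ("Initial reminder postcard 1", 7),
    ("Follow-up reminder postcard 2", 14),
    ("Nonresponse recruitment letter", 28),
]


@dataclass
class Household:
    # Hypothetical status flags used only for this illustration.
    completed: bool = False
    refused: bool = False


def recruitment_schedule(release: date) -> list[tuple[str, date]]:
    """Send date for each recruitment mail contact, given the sample release date."""
    return [(name, release + timedelta(days=d)) for name, d in RECRUITMENT_CONTACTS]


def diary_schedule(travel_date: date) -> list[tuple[str, date]]:
    """Diary-phase electronic contacts relative to the assigned (first) travel date."""
    contacts = [("Pre-travel date reminder", travel_date - timedelta(days=1))]
    # One post-travel reminder per day, days 1 through 7 after the travel date.
    contacts += [
        (f"Post-travel reminder (day {k})", travel_date + timedelta(days=k))
        for k in range(1, 8)
    ]
    contacts.append(
        ("Nonresponse diary survey follow-up", travel_date + timedelta(days=28))
    )
    return contacts


def due_contacts(schedule, household: Household, today: date):
    """Contacts still to be sent on or after `today`.

    Returns nothing once the household has completed the survey or refused,
    mirroring the stop rule of the reminder system.
    """
    if household.completed or household.refused:
        return []
    return [(name, when) for name, when in schedule if when >= today]


if __name__ == "__main__":
    for name, when in recruitment_schedule(date(2024, 1, 8)):
        print(when.isoformat(), name)
```

For a sample released on January 8, 2024, this yields the invitation letter on January 8, the two postcards on January 15 and 22, and the nonresponse recruitment letter on February 5. Keeping the offsets in a single table-like list means a change to the protocol only requires editing one place.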

Our mail communications will include a quick response (QR) code linking to the web survey, along with a short URL and a toll-free hotline number, giving each participant several ways to respond. In addition, a Spanish tagline on all survey materials will explain how to complete the survey in Spanish.

A study website (Figure 2 is an example from a current study) will be developed and made available in both English and Spanish, along with the online survey questionnaires and the smartphone app. Professional bilingual interviewing staff will also be available for telephone support.

Figure 2: Example of Study Website

Diary Survey Nonresponse Follow-up. To achieve a representative sample, households that complete the recruitment survey but do not complete the travel diary survey will be recontacted as part of the nonresponse follow-up protocol. These households will be assigned a new travel date and offered an additional $10 incentive per household, on top of the incentive already promised for their participation.

To facilitate responses from people with disabilities, the study will ensure that the study website and web instrument comply with Section 508, using the rules specified in sections 1194.22 (‘Web-based intranet and internet information and applications’) and 1194.23 (‘Telecommunications products’).

All NextGen NHTS materials will be available in both English and Spanish to help maximize participation by Hispanic households. An evaluation of previous surveys indicated that interviewing in Spanish was an important factor in gaining the cooperation of Hispanic respondents, a rapidly increasing proportion of the overall population. The Spanish translations of survey materials were developed using industry standards, including reverse-translation protocols.

Describe tests of procedures or methods:

Mixed Mode Surveys

Experiments using mixed-mode collection methods, conducted by the survey contractor team13,14 and confirmed by others,15,16 have found that telephone is no longer the best mode for interviewing households selected from an ABS frame. This does not mean that telephone data collection cannot be used to enhance response rates, only that it is not an effective choice as the primary mode. As a result, researchers pairing a data collection mode with an ABS frame have begun to look beyond the traditional telephone-based approach. Based on this research and the survey contractor team’s experience conducting national, state, and local surveys, the 2024 NextGen NHTS will use a mixed-mode design. Respondents will be able to participate by web, smartphone app, or telephone.

The push-to-web recruitment approach described here has been tested and found successful in several surveys with large samples covering vast geographic regions. For these reasons, it has been selected to counter declining recruitment rates. In recent studies using this method, the survey contractor team has observed response rates ranging from 28 to 38 percent.

Testing and Review

All field materials were subject to a series of tests to assess their utility and validity as survey instruments. These tests are described below.

Cognitive Testing

For all questions added since the 2022 NextGen NHTS, the survey contractor team conducted cognitive testing, using in-depth, semi-structured interviews to identify cognitive sources of potential misinterpretation (Willis, 2005). This qualitative methodology examines respondents’ thought processes, allowing survey researchers to identify and refine question wording that is misunderstood or understood differently by different respondents; instructions that are ambiguous, insufficient, overlooked, or misinterpreted; and confusing response options. Beyond question wording, cognitive testing can reveal issues with the visual presentation of the survey and materials. Visual design elements such as color, contrast, and spacing are crucial for guiding respondents correctly through an instrument.

In keeping with OMB regulations, cognitive testing was limited to nine respondents per question. The survey contractor team conducted one set of nine interviews with adults aged 18 and older to test all questions new to the 2024 NextGen NHTS. Respondent recruitment was purposeful and sought adults with a variety of day-to-day travel experiences. The interviewing staff consisted of senior survey methodologists with extensive experience using cognitive interview methods to test survey questionnaires and materials. Results were compiled and presented to FHWA for consideration.

Survey Pretest

The pretest will include all facets of data collection and is designed to obtain completed surveys from 100 households. It provides an opportunity to implement and evaluate all planned protocols and materials and to determine whether changes are needed before the main survey is conducted. The final Survey Plan will reflect any findings from the pretest and will document the survey methodology, questionnaire design, sampling, nonresponse analysis, estimation procedures, and the procedures and protocols used to conduct the study.

Provide name and telephone number of individuals who were consulted on statistical aspects of the IC and who will collect and/or analyze the information:

J. Michael Brick, Ph.D. 301-294-2004

Jill Montaquila 301-517-4046

Shelley Brock 301-517-8042

1 Group quarters, as defined by the Census, are places where people live or stay, in a group living arrangement, that is owned or managed by an entity or organization providing housing and/or services for the residents.

2 Roth, S.B., Han, D., and Montaquila, J.M. (2013). The ABS Frame: Quality and considerations. Survey Practice, 6(4). Available from http://surveypractice.org/index.php/SurveyPractice/article/view/73/pdf.

3 The app owned by the survey contractor team is designed to capture travel. See https://dailytravelapp.com/ for additional information.

4 Särndal, C. E., and Lundström, S. (2005). Estimation in surveys with nonresponse. John Wiley & Sons.

5 Groves, R. M., Couper, M. P., Presser, S., Singer, E., Tourangeau, R., Acosta, G. P., and Nelson, L. (2006). Experiments in producing nonresponse bias. Public Opinion Quarterly, 70(5), 720-736.

6 Montaquila, J. M., Brick, J. M., Hagedorn, M. C., Kennedy, C., and Keeter, S. (2007). Aspects of nonresponse bias in RDD telephone surveys. In Advances in telephone survey methodology (pp. 561-586). Hoboken, NJ: John Wiley & Sons.

7 Han, D., Montaquila, J., and Brick, J.M. (2013). An evaluation of incentive experiments in a two-phase address-based sample mail survey. Survey Research Methods, 7, 207-218.

8 Dillman, D., Smyth, J., and Christian, L. (2009). Internet, mail, and mixed-mode surveys: The tailored design method. Hoboken, NJ: John Wiley & Sons.

9 Messer, B.L., and Dillman, D. (2011). Surveying the general public over the internet using address-based sampling and mail contact procedures. Public Opinion Quarterly, 75(3), 429-457.

10 Millar, M., and Dillman, D. (2011). Improving response to web and mixed-mode surveys. Public Opinion Quarterly, 75, 249-269.

11 Ormond, B.A., Triplett, T., Long, S.K., Dutwin, D., and Rapoport, R. (2010). 2009 District of Columbia Health Insurance Survey: Methodology report. Available from http://www.urban.org/publications/1001376.html.

12 Ormond, B.A., Triplett, T., Long, S.K., Dutwin, D., and Rapoport, R. (2010). 2009 District of Columbia Health Insurance Survey: Methodology report. Available from http://www.urban.org/publications/1001376.html.

13 Brick, J.M., Williams, D., and Montaquila, J. (2011). Address-based sampling for subpopulation surveys. Public Opinion Quarterly, 75, 409-428.

14 Edwards, S., Brick, J.M., and Lohr, S. (2013). Sample performance and cost in a two-stage ABS design with telephone interviewing. Paper presented at AAPOR Conference, Boston, MA.

15 Leslyn, H., ZuWallack, R., and Eggers, F. (2012). Fair market rent survey design: Results of methodological experiment. U.S. Department of Housing and Urban Development. Available from http://www.huduser.org/portal/datasets/fmr/fmr2013p/FMR_Surveys_Experiment_Report_rev.pdf

16 Johnson, P., and Williams, D. (2010). Comparing ABS vs landline RDD sampling frames on the phone mode. Survey Practice, 3(3). Available from http://surveypractice.org/index.php/SurveyPractice/article/viewFile/251/pdf
