York River Pre-Test Survey Report


Assessing Public Preferences and Values to Support Coastal and Marine Management


OMB: 0648-0829

York River Outdoor Recreation Survey: Understanding Visitor Experiences, Motivations, and Barriers

Pre-Test Survey Report

February 2025

Prepared for:

CSS-INC

National Centers for Coastal Ocean Science (NCCOS)

York River Outdoor Recreation Pre-Test Survey Report February 2025

Table of Contents

Project Background

Survey Methods

Results and Analysis

Conclusions

List of Appendices

References


Project Background

This report examines the pre-test survey results from the 2024-2025 administration of the York River Outdoor Recreation Survey. The survey was conducted for the National Centers for Coastal Ocean Science (NCCOS) to collect statistically representative and reliable information from a sample of residents living within one hour of the York River. Data from the survey are intended to help characterize stakeholder activities, attitudes, knowledge, and preferences for the Chesapeake Bay National Estuarine Research Reserve System (NERRS) in Virginia. This information will be used by the National Oceanic and Atmospheric Administration (NOAA), local policymakers, and others to understand human pressures on the York River and surrounding parks and natural areas, potential barriers to access for communities, and preferences related to management actions.

The 2024 York River Outdoor Recreation Survey was conducted by the Survey and Evaluation Research Laboratory (SERL), which is part of the Center of Public Policy (CPP) in the L. Douglas Wilder School of Government and Public Affairs at Virginia Commonwealth University (VCU), under contract to NCCOS.

Pre-Test Survey Development

The NCCOS team approached SERL with a draft questionnaire. SERL reviewed the draft and made recommendations based on previous outdoor recreation data from the Virginia Department of Conservation and Recreation’s 2022 Outdoor Recreation survey. In accordance with VCU Institutional Review Board (IRB) requirements, the SERL and NCCOS team made minor revisions to the existing questionnaire, including adding language to discourage minors (under 18 years of age) from participating in the survey. SERL and NCCOS worked together to improve the flow of the survey and clarify questions. Other edits included adjusting the title page and map graphics for printing and making other minor formatting changes.

Survey platform selection

SERL contacted VCU Technology Services and verified that Qualtrics, the survey software used to administer the web version of the survey, was approved to handle Category II Information/Data, which was the type of content anticipated to be collected during the York River Outdoor Recreation Survey. SERL confirmed Qualtrics was FedRAMP® (Federal Risk and Authorization Management Program) Authorized.

VCU Institutional Review Board (IRB) Approval

SERL consulted with VCU IRB about the York River Outdoor Recreation Survey. All members of the project team completed initial or refresher human subjects research training through CITI (Collaborative Institutional Training Initiative), as required by VCU IRB. In July 2024, SERL initiated the first IRB submission, which included drafts of the questionnaire and recruitment materials. Initial IRB approval was granted on July 25, 2024. VCU IRB determined that this protocol meets the exemption criteria of 45 CFR 46.104(d) for the following category:

“Research that only includes interactions involving educational tests, survey or interview procedures, or observation of public behavior when the information obtained is recorded in a manner that the identity of the subjects cannot readily be ascertained.”

In September 2024, SERL submitted an amendment to VCU IRB reflecting small modifications to the project (e.g., clarifying the source of the $2 incentive and adding the PI’s contact information to the survey invitation letter). VCU IRB approved the amendment on September 4, 2024. The study number was HM20030190. IRB approval letters can be found in Appendix A.

Survey Translation

The NCCOS team and SERL discussed offering a translated version of the survey. The web survey platform (Qualtrics) provides language translation functionality. SERL contracted a Professor of Spanish Translation & Interpreting Studies from the School of World Studies at VCU to translate the survey instrument into Spanish. The translation was entered into the Qualtrics platform and reviewed by a SERL team member proficient in Spanish. Both Qualtrics survey instruments (English and Spanish) can be found in Appendix B.

Survey Methods

Survey Completion Incentives

Based on discussions with the NCCOS team, SERL included a $2 cash incentive in the initial mailing of the survey to increase response rates. A literature review supporting this decision is summarized later in this report.

Sample Design

NCCOS provided a sample design in its Statement of Work based on a two-stage stratified random sampling plan, and provided an updated sample design in November 2024 (Appendix C). The plan was stratified geographically by county and block, with households randomly selected within each stratum and individuals within households. The sampling frame included all residential households within the requested strata, excluding non-residential units, and each address corresponds to a single household. SERL added FIPS codes to this sampling plan. The mailing addresses for the sample frame were acquired from Dynata. The following process was used to draw the Dynata address-based sample (ABS):

1. Qualifying addresses are determined based on the selected geography and any requested demographic criteria. The selected addresses (the universe) are then sorted by FIPS code, ZIP, and ZIP+4.

2. A sampling interval is determined by dividing the universe by the desired quota (sample size).

3. A random starting point is chosen within the first interval, and every Nth address is then selected until the desired sample size is reached.
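The three steps above describe sorted (implicitly stratified) systematic sampling. The Python sketch below illustrates the technique under stated assumptions; it is not Dynata’s implementation. The `Address` record and `systematic_sample` function are hypothetical, and the sort key simply follows step 1. Because the interval k = N/n is rarely a whole number, the sketch draws an integer offset `r` and selects positions `(r + i*N) // n`, which equals floor(s + i*k) for a start s = r/n in [0, k) and gives every address the same n/N chance of selection.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Address:
    """One residential address in the frame (hypothetical fields for illustration)."""
    fips: str        # county FIPS code
    zip5: str
    zip4: str
    address_id: int  # tie-breaker so the sort order is fully determined


def systematic_sample(universe: List[Address], n: int, seed: Optional[int] = None) -> List[Address]:
    """Sorted systematic sample of n addresses (a sketch, not a vendor's code)."""
    N = len(universe)
    if not 0 < n <= N:
        raise ValueError("sample size must be between 1 and the universe size")
    rng = random.Random(seed)
    # Step 1: sort by FIPS, ZIP and ZIP+4 so selections spread evenly across geography.
    frame = sorted(universe, key=lambda a: (a.fips, a.zip5, a.zip4, a.address_id))
    # Step 2: the interval is k = N / n, possibly fractional.
    # Step 3: random start s = r / n in [0, k); select floor(s + i * k) for i = 0..n-1.
    # In integer arithmetic floor(s + i * k) == (r + i * N) // n, with no float drift.
    r = rng.randrange(N)
    return [frame[(r + i * N) // n] for i in range(n)]
```

For example, with N = 1,000 sorted addresses and n = 100, the interval is 10 and the sketch returns one address from each consecutive block of ten, starting at a random position between 0 and 9.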

Additional information about the Dynata ABS file construction is included in Appendix F.

Survey Printing, Distribution, and Mailing

Individuals in the pilot sample received up to four mailing contacts: (1) an invitation letter with a $2 cash incentive, (2) a reminder postcard, (3) a paper survey booklet with a cover letter and business reply return envelope, and (4) a final reminder postcard. Each letter or postcard invited the recipient to complete the survey online using the URL, Survey ID, and PIN provided. Contacts also included a Quick Response (QR) code and a text-back number to access the survey. Regardless of which access method they used, respondents had to enter their unique survey code to begin the questionnaire.

Letters and postcards were printed and mailed by Worth Higgins & Associates in collaboration with SERL. All outbound mailing materials were sent under non-profit postage permit No. 889. The business reply envelope that respondents used to return their survey packet (in the third mailing) was sent first class. Each envelope was addressed to “VIRGINIA RESIDENT”. Throughout fielding, SERL maintained a “pull list” to remove survey completers from successive mailing waves. Table 1 summarizes the mailing information. One SERL staff member and three seed addresses were included to monitor USPS processing and mailing times. Because the third-party printer required several days to update the mailing list, everyone in the first mailing also received the reminder postcard (mailing 2). Likewise, everyone who received mailing 3 also received mailing 4.

Recruitment materials can be found in Appendix D, and the survey booklet can be found in Appendix E. Mailing materials instructed potential respondents to contact SERL at [email protected] if they had trouble accessing the survey or had questions about it.

Table 1: Pre-test mailing information

| Pre-test survey mailing | Description | Mailing count* | Date mailed |
|---|---|---|---|
| Invitation letter + incentive | Letter: white paper, personalized, color ink, VCU + NOAA logos, one-sided, tri-folded and inserted; Envelope: #10 white envelope, color, VCU logo; $2 incentive: two $1 bills | 1,171 | November 27, 2024 |
| Reminder postcard | Cardstock, quarter page, personalized on both sides, color ink | 1,171 | December 4, 2024 |
| Reminder letter + paper booklet + BRE | Booklet: 2 sheets of white 11” x 17” paper, folded and saddle stapled; Letter: white paper, color ink, personalized; Business reply envelope (BRE): white, 9” x 11”, black ink; packaged in a 10” x 13” catalog envelope | 1,067** | January 6, 2025 |
| Final reminder postcard | Cardstock, quarter page, personalized on both sides, color ink | 1,067 | January 22, 2025 |

*The total count does not include the 4 additional seed mailings.

**Two booklets were returned as undeliverable mail as of 2/18/2025.

Quality Control Procedures

The SERL project team attended to quality control in several ways. Prior to launch, SERL and NCCOS staff reviewed and proofed the survey multiple times. SERL also required login credentials for the web version of the pre-test survey; each respondent had to enter a unique survey ID and PIN to start the questionnaire. SERL checked the recruitment materials virtually after receiving PDF proofs of the mailings. A SERL staff member was included in each mailing as a quality check. For returned paper booklets, SERL recorded the date each survey was received. SERL staff entered the paper booklet data into a Qualtrics form (separate from the web survey), and a second SERL staff member verified that the data from each paper survey were entered correctly.

Because of the timing of each mailing, a respondent could have completed the survey online and also mailed back a completed booklet. For example, a respondent may have completed the survey online after the list of non-respondents was generated and sent to the printing vendor. SERL made every effort to send mailings only to non-respondents, but respondents may have been included in later mailings depending on when they completed the online survey.
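The pull-list timing issue described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not SERL’s procedure: `build_next_wave` is a hypothetical helper, survey IDs are assumed to be strings, and a completion is assumed to be pulled only if it was recorded on or before the day the non-respondent list was sent to the printer.

```python
from datetime import date
from typing import Dict, List


def build_next_wave(prior_wave: List[str],
                    completion_dates: Dict[str, date],
                    list_sent_on: date) -> List[str]:
    """Return the survey IDs to include in the next mailing wave (hypothetical helper).

    Only completions recorded on or before list_sent_on (the day the list goes
    to the printer) are on the pull list; later completers are still mailed.
    """
    pull_list = {sid for sid, done in completion_dates.items() if done <= list_sent_on}
    # Preserve the prior wave's order and drop everyone on the pull list.
    return [sid for sid in prior_wave if sid not in pull_list]
```

Under this rule, a household that completes online the day after the list is sent still receives the next mailing, which is one way a respondent could end up returning both a web and a paper survey.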

SERL did not receive inquiries about the survey at the SERL email address, suggesting the Qualtrics instrument worked as intended.

Challenges to printing and mailing

SERL had previously worked with the printing vendor on multiple large mail-to-web surveys. However, the vendor underwent staff changes during December without informing SERL, and did not notify SERL over the December holidays that printing of the booklets had been delayed. SERL is seeking additional printing vendor options for the production mailing.

Based on response times and when SERL staff received each mailing, the invitation letter and postcards (mailings 1, 2, and 4) arrived within 2 business days of mailing. However, the third mailing (booklet) took 7-10 days to arrive. Starting on January 6, 2025, Richmond and surrounding counties experienced a water outage, which may have contributed to mailing delays because the mail was sent from Richmond. SERL tracked undeliverable mail, and these undeliverable mailings were removed from the initial sample count when calculating the response rate.

Results and Analysis

Table 2: Pre-test response completions information

| Initial sample | Undeliverable mail | Web completions | Paper completions | Duplicate completions* | Response rate |
|---|---|---|---|---|---|
| 1,171 | 9 | 122 | 32 | 1 | 13.2% |

*If a respondent completed both the web and paper surveys, their completion was counted only once in the response and completion rates.

Undeliverable mail

SERL received a total of 9 pieces of undeliverable mail: seven invitation letters and two paper booklet packets. SERL may still receive additional undeliverable mailing materials.

Response and completion rates

SERL received a total of 154 responses. One respondent completed the survey both on paper and on the web, for a total of 153 unique completions and an overall response rate of 13.2% (153/1,162; Table 2). Thirty-two respondents completed the survey on paper. This represents 3.0% of all booklets delivered to respondents (n = 1,065) and 20.9% of all survey completions. Of the 122 web respondents, 118 (over 96%) completed the entire survey once they started. Three web respondents answered fewer than 80% of the questions, and one paper respondent answered only one question. Excluding these four individuals, the completion rate would be 12.8% (149/1,162).
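The rates above follow directly from the counts in Table 2. The short Python sketch below reproduces that arithmetic; `PretestCounts` and `pct` are hypothetical names introduced for illustration. It mirrors the report’s simple definition (unique completions divided by the initial sample minus undeliverable mail) and is not meant to stand in for a formal AAPOR response-rate formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PretestCounts:
    """Mailing and completion counts from Table 2 (class name is illustrative)."""
    initial_sample: int
    undeliverable: int
    web_completions: int
    paper_completions: int
    duplicate_completions: int  # respondents who returned both a web and a paper survey

    @property
    def eligible(self) -> int:
        # Undeliverable addresses are removed from the denominator.
        return self.initial_sample - self.undeliverable

    @property
    def unique_completions(self) -> int:
        # A respondent who completed both modes is counted only once.
        return self.web_completions + self.paper_completions - self.duplicate_completions


def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place, matching the precision in the text."""
    return round(100 * numerator / denominator, 1)


pretest = PretestCounts(initial_sample=1171, undeliverable=9, web_completions=122,
                        paper_completions=32, duplicate_completions=1)

print(pct(pretest.unique_completions, pretest.eligible))           # 13.2 (153 / 1,162)
print(pct(pretest.paper_completions, 1067 - 2))                    # 3.0 (booklets mailed minus 2 undeliverable)
print(pct(pretest.paper_completions, pretest.unique_completions))  # 20.9
print(pct(pretest.unique_completions - 4, pretest.eligible))       # 12.8 (excluding 4 partial responses)
```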

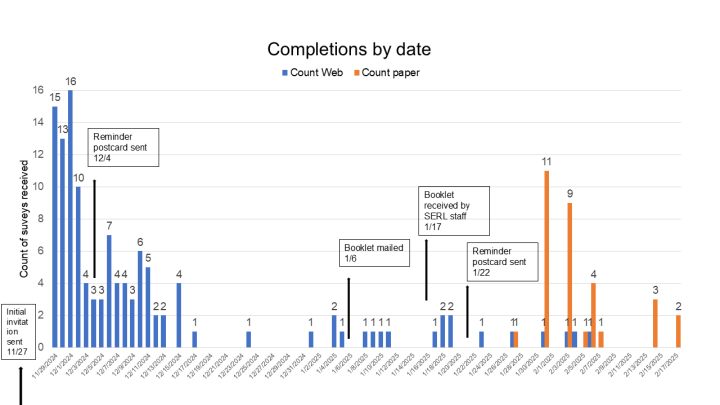

Timing of responses

Assuming respondents received the first reminder postcard (mailed on December 4, 2024) on December 6, 2024, the first 64 web completions would be attributed to the invitation letter (52.5% of total web completions). The third mailing (the booklet) was mailed from Richmond on January 6, 2025; however, the SERL staff member included in the mailing did not receive the booklet until January 17, 2025. Completions between December 7 and January 17 could therefore be attributed to the first postcard reminder. Assuming receipt of the third mailing was similarly delayed for other households, respondents may have received their packet followed closely by the final postcard reminder. Key mailing dates and completions are shown in Figure 1. In addition to generating paper completions, the booklet mailing also appears to have boosted later web responses, as evidenced by the 11 web completions in late January. The median response time was 28 days. Approximately 81% of paper surveys were received within 31 days of the initial mailings.
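The attribution logic in the preceding paragraph amounts to assigning each completion date to the most recent mailing window that had opened by that date. The Python sketch below encodes that rule; the function names are hypothetical, and the window start dates follow the assumptions stated above (postcard received December 6, 2024; booklet received January 17, 2025). Two further choices are assumptions of the sketch rather than of the report: the booklet and final reminder share one window, and response time is measured from the first mailing on November 27, 2024.

```python
import statistics
from bisect import bisect_right
from collections import Counter
from datetime import date
from typing import Iterable, List, Tuple

# First day credited to each mailing window (assumption-based, see lead-in):
# completions through Dec 6 go to the invitation, Dec 7 to Jan 17 to the first
# postcard, and Jan 18 onward to the booklet plus final reminder.
WINDOWS: List[Tuple[date, str]] = [
    (date(2024, 11, 27), "invitation letter"),
    (date(2024, 12, 7), "first reminder postcard"),
    (date(2025, 1, 18), "booklet + final reminder"),
]
FIRST_MAILING = WINDOWS[0][0]


def attribute(completed_on: date) -> str:
    """Credit a completion to the latest mailing window that had opened by that date."""
    starts = [start for start, _ in WINDOWS]
    i = bisect_right(starts, completed_on) - 1
    if i < 0:
        raise ValueError("completion date precedes the first mailing")
    return WINDOWS[i][1]


def tally(completion_dates: Iterable[date]) -> Counter:
    """Count completions per mailing window."""
    return Counter(attribute(d) for d in completion_dates)


def median_response_days(completion_dates: Iterable[date]) -> float:
    """Median days from the first mailing to completion (the origin is an assumption)."""
    return statistics.median((d - FIRST_MAILING).days for d in completion_dates)
```

If these window boundaries match the ones SERL used, running `tally` on the 122 web completion dates should reproduce the 64 invitation-window completions (64/122 = 52.5%) cited above. The report does not state the start date behind its 28-day median, so `median_response_days` may not match that figure exactly.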

Figure 1: Timing of completions by mode

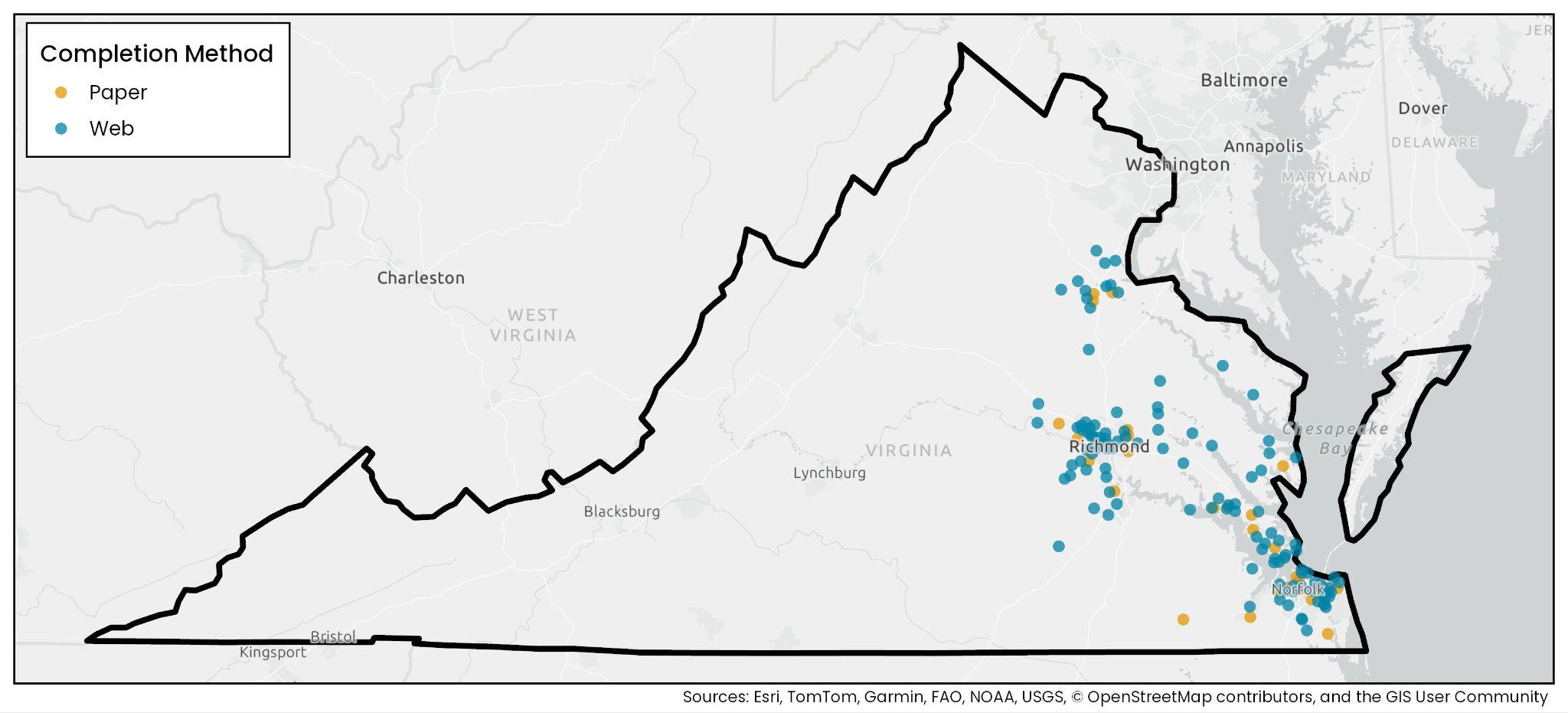

Geographic distribution of pre-test respondents

The geographic distribution of respondents by completion method is displayed in Figure 2. Locations were mapped using the latitude/longitude coordinates of respondents’ sampled mailing addresses.

Figure 2: Geographic distribution of respondents

Web responses

On average, web respondents completed the survey in under 10 minutes. One respondent took approximately 87 minutes, most likely because they left their browser open rather than because they were actively completing the survey. All respondents completed the survey in English. Fifty-eight (58) respondents scanned the QR code and 64 used an anonymous link to access the survey. The respondent with ID 1974 completed the survey twice, once on paper and once on the web.

Open ended responses

At the end of the York River Outdoor Recreation Survey, participants were invited to share open-ended written comments. A total of 32 comments were received across the web and paper completions, and SERL staff reviewed all of them. A formal thematic analysis was not conducted, but the following overall themes emerged from a review of the pre-test comments.

Comments about incentives

Respondents expressed gratitude for the incentive or otherwise commented on it.

“You might like to know that I completed this survey because of the cash incentive. I appreciate that you were willing to risk even a few dollars per response, and provided the incentive without requiring me to activate cards or submit forms.”

Comments about parks and facilities

Respondents offered feedback on specific parks and suggestions for parks.

“The York River area is a wonderful resource for outdoor recreation. I especially like the facilities at Back Creek and at Gloucester Point. There needs to be more of these areas throughout Virginia's tidal rivers.”

“You should only put signage in English. You can't provided signage in every language! If people come to America, they need to learn English!”

Comments about their own park usage

Participants referenced their own use of parks and outdoor spaces and how this may have influenced their answers to survey questions.

“We visit Buggs Island/Kerr Lake a lot, so we do love the outdoors. We just really do not travel that way.”

“I'd go enjoy the outdoors more often, but as a grad student, college sucks up all my free time.”

“I'm glad there are such parks so that people can enjoy our beautiful area; however, this just isn't a priority for me personally at this stage of my life.”

Comments about the survey

Participants offered their opinions about the survey instrument. Both positive and negative comments are included in this theme.

“Why do you ask the personal questions? I like the outdoors, hiking, boating, etc. but I don't think it's necessary for me to let you know anything else about my personal life.”

“I don’t mind taking surveys. This is the first one i have ever received”

Regarding comments about the demographic questions, the initial survey page clearly states that respondents can skip questions. The production survey could make this clearer by adding a reminder that questions may be skipped immediately before the demographic questions (e.g., before Question 27).

Literature Review - Incentives

Unconditional (prepaid/non-contingent/up-front/token) incentives are received with the survey invitation itself. Unconditional incentives are based on social exchange theory/altruism, and assume that because the recipient has received a gift, they will reciprocate and complete the survey (Dillman et al. 2014). The incentive creates a feeling of goodwill and encourages the respondent to read and consider the request, and ultimately complete the survey.

Multiple meta-analyses suggest that unconditional incentives are more likely to induce participation than are conditional incentives (Church, 1993; Edwards et al., 2009; Young et al., 2015; Robbins et al., 2018; Rao et al., 2020), although the effect may vary and may be confounded across survey modes (Cook et al., 2000; Mercer et al., 2015). For example, in surveys recruited only by web-based or electronic means, it is not possible to give cash up-front to a participant, although digital payment methods may help close this gap (Neman et al., 2022).

Unconditional incentives positively influence response rates, although the magnitude of this effect varies. Porter and Whitcomb (2011) suggest that up-front cash (from 50 cents to $5) increases response rates by anywhere from 8.7 to 24 percentage points. Dillman et al. (2014) found that $2 incentives may boost response rates by 12 to 31 percentage points. Butler et al. (2017) found that a $2 incentive increased the response rate by 13 percentage points in a survey of family forest owners.

In mail surveys, unconditional incentives had the greatest per-dollar effect on response rates compared to other types of incentives, but the impact of each additional prepaid dollar is non-linear and quickly asymptotic (the so-called “dose response”). Beyond the first dollar, each additional dollar has a smaller positive effect on response rates (Mercer et al., 2015). Gneezy & Rey-Biel (2014) tested unconditional incentives from $1 to $30 and found that an unconditional incentive greater than $8 does not result in further gains in response rates. Other NOAA surveys (e.g., NOAA’s Fishing Effort Survey) typically include a $2 incentive (Andrews et al. 2014, Anderson et al. 2021, Carter et al. 2024). Additionally, unconditional incentives may reduce non-response bias by increasing the response propensity of some sample members, although empirical support for this theory is mixed (Groves et al. 2006, Oscarsson & Arkhede 2019). Recent work has also explored making unconditional incentives more visible, which can improve response rates by 1 to 4 percentage points (DeBell et al. 2020, DeBell 2023, Zhang et al. 2023). Increasing the visibility of the unconditional incentive is presumed to increase the likelihood that a respondent will open the initial invitation letter and thus respond to the survey.

The last question in the York River Outdoor Recreation Survey asked whether respondents had any additional comments. Three respondents expressed gratitude for the incentive. One respondent wrote:

“You might like to know that I completed this survey because of the cash incentive. I appreciate that you were willing to risk even a few dollars per response, and provided the incentive without requiring me to activate cards or submit forms.”

Although anecdotal, this comment illustrates how unconditional incentives function to elicit responses, and specifically how cash (rather than gift cards) can help boost completion rates.

Summary

The literature supports a $2 unconditional incentive for the York River Outdoor Recreation Survey. Given the lower-than-expected response rate in the pre-test (below 15%), SERL does not recommend eliminating the prepaid incentive for this survey, despite the associated cost savings. Across survey modes, approximately 64% of respondents reported that they had not visited the York River or surrounding parks in the last 12 months. If the York River Outdoor Recreation Survey is intended to capture barriers to access, the incentive may help motivate people who are not interested in the York River to respond. While offering only $1 could be explored, prepaid incentives of $2 to $5 are now typical because of inflation since 2019. Given the length and complexity of the survey (~36 questions), $2 appears to be an ideal incentive amount. SERL could explore making the cash incentive more visible if NCCOS is interested in this option.

Conclusions

The York River Outdoor Recreation pre-test survey had a slightly lower response rate than comparable parks and recreation surveys: 13.2%, compared to 14.6% for the statewide 2022 Virginia Outdoor Survey by the Virginia Department of Conservation and Recreation and 15.0% for the Chesterfield Parks and Recreation Survey. Parks and outdoor recreation surveys are typically launched in the spring and summer, and interest in a survey about outdoor recreation may have been lower during the winter. Respondents may also be more motivated to respond to letters from a local or county government or a Virginia state agency. Although the initial pre-test invitation would have been received after the Thanksgiving holiday and before the start of Hanukkah and Christmas, the holiday season may have depressed response rates overall. The production survey mailing should be timed to avoid major holidays (e.g., Memorial Day).

More than 50% of the web completions occurred shortly after the invitation letter and incentive were received (Figure 1). The first postcard reminder also elicited a large portion of web responses, although we cannot determine exactly when each household received it. SERL could explore making the incentive more visible (see the literature review), although this could raise additional concerns.

The holiday season and issues with the printing vendor delayed the mailing of the booklet. Additionally, the Richmond water crisis may have lengthened mailing times. The large gap between the initial mailing and the booklet may have contributed to lower paper completion rates. For the production survey, SERL will partner with a new printing vendor that will be able to meet a more streamlined mailing schedule.

No respondents completed the survey in Spanish. This is not unusual: previous Virginia parks and recreation surveys fielded by SERL also received no Spanish completions when a Spanish version was offered. The production survey could highlight the Spanish language option in recruitment materials.

The Qualtrics survey performed well: most web respondents who started the survey completed it, and SERL received no inquiries about problems accessing the survey. The open-ended comments raised no major issues with the survey. Respondents who completed the paper survey by mail were able to follow the skip-logic instructions.

List of Appendices

Appendix A – VCU IRB approval letters (pp 1 – 5)

Appendix B – Qualtrics Instruments (pp 6 – 54)

Appendix C – Sample Design (pp 55 – 85)

Appendix D – Recruitment Materials (pp 86 – 95)

Appendix E – Survey Booklet (pp 96 – 104)

Appendix F – Dynata ABS File Construction (pp 105 – 106)

References

Anderson, L., Jans, M., Lee, A., Doyle, C., Driscoll, H., & Hilger, J. (2021). Effects of survey response mode, purported topic, and incentives on response rates in human dimensions of fisheries and wildlife research. Human Dimensions of Wildlife, 27(3), 201–219. https://doi.org/10.1080/10871209.2021.1907633

Andrews, W. R., Brick, J. M., & Mathiowetz, N. A. (2014). Development and testing of recreational fishing effort surveys. National Oceanic and Atmospheric Administration, National Marine Fisheries Service, Silver Spring.

Butler, B. J., Hewes, J. H., Tyrrell, M. L., & Butler, S. M. (2017). Methods for increasing cooperation rates for surveys of family forest owners. Small-Scale Forestry, 16(2), 169–177. https://doi.org/10.1007/s11842-016-9349-7

Carter, D. W., et al. (2024). A comparison of recreational fishing demand estimates from a mail-push versus email-only sampling strategy: Evidence from a survey of Gulf of Mexico anglers. North American Journal of Fisheries Management.

Church, A. H. (1993). Estimating the effect of incentives on mail survey response rates: A meta-analysis. Public Opinion Quarterly, 57(1), 62–79. https://doi.org/10.1086/269355

Cook, C., Heath, F., & Thompson, R. L. (2000). A meta-analysis of response rates in Web- or internet-based surveys. Educational and Psychological Measurement, 60(6), 821–836. https://doi.org/10.1177/00131640021970934

DeBell, M., Maisel, N., Edwards, B., Amsbary, M., & Meldener, V. (2020). Improving survey response rates with visible money. Journal of Survey Statistics and Methodology, 8, 821–831.

DeBell, M. (2023). The visible cash effect with prepaid incentives: Evidence for data quality, response rates, generalizability, and cost, Journal of Survey Statistics and Methodology, 11(5), 991–1010. https://doi.org/10.1093/jssam/smac032

Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed-mode surveys: The tailored design method. John Wiley & Sons.

Edwards, P. J., Roberts, I., Clarke, M. J., DiGuiseppi, C., Wentz, R., Kwan, I., Cooper, R., Felix, L. M., & Pratap, S. (2009). Methods to increase response to postal and electronic questionnaires. Cochrane Database of Systematic Reviews, 3(3). https://doi.org/10.1002/14651858.MR000008.pub4

Gneezy, U., & Rey-Biel, P. (2014). On the relative efficiency of performance pay and noncontingent incentives. Journal of the European Economic Association, 12(1), 62–72. https://doi.org/10.1111/jeea.12062

Groves, R., Couper, M., Presser, S., Singer, E., Tourangeau, R., Acosta, G., & Nelson, L. (2006). Experiments in producing nonresponse bias. Public Opinion Quarterly, 70, 720–736.

Mercer, A., Caporaso, A., Cantor, D., & Townsend, R. (2015). How much gets you how much? Monetary incentives and response rates in household surveys. Public Opinion Quarterly, 79(1), 105–129. https://doi.org/10.1093/poq/nfu059

Neman, T. S., Dykema, J., Garbarski, D., Jones, C., Schaeffer, N. C., & Farrar-Edwards, D. (2022). Survey monetary incentives: Digital payments as an alternative to direct mail. Survey Practice, 15(1), 1–7. https://doi.org/10.29115/sp-2021-0012

National Marine Fisheries Service Office of Science and Technology. (2023). Marine Recreational Information Program: Survey design and statistical methods for estimation of recreational fisheries catch and effort. Silver Spring: National Oceanic and Atmospheric Administration.

Oscarsson, H., & Arkhede, S. (2019). Effects of conditional incentives on response rate, non-response bias and measurement error in a high response-rate context. International Journal of Public Opinion Research. https://doi.org/10.1093/ijpor/edz015

Porter, S. R., & Whitcomb, M. E. (2011). The impact of lottery incentives on student survey response rates. Research in Higher Education, 44(4), 389–407.

Rao, N. (2020). Cost effectiveness of pre- and post-paid incentives for mail survey response. Survey Practice, 13(1), 1–7. https://doi.org/10.29115/sp-2020-0004

Robbins, M. W., Grimm, G., Stecher, B., & Opfer, V. D. (2018). A comparison of strategies for recruiting teachers into survey panels. SAGE Open, 8(3). https://doi.org/10.1177/2158244018796412

Young, J. M., O’Halloran, A., McAulay, C., Pirotta, M., Forsdike, K., Stacey, I., & Currow, D. (2015). Unconditional and conditional incentives differentially improved general practitioners’ participation in an online survey: Randomized controlled trial. Journal of Clinical Epidemiology, 68(6), 693–697. https://doi.org/10.1016/j.jclinepi.2014.09.013

Zhang, S., West, B. T., Wagner, J., Couper, M. P., Gatward, R., & Axinn, W. G. (2023). Visible cash, a second incentive, and priority mail? An experimental evaluation of mailing strategies for a screening questionnaire in a national push-to-web/mail survey. Journal of Survey Statistics and Methodology, 11(5), 1011–1031.


| File Type | application/vnd.openxmlformats-officedocument.wordprocessingml.document |

| Author | Aleia Fobia |

| File Modified | 0000-00-00 |

| File Created | 2025-06-12 |
