Debriefing Interviews for the 2023 AIES


Annual Integrated Economic Survey


OMB: 0607-1024

Attachment H

Department of Commerce

United States Census Bureau

OMB Information Collection Request

Annual Integrated Economic Survey

OMB Control Number 0607-1024

Debriefing Interviews for the 2023 AIES

September 30, 2024

Cognitive Testing Support Project: Final Report on Debriefing Interviews of the Annual Integrated Economic Survey (AIES)

Final Report

Prepared for

Economy-Wide Statistics Division

Economic Statistics and Methodology Division

The U.S. Census Bureau

Prepared by

Y. Patrick Hsieh, PhD, Katherine Blackburn,

Victoria Dounoucos, PhD, Tim Flanigan, and Chris Ellis

RTI International

3040 E. Cornwallis Road

Research Triangle Park, NC 27709

The Census Bureau has reviewed this data product to ensure appropriate access, use, and disclosure avoidance protection of the confidential source data (Project No. P-7530157, Disclosure Review Board (DRB) approval number: CBDRB-FY25-ESMD001-001).

Contents

Section Page

1. Introduction 1-1

2. Methodology 2-1

2.1 Goals And Research Questions 2-1

2.2.1 Population of Interest 2-1

2.2.2 Participant recruitment 2-1

2.2.3 Debriefing interview protocol and procedure 2-3

3. Findings from the Debriefing Interviews 3-1

3.1 Module 1: Introduction (n=51) 3-1

3.1.1 Process of responding to Census Bureau surveys 3-1

3.1.2 Process of finding the data for Census Bureau surveys 3-4

3.1.3 Process of entering the data for Census Bureau surveys 3-5

3.2 Module 2: Responding to the AIES (n=51) 3-6

3.2.1 Overall positive experience with the AIES 3-6

3.2.3 Company data does not match Census Bureau requests 3-10

3.2.4 Customizing reports and other accommodating activities for responding to Census surveys 3-11

3.2.5 High respondent burden associated with the integrated survey design for the new AIES 3-14

3.2.6 Question comprehension and applicability 3-15

3.2.7 Redundant or duplicative questions 3-18

3.2.8 Technical issues related to reporting for the AIES 3-19

3.3 Module 3: Locations in Puerto Rico (n=13) 3-21

3.4 Module 4: Explicit Response Choice and Error Checking (n=51) 3-22

3.4.1 Decision to use downloadable Excel file or online spreadsheet 3-22

3.4.2 Feedback about using online spreadsheet for data entry 3-27

3.4.3 Feedback about using downloadable Excel file for data entry 3-28

3.4.4 Additional feedback on reporting to the AIES independent from both methods 3-33

3.5 Module 6: Web Standards Exploratory (n=26) 3-37

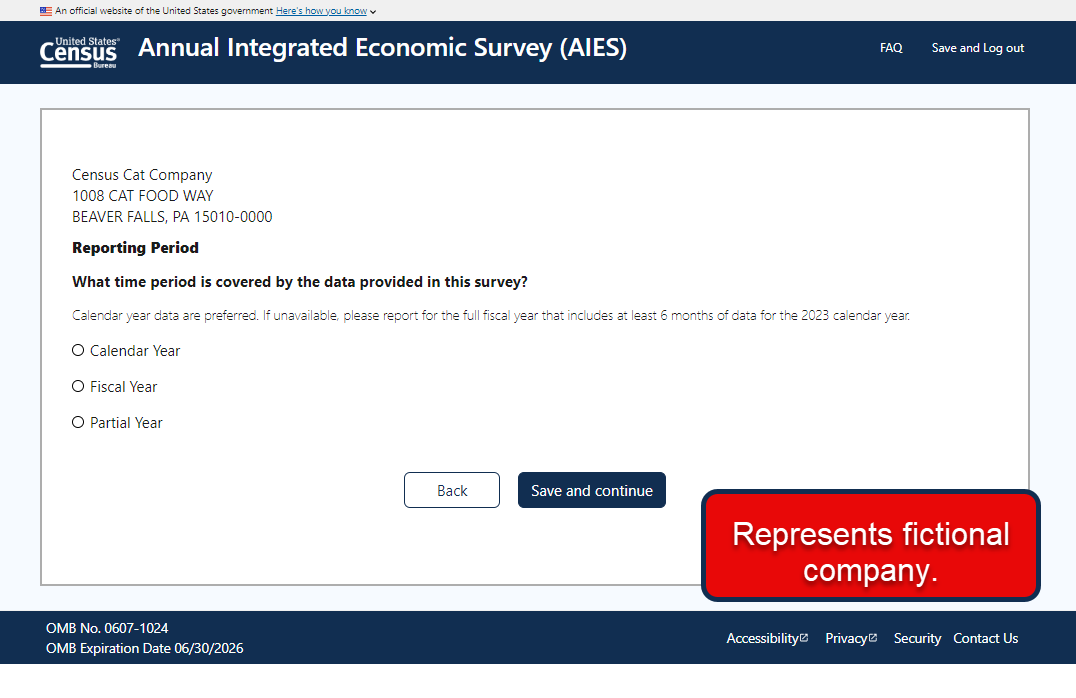

3.5.1 Screenshot 1: Step 2 Reporting Period 3-37

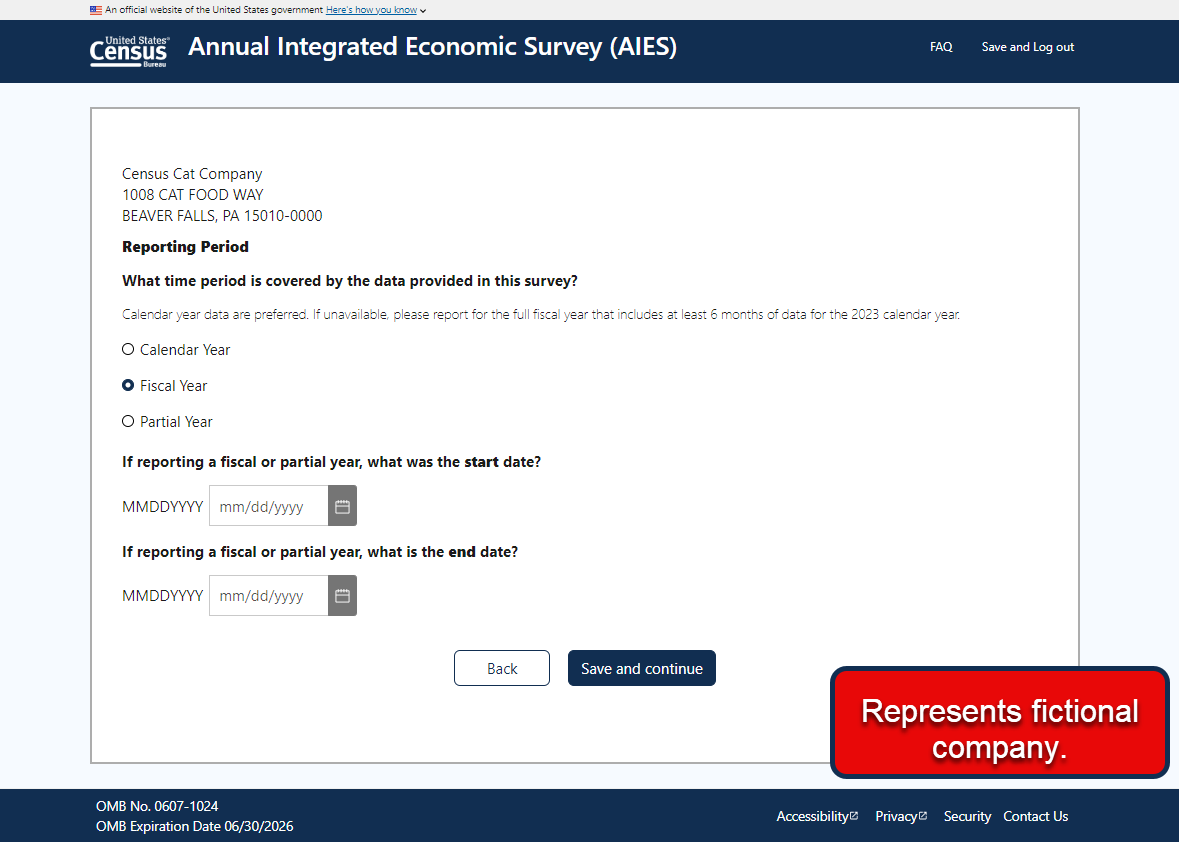

3.5.2 Screenshot 2: Fiscal Year Selected 3-39

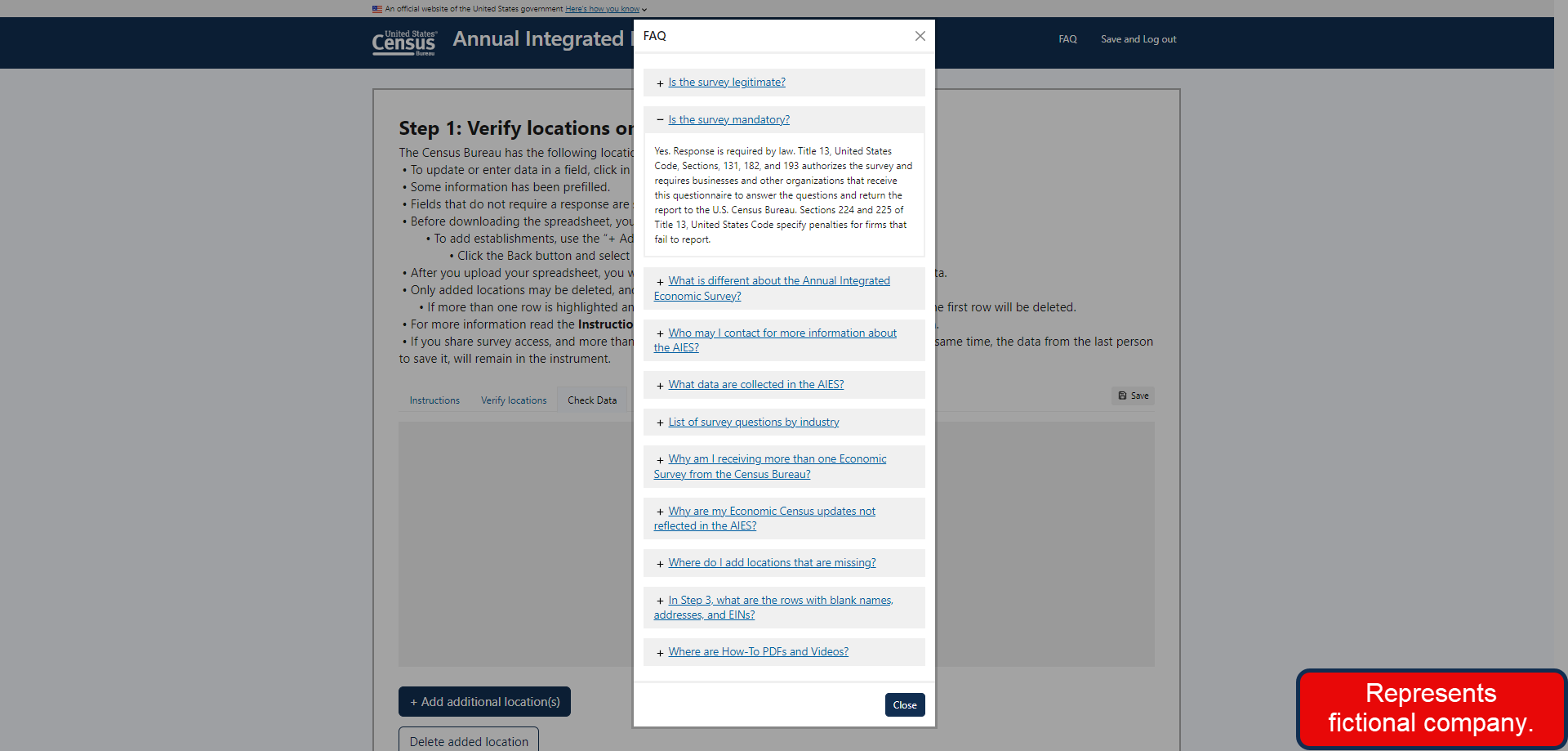

3.5.3 Screenshot 3: FAQ Modal Window 3-39

3.5.4 Screenshot 4: Step 2 Grid Format 3-40

3.6 Module 7: Interactive Content Tool (Protocol Version A, n=11) 3-42

3.6.1 Comprehension, prior knowledge, and navigation of the interactive content tool 3-42

3.6.2 Ease of selecting the industry that best represents the company 3-43

3.6.3 Engagement with the question export functionality 3-44

3.7 Module 8: AIES Website (Protocol Version B, n=13) 3-45

3.7.1 Feedback on the AIES landing page on Census website 3-45

3.7.2 Feedback on the AIES “Information for Respondents” page 3-46

3.7.3 Feedback on the AIES “FAQ” page 3-48

3.7.4 The most helpful page 3-49

3.8 Module 9: AIES Emails (Protocol Version C, n=13) 3-49

3.8.1 Feedback on postcard 3-49

3.8.2 Feedback on AIES advanced email 3-52

3.8.3 Feedback on due date reminder email 3-53

3.9 Module 10: AIES Letters (Protocol Version D, n=13) 3-54

3.9.1 Feedback on AIES –initial letter (L1) 3-54

3.9.2 Feedback on AIES – past due notice letter (L2) 3-55

3.9.3 Feedback on AIES – Office of General Council “Light” letter (L4L) 3-57

3.9.4 Feedback on AIES – experimental “Dear CEO” letter (ECSL1) 3-58

3.10 Module 11: Ideal Field Period (n=51) 3-60

4. Recommendations 4-1

4.1 Recommendations for the AIES instrument 4-1

4.1.1 Recommended improvements for the question arrangement and instructions 4-1

4.1.2 Recommended improvements for the online spreadsheet and the downloadable Excel spreadsheet 4-2

4.2 Recommendations for the AIES materials 4-4

4.3 Recommendations for the fielding time frame 4-6

5. Concluding Remarks 5-1

Appendix A. Debriefing Interview Protocol A-1

Appendix B. Participant Informed Consent Form B-1

Appendix C. Recruitment Materials C-1

Appendix D. Communication Materials D-1

Appendix E. Report of Debriefing Interviews with Firms Primarily or Exclusively Located in Puerto Rico E-1

Appendix F. Participant Informed Consent Form (Spanish) F-1

Appendix G. Recruitment Materials (Spanish) G-1

Exhibits

Exhibit 2.1. Sample Characteristics and Recruitment Outcomes

Exhibit 3.1. Time to Complete the AIES in Hours by Number of Locations

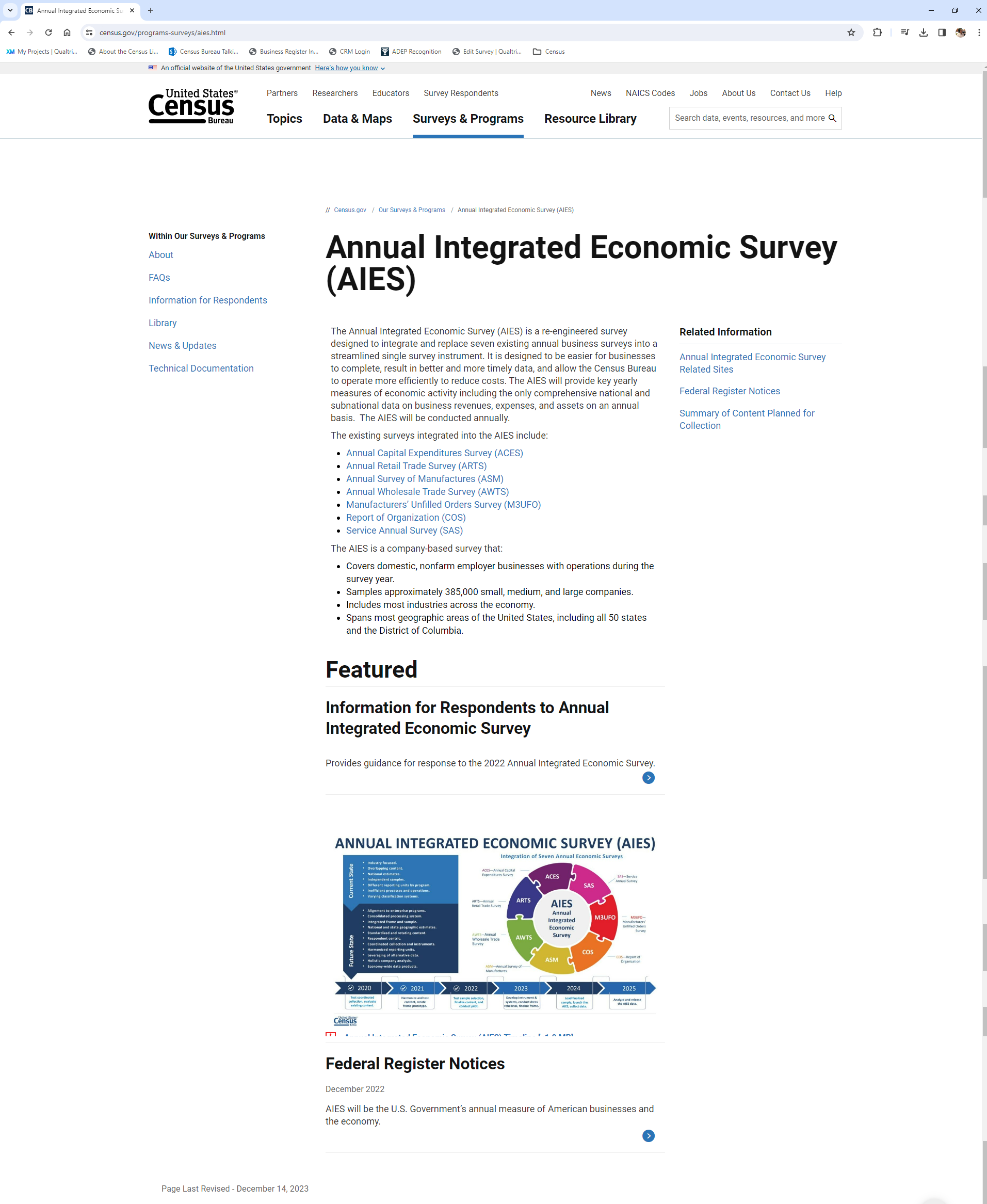

1. Introduction

The Annual Integrated Economic Survey (AIES) is a redesigned survey that integrates seven existing annual business surveys into a single streamlined instrument and replaces them. The goals of the AIES redesign are to simplify the reporting process for businesses, collect better and more timely data, and reduce costs for the U.S. Census Bureau through more efficient data collection. Designed to be conducted annually, the AIES will provide key yearly measures of economic activity, including the only comprehensive national and subnational data on business revenues, expenses, and assets.1

As part of the research effort to improve the AIES, the Census Bureau contracted RTI International and Whirlwind Technologies, LLC, to conduct debriefing interviews with respondents who had completed the AIES web instrument. The interviews assessed the clarity, effectiveness, and usability of the first AIES instrument, launched in March 2024. The interview feedback allowed the project team to address the study’s research questions and to gather comments on the respondent-facing communication materials, which together informed the recommendations in this report.

This report summarizes that effort, its thematic findings, and the resulting recommendations, and it includes the materials used to support the effort.

2. Methodology

2.1 Goals and Research Questions

This evaluation study explored the reporting experiences of AIES respondents. It focused on soliciting feedback about their experience completing the survey, including the level of effort required and their ideal length and timing for participation; their general impressions of the survey instrument, including the screen layout, font, and other features; and the content and accessibility of the communication materials supporting the data collection. The findings from the debriefing interviews enabled the Census Bureau to understand the types of issues that emerged during the AIES, confirm whether participants’ experiences were consistent with the findings from previous research, and assess strategies for further improving the usability of the web instrument and the clarity of the communication materials. The study contributes to the Census Bureau’s ongoing research to improve the AIES.

2.2.1 Population of Interest

The population of interest for the debriefing interviews consisted of businesses that completed the AIES instrument after April 1, 2024. Because of the rapid turnaround needed to meet the debriefing interview schedule, only businesses with both an email address and a phone number in the Census Bureau’s dataset were eligible for this study. Census Bureau staff from the Economy-Wide Statistics Division (EWD) provided the project team with a sample list of businesses that had completed the AIES instrument. The list was updated on a rolling basis throughout the debriefing interview data collection period, and the project team used it to contact potential respondents.

In addition to contact information, EWD staff appended several key business characteristics to the recruitment file: the total number of establishments, the North American Industry Classification System (NAICS) code, whether the business had establishments in Puerto Rico, and whether the business was in the manufacturing sector. However, these characteristics were not available for all businesses. They were therefore used to track the diversity of completed interviews rather than for targeted recruitment. The total number of establishments was used to classify businesses into three size categories: single unit, small (fewer than 10 establishments), and large (10 or more establishments). Because NAICS codes were missing for some businesses, business sector information was confirmed during the debriefing interview.

2.2.2 Participant recruitment

The recruitment goal was to conduct approximately 50 debriefing interviews. Consistent with the earlier debriefing interviews for the AIES Dress Rehearsal, 50 interviews with a diverse group of businesses were deemed adequate to provide sufficient feedback on the AIES experience and the supporting communication materials.

The Census Bureau and the project team jointly designed a schedule for two rounds of debriefing interviews. A brief pause after the first half of the interviews allowed a preliminary analysis of the Round 1 insights and any needed revisions to the protocol. On April 11, 2024, Census Bureau staff shared the first sample list with the project team. As the project team contacted businesses on the list and solicited their participation, we also monitored the breakdown of completed interviews by sector and business size.

The project team completed 26 interviews for Round 1 from April 11 to May 24, 2024, and another 25 interviews for Round 2 from June 25 to July 26, 2024. Across both rounds, the project team contacted 657 sampled businesses and completed 51 interviews. Six sample members did not attend their scheduled interview, and another 31 refused to participate. Among the 51 completed interviews, 12 participants represented a single-unit company, six represented a small company with fewer than 10 establishments, and 33 represented a large company with 10 or more establishments. Fourteen participants represented a manufacturing company, and 11 represented a company with establishments located in Puerto Rico. Additionally, some participants in the concurrent e-Commerce Exploratory Interviews were asked about their operations in Puerto Rico.2 Their comments on the AIES were included in the analysis of the debriefing interviews. Exhibit 2.1 shows the distribution of the sample and of completed interviews by business size. The three columns sum to 532 rather than 657 because business size data were missing for some sampled businesses.

Exhibit 2.1. Sample Characteristics and Recruitment Outcomes

| Interview status (n) | Single unit | Small (<10 units) | Large (≥10 units) |
|---|---|---|---|
| Total sample | 163 | 130 | 239 |
| Completed | 12 | 6 | 33 |
| No show/withdrew/refused | 19 | 6 | 18 |

Data collection began with the following contact protocol for all sample members: one email, followed by one call, then a second email. Because of low engagement and the rapid data collection timeline, this protocol was adjusted to focus primarily on emails, which reached more potential participants more quickly. All 657 sample members received at least one email, and 292 were also contacted by phone to solicit participation. After the calls, 206 sample members received either a second email invitation or a second phone call as the final recruitment attempt (see Appendix C for the recruitment materials).

Participant recruitment was challenging for several reasons. Sample members who refused to participate primarily cited the time commitment. Many businesses were engaged in end-of-fiscal-quarter activities and tax filing season, so the typical points of contact for Census Bureau surveys were overwhelmed with other work. Future data collection efforts with AIES respondents should be sensitive to the timing of year-end and quarter-end activities, tax filing deadlines, and other regulatory compliance activities.

2.2.3 Debriefing interview protocol and procedure

As a survey pretesting method, debriefing interviews are typically used at a later stage of the instrument design and evaluation process.3 Their analytic goals may be similar to those of cognitive interviews. However, debriefing interviews take a more holistic approach, asking participants to respond to debriefing questions or probes about their experience with a live survey. This method is therefore suited to collecting feedback on an instrument fielded in a field test or in a production survey such as the AIES.

Census Bureau staff from EWD provided the debriefing interview protocol to the project team for review. In turn, the project team recommended revisions to clarify the debriefing questions and the flow of the protocol, subject to the Census Bureau’s final approval.

During data collection, RTI survey methodologists conducted all debriefing interviews virtually using Microsoft Teams. Participants could call into the meeting by phone or join from their computer. Approximately half of the interviews were conducted with audio only and the rest by video, depending on whether the interviewer had a Census Bureau–issued laptop or access to the Census Bureau’s virtual desktop. The debriefing interviews followed a semi-structured interview protocol (Appendix A). The protocol included questions about:

- participants’ experience completing the AIES;
- how that experience compared with previous Census Bureau surveys;
- how participants retrieved information;
- participants’ perception of question difficulty;
- the level of burden associated with data retrieval and reporting.

The protocol also asked about participants’ experience with, and feedback on, the interactive data entry features of the instrument, including error checking, the downloadable Excel spreadsheet (for reupload), and the online spreadsheets. Lastly, the protocol asked about participants’ impressions of, and engagement with, the AIES communication materials they received (see Appendix D for details). These materials included an interactive content tool for survey preview, a postcard, the emails, mailed letters, and help resources on the website. Interviews lasted approximately 45 to 60 minutes, and the study did not offer monetary incentives for participation.

Before the interview, participants were asked to review the consent document, which was sent via email. At the start of each interview, the interviewer reviewed the main points of consent and confirmed that the participant agreed to be interviewed. Participants were also asked to consent to the recording of the interview; if they consented, the interviewer began recording. The interviewer then reviewed the introduction to the survey with the participant, which explained that the goal of the interview was to gather feedback about their experiences with the AIES.

Each interview began with a few background questions about the company’s core business, the participant’s experience answering Census Bureau surveys, and how that experience compared with completing the AIES. Participants were then asked about their interaction with the AIES instrument, including estimated burden and challenges with specific questions or topics. Some participants struggled to recall the specific items that had caused them difficulty. Others were able to consult, during the interview, a copy of the survey they had saved for their records, which aided their recall. Participants were also asked specifically about their data entry experience, with a focus on the interactive elements of the web instrument: the error checking functionality, the online spreadsheets, and the downloadable Excel spreadsheet.

The second half of the debriefing interview focused on participants’ engagement with the communication materials. These included an interactive content tool for survey preview and the survey information provided to respondents on the AIES website. They also included a postcard, two emails, and four mailed letters, which were sent to participants in advance of the interview. The following sections summarize the findings and the resulting recommendations.

3. Findings from the Debriefing Interviews

This section follows the flow of the interview protocol. Each subsection reports the main themes that emerged from the corresponding module of the debriefing interviews.

3.1 Module 1: Introduction (n=51)

3.1.1 Process of responding to Census Bureau surveys

Participants’ length of time in their role as the point of contact for Census Bureau surveys was closely associated with their ease of response. Participants who have been reporting for more than 1 year find completing the new AIES easier than those who reported for the first time this year. New AIES respondents can be overwhelmed by several factors: the number of questions, the format of data entry, the granularity of the questions, and the number of people they need to contact. Three participants who had been reporting for 2 to 6 years reinforced this pattern of increasing ease with repeated reporting. They said they expected next year’s survey to be easier to complete.

“Because this was my first time it wasn’t that easy. And also, because we have several locations, they all needed to be reported separately…If I wasn’t such a novice, it would probably be easier. The next time it is going to be easier probably.”

“I will probably use the forms and reports I already created. It should be easy to move those forward and use the same methodology to populate next year’s forms as long as they don’t change a bunch of things, like columns or questions.”

3.1.2 Process of finding the data for Census Bureau surveys

Most participants find the data they need to answer Census Bureau surveys by reviewing internal reports and by requesting support from colleagues. Thirty-six participants reported working with one or more colleagues to pull the data they need. At the high end, one participant mentioned working with 18 different individuals within their large company to gather all the data and responses needed for the AIES.

“Partially due to the size of our company, there is a lot of coordination with individuals from other business units. I have payroll information, but in terms of locations and financial data for those locations, I have to work with about 8 other individuals…[in] real estate, accounting, finance.”

“Overall, this was a very wide-ranging survey. It definitely covered a lot of areas of our business and what comes to mind immediately with this question is we worked with many, many teams. It wasn’t all items we could pull ourselves. We worked with probably five to seven teams around our business to pull all of the different data just because it was really varied material.”

Those who collaborate with other colleagues typically work with individuals in the Human Resources (HR) department for payroll and employee data, information technology (IT) departments for technical questions, and Research and Development (R&D) units for specific R&D data. Although many of the points of contact at businesses are accountants or housed in finance departments, some are in administrative or HR roles. Participants in these roles must contact more people in their organization because they do not have easy access to the data they need. They serve as the liaison for the company, reviewing the questions and figuring out which colleague can provide the correct data. These participants also typically have limited specialized knowledge about finance and sometimes need assistance understanding and interpreting the questions.

Some participants must also work with outside resources when accounting is not done in house. Two participants described needing to consult their accounting firm to obtain data on depreciable assets. These participants noted that working with their accounting firm can be expensive and is not a step they have had to take for past Census Bureau surveys. A few participants serve as external reporters for their companies, with a primary role of handling surveys and government reporting. These participants typically work at very large businesses and must draw on many data sources and colleagues to gather the data needed for the AIES.

“Having to reach out to our accounting firm to get a refresher on reading the depreciation schedules on our financial statements. This cost us money to have to consult with them.”

“I’m not going to make anyone else do it or pay someone a lot of money per hour to try and figure it out.”

For participants who work extensively with colleagues, one pain point of the new AIES is the lack of an easy way to preview all the questions so that they can send all data requests at once. Some participants found the question preview tool, but most did not find it helpful. It seemed to list more general questions than those in their survey, or questions that did not apply to their industry. Only one participant found the ability to download the questions in advance helpful and felt that the downloaded questions matched those on the survey.

“[When I used the question preview tool,] I got every question it could possibly ask and some of those were not in my actual survey because mine is manufacturing of a certain type. It was dumb and a waste of time. It was tight, it was hard to read. It was before part 1 and you needed part 1 to know what to answer next, this was generic.”

“It was helpful to see the [question] preview but it doesn’t tell you everything you need to know. I’m trying to figure out what information I need to have and whether I will need to get information from other places. The whole process was really convoluted to have to go through all of the steps. I’d rather have one giant spreadsheet which is more or less the way it used to be.”

However, many participants seemed unaware of any way to preview the survey questions. When answering Census Bureau surveys, participants were accustomed to previewing all the questions, typically through a PDF. Viewing all the questions at once facilitates data collection when the point of contact needs to work with others to gather the required information. A PDF preview allows the point of contact to send all questions and information requests to colleagues at once, helping keep the organizational burden manageable. A preview also helps AIES respondents better prepare for the reporting task. Many participants were surprised and frustrated when they reached Step 3 and saw the amount of information being requested.

“One of the things that was frustrating that I tried to—and maybe it was an error on my part—but I tried to print all the questions beforehand so that I would know exactly who I needed to ask what, that way I could ask it all at one time. But I was unable to do that. So, there were some instances where I had to say ‘oh, here’s another question’… If I had a full view of what the survey looked like then I could kind of go through the questions and say okay let me parcel this portion out that’s specific to payroll so let me ask them all these specific questions…I think it would help kind of having access to view all of the questions up-front as opposed to as you’re going through the survey because that will help plan and reach out to others. I can be working on a portion of it while my counterpart is also working on a portion of it, as opposed to ‘oh, I can’t move forward because I don’t have all of this information to proceed’.”

“Maybe if it started with an outline of what it would ask me about, but when you are just clicking from question to question, there wasn’t any rhyme or reason for where they were going or what was going to be next…”

Relatedly, participants expressed frustration that they could not move forward in the survey without providing a response. Being able to move freely through the survey would also ease some of the pain points of not having a complete preview of the questions. With the current format, participants had to contact their colleagues and wait for a response before they could proceed to the next question in the online platform. To facilitate their requests, participants did the following:

- copied and pasted questions;
- took screenshots;
- sent copies of the Excel worksheet with fields highlighted.

Because they could not make all requests at once, participants experienced considerable back-and-forth and delays while collecting the data they needed.

“As far as inputting the data, the biggest piece of feedback – not being able to advance to the next question until you answered the question was really difficult. And very difficult in the coordination of others as well. Some people were ready, and some people weren’t. It would have been great if they could have popped in and input their data as it was ready, but we weren’t able to do that.”

“It wasn’t easy to navigate because it wasn’t clear what I needed. After getting some of the data, I had to have multiple calls with the people collecting the data [colleagues] and share my screen so they could pull the data at that moment to give it to me.”

Some participants entered placeholder values (e.g., 0 or 1) to view all the questions in each section. However, this approach could lead to data entry errors if they forgot to update a response.

“For Step 2, I wanted to know everything it was going to ask me, specifically the PP&E section. It let me bypass it at first. I just put 0s and 1s until I saw everything that I needed but I was concerned that I wouldn’t be able to go back in there but then I was able to.”

“Typically there is a link that will show you the different questions they are going to ask so you can print that out and gather the data ahead of time before you go into the system…I ended up going through the survey and putting zero on a lot of them, taking notes on what I needed to complete, logged out, gathered the information, went back to the beginning and re-entered everything from start to finish, then I hit submit.”

Among participants who have direct access to the data they report, most use multiple reports or data sources to gather everything they need for Census Bureau surveys. Ten participants reported using three or more reports to gather the requested information.

“I pull the reports from payroll, clinical software billing report, and prior taxes.”

“I used our payroll information and our accounting software and statements, month-end statements, year-end statements.”

“I have to gather it from several different places depending on what the question is. It might come from payroll reports, some things come from financials, some from our depreciation schedule, a conglomeration of different areas.”

When asked how easy or difficult it is to find the data they need for Census Bureau surveys, 21 participants said it is easy, whereas 14 said it is difficult. The remaining participants placed the process somewhere in between: some aspects of finding the data were difficult but still manageable. Many of these participants reported that finding the data is not particularly difficult but is time-consuming.

“Easy would not be a word that I use… It can be a bit daunting at times. Some of the questions just don’t seem like they fit together. So, I may have to send out different requests to try to get the answer to one question…”

“The amount of time [makes these surveys a nuisance]. You have to take time away from measurable work product to complete something that really beyond you and me and the Census Bureau, no one knows we’re doing.”

“It isn’t difficult but is time consuming. It takes time [for me] to find the data on the accounting software and also to get input from payroll and HR departments for questions about employees.”

“It’s not easy. Often times, I feel like what I get from the Census Bureau is designed for a single entity business. We have hundreds. Some qualify to go on the Census, some don’t. At times, I feel like I am hammering a square peg into a round hole.”

Furthermore, ease of locating data varies with business size, and small and large businesses face different challenges. About half of participants from small businesses (those with fewer than 10 establishments) reported that finding the necessary data is easy, although they still encountered some difficulties. Small businesses encounter three main issues: mismatches between the data they have access to and the data the Census Bureau requests, a lack of reporting at their business, and insufficient granularity in their reports. About 40% of participants at larger businesses (those with 10 or more establishments) said that reporting the data is easy, and one-third said it is difficult. Many larger businesses face issues with the granularity of questions, disparate reports, difficulty reporting for individual locations, and the need to collaborate with more colleagues. However, larger businesses sometimes have the advantage of additional resources dedicated to reporting and of more thorough, detailed reports.

A comparison of manufacturers and non-manufacturers shows patterns similar to those for small versus large businesses. About 40% of manufacturers reported that finding the data was easy, whereas another 40% reported that it was difficult; about half of non-manufacturers reported that finding the data was easy. Manufacturers are required to complete more AIES questions than non-manufacturers, which may influence their perceptions of the survey’s difficulty. One participant noticed this difference in the number of questions, sharing “Depending on the NAICS code certain questions were asked. Manufacturing locations had a lot more questions than non-manufacturing locations.”

3.1.3 Process of entering the data for Census Bureau surveys

Of the participants who answered the question about the difficulty of entering data into Census Bureau surveys, the majority said it is easy. Those who found it difficult reported a range of issues, including having to round their data as requested by the Census Bureau, technical issues with data entry, and uncertainty about the type(s) of data being requested. Larger businesses find entering the data slightly more difficult than small businesses do, but there are no apparent differences in difficulty between manufacturers and non-manufacturers. These points are discussed further in the context of the AIES in the next section.

3.2 Module 2: Responding to the AIES (n=51)

Module 2 of the Round 1 interview protocol (see Appendix A) asked participants to compare their experiences with previous Census Bureau surveys to the new AIES before gathering more feedback about the AIES. After reviewing the data from the 26 Round 1 interviews, the project team and the Census Bureau concluded that the comparison probes were not producing additional insights about responding to the AIES. In fact, some of the comparison questions confused participants because they had completed the Economic Census in 2023, which is often confused with the AIES. For these reasons, we dropped the comparison probes from Round 2 of data collection. The other probes in Module 2 were retained for both rounds, and the findings below cover all 51 interviews.

Most participants (n=34) expressed that they had an overall positive experience with the AIES and appreciated that the Census Bureau was trying to improve the process for mandatory reporting. However, even those who saw positives in the new approach experienced technical or usability issues, which are outlined in this section. A few participants complained that gathering the requested data and entering it into the survey was very time-consuming, although some noted that they will likely be more efficient with the process next year. In general, most participants approached the AIES in the same way that they had approached previous Census Bureau surveys, both in how they gathered the needed data and in how they submitted it.4

Participants were asked to share their overall impressions of the AIES and how the survey compared with their previous experiences. Seven participants indicated that they thought the AIES was easier than prior surveys they had completed for the Census Bureau.5 These participants thought that the format of the data entry and the organization of the questions helped speed up the process of entering the data, giving them the impression that this year’s AIES was shorter and simpler than past surveys. Two participants who report for many different companies particularly appreciated the new combined survey approach, which made data reporting easier and more straightforward for each company they report for.

“It was just the ease of getting through the survey. Last year’s survey I’d go through and populate stuff individually for the 8 different sites we have. This year, the way it was laid out, it was all there on one screen, just broken apart. It made it so much easier.”

“I thought the entry was easy this year. I didn’t have too much of a problem with that.”

“I loved [the new AIES]. I anticipated it taking a week and a half to do and it was mostly done in half a day. It was just a god send.”

“To be honest I don’t know exactly what it was but it felt a lot shorter, and less time consuming, I don’t know if it was less questions or the way it was presented, not sure what happened there... I have been doing these for a while and it just felt easier. Maybe it was just my familiarity with the data they were asking for this time around.”

“Overall impression when we learned that it was coming, happy when we learned that surveys were being consolidated. That had been a challenge. Different units were receiving different surveys, and we didn’t always know what other folks were doing or what involvement that they needed. It did bring some new challenges as far as having to coordinate and making sure everyone was providing their data in a timely manner. And, even knowing who to go to for the data.”

“I think it was much simpler just off the top of my head. I don’t think I spent as much time as I had in prior years.”

“It was a little bit more user-friendly and easier to complete because it was a combined thing instead of having to do multiple, separate censuses. I did appreciate that.”

“I think the fact that you had everything in that one [survey] as opposed to me having to do different surveys. Like I said I do surveys for 9 different companies, and before all those surveys were done other places so it felt like so many surveys. Now I noticed everything was in one place, there is just one area I need to focus on, and when I am done, I am done.”

“It was nice to have, there were a number of surveys that were combined into this one big survey, and that in itself was nice, so I didn’t have to worry about doing 10 individual surveys throughout the year. I didn’t have to worry about doing all of them, just this one. So that was the plus side.”

Many participants reported that the new AIES included more questions and asked for more detailed information than they had been required to report previously. As one participant stated, “I don’t recall ever having to go into that much detail into our company expenses [in past Census Bureau surveys].” Sixteen participants expressed difficulties with, or concerns about, the level of granularity the AIES asked for, which made reporting much more time-consuming. Participants tended to experience the most issues with the location-level questions, which many considered the most difficult and time-consuming section of the survey. In particular, participants had difficulty reporting employee counts, depreciable assets, inventories, discrete expense line items, and revenue by location. Larger businesses had more difficulty reporting data on their locations because they have more data to report and less insight into the detailed operations of each location. Around 60% of the manufacturing companies interviewed reported issues with the granularity of the data requests, likely due to the additional questions required for each manufacturing location.

“It did take a little bit longer to go through all the locations because we do have 15 locations plus the other company and it’s 8 locations. That took longer because some of the other surveys are more like summary of the locations.”

“For this form, I tried to approach it different…in the prior [survey] we didn’t answer for each individual location, it used to be companywide. What is your Cap Ex for the company? Now I am looking for the Cap Ex for each location. So, it’s like a more granular level of detail. So, I had to redo the process of it to get that level of detail.”

“Oh, yeah, [the location data is] tricky too. That data's not readily available, you’ve got to go hunt it down. And then you have to make sense of it. I have to make sure I’m giving you something that makes sense and I have to make sense of it in my mind. We’ve got 30 locations and this location made XYZ income. Does that jive with the financial statements we’ve been putting out based on the report I’m about to plug into this? It’s not like I’m just flopping numbers down on a screen, it’s got to make sense.”

“If I remember correctly on this report I think somewhere in there you had to break down your management from your front-line workers, to your clerical workers, and sales people. You know, that kind of stuff. It’s just time-consuming.”

“Very comprehensive… Having everything on a location level definitely requires a lot more detail and a lot more time...It asks a lot and when you’re dealing with 600 locations and different types of businesses outside of just our warehouse business such as some of our manufacturing businesses that we have, it just felt very comprehensive in the sense of the entirety of locations and then the amount of information required for each of those.”

“If you had three or four sites, it’s probably great. If you have over 2,000 line items to complete like I do, it’s absolutely miserable. If you’re a small company and maybe have a small number of sites, if you open a location, it’s a really big deal and you know about it. If you are like we are, with locations all over the globe that we are constantly getting in and out of…it’s a lot. We’re always moving around. The way I would see all of these movements is through the payroll report.”

Compared with location-level questions, company-level questions were much easier for most participants to answer because the data were more accessible and there were fewer questions. Whereas location-level questions were the hardest for participants, company-level questions were almost universally the easiest. Most participants had no confusion about which parts of the company to include when responding to company-level questions in the AIES. However, one participant was unsure how to report employees by location because a single location may have employees from several different companies. This participant obtained guidance from the helpline to report on all employees and was then able to complete the company questions.

“The survey was sent to [Company A]. But we have 8 other companies under different FDINs. We have employees under [Company A] in these locations, but we also have employees from these other companies. They’re not in all locations. There were questions on who we should be reporting on. We received guidance to report on all employees, which opened up a much broader set of data.”

Participants were also probed about the difficulty of industry-level questions, and, as with company-level questions, most found industry-level information easy to report. However, several participants had difficulty with industry-level questions because of how their company and its data are organized. One participant reports for a parent company that includes many different entities, each spanning multiple industries. Because this company’s data are organized by location rather than by industry, the participant had to manually assign NAICS codes to the data in order to report by industry. Another participant described a similar issue: their business operates in multiple industries at one location, which made questions about the breakdown of work challenging to answer. A third participant described problems retrieving industry-level data, primarily because the colleagues they worked with missed that some of the data needed to be provided at the location level and some at the industry level. For this participant, the fact that different questions asked for different groupings of the data was itself a source of difficulty.

“The [questions] reporting by NAICS [were the hardest] because we can run all of our reports by profit center [or location] but then I had to go in and assign all the profit centers to a NAICS code and then do another formula to pick up all those to populate…Each brand has numerous profit centers. So, like, if they have their wholesale division, or commerce division, or retail store division, restaurant division, so it’s all broken down into different profit centers. And then within those divisions there are more profit centers. So, it is more difficult pulling the data especially when it’s by those NAICS codes.”

“We do have multiple locations and at one, we have retail and manufacturing in one location. That’s where it got difficult for me to differentiate costs to either retail or manufacturing in the same building.”

In addition to location-level data, participants also struggled with reporting sales, company expenses, depreciable assets, payroll, and inventory. Some of these topics were difficult because gathering the requested data involved collaborating with colleagues: sending the data request, waiting for the data to be returned, answering clarification questions, and finally reporting the data in the survey.

“Where I’ve got to reach out to the IT or Facilities guys. Those are going to take more time…any time I’ve got to collaborate it takes more time.”

“Number of people and their individual payroll and the revenue. There was back-and-forth to figure out which employees are in which state.”

“Like this past year, you asked a lot of cyber security questions, you really made me dig for who could answer those.”

The topics of sales, company expenses, depreciable assets, payroll, and inventory were also difficult to report because companies’ internal data structures do not match the way the AIES requests the data. Seventeen participants reported that the way their data are organized does not match the way they need to report it for the AIES. Generally, the AIES asks for data at a more granular level than businesses have in their internal reports. Without a business need to track data at that level of detail, respondents struggle to report data at the level the Census Bureau requests.

Location-level data were the most commonly mentioned data that participants did not have access to at a granular level. For example, one participant stated, “When it came to revenues, the survey asked for revenue by location. It’s not something we track. We don’t care about it.” This participant explained that their business has no need to track revenue by location, so having to provide these data on the AIES was particularly burdensome. Another participant reported that their business organizes its finances by program rather than by location, making it more difficult to report location data. Other topics that participants had difficulty reporting at a more granular level or in a more detailed breakdown included software and hardware, operating expenses, depreciable assets, electric costs, utilities, internet sales, and capital expenditures. Larger businesses (those with 10 or more establishments) described more issues with their company data not matching the AIES requests than smaller businesses did.

“Due to our own shortcomings on our internal statements and needing to add up different line items and come up with the correct total for the question on the survey was time consuming.”

“Our system doesn’t use the same terms that are used in the survey. I have to play with numbers to get them to spit out the answers.”

“Where it was asking about depreciable assets and capital expenditures, ending balance, what we had accumulated in any capital lease agreements. That section was nasty and difficult to work through. Then it gets a little bit more detailed after that asking about software vs hardware. That required more estimation because we do not split that out, we just combine our assets into one.”

“In general, it depends on how specific the data being requested is. For AIES, it is being categorized differently than we would categorize it for business use. So, it takes some backing out and exporting and reanalyzing to get to the buckets that the AIES wants to see the revenue and expense data and payroll…One of the things I ran into was in the cost information, a lot of the operational cost information. I have all the data I need existing in our systems, the difficult part becomes fitting it into the buckets requested.”

“The problem doesn’t lie with us getting the report from payroll, the problem lies with the correlation between the way our payroll department reports the data and the way Census Bureau wants it sent to them. It becomes a real issue when we try to merge the data together. It is very difficult.”

“The harder questions are when they are asking about different sales and expense categories. They don’t always line up with the way we prepare our financial statements. I know they can’t be tailored to us but basically I have to think about how they translate from us to the Census Bureau.”

Most participants want to report data as accurately as they can for Census Bureau surveys and use several strategies to manage data mismatches. Ten participants stated that they created custom reports to obtain the data requested on the AIES. Although creating these reports took a significant amount of time this year, these participants hope that the effort will make the process more efficient in future years. They are concerned, however, that any changes to the questions could make their newly created reports unusable. Additionally, some participants wrote extensive documentation on how they interpreted the questions and pulled the data, to assist with the process next year. Consistent with the greater mismatch between their records and the AIES requests, larger businesses were also more likely than smaller businesses to need custom reports to respond to the AIES.

“They are not standardized reports currently. We use [a specific accounting software] as our business software system, so it is a little bit dated. Most of it is creating custom reports to pull the information I want because we don’t have preset reports that are going to give me the information I need for these forms.”

“I’m a bean counter, so my approach is the same for all of these things. I read over it first. I say, ‘Okay, how can I get all the data together.’ I build some kind of model that brings in the data if I don’t have it readily available and then I go put it into your application…If you ask the same questions next year, it should be pretty easy.”

“One thing that we put in some effort to do as we went around the first time is make sure that the resources we were building and the step-by-step instructions we were building, the internal preparation perspective, are repeatable. So, what we did is for anything we were using our accounting software to pull, we outlined specific pages where you refresh the date, you refresh the specific locations and we can pull the data in a way that mirrors the prior year. So, we put some effort into making our research templates repeatable and then also building lists of the contacts we worked with within the company.”

A few participants admitted entering zeroes for data that they did not have readily available so that they could complete the survey, without any intention of filling in the correct data. One participant stated, “I think I put zeroes in for some locations because I couldn’t even think about how to split that.” However, many participants spent time on manual calculations or custom reports to provide information as accurately as possible. Fourteen participants reported that they had to manually calculate responses to some of the questions within the AIES. About 53% of the manufacturing companies interviewed reported having to do custom calculations to report for the AIES. We suspect that one reason for these manual calculations may be that businesses were asked to report information at a more granular level (e.g., by location) than the level at which they usually track it (e.g., by company). Similarly, as noted in Section 3.2.3, the organization or breakdown of the records businesses keep may differ notably from the information the AIES requests (e.g., the breakdown of expenses, payroll, or utility usage). As a result, participants must perform additional data management on their records to produce the appropriate response to the AIES.

“Sometimes there are requests asking for information [that are] not in line with how we track or present our information. I have to massage the data to get [it] into the survey.”

“Utilities specifically, it asked for electric costs. We don’t record electric costs, we record utility costs, we get joint billings with gas and electric. So to break that out is a manual process. You have to look at every invoice and split the electric cost.”

“For example, like one of the questions asked me how many kilowatts of electricity were used in manufacturing. In our building, we have a café, offices, and manufacturing and electric and gas is on the same bill. I have to look through my paper copies of old bills to figure out how much of the bill was electricity and do some kind of math to figure out what percentage was for manufacturing. It took forever just to answer that one question. If I just didn’t care, I’d make something up.”

“And then all the expenses are grouped by different things so I had to go and code all of our expenses into those groups. You’re doing a lot of coding and remapping before you can get it into that data.”

“Our reporting team prepares raw balances and income statements by site and company. We massage the data. We are not fancy enough to have a reporting system that I can mess with easily. So, I take theirs in Excel and mess with it.”

Another issue for participants is year-over-year changes to the survey questions. Such changes make reporting more difficult, especially for participants who try to keep records from each survey, because it becomes more challenging to gather and report the data. One participant felt that it is useless to try to prepare for next year’s survey given the number of changes to the survey questions from year to year.

“There were a few things in past surveys that I had reported as separate line items that were now combined. There was a bit of confusion as to what I did last time and now being asked to do it differently. I was trying to figure out which items were which.”

“It can be difficult. I usually try to keep notes from the prior year but sometimes the questions change, or which question you answer change, so that makes it more difficult to gather the data.”

“I am not sure how I could prepare, it seems to change as well so I am not sure next year’s report would be the same as this one, so I am not sure what to prepare for. I could separate and isolate data per location, but it wouldn’t be relevant, and it is time consuming so it would be a waste of time.”

“The only thing I could do [to prepare for next year], because we report on our fiscal year, is tell my team, these are the questions we got asked last year, go out and do that for the fiscal year in the fall this year. Then, it would be already done [before we get the next AIES], hopefully. But, if the questions change, I’ve wasted everyone’s time and the world would be furious with me.”

Another noteworthy issue for participants was how time-consuming it was to provide and submit the requested data for the AIES. Although 13 participants spontaneously said that having a single survey for data collection was a positive, 20 said that completing the survey was time-consuming. This feedback may reflect the amount of information the AIES is designed to collect, and the respondent burden may arise from data retrieval, data entry, or both. For example, several participants stated that they did not have any issues finding, entering, or submitting the data, but the process required a significant amount of their time. When asked which part of the process required the most time, some participants reported spending more time retrieving the data than entering it into the survey, whereas others reported that data entry took more time than data retrieval. As expected, larger businesses (those with 10 or more establishments) complained about the time needed to complete the AIES more than smaller businesses did.

“I did appreciate that there was only one survey that I had to submit but it took way longer than I expected to get all of that data in order to enter it into the report.”

“At first, I thought this was in addition to all the other surveys. So, I thought, oh boy, another survey! So then, I think you mentioned it and the person who called me to schedule this [interview], mentioned that this is INSTEAD of some of the surveys. That changed my opinion on it. Maybe this was a little more efficient. I’m not sure how many surveys this replaced that we would normally do. But I think then there was some efficiency gained…just pulling up the reporting portal, diving into all your financial info, submitting report, doing that two or three times, just takes that much more time than just doing it the one time.”

“I do appreciate that it looks like they are trying to consolidate some of these reports, so it is just one giant one instead of all of them…In our case […] it was a waste of energy, and it was disruptive. I can imagine a larger company with thousands of employees who probably have staff designated to specifically handle stuff like this, I don’t have someone like that. I am involved in revenue production and patient care, so to have to pull myself away for half a day was disruptive to our workflow and our patients.”

“It takes time. It’s somewhat easy but does take time to generate different reports depending on what is needed from the Census questions. It’s not horribly hard but it is time-consuming.”

“I didn’t think it was difficult to answer any of the questions, I just thought it was time consuming.”

“I think in some ways more efficient but in some ways I kept thinking wow is this ever going to end?”

“This is the closest that I’ve ever come to writing my representatives ever. It is a total waste of time. It is the closest I’ve come to quitting my job. Because it’s miserable doing it. I hate it. I think it’s the dumbest thing ever. To me, it’s the definition of governmental bloat.”

When directly asked whether they would prefer to answer one survey or multiple surveys throughout the year, 22 of 28 participants said they would prefer to complete one longer survey rather than multiple shorter surveys. These participants explained that one survey is easier to keep track of, makes logging into the portal more efficient, improves efficiency when working with colleagues, and allows for easier yearly reporting. The three participants who preferred multiple shorter surveys throughout the year felt that shorter surveys are easier to fit in around their other work commitments and do not require them to devote a large block of time to completing a survey. The remaining three participants saw pros and cons to both approaches and did not prefer one over the other.

“I’m definitely down for just one survey and submitting once in a year because that’s just fewer things we have to worry about as an organization.”

“It’s nice to just knock it all out in one. It is less confusing because sometimes you think you finished one survey and then you get another request for another one and you think I already did that one but it’s not the same. So, it was nice to just have one in that regard, but it was bigger.”

“It was nice being one and done. Going in once and getting it done and not having to change your password, get in again, 3 months later change the password again and to get in again.”

“I would rather do it all at once especially if this can be consistent year over year. That would be great.”

“[I prefer] shorter surveys throughout the year because I have so many companies I have to report on. Dedicating a whole week to answering questions definitely took away from running the business.”

“I think if this could be a quarterly survey that would make it a bit more palatable because it is a lot of questions.”

Participants also reported issues with the definition or applicability of specific survey questions in the context of their business. For example, some questions were challenging to understand because of confusing or unfamiliar terminology. Twelve participants mentioned issues with question comprehension, as illustrated by the examples below. Two participants found the length and wordiness of the questions especially challenging. Two participants also pointed out that ambiguously worded questions could be interpreted differently across businesses, potentially producing unreliable data.

“…the question might be unclear and what do I think they are really looking for and trying to tie that back to our information. I will go back and read their instructions, see if that helps me any further, if that doesn’t, I might go back and look at the previous years, or get one of my accounting team members and see what their interpretation is.”

“Also, they had a huge section of questions like on ‘do you do last in, first out’ methods of accounting and I have never heard of that. Maybe this is some really obvious thing to a real accountant but I’m guessing that any small business owner who is not a real accountant but who knows how to enter expenses and income and pay their taxes is not going to ever have heard of this or know what these questions mean. There’s no option to say, ‘I don’t know what you’re talking about. I’m not going to answer this question.’ I even googled it. The Google answer didn’t even help me. I felt like I had to submit it because it’s the law. I honestly can’t even remember what I did. I think I just put zeroes in all of them because I had no idea. I had not encountered that before. I had never had no idea what a question was asking about until this survey.”

“Data are not hard to find. The interpretation of questions can be hard though. Sometimes contacts at Census Bureau aren’t sure exactly what it’s asking about specifically. This is often because the question is too general.”

“Sometimes I think there’s a little bit of vagueness in the interpretation especially if you’re asking six different people the same question and they interpret it their own way.”

“The [question] descriptions are very repetitive and wordy. It just takes time to read it all. You have to read it otherwise you might miss important information that you need to answer the question appropriately, but they’re hard to understand.”

Participants also reach out to the helpline when they struggle to understand the questions. Four participants who were explicitly asked about help resources in Round 2 of data collection reported contacting the customer helpline to seek guidance on ambiguous or confusing questions. One participant did not realize there was a helpline and requested access to a point person to help them better understand how to respond to questions that were confusing and unclear.

Furthermore, at least 12 participants encountered questions that they believed were not applicable to their business. Some of these questions were time-consuming to work through, and participants were uncertain how to answer them. Topics that were not applicable included physical location questions for remote-only workers, depreciable assets, stocks, international locations, research and development activities, robotics, manufacturing questions for a business not involved in manufacturing, and foreign affairs. One participant said he believed that some of the questions about operating leases were no longer relevant to any business because of rule changes by the U.S. Securities and Exchange Commission. Encountering these questions frustrated him because it appeared that the Census Bureau was not up to date on the latest rule changes. Some participants said they would prefer a filter question that would let them skip all of the questions that were not applicable, or the option to select a “don’t know” response for such questions. A few participants noted that the grayed-out, non-applicable questions in both the online and Excel versions of the spreadsheet were difficult to navigate and made the spreadsheets more overwhelming.

“What I seemed to notice was that [there was much that wasn’t] applicable to our organization. It was a long survey where we answered a lot of questions with ‘No’ because they didn’t apply to us.”

“The data that I had was relatively easy to find, but most of the data requested was not relevant to our type of business. That was probably the most laborious portion to go through and read. For example, there was one section where they wanted to know how many robots we owned for production, how many we rented, how many were used, how many were new, but you had to read through the report and that was extremely laborious. I can tell you most of the data that we entered was zero…It was quite a laborious project just to read each line-item request, and very frustrating realizing I just put zeros for almost everything… The spreadsheet section that had column after column, I am not sure which one had the robot section, but I can remember some things were so off the wall, one had to do with like how many latex nipples do you produce, I guess that is pertinent to somebody but not us. It was that section where they wanted to know how much of this did you produce, how much of this did you use. I think I remember a lot of stuff about what was produced domestically or did you rely on foreign. We don’t produce anything foreign so anything that had to do with overseas production was irrelevant to us. So going through all of those categories it was just zero after zero after zero because it was geared toward a company that does manufacturing.”

“It was irritating though to have to go through non-applicable portions of the survey to make sure that they did not apply to the company. For example, the company is not in manufacturing, only in retail of clothes, but we had to go through a lot of manufacturing questions to make sure that we didn’t need to answer them.”

“There were probably some parts that I feel aren’t applicable and you just kind of have to get through them. Whether it’s putting a 0 in a number area or clicking no or NA or whatever it is. And that can be kind of cumbersome because you just want to get through it, but I don’t know what parts exactly without looking. Sometimes I feel like if you would answer certain answers to one question, it should take you and skip all that other stuff.”

In addition to being asked questions that were not applicable or relevant to their business, six participants noted that some questions seemed duplicative or redundant. They explained that it was as if they were being asked to report the same information multiple times in slightly different ways, such as for different time periods (e.g., quarterly and annually) or by different groupings (e.g., by location and by industry). They were frustrated at having to report the same information broken out into different totals and did not understand the purpose of doing so.

“Some of the stuff is duplicative. You’re asking for quarterly information and you’re asking for annual information. Why precisely? I mean maybe some of that stuff is seasonal. I don’t know. Some of it seems duplicative.”

“It wasn’t bad, I remember going back a lot to the previous year’s March data and then through the end of the year. It felt like I was regurgitating the same information at times for two different time frames. Not sure what the end game was, maybe that’s data that they use. It was a little duplicative there. We had 14 locations for me to enter the same questions over and over again. They wanted the March information and then the year end information, just to see if business is running smoothly through the year or if there is big swings one way or the other.”

“Some of the questions were duplicated from company and industry level, is that right? It seemed to be. Some of those were the same and a little bit more elaborate of the two. At first I found that confusing, why am I doing this again and again, but then it made sense afterward.”

“It was a little messy. This line and this line is asking the exact same thing twice, then I realized you are separating from the manufacturing from the admin at the same site. So I answered the question even though in Part 1 I [had reported the totals].”

“I found in the most recent round of the AIES it requested a lot of duplicate information. So, I would answer the questions …and then it directed me to an Excel spreadsheet that wanted the exact same thing. I felt like I was answering the same thing twice. Or questions that were very similar to each other.”

The comments about duplicative questions also reflect another problem participants shared: a lack of understanding about the purpose of the AIES, especially the level of detail the survey requests. This lack of understanding can make the reporting process more frustrating for respondents. Participants did not understand why the Census Bureau needs to collect such granular information or what the Census Bureau does with it. Participants from larger businesses (those with 10 or more establishments) expressed these sentiments about the purpose of the AIES more often, likely because larger businesses typically have more questions to answer.

“I hated it. I wondered why they needed all of that information, honestly. I can understand the labor aspect of it but I didn’t understand why they needed to know how much you spent on rent versus utilities versus how much you spent on laptops for employees this year.”

“To be honest, it is a real nuisance sometimes. Partly why I agreed to this [interview], it’s part of our duty to provide this information. I’m sure it’s helpful in ways I don’t even understand. So, we do it none the less. But at times it can be like I just wish I could skip this question. ... I’m sure there’s reasons for it. it’s our duty to provide that information and we do so to the best of our ability.”

“To be honest with you, [completing the AIES is] just a pain. I’m not getting paid for this time. It just adds 5 hours to my work week which is already pretty full. I think I’m doing it for the government’s benefit, which is fine, if you are doing things to make our lives better. I’m just doing it because I feel like I have a responsibility to do it because I was asked.”

“What is the penalty for not completing the survey? Also, how many surveys can they really make you do each year?”

“I would say [the AIES is] …I don’t know how to describe it. It’s just brutal. The level of granularity is insane. I don’t know what value this could have for anybody. It just feels like we’re throwing numbers at something. I don’t even know if I feel good about those numbers. One finance person may be interpreting those questions in one way and grabbing those numbers and then this person pulled a different number. Then, there’s not even a comparison.”

“So, the bigger question from me is do you really need it and is somebody following up and is it really being used when we put so much time into this stuff… I’m more curious, do you really need all of that information? What are the top ten things that you guys need that you are going to use? And are people filling them out credibly or just going through quick because it’s so hard!”

“My overall impression is that it’s there. I don’t know why I felt obligated to do it. I don’t know if other people do it. I’ve never seen actually the results of doing this—and that could be my fault for not looking at them—but to me it’s not extremely valuable. I just do it because I feel like I’m supposed to.”

Early in AIES data collection, there was a known issue with saving data in the online survey. Although the Census Bureau identified the issue quickly and worked to address it, three participants described problems they had experienced with saving their data in the online survey.

“This year, I had filled out like half of it and while it was being reviewed, that information had been deleted. It was frustrating to have to do the re-upload. We had a new entity this year and that was also erased so I had to re-add the new entity. That was a pain. After I had redone it, I got an email that there was a server error during the time I had logged in and that everything had been lost. I had to do it three times. This was very frustrating. The email I got from the Census said do not go back in until we tell you it’s ok to do so but I never got the email. So eventually, I went in and it did get submitted right away so I didn’t risk it not going in.”

Some participants also reported other technical issues that interfered with their reporting process. For example, several participants reported inaccurate information already populated in their surveys, such as locations that had since closed and incorrect NAICS codes and primary business activities. These inaccurate data posed the biggest challenge for participants working in the Excel spreadsheet, where they could not update or correct such information. Further detail about how participants navigated these locked cells in the Excel sheet is provided in the next section.

“There is a location that closed in 2013, it’s still there. It’s not a terrible nuisance but it is frustrating because it’s not like you can just delete the row from the input form, you had to specify why you sold it, who you sold it to, the same information from 7 years ago. Adding new locations works fine, but removing old ones is difficult.”

Participants reported several other issues, including the desire for a printable record of their submitted responses, duplicative reporting across government agencies, and usability suggestions for the website. Two participants commented on the lack of an easy way to save their final submitted responses. Although participants can save the Excel spreadsheet with the responses they provided in spreadsheet format, it does not include the responses provided only in the survey portal. Many participants prefer to keep a copy of their responses for their records in case additional questions arise and to help them prepare for the next year’s survey.

“Another thing: with other surveys once I completed it I’m able to pdf it like I retain a copy. For the AIES one I don’t recall there being an option to do that.”

“I’m trying to remember… I was looking for it this morning, but I assume I did it online because I couldn’t find a copy of it. I wanted to look at it again before we talked, but I couldn’t find it.”

One participant also raised the issue of duplicative data reporting across government surveys and said they would prefer that the Census Bureau use the data submitted to other agencies. This participant shared, “there could be some cooperation with IRS and Census Bureau with depreciable assets. That information is all submitted with our tax return each year. It seems like why can’t the two of them talk instead of having to reproduce that for the Census Bureau.” Another participant echoed this sentiment, sharing, “We’re a public company. We publish our financials to the world every quarter. Everybody knows what we’re doing. We’re a public company, the IRS is here constantly. We’re an open book. This is just somebody asking for a separate book that they want to look at differently.”

Finally, one participant mentioned experiencing issues with navigating through all the questions in the online portal. This participant used the Excel spreadsheet for Step 3 but wanted to have some way to navigate all items in the online portal easily, such as with a question pick list. He wanted to be able to confirm he had answered everything and easily move through the survey to check his responses.

“I think the only suggestion I had was having a dropdown menu of all the parts of the survey that needed to be completed so that if I had to go back to a question to refer, I think I had to keep clicking ‘Back’ to go back to an individual question. I may have missed something in the portal, but I didn’t see any way to look at a whole list and go ‘Oh, this is the question I wanted to go back to.’”

Thirteen participants across the two research efforts were asked about their record-keeping practices for business locations in Puerto Rico. All participants whose business has at least one location in Puerto Rico explained that the data for such locations were easily obtained and reported.

“It was very easy. We treat PR as a separate company, when we set them up on the books, they are set up separately.”

“[Getting the data for Puerto Rico is] pretty simple, [the Puerto Rico location] is integrated into the same system as any of our US [locations]. There is no currency exchange that needs to be done. For us, it is streamlined who I need to talk to. The [fact that it is in] Puerto Rico doesn’t add any extra layer of difficulty than any other [location].”

“[The records are from the] exact same source. Everything is generated off of location number and the database contains all locations, including those in Puerto Rico.”

“It should still be just the same as the others because it’s all in our system. So, it wouldn’t be any different really because all the data we have is in the same places.”

All participants indicated that they use the same steps to pull data for their locations in Puerto Rico as they do for their locations in the United States. Furthermore, the data about their locations in Puerto Rico are just as accessible as the data about their locations in the United States. One participant noted that gathering detailed capital expenditures data required an email to be sent to their location in Puerto Rico, but that the total level of effort in collecting this information was comparable to that of collecting this information about their domestic locations.

“They are treated and addressed the same, the only thing I’ve seen is one year they asked for more detailed Cap Ex data for them. So I have to reach out because that is not in our system. But anything operational I have. I would contact the controller in PR, it’s not an issue, send an email.”

“It is the same process as some of the others, I have to go down to the finance person, payroll person, and the operations person and ask them for the data, because we don’t have that information in our normal system, it has to all be pulled.”

Participants did not identify any issues with reporting data separately for their locations in Puerto Rico. Even the participant who explained that they needed to reach out to their locations in Puerto Rico for capital expenditures data did not have any issues with reporting and felt that the burden of obtaining and reporting this information was low.

Participants whose businesses have locations in Puerto Rico also reported that they have not encountered any language or cultural barriers in gathering these data for the AIES. In some cases, participants do not need to collaborate with any local staff to obtain the data, relying instead on reports they access themselves or on colleagues based in the United States. Even when they do need to collaborate with local staff, these participants noted that all of the staff they interact with speak English.

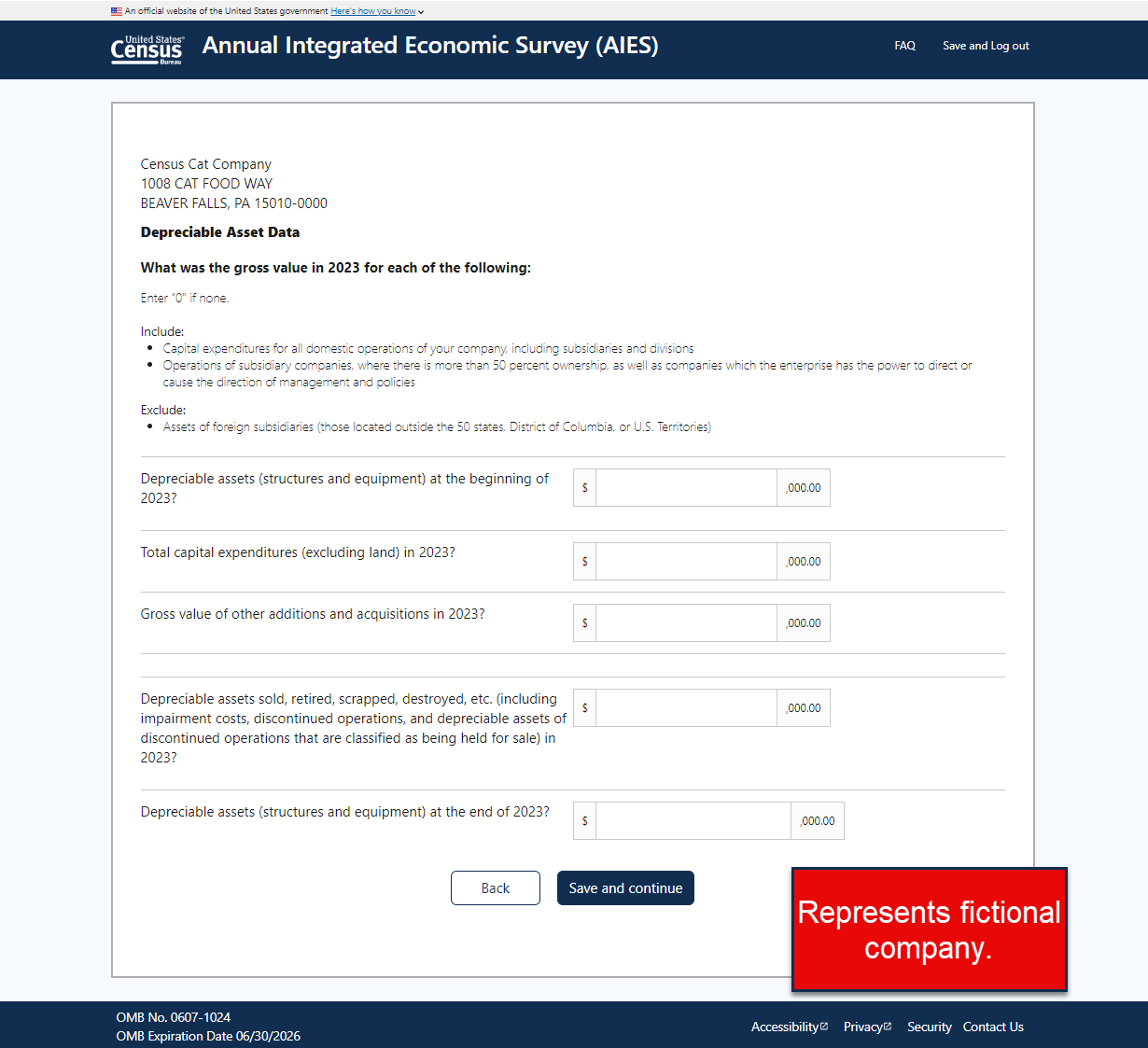

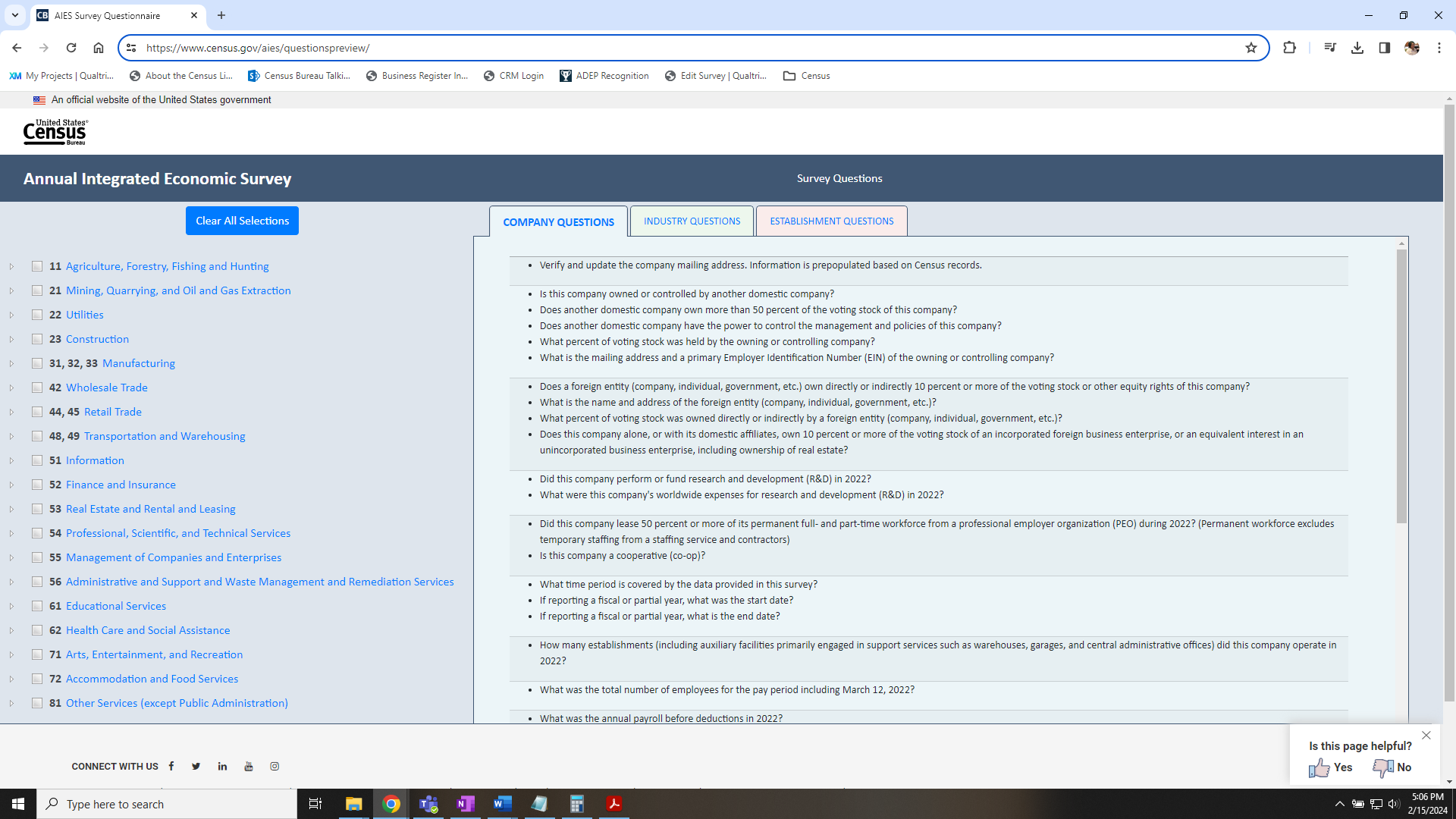

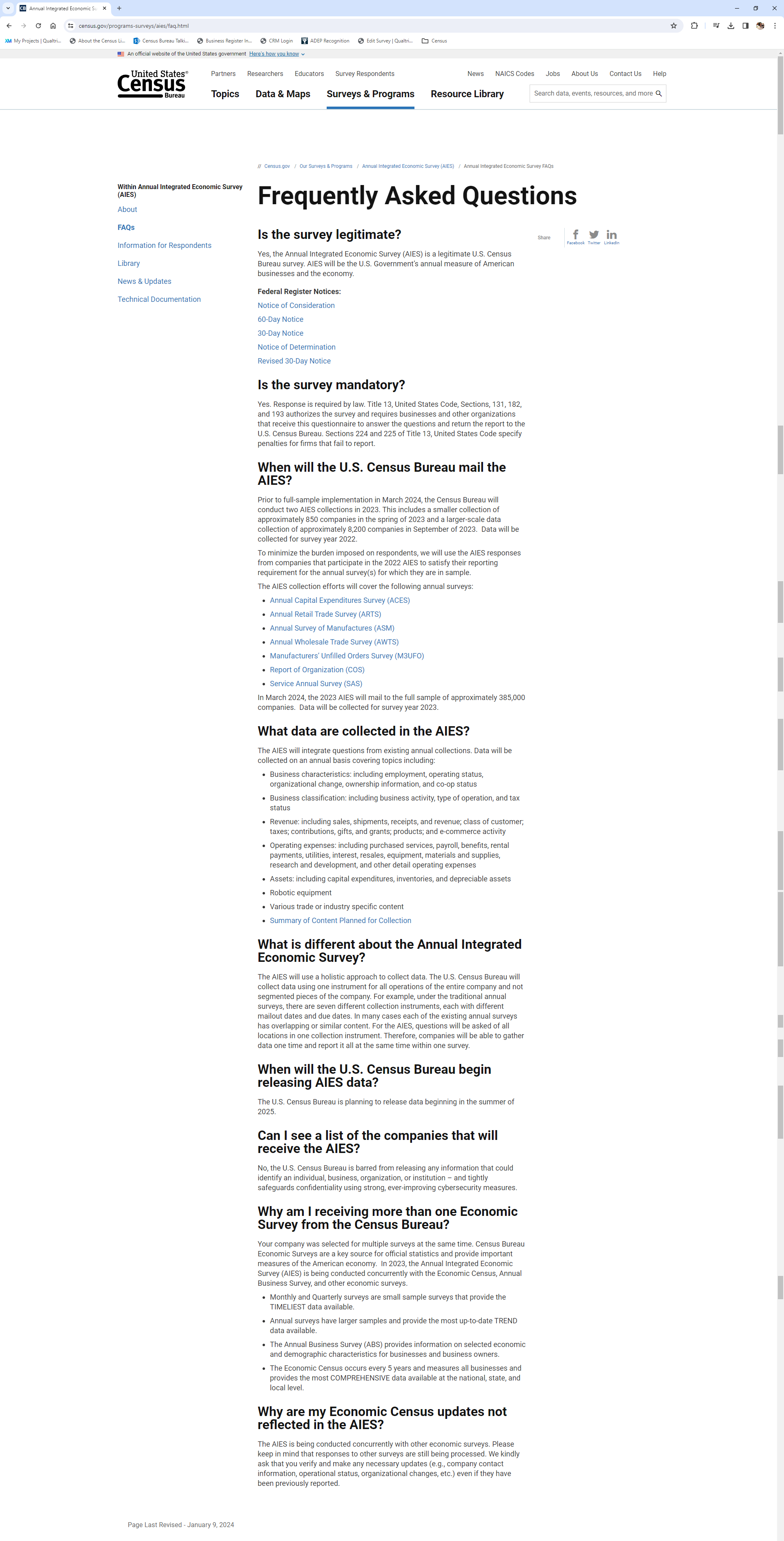

In addition, the Census Bureau conducted 11 more debriefing interviews with businesses located primarily or exclusively in Puerto Rico, which uncovered similar experiences, with few language or cultural barriers to completing the AIES. See Appendix E for further details of these interviews.