AIES Content Selection Tool Usability Testing

Annual Integrated Economic Survey

OMB: 0607-1024

Attachment J

Department of Commerce

United States Census Bureau

OMB Information Collection Request

Findings and Recommendations from Usability Testing for the Annual Integrated Economic Survey (AIES) Content Selection Tool

Prepared for:

Lisa Donaldson, EWD

Blynda Metcalf, ADEP

Prepared by:

Krysten Mesner, EWD

Melissa A. Cidade, EWD

U.S. Census Bureau

July 9, 2024

The Census Bureau has reviewed the report for unauthorized disclosure of confidential information and has approved the disclosure avoidance practices applied. (Approval ID: CBDRB-FY24-ESMD001-010).

Contents

AIES Pilot Phase III/Dress Rehearsal

Findings and Recommendations

Specific Findings: Interactive Content Tool

Next Steps and Future Research

Participant Debriefing Interviews

Future Research Considerations

Appendix B: Participant Informed Consent Form

Appendix E: Interviewing Protocol for the Content Selection Tool

Executive Summary

The Annual Integrated Economic Survey (AIES) launched in March 2024, integrating seven existing annual business surveys into one survey that replaces them. The AIES will provide the only comprehensive national and subnational data on business revenues, expenses, and assets on an annual basis. The AIES is designed to combine Census Bureau collections to increase data quality, reduce respondent burden, and allow the Census Bureau to operate more efficiently.

Establishment survey respondents often rely on a survey preview to support response. However, there is no designated survey form for the AIES as questions are specific to a company’s industry (or industries). As such, the Census Bureau developed an interactive content selection tool for previewing questions specific to a company’s assigned North American Industry Classification System (NAICS) code(s) to support response to the AIES.

In January and February of 2024, researchers conducted 23 semi-structured usability and cognitive interviews with respondents to evaluate the content selection tool for the AIES. This report details the findings and recommendations from that testing. The major findings and recommendations are:

General Findings:

Finding 1: Participants are unclear on the purpose of the survey and are unsure how to access the AIES.

Recommendation 1: Test messaging on communicating the purpose of the survey and how to access results in future AIES communications research projects.

Finding 2: Gathering data to respond to the survey can be a multi-step process.

Recommendation 2: Develop additional tools to support response delegation and assist respondents in data gathering efforts.

Finding 3: NAICS codes can be a source of confusion for respondents.

Recommendation 3: Engage additional investigation into the NAICS taxonomy from the respondents’ perspectives.

Specific Findings:

Finding 4: Participants like the tool and want early notification.

Recommendation 4: Include the tool in early communications, and make it more prominent in the materials ecosystem.

Finding 5: Participants struggle with their NAICS selection within the tool.

Recommendation 5: Improve the NAICS selection interface within the tool.

Finding 6: Participants struggle with the tool interface.

Recommendation 6: Update the default view and develop download or save capabilities.

Finding 7: Participants want the tool to relate to the survey more clearly.

Recommendation 7: Update the website label and include the question details in the preview.

Background

The Annual Integrated Economic Survey (AIES) launched into full production in March 2024. To prepare for this launch, the Census Bureau conducted a series of research activities. Part of this research effort was the AIES Pilot Program, a three-phase research plan that resulted in iterative, respondent-centered instrument design.

At each phase of the AIES Pilot, researchers provided respondent support materials that reflected the instrument at that time. As the instrument iteratively developed, so too did the response support materials. In this section of the report, we trace the respondent support materials through the three phases of the Pilot, including an overview of the content selection tool at the end of the last phase.

AIES Pilot Phase I

The AIES Pilot Phase I represents the first tests of independent response of the first iteration of the survey instrument. In this section, we provide an overview of the survey, including the response support materials made available based on this version of the instrument.

Overview

Pilot Phase I launched in February 2022 to 78 companies; of those, 63 provided at least some response to at least one question on the survey. Firms were recruited to participate in the study, and participation was optional. Response to the AIES Pilot Phase I was “in lieu of” response to the legacy annual surveys for which the firm was in-sample for survey year 2021: that is, once a company responded to the AIES Pilot Phase I, they had met their mandatory reporting obligation to the legacy surveys for which they were in sample that year.

Pilot Phase I Response Support Materials

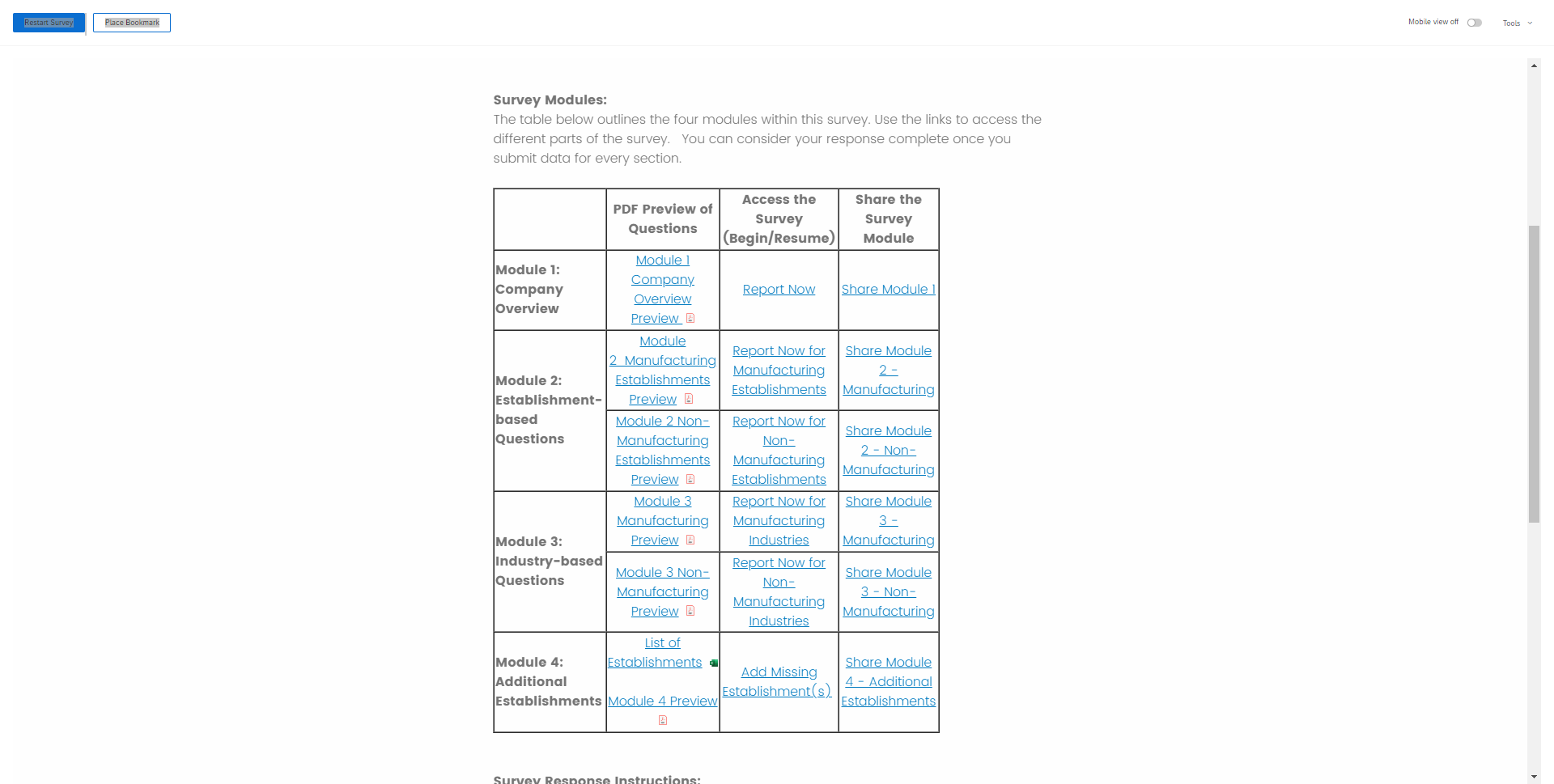

The Phase I instrument was organized into four modules, two of which had submodules that were assigned to companies based on what they do or make. Once a respondent logged into the survey, they were redirected to a rudimentary survey dashboard, shown in Figure 1. Note that the submodules only displayed if they were in-scope for the responding company.

Figure 1: AIES Pilot Phase I Instrument Dashboard

Represents fictional company

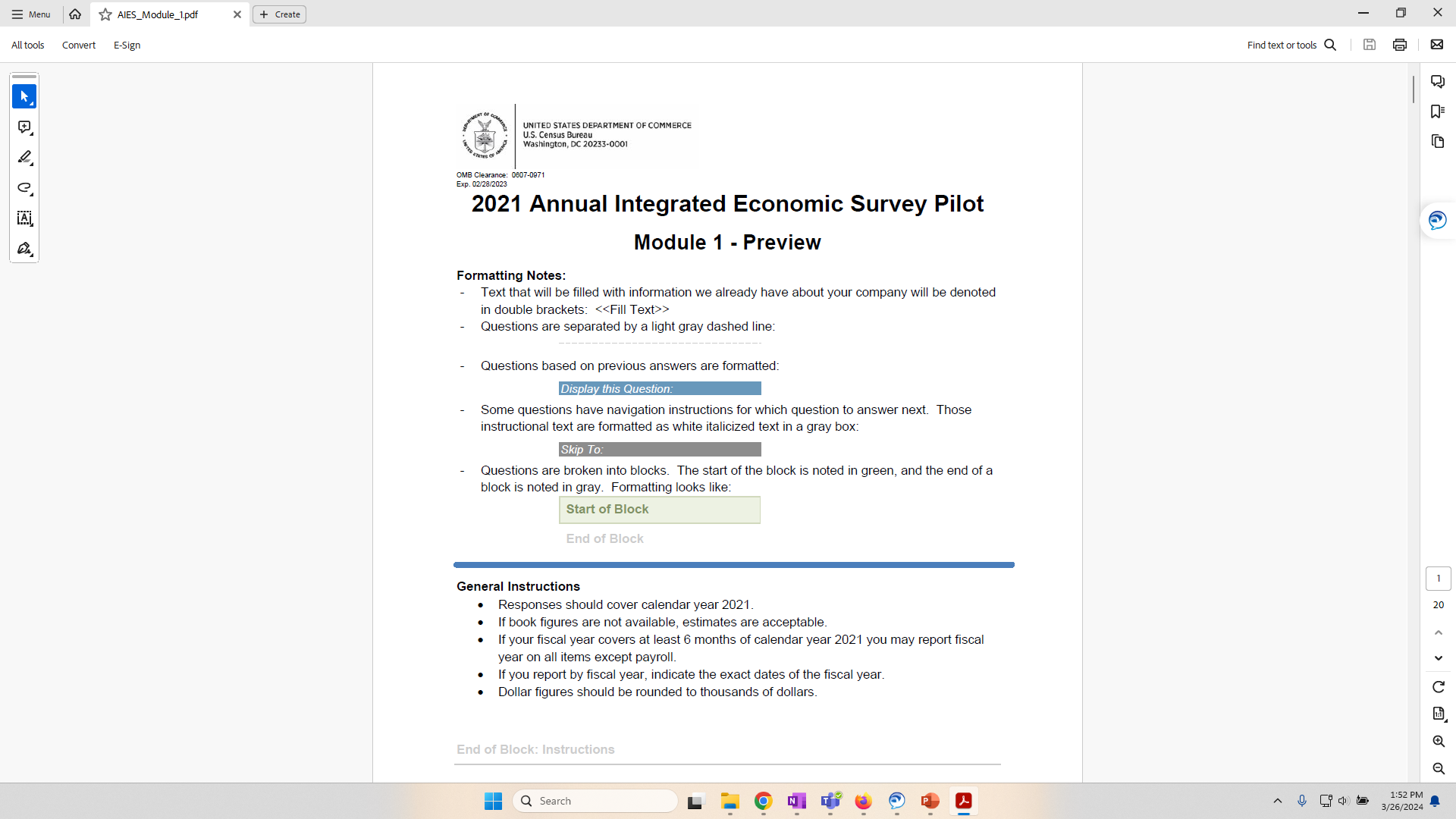

From this dashboard, respondents could access PDF previews of all the possible questions within a given module or submodule. Clicking on the link opened the PDF, which started with an overview of the formatting to help respondents understand which questions they may – or may not – expect to see for a given module. See Figure 2 for a screenshot of the formatting guide.

Figure 2: AIES Pilot Phase I Question Preview PDF

These PDF previews were not customized in any way. Throughout the document, we did note that some questions were in-scope for all companies, and some were not. We also noted which types of companies might see the question (mostly by sector, e.g., those in manufacturing, those in retail, etc.).

While the use and evaluation of response support materials was not a research focus for Phase I, in general, the survey preview PDFs in this round were very long, cumbersome, and not user-friendly. It would be challenging for a company to determine which questions they would see on their survey with certainty.

AIES Pilot Phase II

Building on the findings and recommendations from Phase I, the AIES Pilot Phase II instrument included new features, like an integrated unit model and optionality of response at the unit level. It increased in size and scope relative to the first Phase. In this section, we provide an overview of this next iteration of the survey, including the response support materials made available based on this version of the instrument.

AIES Pilot Phase II Overview

Pilot Phase II launched in March 2023 to 890 companies, of which 572 uploaded a response spreadsheet. For Phase II, firms were randomly selected to participate in the Pilot in place of responding to the legacy annual surveys for which they were in-sample for that year. A company could refuse participation in the Pilot Phase II, in which case they would be redirected to the legacy surveys for which they were in sample for that year. Responding to the Phase II survey satisfied a company’s mandatory reporting obligation for survey year 2022. All companies providing at least some response to the Phase I pilot instrument were included in the Phase II collection. Note that a major impact on the Phase II Pilot was its concurrence with the 2022 Economic Census.

Pilot Phase II Response Support Materials

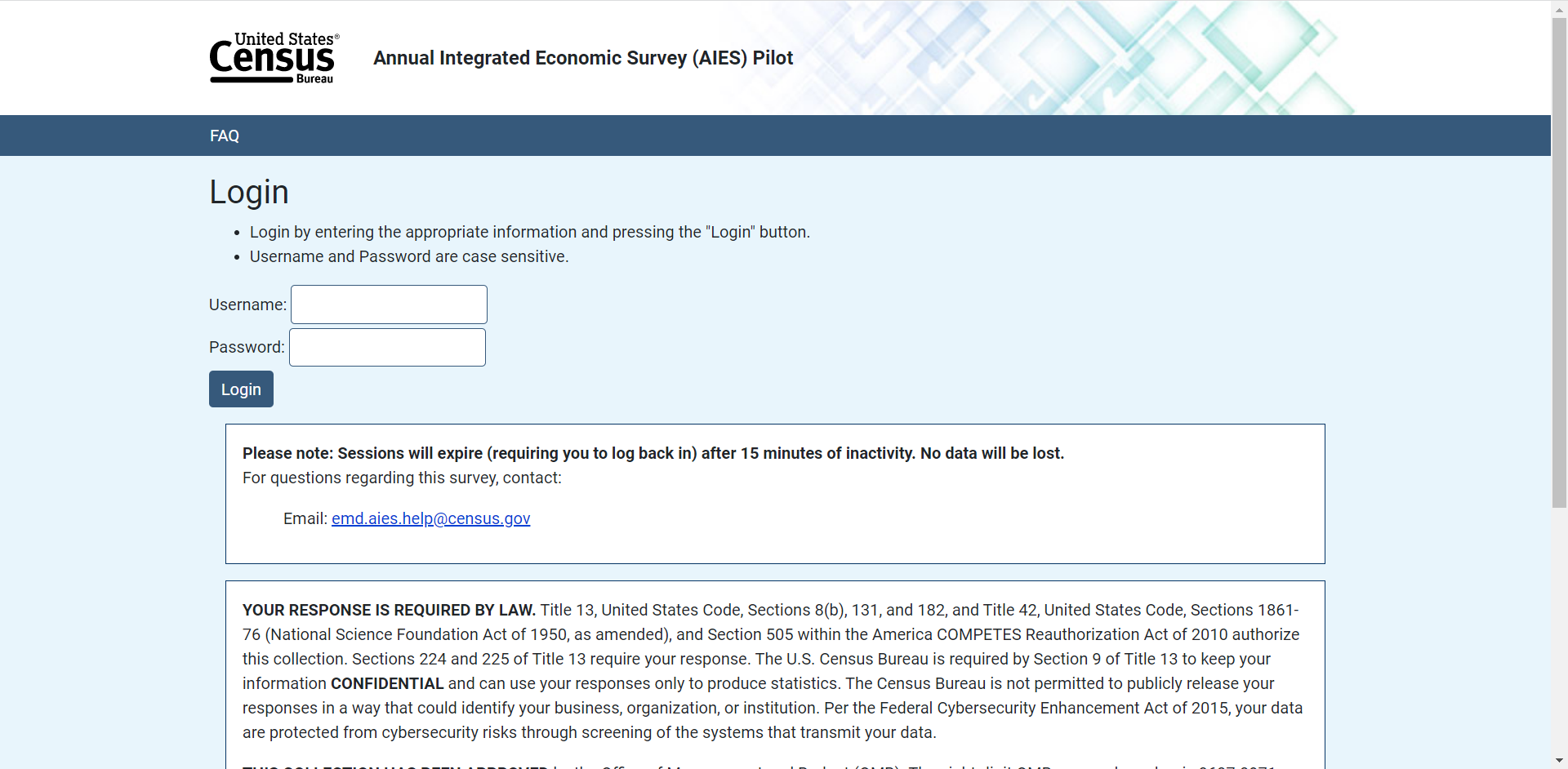

The Phase II instrument was a bespoke Excel spreadsheet that was pre-populated and hosted on a secure online portal. We mailed and emailed respondents notification of their inclusion in the Phase II Pilot, along with username and password credentials to log into this portal to retrieve their response spreadsheet. See Figure 3 for a screenshot of the login page.

Figure 3: Screenshot of Pilot Phase II Login Page

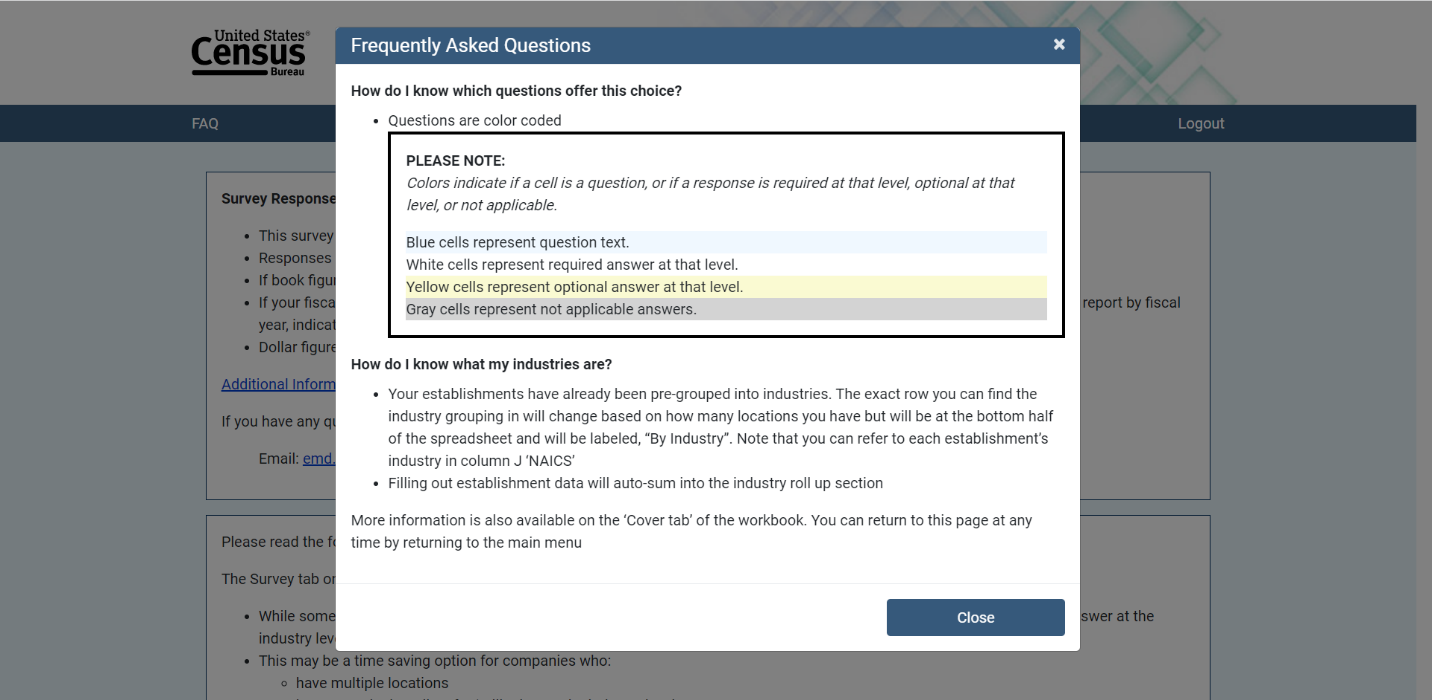

Upon accessing the portal, respondents could access Frequently Asked Questions through a link in the upper left corner of the screen. This brought up an overlay ‘modal’ screen with additional information for response. See Figure 4 for a screenshot of the FAQ overlay screen. The information contained in this overlay screen pertained to providing a response, and was not about any specific questions on the survey instrument. Respondents could also access a PDF document that provided additional response support – again, not the content of the survey, but rather instructions for providing response overall.

Figure 4: Pilot Phase II FAQ Overlay Screen

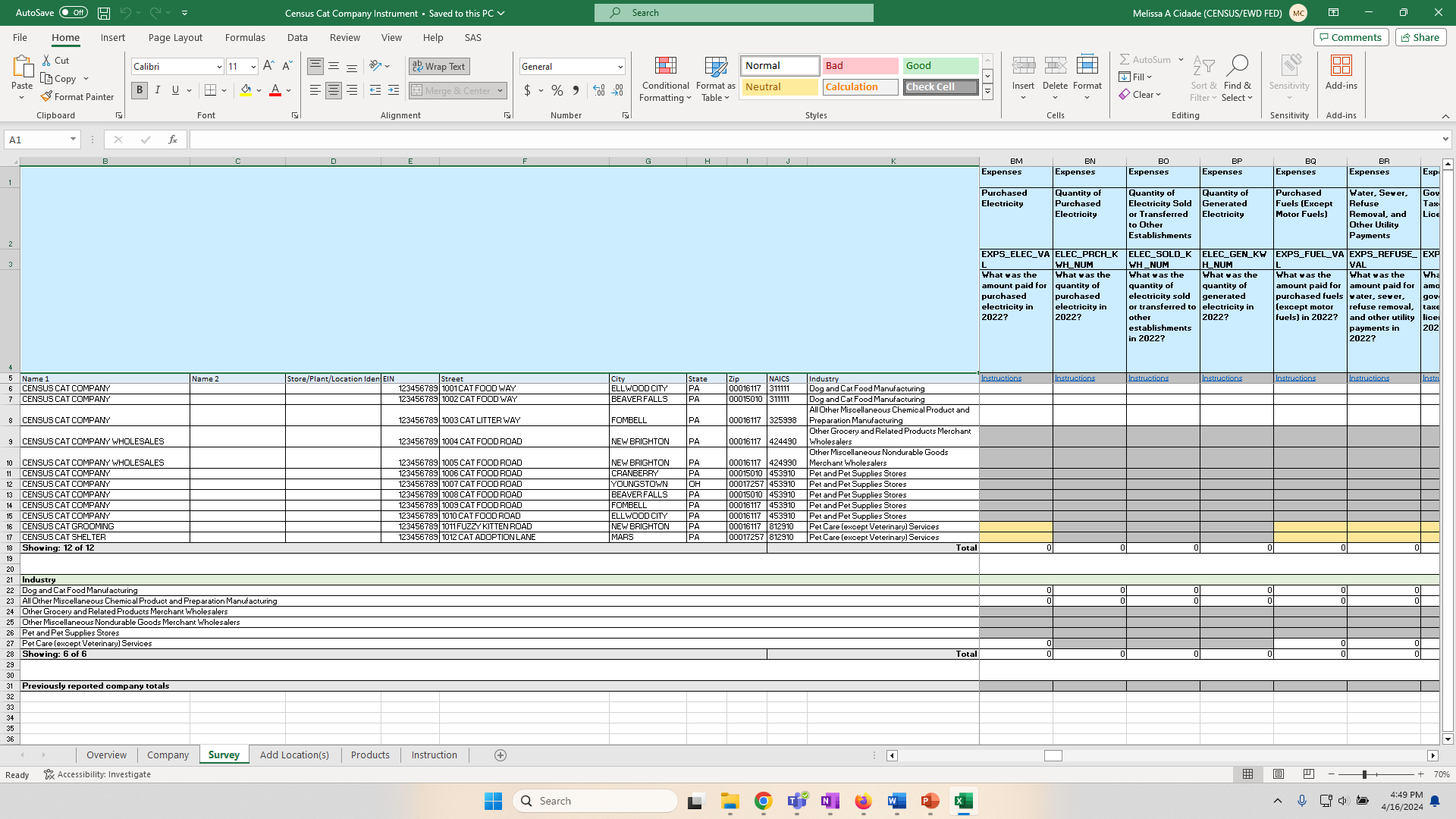

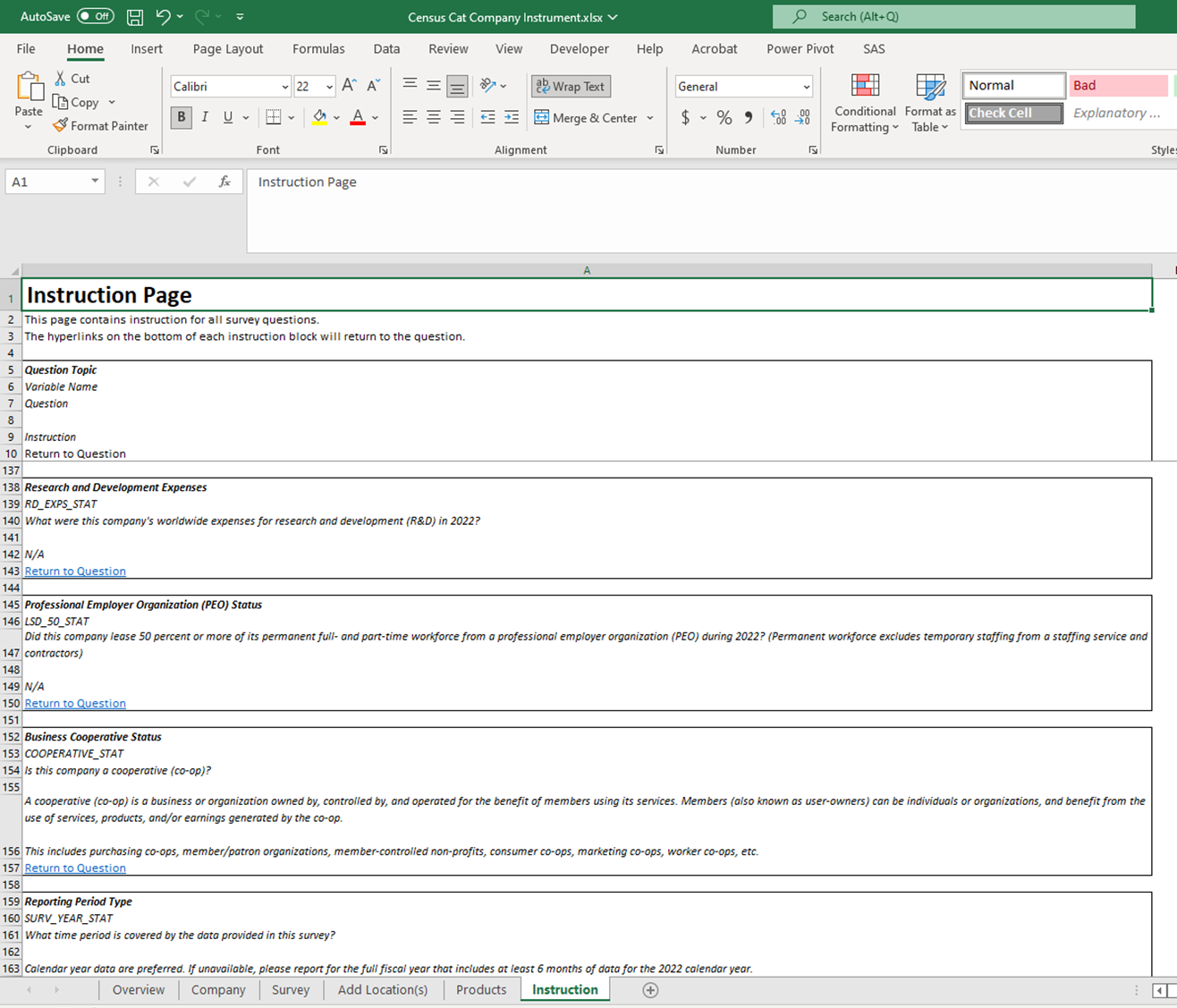

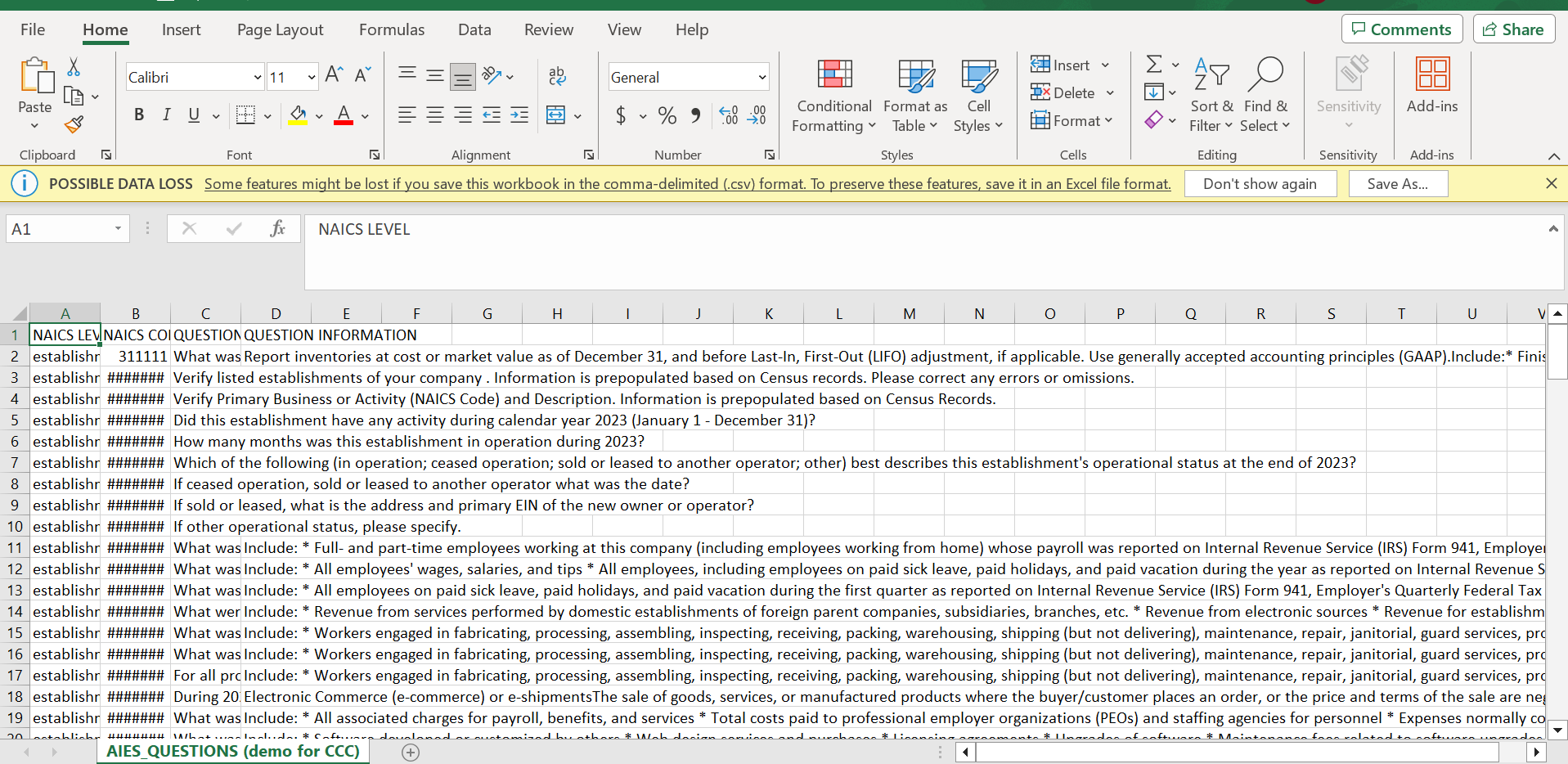

Instead, because the survey instrument was a downloaded Excel spreadsheet, the questions were displayed within that spreadsheet. Each question was contained in a column across the spreadsheet, and the corresponding rows contained the following information:

Row 1: The broad topic of the question (for example: Employment & Payroll)

Row 2: The specific topic of the question (for example: Annual Payroll)

Row 3: The variable name for that question (for example: PAY_ANN_VAL)

Row 4: The question text (for example: What was the annual payroll before deductions in 2022?)

Row 5: A link to the Instructions tab, which held additional information about that question.
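The column layout described above can be sketched as a small data structure. This is only an illustration of the five header rows, not the actual spreadsheet implementation: the class name, field names, and the `instructions_anchor` value are hypothetical, while the example values for Rows 1 through 4 are the ones quoted in the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuestionColumn:
    """One question column in the response spreadsheet (illustrative only)."""

    broad_topic: str          # Row 1
    specific_topic: str       # Row 2
    variable_name: str        # Row 3
    question_text: str        # Row 4
    instructions_anchor: str  # Row 5: hypothetical target of the Instructions link

    def header_rows(self) -> list[str]:
        """Return the five header cells in top-to-bottom order."""
        return [
            self.broad_topic,
            self.specific_topic,
            self.variable_name,
            self.question_text,
            f"Instructions -> {self.instructions_anchor}",
        ]


# Example values for Rows 1-4 come from the report; the anchor is assumed.
payroll = QuestionColumn(
    broad_topic="Employment & Payroll",
    specific_topic="Annual Payroll",
    variable_name="PAY_ANN_VAL",
    question_text="What was the annual payroll before deductions in 2022?",
    instructions_anchor="Instructions tab, PAY_ANN_VAL entry",
)

for row_number, cell in enumerate(payroll.header_rows(), start=1):
    print(f"Row {row_number}: {cell}")
```

Keeping the variable name in its own row gives each column a stable identifier that both the question text and the matching Instructions entry can be keyed to.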

See Figure 5 for a screenshot of a fictional company’s response spreadsheet.

Figure 5: Pilot Phase II Response Spreadsheet

Represents fictional company.

If a respondent clicked on the blue “Instructions” link under any given question, they were automatically directed to that specific question within the “Instructions” tab, where they would see additional information to aid in response, including what to include or exclude in their response, definitions, and other information. See Figure 6 for a screenshot of the Instructions tab. Respondents could then click “Return to Question” within that Instruction Tab to be redirected back to the question within the response spreadsheet.

Figure 6: Pilot Phase II Instruction Tab

Represents fictional company.

Pilot Phase II Response Support Findings

As part of the AIES Pilot Phase II, we conducted a series of respondent debriefing interviews. During these interviews, participants indicated that they liked the within-spreadsheet instruction functionality, and could describe it independently during those interviews. Said one, the “printed instructions were fine, and I really do like the instruction feature, that you could go to the specific instruction. I don't think we've had that before. Click to return to where you were is a good feature.” Another noted that the links condensed the information for them, saying that “maybe the instructions were long, useful sheet that people can easily reference…[the] links to specific parts were helpful.” Finally, one admitted that they “did use link for instructions” and that the “individual links were helpful for interpretation.”

AIES Pilot Phase III/Dress Rehearsal

In AIES Pilot Phase III – also known as the AIES Dress Rehearsal (DR) or the 2022 AIES – the instrument was designed to be as close to production field conditions as could be approximated at the time. This included a three-step survey instrument design, fuller implementation of the respondent contact strategy, and the use of newly developed Census Bureau infrastructure as part of the Data Ingest and Collection for the Enterprise (DICE) initiative. In this section, we will provide an overview of the last research iteration of the survey, highlighting response materials made available based on this version, and some initial feedback on the uses and usefulness of response support materials at this stage.

AIES Pilot Phase III/DR Overview

Pilot Phase III launched in September 2023 with 8,696 survey invitations, of which 4,920 resulted in a submitted response. For Phase III, firms were randomly selected to participate in place of responding to the legacy annual surveys for which they were in-sample that year. This Pilot phase was the only one that did not include a refusal mechanism – those companies that were in-sample for the Phase III Pilot had no other option to satisfy their annual reporting obligation due to the timing of fielding. However, these cases still answered ‘in lieu of’ any in-scope annual survey for which they may have been in sample that year. Phase III also ran concurrently with the end of the field period for the 2022 Economic Census, representing a crowded survey landscape.

Pilot Phase III/DR Support Materials

In Phase III of the Pilot, respondent support materials took two forms: those found within the instrument and those hosted outside of the instrument. In this section we will briefly describe each.

Within Instrument

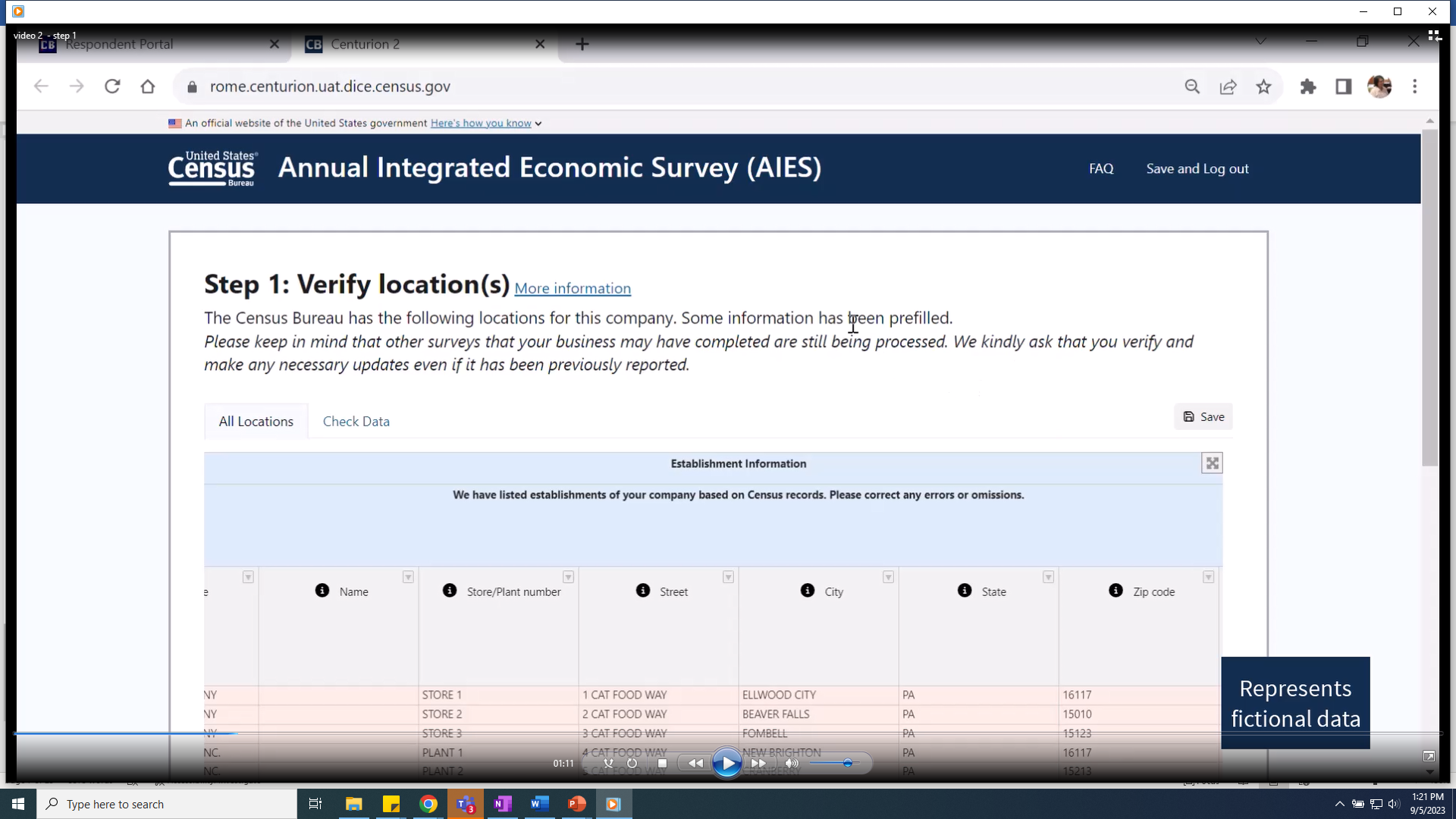

Once a respondent accessed their Phase III survey, there were several ways that they could access additional response support materials. First, on each screen of the survey, we displayed an “FAQ” link. See Figure 7 for a screenshot of the first page of the survey.

Figure 7: AIES Pilot Phase III Instrument First Screen

Once a respondent clicked on the FAQ link in the upper right-hand corner, a modal window appeared on screen with a list of questions we expected respondents to have based on prior rounds of research. Respondents could click on the question text or the plus (+) button to see a response and more information. See Figure 8 for a screenshot of an expanded FAQ.

Figure 8: Phase III Expanded FAQ Screenshot

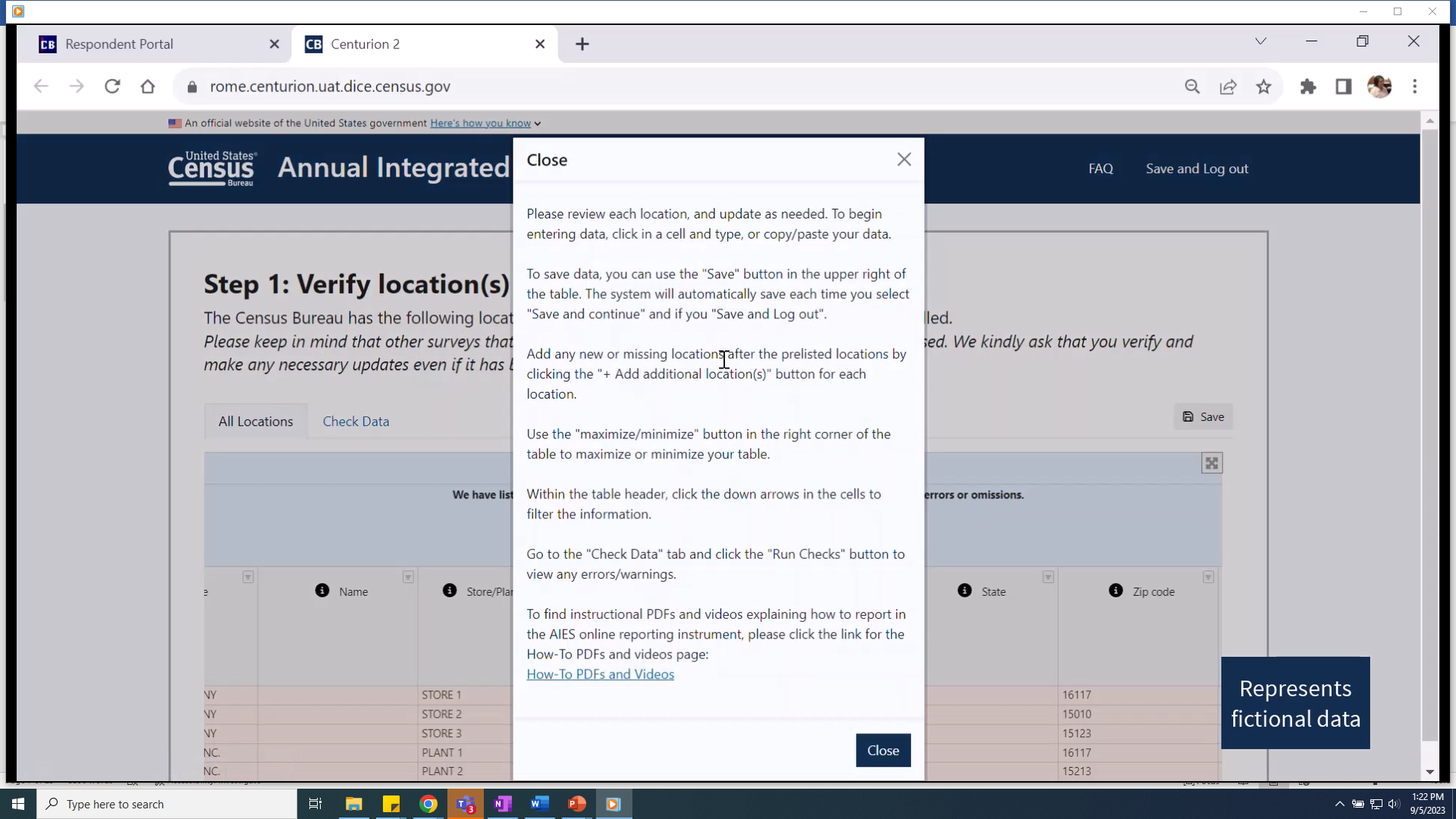

At each of the steps within the survey, we also provided a “More Information” link at the header. Again, clicking on this link displayed a modal window that contained information specific to that step of the survey response process. See Figure 9 for a screenshot of the “More Information” link and the window overlay.

Figure 9: Pilot Phase III "More Information" Link and Modal Window Screenshot

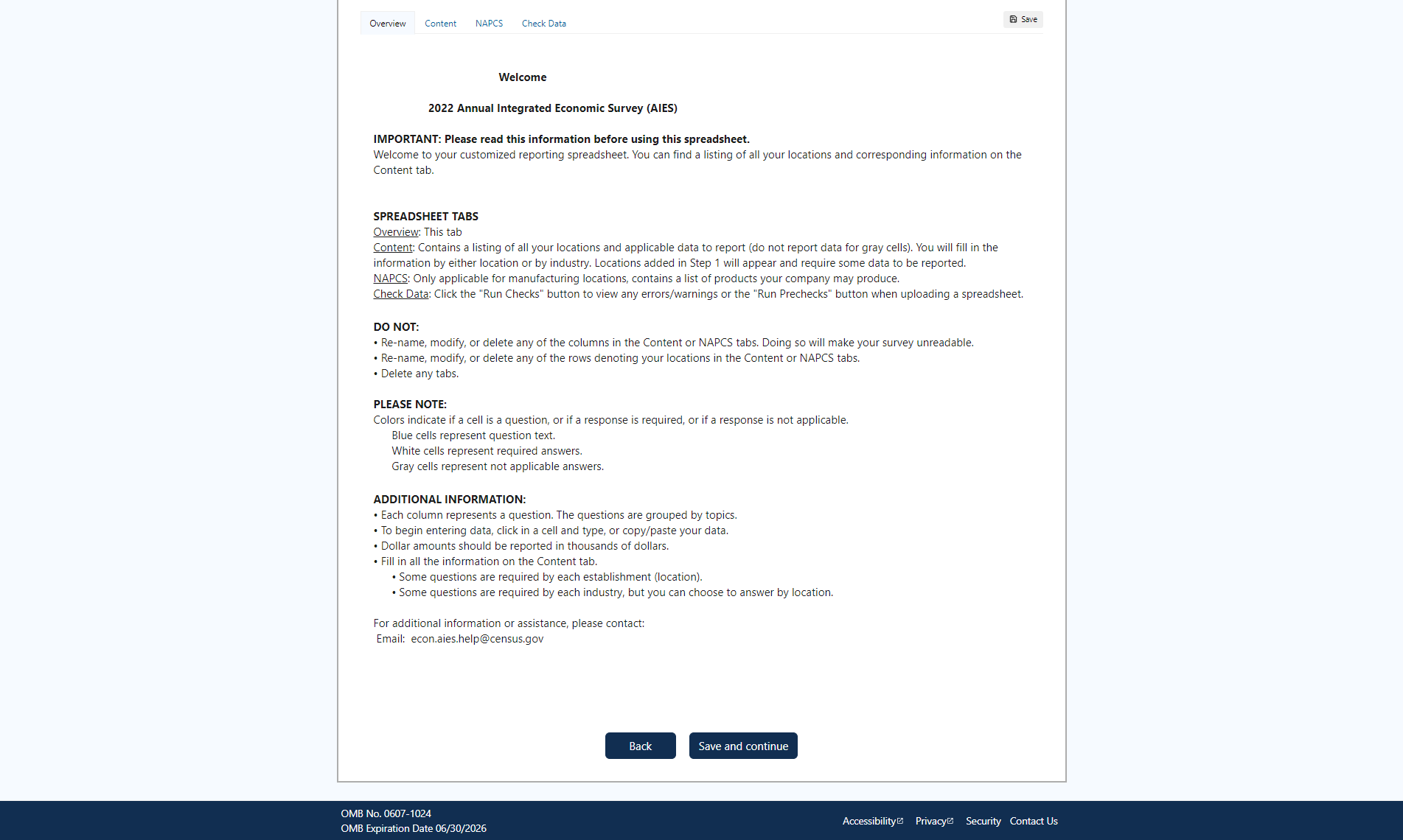

The Phase III Instrument also featured a response spreadsheet, either ‘online’ – contained entirely within the web survey browser – or ‘downloaded’ – a bespoke Excel spreadsheet respondents could download, fill out, and then upload back to the survey platform. Regardless of the chosen mode of response, the spreadsheet contained an “Overview” tab that provided additional detailed information about responding by spreadsheet. See Figure 10 for a screenshot of the Overview Tab for the online spreadsheet.

Figure 10: Pilot Phase III Response Spreadsheet Overview Tab Screenshot

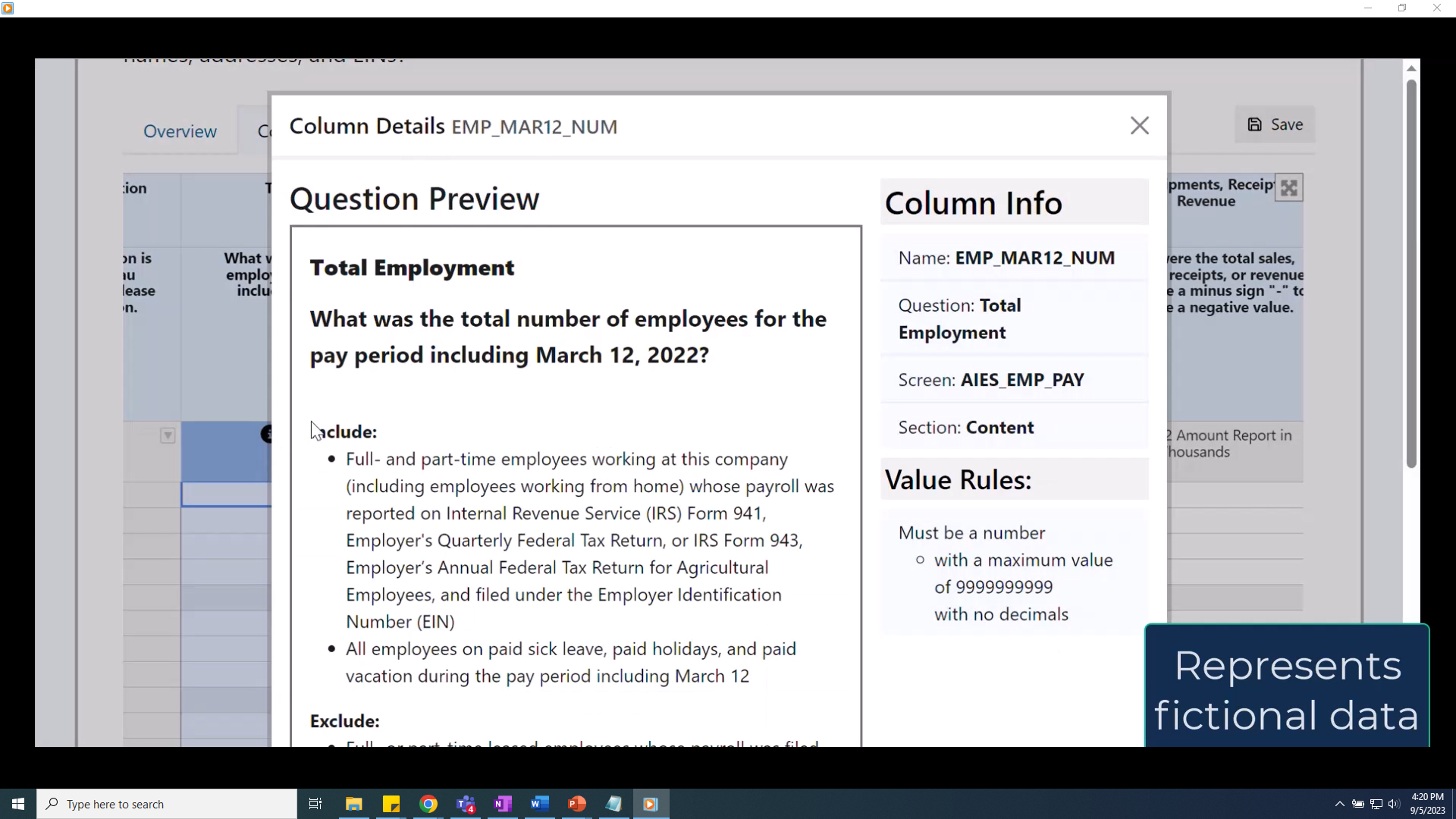

Within the online spreadsheet, we also provided question-by-question instructional text where appropriate. Respondents could click on an informational icon (a black circle with a white lowercase ‘i’ in the middle), and a question preview screen would populate over top of the survey instrument. See Figure 11 for an example of the question preview overlay screen for one question in Step 3 of the instrument.

Figure 11: Phase III Question Preview Screenshot

Outside of the Instrument

In addition to the response support materials embedded within the instrument, we also included materials that were hosted online and available without logging in to the instrument.

For the Pilot Phase III/DR, we developed a series of six walk-through videos that provided respondents with step-by-step instructions for completing each section of the survey. These videos were hosted on the AIES website, and covered the following topics:

Video 1: Introduction – provided a summary of the structure and intent of the survey, including how reporting has changed for a fictional example company.

Video 2: Step 1 – introduced the first step of the survey, updating lists of locations, including how to add missing locations, indicating operating status, and answering follow-up questions as driven by the instrument.

Video 3: Step 2 – provided a walkthrough of responding to Step 2, providing company-level data, including moving through the screens for a fictional company and reporting data in this step.

Video 4: Step 3 online – gave an overview of responding to Step 3 – providing more granular response by using the online survey spreadsheet, including spreadsheet mode selection (online or download), the features included to aid response, and reporting by Kind of Activity Unit (KAU, or industry).

Video 5: Step 3 download – also gave an overview of responding to Step 3 but using the customized downloaded Excel spreadsheet, including orienting respondents to the spreadsheet, how to enter data, and how to upload the completed spreadsheet back to the survey, as well as reporting by industry.

Video 6: Reporting by Industry – this short video featured how to identify the industries in-scope for a fictional company, how to match locations to the appropriate industry for aggregation, and other details related to reporting for this unit.

Each of these videos was also distilled into standalone PDFs that provided the same information but in screenshots and text. The videos and PDFs were all hosted on the AIES website for respondents to access outside of the instrument.

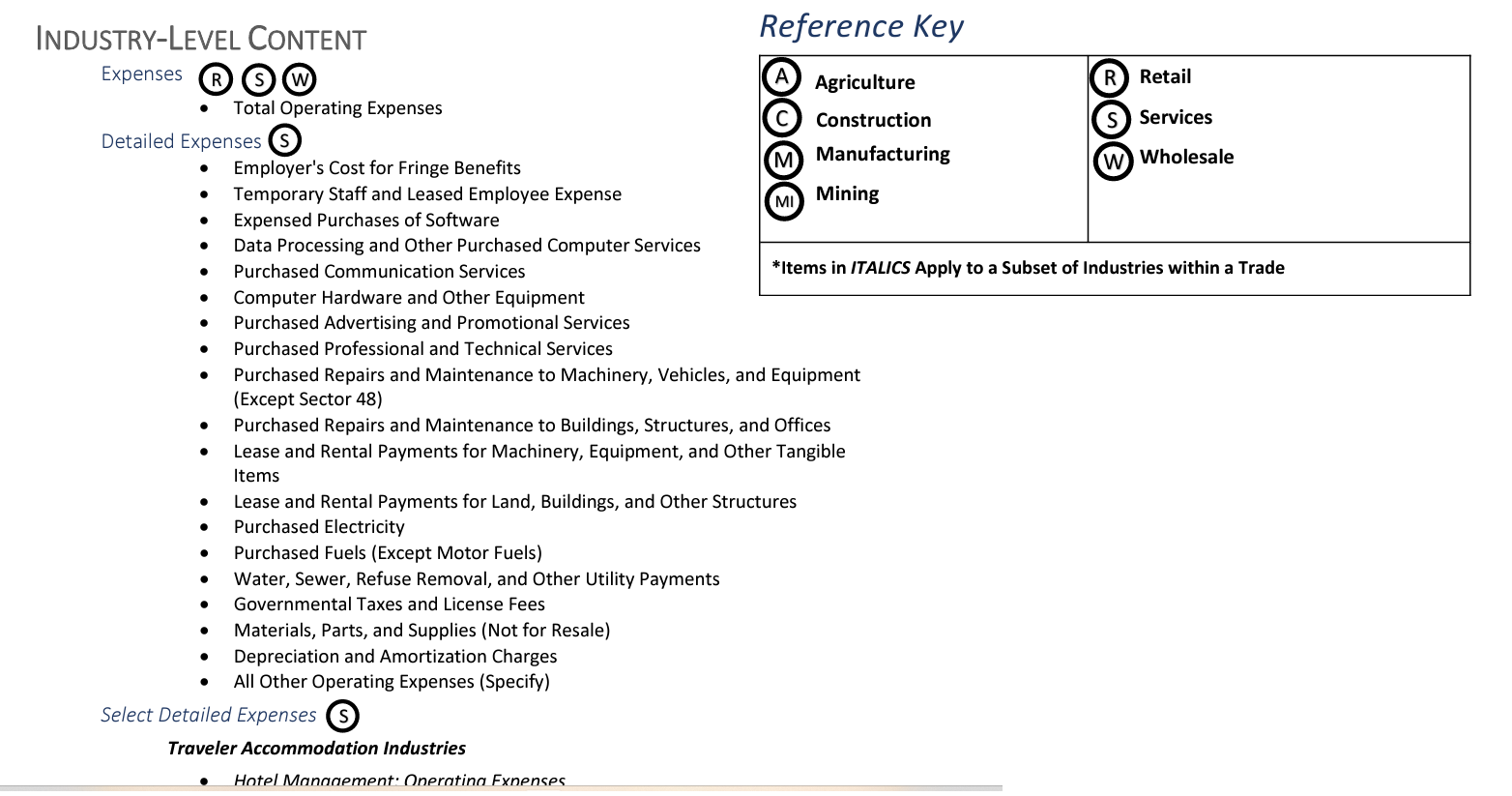

Also on the AIES website, respondents could access the AIES Content Summary. This is a PDF compendium of all of the topics within the AIES instrument, across all industries. It does not include the actual question text, but rather, the general question topics with an indication of the in-scope industries for those topics. For example, the Content Summary may note that there are questions on total operating expenses, and even that those questions are tailored for firms in retail, wholesale, and services, but not the actual questions collecting total operating expenses. See Figure 12 for a screenshot of the AIES Content Summary.

Figure 12: Screenshot of the AIES Content Summary

Finally, respondents could access the AIES Interactive Content Selection Tool to get a sense of the questions that may be on their survey. This tool is the focus of this report, so it is described in detail in the next section.

AIES Interactive Content Selection Tool

In this section, we will document the development of the AIES Interactive Content Selection Tool as it existed at the conclusion of the AIES Pilot Phase III/Dress Rehearsal. This tool was developed to generate a listing of survey questions that a company may see on their AIES survey instrument as determined by the classification of their locations using the North American Industry Classification System (NAICS) and units of collection (company, industry, and/or establishment).

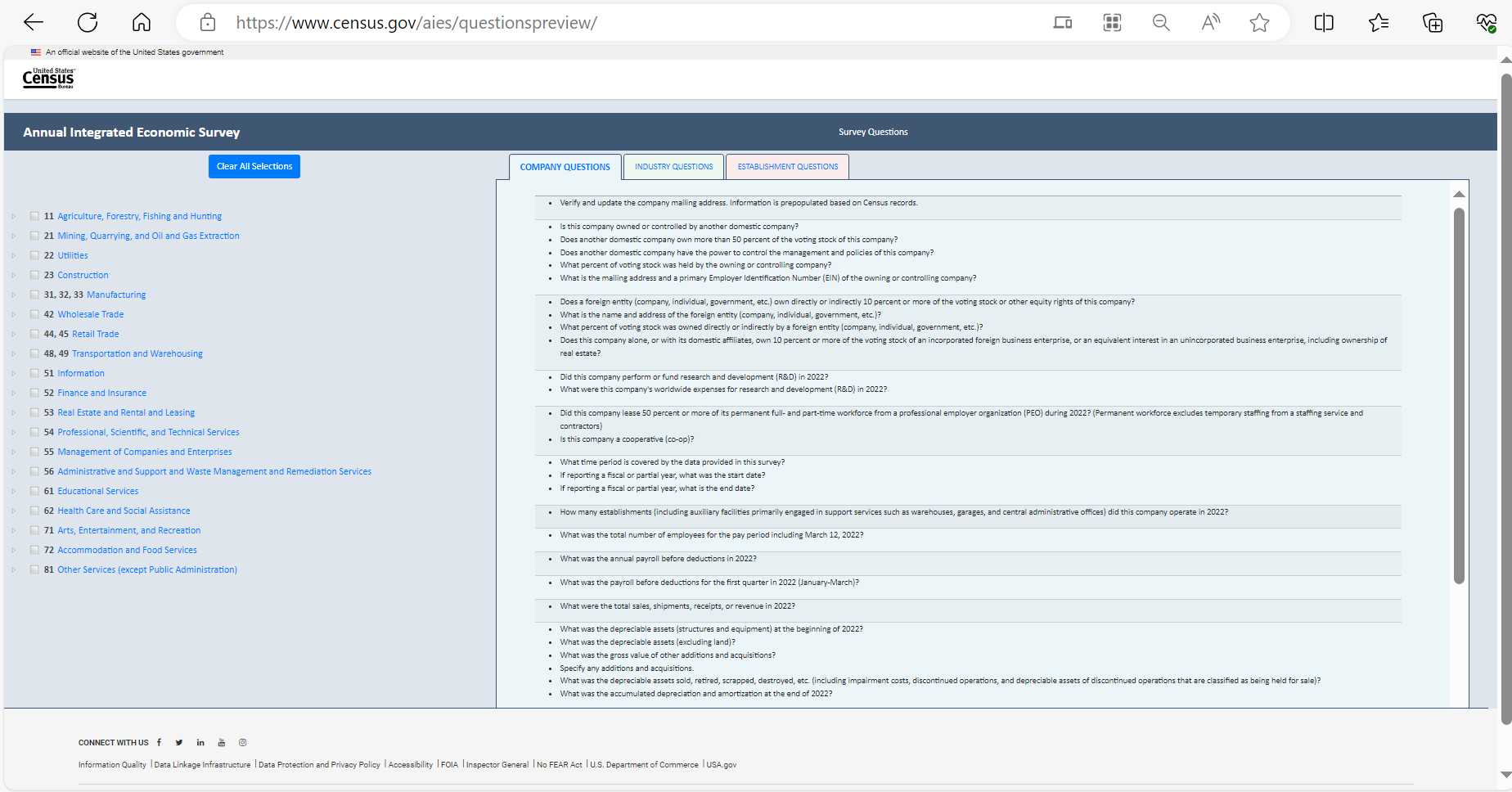

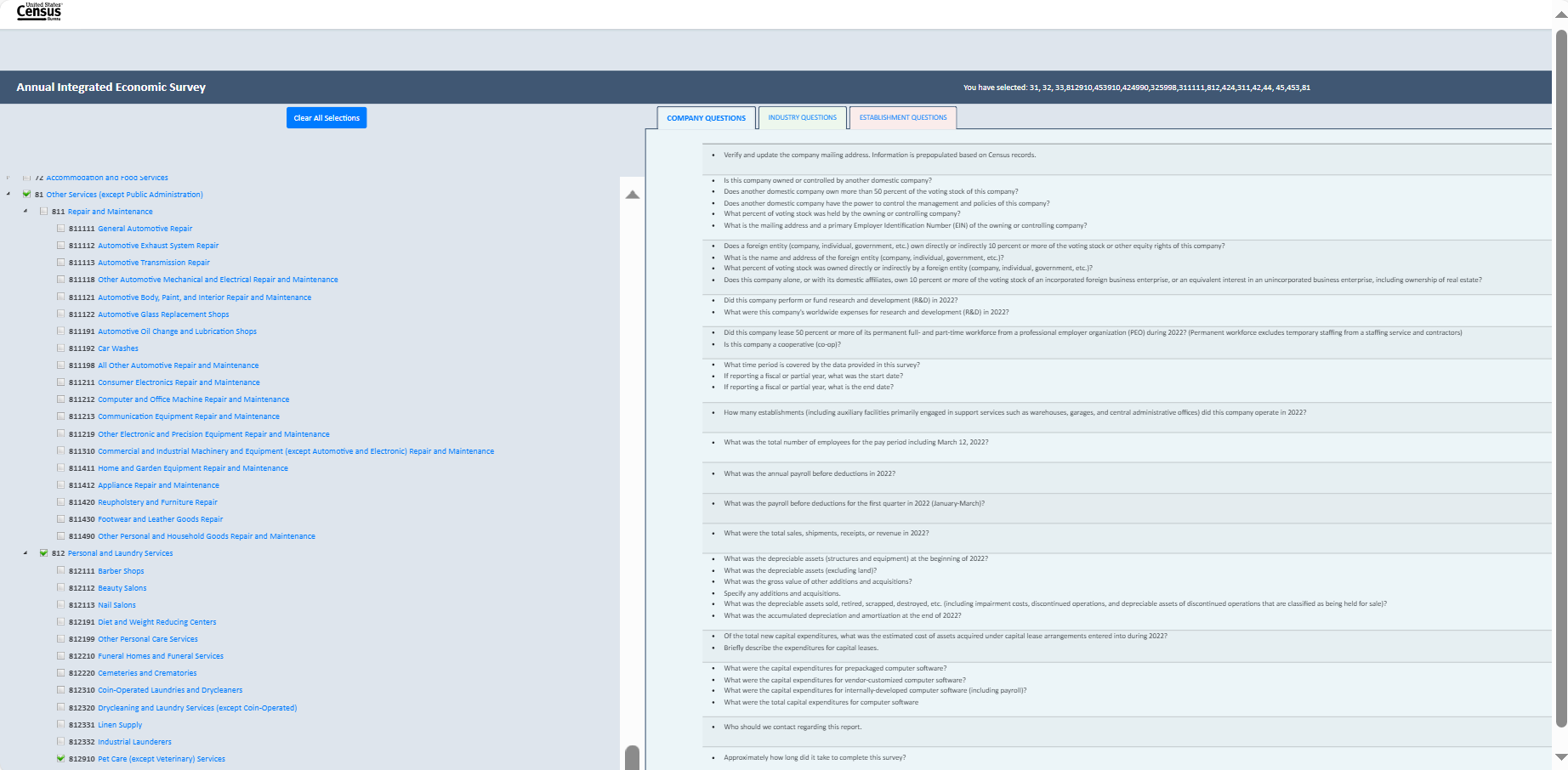

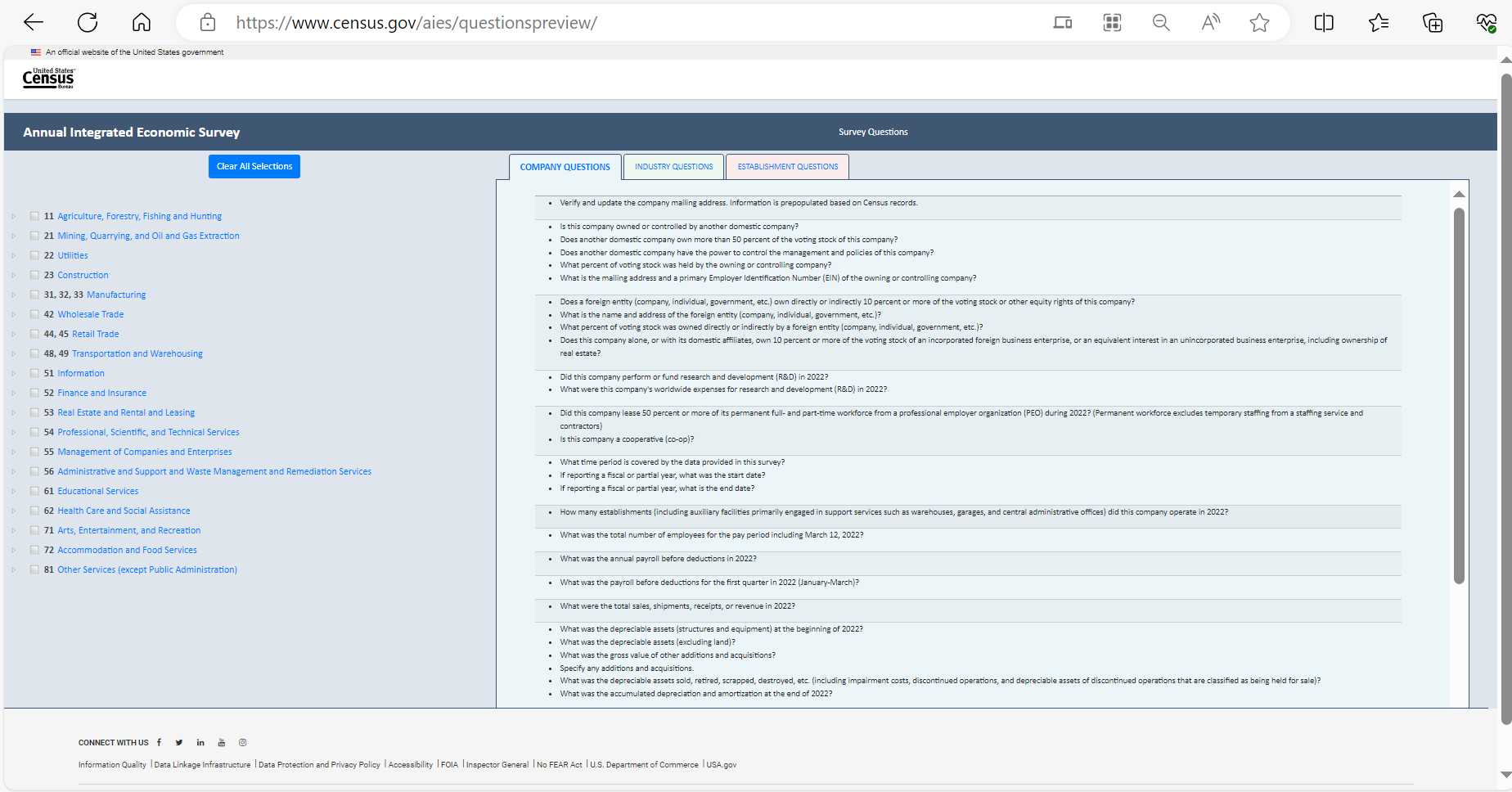

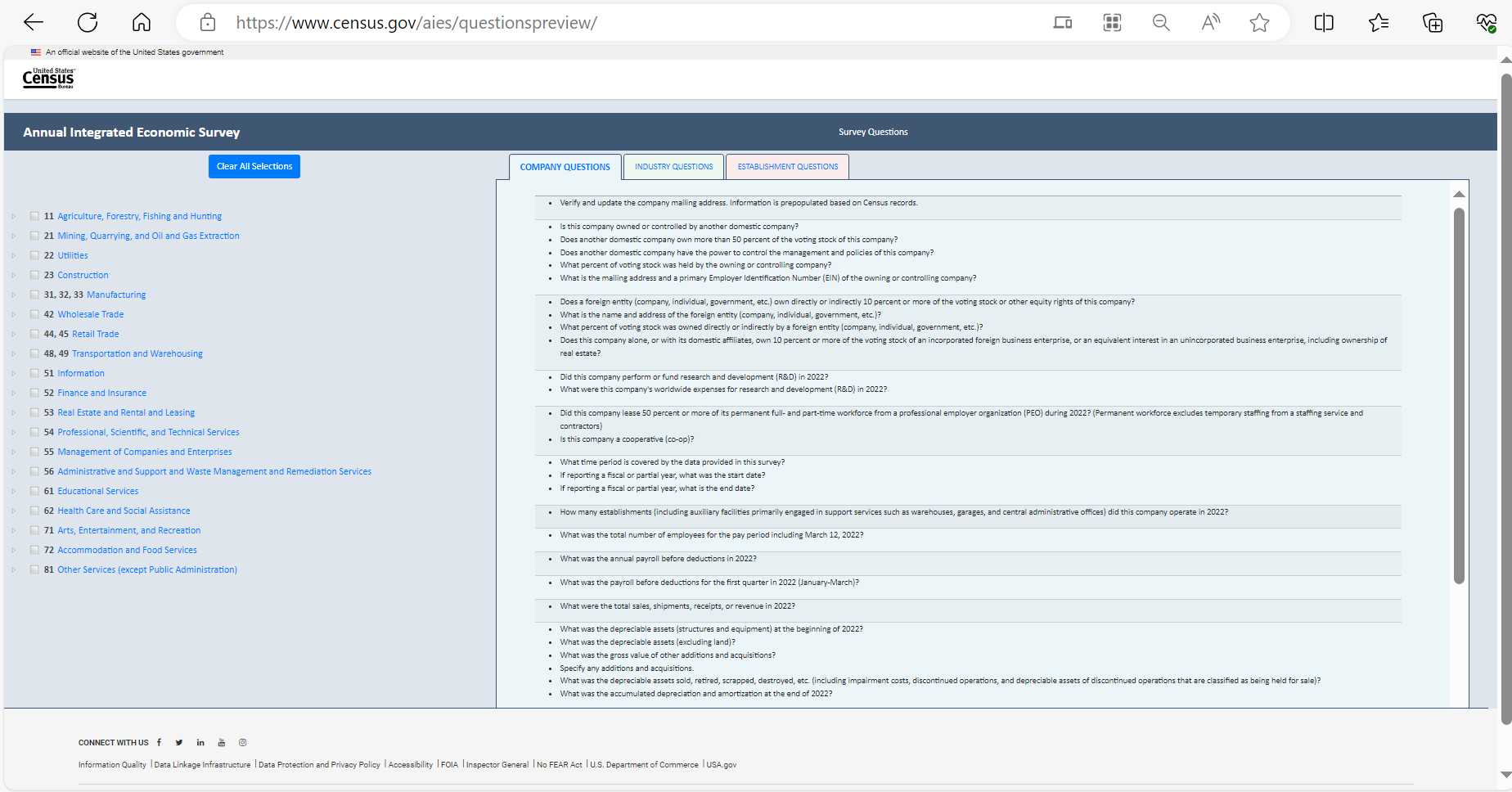

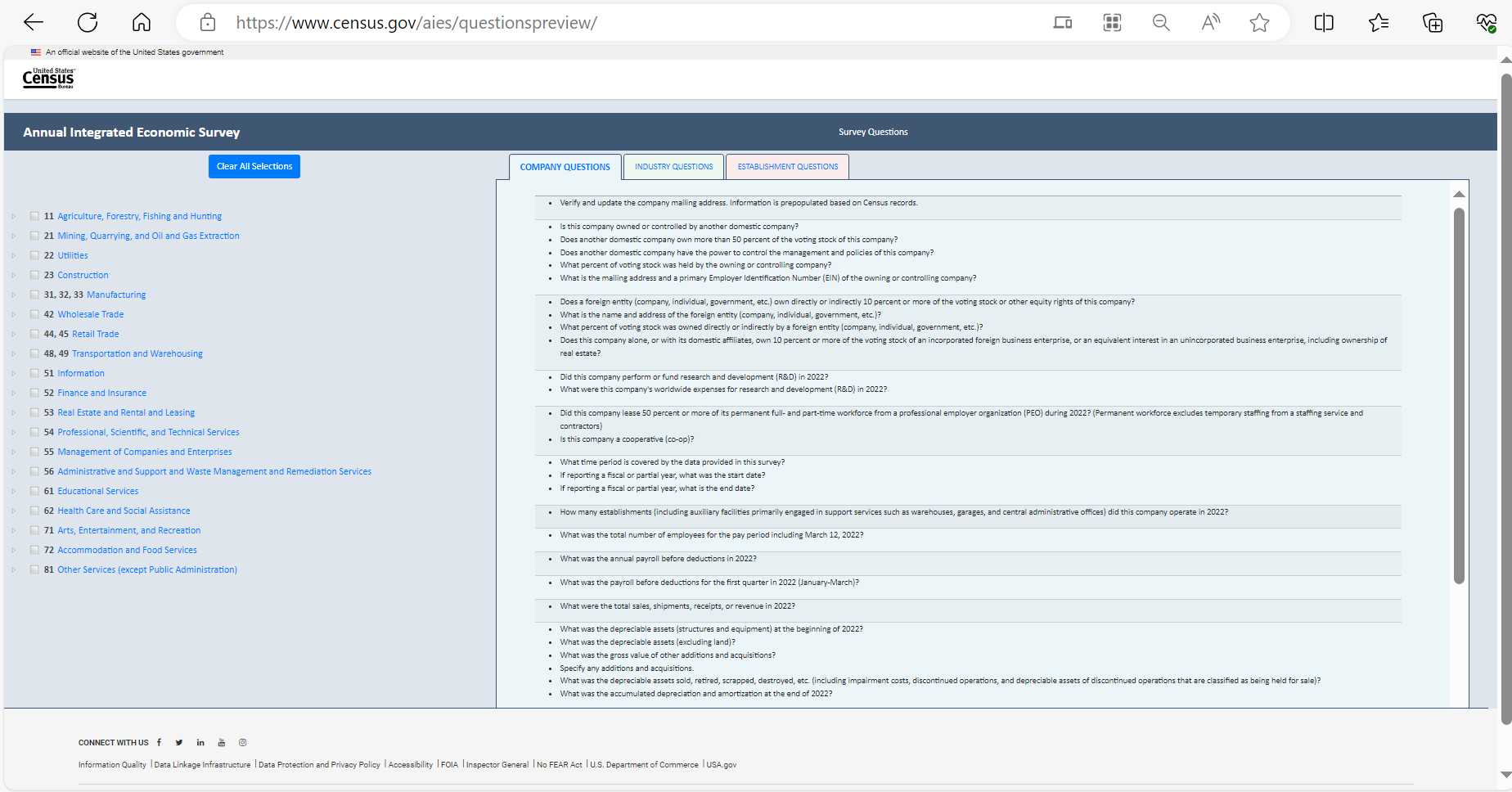

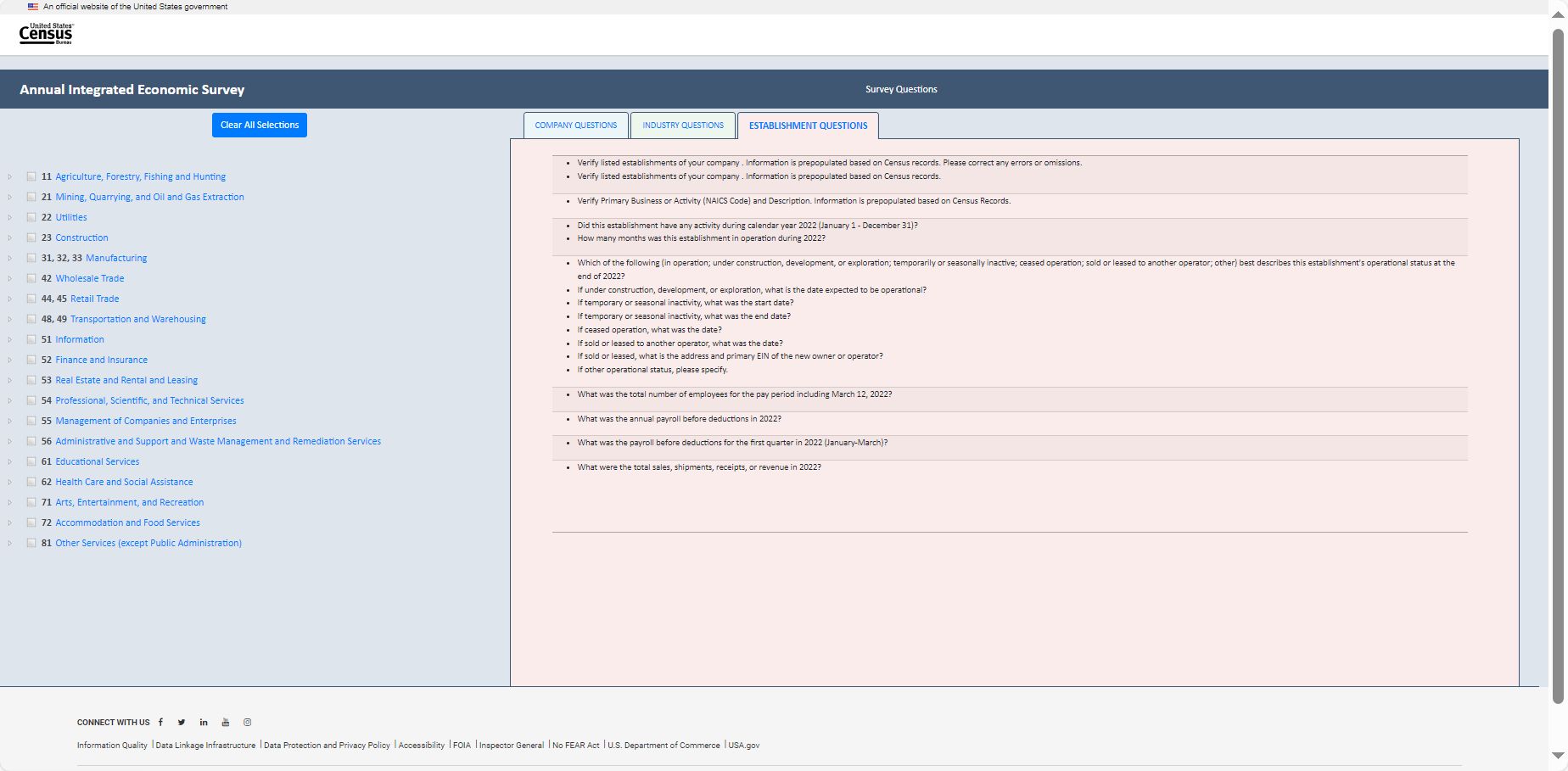

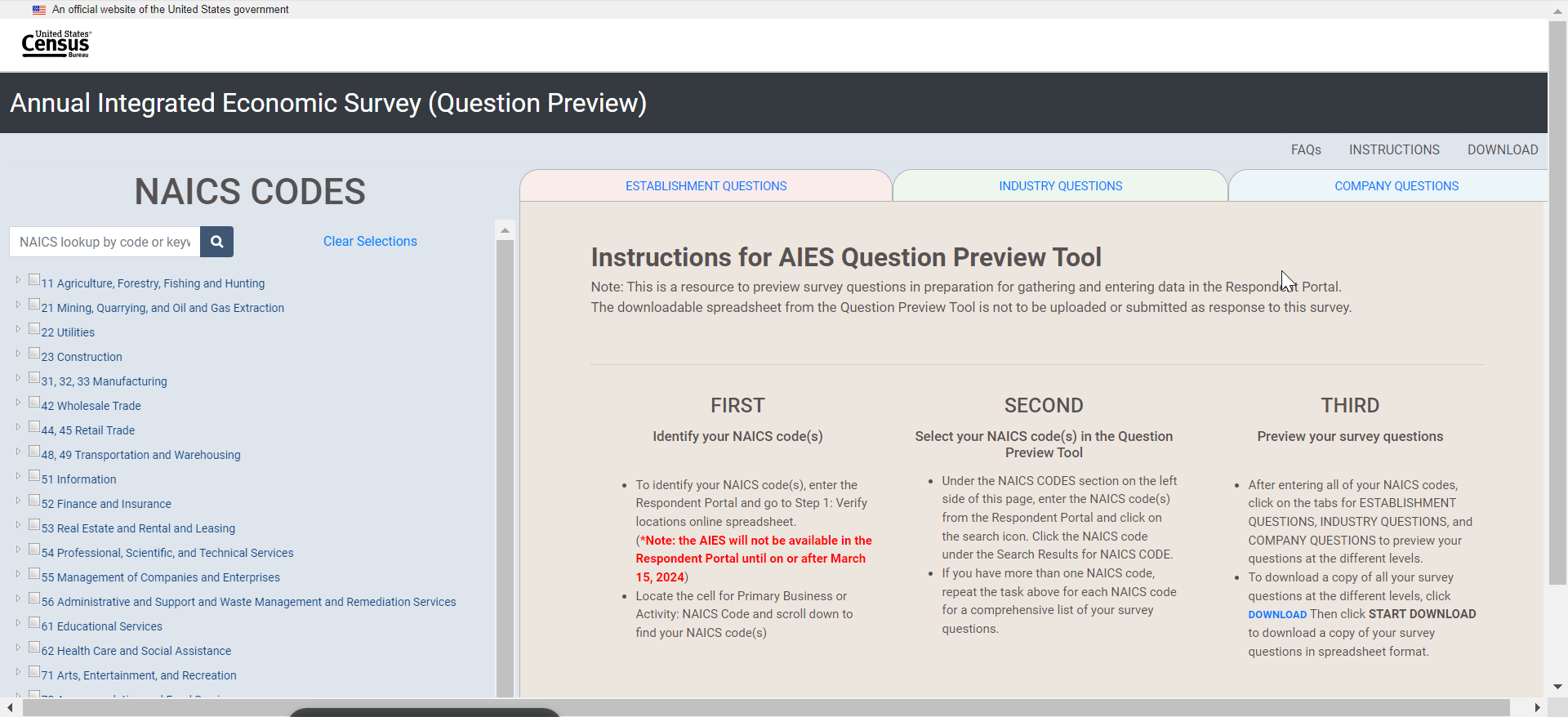

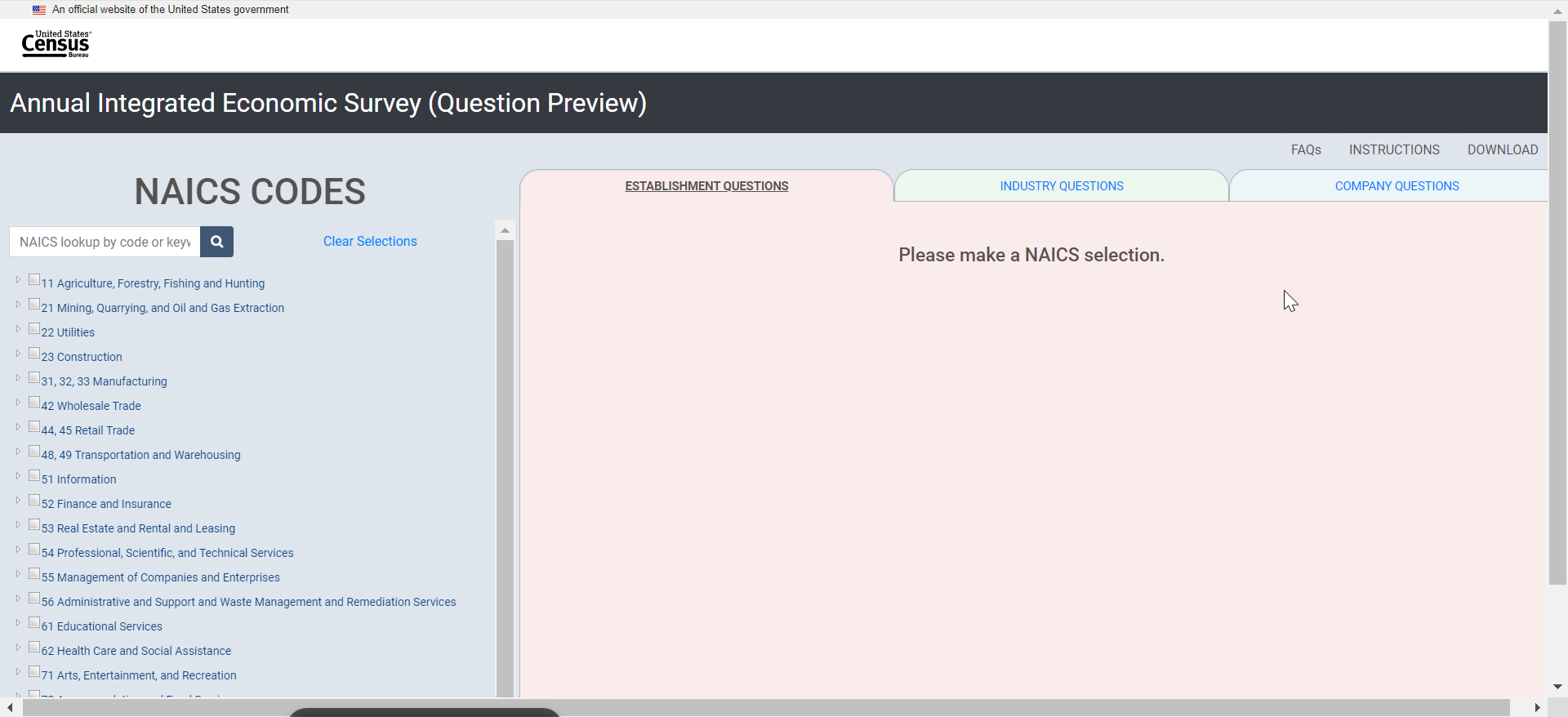

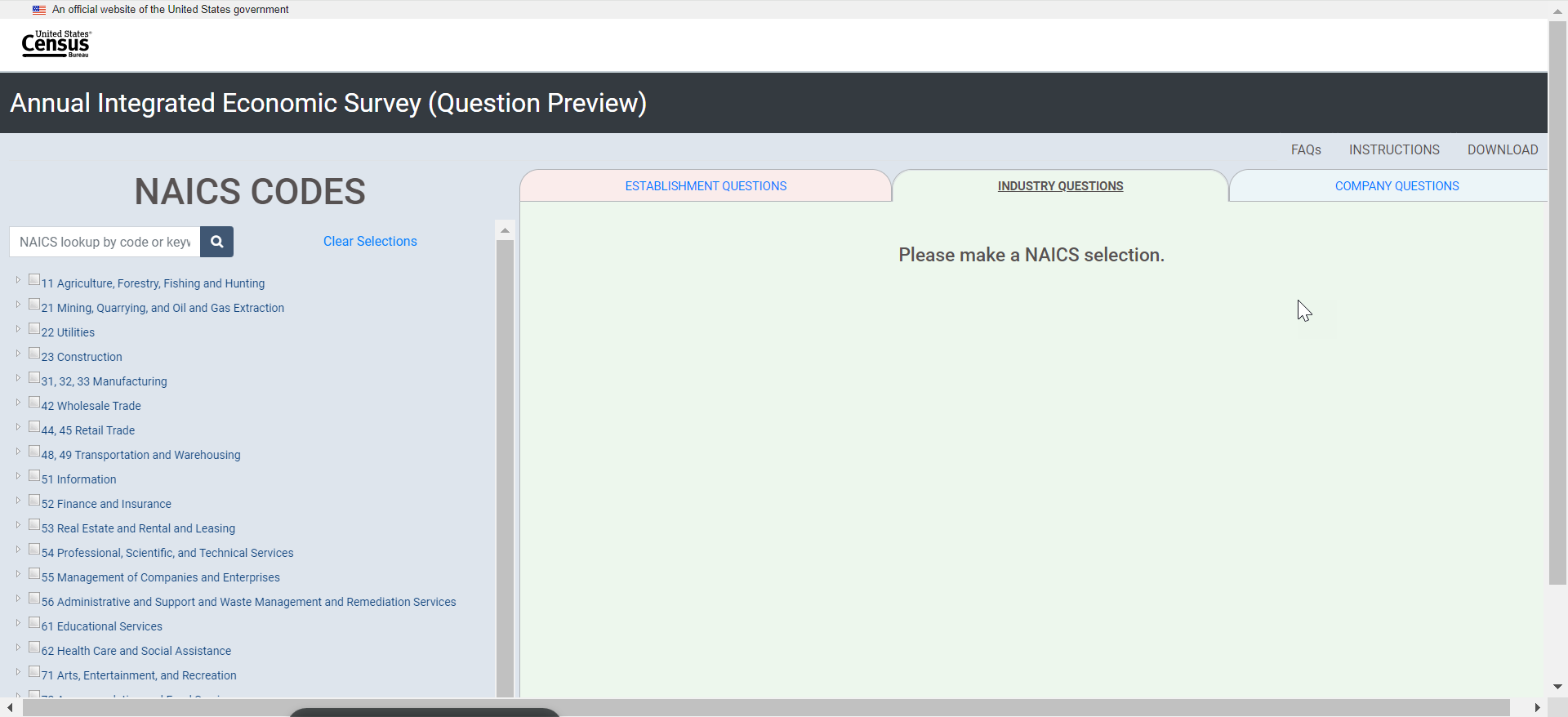

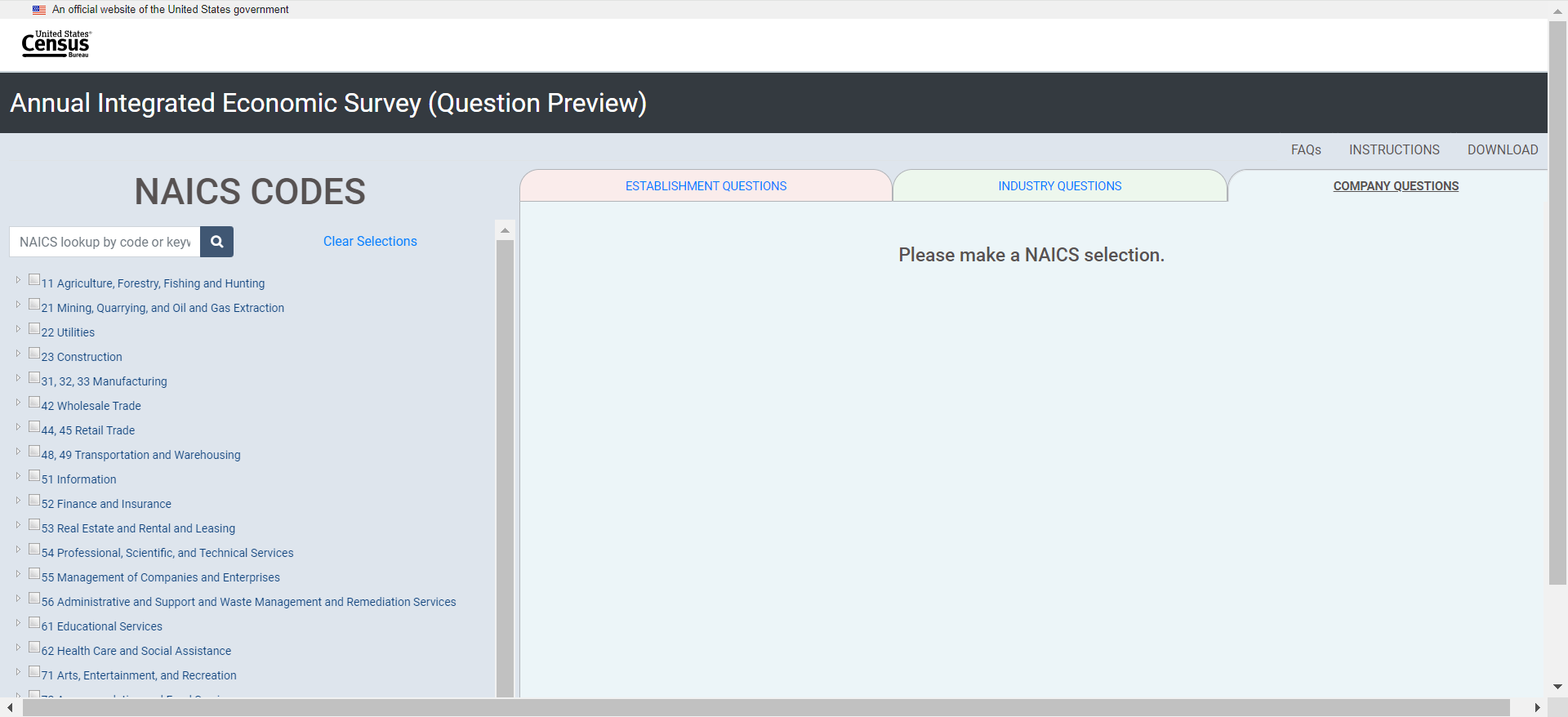

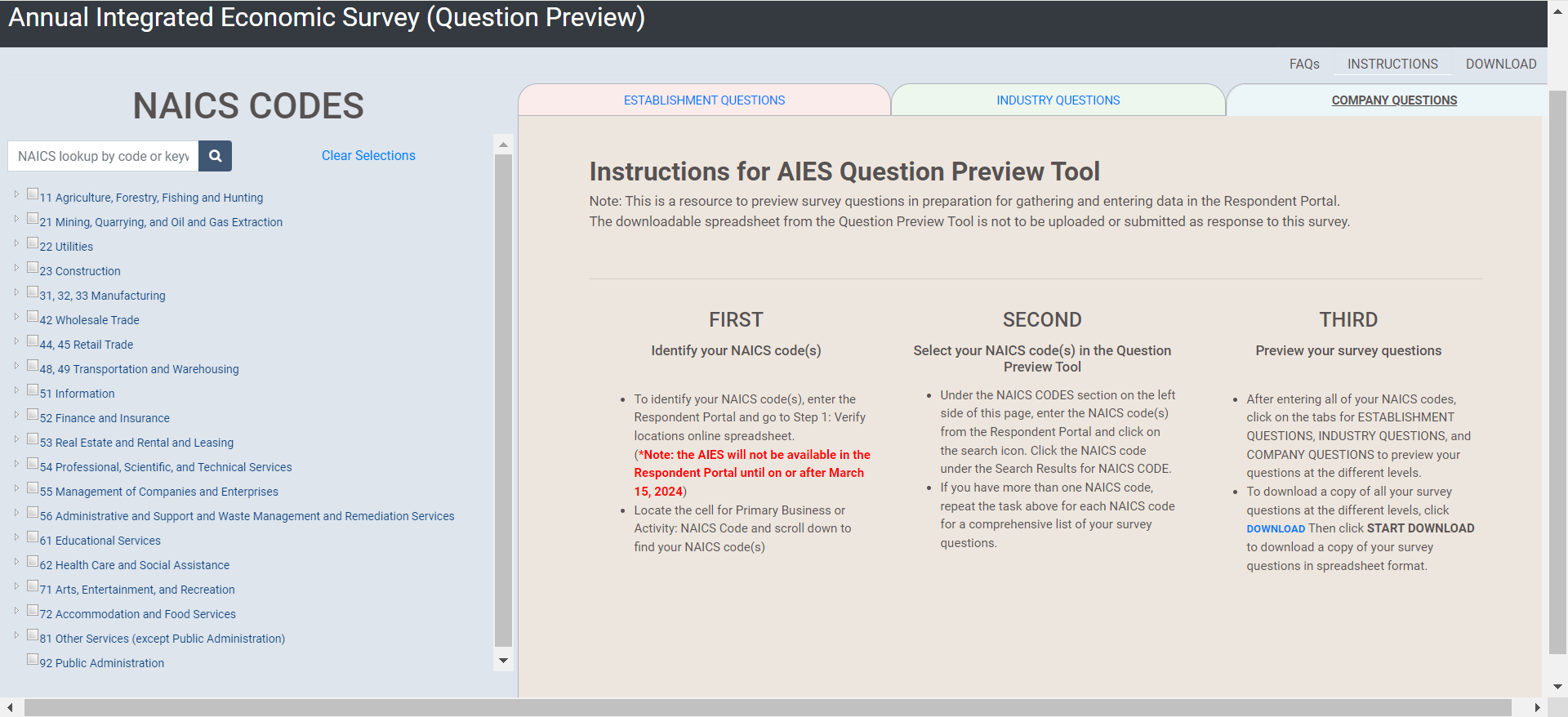

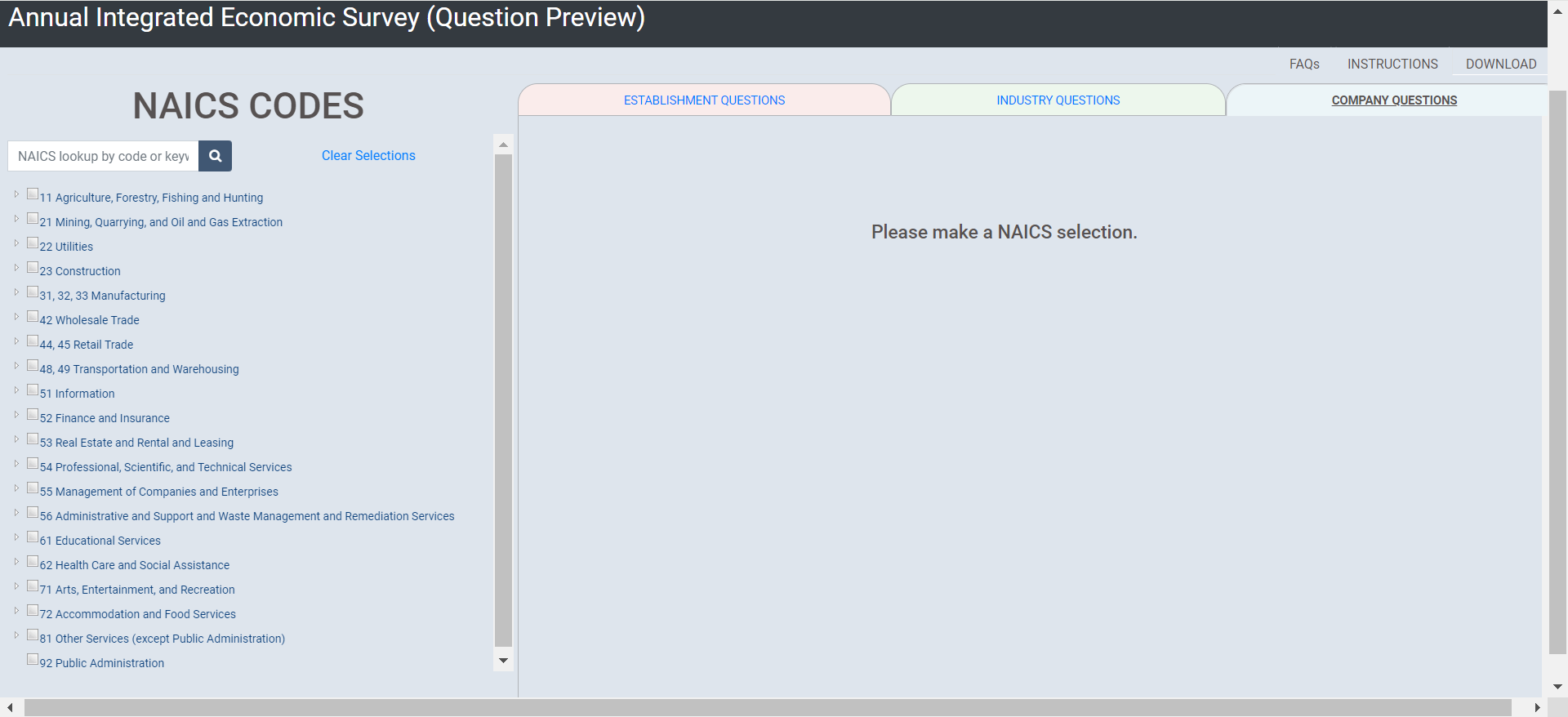

Upon entering the site, respondents are first presented with the company-level questions on the right and the NAICS classification scheme on the left. On the right are three tabs, representing the three levels of collection. See Figure 13 for the landing page for this tool.

Figure 13: Screenshot of the AIES Interactive Content Tool Landing Page

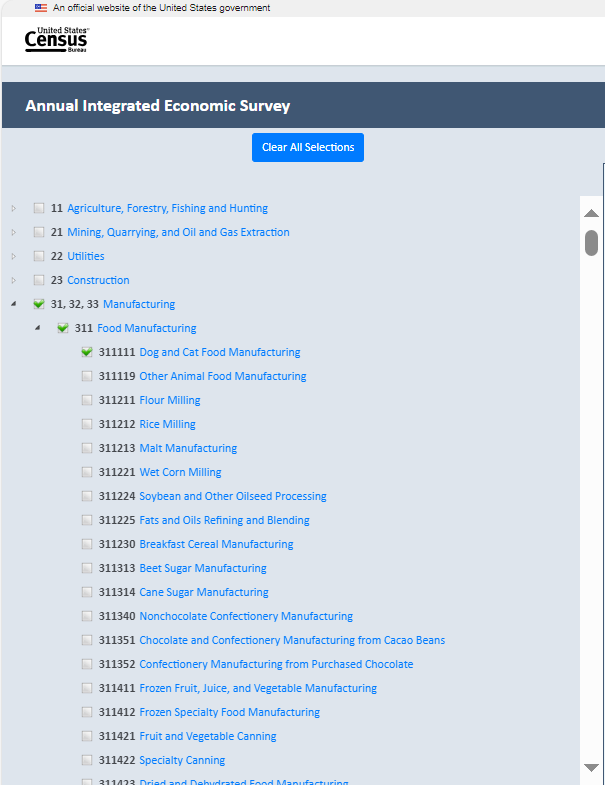

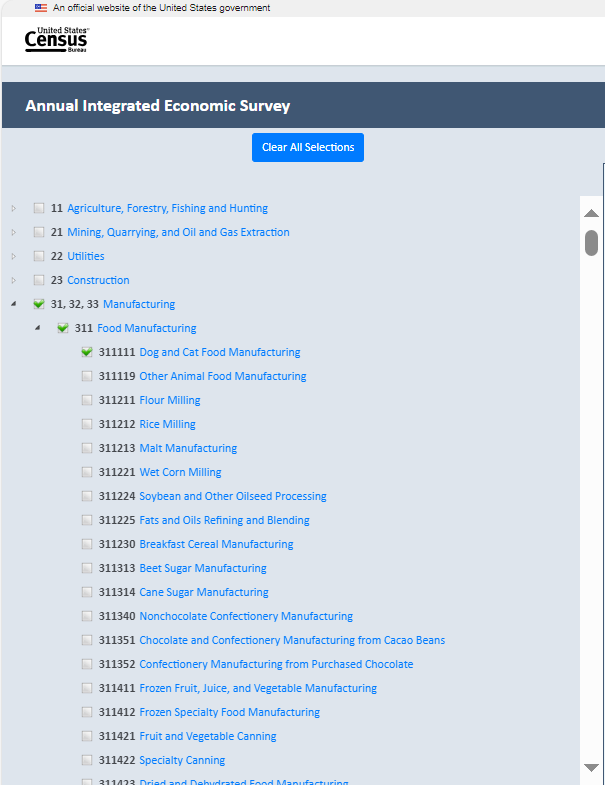

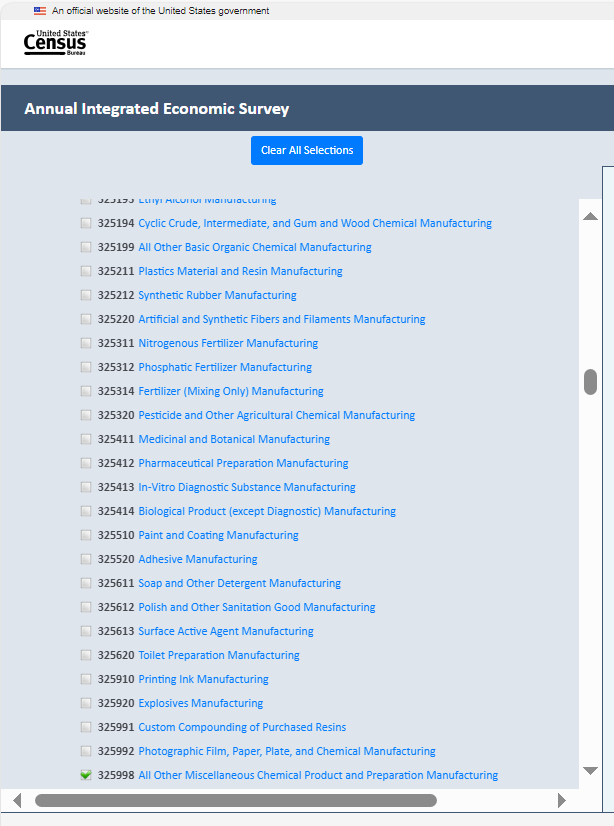

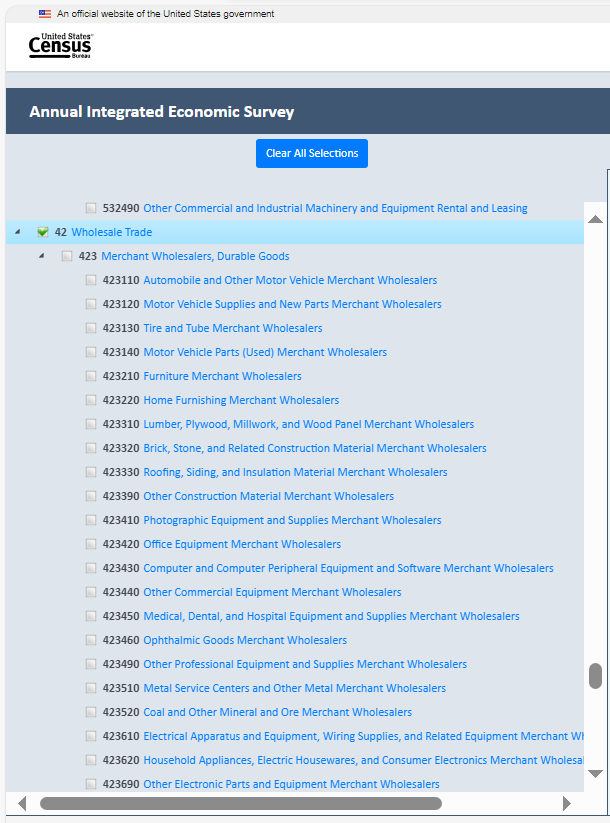

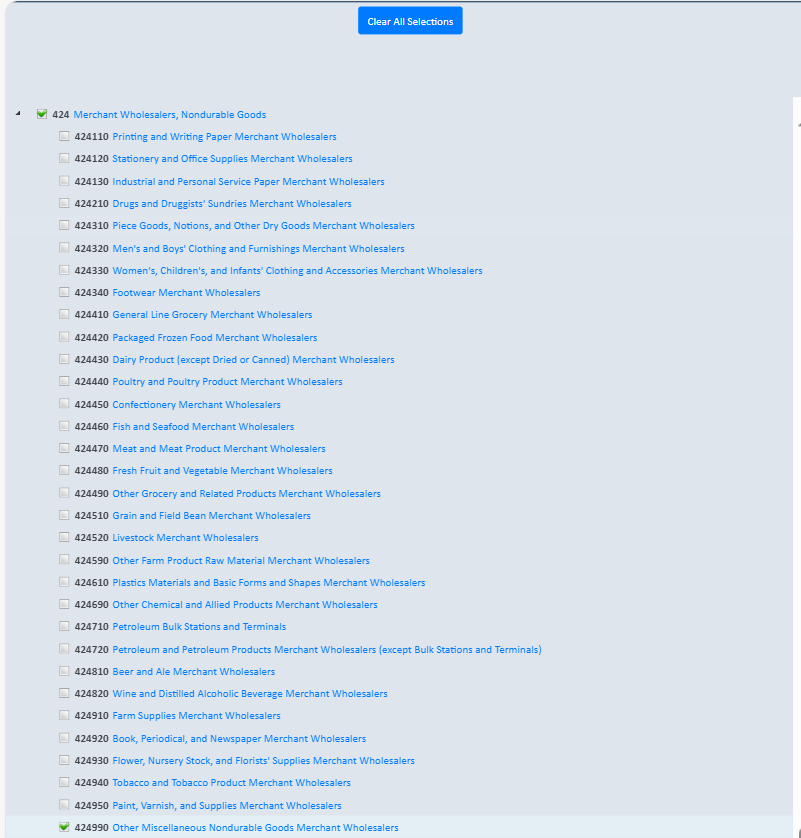

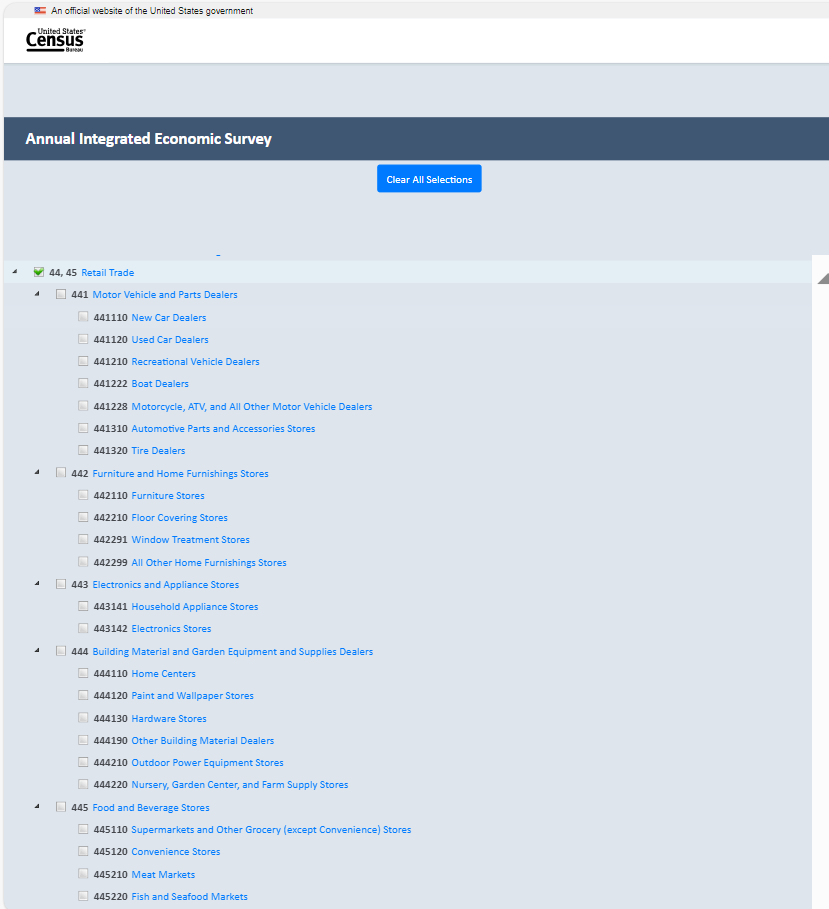

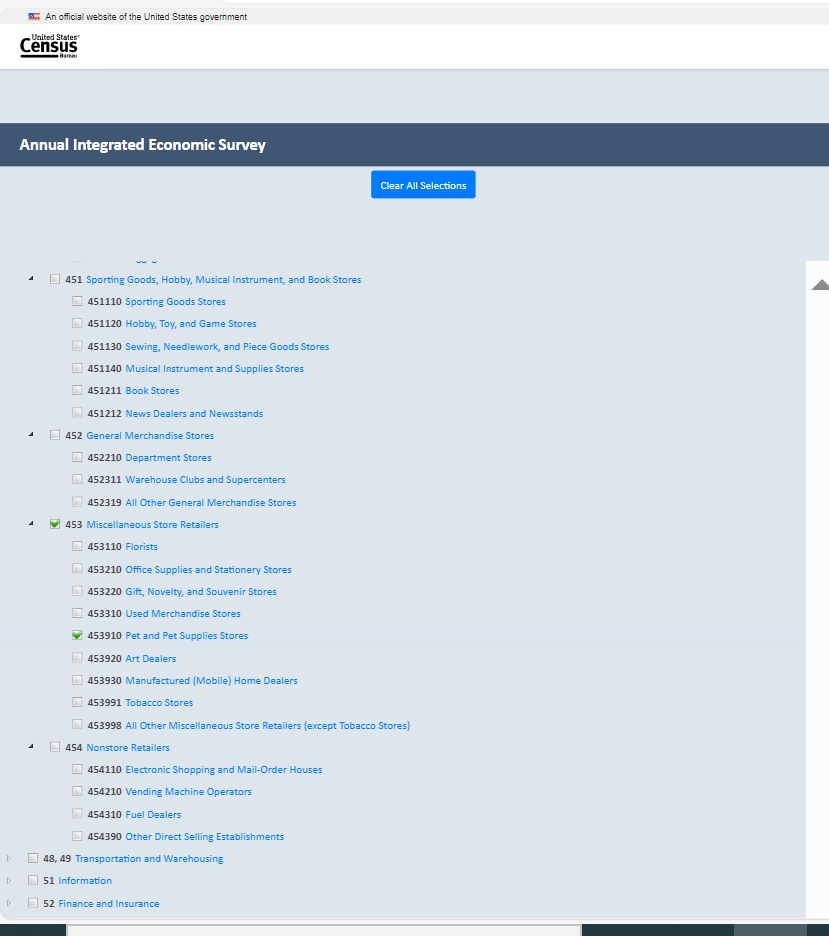

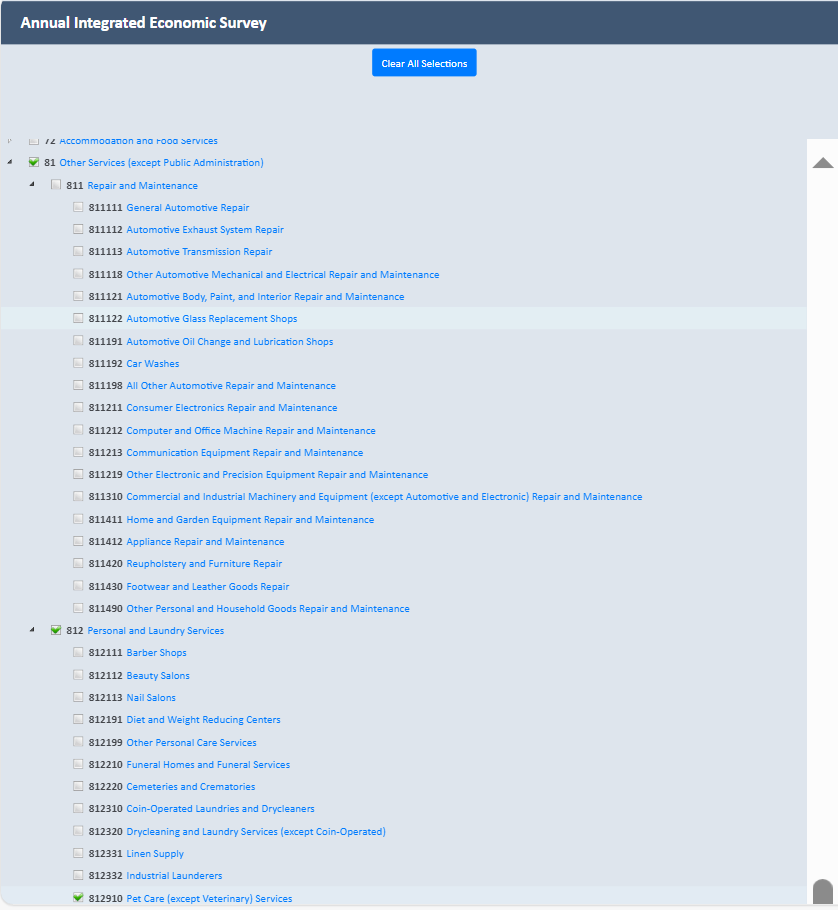

The NAICS display on the left first shows the two-digit NAICS titles, with a plus (+) button to expand out to more granular levels of NAICS. Respondents could select their NAICS at whatever level they preferred. Note, however, that the AIES is driven by the six-digit NAICS code of each location. See Figure 14 for an expanded screenshot of the NAICS classification selection tree.

Figure 14: NAICS Expanded Selection Tree on the AIES Content Tool

As a respondent selected their NAICS, the in-scope content automatically populated to the appropriate unit of collection tab on the right. Respondents could then click the tab to see what questions at that level would be in scope for their company. If a respondent wanted to clear their selections, they could press a blue “Clear all Selections” button at the top of the selection tree.
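The selection behavior described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the tool's actual implementation: the catalog entries, question identifiers, and NAICS scopes below are hypothetical placeholders, and the prefix-overlap rule is assumed so that a selection at any NAICS level brings in content scoped above or beneath it.

```python
# Units of collection, matching the three tabs in the tool.
UNITS = ("company", "industry", "establishment")

# Hypothetical catalog: (question id, unit tab, NAICS prefixes in scope).
# An empty-string prefix marks a question shown to all companies.
CATALOG = [
    ("Q_COMPANY_ALL", "company", [""]),
    ("Q_INDUSTRY_A", "industry", ["44", "45"]),
    ("Q_ESTAB_B", "establishment", ["311"]),
]


def overlaps(selected: str, scope: str) -> bool:
    """True if one code is a prefix of the other (assumed matching rule)."""
    return selected.startswith(scope) or scope.startswith(selected)


def questions_by_tab(selected_codes, catalog=CATALOG):
    """Group in-scope question ids under each unit-of-collection tab."""
    tabs = {unit: [] for unit in UNITS}
    for question_id, unit, scopes in catalog:
        universal = "" in scopes
        if universal or any(
            overlaps(code, scope) for code in selected_codes for scope in scopes
        ):
            tabs[unit].append(question_id)
    return tabs


# Landing page or "Clear all Selections": only universal content remains.
print(questions_by_tab([]))

# Selecting a six-digit code picks up content scoped to its two-digit sector.
print(questions_by_tab(["441110"]))
```

Under this assumed rule, selecting a coarse two-digit code would show every question scoped beneath it, so a user could see more content than their six-digit codes would actually trigger in the survey.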

AIES Phase III Response Support Materials Findings

During the AIES Phase III collection, we conducted a series of additional investigations to gauge successes and areas of improvement for the survey. This included a Response Analysis Survey, respondent debriefing interviews, and others. Some of these methodologies included respondent feedback on the interactive content selection tool, and those findings are outlined here. Note that these are findings from research related to the implementation of the Pilot Phase III; we also conducted standalone testing of the tool, described later in this report.

Generally, respondents indicated that they rely on a survey preview to support response. They mentioned in interviews that not having this in place made response very challenging. Most were unaware of the support materials. As a result of this feedback, we suggested highlighting this tool and other information on the site earlier in communications with respondents.

Method of Research

In this section of the report, we will outline the details of the methods of research used to further develop the AIES Interactive Content Tool at the conclusion of the AIES Pilot Phase III. We conducted 23 semi-structured interviews over a two-and-a-half-week period in 2024.

Recruitment

In January 2024, researchers sent an e-mail requesting participation in the content tool testing to 278 companies that had participated in the AIES Pilot Phase III (Dress Rehearsal) but had not participated in any other research activities from this effort, including other debriefing interviews, the Response Analysis Survey, or usability testing. From that recruitment e-mail (see Appendix A), we scheduled and conducted 23 interviews. Interview participants were informed that participation in this study was voluntary and provided consent to participate (see Appendix B for the consent form).

Materials development

Survey methodologists in the Economic Directorate worked with programmers to make improvements to the early version of the content tool (see Appendix C for the tool as it existed at the end of Phase III of the Pilot). As the tool was updated, we incorporated these changes into our interviewing protocol to test whether the changes were well received by participants. With the feedback provided by respondents during the content tool testing as well as the interviewers’ observations, we continued to incorporate improvements to the content tool prior to the AIES launch in March. Screenshots of the version that we launched with the full production AIES can be found in Appendix D.

Participant overview

With the exception of one participant, the participants of the content tool testing had completed the AIES Pilot Phase III and were familiar with the survey.1 This testing included companies of varying geographic locations, sizes, industries, complexities (i.e., number of NAICS codes), and other characteristics. Furthermore, participants in this evaluation were in a variety of job titles, including:

Human Resources staff, including: Human Resources Specialist, Manager, Director

Accounting staff, including: Accountant, senior accountant, accounting manager

Financial staff, including: Financial analyst, financial consultant, head of finance department, director of finance

Executive staff, including: Chief Executive Officer, Executive Vice President, Controller

Other professional staff, including: Office manager, senior project coordinator, payroll manager

Interviewing protocol

Researchers used a semi-structured interviewing protocol to guide data collection for this project. This protocol included articulated research questions, and drew on both cognitive testing – participants’ understanding of the purpose of the tool and the terminology within the tool – and usability testing – participants’ interactions with the tool interface. This section outlines the interviewing protocol; see Appendix E for the full interview protocol. Note that interviewers did not necessarily ask all questions on the protocol, and that as the tool was iteratively updated, interviewers updated the protocol to match.

Research Questions

This testing was guided by two main research questions, each with sub-questions nested underneath. These include:

Do users understand the purpose of the tool?

Do they identify that the content is industry driven?

Do they understand collection unit differences?

Does this tool meet the need for the ability to preview the survey prior to reporting?

Can users generate content that matches their AIES content?

What features of the tool are intuitive? What features are not?

What additional features might support response?

Cognitive testing

First, interviewers asked participants about their understanding of the tool and of the phrases and terms used within the content selection tool. We began with the following prompt to center participants within the response process:

Imagine that you have entered the AIES survey on the respondent portal and would like to preview questions to the survey in preparation for coordinating the gathering of the data you will need to respond to this survey. Where would you expect to find information on the Interactive Question Preview Tool?

By starting with this statement, we were inviting participants to consider their actual response processes once they had accessed the survey instrument. In this way, we were trying to induce a more realistic orientation to interacting with the tool, compared to just opening it fresh without context.

We then moved into a section on comprehension of the tool, both purpose and phrasing. This included standard cognitive interviewing probes like asking the participant to describe instructions using their own words, and asking about specific words or phrases known to be ambiguous or confusing from other testing.

Usability testing

Once we had a better understanding of participants’ interpretation of the tool purpose and phrasing, we engaged in some usability testing. Usability interviewing is a task-oriented, semi-structured interviewing methodology. Interviewers provide users with specific tasks designed to mimic the actions they would need to do when interacting with the content selection tool outside of the testing environment. The success or failure of the tasks allows researchers to assess the functionality, effectiveness, and efficiency of the tool.

For the purposes of this research, the usability tasks were focused on the participant’s ability to complete basic tasks such as selecting all industries that apply to the business, clearing selections, accessing questions across unit specific tabs (company, industry, and establishment), and exporting a question preview file.

Methodological Limitations

While the tool testing interviews resulted in a wealth of qualitative feedback from participants, we caution that this testing is prone to the same methodological limitations as most qualitative research.

First, we note that participant recruitment was geared toward representation, not representativeness. We did not select a representative random sample, nor do we assert that the results from this testing are representative of all respondents’ needs or experiences with the content selection tool specifically or the AIES generally. Instead, these interviews are a window into the experiences of those participants who volunteered to be interviewed for this project. We can take their feedback as reflective of their own survey response process, which may be similar for others, but we cannot know with certainty who we may have missed or whether these participants are markedly different from other respondents to the AIES.

Next, we note that the content selection tool has a limited mission: to provide a means of previewing the survey questions for any given company in-sample for the AIES, but outside of the survey instrument. This is an important limitation: the tool is hosted outside of the survey instrument itself and so it depends on the user to select the appropriate drivers of content (mostly their six-digit North American Industry Classification System – NAICS – code or codes) to accurately display the correct questions for their company. Later in this report, we will outline the issues around selecting the correct NAICS code(s), but for now, we forewarn that this technological limitation may lead to content that is out-of-scope or may lead to content that does not display due to user error in selecting NAICS codes.

Finally, we note the very quick turnaround of this research. We identified the need for this testing at the conclusion of the field period for the AIES Phase III Pilot (November 2023) and needed changes implemented prior to the March 2024 launch of the full production AIES. Because of this tight timeline, we could not conduct the research in phases, following up on all changes to ensure implementation improved the user experience. Instead, we iteratively updated the interviewing protocol to address iterations of the tool as they were developed and published. We recommend more robust tool testing follow up once the full production survey has launched.

Findings and Recommendations

This section of the report outlines the general and specific findings from the described research. General findings pertain to patterns of responses that related widely to the survey response process, the survey generally, or other wider topics. Specific findings are those that relate to the research questions that guided these interviews.

General Findings

Finding 1: Participants are unclear on the purpose of the survey and are unsure how to access the AIES.

Participants often do not understand the purpose of the survey. Despite the burden they face in completing the survey in terms of the time commitment and level of effort required, they often do not know how their data are used or how to go about accessing results. One participant expressed this sentiment by saying “When we submit results, we never see anything but national news. I want to know the benefit of me submitting my data (i.e., business trends, executive summary, etc.). [It’s like] submitting data to the abyss.” They went on to note that completing the survey “would be a better use of my time if the results were applicable for me and printed outcomes or results would create a better incentive. This is a lot of work.” Said another, “there’s no ownership in this for us... there’s no motivation or inspiration to participate. Time is money for small businesses.” This is not unique to the AIES; rather, it is feedback that researchers at the Census Bureau frequently hear from respondents.

Recommendation 1: Test messaging on communicating the purpose of the survey and how to access results in future AIES communications research projects.

In future AIES communications research projects, consider incorporating research on how to effectively and efficiently inform respondents about how their data are used and how to access results, as this may be a motivator for response for some respondents.

Finding 2: Gathering data to respond to the survey can be a multi-step process.

To complete the survey, most respondents must reach out to others in their organization and/or third-party vendors (e.g., contracted accountants) to gather the required data. This is often a source of frustration for respondents as it can take time and sometimes requires the respondent to provide reminders and additional information/clarification to get the data from others. Said one participant, “sometimes I have to reach out to our third-party administrator or vendor that helps handle our books and that creates just another step in the process [to respond]. I have learned to print out or save the [survey preview] and send to accounting, and they fill out numbers and they send back to me and I merge it all together.” Note here the participant’s reported reliance on being able to pass a preview to others within the company to get the data. This participant went on to say that once they request the data, “sometimes [others in the company] are too busy to get us the information and that becomes an issue too. [These are] issues out of our own control, it’s how the business is structured.”

In some larger and more complex companies, it may take some time to first identify the person or persons with access to the data required for some parts of the survey. Said one, “I have to reach out to our corporate office because we are a franchise. The owner has access to all of that information but he can be absent sometimes or unsure of where to find it. So, I have to reach out to corporate and track it down or guess based on the information available to me.” Said another, “I have to reach out to multiple other teams to get the information that we need,” calling this process “extremely frustrating” and noting that “Census surveys are the least favorite part of my job. They are very, very difficult because everything is done by EIN number and address and that’s just not how we organize our data internally.” In particular, “the AIES last time was incredibly frustrating because I could not see my questions in advance. In the past, I have been able to send out questions and delegations in advance. For the AIES I had to do it piecemeal, and it takes a long time and lots of back and forth and these are very busy people I’m reaching out to and it’s just a very frustrating process,” again highlighting the importance of survey previews for gathering the data to respond.

Recommendation 2: Develop additional tools to support response delegation and assist respondents in data gathering efforts.

In considering that collecting the data necessary to respond to the survey often involves coordinating more than one person at the business, we recommend developing additional tools to support and encourage response delegation and assist respondents in data gathering efforts. This could include letter or email templates that survey coordinators at businesses could use to assist them in their efforts to gather data from others to complete the survey, as well as flyers, instructions, or other information on survey delegation functionality built into the survey instrument. Further, we recommend that this topic be explored as part of a larger AIES communications research project in the future.

Finding 3: NAICS codes can be a source of confusion for respondents.

Respondents, especially those with numerous NAICS codes, sometimes do not agree with the NAICS codes that Census has assigned to their company. This is a source of confusion for respondents. Later in this report, we outline how this incongruity impacts the functionality of the content selection tool, but we have evidence to support that it impedes accurate reporting in other aspects of the survey response process. Said one interview participant about their company’s assigned NAICS codes, “I realized [that the Census Bureau] prepopulated NAICS codes based on your information. We don’t have things broken out that way, [so] Census must have assigned these codes… I think we should only have 4-5 NAICS codes but we have about 30.” Another expressed both confusion at their assigned NAICS codes and frustration at an inability to update or alter them, asking “How do I ever fix my NAICS codes or say this doesn’t really apply to my industry, can I skip that question or something along the lines?,” and then “I feel like there’s nowhere to go to explain [NAICS assignments] to me.” A third participant suggested a mechanism for verifying NAICS codes, saying “It would be nice if we could download our options – like a full NAICS listing with details of what each code encompasses. We could take that list and circulate it and see with our executives if they agree [with the assigned classifications].”

Recommendation 3: Engage in additional investigation into the NAICS taxonomy from the respondents’ perspective.

The difference between how companies classify themselves and the classifications that are assigned by the Census Bureau warrants additional investigation into the NAICS taxonomy from the respondents’ perspective. While this investigation is outside of the scope of the current program, we continue to recommend that the assignment and pre-population of NAICS codes on the survey remain a subject of investigation in future research on economic surveys.

Specific Findings: Interactive Content Tool

In this section of the report, we outline the findings and recommendations related to the research questions guiding this interviewing, listed again:

Do users understand the purpose of the tool?

Do they identify that the content is industry driven?

Do they understand collection unit differences?

Does this tool meet the need for the ability to preview the survey prior to reporting?

Can users generate content that matches their AIES content?

Finding 4: Participants like the tool and want early notification.

The first finding from this testing related to the tool is that interviewing participants liked the tool once they heard about it. Many participants had positive responses to the tool once they were aware of it, some indicated that they would use the tool, and some mentioned wanting to know about the tool early in the response process.

Many participants provided positive feedback on the development of a content selection tool, and noted its importance to the response process. Said one, “previewing [the] questions in advance is essential to how I need to [respond to this survey] because I’m looking at the questions and trying to figure out how to delegate who has the information to answer what,” emphasizing the importance of a survey preview to delegating response. Another echoed this sentiment, saying that with a content selection tool, “I know exactly what I need to request from my partner departments, if needed, ahead of time. And I don’t have to wait until I’m in the survey…having this ahead of time is very, very helpful.”

We followed up by asking participants to estimate the likelihood that they would use a tool like this if they had known about it while completing the AIES Dress Rehearsal. Most answered affirmatively, stating that this tool would make responding easier. Said one, “Yeah, I think I would [use this tool] now that I understand. It would be helpful to review the questions…” Said another, emphatically, “Yes, I absolutely would use it. I would list out how I answered these questions last time and in this column would be how I would answer them this time. This would be my basis for answering the questions and having them reviewed before uploading or entering them into the Census portal,” outlining how this tool could be used to support entering response into the survey instrument.

We noticed that most participants indicated that they did not know about the tool when providing response. So, we asked them: how would you expect to learn about this tool? Most indicated that they would want to hear about it early in the response process, with one saying, “I would expect it when we get the first notification that it be readily available in an invitation e-mail to take you to it.” Another pointed to the same notification, the invitation email, saying “I would be looking for that in the email from Census, [as] a link in that initial email. Or in the portal after adding the survey in the dashboard, there should be a link telling me where to go to get the questions.”

Recommendation 4: Include the tool in early communications, and make it more prominent in the materials ecosystem.

Because participants positively reacted to the tool and indicated it would support the response process, we recommend including information about the tool, including a weblink, prominently and early in survey invitations. We also suggest raising the profile of this tool in the materials ecosystem on the AIES website, providing a link to it from the landing page if possible. The survey area may also want to consider including a link to the tool from within the survey and from within the respondent portal to raise awareness of its existence and to drive respondents to use it.

Finding 5: Participants struggle with their NAICS selection within the tool.

A second major finding from testing the tool is that participants struggle with selecting their company’s NAICS code. This is a critical part of interacting with the tool, as the user’s NAICS selection drives the population of the questions in the tool. This breakdown is related to three key issues: first, some participants struggled with identifying the appropriate NAICS code for their business; next, others struggled with how the tool is related to the survey response; finally, some participants struggled with the usability of selecting their NAICS code from the selection tree on screen.

First, many participants struggled with identifying the appropriate NAICS code for their company. This finding is supported by other respondent-centered interviewing, which has identified that NAICS codes can be challenging for respondents to understand and to map on to their business. One simply stated, “It would be helpful if you told me what our NAICS code is or where to find it” when using the tool, suggesting that they do not know their NAICS code(s) handily. Several participants mentioned that they would “guesstimate” their NAICS code, with one saying “I would just pick one that seemed close” and another invoking “eenie, meenie, mine-ie, moe” from a few codes that could apply. One noted uncertainty about the “level of detail [they] need to select”, wondering if their two-digit NAICS code is enough to get them what they need in the tool.

Other participants wondered how the NAICS selection in the tool would interact with the NAICS populated in their survey. As a note, the tool and the survey are independent of each other; they do not interact at all, and selection on the tool has no bearing on population in the survey. Said one, the tool is “not clearly laid out,” and that “already sets an interesting tone for the survey. Crap, what if I input all of this in the wrong industry and get dinged on it?” within the survey, suggesting that their concern was that incorrect input on the tool would generate incorrect content in the survey.

Finally, many participants commented on the functionality of selecting their NAICS codes. The initial selection tree is at the two-digit NAICS labels, and users can drill down with increasing specificity to identify their six-digit NAICS code. This selection tree interface proved to be burdensome and confusing, especially for the largest companies (those that would be most in need of this tool). Said one, “it would be helpful to have a box to type in [my industry keyword] to search” for the appropriate NAICS, while another said more simply, “I would like to have a search option.” Another requested “more ease in selecting a NAICS code” after struggling to select a code, prompting them to ask, “is my type of company or business not worthy of being able to easily figure out [the correct NAICS code]?”

Recommendation 5: Improve the NAICS selection interface within the tool.

Time and again, participants struggled with the NAICS selection tree interface. We suggest including keyword search functionality, like that of the general NAICS lookup website, as well as the ability to deselect all prior selections if a respondent has misclassified their business. Because NAICS does not necessarily reflect the ways that businesses think about themselves, we also recommend additional instruction on how to locate the correct NAICS classifications from within the survey instrument as a means of guiding users to correct selections on the tool.
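
As a minimal sketch of how a keyword search of this kind could behave, the Python below matches keywords against a small lookup table of NAICS codes and titles. The table uses only the codes from the fictional Census Cat Company example in Appendix C, and the function and variable names are hypothetical; this is an illustration under those assumptions and does not describe the tool's actual implementation.

```python
# Hypothetical lookup table: codes and titles are taken from the fictional
# Census Cat Company example in Appendix C. A real tool would load the
# full NAICS listing instead.
NAICS_TITLES = {
    "311111": "Dog and Cat Food Manufacturing",
    "325998": "All Other Miscellaneous Chemical Product and Preparation Manufacturing",
    "424490": "Other Grocery and Related Products Merchant Wholesalers",
    "424990": "Other Miscellaneous Nondurable Goods Merchant Wholesalers",
    "453910": "Pet and Pet Supplies Stores",
}


def search_naics(keywords, titles=NAICS_TITLES):
    """Return (code, title) pairs whose title contains every keyword.

    Matching is a case-insensitive substring match, so a short keyword
    can also match inside longer words.
    """
    terms = [term.lower() for term in keywords.split()]
    if not terms:
        return []  # an empty search returns nothing rather than everything
    return sorted(
        (code, title)
        for code, title in titles.items()
        if all(term in title.lower() for term in terms)
    )


def sector_of(code):
    """Return the two-digit prefix, the top level of the selection tree."""
    return code[:2]


if __name__ == "__main__":
    for code, title in search_naics("merchant wholesalers"):
        print(sector_of(code), code, title)
```

A search box of this kind would let a user type an industry keyword rather than drill down from the two-digit level, which is the behavior participants requested. Substring matching is a deliberately simple choice here; a production search would likely need synonyms and partial-word handling.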

Finding 6: Participants struggle with the tool interface.

The usability section of the interview revealed that participants struggled with some aspects of the tool interface. These issues are particularly related to the tool behavior upon NAICS selection as well as the inability to save or export survey question previews from the tool.

One issue identified early in testing was that the default view of the tool did not allow users to see that it was updating as they made selections, that is, participants could not tell that anything was changing as they were clicking their NAICS. This is because the original default view was at the company-level of the survey, the unit of collection with the least amount of content tailoring based on NAICS selection. Said one participant, “I’m assuming that if I hit the six-digit NAICS, that questions would come up, and nothing came up!” reflecting their confusion that they had made a selection, but the view of the tool had not updated in any discernable way. Another echoed this confusion, saying “I would assume I would click the blue words [for my company’s industry] and that something would come up, but nothing is coming up.”

Another issue was that participants noted that their questions were generated but they remained displayed within the tool; there was no way to share the question preview with others in their company or to save it for future uses. Said one participant, “it doesn’t show me where I can save a page and then go back and enter information later,” demonstrating a desire to access the results later.

Recommendation 6: Update the default view and develop download or save capabilities.

Given this feedback, we recommend that the landing page for the content selection tool be a set of instructions for use – that way, users will have to click to the other tabs in the layout to access the dynamically generated preview of questions. In tandem, we recommend continued refinement to instructional text, including locating NAICS classification from within the survey, selecting NAICS and accessing survey previews, and saving or exporting results. This last component – the ability to save or export the survey preview for future use and to share with others within the company – is strongly recommended prior to full production implementation.

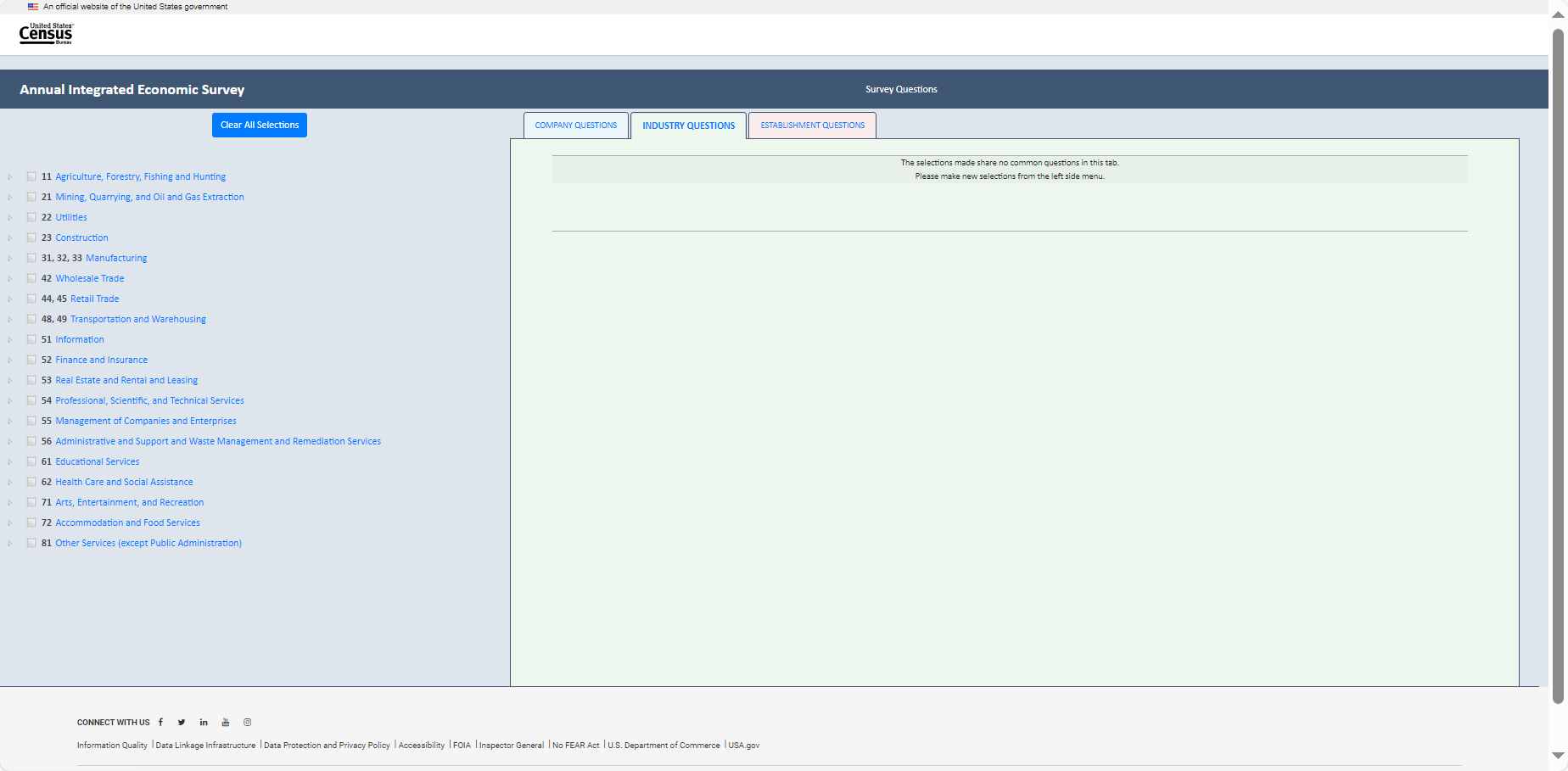

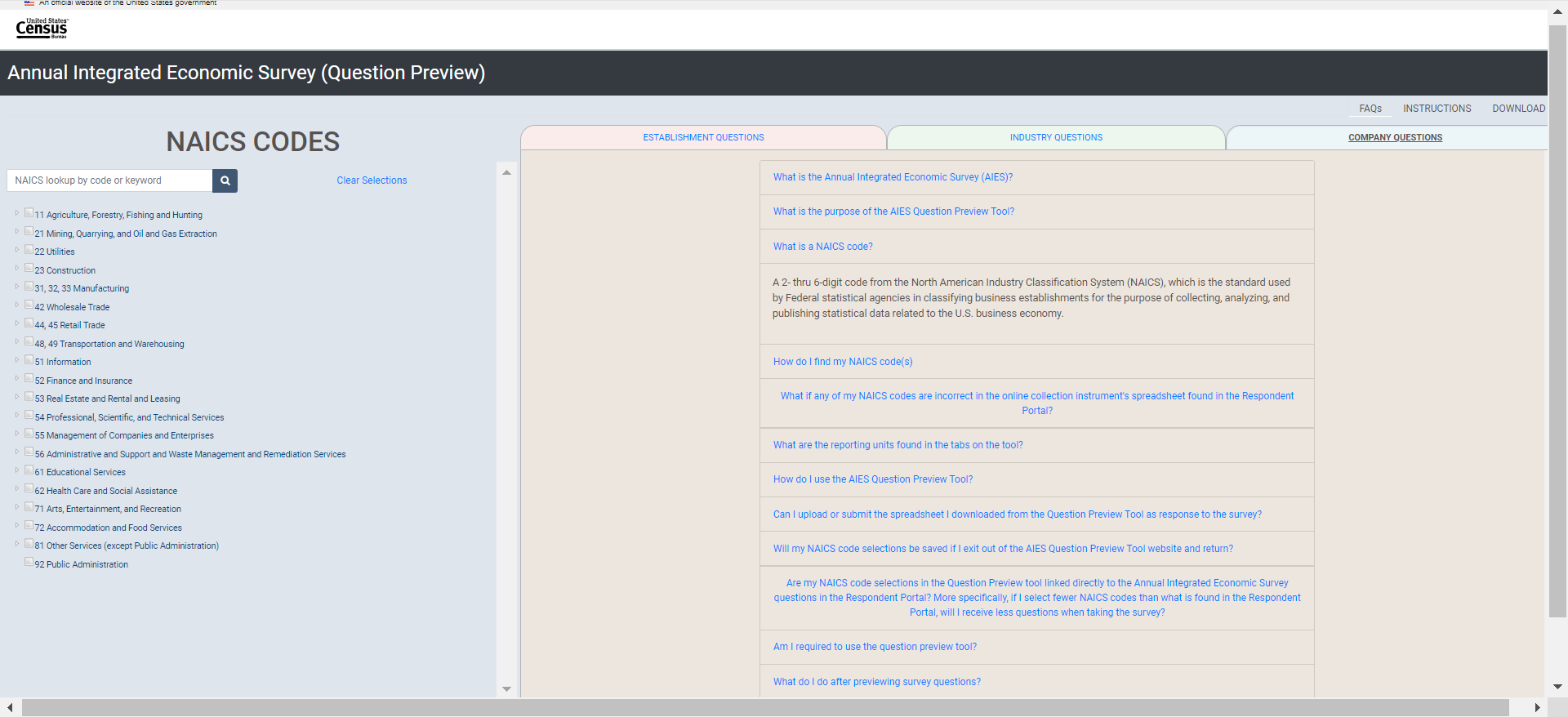

Finding 7: Participants want the tool to relate to the survey more clearly.

From the interviews, it became clear that respondents want the tool to relate to the survey more clearly. As illustrated in Figure 15 below, a screenshot of the content selection tool version we tested with respondents, the webpage header was labeled “Annual Integrated Economic Survey.”

Figure 15: Screenshot of the AIES Interactive Content Tool from Testing

For some participants in the content selection tool testing, this created some confusion as to the purpose of this website. More specifically, some participants thought that this was the actual AIES survey given how the page was labeled. Said one, “It is not necessarily clear that this is only for the purpose of previewing questions.” Another suggested that we re-label the page in a way that it would be clear that this page is for question preview only, saying “when I see Annual Integrated Economic Survey – I’m thinking that this is the actual survey and that I can preview and input [data] here. Maybe in parenthesis you can [label] “Preview Only”.”

Some participants noted that question details were not provided in the interactive question preview tool version that was tested, and expressed concern about it. More specifically, this version of the tool only included question text, and none of the ‘include/excludes’ or other question-specific instructional text. Respondents explained that question details are critical to the process of gathering data. Said one, “the instructions on what to include and exclude is critical, especially when I’m asking others for information and this [tool] does not do that. That is a critical part of this [tool] being helpful for me.” Furthermore, when a respondent has to reach out to others to obtain data required for the survey, it’s helpful to provide the question details in their data requests. To that point, one participant said “It’s always super helpful if I can include a definition in that [e-mail requesting data from others] – it’s key – because [the person receiving the e-mail] will always ask: how are we defining that [word or phrase].”

Recommendation 7: Update the website label and include the question details in the preview.

To make the purpose of the page clear to respondents, the website for the interactive content selection tool should be re-labeled as “Annual Integrated Economic Survey (Question Preview).” Additionally, we recommend that question details be part of the interactive content selection tool. To satisfy respondents’ need for more explicit support text, including providing includes and excludes and response instructions, we recommend adding an optional modal window on the website containing the question information so that respondents can access this feature. As for the CSV spreadsheet export generated by the content selection tool, we recommend including the same question details for respondents.

Next Steps and Future Research

This section of the report provides a brief outline of the next steps and future research, including updated research questions, participant debriefing interviews, website paradata, and future research considerations.

Updated Research Questions

The purpose of the testing described in this document was to identify ways that the AIES interactive content selection tool supports the response process, and ways that it fails to meet respondents’ needs. As a result of this interviewing, and in collaboration with programmers at the Census Bureau, we have made some improvements to the interface and functionality of the tool. However, because of timing and resources, we were unable to test whether these updates lead to improved response processes.

To that end, we suggest future research questions for consideration that address three aspects of tool performance. The first is tracking traffic to the site, including overall numbers of users, timing, and navigation routes. The second is additional user evaluation of the site, again focused on the cognitive and usability aspects of using the tool. The third is performance of the tool, including the patterns of interaction with NAICS selection. We intend to explore these topics in various ways, including participant debriefing interviews and website paradata. See Table 1 for an overview of suggested updated research questions.

Table 1: Research Questions and Modalities for Future Tool Refinements

| Topic | Question | Method of Inquiry |
| --- | --- | --- |
| Traffic and timing | How many unique visitors traffic the site over the AIES field period? | Analyses of paradata – unique visitors |
| | How is traffic to the site related to the timing of respondent communications materials, like emails, letters, and telephone follow-up? | Analyses of paradata – visitors by mail, email, and phone call timing |
| | How is traffic to the site related to the content of respondent communications materials? | Analyses of paradata – visitors by communications content |
| | How are users getting to the site? | Analyses of paradata – “click through” rates from email, use of bookmarks, and routes through the AIES website to get to the tool page |
| User Evaluation | Are the content selection tool and summary document sufficiently supporting response? | Respondent debriefing interviews – questions about response processes |
| | Do users understand the purpose of the content selection tool? | Respondent debriefing interviews – cognition questions |
| | Can respondents effectively and efficiently use the content selection tool? | Respondent debriefing interviews – usability questions |
| Performance metrics | What are the patterns of searching for and identifying NAICS codes for users? | Paradata analyses – NAICS search terms and selection practices |
| | With what frequency and with what patterns are users downloading their resulting survey previews? | Paradata analyses – download patterns |

Participant Debriefing Interviews

Beginning in April 2024, researchers will conduct participant debriefing interviews with up to 50 AIES respondents. The interactive content selection tool will be a topic that interviewers will discuss with respondents in these debriefing interviews. Questions in the interviewing protocol address both the cognitive aspects of the tool – do participants understand the purpose of the tool, the terminology used in the tool, and the instructional text that supports the tool? – as well as usability aspects of the tool – can participants generate a survey preview tailored to their business effectively and efficiently? This interviewing will continue through the field period, with findings and recommendations expected in fall, 2024. These findings should be carefully considered for future iterations of the content selection tool.

Website Paradata

Additionally, we suggest analyses of paradata generated by the interactive content selection tool website to learn about how survey respondents are interacting with this resource. It would be helpful to gain insight on when respondents are accessing the website, how much time is being spent on the website, the number of clicks to complete the task of using the website, how many hits the website gets, how many users are exporting their questions to a CSV file, and other interactions with the site. We suggest pulling the paradata from the site at the conclusion of the collection field period (late fall, 2024), as paradata will be helpful in identifying potential areas of improvement for the content selection tool.
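
To illustrate the kind of summary these paradata could support, the Python below is a minimal sketch that tallies a small event log. The record layout, field names, action labels, and sample events are all invented for illustration; the actual paradata format produced by the website is not described in this report.

```python
from collections import Counter

# Hypothetical event records. The field names ("visitor", "action") and the
# action labels are assumptions made for this sketch, not the site's schema.
SAMPLE_EVENTS = [
    {"visitor": "a", "action": "page_view"},
    {"visitor": "a", "action": "naics_select"},
    {"visitor": "a", "action": "csv_export"},
    {"visitor": "b", "action": "page_view"},
    {"visitor": "b", "action": "naics_select"},
    {"visitor": "b", "action": "naics_select"},
    {"visitor": "c", "action": "page_view"},
]


def summarize(events):
    """Return simple traffic and export measures from a list of events."""
    visitors = {event["visitor"] for event in events}
    actions = Counter(event["action"] for event in events)
    exporters = {
        event["visitor"] for event in events if event["action"] == "csv_export"
    }
    n_visitors = len(visitors)
    return {
        "unique_visitors": n_visitors,
        "hits": actions["page_view"],
        "csv_exports": actions["csv_export"],
        # Share of visitors who exported at least once
        "share_exporting": len(exporters) / n_visitors if n_visitors else 0.0,
        # Average number of logged interactions per visitor
        "mean_events_per_visitor": len(events) / n_visitors if n_visitors else 0.0,
    }


if __name__ == "__main__":
    for measure, value in summarize(SAMPLE_EVENTS).items():
        print(measure, value)
```

Measures such as time on site would additionally require timestamps on each record, which this sketch omits.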

Future Research Considerations

Testing for the AIES Interactive Content Selection Tool highlights two additional opportunities for investigation in future rounds of research. These include a wider investigation into respondent communications as well as considerations for survey content in future iterations. In this section of the report, we outline considerations for farther-future research projects with the goal of continuous improvement, lowering respondent burden, and increasing data quality.

Respondent Communications

The AIES Interactive Content Selection Tool is one piece of a broader respondent communications ecosystem. Throughout the survey lifecycle, the Census Bureau sends respondents letters and emails, makes phone calls, posts on social media platforms, updates websites, attends community and professional events, and conducts other respondent engagements to encourage reporting to the AIES. The totality of these efforts supports survey response, but the impact of any one piece of this strategy is under-researched.

To that end, we encourage survey leadership to consider a comprehensive program of research involving respondent communications. We envision this research rolling out in three phases. In the first phase, researchers should perform secondary empirical analyses on the seven annual legacy surveys that comprise the AIES. This analysis should investigate the impact of letters and emails from the Census Bureau to induce response to these surveys. This research could explore the following topics:

Mode of communication – are there differential impacts of email and mail?

“Shelf life” of engagement – how long after receiving a communication is response induced?

Inbound call volume – do specific communications increase inbound call volume?

Class of mail – does elevating the class of mail of letters increase respondent engagement with the survey?

Envelope characteristics – do the type of envelope, stamp, attention line, or other characteristics increase respondent engagement with the survey?

Timing – are there particular days of the week, times of the day, times of the year, or other timing characteristics that positively predict respondent survey engagement?

Communications ecosystem – what impact do survey letters and emails have on other parts of the communications ecosystem, like survey webpages and social media engagement?

Communications sequencing – is there a ‘best sequence’ for messaging, timing, envelopes, and other mailing characteristics to induce survey response?

We recommend that these analyses be conducted using data from the seven legacy annual surveys covering the last ten years of collection prior to their sunsetting in 2023. We surmise that performance for these survey communications could be informative for the AIES, the integration of these seven surveys. We encourage the findings of these analyses to be presented in broader ‘best practices’ for implementation on the AIES, but also to inform the establishment survey field writ large. We also strongly recommend that this work be done in conjunction with wider efforts to implement adaptive design principles in the AIES.

A second research phase dedicated to survey communications would build on the empirical analyses to engage in targeted message testing with respondents. This could include letter, email, and website reviews with respondents, A/B message testing with respondents, and eye tracking and other performance indicators to understand what resonates, what suppresses response, and how (and if) messaging could be targeted or tailored to specific companies of interest. We envision phase two as primarily qualitative in orientation, though it could include additional quantitative analyses using the 2023 AIES in the context of the phase one findings. We also encourage a review of any available paradata and performance metrics on social media postings as additional data on messaging performance. We anticipate this work to occur during calendar year 2025 if appropriate resources are available.

Finally, a third phase of this communications research could take the form of a series of experimental panels implementing varying communication plans. This could include varying the content and timing of letters and emails, switching mode of contact at a different cadence depending on business characteristics, and exploring emerging modes of communication, like QR codes, AI chatbots, and automated “robo calls” to encourage response. These experiments would be carefully designed using random assignment of cases so as to be comparable, and change a single variable at a time so as to isolate differences to changes in the communications strategy.
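
The random assignment described above can be sketched in a few lines of Python. This is an illustration only: the panel labels, case identifiers, and function name are hypothetical, and an actual design would likely also stratify by business characteristics before assigning cases.

```python
import random


def assign_panels(case_ids, panels, seed=0):
    """Randomly assign each case to one panel, keeping panel sizes balanced.

    Cases are shuffled with a seeded generator so the assignment is
    reproducible, then dealt out to the panels in rotation.
    """
    rng = random.Random(seed)
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    return {
        case: panels[position % len(panels)]
        for position, case in enumerate(shuffled)
    }


if __name__ == "__main__":
    # Hypothetical panels that differ in one variable: the first contact mode
    panels = ["control", "email_first", "letter_first"]
    cases = [f"case{number}" for number in range(9)]
    for case, panel in sorted(assign_panels(cases, panels, seed=1).items()):
        print(case, panel)
```

Dealing shuffled cases out in rotation keeps the panels within one case of each other in size, which supports the comparability the design calls for.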

See Figure 16 for an overview of this proposed three-phase communications research project.

Figure 16: Proposed Three-Phase AIES Communications Research Project

Continued Content Refinements

In addition to the communications research project, we encourage survey leadership to explore continued content refinements. This includes two key components: continued content culling, and testing of newly harmonized content.

One key issue with the AIES content selection tool is the volume of questions on the survey instrument overall. Over the rounds of piloting research to support the launch of the AIES, researchers recommended content cuts as a major finding in each investigation. The bringing together of the seven legacy surveys was meant to reduce respondent burden, but in many cases, it simply compounded it by asking for data across multiple units within the business, and by expanding the in-scope content for some companies. We recommend a careful examination of each and every question in the universe of AIES for continued inclusion, with a preference for cuts of those items that are underperforming or poorly reported, outdated, or unnecessary. While we anticipate that future iterations of AIES may be able to replace some of these data requests with administrative records or system-to-system transfers, we cannot wait until that technology is in place to begin to reduce burden and streamline the content.

At the same time, we recognize that bringing together the seven legacy surveys led to a project of harmonizing some content that was sector-specific to be sector-agnostic. Some of this harmonization has not undergone the usual respondent testing to ensure comprehension and reportability, and we recommend a large-scale content test of any question that has changed in the migration from the seven legacy annual surveys to the AIES production instrument. This could include cognitive testing, debriefing interviews, unmoderated web probing, or other appropriate methodologies to refine content and document data accessibility and respondent comprehension.

Appendix A: Recruitment E-mail Draft for the AIES Interactive Question Preview Tool Respondent Testing


Hello,

I hope this message finds you well. I am a survey researcher at the U.S. Census Bureau in the Economy-Wide Statistics Division. You are listed as the contact for your company, and we are reaching out to gather feedback on a potential new question preview tool for a Census Bureau survey. We would greatly appreciate your feedback on it.

Below is a link to select a date and time to meet with us. The meeting should take less than 45 minutes to complete. No advance preparation required.

Follow this link to the Survey: Schedule Meeting

Or copy and paste the URL below into your internet browser:

[https://research.rm.census.gov/________________________________________________]

If you have any questions or concerns, please feel free to contact me via e-mail or phone. Your participation in this research is voluntary and invaluable.

Thanks in advance for your consideration.

[NAME, TITLE]

Economy-Wide Statistics Division, Office of the Division Chief

U.S. Census Bureau

[O: PHONE]

Appendix B: Participant Informed Consent Form

CONSENT FORM

The U.S. Census Bureau routinely conducts research on how we, and our partners, collect information in order to produce the best statistics possible. You have volunteered to take part in a study of data collection procedures.

We plan to use your feedback to improve the design and layout of the form for future data collections. Only staff involved in this product design research will have access to any responses you provide. This collection has been approved by the Office of Management and Budget (OMB). This eight-digit OMB approval number, 0607-0971, confirms this approval and expires on 12/31/2025. Without this approval, we could not conduct this study.

You have volunteered to take part in a study of data collection procedures. In order to have a complete record of your comments, your interview will be recorded (e.g., audio and screen). We plan to use your feedback to improve the design and layout of survey forms for future data collections.

AUTHORITY AND CONFIDENTIALITY

This collection is authorized under Title 13 U.S. Code, Sections 131 and 182. The U.S. Census Bureau is required by Section 9 of the same law to keep your information confidential and can use your responses only to produce statistics. Your privacy is protected by the Privacy Act, Title 5 U.S. Code, Section 552a. The uses of these data are limited to those identified in the Privacy Act System of Record Notice titled “COMMERCE/CENSUS-4, Economic Survey Collection.” The Census Bureau is not permitted to publicly release your responses in a way that could identify you, your business, organization, or institution. Per the Federal Cybersecurity Enhancement Act of 2015, your data are protected from cybersecurity risks through screening of the systems that transmit your data.

BURDEN ESTIMATE

We estimate that completing this interview will take 60 minutes on average, including the time for reviewing instructions, searching existing data sources (if necessary), gathering and maintaining the data needed (if necessary), and completing and reviewing the collection of information. You may send comments regarding this estimate or any other aspect of this survey, including suggestions for reducing the time it takes to complete this survey to [email protected]

Click here to participate in this research.

I agree to participate in this research

Enter your information below:

First Name: __________________________________________________

Last Name: __________________________________________________

Appendix C: Screenshots of the Content Tool at the Conclusion of the Phase III Pilot (Prior to Interviewing)

Item 1: Landing page for the AIES Question Preview Tool

Item 2: NAICS Search

Item 3: Company Questions tab (no selections entered)

Item 4: Industry Questions tab (no selections entered)

Item 5: Establishment Questions tab (no selections entered)

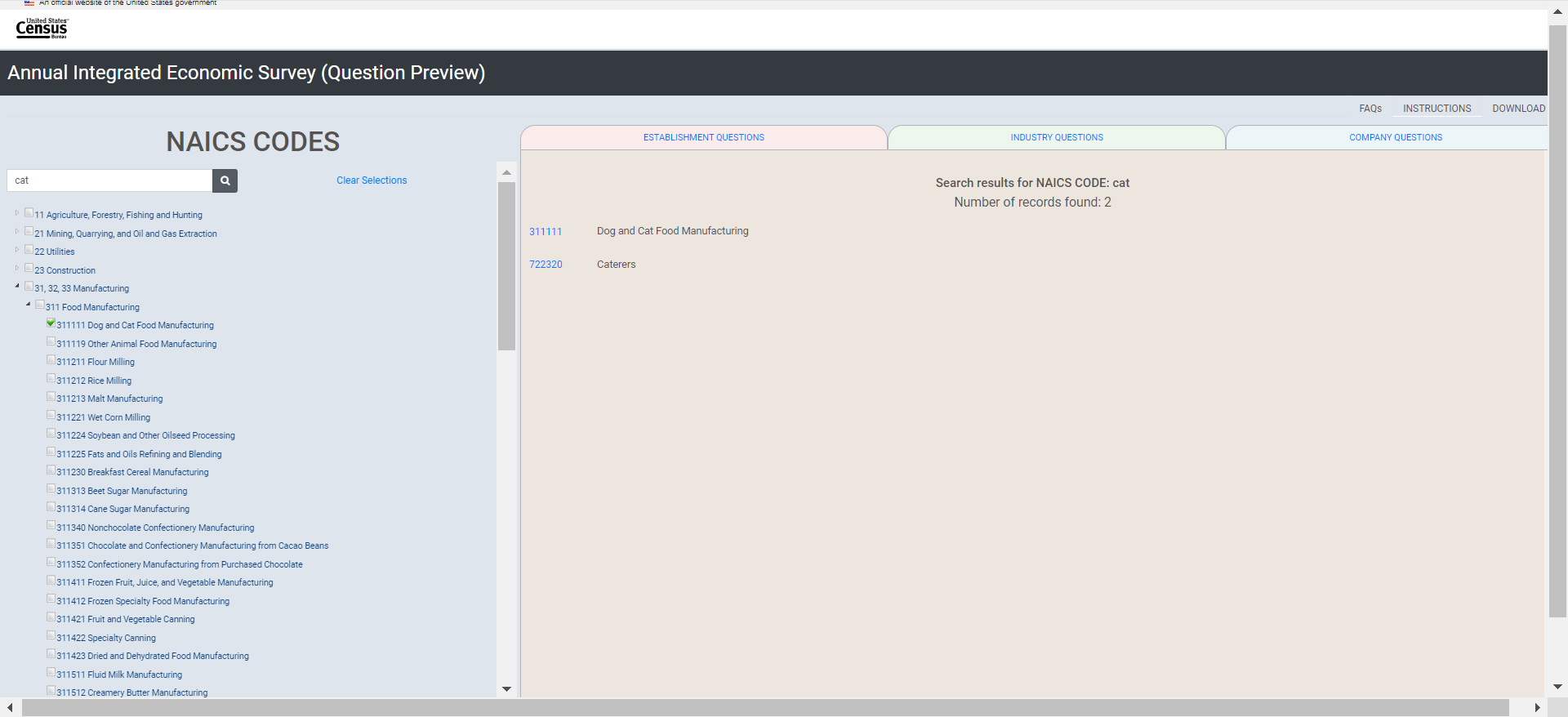

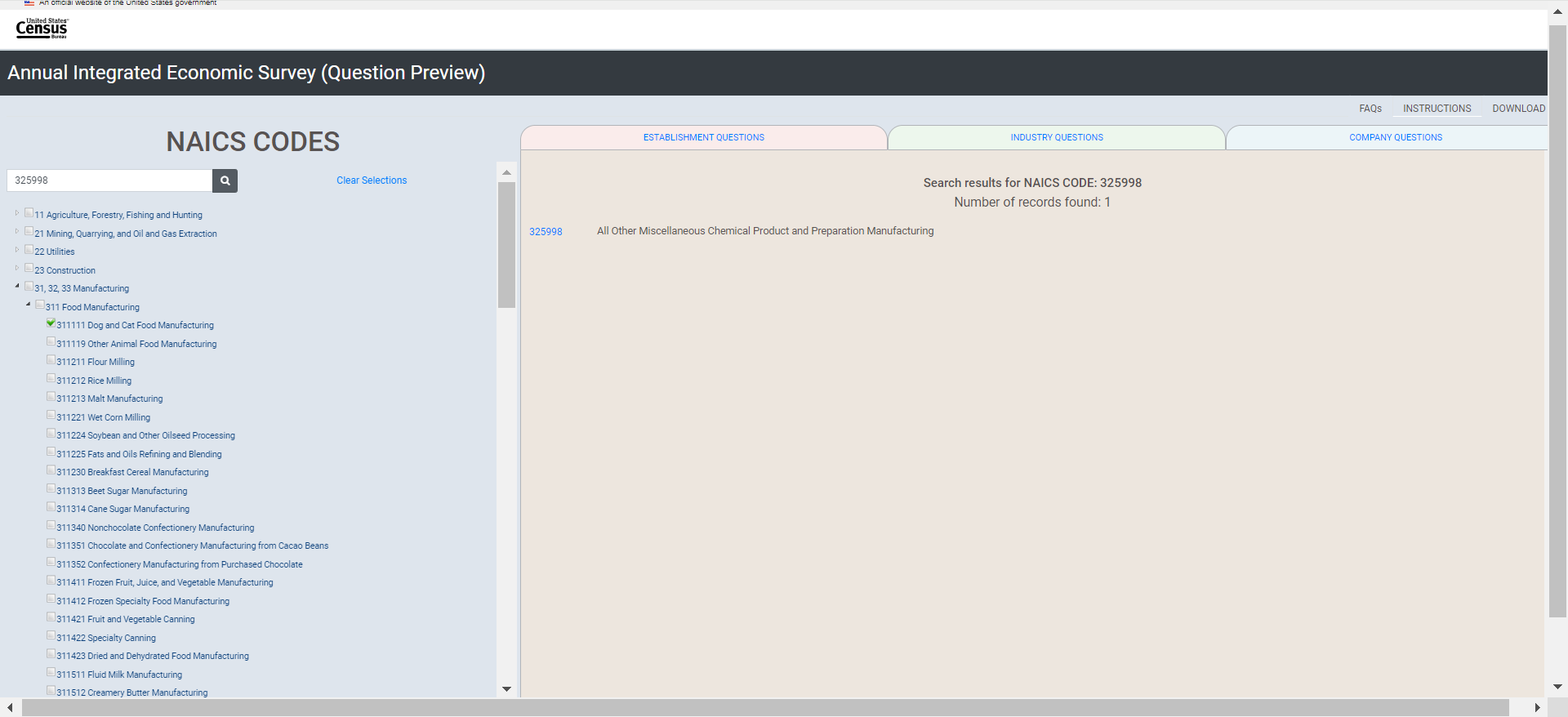

In this next section, we use the fictional example of the Census Cat Company to illustrate interactions with the Content Selection Tool. Users will make selections based on their respective businesses. The Census Cat Company example is for illustrative purposes only.

Item 7: Answer key for Example of Census Cat Company

NAICS |

Industry |

311111 |

Dog and Cat Food Manufacturing |

311111 |

Dog and Cat Food Manufacturing |

325998 |

All Other Miscellaneous Chemical Product and Preparation Manufacturing |

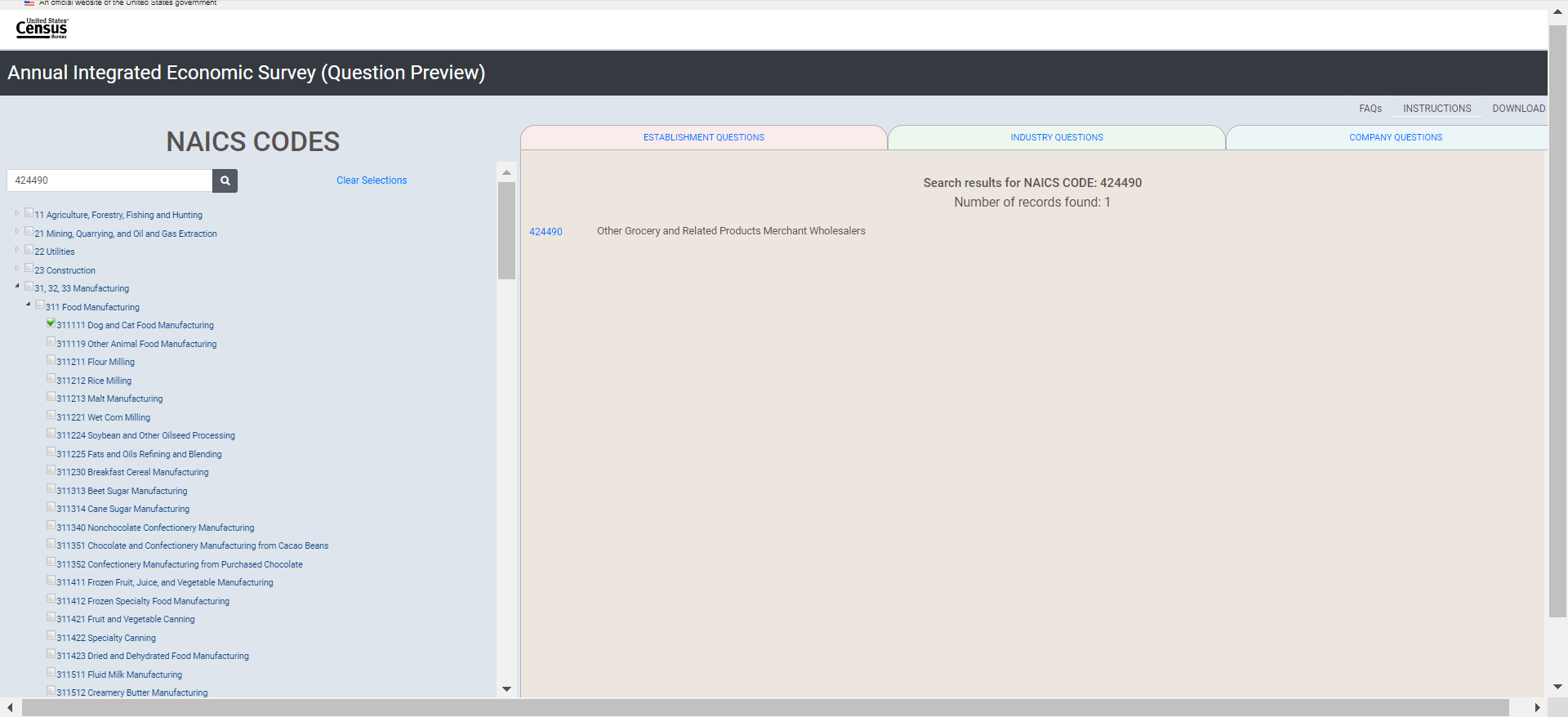

424490 |

Other Grocery and Related Products Merchant Wholesalers |

424990 |

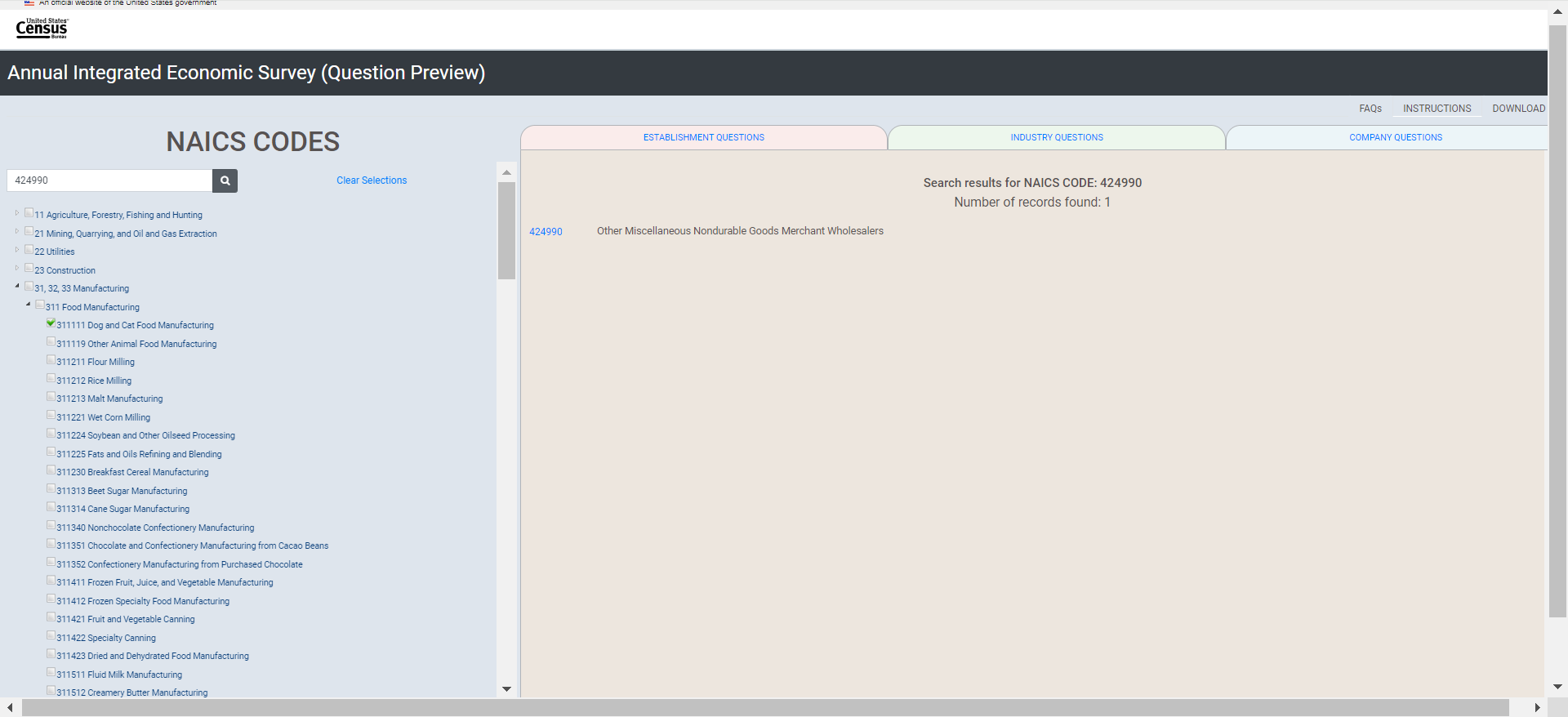

Other Miscellaneous Nondurable Goods Merchant Wholesalers |

453910 |

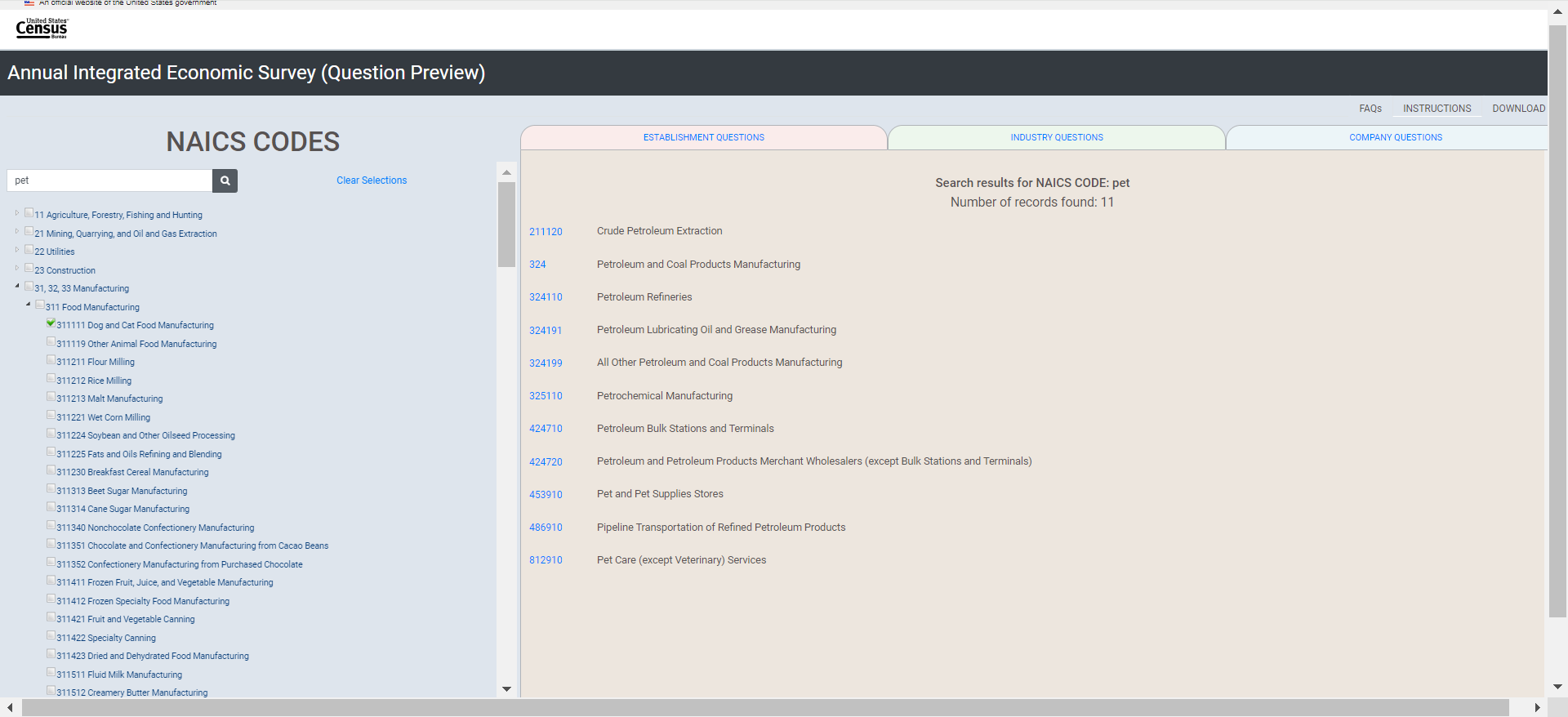

Pet and Pet Supplies Stores |

453910 |

Pet and Pet Supplies Stores |

453910 |

Pet and Pet Supplies Stores |

453910 |

Pet and Pet Supplies Stores |

453910 |

Pet and Pet Supplies Stores |

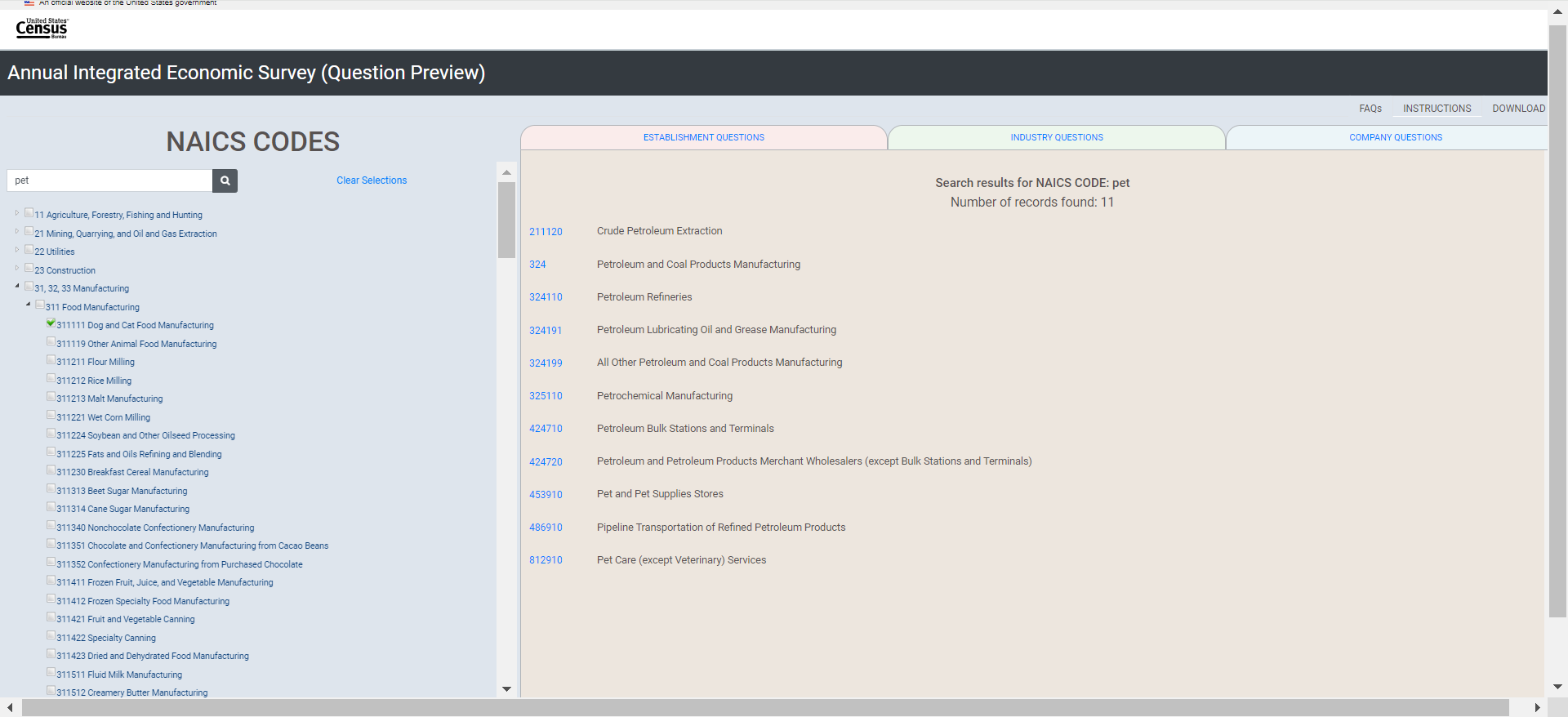

812910 |

Pet Care (except Veterinary) Services |

812910 |

Pet Care (except Veterinary) Services |

Making Selections for the Census Cat Company

Item 8.1: Making Selections for the Census Cat Company’s Cat Food Manufacturing (NAICS 311111)

Item 8.2: Making Selections for the Census Cat Company’s Cat Litter Manufacturing (NAICS 325998)

Item 8.3.1: Making Selections for the Census Cat Company’s Cat Food and Pet Supply Wholesaler (NAICS 424990)

Item 8.3.2: Making Selections for the Census Cat Company’s Cat Food and Pet Supply Wholesaler (NAICS 424990)

Item 8.4: Making Selections for the Census Cat Company’s Pet Supply Stores (NAICS 453910)

Item 8.4.1: Making Selections for the Census Cat Company’s Pet Supply Stores (NAICS 453910)

Item 8.5: Making Selections for the Census Cat Company’s Pet Grooming Business (NAICS 812910)

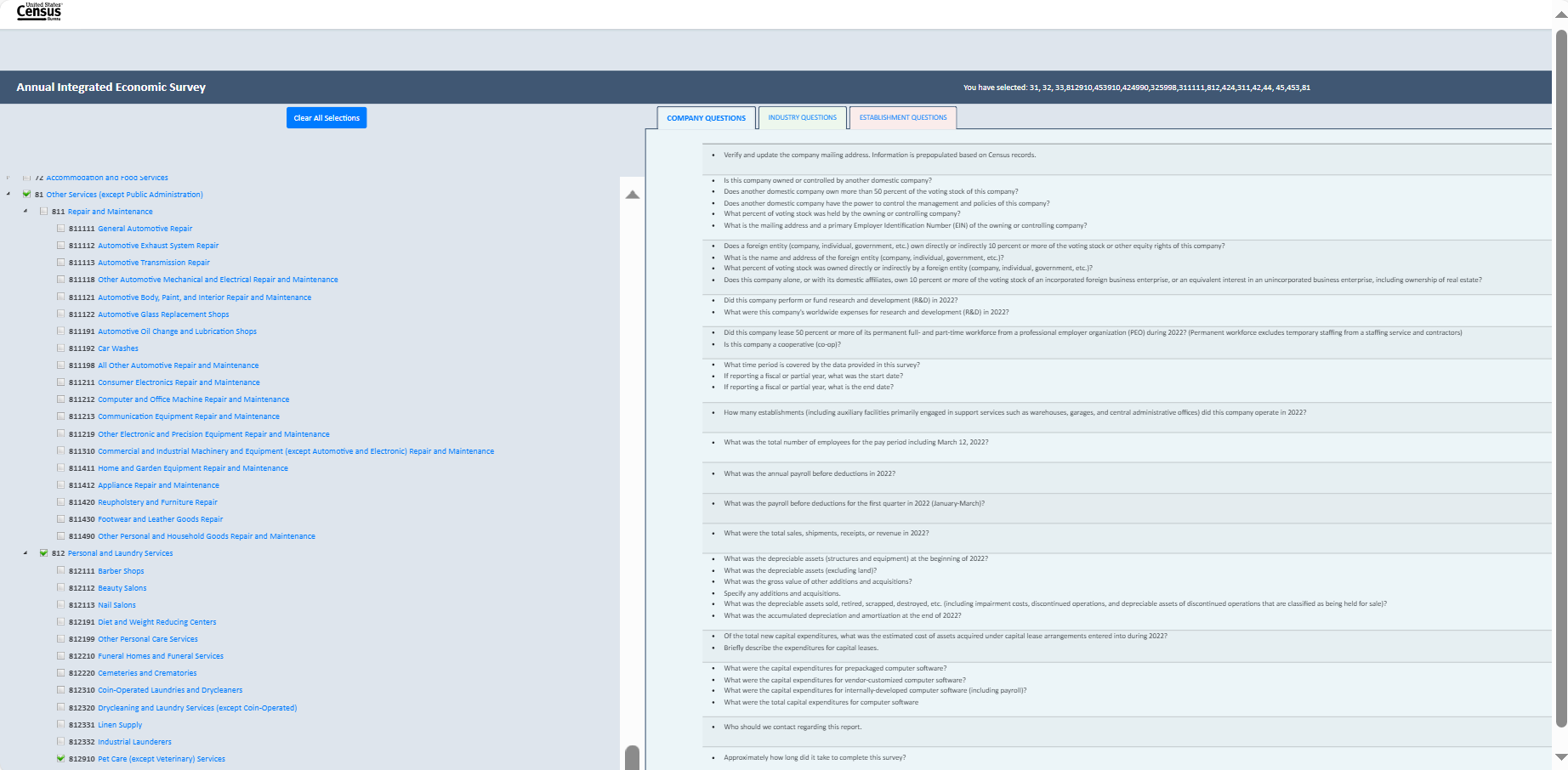

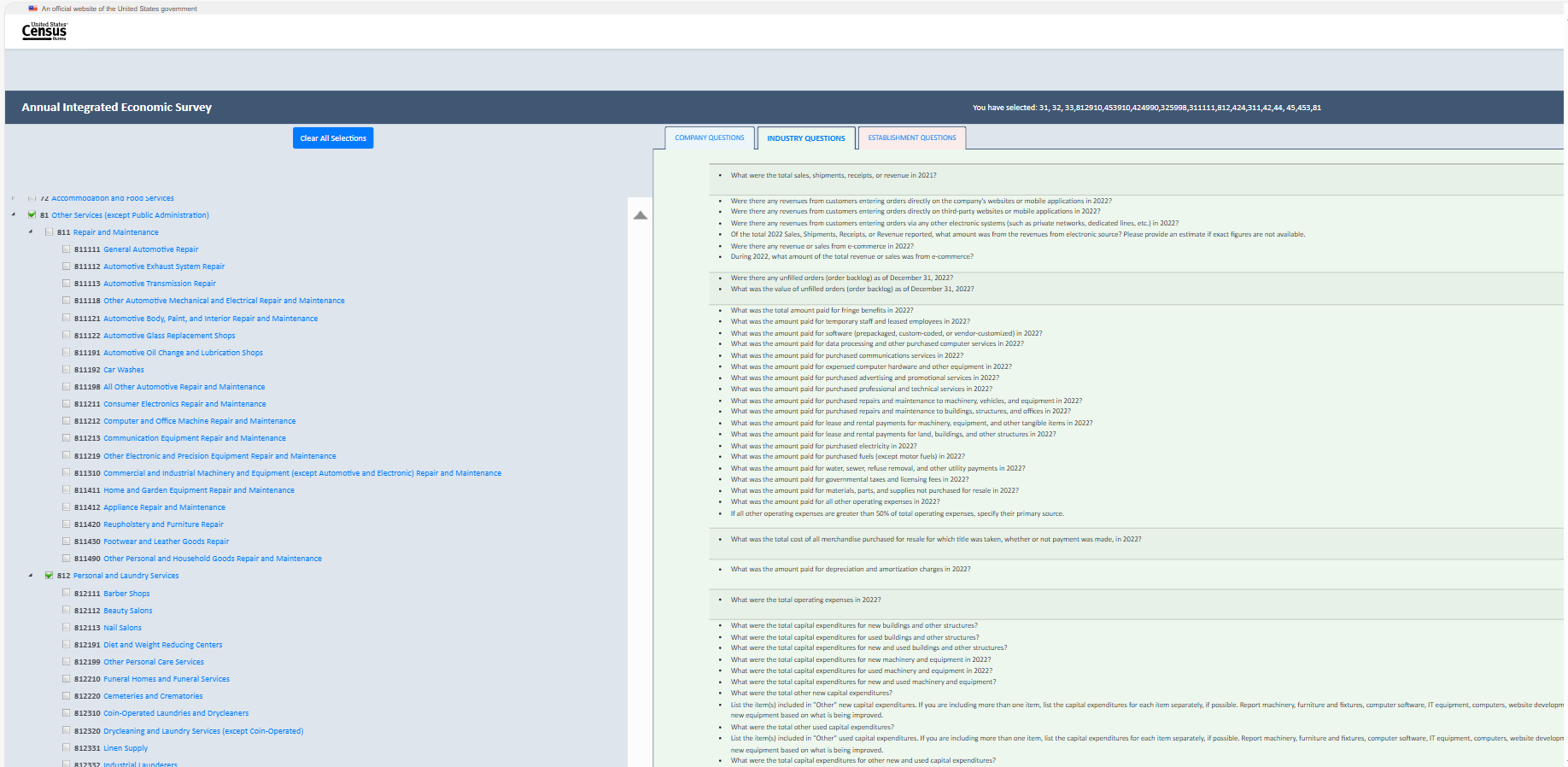

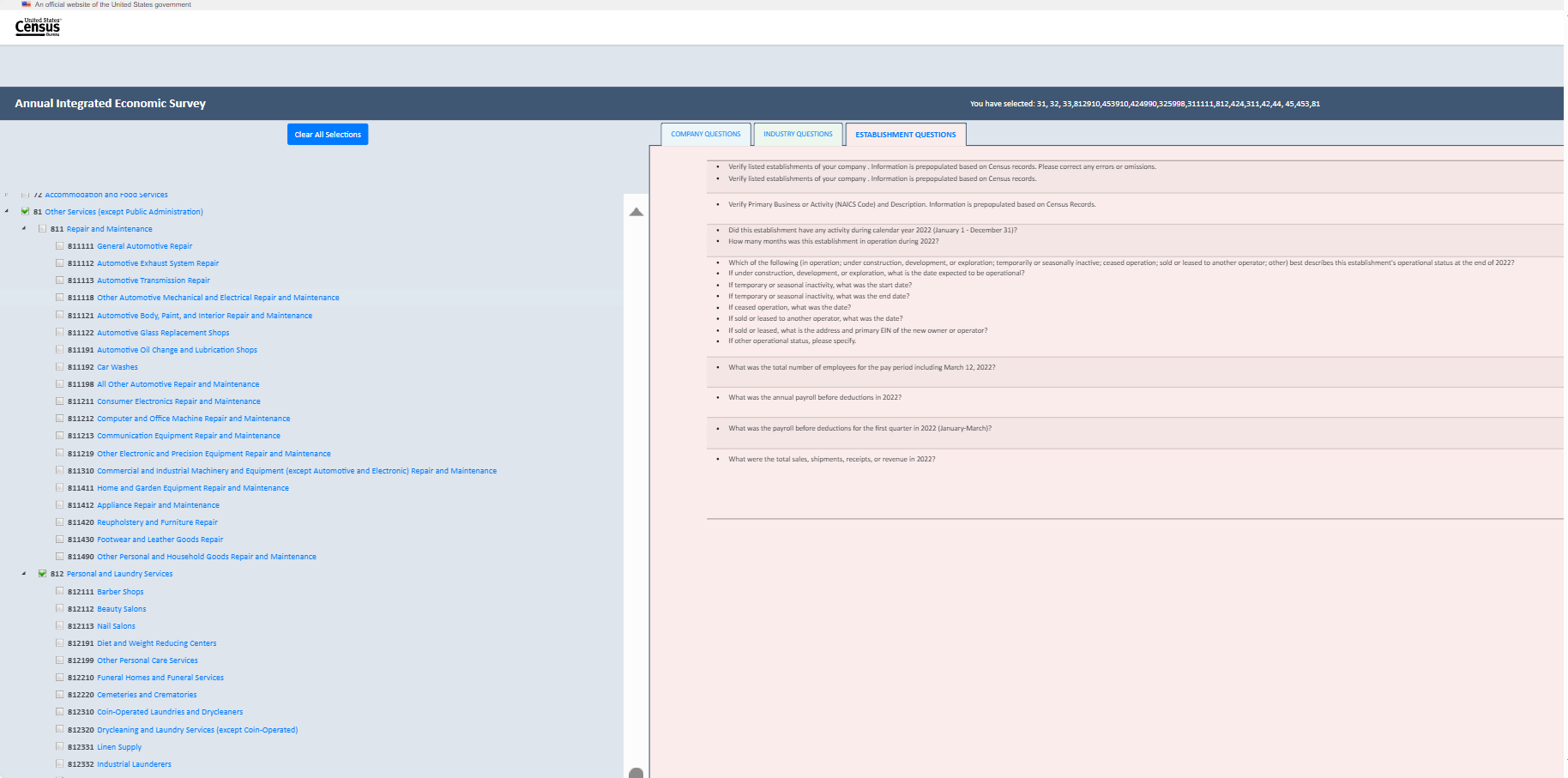

Item 9: View of Company tab after all selections have been made for the Census Cat Company

Item 10: View of Industry tab after all selections have been made for the Census Cat Company

Item 11: View of Establishment tab after all selections have been made for the Census Cat Company

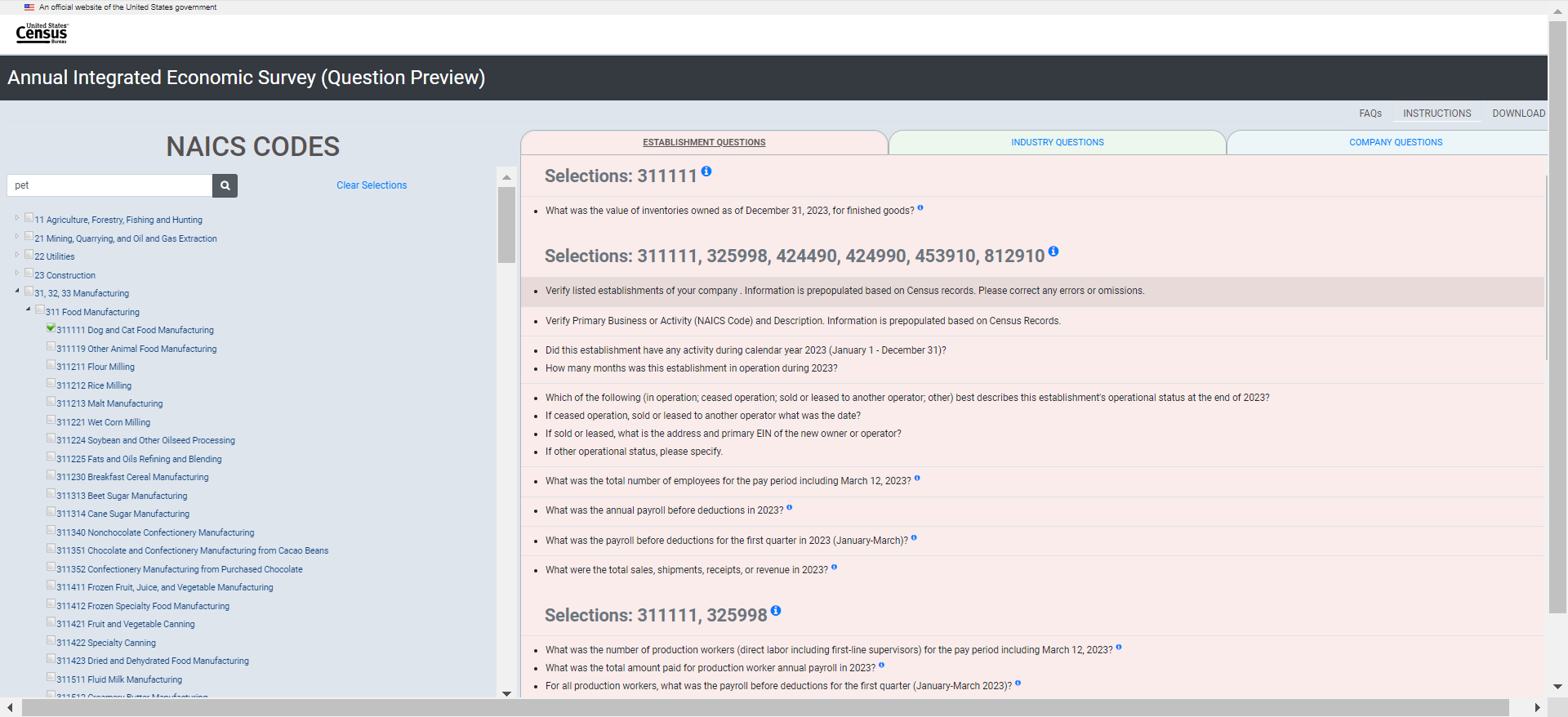

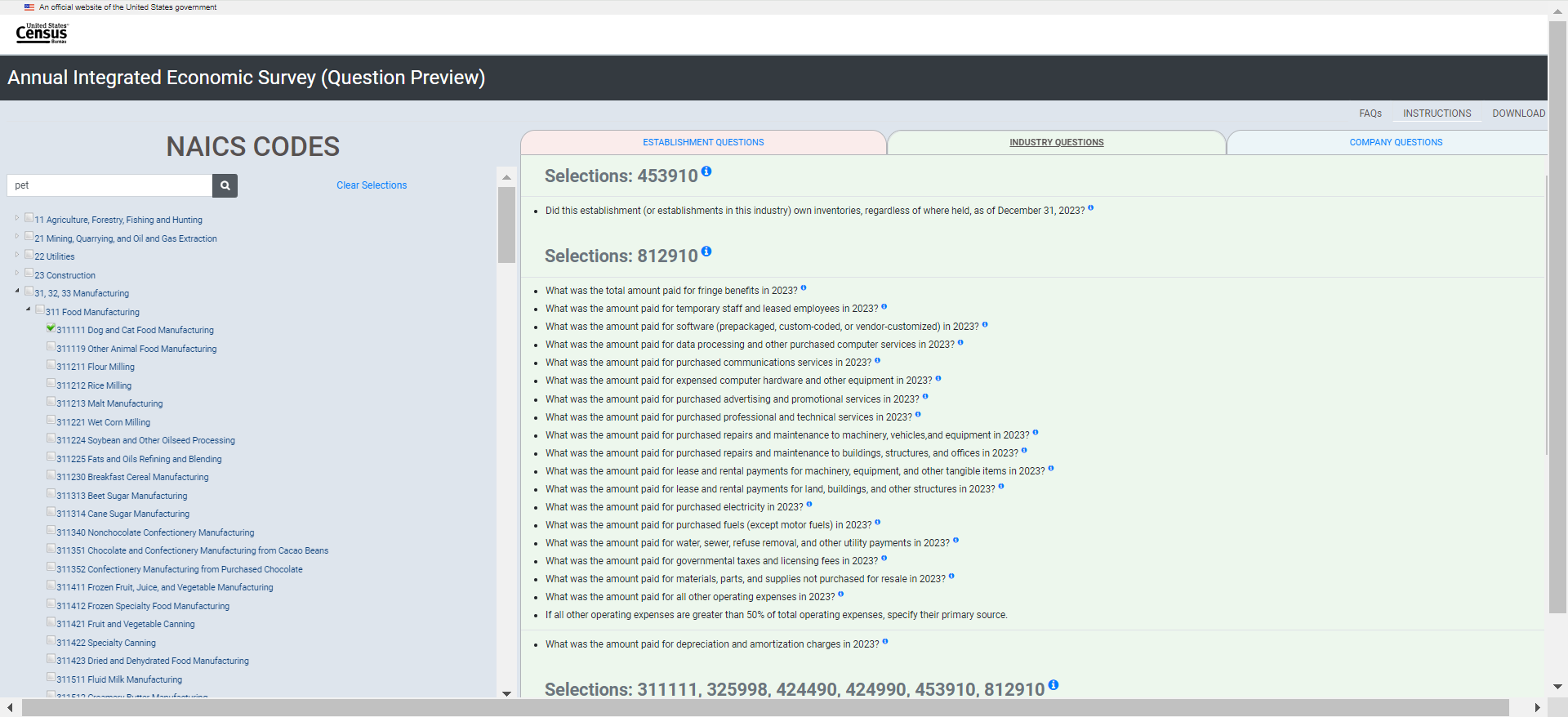

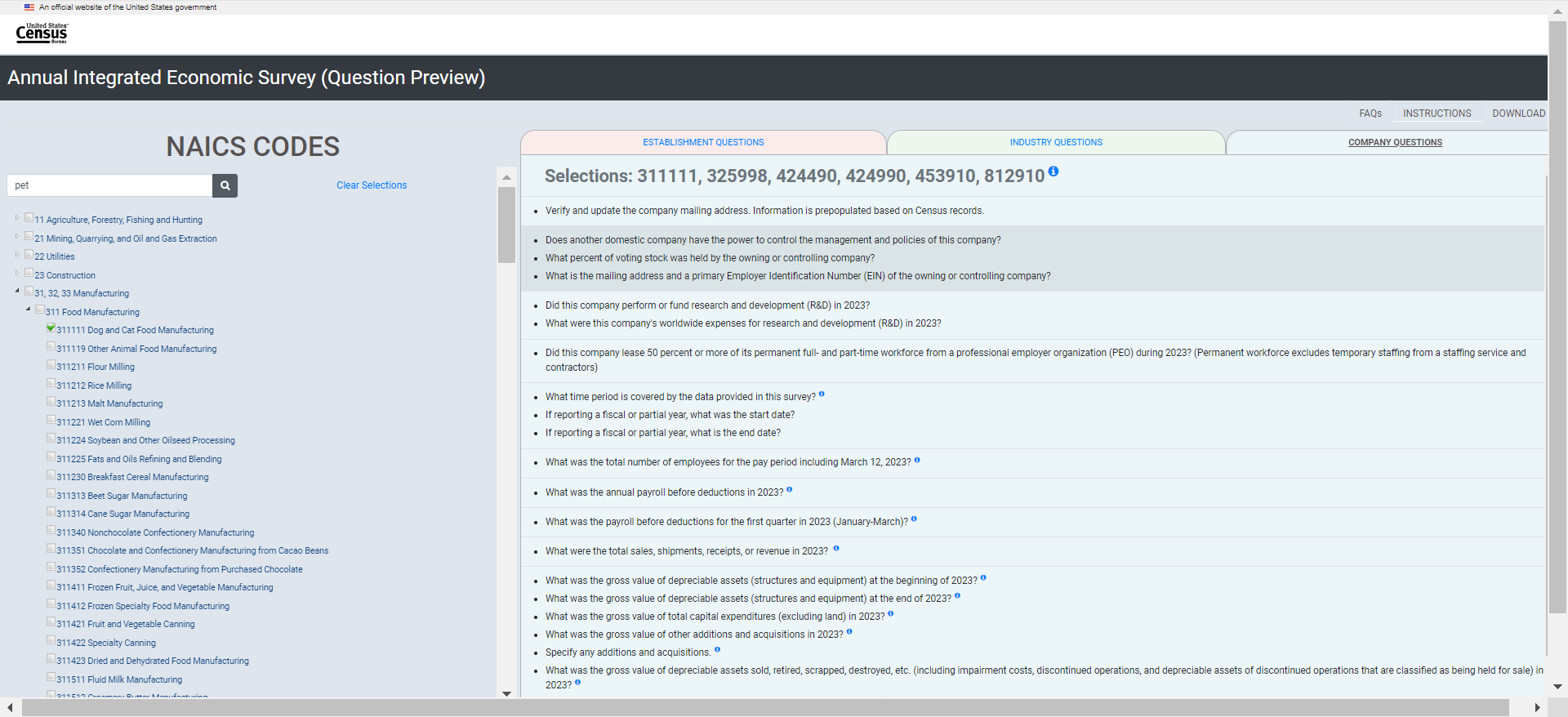

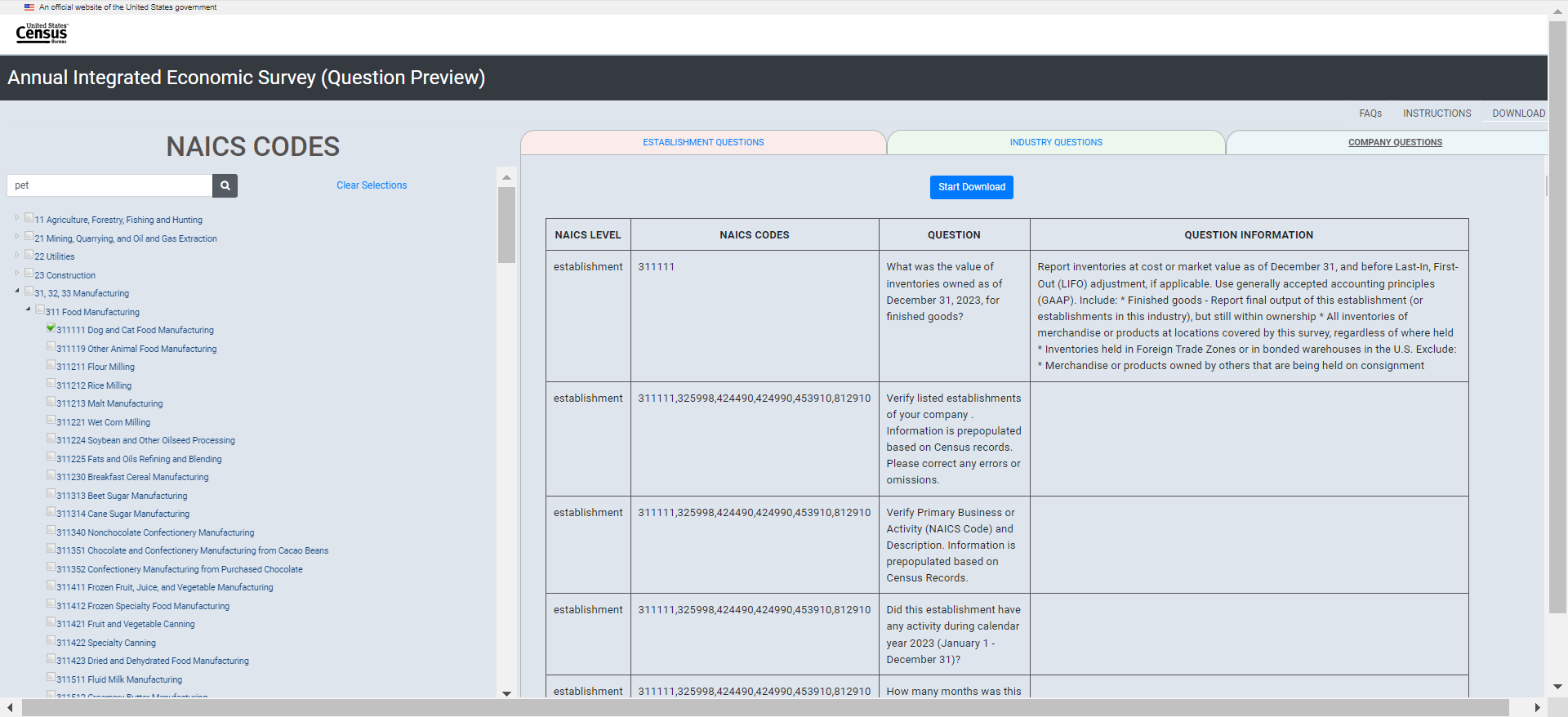

Appendix D: Screenshots of the Content Tool at the March 2024 Production Launch (At the Conclusion of Interviewing)

For this Appendix, we again use the fictional Census Cat Company as illustrative of interaction with the tool. During interviewing, participants used their company’s NAICS codes to interact with the Content Selection Tool.

| NAICS | Industry |
| --- | --- |
| 311111 | Dog and Cat Food Manufacturing |
| 311111 | Dog and Cat Food Manufacturing |
| 325998 | All Other Miscellaneous Chemical Product and Preparation Manufacturing |
| 424490 | Other Grocery and Related Products Merchant Wholesalers |
| 424990 | Other Miscellaneous Nondurable Goods Merchant Wholesalers |
| 453910 | Pet and Pet Supplies Stores |
| 453910 | Pet and Pet Supplies Stores |
| 453910 | Pet and Pet Supplies Stores |
| 453910 | Pet and Pet Supplies Stores |
| 453910 | Pet and Pet Supplies Stores |
| 812910 | Pet Care (except Veterinary) Services |
| 812910 | Pet Care (except Veterinary) Services |

Landing page:

Establishment Questions tab (without having made selections)

Industry Questions tab (without having made selections)

Company Questions tab (without having made selections)

FAQs

Instructions

Download (without having made selections)

Now, interaction using the Census Cat Company (CCC) as an example:

(1) Selecting NAICS 311111 (Dog and Cat Food Manufacturing) by searching for “cat”:

(2) Then searching for NAICS 325998 (All Other Miscellaneous Chemical Product and Preparation Manufacturing):

(3) Then searching for NAICS 424490 (Other Grocery and Related Products Merchant Wholesalers):

(4) Then searching for NAICS 424990 (Other Miscellaneous Nondurable Goods Merchant Wholesalers):

(5) Then searching for NAICS 453910 (Pet and Pet Supplies Stores) using the keyword search “pet”:

(6) Last code to select: NAICS 812910 (Pet Care (except Veterinary) Services) using the keyword search “pet”:

Establishment Questions tab (after making CCC selections)

Industry Questions tab (after making CCC selections)

Company Questions tab (after making CCC selections)

Download (after CCC selections have been made)

Download Questions (after CCC selections have been made)

Appendix E: Interviewing Protocol for the Content Selection Tool

2023 AIES Interactive Question Preview Tool Usability Evaluation

Purpose: Researchers in the Economy-Wide Statistics Division (EWD) will conduct usability interviews to assess functionality of the prototype interactive question preview tool. This interviewing will examine whether respondents can successfully complete tasks that are designed to mimic those they execute using the interactive question preview tool to support response to the AIES. Researchers will investigate whether the interactive question preview tool is intuitive by assessing respondents’ ability to navigate through the tool in an efficient way to a successful completion of the task.

Research Questions: The research will be guided by the following research questions:

Do users understand the purpose of the tool?

Do they identify that the content is industry driven?

Do they understand collection unit differences?

Does this tool meet the need for the ability to preview the survey prior to reporting?

Can users generate content that matches their AIES content?

What features of the tool are intuitive? What features are not?

What additional features might support response?

Informed Consent: Respondents will be asked to complete a consent form electronically before the time of the interview.

Materials Needed:

Electronically signed consent form

Link to the Interactive Question Preview Tool

Introduction

Thank you for your time today. My name is XX and I work with the United States Census Bureau on a research team that evaluates how easy or difficult Census surveys and products are to use. We conduct these interviews to get a sense of what works well, and what areas need improvement. We recommend changes based on your feedback.

[Confirm they signed the Consent Form; should be sent prior to interview.]

Thank you for signing the consent form. I just want to reiterate that we would like to record the session to get an accurate record of your feedback, but neither your name nor your company name will be mentioned in our final report. Only those of us connected with the project will review the recording, and it will be used solely for research purposes. We plan to use your feedback to improve the design of this survey instrument and make sure it makes sense to respondents like you. Do you agree to participate? Thank you.

[start CAMTASIA/Snagit screen recording, if yes.]

Thank you.

I am going to give you a little background about what we will be working on today. Today you will be helping us to evaluate the design of the interactive question preview tool for the Annual Integrated Economic Survey (or AIES). The interactive question preview tool is in the early stages of development, so this is an opportunity to make sure it works as smoothly as possible. You are being asked to be a part of this evaluation because your company recently responded to the 2022 AIES – and I just want to take a moment and thank you so much for your response!

To do this, we will have you complete various tasks using the interactive question preview tool. These will be consistent with tasks you would normally complete if you were using this tool. We are mainly interested in your impressions, both good and bad, of your experience. I did not create the tool, so please feel free to share both positive and negative reactions.

I may ask you additional questions about some of the screens you see today and your overall impressions.

Do you have any questions before we begin? OK, let’s get started.

Warm-up

First, I would like to get some information to give me some context.

Can you tell me about the business, like what types of goods or services it provides? And how it is organized?

What is your role within the company?

Are you typically the person responsible for government surveys?

Do you typically have access to all of the data needed?

If not, what areas or positions do you usually reach out to?

In the past when responding to survey requests from the Census Bureau, did you preview survey questions before beginning the survey?

If yes, can you tell me more about that? How did you use that feature – simply to preview questions, to print and write in gathered responses, to share with colleagues as a means of gathering data, and/or some other way?

If not, do you think you’d be likely to use a question preview tool prior to completing AIES for the first time?

Introduction to the Tool

Great, thanks for that context. Next, I’ll have you open the interactive question preview tool link I sent to you, AIES Survey Questionnaire (census.gov), and then I’ll walk you through how to screenshare. You may take a moment to close anything you don’t need open.

[To screenshare, if necessary] Look for a button on Teams showing a box with an arrow; it might be located next to the microphone button.

To begin, I’d like to set the stage by telling you more about the Interactive Question Preview Tool that we will be testing today. Since collection for the AIES is only by web and the questions you would receive are specific to your business, there is no designated survey form in which a respondent can preview the survey questions they will receive when taking the survey. Instead, there is an interactive question preview tool that respondents can use to indicate their industry (or industries) and preview questions at the company, industry, and establishment level. Again, this tool is in its early stages of development and your feedback will be helpful in improving this tool for its users.

Expectations of accessibility: Imagine that you have entered the AIES survey on the respondent portal and would like to preview questions to the survey in preparation for coordinating the gathering of the data you will need to respond to this survey. Where would you expect to find information on the Interactive Question Preview Tool?

[Show the following instructions to the respondent via Qualtrics (using the same link provided for the consent form)]: This is the Interactive Question Preview Tool for the Annual Integrated Economic Survey. This tool will allow users to select their type of business operation and view the questions collected at the company, industry, and establishment or location levels. This tool is NOT to be uploaded with responses in the respondent portal or submitted to the Census Bureau for response to the AIES. Instead, this is to be used only as a tool for respondents to preview survey questions that are specific to their businesses.

Comprehension: In your own words, please describe what these instructions mean to you. (Interviewer: Note whether respondent seems to understand that this tool is only for the purpose of previewing questions for the AIES.)

Are the instructions clear and concise? If not, what requires clarification?

Now let’s turn our attention to the Interactive Question Preview Tool webpage. Please take a few seconds to look at what’s on your screen.

What are your first thoughts or impressions of what you’re seeing on this screen? What is the first thing you notice, or what pulls your eye?

Would instructions for using this tool be helpful? And, if so, where would you expect to see those instructions?

Tasks

Now we will work on some tasks related to using this interactive question preview tool. While you are completing the tasks, I would like for you to think aloud. It will be helpful for us to hear your thoughts as you move through the question preview tool. Once you have completed each task, just let me know by saying “finished” or “done.” Then we can discuss and/or move on to the next task.

[During the interview, respondents will use their device. Have participant go through each task, remind them to think out loud, and note participant’s questions or signs of difficulty. Note any content issues if they arise.]

Think about what your industry does or makes. Now, select the industry or industries that best represent your company. [Interviewer: Note respondent’s selection(s) and whether or not the respondent’s selection matches the Business Registry (BR) industry assignment.]

Did you find it easy or difficult to select your industry/industries?

If difficult, please tell me more about that and please provide some ideas for improvement. (Interviewer: Note if respondent selects all applicable boxes and/or expresses any uncertainty as to whether their selections triggered anything to happen in the tool, i.e., questions to be added in the Industry Questions tab.)