District Personnel Survey

Study of Education Data Systems and Decision Making

Att_NTA District Survey OMB pkg 1-10-07v4

Superintendent notification letter

OMB: 1875-0241

SRI International

January 10, 2007

National Technology Activities Task Order

OMB Package for District Survey

ED-04-CO-0040 Task 0002

SRI Project P16946

Submitted to:

Bernadette Adams Yates

Policy and Program Studies Service

Room 6W207

U.S. Department of Education

400 Maryland Avenue, SW

Washington, DC 20202

Prepared by:

Barbara Means

Christine Padilla

CONTENTS

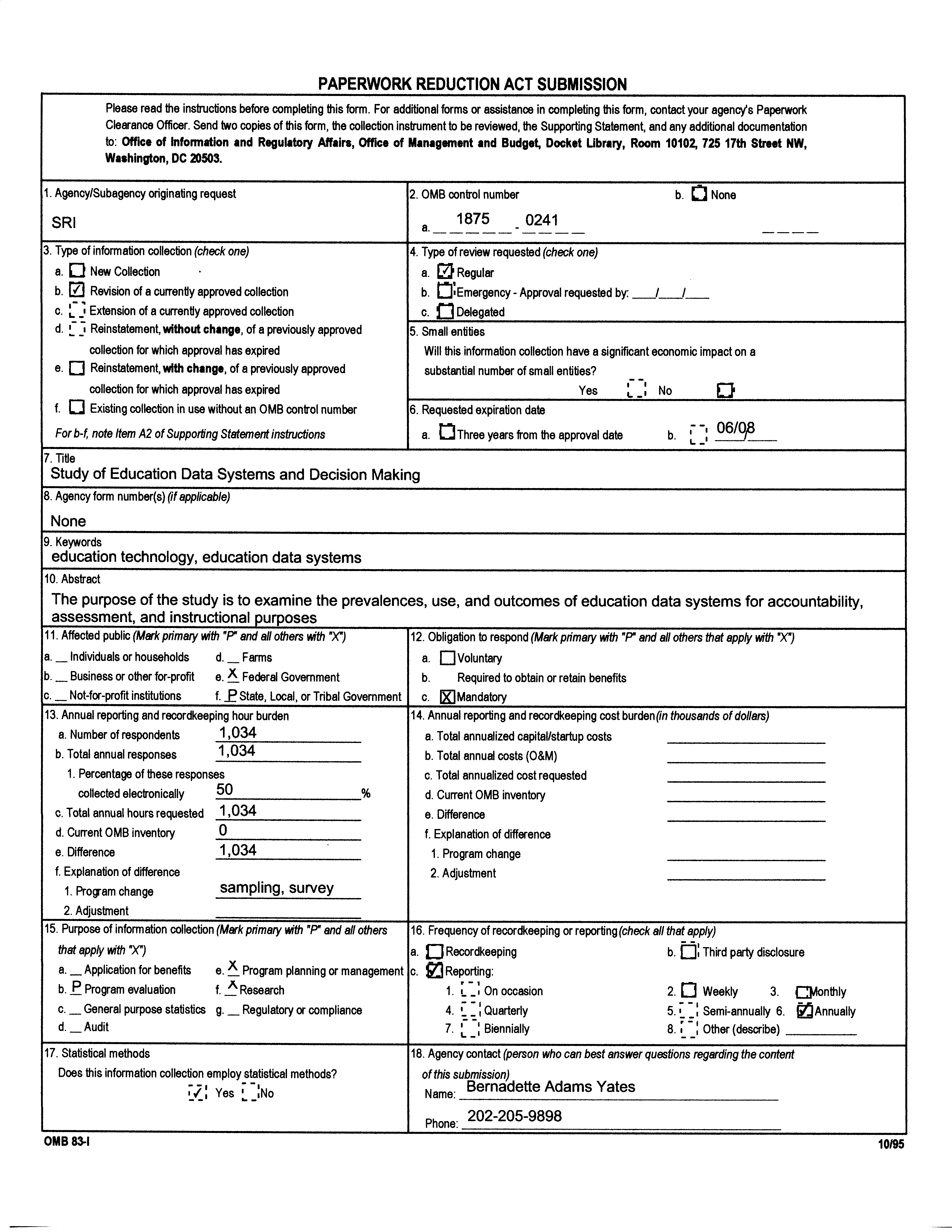

Paperwork Reduction Act Submission Standard Form OMB-83-I

I. Introduction

Overview of the Evaluation’s Goals, Research Questions, and Approach to Data Collection

II. Supporting Statement for Paperwork Reduction Act Submission

A. Justification

1. Circumstances Making Collection of Information Necessary

2. Use of Information

3. Use of Information Technology

4. Efforts to Identify Duplication

5. Methods to Minimize Burden on Small Entities

6. Consequences If Information Is Not Collected or Is Collected Less Frequently

7. Special Circumstances

8. Federal Register Comments and Persons Consulted Outside the Agency

9. Respondent Payments or Gifts

10. Assurances of Confidentiality

11. Questions of a Sensitive Nature

12. Estimate of Hour Burden

13. Estimate of Cost Burden to Respondents

14. Estimate of Annual Costs to the Federal Government

15. Change in Annual Reporting Burden

16. Plans for Tabulation and Publication of Results

17. OMB Expiration Date

18. Exceptions to Certification Statement

B. Collections of Information Employing Statistical Methods

1. Respondent Universe

2. Data Collection Procedures

3. Methods to Maximize Response Rates

4. Pilot Testing

5. Contact Information

References

LIST OF EXHIBITS

Exhibit 1: Conceptual Framework

Exhibit 2: Relationship Between Evaluation Questions and Data Sources

Exhibit 3: Technical Working Group Membership

Exhibit 4: Estimated Burden for Site Selection and Notification

Exhibit 5: Schedule for Dissemination of Study Results

Exhibit 6: Distribution of Districts and Student Population, by District Size

Exhibit 7: Distribution of Districts and Student Population, by District Poverty Rate

Exhibit 8: Number of Districts in the Universe and Quota Sample, by Stratum

Exhibit 9: General Timeline of Data Collection Activities

Exhibit 10: District Survey Topics

Appendices

Appendix A: Draft Notification Letters

Appendix B: Study Brochure

Appendix C: District Survey

I. Introduction

The Policy and Program Studies Service (PPSS), Office of Planning, Evaluation and Policy Development, U.S. Department of Education (Department), requests clearance for a district survey for the Study of Education Data Systems and Decision Making. OMB provided clearance for case study data collection activities for this study on October 23, 2006 (OMB Control Number 1875-0241). The study is conducted under the authority of Title II, Part D of the Elementary and Secondary Education Act (ESEA). Using a multi-method approach that includes case studies and a survey, the study will document the availability of education data systems, their characteristics, and the prevalence and nature of their use in districts and schools. In this submission, we request clearance for the design, sampling strategy, and district survey data collection activities to be undertaken by the study.

A. Overview of the Evaluation’s Goals, Research Questions, and Approach to Data Collection

Today’s accountability systems have led educators to focus on achievement test scores (and, more recently, college-ready high school graduation) as the primary outcomes of education. Setting goals or targets for improving these outcomes is common practice at the national, state, and local levels. The emerging consensus around goals and the commitment to improving education represent important progress toward giving all students a better education, but much remains to be done if that commitment is to generate results. What accountability systems do not do, per se, is link measured educational outcomes to the educational processes that produced them. Educators make myriad decisions about implementing specific processes, programs, and policies, but systematic examination of the results of an adopted practice is the exception rather than the rule.

Current data-driven decision-making (DDDM) efforts call on educators to adopt a continuous-improvement perspective with an emphasis on goal setting, measurement, and feedback loops so that teachers and administrators reflect on their programs and processes, relate them to student outcomes, make refinements suggested by the outcome data, and then implement the refined practices, again measuring outcomes and looking for places for further refinement. An important distinction between this innovation and most educational programs or reforms is that this improvement cycle does not end with the first set of program refinements but is continuous.

Over the past four years, meeting the data requirements of the No Child Left Behind Act (NCLB) and adapting or acquiring database systems capable of generating the required student data reports have consumed much of the attention of district and state assessment and technology offices. This work has been necessary, but it is not sufficient for data-driven decision making to make a mark on education. Data-driven educational decision making is much more than a data system: it is a set of expectations and practices around the ongoing examination of data to ascertain the effectiveness of educational activities in order to improve outcomes for students. It is a commitment on the part of an educational organization to continually refine and improve its programs and practices.

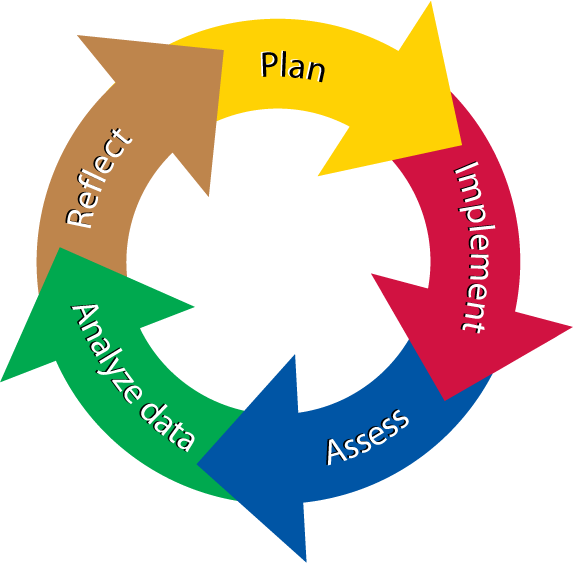

Exhibit 1 shows the stages in a data-driven continuous-improvement process—plan, implement, assess, analyze data, and reflect (as a precursor to more planning and a refined implementation). Although this process has been promoted within business for decades, it is an innovative approach for education.

Exhibit 1

Conceptual Framework for Data-Driven Decision Making

Making the continuous-improvement perspective and the processes of data-driven decision making part of the way in which educators function requires a major cultural change in schools and districts. Such a change will not occur without leadership, effort, and well-designed supports. The bottom portion of Exhibit 1 identifies six major types of prerequisite supports for effective data-driven decision making: (1) state, district, and school data systems, (2) leadership for educational improvement and the use of data, (3) tools for generating data, (4) professional development and technical support for data interpretation, (5) social structures and supported time for analyzing and interpreting data, and (6) tools for acting on data. These systems and supports form the conceptual framework that guides the evaluation activities of the national Study of Education Data Systems and Decision Making. Examination of these key systems and supports will provide policy makers and practitioners with information on both promising practices in data-driven decision making and the gaps that inhibit effective implementation. We briefly describe the nature of these supports below.

Data systems: State education data systems typically include student enrollment information, basic demographic data, special program designation (if applicable), and scores on state-mandated achievement tests (in most cases, an annual spring test in language arts and mathematics and often a proficiency or “exit” examination required for a high school diploma). School districts typically maintain their own data systems. In addition to student scores on state-mandated tests, which they get from the state or the state-designated vendor, district systems often include information about a student’s teachers, grades, attendance, disciplinary infractions, and scores on district tests. Other student data systems are targeted for school use, and these are more likely than state and district systems to incorporate teacher-developed formative assessments and to be closely aligned with the decisions principals and teachers make in the course of a school year (Means, 2005). These systems are more likely to contain annotated samples of student work, grades on students’ assignments, and information concerning student mastery status with respect to specific skills. In theory, all of these state, district, and school-level systems could be interoperable (U.S. Department of Education, 2004), and there could be “one-stop shopping” for educational data coming from sources ranging from the individual school to the U.S. Department of Education (what one vendor characterizes as the “secretary to Secretary” solution). Such a set of integrated systems does not yet exist, but federal efforts are moving toward uniform and consolidated reporting of data to the federal level, and some states are attempting to incorporate features in state systems that will make them more useful to districts and schools (Palaich, Good, and van der Ploeg, 2004).

Leadership for educational improvement and use of data: Pioneering efforts to promote data-driven decision making within districts and schools have found that the active promotion of the effort on the part of the superintendent or principal is vital (Cromey, 2000; Halverson, Grigg, Prichett, and Thomas, 2005; Herman and Gribbons, 2001). Typically, district and school leaders play a major role in framing targets for educational improvement, setting expectations around staff participation in data-driven decision making, and making resources, such as supported time, available to support the enterprise.

Tools for generating data: Increasingly, student achievement data are available to schools over the World Wide Web in a form that can be disaggregated by student category (ethnicity, free or reduced-price lunch status, special education status, and so on). Software systems to support data-driven decision making all generate standard student achievement reports, and many also produce custom reports for user-designated student groups (an important feature for school staff who want to examine the effects of locally developed services for specific student groups). These are major improvements over the level of access to student achievement data that schools had previously, but much remains to be done if teachers are to have timely, convenient access to user-friendly reports of rich student data.

Social structures and supported time for analyzing and interpreting data: The most sophisticated data warehouse in the world will have no effect on instruction if no one has—or takes—the time to look at the data, reflect on those data, and draw inferences for instructional planning. Case studies of schools active in data-driven decision making suggest that organizational structures that include supported time for reviewing and discussing data in small groups greatly increase the likelihood that the examination of data will be conducted and will lead to well-informed decisions (Choppin, 2002; Cromey, 2000).

Professional development and technical support for data interpretation: Teacher training generally has not included data analysis skills or data-driven decision-making processes in the past (Choppin, 2002). Few administrators have this kind of training either. Moreover, the measurement issues affecting the interpretation of assessment data, and certainly the comparison of data across years, schools, or different student subgroups, are complicated. Data misinterpretation is a real concern (Confrey and Makar, 2005). For this reason, districts and schools are devoting increasing amounts of professional development time to the topic of data-driven decision making.

Tools for acting on data: The examination of data is not an end in itself but rather a means to improve decisions about instructional programs, placements, and methods. Once data have been analyzed to reveal weaknesses in certain parts of the education program or to identify students who have not attained the expected level of proficiency, educators need to reflect on the aspects of their processes that may contribute to less-than-desired outcomes and to generate options for addressing the identified weaknesses. Some of the data-driven decision-making systems incorporate resources that teachers can use in planning what to do differently.

Goals of the Evaluation

The Study of Education Data Systems and Decision Making will document the availability of education data systems, their characteristics, and the prevalence and nature of their use in districts and schools. It will examine the availability of the six supports for effective data-driven decision making identified in our conceptual framework and the nature of challenges that educators face in trying to use data systems in their efforts to improve instruction.

The data that educators need to design instruction for their students may be gathered at the classroom, school, district, or state level, and the systems in which the information is stored may be developed commercially or by national, state, district, or school organizations. A system developed or purchased at one level of the education system may be used by decision makers at another level (e.g., when teachers examine their school’s achievement and demographic data in a state or national database). In recognition of this complexity and the interactions among data systems, supports, and practices at different levels of the education system, we plan to analyze data collected at the state, district, and school levels.

When possible, we will use existing data sources in addressing study questions. Important resources for this study are the National Center for Educational Accountability (NCEA) review of state-level education data systems and the ongoing National Educational Technology Trends Study (NETTS) teacher survey, which includes a set of items explicitly addressing the use of data systems from the teacher perspective. Primary data collection activities for this study include a district administrator survey, for which we are currently seeking clearance, and case studies of 30 schools focused on the use of data in instructional decision making (OMB Control Number 1875-0241).

Evaluation Questions

The Study of Education Data Systems and Decision Making seeks to answer five basic evaluation questions derived from our conceptual framework:

What kinds of systems are available to support district and school data-driven decision making?

Within these systems, how prevalent are tools for generating and acting on data?

How prevalent are state and district supports for school use of data systems to inform instruction?

How are school staff using data systems?

How does school staff’s use of data systems influence instruction?

As noted above, we plan to analyze data collected at the state, district, and school levels. In Exhibit 2, the data collection activities for the study, along with secondary data sources, are matched with the questions that will guide this evaluation. District survey activities, for which we are seeking OMB clearance, are described in detail later in this document.

Exhibit 2

Relationship Between Evaluation Questions and Data Sources

| Evaluation Question | District Survey | Case Studies | Secondary Data Sources |
|---|---|---|---|
| Q1: What kinds of systems are available to support school and district DDDM? How broadly are education data systems being implemented in states, districts, schools, and classrooms? What kinds of information do the systems contain? What functionalities do they offer? | X | | NCEA survey(s); NETTS teacher survey; Wayman, Stringfield, and Yakimowski, 2004 |
| Q2: Within these systems, how prevalent are tools for generating and acting on data? How common are online assessments, transaction capture, links to content standards, and instructional resources in the systems being developed or acquired by states and districts? Which of these tools are being used by teachers and administrators? | X | X | NETTS teacher survey |
| Q3: How prevalent are state and district supports for school use of data systems to inform instruction? What professional development, data coaching, and other supports are states and districts providing for school-level data-driven decision making? What data are collected to evaluate the effectiveness of data system supports? Have data collected to date led to changes in data system supports? What trade-offs have district staff had to make to allocate time and resources to data-driven decision making? | X | X | NETTS teacher survey |
| Q4: How are school staff using data? How broadly and frequently are education data systems being used by teachers nationally overall and in schools that vary in student demographics? How much time do teachers and administrators put into using data management systems? How is their time supported, and what outside resources facilitate their system use? What specific data do teachers and school administrators obtain from data management systems? For what purposes (e.g., class placement, curriculum decisions, planning of professional development) do they use these data? What kinds of data or system features are used less often? | | X | NETTS teacher survey |
| Q5: How does school staff’s use of data systems influence instruction? How do school staff interpret the data they obtain from these systems? What changes in school and classroom practices do school staff make in response to the inferences they make on the basis of data they obtain from these systems? | | X | NETTS teacher survey |

Data Collection Approach

Sound data on the state of data-driven decision making in education are needed to inform educational policy and practice. Collecting high-quality data involves careful attention to each detail in the data collection process, from asking the right questions in surveys and interviews to ensuring that the data collected are handled accurately and ethically. We have planned a set of interrelated strands of data collection combining quantitative with qualitative methods as follows:

Case studies of a purposive sample of 30 schools in 10 districts.

A survey of a nationally representative sample of 500 school districts.

Secondary analysis of existing data sources, including state surveys focused on the features of state education data systems and portions of a national teacher survey focused on the use of data.

Information from the case studies will help inform the refinement of district survey items, and the district survey will provide an opportunity to test hypotheses derived from the case studies.

II. Supporting Statement for Paperwork Reduction Act Submission

A. Justification

1. Circumstances Making Collection of Information Necessary

Section 2404(b)(2) of Title II, Part D, of the Elementary and Secondary Education Act of 2001 (P.L. 107-110) provides for the support of national technology activities, including technical assistance and dissemination of effective practices to state and local education agencies that receive funds to support the use of technology to enhance student achievement. In addition, sections 2415(5) and 2416(b)(9) call on states and districts to develop performance measurement systems as a tool for determining the effectiveness of uses of educational technology supported under Title IID. The Study of Education Data Systems and Decision Making will address both of these provisions by providing state education agencies (SEAs) and local education agencies (LEAs) with critical information to support the effective use of technology to generate data to support instructional decision making.

New standards and accountability requirements have led states to reexamine the appropriate uses of technology to support learning and achievement. For some years now, researchers and developers have promoted technology systems that give students access to high-quality content and carefully designed instructional materials (Roschelle, Pea, Hoadley, Gordin, and Means, 2001). More recently, both technology developers and education policymakers have seen great promise in the use of technology for delivering assessments that can be used in classrooms (Means, Roschelle, Penuel, Sabelli, and Haertel, 2004; Means, 2006) and for making student data available to support instructional decision making (Cromey, 2000; Feldman and Tung, 2001; Light, Wexler, and Heinze, 2004; Mason, 2002; U.S. Department of Education, 2004). These strategies are not only allowable uses of ESEA Title IID funds but also of great interest to educational technology policymakers because of their potential to link technology use with changes in practice that can raise student achievement, thus helping to fulfill the primary goal of both Title IID (Enhancing Education Through Technology) and ESEA as a whole. The purpose of the present study is to provide further information exploring these potential links between the use of data housed in technology systems and school-level practices that support better teaching and learning.

While data are being collected on the characteristics of database systems that manage, collect, and analyze data (Means, 2005; Wayman, Stringfield, and Yakimowski, 2004), much less is known about the relationship between various system features and usage in ways that support better teaching and learning at the school and classroom levels. The wide variety in system architectures and components, and the multiple ways in which educators have been encouraged to use them, suggest that a whole range of different practices and supports are being used in different places. However, survey questions used in the past have been at too coarse a grain size to capture important distinctions. Moreover, the field has limited knowledge about how educators can best use data from different kinds of assessments (e.g., annual state tests versus classroom-based assessments) to inform instruction. Further study is needed to determine which system features and data types school staff have access to and what kinds of support they are receiving from their school districts.

Stringfield, Wayman, and Yakimowski-Srebnick (2005) have been studying these data systems for some time and believe that school-level data-driven decision making using such systems has great potential for improving student achievement but is not yet widely implemented in schools:

Formal research and our own observations indicate that being data-driven is a phenomenon that generally does not occur in the basic structure of the typical school. We believe that the absence of data-informed decision making is not due to educators’ aversion to being informed. Rather, the wealth of data potentially available in schools is often stored in ways that make it virtually inaccessible to teachers and principals and generally far from analysis-friendly. (p. 150).

Our conceptual framework suggests that in addition to making usable, system-wide data available through technology systems, schools and districts need to put in place processes for preparing educators to use these systems and to cultivate practices of inquiring about data within schools. Far more detailed studies of use of these systems are needed if we are to extract principles for designing systems and practices that support frequent and effective use (the six supports identified in our conceptual framework). This is the goal of the Study of Education Data Systems and Decision Making.

2. Use of Information

The data collected for this study will be used to inform educational policy and practice. More specifically, the data can be used:

By SEAs and LEAs to compare the contents and capabilities of their student data systems with those of a nationally representative sample of districts, and to identify and develop policies that support implementation practices associated with greater teacher use of student data in planning and executing instruction.

By ED staff to design outreach efforts to stimulate and enhance the use of data systems to improve instruction.

By researchers, who may use the data to inform future studies of data-driven decision making to improve instructional practice.

3. Use of Information Technology

The contractor will use a variety of advanced information technologies to maximize the efficiency and completeness of the information gathered for this evaluation and to minimize the burden the evaluation places on respondents at the district and school levels. For example, respondents will be given the option to complete either a paper-and-pencil or an online version of the district survey. During the data collection period, an e-mail address and phone number will be available to permit respondents to contact the contractor with questions or requests for assistance. The e-mail address will be printed on all data collection instruments, along with the name and phone number of a member of the data collection team. For the district survey, a notification and response rate tracking system will be set up in advance so that task leaders and ED can have weekly updates on response rates. Finally, a project Web site, hosted on an SRI server, will be available for the purpose of communicating data collection activities and results (e.g., a copy of the study brochure, a copy of the district survey, study reports as available).
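The weekly response-rate updates described above amount to a simple calculation over a tracking table of sampled districts. The Python sketch below illustrates that calculation under stated assumptions: the record fields (`district_id`, `notified_on`, `returned_on`, `mode`), the function names, and the sample dates are all hypothetical and do not describe the tracking system the contractor will actually build.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class SampledDistrict:
    """One row of a hypothetical tracking table for the district survey sample."""
    district_id: str                      # study-assigned ID, not the district's name
    notified_on: date                     # date the notification packet was sent
    returned_on: Optional[date] = None    # None until a completed survey arrives
    mode: Optional[str] = None            # "paper" or "online" once returned


def response_rate(sample: list[SampledDistrict], as_of: date) -> float:
    """Share of sampled districts whose completed survey arrived on or before `as_of`."""
    if not sample:
        return 0.0
    returned = sum(
        1 for d in sample if d.returned_on is not None and d.returned_on <= as_of
    )
    return returned / len(sample)


def returns_by_mode(sample: list[SampledDistrict], as_of: date) -> dict[str, int]:
    """Count completed surveys by mode so paper and online returns can be compared."""
    counts = {"paper": 0, "online": 0}
    for d in sample:
        if d.returned_on is not None and d.returned_on <= as_of and d.mode in counts:
            counts[d.mode] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical four-district sample, used only to illustrate a weekly update.
    sample = [
        SampledDistrict("D001", date(2007, 3, 1), date(2007, 3, 9), "online"),
        SampledDistrict("D002", date(2007, 3, 1), date(2007, 3, 20), "paper"),
        SampledDistrict("D003", date(2007, 3, 1)),
        SampledDistrict("D004", date(2007, 3, 1)),
    ]
    print(response_rate(sample, date(2007, 3, 16)))    # 0.25
    print(response_rate(sample, date(2007, 3, 23)))    # 0.5
    print(returns_by_mode(sample, date(2007, 3, 23)))  # {'paper': 1, 'online': 1}
```

Because the rate is computed "as of" a given date, running it once a week over the same table yields the week-by-week series that task leaders and ED would review.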

4. Efforts to Identify Duplication

The contractor is working with the U.S. Department of Education to minimize burden through coordination across Department studies to prevent unnecessary duplication (e.g., no repeating questions for which sufficient data are already available). In addition to using teacher survey data collected from other Department-sponsored evaluation activities to assess national trends regarding some aspects of data-driven decision making, secondary sources have also been identified for data on state-supported data systems, thereby eliminating the need for state-level data collection. Additional information on these secondary sources is provided in the discussion of data collection procedures.

5. Methods to Minimize Burden on Small Entities

No small businesses or entities will be involved as respondents.

6. Consequences If Information Is Not Collected or Is Collected Less Frequently

Currently, the Department is working to improve the quality of K-12 performance data provided by SEAs to assess the effectiveness of federally supported programs. EDFacts is an initiative of the Department, SEAs, and other members of the education community to use state-reported performance data to support planning, policy, and program management at the federal, state, and local levels. Since much of the data that states gather on program effectiveness comes from the local level, it is important that local performance measurement systems collect high-quality data. No Child Left Behind also cites the importance of using data to improve the instruction that students receive, but many of the data system efforts to date have focused on improving state data systems without comparable attention to making the data in those systems available and meaningful to the school staff who make instructional decisions. Improved use of data at the local level can improve the teaching and learning process by giving teachers the information they need to guide their instructional practices. A potential byproduct would be improvement of the quality of the data that the Department receives from states, because states depend on districts and schools for their data.

To address the need for high-quality, actionable data at the local level, the Study of Education Data Systems and Decision Making will provide in-depth information in several key issue areas: the availability of education data systems, their characteristics, the prevalence and nature of their use in districts and schools, and the conditions and practices associated with data usage that adheres to professional standards for data interpretation and application. The study will also provide more detailed information on implementation of data-driven decision making than has previously been available at the local level.

If the data are not collected from the proposed study, there will be no current national data collection on the availability of education data systems at the local level and the nature of their use in districts and schools. There will also be only limited information on classroom and other school-level use of data. (Several investigations are exploring data use in individual schools, but no other study is producing cross-case analysis.) The result will be a continued gap in information on how districts and schools are using data to inform their instructional decision making, the barriers to effective use of data, and the supports that staff need to better employ data in their teaching in a way that can positively impact student achievement.

7. Special Circumstances

None of the special circumstances listed apply to this data collection.

8. Federal Register Comments and Persons Consulted Outside the Agency

A notice about the study will be published in the Federal Register when this package is submitted to provide the opportunity for public comment. In addition, throughout the course of this study, SRI will draw on the experience and expertise of a technical working group (TWG) that provides a diverse range of experience and perspectives, including representatives from the district and state levels, as well as researchers with expertise in relevant methodological and content areas. The members of this group and their affiliations are listed in Exhibit 3. The first meeting of the technical working group was held on January 26, 2006. Individual members of the TWG have provided additional input as needed (e.g., recommendations for revisions to the case study sample, review of the district survey).

Exhibit 3

Technical Working Group Membership

| Member | Affiliation |
|---|---|
| Marty Daybell | Washington State Education Authority |
| Dr. Jeff Fouts | Fouts & Associates |
| Aimee Guidera | National Center for Educational Accountability |
| Jackie Lain | Standard and Poor’s School Evaluation Services |
| Dr. Glynn Ligon | ESP Solutions |
| Dr. Ellen Mandinach | Center for Children and Technology |
| Dr. Jim Pellegrino | University of Illinois-Chicago |
| Dr. Arie van der Ploeg | North Central Regional Education Laboratory (NCREL)/Learning Points |
| Dr. Jeff Wayman | University of Texas, Austin |
| Katherine Conoly | Executive Director of Instructional Support, Corpus Christi Independent School District |

9. Respondent Payments or Gifts

No payments or gifts will be provided to respondents.

10. Assurances of Confidentiality

SRI is dedicated to maintaining the confidentiality of participant information and the protection of human subjects. SRI recognizes the following minimum rights of every subject in the study: (1) the right to an accurate representation of the study, (2) the right to privacy, (3) the right to informed consent, and (4) the right to refuse participation at any point during the study. Because much of the education research conducted by SRI’s Policy Division involves collecting data about children or students, we are very familiar with the Department’s regulations on the protection of human subjects of research. In addition, SRI maintains its own Institutional Review Board. All proposals for studies in which human subjects might be used are reviewed by SRI’s Human Subjects Committee, appointed by the President and Chief Executive Officer. For consideration by the reviewing committee, proposals must include information on the nature of the research and its purpose; anticipated results; the subjects involved and any risks to subjects, including sources of substantial stress or discomfort; and the safeguards to be taken against any risks described.

SRI project staff have extensive experience collecting information and maintaining the confidentiality, security, and integrity of interview and survey data. In accordance with SRI’s institutional policies, privacy and data protection procedures will be in place. These standards and procedures for district survey data are summarized below.

Project team members will be educated about the privacy assurances given to respondents and about the sensitive nature of the materials and data to be handled. Each person assigned to the study will be cautioned not to discuss confidential data.

Each survey will be accompanied by a return envelope so that the respondent can seal the completed survey. All respondents will mail their completed surveys individually to SRI in the postage-paid envelopes. The intention is to allow respondents to answer honestly without the risk that their answers will be inadvertently read or personally associated with them. For surveys completed online, security measures are in place to safeguard respondent identities and to ensure the integrity of the data.

Participants will be informed of the purposes of the data collection and the uses that may be made of the data collected.

Respondents’ names and addresses will be disassociated from the data as the data are entered into the database and will be used for data collection purposes only. As information is gathered on districts, each district will be assigned a unique identification number, which will be used on printout listings that display the data and in analysis files. The unique identification number also will be used for data linkage.
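The separation of identifiers from survey data described above can be illustrated with a minimal Python sketch. The field names (`respondent_name`, `district_name`, `address`, `district_id`) and the ID numbering that starts at 1001 are hypothetical assumptions, not SRI's actual database design. Names live only in a crosswalk kept apart from the analysis files, and the study ID is the only key used to link files.

```python
# Hypothetical field names; the study's actual schema is not specified here.
IDENTIFYING_FIELDS = {"respondent_name", "district_name", "address"}

def assign_ids(sampled_districts, start=1001):
    """Give each sampled district a unique study ID.

    The returned crosswalk (ID -> district name) is assumed to be stored
    separately from the analysis files.
    """
    return {start + i: name for i, name in enumerate(sampled_districts)}

def to_analysis_record(response):
    """Drop directly identifying fields, keeping the study ID for linkage."""
    return {k: v for k, v in response.items() if k not in IDENTIFYING_FIELDS}

def link_on_id(records_a, records_b):
    """Join two de-identified files on district_id; no names are involved."""
    by_id = {r["district_id"]: r for r in records_b}
    return [{**a, **by_id[a["district_id"]]}
            for a in records_a if a["district_id"] in by_id]
```

In this sketch, an analyst working with the output of `to_analysis_record` never sees a district or respondent name; only staff with access to the crosswalk could re-identify a record.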

All paper surveys will be stored in secure areas accessible only to authorized staff members.

Access to the survey database will be limited to authorized project staff. Multilevel user codes will be used, and entry passwords will be changed frequently.

All identifiable data (e.g., tracking data) will be shredded as soon as the hard copy is no longer needed.

The reports prepared for this study will summarize findings across the samples and will not associate responses with a specific district, school or individual. Information that identifies a school or district will not be provided to anyone outside the study team, except as required by law.

At the time of data collection, all survey participants will be assured of privacy to the extent possible (see draft of survey cover letter in Appendix A).

11. Questions of a Sensitive Nature

No questions of a sensitive nature will be included in the district survey.

12. Estimate of Hour Burden

The estimates in Exhibit 4 reflect the burden for notification of study participants, as well as the survey data collection activity.

District personnel: time associated with reviewing study information and, if required, reviewing study proposals submitted to the district research committee; time associated with asking questions about the study; and time associated with completing the survey about the district’s use of data systems.

Exhibit 4

Estimated Burden for Site Selection and Notification

Group | Participants | Total No. | No. of Hours per Participant | Total No. of Hours | Estimated Burden
District Personnel | Superintendent (notification) | 534 | 0.5 | 267 | $10,680
District Personnel | District staff (survey) | 500* | 1.0 | 500 | $20,000
Total | | 1,034 | | 767 | $30,680

*Anticipate some non-response. See Section B regarding respondent universe.
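The totals in Exhibit 4 come from multiplying the number of participants by the hours per participant and valuing each hour at a uniform rate. The exhibit does not state that rate. The $40 per hour used below is an assumption inferred from $10,680 ÷ 267 hours. The following Python sketch checks the arithmetic.

```python
# Exhibit 4 inputs: (participant group, number of participants, hours each)
ROWS = [
    ("Superintendent (notification)", 534, 0.5),
    ("District staff (survey)", 500, 1.0),
]
HOURLY_RATE = 40  # assumed: $10,680 / 267 hours; not stated in Exhibit 4

def row_burden(participants, hours_each, rate=HOURLY_RATE):
    """Return (total hours, dollar burden) for one participant group."""
    hours = participants * hours_each
    return hours, hours * rate

def burden_totals(rows, rate=HOURLY_RATE):
    """Return (total participants, total hours, total dollar burden)."""
    participants = sum(n for _, n, _ in rows)
    hours = sum(n * h for _, n, h in rows)
    return participants, hours, hours * rate

for group, n, h in ROWS:
    hours, dollars = row_burden(n, h)
    print(f"{group}: {hours:g} hours, ${dollars:,.0f}")
print(burden_totals(ROWS))
```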

13. Estimate of Cost Burden to Respondents

There are no additional respondent costs associated with this data collection other than the burden estimated in item A12.

14. Estimate of Annual Costs to the Federal Government

The annual costs to the federal government for this study, as specified in the contract, are:

Fiscal year 2005 | $272,698
Fiscal year 2006 | $849,532
Fiscal year 2007 | $242,696
Total | $1,364,926

15. Change in Annual Reporting Burden

This request is for a new information collection.

16. Plans for Tabulation and Publication of Results

During the summer of 2006, the data collection team worked with the secondary analysis team to analyze data from the NETTS 2005-06 teacher survey. Descriptive statistics were calculated to show the proportion of teachers nationwide who are aware of their access to student data systems and the support teachers have had for DDDM. Although an evaluation report is not required for the first year of the study, a policy brief is being prepared on the secondary analyses of the NETTS teacher survey data (additional information on this database is provided in Section B).

In 2007, analysis activities will focus on the case study data (OMB Control Number 1875-0241). These data will provide a more in-depth look at the kinds of systems that are available to support district and school data-driven decision making, and at the supports for school use of data systems to inform instruction. We will analyze teacher focus group and interview data on these topics. A separate analysis will examine responses to the portion of the teacher case study interviews in which teachers respond to hypothetical data sets. We will develop and apply rubrics for judging the quality of the inferences teachers make from data. The school-level and teacher-level data will be reported in an interim report to be drafted by October 2007 to answer the following evaluation questions:

To what extent do teachers use data systems in planning and implementing instruction?

How are school staff using data systems?

How does school staff’s use of data systems influence instruction?

In 2008, analysis activities will focus on data from the district survey covered by this OMB package. These analyses, along with prior analyses of data collected at the state, district, and teacher levels, will be combined in a final report to be drafted by April 2008. The final report will focus on the following additional evaluation questions:

What kinds of systems are available to support district and school data-driven decision making (prevalence of system access; student information and functions of these data systems)?

Within these systems, how prevalent are tools for generating and acting on data?

How prevalent are state and district supports for school use of data systems to inform instruction?

Exhibit 5 outlines the report dissemination schedule.

Exhibit 5

Schedule for Dissemination of Study Results

Activity/Deliverable | Due Date
Interim Report |
Outline of interim report | 8/07
First draft of interim report | 10/07
Second draft of interim report | 12/07
Third draft of interim report | 2/08
Final version of interim report | 3/08
Final Report |
Outline of final report | 3/08
First draft of final report | 4/08
Second draft of final report | 6/08
Third draft of final report | 7/08
Final version of final report | 9/08

Data Analysis

The Study of Education Data Systems and Decision Making will analyze quantitative data gathered through surveys and qualitative data gathered through case studies. Survey data will come from the 2007-08 district survey, administered in fall 2007 to a nationally representative sample of approximately 500 districts; as noted above, the study team will also conduct secondary analyses of other data sets. Qualitative data will be collected through case studies of 30 schools conducted during the winter and spring of the 2006-07 school year.

The evaluation will integrate findings derived from the quantitative and qualitative data collection activities. Results from the case studies may help to explain survey findings, and the survey data may be used to test hypotheses derived from the case studies. In this section, we present our approaches to analysis of the quantitative data; a description of our approach to analysis of qualitative data was presented with the OMB package for the case studies.

Survey Analyses

The processing and analysis of survey responses are designed to minimize sources of error that can mask or bias findings. Data management techniques are designed to produce accurate translation of survey responses to computerized databases for statistical analysis. The statistical analyses will use appropriate analytical weights so that the survey findings are unbiased estimates of parameters of the populations of interest.

The evaluation questions can be addressed with a few different statistical techniques. Many of the questions require descriptive information about the population of districts or schools on a single variable of interest. Some evaluation questions may be explored in more detail by examining relationships among variables or by examining whether the distribution of a variable differs from one subpopulation to another. These questions will be addressed through bivariate and multivariate analyses.

Univariate Analyses. Characteristics of the measurement scale for a variable will determine the type of summary provided. For many variables, the measurement scale is categorical because survey respondents select one choice from a small number of categories. For other variables, the measurement scale is continuous because respondents provide a numerical answer that can take on many values, even fractional values, along an ordered scale. For each categorical variable, we will report the weighted percentage of respondents who selected each response category.
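A weighted categorical distribution can be illustrated with a minimal Python sketch. For each response category, it sums the analytical weights of the respondents who chose that category and divides by the total weight. The weights in the example are hypothetical. In practice they would come from the sampling design (for example, the inverse of each district's selection probability). The sketch assumes that item nonresponse has already been removed.

```python
from collections import defaultdict

def weighted_percentages(categories, weights):
    """Weighted percentage of respondents selecting each category.

    categories: the category each respondent selected (nonresponse removed)
    weights: the matching analytical weight for each respondent
    """
    totals = defaultdict(float)
    for category, weight in zip(categories, weights):
        totals[category] += weight
    grand_total = sum(totals.values())
    return {c: 100.0 * t / grand_total for c, t in totals.items()}

# Hypothetical example: three respondents, one carrying twice the weight.
print(weighted_percentages(["yes", "no", "yes"], [2.0, 1.0, 1.0]))
# {'yes': 75.0, 'no': 25.0}
```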

The distributions of continuous variables will be summarized by an index of the central tendency and variability of the distribution. These might be the mean and standard deviation, if the distribution is symmetrical, or the median and interquartile range, if the distribution is skewed.
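One way to choose between the mean and standard deviation and the median and interquartile range is to compute the sample skewness and switch summaries above a cutoff. In the Python sketch below, the cutoff of 1.0 is a common rule of thumb, not a decision stated by the study. For brevity, the sketch also ignores survey weights.

```python
import statistics

def summarize(values, skew_cutoff=1.0):
    """Mean/SD for roughly symmetric data; median/IQR for skewed data.

    skew_cutoff is a rule-of-thumb threshold (an assumption). Values are
    treated as unweighted.
    """
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    skew = 0.0 if m2 == 0 else m3 / m2 ** 1.5  # moment-based sample skewness
    if abs(skew) < skew_cutoff:
        return {"mean": mean, "sd": statistics.stdev(values)}
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"median": median, "iqr": q3 - q1}
```

For example, `summarize([1, 2, 3, 4, 5])` reports a mean and standard deviation. A right-skewed list such as `[1, 1, 1, 1, 2, 2, 3, 50]` is summarized by its median and interquartile range instead.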

In some cases, the variable of interest can be measured by a single survey item. In other cases (e.g., for more complex or abstract constructs), it is useful to develop a measurement scale from responses to a series of survey items. The analyses will provide simple descriptive statistics that summarize the distribution of measurements, whether for single items or for scales. Whenever it is possible to do so within the set of survey data, we also will examine the validity of the measurement scale. Techniques, such as factor analysis, that examine hypothesized relationships among variables will be used to examine the validity of scale scores.

Bivariate and Multivariate Analyses. In addition to the univariate analyses, we plan to conduct a series of bivariate and multivariate analyses of the survey data. In particular, we will examine relationships between specific demographic variables and district and school reports of the implementation of supports for data-driven decision making. Our primary purpose in exploring the relationships between context variables and responses to items about implementation of support systems is explanatory. For example, we will examine relationships of survey responses with district size because we expect that larger districts have more resources and, therefore, greater capacity to assist schools with data-driven decision making. Conversely, small districts (often rural) have very limited central office capacity to assist schools (McCarthy, 2001; Turnbull and Hannaway, 2000) and may be challenged to implement data-driven decision making well.

By looking at relationships among two or more variables, the bivariate and multivariate analyses may provide more refined answers to the evaluation questions than the simple summary statistics can provide. A variety of statistical techniques will be used, depending on the characteristics of the variables included in the analyses and the questions to be addressed. Pearson product-moment correlations will summarize bivariate relationships between continuous variables, unless the parametric assumptions are unreasonable, in which case Spearman rank order correlations will be used. Chi-square analyses will examine relationships between two categorical variables. We will use t-tests and analysis of variance (ANOVA) to examine relationships between categorical and continuous variables. Regression analyses will be used to examine multivariate relationships.
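The choice between Pearson and Spearman correlations can be sketched in standard-library Python. Spearman's coefficient is Pearson's coefficient computed on ranks, with tied values receiving the average of the ranks they span. The `parametric_ok` flag is a hypothetical stand-in for the analyst's judgment about whether the parametric assumptions hold. Chi-square tests, t-tests, ANOVA, and regression are not shown.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman rank-order correlation: Pearson on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def correlate(x, y, parametric_ok=True):
    """Use Pearson when parametric assumptions are reasonable, else Spearman."""
    return pearson(x, y) if parametric_ok else spearman(x, y)
```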

Integration of Quantitative and Qualitative Data. Findings from the surveys and case studies provide different perspectives from which to address the evaluation questions. For example, whereas the district survey can estimate the number of districts in the nation that provide assistance for data-driven decision making, the case studies can describe the nature and quality of the assistance that districts might provide. The survey findings can be generalized to national populations, but they may have limited descriptive power. The opposite is true for the case studies, which can provide detailed descriptions and explanations that may have limited ability to generalize.

A goal of the analysis is to integrate findings from the different methods, building on the strengths of each. When they are consistent, the findings from different perspectives can provide rich answers to evaluation questions. When they are inconsistent, the findings point to areas for further study. For example, the case studies may identify hypotheses worth testing in the survey data, and survey results might identify an unexpected correlation between variables that case study data could help to explain.

Our strategy for integration is to share findings across the perspectives in a way that maintains the integrity of each methodology. The evaluation questions provide the framework for integration. Separately, the quantitative and qualitative data will be analyzed to a point where initial findings are available to address the evaluation questions. Then the findings will be shared and discussed to identify robust findings from both types of data, as well as inconsistencies suggesting the need for further analyses. Frequent communication at key points in this process will be important. We will have formal meetings of the case study and quantitative analysts to share findings. The first will be held after preliminary results are available from each type of study, for the purpose of identifying additional analyses to conduct. Subsequent meetings will focus on interpretation of analyses and planning for new examinations of the data. Communication between analytic teams will be facilitated by having some core members common to both.

The integration of different types of data, like the integration of data from different sites within the qualitative analyses, requires an iterative approach. Each step builds on the strengths of the individual data sets to provide answers to the evaluation questions. If, for example, we find through analyses of survey data that schools vary in the degree to which they receive technical support for data interpretation, we will first test with the survey data whether responses vary with characteristics identified through previous research (e.g., size of the district in which the school is located, number of years the school has been identified as in need of improvement). If a characteristic is predictive of the amount of assistance that schools receive, it will be explored further through our case studies. Data from the case study schools will be examined to see whether they support the relationship between the characteristic and technical assistance; to provide additional details, such as other mitigating factors; and to identify examples that could enhance the reporting of this finding.

17. OMB Expiration Date

All data collection instruments will include the OMB expiration date.

18. Exceptions to Certification Statement

No exceptions are requested.

B. Collections of Information Employing Statistical Methods

1. Respondent Universe

The proposed sampling plan for the district survey was developed with the primary goal of providing a nationally representative sample of districts to assess the prevalence of district support for data-driven decision making. A secondary goal was to provide numbers of districts adequate to support analyses focused on subgroups of districts. To conduct such analyses with reasonable statistical precision, we have created a six-cell sampling frame stratified by district size and poverty rate. From the population of districts in each cell, we will initially sample 89 districts. Below, we discuss our stratifying variables, describe the overall sampling frame, and show the distribution of districts in the six-cell design.

District Size

Districts vary considerably in size, the most useful available measure of which is pupil enrollment. A host of organizational and contextual variables that are associated with size exert considerable potential influence over how districts can support data-driven decision making. Most important of these is the capacity of the districts to design data management systems, actively promote the use of data for educational improvement, and provide professional development and technical support for data interpretation. Very large districts are likely to have professional development and research offices with staff to support school data use, whereas extremely small districts typically do not have such capacity. Larger districts also are more likely to have their own assessment and accountability processes in place, which may support accountability and data-driven decision making practices. District size is also important because of the small number of large districts that serve a large proportion of the nation’s students. A simple random sample of districts would include few—if any—of these large districts. Finally, accountability-related school improvement efforts are much more pronounced in large districts. For example, longitudinal analyses of district-level data from the Title I Accountability Systems and School Improvement Efforts study indicated that although the total number of schools identified for improvement has remained approximately the same from 2001 to 2004, there has been a steady trend toward a greater concentration of identified schools in large districts (U.S. Department of Education, 2006).

We propose to sort the population of districts nationally into three categories so that each category serves approximately equal numbers of students, based on enrollment data provided by the National Center for Education Statistics’ Common Core of Data (CCD):

Large (estimated enrollment 25,800 or greater). These are either districts in large urban centers or large county systems, which typically are organizationally complex and often are broken up into subdistricts.

Medium (estimated enrollment from 5,444 to 25,799). These are districts in small to medium-size cities or large county systems. They also are organizationally complex, but these systems tend to be centralized.

Small (estimated enrollment from 300 to 5,443). The small district group typically includes suburban districts, districts in large rural towns, small county systems, and small rural districts. These districts tend to have more limited organizational capacity.

Districts with 299 or fewer students will be excluded from the study. Such districts account for approximately one percent of all students but 21 percent of districts nationwide. The distribution of districts among the size strata and the proportion of public school students accounted for by each stratum are displayed in Exhibit 6. Excluding districts with 299 or fewer students, the proportions of districts in the three size strata are large (2.2 percent), medium (13.5 percent), and small (84.3 percent).

Exhibit 6

Distribution of Districts and Student Population, by District Size*

District Size | Number of Districts | Percent of Districts | Number of Students (000s) | Percent of Students
Large (≥25,800) | 249 | 1.8 | 15,834 | 33.0
Medium (5,444–25,799) | 1,497 | 10.6 | 15,844 | 33.0
Small (300–5,443) | 9,378 | 66.6 | 15,853 | 33.0
Very small (299 or less) | 2,956 | 21.0 | 478 | 1.0
TOTAL | 14,080 | 100.0 | 48,008 | 100.0

* Based on 2004-05 NCES Common Core of Data (CCD).
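The size cutoffs above translate directly into a classification rule. The Python sketch below assumes that enrollment is an integer CCD count. It also recomputes the within-frame proportions (2.2, 13.5, and 84.3 percent) from the district counts in Exhibit 6, with very small districts excluded.

```python
from typing import Optional

def size_stratum(enrollment: int) -> Optional[str]:
    """Assign a district to a size stratum from its CCD enrollment.

    Returns None for districts with 299 or fewer students, which are
    excluded from the sampling frame.
    """
    if enrollment >= 25_800:
        return "Large"
    if enrollment >= 5_444:
        return "Medium"
    if enrollment >= 300:
        return "Small"
    return None

# District counts from Exhibit 6, very small districts excluded.
FRAME_COUNTS = {"Large": 249, "Medium": 1_497, "Small": 9_378}
frame_total = sum(FRAME_COUNTS.values())  # 11,124 districts
frame_shares = {k: round(100 * v / frame_total, 1) for k, v in FRAME_COUNTS.items()}
# frame_shares -> {'Large': 2.2, 'Medium': 13.5, 'Small': 84.3}
```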

District Poverty Rate

Because of the relationship between poverty and achievement, schools with large proportions of high-poverty students are also more likely than schools with fewer high-poverty students to be low achieving, and thus to be identified as in need of improvement. Under NCLB, districts are required to provide identified Title I schools with technical assistance to support school improvement activities, including assistance in analyzing data from assessments and other student work to identify and address problems in instruction and assistance in identifying and implementing professional development strategies and methods of instruction that have proven effective in addressing the specific instructional issues that caused the school to be identified for improvement. We expect that high-poverty districts face greater demands for educational improvement, as well as the demands of working with larger numbers (or higher proportions) of schools identified for improvement. Consequently, we want our sample to include a sufficient number of both relatively high-poverty and relatively low-poverty districts so that survey results from these districts can be compared.

As a measure of district poverty, we will use the percentage of children ages 5 to 17 who are living in poverty, as reported by the U.S. Census Bureau and applied to districts by the National Center for Education Statistics. The distribution of districts among strata and the proportion of students accounted for by each stratum are displayed in Exhibit 7.

Exhibit 7

Distribution of Districts and Student Population, by District Poverty Rate*

District Poverty Rate | Number of Districts | Percent of Districts | Number of Students (000s) | Percent of Students
Other (≤20%) | 8,555 | 76.9 | 33,370 | 70.2
High (>20%) | 2,569 | 23.1 | 14,160 | 29.8
TOTAL | 11,124 | 100.0 | 47,530 | 100.0

* Excluding districts with 299 or fewer students. Based on data from the 2003 U.S. Census for the percentage of children ages 5 to 17 who are living in poverty and applied to districts by NCES.

District Sample Selection Strategy

Our original proposal called for a sample of 500 districts. We had anticipated an 85 percent participation rate, which would result in approximately 425 respondents. We currently propose to increase our sample size (up to 588 districts) with the goal of obtaining approximately 500 respondents.

The two variables of district size and poverty rate generate a six-cell grid into which the universe of districts (excluding very small districts) can be fit. Exhibit 8 shows the strata, a preliminary distribution of the number of districts in each stratum, and the initial sample size in each cell.
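The six-cell design and the initial draw of 89 districts per cell can be sketched as a stratified random sample. The sketch rests on several assumptions:

- Each district record carries hypothetical keys: a size label as defined above and the Census-based percentage of children in poverty.
- Each cell is drawn with Python's `random.Random` under an arbitrary fixed seed.
- Each sampled district's base weight is the number of frame districts in its cell divided by the number sampled.

The release of supplementary sample (up to 588 districts) is not modeled.

```python
import random
from collections import defaultdict

def poverty_stratum(poverty_pct: float) -> str:
    """High poverty means more than 20% of children ages 5-17 in poverty."""
    return "High" if poverty_pct > 20 else "Low"

def draw_stratified_sample(districts, per_cell=89, seed=2007):
    """Stratified random sample of district IDs from the six-cell grid.

    districts: dicts with hypothetical keys 'id', 'size' ("Large", "Medium",
    or "Small"; very small districts already excluded) and 'poverty_pct'.
    Returns (sample, weights): sampled IDs per cell and each cell's base
    weight (frame districts in cell / sampled districts in cell).
    """
    cells = defaultdict(list)
    for d in districts:
        cells[(d["size"], poverty_stratum(d["poverty_pct"]))].append(d["id"])
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    sample, weights = {}, {}
    for cell, ids in sorted(cells.items()):
        k = min(per_cell, len(ids))  # take every district if the cell is small
        sample[cell] = rng.sample(ids, k)
        weights[cell] = len(ids) / k
    return sample, weights
```

With six cells of at least 89 districts each, this draws 6 × 89 = 534 districts, matching the initial sample size given in the note to Exhibit 8.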

For most analyses, we will be combining data across cells. When examining data from all 498 responding districts, the confidence interval is ±6.5 percentage points. When examining data from all 249 high-poverty district respondents, the confidence interval is ±9 percentage points. In those cases where we are examining survey responses in a single cell, 83 respondents will yield a statistic with a confidence interval of no more than ±11 percentage points.2

For example, if we find that 50 percent of medium-size, high-poverty districts report a particular approach to supporting schools in data-based decision making, then the true population proportion is likely to be within 11 percentage points of 50 percent. More precisely, in 95 out of every 100 samples, the sample value should be within 11 percentage points of the true population value.
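The precision figures above can be approximated with the usual normal-approximation margin of error for a proportion, using p = 0.5 and z = 1.96.

- **Single cell.** For 83 respondents, the formula gives about ±10.8 percentage points, in line with the ±11 cited.
- **Combined cells.** The ±6.5 and ±9 figures are larger than simple random sampling would give (about ±4.4 and ±6.2). This is consistent with a design effect from unequal weights across cells.

The design-effect values of 2.2 and 2.1 used below are back-calculated assumptions, not figures stated in this section. The finite population correction is ignored.

```python
import math

def margin_of_error(n, p=0.5, deff=1.0, z=1.96):
    """Approximate 95% margin of error for a proportion, in percentage points.

    n: number of respondents; p: assumed proportion (0.5 is the worst case);
    deff: design effect (1.0 = simple random sampling). Ignores the finite
    population correction.
    """
    return 100 * z * math.sqrt(deff * p * (1 - p) / n)

print(round(margin_of_error(83), 1))             # single cell: 10.8
print(round(margin_of_error(498, deff=2.2), 1))  # all respondents, assumed deff: 6.5
print(round(margin_of_error(249, deff=2.1), 1))  # high-poverty cells, assumed deff: 9.0
```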

Exhibit 8

Number of Districts in the Universe and Quota Sample Size, by Stratum

District Size | Low Poverty | High Poverty | Total
Large | | |
Medium | | |
Small | | |
TOTAL | | | 491*

* Initially we will sample 89 districts in each cell (i.e., 534 districts). As needed, additional districts will be sampled (up to a total of 588 districts) to meet our quota of respondents in each stratum.

2. Data Collection Procedures

As described in the first section of this document, the district survey is a component of an interrelated data collection plan that also includes case studies of 30 schools in 10 districts and a review of secondary sources that address the same set of evaluation questions. Exhibit 9 outlines the schedule of data collection activities for which clearance is being sought. OMB has already approved case study data collection activities (OMB Control Number 1875-0241).

Exhibit 9

General Timeline of Data Collection Activities

Activity | Timeframe
Conduct Case Studies | Winter 2006 to Spring 2007
Administer District Survey | Fall 2007

District Survey

The evaluation questions outlined in the first section of this document, along with an analysis of the literature and pretesting of case study protocols, have generated a list of key constructs that have guided survey development. Below we describe the district survey in greater detail.

The district survey will focus on the characteristics of district data systems and district supports for data-driven decision-making processes within schools. Proposed questions for the district survey will address the transferability of data between state and district systems through the use of unique student and teacher identifiers, uses of the systems for accountability, and the nature and scale of the supports districts are providing for school-level use of data to improve instruction. In addition, the district survey will include a section on the types of student information available in district systems (information on types of student data available from state systems will be drawn from the survey conducted by NCEA). Our district survey will cover the suggested topics shown in Exhibit 10.

Exhibit 10

District Survey Topics

Topic | Subtopic | Evaluation Question Addressed
Data systems | | Q1: What kinds of systems are available to support district and school data-driven decision making?
DDDM tools for generating and acting on data | | Q2: Within these systems, how prevalent are tools for generating and acting on data?
Supports for DDDM | | Q3: How prevalent are state and district supports for school use of data systems to inform instruction?

The district survey will be formatted to contain structured responses that allow for the quantification of data, as well as open-ended responses in which district staff can provide more descriptive information on how DDDM is carried out in their district.

As noted earlier, respondents will also be given the option to complete the district survey online. The paper survey will contain the URL for the electronic version. To determine which districts have responded online, respondents will be asked to enter the identification number printed at the top of the paper survey, as well as the name and location of their district, on the online survey form.

Our approach to survey administration is designed to elicit a high response rate and includes a comprehensive notification process to achieve “buy-in” prior to data collection as well as multiple mailings and contacts with nonrespondents described later in this document. In addition, a computer-based system will be used to monitor the flow of data collection—from survey administration to processing and coding to entry into the database. This monitoring will help to ensure the efficiency and completeness of the data collection process.
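A computer-based monitoring system of the kind described can be reduced to a case record for each district that moves through fixed stages. The stage names, the fields, and the rule that stages advance one at a time are hypothetical illustrations, not the study's actual system. The sketch records whether a survey came back on paper or online, keyed by the identification number that respondents enter. It also lists nonrespondents for follow-up mailings.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Tuple

# Hypothetical processing stages, in order.
STAGES = ("mailed", "received", "coded", "entered")

@dataclass
class Case:
    district_id: int
    stage: str = "mailed"
    mode: Optional[str] = None  # "paper" or "online", set on receipt
    history: List[Tuple[str, date]] = field(default_factory=list)

def advance(case: Case, new_stage: str, when: date, mode: Optional[str] = None) -> None:
    """Move a case to the next stage, refusing to skip or repeat stages."""
    if STAGES.index(new_stage) != STAGES.index(case.stage) + 1:
        raise ValueError(f"cannot move case {case.district_id} from {case.stage} to {new_stage}")
    case.stage = new_stage
    if mode is not None:
        case.mode = mode
    case.history.append((new_stage, when))

def nonrespondents(cases) -> List[int]:
    """IDs of districts whose surveys have not yet been received."""
    return sorted(c.district_id for c in cases if c.stage == "mailed")
```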

Secondary Data Sources

The use of secondary data sources will enhance our analysis and avoid duplication of efforts. We have currently identified two main sources of additional data on how states, districts, and schools are using data systems.

The first source of data for secondary analysis is the NETTS study, which focuses on the implementation of the Enhancing Education Through Technology (EETT) program at the state and local levels. A NETTS teacher survey completed in January 2005 gathered data from over 5,000 teachers in approximately 850 districts nationwide. Teachers were asked about their use of technology in the classroom, including the use of technology-supported databases. Questions about data systems addressed the accessibility of an electronic data management system with student-level data, the source of the system (state, district, school), the kinds of data and supports provided to teachers for accessing data from the system, the frequency with which teachers use data to carry out specific educational activities, and the types of supports available to teachers to help them use student data.

The second major source of data for secondary analysis is the National Center for Educational Accountability (NCEA) state survey, first administered in August 2005, which focused on data system issues related to longitudinal data analysis. The second administration of the NCEA state survey was scheduled for completion by the end of September 2006. The 2006 survey updates data from the 2005 survey and adds some new items as well. The NCEA state survey will continue to be used as a secondary data resource for this study in the future. NCEA data provide key information on the data systems that states are building and maintaining as they gear up to meet NCLB requirements for longitudinal data systems (i.e., NCEA’s “ten essential elements”).3

Prepare Notification Materials and Gain District Cooperation

Gaining the cooperation of district representatives is a formidable task in large-scale data collection efforts. Increasingly, districts are beset with requests for information, and many have become reluctant to participate. Our efforts will be guided by three key strategies to ensure adequate participation: (1) an introductory letter signed by the Department, (2) preparation of high-quality informational materials, and (3) follow-up contacts with nonrespondents.

U.S. Department of Education Letter. A letter from the Department will be prepared that describes the purpose, objectives, and importance of the study (i.e., documenting the prevalence of data-driven decision making, identifying practices for effective data-driven decision making, and identifying challenges to implementation) and the steps taken to ensure privacy. The letter will encourage cooperation and will include a reference to the participation requirements of the Education Department General Administrative Regulations (EDGAR), stating that grantees are required to cooperate with evaluations of ESEA-supported programs (EDGAR Section 76.591). As noted earlier, the study is part of the national technology activities supported under Section 2404(b)(2) of Title II, Part D, of the Elementary and Secondary Education Act, and 85 percent of districts receive EETT funds under ESEA. A draft of the letter is included in Appendix A.

High-Quality Informational Materials. Preparing relevant, easily accessible, and persuasive informational materials is critical to gaining cooperation. The primary component of the project’s informational materials will be a tri-fold brochure. This brochure includes the following information:

The study’s purpose.

Information about the design of the sample and the schedule for data collection.

The organizations involved in designing and conducting the study.

A draft copy of the brochure is included in Appendix B. All informational materials will be submitted to ED for approval before they are mailed. Mailing of informational materials to districts will begin in spring 2007, before the survey is mailed.

Contacting Districts. The first step in contacting districts will be to send the notification letter and information packet to the district superintendent. As part of the notification process, we will ask the superintendent to identify the most appropriate respondent for the district survey (i.e., the district staff member who has primary responsibility for leading data-driven activities related to instructional improvement). As initial pretest activities have shown, the position held by this staff member varies across districts (e.g., Director of Technology, Director of Research and Assessment, Director of Curriculum). Therefore, we will take extra steps during the notification process to identify the best respondent for the survey (this will be particularly important in very large districts). The survey will then be shipped via Priority Mail to the district staff member identified by the superintendent. (A copy of the notification letter is included in Appendix A.)

Every effort is being made to minimize the burden on districts. However, very large districts are likely to be included in multiple studies because of the large proportion of the nation’s students they serve. In these districts, great care will be taken during notification activities to respond to participants’ concerns and questions. If needed, project staff will be prepared to submit proposals to district research committees.

3. Methods to Maximize Response Rates

A number of steps have been built into the data collection process to maximize response rates. Special packaging (e.g., Priority Mail) and a cover letter from the U.S. Department of Education have increased survey response rates in other recent national studies (e.g., NETTS, Evaluation of Title I Accountability Systems and School Improvement Efforts). In addition, by targeting the appropriate respondent for the survey, we are more likely to obtain a completed survey. Finally, all notification materials will refer to the participation requirements in the Education Department General Administrative Regulations (EDGAR), stating that the law requires grantees to cooperate with evaluations of ESEA-supported programs. The Study of Education Data Systems is part of the national technology activities supported under Section 2404(b)(2) of Title II, Part D, of the Elementary and Secondary Education Act, and 85 percent of districts receive EETT funds under ESEA.

Other steps to be taken to maximize response rates include multiple mailings and contacts with nonrespondents:

The surveys will be mailed with postage-paid return envelopes and instructions asking respondents to complete and return the survey within 3 weeks.

Three weeks after the initial mailing, a postcard will be sent reminding respondents of the survey closing date and offering either a replacement survey, if needed, or the option of completing the survey online.

Four weeks after the initial mailing, a second survey will be sent to all nonrespondents, requesting that they complete and return the survey (in the postage-paid envelopes included in the mailing) or complete the survey online within 2 weeks.

Six weeks after the initial mailing, telephone calls will be placed to all nonrespondents reminding them to complete and return the survey. A third round of surveys will be sent after telephone contact, if necessary.

The final step, if the response rate remains below 80 percent, will be for SRI to conduct telephone interviews with nonrespondents to increase the response rate. We will also use the data gathered through these phone interviews to conduct a study of nonresponse bias: responses obtained by phone will be compared with those from mail and online respondents to determine whether those who did not respond to the mail or online survey differ systematically from those who did. Phone interviewees will also be asked why they did not respond to the mail or online survey.

4. Pilot Testing

To improve the quality of data collection instruments and control the burden on respondents, all instruments will be pretested. Pretests of the district survey will be conducted with several respondents in districts near SRI offices in Arlington and Menlo Park, with districts in the case study pool that were not selected for the case study sample, and with selected members of the TWG. The results of the pretesting will be incorporated into revised instruments that will become part of the final OMB clearance package. If needed, the revised survey will be piloted with nine or fewer respondents in a small set of local districts before data collection. The district survey can be found in Appendix C.

5. Contact Information

Dr. Barbara Means is the Project Director for the study. Her mailing address is SRI International, 333 Ravenswood Avenue, Menlo Park, CA 94025. Dr. Means can also be reached at 650-859-4004 or via e-mail at [email protected].

Christine Padilla is the Deputy Director for the study. Her mailing address is SRI International, 333 Ravenswood Avenue, Menlo Park, CA 94025. Ms. Padilla can also be reached at 650-859-3908 or via e-mail at [email protected].

References

Choppin, J. (2002). Data use in practice: Examples from the school level. Paper presented at the annual meeting of the American Educational Research Association, New Orleans.

Confrey, J., and Makar, K. M. (2005). Critiquing and improving the use of data from high-stakes tests with the aid of dynamic statistics software. In C. Dede, J. P. Honan, and L. C. Peters (Eds.), Scaling up success: Lessons learned from technology-based educational improvement (pp. 198-226). San Francisco: Jossey-Bass.

Cromey, A. (2000). Using student assessment data: What can we learn from schools? Policy Issues, Issue 6. Oak Brook, Illinois: North Central Regional Educational Laboratory.

Feldman, J., and Tung, R. (2001). Using data based inquiry and decision-making to improve instruction. ERS Spectrum, 19(3), 10-19.

Halverson, R., Grigg, J., Prichett, R., and Thomas, C. (2005). The new instructional leadership: Creating data-driven instructional systems in schools. Madison, Wisconsin: Wisconsin Center for Education Research, University of Wisconsin.

Herman, J., and Gribbons, B. (2001). Lessons learned in using data to support school inquiry and continuous improvement: Final report to the Stuart Foundation. Los Angeles, California: UCLA Center for the Study of Evaluation.

Light, D., Wexler, D., and Heinze, J. (2004). How practitioners interpret and link data to instruction: Research findings on New York City schools’ implementation of the Grow Network. Paper presented at the annual meeting of the American Educational Research Association, San Diego.

Mason, S. (2002). Turning data into knowledge: Lessons from six Milwaukee public schools. Paper presented at the annual meeting of the American Educational Research Association, New Orleans.

McCarthy, M. S. (2001). Making standards work: Washington elementary schools on the slow track under standards-based reform. Seattle, Washington: Center on Reinventing Public Education.

Means, B. (2005). Evaluating the impact of implementing student information and instructional management systems. Background paper prepared for the Policy and Program Studies Service, U.S. Department of Education.

Means, B. (2006). Prospects for transforming schools with technology-supported assessment. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 505-519). Cambridge, UK: Cambridge University Press.

Means, B., Roschelle, J., Penuel, W., Sabelli, N., and Haertel, G. (2004). Technology’s contribution to teaching and policy: Efficiency, standardization, or transformation? In R. E. Floden (Ed.), Review of research in education (Vol. 27, pp. 159-181). Washington, D.C.: American Educational Research Association.

Palaich, R. M., Good, D. G., and van der Ploeg, A. (2004). State education data systems that increase learning and improve accountability. Policy Issues (no. 16). Naperville, Illinois: Learning Point Associates.

Roschelle, J., Pea, R., Hoadley, C., Gordin, D., and Means, B. (2001). Changing how and what children learn in school with computer-based technologies [Special Issue, Children and Computer Technology]. The Future of Children, 10(2), 76-101.

Stringfield, S., Wayman, J. C., and Yakimowski-Srebnick, M. E. (2005). Scaling up data use in classrooms, schools, and districts. In C. Dede, J. P. Honan, and L. C. Peters (Eds.), Scaling up success: Lessons learned from technology-based educational improvement (pp. 133-152). San Francisco: Jossey-Bass.

Turnbull, B. J., and Hannaway, J. (2000). Standards-based reform at the school district level: Findings from a national survey and case studies. Washington, D.C.: U.S. Department of Education.

U.S. Department of Education, Office of Educational Technology. (2004). Toward a new golden age in American education: How the Internet, the law and today’s students are revolutionizing expectations. Washington, D.C.: Author.

U.S. Department of Education. (2006). Title I accountability and school improvement from 2001 to 2004. Washington, D.C.: Author.

Wayman, J. C., Stringfield, S., and Yakimowski, M. (2004). Software enabling school improvement through analysis of student data (Report No. 67). Baltimore: Center for Research on the Education of Students Placed at Risk, Johns Hopkins University.

Appendix A

Notification Letters

[informational letter to be sent to survey districts]

Date

[name and address]

Dear Superintendent:

We are writing to inform you about an upcoming evaluation sponsored by the U.S. Department of Education. The Study of Education Data Systems and Decision Making is part of the National Technology Activities Task Order (NTA), an effort by the Department to provide analytic and policy support for educational technology leadership activities focused on the implementation of Title II, Part D, of NCLB. A copy of the study brochure is enclosed for your information. The study will document the availability of systems and supports for using data to improve instruction, and the prevalence and nature of data-informed decision making in districts and schools. It will examine the challenges that educators face in trying to use data systems to improve instruction, as well as the practices of districts and schools that have used data to inform their decisions and have obtained successful outcomes for their students. Data for the study will include case studies of 30 schools conducted during the 2006-07 school year and a nationally representative survey of 500 districts conducted in spring 2007. Your district has been selected to be part of the national survey sample.

Given the topics covered in the survey, the most appropriate respondent will be the staff member in your district who has primary responsibility for leading data-driven instructional improvement activities. We would appreciate it if you would identify this person by e-mail to SRI International ([email protected]), which is conducting the study under contract to the U.S. Department of Education, or by mail in the envelope provided.

The information collected for this study can be used by (1) states and districts to identify and develop policies to support promising practices in data-driven decision making and to develop and implement performance measurement systems that use high-quality data, and (2) U.S. Department of Education staff to design outreach efforts to stimulate and enhance the use of data systems to improve instruction. Please note that we will provide participants with an executive summary of our findings that will include information on promising practices in data-driven decision making drawn from the study.

The information collected for this study will be used only for statistical purposes, and no information that identifies individual districts will be provided to anyone outside the study team, except as required by law. Reports prepared for the Department of Education will summarize findings across the sample of schools and districts and will not associate responses with a specific district, school, or individual.

If you have any comments or questions regarding the study, please contact the Deputy Project Director, Christine Padilla, at 650-859-3908 or via e-mail at [email protected]. Thank you very much for your time and cooperation.

Sincerely,

Timothy J. Magner
Director, Office of Educational Technology

Alan Ginsburg
Director, Policy and Program Studies Service

Enclosures (2)

[cover letter for district survey]

Date

Dear Colleague: