Att_NEIREL SS Part A


An Evaluation of the Thinking Reader Software Intervention

OMB: 1850-0837


OMB Clearance Request

Supporting Statement

Part A

April 2007

Prepared For:

Institute of Education Sciences

United States Department of Education

Contract No. ED‑06‑CO‑0025

Prepared By:

Regional Educational Laboratory—Northeast and the Islands

55 Chapel Street

Newton, MA 02458-1060

Supporting Statement

Request for Clearance of Information Collection Forms for an Evaluation of the Thinking Reader Software Intervention

A1. Justification

The Institute of Education Sciences (IES) of the U.S. Department of Education is conducting an Evaluation of the Thinking Reader Software Intervention, to be carried out by the Northeast Regional Educational Laboratory (REL-NEI). The current authorization for the Regional Educational Laboratories (REL) program is under the Education Sciences Reform Act of 2002, PL 107-279, Part D, Section 174, administered by the Institute of Education Sciences’ National Center for Education Evaluation and Regional Assistance (see Attachment 1).

The national priority for the 2005-2010 REL awards is addressing the goals of the reauthorized Elementary and Secondary Education Act (ESEA). ESEA requires that schools and districts measure academic performance in reading and mathematics in grades three through eight and in one grade in high school, in order to identify weaknesses and make appropriate changes. Schools are considered to have made adequate yearly progress only if all student groups, including poor and minority students, students with limited English proficiency, and students with disabilities, meet the State’s adequate yearly progress targets. Schools and districts that do not make sufficient progress toward meeting their State’s adequate yearly progress targets, based on State-defined academic achievement standards and adequate yearly progress measures, are classified as in need of improvement. The Regional Educational Laboratories are charged with

…carrying out applied research projects that are designed to serve the particular educational needs (in prekindergarten through grade 16) of the region in which the regional educational laboratory is located, that reflect findings from scientifically valid research, and that result in user-friendly, replicable school-based classroom applications geared toward promoting increased student achievement, including using applied research to assist in solving site-specific problems and assisting in development activities (including high-quality and on-going professional development and effective parental involvement strategies)

Improving adolescent literacy is one of the high-priority needs of the Northeastern region (Connecticut, Maine, Massachusetts, New Hampshire, New York, Puerto Rico, Rhode Island, Vermont, and the Virgin Islands), and this rigorous study has been designed to provide causally valid answers and follows IES standards, as described in this authorizing legislation, for studies of effectiveness through field tests based on experimental designs.

The Need to Improve Adolescents’ Literacy

Improving adolescent literacy is a critical step toward improving adolescent academic achievement more broadly. More than 3,000 students drop out of high school every school day (Biancarosa & Snow, 2004), in part because they lack the literacy skills needed to keep up with an increasingly challenging curriculum (Kamil, 2003; Snow & Biancarosa, 2003). Eight million students in grades 4-12 perform below the proficient level on national assessments, which suggests that many are unable to understand the texts that are required reading in high school (NCES, 2003).

The Northeast region reflects this national problem. The needs analysis conducted by REL-NEI identifies adolescent literacy as a key need; indeed, only between 30 percent and 43 percent of 8th graders in the Northeast states shown in Table 1 scored at the proficient level on the 2003 NAEP reading assessment. Students who did not reach the proficient level have varied reading needs (Biancarosa & Snow, 2004). As many as 25 percent of students entering 6th grade in urban districts may lack essential phonemic awareness, decoding, and comprehension strategies (Kotula, 2005). Others struggle to comprehend and learn from the varied texts required in the content areas (RAND Reading Study Group, 2002). A persistent gap remains among the percentages of white, black, and Hispanic students who score at the proficient level in the Northeast region (Table 1), mirroring national results (NCES, 2003).

Table 1. Percent of Students at the Proficient Level, NAEP 2003 8th Grade Reading Assessment

| State | Overall | White | Black | Hispanic | Asian/PI |
|---|---|---|---|---|---|
| CT | 37 | 45 | 12 | 13 | 54 |
| ME | 36 | 37 | Low (n) | Low (n) | Low (n) |
| MA | 43 | 49 | 18 | 14 | 53 |
| NH | 40 | 41 | Low (n) | Low (n) | Low (n) |
| NY | 35 | 48 | 14 | 18 | 42 |
| RI | 30 | 35 | 14 | 9 | 22 |

A prevailing view of this problem is that at a time when students need a high degree of literacy for academic learning, they are not receiving the reading instruction they need. Secondary teachers tend to focus on content more than on the reading process, and many lack the knowledge and skills to teach reading. Districts lack policies to address older students’ reading needs, and the effectiveness of many programs has not been established empirically (Kamil, 2003).

While programs for improving adolescents’ literacy are only beginning to undergo rigorous evaluations, certain teaching and learning strategies have a strong empirical base. A robust evidence base supports building motivation (Guthrie & Wigfield, 2000); teaching comprehension strategies (Duke & Pearson, 2002; NICHD, 2000); and engaging students, including those with disabilities, in cooperative learning activities around text (Klingner, Vaughn, Arguelles, Hughes & Leftwich, 2004; Palincsar & Herrenkohl, 2002; RAND Reading Study Group, 2002). Indeed, Rosenshine and his colleagues’ meta-analyses found that when standardized tests of reading comprehension were used as outcome measures, the median effect sizes for reciprocal teaching and for teaching students to generate questions were 0.32 and 0.36, respectively (Rosenshine & Meister, 1994; Rosenshine, Meister, & Chapman, 1996).

The research challenge is to integrate these evidence-based teaching and learning strategies into coherent interventions that address the varied reading needs of adolescents entering middle school, and to test those interventions in middle schools (Biancarosa & Snow, 2004). The Thinking Reader intervention represents that coherent integration of teaching and learning strategies, and the study proposed here will serve as an empirical test of its effectiveness.

Study Overview

The Thinking Reader (TR) study will examine the effect of using this software program on 6th graders’ reading comprehension, vocabulary, use of comprehension strategies, and motivation to read. The study design involves within-school random assignment of teachers (and their intact classrooms) to the intervention or control condition. To balance the research activities done within the Northeast region, the Thinking Reader study will take place in Connecticut. During the 2007-2008 school year, districts in Connecticut will have the opportunity to participate in this study, implementing the program with 6th graders. REL-NEI prefers to implement the program in high-need, low-resource urban districts (e.g., Bridgeport, Hartford, New Haven). Ultimately, 25 schools will participate in the study. We plan to recruit two 6th grade English/Language Arts (ELA) teachers in each of the 25 schools, for a total of 50 teachers.1

Because Thinking Reader is intended to be implemented as part of students’ ELA instruction, the classroom is the most obvious choice as the unit of assignment. However, our team has decided to use the teacher, together with all of his or her classrooms, as the unit of assignment. We found in a prior study that randomizing classrooms within teacher changed the dynamic of instruction: when a teacher taught one section using the software and another section in the traditional manner, the teacher felt in competition with the software. Therefore, each 6th grade ELA teacher, along with all of his or her classroom sections, will be randomly assigned within each school to the Thinking Reader or control condition. We assume that, on average, there will be:

Two 6th grade ELA teachers in each school (one treatment and one control teacher in each school)

Each teacher teaches two classrooms (four classrooms in each school: two taught by the treatment teacher and two taught by the control teacher)

24 students in each classroom
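As a rough check on the scale of the study, the average-case assumptions above can be multiplied out. The sketch below is illustrative only: the constant names and the `expected_sample` helper are hypothetical, and actual counts will differ wherever schools depart from the assumed averages.

```python
# Hypothetical helper: expected sample sizes implied by the average-case
# assumptions listed above (actual counts will vary by school).
SCHOOLS = 25
TEACHERS_PER_SCHOOL = 2        # one treatment and one control teacher
CLASSROOMS_PER_TEACHER = 2
STUDENTS_PER_CLASSROOM = 24


def expected_sample(schools: int = SCHOOLS) -> dict:
    """Multiply out the design assumptions, one level at a time."""
    teachers = schools * TEACHERS_PER_SCHOOL
    classrooms = teachers * CLASSROOMS_PER_TEACHER
    students = classrooms * STUDENTS_PER_CLASSROOM
    return {
        "teachers": teachers,
        "classrooms": classrooms,
        "students": students,
        # Arms are equal only when every school has exactly two teachers.
        "students_per_arm": students // 2,
    }


if __name__ == "__main__":
    print(expected_sample())
    # {'teachers': 50, 'classrooms': 100, 'students': 2400, 'students_per_arm': 1200}
```

Schools with more than two teachers, or with an odd number of teachers, would shift these totals and tilt the arms toward treatment.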

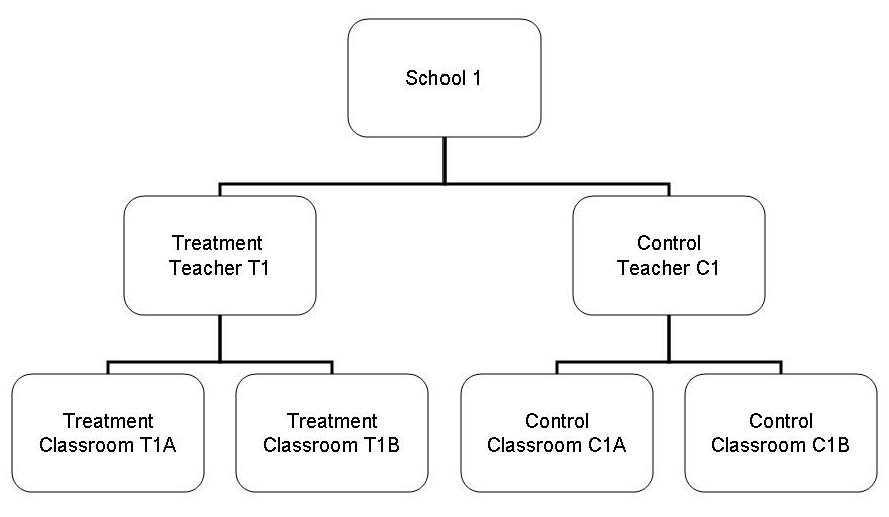

We will exclude schools that have only one 6th grade ELA teacher. Where more than two teachers are available and their number is odd, we will assign the extra teacher to the treatment condition, helping us maintain our treatment sample size. For example, if there are four 6th grade ELA teachers in a school, two would be randomly assigned to the treatment condition and two to the control condition. If there are five, three would be randomly assigned to the treatment condition and two to the control condition. The randomization plan within each school is depicted in Figure 1.

Figure 1. Thinking Reader Within-School Randomization Plan

Note: Unit of assignment is the teacher.
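The within-school assignment rule can be expressed as a short procedure. This is a minimal sketch that assumes a simple random permutation of each school's teacher list drawn with a recorded seed. The document does not specify the randomization mechanism, and `assign_teachers` is a hypothetical name.

```python
import random


def assign_teachers(teacher_ids, seed=None):
    """Randomly split one school's 6th grade ELA teachers into two conditions.

    Returns None for schools with fewer than two teachers (excluded from the
    study). When the number of teachers is odd, the extra teacher is placed
    in the treatment condition.
    """
    if len(teacher_ids) < 2:
        return None
    rng = random.Random(seed)                # seeded for a reproducible, auditable draw
    shuffled = list(teacher_ids)
    rng.shuffle(shuffled)                    # uniform random permutation
    n_treatment = (len(shuffled) + 1) // 2   # ceil(n / 2): odd counts favor treatment
    return {
        "treatment": shuffled[:n_treatment],
        "control": shuffled[n_treatment:],
    }


if __name__ == "__main__":
    result = assign_teachers(["T1", "T2", "T3", "T4", "T5"], seed=2007)
    print(len(result["treatment"]), len(result["control"]))  # 3 2
```

Recording the seed for each school would let the assignment be reproduced and audited later. Which specific teachers land in each arm depends on the seed.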

We are targeting large, urban, high-need school districts in Connecticut. Connecticut groups its districts into Education Reference Groups (ERGs) for reporting purposes,2 and districts that fit the large, urban, high-need label fall into ERG I. Districts in this group are Ansonia, Bridgeport, Danbury, Derby, East Hartford, Hartford, Meriden, New Britain, New Haven, New London, Norwalk, Norwich, Stamford, Waterbury, West Haven, and Windham. We are also prepared to recruit in Vermont and Maine, if needed.

We will focus on Bridgeport, Hartford, and New Haven. Of the seven high-need districts, these three have 76 of the 86 schools in the ERG that enroll 6th graders and hold regular school status,3 according to the most recent Common Core of Data (2004-2005).

Intervention to be Tested

We propose to study the effect of the Thinking Reader software program (Tom Snyder Productions, Scholastic, 2004) on the reading comprehension, vocabulary, and engagement of 6th grade struggling readers and their typically achieving peers. Sixth grade, usually the first year of middle school, was chosen in order to deliver the intervention as early as possible, so that reading skills are supported as reading for content-area comprehension becomes more important for academic success. Based on the universally designed research prototype developed and studied by Dalton and colleagues, Thinking Reader presents nine award-winning digital novels in a supported reading environment. This environment embeds strategy instruction, five levels of student strategy responses with feedback, animated coaches who model reading strategies by thinking aloud, audio narration and text-to-speech access to the text, and hyperlinked glossary items in English and Spanish. Thinking Reader also includes ongoing assessment tools: individual student work logs that collect all of students’ responses to the strategy prompts, quick-check comprehension quizzes, and guided student self-assessment. The student versions of the digital novels are complemented by a teacher management system that allows teachers to review students’ work, sort it by student, date, or type of assessment, and send electronic feedback messages. Finally, Thinking Reader includes a literature guide with discussion questions and activities, a reading strategies poster, and strategy bookmarks for students.

While Thinking Reader is scaffolded to support struggling readers, it is designed to be used with the full range of students found in today’s classrooms. Thinking Reader is typically used as a replacement curriculum, in which the grade-appropriate digital novels replace those the teacher normally uses. It is aligned with state standards and curriculum frameworks for comprehension and literature. According to Tom Snyder Productions, Thinking Reader is currently being used at approximately 760 sites in 46 states, by 67,000 students.4

The main goal of Thinking Reader is to develop engaged, active, and strategic readers who are able to comprehend academically rigorous literature. The primary targeted outcomes for Thinking Reader are:

Improved reading comprehension

Improved reading vocabulary

Improved use of reading comprehension strategies

Improved motivation to read

The proposed research study is a well-powered, randomized field trial intended to provide a rigorous test of Thinking Reader’s effectiveness. The central research questions are:

What is the effect of Thinking Reader on students’ reading achievement?

What is the effect of Thinking Reader on students’ motivation to read?

Does using Thinking Reader as part of English/Language Arts instruction differentially affect the reading achievement and/or motivation of subgroups of students?

Student reading achievement and motivation for reading are the main outcomes for this evaluation. We intend to administer four measures:5

Gates-MacGinitie Reading Tests (MacGinitie, MacGinitie, Maria, Dreyer, & Hughes, 1999): reading comprehension subtest

Gates-MacGinitie Reading Tests (MacGinitie et al., 1999): reading vocabulary subtest

Metacognitive Awareness of Reading Strategy Inventory (MARSI; Mokhtari & Reichard, 2002)

Motivation for Reading Questionnaire (MRQ; Guthrie & Wigfield, 2000; Wigfield & Guthrie, 1997)

Each of these measures will be administered twice, once at the beginning of the study (approximately December 2007) and again at the end of the study (approximately May 2008). We estimate that we will need a total of 90 minutes (two class periods) at the beginning and at the end of the study to administer the four measures. All measures will be administered in English.6

Because we wish to examine the effect of the intervention on subgroups of students, we will ask schools to provide us with students’ ELL (English Language Learner) status and special education status.7 These are data that schools collect routinely and can easily provide.

We propose sending trained field staff to visit a random subset of 24 classrooms six times during the course of the study, so that we may gather information on how instruction, processes, and engagement differ between the Thinking Reader and the control group classrooms. The observation data for the treatment classrooms also will help us describe the quality of implementation of the intervention. We will schedule these visits to coincide with the treatment teachers’ coverage of each of the Thinking Reader novels, which will likely take place between January 2008 and April 2008. Twelve treatment classrooms and 12 control classrooms will be visited twice during each 4-week period in which a Thinking Reader novel is covered.
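The observation schedule can be sketched as a two-step procedure. First, draw 12 treatment and 12 control classrooms. Then visit each sampled classroom twice per novel period.

The sketch rests on several assumptions. It assumes three 4-week novel periods, inferred from six visits at two per period. It assumes 50 classrooms per arm (25 teachers with two classrooms each). The classroom IDs are hypothetical, and `observation_plan` is an illustrative helper, not part of any study software.

```python
import random
from collections import Counter


def observation_plan(treatment_rooms, control_rooms, per_arm=12,
                     novel_periods=3, visits_per_period=2, seed=None):
    """Draw the observed classrooms and list every scheduled visit.

    Returns (sampled_rooms, visits), where each visit is a tuple
    (novel_period, visit_number, classroom).
    """
    rng = random.Random(seed)
    # Sample without replacement within each arm.
    sampled = rng.sample(treatment_rooms, per_arm) + rng.sample(control_rooms, per_arm)
    visits = [
        (period, visit, room)
        for period in range(1, novel_periods + 1)
        for visit in range(1, visits_per_period + 1)
        for room in sampled
    ]
    return sampled, visits


if __name__ == "__main__":
    treatment = [f"T{i:02d}" for i in range(50)]   # hypothetical classroom IDs
    control = [f"C{i:02d}" for i in range(50)]
    rooms, visits = observation_plan(treatment, control, seed=1)
    per_room = Counter(room for _, _, room in visits)
    print(len(rooms), len(visits), set(per_room.values()))  # 24 144 {6}
```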

We have selected the CIERA Classroom Observation Scheme (Taylor & Pearson, 2000) as a reliable, low-inference tool that can be used to document reading instruction in both the intervention and control classrooms.8 The measure documents seven parameters of the instructional environment: (1) who in the classroom is providing instruction and working with students; (2) the observed instructional groupings; (3) the major academic/social area being covered; (4) the materials used by the teacher and students; (5) the specific literacy activity; (6) the interaction style used by the teacher; and (7) the mode of student response. The CIERA Classroom Observation Scheme protocol has been used in previous research, with reported inter-rater reliability statistics between 0.92 and 0.95.

In addition to classroom observations, we will document the fidelity of treatment by examining teachers’ responses to email probes and by reviewing the electronic student work logs (automatically collected by the software as part of its standard use). Biweekly email probes will be sent to each teacher in the form of a short checklist that teachers can complete to indicate whether they are on track with their implementation schedule and to highlight any technical issues that might be affecting implementation and need to be addressed by the technical support staff (see Attachment 2 for an example). The electronic work logs collected automatically by the software will serve as an excellent measure of fidelity, because they record the date, time, minutes per session, strategy responses, quick-check comprehension scores, and teacher messages for each session and for each student. To judge whether implementation is occurring as intended, we will compile instructional data including the time students spend reading with Thinking Reader, the number of lab sessions per week and per novel, and teachers' ratings of the quality of student work as acceptable (e.g., basic or advanced responses). We will score the treatment classrooms on a matrix to document variability in Thinking Reader implementation. These data will be used for descriptive purposes only, to contextualize and help interpret the results of the hierarchical linear modeling (HLM) analyses.
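One way to compile weekly usage measures from the electronic work logs is sketched below. The record layout (`SessionRecord` and its fields) is hypothetical, because the actual export format of the Thinking Reader work logs is not described here. The sketch computes two measures per classroom and week:

- Lab sessions: the number of distinct session dates.
- Minutes per student: total logged minutes divided by the number of students who logged in that week.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class SessionRecord:
    """Hypothetical shape of one student session exported from the work logs."""
    classroom: str
    student_id: str
    session_date: date
    minutes: float


def weekly_usage(records):
    """Summarize usage per (classroom, ISO year, ISO week).

    lab_sessions counts distinct session dates; minutes_per_student divides
    total logged minutes by the number of distinct students who logged in.
    """
    dates = defaultdict(set)
    minutes = defaultdict(float)
    students = defaultdict(set)
    for r in records:
        iso = r.session_date.isocalendar()      # (ISO year, ISO week, weekday)
        key = (r.classroom, iso[0], iso[1])
        dates[key].add(r.session_date)
        minutes[key] += r.minutes
        students[key].add(r.student_id)
    return {
        key: {
            "lab_sessions": len(dates[key]),
            "minutes_per_student": minutes[key] / len(students[key]),
        }
        for key in dates
    }


if __name__ == "__main__":
    logs = [
        SessionRecord("R1", "s1", date(2008, 1, 14), 30),
        SessionRecord("R1", "s2", date(2008, 1, 14), 40),
        SessionRecord("R1", "s1", date(2008, 1, 16), 20),
    ]
    print(weekly_usage(logs))
```

Per-novel totals could be built the same way by keying on a novel identifier instead of the ISO week, if the logs carry one.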

Finally, a brief teacher background survey (see Attachment 3) will be given to all teachers. This survey will include questions such as years of experience, highest degree completed, and certification status.

Request for OMB Clearance

This submission is a request for approval of the data collection instruments (teacher background questionnaire and biweekly email probes) that will be used to support the evaluation of the Thinking Reader software intervention. This statement describes the study approach and methodology for collecting and analyzing data. All instruments are appended to this document, which addresses OMB concerns regarding respondent burden and paperwork control. This document has been prepared according to guidelines for completing the justification statement to accompany OMB IC Data Parts 1 and 2.

A2. Purpose and Use of the Information Collected

This research study is a well-powered, randomized field trial intended to provide a rigorous test of Thinking Reader’s effectiveness on reading comprehension, vocabulary, and engagement of 6th grade struggling readers and their typically achieving peers. Our goal is to address the following research questions:

What is the effect of Thinking Reader on students’ reading achievement?

What is the effect of Thinking Reader on students’ motivation to read?

Does using Thinking Reader as part of English/Language Arts instruction differentially affect the reading achievement and/or motivation of subgroups of students?

The data will be useful for state and local policymakers, districts, and schools. An important goal of this evaluation is to produce findings about whether and how the intervention improves students’ reading outcomes (and, by extension, to inform school improvement efforts).

Because relatively little information is available to guide the improvement of adolescent literacy, the information will support policy decisions about funding reading comprehension software programs used in middle schools. In addition, the data will be a resource to support additional research on reading comprehension, vocabulary, and engagement by academic researchers or others interested in students’ reading development.

A3. Use of Technology in Information Collection

To decrease burden, REL-NEI will collect data electronically to the fullest extent possible. The teacher background survey will be administered online using Edoceon, a web-based survey tool developed at the American Institutes for Research (AIR). Students’ ELL and special education status will be collected in whatever medium the school prefers, which may be electronic files (e.g., Excel spreadsheets) or hard copies, which we would then transfer into a database. We will also use technology to collect data on fidelity of implementation from the treatment group teachers. The biweekly email probes will be sent to each teacher in the form of a short checklist that teachers can easily and quickly complete.

Electronic data collection of student assessments (not counted towards burden). Our current plan is to administer the Gates-MacGinitie Reading Tests traditionally, as paper-and-pencil assessments. Although the Gates-MacGinitie test is offered online, only one of the forms (Form S) is available for electronic administration. Riverside Publishing has no set date for when Form T will be available online, although they state that it will be “soon.” We require two different forms for the pretest and the posttest to avoid practice effects. The alternative might be to administer the pretest online and the posttest on paper (or vice versa), but that would confound the timing of measurement with the mode of administration. Should Form T become available by the time this study begins, we will administer both the pretest and posttest Gates-MacGinitie measures (the reading comprehension and reading vocabulary subtests) online. As with the teacher background survey, the MARSI and MRQ also will be administered through the Edoceon online survey system.

Classroom observations (not counted towards burden). The protocol we have chosen (the CIERA) must be administered in person by trained site visitors, so technology will not be used to conduct the classroom observations. We are, however, exploring the possibility of an electronic version of the observation protocol, which would allow us to import the data directly into a statistical analysis program and eliminate a separate data entry step.

A4. Efforts to Identify Duplication

To our knowledge, no other randomized controlled trials of the Thinking Reader program are being conducted in the nation. None of the information we request is available elsewhere. While Connecticut does administer state reading tests at the end of 5th and 6th grade, the state program does not have the reading vocabulary subscale that we need to test our outcomes. We also prefer to use the intact Gates-MacGinitie tests (of reading comprehension and reading vocabulary) because this assessment is aligned with the uses and goals of Thinking Reader, and its subtests are the outcome measures used in prior, smaller studies of the software. Using the Gates-MacGinitie in this study will provide a continuous chain of evidence that will allow us to be confident about the intervention’s effect across different student samples and study settings.

We require student achievement data beyond what is typically collected on a yearly basis. For this study, we must collect data from students to establish baseline equivalence, improve our statistical power, conduct intent-to-treat analyses, and assess the impact of the intervention on student achievement.

A5. Burden on Small Entities

The primary entities for the study are schools. All primary data collection will be coordinated by AIR employees, to reduce the burden on school employees. As noted in our response to A3, we will collect data electronically to the fullest extent possible, and have tried to limit the amount of data being collected to the minimum. That is, we are limiting the data collected to that which is directly relevant to measuring the outcomes targeted by the intervention, and limiting our data collection from teachers to a brief background survey (teacher characteristics will be used as covariates in our analyses). Student ELL and special education status information is gathered by all schools as standard procedure, and providing this information to us should be of minimal burden.

No special provisions are necessary for small organizations or small businesses. The size of the program is not relevant to this data collection effort.

A6. Consequences of Less Frequent Collection

If the proposed data were not collected, IES and REL-NEI would be unable to provide information on the efficacy of using a reading comprehension program such as Thinking Reader. As a result, IES and REL-NEI would not know whether the program has any impacts, either positive or negative, on participating 6th grade students.

We have made every effort to limit the frequency of data collection. Teacher background and student ELL and special education data will be collected only once. We do require pretest and posttest data from students to establish baseline equivalence, improve our statistical power, conduct intent-to-treat analyses, and assess the impact of the intervention on student achievement. We will send field staff to visit a random subset of 24 classrooms six times each year, so that we may gather information on how instruction, processes, and engagement differ between the Thinking Reader and the control group classrooms. We believe it is important to gather this information a minimum of six times a year, to trace the development of and changes in teachers’ literacy instruction. Because classroom observations do not impose any burden on the persons being observed, the frequency of the classroom observations should not create a hardship for teachers or students.

A7. Special Circumstances

No special circumstances apply to this study.

A8. Federal Register Announcement and Outside Consultations

a. Federal Register Announcement

The 60-day notice for this collection was published in the Federal Register on May 10, 2007, and ended July 7, 2007. We will publish a 30-day Federal Register notice to allow for additional public comment.

b. Consultation Outside the Agency

The Technical Working Group (TWG) assembled for this project is composed of nine leading researchers who can provide invaluable expertise in the fields most relevant to this study (literacy, randomized controlled trials, evaluation design, and statistics). The members of the TWG are:

Dr. J. Lawrence Aber, New York University

Dr. Anthony Bryk, Stanford University

Dr. Larry Hedges, Northwestern University

Dr. Stephen Klein, RAND Corporation

Dr. Don Leu, University of Connecticut

Dr. Richard Murnane, Harvard University

Dr. Michael Nettles, Educational Testing Service

Dr. Aline Sayer, University of Massachusetts, Amherst

Dr. Barbara Schneider, Michigan State University

c. Unresolved Issues

None.

A9. Payment or Gift to Respondents

Partner schools will receive the following Thinking Reader materials and services at no cost:

Thinking Reader software with free technical assistance available by telephone

Supporting equipment (headphones and microphones) provided, if not currently installed

Hard copies of the selected novels in sufficient quantity for all treatment classrooms

Additional software and hard copies of novels for use in the control classrooms in the year following completion of the study

Free professional development for teachers participating in the study. Treatment group teachers will receive training on how to use Thinking Reader prior to the onset of the study (approximately November 2007), and control group teachers will receive training after the study is completed, but before the beginning of the next school year (approximately August 2008).

Because the professional development activities will take place outside of school hours, and to abide by requirements of the agreements between the teacher unions and the districts, teachers will be compensated at the district hourly rate for the study training.

A10. Assurance of Confidentiality

All project staff follow the confidentiality and data protection requirements of IES (The Education Sciences Reform Act of 2002, Title I, Part E, Section 183). We will protect the confidentiality of all information collected for the study and will use it for research purposes only. No information that identifies any study participant will be released. Information from participating institutions and respondents will be presented at aggregate levels in reports. Information on respondents will be linked to their institution but not to any individually identifiable information. No individually identifiable information will be maintained by the study team. All institution-level identifiable information will be kept in secured locations, and identifiers will be destroyed as soon as they are no longer required. The American Institutes for Research (AIR) obtains signed NCEE Affidavits of Nondisclosure from all employees, subcontractors, and consultants who may have access to these data and submits them to our NCEE COR (OK Park). In addition, all members of the study team having access to the institution-level data have been certified by AIR’s Institutional Review Board as having received training in the importance of confidentiality and data security.

We will follow procedures for ensuring and maintaining confidentiality, consistent with the Family Educational Rights and Privacy Act of 1974 (20 USC 1232g) and the Privacy Act of 1974 (5 USC 552a), which covers the collection, maintenance, and disclosure of information from or about identifiable individuals. Data to be collected will not be released with individual student, teacher, or school identifiers. During the course of the project, only project analysts under the supervision of the Principal Investigator will keep any necessary identifying information and documents. No public use files are anticipated. After the project is completed, however, the contractor will destroy all identifying information and provide the Department with the data files.

Project staff will inform respondents both in writing and orally that they will not be identified individually in any analyses or publications. To ensure that any information obtained through the study is not available to anyone other than authorized project staff, a set of standard confidentiality procedures will be followed:

All project staff will sign an assurance of confidentiality which will be kept on file until data are delivered to IES at the end of the project.

Project staff will keep completely confidential the names of study participants, and any information learned incidentally.

Reasonable caution will be exercised in limiting access to observational, achievement, and survey data only to persons working on the project who have been instructed in the applicable confidentiality requirements of the project.

The Principal Investigator will be responsible for ensuring that contractor personnel involved in handling data on the project are instructed in these procedures and comply with them throughout the project period.

Respondents will be assured that all information identifying them or their school or program will be kept confidential, in compliance with the legislation (PL 103-382).9

A11. Sensitive Questions

None of the requested information on the teacher background survey is sensitive in the traditional sense. Teachers will be asked information about their education and professional backgrounds. Such items may be sensitive to some respondents; however, they are key variables that may be associated with student outcomes. Analyses of all items will be presented in the aggregate.

A12. Estimates of Hour Burden

The total reporting burden associated with data collection for the Thinking Reader study is 58.5 hours. This includes 8.5 hours for the teacher background survey, 25 hours for the biweekly email checklists to treatment group teachers (to measure treatment fidelity), and 25 hours for the schools to provide REL-NEI with the student ELL and special education status data. The Gates-MacGinitie Reading Tests, the MARSI, and the MRQ are assessments of student ability/knowledge, and are not included in our burden calculations, nor is the CIERA, our classroom observation protocol. Table 2 provides a summary of the instruments, total sample sizes, estimated response rates, number of administrations, and the total burden. All estimates of time are shown in hours.

The estimated monetary cost of burden is $1,696.50 (58.5 hours × $29), based on an average teacher hourly rate of $29. The annualized number of respondents (teachers and schools) is 75: 50 teachers and 25 schools. The 25 treatment teachers who will be asked to complete the email probes are a subset of the 50 teachers and are not counted separately.

We have provided copies of the teacher background survey and the biweekly email probes in this submission package. Please note that because we are asking schools to provide ELL status and special education status data in whatever form is most convenient to them (e.g., a spreadsheet, a text document, or a printout/hard copy), we have not created or attached a standardized protocol for the 25 schools to use.

Table 2. Estimates of Hour Burden

| Task | Total Sample Size | Estimated Response Rate | Number of Respondents | Time per Response (hours) | Number of Administrations | Total Hours | Estimated Monetary Cost of Burden |
|---|---|---|---|---|---|---|---|
| Teacher Background Survey (all treatment and control teachers) | 50 | 100% | 50 | 0.17 | 1 | 8.5 | $246.50 |
| Brief Email Probes (treatment teachers only) | 25 | 100% | 25 | 0.17 | 6 | 25.0 | $725.00 |
| Student ELL and special education status | 25 | 100% | 25 | 1 | 1 | 25.0 | $725.00 |
| Totals | 75 | 100% | 75 | | | 58.5 | $1,696.50 |
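Each cost figure in Table 2 is the task's total burden hours multiplied by the $29 average teacher hourly rate. The Python sketch below reproduces that arithmetic starting from the reported hour totals; the variable and function names are illustrative only and are not part of any study system.

```python
# Reported total burden hours per task, as listed in Table 2.
HOURLY_RATE = 29.00  # average teacher hourly rate stated in A12 (USD)

reported_hours = {
    "Teacher background survey": 8.5,
    "Brief email probes": 25.0,
    "Student ELL and special education status": 25.0,
}

def burden_cost(hours: float, rate: float = HOURLY_RATE) -> float:
    """Monetary cost of burden: total hours times the hourly rate."""
    return hours * rate

# Cost per task, then totals across all tasks.
row_costs = {task: burden_cost(h) for task, h in reported_hours.items()}
total_hours = sum(reported_hours.values())
total_cost = sum(row_costs.values())

for task, cost in row_costs.items():
    print(f"{task}: ${cost:,.2f}")
print(f"Total: {total_hours} hours, ${total_cost:,.2f}")
```

Run as written, the totals line reads "Total: 58.5 hours, $1,696.50", matching the Totals row.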

A13. Estimate of Annual Cost Burden to Respondents

There are no additional respondent costs associated with this data collection other than the hour burden accounted for in item 12.

A14. Estimate of Annual Cost to the Federal Government

The estimated cost for this study, including development of a detailed study design, intervention and implementation plan, data collection instruments, justification package, data collection, data analysis, and preparation of reports, is $1,544,805 overall, or an annualized cost of $386,201.25. This amount includes $177,656 for implementing Thinking Reader and $1,367,149 for evaluation activities.

Table 3. Estimates of Annual Cost to the Federal Government

| Study Year (dates) | Activities | Total Study Costs per Year |
|---|---|---|
| Year 1 (10/01/06 to 03/14/07) | Development of study design; development/identification of data collection measures | $140,886 |
| Year 2 (03/15/07 to 03/14/08) | Development of intervention and implementation plans; justification package; teacher training; pretest data collection; administration of teacher background survey | $679,742 |
| Year 3 (03/15/08 to 03/14/09) | Posttest data collection; biweekly treatment teacher probes; classroom observations; data analysis | $585,359 |
| Year 4 (03/15/09 to 06/30/09) | Data analysis and report writing | $138,818 |
| Total | | $1,544,805 |
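The annualized figure in A14 equals the total study cost divided evenly across the four study years in Table 3, even though Years 1 and 4 each cover only part of a year. The Python sketch below, which assumes that even four-way split, checks the yearly costs against the stated total and the implementation/evaluation breakdown; the names are illustrative only.

```python
# Yearly study costs from Table 3 (USD).
yearly_costs = {
    "Year 1 (10/01/06 to 03/14/07)": 140_886,
    "Year 2 (03/15/07 to 03/14/08)": 679_742,
    "Year 3 (03/15/08 to 03/14/09)": 585_359,
    "Year 4 (03/15/09 to 06/30/09)": 138_818,
}

# Cost breakdown stated in A14.
IMPLEMENTATION_COST = 177_656  # implementing Thinking Reader
EVALUATION_COST = 1_367_149    # evaluation activities

total = sum(yearly_costs.values())
# Assumption: the total is spread evenly over the four study years.
annualized = total / len(yearly_costs)

# The yearly costs and the implementation/evaluation split should agree.
assert total == IMPLEMENTATION_COST + EVALUATION_COST == 1_544_805
print(f"Total: ${total:,}; annualized: ${annualized:,.2f}")
```

Under that assumption the script prints "Total: $1,544,805; annualized: $386,201.25".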

A15. Program Changes or Adjustments

Not applicable. This request is for a new information collection.

A16. Plans for Tabulation and Publication of Results

Subcontractors to REL-NEI will produce the study reports. AIR will analyze the data and produce the final report, which will contain an appendix written by CAST that describes the progress of implementation in the 25 schools. After the final report has been approved, AIR will develop two additional products: a 10-page study summary for schools and districts, and a 1-page summary for parents and the general public. We will revise this list of products as needed to align with the coordinated dissemination plans being developed across the Regional Educational Laboratories.

A17. Approval to Not Display OMB Expiration Date

All data collection instruments will include the OMB expiration date.

A18. Explanation of Exceptions

We request no exceptions.

1 If some recruited schools have more than two 6th-grade ELA teachers, our total school sample will be smaller than 25. Our main target is to secure the participation of 50 6th-grade ELA teachers.

2 According to the Terms and Definitions document on the Connecticut State Department of Education website, “ERGs are a classification of the state’s public school districts into groups based on similar socio-economic status and need.” Factors considered in creating the ERGs were family income, parental education, parental occupation, district poverty, family structure, home language, and district enrollment.

3 This excludes vocational, special education, and alternative/other schools.

4 These figures are estimates based on the number of software licenses sold.

5 Because the four student measures of knowledge do not count toward burden, we have not included them as attachments to this submission.

6 Although Thinking Reader does offer scaffolding delivered in Spanish, this feature functions as a Spanish-English dictionary and is meant only to assist comprehension when the English definition is not sufficiently clear to the student.

7 We are also interested in low-achieving students, and plan to use pretest data to determine student reading achievement status.

8 Because the CIERA classroom observation protocol does not place any burden on the teachers being observed, we have not included it as an attachment to this submission.

9 The legislation provides that no person may use “individually identifiable information furnished under this title for any purpose other than a statistical purpose, make any publication whereby the data furnished by any particular person under this title can be identified; or permit anyone other than the individuals authorized by the Commissioner to examine the individual reports.”

| File Type | application/msword |

| Author | Courtney Zmach, Ph.D. |

| Last Modified By | DoED User |

| File Modified | 2007-07-10 |

| File Created | 2007-07-10 |
