The Efficacy of the Measures of Academic Progress (MAP) and its Associated Training on Differentiated Instruction and Student Achievement

OMB: 1850-0850

OMB CLEARANCE REQUEST

Supporting Statement Part A

October 2007

Prepared for:

Institute of Education Sciences

United States Department of Education

Learning Point Associates

1120 East Diehl Road, Ste. 200

Naperville, IL 60563

Contract No. ED-06-CO-0019

Table of Contents

8. Federal Register Comments and Persons Consulted Outside of the Agency

12. Provide estimates of the hour burden of the collection of information.

13. Describe any other costs to respondents.

15. Describe any changes in the burden from prior approvals

17. Describe arrangements for displaying the number provided by OMB and its expiration date.

SUPPORTING STATEMENT

FOR PAPERWORK REDUCTION ACT SUBMISSION

A. Justification

1. Explain the circumstances that make the collection of information necessary. Identify any legal or administrative requirements that necessitate the collection. Attach a copy of the appropriate section of each statute and regulation mandating or authorizing the collection of information.

This project will assess the efficacy of the Northwest Evaluation Association’s (NWEA) program entitled “Measures of Academic Progress” (MAP) by examining its impact on teacher practice and student achievement. The MAP intervention combines theory and research in two areas that have gained considerable attention in recent years: (1) formative assessment and (2) differentiated instruction. MAP tests and training are currently in place in more than 10% of K-12 school districts nationwide (just over 2,000 of the approximately 17,500 districts participate). Despite the program’s popularity, the effectiveness of MAP and its training has not been established to date. Furthermore, its widespread current use, along with projected growth in the number of schools investing in MAP and its associated training, makes it a prime candidate for this type of study. Before describing the intervention and proposed research design for this study, we briefly review each of the two areas.

Formative assessment, which is intended to provide data for prompt modification of instructional practices (Scriven, 1967), is receiving renewed attention among educators (Black & Wiliam, 1998; Boston, 2002). In an education setting, formative assessment generally refers to giving teachers data about their students so that they can provide ongoing feedback to those students. While formative assessments consist of test data, those tests typically do not assign a grade but instead serve diagnostic or informational purposes. As schools prepare for statewide assessments, there is increasing interest in predictive measures that allow teachers to monitor their students’ progress toward state standards of proficiency (Hixson & McGlinchey, 2004). More important to many educators is the potential of frequent standardized assessments (administered multiple times per school year) to gauge the effects of interventions for students at risk of failing the statewide tests (Black & Wiliam, 1998).

While research on formative assessment is plentiful, few studies have focused on the impacts formative assessment systems have on teachers, and even fewer have used experimental designs (Black & Wiliam, 1998). Fewer still have looked at the impact of formal formative assessment programs like the MAP. Dekker and Feijs (2005) reported positive results from a case study of CATCH (Classroom Assessment as a basis for Teacher Change) that involved interviews with 12 teachers. Bol, Ross, Nunnery, and Alberg (2002) used a sample of 286 teachers to examine teacher assessment practices in schools going through a restructuring process and found moderate correlations between teacher assessment practices and student achievement but did not report effect sizes. Most research on teacher assessment practice focuses on small numbers of teachers in small numbers of schools, and no strong experimental studies were found.

Another educational innovation representing a noticeable effort on the part of educators in recent years, and one which often functions in tandem with formative assessments, is differentiated instruction (McTighe & Brown, 2005). In differentiated instruction, individual teachers provide a more personalized instructional experience for students within their classroom (Tomlinson & McTighe, 2006). This differentiation is valuable in addressing variations in both ability and preparedness among students within a single classroom group (Tomlinson & McTighe, 2006).

Tomlinson (2001) defines differentiated instruction as, “A flexible approach to teaching in which the teacher plans and carries out varied approaches to content, process, and product in anticipation of and in response to student differences in readiness, interests, and learning needs” (p. 10). Hall (2002) elaborates on this definition by offering the following characterization: “To differentiate instruction is to recognize students varying background knowledge, readiness, language, preferences in learning, interests, and to react responsively. Differentiated instruction is a process to approach teaching and learning for students of differing abilities in the same class. The intent of differentiating instruction is to maximize each student’s growth and individual success by meeting each student where he or she is, and assisting in the learning process” (p. 2). Beyond these general definitions, in practice, differentiation of instruction has relied on a vague “set of techniques” which are undefined and situational, depending heavily on the teacher, the students, and the resources available for responding to intended instructional outcomes and student needs. As a result, differentiated instruction has seen very little research either supporting or refuting the approach.

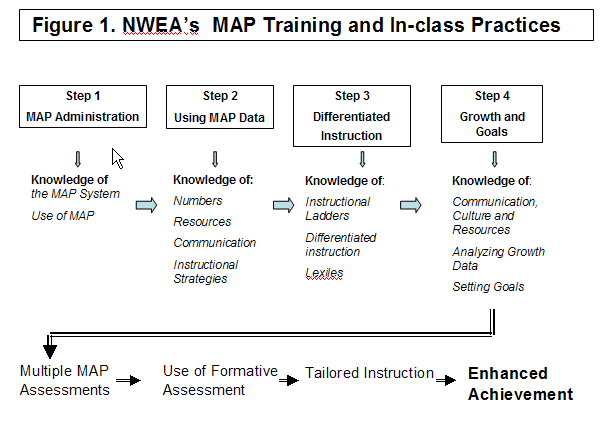

As diagrammed in Figure 1, if formative data are used as a basis for differentiating instruction within the classroom, and training in their use is critical for effective delivery of tailored instruction based on the progress of students, then teachers in the intervention condition should be more effective than teachers in the control condition in meeting their students’ learning needs. This, in turn, should result in higher student performance on measures of academic achievement among students in treatment classrooms. MAP testing is spaced out across the school year, allowing opportunities for teachers to alter their instructional approaches between MAP test administrations.¹ Students are assessed at the beginning, middle, and end of the year, so teachers have data from three points in the year.

The MAP training consists of four phases (Steps 1-4); each training covers different topics and builds on the previous one. The Step 1 training focuses solely on the mechanics of delivering the MAP assessment (e.g., how to log in and register students). It is not until the Step 2 training that teachers are taught how to access data and how to use those data. Each student report contains information on the strengths and weaknesses of that student and suggests avenues for the teacher to explore in helping that student, especially around communicating results to students. After the Step 2 training, teachers would be expected to make some overall changes in their classroom practice based on group results, but they would not be expected to have the skills to actively tailor their instruction for individual students. Step 3 can happen only once teachers have two unique data points for each student, and at this point the training is focused almost exclusively on differentiated instruction. While some change in teacher practice can be expected after the Step 2 training, it is not until the Step 3 training that teachers would be expected to actively differentiate instruction in their classrooms.

This two-year study employs an experimental design in which half of the schools will be assigned to the treatment condition in grade 4 (with grade 5 conducted as business as usual) and half will be assigned to the treatment condition in grade 5 (with grade 4 conducted as business as usual). To enhance the likelihood of participation in the study, a delayed-treatment control design is being used: the control condition (grade 4 or 5 in each school) will be offered the MAP program at the end of two years. Although the intervention will be delivered to the control condition at that point, no data will be collected on it because the timing falls outside the scope of the study.
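The grade-level assignment described above can be sketched in a few lines of Python. This is only a minimal illustration, assuming simple random assignment of schools to the two arms; the function name `assign_treatment_grades`, the seed, and the school identifiers are hypothetical, and the study's actual randomization procedure (for example, any blocking by district) is not specified in this section.

```python
import random


def assign_treatment_grades(school_ids, seed=0):
    """Randomly split schools so half treat grade 4 and half treat grade 5.

    Within each school, the grade that is not treated serves as the
    business-as-usual control (hypothetical helper, for illustration only).
    """
    rng = random.Random(seed)      # fixed seed so the split is reproducible
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2

    assignment = {}
    for position, school in enumerate(shuffled):
        treatment_grade = 4 if position < half else 5
        assignment[school] = {
            "treatment_grade": treatment_grade,
            "control_grade": 9 - treatment_grade,  # the other of grades 4 and 5
        }
    return assignment


if __name__ == "__main__":
    # Ten made-up school identifiers; the real sample is larger.
    schools = [f"S{i:02d}" for i in range(10)]
    for school, arms in sorted(assign_treatment_grades(schools, seed=1).items()):
        print(school, arms)
```

Because every school contributes one treated grade and one control grade, each school appears in both conditions, which is what allows the control grade to receive the program after the study ends.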

To investigate this intervention, data will be collected from students, teachers, and administrators/leaders (e.g., principals or curriculum directors, depending on the school). Table 1 outlines the data to be collected for each group.

Table 1. Data Collection

Proposed Data Collection Plan

| Data Collection Level | Mode | Key Data (Measure) |
|---|---|---|
| Student level | Student records | Student school attendance (school records); student achievement (Illinois Standards Achievement Test (ISAT), © Illinois State Board of Education) |
| | Teacher Survey | Student learning attributes (Study of Instructional Improvement (SII), © University of Michigan, 2001) |
| Teacher level | Training records | Attendance at trainings (NWEA training records) |
| | Teacher Survey | Nature, frequency, and perceived value of PD opportunities; instructional strategies; knowledge of use of data to guide instruction; knowledge of strategies to differentiate instruction; topical focus of instruction; teacher collaboration (SII, © University of Michigan; Surveys of Enacted Curriculum, © Wisconsin Center for Education Research, Mathematica Policy Research; Appendix F) |
| | Classroom Observations | Teacher pedagogy, student learning process, and implementation of instructional practices (CIERA; Taylor et al., 2005, © Authors) |
| | Teacher Logs | Nature of instruction for subset of students (© University of Michigan) |
| School level | Administrator Surveys | Institutional support for data-driven program instruction (SII, © University of Michigan) |

All of the student data are extant, coming from performance on state tests and school records. Teacher data will be gathered using a variety of methods, including an attitude and behaviors survey, a knowledge assessment, classroom observations, and teacher logs. School administrators/leaders will be asked to fill out a survey. Table 2 below provides a summary of the ideal timeline for data collection. The data collection timeline will be the same in Year 1 as in Year 2.

Table 2. Implementation Assessment Activities and Timeline

| Implementation Assessments: Fidelity and Relative Strength | Sample Size (Tx) | Sample Size (C) | Aug | Sept | Oct | Nov | Dec | Jan | Feb | Mar | Apr | May |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| School-level Implementation | | | | | | | | | | | | |
| MAP Training Attendance | 84 | NA | Tx | | Tx | | Tx | | | | Tx | |
| Leadership Survey | 84 | NA | | | | | | | | | | Tx, C |
| Support for MAP-like programs | 84 | NA | | | | | | | | | | Tx, C |
| Teacher/Classroom-level Implementation | | | | | | | | | | | | |
| MAP-like Knowledge | 84 | 84 | | | | | | | | | Tx, C | |
| MAP Training Attendance and NWEA’s On-line system use | 84 | NA | Continuous record (Tx only) | | | | | | | | | |
| Teacher Survey Battery | 84 | 84 | | | | | | | | | | Tx, C |
| Classroom Observations (Reading) | 84 | 84 | | Tx, C | | | | | Tx, C | | Tx, C | |
| Instruction Logs (Reading) | 336* | 336* | Approximately 16 per student across the school year | | | | | | | | | |
| Student-level Implementation | | | | | | | | | | | | |
| Student attendance | 336* | 336* | Continuous record | | | | | | | | | |
| Student Engagement | 336* | 336* | | | | | | | | | Tx, C | |

NOTE: Tx = Treatment group. C = control group. NA = Not Applicable

* This number represents the number of unique students for this measure. Four teachers (two treatment, two control) in each school will provide logs, attendance records, and a rating of student engagement for a subset of 16 students (4 per teacher).

The current authorization for the Regional Educational Laboratories program is under the Education Sciences Reform Act of 2002, Part D, Section 174, (20 U.S.C. 9564), administered by the Institute of Education Sciences’ National Center for Education Evaluation and Regional Assistance.

2. Indicate how, by whom, and for what purpose the information is to be used. Except for a new collection, indicate the actual use the agency has made of the information received from the current collection.

Data collected will be used to assess the efficacy of NWEA’s MAP formative testing system and the associated teacher training in improving student performance on measures of academic achievement. Specifically, we will collect information for the purpose of addressing five questions:

1. What effect do MAP data and training have upon teachers’ instructional practices?

2. Does the MAP intervention (i.e., training plus formative testing feedback) affect the achievement of students?

3. Do changes (if any) in teachers’ instructional strategies (induced by MAP training and the use of MAP results in Year 1) persist over time for a new cohort of students?

4. If changes in instructional practices persist, do students in the second cohort (Year 2) achieve similar levels of proficiency in achievement as their Year 1 counterparts?

5. To what extent does variation in the implementation of MAP or variation in receipt of training account for the effects (or lack of effects) on teacher instruction and student achievement outcomes? Implementation fidelity will be assessed at three levels: school, teacher/classroom, and student.

In general, the information will be used to examine the effectiveness of this popular intervention, which, as stated earlier, is being used in about 10% of school districts around the country. Despite its popularity, no studies have examined whether the intervention has an impact on student achievement or teacher instructional practices. These data would help establish an evidence base for this intervention and provide important information to schools and districts that are considering adopting this or similar intervention programs incorporating formative assessment and teacher training.

3. Describe whether, and to what extent, the collection of information involves the use of automated, electronic, mechanical, or other technological collection techniques or forms of information technology, e.g., permitting electronic submission of responses, and the basis for the decision of adopting this means of collection. Also describe any consideration of using information technology to reduce burden.

Whenever possible the study team will use information technologies to maximize the efficiency and completeness of the information gathered for this evaluation and to minimize the burden on respondents. In particular, data will be collected from existing electronic school administrative records. The study team will also use laptop computers to record field observations. All surveys will be administered using a proprietary online data collection tool that requires only a connection to the Internet and a Web browser.

4. Describe efforts to identify duplication. Show specifically why any similar information already available cannot be used or modified for use of the purposes described in Item 2 above.

To avoid duplication, we will use data that schools currently collect, including student and teacher demographic data, previous student test score data, and attendance records, to the greatest extent possible. These data are already on hand at each school building.

The data to be collected in the survey instruments are not available from any other source. As stated earlier, few studies exist on the impacts formative assessment systems have on teachers. Fewer still used experimental designs (Black & Wiliam, 1998) and still fewer looked at the impact of formal formative assessment programs like the MAP. No studies have been conducted on the effectiveness of the MAP and its training.

5. If the collection of information impacts small businesses or other small entities (Item 5 of OMB Form 83-I), describe any methods used to minimize burden.

The focus of this study is on school districts and their schools, including the students and their parents, the teachers, and the administrators/leaders within these districts. The study team has reduced burden for respondents by using a data collection plan that requests the minimum information needed to successfully execute this study. To minimize burden on respondents, the study design requests information that is already collected by schools and districts, and any new collection instruments have been designed to ask questions that cannot be answered through any other available sources. These data will be collected using an online survey tool (as needed) or by direct observation, which will not place additional burden on respondents.

6. Describe the consequences to Federal program or policy activities if the collection is not conducted or is conducted less frequently, as well as any technical or legal obstacles to reducing burden.

The systematic collection, analysis, and reporting of student assessment data are required to accomplish the goals of the research project approved by the Institute of Education Sciences (IES). Participation in all data collection activities is voluntary. Data will be collected from multiple sources. These data are necessary to measure impacts on academic achievement.

Many of the data to be collected, including student achievement data, student attendance, and teacher attendance, are already being collected by the school or the intervention developer. The other data to be collected, including administrator survey data and teacher survey data, will be collected at different times during the school year, as previously described in Table 2. Teacher observational data, which will not place any burden on teachers, will be collected three times during the school year. Teacher logs focus on getting more details on specific teaching practices for a small subset of students. These data will help determine whether there is a “change in teacher practice” that would account for changes in student achievement. Not collecting these data would render any conclusion of this study tenuous at best, because the only question the study could answer is whether the training had an impact on student achievement, without any understanding of the possible pathways for an effect or the lack of one.

The study designers will ensure that only relevant data will be collected, and that the total time to complete any survey battery for teachers will be less than 45 minutes and less than 30 minutes for administrators/leaders.

7. Explain any special circumstances that would cause an information collection to be conducted in a manner inconsistent with Section 1320.5(d)(2) of the federal regulations

No special circumstances apply.

8. Federal Register Comments and Persons Consulted Outside of the Agency

A 60-day Federal Register Notice was published 08/07/2007. A 30-day Federal Register Notice was published 10/24/2007. Refer to Appendix A.

The following individuals were consulted in the development of these materials:

Steve Cantrell, LPA

Matthew Dawson, LPA

David Cordray, Vanderbilt University

Judy Stewart, Taylor Education Consulting, Inc.

9. Explain any decision to provide any payment or gift to respondents, other than remuneration of contractors or grantees.

At this time, there are no planned payments to respondents, other than remuneration to the developer to cover training costs for each year of the study for participating schools.

10. Describe any assurances of confidentiality provided to respondents and the basis for the assurance in statute, regulation, or agency policy.

All data collection activities will be conducted in full compliance with Department of Education regulations to maintain the confidentiality of data obtained on private persons and to protect the rights and welfare of human research subjects. These activities will also be conducted in compliance with other federal regulations, in particular the Privacy Act of 1974, P.L. 93-579, 5 USC 552a; the “Buckley Amendment,” Family Educational Rights and Privacy Act of 1974, 20 USC 1232g; the Freedom of Information Act, 5 USC 552; and related regulations, including but not limited to 41 CFR Part 1-1 and 45 CFR Part 5b and, as appropriate, the Federal common rule or ED’s final regulations on the protection of human research participants.

The organizations that are part of the research team will follow procedures for assuring and maintaining confidentiality that are consistent with the provisions of the Privacy Act. The following safeguards are routinely employed to carry out confidentiality assurances:

All staff members sign an agreement to abide by the corporate policies on data security and confidentiality. This agreement affirms each individual's understanding of the importance of maintaining data security and confidentiality and abiding by the management and technical procedures that implement these policies. Please refer to Appendix B LPA confidentiality agreements.

All data, both paper files and computerized files, are kept in secure areas. Paper files are stored in locked storage areas with limited access on a need-to-know basis. Computerized files are managed via password control systems to restrict access as well as to physically secure the source files.

Merged data sources have identification data stripped from the individual records or are encoded to preclude overt identification of individuals.

All reports, tables, and printed materials are limited to presentation of aggregate numbers.

Compilations of individualized data are not provided to participating agencies.

Confidentiality agreements are executed with any participating research subcontractors and consultants who must obtain access to detailed data files.

An explicit statement describing the project, the data collection, and confidentiality will be provided to study participants. These participants will include adults (teachers and administrators/leaders at participating schools), who will need to sign this statement, acknowledging their willingness to participate. In some districts it may also be necessary to provide a similar statement for students, which will need to be signed by the student and a parent before the study team can collect data about the student. Examples of the proposed consent forms for these two types of participants are attached as Appendices C and D.

Privacy Issues

Data collected for this study about individuals will be kept confidential. However, the Department of Education may disclose information contained in these data under the routine uses listed in the system of records without the consent of the individual if the disclosure is compatible with the purposes for which the record was collected. A Privacy Act System of Records Notice is currently being developed.

Freedom of Information Act (FOIA) Advice Disclosure

The Department may disclose records to the Department of Justice and the Office of Management and Budget if the Department concludes that disclosure is desirable or necessary in determining whether particular records are required to be disclosed under the FOIA.

Contract Disclosure

If the Department contracts with an entity for the purposes of performing any function that requires disclosure of records in this system to employees of the contractor, the Department may disclose the records to those employees. Before entering into such a contract, the Department shall require the contractor to maintain Privacy Act safeguards as required under 5 U.S.C. 552a(m) with respect to the records in the system.

Research Disclosure

The Department may disclose records to a researcher if an appropriate official of the Department determines that the individual or organization to which the disclosure would be made is qualified to carry out specific research related to functions or purposes of this system of records. The official may disclose records from this system of records to that researcher solely for the purpose of carrying out that research related to the functions or purposes of this system of records. The researcher shall be required to maintain Privacy Act safeguards with respect to the disclosed records.

The study team expects that this collection will have minimal impact on privacy. Results will never be disaggregated and reported in such a way that individuals can be identified. Only persons conducting this study and maintaining its records will have access to the records collected that contain individually identifying information. The first step in working with the collected data is to encrypt individual identifiers so that analyses of the data are conducted on anonymous data.
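As a rough illustration of that first step, the sketch below replaces a direct identifier with a keyed hash before analysis. It is an assumption-laden example and not the study team's documented procedure: it swaps in HMAC-SHA256 pseudonymization (which is one-way, unlike reversible encryption), and the function names, the `study_id` field, and the sample record are all hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable, non-reversible code for an identifier (keyed SHA-256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(records, id_field, secret_key, drop_fields=()):
    """Copy records, replacing the ID with a study code and dropping direct identifiers."""
    cleaned = []
    for record in records:
        row = {
            key: value
            for key, value in record.items()
            if key != id_field and key not in drop_fields
        }
        # The same input ID always maps to the same code, so files can still be merged.
        row["study_id"] = pseudonymize(str(record[id_field]), secret_key)
        cleaned.append(row)
    return cleaned


if __name__ == "__main__":
    key = b"example-key-kept-separately-from-the-data"  # hypothetical secret
    raw = [{"student_id": "12345", "name": "Example Student", "grade": 4, "score": 210}]
    print(strip_identifiers(raw, "student_id", key, drop_fields=("name",)))
```

Keeping the key apart from the analysis files means analysts can link a student's records across sources without ever seeing the original identifier.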

The study team prepared a System of Records (SOR), and notice was published in the Federal Register on [DATE TO BE ENTERED LATER].

11. Provide additional justification for any questions of a sensitive nature, such as sexual behavior and attitudes, religious beliefs, and other matters that are commonly considered private. The justification should include the reasons why the agency considers the questions necessary, the specific uses to be made of the information, the explanation to be given to persons from whom the information is requested, and any steps to be taken to obtain their consent.

None of the questions utilized to collect data for this study, including interviews, concern topics commonly considered private or sensitive, such as religious beliefs or sexual practices.

12. Provide estimates of the hour burden of the collection of information.

Table 3. Estimates of Respondent Burden

| Respondent Category | Number of Respondents | Number of Responses | Estimated Hours per Response | Estimated Hours Total Burden |
|---|---|---|---|---|
| Teacher | 168² | 11,760 | .75 | 8,820³ |
| Administrator | 84⁴ | 84 | .5 | 42⁵ |
| Totals | 252 | 11,844 | | 8,862 |

13. Describe any other costs to respondents.

No additional costs are associated.

14. Provide estimates of annualized cost to the Federal government. Also, provide a description of the method used to estimate cost, which should include quantification of hours, operational expenses (such as equipment, overhead, printing, and support staff), and any other expenses that would not have been incurred without this collection of information. Agencies also may aggregate cost estimates from Items 12, 13, and 14 in a single table.

Tasks for FY 2008:

| Cost Category | Amount |
|---|---|
| Total annualized capital/startup costs | $97,129 |
| O&M costs | |
| Staff | $153,516 |
| Consultants | $942,935 |
| Travel | $15,000 |
| Total O&M costs | $1,111,451 |
| Total annualized cost | $1,205,580 |

Table 4. Estimated Cost to Respondents

| Respondent Category | Hourly Rate | Total Number of Hours | Estimated Data Collection Cost to Respondents |
|---|---|---|---|
| Teacher | $37.375⁶ | 8,820 | $329,647.50 |
| Administrators/Leaders | $48.25⁷ | 42 | $2,026.50 |
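The burden and cost figures in Tables 3 and 4 follow from simple multiplication, which the short Python sketch below reproduces. It assumes the inputs read from those tables and from footnotes 6 and 7 (11,760 teacher responses at 0.75 hours each, 84 administrator responses at 0.5 hours each, and hourly rates derived from the 2005 average Illinois salaries); the function and variable names are illustrative, and rounding the administrator rate to the cent is an assumption made to match the rate shown in Table 4.

```python
def respondent_burden_and_cost():
    """Recompute total burden hours and respondent cost from the table inputs."""
    # Inputs from Table 3 (responses and hours per response).
    teacher_responses, teacher_hours_each = 11_760, 0.75
    admin_responses, admin_hours_each = 84, 0.5

    teacher_hours = teacher_responses * teacher_hours_each
    admin_hours = admin_responses * admin_hours_each

    # Hourly rates from footnotes 6 and 7: annual salary / hours worked per school year.
    teacher_rate = 53_820 / 1_440
    admin_rate = round(86_855 / 1_800, 2)  # rounded to the cent (assumption)

    return {
        "teacher_hours": teacher_hours,
        "admin_hours": admin_hours,
        "total_hours": teacher_hours + admin_hours,
        "teacher_rate": teacher_rate,
        "admin_rate": admin_rate,
        "teacher_cost": teacher_hours * teacher_rate,
        "admin_cost": admin_hours * admin_rate,
    }


if __name__ == "__main__":
    for name, value in respondent_burden_and_cost().items():
        print(f"{name}: {value:,.3f}")
```

Laying the calculation out this way makes it easy to see how a change in any one assumption, such as the minutes per survey, would carry through to the total burden and cost.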

15. Describe any changes in the burden from prior approvals

This is a new submission.

16. For collections of information whose results will be published, outline plans for tabulation and publication. Address any complex analytical techniques that will be used. Provide the time schedule for the entire project, including beginning and ending dates of the collection of information, completion of the report, publication dates, and other actions.

The results of this data collection may be used in several ways. First, they will be used to report formative information to IES on a quarterly basis. Second, REL Midwest may use the report results to prepare presentations at regional or national conferences. Third, reports, presentations, and other training and technical assistance activities specified in the contract will be conducted using the results from data collection activities. (See Table 5 below for the study timeline.)

The final deliverable will be a technical report based on the five policy-relevant questions that can be addressed by the research design that is proposed. Because they represent a complex set of questions, they bear repeating:

1. What effect do MAP data and training have upon teachers’ instructional practices?

2. Does the MAP intervention (i.e., training plus formative testing feedback) affect the achievement of students?

3. Do changes (if any) in teachers’ instructional strategies (induced by MAP training and the use of MAP results in Year 1) persist over time for a new cohort of students?

4. If changes in instructional practices persist, do students in the second cohort (Year 2) achieve similar levels of proficiency in achievement as their Year 1 counterparts?

5. To what extent does variation in the implementation of MAP or variation in receipt of training account for the effects (or lack of effects) on teacher instruction and student achievement outcomes?

To answer these questions, we will use Raudenbush and Bryk’s (2002) statistical models for analyzing data that are hierarchically structured. Actual statistical modeling will utilize the HLM statistical package (Raudenbush et al., 2004). A more formal discussion of the exact models for the various research questions is in Supporting Statement Part B, Section 2B.

We will also be producing an interim report based on data from the first year of the study.

In addition, a non-technical report will be written, once the technical report is approved by IES. We will disseminate both reports via the National Lab Network Web site, as well as through our dissemination network established in the REL Midwest Region. If time permits, we will develop policy briefs as needed.

Table 5: Study Timeline

| Tasks/Products | Expected Date |
|---|---|
| Year 1 implementation | Fall 2008 – Spring 2009 |
| Year 1 Data Collection | See Table 1 |
| Draft Technical Report 1 | December 2009 |
| Revised Technical Report 1 | July 2010 |
| Final Technical Report 1 | September 2010 |
| Year 2 implementation | Fall 2009 – Spring 2010 |
| Year 2 Data Collection | See Table 1 |
| Draft technical report 2 | September 2010 |
| Revised technical report 2 | December 2010 |
| Final technical report 2 | February 2011 |
| Draft nontechnical report 1 | October 2010 |
| Revised nontechnical report 1 | December 2010 |
| Final nontechnical report 1 | February 2011 |
| Monthly progress reports | Monthly |

17. Describe arrangements for displaying the number provided by OMB and its expiration date.

The approval number provided by OMB and its expiration date will appear in the heading on all surveys and on online and print training materials.

No exceptions are requested.

Black, P. & Wiliam, D. (1998). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappa International. Retrieved August 11, 2006 from http://www.pdkintl.org/kappan/kbla9810.htm

Bol, L., Ross, S., Nunnery, J., & Alberg, M. (2002). A comparison of teachers’ assessment practices in school restructuring models by year of implementation. Journal of Education of Students Placed At Risk, 7, 407-424.

Boston, C. (2002). The concept of formative assessment. Practical Assessment, Research & Evaluation, 8(9). Retrieved August 24, 2006 from http://PAREonline.net/getvn.asp?v=8&n=9

Decker, G. (2003). Using data to drive student achievement in the classroom and on high-stakes tests. THE Journal. Retrieved August 24, 2006 from http://www.thejournal.com/the/printarticle/?id=16259

Dekker, T. & Feijs, E. (2005). Scaling up strategies for change: change in formative assessment practices. Assessment in Education, 12(3), pp. 237-254.

Hall, T. (2002). Differentiated Instruction [Monograph]. Washington, DC, National Center on Accessing the General Curriculum. Retrieved August 30, 2006 from http://www.cast.org/system/galleries/download/ncac/DifInstruc.pdf

Hixson, M. D. & McGlinchey, M. T. (2004). Using curriculum-based measurement to predict performance on state assessments in reading. School Psychology Review, (33). Retrieved August 24, 2006 from http://www.questia.com/PM.qst?a=o&se=gglsc&d=5006643592&er=deny

McTighe, J. & Brown, J. L. (2005) Differentiated instruction and educational standards: Is detente possible? Theory Into Practice 44(3), 234-244.

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Newbury Park, CA: Sage.

Raudenbush, S. W., Bryk, A., Cheong, Y. F., & Congdon, R. (2004). HLM6: Hierarchical linear and nonlinear modeling. Chicago: Scientific Software International.

Scriven, M. (1967). The methodology of evaluation. In R. W. Tyler et al (Eds.) Perspectives of Curriculum Evaluation. American Educational Research Association Monograph. Chicago: Rand McNally.

Tomlinson, C. A. (2001) How to Differentiate Instruction in Mixed Ability Classrooms. Alexandria, Virginia: Association for Supervision and Curriculum Development.

Tomlinson, C. A. and McTighe, J. (2006) Integrating differentiated instruction + understanding by design. Alexandria: Association for Supervision and Curriculum Development.

1 On-line resources (e.g., DesCartes) are made available to teachers throughout the year.

2 Assumes 4 teachers per school (30 total schools)

3 Teacher data collection times shown in Table 1

4 Assumes 4 administrators/leaders per school

5 Administrator data will be collected once during the school year

6 Based on 2005 average IL salary of $53,820 and 1440 hours of work per school year

7 Based on 2005 average IL salary of $86,855 and 1800 hours of work per school year

| File Type | application/msword |

| File Title | SUPPORTING STATEMENT |

| Author | NCERL |

| Last Modified By | Sheila.Carey |

| File Modified | 2008-01-29 |

| File Created | 2008-01-29 |
